An Exploration of Some Pitfalls of Thematic Map Assessment Using the New Map Tools Resource

Abstract

1. Introduction

2. Thematic Map Quality Metrics

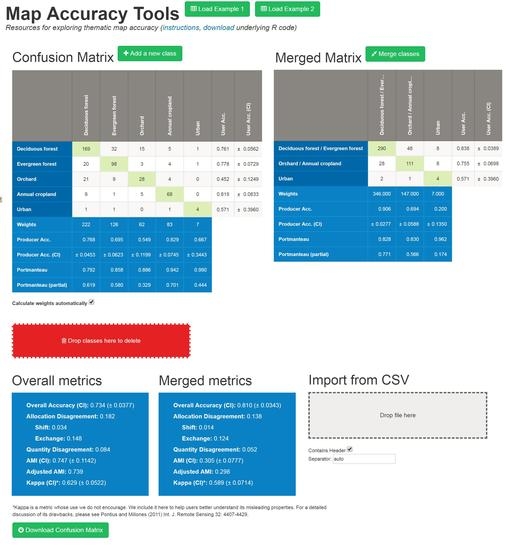

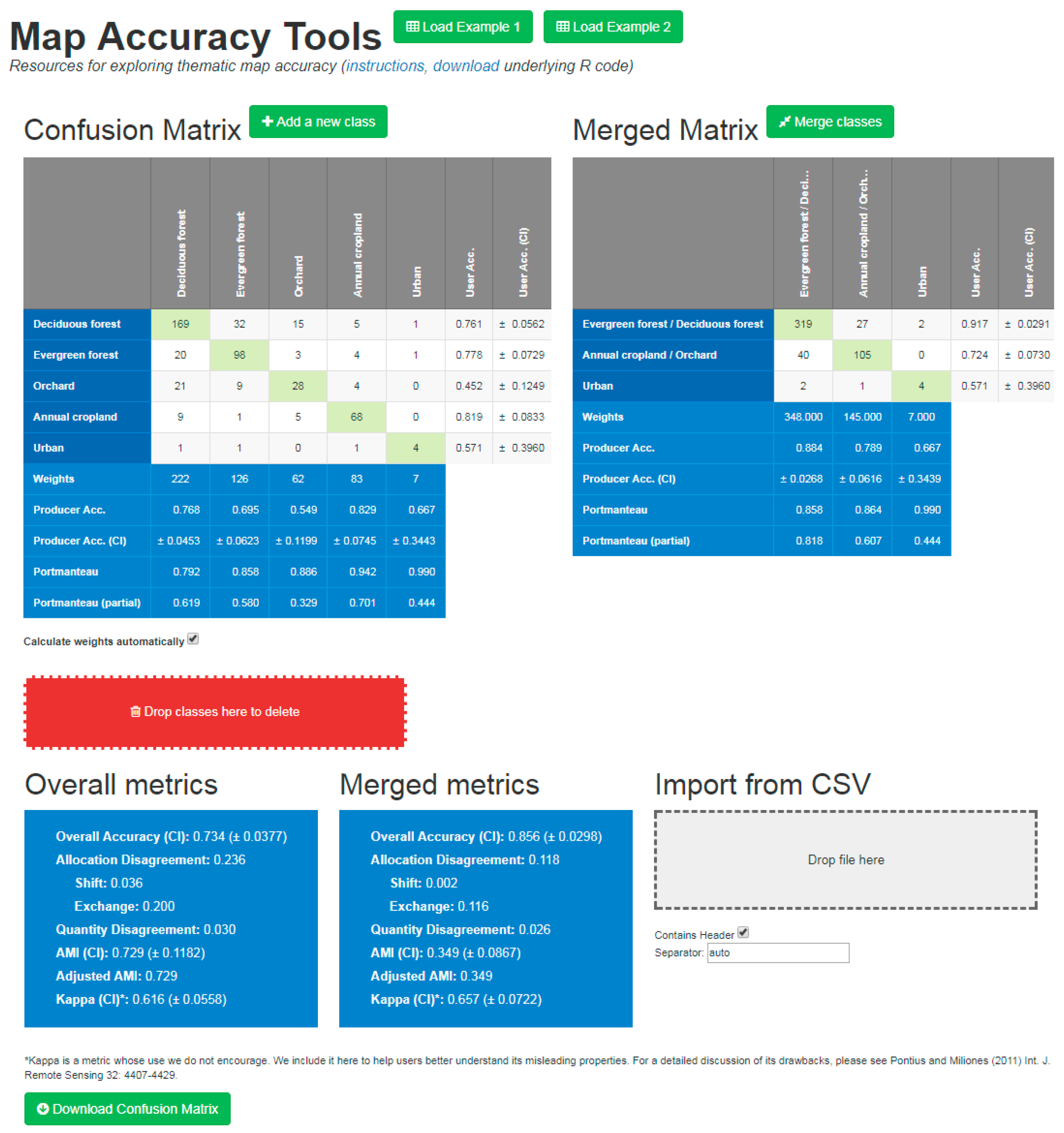

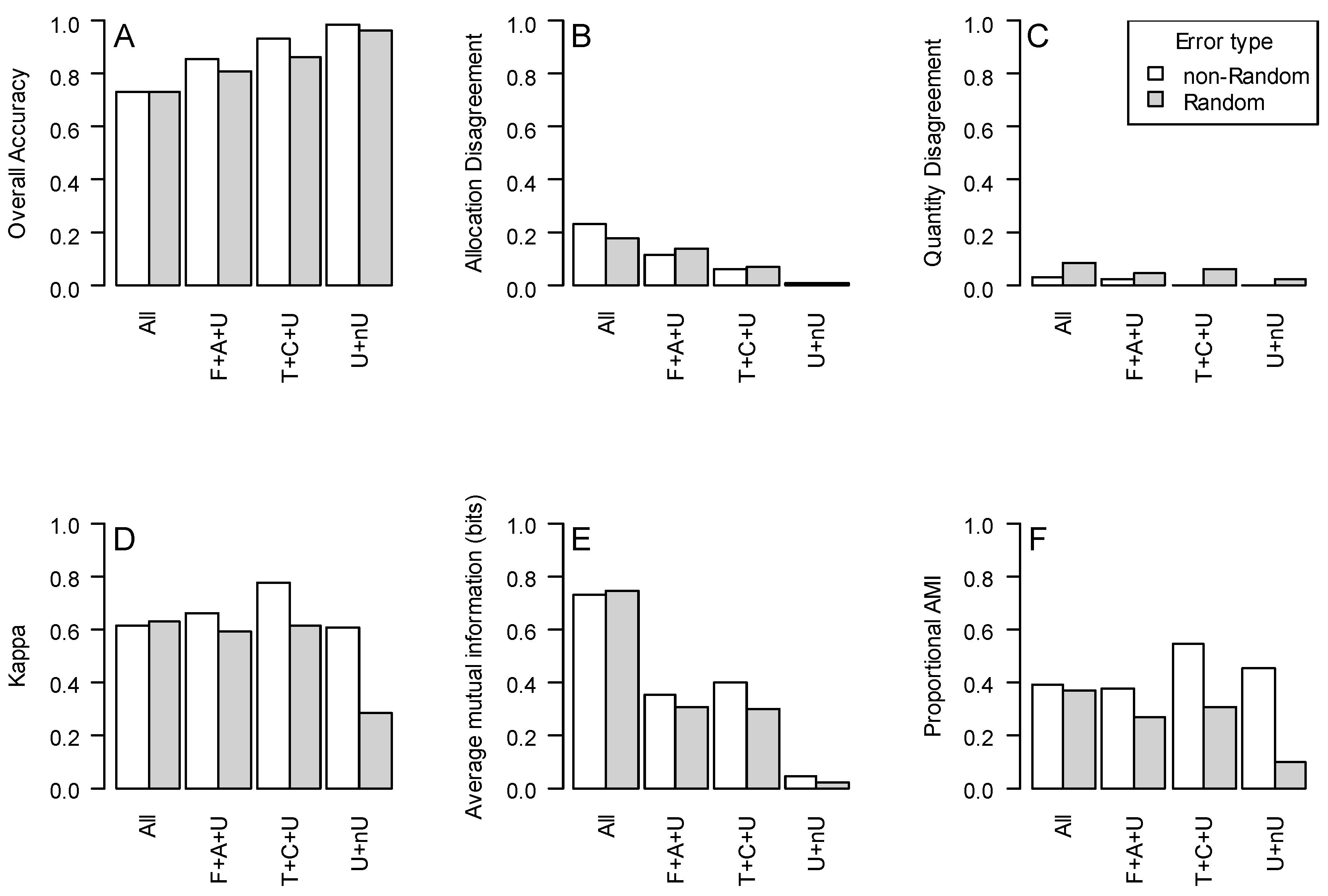

3. The Map Tools Website

4. Demonstrations of Some Under-appreciated Features of Accuracy Metrics

4.1. True Negative Validations Inflate Some Categorical Accuracy Metrics

4.2. Map-Wide Averages of User’s and Producer’s Accuracies Are not Meaningful

4.3. Combining Categories Shows Misleading Increases in Overall Accuracy and Kappa, but not AMI

4.4. The Misleadingness of Statistical Kappa Comparisons

4.5. How to Calculate the Statistical Significance of AMI Differences

5. Recommendations

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Mapped Class (rows) / Reference Class (columns) | Deciduous Forest | Evergreen Forest | Orchard | Annual Crops | Urban |
|---|---|---|---|---|---|
| Deciduous forest | 169 | 32 | 15 | 5 | 1 |
| Evergreen forest | 20 | 98 | 3 | 4 | 1 |
| Orchard | 21 | 9 | 28 | 4 | 0 |
| Annual cropland | 9 | 1 | 5 | 68 | 0 |
| Urban | 1 | 1 | 0 | 1 | 4 |
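
As a rough illustration of how the standard metrics follow from a confusion matrix like the one above, the Python sketch below computes overall accuracy, user's and producer's accuracies, and Cohen's kappa. It assumes mapped classes are in rows and reference classes in columns; the function names are illustrative and are not taken from the Map Tools website.

```python
# Confusion matrix copied from the table above.
# Rows = mapped class, columns = reference class (assumed orientation).
CLASSES = ["Deciduous forest", "Evergreen forest", "Orchard",
           "Annual cropland", "Urban"]
MATRIX = [
    [169, 32, 15, 5, 1],
    [20, 98, 3, 4, 1],
    [21, 9, 28, 4, 0],
    [9, 1, 5, 68, 0],
    [1, 1, 0, 1, 4],
]


def overall_accuracy(m):
    """Share of validations that fall on the diagonal."""
    total = sum(sum(row) for row in m)
    return sum(m[i][i] for i in range(len(m))) / total


def users_accuracy(m):
    """Per class: correct validations divided by the mapped (row) total."""
    return [m[i][i] / sum(m[i]) for i in range(len(m))]


def producers_accuracy(m):
    """Per class: correct validations divided by the reference (column) total."""
    n = len(m)
    col_totals = [sum(m[i][j] for i in range(n)) for j in range(n)]
    return [m[j][j] / col_totals[j] for j in range(n)]


def kappa(m):
    """Cohen's kappa: agreement beyond that expected from the marginals."""
    n = len(m)
    total = sum(sum(row) for row in m)
    row_totals = [sum(row) for row in m]
    col_totals = [sum(m[i][j] for i in range(n)) for j in range(n)]
    observed = overall_accuracy(m)
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (observed - expected) / (1 - expected)


print(f"overall accuracy: {overall_accuracy(MATRIX):.3f}")
print(f"kappa:            {kappa(MATRIX):.3f}")
for name, ua, pa in zip(CLASSES, users_accuracy(MATRIX), producers_accuracy(MATRIX)):
    print(f"{name:17s} user's {ua:.3f}  producer's {pa:.3f}")
```

The per-class accuracies are reported separately rather than averaged, since their denominators (row totals versus column totals) differ from class to class.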

| Mapped Class (rows) / Reference Class (columns) | Urban | Non-Urban |
|---|---|---|
| Urban | 4 | 3 |
| Non-Urban | 2 | 491 |
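
The counts in the two-class table above match what results from merging the four non-urban categories of the first five-class matrix in this document. The sketch below reproduces that collapse and compares overall accuracy with indices that do not count true negatives. The helper names and the `agreement_without_tn` index are illustrative assumptions, not definitions taken from the paper.

```python
# First five-class matrix from this document (rows = mapped, columns = reference).
MATRIX = [
    [169, 32, 15, 5, 1],
    [20, 98, 3, 4, 1],
    [21, 9, 28, 4, 0],
    [9, 1, 5, 68, 0],
    [1, 1, 0, 1, 4],
]
URBAN = 4  # index of the Urban class in MATRIX


def collapse_to_binary(m, k):
    """Collapse a square confusion matrix to class k versus everything else."""
    n = len(m)
    tp = m[k][k]                               # mapped k, reference k
    fp = sum(m[k]) - tp                        # mapped k, reference other
    fn = sum(m[i][k] for i in range(n)) - tp   # mapped other, reference k
    tn = sum(sum(row) for row in m) - tp - fp - fn
    return [[tp, fp], [fn, tn]]


def binary_metrics(table):
    """Metrics for a 2x2 table laid out as [[TP, FP], [FN, TN]]."""
    (tp, fp), (fn, tn) = table
    total = tp + fp + fn + tn
    return {
        "overall_accuracy": (tp + tn) / total,
        "users_accuracy": tp / (tp + fp),
        "producers_accuracy": tp / (tp + fn),
        # Illustrative index that ignores true negatives (an assumption here).
        "agreement_without_tn": tp / (tp + fp + fn),
    }


binary = collapse_to_binary(MATRIX, URBAN)
metrics = binary_metrics(binary)
print(binary)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Of the 495 agreeing validations, 491 are true negatives, so overall accuracy is 0.99 even though only 4 of the 7 locations mapped as urban are urban in the reference data.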

| Mapped Class (rows) / Reference Class (columns) | Deciduous Forest | Evergreen Forest | Orchard | Annual Crops | Urban |
|---|---|---|---|---|---|
| Deciduous forest | 169 | 17 | 16 | 18 | 4 |
| Evergreen forest | 6 | 98 | 6 | 8 | 4 |
| Orchard | 9 | 11 | 28 | 10 | 4 |
| Annual cropland | 4 | 4 | 5 | 68 | 4 |
| Urban | 1 | 1 | 0 | 1 | 4 |
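
The two five-class matrices in this document share the same diagonal (367 of 500 validations), so their overall accuracy is identical at 0.734; they differ only in how the errors are distributed. The sketch below computes average mutual information (AMI) for both, using the standard mutual-information formula on the joint proportions. The use of base-2 logarithms (bits) and the function names are assumptions made for illustration, not details confirmed by the paper.

```python
from math import log2

# Rows = mapped class, columns = reference class (assumed orientation).
MATRIX_A = [  # first five-class matrix in this document
    [169, 32, 15, 5, 1],
    [20, 98, 3, 4, 1],
    [21, 9, 28, 4, 0],
    [9, 1, 5, 68, 0],
    [1, 1, 0, 1, 4],
]
MATRIX_B = [  # second five-class matrix in this document
    [169, 17, 16, 18, 4],
    [6, 98, 6, 8, 4],
    [9, 11, 28, 10, 4],
    [4, 4, 5, 68, 4],
    [1, 1, 0, 1, 4],
]


def overall_accuracy(m):
    """Share of validations that fall on the diagonal."""
    total = sum(sum(row) for row in m)
    return sum(m[i][i] for i in range(len(m))) / total


def average_mutual_information(m):
    """AMI = sum over cells of p_ij * log2(p_ij / (p_i. * p_.j)), in bits."""
    n = len(m)
    total = sum(sum(row) for row in m)
    row_totals = [sum(row) for row in m]
    col_totals = [sum(m[i][j] for i in range(n)) for j in range(n)]
    ami = 0.0
    for i in range(n):
        for j in range(n):
            if m[i][j] > 0:  # empty cells contribute nothing
                p_ij = m[i][j] / total
                ratio = m[i][j] * total / (row_totals[i] * col_totals[j])
                ami += p_ij * log2(ratio)
    return ami


for label, matrix in (("A", MATRIX_A), ("B", MATRIX_B)):
    print(f"matrix {label}: overall accuracy = {overall_accuracy(matrix):.3f}, "
          f"AMI = {average_mutual_information(matrix):.3f} bits")
```

Because AMI depends on every cell rather than only the diagonal, it can distinguish two maps that overall accuracy treats as equivalent.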

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Salk, C.; Fritz, S.; See, L.; Dresel, C.; McCallum, I. An Exploration of Some Pitfalls of Thematic Map Assessment Using the New Map Tools Resource. Remote Sens. 2018, 10, 376. https://doi.org/10.3390/rs10030376
