High-Throughput Phenotyping of Canopy Cover and Senescence in Maize Field Trials Using Aerial Digital Canopy Imaging

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Setup

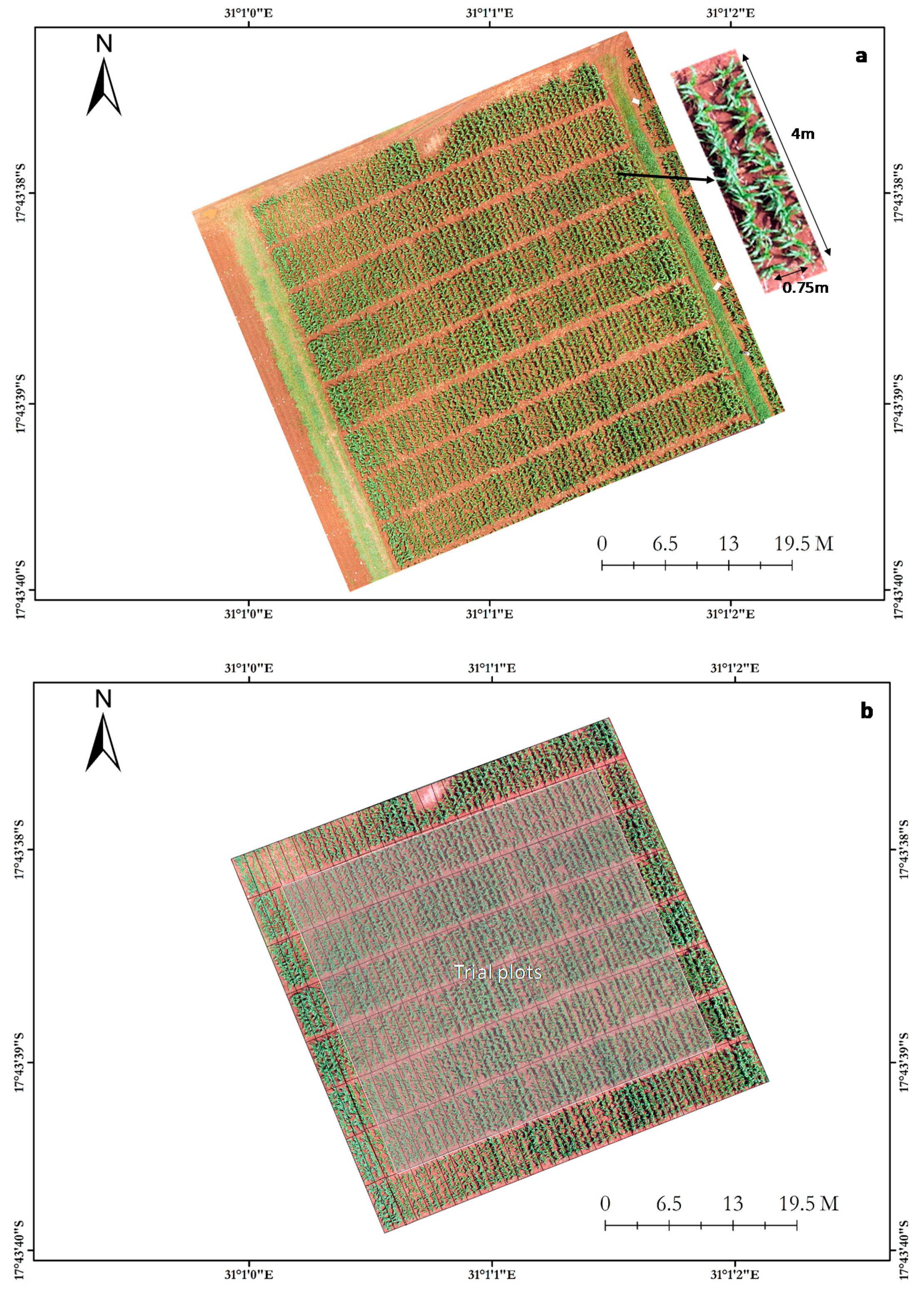

2.2. Image Acquisition

2.3. Image Processing

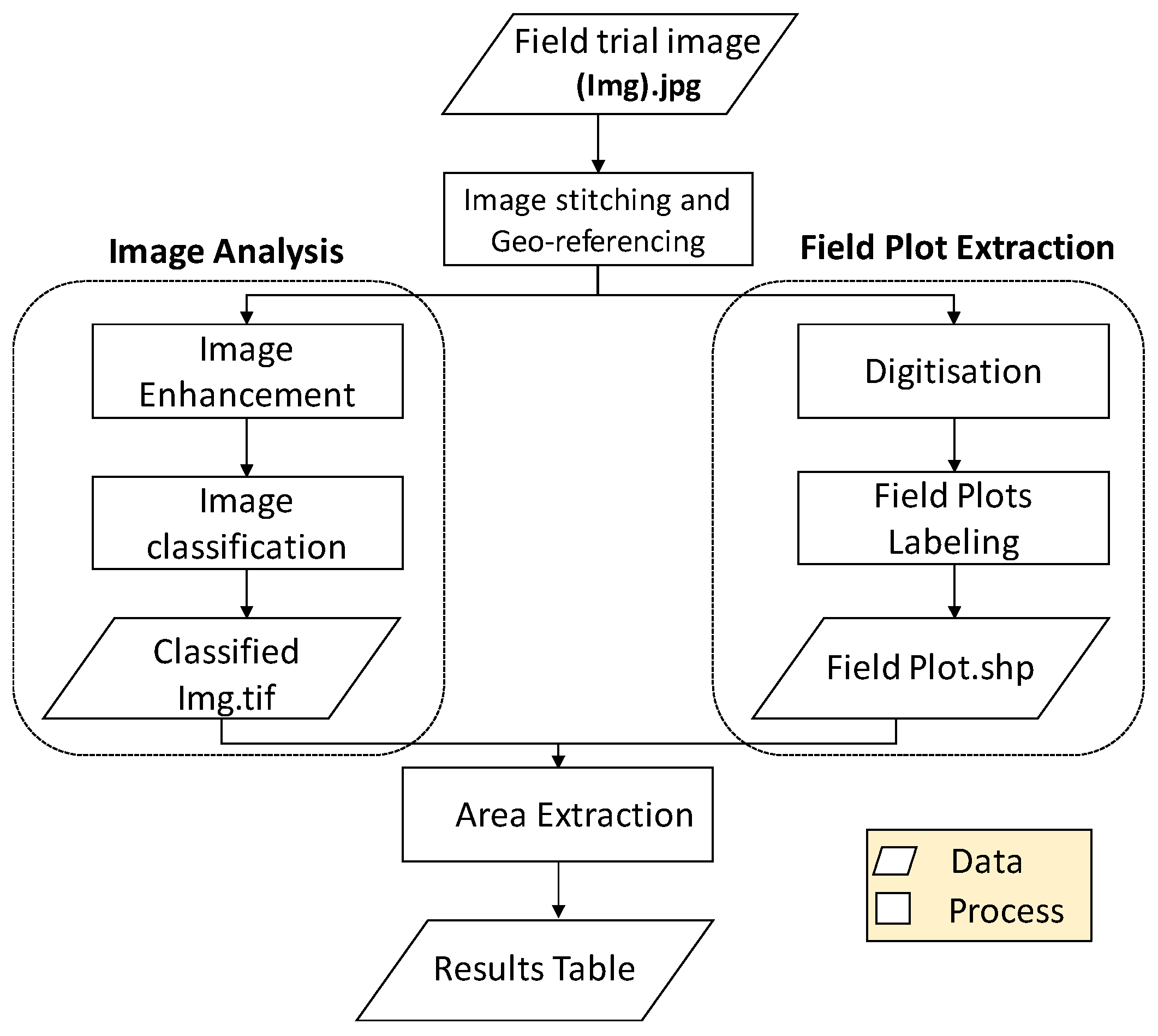

2.3.1. Image Preprocessing

2.3.2. Field Plot Extraction

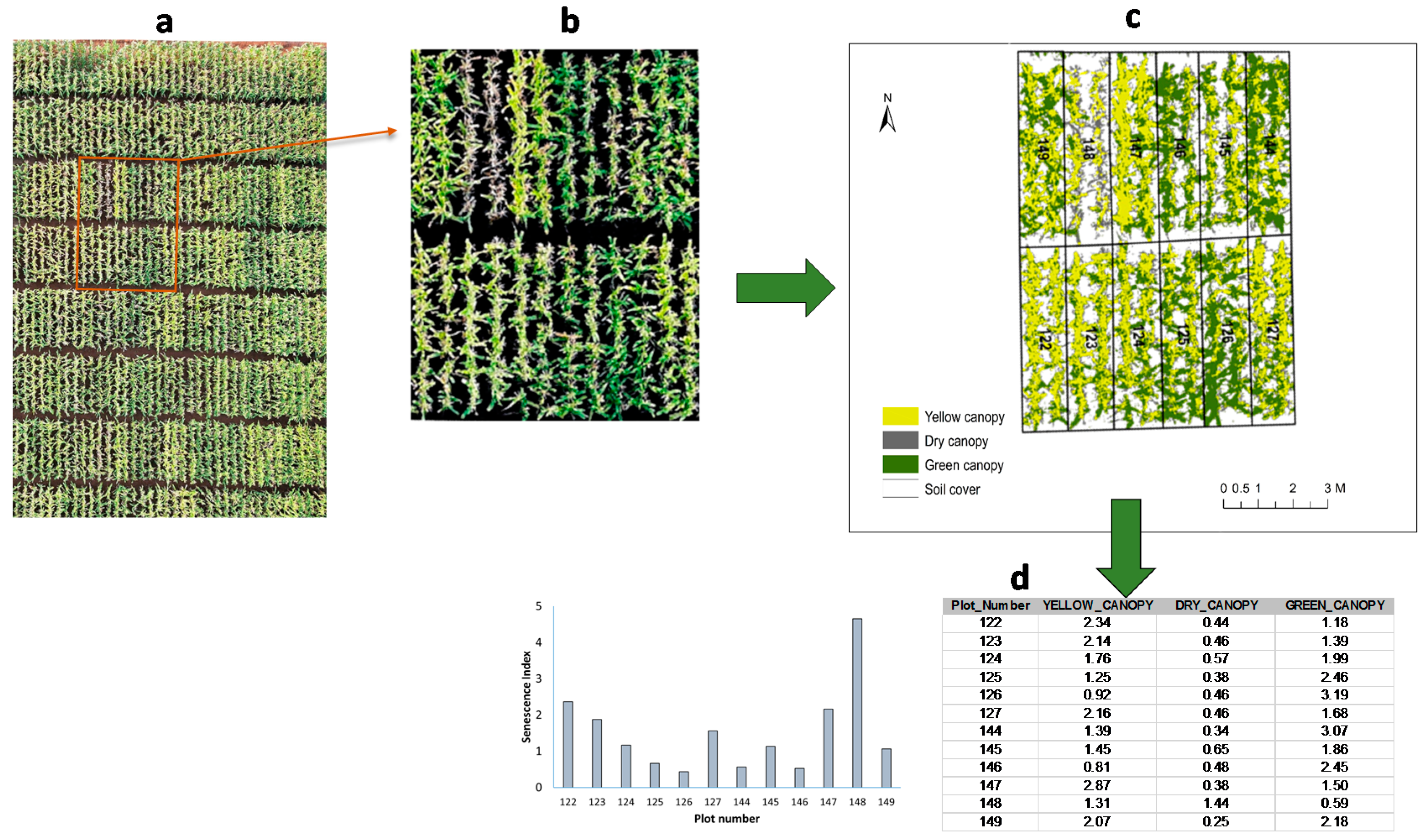

2.3.3. Analysis of Image Mosaics

2.3.4. Data Extraction

2.4. Senescence Index

2.5. Ground Truthing

2.6. Statistical Analysis

3. Results

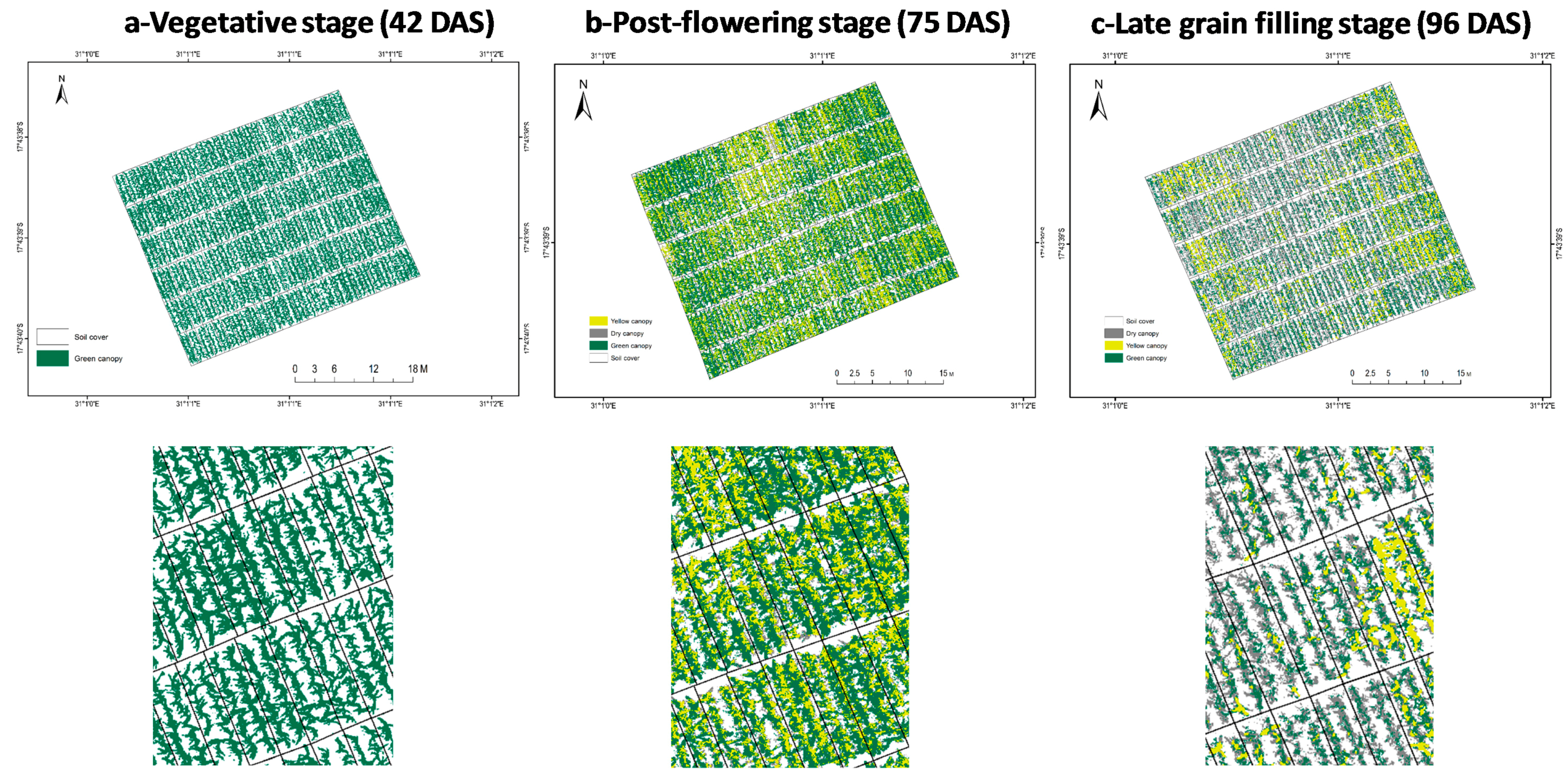

3.1. Ground Canopy Cover

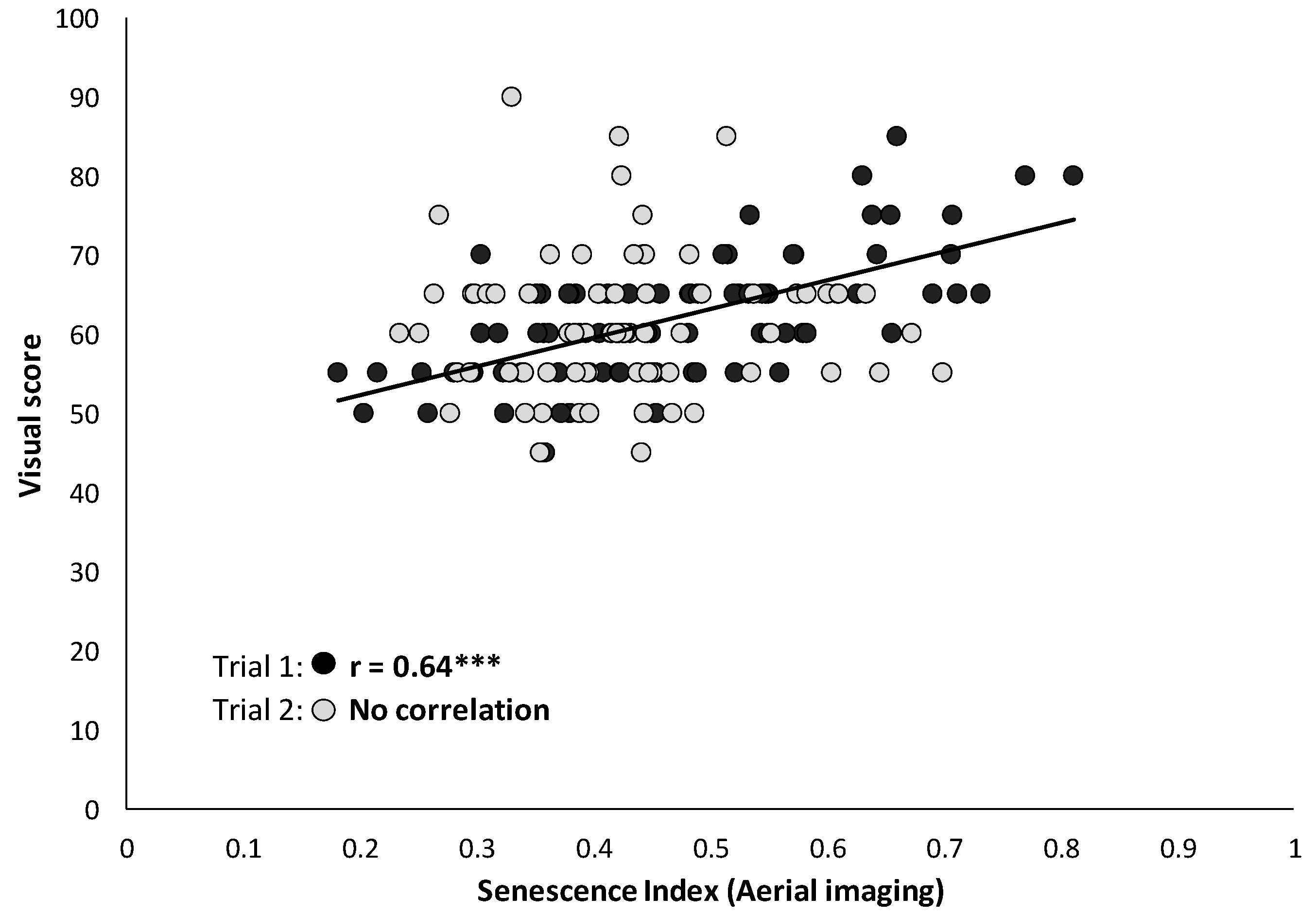

3.2. Leaf Senescence

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Statistic | Canopy: Yellow | Canopy: Dry | Canopy: Green | Total Cover | RGC | Grain Yield (Mg ha⁻¹) |
|---|---|---|---|---|---|---|
| Heritability | 0.526 | 0.766 | 0.544 | 0.602 | 0.547 | 0.547 |
| Mean | 1.625 | 0.376 | 2.379 | 0.660 | 0.358 | 1.670 |
| Genetic correlation (ρg) | 0.602 ** | −0.301 * | 0.616 *** | 0.792 *** | 0.650 *** | - |
| n Replicates | 3 | 3 | 3 | 3 | 3 | 3 |

| Statistic | Aerial Imaging: Sen. Index | Visual Assessment: Sen1 | Visual Assessment: Sen2 | Visual Assessment: Sen3 |
|---|---|---|---|---|
| Heritability | 0.529 | 0.285 | 0.585 | 0.500 |
| Mean | 0.466 | 12.731 | 28.666 | 61.944 |
| Genetic correlation with yield | −0.397 ** | −0.179 | 0.006 | −0.101 |
| n Replicates | 3 | 3 | 3 | 3 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Makanza, R.; Zaman-Allah, M.; Cairns, J.E.; Magorokosho, C.; Tarekegne, A.; Olsen, M.; Prasanna, B.M. High-Throughput Phenotyping of Canopy Cover and Senescence in Maize Field Trials Using Aerial Digital Canopy Imaging. Remote Sens. 2018, 10, 330. https://doi.org/10.3390/rs10020330
