Calibrate Multiple Consumer RGB-D Cameras for Low-Cost and Efficient 3D Indoor Mapping

Abstract

1. Introduction

2. Related Works

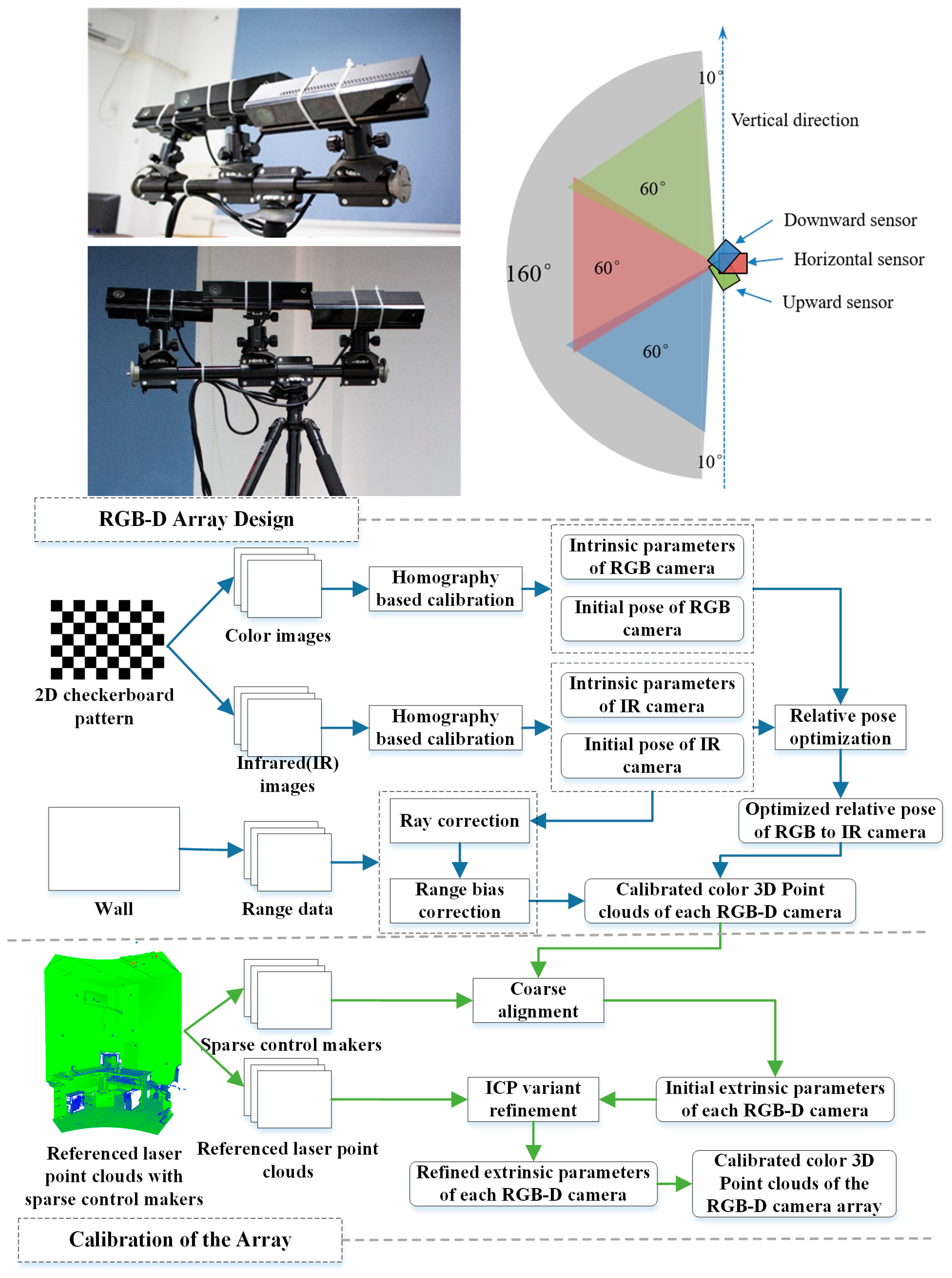

3. Methodology

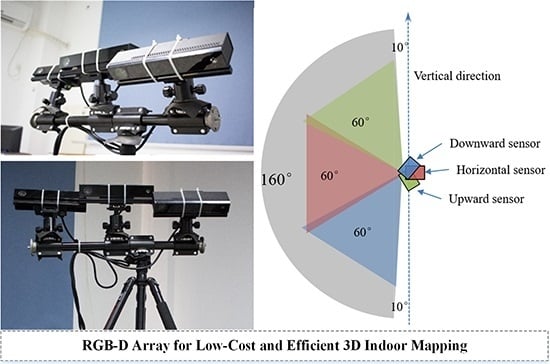

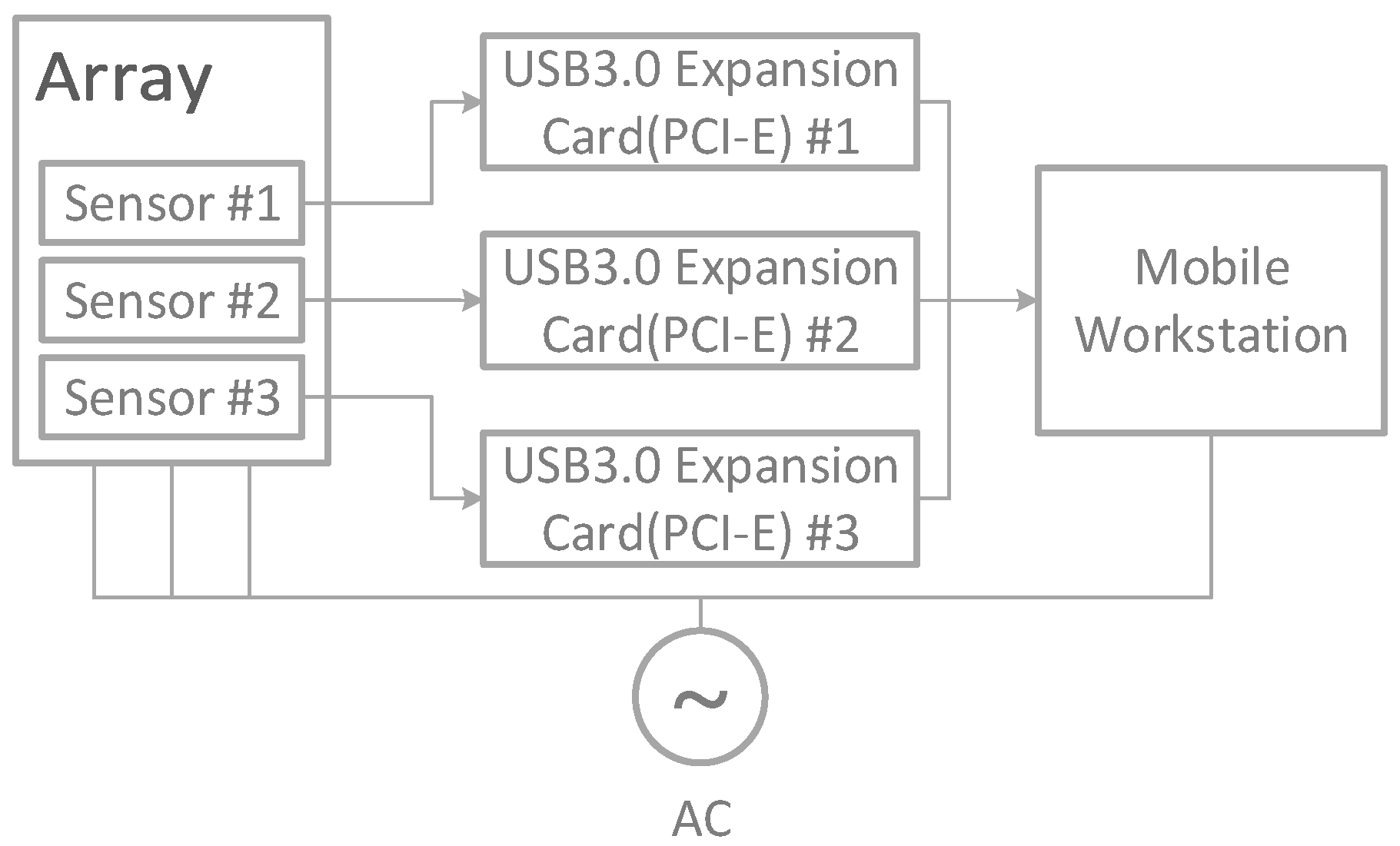

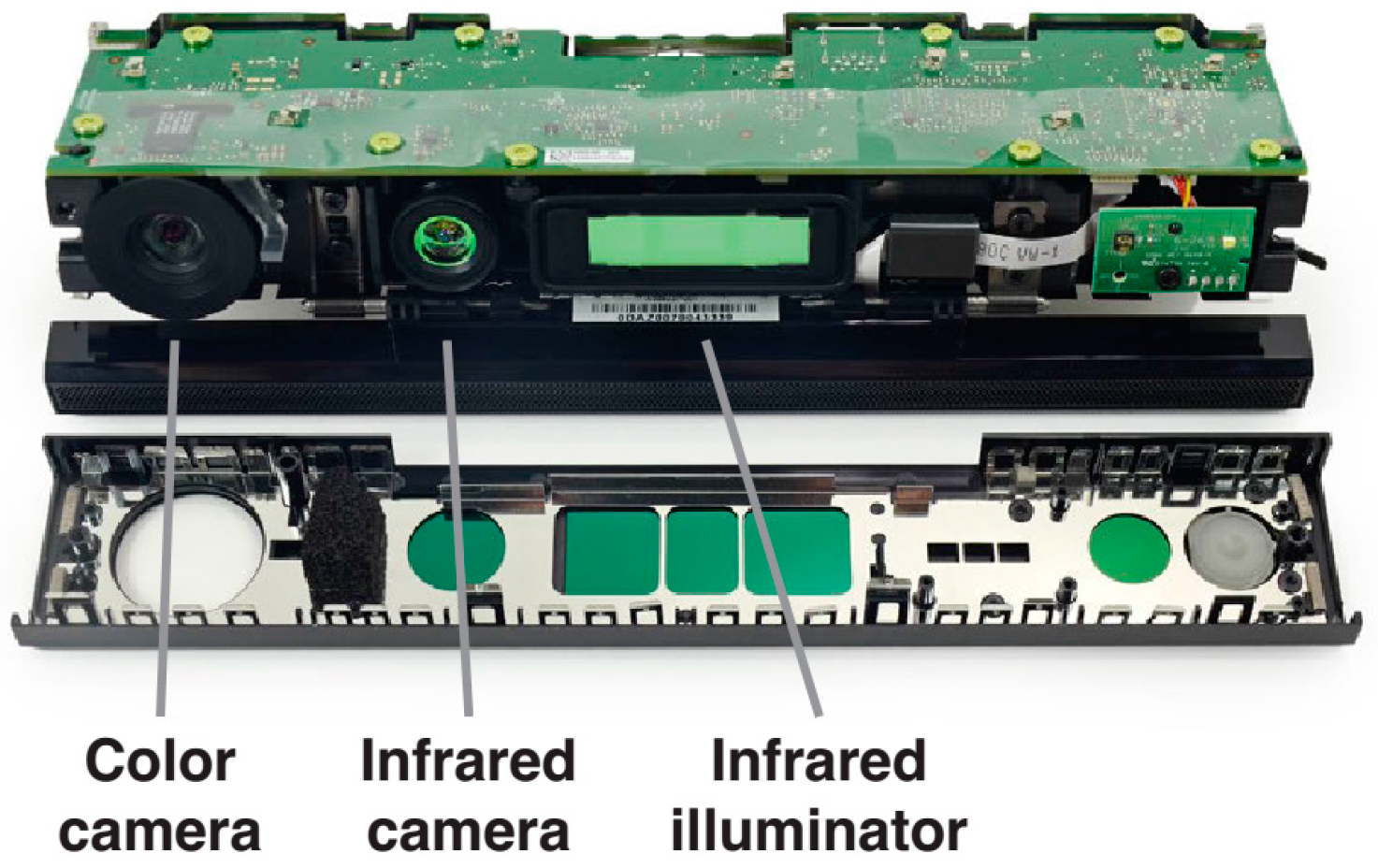

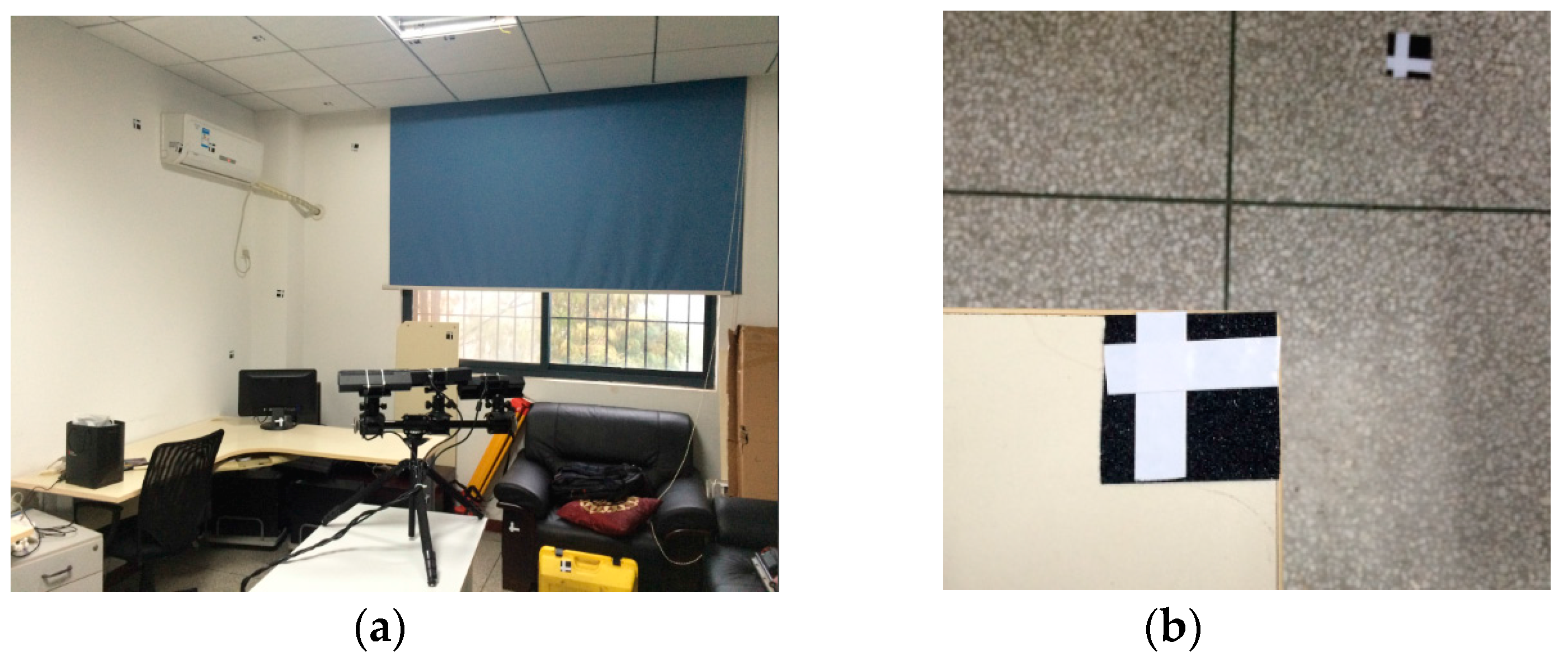

3.1. RGB-D Camera Array System Setup

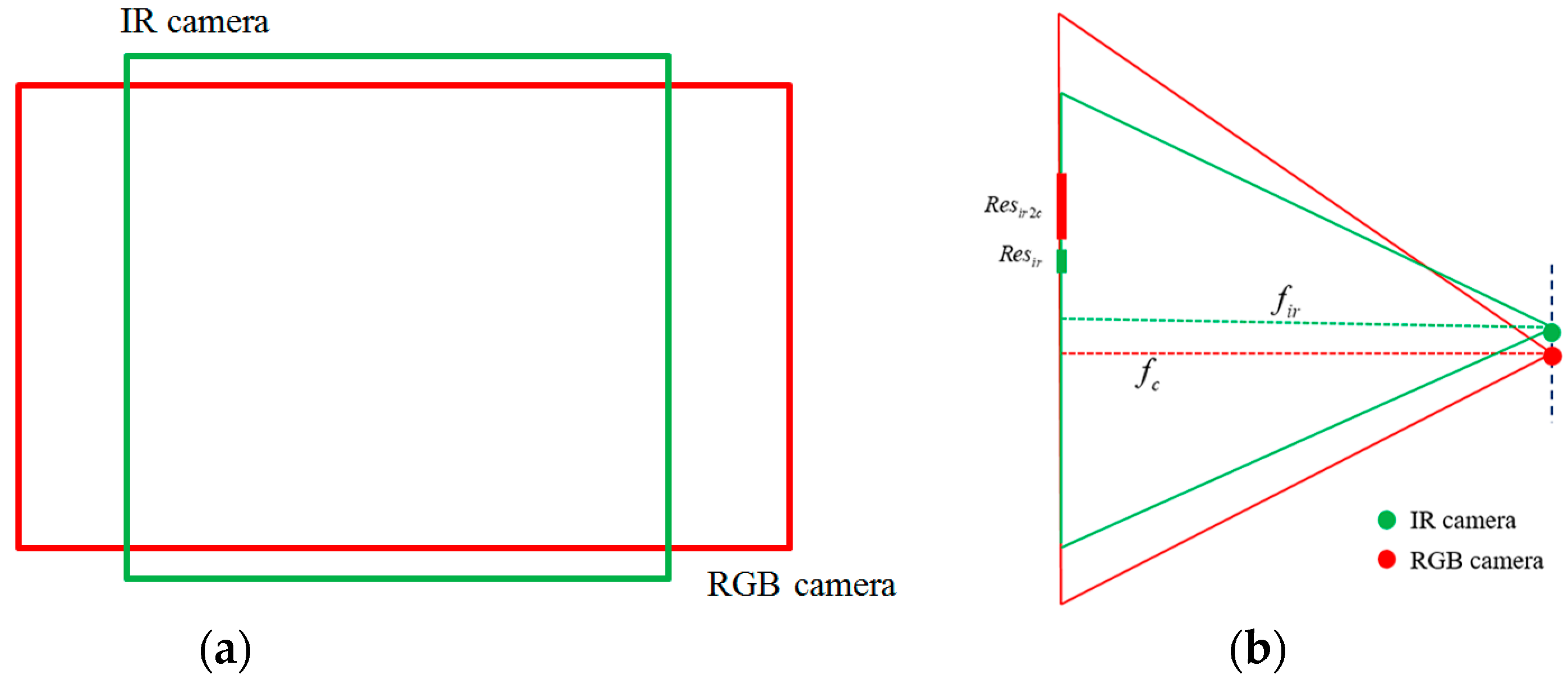

3.2. Intrinsic Calibration of Single RGB-D Sensor

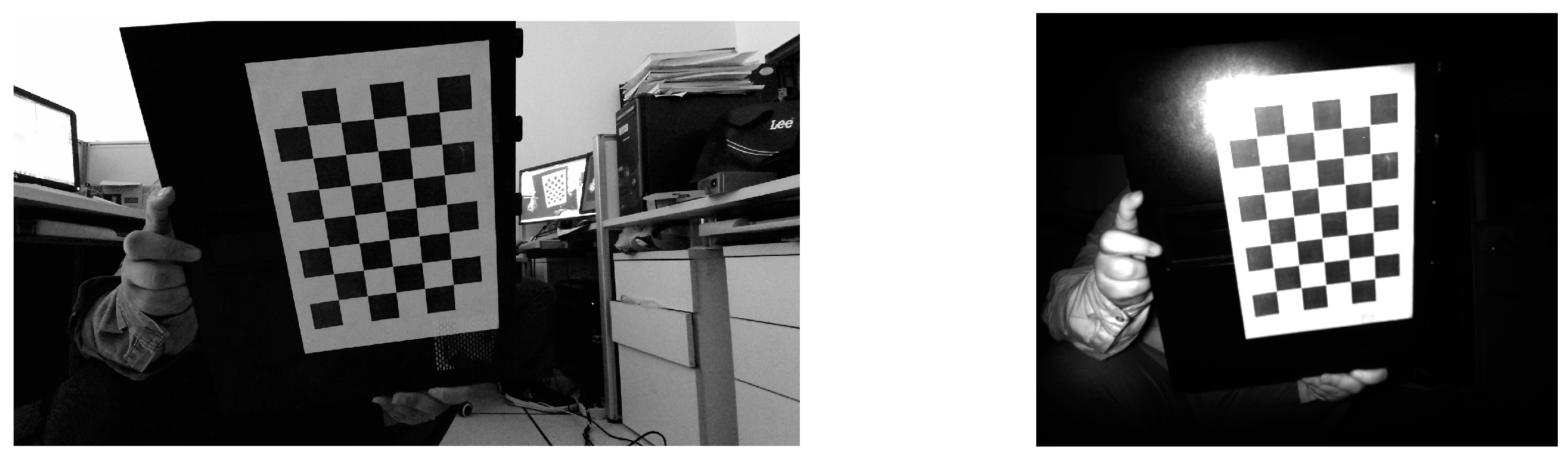

3.2.1. Geometry Calibration

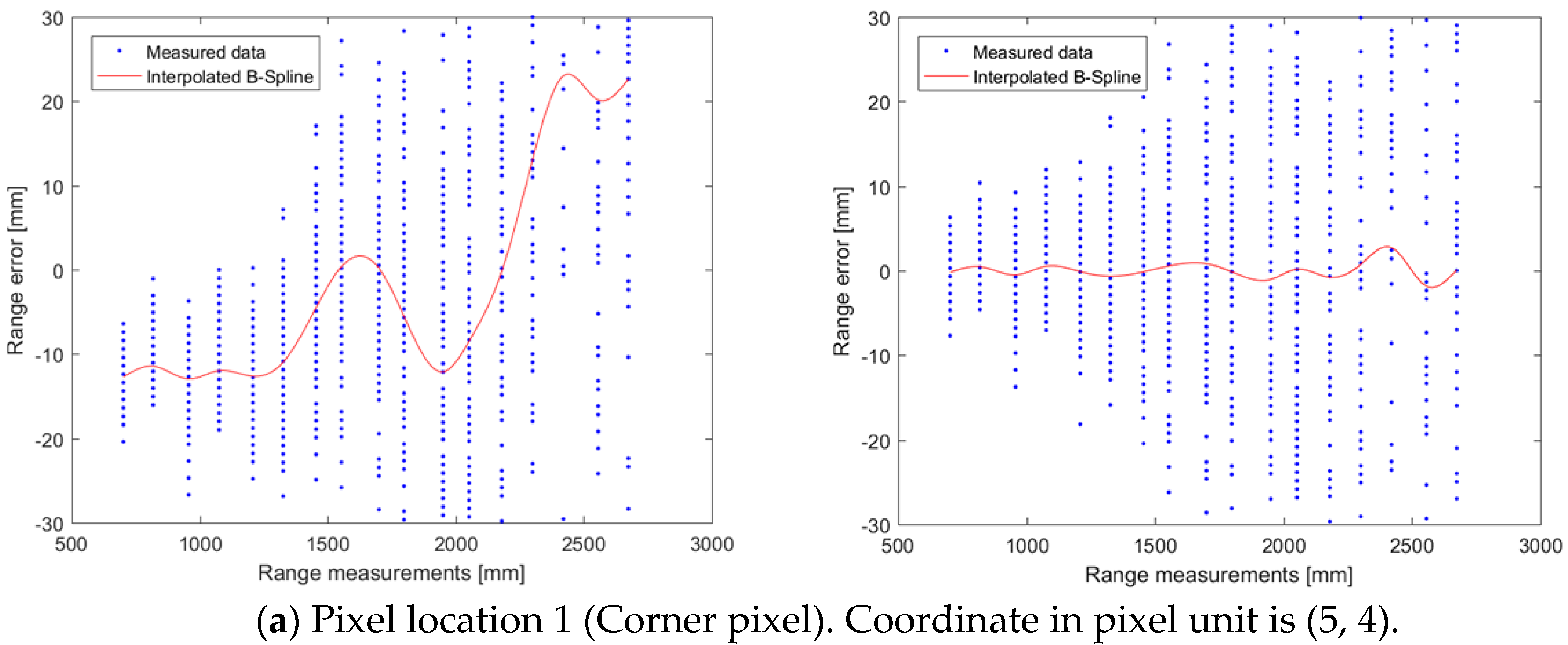

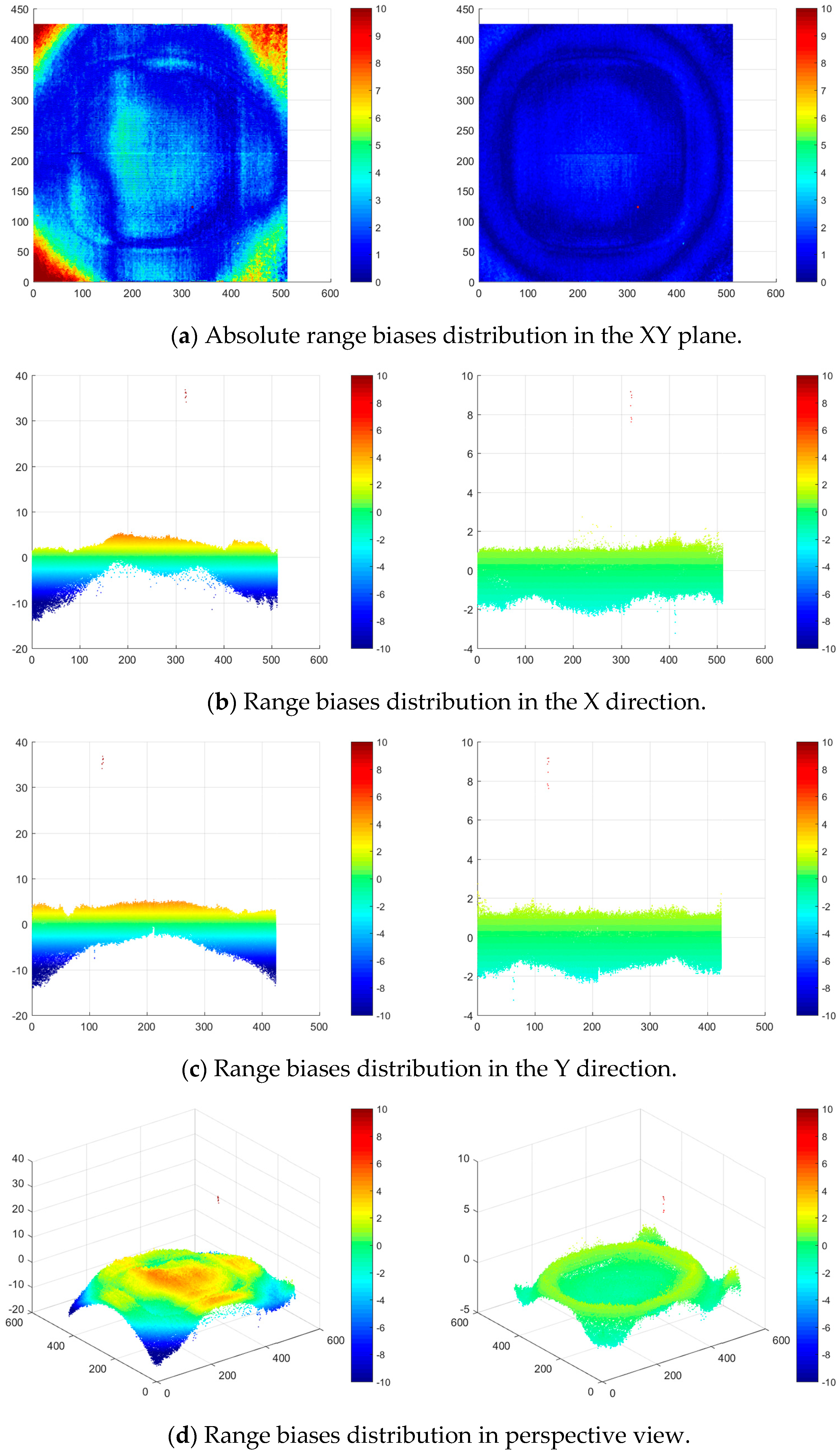

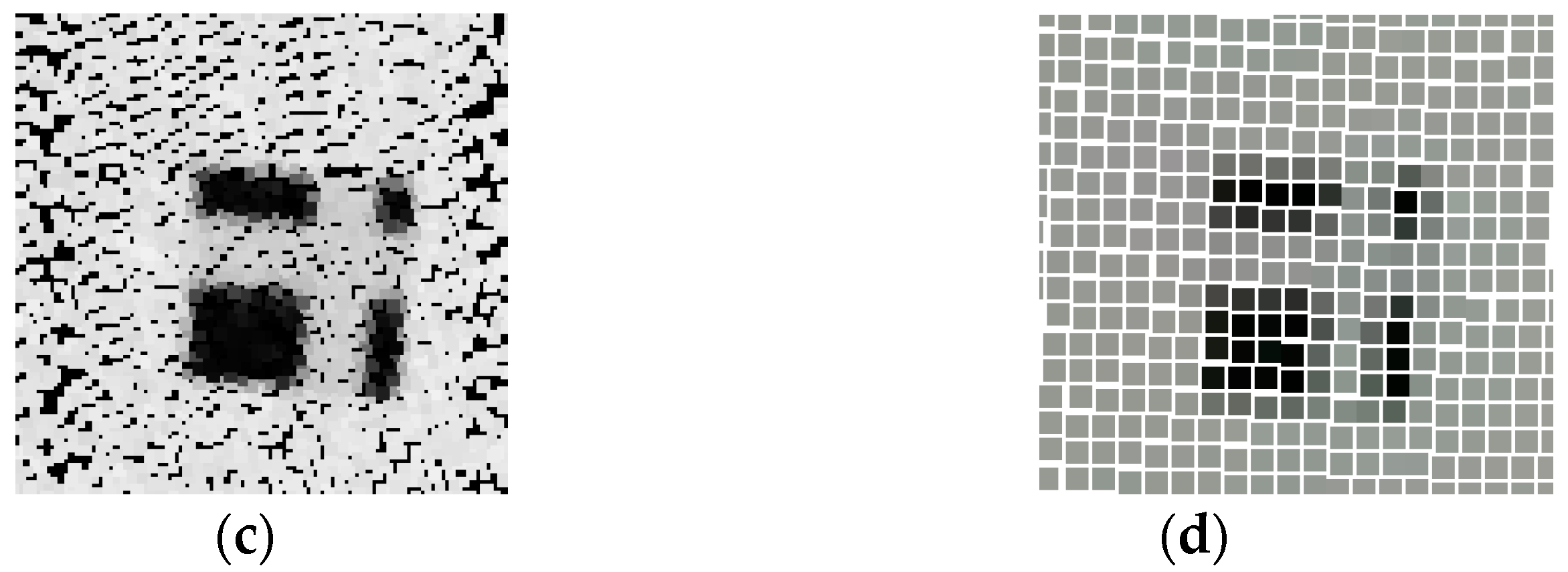

3.2.2. Depth Calibration

3.3. Extrinsic Calibration of RGB-D Sensor Array

4. Experiments and Analysis

4.1. Intrinsic Calibration Results of Single RGB-D Sensor

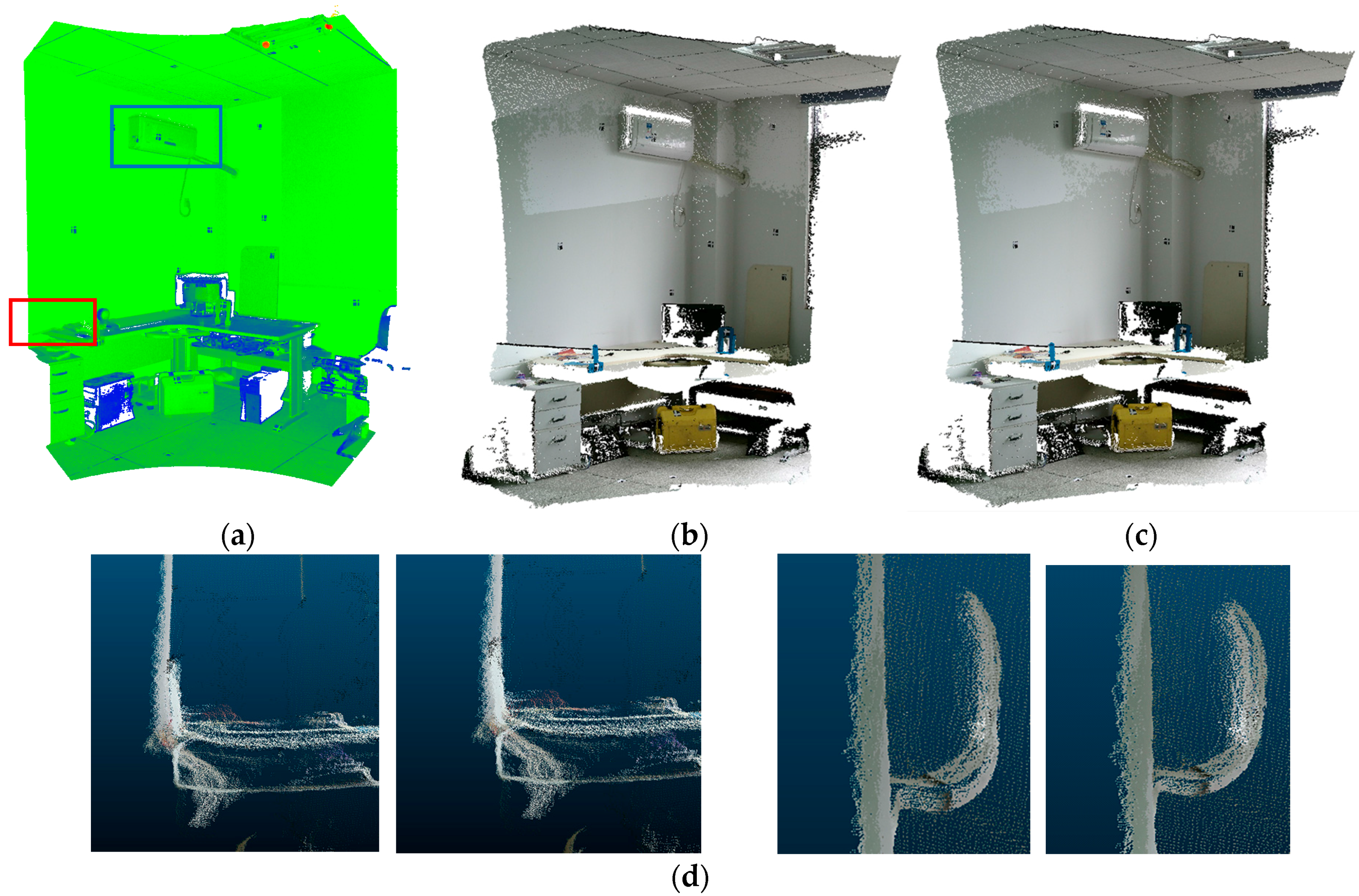

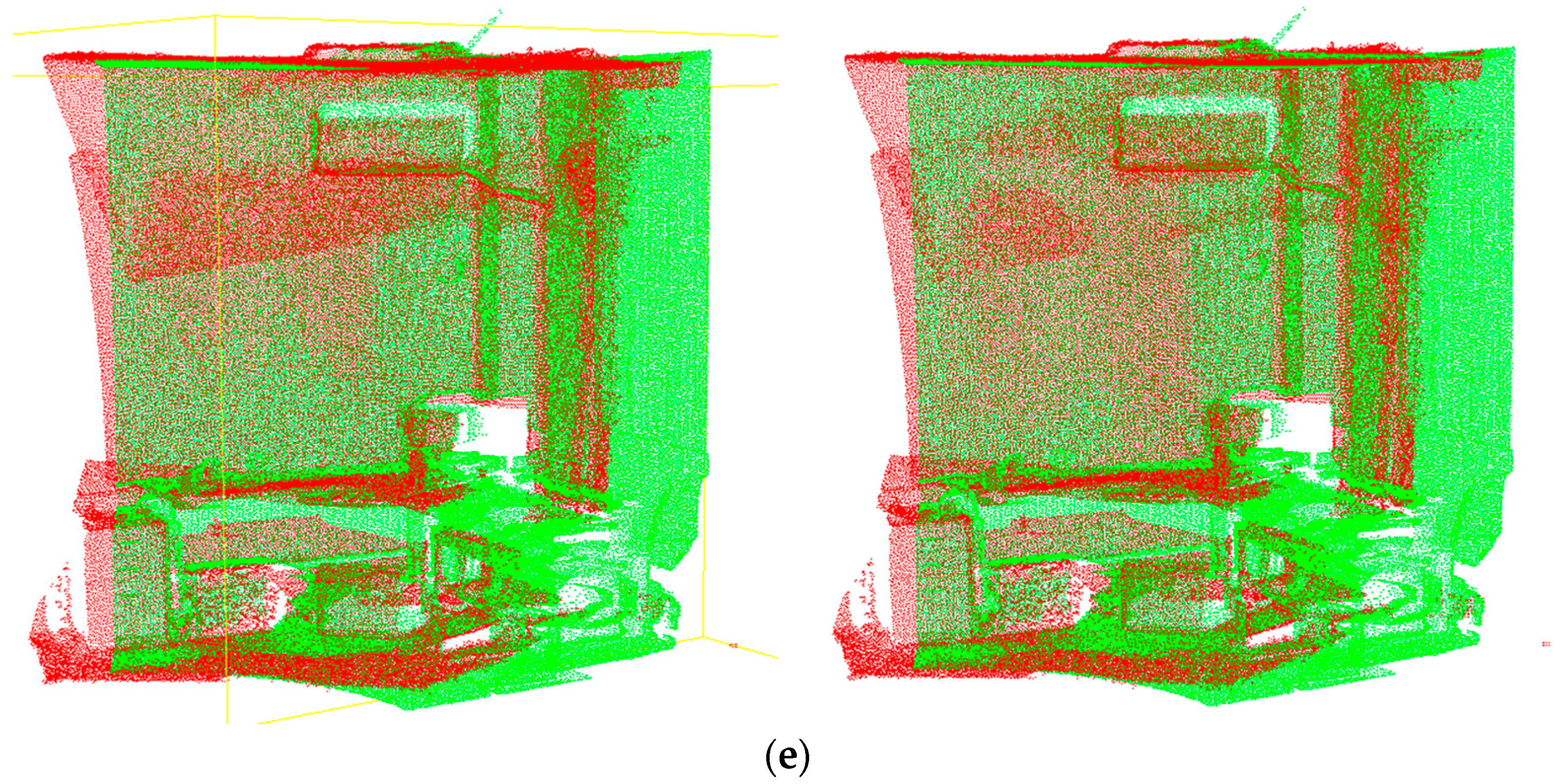

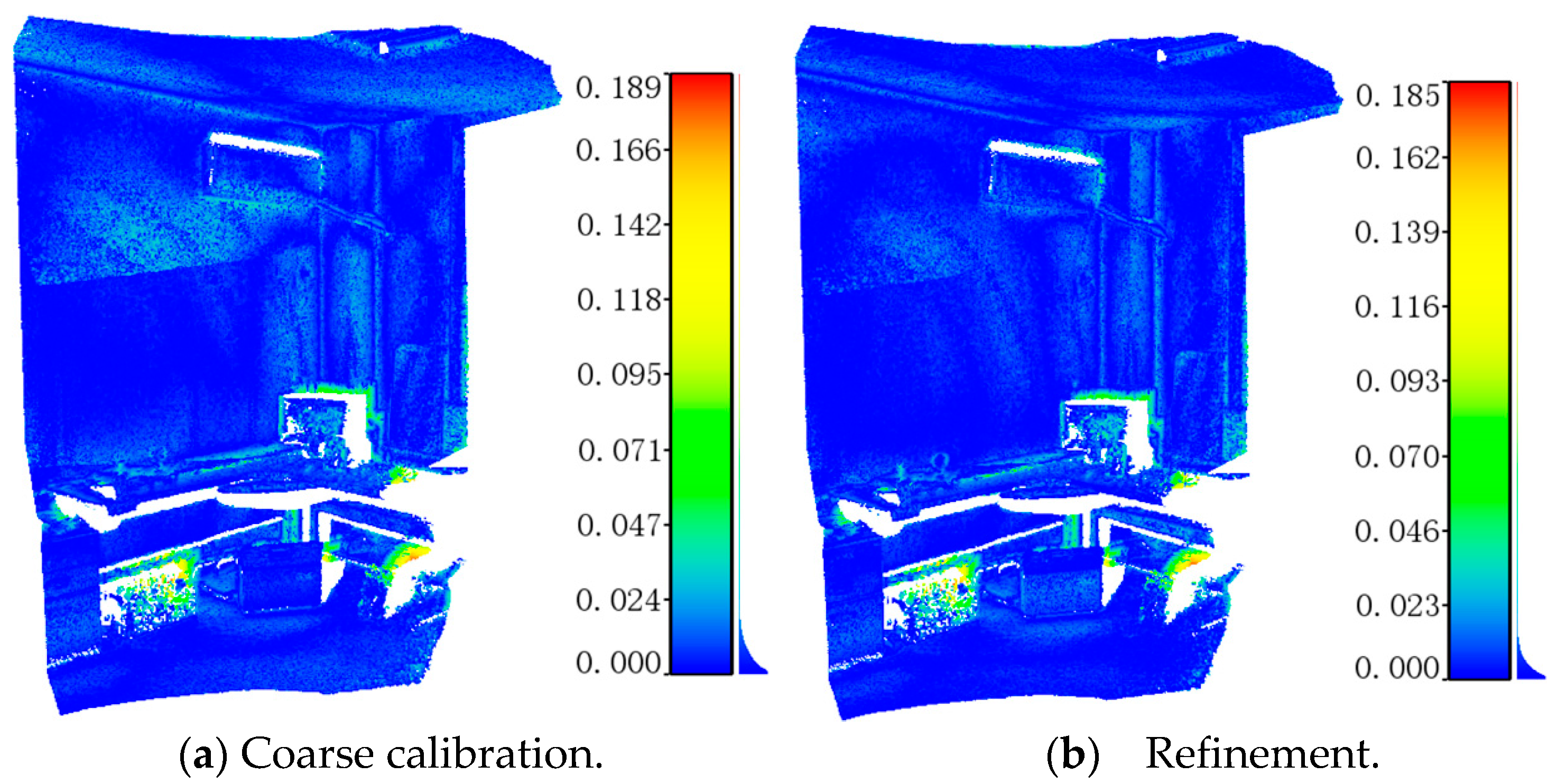

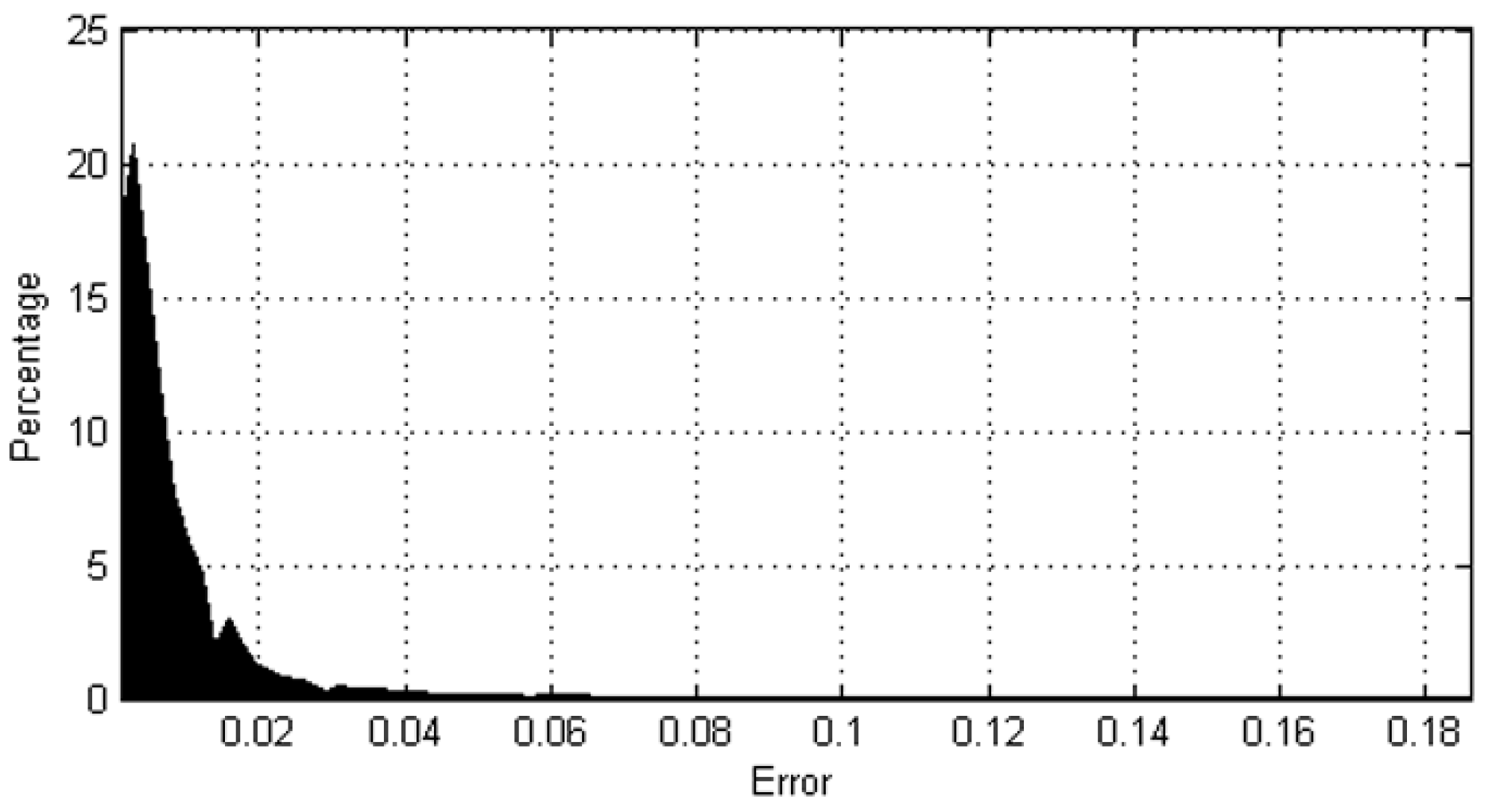

4.2. Extrinsic Calibration Results of the RGB-D Camera Array

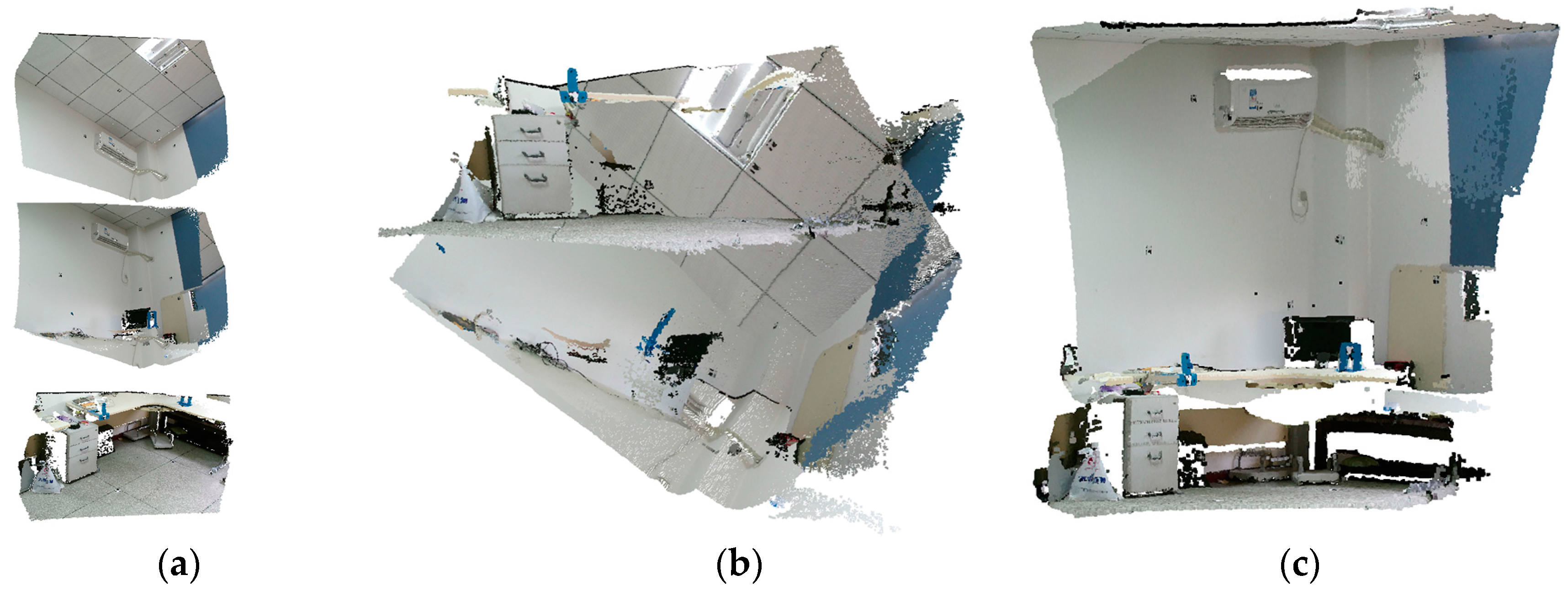

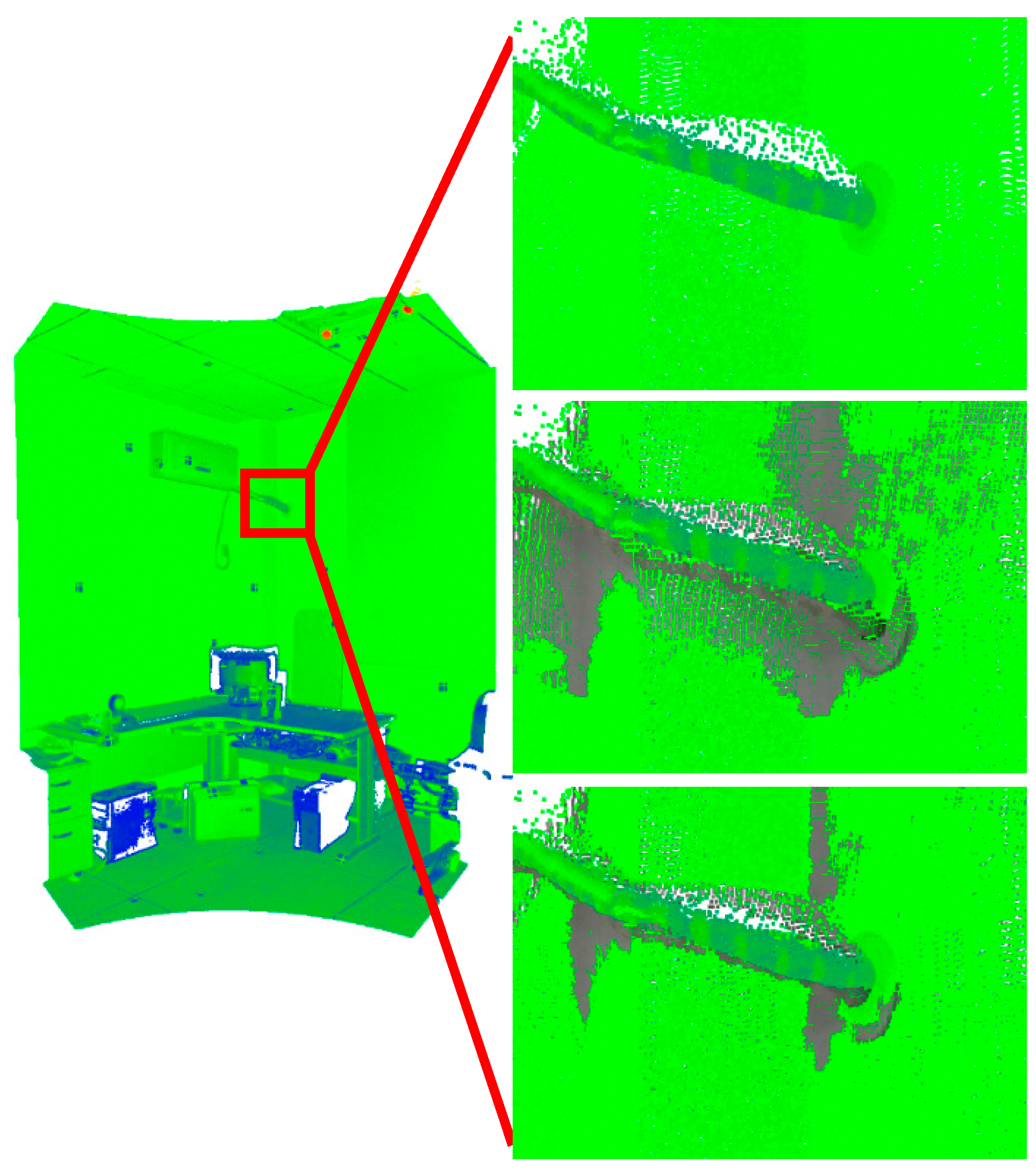

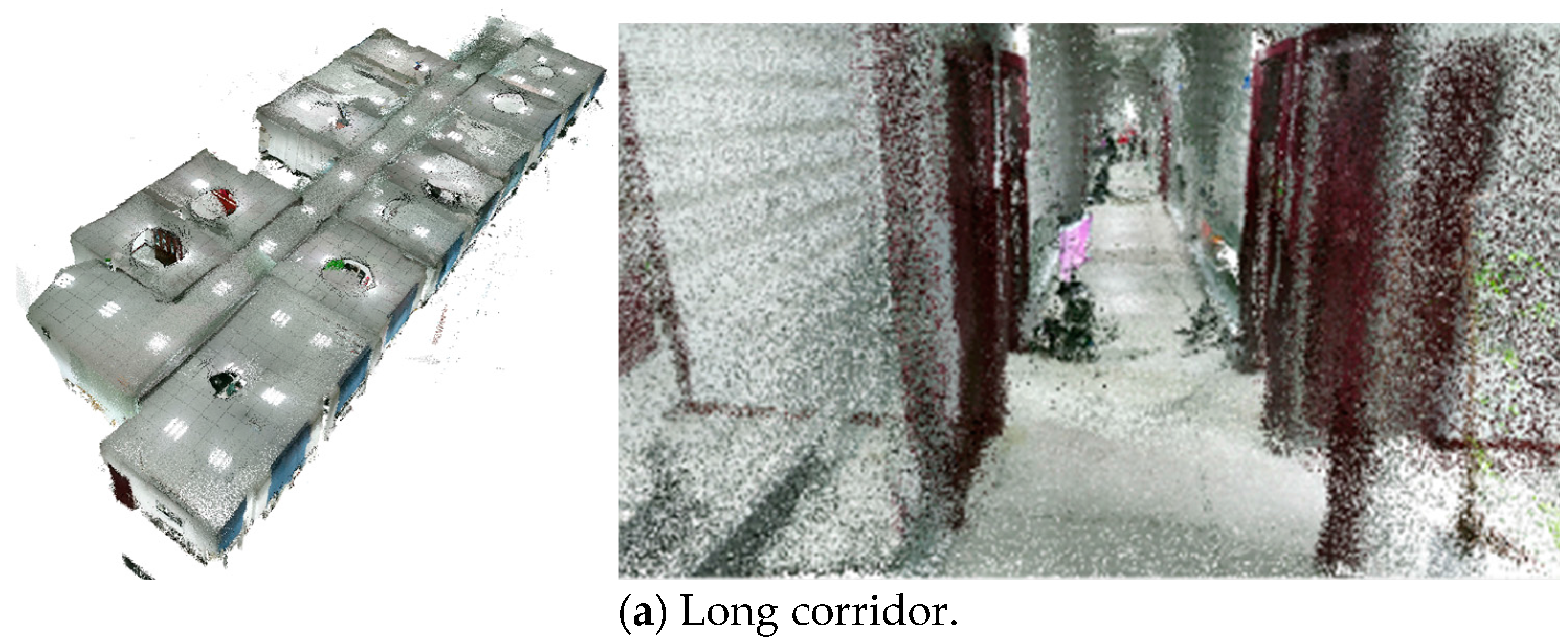

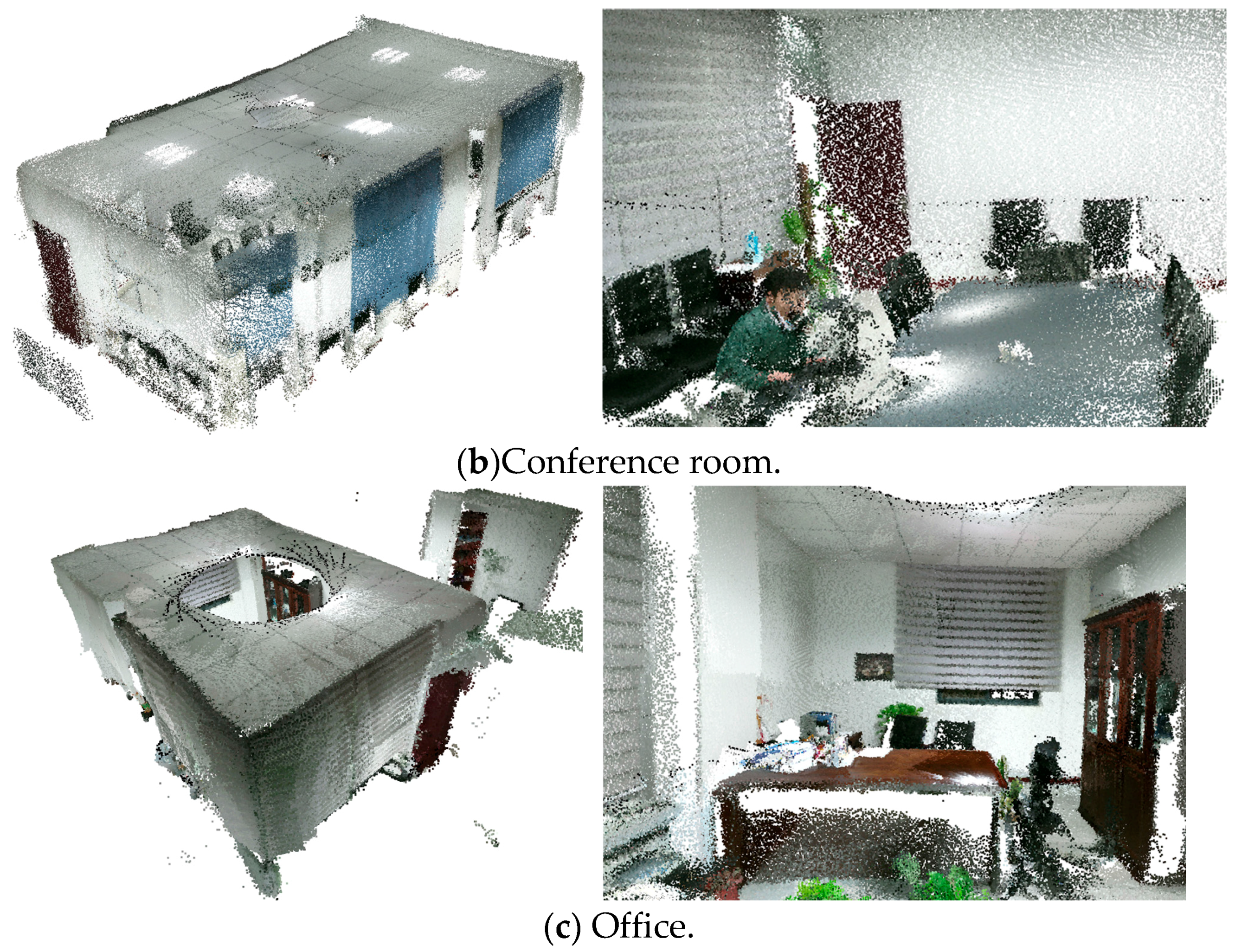

4.3. Indoor Mapping with the Calibrated RGB-D Camera Array

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Specification | Value |
|---|---|
| Depth Image Resolution | 512 × 424 pixels |
| Color Image Resolution | 1920 × 1080 pixels |
| Depth Range | 0.5–4.5 m (official driver); 0.5–9 m (OpenKinect driver) |
| Validated FOV (H × V) | 70° × 60° |
| Frame Rate | 30 Hz |

| Sensors | IR Image | Color Image | Sync Image |
|---|---|---|---|
| Sensor #1 | 105 | 112 | 112 |
| Sensor #2 | 125 | 131 | 133 |
| Sensor #3 | 109 | 106 | 108 |

| Parameter | RGB Camera, Upward | RGB Camera, Horizontal | RGB Camera, Downward | IR Camera, Upward | IR Camera, Horizontal | IR Camera, Downward |
|---|---|---|---|---|---|---|
| fx (pixel) | 1.0476 × 10³ | 1.0441 × 10³ | 1.0496 × 10³ | 3.5898 × 10² | 3.6303 × 10² | 3.6079 × 10² |
| fy (pixel) | 1.0464 × 10³ | 1.0461 × 10³ | 1.0493 × 10³ | 3.5925 × 10² | 3.6396 × 10² | 3.6104 × 10² |
| u0 | 9.3196 × 10² | 9.4695 × 10² | 9.4030 × 10² | 2.5353 × 10² | 2.4947 × 10² | 2.5407 × 10² |
| v0 | 5.3339 × 10² | 5.3738 × 10² | 5.4417 × 10² | 1.9781 × 10² | 2.1056 × 10² | 2.0385 × 10² |
| k1 | 3.8126 × 10⁻² | 4.4726 × 10⁻² | 3.9747 × 10⁻² | 8.5110 × 10⁻² | 7.0900 × 10⁻² | 9.6235 × 10⁻² |
| k2 | −4.4836 × 10⁻² | −5.5681 × 10⁻² | −4.5250 × 10⁻² | −2.7264 × 10⁻¹ | −2.3734 × 10⁻¹ | −3.2029 × 10⁻¹ |
| k3 | 1.0454 × 10⁻² | 1.4570 × 10⁻² | 4.5968 × 10⁻³ | 1.2137 × 10⁻¹ | 7.8478 × 10⁻² | 1.7748 × 10⁻¹ |
| p1 | −4.4198 × 10⁻³ | 6.1033 × 10⁻³ | 2.5611 × 10⁻³ | −3.0022 × 10⁻³ | 3.0057 × 10⁻³ | −2.5319 × 10⁻³ |
| p2 | −7.0858 × 10⁻³ | −2.7240 × 10⁻⁴ | −5.6884 × 10⁻³ | −2.2251 × 10⁻³ | −5.8013 × 10⁻⁴ | 1.3550 × 10⁻³ |
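
The table above lists, for each camera, a principal point (u0, v0), radial terms k1 to k3 and tangential terms p1 and p2, which matches the usual pinhole model with Brown-style lens distortion. As a minimal sketch of how such parameters map a 3D point to a pixel, the snippet below uses the values of the horizontal RGB camera. It rests on assumptions that the table itself does not confirm: the two rows given in pixels are taken to be the focal lengths (named `FX`, `FY` here), and the distortion terms are applied in the common OpenCV-style ordering, which may differ from the authors' formulation. The function name `project` is hypothetical.

```python
# Values copied from the horizontal RGB camera column of the table above.
# ASSUMPTION: the two rows labelled only "(Pixel)" are focal lengths fx, fy.
FX, FY = 1.0441e3, 1.0461e3
U0, V0 = 9.4695e2, 5.3738e2
K1, K2, K3 = 4.4726e-2, -5.5681e-2, 1.4570e-2
P1, P2 = 6.1033e-3, -2.7240e-4


def project(point_cam):
    """Project a 3D point in the camera frame to pixel coordinates (u, v).

    ASSUMPTION: OpenCV-style ordering of the radial/tangential terms.
    """
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    # Normalised image coordinates on the plane Z = 1.
    x, y = X / Z, Y / Z
    r2 = x * x + y * y
    # Radial distortion factor: 1 + k1 r^2 + k2 r^4 + k3 r^6.
    radial = 1.0 + K1 * r2 + K2 * r2 ** 2 + K3 * r2 ** 3
    # Tangential (decentering) distortion added to the radially scaled point.
    x_d = x * radial + 2.0 * P1 * x * y + P2 * (r2 + 2.0 * x * x)
    y_d = y * radial + P1 * (r2 + 2.0 * y * y) + 2.0 * P2 * x * y
    # Scale by the focal lengths and shift by the principal point.
    return FX * x_d + U0, FY * y_d + V0


if __name__ == "__main__":
    # A point on the optical axis lands on the principal point.
    print(project((0.0, 0.0, 2.0)))
    # A point 10 cm to the right at 1 m depth lands right of the principal point.
    print(project((0.1, 0.0, 1.0)))
```

A point on the optical axis has zero normalised coordinates, so every distortion term vanishes and the result is exactly (u0, v0); this is a quick sanity check for any reimplementation.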

| Camera | Max Residual Error | RMSE |
|---|---|---|
| Color #1 | 0.8563 | 0.1830 |
| IR #1 | 0.3793 | 0.0826 |
| Color #2 | 0.9986 | 0.1644 |
| IR #2 | 0.3114 | 0.0695 |
| Color #3 | 0.8071 | 0.2377 |
| IR #3 | 0.3634 | 0.0909 |

| Bias Range | <1 mm | 1–3 mm | 3–5 mm | 5–10 mm | 10–15 mm | >15 mm |
|---|---|---|---|---|---|---|
| After depth calibration | 81.020% | 18.899% | 0.000% | 0.081% | 0.000% | 0.000% |
| Before depth calibration | 36.374% | 43.063% | 13.441% | 6.470% | 0.573% | 0.081% |
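
One way to read the bias table above is to sum the first two bins, which gives the share of measurements with a bias under 3 mm. The short sketch below does that arithmetic on the percentages copied from the table; the 3 mm threshold and the helper name `share_below_3mm` are illustrative choices, not something the source singles out.

```python
# Percentages copied from the bias table above, in bin order:
# <1 mm, 1-3 mm, 3-5 mm, 5-10 mm, 10-15 mm, >15 mm.
before = [36.374, 43.063, 13.441, 6.470, 0.573, 0.081]
after = [81.020, 18.899, 0.000, 0.081, 0.000, 0.000]


def share_below_3mm(row):
    """Sum the '<1 mm' and '1-3 mm' bins of one table row (in percent)."""
    return round(row[0] + row[1], 3)


if __name__ == "__main__":
    print(share_below_3mm(before))  # 79.437
    print(share_below_3mm(after))   # 99.919
```

Under this reading, the share of measurements biased by less than 3 mm rises from about 79.4% before depth calibration to about 99.9% after it.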

| Calibration step | Max Residual Error | Mean Residual Error | RMSE |
|---|---|---|---|
| Coarse calibration | 0.189 | 0.008 | 0.04 |
| Fine calibration | 0.185 | 0.005 | 0.01 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, C.; Yang, B.; Song, S.; Tian, M.; Li, J.; Dai, W.; Fang, L. Calibrate Multiple Consumer RGB-D Cameras for Low-Cost and Efficient 3D Indoor Mapping. Remote Sens. 2018, 10, 328. https://doi.org/10.3390/rs10020328
