1. Introduction

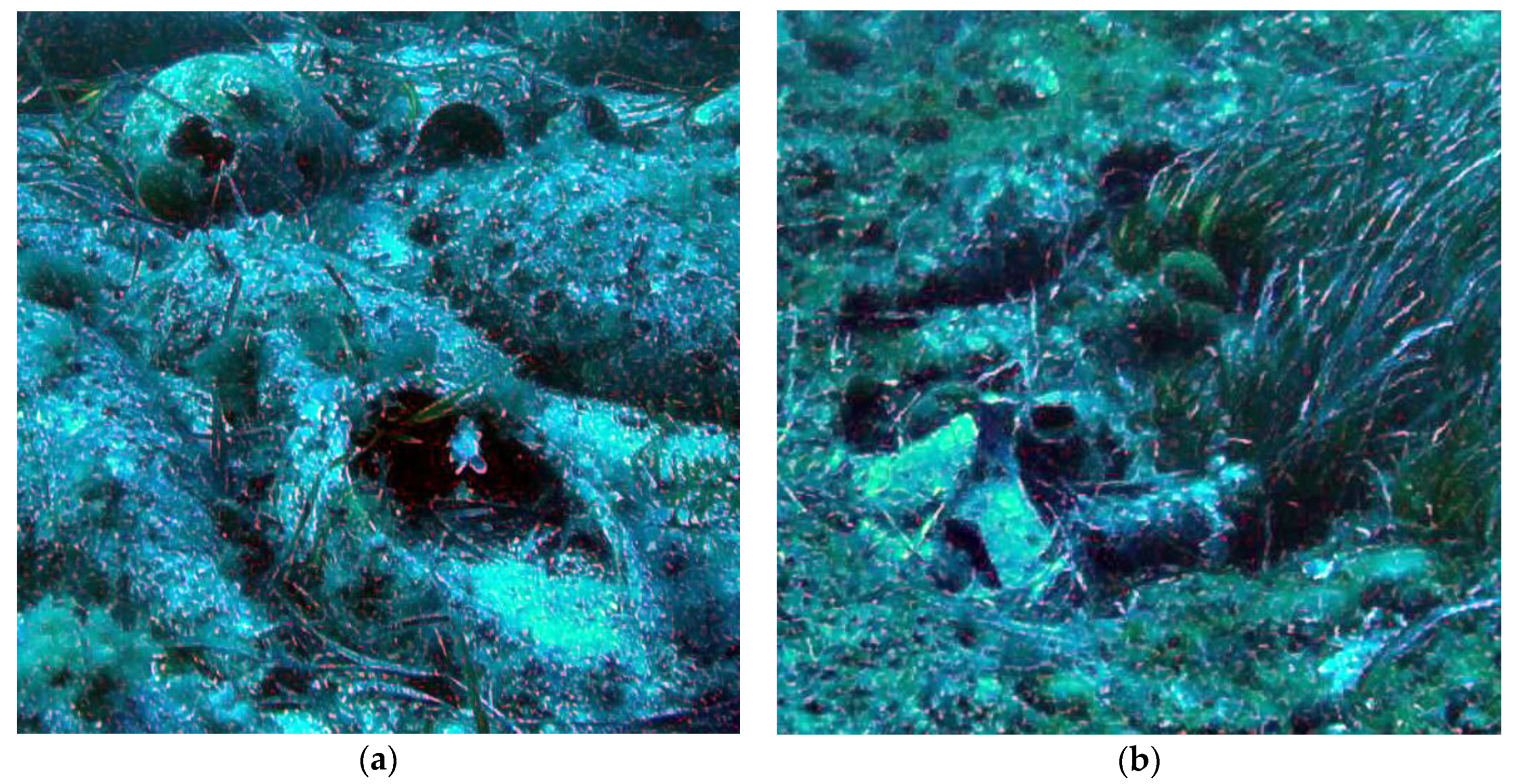

The scattering and absorption of light degrade the quality of underwater images. These phenomena are caused by suspended particles in water and by the propagation of light through the water, which is attenuated differently according to its wavelength, the water column depth, and the distance between the objects and the point of view. Consequently, as the water column deepens, the various components of sunlight are absorbed differently by the medium, depending on their wavelengths. This leads to a dominance of blue/green colours in underwater imagery, which is known as colour cast. Artificial light can increase visibility and recover colour, but an artificial light source does not illuminate the scene uniformly and can produce bright spots in the images due to the backscattering of light in the water medium.

The benchmark presented in this research is a part of the iMARECULTURE project [

1,

2,

3], which aims to develop new tools and technologies to improve the public awareness of underwater cultural heritage. In particular, it includes the development of a Virtual Reality environment that reproduces faithfully the appearance of underwater sites, thus offering the possibility to visualize the archaeological remains as they would appear in air. This goal requires the benchmarking of different image enhancement methods to figure out which one performs better in different environmental and illumination conditions. We published another work [

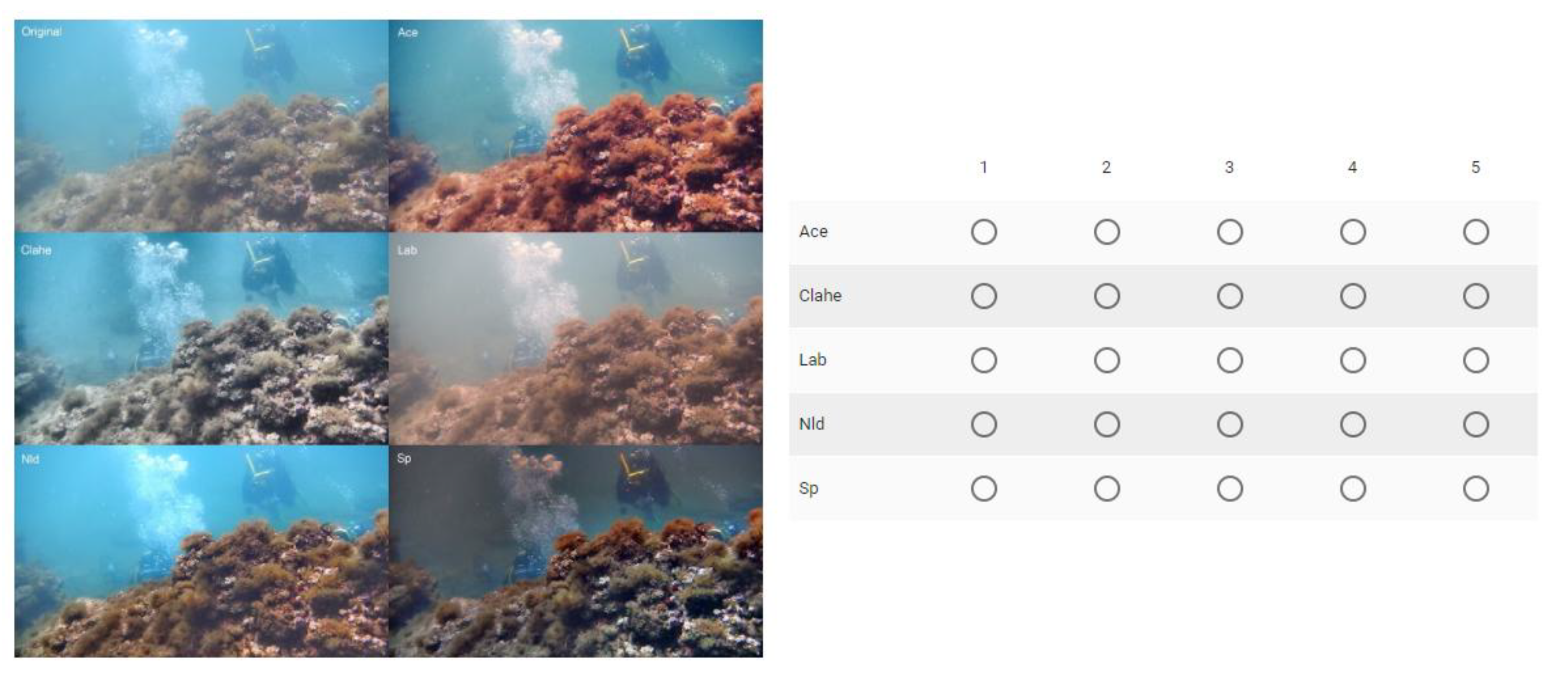

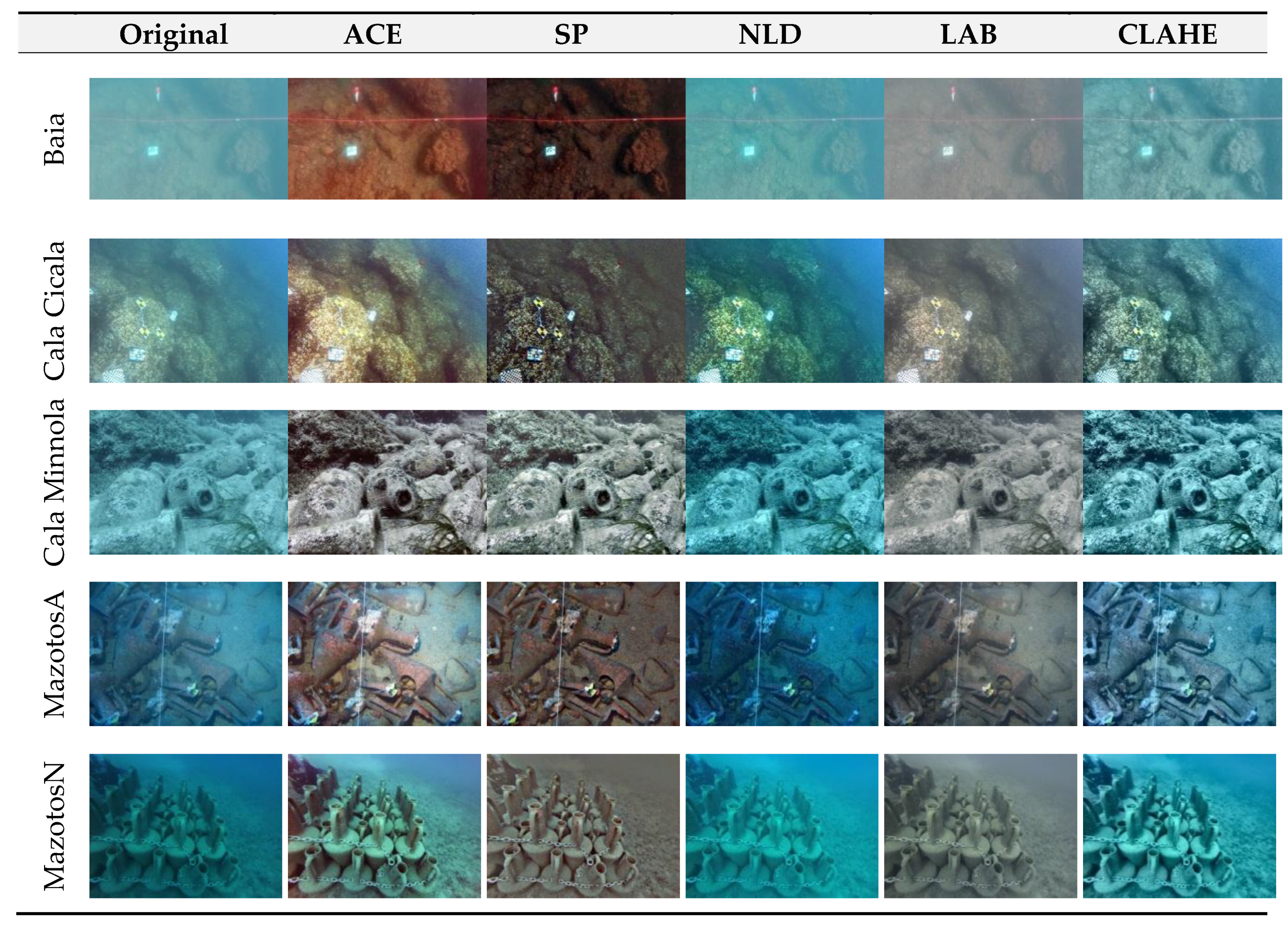

4] in which we selected five methods from the state of the art and used them to enhance a dataset of images produced in various underwater sites at heterogeneous conditions of depth, turbidity and lighting. These enhanced images were evaluated by means of some quantitative metrics. Presently, we will extend our previous work by introducing two more approaches meant for a more comprehensive benchmarking of the underwater image enhancement methods. The first of these additional approaches was conducted with a panel of experts in the field of underwater imagery, members of iMARECULTURE project, and the other one is based on the results of 3D reconstructions. Furthermore, since we modified some images in our dataset by adding some new ones, we also report the results of the quantitative metrics, as done in our previous work.

In highly detailed underwater surveys, the availability of radiometric information, along with 3D data regarding the surveyed objects, becomes crucial for many diagnostics and interpretation tasks [5]. To this end, different image enhancement and colour correction methods have been proposed and tested for their effectiveness in both clear and turbid waters [6]. Our purpose was to supply the researchers in the underwater community with more detailed information about the employment of a specific enhancement method in different underwater conditions. Moreover, we were interested in verifying whether different benchmarking approaches produce consistent results.

The problem of underwater image enhancement is closely related to single image dehazing, in which images are degraded by weather conditions such as haze or fog. A variety of approaches have been proposed to solve image dehazing, and in the present paper we have reported their most effective examples. Furthermore, we also report methods that address the problem of non-uniform illumination in the images and those that focus on colour correction.

Single image dehazing methods assume that only one input image is available and rely on image priors to recover a dehazed scene. One of the most cited works on single image dehazing is the dark channel prior (DCP) [7]. It assumes that, within small image patches, there will be at least one pixel with a dark colour channel. It then uses this assumption to estimate the transmission and to recover the image. However, this prior was not designed to work underwater, and it does not take into account the different absorption rates of the three colour channels. In [8], an extension of DCP to deal with underwater image restoration is presented. Given that the red channel is often nearly dark in underwater images, this new prior, called Underwater Dark Channel Prior (UDCP), considers just the green and the blue colour channels in order to estimate the transmission. An author frequently cited in the field is R. Fattal, with two works [9,10]. In the first work [9], Fattal, taking into account surface shading and the transmission function, tried to resolve ambiguities in the data by searching for a solution in which the resulting shading and transmission functions are statistically uncorrelated.
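The dark channel idea described above can be illustrated with a minimal sketch. It is not the implementation used in [7] or [8]: the image representation (nested lists of RGB tuples in [0, 1]), the function names, the patch size and the `omega` factor are all assumptions made for illustration, and the transmission is estimated with the commonly used form t = 1 − ω · dark(I / A), where A is an assumed background light. Passing `channels=(1, 2)` restricts the prior to green and blue, in the spirit of UDCP.

```python
def dark_channel(image, patch=3, channels=(0, 1, 2)):
    """Minimum over the chosen colour channels and over a square patch.

    image: list of rows, each row a list of (r, g, b) tuples in [0, 1].
    channels: indices of the channels considered by the prior.
    """
    h, w = len(image), len(image[0])
    r = patch // 2
    # Per-pixel minimum across the selected colour channels.
    min_c = [[min(px[c] for c in channels) for px in row] for row in image]
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Minimum over the patch centred on (x, y), clipped at borders.
            out[y][x] = min(
                min_c[j][i]
                for j in range(max(0, y - r), min(h, y + r + 1))
                for i in range(max(0, x - r), min(w, x + r + 1))
            )
    return out


def transmission(image, airlight, omega=0.95, patch=3, channels=(0, 1, 2)):
    """Estimate transmission as 1 - omega * dark_channel(image / airlight).

    airlight and omega are illustrative assumptions, not values from the paper.
    """
    normalised = [
        [tuple(px[c] / airlight[c] for c in range(3)) for px in row]
        for row in image
    ]
    dark = dark_channel(normalised, patch, channels)
    return [[1.0 - omega * v for v in row] for row in dark]


# Tiny synthetic "underwater" image: the red channel is almost dark.
img = [
    [(0.05, 0.6, 0.7), (0.02, 0.5, 0.8)],
    [(0.00, 0.4, 0.6), (0.10, 0.7, 0.9)],
]
dcp = dark_channel(img)                     # dominated by the red channel
udcp = dark_channel(img, channels=(1, 2))   # green and blue only
```

On this toy input the full dark channel collapses to the near-zero red values, which would wrongly suggest a haze-free scene, while the green/blue variant still carries information about the water veil.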

The second work [10] describes a new method based on a generic regularity in natural images, which is referred to as colour-lines. On this basis, Fattal derived a local formation model that explains the colour-lines in the context of hazy scenes and used it to recover the image. Another work focused on lines of colour is presented in [11,12]. The authors describe a new prior for single image dehazing that is defined as a Non-Local prior, to underline that the pixels forming the lines of colour are spread across the entire image, thus capturing a global characteristic that is not limited to small image patches.

Some other works focus on the problem of non-uniform illumination that, in the case of underwater imagery, is often produced by an artificial light in deep water. The work proposed in [13] assumes that natural underwater images are Rayleigh distributed and uses maximum likelihood estimation of the scale parameters to map the image distribution to a Rayleigh distribution. Next, Morel et al. [14] present a simple gradient domain method that acts as a high-pass filter, trying to correct the illumination without affecting the image details. A simple prior that estimates the depth map of the scene by considering the difference in attenuation among the different colour channels is proposed in [15]. The scene radiance is recovered from a hazy image through an estimated depth map by modelling the true scene radiance as a Markov Random Field.

Bianco et al. presented, in [16], the first proposal for the colour correction of underwater images by using the lαβ colour space. A white balancing is performed by moving the distributions of the chromatic components (α, β) around the white point, and the image contrast is improved through a histogram cut-off and stretching of the luminance (l) component. More recently, in [17], a fast enhancement method for non-uniformly illuminated underwater images is presented. The method is based on a grey-world assumption applied in the Ruderman-lab opponent colour space. The colour correction is performed according to locally changing luminance and chrominance by using the summed-area table technique. Due to its low computational cost, this method is suitable for real-time applications, ensuring realistic colours of the objects, more visible details and enhanced visual quality. Works [18,19] present a method of unsupervised colour correction for general purpose images. It employs a computational model that is inspired by some adaptation mechanisms of human vision to realize a local filtering effect by taking into account the colour spatial distribution in the image.
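As a rough illustration of the grey-world assumption mentioned above, the sketch below balances an image globally and directly in RGB. This is a deliberately simplified stand-in, not the method of [16] or [17], which operate in the lαβ (Ruderman) colour space and, in [17], adapt locally through summed-area tables. The function name and the image representation (nested lists of RGB tuples in [0, 1]) are assumptions made for the example.

```python
def grey_world(image):
    """Scale each channel so that its mean matches the overall mean.

    image: list of rows, each row a list of (r, g, b) tuples in [0, 1].
    Returns a new image of the same shape; values are clipped to 1.0.
    """
    n = sum(len(row) for row in image)
    # Mean of each colour channel over the whole image.
    means = [sum(px[c] for row in image for px in row) / n for c in range(3)]
    grey = sum(means) / 3.0
    # Gain that moves every channel mean onto the common grey level.
    gains = [grey / m if m > 0 else 1.0 for m in means]
    return [
        [tuple(min(1.0, px[c] * gains[c]) for c in range(3)) for px in row]
        for row in image
    ]


# A uniformly blue/green cast patch becomes neutral grey after balancing.
cast = [[(0.1, 0.4, 0.5), (0.1, 0.4, 0.5)]]
balanced = grey_world(cast)
```

A global gain of this kind cannot handle the non-uniform illumination produced by artificial lights, which is the motivation for the local, summed-area-table formulation described in [17].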

Additionally, we report a method for contrast enhancement, since underwater images are often lacking in contrast. This is the Contrast Limited Adaptive Histogram Equalization (CLAHE) proposed in [20] and summarized in [21], which was originally developed for medical imaging and has proven to be successful for enhancing low-contrast images.

In [22], a fusion-based underwater image enhancement technique using contrast stretching and Auto White Balance is presented. In [23], a dehazing approach is delivered that builds on an original colour transfer strategy to align the colour statistics of a hazy input with those of a reference image, also captured underwater, but with negligible water attenuation. There, the colour-transferred input is restored by inverting a simplified version of the McGlamery underwater image formation model, using the conventional Dark Channel Prior (DCP) to estimate the transmission map and the backscattered light parameter involved in the model.

Work [24] proposes a Red Channel method in order to restore the colours of underwater images. The colours associated with short wavelengths are recovered, leading to a recovery of the lost contrast. According to the authors, this Red Channel method can be interpreted as a variant of the DCP method used for images degraded by the atmosphere when exposed to haze. Experimental results show that the proposed technique handles artificially illuminated areas gracefully, and achieves a natural colour correction and superior or equivalent visibility improvement when compared to other state-of-the-art methods. However, it is suitable only for shallow waters, where the red colour still exists, or for images with artificial illumination. The authors in [25] propose a modification to the well-known DCP method. Experiments on real-life data show that this method outperforms competing solutions based on the DCP. Another method that relies in part on the DCP method is presented in [26], where underwater image restoration is based on transferring an underwater style image into a recovered style using a Multi-Scale Cycle Generative Adversarial Network System. There, a Structural Similarity Index Measure loss is used to improve underwater image quality. Then, the transmission map is fed into the network for multi-scale calculation on the images, which combines the DCP method and Cycle-Consistent Adversarial Networks. The work presented in [27] describes a restoration method that compensates for the colour loss due to the scene-to-camera distance of non-water regions without altering the colour of pixels representing water. This restoration is achieved without prior knowledge of the scene depth.

In [28], a deep learning approach is adopted; a Convolutional Neural Network-based image enhancement model is trained efficiently using a synthetic underwater image database. The model directly reconstructs the clear latent underwater image by leveraging an automatic end-to-end and data-driven training mechanism. Experiments performed on synthetic and real-world images indicate a robust and effective performance of the proposed method.

In [29], exposure bracketing imaging is used to enhance the underwater image by fusing an image that includes sufficient spectral information of underwater scenes. The fused image allows the authors to extract reliable grey information from scenes. Even though this method gives realistic results, it seems to be limited to non-real-time applications due to the exposure bracketing process.

In the literature, very few attempts to evaluate underwater image enhancement methods through feature matching have been reported, and even fewer of them focus on evaluating the results of 3D reconstruction using the initial and enhanced imagery. Recently, a single underwater image restoration framework based on depth estimation and transmission compensation was presented [30]. The proposed scheme consists of five major phases: background light estimation, submerged dark channel prior, transmission refinement and radiance recovery, point spread function deconvolution, and transmission and colour compensation. The authors used a wide variety of underwater images with various scenarios in order to assess the restoration performance of the proposed method. In addition, potential applications regarding autopilot and three-dimensional visualization were demonstrated.

Ancuti et al., in [31] and in [32], where an updated version of the method is presented, delivered a novel strategy to enhance underwater videos and images built on fusion principles. There, the utility of the proposed enhancing technique is evaluated through matching, by applying the SIFT [33] operator to an initial pair of underwater images and to the restored versions of the same images. In [34,35], the authors investigated the problem of enhancing the radiometric quality of underwater images, especially in cases where this imagery is later going to be used by automated photogrammetric and computer vision algorithms. There, the initial and the enhanced imagery were used to produce point clouds, meshes and orthoimages, which in turn were compared and evaluated, revealing valuable results regarding the tested image enhancement methods. Finally, in [36], the major challenge of caustics is addressed by a new approach for caustics removal [37]. There, in order to investigate its performance and its effect on the SfM-MVS (Structure from Motion and Multi-View Stereo) and 3D reconstruction results, a commercial SfM-MVS software package, Agisoft’s Photoscan [38], was used, as well as key point descriptors such as SIFT [33] and SURF [39]. In the tests performed with Agisoft’s Photoscan, an image pair from the five different datasets was inserted and the alignment step was performed. Regarding the key point detection and matching with the in-house implementations, a standard detection and matching procedure was followed, using the same image pairs and filtering the initial matches with the RANSAC [40] algorithm and the fundamental matrix. Subsequently, all datasets were used in order to create 3D point clouds. The resulting point clouds were evaluated in terms of total number of points and roughness, a metric that also indicates the noise on the point cloud.

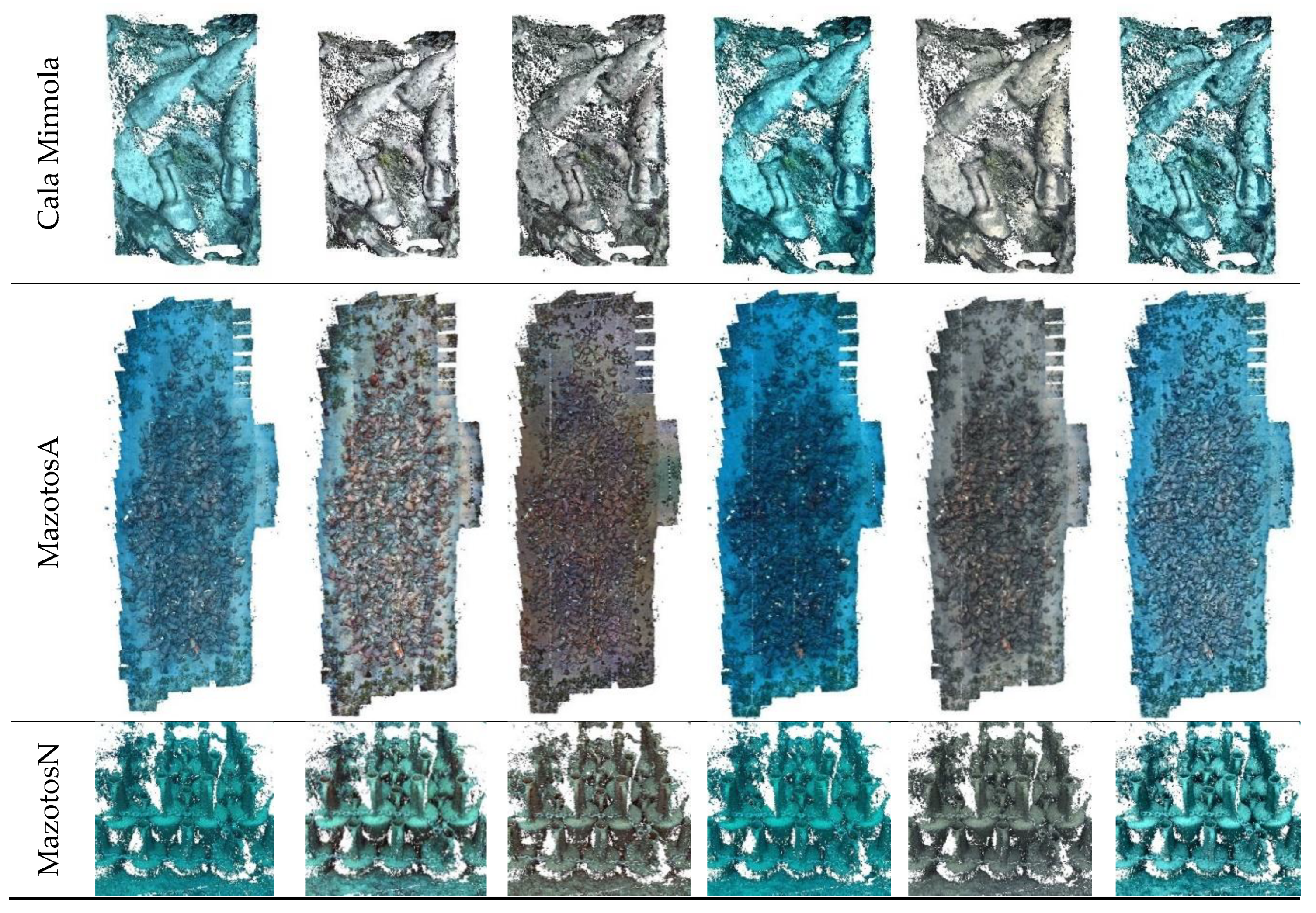

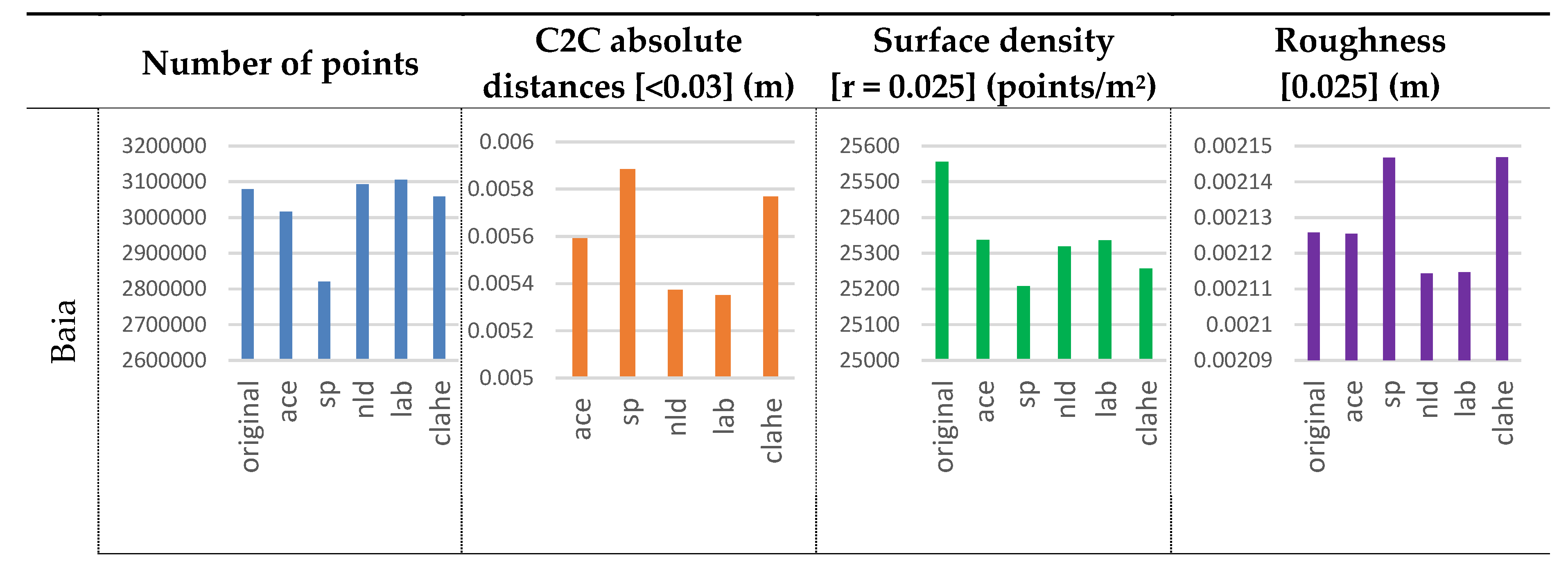

7. Comparison of the Three Benchmarks Results

According to the objective metrics results reported in

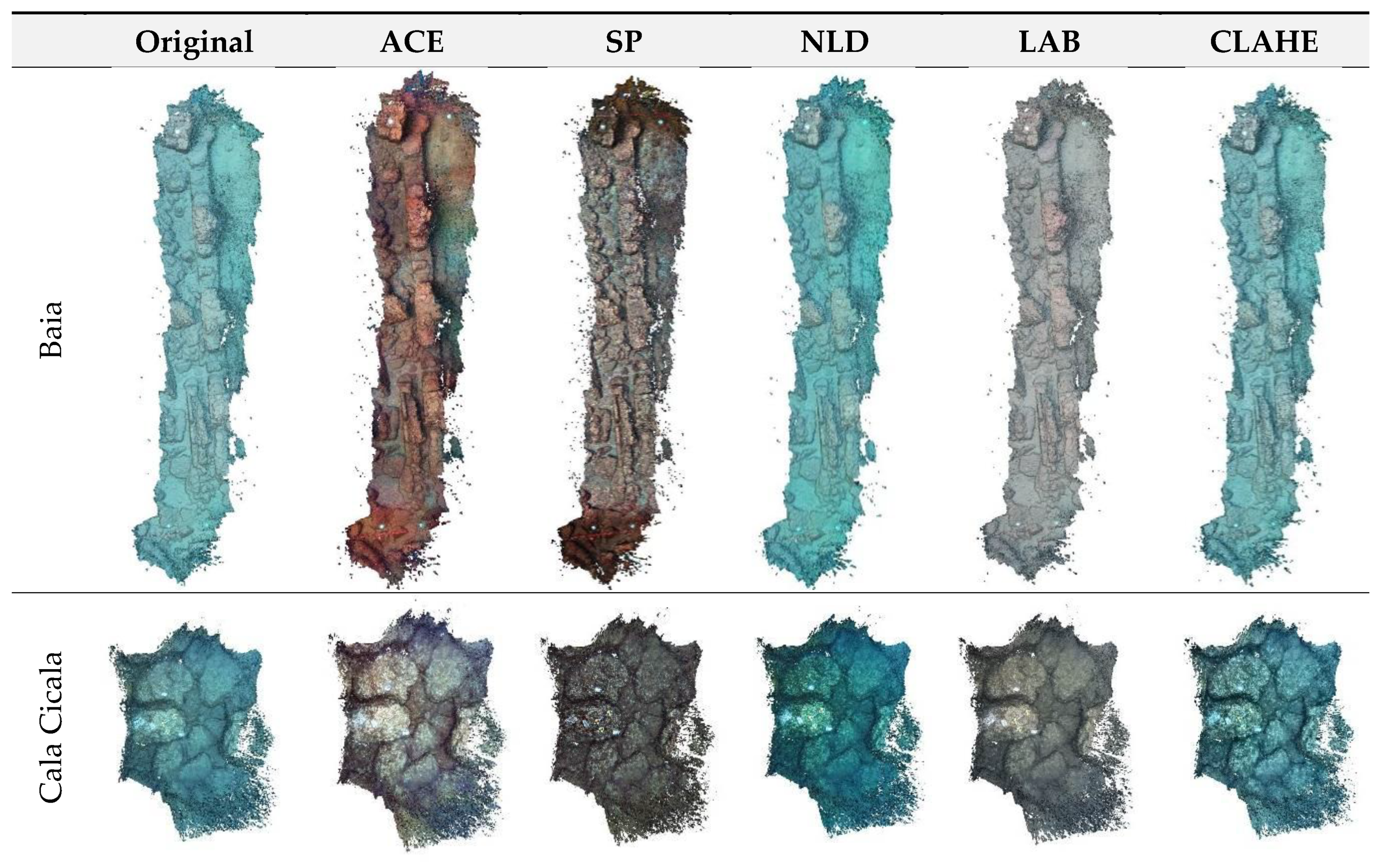

Section 4, the SP algorithm seemed to perform better than the others in all the underwater sites, except for the case MazotosA. For these images, taken on Mazotos with artificial light, each metric assigned a higher value to a different algorithm, preventing us from deciding which algorithm performed better on this dataset. It is also worth to remember that the ACE algorithm seems to be the one that performs better in enhancing the information entropy of the images. However, objective metrics do not seem consistent nor significantly different enough to allow the best algorithm nomination. On the other hand, the opinion of experts seems to be that the ACE algorithm is the one that performs better on all sites, and CLAHE and SP perform as fine as ACE at some sites. Additionally, the 3D reconstruction quality seems to be decreased by all the algorithms, except LAB that slightly improves it.

Table 12 shows a comparison between average objective metrics, average vote of experts and C3Dm divided by site. The best score for each evaluation is marked in bold. Let us recall that the values highlighted in orange in the expert evaluation rows (Exp) are not significantly different from each other within the related site. It is worth noting that the objective metric that seems to get closest to the expert opinion is information entropy. Indeed, information entropy is consistent with the expert opinion, regarding the nomination of the best algorithm within the related site, in all the five sites. Two other objective metrics are consistent with each other on 4/5 sites and with the expert opinion on 3/5 sites.
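Since information entropy turns out to be the objective metric closest to the expert opinion, a minimal sketch of how such a value can be computed may be useful. The exact definition used in the benchmark is given elsewhere in the paper; here we assume the standard Shannon entropy of the grey-level histogram, with the image supplied as a flat sequence of integer intensities. The function name and input format are illustrative assumptions.

```python
import math
from collections import Counter


def information_entropy(pixels):
    """Shannon entropy, in bits, of a flat sequence of grey levels."""
    counts = Counter(pixels)
    n = len(pixels)
    # Sum of -p * log2(p) over the grey levels that actually occur.
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())


# A flat image carries no information; spreading the intensities over
# more, equally populated grey levels raises the entropy.
flat = [128] * 8
two_levels = [0, 0, 0, 0, 255, 255, 255, 255]
four_levels = [0, 0, 85, 85, 170, 170, 255, 255]
scores = [information_entropy(p) for p in (flat, two_levels, four_levels)]
```

Under this assumed definition, an enhancement method that spreads intensities over more grey levels obtains a higher score, which matches the intuition that the enhanced image exposes more detail.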

To recap, the concise result of the objective and expert evaluation seems to be that LAB and NLD do not perform as well as the other algorithms. ACE could be employed in different environmental conditions with good results. CLAHE and SP can produce a good enhancement in some environmental conditions.

On the other hand, according to the evaluation based on the results of 3D reconstruction, the LAB algorithm seems to have the best performance, producing more 3D points, insignificant cloud to cloud distances, high surface density and low roughness 3D point clouds.

8. Conclusions

We have selected five well-known state-of-the-art methods for the enhancement of images taken at various underwater sites with five different environmental and illumination conditions. We have produced a benchmark for these methods based on three different evaluation techniques:

an objective evaluation based on metrics selected among those already adopted in the field of underwater image enhancement;

a subjective evaluation based on a survey conducted with a panel of experts in the field of underwater imagery;

an evaluation based on the improvement that these methods may bring to 3D reconstructions.

Our purpose was twofold. First of all, we tried to establish which methods perform better than the others and whether or not there existed an image enhancement method, among the selected ones, that could be employed seamlessly in different environmental conditions in order to accomplish different tasks such as visual enhancement, colour correction and 3D reconstruction improvement.

The second aspect was the comparison of the three above-mentioned evaluation techniques in order to understand whether they provide consistent results. Starting from the second aspect, we can state that the 3D reconstructions are not significantly improved by the discussed methods; the minor improvement obtainable with LAB probably could not justify the effort to pre-process the hundreds or thousands of images required for larger models. On the other hand, the objective metrics and the expert panel appear to be quite consistent and, in particular, information entropy identifies the same best methods as the expert panel on all the datasets. Consequently, an important conclusion that can be drawn from this analysis is that information entropy should be adopted in order to have an objective evaluation that provides results consistent with the qualitative evaluations performed by experts in image enhancement. This is an interesting point, because it is not easy to organize an expert panel for this kind of benchmark.

On the basis of these considerations, we can compare the five selected methods by means of the objective metrics (in particular information entropy) and the expert panel. It is quite apparent from Table 12 that ACE, in almost all the environmental conditions, is the one that improves the underwater images more than the others. In some cases, SP and CLAHE can lead to similarly good results.

Moreover, thanks to the tool described in Section 2 and provided in the Supplementary Materials, the community working in underwater imaging will be able to quickly generate a dataset of enhanced images processed with five state-of-the-art methods and use them in their works or to compare new methods. For instance, in the case of an underwater 3D reconstruction, our tool can be employed to try different combinations of methods and quickly verify whether the reconstruction process can be improved. A possible strategy could be to pre-process the images with the LAB method, trying to produce a more accurate 3D model, and afterwards to enhance the original images with another method, such as ACE, to achieve a textured model more faithful to reality (Figure 9). Employing our tool for the enhancement of the underwater images minimizes the pre-processing effort and enables the underwater community to quickly verify the performance of the different methods on their own datasets.

Finally, Table 13 summarizes our conclusions and provides the community with some more categorical guidelines regarding which method should be used according to different underwater conditions and tasks. In this table, the visual enhancement row refers to the improvement of the sharpness, contrast and colour of the images. The 3D Reconstruction row refers to the improvement of the 3D model, apart from the texture. As previously described, the texture of the model should be enhanced with a different method, according to the environmental conditions and, therefore, to the previous “visual enhancement” guidelines. Furthermore, as far as the evaluation of other methods that have not been discussed here is concerned, our guideline is to evaluate them with the information entropy metric since, according to our results, it is the metric that is closest to the expert panel evaluation.

In the end, let us underline, though, that we are fully aware of the fact that there are several other methods for underwater image enhancement and manifold metrics for the evaluation of these methods. It was not possible to debate them all in a single paper. Our effort has been to guide the community towards the definition of a more effective and objective methodology for the evaluation of the underwater image enhancement methods.