Automated Attitude Determination for Pushbroom Sensors Based on Robust Image Matching

Abstract

1. Introduction

2. Method

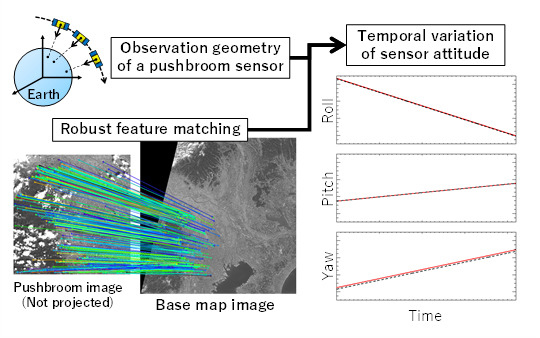

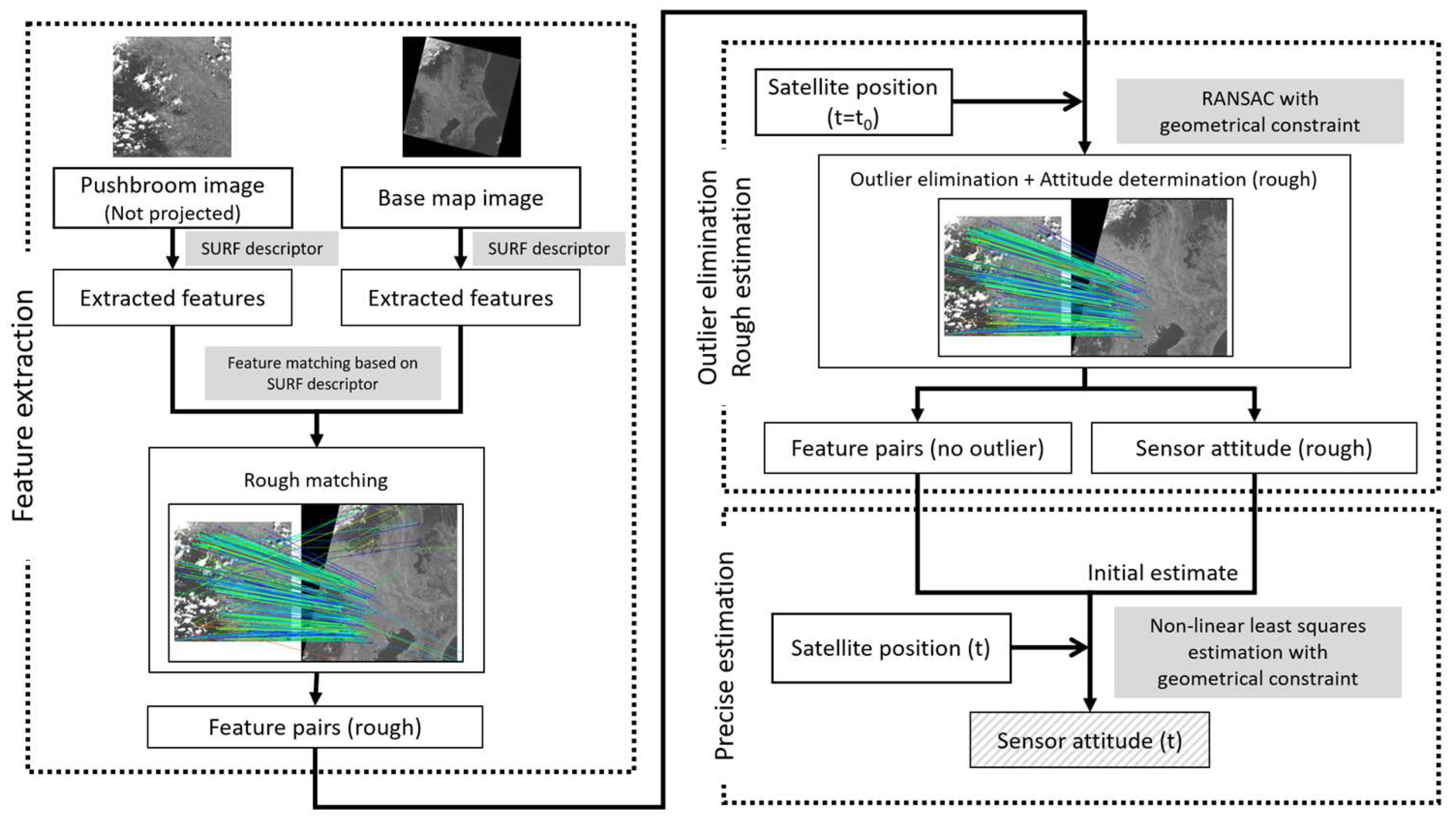

2.1. Overview of Proposed Method

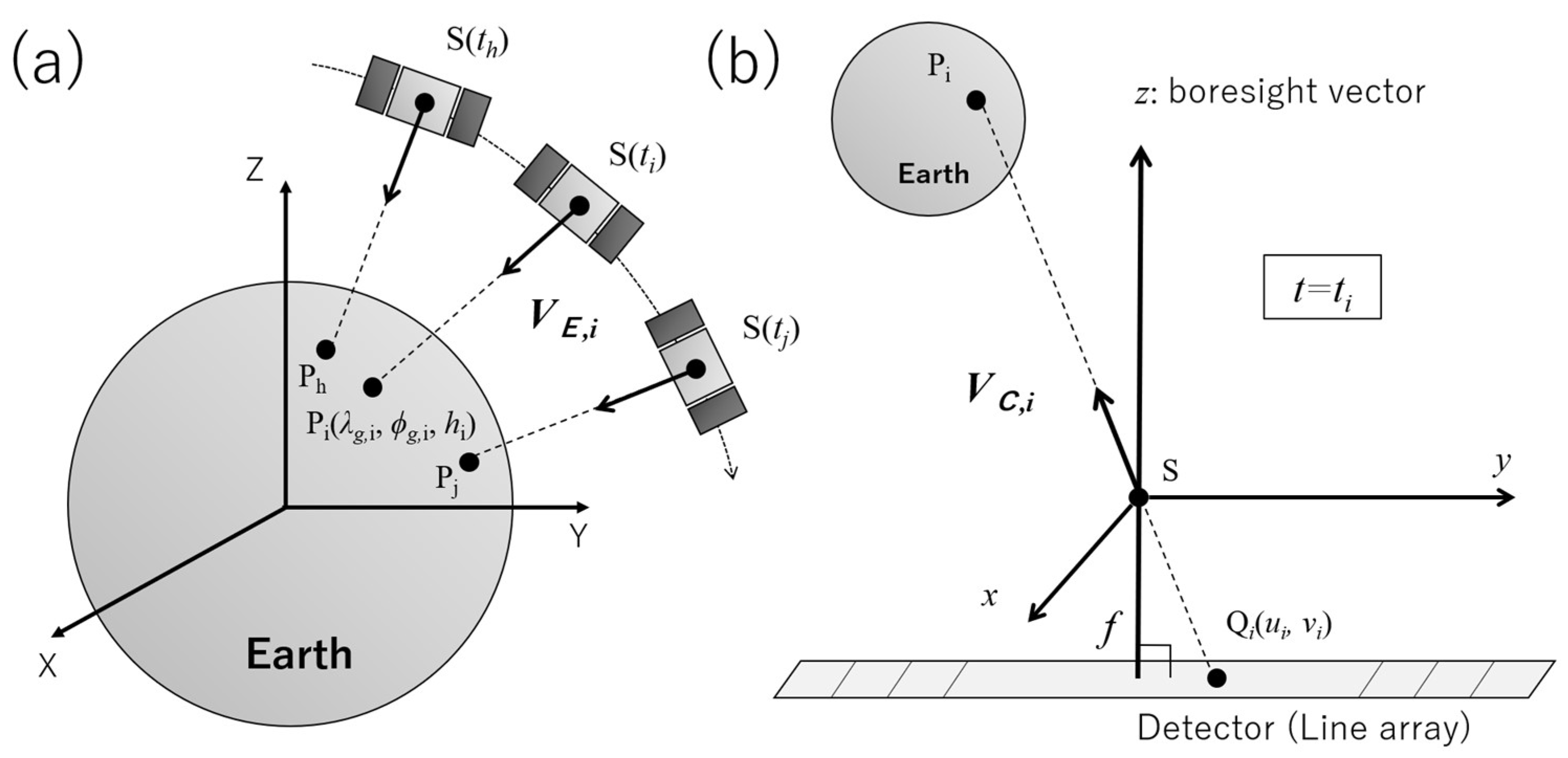

2.2. Mathematical Basis of Attitude Determination for a Pushbroom Sensor
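In a pushbroom sensor each image line l is exposed at its own time t_l. Unlike a frame camera, the attitude therefore cannot be described by a single rotation for the whole scene. The following is a generic way to express this. It is an illustrative assumption, not the formulation used in this paper. The line-of-sight vector of detector element j in the sensor frame, u_sen(j), is rotated into a reference frame by a time-dependent rotation R(t_l). Its roll φ, pitch θ and yaw ψ are modeled as low-order polynomials in time, and u_ref(l, j) is the direction from the spacecraft to the ground location of the matched base-map feature:

$$
\mathbf{u}_{\mathrm{ref}}(l, j) = R(t_l)\,\mathbf{u}_{\mathrm{sen}}(j), \qquad
R(t) = R_z\big(\psi(t)\big)\,R_y\big(\theta(t)\big)\,R_x\big(\phi(t)\big)
$$

$$
\phi(t) = \sum_{k=0}^{K} a_k t^{k}, \qquad
\theta(t) = \sum_{k=0}^{K} b_k t^{k}, \qquad
\psi(t) = \sum_{k=0}^{K} c_k t^{k}
$$

A unit direction has two degrees of freedom, so each correctly matched feature pair supplies two independent scalar constraints. The 3(K+1) coefficients a_k, b_k and c_k can then be fitted by least squares once enough well-distributed pairs are available. The rotation order and the polynomial degree K are assumptions made here for illustration.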

2.3. Robust Estimation of Initial Estimates and Elimination of Incorrectly Matched Feature Pairs
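This section concerns robust estimation of initial attitude estimates while discarding incorrectly matched feature pairs. The sketch below illustrates that idea under a strong simplifying assumption: a single constant rotation maps sensor line-of-sight vectors onto reference directions. This is a frame-camera simplification, not the time-varying pushbroom model. As a stand-in estimator it uses RANSAC with a Kabsch (SVD) rotation fit. The function names (`kabsch`, `angular_residual`, `ransac_rotation`), the 2-pair minimal sample, the angular threshold and the synthetic data are all hypothetical and are not taken from the paper.

```python
import numpy as np


def kabsch(a, b):
    """Least-squares rotation R minimizing sum_i ||R a_i - b_i||^2 (Kabsch/SVD).

    a, b: (N, 3) arrays of unit line-of-sight vectors, N >= 2, not all parallel.
    """
    h = a.T @ b                                   # 3x3 cross-covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))        # force a proper rotation (no reflection)
    return vt.T @ np.diag([1.0, 1.0, d]) @ u.T


def angular_residual(r, a, b):
    """Angle (rad) between each rotated sensor vector R a_i and its reference vector b_i."""
    cos_ang = np.clip(np.sum((a @ r.T) * b, axis=1), -1.0, 1.0)
    return np.arccos(cos_ang)


def ransac_rotation(a, b, threshold_rad, n_iter=200, seed=0):
    """RANSAC: fit R from random 2-pair samples, keep the largest consensus set, refit on it."""
    rng = np.random.default_rng(seed)
    n = len(a)
    best = np.zeros(n, dtype=bool)
    for _ in range(n_iter):
        i, j = rng.choice(n, size=2, replace=False)
        if abs(a[i] @ a[j]) > 0.9999:             # near-parallel pair does not constrain R
            continue
        r = kabsch(a[[i, j]], b[[i, j]])
        inliers = angular_residual(r, a, b) < threshold_rad
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 2:
        raise ValueError("no consensus set found")
    r = kabsch(a[best], b[best])                  # refine using all consensus pairs
    return r, angular_residual(r, a, b) < threshold_rad


def rotation_from_axis_angle(axis, angle):
    """Rodrigues' formula: rotation by `angle` (rad) about `axis`."""
    k = np.asarray(axis, dtype=float) / np.linalg.norm(axis)
    kx = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * kx + (1.0 - np.cos(angle)) * (kx @ kx)


# Hypothetical synthetic demo: 100 near-nadir line-of-sight vectors, 20 of them mismatched.
rng = np.random.default_rng(1)
r_true = rotation_from_axis_angle([0.3, -0.2, 1.0], 0.02)
xy = rng.uniform(-0.05, 0.05, size=(100, 2))
los_sensor = np.column_stack([xy, np.ones(100)])
los_sensor /= np.linalg.norm(los_sensor, axis=1, keepdims=True)
los_ref = los_sensor @ r_true.T + 1e-7 * rng.standard_normal((100, 3))
is_outlier = np.zeros(100, dtype=bool)
is_outlier[:20] = True
phi = rng.uniform(0.0, 2.0 * np.pi, size=20)
los_ref[:20] += 0.01 * np.column_stack([np.cos(phi), np.sin(phi), np.zeros(20)])  # ~0.01 rad mismatch
los_ref /= np.linalg.norm(los_ref, axis=1, keepdims=True)

r_est, inliers = ransac_rotation(los_sensor, los_ref, threshold_rad=1e-4)
err = np.arccos(np.clip((np.trace(r_est.T @ r_true) - 1.0) / 2.0, -1.0, 1.0))
print(f"inliers: {inliers.sum()}, rotation error: {err:.2e} rad")
```

Two non-parallel pairs are the smallest sample that fixes a 3-D rotation, which keeps the chance of drawing an all-inlier sample high. In a pushbroom setting the same loop could in principle wrap a fit of time-dependent attitude parameters instead, at the cost of a larger minimal sample.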

3. Results

3.1. Test Data Sets

3.1.1. ASTER Images: Raw Pushbroom Images

3.1.2. Landsat-8/OLI Images: Base-Map Images

3.2. Sensor Attitude Determination

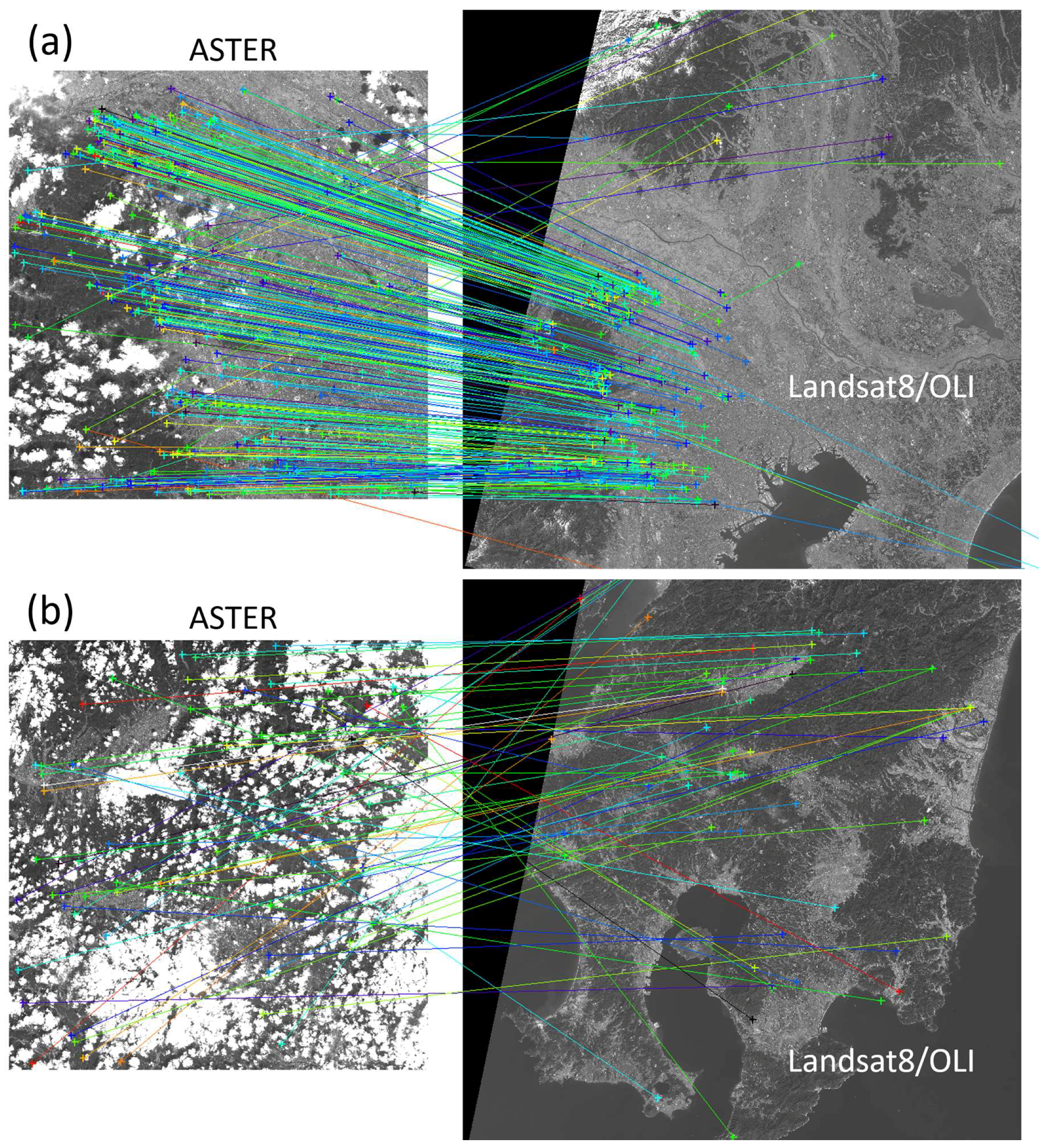

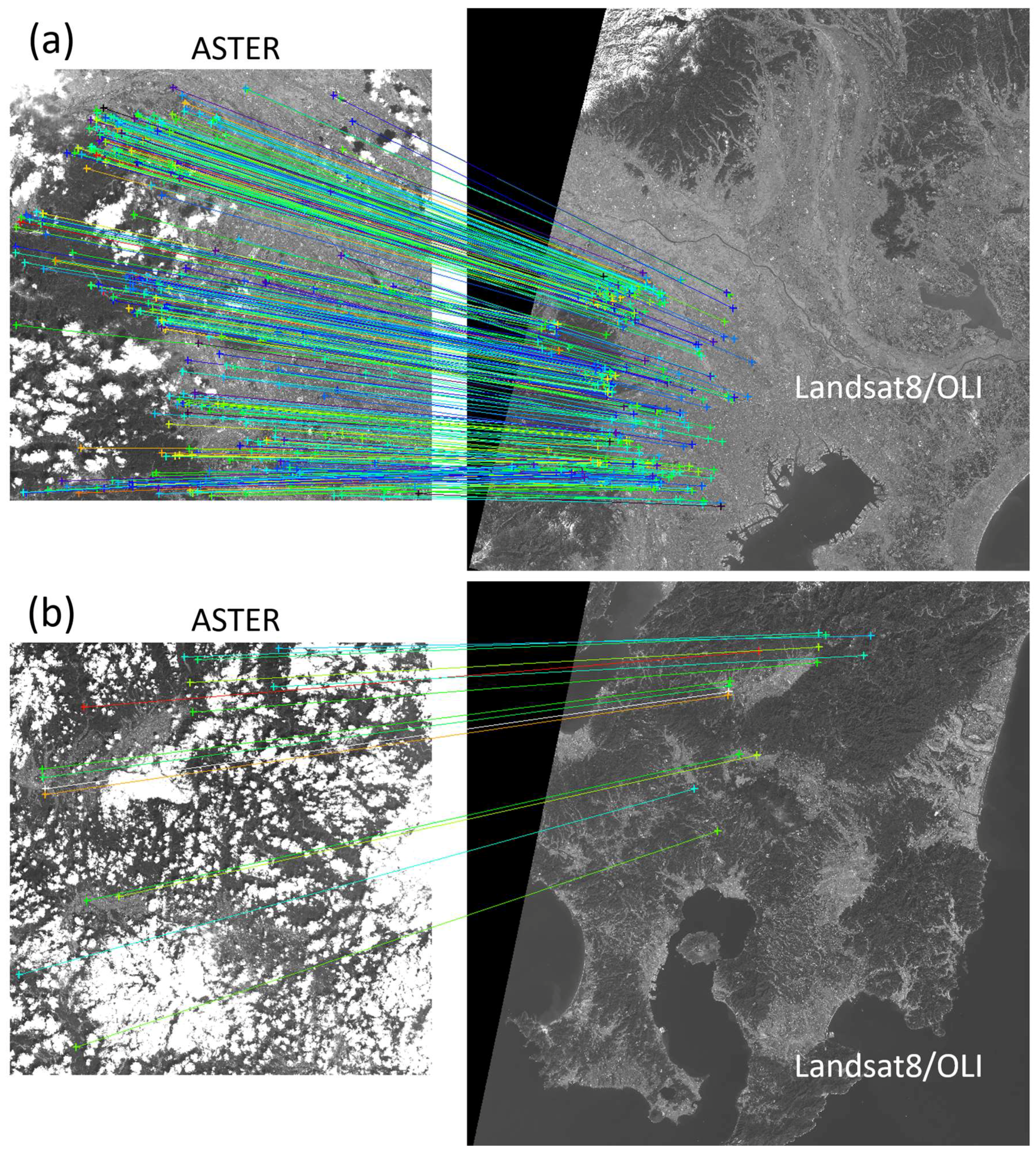

3.2.1. Robust Determination of Sensor Attitude (Rough) and Extracting Correctly Matched Feature Pairs

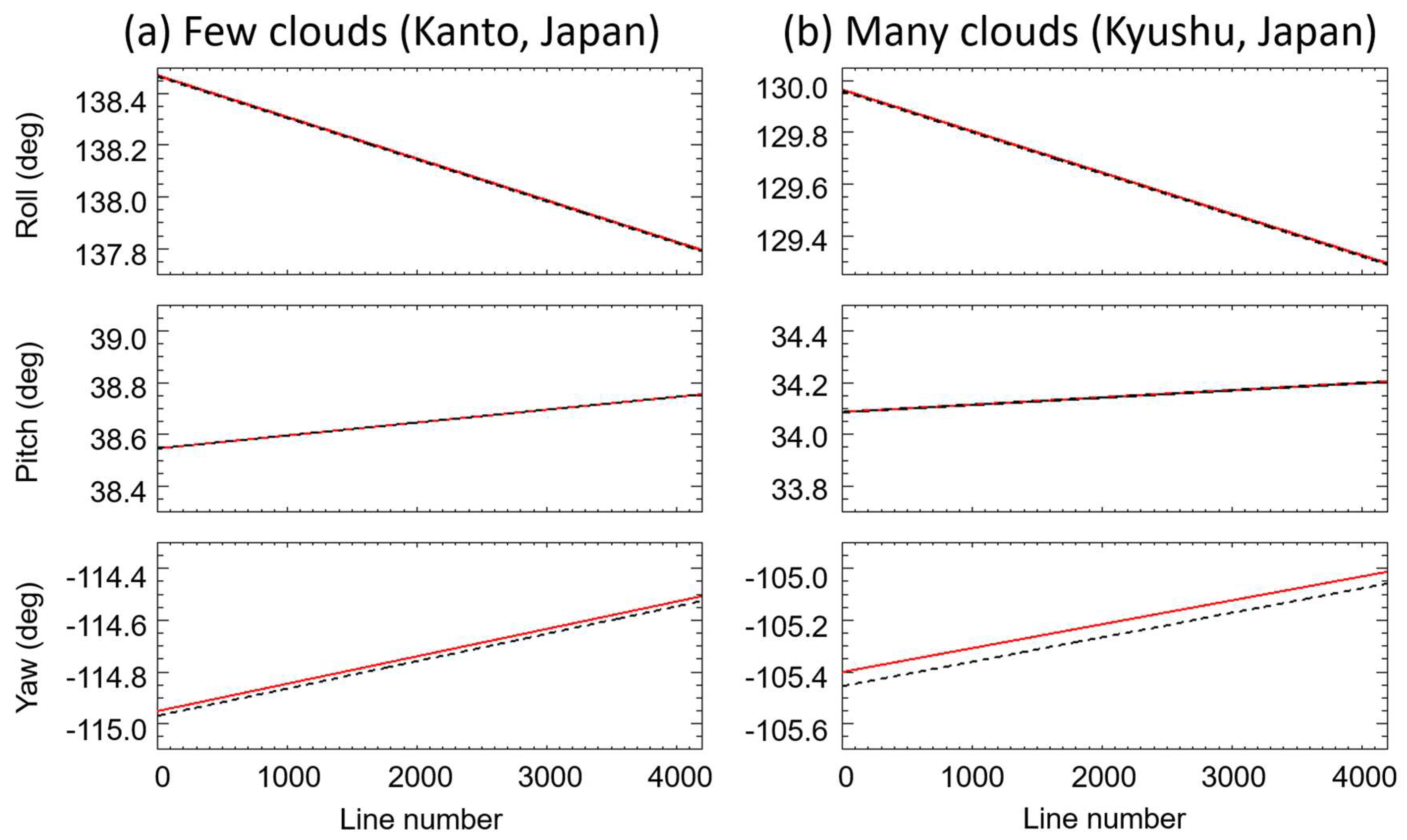

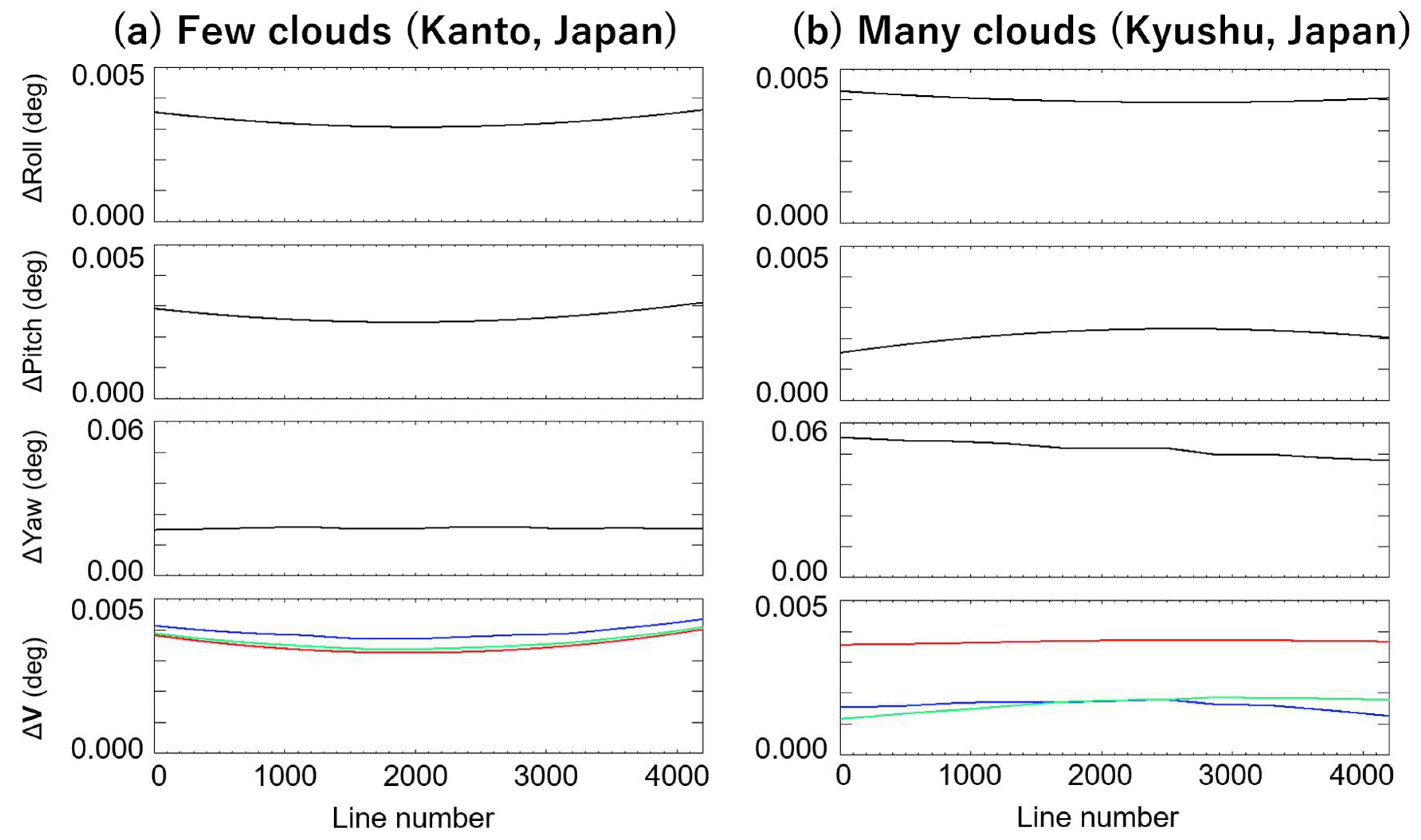

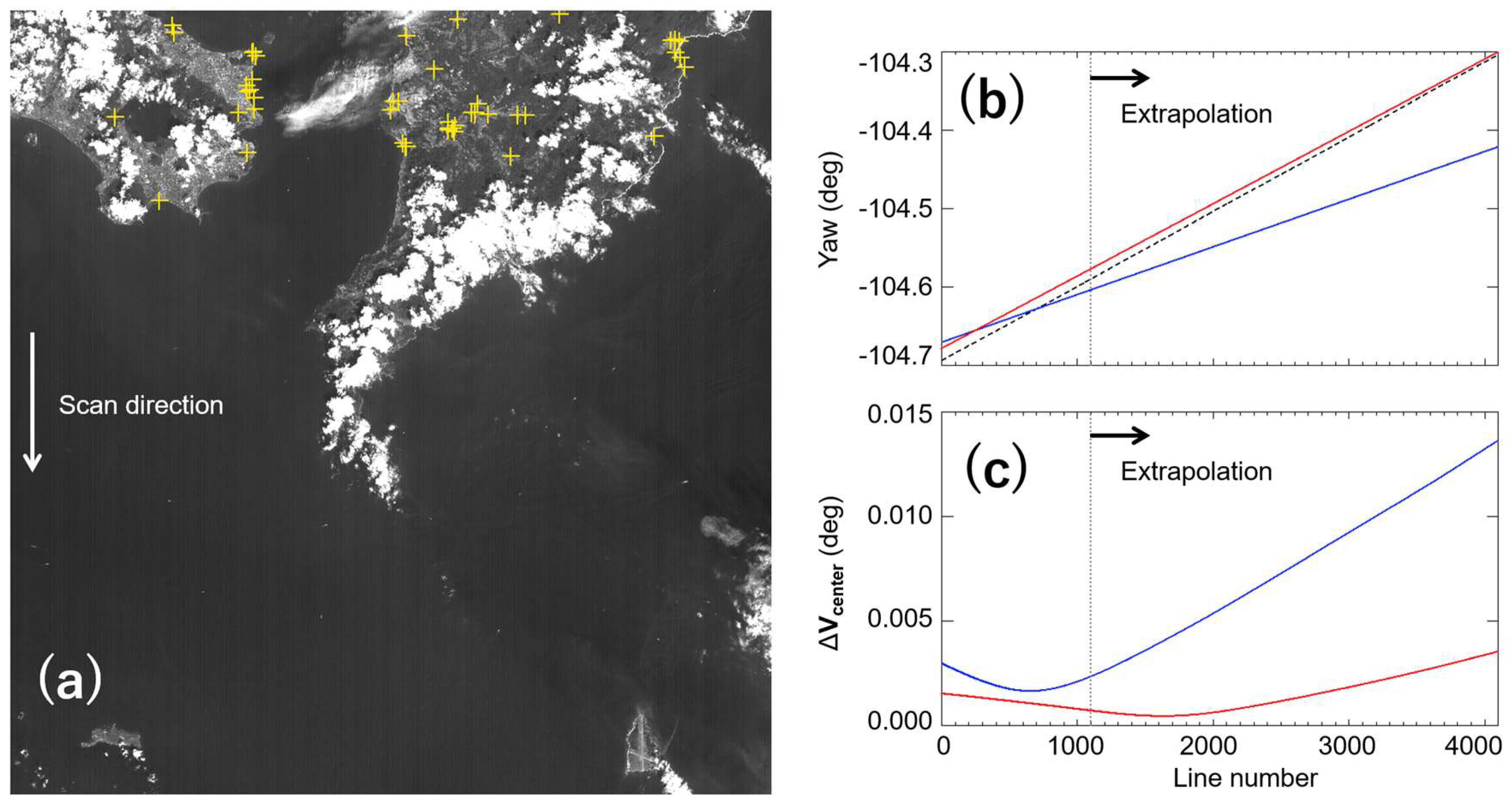

3.2.2. Precise Determination of Sensor Attitude and Its Accuracy

3.3. Accuracy of Determined Sensor Attitude

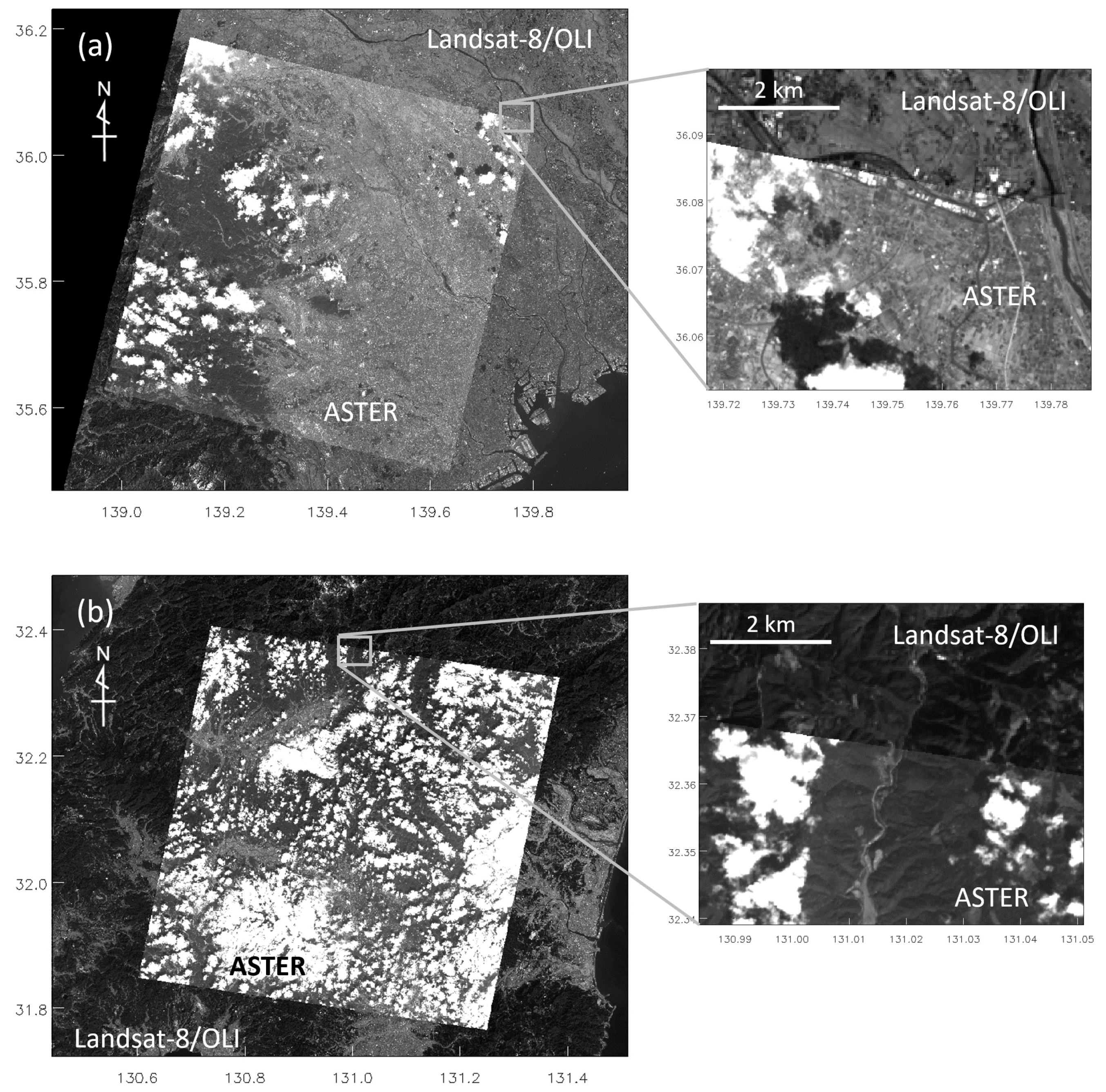

3.3.1. Map-Projection Accuracy

3.3.2. Comparison with Sensor Attitude from Onboard Sensors

4. Discussion

4.1. Performance of Attitude Determination in Extrapolated Areas

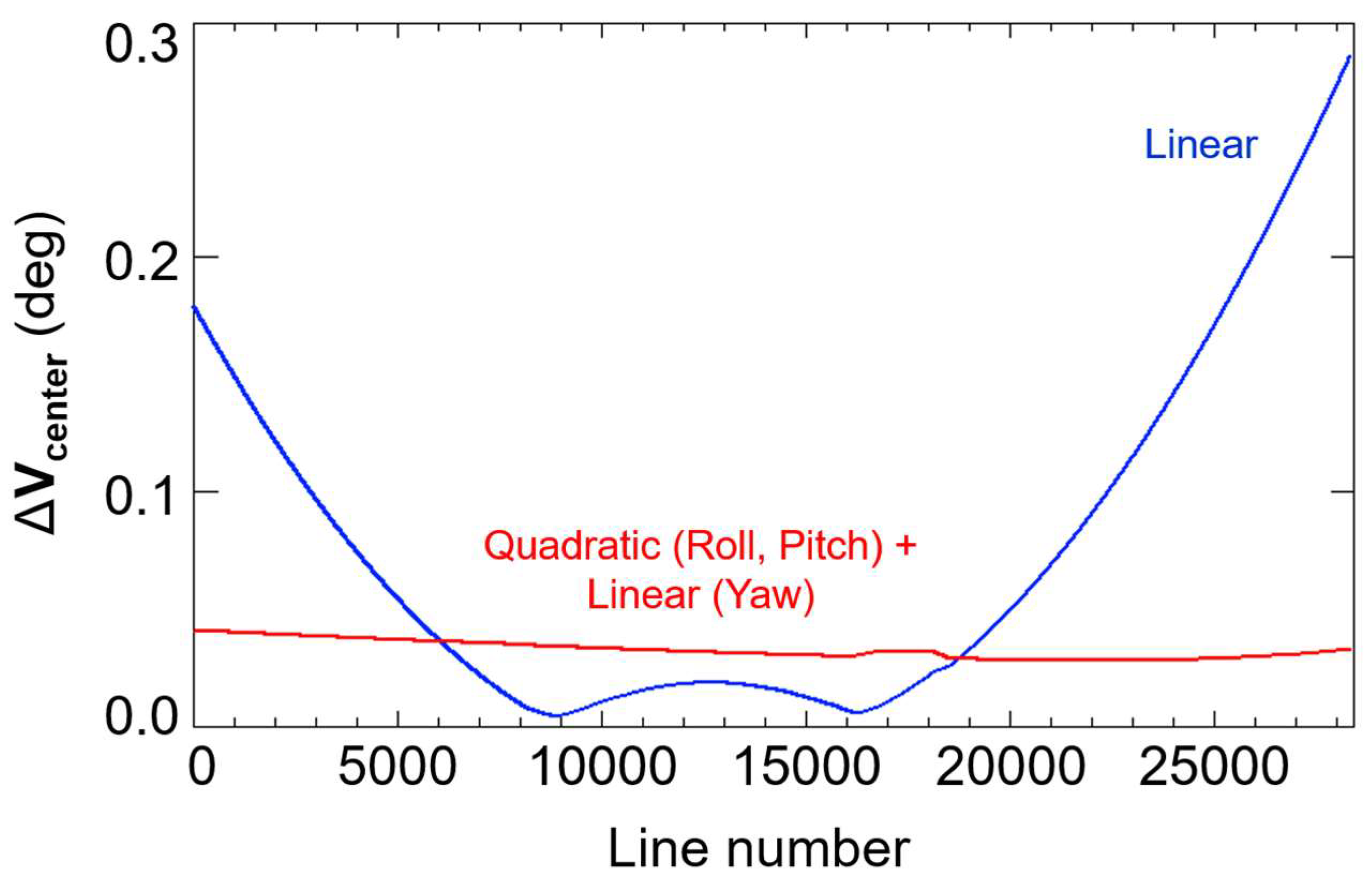

4.2. Design of Fitting Equations

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A


| | Wavelength (μm) | GSD (m) | Swath (km) | Bit Depth (bit) |
|---|---|---|---|---|
| ASTER Band 1 | 0.52–0.60 | 15 | 60 | 8 |

| | Wavelength (μm) | GSD (m) | Swath (km) | Bit Depth (bit) |
|---|---|---|---|---|
| OLI Band 3 | 0.533–0.590 | 30 | 185 | 16 |

| | Onboard Sensors | | | | Proposed | | | |
|---|---|---|---|---|---|---|---|---|
| (a) Kanto, Japan (Few clouds) | −3.1 | −2.1 | 15.5 | 12.0 | −3.8 | −2.1 | 14.4 | 13.2 |
| (b) Kyushu, Japan (Many clouds) | −20 | −5.1 | 15.0 | 13.0 | −5.2 | −5.6 | 11.7 | 13.8 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sugimoto, R.; Kouyama, T.; Kanemura, A.; Kato, S.; Imamoglu, N.; Nakamura, R. Automated Attitude Determination for Pushbroom Sensors Based on Robust Image Matching. Remote Sens. 2018, 10, 1629. https://doi.org/10.3390/rs10101629
