Advancing Sustainable Mobility: Artificial Intelligence Approaches for Autonomous Vehicle Trajectories in Roundabouts

Abstract

1. Introduction

2. Literature Review

- The integration of human driving behavior models, real-time adaptability, and robust control mechanisms is central to improving AV navigation.

- Advances in Machine Learning, especially hybrid and hierarchical methods, have shown significant potential to improve the accuracy of predictions and the robustness of trajectories.

- Deep Learning, Transformer-based architectures, and Deep Reinforcement Learning are emerging as state-of-the-art approaches that enable AVs to make more accurate, adaptive and context-aware trajectory decisions.

- Graph Neural Networks (GNNs) and attention mechanisms show promise in capturing interactions between road users and improving AVs’ decision making in complex environments.

3. Methodology

3.1. Predictive Models

3.1.1. Linear Regression

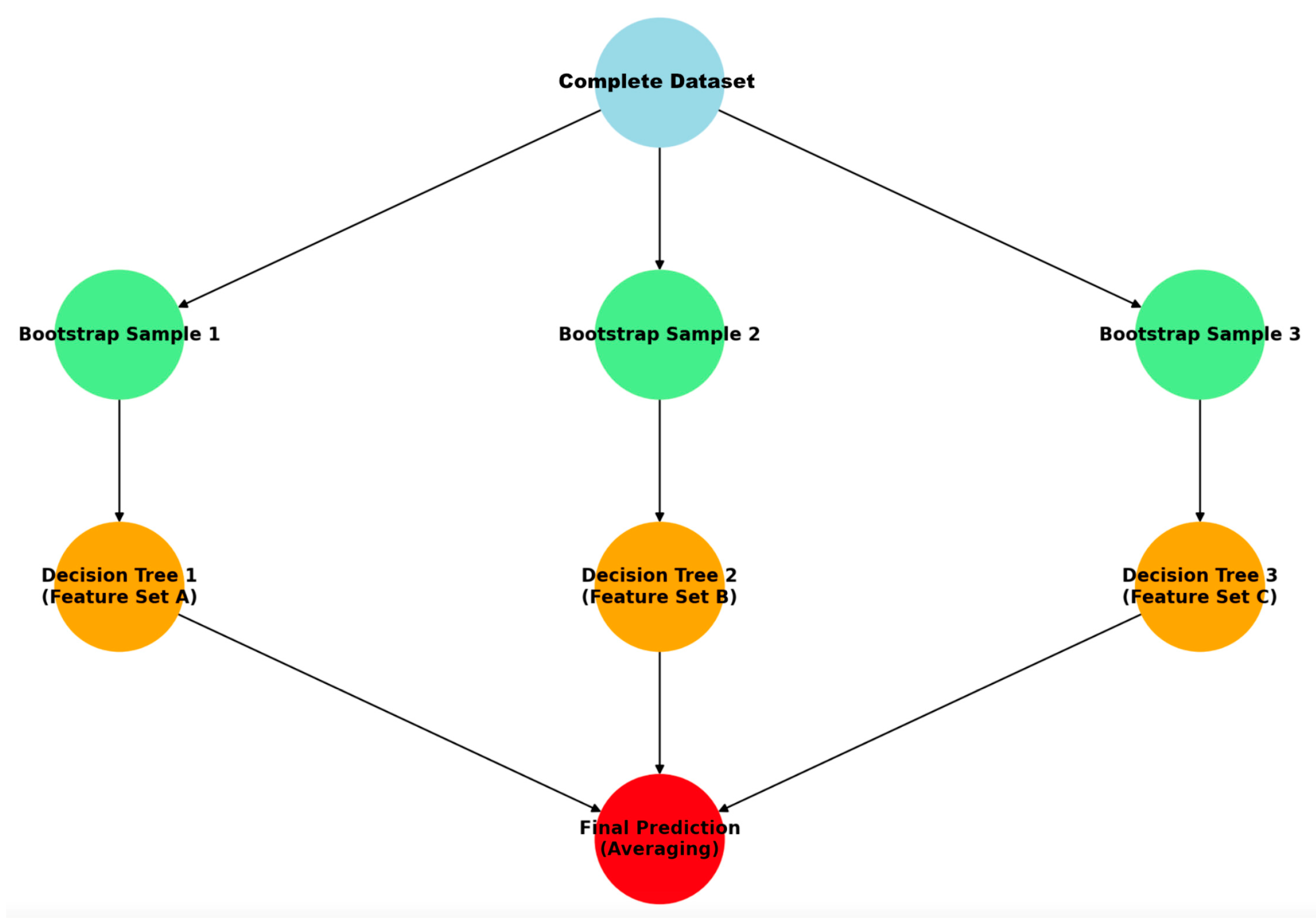

3.1.2. Random Forest Model

- Number of Trees (n_estimators): 100, which helps reduce the variance of the model.

- Maximum Depth of Trees (max_depth): 10, which prevents the trees from growing too deep and overfitting the training data.

- Minimum Samples to Split a Node (min_samples_split): 2, specifying the minimum number of samples required to split an internal node.

- Minimum Samples per Leaf (min_samples_leaf): 1, specifying the minimum number of samples required to be at a leaf node.

- Criterion for Splitting: MSE, used to measure the quality of a split.

3.1.3. Support Vector Regression

- Kernel Function: Radial Basis Function (RBF), which helps to handle non-linear relationships by mapping the input features into higher-dimensional space.

- Regularization Parameter (C): Set at 1.0, which controls the trade-off between achieving a low error on the training data and minimizing the model complexity.

- Kernel Coefficient (gamma): ‘scale’, which defines how far the influence of a single training example reaches.

- Epsilon (ε): Set at 0.1, specifying the margin of tolerance where no penalty is given to errors.
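To make the roles of gamma and ε more concrete, the following minimal sketch implements an RBF kernel and the ε-insensitive loss in plain Python. It is illustrative only: the function names and the sample radii are hypothetical, and the 'scale' rule shown (gamma = 1 / (number of features × variance of the inputs)) is the scikit-learn convention, which the parameter names above suggest but the text does not confirm.

```python
import math


def scale_gamma(X):
    """gamma='scale' heuristic (scikit-learn convention): 1 / (n_features * Var(X))."""
    values = [v for row in X for v in row]
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return 1.0 / (len(X[0]) * variance)


def rbf_kernel(x, z, gamma):
    """RBF similarity: 1 for identical points, decaying towards 0 with distance."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """Errors inside the epsilon tube cost nothing; outside it the cost grows linearly."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


# Hypothetical (R1, R2, R3) radii, for illustration only.
X = [[20.0, 15.0, 30.0], [25.0, 18.0, 35.0], [40.0, 22.0, 50.0]]
gamma = scale_gamma(X)

print(rbf_kernel(X[0], X[0], gamma))         # identical points
print(rbf_kernel(X[0], X[2], gamma))         # distant points
print(epsilon_insensitive_loss(1.00, 1.05))  # error inside the tube
print(epsilon_insensitive_loss(1.00, 1.30))  # error outside the tube
```

A larger gamma makes the kernel decay faster, so each training example influences a smaller neighbourhood; a larger ε widens the band of errors that go unpenalized.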

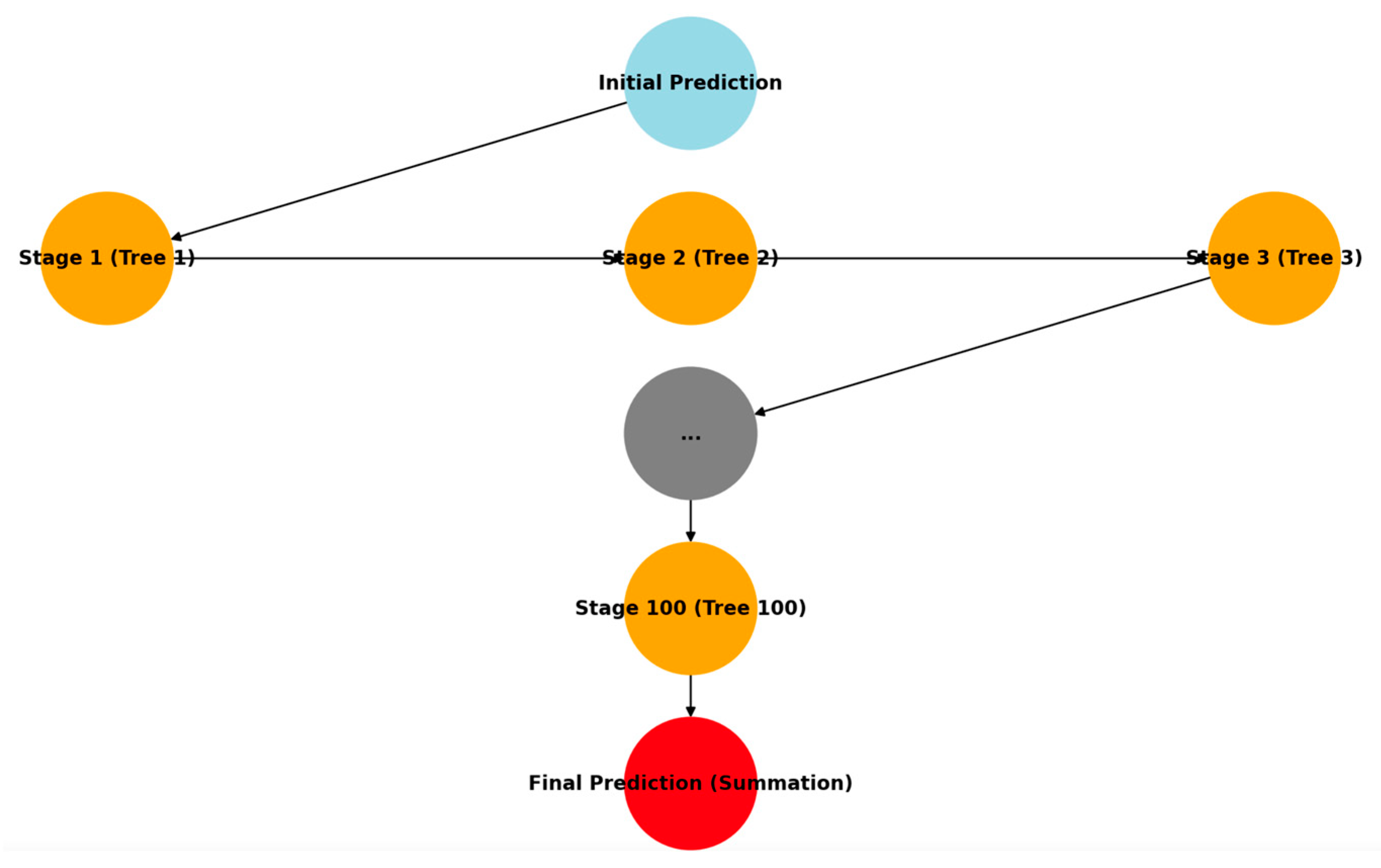

3.1.4. Gradient Boosting Regression

- Number of Boosting Stages (n_estimators): 100, which specifies the number of boosting stages to be run.

- Learning Rate: Set at 0.1, which controls the contribution of each tree to the final model.

- Maximum Depth of Trees (max_depth): 3, which limits the depth of the individual regression estimators to prevent overfitting.

- Minimum Samples to Split a Node (min_samples_split): 2, specifying the minimum number of samples required to split an internal node.

- Minimum Samples per Leaf (min_samples_leaf): 1, specifying the minimum number of samples required to be at a leaf node.

- Loss Function: Least Squares (ls), which measures the difference between observed and predicted values.
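The interplay between the number of boosting stages, the learning rate, and the least-squares loss can be sketched in a few lines of plain Python. This is a simplified illustration and not the configuration used in the study: it boosts depth-1 stumps on a single feature rather than depth-3 trees on three radii, and the data and function names are hypothetical.

```python
def fit_stump(x, residuals):
    """Return the one-split regression stump that best fits the residuals."""
    best = None
    for threshold in sorted(set(x))[:-1]:  # skip the maximum so the right side is never empty
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda xi: left_mean if xi <= threshold else right_mean


def gradient_boost(x, y, n_estimators=100, learning_rate=0.1):
    """Least-squares boosting: each stage fits the residuals of the current model."""
    baseline = sum(y) / len(y)
    predictions = [baseline] * len(y)
    stumps = []
    for _ in range(n_estimators):
        residuals = [yi - pi for yi, pi in zip(y, predictions)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        # The learning rate shrinks the contribution of every new stage.
        predictions = [pi + learning_rate * stump(xi) for xi, pi in zip(x, predictions)]
    return lambda xi: baseline + learning_rate * sum(s(xi) for s in stumps)


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical radius/speed pairs, for illustration only.
radius = [15.0, 20.0, 25.0, 30.0, 35.0, 40.0]
speed = [22.0, 25.5, 28.0, 31.0, 32.5, 35.0]

model = gradient_boost(radius, speed)
baseline_mse = mse(speed, [sum(speed) / len(speed)] * len(speed))
boosted_mse = mse(speed, [model(r) for r in radius])
print(baseline_mse, boosted_mse)
```

For the least-squares loss the negative gradient is simply the residual, which is why each stage is fitted to `y - prediction`.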

3.1.5. Neural Networks

- Input Layer: Receives the independent variables (R1, R2, R3).

- First Hidden Layer: 64 neurons, using the ReLU (Rectified Linear Unit) activation function.

- Second Hidden Layer: 32 neurons, also using the ReLU activation function.

- Output Layer: 1 neuron, performing linear regression to predict the target variable.
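The layer structure listed above (3 inputs, 64 and 32 ReLU units, 1 linear output) can be sketched as a plain-Python forward pass. The weights below are random and untrained, so the prediction itself is meaningless; the initialization range, the function names, and the sample radii are assumptions made for illustration.

```python
import random


def init_layer(n_inputs, n_outputs, rng):
    """Small random weights and zero biases for one fully connected layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_inputs)] for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases


def dense(inputs, layer, activation=None):
    """Weighted sum plus bias for every neuron, optionally followed by ReLU."""
    weights, biases = layer
    outputs = [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]
    if activation == "relu":
        outputs = [max(0.0, v) for v in outputs]
    return outputs


def count_parameters(layers):
    """Total number of weights and biases in the network."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


rng = random.Random(0)
layers = [init_layer(3, 64, rng), init_layer(64, 32, rng), init_layer(32, 1, rng)]


def predict(radii):
    hidden1 = dense(radii, layers[0], "relu")
    hidden2 = dense(hidden1, layers[1], "relu")
    return dense(hidden2, layers[2])[0]  # linear output, no activation


print(count_parameters(layers))       # 2369
print(predict([20.0, 15.0, 30.0]))    # untrained output for hypothetical (R1, R2, R3)
```

With 2369 trainable parameters, the network has considerable capacity relative to a small trajectory dataset, which is why regularization matters for this model.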

3.2. Integration of the MRoundabout Model

- Radius of entry (R1): the minimum radius on the fastest through-route before reaching the entry line.

- Circulation path radius (R2): the minimum radius on the fastest passage path while the vehicle is circulating around the center island.

- Exit radius (R3): the minimum radius on the fastest passage route when the vehicle leaves the island.

- Division into Turning Regions (TRs):

  - TR1: Starts before entering the roundabout, where the vehicle decelerates, and ends in the middle of the section passed under acceleration, between the first and second circular arcs.

  - TR2: Starts at the end of TR1 and ends in the middle of the section passed under acceleration, between the second and third circular arcs.

  - TR3: Starts at the end of TR2 and ends where the vehicle accelerates after the last section with constant curvature.

- Important distances:

  - LSi (i = 1, 2, 3): The distance from the start of each region to the point where the speed si is reached and remains constant.

  - ΔSi (i = 1, 2, 3): The differential distance between the center of the region and the start of constant speed si, calculated as ΔSi = TRi/2 − LSi.

- Additional Parameters:

  - s0: Initial speed before the roundabout, influenced by road design or signage.

  - d01: The model assumes a decrease in speed from s0 to s1 before entering the roundabout. This deceleration considers the natural reduction in speed required to navigate the tight entry curvature safely. Hypothetically, if a vehicle were to accelerate in this section, it would likely result in excessive lateral forces and reduced control, which is unusual driving behavior under normal conditions.

  - a12: After deceleration, the model assumes that drivers gradually regain speed while circulating, transitioning from s1 to s2. This is because, in the fastest trajectory, vehicles experience a geometric widening of the path, allowing them to accelerate naturally. However, in cases where external factors (e.g., interactions with other vehicles or congestion) alter this dynamic, a deceleration phase could occur instead, impacting the expected trajectory. Since MRoundabout is designed to isolate the influence of geometry alone, traffic-induced effects are not explicitly modeled.

  - a23: When the vehicle leaves the roundabout, it continues to accelerate from s2 to s3 and switches back to the regular lane of the exit leg. This behavior is a direct consequence of the decreasing curvature, which allows drivers to reach a higher cruising speed again. This structured approach ensures an accurate representation of the speed profile across the roundabout.

- Vehicle trajectory tracking using high-resolution cameras, LIDAR sensors, and GPS devices to ensure the accurate measurement of speed, acceleration, and lateral displacement.

- Speed profiles recorded along the fastest trajectory, capturing variations at different radii (R1, R2, R3).

- Time series data to analyze acceleration and deceleration behavior with special attention to transitions between turn zones (TRs).
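The differential distance defined above, ΔSi = TRi/2 − LSi, can be evaluated with a one-line function. The sketch below assumes that TRi denotes the length of turning region i and uses hypothetical lengths; neither the values nor the function name come from the study.

```python
def differential_distance(tr_length, ls_distance):
    """ΔSi = TRi / 2 − LSi, with both arguments in the same length unit."""
    return tr_length / 2.0 - ls_distance


# Hypothetical (TRi, LSi) pairs for the three turning regions.
regions = {1: (40.0, 14.0), 2: (30.0, 18.0), 3: (50.0, 20.0)}

delta_s = {i: differential_distance(tr, ls) for i, (tr, ls) in regions.items()}
print(delta_s)  # {1: 6.0, 2: -3.0, 3: 5.0}
```

By construction, a positive ΔSi means that the constant speed si is reached before the center of the region (LSi < TRi/2), and a negative value means that it is reached after the center.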

3.3. Data Acquisition and Calibration

- Selection of five representative roundabouts in Italy.

- Collection of vehicle movement data using high-resolution cameras, LIDAR sensors and GPS devices.

- Analysis of 135 valid trajectories recorded by 15 drivers (7 men and 8 women) aged between 23 and 62, driving at off-peak times to minimize external influences.

- GBR: Training was stopped early if the validation loss had not improved after 10 consecutive iterations. This technique has been shown to prevent overfitting in gradient boosting algorithms by ensuring that models are not trained beyond the optimal performance threshold [55].

- NNs: L2 regularization (weight decay) and a dropout rate of 20% in the hidden layers were used to reduce overfitting. L2 regularization helps prevent large weights that can lead to model instability, while dropout improves generalization by randomly deactivating neurons during training [56].

- Five-fold cross-validation: applied to all models during training to ensure that the final test set remains fully segregated for unbiased evaluation. The five-fold approach strikes a balance between computational efficiency and robust performance evaluation, making it a widely accepted method in machine learning [57].
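The early-stopping rule (a patience of 10 iterations) and the five-fold partition described above can be sketched as follows. This is a minimal illustration under stated assumptions: the validation losses and the sample count of 100 are hypothetical, the function names are invented for this example, and the folds are contiguous, whereas in practice the samples would normally be shuffled first.

```python
def early_stopping_iteration(validation_losses, patience=10):
    """Return the index of the best iteration, stopping as soon as `patience`
    consecutive iterations have failed to improve the best validation loss."""
    best_loss = float("inf")
    best_iteration = -1
    for iteration, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss, best_iteration = loss, iteration
        elif iteration - best_iteration >= patience:
            break
    return best_iteration


def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, validation_indices) for k contiguous folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size


# Hypothetical loss curve: improves for three iterations, then stagnates.
losses = [1.0, 0.8, 0.7] + [0.75] * 15
print(early_stopping_iteration(losses))

# Hypothetical training set of 100 samples; the held-out test set is not included.
folds = list(k_fold_indices(100))
print([len(validation) for _, validation in folds])
```

Because the folds are drawn only from the training portion, the test set never enters model selection, which is the segregation the text refers to.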

3.4. Role of ChatGPT-4 in Model Development

4. Results and Discussions

4.1. Numerical Analysis and Model Performance Evaluation

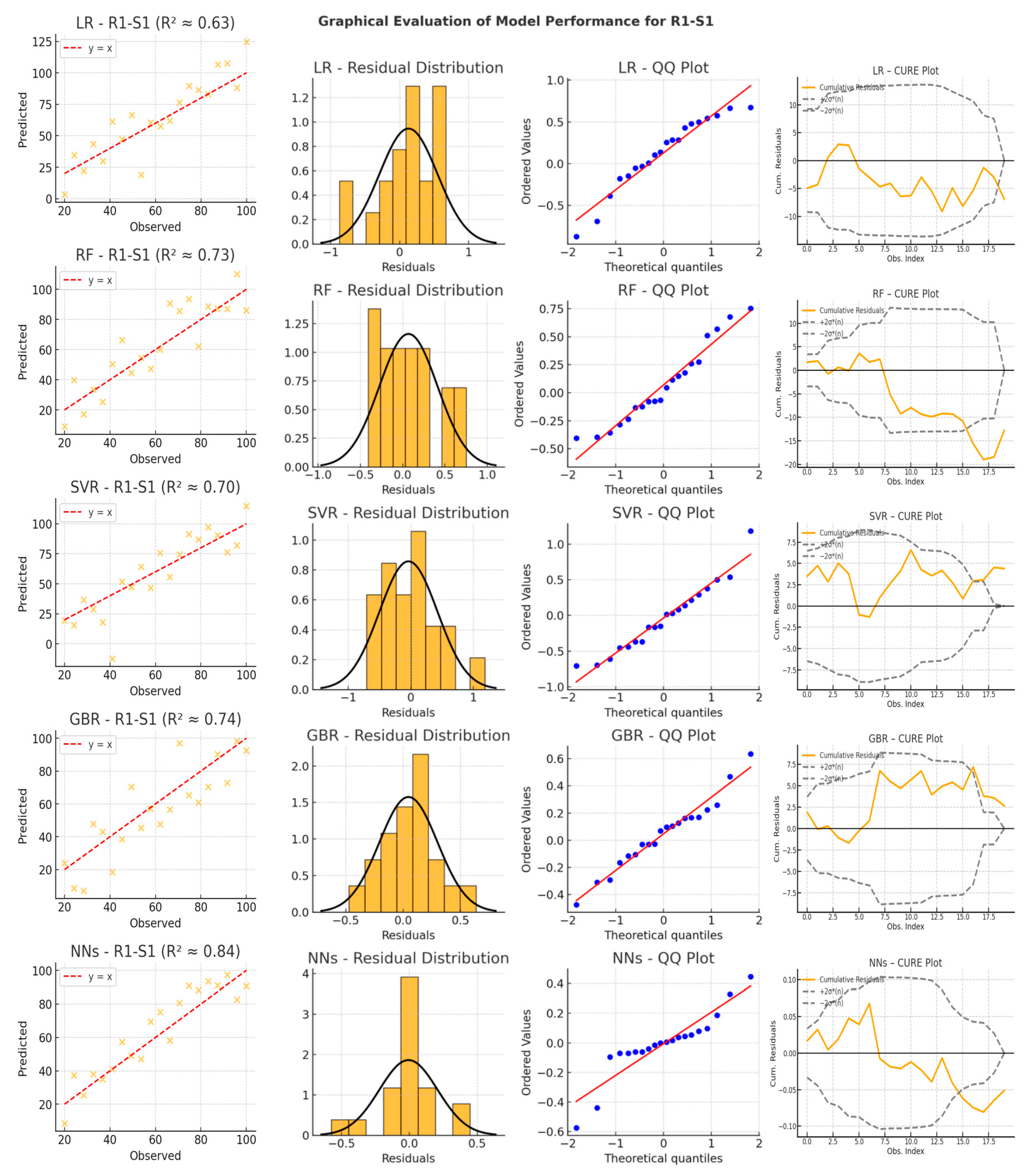

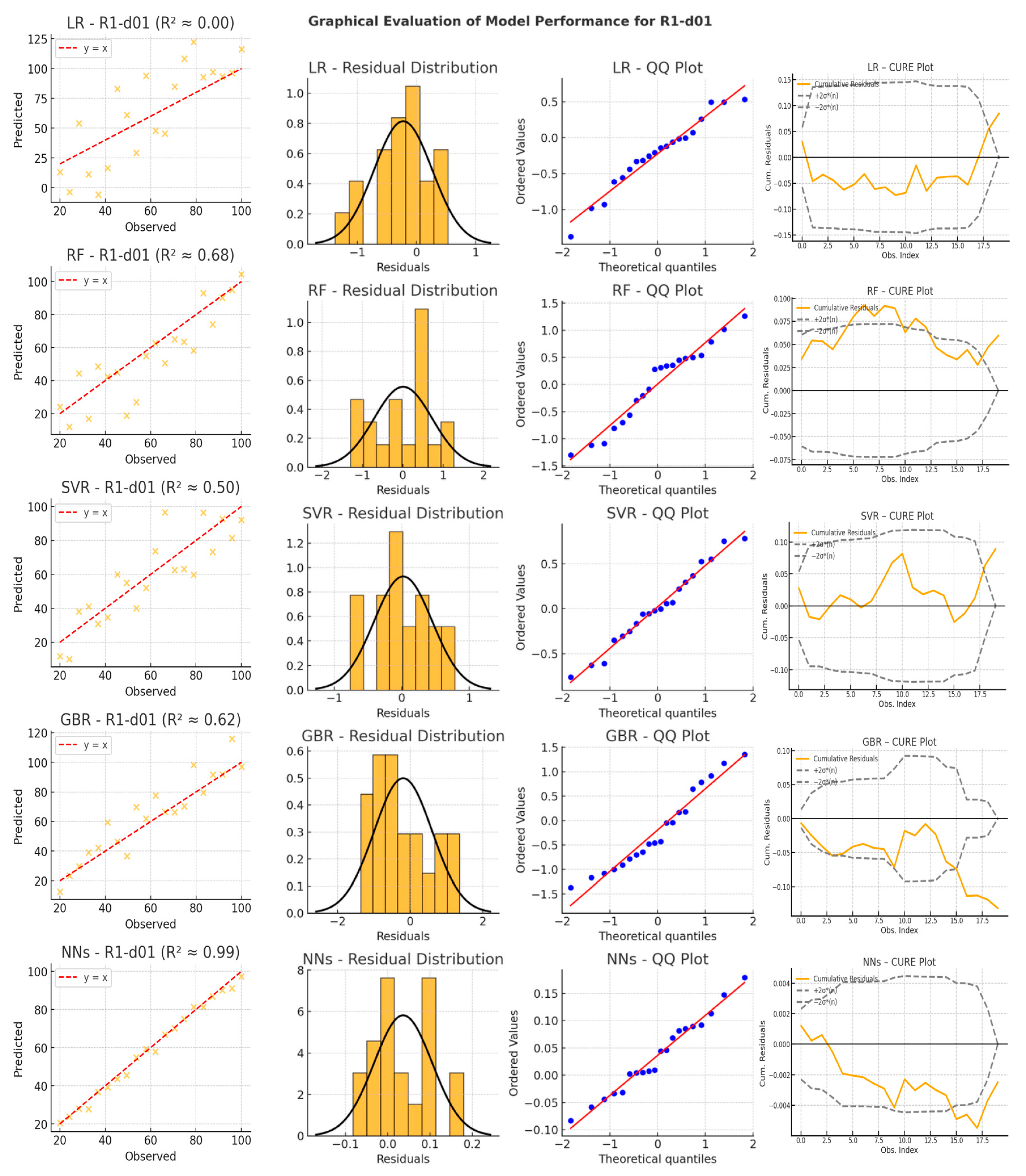

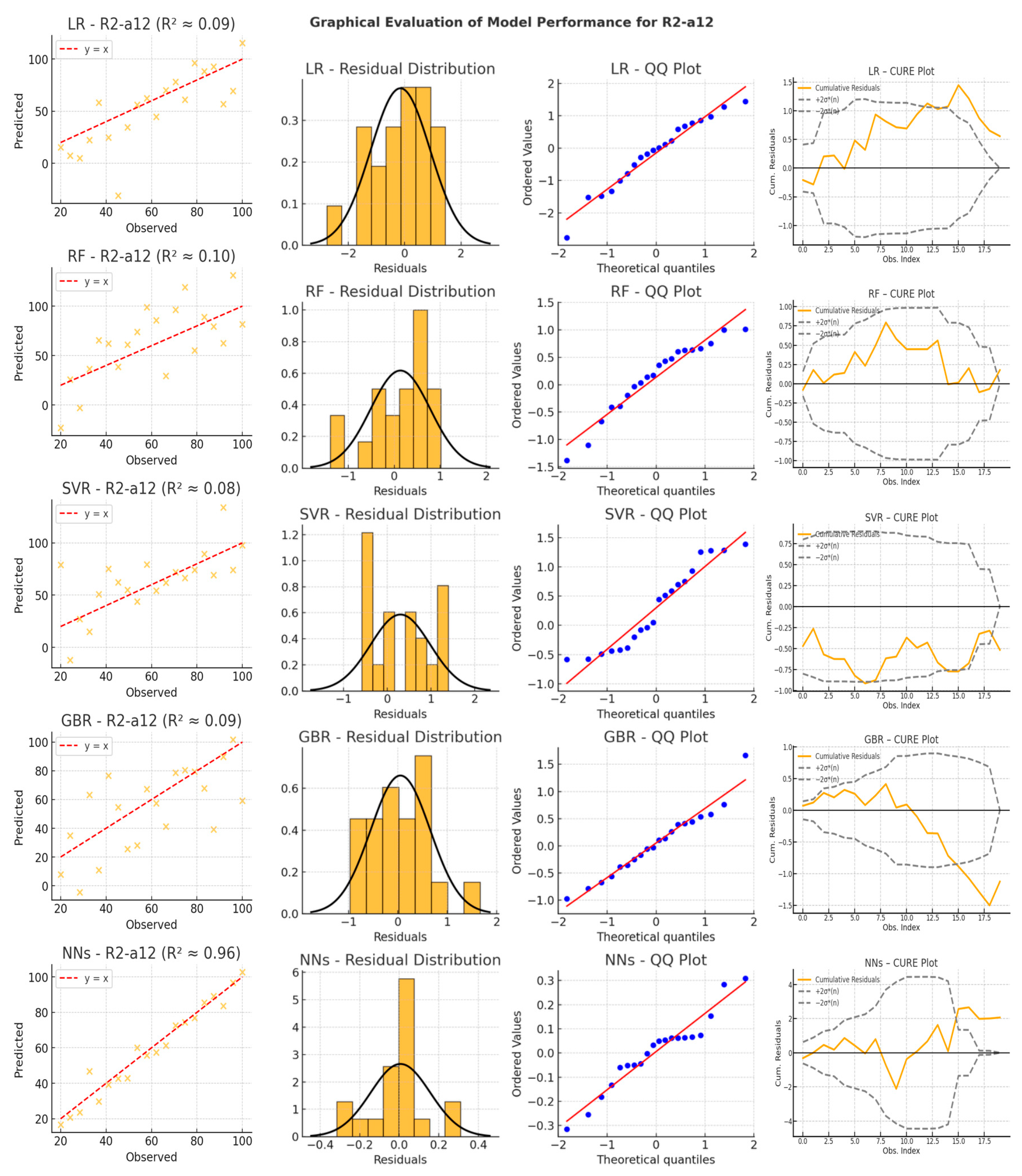

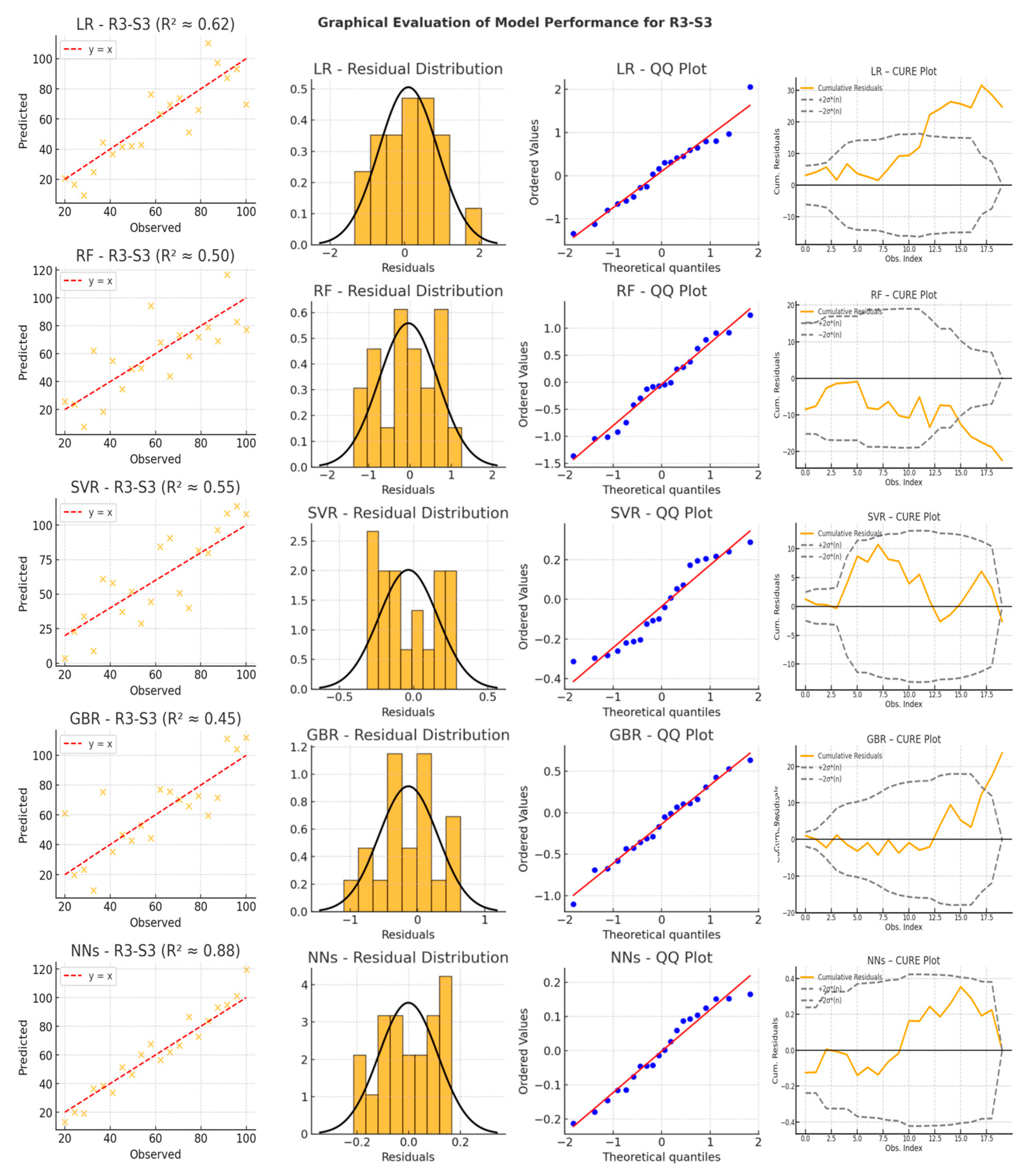

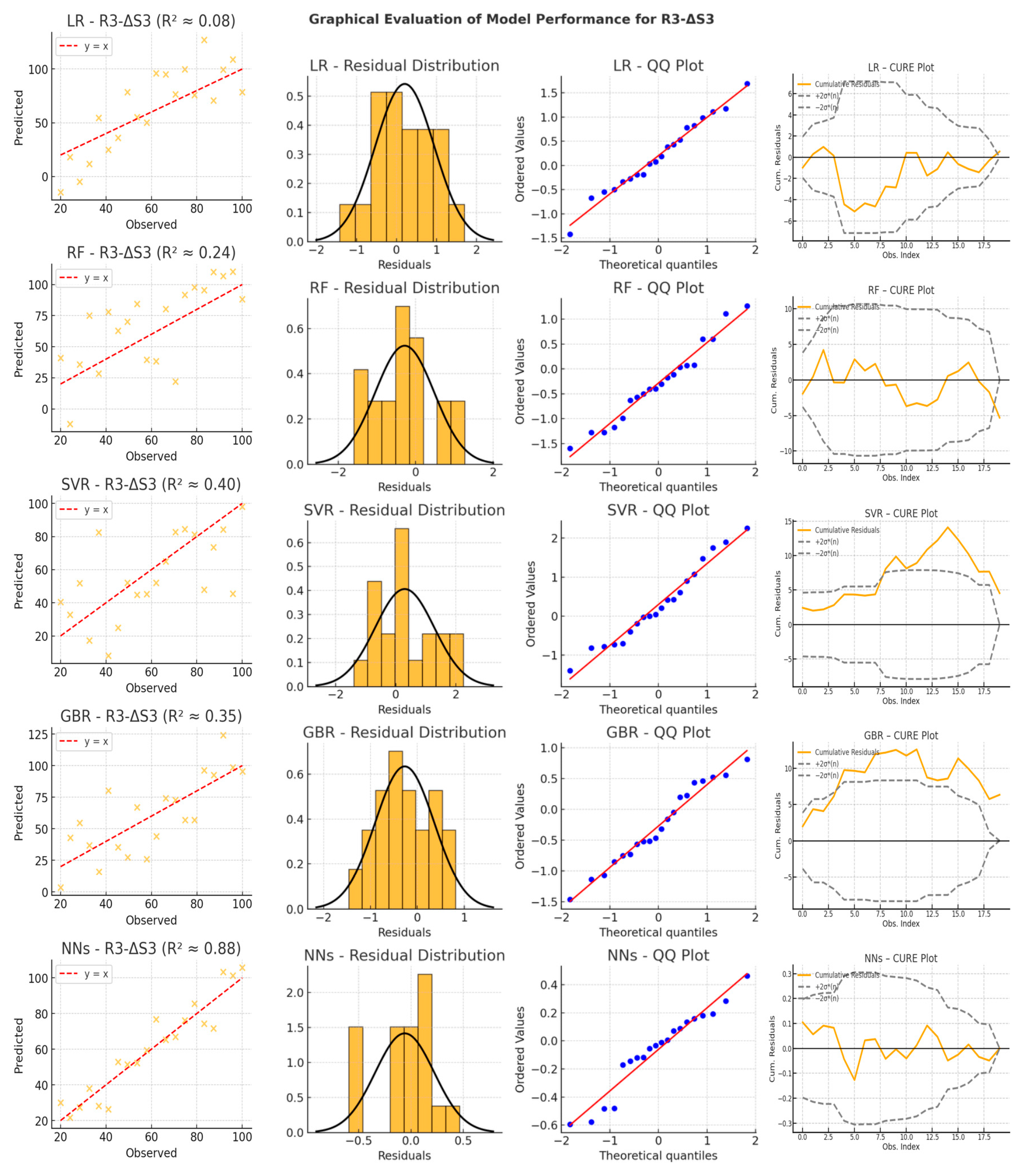

4.2. Graphical Analysis and Model Performance Evaluation

- Scatter plots compare observed versus predicted values, offering insight into model accuracy and bias. LR exhibits significant dispersion, particularly for acceleration and differential distance variables, confirming its limited ability to handle nonlinearity. NNs show the most tightly clustered points around the diagonal, reinforcing their capacity to model complex non-linear relationships with high accuracy. RF and GBR perform reasonably well for speed variables, but their scatter increases significantly in acceleration-related predictions (e.g., R2-a12, R3-a23), indicating instability when faced with rapid changes in driving behavior. SVR struggles to maintain predictive consistency, with some high-variance predictions and deviations from the diagonal, particularly in acceleration variables.

- Residual distribution plots reveal systematic errors across different models. LR residuals exhibit skewness and noticeable deviations from zero, further confirming its difficulty modeling vehicle motion dynamics. RF and GBR show wider residual distributions, capturing some patterns but suffering from high fluctuations. SVR residuals occasionally form multiple peaks, indicating a lack of smoothness and instability across different driving conditions. In contrast, NNs exhibit narrow, symmetric residual distributions, suggesting minimal bias and strong adaptability to both steady and abrupt transitions in vehicle trajectories.

- QQ plots provide further validation of the error distributions by comparing the residuals against a theoretical normal distribution. LR residuals deviate significantly at both tails, failing to capture extreme changes in speed and acceleration. RF and GBR display heavy tails, indicating that some predictions are prone to large errors, especially in more complex driving scenarios. SVR residuals exhibit deviations that suggest sensitivity to input conditions, particularly when tested outside the primary range of training data. Conversely, NNs align closely with the theoretical normal distribution, demonstrating well-distributed errors and a superior generalization ability.

- CURE (Cumulative Residuals) plots, generated according to the methodology proposed by Hauer and Bamfo (1997), illustrate how prediction errors accumulate across the dataset and allow a diagnostic evaluation of the model specification [68]. The inclusion of ±2σ*(n) confidence bands allows for the assessment of whether the cumulative residuals behave like a random walk—a key indicator of correct model specification. In this analysis, LR shows a monotonically increasing residual curve that consistently exceeds the confidence limits, confirming its systematic bias and limited goodness of fit. RF, GBR, and SVR show fluctuating but often divergent patterns, indicating a tendency to accumulate errors over time, especially for the acceleration and differential distance variables. In contrast, the NNs maintain an oscillating pattern that moves within confidence bands, emphasizing their ability to self-correct over successive observations. This stability reflects their superior ability to capture dynamic dependencies and ensure smooth, adaptive trajectory predictions.
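The construction of a CURE curve can be sketched in plain Python. The band formula used here, σ*(n) = sqrt(σ²(n) · (1 − σ²(n)/σ²(N))) with σ²(n) the running sum of squared residuals, is the one commonly attributed to Hauer and Bamfo; the residual values and function names are hypothetical and serve only to contrast an unbiased pattern with a systematically biased one.

```python
import math


def cure_curve(residuals):
    """Cumulative residuals and ±2σ* half-widths for residuals that are
    already sorted by the explanatory variable of interest."""
    running_variance = []
    accumulated = 0.0
    for r in residuals:
        accumulated += r * r
        running_variance.append(accumulated)   # σ²(n)
    total_variance = running_variance[-1]       # σ²(N)

    points = []
    cumulative = 0.0
    for r, variance_n in zip(residuals, running_variance):
        cumulative += r
        if total_variance == 0.0:
            sigma_star = 0.0
        else:
            sigma_star = math.sqrt(max(0.0, variance_n * (1.0 - variance_n / total_variance)))
        points.append((cumulative, 2.0 * sigma_star))
    return points


def fraction_outside(points, tolerance=1e-9):
    """Share of observations whose cumulative residual leaves the ±2σ* band."""
    return sum(1 for c, band in points if abs(c) > band + tolerance) / len(points)


unbiased = [0.5, -0.5] * 5   # hypothetical residuals oscillating around zero
biased = [0.5] * 10          # hypothetical residuals with a constant positive bias

print(fraction_outside(cure_curve(unbiased)))
print(fraction_outside(cure_curve(biased)))
```

A curve that oscillates around zero stays inside the band, while a constant bias produces a monotonically increasing curve that leaves it, which mirrors the contrast between the NN and LR patterns described above.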

4.3. Comparison of Machine Learning Techniques and Future Research Directions

- Linear Regression remains the simplest technique, but its linear assumptions are too restrictive for the inherently non-linear dynamics of vehicle motion in roundabouts. While it achieves moderate performance in speed-related variables (especially for the first radius, R1), its performance deteriorates significantly in predicting acceleration and differential distance. This is evidenced by its near-zero R² values, which indicate that in some cases LR explains scarcely more variance than a naïve baseline that always predicts the mean. Consequently, LR is ill-suited to capturing rapidly changing driving conditions, particularly during roundabout entries and exits.

- Random Forest and Gradient Boosting Regression, both tree-based methods, show promising results for speed variables, often achieving higher R² scores than LR. However, their performance is inconsistent for acceleration and differential distance variables, where they frequently exhibit high error variance. In some cases, near-zero R² values emerge, suggesting a lack of ability to handle rapid sequential changes. Their reliance on splitting the feature space into discrete regions can lead to overfitting on localized patterns, reducing their ability to generalize when driving conditions shift. This instability is particularly evident in the CURE plots, where residuals tend to accumulate unchecked over time, revealing an inability to correct sequential prediction errors. This limitation is especially critical in acceleration and differential distance variables, where tree-based models fail to incorporate sequential dependencies, resulting in inconsistent predictions across different driving scenarios.

- Support Vector Regression is theoretically capable of capturing non-linear relationships through kernel functions, but its predictive performance depends heavily on hyperparameter tuning. The results show that SVR is occasionally effective in speed prediction, but it struggles in other contexts, with notable dips into near-zero R² values. This discrepancy is likely due to difficulties in selecting an optimal kernel width and regularization parameter, which are crucial to balancing bias and variance across diverse driving scenarios. As a result, SVR errors become significant when conditions deviate from the range on which the model was trained, limiting its applicability to more dynamic scenarios.

- Neural Networks, which already demonstrated superior performance in the graphical analysis, further confirm their dominance in numerical results, achieving the highest R² values and the lowest MSE across all variables. Their ability to learn complex patterns and dynamically adapt to changing driving conditions makes them the most suitable model for trajectory prediction in roundabouts.
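For reference, the two metrics used throughout this comparison can be computed as in the following sketch. The observed values are hypothetical; the example only illustrates why an R² close to zero corresponds to a model that is roughly as informative as always predicting the mean, and why a negative value would indicate a model that is worse than that baseline.

```python
def mse(y_true, y_pred):
    """Mean squared error between observed and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 − SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical observed values, for illustration only.
observed = [22.0, 25.0, 28.0, 31.0]
mean_baseline = [sum(observed) / len(observed)] * len(observed)
reversed_trend = [31.0, 28.0, 25.0, 22.0]

print(r_squared(observed, observed))        # perfect predictions
print(r_squared(observed, mean_baseline))   # naive mean predictor
print(r_squared(observed, reversed_trend))  # worse than the mean predictor
print(mse(observed, mean_baseline))
```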

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wu, Y.; Zhu, F. Junction Management for Connected and Automated Vehicles: Intersection or Roundabout? Sustainability 2021, 13, 9482.

- Martin-Gasulla, M.; Elefteriadou, L. Traffic Management with Autonomous and Connected Vehicles at Single-Lane Roundabouts. Transp. Res. Part C Emerg. Technol. 2021, 125, 102964.

- Ziraldo, E.; Govers, M.E.; Oliver, M. Enhancing Autonomous Vehicle Decision-Making at Intersections in Mixed-Autonomy Traffic: A Comparative Study Using an Explainable Classifier. Sensors 2024, 24, 3859.

- Leonardi, S.; Distefano, N. Roundabout Trajectory Planning: Integrating Human Driving Models for Autonomous Vehicles. Sustainability 2023, 15, 6288.

- Benciolini, T.; Fink, M.; Güzelkaya, N.; Wollherr, D.; Leibold, M. Safe and Non-Conservative Trajectory Planning for Autonomous Driving Handling Unanticipated Behaviors of Traffic Participants. arXiv 2024.

- Yiting, W.; Wuxing, Z.; Hui, Z.; Jian, Z. Stochastic Trajectory Planning Considering Parameter and Initial State Uncertainty. IEEE Access 2021.

- Vysotska, N.; Nakonechnyi, I. Automobile System for Predicting the Trajectory of Vehicle Movement. Int. Sci. J. Eng. Agric. 2024, 3, 38–50.

- Zhang, Y. LIDAR–Camera Deep Fusion for End-to-End Trajectory Planning of Autonomous Vehicle. J. Phys. Conf. Ser. 2022, 2284, 012006.

- Tramacere, E.; Luciani, S.; Feraco, S.; Circosta, S.; Khan, I.; Bonfitto, A.; Amati, N. Local Trajectory Planning for Autonomous Racing Vehicles Based on the Rapidly-Exploring Random Tree Algorithm. In Proceedings of the ASME 2021 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, (IDETC-CIE 2021), Virtual, 17–19 August 2021.

- Singh, V.; Sastry Hari, S.K.; Tsai, T.; Pitale, M. Simulation Driven Design and Test for Safety of AI-Based Autonomous Vehicles. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021; pp. 122–128.

- An, H.; Choi, W.; Choi, S. Real-Time Path Planning for Trajectory Control in Autonomous Driving. In Proceedings of the 24th International Conference on Advanced Communication Technology (ICACT), Pyeongchang, Republic of Korea, 13–16 February 2022; pp. 366–371.

- Tezerjani, M.D.; Carrillo, D.; Qu, D.; Dhakal, S.; Mirzaeinia, A.; Yang, Q. Real-Time Motion Planning for Autonomous Vehicles in Dynamic Environments. arXiv 2024, arXiv:2406.02916.

- Jie, C.; Yingbing, C.; Qingwen, Z.; Lu, G.; Ming, L. Real-Time Trajectory Planning for Autonomous Driving with Gaussian Process and Incremental Refinement. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 8999–9005.

- Jiang, Y.; Jin, X.; Xiong, Y.; Liu, Z. A Dynamic Motion Planning Framework for Autonomous Driving in Urban Environments. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–30 July 2020; pp. 5429–5435.

- Wang, Z.; Tu, J.; Chen, C. Reinforcement Learning Based Trajectory Planning for Autonomous Vehicles. In Proceedings of the 2021 China Automation Congress (CAC), Beijing, China, 22–24 October 2021; pp. 2768–2773.

- Liu, J.; Mao, X.; Fang, Y.; Zhu, D.; Meng, M.Q.H. A Survey on Deep-Learning Approaches for Vehicle Trajectory Prediction in Autonomous Driving. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 27–31 December 2021; pp. 978–985.

- Jiang, H.; Chang, L.; Li, Q.; Chen, D. Trajectory Prediction of Vehicles Based on Deep Learning. In Proceedings of the 2019 4th International Conference on Intelligent Transportation Engineering (ICITE), Singapore, 5–7 September 2019; pp. 98–104.

- Xu, Y.; Wang, Y.; Peeta, S. Leveraging Transformer Model to Predict Vehicle Trajectories in Congested Urban Traffic. Transp. Res. Rec. 2022, 2677, 898–909.

- Frauenknecht, B.; Ehlgen, T.; Trimpe, S. Data-Efficient Deep Reinforcement Learning for Vehicle Trajectory Control. In Proceedings of the 2023 IEEE 26th International Conference on Intelligent Transportation Systems (ITSC), Bilbao, Spain, 24–28 September 2023.

- Amin, F.; Gharami, K.; Sen, B. TrajectoFormer: Transformer-Based Trajectory Prediction of Autonomous Vehicles with Spatio-Temporal Neighborhood Considerations. Int. J. Comput. Intell. Syst. 2024, 17, 87.

- Li, G.; Li, S.; Li, S.; Qin, Y.; Cao, D.; Qu, X.; Cheng, B. Deep Reinforcement Learning Enabled Decision-Making for Autonomous Driving at Intersections. Automot. Innov. 2020, 3, 374–385.

- Singh, D.; Srivastava, R. Graph Neural Network with RNNs-Based Trajectory Prediction of Dynamic Agents for Autonomous Vehicles. Appl. Intell. 2022, 52, 12801–12816.

- Jiao, Y.; Miao, M.; Yin, Z.; Lei, C.; Zhu, X.; Nie, L.; Tao, B. A Hierarchical Hybrid Learning Framework for Multi-Agent Trajectory Prediction. IEEE Trans. Intell. Transp. Syst. 2024, 25, 10344–10354.

- Bani-Hani, R.; Aljbour, S.; Shurman, M. Autonomous Vehicles Trajectory Prediction Approach Using Machine Learning. In Proceedings of the 2023 14th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 21–23 November 2023.

- Hao, C.; Chen, Y.; Cheng, S.; Zhang, H. Improving Vehicle Trajectory Prediction with Online Learning. In Proceedings of the 2023 IEEE Intelligent Vehicles Symposium (IV), Anchorage, AK, USA, 4–7 June 2023; pp. 561–567.

- Jardine, P.T.; Givigi, S.N.; Yousefi, S. Experimental Results for Autonomous Model-Predictive Trajectory Planning Tuned with Machine Learning. In Proceedings of the 2017 Annual IEEE International Systems Conference (SysCon), Montreal, QC, Canada, 24–27 April 2017; pp. 1–7.

- Ghariblu, H.; Moghaddam, H.B. Trajectory Planning of Autonomous Vehicle in Freeway Driving. Transp. Telecommun. J. 2021, 22, 278–286.

- Bajić, J.; Herceg, M.; Resetar, I.; Velikic, I. Trajectory Planning for Autonomous Vehicle Using Digital Map. In Proceedings of the 2019 Zooming Innovation in Consumer Technologies Conference (ZINC), Novi Sad, Serbia, 29–30 May 2019; pp. 44–49.

- Wang, C.; Xu, N.; Huang, Y.; Guo, K.; Liu, Y.; Li, Q. Trajectory Planning for Autonomous Vehicles Based on Improved Hybrid A. Int. J. Veh. Des. 2020, 218, 218–239.

- Hu, P.; Ma, Z.; Wang, H.; Li, H.; Peng, K.; Zhang, H. Trajectory Planning Method of Autonomous Vehicle in Road Scene. In Proceedings of the 2022 China Automation Congress (CAC), Xiamen, China, 25–27 November 2022; pp. 512–517.

- Waleed, A.; Hammad, S.; Abdelaziz, M.; Maged, S. Trajectory Planning Approach for Autonomous Electric Bus in Dynamic Environment. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2023, 238, 4255–4270.

- Said, A.; Talj, R.; Francis, C.; Shraim, H. Local Trajectory Planning for Autonomous Vehicle with Static and Dynamic Obstacles Avoidance. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 2738–2743.

- Levy, R.; Haddad, J. Path and Trajectory Planning for Autonomous Vehicles on Roads without Lanes. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 2679–2684.

- Zhang, D.; Yu, Z.; Xiong, L.; Zhao, J.; Zhang, P.; Li, Y.; Xia, L.; Wei, Y.; Li, Z.; Fu, Z. Driving-Behavior-Oriented Trajectory Planning for Autonomous Vehicle Driving on Urban Structural Road. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2020, 235, 975–995.

- Fiorentini, N.; Pellegrini, D.; Losa, M. Overfitting Prevention in Accident Prediction Models: Bayesian Regularization of Artificial Neural Networks. Transp. Res. Rec. J. Transp. Res. Board 2022, 2677, 1455–1470.

- Daoud, G.; El-Darieby, M. Towards a Benchmark for Trajectory Prediction of Autonomous Vehicles. In Proceedings of the 2023 10th International Conference on Dependable Systems and Their Applications (DSA), Tokyo, Japan, 10–11 August 2023; pp. 614–622.

- Bala, J.A.; Adeshina, S.A.; Aibinu, A.M. Implementing Nonlinear Model Predictive Control for Enhanced Trajectory Tracking and Road Anomaly Avoidance in Autonomous Vehicles. In Proceedings of the 2023 2nd International Conference on Multidisciplinary Engineering and Applied Science (ICMEAS), Abuja, Nigeria, 1–3 November 2023; pp. 1–6.

- Malfoy, A.; Ajwad, S.A.; Boudkhil, M. Autonomous Parking System for Vehicles: Trajectory Planning and Control. In Proceedings of the 2023 IEEE 11th International Conference on Systems and Control (ICSC), Sousse, Tunisia, 18–20 December 2023; pp. 1–6.

- Maulud, D.H.; Abdulazeez, A. A Review on Linear Regression Comprehensive in Machine Learning. J. Appl. Sci. Technol. Trends 2020, 1, 140–147.

- Marrie, R.A.; Dawson, N.V.; Garland, A. Quantile Regression and Restricted Cubic Splines Are Useful for Exploring Relationships Between Continuous Variables. J. Clin. Epidemiol. 2009, 62, 511–517.

- Miller, P.; Lubke, G.; McArtor, D.B.; Bergeman, C. Finding Structure in Data Using Multivariate Tree Boosting. Psychol. Methods 2015, 21, 583–602.

- Şahin, E. Assessing the Predictive Capability of Ensemble Tree Methods for Landslide Susceptibility Mapping Using XGBoost, Gradient Boosting Machine, and Random Forest. SN Appl. Sci. 2020, 2, 1308.

- Robnik-Šikonja, M. Improving Random Forests. Lect. Notes Comput. Sci. 2004, 359, 370.

- Ustün, B.; Melssen, W.; Buydens, L. Visualization and Interpretation of Support Vector Regression Models. Anal. Chim. Acta 2007, 595, 299–309.

- Dhhan, W.; Rana, S.; Alshaybawee, T.; Midi, H. The Single-Index Support Vector Regression Model to Address the Problem of High Dimensionality. Commun. Stat.—Simul. Comput. 2018, 47, 2792–2799.

- Somers, M.; Casal, J. Using Artificial Neural Networks to Model Nonlinearity. Organ. Res. Methods 2009, 12, 403–417.

- Blank, T.B.; Brown, S.D. Data Processing Using Neural Networks. Anal. Chim. Acta 1993, 277, 273–287.

- Nti, I.K.; Yarko-Boateng, O.N.; Aning, J. Performance of Machine Learning Algorithms with Different K Values in K-Fold Cross-Validation. Int. J. Inf. Technol. Comput. Sci. 2021, 6, 5.

- Prihandono, M.A.; Harwahyu, R.; Sari, R.F. Performance of Machine Learning Algorithms for IT Incident Management. In Proceedings of the 2020 11th International Conference on Awareness Science and Technology (iCAST), Qingdao, China, 7–9 December 2020; pp. 1–6.

- Fiorentini, N.; Leandri, P.; Losa, M. Defining Machine Learning Algorithms as Accident Prediction Models for Italian Two-Lane Rural, Suburban, and Urban Roads. Int. J. Inj. Control Saf. Promot. 2022, 29, 450–462.

- Joseph, V.R. Optimal Ratio for Data Splitting. Stat. Anal. Data Min. ASA Data Sci. J. 2022, 15, 531–538.

- Joseph, V.R.; Vakayil, A. SPlit: An Optimal Method for Data Splitting. Technometrics 2021, 63, 490–502.

- Rácz, A.; Bajusz, D.; Héberger, K. Effect of Dataset Size and Train/Test Split Ratios in QSAR/QSPR Multiclass Classification. Molecules 2021, 26, 1111.

- Bichri, H.; Chergui, A.; Hain, M. Investigating the Impact of Train/Test Split Ratio on the Performance of Pre-Trained Models with Custom Datasets. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 252–258.

- Wei, Y.T.; Yang, F.; Wainwright, M.J. Early Stopping for Kernel Boosting Algorithms: A General Analysis with Localized Complexities. IEEE Trans. Inf. Theory 2019, 65, 6685–6703.

- Scetbon, M.; Dohmatob, E. Robust Linear Regression: Gradient-Descent, Early-Stopping, and Beyond. In Proceedings of the 26th International Conference on Artificial Intelligence and Statistics (AISTATS), Valencia, Spain, 25–27 April 2023; Volume 206, pp. 11583–11607.

- Wan, H. Gradient Descent Boosting: Convergence and Algorithm. Computer Science, Mathematics. Available online: https://courses.engr.illinois.edu/ece543/sp2017/projects/Haohua%20Wan.pdf (accessed on 10 January 2025).

- Malinin, A.; Prokhorenkova, L.; Ustimenko, A. Uncertainty in Gradient Boosting via Ensembles. In Proceedings of the 9th International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021; pp. 1–17.

- Tayebi Arasteh, S.; Han, T.; Lotfinia, M.; Kuhl, C.; Kather, J.N.; Truhn, D.; Nebelung, S. Large Language Models Streamline Automated Machine Learning for Clinical Studies. Nat. Commun. 2024, 15, 1603.

- Basheer, B.; Obaidi, M. Enhancing Healthcare Data Classification: Leveraging Machine Learning on ChatGPT-Generated Datasets. Int. J. Adv. Appl. Comput. Intell. 2024, 5, 33–34.

- Nasayreh, A.; Mamlook, R.E.; Samara, G.; Gharaibeh, H.; Aljaidi, M.; Alzu’bi, D.; Al-Daoud, E.; Abualigah, L. Arabic Sentiment Analysis for ChatGPT Using Machine Learning Classification Algorithms: A Hyperparameter Optimization Technique. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024, 23, 1–19.

- Weidinger, L.; Mellor, J.; Rauh, M.; Griffin, C.; Uesato, J.; Huang, P.S.; Cheng, M.; Glaese, M.; Balle, B.; Kasirzadeh, A.; et al. Ethical and Social Risks of Harm from Language Models. arXiv 2021, arXiv:2112.04359.

- Feurer, M.; Klein, A.; Eggensperger, K.; Springenberg, J.T.; Blum, M.; Hutter, F. Auto-Sklearn: Efficient and Robust Automated Machine Learning. In Automated Machine Learning; Springer: Cham, Switzerland, 2019; pp. 113–134.

- Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the Opportunities and Risks of Foundation Models. arXiv 2021, arXiv:2108.07258.

- Alsanwy, S.; Qazani, M.C.; Al-Ashwal, W.; Shajari, A.; Nahavandi, S.; Asadi, H. Vehicle Trajectory Prediction Using Deep Learning for Advanced Driver Assistance Systems and Autonomous Vehicles. In Proceedings of the 2024 IEEE International Systems Conference (SysCon), Montreal, QC, Canada, 15–18 April 2024; pp. 1–8.

- Fan, S.; Li, X.; Li, F. Intention-Driven Trajectory Prediction for Autonomous Driving. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; pp. 107–113.

- Li, M.; Li, Z.; Xu, C.; Liu, T. Short-Term Prediction of Safety and Operation Impacts of Lane Changes in Oscillations with Empirical Vehicle Trajectories. Accid. Anal. Prev. 2020, 135, 105345.

- Hauer, E.; Bamfo, J. Two Tools for Finding What Function Links the Dependent Variable to the Explanatory Variables. In Proceedings of the ICTCT Conference, Lund, Sweden, 5–7 November 1997.

| Model | Variable | MSE (Train) | MSE (Validation) | MSE (Test) | R² (Train) | R² (Validation) | R² (Test) |
|---|---|---|---|---|---|---|---|
| LR | R1-S1 | 5.28 | 5.53 | 5.78 | 0.67 | 0.65 | 0.63 |
| RF | R1-S1 | 4.53 | 5.03 | 6.04 | 0.85 | 0.81 | 0.73 |
| SVR | R1-S1 | 4.81 | 5.06 | 6.33 | 0.85 | 0.80 | 0.70 |
| GBR | R1-S1 | 4.19 | 4.65 | 5.58 | 0.86 | 0.83 | 0.74 |
| NNs | R1-S1 | 6.00 × 10⁻⁴ | 9.00 × 10⁻⁴ | 1.10 × 10⁻³ | 1.00 | 0.96 | 0.84 |
| LR | R2-S2 | 16.61 | 17.41 | 18.21 | 0.70 | 0.68 | 0.66 |
| RF | R2-S2 | 14.25 | 15.83 | 19.00 | 0.40 | 0.39 | 0.30 |
| SVR | R2-S2 | 8.33 | 8.77 | 11.00 | 0.68 | 0.64 | 0.55 |
| GBR | R2-S2 | 16.61 | 18.46 | 23.00 | 0.30 | 0.29 | 0.22 |
| NNs | R2-S2 | 2.20 × 10⁻³ | 3.20 × 10⁻³ | 4.50 × 10⁻³ | 1.00 | 0.95 | 0.85 |
| LR | R3-S3 | 14.33 | 15.11 | 16.00 | 0.68 | 0.66 | 0.62 |
| RF | R3-S3 | 12.28 | 13.65 | 16.50 | 0.58 | 0.56 | 0.50 |
| SVR | R3-S3 | 9.48 | 9.98 | 12.50 | 0.67 | 0.64 | 0.55 |
| GBR | R3-S3 | 12.46 | 13.84 | 17.00 | 0.57 | 0.56 | 0.45 |
| NNs | R3-S3 | 3.20 × 10⁻³ | 4.50 × 10⁻³ | 5.80 × 10⁻³ | 0.98 | 0.93 | 0.88 |
| LR | R1-d01 | 1.00 × 10⁻³ | 1.00 × 10⁻³ | 1.00 × 10⁻³ | 5.00 × 10⁻⁴ | 5.00 × 10⁻⁴ | 5.00 × 10⁻⁴ |
| RF | R1-d01 | 3.00 × 10⁻⁴ | 3.00 × 10⁻⁴ | 4.00 × 10⁻⁴ | 0.73 | 0.71 | 0.68 |
| SVR | R1-d01 | 5.00 × 10⁻⁴ | 5.00 × 10⁻⁴ | 6.00 × 10⁻⁴ | 0.56 | 0.54 | 0.50 |
| GBR | R1-d01 | 4.00 × 10⁻⁴ | 4.00 × 10⁻⁴ | 5.00 × 10⁻⁴ | 0.68 | 0.66 | 0.62 |
| NNs | R1-d01 | 0.00 | 0.00 | 0.00 | 1.00 | 0.99 | 0.99 |
| LR | R2-a12 | 0.04 | 0.04 | 0.04 | 0.10 | 0.09 | 0.09 |
| RF | R2-a12 | 0.03 | 0.04 | 0.04 | 0.12 | 0.11 | 0.10 |
| SVR | R2-a12 | 0.03 | 0.04 | 0.04 | 0.10 | 0.09 | 0.08 |
| GBR | R2-a12 | 0.03 | 0.04 | 0.04 | 0.11 | 0.10 | 0.09 |
| NNs | R2-a12 | 4.00 × 10⁻⁴ | 5.00 × 10⁻⁴ | 6.00 × 10⁻⁴ | 0.98 | 0.97 | 0.96 |
| LR | R3-a23 | 0.07 | 0.07 | 0.07 | 0.19 | 0.18 | 0.17 |
| RF | R3-a23 | 0.07 | 0.07 | 0.08 | 0.21 | 0.19 | 0.17 |
| SVR | R3-a23 | 0.07 | 0.07 | 0.08 | 0.20 | 0.18 | 0.16 |
| GBR | R3-a23 | 0.07 | 0.07 | 0.08 | 0.21 | 0.19 | 0.18 |
| NNs | R3-a23 | 3.00 × 10⁻³ | 3.70 × 10⁻³ | 4.50 × 10⁻³ | 0.94 | 0.93 | 0.92 |
| LR | R1-ΔS1 | 0.33 | 0.35 | 0.36 | 0.22 | 0.21 | 0.18 |
| RF | R1-ΔS1 | 0.59 | 0.65 | 0.78 | 0.41 | 0.35 | 0.22 |
| SVR | R1-ΔS1 | 0.45 | 0.48 | 0.57 | 0.57 | 0.50 | 0.43 |
| GBR | R1-ΔS1 | 0.57 | 0.64 | 0.76 | 0.43 | 0.36 | 0.23 |
| NNs | R1-ΔS1 | 0.00 | 0.00 | 5.00 × 10⁻⁴ | 0.95 | 0.95 | 0.94 |
| LR | R2-ΔS2 | 1.97 | 2.07 | 2.18 | 0.08 | 0.07 | 0.07 |
| RF | R2-ΔS2 | 1.77 | 1.97 | 2.37 | 0.13 | 0.13 | 0.12 |
| SVR | R2-ΔS2 | 2.09 | 2.20 | 2.75 | 0.04 | 0.03 | 0.02 |
| GBR | R2-ΔS2 | 1.94 | 2.04 | 2.49 | 0.12 | 0.11 | 0.09 |
| NNs | R2-ΔS2 | 2.00 × 10⁻³ | 2.90 × 10⁻³ | 3.80 × 10⁻³ | 0.93 | 0.92 | 0.91 |
| LR | R3-ΔS3 | 2.51 | 2.64 | 2.77 | 0.09 | 0.09 | 0.08 |
| RF | R3-ΔS3 | 3.85 | 4.27 | 5.13 | 0.43 | 0.37 | 0.24 |
| SVR | R3-ΔS3 | 3.23 | 3.40 | 4.12 | 0.53 | 0.50 | 0.40 |
| GBR | R3-ΔS3 | 3.57 | 3.75 | 4.54 | 0.51 | 0.41 | 0.35 |
| NNs | R3-ΔS3 | 2.90 × 10⁻³ | 4.10 × 10⁻³ | 5.40 × 10⁻³ | 0.89 | 0.89 | 0.88 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Leonardi, S.; Distefano, N.; Gruden, C. Advancing Sustainable Mobility: Artificial Intelligence Approaches for Autonomous Vehicle Trajectories in Roundabouts. Sustainability 2025, 17, 2988. https://doi.org/10.3390/su17072988
