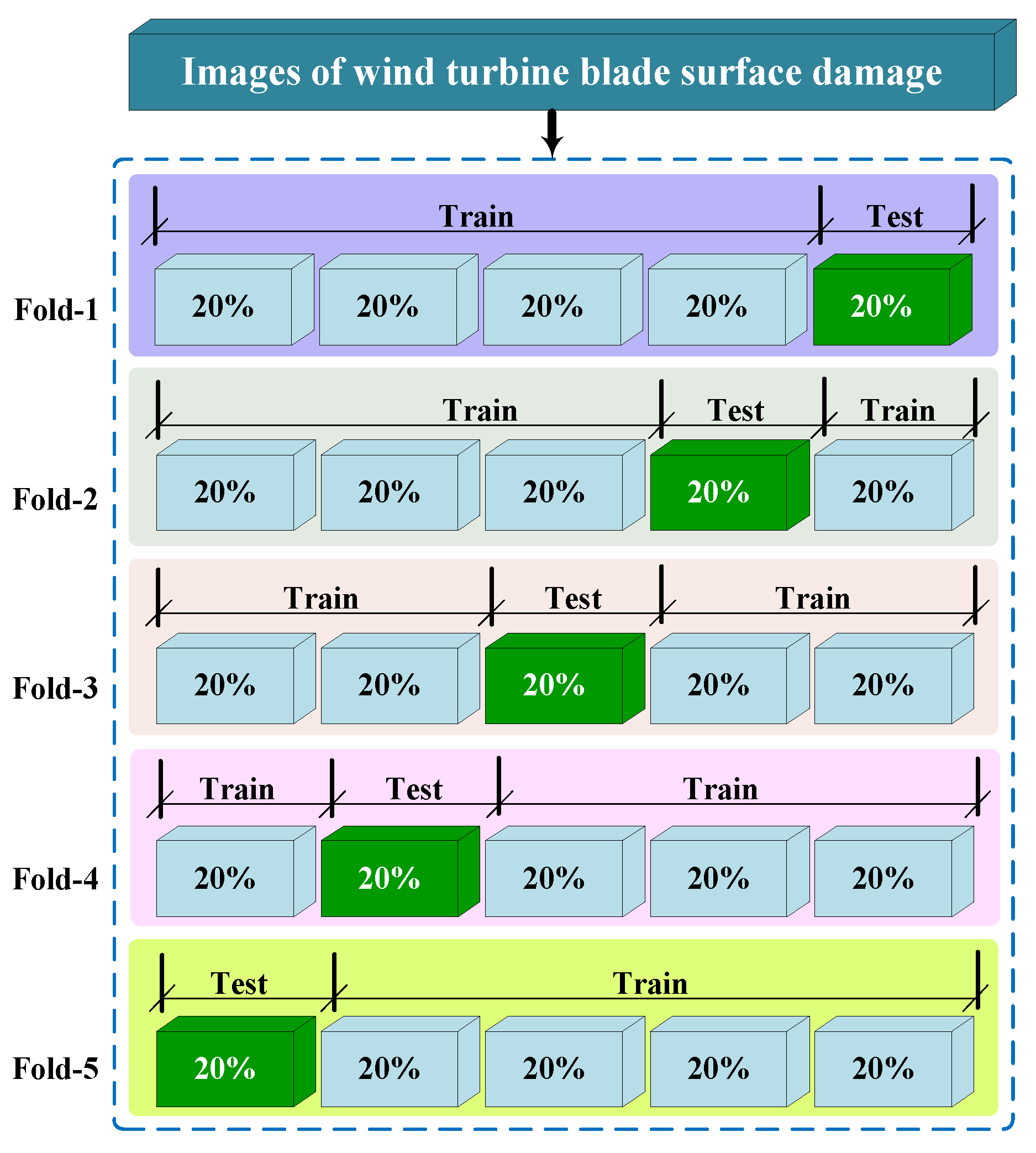

2.3.1. Overview of the Network Architecture

As a single-stage object detection algorithm, YOLOv5 [20] enables high-speed, high-accuracy real-time detection. The core idea of YOLOv5 is to take an entire image as input to the neural network and divide it into multiple grid cells. Each grid cell is not limited to a single object; instead, it predicts multiple potential targets through predefined anchor boxes. Specifically, each grid cell generates a prediction for each anchor box, including bounding box coordinates and class probabilities. Consequently, a single grid cell can detect multiple objects, up to the number of anchor boxes. This design formulates object recognition as a regression problem, balancing detection diversity and computational efficiency.
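To make the grid-and-anchor formulation concrete, the following minimal sketch counts the predictions produced for one input image. The 640-pixel input, the strides (8, 16, 32), the three anchors per cell, the four damage classes, and the extra objectness score per anchor are illustrative assumptions based on common YOLOv5 settings, not values stated in this section.

```python
def yolo_output_shape(img_size: int, stride: int, num_anchors: int, num_classes: int):
    """Shape of one detection scale as (grid, grid, anchors, values per anchor)."""
    grid = img_size // stride
    # Assumed layout per anchor: 4 box coordinates + 1 objectness score + class scores.
    values = 4 + 1 + num_classes
    return (grid, grid, num_anchors, values)


def total_predictions(img_size: int, strides, num_anchors: int) -> int:
    """Total number of anchor-box predictions summed over all detection scales."""
    return sum((img_size // s) ** 2 * num_anchors for s in strides)


if __name__ == "__main__":
    # Hypothetical configuration: 640x640 input, 3 anchors per cell, 4 classes.
    print(yolo_output_shape(640, 32, 3, 4))
    print(total_predictions(640, (8, 16, 32), 3))
```

Under these assumptions, the coarsest scale is a 20 × 20 grid, and every cell contributes one candidate box per anchor at each scale.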

The surface of wind turbine blades often exhibits a diverse array of damage patterns, varying in shape, size, and textural properties, which can complicate the detection process. Wind farms are typically situated in remote, rugged mountainous regions or open areas, characterized by complex backgrounds and variable natural lighting conditions, factors that can readily interfere with image-based detection methods. These challenges necessitate the development of detection models with enhanced accuracy and robustness to meet the stringent requirements of wind power blade maintenance. YOLOv5s [21] is a computationally efficient variant of the YOLOv5 object detection framework. It achieves real-time performance using depthwise separable convolutions and a cross-stage feature fusion strategy. The multi-scale feature extraction architecture integrates Feature Pyramid Network (FPN) and Path Aggregation Network (PAN) modules, coupled with an adaptive anchor box generation mechanism. This allows the model to accurately detect defect targets with significant aspect ratio differences, as often encountered in wind turbine blade damage assessment. The modular design of YOLOv5s further enables flexible scalability of the network structure and incorporation of attention mechanisms. Hence, the YOLOv5s framework has been chosen as the foundational framework for developing a novel model for detecting damage in wind turbine blades.

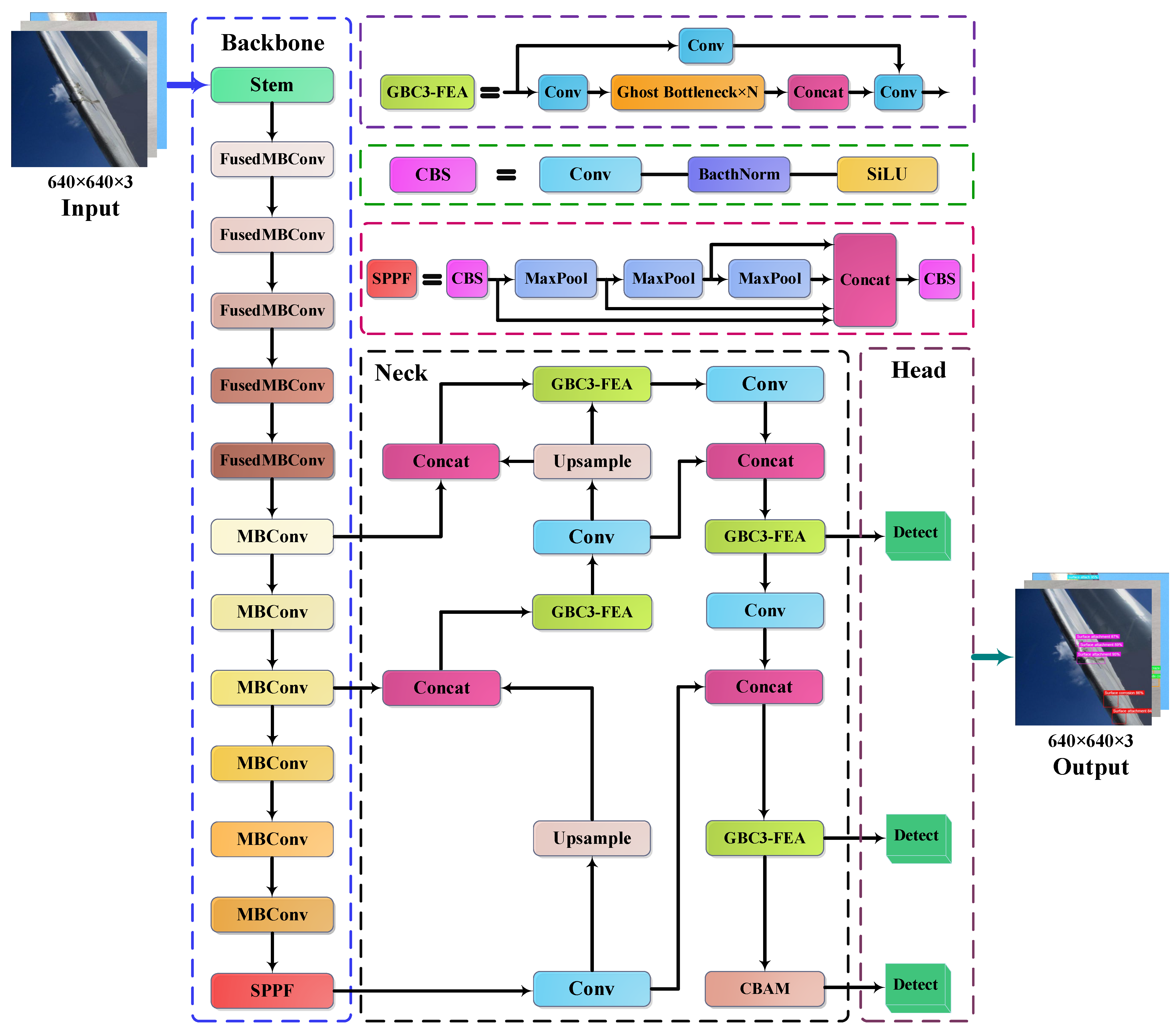

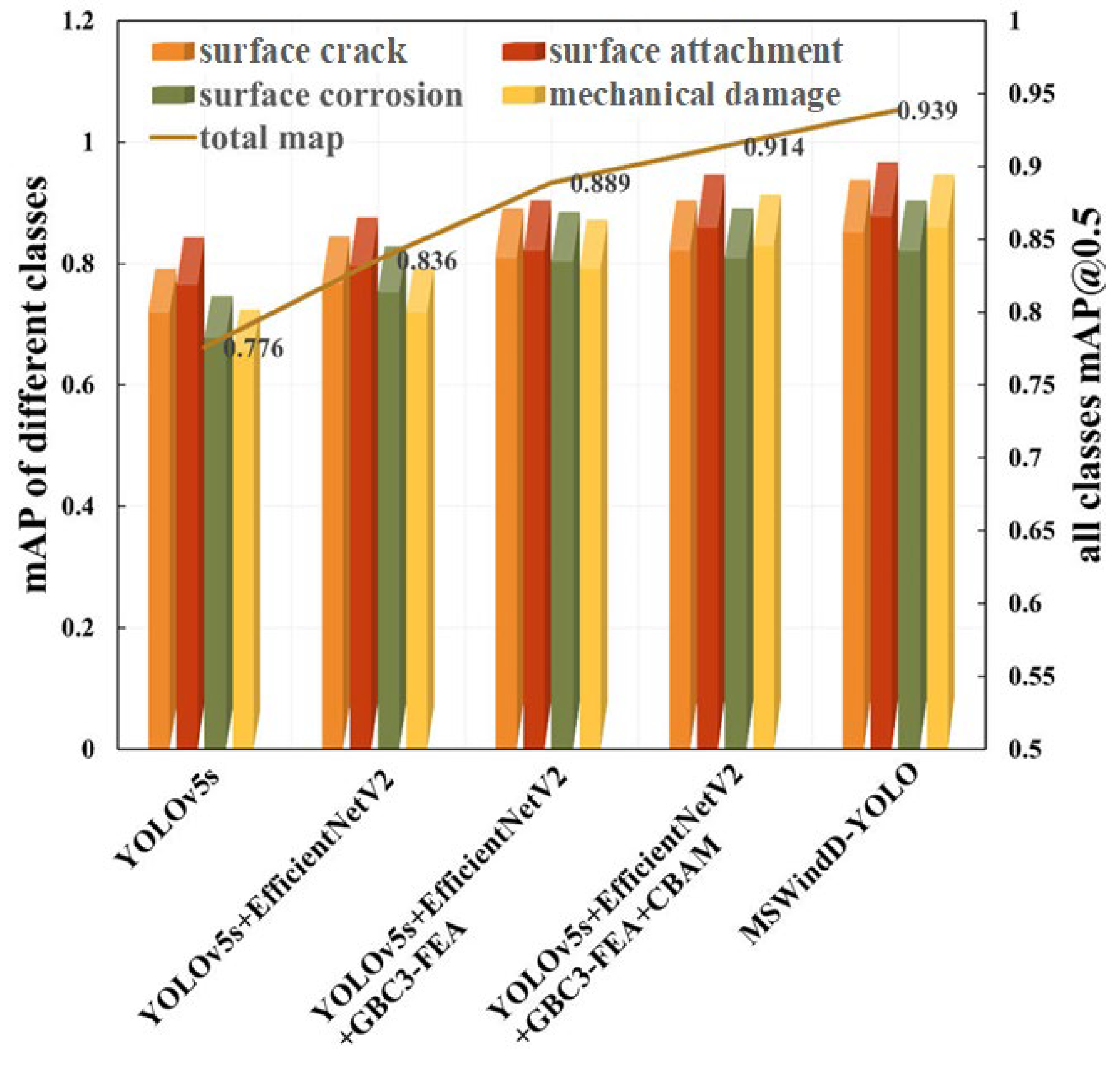

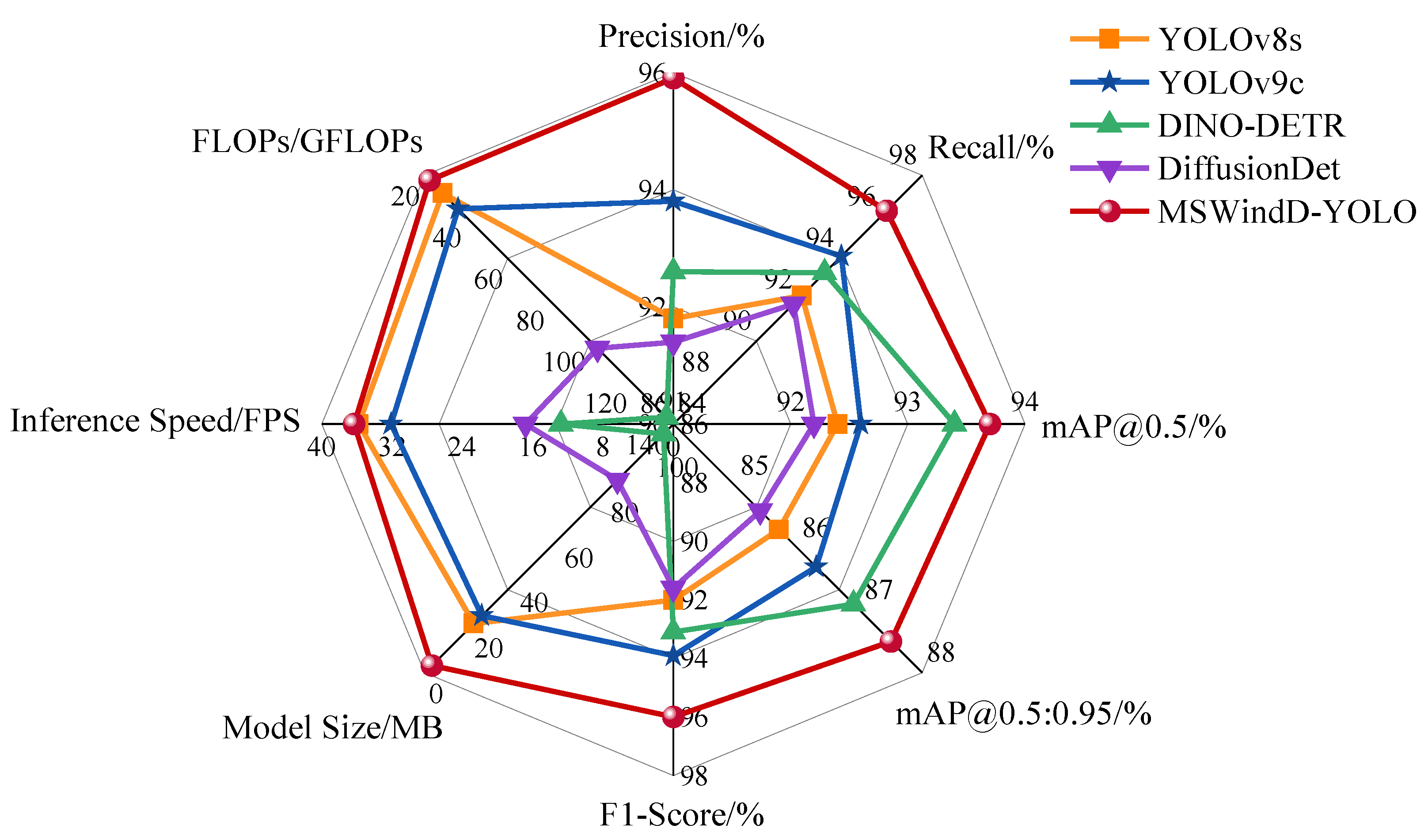

The network structure of the improved wind turbine blade damage detection model (MSWindD-YOLO) is illustrated in Figure 2. To address computational redundancy in the original Focus module within the backbone network, it was replaced by a Stem module. This module demonstrates superior computational efficiency and multi-scale fusion capability, enabling more effective initial feature extraction from input images. Consequently, richer, more accurate information is provided for subsequent processing stages. Furthermore, the EfficientNetV2 architecture was incorporated, leveraging depthwise separable convolutions and attention mechanisms to maintain feature extraction capability while reducing model complexity. The original SPPF (Spatial Pyramid Pooling-Fast) module was retained, with its multi-scale representation enhanced through an adaptive receptive field adjustment mechanism. In the Neck network, the original C3 feature extraction module was replaced with a novel GBC3-FEA module. The GBC3-FEA module incorporates a dynamic grouped convolution strategy combined with channel reparameterization techniques, enabling a lightweight design for the Neck network while preserving its feature expression capability. At the end of the feature pyramid, a hybrid attention mechanism (CBAM) was introduced. By strengthening the response to key features through joint channel and spatial attention modeling, this mechanism effectively alleviates missed detections under complex backgrounds. Furthermore, the original CIoU loss function was replaced with the Shape-IoU loss function, which leverages geometric prior information of the target bounding box to establish a dynamic weighting adjustment mechanism, thereby improving model training speed and localization accuracy.

The process flow of the enhanced wind turbine blade damage detection model (MSWindD-YOLO) involves several steps. Initially, a high-definition imaging module installed on a UAV platform captures real-time images of the blade surface. Subsequently, an onboard edge computing unit performs noise reduction, resolution standardization, and normalization to produce tensor data that conform to the input requirements of the deep learning model. The processed data is then sent to the inference system via a low-latency communication protocol. Within the inference system, the feature extraction network’s Stem module carries out the initial extraction of shallow feature representations. Following this, deep semantic features are progressively extracted through the layers of the EfficientNetV2 architecture. Finally, the SPPF module integrates multi-scale contextual information.
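The resolution standardization and normalization step can be sketched as follows. This is a simplified illustration only: the 640-pixel target size, the nearest-neighbour resize (which ignores aspect ratio), the [0, 1] scaling, and the function name `to_model_tensor` are assumptions, and the noise reduction stage is omitted.

```python
import numpy as np


def to_model_tensor(image_u8: np.ndarray, size: int = 640) -> np.ndarray:
    """Convert an (H, W, 3) uint8 image to a (1, 3, size, size) float32 tensor in [0, 1]."""
    h, w = image_u8.shape[:2]
    # Nearest-neighbour resize: pick one source row/column per target row/column.
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    resized = image_u8[rows[:, None], cols[None, :]]  # (size, size, 3)
    # Channels-first layout and scaling to [0, 1].
    chw = resized.transpose(2, 0, 1).astype(np.float32) / 255.0
    return chw[None]  # add the batch dimension


if __name__ == "__main__":
    frame = np.full((480, 720, 3), 255, dtype=np.uint8)  # stand-in for a UAV frame
    tensor = to_model_tensor(frame)
    print(tensor.shape, tensor.dtype)
```

A production pipeline would more likely use an aspect-preserving (letterbox) resize so that damage shapes are not distorted before detection.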

During the feature fusion stage, the GBC3-FEA module combines detailed multi-level information and semantic features using lightweight convolution. The CBAM attention mechanism then selectively emphasizes essential channels and spatial regions to reduce background interference. The detection module, whose localization is trained with the Shape-IoU loss function, predicts the bounding box coordinates, categories, and confidence levels of the damage from the multi-scale feature maps. Redundant boxes are then eliminated through non-maximum suppression. The resulting detections are structured and overlaid on the original image to create a visual report, which is transmitted to the monitoring terminal in real time.
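The non-maximum suppression step mentioned above can be illustrated with a minimal NumPy sketch. It implements plain greedy NMS for a single class; the 0.5 IoU threshold and the corner-format boxes `(x1, y1, x2, y2)` are assumptions, and the variant actually used in MSWindD-YOLO is not specified in this section.

```python
import numpy as np


def iou_one_to_many(box: np.ndarray, boxes: np.ndarray) -> np.ndarray:
    """IoU between one box and an array of boxes, all given as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def nms(boxes, scores, iou_threshold: float = 0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much, repeat."""
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores)  # indices sorted by descending confidence
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        if rest.size == 0:
            break
        overlaps = iou_one_to_many(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]
    return keep


if __name__ == "__main__":
    candidate_boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [50, 50, 60, 60]]
    confidences = [0.9, 0.8, 0.7]
    print(nms(candidate_boxes, confidences))
```

In this toy input, the second box overlaps the first with an IoU of about 0.68 and is suppressed, while the distant third box is kept.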

2.3.2. Lightweight Optimization of Backbone Network

To enhance the model’s lightweight efficiency, the advanced convolutional neural network structure of EfficientNetV2 [22] was strategically integrated into the backbone network. This approach aims to minimize the use of limited computing resources during wind turbine blade surface damage detection while ensuring real-time data transmission.

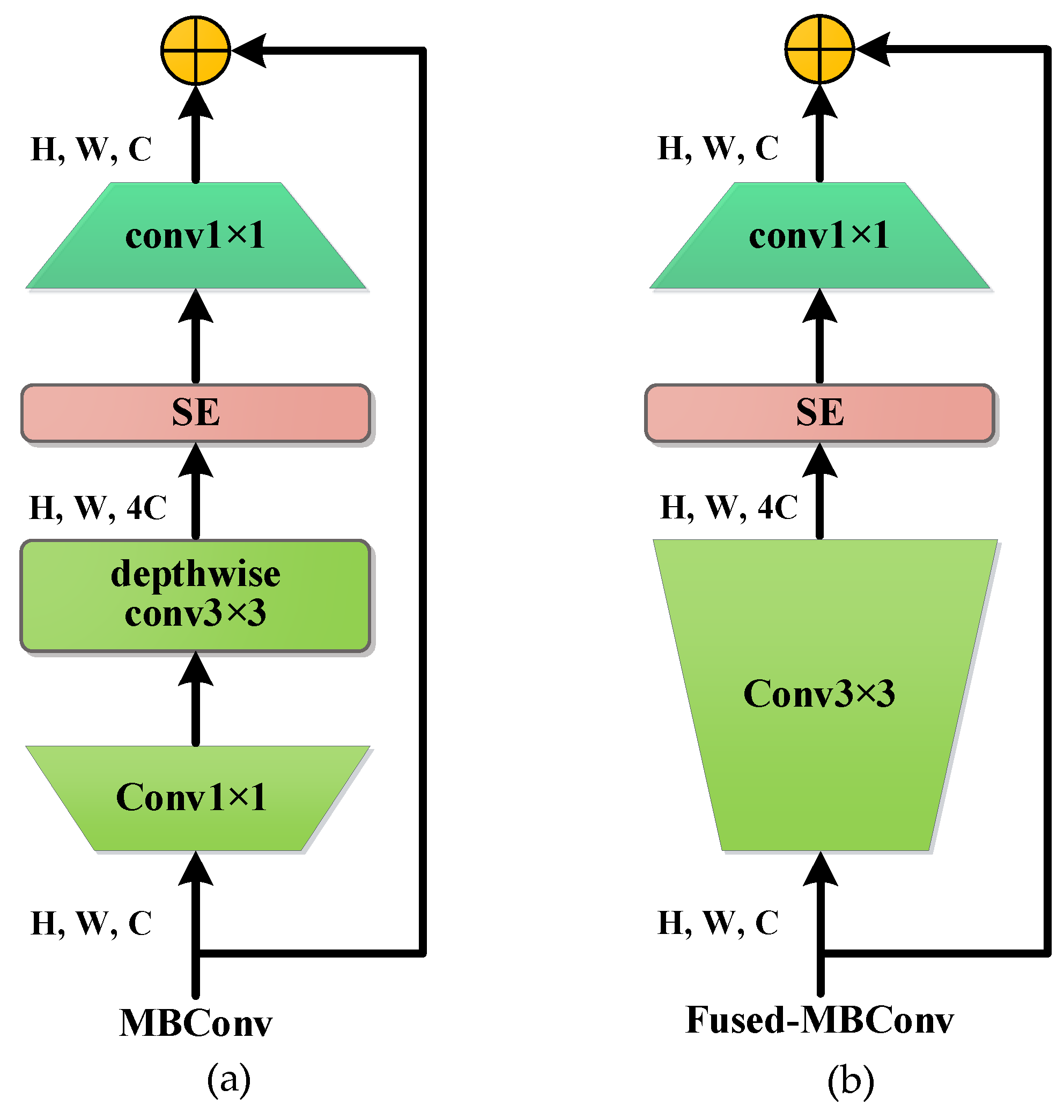

EfficientNetV2 is a convolutional neural network architecture that strategically adapts network depth, width, and resolution using a systematic scaling approach, demonstrating outstanding performance within resource-constrained environments. As illustrated in Figure 3, its core structure is primarily composed of MBConv and Fused-MBConv modules.

The MBConv module [23], serving as the core component of the EfficientNetV2 architecture, comprises a series of operations including a 1 × 1 pointwise convolution (expansion stage), a 3 × 3 depthwise convolution, a Squeeze-Excitation (SE) module, a 1 × 1 pointwise convolution (projection stage), and a skip connection. Initially, the 1 × 1 pointwise convolution in the expansion stage increases the number of input channels to expand feature dimensionality, enabling subsequent convolutions to capture richer contextual information. This is followed by a 3 × 3 depthwise convolution that extracts spatial features while operating independently on each input channel, significantly reducing model parameters and computational complexity. Subsequently, the Squeeze-Excitation module dynamically recalibrates channel-wise feature responses through an attention mechanism, emphasizing informative channels while suppressing less relevant ones. The following 1 × 1 pointwise convolution in the projection stage then reduces the channel count back to the original dimension, consolidating and compressing the extracted spatial features. Finally, a skip connection is employed to add the input tensor to the output of the projection stage when spatial dimensions and channel numbers match, thereby mitigating gradient vanishing issues, preserving feature diversity, and facilitating efficient gradient flow during training. This modular design achieves a balance between model capacity and computational efficiency while maintaining representation power.

The Fused-MBConv module [24] integrates design principles from standard convolution and the MBConv architecture to enhance feature extraction efficiency. In shallow network layers with limited input channels, this module replaces the conventional depthwise separable convolution in traditional MBConv with a single standard convolutional layer. This substitution enables simultaneous spatial feature extraction and cross-channel information fusion within a unified operation. The workflow proceeds as follows: Initial feature extraction is performed through a standard convolutional layer, followed by Batch Normalization to accelerate training convergence and mitigate internal covariate shift. Nonlinear activation is introduced via SiLU or ReLU functions to enhance model expressiveness. By leveraging the dense connectivity characteristic of standard convolutions, this architecture maintains lightweight computation while improving the representational capacity of shallow-layer features, particularly beneficial in scenarios with constrained input channels. Compared to the fragmented computation of separate depthwise and pointwise convolutions in conventional MBConv, the fused structure reduces computational fragmentation and optimizes hardware utilization. This design achieves a superior accuracy-efficiency trade-off on resource-constrained platforms such as mobile devices, demonstrating the efficacy of architectural fusion in balancing performance and computational efficiency.
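The structural difference between the two blocks described above can be made tangible by counting convolution weights. The sketch below is a rough accounting under stated assumptions: batch-normalization parameters and biases are ignored, the output channel count equals the input channel count, the SE bottleneck width is a quarter of the block input channels, and the Fused-MBConv block has no SE module. The function names and the example sizes (24 channels, expansion factor 4) are hypothetical.

```python
def mbconv_weights(c_in: int, expand: int, k: int = 3, se_ratio: float = 0.25) -> int:
    """Convolution weights in an MBConv block (biases and BN parameters ignored)."""
    c_mid = c_in * expand
    c_se = max(1, int(c_in * se_ratio))  # assumed SE bottleneck width
    expand_w = c_in * c_mid              # 1x1 pointwise expansion
    depthwise_w = k * k * c_mid          # kxk depthwise convolution, one filter per channel
    se_w = 2 * c_mid * c_se              # SE squeeze and excite layers
    project_w = c_mid * c_in             # 1x1 pointwise projection
    return expand_w + depthwise_w + se_w + project_w


def fused_mbconv_weights(c_in: int, expand: int, k: int = 3) -> int:
    """Convolution weights in a Fused-MBConv block without SE (an assumption)."""
    c_mid = c_in * expand
    fused_w = k * k * c_in * c_mid       # single standard kxk convolution
    project_w = c_mid * c_in             # 1x1 pointwise projection
    return fused_w + project_w


if __name__ == "__main__":
    print(mbconv_weights(24, 4), fused_mbconv_weights(24, 4))
```

With these example sizes, the fused block carries more weights than MBConv, which suggests that its benefit in shallow layers comes from replacing several small, memory-bound operations with one dense convolution rather than from a lower nominal parameter count.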

2.3.3. GBC3-FEA Feature Extraction Module

Deep convolutional neural networks typically incorporate numerous convolutional layers, inevitably incurring substantial computational costs. To address this, architectures such as MobileNet [25] and ShuffleNet [26] employ strategies like depthwise separable convolutions and channel shuffling, frequently combined with smaller filters for efficiency. However, a significant computational overhead persists in the 1 × 1 pointwise convolutions used for channel fusion, which remain a non-negligible source of memory consumption and FLOPs.

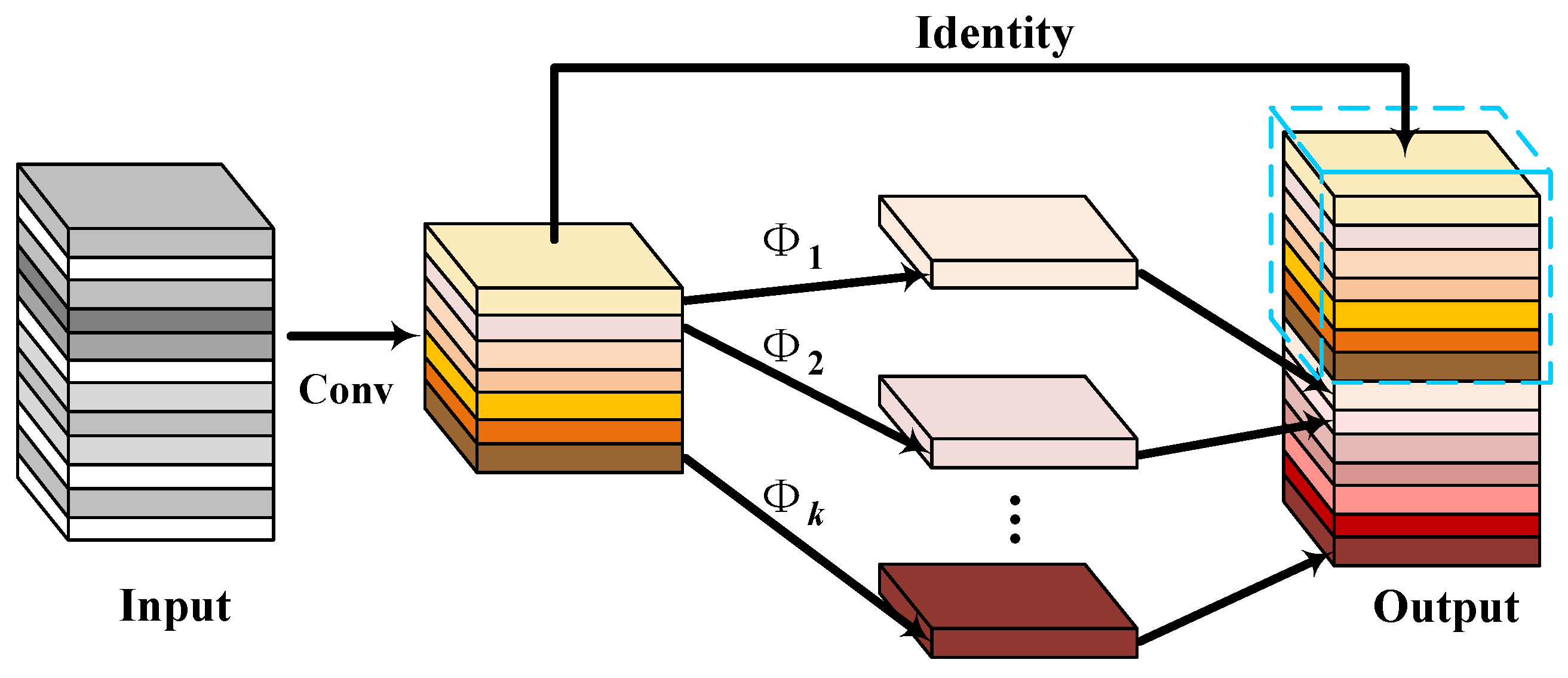

Intermediate feature maps in mainstream convolutional neural networks are often highly redundant. Existing literature [27] has proposed mitigating this issue by reducing the computational resources required to generate these feature maps, specifically by reducing the number of convolutional filters used to generate them. Formally, given input data $X \in \mathbb{R}^{c \times h \times w}$ (where $c$, $h$, and $w$ denote the number of channels, height, and width, respectively), the transformation performed by a convolutional layer to produce $n$ feature maps can be formulated as Equation (1):

$$Y = X * f + b \tag{1}$$

In Equation (1), $*$ denotes the convolution operation, $b \in \mathbb{R}^{n}$ is the bias vector, $Y \in \mathbb{R}^{h' \times w' \times n}$ is the output feature map with $n$ channels, and $f \in \mathbb{R}^{c \times k \times k \times n}$ represents the convolutional filters. Here, $h'$ and $w'$ denote the height and width of the output data, respectively, and $k$ is the spatial dimension of the kernel. Given that both the number of filters $n$ and input channels $c$ are typically large, the resulting FLOPs can easily reach millions or even billions, posing a significant computational burden.

According to Equation (1), the number of parameters to be optimized (including convolutional filters $f$ and bias vector $b$) explicitly depends on the dimensions of input and output feature maps. The feature maps generated by convolutional layers often exhibit significant redundancy, with some feature maps being highly similar to one another. We argue that generating each of these redundant feature maps individually consumes substantial computational resources (FLOPs) and parameters, which is unnecessary. Instead, the output feature maps can be treated as “ghost” features obtained through low-cost transformations applied to a small number of intrinsic feature maps; these intrinsic feature maps typically have smaller dimensions and are generated by standard convolutional layers. Specifically, $m$ intrinsic feature maps $Y' \in \mathbb{R}^{h' \times w' \times m}$ can be generated through primary convolution operations:

$$Y' = X * f' \tag{2}$$

In Equation (2), $f' \in \mathbb{R}^{c \times k \times k \times m}$ represents the convolutional filters used (with $m \le n$). For brevity, the bias vectors are omitted. Hyperparameters such as filter size, stride, and padding scheme remain identical to those in standard convolution (Equation (1)) to ensure consistency in spatial dimensions ($h'$ and $w'$) of the output feature maps. Building upon this foundation, to ultimately obtain the required $n$ feature maps, a series of low-complexity linear operations are applied to each intrinsic feature map in $Y'$ to generate $s$ ghost features. This process can be mathematically expressed as Equation (3):

$$y_{ij} = \Phi_{i,j}(y'_i), \quad \forall\, i = 1, \ldots, m, \quad j = 1, \ldots, s \tag{3}$$

In Equation (3), $y'_i \in Y'$ denotes the $i$-th intrinsic feature map. The symbol $\Phi_{i,j}$ represents the $j$-th linear transformation applied to $y'_i$ for the generation of the $j$-th ghost feature map $y_{ij}$, where $j \in [1, s-1]$. The last operation, $\Phi_{i,s}$, is an identity mapping used to preserve the original intrinsic feature map, as illustrated in Figure 4. Thus, Equation (3) yields $n = m \cdot s$ output feature maps in total, denoted as $Y = [y_{11}, y_{12}, \ldots, y_{ms}]$. Critically, these linear transformations $\Phi$ (e.g., 3 × 3 depthwise convolutions) are channel-wise operations, which incur significantly lower computational complexity than standard convolutions.
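The two-step construction above (a primary convolution followed by cheap channel-wise operations and an identity mapping) can be sketched in NumPy. This is an illustrative forward pass only, under several assumptions: the primary convolution is pointwise (1 × 1), the cheap operations are 3 × 3 depthwise convolutions with zero padding, and the names `ghost_module`, `primary_w`, and `cheap_kernels` are hypothetical.

```python
import numpy as np


def depthwise_conv3x3(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Per-channel 3x3 cross-correlation with zero padding. x: (M, H, W), kernels: (M, 3, 3)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x)
    for dy in range(3):
        for dx in range(3):
            out += kernels[:, dy, dx][:, None, None] * padded[:, dy:dy + h, dx:dx + w]
    return out


def ghost_module(x: np.ndarray, primary_w: np.ndarray, cheap_kernels: np.ndarray) -> np.ndarray:
    """x: (C, H, W); primary_w: (M, C); cheap_kernels: (s - 1, M, 3, 3). Returns (M * s, H, W)."""
    # Primary (here pointwise) convolution produces the m intrinsic feature maps Y'.
    intrinsic = np.einsum("mc,chw->mhw", primary_w, x)
    # Each set of cheap depthwise kernels yields one ghost map per intrinsic map.
    ghosts = [depthwise_conv3x3(intrinsic, k) for k in cheap_kernels]
    # The identity mapping keeps the intrinsic maps themselves in the output.
    return np.concatenate([intrinsic] + ghosts, axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    features = rng.standard_normal((8, 5, 5))
    weights = rng.standard_normal((4, 8))
    kernels = rng.standard_normal((1, 4, 3, 3))  # s = 2
    print(ghost_module(features, weights, kernels).shape)
```

Here $m = 4$ intrinsic maps and $s = 2$ give $n = m \cdot s = 8$ output maps, half of which cost only a 3 × 3 channel-wise operation each.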

Given an input feature map $X \in \mathbb{R}^{c \times h \times w}$, the Ghost module first generates $m$ intrinsic feature maps $Y'$ using a primary $k \times k$ convolution. A $d \times d$ depthwise convolution is then applied to each feature map in $Y'$ as a linear transformation to produce $s$ ghost feature maps per intrinsic map. The final output $A \in \mathbb{R}^{n \times h' \times w'}$ is formed by concatenating these $n$ feature maps. The computational costs, measured in FLOPs, for generating $n$ output feature maps via conventional convolution (denoted as $B$) and the proposed Ghost convolution (denoted as $C$) are compared in Equation (4). It is evident from Equation (4) that standard convolution requires roughly $s$ times more computation than Ghost convolution.
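The claimed speed-up of roughly $s$ can be checked numerically. The sketch below counts multiply-accumulate operations from the definitions in this subsection, assuming that the identity mapping is free and that bias, normalization, and activation costs are ignored; the layer sizes in the example are hypothetical.

```python
def standard_conv_flops(n: int, c: int, k: int, h_out: int, w_out: int) -> int:
    """Cost B: n filters of size c x k x k evaluated at every output position."""
    return n * h_out * w_out * c * k * k


def ghost_conv_flops(n: int, c: int, k: int, h_out: int, w_out: int, s: int, d: int) -> int:
    """Cost C: m = n / s primary filters plus (s - 1) cheap d x d depthwise maps per intrinsic map."""
    m = n // s
    primary = m * h_out * w_out * c * k * k
    cheap = (s - 1) * m * h_out * w_out * d * d
    return primary + cheap


if __name__ == "__main__":
    # Hypothetical layer: 64 input and output channels, 3x3 kernels, 40x40 output, s = 2.
    b = standard_conv_flops(64, 64, 3, 40, 40)
    c = ghost_conv_flops(64, 64, 3, 40, 40, s=2, d=3)
    print(b, c, round(b / c, 3))
```

For this example the ratio is slightly below 2, consistent with the statement that the gain approaches $s$ when the cheap-operation kernel area $d \times d$ is small relative to $c \cdot k \cdot k$.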

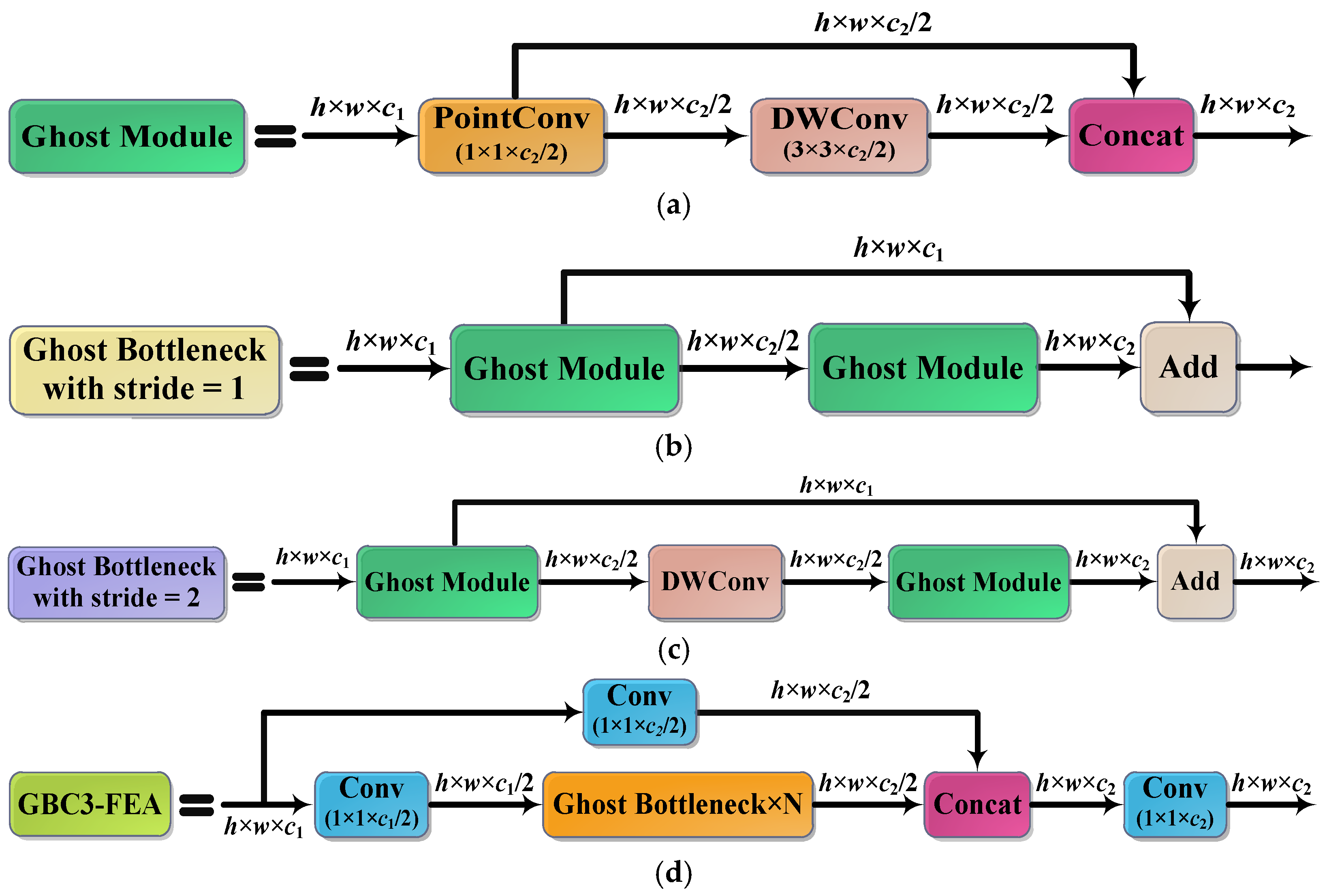

To enable the deployment of CNNs on resource-constrained devices by lowering their computational demands, we integrated the Ghost module (Figure 5a) into a standard bottleneck structure, forming a Ghost bottleneck module. This bottleneck design has two variants, distinguished by their stride values (stride = 1 and stride = 2). As shown in Figure 5b, the first variant (stride = 1) stacks two Ghost modules with a residual (shortcut) connection that adds the input to the output. Batch normalization (BN) and ReLU activation follow the first Ghost module, while the second employs a linear projection (followed by BN) without ReLU. The second variant (stride = 2, Figure 5c) accommodates downsampling; a depthwise convolution (DWConv) layer is inserted between the two Ghost modules to enable cross-layer feature evolution. To maximize efficiency, the primary convolution within each Ghost module utilizes pointwise convolution for channel expansion.

To further reduce the model’s computational footprint and enhance its damage feature extraction efficiency, we developed a lightweight feature extraction architecture, termed the GBC3-FEA module (Figure 5d), by integrating Ghost Bottlenecks into the YOLOv5s C3 module. The C3 module’s multi-branch design with dense connections serves as a suitable foundation for this integration, enabling a significant reduction in computational complexity while preserving representational capacity. In our GBC3-FEA design, an initial standard convolutional layer first halves the input channel depth. The features are then processed through a sequential cascade of Ghost bottleneck layers and a residual branch. This dual-pathway architecture captures rich semantic information, which is subsequently merged via a concatenation operation. Finally, a convolutional layer refines the fused features to enhance contextual coherence.

The novelty of the proposed GBC3-FEA module lies in its integration of Ghost convolution and the C3 module from YOLOv5 into a unified lightweight feature extraction architecture, specifically designed for wind turbine blade damage detection. Unlike standard lightweight approaches such as MobileNet or ShuffleNet, which focus mainly on general-purpose efficiency, our module explicitly reduces computational cost while preserving multi-scale feature fusion capabilities through the C3 structure. Moreover, by embedding Ghost Bottlenecks, which use low-cost linear operations to generate “ghost” features, within the C3 module’s multi-branch layout, we achieve a better trade-off between accuracy and computational efficiency compared to conventional lightweight convolutional designs. This innovation is particularly valuable in the context of vision-based structural health monitoring, where real-time processing under hardware constraints is critical.

2.3.4. Attention Mechanism Introduction

In developing a damage detection model for wind turbine blades, a critical challenge is the precise identification of defects within complex and dynamically changing natural environments. To address this, we have conducted an in-depth study on methods to enhance the focus on blade damage characteristics, with the goal of comprehensively extracting latent damage features from inspected blades. Our approach integrates the Convolutional Block Attention Module (CBAM) [28] into the MSWindD-YOLO framework. By combining channel and spatial attention, CBAM dynamically recalibrates feature map weights across different dimensions, enabling the model to adaptively concentrate on regions critical for damage identification. This mechanism significantly enhances the sensitivity towards blade damage features while simultaneously improving robustness and accuracy against complex backgrounds. Consequently, our work contributes to the advancement of efficient and intelligent detection systems for wind turbine blade damage.

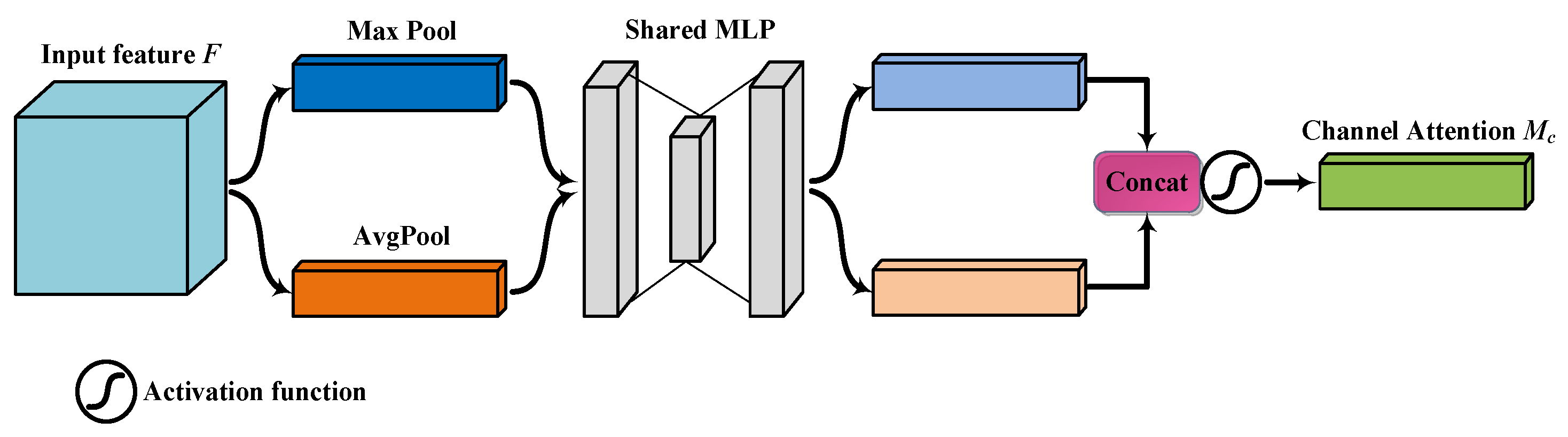

CBAM is an attention mechanism consisting of a Channel Attention Module (CAM) and a Spatial Attention Module (SAM). CBAM is designed to enhance the performance of Convolutional Neural Networks in tasks such as image recognition. As illustrated in Figure 6, the input features are processed sequentially, first by the channel attention mechanism and then by the spatial attention mechanism, which emphasizes informative spatial locations. This design enables the network to concentrate on salient features across both the channel and spatial dimensions.

The Channel Attention Module (CAM) is responsible for assigning varying weights to features across the channel dimension, with its architecture illustrated in Figure 7. Within the CAM, global average pooling (GAP) and global maximum pooling (GMP) are applied in parallel to each channel of the input feature map. These two pooling operations extract the global average and global maximum information of the feature map, respectively. Subsequently, the results of these pooling processes are fed into a shared multilayer perceptron (MLP) with hidden layers for further processing. Following summation, the MLP outputs are fed into a sigmoid activation function, yielding the final channel attention weights. These weights are utilized to adjust the channel-wise weights of the input feature map, thereby enhancing the model’s focus on critical channels.

Specifically, the output $M_c(F)$ of the Channel Attention Module (CAM) can be mathematically formulated as Equation (5):

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big) = \sigma\big(W_1(W_2(F^{c}_{avg})) + W_1(W_2(F^{c}_{max}))\big) \tag{5}$$

where $\sigma$ denotes the sigmoid activation function, $\mathrm{MLP}$ represents a shared multi-layer perceptron with hidden layers, $W_1$ and $W_2$ correspond to the output layer weights and hidden layer weights within the $\mathrm{MLP}$ architecture, while $F^{c}_{avg}$ and $F^{c}_{max}$ respectively indicate the channel-wise global average pooling features and global max pooling features.
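A minimal NumPy sketch of the channel attention computation may help fix the data flow. It assumes a single hidden layer with ReLU activation and no biases; the argument names `w_hidden` and `w_out` stand for the hidden-layer and output-layer weights of the shared MLP and are hypothetical, as is the reduction ratio implied by their shapes.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(f: np.ndarray, w_hidden: np.ndarray, w_out: np.ndarray):
    """f: (C, H, W); w_hidden: (C // r, C); w_out: (C, C // r). Returns (reweighted f, weights)."""
    avg = f.mean(axis=(1, 2))  # global average pooling, one value per channel
    mx = f.max(axis=(1, 2))    # global max pooling, one value per channel

    def mlp(v: np.ndarray) -> np.ndarray:
        # The same MLP (shared weights) is applied to both pooled descriptors.
        return w_out @ np.maximum(w_hidden @ v, 0.0)

    weights = sigmoid(mlp(avg) + mlp(mx))  # channel attention weights in (0, 1)
    return f * weights[:, None, None], weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feature_map = rng.standard_normal((8, 4, 4))
    out, w = channel_attention(feature_map,
                               rng.standard_normal((2, 8)),
                               rng.standard_normal((8, 2)))
    print(out.shape, w.shape)
```

Each channel of the input is scaled by a single scalar, so the spatial layout inside a channel is left unchanged at this stage.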

Although the Channel Attention Module (CAM) can effectively highlight the importance of different channels, it may overlook spatial positional information within the feature maps. To address this limitation, CBAM introduces a Spatial Attention Module (SAM) to complement its functionality. The architecture of the Spatial Attention Module is illustrated in Figure 8.

The Spatial Attention Module (SAM) generates a spatial attention mask by leveraging inter-spatial relationships of features. The module first applies both average pooling and maximum pooling along the channel dimension of the input feature map. This operation produces two 2D feature maps, each encoding a different type of spatial context (average-pooled and max-pooled). These two maps are then concatenated along the channel axis to form a composite feature descriptor. This descriptor is processed by a standard convolutional layer with a 7 × 7 kernel and then passed through a sigmoid function to create the spatial weight map. This final weight map is applied to the input feature map via element-wise multiplication, effectively recalibrating it to emphasize semantically informative regions. The output $M_s(F)$ of the Spatial Attention Module can be formally expressed by Equation (6):

$$M_s(F) = \sigma\big(f^{7 \times 7}([\mathrm{AvgPool}(F); \mathrm{MaxPool}(F)])\big) = \sigma\big(f^{7 \times 7}([F^{s}_{avg}; F^{s}_{max}])\big) \tag{6}$$

where $f^{7 \times 7}$ denotes a convolution operation with a 7 × 7 kernel, and $F^{s}_{avg}$ and $F^{s}_{max}$ represent the feature maps obtained by average pooling and maximum pooling along the channel dimension, respectively.
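The spatial attention path can be sketched in the same style. The code below assumes zero padding so that the mask keeps the input resolution, a bias-free 7 × 7 kernel with two input channels, and cross-correlation semantics as used by deep learning frameworks; the function and argument names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def spatial_attention(f: np.ndarray, kernel: np.ndarray):
    """f: (C, H, W); kernel: (2, 7, 7). Returns (reweighted f, spatial mask of shape (H, W))."""
    avg = f.mean(axis=0)  # average over channels -> (H, W)
    mx = f.max(axis=0)    # maximum over channels -> (H, W)
    descriptor = np.stack([avg, mx], axis=0)  # concatenated 2-channel descriptor
    pad = kernel.shape[-1] // 2
    padded = np.pad(descriptor, ((0, 0), (pad, pad), (pad, pad)))
    # windows[c, y, x] is the 7x7 neighbourhood of position (y, x) in channel c.
    windows = sliding_window_view(padded, kernel.shape[1:], axis=(1, 2))
    logits = np.einsum("chwij,cij->hw", windows, kernel)
    mask = 1.0 / (1.0 + np.exp(-logits))  # sigmoid -> weights in (0, 1)
    return f * mask[None], mask


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feature_map = rng.standard_normal((8, 10, 12))
    out, mask = spatial_attention(feature_map, rng.standard_normal((2, 7, 7)) * 0.1)
    print(out.shape, mask.shape)
```

In contrast to the channel weights, the mask assigns one weight per spatial position and is shared by all channels.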

2.3.5. Loss Function Redefinition

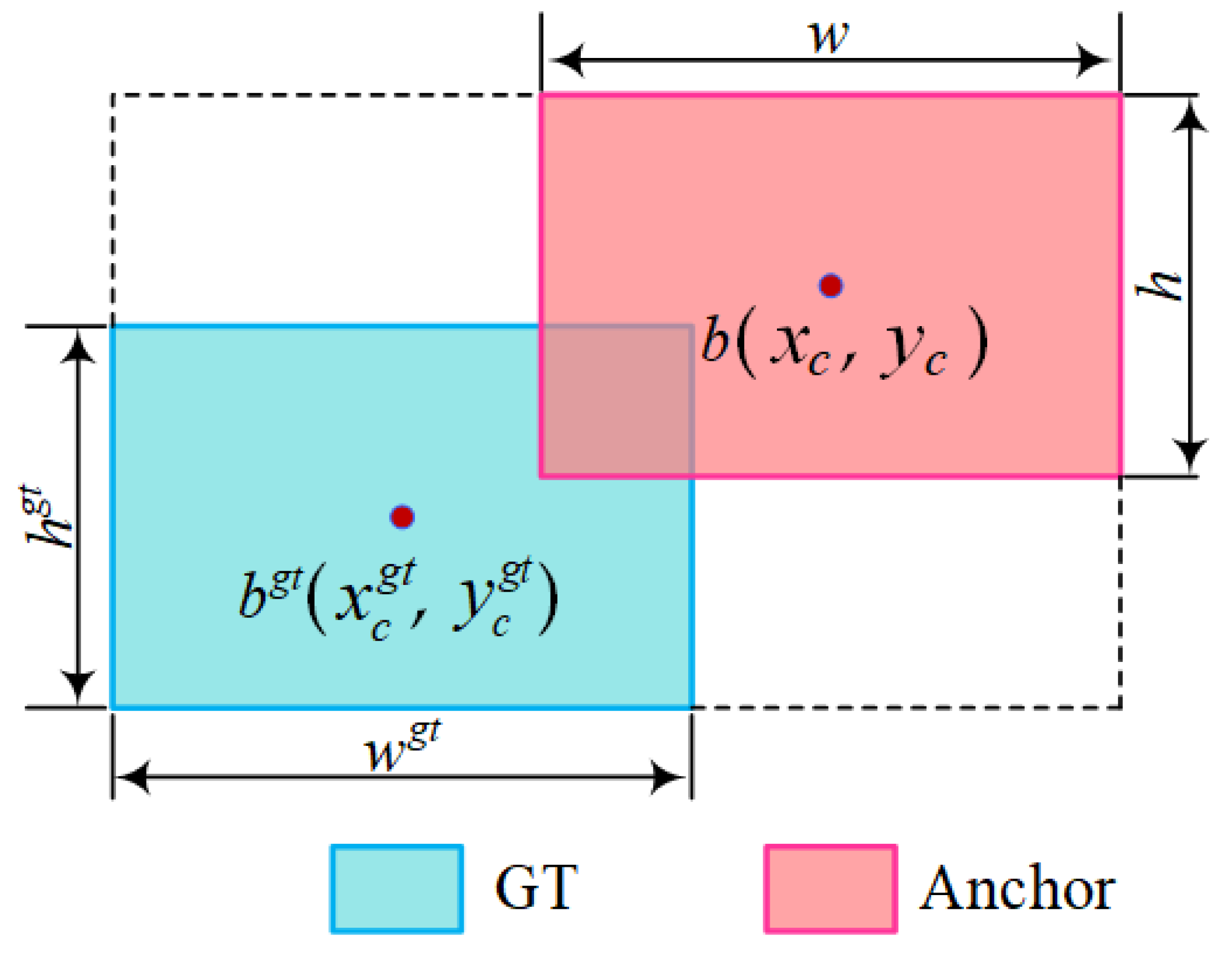

In object detection tasks, bounding box regression serves as a critical component within the detector’s localization branch, playing a pivotal role in precise object localization. While the CIoU loss function [29] adopted by traditional YOLOv5 models improves bounding box regression accuracy to some extent, its over-reliance on the aggregation of regression metrics overlooks the intrinsic attributes of bounding boxes, such as shape and scale characteristics. This limitation results in slower convergence rates and suboptimal detection efficiency during model training.

To address this challenge and improve both training efficiency and detection accuracy, we adopted the Shape-IoU loss function [30] in the MSWindD-YOLO framework, replacing the conventional CIoU loss function. The Shape-IoU loss function redefines the penalty terms in the regression objective by emphasizing the inherent geometric properties of bounding boxes, particularly their shape and scale. This reparameterization enables more precise control during the bounding box regression stage. Specifically, the loss function comprises three key components: the Shape Distance cost function, the Angle cost function, and the IoU cost function.

The Shape Distance cost function primarily accounts for discrepancies between predicted and ground-truth bounding boxes along horizontal and vertical dimensions. By integrating the deviation in center coordinates with shape-dependent weighting factors derived from the ground-truth box geometry, this component accurately quantifies the extent of shape discrepancy between predicted and actual bounding boxes, as formulated in Equation (7):

where $(x_c, y_c)$ and $(x_c^{gt}, y_c^{gt})$ denote the center coordinates of the predicted and ground-truth bounding boxes, respectively. The terms $ww$ and $hh$ are the shape-dependent weighting coefficients derived from the ground-truth box’s dimensions.

The Angle cost function is introduced as an extension based on the definition of the Shape Distance cost function, serving to quantify rotational discrepancies between predicted bounding boxes and ground-truth bounding boxes. The function is mathematically formulated in Equation (10), thereby further enhancing the accuracy of bounding box regression.

The IoU term in the Shape-IoU loss retains the standard formulation, as defined in Equation (13). It directly minimizes the discrepancy between the predicted and ground-truth bounding boxes by optimizing their Intersection over Union (IoU), thereby enhancing regression accuracy.

The aforementioned three functions collectively constitute the integrated operational framework of the Shape-IoU loss function, as illustrated in Figure 9. The definition of the Shape-IoU loss function can be explicitly formulated by Equation (14):

As indicated in Equation (14), the Shape-IoU loss function provides comprehensive optimization of the bounding box regression process by integrating multiple geometric factors, including shape, scale, and IoU. When applied to the MSWindD-YOLO model, this loss function significantly enhances both the training convergence speed and the final inference accuracy.
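The interaction of the three components can be illustrated with a small pure-Python sketch for a single pair of boxes. The numbered equations of this subsection are not reproduced in this copy of the text, so the sketch follows the Shape-IoU formulation as it is commonly stated for the cited method [30]; every formula in it, the exponent `theta = 4`, the 0.5 weight on the shape term, and the default `scale = 0.0` should be read as assumptions to be checked against Equations (7) to (14).

```python
import math


def shape_iou_loss(pred, gt, scale: float = 0.0, theta: float = 4.0, eps: float = 1e-9) -> float:
    """Assumed Shape-IoU loss for boxes given as (cx, cy, w, h)."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt

    # IoU term (standard intersection over union).
    ix = max(0.0, min(px + pw / 2, gx + gw / 2) - max(px - pw / 2, gx - gw / 2))
    iy = max(0.0, min(py + ph / 2, gy + gh / 2) - max(py - ph / 2, gy - gh / 2))
    inter = ix * iy
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Shape-dependent weights derived from the ground-truth box dimensions.
    ww = 2 * gw ** scale / (gw ** scale + gh ** scale)
    hh = 2 * gh ** scale / (gw ** scale + gh ** scale)

    # Shape distance: weighted center offset, normalized by the squared
    # diagonal of the smallest box enclosing both boxes.
    cw = max(px + pw / 2, gx + gw / 2) - min(px - pw / 2, gx - gw / 2)
    ch = max(py + ph / 2, gy + gh / 2) - min(py - ph / 2, gy - gh / 2)
    c2 = cw ** 2 + ch ** 2 + eps
    distance = hh * (px - gx) ** 2 / c2 + ww * (py - gy) ** 2 / c2

    # Shape cost: penalizes width and height mismatch.
    omega_w = hh * abs(pw - gw) / max(pw, gw)
    omega_h = ww * abs(ph - gh) / max(ph, gh)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + distance + 0.5 * shape_cost


if __name__ == "__main__":
    target = (10.0, 10.0, 4.0, 2.0)
    for candidate in [(0.0, 0.0, 4.0, 2.0), (8.0, 9.0, 4.0, 2.0), (10.0, 10.0, 4.0, 2.0)]:
        print(candidate, round(shape_iou_loss(candidate, target), 4))
```

Under these assumptions the loss falls toward zero as the predicted box approaches the ground truth, and it stays informative for non-overlapping boxes because the distance term remains non-zero when the IoU is zero.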