Short-Term Passenger Flow Forecasting for Rail Transit Integrating Multi-Scale Decomposition and Deep Attention Mechanism

Abstract

1. Introduction

- (1) Data Layer Optimization: Enhancing Input Signal Quality

- (2) Model Layer Innovation: Improving Prediction Model Architecture

2. Methods

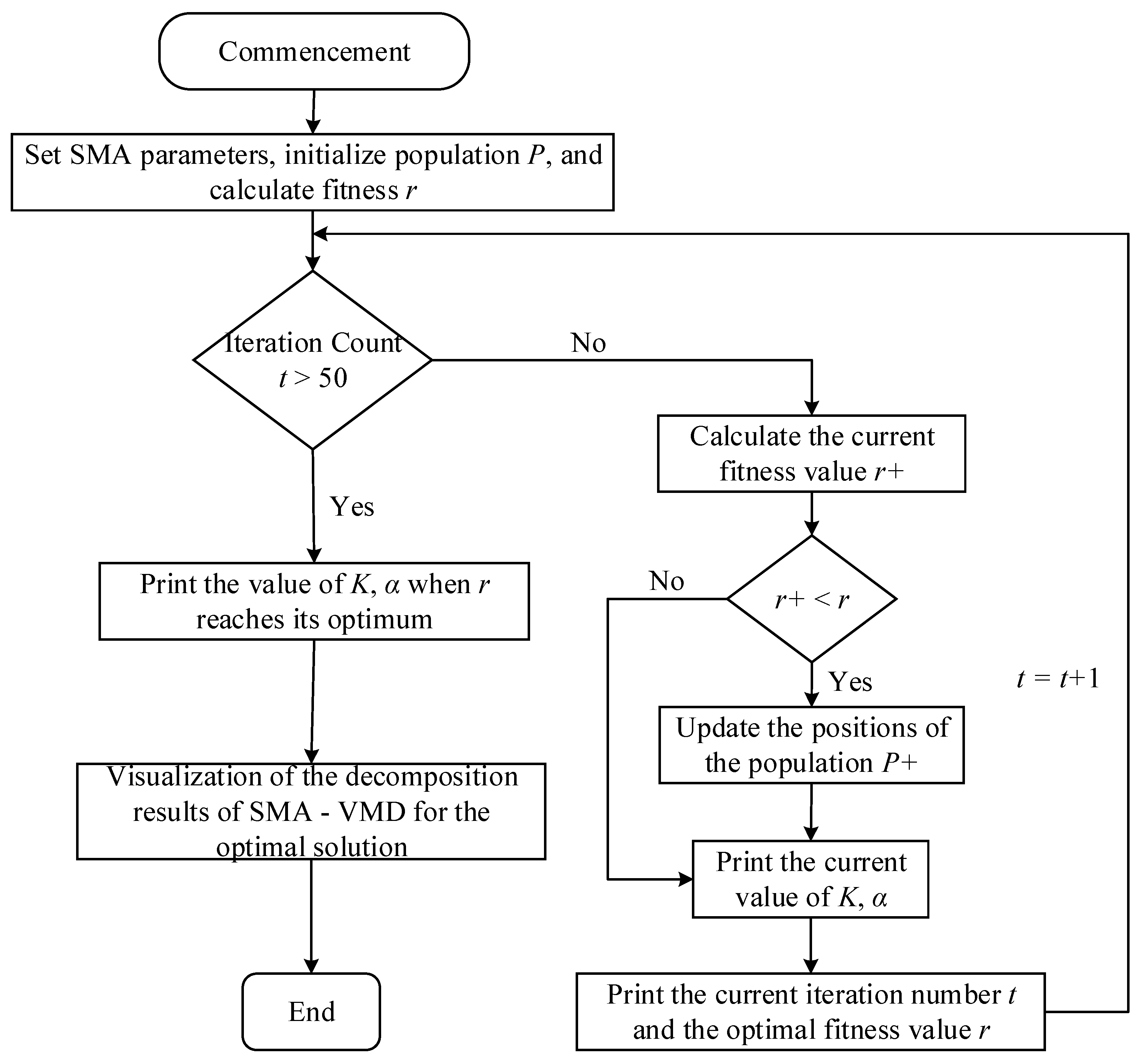

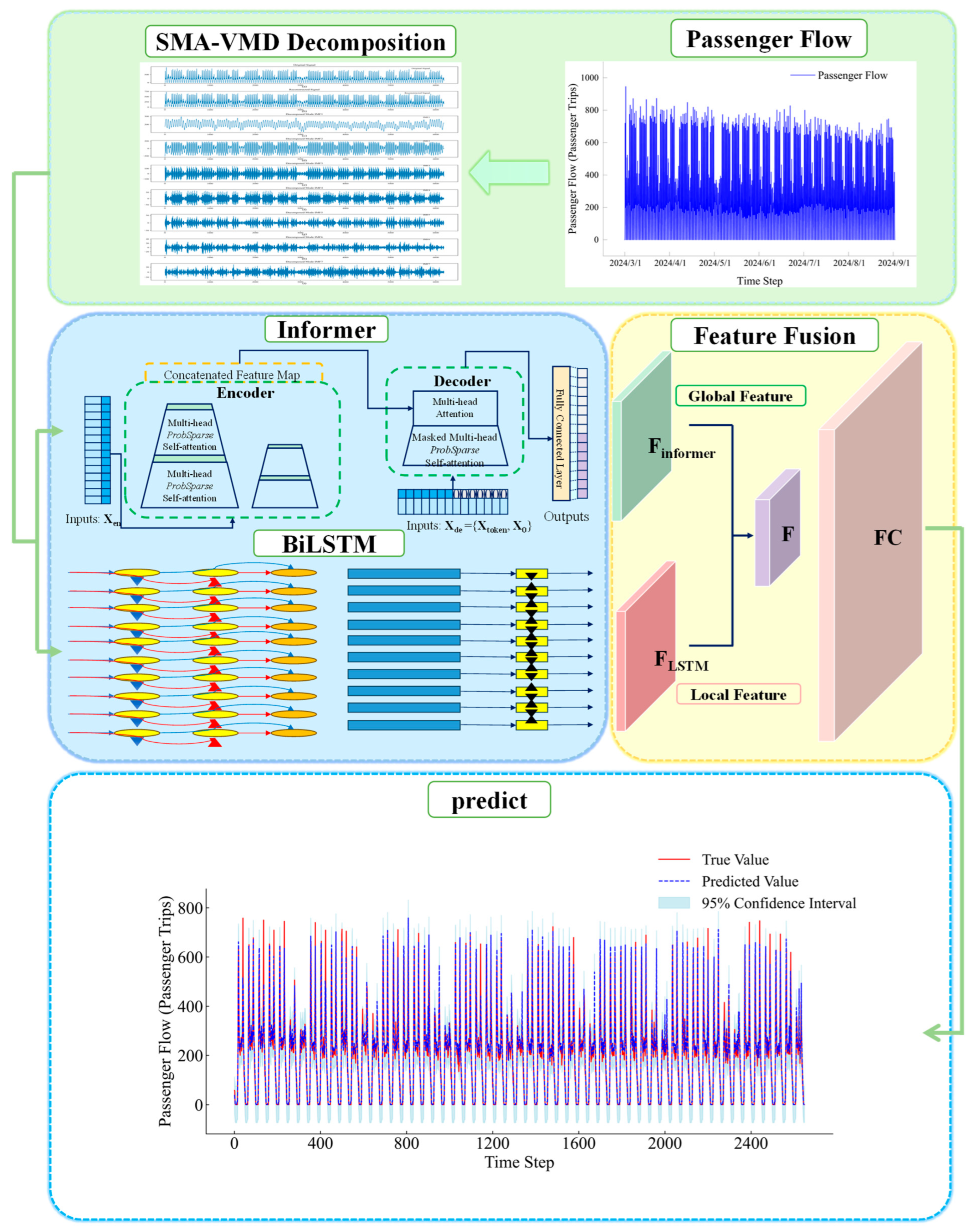

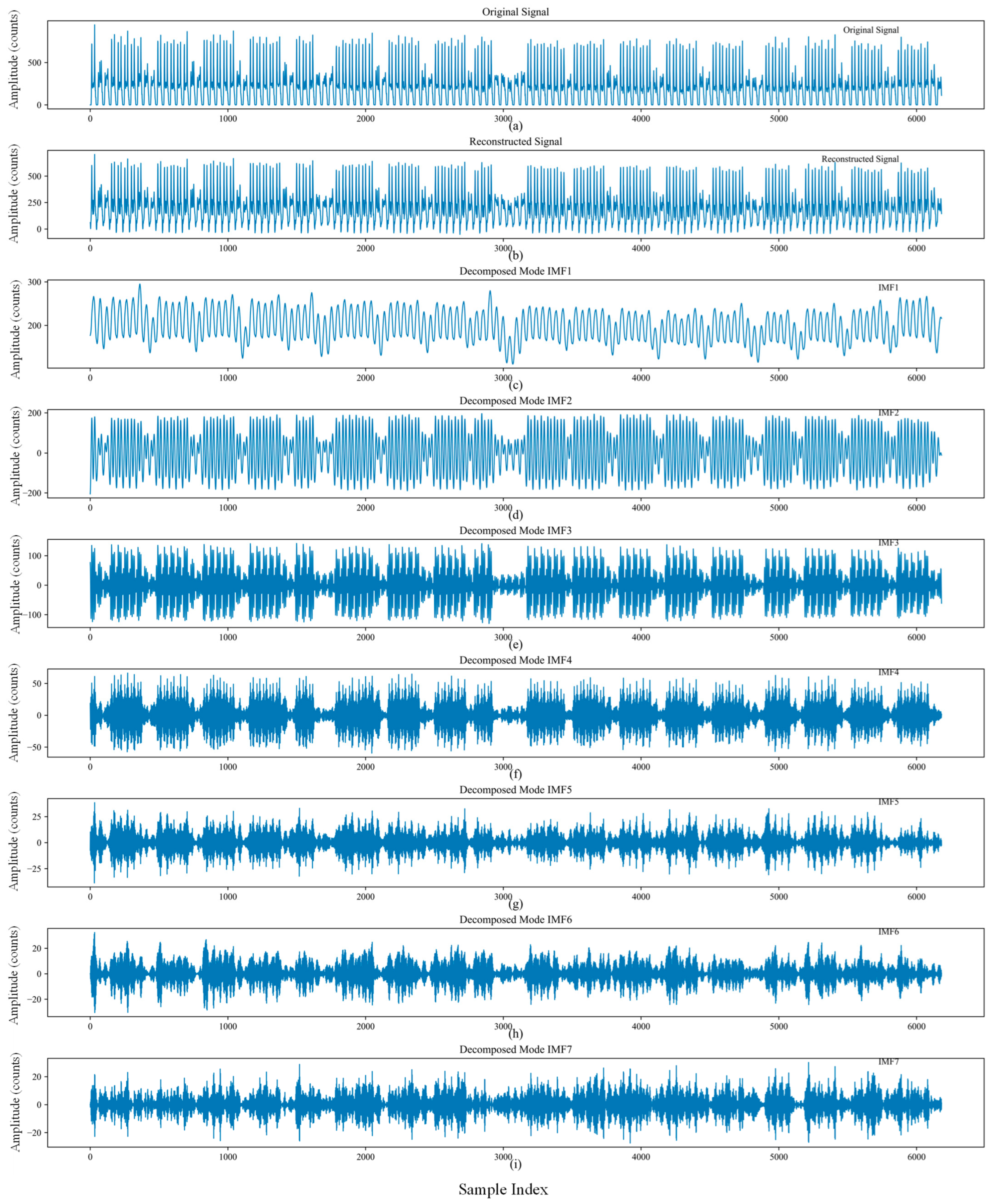

2.1. Denoising Decomposition Method Based on SMA-VMD

2.2. Informer-BiLSTM Model

2.2.1. Informer

2.2.2. BiLSTM

2.2.3. Informer-BiLSTM Model

2.3. SMA-VMD-Informer-BiLSTM Model

3. Case Study

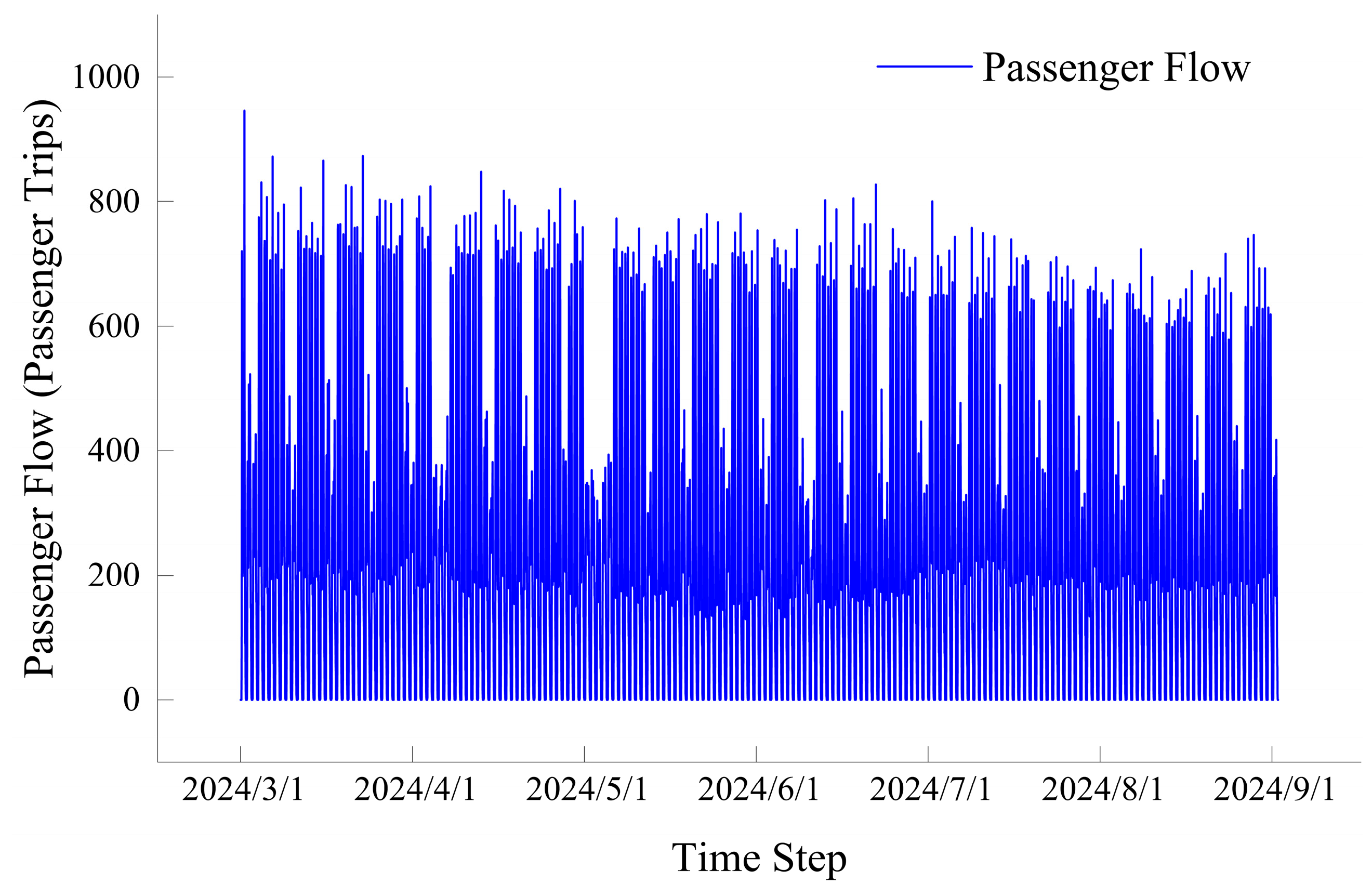

3.1. Data Description

3.2. Model Preprocessing

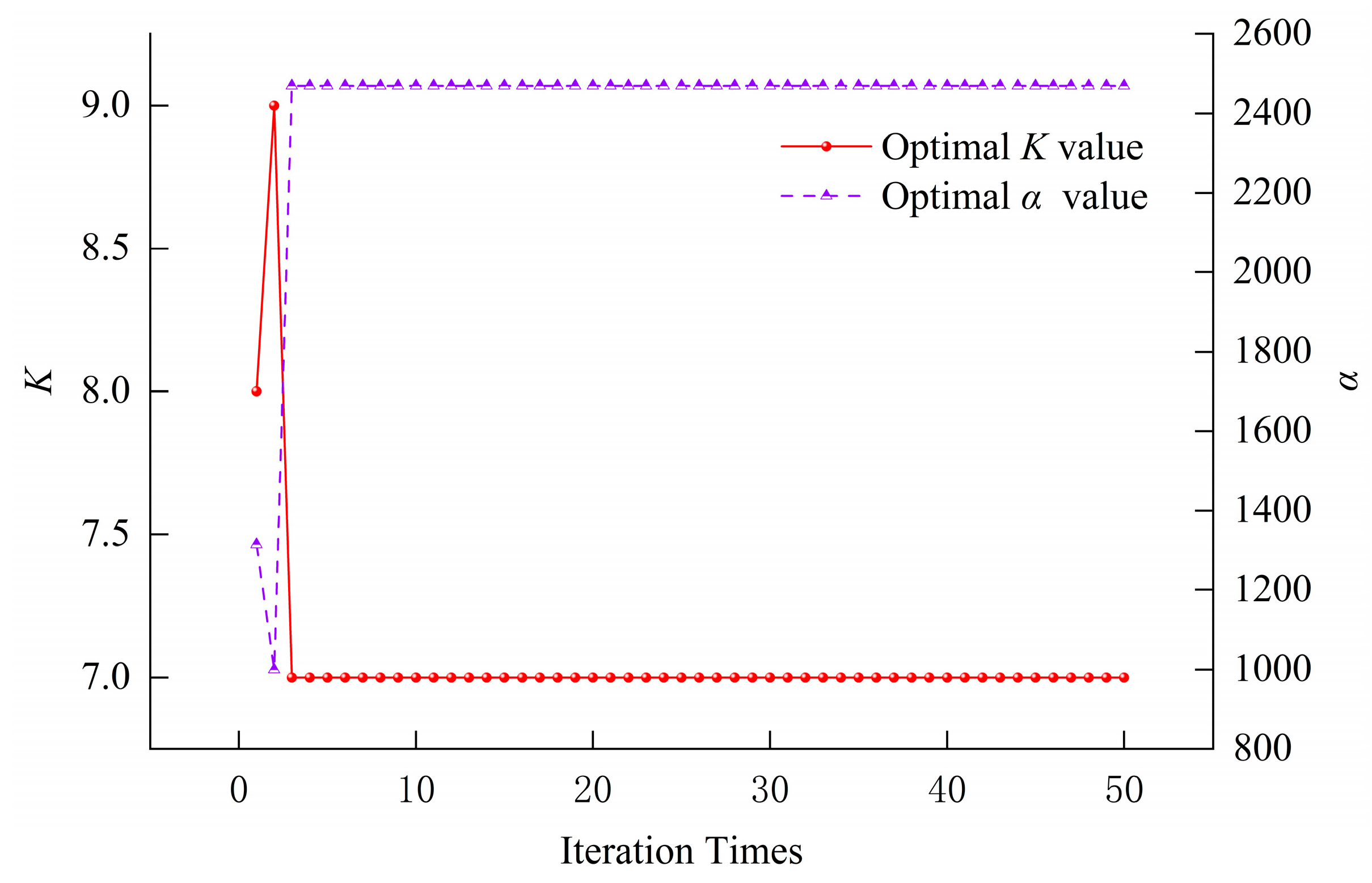

3.3. Parameter Optimization

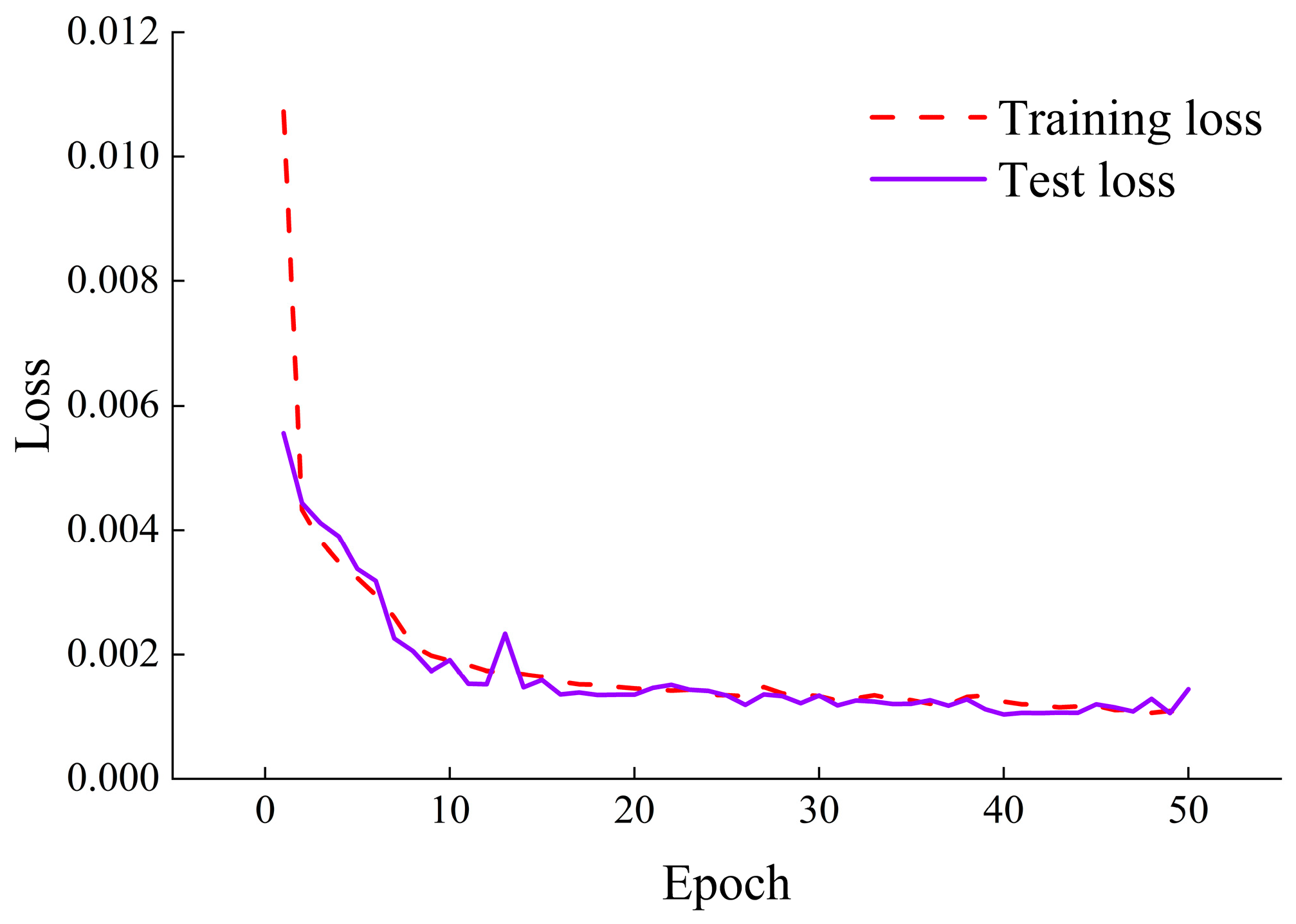

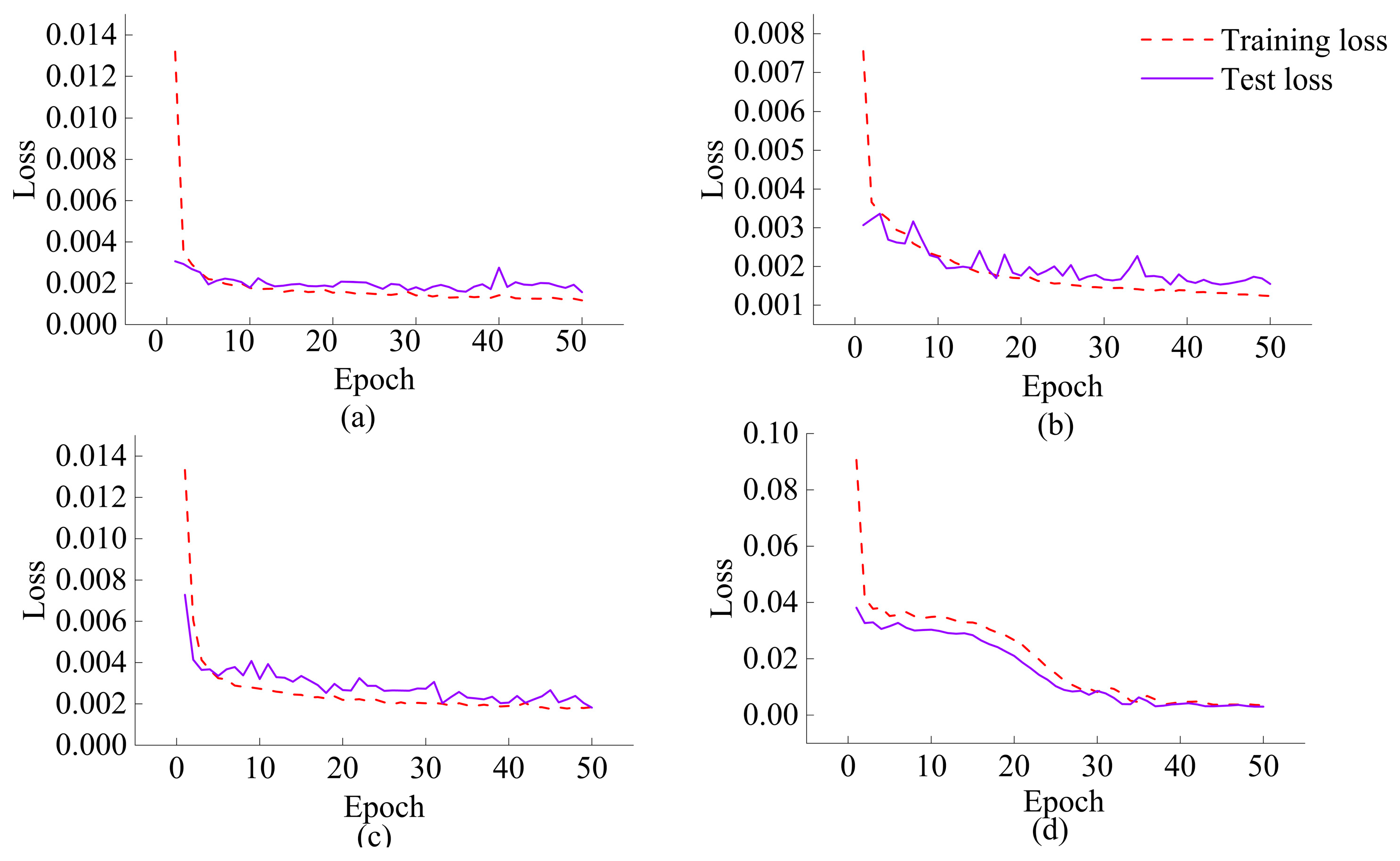

3.4. Model Training

3.5. Evaluation Metrics

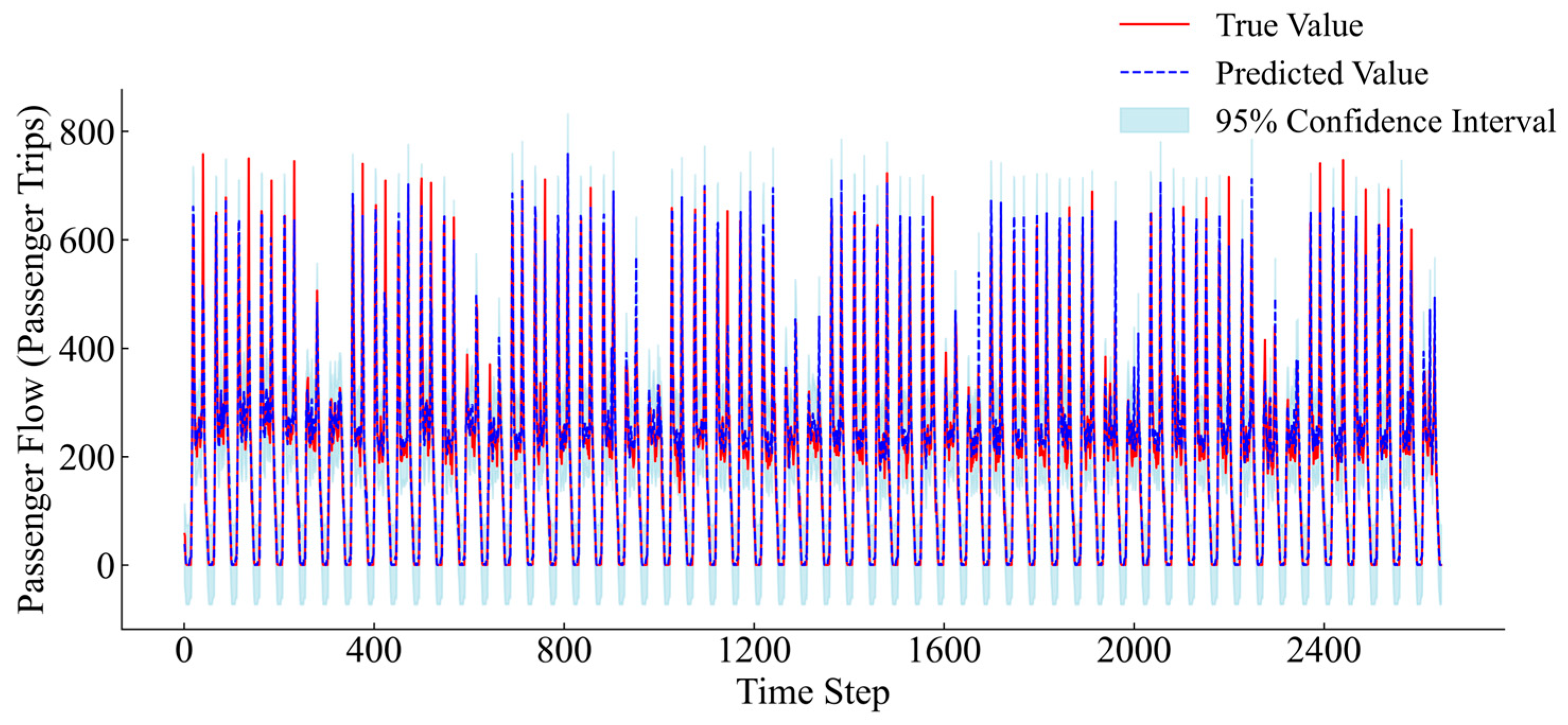

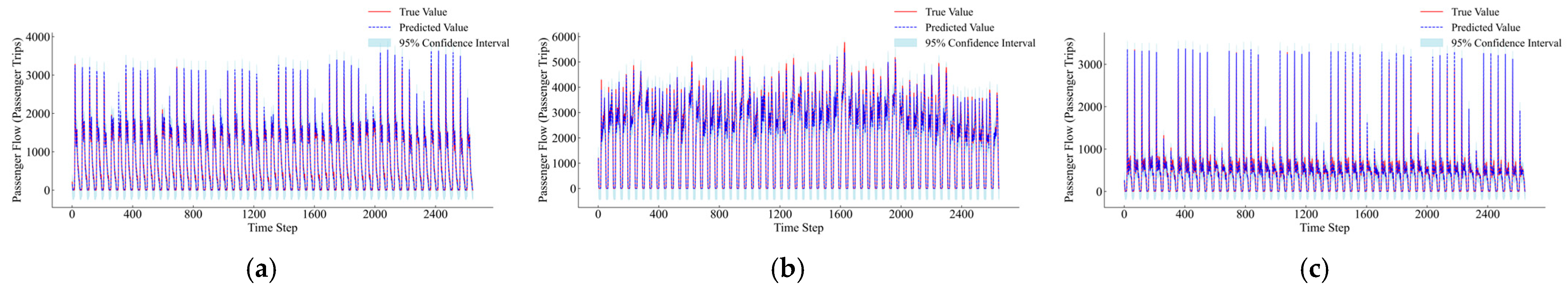

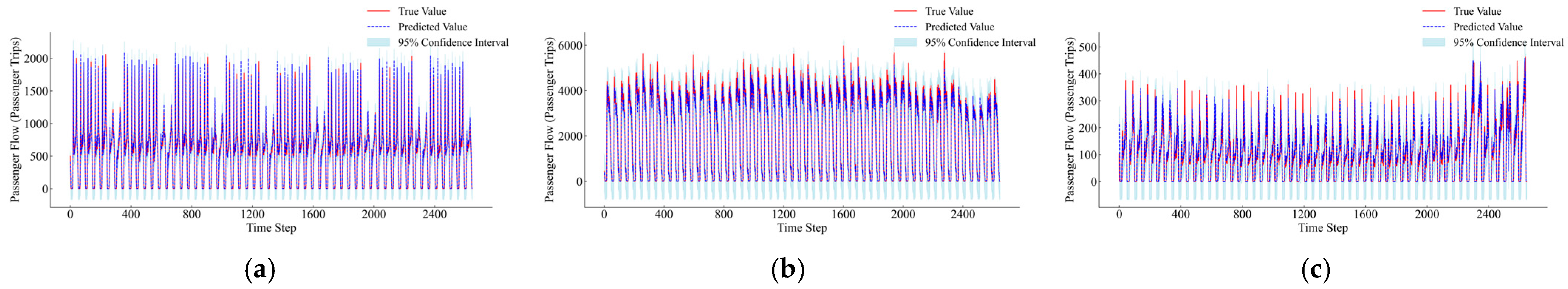

3.6. Results and Discussion

3.7. Comparison of Models

4. Conclusions

- (1) Model methodology and performance advantages. This study presents a short-term passenger flow forecasting method in which the SMA optimizes the VMD parameters and the passenger inflow signal is decomposed accordingly. Predictions are then made by a combined Informer-BiLSTM model, and the results are fused through a fully connected layer. Compared with the comparison models, the proposed method achieves lower values on all reported error metrics and a higher goodness-of-fit. In terms of interpretability, SMA-based tuning automatically adapts the number of decomposed modes and their central frequencies to the inherent characteristics of the passenger flow data, so the decomposed subsequences better represent distinct patterns of passenger flow variation. The combined Informer-BiLSTM model captures the temporal dependencies of passenger flow from both global and local perspectives. Fusion through the fully connected layer lets the model weigh the contributions of the different components together, which gives operators more credible forecasting results.

- (2) Model applicability and interpretability expansion. The model focuses primarily on longitudinal temporal dependencies and is applicable to various types of rail transit stations. It is particularly well suited to real-time forecasting at stations with minimal external influence and strong regularity, such as those near universities. The theoretical reason is that passenger flow at these stations shows distinct periodicity and stability, which matches the temporal dependencies the model captures. In practice, the model can accurately predict passenger flows at different stations and in different time periods, giving operators a scientific basis for arranging transportation capacity and personnel scheduling.

- (3) Subsequent research directions and model limitations. Two directions remain for subsequent research, both keeping the single temporal dependency relationship unchanged: capturing abrupt passenger flow changes through state-space reconstruction, to improve the dynamic response at transportation hub stations, and exploring the group behavior patterns of different stations through state-transition modeling. The study also has limitations. First, under extreme circumstances, such as abnormal passenger flow fluctuations caused by sudden large-scale events or natural disasters, the prediction accuracy of the model may be compromised, because such situations are uncertain and complex and fall outside the statistical patterns of the historical data on which the model is based. Second, the SMA-optimized VMD parameter adjustment requires substantial computational resources and time. Future research will address these issues and will also consider multi-source data to further improve the robustness and practicality of the model.
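
The data flow summarized in conclusion (1), decompose the signal, forecast the components, then fuse the component forecasts, can be sketched structurally in a few lines. This is only an illustration under loud assumptions: nested moving averages stand in for SMA-VMD, a least-squares autoregressive map stands in for the Informer-BiLSTM predictor, a least-squares linear layer stands in for the trained fully connected fusion layer, and the signal is synthetic. All function names are hypothetical and none of this is the authors' implementation.

```python
import numpy as np

def decompose(signal, windows=(24, 6)):
    """Stand-in for SMA-VMD: additive split via nested moving averages."""
    residual = np.asarray(signal, dtype=float)
    components = []
    for w in windows:
        # Edge-pad so the smoothed series keeps the original length.
        padded = np.pad(residual, (w // 2, w - 1 - w // 2), mode="edge")
        smooth = np.convolve(padded, np.ones(w) / w, mode="valid")
        components.append(smooth)
        residual = residual - smooth
    components.append(residual)
    return np.stack(components)  # shape: (n_components, n_samples)

def make_windows(series, lags):
    """Pair each block of `lags` past values with the next value."""
    X = np.stack([series[i:i + lags] for i in range(len(series) - lags)])
    return X, series[lags:]

def fit_linear(X, y):
    """Least-squares linear map with bias (stand-in for a trained network)."""
    A = np.hstack([X, np.ones((len(X), 1))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_linear(coef, X):
    return np.hstack([X, np.ones((len(X), 1))]) @ coef

def forecast_by_components(signal, lags=12, train_frac=0.8):
    signal = np.asarray(signal, dtype=float)
    # For brevity the whole series is decomposed at once; a real experiment
    # would have to keep test data out of the decomposition step.
    comps = decompose(signal)
    split = int(train_frac * (len(signal) - lags))
    per_component = []
    for comp in comps:
        X, y = make_windows(comp, lags)
        coef = fit_linear(X[:split], y[:split])
        per_component.append(predict_linear(coef, X))
    P = np.stack(per_component, axis=1)  # (n_windows, n_components)
    target = signal[lags:]
    fusion = fit_linear(P[:split], target[:split])  # linear fusion layer
    return predict_linear(fusion, P)[split:], target[split:]

# Synthetic, strongly periodic "passenger flow" (illustrative data only).
rng = np.random.default_rng(0)
t = np.arange(600)
flow = 100 + 40 * np.sin(2 * np.pi * t / 24) + rng.normal(0, 3, t.size)

pred, actual = forecast_by_components(flow)
rmse = float(np.sqrt(np.mean((pred - actual) ** 2)))
print(f"held-out RMSE on the synthetic series: {rmse:.2f}")
```

The stand-in decomposition is exactly additive, so the components always sum back to the input; that property is what makes a simple fusion layer over component forecasts a reasonable final step.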

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Macioszek, E. Analysis of the Rail Cargo Transport Volume in Poland in 2010–2021. Sci. J. Sil. Univ. Technol. Ser. Transp. 2023, 119, 125–140.

- [2] Khamis, A. Smart Mobility Education and Capacity Building for Sustainable Development: A Review and Case Study. Sustainability 2025, 17, 7999.

- [3] Xu, W.; Zhang, Y. Short-Term Passenger Flow Forecast of Rail Station Based on Random Forest Algorithm. J. Wuhan Univ. Technol. 2022, 46, 406–410.

- [4] Lin, L.; Gao, Y.; Cao, B.; Wang, Z.; Jia, C. Passenger Flow Scale Prediction of Urban Rail Transit Stations Based on Multilayer Perceptron (MLP). Complexity 2023, 2023, 1430449.

- [5] Zhang, H.; Ma, W. Subway Passenger Flow Forecasting Model Based on Temporal and Spatial Characteristics. Comput. Sci. 2019, 46, 292–299.

- [6] Deng, H.; Zhu, X.; Zhang, Q.; Zhao, J. Prediction of Short-Term Public Transportation Flow Based on Multiple-Kernel Least Square Support Vector Machine. J. Transp. Eng. Inf. 2012, 2, 84–88.

- [7] Wang, X.; Zhang, N.; Zhang, Y.; Shi, Z. Forecasting of Short-Term Metro Ridership with Support Vector Machine Online Model. J. Adv. Transp. 2018, 2018, 3189238.

- [8] Yao, R.; Zhang, W.; Zhang, L. Hybrid Methods for Short-Term Traffic Flow Prediction Based on ARIMA-GARCH Model and Wavelet Neural Network. J. Transp. Eng. A Syst. 2020, 146, 4020086.

- [9] Li, L.; Wang, Y.; Zhong, G.; Zhang, J.; Ran, B. Short-to-Medium Term Passenger Flow Forecasting for Metro Stations Using a Hybrid Model. KSCE J. Civ. Eng. 2018, 22, 1937–1945.

- [10] Ma, C.; Li, P.; Zhu, C.; Lu, W.; Tian, T. Short-Term Passenger Flow Forecast of Urban Rail Transit Based on Different Time Granularities. J. Chang’an Univ. (Nat. Sci. Ed.) 2020, 40, 75–83.

- [11] Ma, X.; Tao, Z.; Wang, Y.; Yu, H.; Wang, Y. Long Short-Term Memory Neural Network for Traffic Speed Prediction Using Remote Microwave Sensor Data. Transp. Res. Part C Emerg. Technol. 2015, 54, 187–197.

- [12] Zhang, H. Short-Term Passenger Flow Forecasting of Urban Rail Transit Based on Recurrent Neural Network. J. Jilin Univ. (Eng. Technol. Ed.) 2023, 53, 430–438.

- [13] Liu, Y.; Liu, Z.; Jia, R. DeepPF: A Deep Learning Based Architecture for Metro Passenger Flow Prediction. Transp. Res. Part C Emerg. Technol. 2019, 101, 18–34.

- [14] Zeng, L.; Li, Z.; Yang, J.; Xu, X. Short-Term Passenger Flow Prediction Method of Urban Rail Transit Based on CEEMDAN-IPSO-LSTM. J. Railw. Sci. Eng. 2023, 20, 3273–3286.

- [15] Zhang, B.; Yang, X.; Zhang, Y.; Li, D. Short-Term Inbound Passenger Flow Prediction of Model Rail Transit Based on Combined Deep Learning. J. Chongqing Jiaotong Univ. (Nat. Sci.) 2024, 43, 92–99.

- [16] Gao, C.; Liu, H.; Huang, J.; Wang, Z.; Li, X.; Li, X. Regularized Spatial–Temporal Graph Convolutional Networks for Metro Passenger Flow Prediction. IEEE Trans. Intell. Transp. Syst. 2024, 25, 11241–11255.

- [17] Wang, J.; Ou, X.; Chen, J.; Tang, Z.; Liao, L. Passenger Flow Forecast of Urban Rail Transit Stations Based on Spatio-Temporal Hypergraph Convolution Model. J. Railw. Sci. Eng. 2023, 20, 4506–4516.

- [18] Wang, X.; Xu, X.; Wu, Y.; Liu, J. Short Term Passenger Flow Forecasting of Urban Rail Transit Based on Hybrid Deep Learning Model. J. Railw. Sci. Eng. 2022, 19, 3557–3568.

- [19] Alshehri, A.; Owais, M.; Gyani, J.; Aljarbou, M.H.; Alsulamy, S. Residual Neural Networks for Origin–Destination Trip Matrix Estimation from Traffic Sensor Information. Sustainability 2023, 15, 9881.

- [20] Owais, M. Deep Learning for Integrated Origin–Destination Estimation and Traffic Sensor Location Problems. IEEE Trans. Intell. Transp. Syst. 2024, 25, 6501–6513.

- [21] Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime Mould Algorithm: A New Method for Stochastic Optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

- [22] Dragomiretskiy, K.; Zosso, D. Variational Mode Decomposition. IEEE Trans. Signal Process. 2014, 62, 531–544.

- [23] Wang, Y.; Lin, W.; Liang, Y.; Yang, J.; Li, A.; Diao, H. Railway Communication QoS Alarm Mechanism Based on XGBoost-Informer Model and Multi-Source Data. J. China Railw. Soc. 2024, 46, 86–96.

| Research | Model Methodology | Key Innovations | Prediction Performance |
|---|---|---|---|
| Ma et al. [11] | LSTM | First application of LSTM networks to passenger flow forecasting. | Achieved notable prediction accuracy, pioneering the use of deep learning in passenger flow forecasting. |
| Zhang et al. [12] | LSTM, GRU | Used LSTM and GRU networks separately to forecast passenger volumes at different station types during various time periods. | Showed robust predictive performance across diverse scenarios, illustrating how different models adapt to distinct data characteristics. |
| Liu et al. [13] | LSTM-FC integrated model | Combines the sequential modeling capability of LSTM with the feature integration strength of fully connected layers. | Captures both long-term and short-term dependencies and integrates and reduces high-dimensional features, thereby improving prediction accuracy. |
| Zeng et al. [14] | CEEMDAN-IPSO-LSTM integrated model | Combines multi-scale feature extraction with hyperparameter optimization. | Significantly improves the prediction accuracy and robustness of LSTM models. |
| Li et al. [15] | CNN-ResNet-BiLSTM model | Combines multi-level feature fusion with deep residual networks. | Further improves prediction accuracy and noise resilience. |
| Gao et al. [16], Wang et al. [17], Wang et al. [18] | Integrated models with heterogeneous architectures | Propose novel architecture-integrated models. | Validate the effectiveness of heterogeneous architecture-integrated models, providing new insights for passenger flow prediction. |

| Algorithm | Number of Training Iterations | Mean Training Time (min) |
|---|---|---|
| SMA | 20 | 16.37 |
| PSO | 20 | 21.38 |
| GA | 20 | 53.91 |
| Bayesian optimization | 20 | 68.43 |
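
To make the timing comparison concrete, the short calculation below expresses the SMA's mean training time as a relative reduction against each alternative. The only inputs are the four values in the table; the variable names are illustrative.

```python
# Mean training times in minutes, copied from the table above.
mean_minutes = {
    "SMA": 16.37,
    "PSO": 21.38,
    "GA": 53.91,
    "Bayesian optimization": 68.43,
}

sma = mean_minutes["SMA"]

# Relative reduction of SMA versus each other optimizer, in percent.
reduction_pct = {
    name: 100.0 * (minutes - sma) / minutes
    for name, minutes in mean_minutes.items()
    if name != "SMA"
}

for name, pct in reduction_pct.items():
    print(f"SMA vs {name}: {pct:.1f}% less mean training time")
```

With the same number of iterations, the SMA needs roughly 23% less time than PSO, about 70% less than GA, and about 76% less than Bayesian optimization.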

| Module | Hyperparameter | Value |
|---|---|---|
| SMA | population_size | 20 |
| SMA | upper bound | [10, 5000] |
| SMA | lower bound | [2, 1000] |
| SMA | max_iter | 50 |
| VMD | | Original passenger flow data |
| VMD | | SMA optimum value |
| VMD | | 0 |
| VMD | | SMA optimum value |
| VMD | | 0 |
| VMD | | 1 |
| VMD | | $10^{-6}$ |
| Informer | enc_in | IMFs |
| Informer | dec_in | IMFs |
| Informer | seq_len | 96 |
| Informer | label_len | 48 |
| Informer | factor | 48 |
| Informer | out_len | 48 |
| BiLSTM | hidden_layer_sizes | [32, 64] |
| Training Hyperparameters | batch_size | 64 |
| Training Hyperparameters | epochs | 50 |
| Training Hyperparameters | learn_rate | 0.001 |
| Training Hyperparameters | dropout | 0.3 |
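
The Informer rows above fix three window lengths: seq_len = 96, label_len = 48, and out_len = 48. The sketch below shows one common way such lengths are turned into encoder inputs, decoder inputs, and targets. It assumes the convention used in public Informer implementations, where the decoder receives the last label_len observed steps followed by out_len zero placeholders; the source does not state that the authors slice their data this way. `WindowConfig` and `make_informer_windows` are hypothetical names, and the three-channel toy array merely stands in for the IMFs.

```python
from dataclasses import dataclass

import numpy as np

@dataclass(frozen=True)
class WindowConfig:
    seq_len: int = 96    # encoder input length (value from the table)
    label_len: int = 48  # observed steps handed to the decoder as context
    out_len: int = 48    # forecast horizon

def make_informer_windows(series, cfg=WindowConfig()):
    """Slice a (time, channels) array into encoder/decoder/target windows."""
    series = np.asarray(series, dtype=float)
    n_windows = len(series) - cfg.seq_len - cfg.out_len + 1
    if n_windows <= 0:
        raise ValueError("series is shorter than seq_len + out_len")
    enc, dec, tgt = [], [], []
    for start in range(n_windows):
        end = start + cfg.seq_len
        enc.append(series[start:end])
        # Decoder input: known context followed by zero placeholders
        # (assumed convention, see the paragraph above).
        context = series[end - cfg.label_len:end]
        placeholder = np.zeros((cfg.out_len,) + series.shape[1:])
        dec.append(np.concatenate([context, placeholder]))
        tgt.append(series[end:end + cfg.out_len])
    return np.stack(enc), np.stack(dec), np.stack(tgt)

# Toy input: 200 time steps, 3 channels standing in for IMFs.
demo = np.arange(200 * 3, dtype=float).reshape(200, 3)
enc, dec, tgt = make_informer_windows(demo)
print(enc.shape, dec.shape, tgt.shape)
```

For the 200-step toy input this yields 200 - 96 - 48 + 1 = 57 windows, each with a 96-step encoder input, a 96-step decoder input (48 observed plus 48 placeholder steps), and a 48-step target.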

| Passenger Flow Periods | MSE | RMSE | |
|---|---|---|---|
| Morning peak | 0.0045 | 0.0671 | 0.0412 |
| Evening peak | 0.0025 | 0.0500 | 0.0289 |
| Weekend | 0.0018 | 0.0424 | 0.0256 |
| Holiday | 0.0075 | 0.0866 | 0.0572 |

| Stations | MSE | RMSE | | |
|---|---|---|---|---|
| Fangzhicheng | 0.0013 | 0.0364 | 0.0221 | 0.9876 |
| Bell Tower | 0.0015 | 0.0385 | 0.0212 | 0.9708 |
| Giant Wild Goose Pagoda | 0.0021 | 0.0461 | 0.0219 | 0.9686 |
| Xi’an North Railway Station | 0.0062 | 0.0788 | 0.0527 | 0.9252 |
| Xi’an Railway Station | 0.0098 | 0.0989 | 0.0697 | 0.9011 |
| Xianyang International Airport | 0.0100 | 0.1000 | 0.0678 | 0.9114 |
| Northwest Polytechnical University | 0.0009 | 0.0303 | 0.0196 | 0.9971 |
| Xi’an University of Science and Technology | 0.0012 | 0.0353 | 0.0205 | 0.9954 |
| Qinling West | 0.0030 | 0.0545 | 0.0328 | 0.9530 |

| Models | MSE | RMSE | | |
|---|---|---|---|---|
| SMA-VMD-Informer-BiLSTM | 0.0014 | 0.0378 | 0.0226 | 0.9925 |
| CNN-BiLSTM | 0.0016 | 0.0396 | 0.0248 | 0.9489 |
| CNN-BiGRU | 0.0015 | 0.0393 | 0.0251 | 0.9496 |
| Transformer-LSTM | 0.0018 | 0.0426 | 0.0273 | 0.9409 |
| ARIMA-LSTM | 0.0030 | 0.0549 | 0.0365 | 0.9517 |
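
In the result tables above, the second numeric column equals the square root of the first to the reported precision, which is consistent with an MSE and RMSE pair. The sketch below gives the standard textbook definitions of MSE, RMSE, MAE, and the coefficient of determination, then checks that relation on the model comparison rows. The function name `regression_metrics` is hypothetical, and it is an assumption that the authors used exactly these definitions.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Standard error metrics and R^2 for a pair of 1-D series."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    ss_res = float(np.sum(err ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    return {
        "mse": mse,
        "rmse": float(np.sqrt(mse)),
        "mae": float(np.mean(np.abs(err))),
        "r2": 1.0 - ss_res / ss_tot,
    }

# Small worked example: one prediction is off by 2, the rest are exact.
example = regression_metrics([1, 2, 3, 4], [1, 2, 3, 6])
print(example)

# First two numeric columns of the model comparison table.
reported = {
    "SMA-VMD-Informer-BiLSTM": (0.0014, 0.0378),
    "CNN-BiLSTM": (0.0016, 0.0396),
    "CNN-BiGRU": (0.0015, 0.0393),
    "Transformer-LSTM": (0.0018, 0.0426),
    "ARIMA-LSTM": (0.0030, 0.0549),
}

# Squaring the second column should recover the first, up to rounding.
consistent = {
    name: abs(second ** 2 - first) < 1e-4
    for name, (first, second) in reported.items()
}
print(consistent)
```

Because RMSE is a monotone function of MSE, the two columns always rank models identically; the remaining columns carry the independent information.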

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, Y.; Wang, J. Short-Term Passenger Flow Forecasting for Rail Transit Integrating Multi-Scale Decomposition and Deep Attention Mechanism. Sustainability 2025, 17, 8880. https://doi.org/10.3390/su17198880
