Deep Learning in Multimodal Fusion for Sustainable Plant Care: A Comprehensive Review

Abstract

1. Introduction

- (i) A comprehensive deep learning-based multimodal fusion framework is constructed, encompassing data acquisition, feature fusion, and decision optimization.

- (ii) The application of multimodal deep learning in three core areas (crop status detection, intelligent agricultural equipment operations, and resource management) is systematically reviewed.

- (iii) Key challenges, including data heterogeneity, real-time processing bottlenecks, and limited model generalization, are analyzed in depth, and future research directions, such as federated learning, self-supervised pretraining, and dynamic computation frameworks, are proposed.

2. Technical Framework for Multimodal Fusion
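
The three layers that organize this section (data collection, feature fusion, decision optimization) can be pictured as a small pipeline. The sketch below is illustrative only and is not the framework reviewed here. It assumes details the review does not specify: the modality names, the made-up feature values, feature-level fusion by plain concatenation, and a hypothetical threshold rule standing in for decision optimization.

```python
from typing import Dict, List


def acquire() -> Dict[str, List[float]]:
    """Data collection: one feature vector per modality (made-up values)."""
    return {
        "rgb": [0.42, 0.31],     # assumed: greenness and texture scores
        "thermal": [0.75],       # assumed: normalized canopy temperature
        "soil": [0.20, 0.55],    # assumed: moisture and nitrogen indices
    }


def fuse(features: Dict[str, List[float]]) -> List[float]:
    """Feature fusion: concatenate modality vectors in sorted-name order."""
    fused: List[float] = []
    for name in sorted(features):
        fused.extend(features[name])
    return fused


def decide(fused: List[float], threshold: float = 0.5) -> str:
    """Decision step: hypothetical placeholder rule on the mean fused value."""
    score = sum(fused) / len(fused)
    return "irrigate" if score < threshold else "hold"


fused = fuse(acquire())
action = decide(fused)
print(fused, action)
```

Sorting the modality names keeps the layout of the fused vector stable between calls, which matters once a trained model consumes it. A real system would replace `decide` with a learned or optimized policy.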

2.1. Data Collection Layer

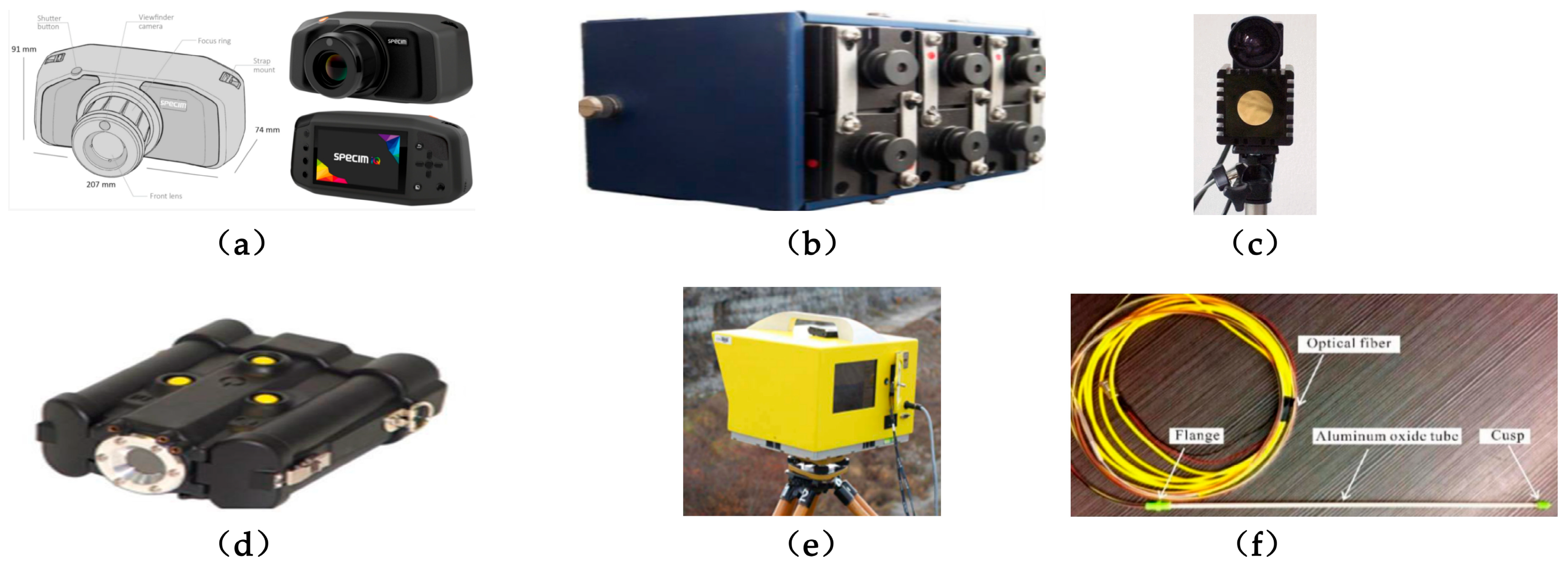

2.1.1. Sensor Types

| Sensor Type | Advantages | Limitations | Applications | References |
|---|---|---|---|---|
| Hyperspectral Camera | Accurately identifies crop physiological states and minor biochemical changes | High data volume and high cost, limiting large-scale application | Crop physiological state assessment, detection of subtle biochemical changes, disease detection | [13,14,15] |
| Multispectral Camera | Low cost, portable, suitable for large-area monitoring | Limited data dimensionality, difficulty in capturing subtle changes | Crop classification, large-area field monitoring, detection of group physiological stress | [16,17,18,19] |
| LiDAR | Provides high-precision 3D spatial information, suitable for complex terrain measurement | High equipment cost, complex data processing | Crop height measurement, terrain modeling, 3D digital twin construction | [20] |
| Thermal Imaging Camera | Identifies irrigation unevenness and early-stage disease regions | Sensitive to environmental temperature changes, may be affected by weather | Irrigation optimization, early disease detection, identification of thermal anomalies | [21] |
| RGB Camera | Low cost, real-time imaging, high resolution, foundational tool for agricultural monitoring | Only captures visible light information, difficulty in identifying non-visible spectral features | Crop classification, disease detection, basic agricultural monitoring | [22,23] |
| Soil Multiparameter Sensors | Provides root microenvironment data, supports precision irrigation and fertilization decisions | Limited sensor depth, may not fully reflect soil profile information | Precision irrigation, fertilizer optimization, soil health management | [24,25] |

2.1.2. Data Alignment

2.2. Feature Fusion Layer

2.2.1. Fusion Strategies

2.2.2. Deep Learning Models

2.3. Decision Optimization Layer

3. Deep Learning-Driven Crop Detection for Plant Care

3.1. Multimodal Deep Learning for Comprehensive Crop Condition Assessment

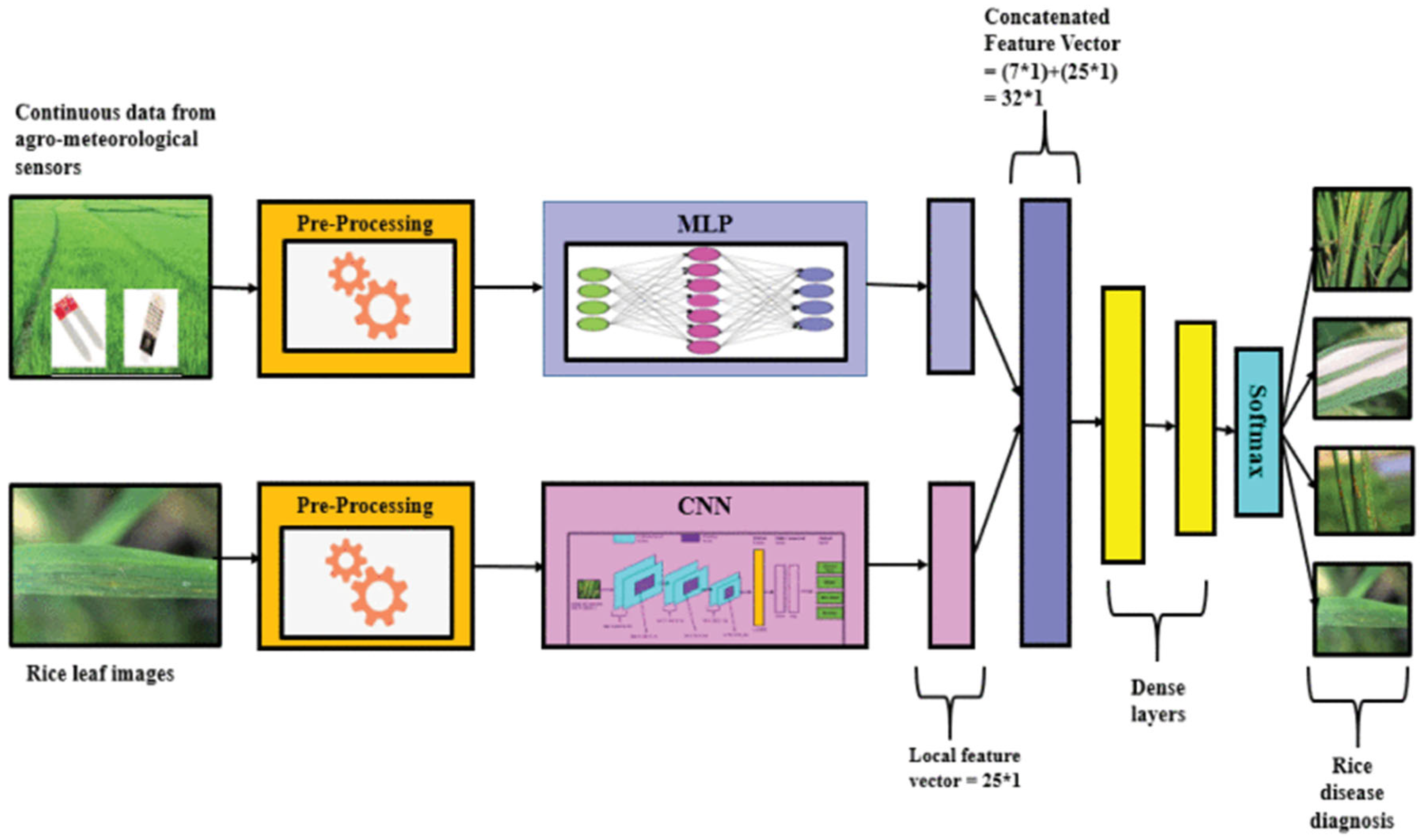

3.1.1. Disease Diagnosis

3.1.2. Maturity Detection and Yield Prediction

3.1.3. Weed Identification

3.2. Agricultural Machinery Intelligence for Crop Detection

3.2.1. Agricultural Machinery Navigation

3.2.2. Harvesting Robots

3.2.3. Plant Protection Robots

3.3. Resource Management and Ecological Monitoring for Plant Health

3.3.1. Smart Monitoring

3.3.2. Targeted Resource Allocation

4. Technical Challenges and Future Directions

4.1. Data Heterogeneity

4.2. Real-Time Bottlenecks

4.3. Insufficient Generalization Capacity

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Falcon, W.P.; Naylor, R.L.; Shankar, N.D. Rethinking global food demand for 2050. Popul. Dev. Rev. 2022, 48, 921–957. [Google Scholar] [CrossRef]

- Klerkx, L.; Jakku, E.; Labarthe, P. A review of social science on digital agriculture, smart farming and agriculture 4.0: New contributions and a future research agenda. NJAS-Wagen J. Life Sci. 2019, 90, 100315. [Google Scholar] [CrossRef]

- Javaid, M.; Haleem, A.; Singh, R.P.; Suman, R. Enhancing smart farming through the applications of Agriculture 4.0 technologies. Int. J. Intell. Netw. 2022, 3, 150–164. [Google Scholar] [CrossRef]

- Wang, R.; Su, W. The application of deep learning in the whole potato production Chain: A Comprehensive review. Agriculture 2024, 14, 1225. [Google Scholar] [CrossRef]

- Tu, Y.; Wang, R.; Su, W. Active disturbance rejection control—New trends in agricultural cybernetics in the future: A comprehensive review. Machines 2025, 13, 111. [Google Scholar] [CrossRef]

- Fu, L.; Gao, F.; Wu, J.; Li, R.; Karkee, M.; Zhang, Q. Application of consumer RGB-D cameras for fruit detection and localization in field: A critical review. Comput. Electron. Agric. 2020, 177, 105687. [Google Scholar] [CrossRef]

- Gené-Mola, J.; Llorens, J.; Rosell-Polo, J.R.; Gregorio, E.; Arnó, J.; Solanelles, F.; Martínez-Casasnovas, J.A.; Escolà, A. Assessing the performance of RGB-D sensors for 3D fruit crop canopy characterization under different operating and lighting conditions. Sensors 2020, 20, 7072. [Google Scholar] [CrossRef]

- Li, W.; Du, Z.; Xu, X.; Bai, Z.; Han, J.; Cui, M.; Li, D. A review of aquaculture: From single modality analysis to multimodality fusion. Comput. Electron. Agric. 2024, 226, 109367. [Google Scholar] [CrossRef]

- El Sakka, M.; Ivanovici, M.; Chaari, L.; Mothe, J. A review of CNN applications in smart agriculture using multimodal data. Sensors 2025, 25, 472. [Google Scholar] [CrossRef]

- Lahat, D.; Adali, T.; Jutten, C. Multimodal data fusion: An overview of methods, challenges, and prospects. Proc. IEEE Inst. Electr. Electron. Eng. 2015, 103, 1449–1477. [Google Scholar] [CrossRef]

- Li, J.; Hong, D.; Gao, L.; Yao, J.; Zheng, K.; Zhang, B.; Chanussot, J. Deep learning in multimodal remote sensing data fusion: A comprehensive review. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102926. [Google Scholar] [CrossRef]

- Vadivambal, R.; Jayas, D.S. Applications of thermal imaging in agriculture and food industry—A review. Food Bioproc. Tech. 2011, 4, 186–199. [Google Scholar] [CrossRef]

- Polk, S.L.; Chan, A.H.; Cui, K.; Plemmons, R.J.; Coomes, D.A.; Murphy, J.M. Unsupervised detection of ash dieback disease (Hymenoscyphus fraxineus) using diffusion-based hyperspectral image clustering. In Proceedings of the IGARSS 2022-2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 2287–2290. [Google Scholar]

- Polk, S.L.; Cui, K.; Chan, A.H.; Coomes, D.A.; Plemmons, R.J.; Murphy, J.M. Unsupervised diffusion and volume maximization-based clustering of hyperspectral images. Remote Sens. 2023, 15, 1053. [Google Scholar] [CrossRef]

- Cui, K.; Li, R.; Polk, S.L.; Lin, Y.; Zhang, H.; Murphy, J.M.; Plemmons, R.J.; Chan, R.H. Superpixel-based and spatially-regularized diffusion learning for unsupervised hyperspectral image clustering. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4405818. [Google Scholar] [CrossRef]

- Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136. [Google Scholar] [CrossRef]

- Olson, D.; Anderson, J. Review on unmanned aerial vehicles, remote sensors, imagery processing, and their applications in agriculture. Agron. J. 2021, 113, 971–992. [Google Scholar] [CrossRef]

- Shi, J.; Bai, Y.; Diao, Z.; Zhou, J.; Yao, X.; Zhang, B. Row detection based navigation and guidance for agricultural robots and autonomous vehicles in row-crop fields: Methods and applications. Agronomy 2023, 13, 1780. [Google Scholar] [CrossRef]

- Moreno, H.; Valero, C.; Bengochea-Guevara, J.M.; Ribeiro, Á.; Garrido-Izard, M.; Andújar, D. On-ground vineyard reconstruction using a LiDAR-based automated system. Sensors 2020, 20, 1102. [Google Scholar] [CrossRef]

- Wang, X.; Pan, H.; Guo, K.; Yang, X.; Luo, S. The evolution of LiDAR and its application in high precision measurement. IOP Conf. Ser. Earth Environ. Sci. 2020, 502, 12008. [Google Scholar] [CrossRef]

- Ishimwe, R.; Abutaleb, K.; Ahmed, F. Applications of thermal imaging in agriculture—A review. Adv. Remote Sens. 2014, 3, 128–140. [Google Scholar] [CrossRef]

- Xu, X.; Wang, L.; Shu, M.; Liang, X.; Ghafoor, A.Z.; Liu, Y.; Ma, Y.; Zhu, J. Detection and counting of maize leaves based on two-stage deep learning with UAV-based RGB image. Remote Sens. 2022, 14, 5388. [Google Scholar] [CrossRef]

- Li, L.; Qiao, J.; Yao, J.; Li, J.; Li, L. Automatic freezing-tolerant rapeseed material recognition using UAV images and deep learning. Plant Methods 2022, 18, 5. [Google Scholar] [CrossRef] [PubMed]

- Lu, Y.; Liu, M.; Li, C.; Liu, X.; Cao, C.; Li, X.; Kan, Z. Precision fertilization and irrigation: Progress and applications. AgriEngineering 2022, 4, 626–655. [Google Scholar] [CrossRef]

- Ahmad, U.; Alvino, A.; Marino, S. Solar fertigation: A sustainable and smart IoT-based irrigation and fertilization system for efficient water and nutrient management. Agronomy 2022, 12, 1012. [Google Scholar] [CrossRef]

- Behmann, J.; Acebron, K.; Emin, D.; Bennertz, S.; Matsubara, S.; Thomas, S.; Bohnenkamp, D.; Kuska, M.T.; Jussila, J.; Salo, H. Specim IQ: Evaluation of a new, miniaturized handheld hyperspectral camera and its application for plant phenotyping and disease detection. Sensors 2018, 18, 441. [Google Scholar] [CrossRef]

- Pozo, S.D.; Rodríguez-Gonzálvez, P.; Hernández-López, D.; Felipe-García, B. Vicarious radiometric calibration of a multispectral camera on board an unmanned aerial system. Remote Sens. 2014, 6, 1918–1937. [Google Scholar] [CrossRef]

- Van den Bergh, M.; Van Gool, L. Combining RGB and ToF cameras for real-time 3D hand gesture interaction. In Proceedings of the 2011 IEEE Workshop on Applications Of Computer Vision (WACV), Kona, HI, USA, 5–7 January 2011; pp. 66–72. [Google Scholar]

- Szajewska, A. Development of the thermal imaging camera (TIC) technology. Procedia Eng. 2017, 172, 1067–1072. [Google Scholar] [CrossRef]

- Harrap, R.; Lato, M. An overview of LIDAR: Collection to application. NGI Publ. 2010, 2, 1–9. [Google Scholar]

- Leone, M. Advances in fiber optic sensors for soil moisture monitoring: A review. Results Opt. 2022, 7, 100213. [Google Scholar] [CrossRef]

- Wang, Z.; Liu, Y.; Duan, Y.; Li, X.; Zhang, X.; Ji, J.; Dong, E.; Zhang, Y. USTC FLICAR: A sensors fusion dataset of LiDAR-inertial-camera for heavy-duty autonomous aerial work robots. Int. J. Robot. Res. 2023, 42, 1015–1047. [Google Scholar] [CrossRef]

- Nidamanuri, R.R.; Jayakumari, R.; Ramiya, A.M.; Astor, T.; Wachendorf, M.; Buerkert, A. High-resolution multispectral imagery and LiDAR point cloud fusion for the discrimination and biophysical characterisation of vegetable crops at different levels of nitrogen. Biosyst. Eng. 2022, 222, 177–195. [Google Scholar] [CrossRef]

- Liu, J.; Liang, X.; Hyyppä, J.; Yu, X.; Lehtomäki, M.; Pyörälä, J.; Zhu, L.; Wang, Y.; Chen, R. Automated matching of multiple terrestrial laser scans for stem mapping without the use of artificial references. Int. J. Appl. Earth Obs. Geoinf. 2017, 56, 13–23. [Google Scholar] [CrossRef]

- Couprie, C.; Farabet, C.; Najman, L.; LeCun, Y. Indoor semantic segmentation using depth information. arXiv 2013, arXiv:1301.3572. [Google Scholar]

- Zhao, F.; Zhang, C.; Geng, B. Deep multimodal data fusion. ACM Comput. Surv. 2024, 56, 1–36. [Google Scholar] [CrossRef]

- Zamani, S.A.; Baleghi, Y. Early/late fusion structures with optimized feature selection for weed detection using visible and thermal images of paddy fields. Precis. Agric. 2023, 24, 482–510. [Google Scholar] [CrossRef]

- Hu, X.; Yang, K.; Fei, L.; Wang, K. ACNet: Attention based network to exploit complementary features for RGBD semantic segmentation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1440–1444. [Google Scholar]

- Hung, S.; Lo, S.; Hang, H. Incorporating luminance, depth and color information by a fusion-based network for semantic segmentation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 2374–2378. [Google Scholar]

- Man, Q. Fusion of Hyperspectral and LiDAR Data for Urban Land Use Classification. Ph.D. Dissertation, School of Geographic Sciences at East China Normal University, Shanghai, China, 2015. [Google Scholar]

- Sun, Y.; Zuo, W.; Yun, P.; Wang, H.; Liu, M. FuseSeg: Semantic segmentation of urban scenes based on RGB and thermal data fusion. IEEE Trans. Autom. Sci. Eng. 2020, 18, 1000–1011. [Google Scholar] [CrossRef]

- He, X.; Cai, Q.; Zou, X.; Li, H.; Feng, X.; Yin, W.; Qian, Y. Multi-modal late fusion rice seed variety classification based on an improved voting method. Agriculture 2023, 13, 597. [Google Scholar] [CrossRef]

- Gadzicki, K.; Khamsehashari, R.; Zetzsche, C. Early vs late fusion in multimodal convolutional neural networks. In Proceedings of the 2020 IEEE 23rd International Conference on Information Fusion (FUSION), Rustenburg, South Africa, 6–9 July 2020; pp. 1–6. [Google Scholar]

- Wang, Y.; Huang, W.; Sun, F.; Xu, T.; Rong, Y.; Huang, J. Deep multimodal fusion by channel exchanging. Adv. Neural Inf. Process. Syst. 2020, 33, 4835–4845. [Google Scholar]

- Huang, N.; Jiao, Q.; Zhang, Q.; Han, J. Middle-level feature fusion for lightweight RGB-D salient object detection. IEEE Trans. Image Process. 2022, 31, 6621–6634. [Google Scholar] [CrossRef]

- Mangai, U.G.; Samanta, S.; Das, S.; Chowdhury, P.R. A survey of decision fusion and feature fusion strategies for pattern classification. IETE Tech. Rev. 2010, 27, 293–307. [Google Scholar] [CrossRef]

- Sinha, A.; Chen, H.; Danu, D.G.; Kirubarajan, T.; Farooq, M. Estimation and decision fusion: A survey. Neurocomputing 2008, 71, 2650–2656. [Google Scholar] [CrossRef]

- Jeon, B.; Landgrebe, D.A. Decision fusion approach for multitemporal classification. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1227–1233. [Google Scholar] [CrossRef]

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90. [Google Scholar] [CrossRef]

- Zhu, N.; Liu, X.; Liu, Z.; Hu, K.; Wang, Y.; Tan, J.; Huang, M.; Zhu, Q.; Ji, X.; Jiang, Y. Deep learning for smart agriculture: Concepts, tools, applications, and opportunities. Int. J. Agric. Biol. Eng. 2018, 11, 32–44. [Google Scholar] [CrossRef]

- Grm, K.; Štruc, V.; Artiges, A.; Caron, M.; Ekenel, H.K. Strengths and weaknesses of deep learning models for face recognition against image degradations. IET Biom. 2018, 7, 81–89. [Google Scholar] [CrossRef]

- Kamilaris, A.; Prenafeta-Boldú, F.X. A review of the use of convolutional neural networks in agriculture. J. Agric. Sci. 2018, 156, 312–322. [Google Scholar] [CrossRef]

- Zhao, C.-T.; Wang, R.-F.; Tu, Y.-H.; Pang, X.-X.; Su, W.-H. Automatic lettuce weed detection and classification based on optimized convolutional neural networks for robotic weed control. Agronomy 2024, 14, 2838. [CrossRef]

- Coulibaly, S.; Kamsu-Foguem, B.; Kamissoko, D.; Traore, D. Deep neural networks with transfer learning in millet crop images. Comput. Ind. 2019, 108, 115–120. [Google Scholar] [CrossRef]

- Pan, C.; Qu, Y.; Yao, Y.; Wang, M. HybridGNN: A Self-Supervised graph neural network for efficient maximum matching in bipartite graphs. Symmetry 2024, 16, 1631. [Google Scholar] [CrossRef]

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27. [Google Scholar]

- Lu, Y.; Chen, D.; Olaniyi, E.; Huang, Y. Generative adversarial networks (GANs) for image augmentation in agriculture: A systematic review. Comput. Electron. Agric. 2022, 200, 107208. [Google Scholar] [CrossRef]

- Lu, C.; Rustia, D.J.A.; Lin, T. Generative adversarial network based image augmentation for insect pest classification enhancement. IFAC-PapersOnLine 2019, 52, 1–5. [Google Scholar] [CrossRef]

- Rizvi, S.K.J.; Azad, M.A.; Fraz, M.M. Spectrum of advancements and developments in multidisciplinary domains for generative adversarial networks (GANs). Arch. Comput. Methods Eng. 2021, 28, 4503–4521. [Google Scholar] [CrossRef] [PubMed]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11. [Google Scholar]

- Zhou, G.; Rui-Feng, W. The Heterogeneous Network Community Detection Model Based on Self-Attention. Symmetry 2025, 17, 432. [Google Scholar] [CrossRef]

- Xie, W.; Zhao, M.; Liu, Y.; Yang, D.; Huang, K.; Fan, C.; Wang, Z. Recent advances in Transformer technology for agriculture: A comprehensive survey. Eng. Appl. Artif. Intell. 2024, 138, 109412. [Google Scholar] [CrossRef]

- Wang, Z.; Wang, R.; Wang, M.; Lai, T.; Zhang, M. Self-supervised transformer-based pre-training method with General Plant Infection dataset. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Xiamen, China, 13–15 October 2024; pp. 189–202. [Google Scholar]

- Xu, J.; Zhu, Y.; Zhong, R.; Lin, Z.; Xu, J.; Jiang, H.; Huang, J.; Li, H.; Lin, T. DeepCropMapping: A multi-temporal deep learning approach with improved spatial generalizability for dynamic corn and soybean mapping. Remote Sens. Environ. 2020, 247, 111946. [Google Scholar] [CrossRef]

- Xiong, X.; Zhong, R.; Tian, Q.; Huang, J.; Zhu, L.; Yang, Y.; Lin, T. Daily DeepCropNet: A hierarchical deep learning approach with daily time series of vegetation indices and climatic variables for corn yield estimation. ISPRS J. Photogramm. Remote Sens. 2024, 209, 249–264. [Google Scholar] [CrossRef]

- Li, W.; Wang, C.; Cheng, G.; Song, Q. Optimum-statistical Collaboration Towards General and Efficient Black-box Optimization. Trans. Mach. Learn. Res. 2023. Available online: https://par.nsf.gov/servlets/purl/10418406 (accessed on 20 April 2025).

- Chai, L.; Qu, Y.; Zhang, L.; Liang, S.; Wang, J. Estimating time-series leaf area index based on recurrent nonlinear autoregressive neural networks with exogenous inputs. Int. J. Remote Sens. 2012, 33, 5712–5731. [Google Scholar] [CrossRef]

- Fang, W.; Chen, Y.; Xue, Q. Survey on research of RNN-based spatio-temporal sequence prediction algorithms. J. Big Data 2021, 3, 97. [Google Scholar] [CrossRef]

- Cao, Y.; Sun, Z.; Li, L.; Mo, W. A study of sentiment analysis algorithms for agricultural product reviews based on improved BERT model. Symmetry 2022, 14, 1604. [Google Scholar] [CrossRef]

- Sayeed, M.S.; Mohan, V.; Muthu, K.S. BERT: A review of applications in sentiment analysis. HighTech Innov. J. 2023, 4, 453–462. [Google Scholar] [CrossRef]

- Murugesan, R.; Mishra, E.; Krishnan, A.H. Forecasting agricultural commodities prices using deep learning-based models: Basic LSTM, bi-LSTM, stacked LSTM, CNN LSTM, and convolutional LSTM. Int. J. Sustain. Agric. Manag. Inform. 2022, 8, 242–277. [Google Scholar] [CrossRef]

- Attri, I.; Awasthi, L.K.; Sharma, T.P.; Rathee, P. A review of deep learning techniques used in agriculture. Ecol. Inform. 2023, 77, 102217. [Google Scholar] [CrossRef]

- Ayesha Barvin, P.; Sampradeepraj, T. Crop recommendation systems based on soil and environmental factors using graph convolution neural network: A systematic literature review. Eng. Proc. 2023, 58, 97. [Google Scholar]

- Gupta, A.; Singh, A. Agri-GNN: A novel genotypic-topological graph neural network framework built on GraphSAGE for optimized yield prediction. arXiv 2023, arXiv:2310.13037. [Google Scholar]

- Manzano, R.M.; Pérez, J.E. Theoretical framework and methods for the analysis of the adoption-diffusion of innovations in agriculture: A bibliometric review. Boletín De La Asoc. De Geógrafos Españoles 2023, 96, 4. [Google Scholar] [CrossRef]

- Macours, K. Farmers’ demand and the traits and diffusion of agricultural innovations in developing countries. Annu. Rev. Resour. Econ. 2019, 11, 483–499. [Google Scholar] [CrossRef]

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53. [Google Scholar] [CrossRef]

- Chua, L.O.; Roska, T. The CNN paradigm. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 1993, 40, 147–156. [Google Scholar] [CrossRef]

- Pearton, S.J.; Zolper, J.C.; Shul, R.J.; Ren, F. GaN: Processing, defects, and devices. J. Appl. Phys. 1999, 86, 1–78. [Google Scholar] [CrossRef]

- Borji, A. Pros and cons of GAN evaluation measures: New developments. Comput. Vis. Image Underst. 2022, 215, 103329. [Google Scholar] [CrossRef]

- Min, E.; Chen, R.; Bian, Y.; Xu, T.; Zhao, K.; Huang, W.; Zhao, P.; Huang, J.; Ananiadou, S.; Rong, Y. Transformer for graphs: An overview from architecture perspective. arXiv 2022, arXiv:2202.08455. [Google Scholar]

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110. [Google Scholar] [CrossRef]

- Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306. [Google Scholar] [CrossRef]

- Yin, W.; Kann, K.; Yu, M.; Schütze, H. Comparative study of CNN and RNN for natural language processing. arXiv 2017, arXiv:1702.01923. [Google Scholar]

- Pareja, A.; Domeniconi, G.; Chen, J.; Ma, T.; Suzumura, T.; Kanezashi, H.; Kaler, T.; Schardl, T.; Leiserson, C. EvolveGCN: Evolving graph convolutional networks for dynamic graphs. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 5363–5370. [Google Scholar]

- Sankar, A.; Wu, Y.; Gou, L.; Zhang, W.; Yang, H. Dynamic graph representation learning via self-attention networks. arXiv 2018, arXiv:1812.09430. [Google Scholar]

- Yang, L.; Chatelain, C.; Adam, S. Dynamic graph representation learning with neural networks: A survey. IEEE Access 2024, 12, 43460–43484. [Google Scholar] [CrossRef]

- Zhou, G.; Wang, R.; Cui, K. A local perspective-based model for overlapping community detection. arXiv 2025, arXiv:2503.21558. [Google Scholar]

- Chen, Y.; Xing, X. Constructing dynamic knowledge graph based on ontology modeling and neo4j graph database. In Proceedings of the 2022 5th International Conference on Artificial Intelligence and Big Data (ICAIBD), Fuzhou, China, 8–10 July 2022; pp. 522–525. [Google Scholar]

- Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement learning: A survey. J. Artif. Intell. Res. 1996, 4, 237–285. [Google Scholar] [CrossRef]

- Wiering, M.A.; Van Otterlo, M. Reinforcement learning. Adapt. Learn. Optim. 2012, 12, 729. [Google Scholar]

- Sun, L.; Yang, Y.; Hu, J.; Porter, D.; Marek, T.; Hillyer, C. Reinforcement learning control for water-efficient agricultural irrigation. In Proceedings of the 2017 IEEE International Symposium on Parallel and Distributed Processing with Applications and 2017 IEEE International Conference on Ubiquitous Computing and Communications (ISPA/IUCC), Guangzhou, China, 12–15 December 2017; pp. 1334–1341. [Google Scholar]

- Chen, M.; Cui, Y.; Wang, X.; Xie, H.; Liu, F.; Luo, T.; Zheng, S.; Luo, Y. A reinforcement learning approach to irrigation decision-making for rice using weather forecasts. Agric. Water Manag. 2021, 250, 106838. [Google Scholar] [CrossRef]

- Renaudo, E.; Girard, B.; Chatila, R.; Khamassi, M. Respective advantages and disadvantages of model-based and model-free reinforcement learning in a robotics neuro-inspired cognitive architecture. Procedia Comput. Sci. 2015, 71, 178–184. [Google Scholar] [CrossRef]

- Jain, S.; Ramesh, D.; Bhattacharya, D. A multi-objective algorithm for crop pattern optimization in agriculture. Appl. Soft Comput. 2021, 112, 107772. [Google Scholar] [CrossRef]

- Groot, J.C.; Oomen, G.J.; Rossing, W.A. Multi-objective optimization and design of farming systems. Agric. Syst. 2012, 110, 63–77. [Google Scholar] [CrossRef]

- Li, Z.; Sun, C.; Wang, H.; Wang, R. Hybrid optimization of phase masks: Integrating Non-Iterative methods with simulated annealing and validation via tomographic measurements. Symmetry 2025, 17, 530. [Google Scholar] [CrossRef]

- Qin, Y.; Tu, Y.; Li, T.; Ni, Y.; Wang, R.; Wang, H. Deep learning for sustainable agriculture: A systematic review on applications in lettuce cultivation. Sustainability 2025, 17, 3190. [Google Scholar] [CrossRef]

- Habibi Davijani, M.; Banihabib, M.E.; Nadjafzadeh Anvar, A.; Hashemi, S.R. Multi-objective optimization model for the allocation of water resources in arid regions based on the maximization of socioeconomic efficiency. Water Resour. Manag. 2016, 30, 927–946. [Google Scholar] [CrossRef]

- Zhou, Y.; Fan, H. Research on multi objective optimization model of sustainable agriculture industrial structure based on genetic algorithm. J. Intell. Fuzzy Syst. 2018, 35, 2901–2907. [Google Scholar] [CrossRef]

- Li, Q.; Yu, G.; Wang, J.; Liu, Y. A deep multimodal generative and fusion framework for class-imbalanced multimodal data. Multimed. Tools Appl. 2020, 79, 25023–25050. [Google Scholar] [CrossRef]

- Zhang, Q.; Wei, Y.; Han, Z.; Fu, H.; Peng, X.; Deng, C.; Hu, Q.; Xu, C.; Wen, J.; Hu, D. Multimodal fusion on low-quality data: A comprehensive survey. arXiv 2024, arXiv:2404.18947. [Google Scholar]

- Pawłowski, M.; Wróblewska, A.; Sysko-Romańczuk, S. Effective techniques for multimodal data fusion: A comparative analysis. Sensors 2023, 23, 2381. [Google Scholar] [CrossRef] [PubMed]

- Xu, K.; Yu, Z.; Wang, X.; Mi, M.B.; Yao, A. Enhancing video super-resolution via implicit resampling-based alignment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 2546–2555. [Google Scholar]

- Zhu, H.; Wang, Z.; Shi, Y.; Hua, Y.; Xu, G.; Deng, L. Multimodal Fusion Method Based on Self-Attention Mechanism. Wireless Commun. Mob. Comput. 2020, 2020, 8843186. [Google Scholar] [CrossRef]

- Shang, Y.; Gao, C.; Chen, J.; Jin, D.; Ma, H.; Li, Y. Enhancing adversarial robustness of multi-modal recommendation via modality balancing. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 6274–6282. [Google Scholar]

- Li, J.; Xu, M.; Xiang, L.; Chen, D.; Zhuang, W.; Yin, X.; Li, Z. Foundation models in smart agriculture: Basics, opportunities, and challenges. Comput. Electron. Agric. 2024, 222, 109032. [Google Scholar] [CrossRef]

- Yu, K.; Xu, W.; Zhang, C.; Dai, Z.; Ding, J.; Yue, Y.; Zhang, Y.; Wu, Y. ITFNet-API: Image and Text Based Multi-Scale Cross-Modal Feature Fusion Network for Agricultural Pest Identification. 2023. Available online: https://www.researchsquare.com/article/rs-3589884/v1 (accessed on 20 April 2025).

- Pantazi, X.; Moshou, D.; Bochtis, D. Intelligent Data Mining and Fusion Systems in Agriculture; Academic Press: Cambridge, MA, USA, 2019; ISBN 0128143924. [Google Scholar]

- Yashodha, G.; Shalini, D. An integrated approach for predicting and broadcasting tea leaf disease at early stage using IoT with machine learning—A review. Mater. Today Proc. 2021, 37, 484–488. [Google Scholar] [CrossRef]

- Patil, R.R.; Kumar, S. Rice-fusion: A multimodality data fusion framework for rice disease diagnosis. IEEE Access 2022, 10, 5207–5222. [Google Scholar] [CrossRef]

- Zhao, Y.; Liu, L.; Xie, C.; Wang, R.; Wang, F.; Bu, Y.; Zhang, S. An effective automatic system deployed in agricultural Internet of Things using Multi-Context Fusion Network towards crop disease recognition in the wild. Appl. Soft Comput. 2020, 89, 106128. [Google Scholar] [CrossRef]

- Selvaraj, M.G.; Vergara, A.; Montenegro, F.; Ruiz, H.A.; Safari, N.; Raymaekers, D.; Ocimati, W.; Ntamwira, J.; Tits, L.; Omondi, A.B. Detection of banana plants and their major diseases through aerial images and machine learning methods: A case study in DR Congo and Republic of Benin. ISPRS J. Photogramm. Remote Sens. 2020, 169, 110–124. [Google Scholar] [CrossRef]

- Li, B.; Lecourt, J.; Bishop, G. Advances in non-destructive early assessment of fruit ripeness towards defining optimal time of harvest and yield prediction—A review. Plants 2018, 7, 3. [Google Scholar] [CrossRef]

- Surya Prabha, D.; Satheesh Kumar, J. Assessment of banana fruit maturity by image processing technique. J. Food Sci. Technol. 2015, 52, 1316–1327. [Google Scholar] [CrossRef] [PubMed]

- Radu, V.; Tong, C.; Bhattacharya, S.; Lane, N.D.; Mascolo, C.; Marina, M.K.; Kawsar, F. Multimodal deep learning for activity and context recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1, 1–27. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58. [Google Scholar] [CrossRef]

- Chu, Z.; Yu, J. An end-to-end model for rice yield prediction using deep learning fusion. Comput. Electron. Agric. 2020, 174, 105471. [Google Scholar] [CrossRef]

- Liu, Y.; Wei, C.; Yoon, S.; Ni, X.; Wang, W.; Liu, Y.; Wang, D.; Wang, X.; Guo, X. Development of multimodal fusion technology for tomato maturity assessment. Sensors 2024, 24, 2467. [Google Scholar] [CrossRef]

- Garillos-Manliguez, C.A.; Chiang, J.Y. Multimodal deep learning and visible-light and hyperspectral imaging for fruit maturity estimation. Sensors 2021, 21, 1288. [Google Scholar] [CrossRef]

- Garillos-Manliguez, C.A.; Chiang, J.Y. Multimodal deep learning via late fusion for non-destructive papaya fruit maturity classification. In Proceedings of the 2021 18th International Conference on Electrical Engineering, Computing Science and Automatic Control (CCE), Mexico City, Mexico, 10–12 November 2021; pp. 1–6. [Google Scholar]

- Colbach, N.; Fernier, A.; Le Corre, V.; Messéan, A.; Darmency, H. Simulating changes in cropping practises in conventional and glyphosate-tolerant maize. I. Effects on weeds. Environ. Sci. Pollut. Res. Int. 2017, 24, 11582–11600. [Google Scholar] [CrossRef]

- Wang, R.; Tu, Y.; Chen, Z.; Zhao, C.; Su, W. A Lettpoint-Yolov11l Based Intelligent Robot for Precision Intra-Row Weeds Control in Lettuce. Available at SSRN 5162748. 2025. Available online: https://ssrn.com/abstract=5162748 (accessed on 20 April 2025).

- Krähmer, H.; Andreasen, C.; Economou-Antonaka, G.; Holec, J.; Kalivas, D.; Kolářová, M.; Novák, R.; Panozzo, S.; Pinke, G.; Salonen, J. Weed surveys and weed mapping in Europe: State of the art and future tasks. Crop Prot. 2020, 129, 105010. [Google Scholar] [CrossRef]

- Eide, A.; Koparan, C.; Zhang, Y.; Ostlie, M.; Howatt, K.; Sun, X. UAV-assisted thermal infrared and multispectral imaging of weed canopies for glyphosate resistance detection. Remote Sens. 2021, 13, 4606. [Google Scholar] [CrossRef]

- Eide, A.; Zhang, Y.; Koparan, C.; Stenger, J.; Ostlie, M.; Howatt, K.; Bajwa, S.; Sun, X. Image based thermal sensing for glyphosate resistant weed identification in greenhouse conditions. Comput. Electron. Agric. 2021, 188, 106348. [Google Scholar] [CrossRef]

- Yang, Z.; Xia, W.; Chu, H.; Su, W.; Wang, R.; Wang, H. A comprehensive review of deep learning applications in cotton industry: From field monitoring to smart processing. Plants 2025, 14, 1481. [Google Scholar] [CrossRef] [PubMed]

- Xia, F.; Lou, Z.; Sun, D.; Li, H.; Quan, L. Weed resistance assessment through airborne multimodal data fusion and deep learning: A novel approach towards sustainable agriculture. Int. J. Appl. Earth Obs. Geoinf. 2023, 120, 103352. [Google Scholar] [CrossRef]

- Xu, K.; Xie, Q.; Zhu, Y.; Cao, W.; Ni, J. Effective Multi-Species weed detection in complex wheat fields using Multi-Modal and Multi-View image fusion. Comput. Electron. Agric. 2025, 230, 109924. [Google Scholar] [CrossRef]

- Bechar, A.; Vigneault, C. Agricultural robots for field operations: Concepts and components. Biosyst. Eng. 2016, 149, 94–111.

- Teng, H.; Wang, Y.; Song, X.; Karydis, K. Multimodal dataset for localization, mapping and crop monitoring in citrus tree farms. In Proceedings of the International Symposium on Visual Computing, Lake Tahoe, NV, USA, 16–18 October 2023; pp. 571–582.

- Man, Z.; Yuhan, J.I.; Shichao, L.I.; Ruyue, C.; Hongzhen, X.U.; Zhenqian, Z. Research progress of agricultural machinery navigation technology. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2020, 51, 4.

- Li, A.; Cao, J.; Li, S.; Huang, Z.; Wang, J.; Liu, G. Map construction and path planning method for a mobile robot based on multi-sensor information fusion. Appl. Sci. 2022, 12, 2913.

- Xie, B.; Jin, Y.; Faheem, M.; Gao, W.; Liu, J.; Jiang, H.; Cai, L.; Li, Y. Research progress of autonomous navigation technology for multi-agricultural scenes. Comput. Electron. Agric. 2023, 211, 107963.

- Dong, Q.; Murakami, T.; Nakashima, Y. Recalculating the agricultural labor force in China. China Econ. J. 2018, 11, 151–169.

- Krishnan, A.; Swarna, S. Robotics, IoT, and AI in the automation of agricultural industry: A review. In Proceedings of the 2020 IEEE Bangalore Humanitarian Technology Conference (B-HTC), Vijiyapur, Karnataka, India, 8–10 October 2020; pp. 1–6.

- Bu, L.; Hu, G.; Chen, C.; Sugirbay, A.; Chen, J. Experimental and simulation analysis of optimum picking patterns for robotic apple harvesting. Sci. Hortic. 2020, 261, 108937.

- Ji, W.; Qian, Z.; Xu, B.; Chen, G.; Zhao, D. Apple viscoelastic complex model for bruise damage analysis in constant velocity grasping by gripper. Comput. Electron. Agric. 2019, 162, 907–920.

- Birrell, S.; Hughes, J.; Cai, J.Y.; Iida, F. A field-tested robotic harvesting system for iceberg lettuce. J. Field Robot. 2020, 37, 225–245.

- Silwal, A.; Davidson, J.R.; Karkee, M.; Mo, C.; Zhang, Q.; Lewis, K. Design, integration, and field evaluation of a robotic apple harvester. J. Field Robot. 2017, 34, 1140–1159.

- Mao, S.; Li, Y.; Ma, Y.; Zhang, B.; Zhou, J.; Wang, K. Automatic cucumber recognition algorithm for harvesting robots in the natural environment using deep learning and multi-feature fusion. Comput. Electron. Agric. 2020, 170, 105254.

- Wu, Z.; Wang, Z.; Spohrer, K.; Schock, S.; He, X.; Müller, J. Non-contact leaf wetness measurement with laser-induced light reflection and RGB imaging. Biosyst. Eng. 2024, 244, 42–52.

- Zou, K.; Ge, L.; Zhou, H.; Zhang, C.; Li, W. Broccoli seedling pest damage degree evaluation based on machine learning combined with color and shape features. Inf. Process. Agric. 2021, 8, 505–514.

- Ye, K.; Hu, G.; Tong, Z.; Xu, Y.; Zheng, J. Key intelligent pesticide prescription spraying technologies for the control of pests, diseases, and weeds: A review. Agriculture 2025, 15, 81.

- Yin, D.; Chen, S.; Pei, W.; Shen, B. Design of map-based indoor variable weed spraying system. Trans. Chin. Soc. Agric. Eng. 2011, 27, 131–135.

- Maslekar, N.V.; Kulkarni, K.P.; Chakravarthy, A.K. Application of unmanned aerial vehicles (UAVs) for pest surveillance, monitoring and management. In Innovative Pest Management Approaches for the 21st Century: Harnessing Automated Unmanned Technologies; Springer: Singapore, 2020; pp. 27–45.

- Chostner, B. See & spray: The next generation of weed control. Resour. Mag. 2017, 24, 4–5.

- Mumtaz, N.; Nazar, M. Artificial intelligence robotics in agriculture: See & spray. J. Intell. Pervasive Soft Comput. 2022, 1, 21–24.

- Chittoor, P.K.; Dandumahanti, B.P.; Veerajagadheswar, P.; Samarakoon, S.B.P.; Muthugala, M.V.J.; Elara, M.R. Developing an urban landscape fumigation service robot: A Machine-Learned, Gen-AI-Based design trade study. Appl. Sci. 2025, 15, 2061.

- Oberti, R.; Marchi, M.; Tirelli, P.; Calcante, A.; Iriti, M.; Tona, E.; Hočevar, M.; Baur, J.; Pfaff, J.; Schütz, C. Selective spraying of grapevines for disease control using a modular agricultural robot. Biosyst. Eng. 2016, 146, 203–215.

- Adamides, G.; Katsanos, C.; Constantinou, I.; Christou, G.; Xenos, M.; Hadzilacos, T.; Edan, Y. Design and development of a semi-autonomous agricultural vineyard sprayer: Human–robot interaction aspects. J. Field Robot. 2017, 34, 1407–1426.

- Lochan, K.; Khan, A.; Elsayed, I.; Suthar, B.; Seneviratne, L.; Hussain, I. Advancements in precision spraying of agricultural robots: A comprehensive Review. IEEE Access 2024, 12, 129447–129483.

- Prathibha, S.R.; Hongal, A.; Jyothi, M.P. IoT based monitoring system in smart agriculture. In Proceedings of the 2017 International Conference on Recent Advances in Electronics and Communication Technology (ICRAECT), Bangalore, India, 16–17 March 2017; pp. 81–84.

- Wan, S.; Zhao, K.; Lu, Z.; Li, J.; Lu, T.; Wang, H. A modularized IoT monitoring system with edge-computing for aquaponics. Sensors 2022, 22, 9260.

- Li, L.; Li, J.; Wang, H.; Georgieva, T.; Ferentinos, K.P.; Arvanitis, K.G.; Sygrimis, N.A. Sustainable energy management of solar greenhouses using open weather data on MACQU platform. Int. J. Agric. Biol. Eng. 2018, 11, 74–82.

- Suma, N.; Samson, S.R.; Saranya, S.; Shanmugapriya, G.; Subhashri, R. IOT based smart agriculture monitoring system. Int. J. Recent Innov. Trends Comput. Commun. 2017, 5, 177–181.

- Boobalan, J.; Jacintha, V.; Nagarajan, J.; Thangayogesh, K.; Tamilarasu, S. An IOT based agriculture monitoring system. In Proceedings of the 2018 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 3–5 April 2018; pp. 594–598.

- Munaganuri, R.K.; Yamarthi, N.R. PAMICRM: Improving precision agriculture through multimodal image analysis for crop water requirement estimation using multidomain remote sensing data samples. IEEE Access 2024, 12, 52815–52836.

- Mubaiwa, O.; Chilo, D. Female genital mutilation (FGM/C) in garissa and isiolo, kenya: Impacts on education and livelihoods in the context of cultural norms and food insecurity. Societies 2025, 15, 43.

- Li, L.; Zhang, Q.; Huang, D. A review of imaging techniques for plant phenotyping. Sensors 2014, 14, 20078–20111.

- Gordon, W.B.; Whitney, D.A.; Raney, R.J. Nitrogen management in furrow irrigated, ridge-tilled corn. J. Prod. Agric. 1993, 6, 213–217.

- Shafi, U.; Mumtaz, R.; García-Nieto, J.; Hassan, S.A.; Zaidi, S.A.R.; Iqbal, N. Precision agriculture techniques and practices: From considerations to applications. Sensors 2019, 19, 3796.

- Yuan, H.; Cheng, M.; Pang, S.; Li, L.; Wang, H.; NA, S. Construction and performance experiment of integrated water and fertilization irrigation recycling system. Trans. Chin. Soc. Agric. Eng. 2014, 30, 72–78.

- Wang, H.; Fu, Q.; Meng, F.; Mei, S.; Wang, J.; Li, L. Optimal design and experiment of fertilizer EC regulation based on subsection control algorithm of fuzzy and PI. Trans. Chin. Soc. Agric. Eng. 2016, 32, 110–116.

- Dhakshayani, J.; Surendiran, B. M2F-Net: A deep learning-based multimodal classification with high-throughput phenotyping for identification of overabundance of fertilizers. Agriculture 2023, 13, 1238.

- Bhattacharya, S.; Pandey, M. PCFRIMDS: Smart Next-Generation approach for precision crop and fertilizer recommendations using integrated multimodal data fusion for sustainable agriculture. IEEE Trans. Consum. Electron. 2024, 70, 6250–6261.

- Kilinc, H.C.; Apak, S.; Ozkan, F.; Ergin, M.E.; Yurtsever, A. Multimodal Fusion of optimized GRU–LSTM with self-attention layer for Hydrological Time Series forecasting. Water Resour. Manag. 2024, 38, 6045–6062.

- Bianchi, P.; Jakubowicz, J.; Roueff, F. Linear precoders for the detection of a Gaussian process in wireless sensors networks. IEEE Trans. Signal. Process. 2010, 59, 882–894.

- Wang, L. Heterogeneous data and big data analytics. Autom. Control. Inf. Sci. 2017, 3, 8–15.

- Peng, Y.; Bian, J.; Xu, J. Fedmm: Federated multi-modal learning with modality heterogeneity in computational pathology. In Proceedings of the ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 1696–1700.

- Wei, Y.; Yuan, S.; Yang, R.; Shen, L.; Li, Z.; Wang, L.; Chen, M. Tackling modality heterogeneity with multi-view calibration network for multimodal sentiment detection. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 5240–5252.

- Bertrand, C.; Burel, F.; Baudry, J. Spatial and temporal heterogeneity of the crop mosaic influences carabid beetles in agricultural landscapes. Landsc. Ecol. 2016, 31, 451–466.

- Xu, L.; Jiang, J.; Du, J. The dual effects of environmental regulation and financial support for agriculture on agricultural green development: Spatial spillover effects and spatio-temporal heterogeneity. Appl. Sci. 2022, 12, 11609.

- Dutilleul, P. Spatio-Temporal Heterogeneity: Concepts and Analyses; Cambridge University Press: Cambridge, UK, 2011; ISBN 0521791278.

- Bakshi, W.J.; Shafi, M. Semantic Heterogeneity-An overview. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2018, 4, 197–200.

- Wang, T.; Murphy, K.E. Semantic heterogeneity in multidatabase systems: A review and a proposed meta-data structure. J. Database Manag. 2004, 15, 71–87.

- Luo, Z.; Yang, W.; Yuan, Y.; Gou, R.; Li, X. Semantic segmentation of agricultural images: A survey. Inf. Process. Agric. 2024, 11, 172–186.

- Liu, Z.; Zhang, W.; Lin, S.; Quek, T.Q. Heterogeneous sensor data fusion by deep multimodal encoding. IEEE J. Sel. Top. Signal Process. 2017, 11, 479–491.

- Jiao, T.; Guo, C.; Feng, X.; Chen, Y.; Song, J. A comprehensive survey on deep learning Multi-Modal fusion: Methods, technologies and applications. Comput. Mater. Contin. 2024, 80, 1–35.

- Rai, N.; Zhang, Y.; Villamil, M.; Howatt, K.; Ostlie, M.; Sun, X. Agricultural weed identification in images and videos by integrating optimized deep learning architecture on an edge computing technology. Comput. Electron. Agric. 2024, 216, 108442.

- Ficuciello, F.; Falco, P.; Calinon, S. A brief survey on the role of dimensionality reduction in manipulation learning and control. IEEE Robot. Autom. Lett. 2018, 3, 2608–2615.

- Munir, A.; Blasch, E.; Kwon, J.; Kong, J.; Aved, A. Artificial intelligence and data fusion at the edge. IEEE Aerosp. Electron. Syst. Mag. 2021, 36, 62–78.

- Ajili, M.T.; Hara-Azumi, Y. Multimodal neural network acceleration on a hybrid CPU-FPGA architecture: A case study. IEEE Access 2022, 10, 9603–9617.

- Bultmann, S.; Quenzel, J.; Behnke, S. Real-time multi-modal semantic fusion on unmanned aerial vehicles. In Proceedings of the 2021 European Conference on Mobile Robots (ECMR), Bonn, Germany, 31 August–3 September 2021; pp. 1–8.

- Jani, Y.; Jani, A.; Prajapati, K.; Windsor, C. Leveraging multimodal AI in edge computing for real-time decision-making. Computing 2023, 1, 2.

- Pokhrel, S.R.; Choi, J. Understand-before-talk (UBT): A semantic communication approach to 6G networks. IEEE Trans. Veh. Technol. 2022, 72, 3544–3556.

- Yang, W.; Du, H.; Liew, Z.Q.; Lim, W.Y.B.; Xiong, Z.; Niyato, D.; Chi, X.; Shen, X.; Miao, C. Semantic communications for future internet: Fundamentals, applications, and challenges. IEEE Commun. Surv. Tutor. 2022, 25, 213–250.

- Li, L.; Fan, Y.; Tse, M.; Lin, K. A review of applications in federated learning. Comput. Ind. Eng. 2020, 149, 106854.

- Mammen, P.M. Federated learning: Opportunities and challenges. arXiv 2021, arXiv:2101.05428.

- Xu, X.; Ding, Y.; Hu, S.X.; Niemier, M.; Cong, J.; Hu, Y.; Shi, Y. Scaling for edge inference of deep neural networks. Nat. Electron. 2018, 1, 216–222.

- Shuvo, M.M.H.; Islam, S.K.; Cheng, J.; Morshed, B.I. Efficient acceleration of deep learning inference on resource-constrained edge devices: A review. Proc. IEEE Inst. Electr. Electron. Eng. 2022, 111, 42–91.

- Geng, X.; He, X.; Hu, M.; Bi, M.; Teng, X.; Wu, C. Multi-attention network with redundant information filtering for multi-horizon forecasting in multivariate time series. Expert Syst. Appl. 2024, 257, 125062.

- Dayal, A.; Bonthu, S.; Saripalle, P.; Mohan, R. Deep learning for multi-horizon water level forecasting in KRS reservoir, India. Results Eng. 2024, 21, 101828.

- Kaur, A.; Goyal, P.; Rajhans, R.; Agarwal, L.; Goyal, N. Fusion of multivariate time series meteorological and static soil data for multistage crop yield prediction using multi-head self attention network. Expert Syst. Appl. 2023, 226, 120098.

- Deforce, B.; Baesens, B.; Diels, J.; Serral Asensio, E. Forecasting sensor-data in smart agriculture with temporal fusion transformers. Trans Comput. Sci. Comput. Intell. 2022. Available online: https://lirias.kuleuven.be/retrieve/668581 (accessed on 20 April 2025).

- Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding deep learning requires rethinking generalization. arXiv 2016, arXiv:1611.03530.

- Jakubovitz, D.; Giryes, R.; Rodrigues, M.R. Generalization error in deep learning. In Proceedings of the Compressed Sensing and its Applications: Third International Matheon Conference, Berlin, Germany, 4–8 December 2017; Birkhäuser: Basel, Switzerland, 2019; pp. 153–193.

- Ahmad, A.; El Gamal, A.; Saraswat, D. Toward generalization of deep learning-based plant disease identification under controlled and field conditions. IEEE Access 2023, 11, 9042–9057.

- Wang, D.; Cao, W.; Zhang, F.; Li, Z.; Xu, S.; Wu, X. A review of deep learning in multiscale agricultural sensing. Remote Sens. 2022, 14, 559.

- Fuentes, A.; Yoon, S.; Kim, T.; Park, D.S. Open set self and across domain adaptation for tomato disease recognition with deep learning techniques. Front. Plant. Sci. 2021, 12, 758027.

- Qi, F.; Yang, X.; Xu, C. A unified framework for multimodal domain adaptation. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 429–437.

- Singhal, P.; Walambe, R.; Ramanna, S.; Kotecha, K. Domain adaptation: Challenges, methods, datasets, and applications. IEEE Access 2023, 11, 6973–7020.

- Shorten, C.; Khoshgoftaar, T.M.; Furht, B. Text data augmentation for deep learning. J. Big Data 2021, 8, 101.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

- Alijani, S.; Fayyad, J.; Najjaran, H. Vision transformers in domain adaptation and domain generalization: A study of robustness. Neural Comput. Appl. 2024, 36, 17979–18007.

- Wilson, G.; Cook, D.J. A survey of unsupervised deep domain adaptation. ACM Trans. Intell. Syst. Technol. 2020, 11, 1–46.

- Oubara, A.; Wu, F.; Amamra, A.; Yang, G. Survey on remote sensing data augmentation: Advances, challenges, and future perspectives. In Proceedings of the International Conference on Computing Systems and Applications, Algiers, Algeria, 17–18 May 2022; pp. 95–104.

- Mao, H.H. A survey on self-supervised pre-training for sequential transfer learning in neural networks. arXiv 2020, arXiv:2007.00800.

- Berg, P.; Pham, M.; Courty, N. Self-supervised learning for scene classification in remote sensing: Current state of the art and perspectives. Remote Sens. 2022, 14, 3995.

- Andrychowicz, M.; Denil, M.; Gomez, S.; Hoffman, M.W.; Pfau, D.; Schaul, T.; Shillingford, B.; De Freitas, N. Learning to learn by gradient descent by gradient descent. Adv. Neural Inf. Process. Syst. 2016, 29. Available online: https://proceedings.neurips.cc/paper/2016/hash/fb87582825f9d28a8d42c5e5e5e8b23d-Abstract.html (accessed on 20 April 2025).

- Wang, J.X. Meta-learning in natural and artificial intelligence. Curr. Opin. Behav. Sci. 2021, 38, 90–95.

- De Andrade Porto, J.V.; Dorsa, A.C.; de Moraes Weber, V.A.; de Andrade Porto, K.R.; Pistori, H. Usage of few-shot learning and meta-learning in agriculture: A literature review. Smart Agric. Technol. 2023, 5, 100307.

- Tseng, G.; Kerner, H.; Rolnick, D. TIML: Task-informed meta-learning for agriculture. arXiv 2022, arXiv:2202.02124.

| Network | URL |
|---|---|
| PointNet | https://github.com/charlesq34/pointnet (accessed on 10 May 2025) |
| DCP | https://github.com/WangYueFt/dcp (accessed on 10 May 2025) |

| Fusion Strategy | Fusion Stage | Modal Interaction Depth | Computational Complexity | Robustness | Flexibility | Information Integrity | Applicable Scenarios | References |
|---|---|---|---|---|---|---|---|---|
| Early Fusion | Data Input Stage | High | High | Low | Low | Highest | Tasks requiring deep interaction | [43] |
| Mid Fusion | Feature Extraction Stage | Medium | Medium | High | High | Medium | Tasks combining images and text | [44,45] |
| Late Fusion | Decision Stage | Low | Low | Highest | Highest | Low | Tasks with strong modality independence | [46,47,48] |
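
The three fusion stages compared above can be illustrated with a minimal NumPy sketch. Everything in it is an assumption made for illustration: the two toy modalities (`rgb`, `thermal`), the feature sizes, the random weights, and the single-layer "encoders" are hypothetical stand-ins and do not reproduce any model from the cited works.

```python
import numpy as np

rng = np.random.default_rng(0)

def encode(x, w):
    """Toy encoder: one linear layer followed by tanh (illustrative only)."""
    return np.tanh(x @ w)

def softmax(z):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

# Hypothetical inputs: 4 samples, 8 RGB features and 5 thermal features each.
rgb = rng.normal(size=(4, 8))
thermal = rng.normal(size=(4, 5))
n_classes = 3

def early_fusion(rgb, thermal, w):
    # Data input stage: concatenate raw inputs, then apply one shared model.
    return softmax(np.concatenate([rgb, thermal], axis=1) @ w)

def mid_fusion(rgb, thermal, w_rgb, w_th, w_head):
    # Feature extraction stage: encode each modality, then merge the features.
    feats = np.concatenate([encode(rgb, w_rgb), encode(thermal, w_th)], axis=1)
    return softmax(feats @ w_head)

def late_fusion(rgb, thermal, w_rgb, w_th):
    # Decision stage: each modality predicts on its own; average the outputs.
    return 0.5 * (softmax(rgb @ w_rgb) + softmax(thermal @ w_th))

p_early = early_fusion(rgb, thermal, rng.normal(size=(13, n_classes)))
p_mid = mid_fusion(
    rgb, thermal,
    rng.normal(size=(8, 6)), rng.normal(size=(5, 6)),
    rng.normal(size=(12, n_classes)),
)
p_late = late_fusion(
    rgb, thermal,
    rng.normal(size=(8, n_classes)), rng.normal(size=(5, n_classes)),
)
```

In this sketch, late fusion can still produce a prediction if one branch is dropped, which is consistent with the higher robustness and flexibility the table attributes to it; early fusion exposes the model to all raw cross-modal interactions at the cost of a larger joint input.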

| Model | Training Efficiency | Model Complexity | Robustness | References |
|---|---|---|---|---|
| CNNs | High training efficiency, parallelizable processing | Relatively simple structure, fewer parameters, not suitable for sequential data | Moderate robustness to data noise and deformation | [77,78] |
| GANs | Low training efficiency, complex and unstable training process | Complex structure, many parameters | Good robustness for generation tasks, but unstable training process | [79,80] |
| Transformer | High training efficiency, parallelizable processing | Relatively complex structure, many parameters | Strong robustness to data noise and deformation | [81,82] |
| RNNs | Relatively low training efficiency, difficult to parallelize | Relatively complex structure, many parameters | Moderate robustness to data noise and deformation | [83,84] |

| Application Scenario | Algorithms | Dataset | Reported Value | Metric | References |
|---|---|---|---|---|---|
| Crop disease detection | MCFN | Over 50,000 crop disease images and contextual information | 97.5% | Accuracy | [112] |
| Banana localization | RetinaNet, custom classifier | Pixel-based multispectral UAV and satellite image dataset for banana classification | 92% | Accuracy | [113] |
| Soybean grain yield prediction | DNN-F1, DNN-F2 | UAV-acquired RGB, multispectral, and thermal imaging data | 72% | Accuracy | [118] |
| Estimation of biochemical parameters in soybean | PLSR, SVR, ELR | UAV-acquired RGB, multispectral, and thermal imaging data | 22.6% | RMSE | [119] |
| Rice yield prediction | BBI | Summer/winter rice yields, meteorological data, and cultivation areas for 81 counties in Guangxi, China | 0.57% | RMSE | [120] |
| Tomato maturity classification | Fully connected neural network | 2568 data collections comprising visual, spectral, and tactile modalities | 99.4% | Accuracy | [121] |
| Papaya growth stage estimation | Deep convolutional neural network | 4427 RGB images and 512 hyperspectral (HS) images | 90% | F1 | [122] |
| Papaya growth stage estimation | Imaging-specific deep convolutional neural networks | Hyperspectral and visible-light images | 97% | F1 | [123] |
| Quantification of weed resistance | CNN | Hyperspectral data, high-resolution RGB images, and point cloud data | 54.7% | RMSE | [130] |
| Weed detection under leaf occlusion | Swin Transformer | Multimodal dataset of 1288 RGB images and 1288 PHA images; multiview dataset of 692 images | 85.14% | Accuracy | [131] |
| Weed detection in paddy fields | ELM-E | 100 pairs of visible and thermal images of rice and weeds | 98.08% | Accuracy | [38] |

| Application Scenario | Algorithms | Dataset | Reported Performance | References |
|---|---|---|---|---|
| Autonomous navigation of agricultural machinery in complex farmland environments | LiDAR, GNSS/INS, point cloud processing algorithms, SLAM | Self-collected three-dimensional point cloud data of farmland environment | Significant improvement in navigation accuracy, enhanced robustness | [134] |
| Mobile robot mapping and path planning with multi-sensor information fusion | EKF, Improved Ant Colony Optimization, Dynamic Window Approach, SLAM | LiDAR, inertial measurement unit, depth camera | Significant improvement in mapping accuracy and robustness; enhanced path planning efficiency and safety with error within 4 cm | [135] |
| Iceberg lettuce harvesting robot | Customized Convolutional and CNN | A dataset of 1505 lettuce images annotated with weather conditions, camera height, plant spacing, and maturity stages, complemented by force feedback data | 97% | [141] |
| Apple harvesting robot | Circular Hough Transform, Blob Analysis | RGB and 3D fruit localization data | 84% | [142] |
| Cucumber harvesting robot | MPCNN, I-RELIEF, SVM | 218 validated samples at 1024 × 768 pixels, collected from a cucumber plantation in Shouguang, Shandong, China | Correct identification rate > 90% with false recognition rate < 22% | [143] |
| Targeted weeding by plant protection robot | CNN | Field-captured image data, real-time operational telemetry, multi-sensor readings, historical farm records | 90% reduction in herbicide usage | [149,150] |
| Urban landscape fumigation | IMU, SLAM | Sensor data from urban environments, prescription maps for targeted spraying, real-time feedback dataset | Reduced chemical usage through optimized spraying; enhanced navigation accuracy during application in complex terrain | [151] |

| Application Scenario | Algorithms | Dataset | Reported Performance | References |
|---|---|---|---|---|
| Irrigation scheduling optimization | GWO | Hydrological datasets across Australia, U.S. Department of Agriculture Dataset | 92.3% | [160] |
| Flood detection and assessment | CNN, ANN, DBP | Social media data (visual and textual features) for flood detection and classification; remote sensing data (multispectral satellite imagery) for flood detection and mapping | 96.1% | [161] |
| Diagnosis of fertilizer overuse | Novel Multi-modal Fusion Network | Agrometeorological and image data collected in a village in Karaikal, India | 91% | [167] |
| Precise, tailored crop and fertilizer recommendations | Graph Convolutional FPMax Model, Recurrent FPMax Model | Data covering crops, soil, meteorology, and fertilizers | The accuracy of crop recommendations was improved by 4.9%; the accuracy of fertilizer recommendations was improved by 2.5 percentage points. | [168] |
| Hydrological time series forecasting | PSO, Bi-LSTM, Bi-GRU, Self-Attention | A hydrological dataset comprising 3652 daily discharge measurements across the Kızılırmak Basin, Turkey | Root Mean Square Error (RMSE): 0.085; Mean Absolute Error (MAE): 0.040; Coefficient of Determination (R²): 0.964 | [169] |
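
For readers comparing rows, the three error metrics named in the hydrological forecasting entry have the following standard definitions. The notation is an assumption introduced here, not taken from the source: $y_i$ is the observed value, $\hat{y}_i$ the prediction, $\bar{y}$ the mean of the observations, and $n$ the number of samples.

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^{2}},
\qquad
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|,
\qquad
R^{2} = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^{2}}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^{2}}
```

RMSE and MAE carry the units of the predicted quantity, so they are comparable across studies only when the targets share a scale; $R^{2}$ is dimensionless. The percentage figures in the other rows are reproduced as reported, and the source does not state which metric each of them represents.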

| Bottleneck | Problem Description | Proposed Solutions | Key Technologies |
|---|---|---|---|
| Data Heterogeneity | Multimodal data vary in structure, spatiotemporal resolution, and semantic meaning, making integration challenging. | Dynamic adaptive architectures; cross-modal causal reasoning | Meta-learning, memory-augmented networks, causal graphs |
| Real-Time Processing Bottlenecks | Limited computational resources on edge devices hinder high-frequency, real-time decision making in the field. | Dynamic computation frameworks; semantic communication; federated learning | Model compression, 6G semantic transmission, edge inference |
| Insufficient Generalization Capacity | Models perform poorly when transferred to new regions or crop types due to strong environmental variability. | Self-supervised pretraining; meta-learning | Large-scale unsupervised feature learning, task metadata adaptation |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, Z.-X.; Li, Y.; Wang, R.-F.; Hu, P.; Su, W.-H. Deep Learning in Multimodal Fusion for Sustainable Plant Care: A Comprehensive Review. Sustainability 2025, 17, 5255. https://doi.org/10.3390/su17125255
