Abstract

Background: Developing young people’s technical and Information and Communication Technology skills is an SDG 4 target (4.4), while the use of online educational material is a promoted tool to implement SDGs-related measures. Methodology: This is a case study exploring the complex role of ICT in teaching social sciences at a higher education institution using quantitative and qualitative research methods. Employing Urie Bronfenbrenner’s ecological systems theory, the research investigates how digital tools impact students’ academic performance across three courses with varying levels of ICT integration. The study evaluates students’ digital skills, compares the effectiveness of ICT-based and traditional teaching methods, and analyses how these methods influence the students’ comprehension of, and performance in, the subject matter. Results: The findings revealed that while certain ICT skills and competencies enhance students’ academic performance, students value ICT tools differently depending on the course’s subject matter and their own digital competencies. The research also highlights the importance of teachers’ ability to blend ICT and non-ICT activities effectively to enhance students’ understanding. Conclusions: The study contributes to the ongoing discourse on ICT in education, emphasising the need for a more nuanced, multi-layered approach to understanding the intersection between technology and education, particularly in non-technical fields like social sciences.

1. Introduction

Information and Communication Technology (ICT), an extension of the term Information Technology as we know it today, is a relatively new domain in human social development compared to our long anthropological evolution. ICT first emerged as a concept in mathematics, connected with computing and communicating information in the 1950s. Soon after, its use extended to private and public economic and administrative fields (military, research, diplomatic, etc.) [1,2,3]. Its huge potential for social and economic development made it spread quickly to other fields, such as health and education, though there is an innovation gap between the private and public sectors [4,5]. Unlike a century ago, we can no longer conceive of our personal, academic, and work lives without the use of ICT tools, at least in developed and many developing countries [6]. Despite little initial confidence that ICT would become part of educational facilities, and with COVID-19 accelerating the process, digitalisation has become the focus of educational institutions more than ever before, building what Manuel Castells calls an Information Society [7,8,9].

Current research shows that solely using ICT as tools mediating the process of reaching educational objectives may not have the same range of results as a set of educational tools and strategies chosen, adapted, and combined for specific classes and fields by a skilled teacher, or even using a student-centred approach as opposed to a teacher-centred approach [10,11,12]. The social component of learning is perhaps the main reason why, today, with all the knowledge and technology available to us, teaching is not an entirely self-conducted activity performed fully online, using only digital tools and content, and classes are not taught solely by relying on technology to mediate the transmission of content. Lev Vygotsky founded his theory on the use of linguistic communication between child and teacher to enhance understanding of scientific principles in an era without digital tools [13]. Additionally, Seymour Papert applies his principle to devise games with a social component that would implicitly teach children scientific principles of an abstract nature [14,15]. Moreover, other researchers, mentioned in the next section, state that digital platforms in learning may not be sufficient for an increase in knowledge acquisition or skill development, as success in using digital platforms in teaching to enhance students’ skills and/or knowledge may depend on the field being taught [16,17,18].

Research on methods to develop ICT skills in young students from countries or universities lacking an appropriate digital infrastructure and educational resources is scarce, while increasing the proportion of youth and adults alike worldwide with ICT skills is one of the critical targets of SDGs concerning the building of an inclusive and equitable education system [19,20,21]. This is the research gap that the current study sets out to address, as the research project is situated in a context meeting these conditions: a university social sciences program that historically underuses digital tools in teaching, and whose small size makes it prone to underinvesting in the development of the digital infrastructure and educational resources that would otherwise use and support more effectively the development of digital skills and competence of its students.

Accordingly, the objective set for our study is to investigate whether ICT-based pedagogical tools and methods in HE social sciences programs impact the academic performance of the students participating in these programs.

The research questions set for this study are as follows:

RQ1.

What is the relationship between students’ digital skills and their performance in class?

RQ2.

What is the relationship between the intensity of ICT use in teaching and the students’ seminar assignment and exam performance?

RQ3.

From the students’ perspective, which teaching tools are valuable for increasing their understanding?

2. Literature Review

2.1. The Benefits and Costs of Using ICT Tools in Education

The use of technology in class by students may have a distracting role for both students and teachers [22,23]. Even for the adult population, studies show that there is little transfer between domains for the cognitive abilities developed using digital devices such as games or brain teasers [24,25]. Higher education-based research findings indicate that the use of off-task digital tools impacts the performance on low-order tasks (e.g., knowledge, comprehension) but not on high-order tasks (e.g., essay writing), and that it affects the quality of note-taking and overall class performance [26,27].

In fact, studies investigating the effects of ICT in teaching on student learning, or of ICT in learning alone, state that a myriad of factors jointly shape student performance. These range from micro-level factors (e.g., the socio-economic status of the child’s family, the child’s intrinsic or extrinsic motivation) to exo factors (teacher’s attitudes towards ICT, school’s location and access to infrastructure and other teaching sources) and even to macro-level factors (the national or regional policy in areas affecting education, telecommunication infrastructure, GDP, and cultural and social values) [11,12,28,29,30,31,32,33,34,35]. The benefits for students exist if there are policies in place to motivate schools and train teachers to systematically incorporate ICT in teaching [3,29,35]. For example, in a large study that included 39 countries, Park and Weng [29] found a positive relationship between students’ PISA results and a country’s GDP per capita, as well as students’ interest, perceived ICT competence, and autonomy. Similarly, the authors of [36] found that the country’s ICT development level and personal use of ICT significantly predicted the academic performance in mathematics, science and reading of fourth and eighth-grade students. However, a further study of Spanish Autonomous Communities found that only PISA science scores improved as a result of a greater use of ICT in education, whilst the PISA scores in mathematics and reading decreased [12]. Additionally, the UN ICT and sustainable development goals (SDGs) unit mentions that access to ICT for education is a prerequisite for child development in an inclusive and equalitarian society, which testifies to the importance that ICT’s role in education has acquired globally [19]. Increasing the proportion of youth with ICT skills to offer them a better chance at decent working and living conditions and offering online materials to facilitate access to education in developing regions are important SDG 4 targets [20,21].

A literature review on the benefits of ICT in school shows that research is far from decisively concluding that ICT in education has a positive effect on students’ performance [16,21,27,37], and those positive effects found are mainly between the use of ICT and cognitive abilities in pre-K12 students [29].

The lack of conclusive findings may be due to the complex nature of the relationship between the adoption of technology by non-commercial fields, such as education, regulatory policy regarding ICT, and the digital maturity (DM) level of ICT users [34]. At the same time, technology adoption in institutions following a standardised framework is much slower than its adoption in private life, yet these effects can be confounding [38]. Additionally, the initial research on the effects of ICT on students’ abilities, performed over almost two decades to inform regulatory policies, research, and assessment frameworks, has become outdated because of the fast pace at which technology is taking over more aspects of our lives before it enters education. To counteract this aspect, Gibson [39], in a study that is part of the UNESCO SDGs 2030 initiative, recommends that steps be taken to align the frameworks of data collection tools on ICT in education. This would make its effects more comparable, and also more inclusive of all the factors that may influence students’ performance, such as country and school infrastructure and funding, teachers’ beliefs, learner-centred approaches, and mobile learning, which are elements of the student’s micro, meso, and macro systems [40].

2.2. A Multi-Layered Framework for a Sustainable ICT-Based Education

In the absence of a clear research framework for the complexity of ICT in education [39], this study proposes that the multi-layered reality of the adoption of ICT in education and its effects on students’ academic performance should be investigated using the ecological systems theory proposed by Urie Bronfenbrenner, applicable from human to systemic development.

His theory postulates several layers that interact and influence the measured behavioural outcome as follows [40,41]:

- The microsystem (items in direct proximity and interaction with the developing individual, e.g., a student’s own digital skills);

- The mesosystem (the interactions between two or more microsystems in which the individual is an active participant, e.g., teachers’ digital and/or pedagogical skills, the intensity of ICT use in class, for communication with teachers, administrators, and peers, etc.);

- The exosystem (the system which indirectly affects the individual’s development, though they are not an active participant or determinant in its activities);

- The macrosystem (items of influence over a larger group, such as political, educational, social systems, school size, educational programs, etc.);

- The chronosystem (important historical events which influence the dynamic of the setting in which the developing person is active, and can be normative or non-normative).

When analysing the ecology of ICT in teaching and its effect on students’ performance in this study, several factors from Bronfenbrenner’s theory are considered within the given design, in order to produce an accurate image of how the ICT systemic and individual factors interact to influence the efficiency of ICT in higher education (HE). Such an approach has been used by other researchers to investigate the digital disconnect phenomenon between home and educational institutions and its effect on children’s digital skills [41]. The presented studies are based on the premise that digital skills are continuously developing within an ecological system framework, with an input level of participants’ digital skills, school infrastructure, and teacher abilities that help students acquire knowledge and develop further their digital and reasoning abilities.

In the current case study, carried out in 2023, we focused on a Czech private higher-education institution that has bachelor’s and master’s programs only in the field of social sciences. Our aim is to investigate whether ICT tools in education impact the academic performance of students of social sciences.

In Section 3, we present the research objectives, the instruments and techniques used to collect the data and provide triangulation for specific assessments, the participants, and the procedure followed in this study. The results of the statistical and content analysis performed on the collected data are presented in Section 4 and discussed further against the reviewed literature in Section 5. We summarise our findings and their contribution to research and practice in Section 6. Section 7 addresses the limitations of the study, so that future research can use designs that reduce them.

3. Materials and Methods

While there is a plethora of taxonomies of digital skills aligned with specific computer applications, or clusters of digital skills later defined as digital competences with levels of proficiency [42,43,44,45,46,47], in the last decade international governmental institutions, such as the European Union and United Nations, have allocated grants for the development of several select platforms that outline digital skills more consistently with the current use of ICT in education and employment [48]. For our study we selected an ICT assessment tool (see Section 3.2) which is aligned with the UNESCO SDGs standards, the National Educational Technology Standards [48,49], and the ECDL criteria used to assess ICT users [50], aiming to make our results more comparable with those of other researchers.

3.1. Research Objectives

As mentioned in the Introduction, the aim of our study is to investigate whether ICT-based pedagogical tools and methods in HE social sciences programs impact the academic performance of students participating in these programs.

The study uses a combination of methods in an overall case study, using both quantitative (structured questionnaires and experimental interventions) and qualitative (qualitative course feedback) methods, to understand whether introducing ICT in teaching non-technical subjects (social sciences—such as Economic Policies, Global Economy Studies, and Political Economy of the EU) can increase the academic performance of students in the included courses.

These subjects are taught traditionally without the aid of any applications, or with the minimum use of MS Office apps (PowerPoint presentations for the lecturer, MS Outlook and/or email, and MS Teams for teacher–student communication, along with MS Word and PowerPoint for seminar assignments). The pedagogical intervention in the project consists of the following:

- Using applications and sites appropriate for the course content in the delivery of the course material and basing the seminar exercises on the functionalities of ICT resources.

- Requiring class assignments that are based on the use of specific course-appropriate applications, databases, and software, and that make use of the ICT competences (creativity and innovation, communication, technology and operations concepts and skills, critical thinking and research planning, digital citizenship, research and information fluency) that are part of the ISTE-based maturity model (ICTE-MM) used in [48,49].

3.2. Data Collection

This is a case study that uses a combination of research methods, qualitative and quantitative, for data collection and analysis. The following methods and instruments were used for data collection:

- The assessment of the students’ digital skills used a comprehensive Information Technology Self-Assessment Tool developed in 2009 by Virginia Niebuhr, Donna D’Alessandro and Marney Gundlach (researchers at the University of Texas Medical Branch). This tool was developed as part of the Education Technology for the Educational Scholars Program at the Academic Paediatric Association, and its authors created it as an adaptable tool for various fields of activity. It covers 13 areas of competencies, which are aligned with Van Deursen and Van Dijk’s digital skills framework [45]. The original assessment tool is retrievable from https://www.utmb.edu/pedi_ed/ADAPT/Toolbox/Information%20Technology%20Self-Assessment%20Tool.doc (accessed on 20 September 2020).

- The seminar assignments were assessed using the ISTE criteria [48,49], which are aligned with the questionnaires’ constructs on digital competencies.

- Exam grades from the courses’ oral examination were included as relevant indicators of the students’ ability to assimilate and evaluate course-relevant material delivered using ICT tools and traditional methods.

- At the end of each course examination, which was performed orally for the purpose of this research, each examined student was asked about the teaching methods—digital or traditional—that were helpful for them to comprehend the course material. This allowed the extraction of qualitative material from a total of 33 students, for three courses, but with a total of 55 responses as feedback, with some students having attended two or all three courses.

- Individual semi-structured interviews were performed with 11 students to collect qualitative data on the use and usefulness of ICT tools in teaching and communication with the teachers, school administration, and peers.

3.3. Participants

The participants in this study were students of a small-sized higher education institution (HEI) majoring in economics, political science, or a combination of these fields. At the time of data collection, all students were enrolled in classes taught by one author of this study. In the intended sample of 50 students who were officially enrolled in the three courses included in this study, several did not attend the classes, while some took more than one class, leaving a total of 45 students who took the final examination in the classes they attended. Of these 45 students, 17 were male and 28 were female. Due to withdrawal, late or no submission of the seminar assignment, and/or no exam participation, not all registered students were included in all the measurements. Of the 45 students, 29 were evaluated in Course 1, 13 in Course 2, and 17 in Course 3; these counts sum to more than 45 because some students took more than one course. The demographics of the participants are presented in Table 1.

Table 1.

Student participants by course and gender.

3.4. Procedure and Ethical Considerations

As presented in Table 2, the materials for the three classes taught by one of the paper’s authors were designed to have different proportions of ICT tools used in teaching and seminar activities. The number of taught classes was 12 for Course 1 (Political Economy of the EU), and 24 (12 lectures and 12 seminars) for the other two courses (Global Economy Studies—Course 2, and Economic Policies—Course 3).

Table 2.

Techniques and tools used in teaching the courses included in the study.

All lectures and seminars for all courses made use of ICT and non-ICT resources and activities. All three assignments involved using digital tools, both provided in class and researched independently, filtering, analysing, and structuring data. Furthermore, the assignment made use of critical thinking to identify relevant information, integrate it to meet the requirements, and provide a coherent explanation for the presented content.

Qualitative feedback on the teaching tools and methods used was sought from each examined student after the teacher had entered the grade in the school information system (IS), to avoid increased demand characteristics. Quantitative feedback on the digital maturity of the class (DMC) and digital maturity of the HEI (DMHEI) was given in questions 8–9 of the semi-structured interviews (the full qualitative study, which includes a questionnaire with students, school managers, and IT administrators, is not included in this study report).

Content analysis was performed on the qualitative material from the course feedback.

The questionnaires based on ICTE and ISTE criteria were created in Google Forms and were distributed via email to all participants, and, additionally, via the course MS Teams channels to students.

All questionnaires had an answering scale from 1 to 5, 1 being the lowest score and 5 being the highest score for the measured construct or variable.

The quantitative data was analysed statistically using SPSS v 28 licensed software.

The study was performed following the British Psychological Society (BPS) ethical rules of conduct in research.

In the first class of each course, students were informed about the research and about their rights as participants, and were asked to complete the questionnaires online in class. Participants’ informed consent was explicitly requested in the questionnaire heading and granted to the researchers upon submission of the questionnaire.

4. Results

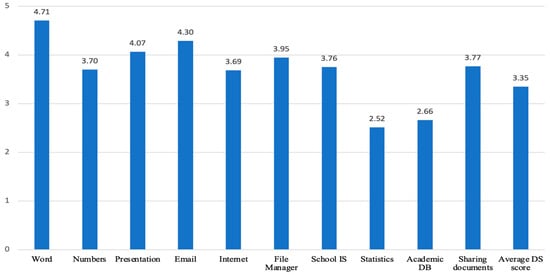

For RQ1, descriptive statistics of digital skills for the entire sample completing this questionnaire (N = 22) (Figure 1) indicate that students’ greatest strengths in digital skills are in word processors (mean 4.71), email (mean 4.3), and presentation creators (mean 4.07). Their greatest skill gaps are in statistical analysis applications (mean 2.52) and academic DB searches (mean 2.66).

Figure 1.

Self-reported digital skills per application’s area of functionality.

Independent-samples t-tests showed no significant differences between female and male students, though in the critical aspect of academic DB searches, the mean scores of female students were lower than those of male students by about one standard deviation (2.24 vs. 3.02, SD = 0.76). With equal variances not assumed, the test showed a statistically significant difference between female and male students on this criterion, t(20) = −2.012, p = 0.029, but the 95% confidence interval includes zero and so does not confirm this significance (95% CI [−1.58; 0.29]).
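To make the unequal-variance comparison concrete, the following minimal sketch computes Welch's t statistic and the Welch-Satterthwaite degrees of freedom using only the Python standard library. The function name `welch_t` and the two lists of ratings are hypothetical illustrations, not the study's data, and the study itself used SPSS rather than this code.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, df) for Welch's unequal-variance t-test on two samples."""
    na, nb = len(a), len(b)
    # Squared standard error of each group mean (sample variance / n)
    va, vb = variance(a) / na, variance(b) / nb
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df


# Hypothetical 1-5 self-ratings for academic DB searches (NOT the study's data)
female = [2, 2, 3, 1, 2, 3, 2, 3]
male = [3, 4, 2, 3, 3, 4]

t, df = welch_t(female, male)
print(f"t = {t:.3f}, df = {df:.1f}")
```

A negative t here means the first group's mean is lower than the second's. The p-value and confidence interval would then come from the t distribution with `df` degrees of freedom, which a statistics package provides.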

There were no significant correlational relationships between self-reported digital skills and course performance found for Course 2.

The figures in Table 3 show that the word processor and email communication proficiencies were significantly correlated with Course 1 assignments and exams, with a medium-to-strong positive relationship at a significance level of p < 0.05. Email and school IS proficiency have a medium-to-strong positive and statistically significant correlation with the exam score in Course 3, at p < 0.05.

Table 3.

Significant correlations between self-reported digital skills and course performance.
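As an illustration of the kind of bivariate correlation summarised in Table 3, the sketch below computes Pearson's r and the t statistic commonly used to test it, t = r·sqrt((n − 2)/(1 − r²)). The function names and the skill and exam values are hypothetical. The source does not state which correlation coefficient was used, so Pearson's r is an assumption here.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * sqrt((n - 2) / (1 - r ** 2))


# Hypothetical data (NOT the study's): 1-5 email self-rating and exam score
email_skill = [5, 4, 5, 3, 4, 5, 2, 4]
exam_score = [88, 75, 90, 60, 70, 85, 55, 80]

r = pearson_r(email_skill, exam_score)
print(f"r = {r:.2f}, t({len(email_skill) - 2}) = {r_to_t(r, len(email_skill)):.2f}")
```

With small samples such as those in this study, a fairly large r is needed before the t statistic exceeds the critical value at p < 0.05.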

Linear regression models were performed for all courses to investigate the predictability of the measured digital skills of students on their academic performance, measured in assignment and exam scores. Table 4 presents several results of linear regression modelling run on each course. Other regression models, with different combinations of digital skills, were not found to be statistically significant predictors for either the assignment or the exam score.

Table 4.

Linear regression modelling results.

The digital skills–digital competences relationship was investigated only using correlational analysis. Table 5 shows that for Course 1, the analysis found strong, significant correlations between word processing proficiency on one side, and research and information fluency (RIF) along with critical thinking (CT) on the other side. Similarly, medium significant correlations were found between word processor, communication and collaboration (CC), digital citizenship (DC), and technology and operational concepts (TOCs).

Table 5.

Correlation coefficients between ICTE-MM criteria and self-reported digital skills.

Number processor skills were found to have a medium significant correlational relationship with TOCs for Course 1, and a strong significant correlational relationship with TOCs for Course 3.

Email skills were found to have a medium significant correlational relationship with creativity and innovation (CI), CC, and CT for Course 1.

School IS skills were found to have a medium significant correlational relationship with CI for Course 3, though the confidence intervals marginally disprove the significance of this relationship.

There was no significant correlation found between self-reported digital skills and assessed digital competences for Course 2.

For RQ2, several statistical tests were performed, such as mean comparison, linear regressions, and bivariate correlations between the ICTE-MM factors and assignment and exam scores.

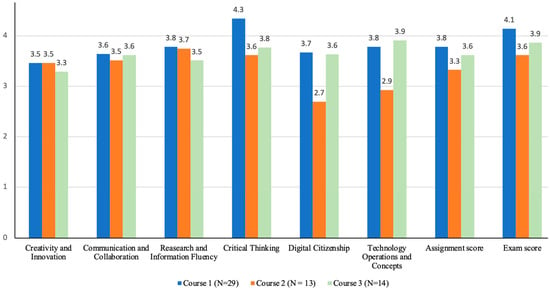

The means of the digital competences measured in the course assignments for each domain, together with the exam scores in each course, are presented in Figure 2. In Course 1, students’ assignments showed higher levels of competence in all ICTE-MM fields than in the other two courses. Assignment scores were thus higher in Course 1, which had a wider variety of ICT and non-ICT teaching tools, than in Course 2, where students had to rely mostly on the data sites provided in class and invest effort in searching further independently for statistical data to produce a good assignment. Similarly, scores in Course 3 were superior to those in Course 2. In Course 3, independent research was needed aside from the course information, but the assignment required less statistical data and more policy-based information than in Course 2, and policy-based information is easier to evaluate and use at this educational level than statistical data. These differences in the competences used for the assignments may also explain the lower DC and TOC mean scores and the higher RIF mean score in Course 2 compared to the other courses.

Figure 2.

ICTE-MM digital competences measured in course assignments and exam scores.

Linear regressions with all ICTE-MM criteria as predictors of exam scores showed that for Course 1, CT was a significant predictor of the exam score (t = 2.5, p = 0.02), with F(6,22) = 12.455, p < 0.001; together, the digital competence criteria explained 77% of the variance in the exam score.

For Course 2, DC was a significant predictor of the exam score (t = 2.95, p = 0.03), with F(6,6) = 5.842, p = 0.025; together, the digital competence criteria explained 85% of the variance in the exam score.
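The reported F statistics and explained percentages can be cross-checked against each other. Assuming the percentages are the unadjusted R² of an ordinary least squares model with an intercept (the source does not say so explicitly), the identity F = (R²/k) / ((1 − R²)/df₂) rearranges to R² = F·k / (F·k + df₂). The helper name below is hypothetical.

```python
def r_squared_from_f(f_value, df_model, df_resid):
    """Recover unadjusted R^2 from an overall regression F test.

    Assumes an OLS model with an intercept, where
    F = (R^2 / df_model) / ((1 - R^2) / df_resid).
    """
    return f_value * df_model / (f_value * df_model + df_resid)


# F statistics and degrees of freedom as reported in the text
reported = [("Course 1", 12.455, 6, 22), ("Course 2", 5.842, 6, 6)]

for course, f_value, k, df2 in reported:
    r2 = r_squared_from_f(f_value, k, df2)
    n = df2 + k + 1  # sample size implied by the residual degrees of freedom
    print(f"{course}: R^2 = {r2:.2f}, implied n = {n}")
```

Under that assumption, the recovered values round to 0.77 and 0.85, in line with the percentages given in the text, and the implied sample sizes (29 and 13) match the numbers of students evaluated in Course 1 and Course 2 in Section 3.3.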

There were no statistically significant results for linear regression tests between ICTE-MM criteria and exam scores for Course 3.

The digital maturity of the class (DMC) is medium to strongly negatively correlated with the assignment score and the RIF score in Course 1 and Course 3, and with CC in Course 1 (Table 6). These correlations are based on small samples (N = 10 for Course 1 and N = 5 for Course 3), which reduces their relevance. However, the fact that students systematically rated the author’s classes with a higher digital maturity than that of other classes or the institution as a whole is indicative that the interventions performed in these classes are noticeable and beyond the institution’s norms or average practices on the use of ICT tools in teaching. In the semi-structured interviews, questions 8–9 asked the interviewees to evaluate the DMHEI and DMC on a scale from 1 to 5, 1 being the lowest rating. The average DMC was 4.36 (SD = 0.5) while the average DMHEI was 3.64 (SD = 0.71), indicating that the included classes used more and/or more effective ICT tools in teaching compared to other teachers from the HEI considered by students in their evaluations.

Table 6.

Correlation coefficients between ICTE-MM criteria, exam scores, and reported DMHEI and DMC.

The digital maturity of the institution, as rated by the interviewed students, is highly correlated with the digital maturity of the classes taught by the author, which indicates the reliance of the teaching methods and digital tools employed in class on the institution’s digital infrastructure, resources, and processes.

For Course 1, all the ICTE-MM criteria showed medium to strong positive correlations with the exam score, with all but one item at a high significance level (p < 0.001).

For Course 2, the CC, RIF, and critical thinking (CT) items showed medium positive correlations with the exam score at p < 0.05.

The figures for Course 3 show similar small-to-medium positive correlations with the exam scores in the same areas as Course 2, in addition to the creativity and innovation (CI) item, also at p < 0.05.

We extracted additional meaning regarding the teaching exercise involving ICT tools from the content analysis of the qualitative data.

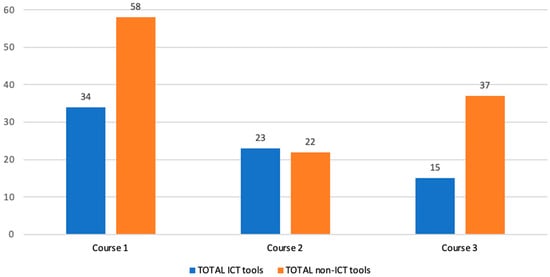

To address RQ3, using content analysis on the course feedback given after the oral examination, the frequency of occurrence of the methods used in teaching was recorded. Figure 3 shows the split between ICT and non-ICT tools used in teaching that were mentioned in the course feedback by students.

Figure 3.

Frequency of occurrence of teaching tool type in course feedback, per course.

As shown in Figure 3 and Table 7, and reported in exam feedback, Course 1 students mentioned almost twice as many non-ICT tools as ICT tools as being helpful in their learning. The most memorable activities for students were debates, presentations, and the negotiation/voting simulation exercise (which made use of an ICT tool—the voting application—but which was counted as a non-ICT practical method). For Course 2, the difference between the two types was insignificant, with site links, debates, and other non-ICT resources receiving the most mentions.

Table 7.

Frequency of occurrence of ICT and non-ICT teaching tools/methods per course, reported in exam feedback.

For Course 3, twice as many non-ICT tools as ICT tools were mentioned, with the most mentions received by the other non-ICT group, followed by debates.

5. Discussion

The interpretation of the quantitative results will be aided by the findings from the qualitative research, while comparing the results with both empirical and theoretical research. It is important to mention that the interpretation of the results aims to reduce subjectivity through the triangulation of the data. For this purpose, to reduce the teacher bias in evaluating the seminar assignments, all assignments submitted in the form of a presentation on a course topic were also separately assessed by a second teacher at the same HEI, and the evaluations were introduced in SPSS to calculate the coder agreement rate (Cohen’s Kappa score for the 16 criteria was between 0.707 and 0.946).
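For readers unfamiliar with the agreement statistic mentioned above, the following minimal sketch computes an unweighted Cohen's Kappa for two raters scoring the same items on one criterion. The function name and the ratings are hypothetical, and whether the study used the unweighted or a weighted variant is not stated in the source; the study computed its values in SPSS.

```python
from collections import Counter


def cohen_kappa(rater1, rater2):
    """Unweighted Cohen's Kappa for two raters scoring the same items."""
    n = len(rater1)
    # Observed agreement: share of items given the same score by both raters
    observed = sum(a == b for a, b in zip(rater1, rater2)) / n
    # Chance agreement: product of each rater's marginal proportions per score
    c1, c2 = Counter(rater1), Counter(rater2)
    expected = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical 1-5 scores on one criterion (NOT the study's data)
teacher = [5, 4, 4, 3, 5, 2, 4, 3, 5, 4]
second_assessor = [5, 4, 3, 3, 5, 2, 4, 3, 4, 4]

print(f"kappa = {cohen_kappa(teacher, second_assessor):.3f}")
```

Kappa equals 1 for perfect agreement and 0 when agreement is no better than chance, so values between 0.707 and 0.946 indicate agreement well above chance on every criterion.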

5.1. Discussion of Findings Against Empirical Research

Supporting existing research [29,30,51,52], the results from the self-reported digital skills questionnaires and the students’ performance in the three courses show that self-perceived ICT competency and ICT used in teaching are positively linked to students’ performance in certain areas (word processors, email, and school IS). Additionally, the differences between the courses’ content were reflected in the type of assignments received and the information students needed to master in the oral exam for each of these courses. Therefore, with the same level of digital skills, as an average over the group, they handled these requirements best in Course 1, where ICT was most used and in more activities, even though it consisted of fewer lectures and seminars. This is followed by Course 2, in which their strengths (word processors, email, presentations, and school IS) were weighted more in the assignment and exam. In Course 3, their gaps in skills (statistical analysis and academic DB search) were weighted more for course performance. The gender difference should also be noted, as even in developed countries, female students appear to score higher on gender-stereotypical digital competences (communication-oriented), compared to investigative areas (scientific database search). Alternatively, the gender difference may only reflect the gender-stereotypical level of self-esteem [8].

The medium-strong positive correlations between communication-based digital skills proficiency and assignment and/or exam scores in Course 1 and Course 3 suggest that online and in-person communication were essential for students’ understanding. Students were also able to take notes and use them effectively later, clarify requirements with teachers and peers, and express their understanding. These findings support prior research on the mediating role of ICT in teaching and on the benefits of a student-centred approach to teaching compared to a teacher-centred approach [10,11,32]. They may also suggest that the ability to locate study materials and information about class activities, submit one’s assignments according to requirements and on time, and communicate with teachers and peers for class purposes are all similarly essential for class performance. These findings also align with Waite [26], who found that the use of ICT tools in teaching helps with material comprehension. Students can also use these skills effectively if the institutional and class environment allows it, as part of the systemic influence of ICT tools on learning (cf. [16,29,32,36,39,40,41]). As reported by students in qualitative feedback and semi-structured interviews, being able to pay attention to teachers in class makes them less likely to become distracted by the digital tools used for note-taking during class, and the notes can be transferred into digital form at home, an aspect also reported in [22,27]. At the same time, effective use of digital communication, whether alongside offline communication or during online streaming, helps students quickly clarify information with teachers and peers and absorb the material while it is still fresh.
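To make the kind of association discussed above concrete, the sketch below computes a rank correlation between self-reported skill ratings and exam scores. This excerpt does not restate which coefficient was computed; the sketch assumes a Spearman rank correlation, a common choice for ordinal self-ratings, and all names and values (`skill_ratings`, `exam_scores`) are hypothetical rather than taken from the study.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equally long numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("Need two sequences of equal length with at least 2 items.")
    mean_x, mean_y = mean(x), mean(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    spread_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    if spread_x == 0 or spread_y == 0:
        raise ValueError("Correlation is undefined when one variable is constant.")
    return covariance / (spread_x * spread_y)


def spearman(x, y):
    """Spearman correlation: Pearson correlation applied to the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: self-rated communication skills (1-5) and exam scores.
skill_ratings = [3, 4, 2, 5, 4, 1]
exam_scores = [70, 85, 60, 90, 80, 55]
rho = spearman(skill_ratings, exam_scores)
```

With samples as small as those in a single course, a coefficient of this kind is best read as descriptive, which is consistent with the cautious interpretation given in the text.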

School IT infrastructure was also a contributing factor to students’ performance, as noted by Graham and Cullinan [33,53], and should be considered a critical factor for students’ academic performance, especially in developing countries where the infrastructure is still developing. Policymakers must take note of the positive role of school infrastructure that gives both students and teachers opportunities to access and use up-to-date and diverse materials and applications in learning and teaching. Having a Wi-Fi connection in the school and on their devices, as well as an online course streaming platform, also allows students to search for information instantaneously, connect to classes remotely, and miss less than they would if they were absent. In-person communication with the teacher was also mentioned as being refreshing at the school, a result of the HEI being small. In larger HEI classes, teachers are reported as “always on the move”, which hinders the effective personal teacher–student communication that can make students more receptive to the information. These factors are indicative of the positive effect of synchronous social interaction with a teacher, and of the role of exo- and mesosystem factors in students’ performance, supporting the use of Bronfenbrenner’s ecological systems theory when analysing the complex role of ICT in education (cf. [13,16,30,40,51]).

Furthermore, as previously suggested by Alshammary and Kim [16,17], the study showed that simply using more ICT tools does not always improve class performance, and that the digitalisation of teaching and learning is a complex issue involving institutional policies towards ICT adoption, teachers’ pedagogical and ICT skills, and students’ own motivation to learn (cf. [4,8,11,16,30,51]). This complex picture is revealed when ICT tools are used in a wider range of diverse activities, including practical exercises, which make use of the students’ digital skills strengths. In these situations, we observed an increase in students’ class performance. Courses 2 and 3 had the same range of ICT tools used in class and required in seminar assignments, yet the differences in the type of digital skills used made the difference between the scores students received in seminar assignments. Moreover, the ICT tools used in class in Course 2, in addition to the students’ own digital skills, seem to have impacted their digital citizenship score, making it a reliable predictor for the exam score. In Course 1, though, critical thinking was found to be a reliable predictor for the exam score, indicating that perhaps the larger range of ICT and non-ICT tools used in teaching increased the students’ ability to effectively evaluate and integrate information and perform better in the exam. The findings, however, indicate that students cannot consistently transfer digital skills to different domains, suggesting they do not have mature digital competences, and supporting the studies of Simons and Souders [24,25].

In answer to RQ1 and RQ2, our study found that, for each course, different ICTE-Maturity Model (ICTE-MM) competences correlated strongly and positively with the exam score, indicating that the assignments and the exams required more from the students than memorising facts and figures, for example critical thinking, communication skills, and research fluency. In answer to RQ3, our findings suggest that the more numerous and diverse the ICT and non-ICT tools used in class, the more of the ICTE-MM key areas students used in class and exams, supporting research that showed positive effects of ICT in teaching on students’ developed abilities (cf. [16,30,52]). Additionally, students mentioned more non-ICT tools in Courses 1 and 3 as being useful in their overall learning, and they positively evaluated the role of class dynamics and teachers’ approach to communication. We can infer from the data in this case study that ICT tools can help develop skills and increase class performance in students, but only in conjunction with good teacher–student communication (ICT-mediated or in-person) and appropriate pedagogical skills.

A more unusual finding was the strong negative correlation between the DMC and ICTE-MM competencies in Course 1 and Course 3. These findings are difficult to interpret reliably because of the small sample size and because we do not know how students understand the concept of digital maturity. However, they may roughly suggest that students with higher-than-average digital skills also have higher expectations of the institution’s and class’s DM, leading to lower ratings for the school and class compared to their own skills. Similarly, students with lower-than-average digital skills have lower expectations of the institution’s and class’s DM, leading to higher ratings for the school and class compared to their own skills. In other words, students compare the institution’s and class’s digital maturity with their own digital skills. These inferences are also based on the results of the DM rating for the school, as evaluated by the managerial, administrative, academic, and IT staff. The managerial, administrative, and teaching staff mirror the estimates of students in the included classes, overestimating the DM of the HEI compared to the results based on qualitative data (interviews, observations, and assessments) collected by the researchers. However, the IT department estimated the DM of the HEI as being at the same level as inferred by the researchers from additional qualitative data, which were not included in the results here. It is important to mention this aspect, as the digital skills and competences of the evaluator have a clear influence on the evaluation of the DM of the HEI and classes, with these two levels of competences (of the evaluator and the HEI/class) seemingly going in opposite directions for evaluators with non-technical expertise (e.g., administrative staff compared to IT staff).

5.2. Discussion of Findings Against Theoretical Perspectives

The field of the HEI is also important for the range of ICT tools that can be employed to convey the subject matter to students and, similarly, for attracting teaching and non-teaching staff who are capable of innovating the school’s activities by incorporating more ICT tools. Tegmark, in the mathematical universe hypothesis [54], proposes that the further we go towards social sciences, the more interpretative language we use to explain the field’s subject matter, as opposed to objective mathematical structures. Thus, the range of ICT tools used in education for natural science fields (drill and practice programs, tutoring systems, intelligent tutoring systems, simulations, and hypermedia systems) is broader than for social sciences, where teachers can generally aim at most to use ICT to engage students in social and civic activities discussed and critically debated in person, or to use ICT for information searches [55,56]. As a consequence, in social sciences, an HEI’s range of ICT tools in teaching is reduced mostly to digitally stored interpretative content (e.g., hypermedia systems, presentations, videos, pictures, etc.), and has less available algorithm-based data/information (e.g., interactive graphs, live maps, simulations, etc.). Thus, the HEI’s size and its non-technical field constitute influential factors in formally and consistently adopting (or delaying the adoption of) ICT in teaching, infrastructure, and school administration activities, which supports the findings of prior research [56,57,58]. The pedagogical interventions in the three classes included in this study were noticeable to students precisely because they introduced ICT in a manner and to an extent that differed from the HEI’s norms and average practices, and this novelty in the field could have contributed to higher student engagement in classes.

All these findings can be useful for teachers in developing countries that implement UN SDG programs in the fields of education and technology improvement. A parallel can be drawn between educational institutions or programs (such as social sciences) in developed countries with low implementation of technology-based teaching tools and methods, and newly built educational institutions in developing countries that are slowly implementing ICT infrastructure and skills in teaching any type of subject. Both sides of this comparison rely on the (incipient) technical skills of students and teachers, as well as on an infrastructure that may not be accessible to all students alike and usable for each subject to the same degree [53,59]. Thus, the role of the teacher in adjusting the teaching material and methods to make the best use of the students’ skills remains crucial to the academic performance of their students, in order to increase their competencies in an increasingly technologised world.

In an integrative manner, using ecological systems theory, we can interpret the findings from all these sources as the interaction between the students’ microsystem and the teacher’s microsystem through their own digital skills, communication skills, and pedagogical skills (teachers only), within the meso- and macrosystem offered by the infrastructure and processes established at the HEI level. These interactions give rise to the proximal processes, which can nurture or hinder students’ learning activity and their academic performance (cf. [40,41]). Thus, evaluating the ICT tools used in teaching in isolation cannot offer an accurate image of what factors influence students’ performance. An integrated evaluation of ICT, non-ICT tools, and students’ and teachers’ skills and competences provides a more accurate image of the role of ICT in HEI, especially when the subject matter is dynamic and prone to interpretation, as is the case with specific fields of social sciences (cf. [8,28,38,39]). It is also important to look at this topic in a systemic manner, as we integrate more skills and competences into our human activity to meet sustainable development goals that were set only in the last three decades and which we will need to constantly (re)evaluate and adapt in a reliable and realistic manner.

6. Conclusions

This study demonstrates that the integration of ICT in social sciences education is a nuanced process that significantly influences students’ academic performance, but its impact is closely tied to the context in which it is used. Our research shows that while ICT tools can enhance learning, their effectiveness depends on how well they are integrated with traditional teaching methods and how they align with students’ existing digital skills. The study confirms that the role of the teacher remains crucial, not just in guiding the use of technology, but in maintaining a balance between digital and non-digital teaching strategies to optimise learning outcomes.

The findings also suggest that the field of study (social sciences in this case) and the institutional environment significantly influence the adoption and success of ICT in education. Students benefit most when ICT tools are used in a manner that complements their learning styles and when teachers are adept at blending these tools with traditional methods. This research underscores the importance of developing a more integrated and flexible approach to ICT in education, one that considers the complex interplay between technology, pedagogy, and student capabilities.

Policymakers setting sustainable development goals need to constantly monitor and adapt to the dynamic interplay between technological development and human adaptability to new technology, while not ignoring the important role of economics in deciding who can access new technology (and how fast) to fuel social development. Our study highlights the complexity of this matter while providing insights into how even students from programs or universities with lower digital endowment can perform better when the available human and other resources are appropriately tuned to provide the Vygotskian scaffolding necessary for the improvement of their academic performance.

7. Limitations and Further Research

The study’s limitations relate to the small sample size (only 45 individuals) and the use of self-reports, which we attempted to mitigate by triangulating data on students’ performance and digital skills and by including multiple measures on overlapping groups of students. Since this is a case study performed on three groups of students from a small university with specific social sciences programs, the findings cannot be generalised to larger institutions and/or other study programs. In future research, we will attempt to use a larger student sample, include more courses from social sciences as data sources, and compare the students’ performance with that of control groups of students in classes taught by teachers using only conservative teaching methods.

Future research should focus on expanding the sample size, including more courses, and comparing results across different types of educational institutions. Such studies could further clarify the relationship between ICT use and academic performance, providing more generalisable insights that could inform educational policy and practice in a variety of contexts, particularly for the sustainable development goals that are part of the political agenda of regional and global policymakers, such as the UN and the European Commission.

Author Contributions

Conceptualisation, E.M. and M.M.; methodology, E.M. and M.M.; validation, E.M., T.K. and M.M.; formal analysis, M.M.; investigation, M.M.; resources, M.M. and E.M.; data curation, M.M.; writing—original draft preparation, E.M. and M.M.; writing—review and editing, E.M., M.M. and T.K.; visualisation, M.M.; supervision, E.M.; project administration, E.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

All the informed consent forms and the codes of ethics of the Czech Ministry of Education and of the University of Hradec Kralove were sent on 5 March 2025, before the study entered the peer-review process.

Informed Consent Statement

The study followed the British Psychological Society’s code of ethics for research with human participants. Informed consent was obtained from all human subjects involved in the study, as described in the section on the study procedure, though the Ethical code for research of the Czech Ministry of Education (https://msmt.gov.cz/file/35780_1_1/, 9 May 2025) specifies that research studies that involve anonymous collection of data or research on educational methods are exempted from Informed Consent for Research. A formal ethical review and approval were waived for this study due to the fact that approval from the Ethical Committee of the University of Hradec Kralove is not needed for students’ research projects conducted as part of their final thesis.

Data Availability Statement

Due to the confidentiality of the data collected from the HE institution, the data are not readily available for other purposes outside the scope of this research study.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CC | Communication and Collaboration |
| CI | Creativity and innovation |
| CT | Critical thinking |
| DC | Digital citizenship |
| DM | Digital maturity |
| DMC | Digital maturity of the class |
| DMHEI | Digital maturity of the HEI |
| ECDL | European Computer Driving License |
| EU | European Union |
| HE | Higher education |
| HEI | Higher education institution |
| ICT | Information and Communication Technology |
| ICTE | Information and Communication Technology in Education |
| ICTE-MM | ICTE-Maturity Model |
| IS | Information system |
| ISTE | International Society for Technology in Education |
| PISA | Programme for International Student Assessment |
| RIF | Research and information fluency |
| SDGs | Sustainable development goals |
| TOCs | Technology Operations and Concepts |
| UN | United Nations |
| UNESCO | United Nations Educational, Scientific and Cultural Organisation |

References

1. Kline, R.R. Cybernetics, management science, and technology policy: The emergence of “Information Technology” as a keyword, 1948–1985. Technol. Cult. 2006, 47, 513–535.
2. Spariosu, M.I. Information and communication technology for human development: An intercultural perspective. In Remapping Knowledge: Intercultural Studies for a Global Age; Berghahn Books: New York, NY, USA, 2006; pp. 95–142. Available online: https://www.jstor.org/stable/j.ctv3znztw.6 (accessed on 14 March 2021).
3. Haddad, C.R.; Nakić, V.; Bergek, A.; Hellsmark, H. Transformative innovation policy: A systematic review. Environ. Innov. Soc. Transit. 2022, 43, 14–40.
4. Suchitwarasan, C.; Cinar, E.; Simms, C.; Demircioglu, M.A. Innovation for sustainable development goals: A comparative study of the obstacles and tactics in public organizations. J. Technol. Transf. 2024, 49, 2234–2259.
5. Hinkley, S. Technology in the Public Sector and the Future of Government Work. UC Berkeley Labor Center, 10 January 2023. Available online: https://laborcenter.berkeley.edu/technology-in-the-public-sector-and-the-future-of-government-work/ (accessed on 22 May 2023).
6. ITU. Measuring Digital Development: Facts and Figures 2022. International Telecommunication Union, 2022. Available online: https://www.itu.int/itu-d/reports/statistics/facts-figures-2022/ (accessed on 22 May 2023).
7. Chandi, F.O. ICT in Education: Possibilities and Challenges. Universitat Oberta de Catalunya, October 2004. Available online: https://www.academia.edu/1006536/ICT_in_education_Possibilities_and_challenges?auto=citations&from=cover_page (accessed on 7 April 2022).
8. Timotheou, S.; Miliou, O.; Dimitriadis, Y.; Sobrino, S.V.; Giannoutsou, N.; Cachia, R.; Monés, A.M.; Ioannou, A. Impacts of digital technologies on education and factors influencing schools’ digital capacity and transformation: A literature review. Educ. Inf. Technol. 2022, 28, 6695–6726.
9. Garnham, N. Information society theory as ideology: A critique. Loisir Soc. Leis. 1998, 21, 97–120.
10. Islam, K.; Sarker, F.H.; Islam, M.S. Promoting student-centred blended learning in higher education: A model. E-Learn. Digit. Media 2021, 19, 36–54.
11. Abedi, E.A. Tensions between technology integration practices of teachers and ICT in education policy expectations: Implications for change in teacher knowledge, beliefs and teaching practices. J. Comput. Educ. 2023, 11, 1215–1234.
12. Fernández-Gutiérrez, M.; Gimenez, G.; Calero, J. Is the use of ICT in education leading to higher student outcomes? Analysis from the Spanish Autonomous Communities. Comput. Educ. 2020, 157, 103969–104007.
13. Vygotsky, L. Thinking and Speech. 1962. Available online: https://www.marxists.org/archive/vygotsky/works/words/Thinking-and-Speech.pdf (accessed on 23 September 2020).
14. Tsalapatas, H. Programming games for logical thinking. EAI Endorsed Trans. Game-Based Learn. 2013, 1, e4.
15. Harel, I.; Papert, S. Constructionism; Ablex Publishing Corporation: New York, NY, USA, 1991.
16. Alshammary, F.M.; Alhalafawy, W.S. Digital platforms and the improvement of learning outcomes: Evidence extracted from meta-analysis. Sustainability 2023, 15, 1305.
17. Kim, S.; Chung, K.; Yu, H. Enhancing digital fluency through a training program for creative problem solving using computer programming. J. Creat. Behav. 2013, 47, 171–199.
18. Fatmi, N.; Muhammad, I.; Muliana, M.; Nasrah, S. The utilization of Moodle-based learning management system (LMS) in learning mathematics and physics to students’ cognitive learning outcomes. Int. J. Educ. Vocat. Stud. 2021, 3, 155.
19. The Earth Institute, Columbia University; Ericsson. ICT and Education. Sustainable Development Solutions Network. 2016. Available online: http://www.jstor.org/stable/resrep15879.11 (accessed on 22 May 2023).
20. United Nations. Transforming Our World: The 2030 Agenda for Sustainable Development. Department of Economic and Social Affairs, United Nations Sustainable Development Goals, 25 September 2015. Available online: https://sdgs.un.org/2030agenda (accessed on 28 January 2025).
21. United Nations. SDG 4: Ensure Inclusive and Equitable Quality Education and Promote Lifelong Learning Opportunities for All. Sustainable Development, Department of Economic and Social Affairs, United Nations, 2023. Available online: https://sdgs.un.org/goals/goal4#targets_and_indicators (accessed on 28 January 2025).
22. Warsi, L.Q.; Rahman, U.; Nawaz, H. Exploring influence of technology distraction on students’ academic performance. Hum. Nat. J. Soc. Sci. 2024, 5, 317–335.
23. Deepa, V.; Sujatha, R.; Baber, H. Moderating role of attention control in the relationship between academic distraction and performance. High. Learn. Res. Commun. 2022, 12, 3.
24. Simons, D.J.; Boot, W.R.; Charness, N.; Gathercole, S.E.; Chabris, C.F.; Hambrick, D.Z.; Stine-Morrow, E.A.L. Do “brain-training” programs work? Psychol. Sci. Public Interest 2016, 17, 103–186.
25. Souders, D.J.; Boot, W.R.; Blocker, K.; Vitale, T.; Roque, N.A.; Charness, N. Evidence for narrow transfer after short-term cognitive training in older adults. Front. Aging Neurosci. 2017, 9, 41.
26. Waite, B.M.; Lindberg, R.; Ernst, B.; Bowman, L.L.; Levine, L.E. Off-task multitasking, note-taking and lower- and higher-order classroom learning. Comput. Educ. 2018, 120, 98–111.
27. Dontre, A.J. The influence of technology on academic distraction: A review. Hum. Behav. Emerg. Technol. 2020, 3, 379–390.
28. Salvati, S. Use of Digital Technologies in Education: The Complexity of Teachers’ Everyday Practice. Ph.D. Thesis, Linnaeus University, Växjö, Sweden, 2016. Available online: https://www.diva-portal.org/smash/get/diva2:1039657/fulltext01.pdf (accessed on 22 May 2023).
29. Park, S.; Weng, W. The relationship between ICT-related factors and student academic achievement and the moderating effect of country economic indexes across 39 countries: Using multilevel structural equation modelling. Educ. Technol. Soc. 2020, 23, 1–15. Available online: https://www.jstor.org/stable/10.2307/26926422 (accessed on 23 September 2021).
30. Ben Youssef, A.; Dahmani, M.; Ragni, L. ICT use, digital skills and students’ academic performance: Exploring the digital divide. Information 2022, 13, 129.
31. Afzal, H.; Ali, I.; Khan, M.A.; Hamid, K. A study of university students’ motivation and its relationship with their academic performance. Int. J. Bus. Manag. 2010, 5, 80–88.
32. Bice, H.; Tang, H. Teachers’ beliefs and practices of technology integration at a school for students with dyslexia: A mixed methods study. Educ. Inf. Technol. 2022, 27, 10179–10205.
33. Graham, M.A.; Stols, G.; Kapp, R. Teacher practice and integration of ICT: Why are or aren’t South African teachers using ICTs in their classrooms? Int. J. Instr. 2020, 13, 749–766.
34. Wang, J.; Tigelaar, D.E.H.; Admiraal, W. From policy to practice: Integrating ICT in Chinese rural schools. Technol. Pedagog. Educ. 2022, 31, 509–524.
35. Goldstein, O.; Ropo, E. Preparing student teachers to teach with technology: Case studies in Finland and Israel. Int. J. Integr. Technol. Educ. 2021, 10, 19–35.
36. Skryabin, M.; Zhang, J.; Liu, L.; Zhang, D. How the ICT development level and usage influence student achievement in reading, mathematics, and science. Comput. Educ. 2015, 85, 49–58.
37. Hu, X.; Gong, Y.; Lai, C.; Leung, F.K. The relationship between ICT and student literacy in mathematics, reading, and science across 44 countries: A multilevel analysis. Comput. Educ. 2018, 125, 1–13.
38. Redjep, N.B.; Balaban, I.; Zugec, B. Assessing digital maturity of schools: Framework and instrument. Technol. Pedagog. Educ. 2021, 30, 643–658.
39. Gibson, D.; Broadley, T.; Downie, J.; Wallet, P. Evolving learning paradigms: Re-setting baselines and collection methods of information and communication technology in education statistics. J. Educ. Technol. Soc. 2018, 21, 62–73. Available online: https://www.jstor.org/stable/10.2307/26388379 (accessed on 23 September 2019).
40. Rosa, E.M.; Tudge, J. Urie Bronfenbrenner’s theory of human development: Its evolution from ecology to bioecology. J. Fam. Theory Rev. 2013, 5, 243–258.
41. Edwards, S.; Henderson, M.; Gronn, D.; Scott, A.; Mirkhil, M. Digital disconnect or digital difference? A socio-ecological perspective on young children’s technology use in the home and the early childhood centre. Technol. Pedagog. Educ. 2016, 26, 1–17.
42. Martin, A.; Grudziecki, J. DigEuLit: Concepts and tools for digital literacy development. Innov. Teach. Learn. Inf. Comput. Sci. 2006, 5, 249–267.
43. Eshet, Y. Digital literacy: A conceptual framework for survival skills in the digital era. J. Educ. Multimed. Hypermedia 2004, 13, 93–106. Available online: https://www.learntechlib.org/primary/p/4793/ (accessed on 23 September 2019).
44. Aviram, A.; Eshet-Alkalai, Y. Towards a theory of digital literacy: Three scenarios for the next steps. Eur. J. Open Distance eLearning 2006, 1, 1–11.
45. Van Deursen, A.; Van Dijk, J. Measuring Digital Skills. In Proceedings of the 58th Conference of the International Communication Association, Montreal, QC, Canada, 22–26 May 2008; Available online: https://www.utwente.nl/nl/bms/com/bestanden/ICA2008.pdf (accessed on 23 September 2019).
46. Helsper, E.J.; Eynon, R. Distinct skill pathways to digital engagement. Eur. J. Commun. 2013, 28, 696–713.
47. Volungevičienė, A.; Brown, M.; Greenspon, R.; Gaebel, M.; Morrisroe, A. Developing a High-Performance Digital Education Ecosystem: Institutional Self-Assessment Instruments. European University Association absl. 2021. Available online: https://eua.eu/downloads/publications/digi-he%20desk%20research%20report.pdf (accessed on 18 November 2022).
48. Solar, M.; Sabattin, J.; Parada, V. A maturity model for assessing the use of ICT in school education. J. Educ. Technol. Soc. 2013, 16, 206–218. Available online: http://www.jstor.org/stable/jeductechsoci.16.1.206 (accessed on 23 September 2019).
49. ISTE. ISTE Standards. International Society for Technology in Education. Available online: https://www.iste.org/iste-standards (accessed on 1 August 2022).
50. ECDL. International Certification of Digital Literacy. European/International Certification of Digital Literacy and Digital Skills. Available online: https://ecdl.cz/o_projektu.php (accessed on 1 February 2023).
51. Rahman, A.; Rahman, F.M. Knowledge, attitude and practice of ICT use in teaching and learning: In the context of Bangladeshi tertiary education. Int. J. Sci. Res. (IJSR) 2015, 4, 755–760.
52. Hsin, C.T.; Li, M.C.; Tsai, C.C. The influence of young children’s use of technology on their learning: A review. J. Educ. Technol. Soc. 2014, 17, 85–99. Available online: https://www.jstor.org/stable/10.2307/jeductechsoci.17.4.85 (accessed on 23 September 2019).
53. Cullinan, J.; Flannery, D.; Harold, J.; Lyons, S.; Palcic, D. The disconnected: COVID-19 and disparities in access to quality broadband for higher education students. Int. J. Educ. Technol. High. Educ. 2021, 18, 26.
54. Tegmark, M. The mathematical universe. Found. Phys. 2007, 38, 101–150.
55. Hillmayr, D.; Ziernwald, L.; Reinhold, F.; Hofer, S.I.; Reiss, K.M. The potential of digital tools to enhance mathematics and science learning in secondary schools: A context-specific meta-analysis. Comput. Educ. 2020, 153, 103897–103921.
56. Langan, D.; Schott, N.; Wykes, T.; Szeto, J.; Kolpin, S.; Lopez, C.; Smith, N. Students’ use of personal technologies in the university classroom: Analysing the perceptions of the digital generation. Technol. Pedagog. Educ. 2016, 25, 101–117.
57. Ferreira, P.D.; Bombardelli, O. Editorial: Digital tools and social science education. J. Soc. Sci. Educ. 2016, 15, 1–4.
58. Zubković, B.R.; Pahljina-Reinić, R.; Kolić-Vehovec, S. Predictors of ICT use in teaching in different educational domains. Humanit. Today Proc. 2022, 1, 75–91.
59. Hernandez, R.M. Impact of ICT on education: Challenges and perspectives. Propos. Represent. 2017, 5, 325.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).