1. Introduction

The process of collecting feedback about the quality of services provided was first used successfully by business organizations in the economic sector. It boomed once both the usefulness of IT tools in this field and the value of real feedback from beneficiaries in shaping institutional development strategies became evident. Thus, the literature on this topic stresses that organizational behavior is a determining factor of an organization’s performance and defines Total Quality Management (TQM) as a systemic approach leading to continual improvement in the quality of an organization’s products and services, including the human motivational factor, which can be identified by assessing the beneficiaries’ level of satisfaction on a recurrent basis [1]. We aimed to assess how students perceive a new feedback questionnaire; to this end, we piloted a questionnaire through which students evaluate the educational process, improvements in teaching, learning materials, professor-student interaction, and other aspects of the educational process.

A student feedback questionnaire regarding the educational process can directly contribute to promoting the values of a sustainable society in several ways; we considered its impact on education and the development of the academic community as follows: (a) transparency and accountability; (b) educational quality and equity; (c) active participation and engagement; (d) innovation and adaptability; and (e) social sustainability and community development. The feedback questionnaire regarding the educational process not only improves the quality of education but also aligns with the core values of a sustainable society, such as transparency, equity, active participation, innovation, and sustainability. It allows for the adjustment of educational processes in a way that responds to the current and future needs of students and society as a whole.

With the expansion of digitalization, the use of surveys and polls has become even easier, making it possible to survey large numbers of people, sometimes located in very distant areas, within a fixed or relatively brief time and at minimal cost. However, carrying out a sociological survey, that is, investigating a social phenomenon, reality, or fact, requires thorough knowledge of the methodological underpinnings of developing and applying the instrument and of interpreting the data collected.

Advances in artificial intelligence (AI) and the rapid adoption of generative AI tools such as ChatGPT [2,3,4] present new opportunities and challenges for higher education and for the evaluation of didactic activities through the feedback questionnaire [5]. Examples include (a) feedback analysis; (b) learning analytics; (c) data mining for education; (d) intelligent web-based education; (e) AI robots that assist teachers in the educational process in a collaborative way; (f) tools for teachers to create courses and assignments for students; (g) tools for university staff to improve the management of time-consuming and repetitive tasks; and (h) AI and other Industry 4.0 technologies, such as the Internet of Things, which enable smart classrooms and the digital transformation of education management, teaching, and learning [5].

Students demonstrate awareness of both the risks and benefits associated with generative AI in academic settings. One study [6] concludes that failing to recognize and effectively use generative AI in higher education (e.g., for the evaluation of didactic activities) impedes educational progress and the adequate preparation of citizens and workers to think and act in an AI-mediated world [6]. Starting from the accepted premise that the environment in a university classroom should be kept comfortable, because classroom conditions can significantly improve learning motivation and performance [7], we note that our students are in fact using AI tools for education. They use chatbots and virtual assistants for their tasks, homework, case studies, etc. Teachers, in turn, use AI to improve the educational process through, for example, automatic checking of homework, practical case studies at seminars, and automatic feedback analysis. In recent years, teachers and researchers have tried to develop AI-based applications useful for the entire educational system, such as:

- (1) An assistant that uses a chatbot to provide decision support and recommend the most appropriate specialization according to the students’ profile [8]. The application also provides a long-term educational direction, including tips for master’s programs. The application can be seen as a response to the students’ need to personalize their educational profile.

- (2) An example used at our university, the Politehnica of Bucharest, is the Moodle platform, integrated with MS Teams, through which teachers can organize their courses online and students have quick access to the information and tasks created [9]. New and useful features include online sessions with real-time communication; AI features integrated into the apps; the recording of courses for later viewing; meeting transcription in many languages; tools for implementing evaluation and grading systems; monitoring of the students’ activities and of the progress achieved; and virtual libraries containing information from academic journals, videos, pictures, podcasts, and other sources.

Some researchers investigate the integration of ChatGPT into educational environments, focusing on its potential to enhance personalized learning and the ethical concerns it raises [10,11]. Other research concerns students’ perceptions and feedback within the education system or the evaluation of informatics educational systems. Ana Remesal [12] conducted a study that aimed to explore faculty and students’ perceptions of potentially empowering assessment practices in blended teaching and learning environments during remote teaching and learning. Cirneanu and Moldoveanu [13] proposed an approach for solving mathematical problems embedded in technical scenarios within the defense and security fields with the aid of digital technology, using different software environments such as Python, Matlab, or SolidWorks. The research also helps to reinforce key concepts and enhance problem-solving skills, sparking curiosity and creativity and encouraging active participation and collaboration [14,15]. Moa [14] evaluated students’ assessment of their learning after a teaching period of volleyball training in a university course. The teaching was research-based and linked to relevant theories of motor learning, small-sided games (SSGs), teaching games for understanding (TGfU), and motivational climate. Ciobanu and Mohora [16] wrote an interesting paper on factorial analysis, a method that could serve as an evaluation study for a variety of solutions dedicated to online distance learning systems. These applications and solutions have led to many research studies on the selection criteria for virtual educational systems.

1.1. Legal Context

At the European level, the Standards and Guidelines for Quality Assurance in the European Higher Education Area (ESG) were revised and approved at the Yerevan Ministerial Conference on 14–15 May 2015 [17]. According to these standards, in higher education, quality assurance is based on four major principles, one of which explicitly emphasizes the need to consult the students: “(...) Quality assurance takes into account the needs and expectations of students (...)”. Moreover, the first part of the ESG, the one referring to internal quality assurance, places special emphasis on information management, highlighted by Standard 1.7. This standard specifies that “Institutions should ensure that they collect, analyze and use relevant information for the effective management of their programs and other activities”. Therefore, higher education institutions (HEIs) have a responsibility to collect student feedback and see it as an essential source of data for continuous improvement in the quality of education [18].

In the Romanian higher education system, the provision and development of university-level activities are regulated by primary legislation, i.e., Law no. 199/2023 on Higher Education, with its subsequent amendments and additions; by a complex set of legal provisions derived from the Law (Framework Methodologies & Regulations, Nomenclatures) approved by Government Decisions [19,20] or Orders of the Minister of Education [21]; and by a set of Methodologies/Regulations and Procedures drafted and adopted by each higher education institution, based on the principle of university self-governance, in compliance with the legal provisions in force and approved by the University Senate, the decision-making body within these HEIs.

The teaching staff in state-funded HEIs are remunerated according to a national salary grid, based on the position held and length of service. The grid was approved by Law no. 153/2017 on the salary of staff in state-funded institutions, with its subsequent amendments and additions, and provides for no variation based on professional performance.

On the other hand, the Methodology for the External Evaluation of the standards, reference standards, and the list of performance indexes used by the Romanian Agency for Quality Assurance in Higher Education, approved by Government Decision no. 1418/2006, with its subsequent amendments and additions, provides details about the procedures used in the periodic evaluation of teaching staff performance, including the following dimensions:

Teaching staff competency and the ratio of teaching staff members to students: the education provider/Higher Education Institution must ensure the competency of its teaching staff and implement correct and transparent processes for staff recruitment, integration, and professional development, in accordance with the national regulations in force. The institution explicitly supports and promotes the professional, pedagogical, and scientific development of its teaching staff [22]. Peer evaluation is organized periodically, based on general criteria and on clear and public procedures [23]. Teaching staff evaluation by students is mandatory. The institution uses a student feedback form approved by the university senate for all its teaching staff members and distributed at the end of each semester; the form is filled in exclusively in the absence of any external factors, and the respondent’s anonymity is guaranteed. Evaluation results are confidential, being accessible only to the Dean, the Rector, and the person evaluated.

The results of teaching staff evaluation by students are discussed individually, processed statistically by each department, faculty, and university, and analyzed at the faculty and university level to ensure transparency and to formulate policies on the quality of training.

Student feedback is one of the key forms of evaluating the quality of education. In this research, we focused on this type of evaluation, with significant implications for the continuous development of higher education institutions: (a) Improvement in teaching quality: Feedback from students helps identify a professor’s strengths and areas for improvement. This allows instructors to adjust their teaching methods to better meet the needs and learning styles of students, leading to a more effective and tailored educational experience [24]; (b) Motivating professors: Evaluations provide professors with clear insights into their performance, which can motivate them to improve and diversify their teaching approaches. Positive feedback can contribute to greater professional satisfaction and a stronger commitment to their careers [25]; (c) Continuous development: Student feedback fosters a culture of continuous learning. Professors can use evaluations to better understand how they are perceived and how they can develop professionally, adapting to changes in education and students’ expectations [26]; (d) Relationship between professors and students: Evaluations help establish an open and transparent relationship between professors and students. By expressing their opinions, students feel more engaged in the learning process, and professors can become more responsive to their needs and concerns [27]; (e) Improvement in educational programs: Student evaluations go beyond teaching performance and provide valuable insights about study programs, course structures, and available resources. This feedback helps institutions adapt their curriculum and improve educational offerings to better meet the demands of the job market and educational standards [28].

1.2. Institutional Context

The National University of Science and Technology Politehnica Bucharest (POLITEHNICA of Bucharest, UNSTPB) is an accredited higher education institution within the Romanian higher education system, with over 200 years of tradition, and a member of numerous international academic organizations and bodies. It includes 21 faculties and two specialized departments: fifteen faculties and one department in its Bucharest University Center, and six faculties and one department in its Pitesti University Center.

At the beginning of the 2023–2024 academic year, the institution announced a generous educational offer:

A total of 155 bachelor’s degree programs in the fundamental subject areas: Mathematics and Natural Sciences (5 programs), Engineering Sciences (116 programs), Biological and Biomedical Sciences (2 programs), Social Sciences (21 programs), Arts and Humanities (8 programs), Sport Science and Physical Education (3 programs), according to Government Decision no. 367/2023, with its subsequent amendments and additions;

A total of 244 master’s degree programs in the fundamental subject areas: Mathematics and Natural Sciences (19 programs), Engineering Sciences (183 programs), Biological and Biomedical Sciences (1 program), Social Sciences (27 programs), Arts and Humanities (10 programs), Sport Science and Physical Education (4 programs), according to Government Decision no. 356/2023, with its subsequent amendments and additions;

A total of 19 doctoral schools accredited by order of the Minister of Education, as well as numerous postgraduate programs, for an academic community made up of approximately 40,000 students enrolled in university study programs (bachelor’s/master’s/doctorate), approximately 2000 tenured teaching staff, over 500 employees in administrative positions, and numerous collaborators.

In 2019, the higher education institution took important steps towards its integration into the European research area and towards supporting researcher mobility by adopting the principles formulated in the European Charter for Researchers and the Code of Conduct for the Recruitment of Researchers. These efforts came to fruition when the University received the “HR Excellence in Research” Award from the European Commission in September 2020. Taking into account the national context and the progress made towards its integration into the European Higher Education Area (EHEA) and the New European Research Area (ERA), the higher education institution developed its own Development Strategy for the period 2020–2024. Moreover, the rector’s managerial program for the period 2020–2024 includes specific measures to achieve the objectives set in the Strategy, and the Action Plan for HR in Research (HR Action Plan) details specific actions and activities to achieve the strategic goal related to human resources.

The collection of student feedback is firmly anchored in the fundamental documents of UNSTPB, including the University Charter. Art. 82 para. (2) of the Charter provides that “The students’ opinion, expressed individually or by their representatives, or in surveys conducted using validated methodologies, is a means of self-monitoring, evaluation and improvement of academic activity”. This provision emphasizes that student feedback is not just a formality, but a central tool in internal assessment mechanisms [29]. By including feedback in self-monitoring processes, the university is committed to responding effectively and promptly to student needs and expectations, thus ensuring the delivery of high-quality education services [30].

Moreover, art. 124 of the Charter describes the criteria underpinning the process of quality assessment and assurance at the university level. Among these criteria, letter (k) explicitly mentions “feedback collection and implementation in the relationship with students”, which confirms that student feedback is a fundamental element in the assessment of academic performance. In addition, letter (s) of the same article makes mandatory “the collection, analysis and use of data in order to develop managerial policies and strategies based on evidence”. This emphasizes the importance of an informed and data-driven approach to managerial decision-making. Collecting and analyzing student feedback provides a solid basis for the development of effective strategies that meet the real needs of the academic community and contribute to increasing the quality of the education provided.

Considering that a crucial element in the evaluation of teaching staff is the quality of the instruments used for this purpose (such as the feedback questionnaire for teaching performance evaluation, the feedback questionnaire for administrative services evaluation, the feedback questionnaire for program evaluation, the peer-to-peer teaching staff evaluation feedback, and the annual self-evaluation form for teaching staff), the development, improvement, and updating of these forms have been the focus of several research projects supported by the higher education institution.

3. Results

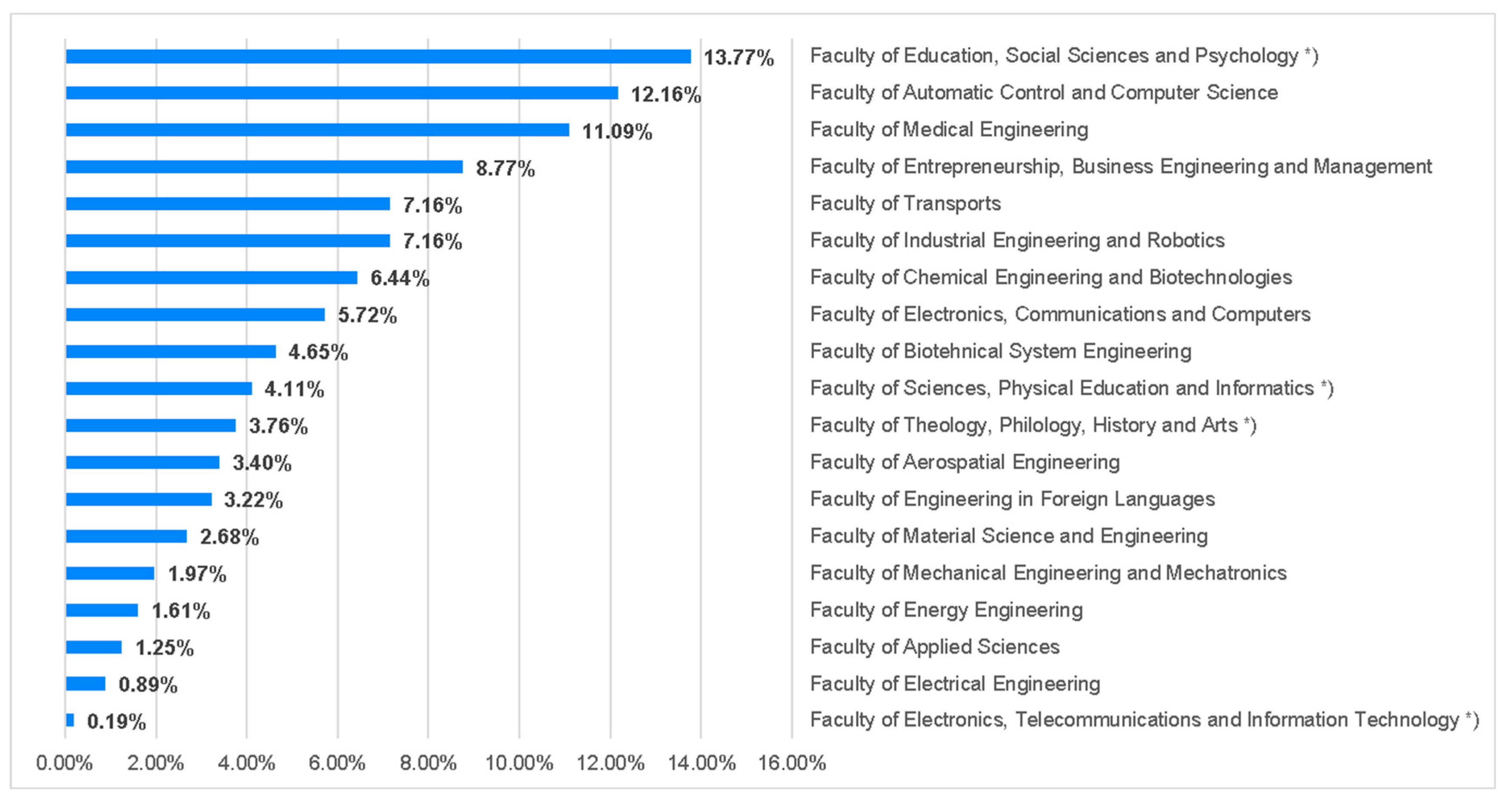

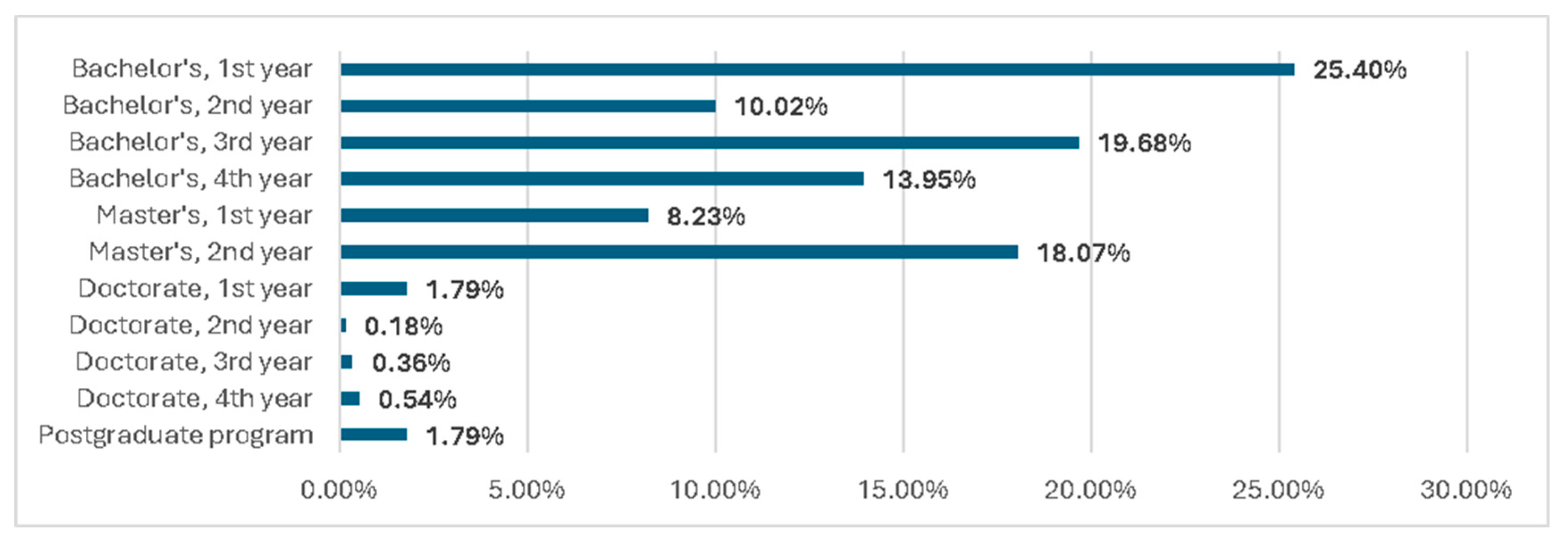

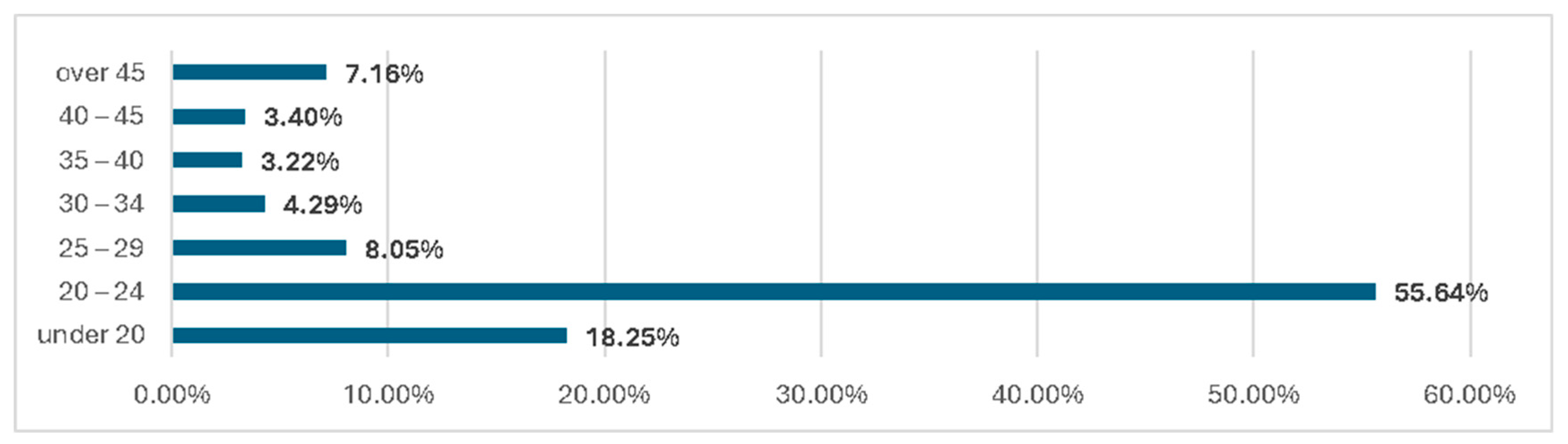

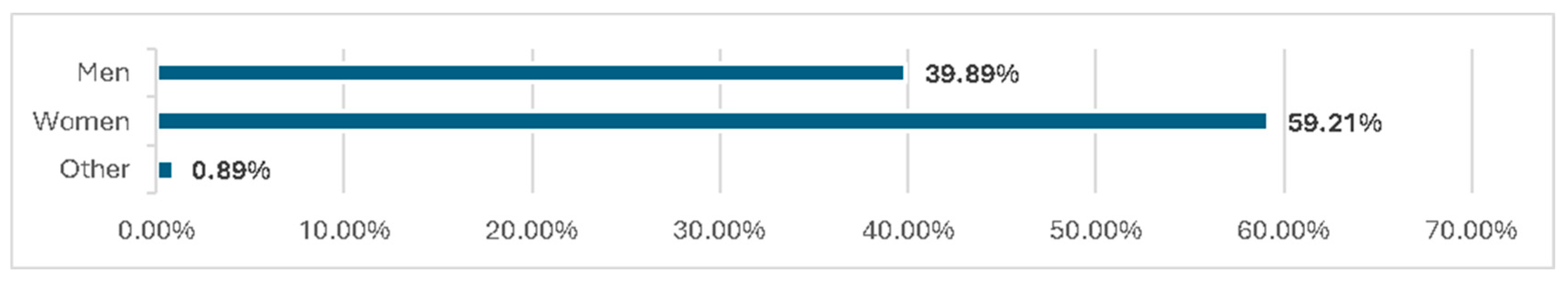

The analysis of Figure 1, Figure 2, Figure 3, and Figure 4 shows that students enrolled in 19 of the 21 faculties in the structure of the university responded to our survey. The highest participation rates were recorded at the Faculty of Education, Social Sciences and Psychology (13.77%), the Faculty of Automatic Control and Computer Science (12.16%), and the Faculty of Medical Engineering (11.09%) (Table 1).

We also note that the sample on which this study was conducted has the following structure: respondents were mostly female (59.21%) and aged 20–24 (55.64%), and were enrolled in undergraduate study programs (69.05%), master’s degree programs (26.03%), doctoral degree programs (2.87%), and postgraduate programs (1.74%).

The first two items in the questionnaire, two multiple-choice closed-ended questions, helped the researchers identify: (a) the students’ perception of the main motivations underlying an organization’s or institution’s decision to use a feedback questionnaire, which resulted in the following ranking of pre-formulated answers: Identifying the beneficiaries’ problems in a timely manner so that they can be remedied (27.91%), Evaluating the quality of the services received (27.55%), Optimizing the services offered (20.57%); and (b) the students’ perception of the importance of the stages involved in investigating a social reality by the questionnaire method, which resulted in the following ranking of pre-formulated answers: Qualitative interpretation of the results (43.11%), Elaboration of the feedback questionnaire (31.13%), Data collection and centralization (10.20%).

The following three questionnaire items, closed-ended Likert questions, allowed the respondent students to indicate their willingness to answer the feedback form: always (37.39%), most of the time (28.26%), and never (only 3.40%). A total of 95.17% of the respondents rated evaluation by feedback questionnaire as very useful or useful in relation to the university’s institutional development needs; 71.38% rated their answers to the feedback form as having a very high, high, or medium impact on teaching staff performance; and 66.55% considered that their answers to the feedback questionnaire had a very high, high, or medium impact on the structure of their study programs’ curricula.
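Combined figures such as "very useful or useful" are obtained by pooling several Likert levels before dividing by the number of respondents. The following is a minimal sketch of that arithmetic, not the authors' actual processing pipeline; the function names (`response_shares`, `combined_share`) and the sample answers are hypothetical and are not taken from the study's data.

```python
from collections import Counter


def response_shares(answers):
    """Return each answer's share of all responses, in percent (2 decimals)."""
    if not answers:
        raise ValueError("no answers to summarize")
    counts = Counter(answers)
    total = len(answers)
    return {option: round(100 * n / total, 2) for option, n in counts.items()}


def combined_share(answers, selected_levels):
    """Percent of responses that fall in any of the selected Likert levels."""
    if not answers:
        raise ValueError("no answers to summarize")
    hits = sum(1 for a in answers if a in selected_levels)
    return round(100 * hits / len(answers), 2)


# Hypothetical answers to a usefulness item (illustrative only).
answers = (
    ["very useful"] * 12
    + ["useful"] * 6
    + ["neutral"] * 1
    + ["not useful"] * 1
)

shares = response_shares(answers)
top_two = combined_share(answers, {"very useful", "useful"})
print(shares)
print(top_two)
```

Pooling levels in this way makes a headline figure easy to read, but it hides how responses split between the pooled levels, so the per-level shares are worth reporting alongside it.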

By centralizing and analyzing the data gathered from two other closed-ended, multiple-choice items, the researchers identified: (a) the main motivation that makes students want to become involved in the teacher evaluation activity by filling in the feedback form, with the following ranking of pre-formulated answers: Desire to contribute to the development of teaching activities in the institution (70.30%), Possibility of expressing negative opinions (11.09%), Possibility of expressing positive opinions (8.59%), Activity requested by teaching staff members (6.08%), Activity requested by the institution (3.40%), Possibility of expressing opinions in general (0.18%); and (b) the main motivation that supports the students in the act of learning, with the following ranking of pre-formulated answers: Desire to know, to possess knowledge (55.10%), Desire to graduate from a prestigious higher education institution (19.32%), Subject matter contents (14.85%), Teaching staff member (5.55%), Desire for affirmation (3.94%), Family (0.89%), Classmates (0.36%).

Although previously only 5.55% of the student respondents considered that their teacher supported them in the act of learning, when they were asked, using a closed-ended Likert item, to assess the existence of a causal/determining relationship between the quality of the teacher’s teaching performance and the level of understanding of the subject matter contents, an overwhelming 89.98% of the respondents considered that such a causal relationship existed to a very great or great extent.

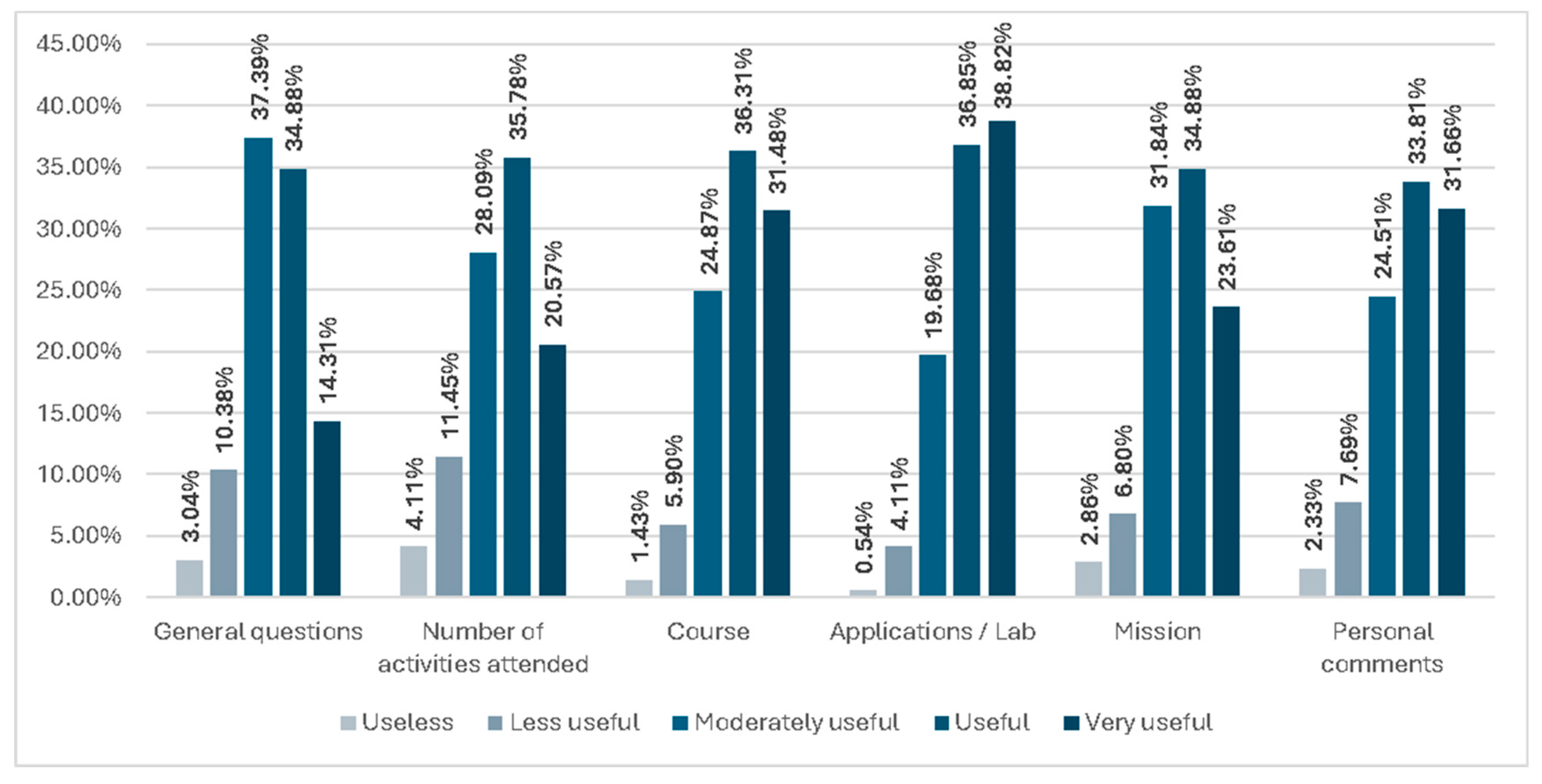

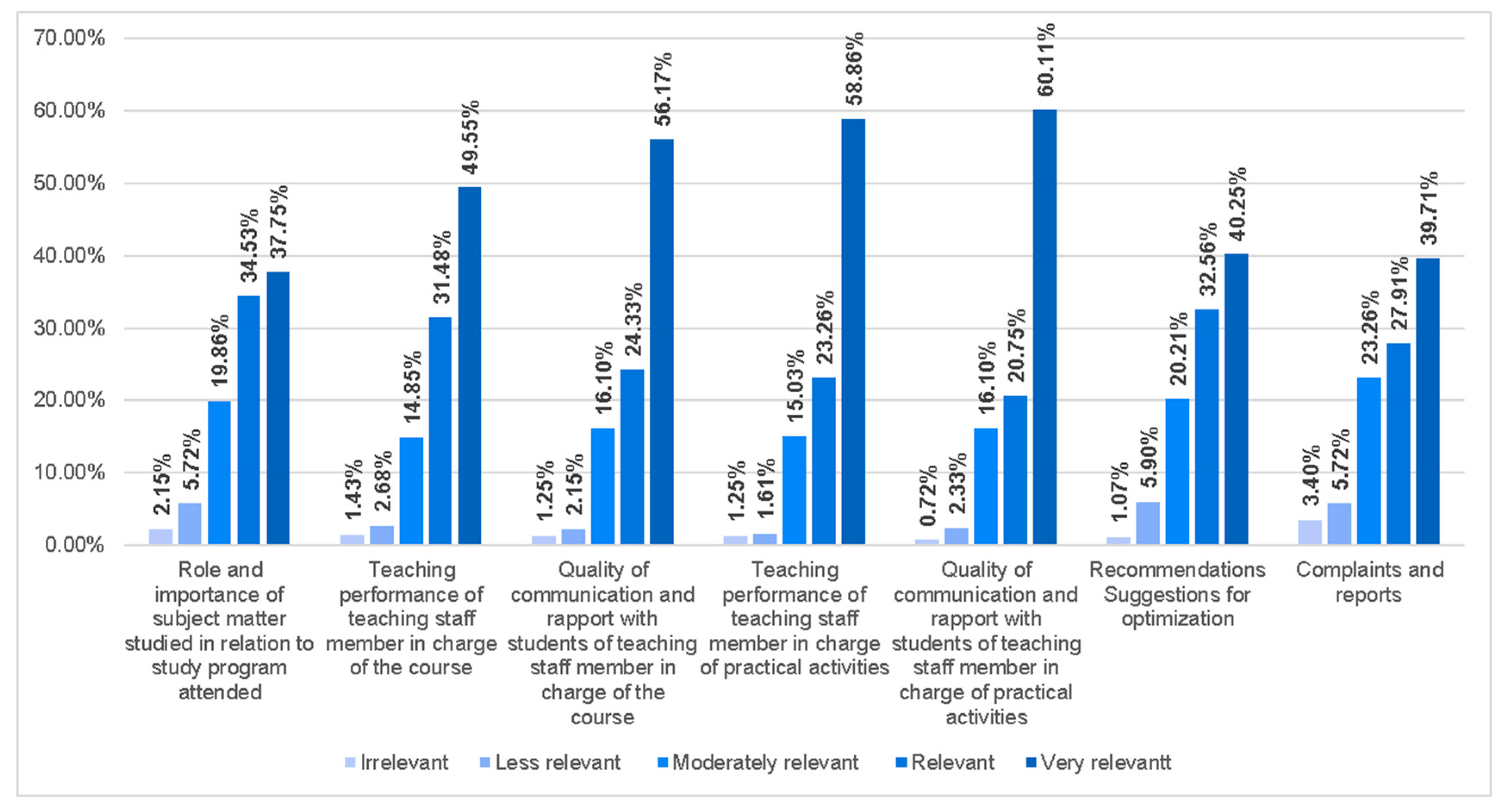

Closed-ended Likert items were also used by the respondent students to assess the usefulness of the sections in the existing UNSTPB feedback questionnaire for teaching staff evaluation (General questions, Number of activities attended, Course, Practical labs, Mission, Personal comments), as well as the relevance of the sections in the feedback questionnaire developed for future use by the university (Role and importance of subject matter studied in relation to study program attended, Teaching performance of teaching staff member in charge of the course, Quality of communication and rapport with students of teaching staff member in charge of the course, Teaching performance of teaching staff member in charge of practical activities, Quality of communication and rapport with students of teaching staff member in charge of practical activities, Recommendations, Suggestions for optimization, Complaints and reports). Percentages are shown in Figure 5 and Figure 6. It is worth noting the significant level of appreciation shown by the respondent students for the sections in the new questionnaire.

Moreover, the students responding to this questionnaire had the opportunity to express their own recommendations and suggestions regarding the development of the new feedback questionnaire, through an open-ended item. Their answers were integrated into thematic categories and centralized, as shown in Table 2.
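Centralizing open-ended answers by theme amounts to counting how often each theme was assigned once every answer has been coded. The sketch below assumes the coding has already been done by hand and that one answer may carry several themes; the theme labels and the helper `tally_themes` are hypothetical illustrations and do not reproduce the categories of Table 2.

```python
from collections import Counter


def tally_themes(coded_answers):
    """Count how many answers mention each theme, most frequent first."""
    counts = Counter(theme for themes in coded_answers for theme in themes)
    return counts.most_common()


# Hypothetical manual coding: one set of themes per open-ended answer.
coded_answers = [
    {"anonymity"},
    {"shorter form", "anonymity"},
    {"evaluate exams"},
    {"shorter form"},
    {"anonymity"},
]

ranking = tally_themes(coded_answers)
print(ranking)
```

Using a set per answer ensures that a theme is counted at most once per respondent, so the totals read as "number of answers mentioning the theme" rather than "number of mentions".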

4. Discussion

The student respondents also had the opportunity to express their opinion on the most efficient way of structuring and distributing the new feedback questionnaire, as well as on the most appropriate moment to send it out to students, by answering three other closed-ended items with pre-formulated answers. Centralizing the answers resulted in the following rankings: (a) As for the structuring of the new feedback questionnaire, 44.72% of the respondents stated that it would be effective to customize it by subject area/faculty, 39.36% said it should be structured by study cycle (bachelor’s/master’s/doctorate), and 15.92% considered it relevant to use a single questionnaire form for the entire university, irrespective of cycle and subject area. Because 25.40% of the respondents were first-year undergraduate students and, implicitly, less experienced in academic activities, for this item only we considered the answers of the respondents enrolled in graduate and postgraduate programs to be more relevant. In this configuration, the answers were distributed as follows: 47.24% thought that structuring the questionnaire by study cycle (bachelor’s/master’s/doctorate) would be most effective, 34.36% considered it necessary to structure it by subject area/faculty, and only 18.40% supported the use of a single form. (b) As for the most efficient way of distributing the feedback questionnaire, 94.81% of the respondent students opted for digital means (online platforms, e-mail, etc.), only 4.41% for printed format, on paper, and 0.72% for distribution by phone, with the help of an operator.
(c) As regards the most appropriate moment to conduct the evaluation of teachers using the feedback questionnaire, 61.90% of the respondents thought it should be carried out after they sit the final exam for the discipline on which they are asked to give feedback, and only 38.10% considered that their evaluation of the teaching staff would be most objective before that exam. This is also highlighted by the answers of the students who used the open-ended item to suggest that the institution’s feedback form should also cover the evaluation activities organized by the teaching staff for each subject matter.
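The re-analysis described under (a), in which the shares are recomputed only for respondents enrolled beyond the bachelor's cycle, can be illustrated with a small filtering step. This is a sketch under stated assumptions rather than the procedure actually used: the record fields (`cycle`, `structure`), the helper `shares_for`, and all records are hypothetical and do not come from the survey data.

```python
from collections import Counter


def shares_for(responses, item, keep=lambda r: True):
    """Percent share of each answer to `item` among respondents kept by `keep`."""
    subset = [r[item] for r in responses if keep(r)]
    if not subset:
        raise ValueError("no respondents match the filter")
    counts = Counter(subset)
    return {answer: round(100 * n / len(subset), 2)
            for answer, n in counts.most_common()}


# Hypothetical respondent records (illustrative only).
responses = [
    {"cycle": "bachelor", "structure": "by faculty"},
    {"cycle": "bachelor", "structure": "by faculty"},
    {"cycle": "bachelor", "structure": "by faculty"},
    {"cycle": "bachelor", "structure": "single form"},
    {"cycle": "master", "structure": "by study cycle"},
    {"cycle": "master", "structure": "by study cycle"},
    {"cycle": "master", "structure": "by faculty"},
    {"cycle": "doctorate", "structure": "by study cycle"},
]

all_shares = shares_for(responses, "structure")
advanced_shares = shares_for(
    responses,
    "structure",
    keep=lambda r: r["cycle"] in {"master", "doctorate", "postgraduate"},
)
print(all_shares)
print(advanced_shares)
```

As in the study, the ranking of options can change once the subgroup is isolated, which is why the size of the retained subgroup should be reported together with the recomputed percentages.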

The analysis of all the data gathered from the students who answered the Questionnaire assessing the students’ perception of the Feedback form used by the institution showed us that they are very attentive to and interested in the quality of the tools developed by the university, that they need to be given the opportunity to express and affirm their opinions in a constructive way, that they have intrinsic, solid, deep motivations that support them both in their learning and in other academic activities, and that they have a potential for innovation, which must be valued and capitalized on in all academic activities. All the qualities revealed by this micro-study make the students an integral part of the academic community; the university must offer them high-quality educational services and, at the same time, be a platform for them to use in their processes of discovering (themselves) and actively participating in socio-professional, personal, and institutional development, and even in the evolution of society.

After carefully analyzing the students’ answers, we found that the feedback form used in the teaching staff performance evaluation was perceived by the students as a tool whose efficiency grows insofar as it is very carefully developed and the collected answers are subjected to a rigorous process of qualitative interpretation able to identify problems in a timely manner in order to adopt the measures needed to remedy any situations uncomfortable for them.

Taking into account that only 27.73% of the student respondents said that they are very satisfied with the quality of the feedback form currently used by the university, we highlight the need to revise and update the existing form and to build a new Feedback Questionnaire for the institution’s teaching staff evaluation process. This questionnaire should, on the one hand, be adapted to the students’ psychological peculiarities and level of understanding and, on the other hand, be structured in such a way as to better serve the purpose for which the instrument is used in the first place.

Taking into account the results revealed by our study, the recommendations and suggestions made by the student respondents, the provisions of the national legislation in force, the structure of the university’s study programs, and the specificities of questionnaire-based sociological research as a method of investigating social reality, and having consulted the feedback questionnaires used in teaching staff performance evaluation by other higher education institutions in the country and abroad, the teaching staff evaluation process of UNSTPB was extensively revised by developing a Feedback Questionnaire for students enrolled in bachelor’s and master’s degree programs and a Feedback Questionnaire for students enrolled in doctoral programs.

The Feedback Questionnaire for students enrolled in the first and second study cycle (bachelor’s/master’s) is made up of 16 items divided into four sections. Thus, in the first section, Data about the study program, the respondent students have the possibility to express their general opinion on the quality of the study program in which they are enrolled; they can assess the discipline they provide feedback for in relation to (a) the qualification awarded after graduating from the study program, and (b) the skills needed for labor market insertion; they can also assess the number of hours allotted to studying the subject matter in the study program curriculum and the extent of their participation in the didactic activities of that discipline. The questionnaire items included in the 2nd section, Data on the performance of the teaching staff member responsible for the course, aim to identify the students’ evaluation of (a) the quality of the teaching staff member’s teaching performance, with reference to the following dimensions: selection of contents in relation to the objectives of the discipline; relevance of the information presented in relation to labor market requirements; the way in which new concepts are correlated with the contents of other subjects studied; suitability of teaching methods and techniques to the contents of the discipline; quality of teaching aids used (course materials, specialized bibliography, etc.); and objectivity in the assessment of students; and (b) the quality of communication and the rapport with the teaching staff member in charge of the course, in relation to the following dimensions: motivational support offered to students in the act of learning, receptivity and availability of the teaching staff member to the students’ questions, the quality of additional explanations. 
The questionnaire items included in the 3rd section, Data on the performance of the teaching staff member responsible for practical activities/seminars/laboratories, provide the respondents the opportunity to assess (a) the quality of the teaching methods used, taking into account the following dimensions: correlation between the contents presented and the objectives of the discipline; correlation between the theoretical contents presented and practical-applicative situations; suitability of teaching methods and techniques to the contents of the discipline; suitability of teaching methods and techniques to the students’ psycho-individual peculiarities; quality of the teaching aids used; and objectivity shown in student evaluation; (b) the quality of communication and the rapport established with the teaching staff member in charge of practical activities/laboratories/seminars, taking into account the following dimensions: motivational support offered to students in the act of learning, quality of additional guidance/explanations, ability to create a stimulating, attractive educational environment, and ability to stimulate the students’ creativity/innovation.

Although the dimensions evaluated by the items in the 2nd section and the 3rd section of the Questionnaire are very similar, they refer to distinct teaching staff members and contain subtle differences. These differences reflect the specificities of the teaching activities provided by each teaching staff member, their level of interaction with the students, and, perhaps most importantly, the suggestions and recommendations provided by student respondents in the previously conducted micro-study.

The 4th section in the Questionnaire, Data on the respondents, contains items that help to identify the faculty/cycle/program and the year of study the respondents are enrolled in, as well as their gender and age bracket.

According to the provisions of the Regulatory Framework on doctoral studies approved by Order of the Minister of Education no. 3020/2024, doctoral study programs are organized at the national level, are coordinated by a scientific advisor for each doctoral student, and contain an Advanced University Training Program (PPUA) with a common core of three disciplines: (1) Ethics; (2) Project management; and (3) Research methodology and Scientific Authorship, plus a supplemental set of two elective specialized disciplines.

As a result, the Feedback Questionnaire for students enrolled in the 3rd study cycle is divided into four similar sections totaling 15 items, in accordance with the specificities of these study programs and the general level of understanding of advanced doctoral students. The 1st section, Data on the study program, and the 4th section, Data on the respondents, collect general data, as their names suggest. The center of gravity of this instrument lies in the 2nd and 3rd sections. The 2nd section, Data on the quality of the collaboration with one’s scientific advisor, allows doctoral students to assess their rapport with their supervisor along the following dimensions: capacity to coordinate studies from a scientific and methodological viewpoint; quality of scientific and methodological recommendations; selection of scientific contents in relation to the trends in the field; adequacy of teaching and research methods in relation to the topic; motivational support provided to the doctoral student in research and learning; support offered to facilitate the doctoral student’s participation in scientific activities (conferences, projects, internal/international mobilities, publication in journals, etc.); and quality of administrative guidance (regulations, procedures of the doctoral school). The 3rd section, Data on the discipline from the doctoral study program you provide feedback for, assesses (a) the quality of the activity carried out by the teaching staff member responsible for the discipline, considering the following dimensions: systematization of scientific contents; novelty of the information presented; correlation between the theoretical contents presented and practical-applicative situations; adequacy of teaching methods; quality of the teaching aids used; and objectivity shown in the evaluation of the doctoral students; and (b) the quality of communication and the rapport with the teaching staff member, from the perspective of the following dimensions: responsiveness and availability to answer the doctoral students’ questions; quality of scientific and methodological guidance; and motivational support offered to doctoral students in learning and research.

As specified by the legal provisions that regulate this process, teaching staff performance evaluation can be carried out from several perspectives (peer evaluation, self-evaluation, evaluation by university management, and evaluation by beneficiaries) and can also target the development of certain professional skills: scientific skills, psycho-pedagogical skills, communication skills, research skills, managerial skills, etc. The feedback questionnaire is only one of the tools used in teaching staff performance evaluation. It addresses the students and evaluates only the professional skills that students can observe in the teacher’s didactic activity. This justifies the items included in the 2nd section and the 3rd section of the new instruments, which allow students to evaluate two major components: (a) the quality of the teaching activities carried out by the teaching staff, and (b) the quality of the teaching staff’s rapport with the respondent students.

Without being exhaustive, the dimensions proposed for evaluation in the 2nd section and the 3rd section of both Feedback Questionnaires are the result of an elaborative approach adapted to the specificities of the Romanian education system and of the institution, but also to the psychological characteristics of the beneficiaries of the educational process. They can be reformulated and updated as university study programs are correlated with trends in the evolution of the labor market.

At the same time, the 2nd section and the 3rd section of the draft instruments also contain an open-ended item that gives respondents the opportunity to formulate observations or suggestions for improving the activity of each teacher evaluated, or to report undesirable behavior on the teacher’s part in a timely manner.

From 15 April to 30 May 2024, right in the middle of the teaching period in the 2nd semester of the current academic year, the New Feedback Questionnaire for students enrolled in bachelor’s and master’s degree programs was piloted on 545 students, while the New Feedback Questionnaire for students enrolled in doctoral studies was piloted on 84 doctoral students from our higher education institution, who were requested explicitly to participate only in the evaluation of the disciplines studied in the first semester, so that the feedback collected could also include their assessment of the way they had been evaluated by the teaching staff member, according to their previously expressed recommendations.

After piloting the new tools, it was possible to produce detailed evaluation reports with multiple correlations, which are not the subject of this contribution. However, one qualitative observation from the pilot can serve as an example: the most positive feedback was given to the teaching staff member responsible for the discipline Web Programming, included in the 4th-year curriculum of the undergraduate study program in Computers and Information Technology (Faculty of Automatic Control and Computer Science), and to the teaching staff member in charge of Project Management, a core discipline studied in doctoral programs.

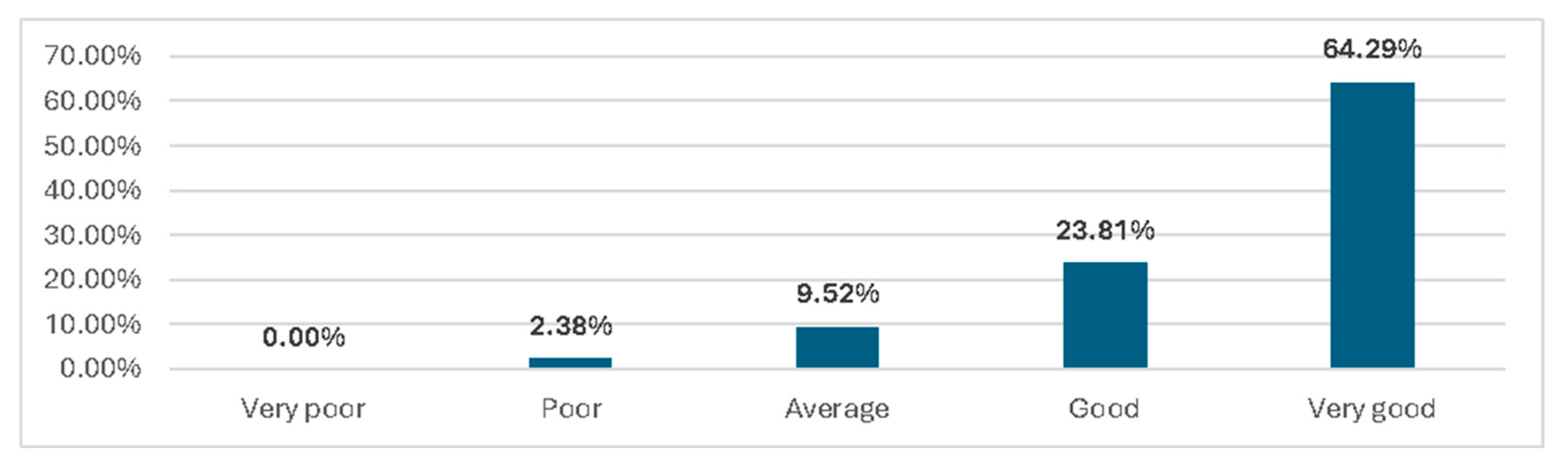

At the end of each new instrument, an independent item allowed the respondents to assess the quality of the form in relation to its purpose, i.e., to generate and collect objective, constructive, and high-quality feedback.

The analysis of the answers provided to this item, independent of the content in the Questionnaire, showed a significant increase in the students’ degree of satisfaction with the quality of the new feedback tools, as a result of the research carried out. Percentages for this item are illustrated in Figure 7 and Figure 8.

Thus, designing the IT application involved the following stages:

Identifying the specific requirements for collecting feedback, including question types, anonymity of responses, and reporting requirements.

Developing the system’s module-based architecture, which integrates the feedback component into the main UNSTPB Connect platform, including the following elements: Front-end—user interface to fill in the feedback forms, built to be intuitive and accessible from various devices; Back-end—data management system, which centralizes the responses and stores them in secure databases; and Anonymization mechanisms—which dissociate the responses from the identity of the students, to protect the respondents’ privacy.

In the implementation phase, the IT application development team integrated the technical components and performed rigorous testing: functional testing, which checked the functionality of the system components; security testing, which verified the protection of personal data in accordance with the provisions of Regulation (EU) 2016/679 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation); and usability testing, which assessed the interface’s ease of use and efficiency.
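To make the idea of dissociating responses from student identity more concrete, the following minimal Python sketch shows one possible way to do it. It is an illustration under stated assumptions, not a description of the UNSTPB Connect implementation: the class `FeedbackStore`, its method names, and the keyed-hash (HMAC) approach are all hypothetical choices introduced here. The sketch keeps two unlinked collections: one records that a student has already answered for a given discipline (as a keyed hash, so duplicates can be rejected), and the other stores the answers with no reference to the student.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass, field


@dataclass
class FeedbackStore:
    """Hypothetical store that keeps participation and answers unlinked."""

    # Secret key for the keyed hash; assumed to be kept server-side only.
    secret_key: bytes = field(default_factory=lambda: secrets.token_bytes(32))
    # Keyed hashes of (student, discipline) pairs that have already answered.
    participation: set = field(default_factory=set)
    # Stored answers; no student identifier or participation token is kept here.
    responses: list = field(default_factory=list)

    def _participation_token(self, student_id: str, course_id: str) -> str:
        # HMAC-SHA256 lets us detect duplicates without storing the raw ID.
        message = f"{student_id}:{course_id}".encode("utf-8")
        return hmac.new(self.secret_key, message, hashlib.sha256).hexdigest()

    def submit(self, student_id: str, course_id: str, answers: dict) -> bool:
        """Store one response; return False if this student already answered."""
        token = self._participation_token(student_id, course_id)
        if token in self.participation:
            return False
        self.participation.add(token)
        # The response gets a random ID that cannot be derived from the student.
        self.responses.append({
            "response_id": secrets.token_hex(8),
            "course_id": course_id,
            "answers": dict(answers),
        })
        return True
```

In this sketch, a second call to `submit` for the same student and discipline is rejected, while the stored response contains only a random identifier, the discipline, and the answers. A production system would also need to consider indirect identifiers such as timestamps or very small respondent groups, which this illustration does not address.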

Once the online feedback collection system was integrated into the UNSTPB Connect platform, the university initiated a systematic evaluation of its impact on the educational process. This assessment compared response rates and analyzed feedback quality. The number of students who completed the feedback questionnaires increased significantly compared to previously used methods. Anonymizing responses and facilitating access through various means (computer, e-mail, mobile phone) led to more detailed and honest feedback from students, providing more valuable information for teaching staff and university management. Thus, the use of electronic tools in the evaluation process had the following benefits: anonymization, accessibility, and convenience for students, and detailed, timely feedback reports for teaching staff and institution management.
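The comparison of response rates mentioned above can be expressed as a simple calculation. The sketch below is only an illustration: the function names `response_rate` and `percentage_point_change` are hypothetical, and no figures from the study are assumed, since the text reports the increase qualitatively.

```python
def response_rate(completed: int, invited: int) -> float:
    """Share of invited students who completed the questionnaire (0.0 to 1.0)."""
    if invited <= 0:
        raise ValueError("invited must be a positive number of students")
    if not 0 <= completed <= invited:
        raise ValueError("completed must be between 0 and invited")
    return completed / invited


def percentage_point_change(old_rate: float, new_rate: float) -> float:
    """Difference between two response rates, in percentage points."""
    return (new_rate - old_rate) * 100
```

Given the number of students invited and the number who completed the questionnaire under each collection method, `percentage_point_change(response_rate(...), response_rate(...))` gives the change in participation between the previous method and the online system.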