The Performance and Qualitative Evaluation of Scientific Work at Research Universities: A Focus on the Types of University and Research

Abstract

1. Introduction

2. Materials and Methods

3. Literature Review

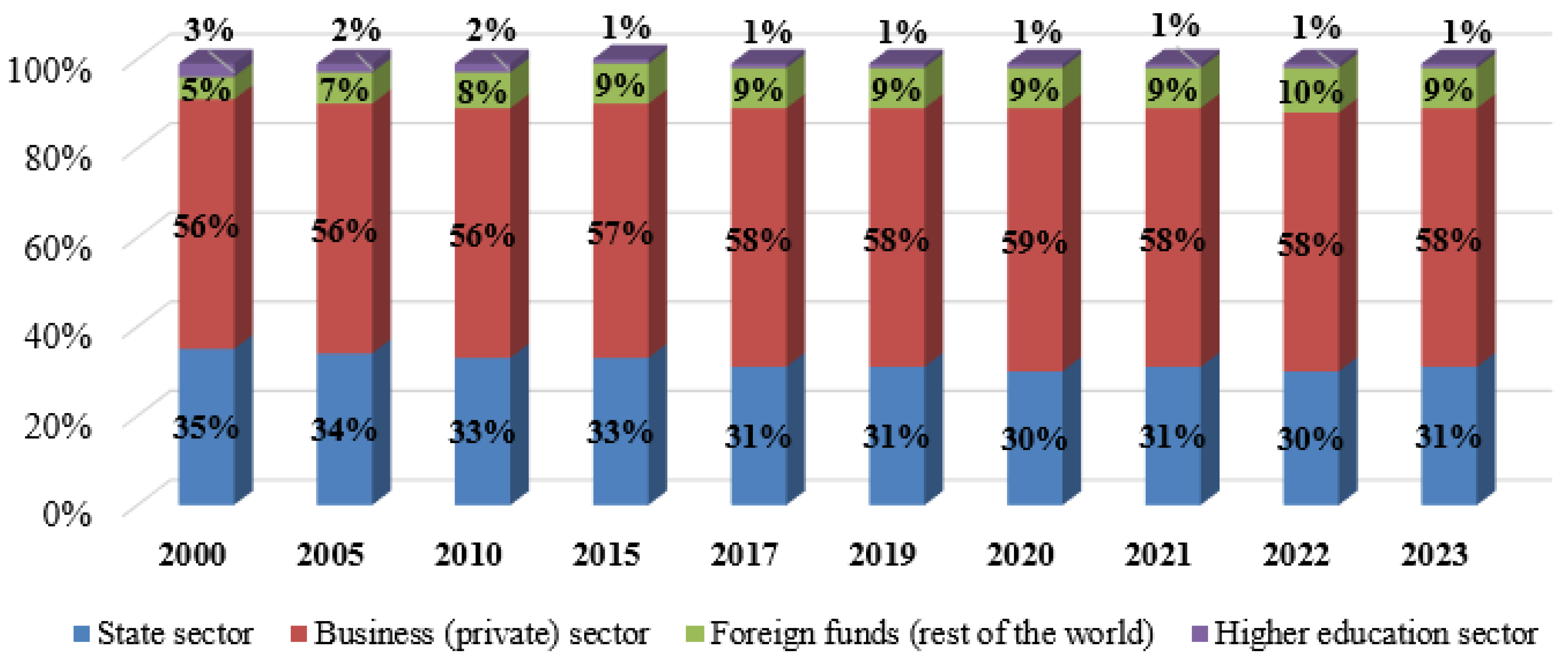

- the internal expenditure on research and development in priority areas of science, technology, and engineering development;

- the number of small innovative enterprises created with the participation of an organization (university);

- the share of development and production in the region that uses critical technologies (including at universities);

- the share of patents whose scientific results were put into practice.

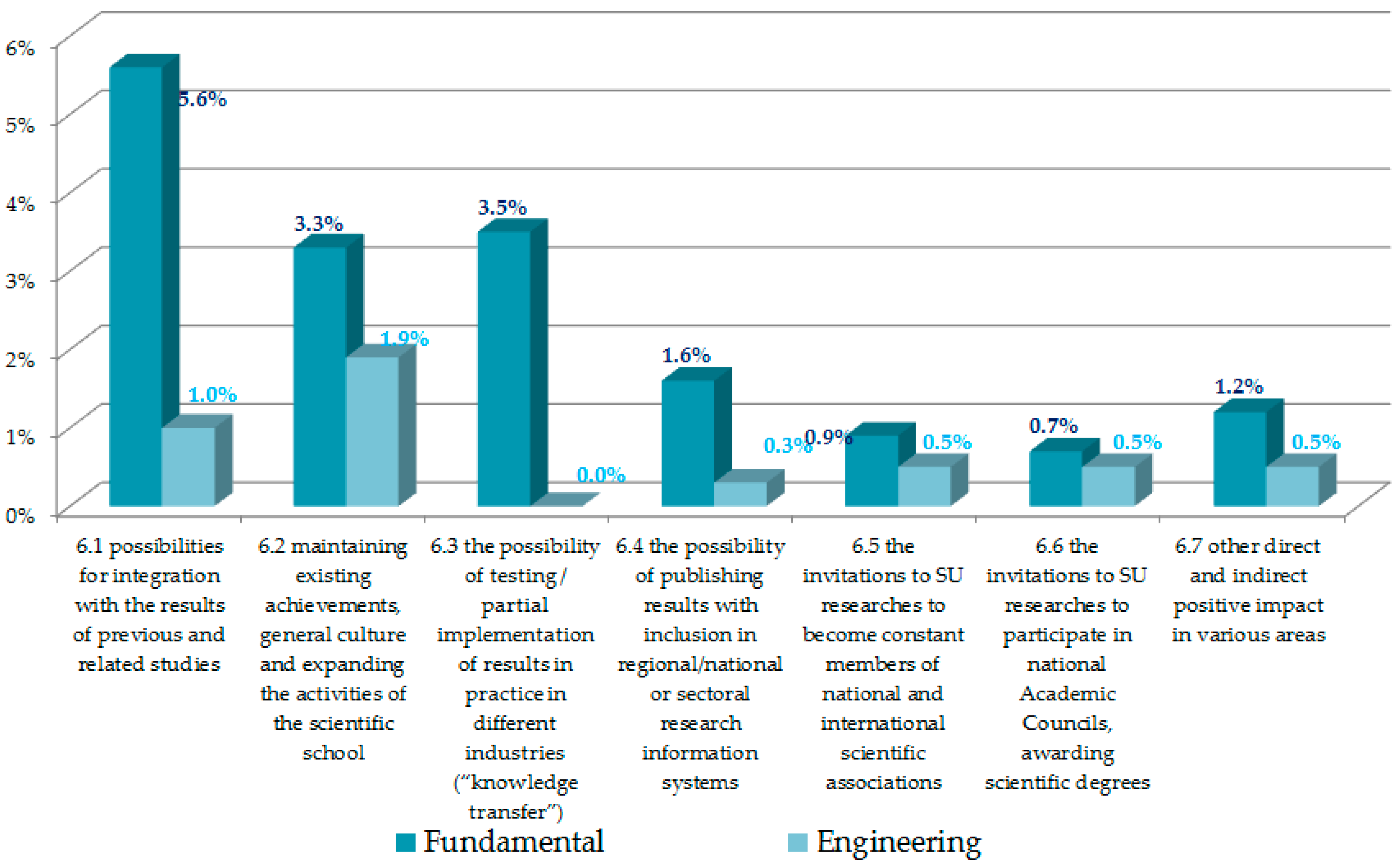

- the possibility of integrating the results with those of previous and related studies;

- maintaining existing achievements and the general culture, and expanding the activities of the scientific school;

- the possibility of testing or partially implementing the results in practice in different industries ("knowledge transfer"), on a pilot or ongoing basis;

- the possibility of publishing the results, with inclusion in regional/sectoral research and national information systems;

- other direct and indirect long-term impacts of the results obtained on various areas of scientific and practical activity.

4. Results

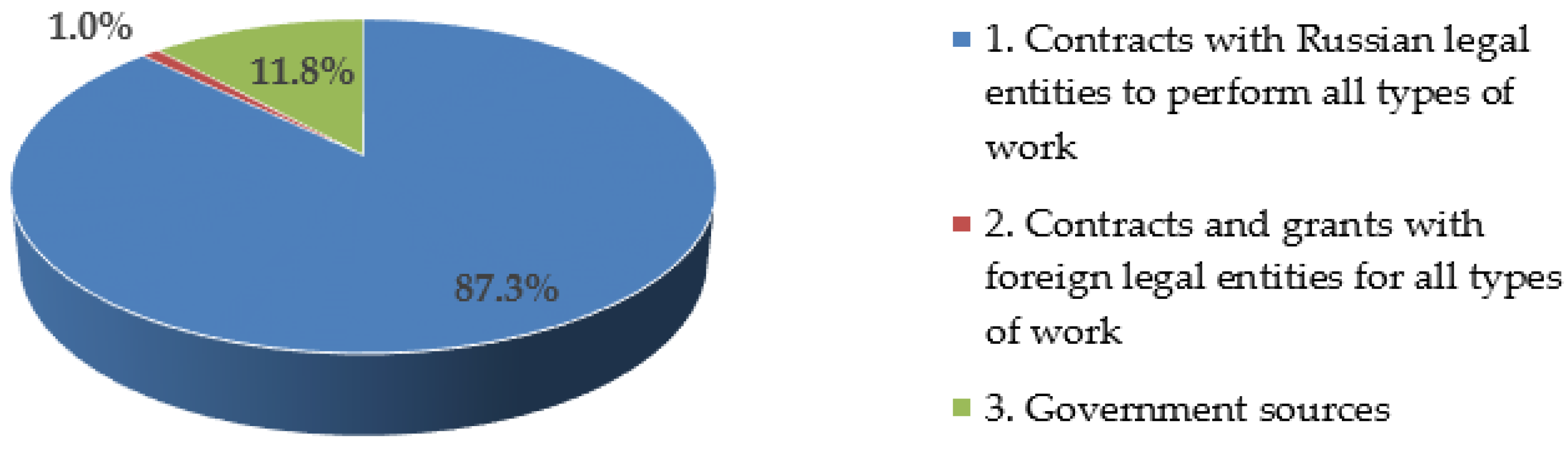

4.1. Description of the Research Object and University Research Data Analysis

- ITMO University and the Mining University have comparatively many scientific units (line 4);

- the same universities focused mostly on non-state contracts (line 6);

- the share of lecturers who published at least one scientific article in Scopus/WoS Q1–Q2 journals in 2021–2023 ranged from 14% to 39%, which is lower than the corresponding share of researchers, 39–64% (lines 7 and 8);

- university researchers account for 62% to 85% of patent authorships (line 10);

- the Mining University performed a higher volume of scientific work per researcher (line 11).

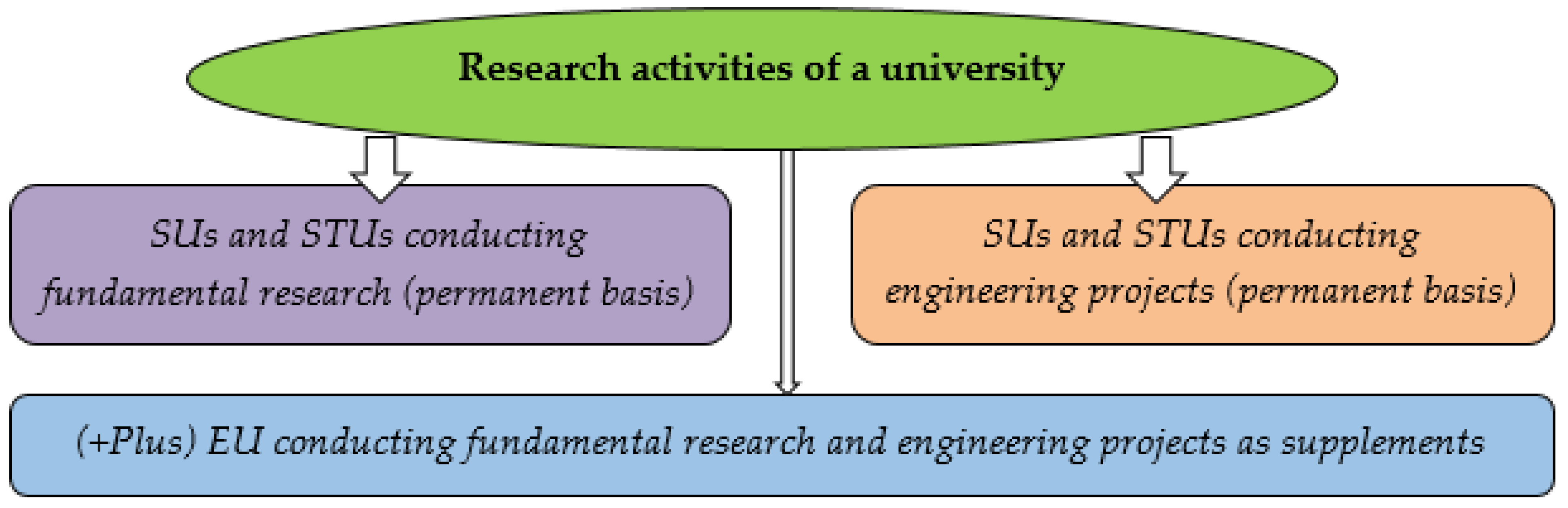

- the share of fundamental research is greater than the share of engineering projects at the comprehensive-type St. Petersburg State University and at the mixed-type LETI; in addition, according to Table 5 (line 6), these two universities had the largest shares of state-ordered research, 69.7% and 78.9%. (This supports research hypothesis H5, formulated in the Materials and Methods section: a larger share of government funding characterizes comprehensive universities);

- the share of engineering projects exceeded the average level of 68.2% at engineering-type universities, including the "mixed-type" SPb Polytechnical University;

- specialized scientific units (SUs) performed, on average, the larger part of the research work, nearly 84%. At the engineering-type universities (Mining and ITMO), that indicator exceeded 90%. (This supports research hypothesis H6, which stated that comprehensive-profile universities use their educational staff for research more often).

4.2. Survey Result Analysis

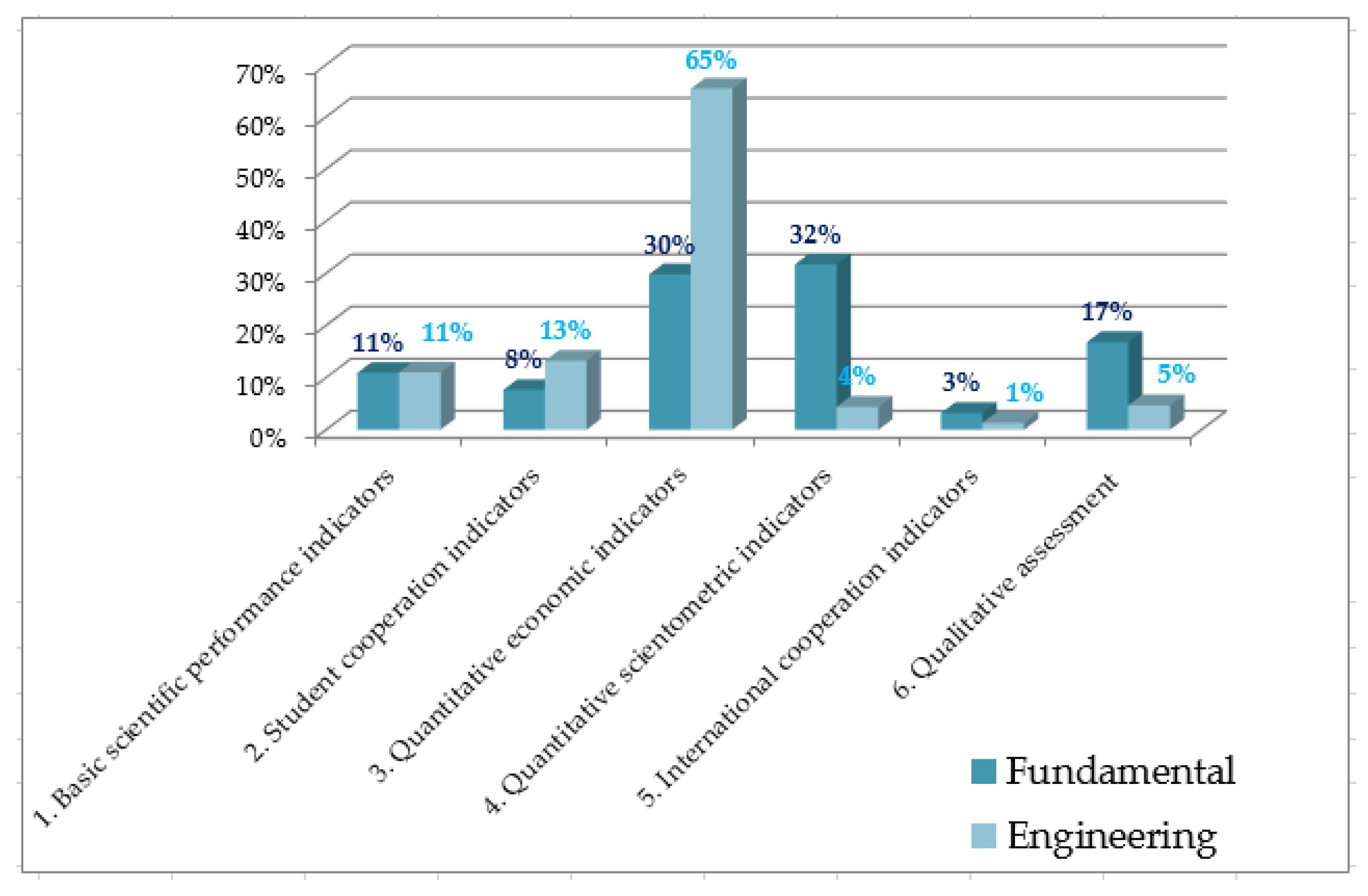

- basic scientific performance indicators carry almost equal weight in both cases, about 11%. As shown further, however, the values of the sub-indicators differ for fundamental research and for engineering projects;

- student cooperation appears to be more valuable for engineering projects than for fundamental research (13% and 8%, respectively). We suppose the reason is that the SU teams, which perform most of the engineering projects, seek new members among students and young specialists more actively;

- quantitative economic indicators determine nearly two-thirds (65%) of the quality value for engineering projects, but only about 30% of the quality value for fundamental research. The economic indicators group is the most numerous of all the groups. For engineering work, which is carried out under contracts with business, economic indicators best reflect the effectiveness of an individual project and of an SU's performance as a whole for the reporting period;

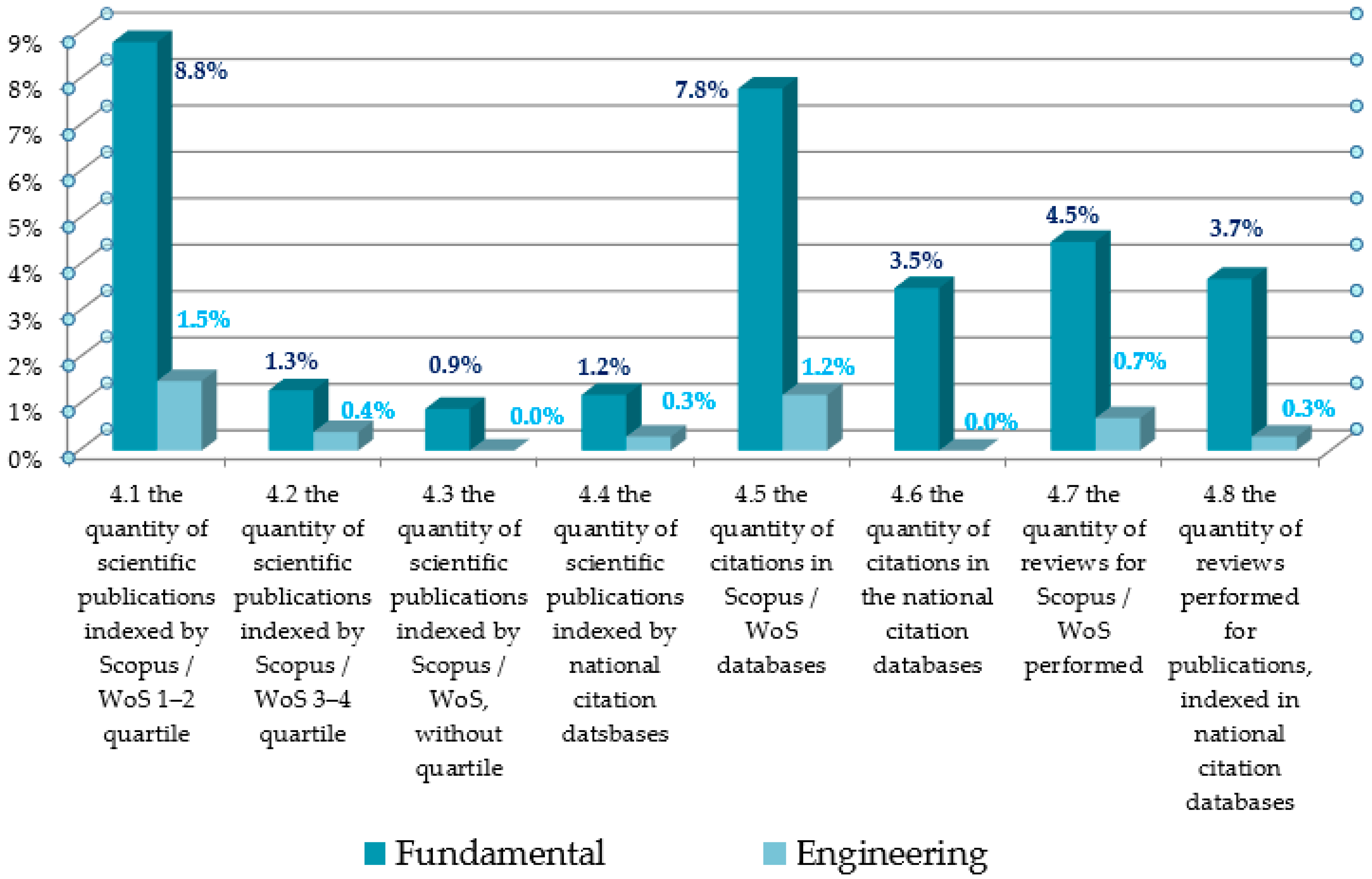

- in contrast, scientometric indicators carry much more weight for fundamental research than for engineering projects (32% versus 4%);

- international cooperation indicators were rated low in both cases, although for fundamental research their weight exceeds 3%, which is quite sufficient;

- the qualitative assessment group carries about three times more weight for fundamental research than for engineering projects (17% versus 5%).
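The group weights listed above can be combined into a single quality score. The following is a minimal sketch of that idea, not the authors' procedure: the function name, the dictionary keys, the example unit, and the 0 to 1 scale for group scores are all assumptions made for illustration. The text gives no weight for international cooperation in engineering projects, so the value used here is a placeholder, and the weights are renormalized because the rounded percentages do not sum to exactly 100.

```python
# Approximate indicator-group weights (%) taken from the survey results above.
# ASSUMPTION: the text gives no figure for international cooperation in
# engineering projects (only "low"); 2 is a placeholder, not a reported value.
GROUP_WEIGHTS = {
    "fundamental": {
        "basic": 11, "student": 8, "economic": 30,
        "scientometric": 32, "international": 3, "qualitative": 17,
    },
    "engineering": {
        "basic": 11, "student": 13, "economic": 65,
        "scientometric": 4, "international": 2, "qualitative": 5,
    },
}


def composite_score(group_scores, research_type):
    """Weighted mean of per-group scores (each assumed to lie in [0, 1]).

    Weights are divided by their sum because the rounded percentages
    do not add up to exactly 100.
    """
    weights = GROUP_WEIGHTS[research_type]
    total = sum(weights.values())
    return sum(w * group_scores[g] for g, w in weights.items()) / total


# Hypothetical unit: strong economic results, weak publication record.
unit = {
    "basic": 0.5, "student": 0.5, "economic": 0.9,
    "scientometric": 0.2, "international": 0.1, "qualitative": 0.5,
}
for kind in GROUP_WEIGHTS:
    print(kind, round(composite_score(unit, kind), 3))
```

With these illustrative inputs, the same unit scores higher when evaluated as an engineering project than as fundamental research, because economic indicators dominate the engineering weights while scientometric indicators dominate the fundamental ones.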

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Survey Form for Indicators for Assessing the Quality of the Scientific Research

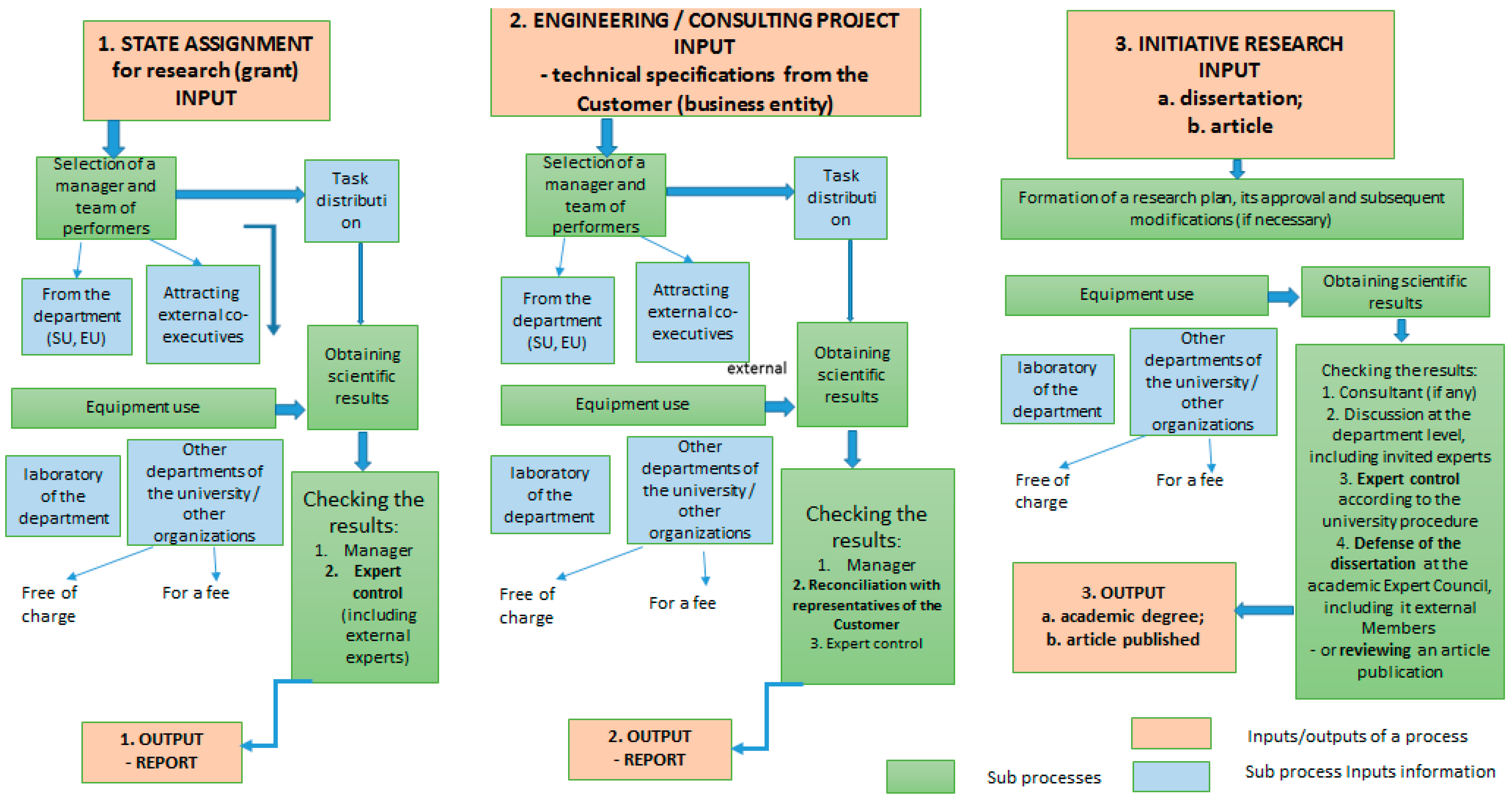

Appendix B. University Research Processes


Share of the total volume, %, by year:

| Country | 2011 | 2015 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | Average |
|---|---|---|---|---|---|---|---|---|---|
| USA | 3.4% | 3.4% | 3.4% | 3.2% | 3.2% | 3.0% | 3.0% | 3.0% | 3.2% |
| China | 7.1% | 8.0% | 8.0% | 8.2% | 8.5% | 8.5% | 8.5% | 8.5% | 8.2% |
| Japan | 5.9% | 5.4% | 5.1% | 5.2% | 5.2% | 5.2% | 5.2% | 5.2% | 5.3% |
| Russia | 8.0% | 9.0% | 9.5% | 10.7% | 10.1% | 10.2% | 11.0% | 11.0% | 9.9% |
| Turkey | 20.4% | 19.2% | 18.9% | 18.4% | 18.6% | 16.4% | 15.7% | 15.5% | 17.9% |
| Serbia | 25.1% | 24.0% | 25.3% | 25.4% | 44.7% | 45.9% | 41.9% | 43.0% | 34.4% |
| Spain | 4.1% | 4.3% | 4.4% | 4.2% | 3.9% | 4.0% | 4.0% | 4.0% | 4.1% |
| France | 1.0% | 2.8% | 3.1% | 2.9% | 3.0% | 3.0% | 3.0% | 3.0% | 2.7% |
| EU | 0.8% | 0.8% | 1.2% | 1.2% | 1.2% | 1.2% | 1.2% | 1.2% | 1.1% |
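The Average column of the table above can be checked against the yearly values. The sketch below assumes that the average is the unweighted mean of the eight listed years, rounded to one decimal place; the function name and the tolerance are illustrative choices, and the numbers are copied from the table.

```python
from statistics import mean

# Yearly shares (%) for 2011, 2015, 2018-2023 and the reported average,
# copied from the table above.
SHARES = {
    "USA": ([3.4, 3.4, 3.4, 3.2, 3.2, 3.0, 3.0, 3.0], 3.2),
    "China": ([7.1, 8.0, 8.0, 8.2, 8.5, 8.5, 8.5, 8.5], 8.2),
    "Japan": ([5.9, 5.4, 5.1, 5.2, 5.2, 5.2, 5.2, 5.2], 5.3),
    "Russia": ([8.0, 9.0, 9.5, 10.7, 10.1, 10.2, 11.0, 11.0], 9.9),
    "Turkey": ([20.4, 19.2, 18.9, 18.4, 18.6, 16.4, 15.7, 15.5], 17.9),
    "Serbia": ([25.1, 24.0, 25.3, 25.4, 44.7, 45.9, 41.9, 43.0], 34.4),
    "Spain": ([4.1, 4.3, 4.4, 4.2, 3.9, 4.0, 4.0, 4.0], 4.1),
    "France": ([1.0, 2.8, 3.1, 2.9, 3.0, 3.0, 3.0, 3.0], 2.7),
    "EU": ([0.8, 0.8, 1.2, 1.2, 1.2, 1.2, 1.2, 1.2], 1.1),
}


def check_averages(table, tol=0.05):
    """Return {country: recomputed mean} for rows whose reported average
    differs from the unweighted mean by more than tol (rounding slack)."""
    mismatches = {}
    for country, (values, reported) in table.items():
        recomputed = mean(values)
        if abs(recomputed - reported) > tol + 1e-9:
            mismatches[country] = round(recomputed, 2)
    return mismatches


print(check_averages(SHARES))
```

An empty result means every reported average agrees with the unweighted mean of its row to within rounding.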

| Indicators for Assessing the Quality of Project Results and the Performances of Specialized SUs | Significance of Indicators, % | |

|---|---|---|

| Fundamental | Engineering | |

| 1 | 2 | 3 |

| 1. Basic scientific performance indicators: | ||

| 1.1. the number of patents registered | ||

| 1.2. the number of original computer programs registered | ||

| 1.3. the number of defended dissertations (master/science candidates) by employees of SUs | ||

| 1.4. the number of defended dissertations (Ph.D./doctoral) by employees of SUs | ||

| 2. Student cooperation indicators: (the statistics of the students attracted to the project teams/the work of the SUs during the reporting period—the number of persons and percentages of staff and of the total working time) | ||

| 2.1. students | ||

| 2.2. postgraduate students | ||

| 2.3. young specialists (25–35 years) | ||

| 2.4. foreign students and postgraduates | ||

| 3. Quantitative economic indicators: | ||

| 3.1. total number of researchers involved in the project | ||

| 3.2. working time of researchers, hours | ||

| 3.3. working time of researchers, costs (if available) | ||

| 3.4. constantly used spaces of laboratories, m² | ||

| 3.5. constantly used office spaces, m² | ||

| 3.6. costs for maintaining laboratory and office spaces | ||

| 3.7. residual value of the laboratory equipment used, which belongs to SUs/STUs | ||

| 3.8. cost of specially purchased equipment for the project | ||

| 3.9. laboratory equipment use of other departments (SUs) and organizations (costs and hours) | ||

| 3.10. costs of materials used for laboratory experiments | ||

| 3.11. other costs | ||

| 3.12. net profit or pure income (proceeds minus all the costs and taxes) | ||

| 3.13. proceeds per researcher on a project or in a reporting period | ||

| 3.14. net profit per researcher on a project or in a reporting period | ||

| 4. Quantitative scientometric indicators: | ||

| 4.1. the quantity of scientific publications indexed by Scopus/WoS 1–2 quartile | ||

| 4.2. the quantity of scientific publications indexed by Scopus/WoS 3–4 quartile | ||

| 4.3. the quantity of scientific publications indexed by Scopus/WoS, without quartile | ||

| 4.4. the quantity of scientific publications indexed by national citation databases (for example, the Russian Science Citation Index, RSCI) | ||

| 4.5. the quantity of citations in Scopus/WoS databases * | ||

| 4.6. the quantity of citations in the national citation databases * | ||

| 4.7. the quantity of reviews for Scopus/WoS performed | ||

| 4.8. the quantity of reviews performed for publications, indexed in national citation databases | ||

| 5. International cooperation indicators: | ||

| 5.1. foreign researchers attracted to the project teams/the work of SUs during the reporting period (the number of persons and percentages of staff and of working hours) | ||

| 5.2. researchers of SUs attracted to work with foreign partners during the reporting period (the number of persons and percentages of staff and of working hours) | ||

| 6. Qualitative assessment (comprehensive multifactorial assessment) | ||

| 6.1. possibilities for integration with the results of previous and related studies | ||

| 6.2. maintaining existing achievements, general culture, and expanding the activities of the scientific school | ||

| 6.3. the possibility for testing/the partial implementation of the results in practice in different industries—“knowledge transfer”—on a test or stream basis | ||

| 6.4. the possibility for publishing results with inclusion in regional/national or sectoral research information systems | ||

| 6.5. invitations to SU researchers to become constant members of national and international scientific associations | ||

| 6.6. invitations to SU researchers to participate in national academic councils which are awarding the scientific degrees | ||

| 6.7. other direct and indirect positive impacts in various areas | ||

| TOTAL | 100.0% | 100.0% |

| Characteristic | Mining University | St. Petersburg State University | SPb Polytechnical University | ITMO University | LETI University |

|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 |

| Total number of researchers (employees of SUs/STUs) | 180 | 230 | 250 | 200 | 50 |

| Total number of researchers who took part in the survey (246), of whom: | 103 | 59 | 29 | 27 | 18 |

| SU leaders | 5 | 4 | 1 | 1 | 1 |

| Middle managers and specialists | 79 | 40 | 19 | 15 | 10 |

| Post-graduate students | 12 | 7 | 5 | 5 | 3 |

| Students | 7 | 8 | 4 | 6 | 4 |

| Age, years: | | | | | |

| 20–25 | 20 | 19 | 9 | 11 | 7 |

| 25–35 | 31 | 12 | 6 | 3 | 3 |

| 35–55 | 40 | 18 | 11 | 9 | 3 |

| >55 | 12 | 10 | 3 | 4 | 5 |

| Problem | Possible Solution | |

|---|---|---|

| 1. The insufficient involvement of students, postgraduates, and young specialists in research, which complicates the transfer of innovations in the long term and threatens the sustainable development of both the university and its macroenvironment (region, industry, and country) [27,28,34]. | The creation of conditions for the development of university science by the state: the construction of laboratory premises, acquisition of equipment, and engineering school support [36,37,39]. Attracting students to research via the entrepreneurial activities of the university [34,35]. | |

| 2. The risk of unjustified investment in university research: “the system for identifying promising developments at universities is retroactive, which leads to a low potential for their commercialization... and to unjustified investments.” [42]; “Falsification of research at technical universities can not only deprive the university of the trust of sponsoring companies but also leads to emergency situations when trying to implement it” [43]; publication of results in “predatory” journals is a research management risk [44,45]. | The correct defining of a task, drawing up detailed technical specifications, and bearing responsibility for the results of research [46]; implementing the terms from international quality standards of the ISO 9000 series and their analogs for science products in research contracts and technical specifications: “product”—“scientific result” and “requirement”—“scientific criteria” and “quality”—“the degree of scientific validity of a research result” [47,48]. | |

| 3. The separation of the functions of research contracting and contract execution: “the creation of scientific products and their successful sale as products or services on the market are different types of activities that require separate management and organizational efforts and structures” [47,49]. | Attracting managers from international companies in university science contract and sales divisions [5,50,51] and the implementation of support schemes and promotional programs for key specialists, who can present, sell, and execute research as incentives [52]. | |

| 4. The incomplete reflection of the specialists’ competencies: shortcomings in realizing the potential of temporary and constant scientific teams (SUs, engineering centers, etc.) in patents and grant activities [53,54,55]. | Involving researchers, lecturers, and students in the work of “entrepreneurial university” small enterprises and encouraging them to register patents and IT-industry products and to apply for grants [56,57,58]. | |

| 5. Low levels of scientific collaborations and communications between researchers within and between universities and production companies: insufficient levels of trust and cooperation for joint scientific research between university units [59,60]; the absence or shortcomings of academic research communication and management systems (RCMSs), like European “EuroCRIS”, complicates the exchange of experience within and between universities and production companies and research result implementation [61,62,63]. | Stimulating scientific collaboration within and between universities and production companies by organizing inter- and trans-disciplinary research [64,65,66]; organizing internships for employees of universities and production companies [67,68,69]; the creation of personalized algorithms and systems of research communication and management with high-tech partner companies of universities [70,71]; introducing an internet-of-things (IoT)-based machine-learning approach [72]. | |

| 6. The weak involvement of lecturers in scientific activities: “lecturers (teachers) are, for the most part, interested in educational activities, and conducting scientific research is perceived as something forced” [73]; current real-world problems and scenarios are not sufficiently incorporated into educational practice [74]. | Shifting the focus to the formation of “interdisciplinary competencies” and problem-solving skills of lecturers, which allows them to carry out desk research on their own and to involve talented students in scientific work [75,76,77]. | |

| 7. Limitations of scientometric (bibliometric) indicators: quantitative methods of the integer counting of publications for assessing the effectiveness of academic research are not sufficiently objective, and they need additional qualitative diversification [78,79,80]. | The use of the “fractional counting” of scientific publications to increase the objectivity of scientific result evaluation [81], taking into account the societal impact, research topic, and other qualitative factors while ranking the publication [82,83,84]. | |

| 8. Problems of small (regional) universities in attracting qualified scientific personnel able to “make a significant contribution to … the production of knowledge and its transfer” [85,86]. | Regional universities should focus on the research area most relevant to their territory, with the partial involvement of qualified specialists from leading local production companies as consultants [87,88,89]. |

| Characteristic | Mining University | St. Petersburg State University | SPb Polytechnical University | ITMO University | LETI University |

|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 |

| 1. Number of undergraduate and graduate students, thousands of people | 16.7 | 32.1 | 34 | 14.5 | 9.1 |

| 2. Number of lecturers (employees of education units, teaching staff, and support staff), thousands of people | 2.5 | 3.3 | 2.5 | 1.3 | 1.1 |

| 3. Number of researchers (employees of scientific units), thousands of people | 0.18 | 0.23 | 0.25 | 0.2 | 0.05 |

| 4. Ratio of the number of researchers to the number of lecturers, % | 12% | 7% | 8% | 15% | 5% |

| 5. Annual volume of scientific work performed, millions of rubles | 1500–1950 | 580–650 | 710–790 | 650–780 | 130–170 |

| 6. Share of research ordered by government bodies and organizations with state participation, % of the total contract volume | 20.7% | 69.7% | 59.5% | 48.5% | 78.9% |

| 7. Share of lecturers who published at least one article in Scopus/WoS quartile 1–2 journals in 2021–2023 | 36% | 14% | 29% | 39% | 17% |

| 8. Share of researchers who regularly publish their results in Scopus/WoS quartile 1–2 journals | 53% | 44% | 57% | 64% | 39% |

| 9. Number of patents registered to the university | 187–298 | 55–112 | 312–628 | 215–365 | 89–178 |

| 10. Share of patent authorship attributable to researchers/lecturers | 65/35% | 85/15% | 78/22% | 62/38% | 82/18% |

| 11. Annual volume of scientific work per employee of the SU, thousands of rubles (average estimate) | 9444 | 2652 | 3000 | 3625 | 3000 |

| Characteristic | Mining University | St. Petersburg State University | SPb Polytechnical University | ITMO University | LETI University |

|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 |

| The share of students and postgraduates who study technical specialties | 93% | 44% | 68% | 94% | 78% |

| University type (EE—engineering; C—comprehensive; E—mixed, closer to engineering) | EE | C | E | EE | E |

| Performed by Units (Share of the Total Volume, %) | Mining University | St. Petersburg State University | SPb Polytechnical University | ITMO University | LETI University | Weighted Average * |
|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| 1. Scientific units (SUs/STUs), total | 90.3% | 62.8% | 79.8% | 93.6% | 65.3% | 83.7% |
| Including: | | | | | | |
| (a) fundamental research | 19.8% | 16.8% | 14.6% | 12.6% | 27.8% | 17.3% |
| (b) engineering projects | 70.5% | 46.0% | 65.2% | 81.0% | 37.5% | 66.4% |
| 2. Education units (EUs) | 9.7% | 37.2% | 20.2% | 6.4% | 34.7% | 16.3% |
| Including: | | | | | | |
| (a) fundamental research | 9.1% | 35.0% | 15.3% | 6.0% | 28.0% | 14.4% |
| (b) engineering projects | 0.6% | 2.2% | 4.9% | 0.4% | 6.7% | 1.9% |
| TOTAL | 100% | 100% | 100% | 100% | 100% | 100.0% |
| Including: | | | | | | |
| (a) fundamental research | 28.9% | 51.8% | 29.9% | 18.6% | 55.8% | 31.8% |
| (b) engineering projects | 71.1% | 48.2% | 70.1% | 81.4% | 44.2% | 68.2% |
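
The footnote explaining the Weighted Average column is not part of this section. One plausible reading, offered here only as an assumption, is that each university is weighted by its annual volume of scientific work, reconstructed as the volume per SU employee (row 11 of the university characteristics table) multiplied by the number of researchers (row 3). A minimal Python sketch of that calculation for the scientific-unit rows; the variable and function names are illustrative:

```python
# Order of universities: Mining, St. Petersburg State, SPb Polytechnical, ITMO, LETI.
# ASSUMPTION: weights = volume per SU employee (thousands of rubles) x researchers.
# The source does not state the weighting scheme in this section.
weights = [9444 * 180, 2652 * 230, 3000 * 250, 3625 * 200, 3000 * 50]

# Shares (%) of the total research volume performed by scientific units.
su_shares = {
    "total": [90.3, 62.8, 79.8, 93.6, 65.3],
    "fundamental": [19.8, 16.8, 14.6, 12.6, 27.8],
    "engineering": [70.5, 46.0, 65.2, 81.0, 37.5],
}
# Values reported in the Weighted Average column for the same rows.
reported = {"total": 83.7, "fundamental": 17.3, "engineering": 66.4}


def weighted_average(values, weights):
    """Weighted mean of per-university shares."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


recomputed_su = {row: weighted_average(vals, weights) for row, vals in su_shares.items()}

for row, value in recomputed_su.items():
    print(f"{row}: recomputed {value:.1f}%, reported {reported[row]}%")
```

Under this assumption the recomputed values round to the reported 83.7%, 17.3%, and 66.4%, which supports, but does not prove, the volume-based weighting.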

| Groups of Indicators | Significance for Fundamental Research, % | Significance for Engineering Projects, % |
|---|---|---|
| 1. Basic scientific performance indicators | 10.9% | 11.0% |
| 2. Student cooperation indicators | 7.6% | 13.2% |
| 3. Quantitative economic indicators | 29.8% | 65.4% |
| 4. Quantitative scientometric indicators | 31.7% | 4.4% |
| 5. International cooperation indicators | 3.2% | 1.3% |
| 6. Qualitative assessment (comprehensive multifactorial assessment) | 16.8% | 4.7% |
| TOTAL | 100.0% | 100.0% |
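
One way to see what these significance values imply is to treat them as weights in a composite score. The sketch below is a hypothetical illustration, not the authors' stated procedure: it assumes that each indicator group has already been normalized to a score between 0 and 1, and the group keys, function name, and example scores are invented for the example.

```python
# Group weights (%) taken from the table above.
WEIGHTS = {
    "fundamental": {
        "basic": 10.9, "students": 7.6, "economic": 29.8,
        "scientometric": 31.7, "international": 3.2, "qualitative": 16.8,
    },
    "engineering": {
        "basic": 11.0, "students": 13.2, "economic": 65.4,
        "scientometric": 4.4, "international": 1.3, "qualitative": 4.7,
    },
}


def composite_score(group_scores, research_type):
    """Weighted mean of normalized group scores (each assumed to lie in 0..1)."""
    weights = WEIGHTS[research_type]
    total = sum(weights.values())
    return sum(weights[g] * group_scores[g] for g in weights) / total


# Hypothetical unit: strong publication record, weak economic results.
example_scores = {
    "basic": 0.5, "students": 0.5, "economic": 0.2,
    "scientometric": 0.9, "international": 0.5, "qualitative": 0.5,
}

as_fundamental = composite_score(example_scores, "fundamental")
as_engineering = composite_score(example_scores, "engineering")
print(f"fundamental: {as_fundamental:.3f}, engineering: {as_engineering:.3f}")
```

With these illustrative scores, the same unit rates higher under the fundamental-research weights than under the engineering weights, because scientometric indicators carry 31.7% of the weight in the former and 4.4% in the latter.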

| Indicators for Fundamental Research | % | Indicators for Engineering Projects | % |

|---|---|---|---|

| 1 | 2 | 3 | 4 |

| 4.1. the quantity of scientific publications indexed by Scopus/WoS 1–2 quartile | 8.8% | 1.1. the number of registered patents | 7.8% |

| 4.5. the quantity of citations in Scopus/WoS databases | 7.8% | 3.12. net profit or pure income (proceeds minus all the costs and taxes) | 6.9% |

| 6.1. possibilities for integration with the results of previous and related studies | 5.6% | 3.4. constantly used spaces of laboratories, m² | 6.4% |

| 1.3. the number of defended dissertations (Ph.D.; science candidate) by employees of SUs | 5.3% | 3.2. working time of researchers, hours | 6.1% |

| 4.7. the quantity of reviews for Scopus/WoS performed | 4.5% | 3.3. working time of researchers, costs (if available) | 5.7% |

| 4.8. the quantity of reviews performed for publications, indexed in national citation databases | 3.7% | 3.8. cost of specially purchased equipment for the project | 5.7% |

| Subtotal | 35.7% | Subtotal | 38.6% |

| Hypothesis | Conclusion |

|---|---|

| H1 | Partially proved hypothesis (70%) |

| H2 | Proved hypothesis |

| H3 | Partially proved hypothesis (90%) |

| H4 | Partially proved hypothesis (50%) |

| H5 | Proved hypothesis |

| H6 | Proved hypothesis |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Radushinsky, D.A.; Zamyatin, E.O.; Radushinskaya, A.I.; Sytko, I.I.; Smirnova, E.E. The Performance and Qualitative Evaluation of Scientific Work at Research Universities: A Focus on the Types of University and Research. Sustainability 2024, 16, 8180. https://doi.org/10.3390/su16188180
