1. Introduction

Air quality is not only a public health issue but also an urgent issue for sustainable development. With the rapid development of the global economy, the challenge of air pollution has become increasingly apparent, and it is receiving growing attention. In most cities, monitoring stations at multiple locations report air quality indicators in real time. Typically, the levels of air pollutants are recorded hourly by multiple stations (as in the data used in this article). This means that at every timestamp, one air quality matrix of shape station × feature is collected across all the stations, where the features include NO2, CO, PM2.5, PM10, etc. Based on this air quality data matrix, an air quality index (AQI) can be calculated to inform the public of the current air quality [1]. However, the general public is more interested in forecasts of future air quality than in real-time reporting. Such forecasts not only help people plan their daily activities (such as making travel plans or avoiding routes with poor air quality), but also help them protect their health, for example by wearing masks to reduce exposure to air pollution. They also provide policy implications for the government.

There are a large number of works for air quality prediction in the literature [

2,

3,

4,

5,

6]. Most of them are based on the temporal dependency between future states and historical data, such as time series models [

4,

7,

8] and deep neural networks [

3,

9,

10,

11]. However, there are several limitations to the existing methods. First, many models [

4,

9,

10] take the air quality prediction as a single-pollutant regression problem; for example, focusing on only the particulate matter PM

2.5. To predict the level of another pollutant, e.g., carbon monoxide CO, a different model needs to be trained. Second, to improve the performance for prediction, some methods [

8,

12] choose to incorporate extra knowledge, such as the weather forecasting results [

12] or the traffic data [

8]. In practical applications, it is not convenient to collect additional information and synchronize it with air quality data. When data from multiple stations are available, the geographic correlation between these stations is expected to contribute to air quality prediction [

8,

12]. However, most existing methods can only process data from one station at a time, leaving spatial correlations between multiple stations ignored [

3,

4,

10] or partially considered [

8,

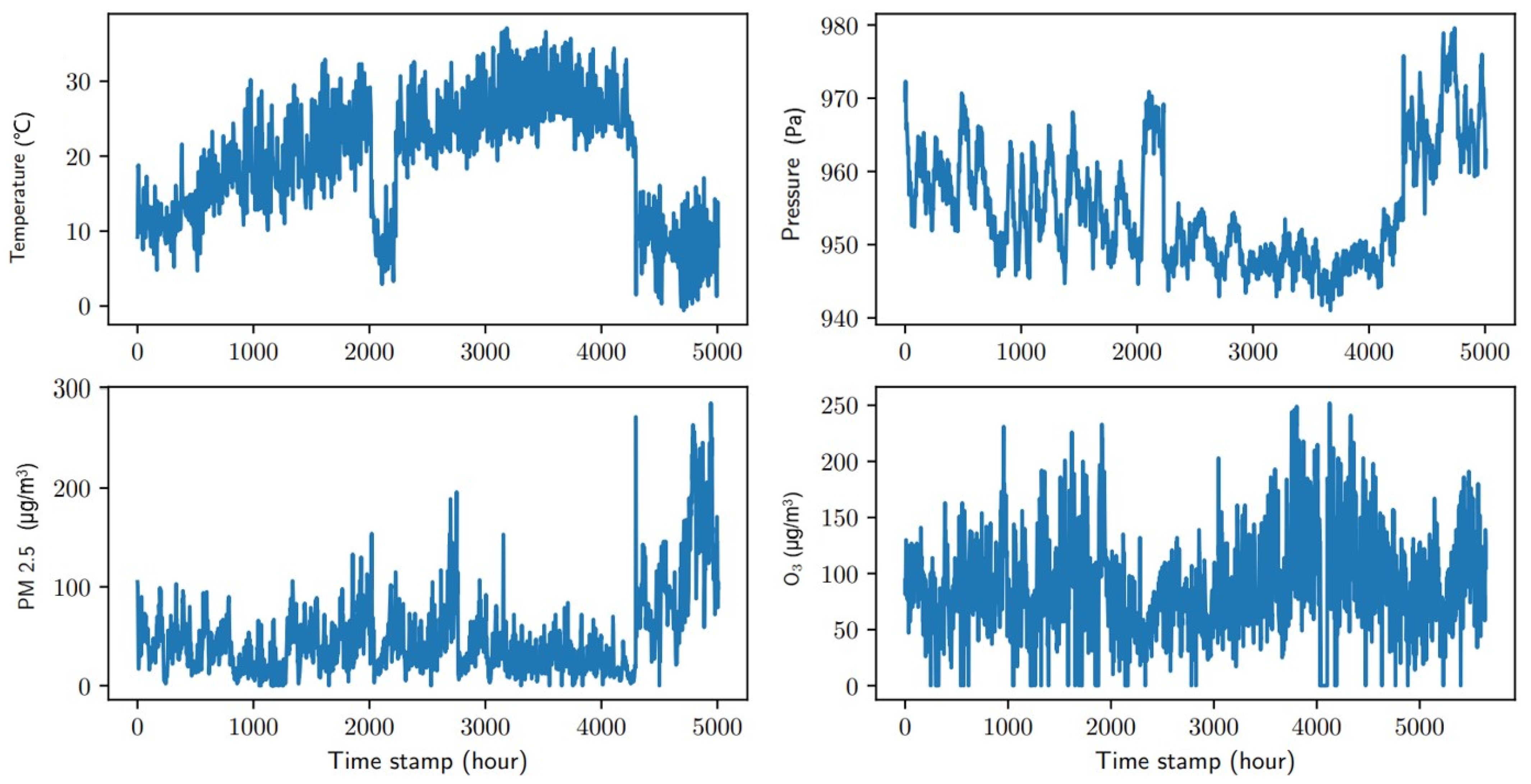

12]. Last but not least, air quality data usually represent a high degree of non-stationarity, as shown in

Figure 1, where the mean value of the data varies over time, making the modeling problem even more difficult. Ignoring the non-stationarity in the data can lead to unacceptable prediction errors and severely weaken the predictive power of the model. Therefore, learning potential spatio-temporal features from non-smooth processes is particularly important for prediction.

We propose to address these problems with a non-stationary diffusion graph convolutional LSTM (long short-term memory) network. Specifically, the spatio-temporal characteristics of air quality data from multiple sites motivate us to use the diffusion convolutional LSTM network [13]. The diffusion convolution [14] captures the spatial dependency using bidirectional random walks on the graph of meteorological monitoring sites, as shown in Figure 2b. In addition, we add a de-trending step to the diffusion convolutional LSTM to accommodate the non-stationarity of the data. This de-trending operation exposes the underlying characteristics of the data more clearly, thus improving the accuracy of the prediction. In time series analysis, the de-trending step is usually implemented via a differencing procedure [15,16]. As a result, the input of the proposed model involves both the original observations and their differenced counterparts. We name the proposed model the long–short de-trending graph convolutional network (LS-deGCN).
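The differencing-based de-trending mentioned above can be illustrated with a minimal sketch. It assumes plain first-order differencing along the time axis (each frame minus the previous one); the function name `difference` and the toy data are hypothetical and are not taken from the authors' implementation.

```python
from typing import List

Frame = List[List[float]]  # one station x feature matrix


def difference(frames: List[Frame]) -> List[Frame]:
    """First-order differencing along time: d_t = x_t - x_{t-1} (assumed form)."""
    diffs = []
    for prev, curr in zip(frames, frames[1:]):
        diffs.append([[c - p for p, c in zip(prev_row, curr_row)]
                      for prev_row, curr_row in zip(prev, curr)])
    return diffs


# Toy series: 2 stations x 1 feature, each with a steady upward trend.
frames = [[[1.0], [10.0]], [[2.0], [12.0]], [[3.0], [14.0]]]
deltas = difference(frames)
print(deltas)  # [[[1.0], [2.0]], [[1.0], [2.0]]]
```

In this toy case the trending levels become constant increments, which is the sense in which differencing removes a slowly varying mean.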

Motivated by the wide application of LSTM in natural language processing (NLP), we propose two variants of the LS-deGCN, as shown in Figure 3d,e. At every timestamp, one station × feature data matrix of size M × N is observed, where M is the number of stations and N is the number of features. Stacking these matrices along time therefore yields a three-dimensional tensor of size M × N × T, where T is the number of timestamps. Given a window length (for example, 3), which is a tuning parameter in our model, we slice the samples along the third dimension T. For ease of exposition, we omit the first two dimensions and index each frame only by its timestamp, so that each sliced sample is a sequence of consecutive frames. We propose two different ways of defining the target, which correspond to the two variants of our model. One is a sequence-to-frame model, in which the target is a single frame, and the other is a sequence-to-sequence model, in which the target is itself a sequence of frames.
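The slicing described above can be sketched as follows. This is only an illustration under stated assumptions: windows are taken with stride 1, the sequence-to-frame target is assumed to be the single frame `lag` steps after the window, and the sequence-to-sequence target is assumed to be the input window shifted forward by `lag` steps. The function `make_samples` and its parameters are hypothetical names.

```python
from typing import List, Sequence, Tuple


def make_samples(frames: Sequence, window: int, lag: int,
                 mode: str) -> List[Tuple[list, object]]:
    """Slice (input, target) pairs along the time axis.

    mode="seq2frame": target is the frame `lag` steps after the window (assumed).
    mode="seq2seq":   target is the window shifted forward by `lag` steps (assumed).
    """
    samples = []
    last_start = len(frames) - window - lag
    for start in range(last_start + 1):
        end = start + window
        inputs = list(frames[start:end])
        if mode == "seq2frame":
            target = frames[end - 1 + lag]
        elif mode == "seq2seq":
            target = list(frames[start + lag:end + lag])
        else:
            raise ValueError("mode must be 'seq2frame' or 'seq2seq'")
        samples.append((inputs, target))
    return samples


# Frames are represented by labels here; in practice each is an M x N matrix.
frames = ["X1", "X2", "X3", "X4", "X5", "X6"]
s2f = make_samples(frames, window=3, lag=1, mode="seq2frame")
s2s = make_samples(frames, window=3, lag=1, mode="seq2seq")
print(s2f[0])  # (['X1', 'X2', 'X3'], 'X4')
print(s2s[0])  # (['X1', 'X2', 'X3'], ['X2', 'X3', 'X4'])
```

Under these assumptions, a small `lag` makes the sequence-to-sequence input and target overlap heavily, while the sequence-to-frame target never overlaps with its input.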

The contributions of this research are threefold:

1. First, we introduce a de-trending operation into the traditional LSTM model to effectively eliminate the long-term trend in non-stationary data. This improvement enables our model to capture the changing patterns in non-stationary data more accurately.

2. Second, we utilize a diffusion graph convolution to extract the spatial correlations present in the air quality data across multiple stations. This not only improves prediction accuracy, but also has important implications for understanding and predicting the spread and impact of air pollutants.

3. Finally, we propose two distinct models based on LS-deGCN for multi-site air quality prediction and evaluate them on air quality data from Chengdu and from seven major cities in China. The experimental results demonstrate that the proposed models significantly outperform existing methods in terms of prediction accuracy and stability.

The rest of the paper is structured as follows. Section 2 presents a brief review of related works and research gaps. In Section 3, we introduce the proposed LS-deGCN and its two variants: the sequence-to-frame model and the sequence-to-sequence model. The experimental results are shown in Section 4. Section 5 concludes this paper with some remarks.

2. Literature Review and Research Gaps

Traditionally, meteorologists have made air quality predictions based on their empirical knowledge of meteorology. With the development of statistics and machine learning, data-driven methods for air quality prediction have become increasingly popular. These can typically be divided into statistical approaches [4,17,18,19,20,21] and deep learning approaches [9,10,12,13,22,23].

Some existing models treat air quality forecasting as a single-pollutant regression problem and thus predict only one pollutant at a time [4,9,24]. As a result, if all of the pollutants are of interest, a separate model must be trained for each one. Zhang et al. [4] conducted a statistical analysis of the PM2.5 data from the city of Beijing for the years 2013–2016 based on a flexible non-stationary hierarchical Bayesian model. Mukhopadhyay and Sahu [20] proposed a Bayesian spatio-temporal model to estimate long-term exposure to air pollution in England. Since air quality records are typically monitored over time, Ghaemi et al. [18] designed a LaSVM-based online algorithm to deal with streaming air quality data. Along another direction, Granger causality has been used to analyze the correlations among air pollution sequences from different monitoring stations [7,8,12]. In this formulation, the current air pollutant record of one station is regressed on its own lagged records and on the lagged values of a factor (such as the geographical correlation) from another station. Each lag k within the time window carries a weight indicating how it affects the future evolution of the station's own sequence, together with a corresponding weight for the sequence from the other station, and a residual term accounts for the remaining variation. If the weights on the station's own history are non-zero while the weights on the other station's sequence vanish, the sequence is caused by its own history. Wang and Song [12] combined Granger causality with deep learning models, and there are also some connections between Granger causality and LSTM [25].
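For reference, a textbook form of the Granger-causal regression that is consistent with the description above can be written as follows. The notation is assumed for illustration and is not necessarily the authors' own: $x_t$ is the pollutant record of one station, $y_t$ is the factor from another station, $K$ is the width of the time window, $a_k$ and $b_k$ are the lag weights, and $\varepsilon_t$ is the residual.

```latex
x_t = \sum_{k=1}^{K} a_k \, x_{t-k} + \sum_{k=1}^{K} b_k \, y_{t-k} + \varepsilon_t
```

Under this form, the sequence $y$ is said not to Granger-cause $x$ when all $b_k$ are zero, so that $x$ is explained by its own history alone.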

Deep learning, and more specifically the recurrent neural network (RNN) and LSTM [25], has achieved great success in NLP [26] and video analysis [27]. The LSTM can capture both long- and short-range contextual dependencies in the data via different types of gates. In the classical LSTM, well-designed gates, for example, the input gate and the forget gate, make the network very powerful at modeling the temporal correlations of sequential data. Thus, many studies have applied LSTM and RNN to air quality prediction.

Guo et al. [28] proposed a multi-variable LSTM based on both temporal- and variable-level attention mechanisms, which was used to predict the PM2.5 level in Beijing. Fan et al. [11] also used the LSTM as a framework to predict air quality in Beijing based on air pollution and meteorological information. Compared with [28], the data used in [11] were collected from multiple stations, but the data from different stations were analyzed separately, ignoring the spatial correlation. In big cities, there is usually more than one station deployed to monitor air pollutants and meteorological information. The correlations among readings from different stations are highly informative for forecasting future air quality; thus, spatial information should be incorporated. Xu et al. [29] proposed a multi-scale three-dimensional tensor decomposition algorithm to deal with the spatio-temporal correlation in climate modeling. In deep learning, the convolutional neural network (CNN) has advantages in extracting spatial features, while the RNN performs well on sequence data. Therefore, combining convolution and RNN is expected to yield more accurate predictions for spatio-temporal data. Huang and Kuo [9] proposed stacking a CNN over an LSTM to predict the level of PM2.5. However, this modification results in a non-time-series model, which may lose power when modeling sequential air quality data.

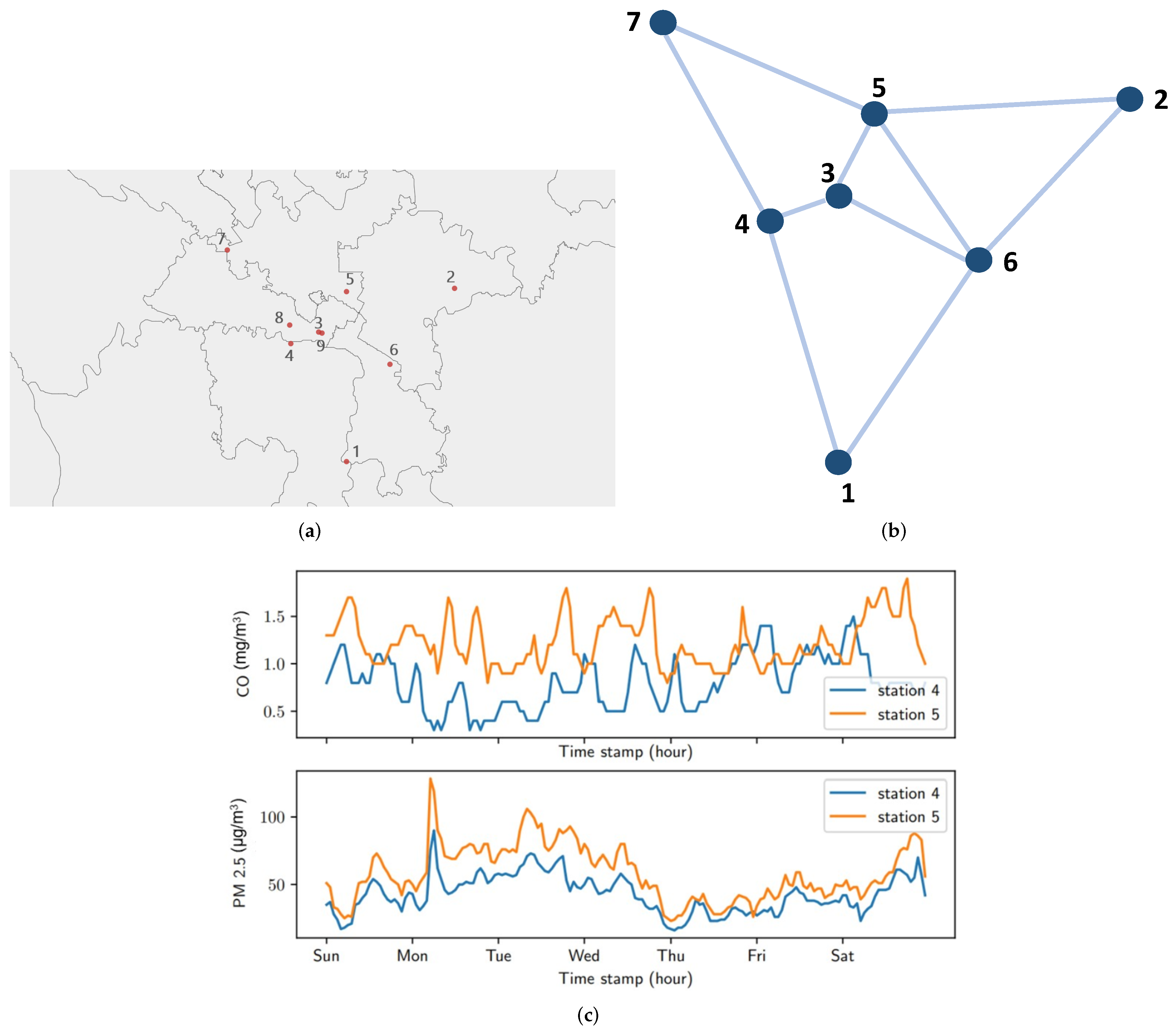

Although the classical RNN and LSTM are powerful at modeling time series data, they may not be suitable for capturing the spatial correlation in air quality data [11]. To be specific, the air quality data are recorded hourly by multiple stations. At each timestamp, the observed data can be represented by a station × feature matrix of size M × N, where M is the number of stations and N is the number of features. The features refer to air pollutants (e.g., CO2, PM2.5) and meteorological parameters (e.g., air pressure, air temperature, and air humidity). As shown in Figure 2, the air quality data of the city of Chengdu involve nine monitoring stations and nine features. The entire dataset can thus be treated as a three-dimensional tensor of size M × N × T, with respect to the three axes station × feature × time. The third dimension T corresponds to the number of timestamps and reflects the sequential nature of the data.

The air quality data in Chengdu have a typical spatio-temporal pattern, and the data from different monitoring stations are strongly spatially correlated. Figure 2a shows the locations of the monitoring stations. Figure 2c shows the weekly readings of two pollutants, namely CO and PM2.5, from Station 4 and Station 5, respectively. In the records of both CO and PM2.5, the levels differ markedly between Station 4 and Station 5, while their patterns are similar. The area where Station 5 is located seems to be more polluted than the area where Station 4 is located, and this information is useful for region-specific government policy making. The similar oscillating patterns in the records of the two stations imply that the rows and columns of the station × feature matrix are correlated, and serial correlation over time can also be observed. However, there seems to be no obvious daily periodicity or “weekends/holidays” effect in the data.

In this paper, we propose to model the air quality data from multiple stations with an LS-deGCN, which replaces the fully connected layer in the classical LSTM with graph diffusion convolution. According to the pairwise geographic distances between stations, we compute the undirected graph G = (V, E, A) with a thresholded Gaussian kernel [30], where V, E, and A denote the vertex (station) set, edge set, and adjacency matrix of the graph, respectively. As shown in Figure 2b, each element of the adjacency matrix A is determined by the distance between the geographical locations of the corresponding pair of nodes. The LS-deGCN architecture is well adapted to spatio-temporal data. As far as we know, LS-deGCN was first proposed to analyze the two-dimensional radar echo map [13]. In addition, our model can accommodate the non-stationarity of the air quality data. Compared with the work of Wilson et al. [31], our method is easier to implement and train. A similar diffusion convolutional recurrent neural network was proposed in [14] to model traffic flow.
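To make the graph construction concrete, the following minimal sketch builds an adjacency matrix from pairwise distances with a thresholded Gaussian kernel and row-normalizes it into a random-walk transition matrix. The exact kernel form, the bandwidth `sigma`, the cutoff `kappa`, the zero diagonal, and the toy distances are all assumptions chosen for illustration; the paper's own parameterization may differ.

```python
import math
from typing import List

Matrix = List[List[float]]


def thresholded_gaussian_adjacency(dist: Matrix, sigma: float,
                                   kappa: float) -> Matrix:
    """A[i][j] = exp(-dist[i][j]^2 / sigma^2) if dist[i][j] <= kappa, else 0.

    The diagonal is left at zero (no self-loops); this is an assumption.
    """
    n = len(dist)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and dist[i][j] <= kappa:
                adj[i][j] = math.exp(-(dist[i][j] ** 2) / (sigma ** 2))
    return adj


def random_walk_transition(adj: Matrix) -> Matrix:
    """Row-normalize A into D^{-1} A, the one-step random-walk transition matrix."""
    out = []
    for row in adj:
        degree = sum(row)
        out.append([w / degree if degree > 0 else 0.0 for w in row])
    return out


# Hypothetical pairwise distances (km) between three stations.
dist = [[0.0, 5.0, 20.0],
        [5.0, 0.0, 8.0],
        [20.0, 8.0, 0.0]]
adj = thresholded_gaussian_adjacency(dist, sigma=10.0, kappa=10.0)
walk = random_walk_transition(adj)
```

Because the distance matrix is symmetric, the resulting graph is undirected; stations farther apart than `kappa` (here stations 1 and 3) are not connected.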

4. Experiments and Results

4.1. Baselines

We choose the following three models as baselines for comparison.

1. Linear regression: This is one of the most commonly used approaches to modeling the relationship between a dependent variable y and a set of covariates.

2. Support vector regression: Equipped with a radial basis kernel, it extends linear regression by controlling how much error in the regression is acceptable.

3. LSTM sequence-to-scalar (seq2scalar): Samples under this model are constructed in the same way as those under the seq2seq model. The difference is that the target y is one of the nine measurements at a time; that is, we need to train nine separate models for the nine measurements.

It is worth noting that neither linear regression nor support vector regression can capture spatial correlations with other stations. This means that each station needs to be predicted separately with these methods. In addition, all three baseline methods are single-pollutant regression procedures; that is, a separate model must be trained for each pollutant. Taking linear regression as an example, to compare with the proposed model that predicts the whole station × feature map, we need to train nine separate linear regression models corresponding to the six air pollutants (NO2, CO, SO2, O3, PM2.5, and PM10) and the three meteorological measurements.

We use the root mean squared error (RMSE), accuracy, and mean absolute error (MAE) as assessment criteria for prediction [9]. Each criterion compares the ground truth with the predicted value over the n samples of the testing dataset.
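As an illustration, RMSE and MAE can be computed from their standard definitions as in the sketch below. The accuracy criterion is omitted because its definition cannot be recovered from the text here; the function names and the toy values are hypothetical.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error: sqrt of the mean squared difference."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: mean of the absolute differences."""
    n = len(y_true)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n


# Toy ground truth and predictions for n = 4 test samples.
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 7.0]
rmse_value = rmse(y_true, y_pred)  # sqrt((1 + 0 + 4 + 0) / 4) = sqrt(1.25)
mae_value = mae(y_true, y_pred)    # (1 + 0 + 2 + 0) / 4 = 0.75
```

RMSE penalizes the single large error more heavily than MAE does, which is one reason the two criteria need not rank models identically.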

4.2. Data Description and Preprocessing

The Chengdu dataset is composed of air quality records from 1 January 2013 to 31 December 2016, collected at nine monitoring stations in the city of Chengdu. Since stations 3 and 9 were very close to each other and their records were the same, we removed the redundancy by dropping the data from station 9. Moreover, we deleted the data from station 8 because over 40% of the readings for CO, SO2, and O3 were missing, mainly between June 2014 and January 2016, due to sensor malfunction. As a result, we only used data from seven stations, with 35,064 instances for each station. Each air quality instance consists of the concentrations of six air pollutants (NO2, CO, SO2, O3, PM2.5, and PM10) and three meteorological measurements (air pressure, air temperature, and air humidity). Therefore, the observed data form a tensor of size M × N × T, where M = 7, N = 9, and T = 35,064.

Since the missing values in the data mostly occur as short gaps between two observed points rather than over long stretches, we use linear interpolation along the time domain to fill in the missing values for each column. For example, we can interpolate a missing observation from the values of its nearest observed neighbors in time. The specific steps are as follows [32]: (1) Calculate the slope m of the line between these two points. (2) Use the line equation y = mx + b, where m is the slope, to obtain the y-intercept b. (3) Substitute the time index of the missing observation into the formula to obtain an estimated value for the missing value. After interpolation, we normalize all the data into the range [0, 1] using min–max normalization; that is, each value is shifted by the minimum and divided by the difference between the maximum and the minimum.
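The interpolation steps and the normalization above can be sketched as follows for a single column (one feature at one station). Missing readings are represented by `None`; the function names are hypothetical, and leading or trailing gaps are simply left unfilled in this sketch, which is an assumption rather than the authors' stated handling.

```python
from typing import List, Optional


def interpolate_gaps(series: List[Optional[float]]) -> List[Optional[float]]:
    """Fill interior runs of None using the line through the neighboring readings."""
    filled = list(series)
    known = [i for i, v in enumerate(series) if v is not None]
    for left, right in zip(known, known[1:]):
        if right - left > 1:
            slope = (series[right] - series[left]) / (right - left)  # step (1)
            intercept = series[left] - slope * left                  # step (2)
            for t in range(left + 1, right):
                filled[t] = slope * t + intercept                    # step (3)
    return filled


def min_max_normalize(values: List[float]) -> List[float]:
    """Scale values into [0, 1]: (v - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]


# Toy hourly column with one single-point gap and one two-point gap.
raw = [2.0, None, 6.0, None, None, 12.0]
filled = interpolate_gaps(raw)
scaled = min_max_normalize(filled)
print(filled)  # [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
```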

The training and testing samples for the baselines are generated in the same way as those for the seq2frame model. The difference lies in how the target is extracted. In the seq2frame architecture, the network receives one frame (matrix) as the target, while the targets of the baseline models are all scalars. Instead of using the whole frame as the target, we take a statistic (e.g., the mean) of the measurements of one pollutant within that frame as the target. Taking linear regression as an example, we model air quality prediction as a one-pollutant linear regression problem. Suppose that we fit a linear regression model to predict the PM2.5 level; then we can use the mean or the median of the PM2.5 level within that frame as the target.
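The scalar target for a baseline can be sketched as a statistic of one feature column across the stations of a frame. The helper `scalar_target`, the column index used for the pollutant, and the toy frame are hypothetical.

```python
from statistics import mean, median
from typing import Callable, List


def scalar_target(frame: List[List[float]], feature_index: int,
                  statistic: Callable = mean) -> float:
    """Summarize one feature column of a station x feature frame into a scalar."""
    column = [row[feature_index] for row in frame]
    return statistic(column)


# Toy frame: 3 stations x 2 features; column 0 plays the role of one pollutant.
frame = [[1.0, 0.5],
         [2.0, 0.7],
         [6.0, 0.6]]
target_mean = scalar_target(frame, feature_index=0)            # 3.0
target_median = scalar_target(frame, feature_index=0,
                              statistic=median)                # 2.0
```

The mean and the median can differ noticeably when one station reports an unusually high level, as in this toy frame.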

4.3. Training

After data normalization, we extracted the training and testing samples from the dataset. We set the window width to 24 h or 48 h to strike a balance between the length of contextual information and the complexity of the LSTM model. As a result, every sample is a consecutive record of air quality measurements for one day or two days. We partitioned the data into training, testing, and validation sets with a ratio similar to that in [33], as shown in Table 1. We constructed a two-layer LS-deGCN by stacking two one-layer LS-deGCNs.

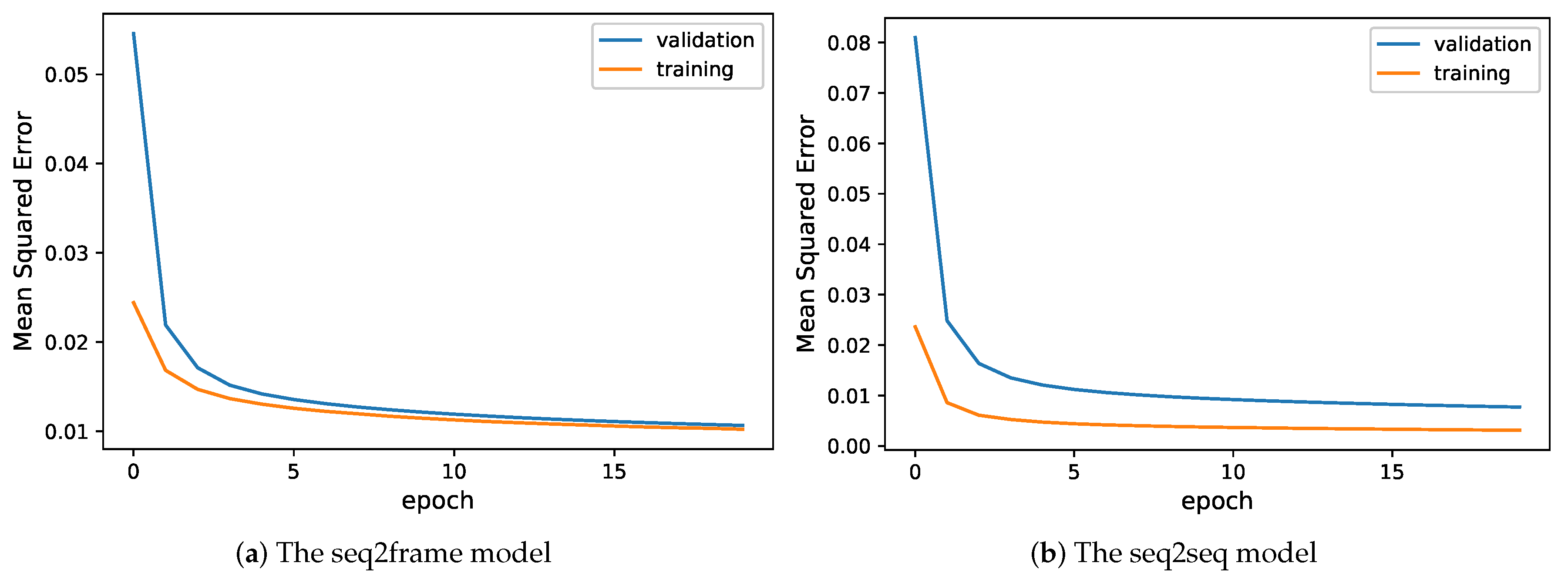

Figure 4a,b reports the training and validation errors under the seq2frame and seq2seq models, respectively. Both models converged within 10 epochs, but their convergence patterns differ. Under the seq2frame model, both the training error and the validation error drop sharply within the first three epochs, and then the gap between them gradually decreases. By contrast, the gap between the training error and the validation error under the seq2seq model remains stable even after 15 epochs. The different error patterns may be due to the different ways of extracting the target variable under the two models, as shown in Figure 3d,e.

4.4. Visualization of Predictions

To visualize part of the predicted results, Figure 5 shows four station × feature air quality maps randomly selected from the testing dataset, as well as the corresponding station × feature frames predicted by the seq2seq model for one setting of the window width and the time lag l. In each cell of the station × feature matrix, a darker blue represents a larger value and a lighter blue indicates a smaller value.

The three rightmost columns (corresponding to the three meteorological measurements: air pressure, air temperature, and air humidity) of the ground-truth map are almost identical across the seven stations. In contrast, the levels of air pollutants recorded by different stations are quite different. For example, the levels of O3 (the third column) reported at different locations show very different patterns, and in the third frame, stations 2 and 5 reported much higher values than the other stations. The proposed model predicts O3 accurately for each station. We also observe that the values of air pressure in the third frame are much lower than those in the other three frames, and that the levels of suspended particulate matter, PM10 and PM2.5, in the last frame are severe, with the PM10 levels from stations 1 and 6 being the two highest. These trends are all correctly predicted by our proposed seq2seq model. From the visualization results, we conclude that (1) the patterns of the nine air quality measurements differ from one another and (2) the proposed model can make accurate predictions based on all nine measurements from the seven stations.

To examine the proposed air quality model across different cities, we conducted another experiment with seven stations from seven major cities in China: Beijing, Shanghai, Chengdu, Wuhan, Guangzhou, Xi’an, and Nanjing. The data were collected daily (instead of hourly) on seven indicators (AQI, PM2.5, PM10, SO2, CO, NO2, and O3) from 2 December 2013 to 29 February 2020 for each city. Figure 6 shows that the performance of air quality prediction for the seven cities is not as good as that for the seven stations within the city of Chengdu. One possible reason is that the spatial correlation among the seven stations in Chengdu is much stronger than that among the seven cities, which are far away from each other. This is consistent with our general understanding and supports the effectiveness of the proposed LS-deGCN in capturing spatial associations.

4.5. Evaluation with Three Metrics

RMSE. As discussed in Section 3.2, the proposed model can predict the future level of air pollution from 1 h to 48 h ahead by varying l from 1 to 48. Moreover, the data used for prediction can be a collection of samples from the last day or the last several days, for which the window width is used to control the length of samples. In other words, we can vary these parameters to obtain different training and testing datasets for different experiments. We explore six experiments by combining window widths of 24 h and 48 h with time lags l of 1 h, 24 h, and 48 h. That is, we utilize the data collected from yesterday and from the last two days to predict the air quality one hour later, one day later, and two days later, respectively.

Table 2 displays the RMSEs on the testing set for these combinations of window width and time lag. The RMSEs of the non-stationary LS-deGCN seq2frame and seq2seq models can be calculated directly based on the station × feature matrix. However, for the other three models, we need to conduct training and testing for each pollutant separately, and then calculate the average RMSE over all the pollutants. Our proposed models demonstrate significant improvements compared with the baseline methods and, in particular, the non-stationary LS-deGCN seq2seq model achieves the best performance under all six scenarios. The LSTM-based methods outperform the traditional linear regression and support vector regression. Moreover, the RMSEs of our models increase as the time lag l increases and decrease as the window width takes a larger value. Intuitively, predicting air quality two days in the future is more challenging than predicting it one hour in the future. However, as the window widens, the prediction accuracy improves, as more historical information can help capture long-term trends in the data.

Accuracy. Table 3 compares the accuracy of air quality prediction among the five methods for the same six combinations of window width and time lag. The proposed non-stationary LS-deGCN seq2frame and seq2seq models yield much higher accuracy than the others, and the non-stationary LS-deGCN seq2seq model achieves the highest accuracy of all.

MAE. Table 4 shows the MAE of all the methods for the six scenarios. In those experiments, the two proposed models, the non-stationary LS-deGCN seq2frame and seq2seq models, report much lower MAE than the others, and the non-stationary LS-deGCN seq2seq model outperforms all of its competitors.

For all three metrics, we also observe that the scenarios using the two-day window outperform those using the one-day window, especially for the LSTM-based methods (i.e., LSTM seq2scalar, non-stationary LS-deGCN seq2frame and seq2seq). This is expected, as predicting air quality from the historical data of the last two days is a better strategy than utilizing only the data of yesterday. Apart from that, the models that take spatial dependence into account (non-stationary LS-deGCN seq2frame and seq2seq) perform better than the models that do not (linear regression, support vector regression, and LSTM seq2scalar), which demonstrates the necessity and effectiveness of considering spatial correlation.

For the RMSE and MAE results in Table 2 and Table 4, we observe that the performance continues to deteriorate as the value of l increases. However, for the accuracy results in Table 3, the performance of our models does not decrease monotonically with l. Instead, the accuracy peaks at the intermediate time lag and deteriorates for both the smallest and the largest values of l. This indicates that the three evaluation metrics do not exactly agree with each other. As discussed earlier, the larger the time lag l, the more difficult it is to make a prediction. However, if l is too small, the overlap between the input and the target under the non-stationary LS-deGCN seq2seq model becomes large. The model may then fail to fully capture the dynamic changes in the data, resulting in overly simple weight updates. At the same time, the error information may be concentrated on recent data, making the gradient updates too drastic, which affects the model’s prediction performance.