A Prediction Model for Remote Lab Courses Designed upon the Principles of Education for Sustainable Development

Abstract

1. Introduction

1.1. Remote Labs’ Features

- Various technologies can be employed to implement a remote lab tailored to students’ needs.

- It is easy for students to perform assessment practices and experiments.

- Remote labs are suitable for industrial applications owing to their remote monitoring potential [12].

- Physical presence is needed to foster collaboration between students and teachers, as well as among students.

- IT administrators are needed to solve technical problems and to support students during the experimental process.

- Some practical skills and hands-on dexterities cannot be easily developed through simulations and online remote experiments.

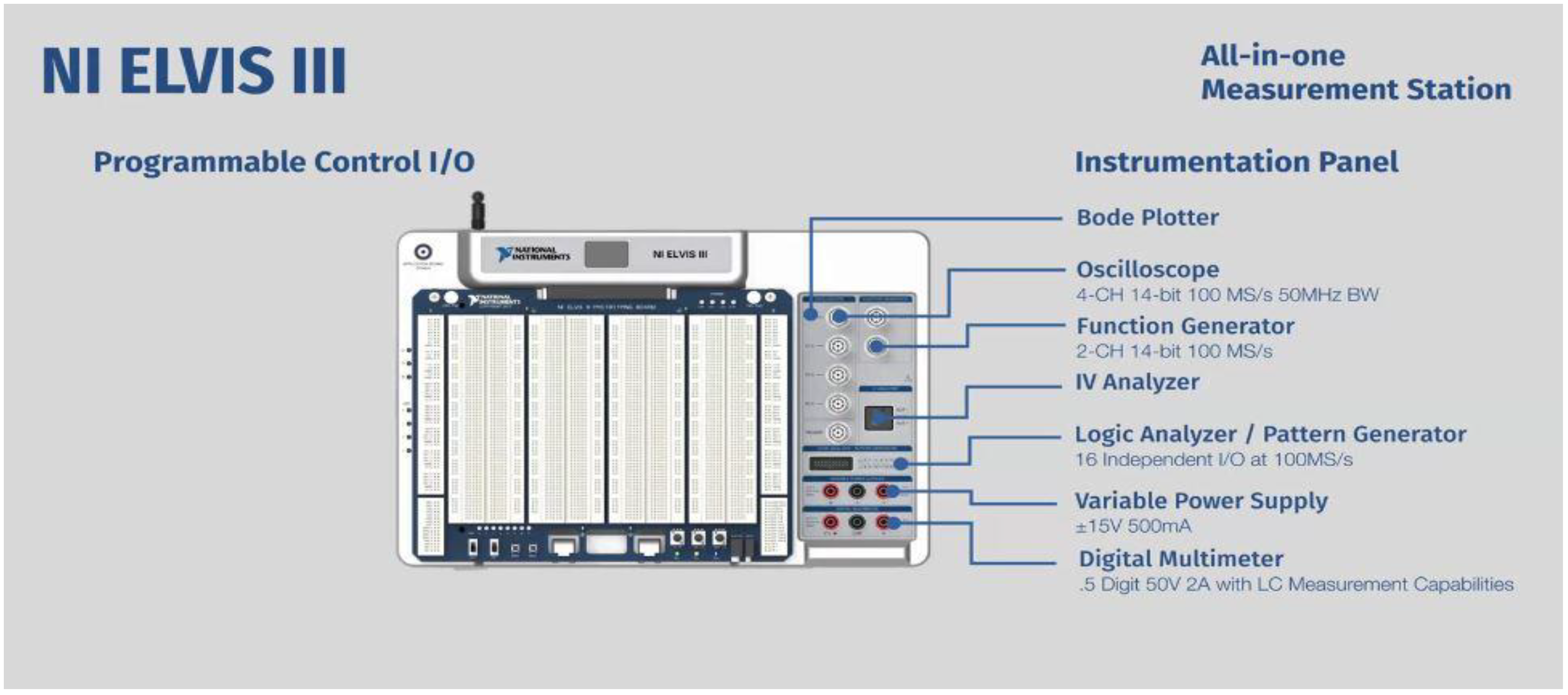

1.2. NI-ELVIS Remote Labs

- Multimedia, web-based operation.

- Interactive labs that complement theory and emphasize projects.

- Real-time experiments performed on real hardware.

- Hardware sharing at the course level.

- Programmability (Python and C).

- Helps students develop innovative qualities through real experiments.

- Aids students in assimilating knowledge through appropriate resources.

- Stimulates students’ engagement by offering a web-driven environment that increases their desire to get involved in the learning process.

1.3. Metrics for Assessing Remote Labs

1.4. Predicting Students at Risk in Remote Labs

1.5. Education for Sustainable Development

- Poverty eradication;

- Water and sanitation;

- Energy;

- Transportation;

- Sustainable cities and human settlements;

- Health and population;

- Employment;

- Oceans and seas;

- Forests/desertification/biodiversity;

- Sustainable consumption and production [34].

1.6. Designing Remote Labs upon Sustainability Principles

1.7. Our Research Objective

2. Materials and Methods

2.1. Procedure

- Collecting all students’ engagement data from the NI-ELVIS LMS.

- Analyzing these data with binary logistic regression to develop a risk model for students at risk (non-achievers).

- Applying discriminant function analysis, based on the binary logistic regression outcome, to predict students at risk (non-achievers).
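The logistic-regression step can be illustrated with a minimal, dependency-free sketch. Everything below is hypothetical: the two features (scaled counts of theoretical resources viewed and theoretical exercises completed), the toy data, the learning rate, and the iteration count are invented for illustration, and batch gradient descent on the mean log-loss stands in for whatever maximum-likelihood routine the authors’ statistical software used, which this section does not specify.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic(X, y, lr=0.1, iters=20000):
    """Fit a binary logistic regression by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(iters):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # derivative of the log-loss w.r.t. the linear score
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_risk(w, b, x, threshold=0.5):
    """Return 1 (at risk) if the predicted probability reaches the threshold, else 0."""
    p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
    return 1 if p >= threshold else 0

# Hypothetical toy data: (resources_viewed, exercises_completed), scaled; 1 = at risk.
X = [(0.0, 0.0), (0.5, 1.0), (1.0, 0.5), (1.0, 1.0),
     (3.0, 3.0), (3.5, 2.5), (2.5, 3.5), (4.0, 4.0)]
y = [1, 1, 1, 1, 0, 0, 0, 0]

w, b = fit_logistic(X, y)
print("weights:", w, "intercept:", b)
print("new student at risk?", predict_risk(w, b, (0.8, 0.6)))
```

With this toy data the fitted weights come out negative, mirroring the sign pattern of the two significant coefficients in the risk model: less engagement raises the predicted probability of being at risk.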

2.2. Our Remote Lab

2.3. Applying Our Method

- To develop a risk model through which risk factors can be identified.

- To provide a classification table for at-risk students and those not at risk.

- To form the basis on which a prediction model can be generated.

3. Results

3.1. Risk Model Characteristics

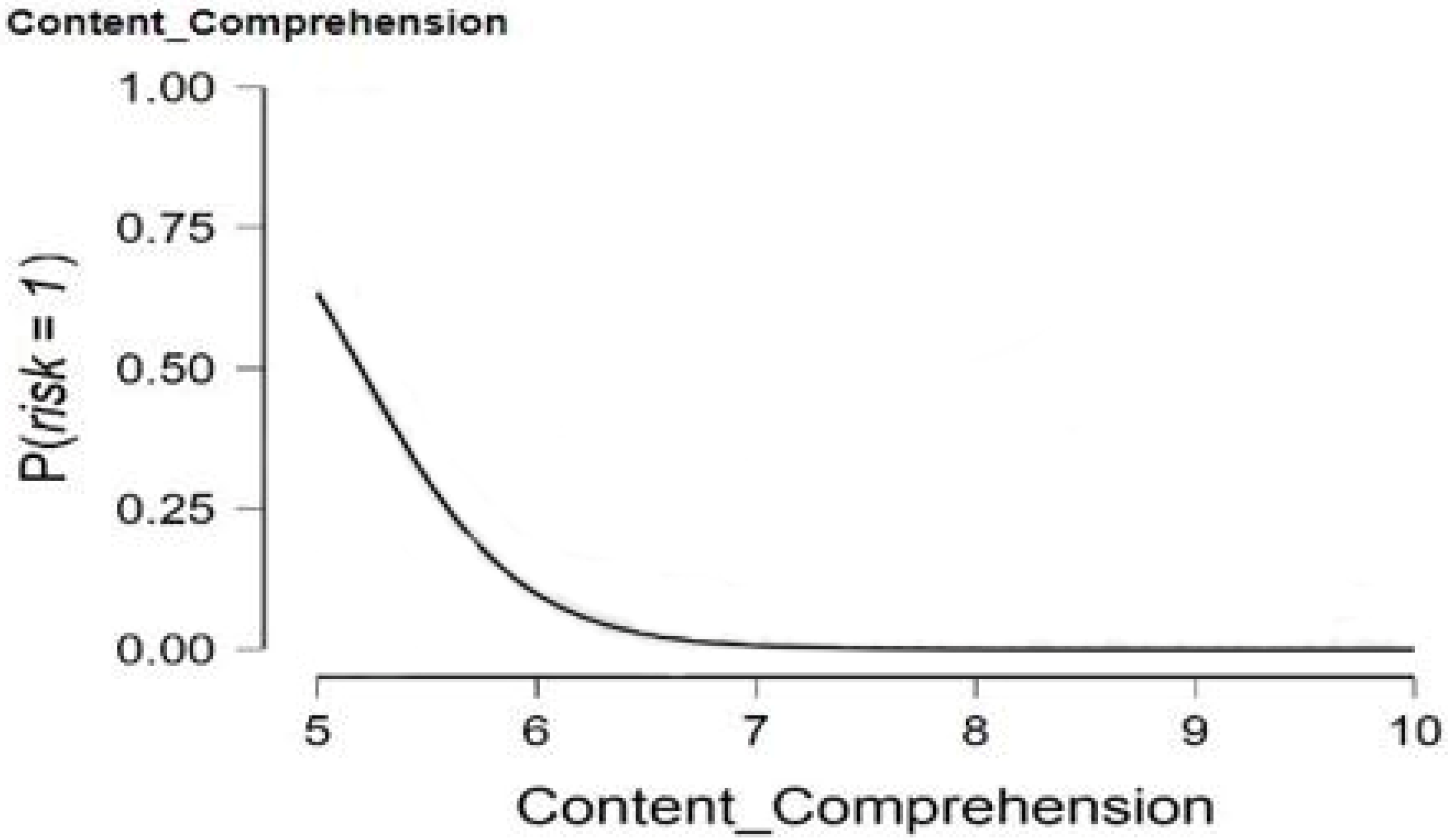

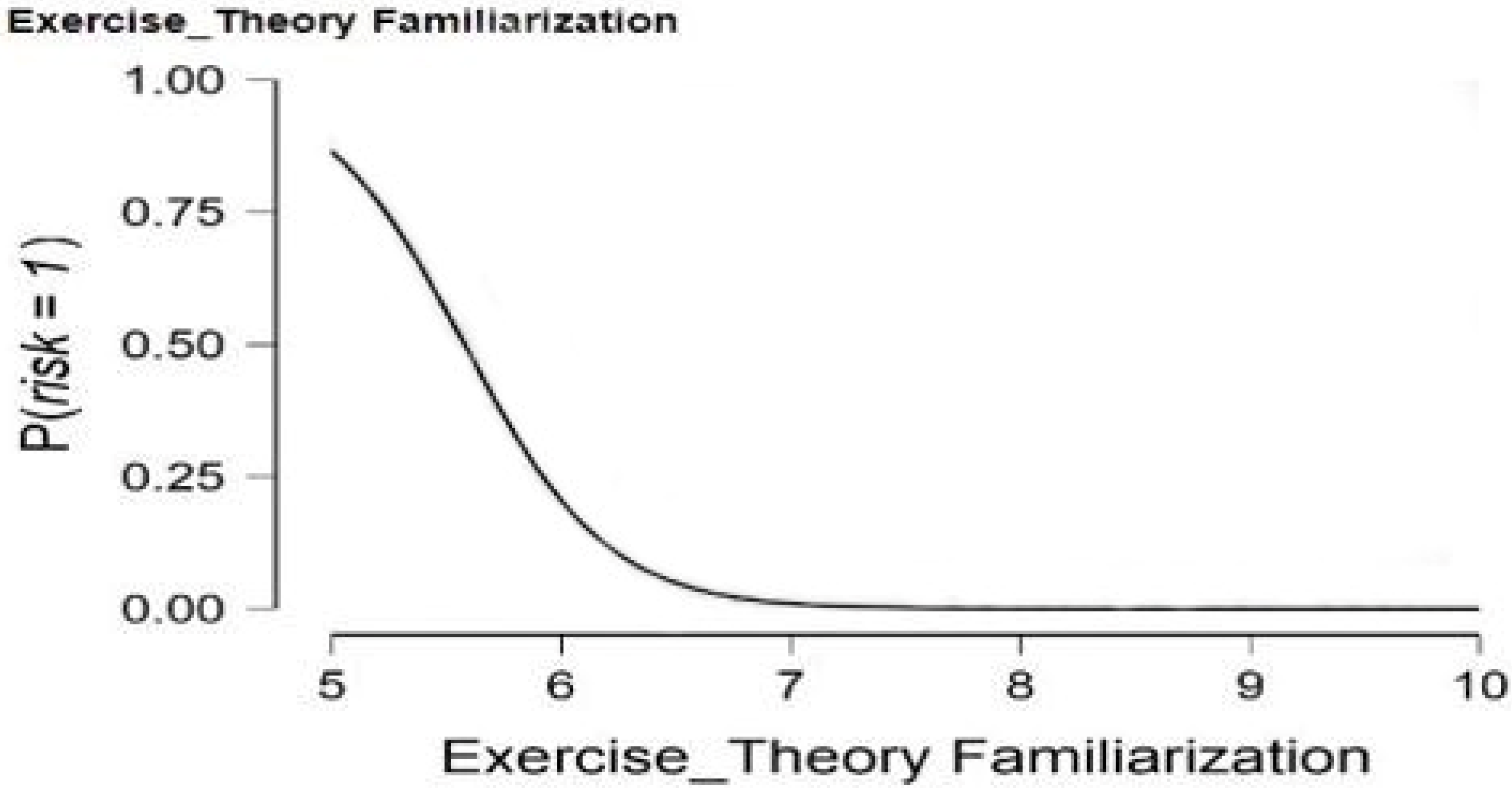

3.2. Risk Factors

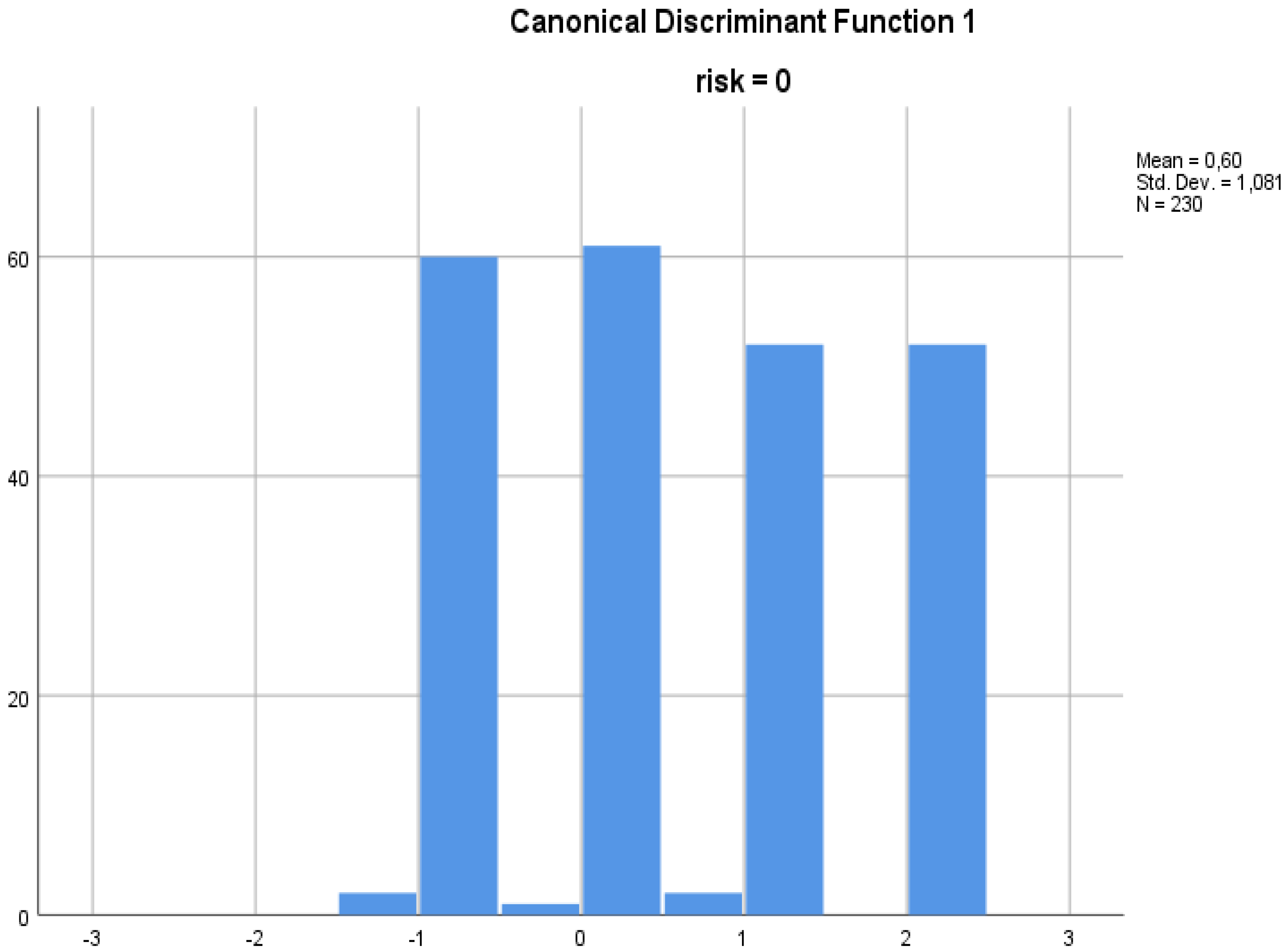

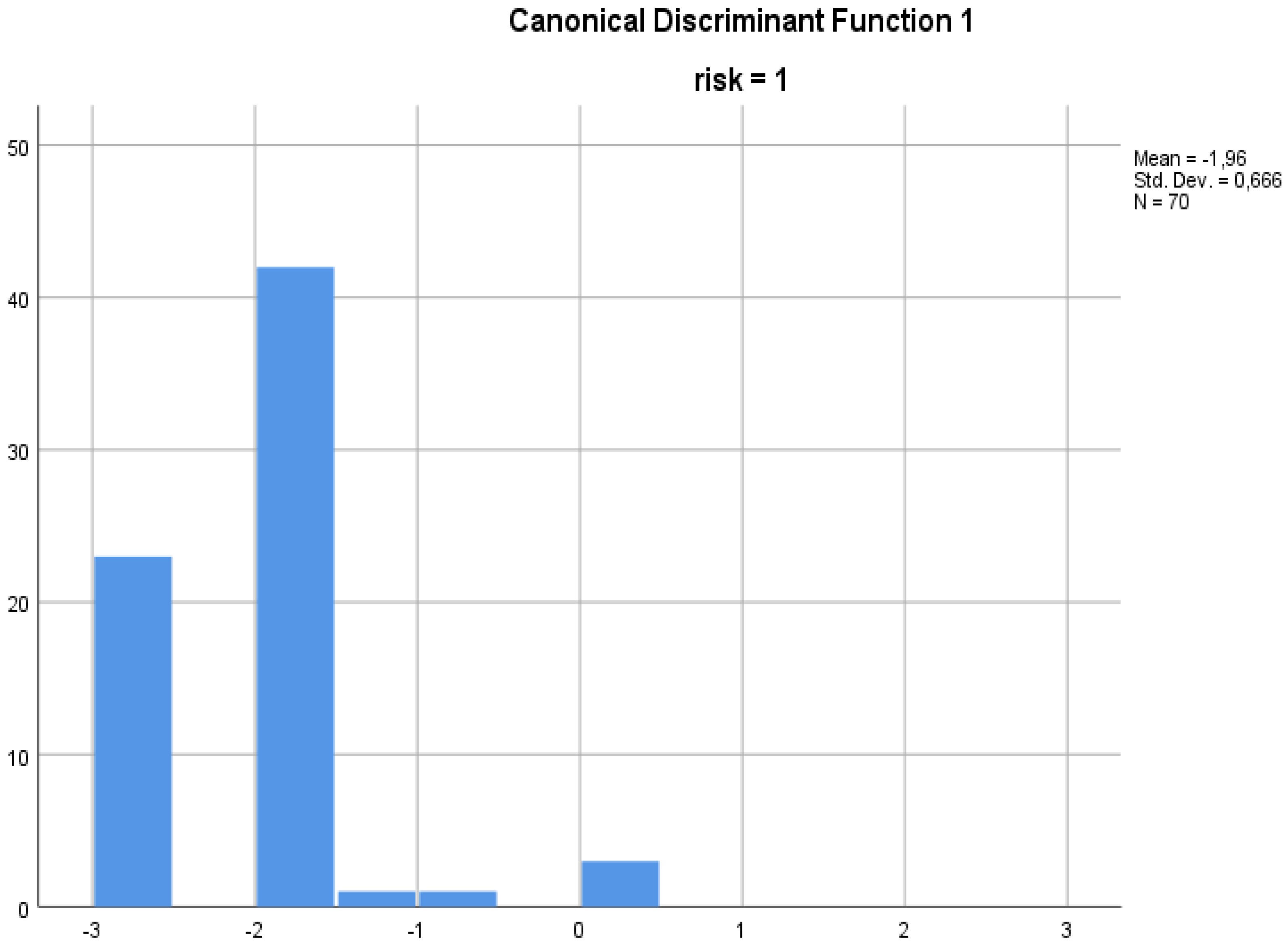

3.3. Prediction Model

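Fisher’s linear discriminant (classification) functions, where y1 corresponds to the at-risk group (Risk = 1) and y2 to the not-at-risk group (Risk = 0); a student is assigned to the group whose function yields the higher score: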

y1 = 1.788 × Content_Comprehension + 3.654 × Exercise_Theory Familiarization − 17.198

y2 = 3.325 × Content_Comprehension + 4.592 × Exercise_Theory Familiarization − 33.560
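Applying the prediction model amounts to evaluating both functions and picking the larger score. The sketch below hard-codes the reported coefficients; the Python parameter names and the input values are hypothetical, since the measurement scales of Content_Comprehension and Exercise_Theory Familiarization are not given in this section.

```python
# Reported classification function coefficients:
# Risk = 1 (at risk) -> y1, Risk = 0 (not at risk) -> y2.
COEFFICIENTS = {
    1: {"content_comprehension": 1.788, "exercise_theory": 3.654, "constant": -17.198},
    0: {"content_comprehension": 3.325, "exercise_theory": 4.592, "constant": -33.560},
}

def classification_scores(content_comprehension: float, exercise_theory: float) -> dict:
    """Evaluate Fisher's linear classification function for each group."""
    return {
        group: c["content_comprehension"] * content_comprehension
        + c["exercise_theory"] * exercise_theory
        + c["constant"]
        for group, c in COEFFICIENTS.items()
    }

def classify(content_comprehension: float, exercise_theory: float) -> int:
    """Assign the group (1 = at risk, 0 = not at risk) with the larger score."""
    scores = classification_scores(content_comprehension, exercise_theory)
    return max(scores, key=scores.get)

def boundary_margin(content_comprehension: float, exercise_theory: float) -> float:
    """y1 - y2: a positive value means the student is classified as at risk."""
    s = classification_scores(content_comprehension, exercise_theory)
    return s[1] - s[0]

# Hypothetical predictor values, for illustration only.
print(classify(2.0, 2.0))  # prints 1
print(classify(8.0, 8.0))  # prints 0
```

Subtracting the two functions gives the decision boundary directly: y1 − y2 = 16.362 − 1.537 × Content_Comprehension − 0.938 × Exercise_Theory Familiarization, so a student is classified as at risk whenever 1.537 × Content_Comprehension + 0.938 × Exercise_Theory Familiarization < 16.362.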

4. Discussion and Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Williamson, B.; Eynon, R.; Potter, J. Pandemic politics, pedagogies and practices: Digital technologies and distance education during the coronavirus emergency. Learn. Media Technol. 2020, 45, 107–114.

- Anderson, J. The Coronavirus Pandemic is Reshaping Education. Quartz Daily Brief. 2020. Available online: https://qz.com/1826369/how-coronavirus-is-changing-education/ (accessed on 1 January 2022).

- Zimmerman, J. Coronavirus and the great online-learning experiment. Chron. High. Educ. 2020, 10.

- Gerhátová, Ž. Experiments on the Internet-removing barriers facing students with special needs. Procedia-Soc. Behav. Sci. 2014, 114, 360–364.

- Schauer, F.; Ožvoldová, M.; Lustig, F. Integrated e-Learning—New Strategy of Cognition of Real World in Teaching Physics. In INNOVATIONS 2009: World Innovations in Engineering Education and Research; iNEER: Arlington, VA, USA, 2009; ISBN 978-0-9741252-9-9.

- Gerhátová, Ž; Perichta, P.; Drienovský, M.; Palcut, M. Temperature Measurement—Inquiry-Based Learning Activities for Third Graders. Educ. Sci. 2021, 11, 506.

- Grieves, M. Digital twin: Manufacturing excellence through virtual factory replication. White Pap. 2014, 1, 1–7.

- Chen, X.; Song, G.; Zhang, Y. Virtual and remote laboratory development: A review. In Earth and Space 2010: Engineering, Science, Construction, and Operations in Challenging Environments; ASCE: Reston, VA, USA, 2010; pp. 3843–3852.

- Song, G.; Olmi, C.; Bannerot, R. Enhancing vibration and controls teaching with remote laboratory experiments. In Proceedings of the 2007 Annual Conference & Exposition, Honolulu, HI, USA, 24–27 June 2007; pp. 12–677.

- Chang, T.; Jaroonsiriphan, P.; Sun, X. Integrating Nanotechnology into Undergraduate Experience: A Web-based Approach. Int. J. Eng. Educ. 2002, 18, 557–565.

- Chen, X.; Jiang, L.; Shahryar, D.; Kehinde, L.; Olowokere, D. Technologies for the Development Of Virtual And Remote Laboratories: A Case Study. In Proceedings of the 2009 Annual Conference & Exposition, Austin, TX, USA, 14–17 June 2009; pp. 14–1168.

- Heradio, R.; De La Torre, L.; Galan, D.; Cabrerizo, F.J.; Herrera-Viedma, E.; Dormido, S. Virtual and remote labs in education: A bibliometric analysis. Comput. Educ. 2016, 98, 14–38.

- Mokhtar, A.; Mikhail, G.; Joo, C. A survey on Remote Laboratories for E-Learning and Remote Experimentation. Contemp. Eng. Sci. 2014, 7, 1617–1624.

- Riman, C. A Remote Robotic Laboratory Experiment Platform with Error Management. Int. J. Comput. Sci. Issues 2011, 8, 242.

- Faulconer, E.K.; Gruss, A.B. A review to weigh the pros and cons of online, remote, and distance science laboratory experiences. Int. Rev. Res. Open Distrib. Learn. 2018, 19, 3386.

- Yoo, S.; Kim, S.; Choudhary, A.; Roy, O.P.; Tuithung, T. Two-phase malicious web page detection scheme using misuse and anomaly detection. Int. J. Reliab. Inf. Assur. 2014, 2, 1–9.

- NI-Elvis Product Flyer. Available online: https://www.ni.com/pdf/product-flyers/ni-elvis.pdf (accessed on 10 December 2022).

- Singh, S.; Singh, S.; Kumat, N. A Comparative Study on Different Applications of National Instrument ELVIS LabVIEW in automation. In Proceedings of the ISTE Trends & Innovations in Management Engineering, Education & Sciences (TIMEES), Ropar, India, 4–5 November 2016.

- Ertugrul, N. Towards virtual laboratories: A survey of LabVIEW-based teaching/learning tools and future trends. Int. J. Eng. Educ. 2000, 16, 171–180.

- Zacharia, Z.C. Comparing and combining real and virtual experimentation: An effort to enhance students’ conceptual understanding of electric circuits. J. Comput. Assist. Learn. 2007, 23, 120–132.

- Feisel, L.D.; Rosa, A.J. The role of the laboratory in undergraduate engineering education. J. Eng. Educ. 2005, 94, 121–130.

- Nickerson, J.V.; Corter, J.E.; Esche, S.K.; Chassapis, C. A model for evaluating the effectiveness of remote engineering laboratories and simulations in education. Comput. Educ. 2007, 49, 708–725.

- Lowe, D.; Yeung, H.; Tawfik, M.; Sancristobal, E.; Castro, M.; Orduña, P.; Richter, T. Interoperating remote laboratory management systems (RLMSs) for more efficient sharing of laboratory resources. Comput. Stand. Interfaces 2016, 43, 21–29.

- Toderick, L.; Mohammed, T.; Tabrizi, M.H. A reservation and equipment management system for secure hands-on remote labs for information technology students. In Proceedings of the Frontiers in Education 35th Annual Conference, Indianapolis, IN, USA, 19–22 October 2005; p. S3F.

- Macfadyen, L.P.; Dawson, S. Mining LMS data to develop an “early warning system” for educators: A proof of concept. Comput. Educ. 2010, 54, 588–599.

- Georgakopoulos, I.; Kytagias, C.; Psaromiligkos, Y.; Voudouri, A. Identifying risks factors of students’ failure in e-learning systems: Towards a warning system. Int. J. Decis. Support Syst. 2018, 3, 190–206.

- Georgakopoulos, I.; Chalikias, M.; Zakopoulos, V.; Kossieri, E. Identifying factors of students’ failure in blended courses by analyzing students’ engagement data. Educ. Sci. 2020, 10, 242.

- Tsakirtzis, S.; Georgakopoulos, I. Developing a Risk Model to identify factors that critically affect Secondary School students’ performance in Mathematics. J. Math. Educ. Teach. Pract. 2020, 1, 63–72.

- Lytras, D.M.; Serban, C.A.; Torres-Ruiz, M.J.; Ntanos, S.; Sarirete, A. Translating knowledge into innovation capability: An exploratory study investigating the perceptions on distance learning in higher education during the COVID-19 pandemic—The case of Mexico. J. Innov. Knowl. 2022, 7, 100258.

- Anagnostopoulos, T.; Kytagias, C.; Xanthopoulos, T.; Georgakopoulos, I.; Salmon, I.; Psaromiligkos, Y. Intelligent predictive analytics for identifying students at risk of failure in Moodle courses. In Intelligent Tutoring Systems, Proceedings of the 16th International Conference, ITS 2020, Athens, Greece, 8–12 June 2020; Springer: Cham, Switzerland, 2020; pp. 152–162.

- Georgakopoulos, I.; Piromalis, D.; Makrygiannis, P.S.; Zakopoulos, V.; Drosos, C. A Robust Risk Model to Identify Factors that Affect Students’ Critical Achievement in Remote Lab Courses. Int. J. Econ. Bus. Adm. 2022, 10, 3–22.

- UNESCO. Education for Sustainable Development Goals and Learning Objectives. 2017. Available online: https://millenniumedu.org/wp-content/uploads/2017/08/en-unescolearningobjectives (accessed on 28 January 2023).

- McClanahan, L.G. Essential Elements of Sustainability Education. J. Sustain. Educ. 2014, 6, 3582.

- UNESCO. Global Action Programme; UNESCO: Paris, France, 2013.

- Tetiana, H.; Malolitneva, V. Conceptual and legal framework for promotion of education for sustainable development: Case study for Ukraine. Eur. J. Sustain. Dev. 2020, 9, 42–54.

- Ourbak, T.; Magnan, A.K. The Paris Agreement and climate change negotiations: Small Islands, big players. Reg. Environ. Chang. 2018, 18, 2201–2207.

- Burns, W. Loss and damage and the 21st Conference of the Parties to the United Nations Framework Convention on Climate Change. ILSA J. Int’l Comp. L. 2015, 22, 415.

- Zangerolame Taroco, L.S.; Sabbá Colares, A.C. The UN Framework Convention on Climate Change and the Paris Agreement: Challenges of the Conference of the Parties. Prolegómenos 2019, 22, 125–135.

- Bell, S.; Douce, C.; Caeiro, S.; Teixeira, A.; Martín-Aranda, R.; Otto, D. Sustainability and distance learning: A diverse European experience? Open Learn. J. Open Distance E-Learn. 2017, 32, 95–102.

- Arora, N.K.; Mishra, I. United Nations Sustainable Development Goals 2030 and environmental sustainability: Race against time. Environ. Sustain. 2019, 2, 339–342.

- Kara, A.; Ozbek, M.E.; Cagiltay, N.E.; Aydin, E. Maintenance, sustainability, and extendibility in virtual and remote Laboratories. Procedia Soc. Behav. Sci. 2011, 28, 722–728.

- Gkika, E.C.; Anagnostopoulos, T.; Ntanos, S.; Kyriakopoulos, G.L. User Preferences on Cloud Computing and Open Innovation: A Case Study for University Employees in Greece. J. Open Innov. Technol. Mark. Complex. 2020, 6, 41.

- Goller, A.; Rieckmann, M. What do We Know About Teacher Educators’ Perceptions of Education for Sustainable Development? A Systematic Literature Review. J. Teach. Educ. Sustain. 2022, 24, 19–34.

- Kyriakopoulos, G.; Ntanos, S.; Asonitou, S. Investigating the environmental behavior of business and accounting university students. Int. J. Sustain. High. Educ. 2020, 21, 819–839.

- Santoveña-Casal, S.; Pérez, M.D.F. Sustainable Distance Education: Comparison of Digital Pedagogical Models. Sustainability 2020, 12, 9067.

- Dias, B.G.; Onevetch, R.T.d.S.; dos Santos, J.A.R.; Lopes, G.d.C. Competences for Sustainable Development Goals: The Challenge in Business Administration Education. J. Teach. Educ. Sustain. 2022, 24, 73–86.

- Gustavsson, I.; Nilsson, K.; Zackrisson, J.; Garcia-Zubia, J.; Hernandez-Jayo, U.; Nafalski, A.; Nedic, Z.; Gol, O.; Machotka, J.; Pettersson, M.I.; et al. On objectives of instructional laboratories, individual assessment, and use of collaborative remote laboratories. IEEE Trans. Learn. Technol. 2009, 2, 263–274.

- Orduña, P.; Garcia-Zubia, J.; Rodriguez-Gil, L.; Angulo, I.; Hernandez-Jayo, U.; Dziabenko, O.; López-de-Ipiña, D. The weblab-deusto remote laboratory management system architecture: Achieving scalability, interoperability, and federation of remote experimentation. In Cyber-Physical Laboratories in Engineering and Science Education; Springer: Cham, Switzerland, 2018; pp. 17–42.

- Tabunshchyk, G.; Kapliienko, T.; Arras, P. Sustainability of the remote laboratories based on systems with limited resources. In Smart Industry & Smart Education, Proceedings of the 15th International Conference on Remote Engineering and Virtual Instrumentation, Bangalore, India, 3–6 February 2019; Springer: Cham, Switzerland, 2019; pp. 197–206.

- Felgueiras, C.; Costa, R.; Alves, G.R.; Viegas, C.; Fidalgo, A.; Marques, M.A.; Lima, N.; Castro, M.; García-Zubía, J.; Pester, A.; et al. A sustainable approach to laboratory experimentation. In Proceedings of the Seventh International Conference on Technological Ecosystems for Enhancing Multiculturality, León, Spain, 16–18 October 2019; pp. 500–506.

- Vose, D. Risk Analysis: A Quantitative Guide; John Wiley & Sons: Hoboken, NJ, USA, 2008.

- Allison, P.D. Measures of fit for logistic regression. In Proceedings of the SAS Global Forum 2014 Conference, Washington, DC, USA, 23–26 March 2014; SAS Institute Inc.: Cary, NC, USA, 2014; pp. 1–13.

- Smith, T.J.; McKenna, C.M. A comparison of logistic regression pseudo R2 indices. Mult. Linear Regres. Viewp. 2013, 39, 17–26.

- Bach, F.R.; Jordan, M.I. A Probabilistic Interpretation of Canonical Correlation Analysis; University of California: Berkeley, CA, USA, 2005.

- Büyüköztürk, Ş.; Çokluk-Bökeoğlu, Ö. Discriminant function analysis: Concept and application. Eurasian J. Educ. Res. 2008, 33, 73–92.

- Brown, M.T.; Wicker, L.R. Discriminant analysis. In Handbook of Applied Multivariate Statistics and Mathematical Modeling; Academic Press: Cambridge, MA, USA, 2000; pp. 209–235.

| Competence | Constitutive Definition |
|---|---|
| Systemic thinking competence | Ability to recognize and understand relationships; analyze complex systems; consider how systems are incorporated within different domains and scales; and deal with uncertainty. |
| Anticipatory competence | Ability to understand and evaluate multiple futures (possible, probable, and desirable); create one’s own visions for the future; apply the precautionary principle; assess the consequences of actions; and deal with risks and changes. |
| Normative competence | Ability to understand and reflect on the norms and values that underlie people’s actions; and negotiate sustainability values, principles, objectives, and goals in a context of conflicts of interest and concessions, uncertain knowledge, and contradictions. |
| Strategic competence | Ability to collectively develop and implement innovative actions that promote sustainability at the local level and in a broader context. |
| Collaboration competence | Ability to learn from others; understand and respect other people’s needs, perspectives, and actions (empathy); understand, relate, and be sensitive to others (empathic leadership); deal with conflicts in a group; and facilitate collaboration and participation in problem-solving. |
| Critical thinking competence | Ability to question norms, practices, and opinions; reflect on one’s values, perceptions, and actions; and take a stand in sustainability discourse. |
| Self-knowledge competence | Ability to reflect on one’s role in the local community and (global) society; continuously evaluate and further motivate one’s actions; and deal with one’s feelings and desires. |
| Integrated problem-solving competence | Ability to apply different problem-solving frameworks to complex sustainability problems and develop viable, inclusive, and equitable solution options that promote sustainable development by integrating the competences mentioned above. |

| Engagement variable |
|---|
| Total number of theoretical material resources viewed |
| Total number of theoretical exercises completed |
| Total number of experiments completed |
| Grades on theoretical exercises |
| Grades on experiments |
| Grades on the large-scale exercise (LabVIEW exercise) |
| Time spent on experiments’ completion |
| Time spent on theoretical exercises’ completion |
| Time spent on the large-scale exercise’s completion |

| Pseudo-R² measure | Value |
|---|---|
| Nagelkerke R² | 0.905 |
| Cox and Snell R² | 0.600 |
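The two pseudo-R² values are linked: Nagelkerke’s R² divides Cox and Snell’s R² by its maximum attainable value, 1 − L0^(2/n), where L0 is the likelihood of the intercept-only model and depends only on the group proportions. Assuming the 300 students in the classification table (70 at risk, 230 not at risk) are the estimation sample, the following sketch checks that the reported values are mutually consistent.

```python
import math

def max_cox_snell(n_positive: int, n_negative: int) -> float:
    """Upper bound of Cox and Snell R^2: 1 - L0^(2/n), with L0 the intercept-only likelihood."""
    n = n_positive + n_negative
    p = n_positive / n
    log_l0 = n_positive * math.log(p) + n_negative * math.log(1.0 - p)
    return 1.0 - math.exp(2.0 * log_l0 / n)

def nagelkerke_from_cox_snell(r2_cs: float, n_positive: int, n_negative: int) -> float:
    """Nagelkerke R^2 rescales Cox and Snell R^2 by its maximum attainable value."""
    return r2_cs / max_cox_snell(n_positive, n_negative)

# Group sizes assumed from the classification table: 70 at risk, 230 not at risk.
print(f"max Cox and Snell R2 = {max_cox_snell(70, 230):.3f}")
print(f"Nagelkerke R2 = {nagelkerke_from_cox_snell(0.600, 70, 230):.3f}")
```

The maximum Cox and Snell R² for this split is about 0.663, and 0.600 / 0.663 ≈ 0.905, matching the reported Nagelkerke R².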

Classification Table

| Observed (Step 1) | Predicted Risk = 0 | Predicted Risk = 1 | Percentage correct |
|---|---|---|---|
| Risk = 0 | 230 | 0 | 100.0 |
| Risk = 1 | 3 | 67 | 95.7 |
| Overall percentage | | | 99.0 |

| Coefficient | B | p-Value |
|---|---|---|
| Total number of theoretical material resources viewed | −2.759 | 0.017 |
| Total number of theoretical exercises completed | −3.224 | 0.037 |
| Grades on theoretical exercises | 0.813 | 0.867 |
| Grades on experiments | 4.149 | 0.828 |
| Grades on the large-scale exercise (LabVIEW exercise) | −0.938 | 0.745 |
| Time spent on experiments’ completion | 23.094 | 0.990 |
| Time spent on theoretical exercises’ completion | −26.679 | 0.989 |
| Time spent on the large-scale exercise’s completion | 25.325 | 0.998 |
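Logistic coefficients are easier to read as odds ratios, exp(B). The sketch below assumes that B models the log-odds of Risk = 1 (at risk); under that assumption an odds ratio below 1 means a one-unit increase in the variable lowers the odds of being at risk. The units or scaling of the variables are not stated in this section, so the magnitudes should be read with care, and the 0.05 threshold is a conventional choice rather than one stated here.

```python
import math

# (B, p-value) pairs as reported in the coefficient table.
LOGIT_COEFFICIENTS = {
    "Total number of theoretical material resources viewed": (-2.759, 0.017),
    "Total number of theoretical exercises completed": (-3.224, 0.037),
    "Grades on theoretical exercises": (0.813, 0.867),
    "Grades on experiments": (4.149, 0.828),
    "Grades on the large-scale exercise (LabVIEW exercise)": (-0.938, 0.745),
    "Time spent on experiments' completion": (23.094, 0.990),
    "Time spent on theoretical exercises' completion": (-26.679, 0.989),
    "Time spent on the large-scale exercise's completion": (25.325, 0.998),
}

def odds_ratio(b: float) -> float:
    """Multiplicative change in the odds of Risk = 1 per one-unit increase."""
    return math.exp(b)

def significant_factors(coefficients: dict, alpha: float = 0.05) -> dict:
    """Keep only variables with p < alpha, mapped to their odds ratios."""
    return {name: odds_ratio(b) for name, (b, p) in coefficients.items() if p < alpha}

for name, ratio in significant_factors(LOGIT_COEFFICIENTS).items():
    print(f"{name}: exp(B) = {ratio:.4f}")
```

Both significant factors have odds ratios well below 1 (about 0.063 and 0.040), consistent with lower engagement being associated with higher risk.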

Eigenvalues

| Function | Eigenvalue | % of Variance | Cumulative % | Canonical Correlation |
|---|---|---|---|---|
| 1 | 1.175 | 100.0 | 100.0 | 0.735 |
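For a single discriminant function, the canonical correlation follows from the eigenvalue λ as √(λ / (1 + λ)), and Wilks’ lambda is 1 / (1 + λ). The sketch reproduces the reported canonical correlation from the reported eigenvalue; the Wilks’ lambda it prints is derived here and is not reported in this section.

```python
import math

def canonical_correlation(eigenvalue: float) -> float:
    """Canonical correlation of a discriminant function: sqrt(lambda / (1 + lambda))."""
    return math.sqrt(eigenvalue / (1.0 + eigenvalue))

def wilks_lambda(eigenvalues) -> float:
    """Wilks' lambda as the product of 1 / (1 + lambda_i) over the discriminant functions."""
    result = 1.0
    for ev in eigenvalues:
        result /= 1.0 + ev
    return result

eigenvalue = 1.175  # the single function reported in the Eigenvalues table
print(f"canonical correlation = {canonical_correlation(eigenvalue):.3f}")
print(f"Wilks' lambda (derived, not reported) = {wilks_lambda([eigenvalue]):.3f}")
```

Here √(1.175 / 2.175) ≈ 0.735, matching the table; the derived Wilks’ lambda is about 0.460.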

Classification Function Coefficients

| Variable | Risk = 0 | Risk = 1 |
|---|---|---|
| Content_Comprehension | 3.325 | 1.788 |
| Exercise_Theory Familiarization | 4.592 | 3.654 |
| (Constant) | −33.560 | −17.198 |

Classification Results

| | Risk | Predicted 0 | Predicted 1 | Total |
|---|---|---|---|---|
| Original, count | 0 | 230 | 0 | 230 |
| Original, count | 1 | 4 | 66 | 70 |
| Original, % | 0 | 100.0 | 0.0 | 100.0 |
| Original, % | 1 | 5.7 | 94.3 | 100.0 |
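The two classification tables can be compared on the same footing by treating Risk = 1 (at risk) as the positive class. The sketch computes overall accuracy, sensitivity (at-risk students correctly flagged) and specificity (students not at risk correctly cleared) from the reported counts; the `Confusion` class is a hypothetical helper, not part of any library.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    """Confusion counts with Risk = 1 (at risk) treated as the positive class."""
    tn: int  # observed 0, predicted 0
    fp: int  # observed 0, predicted 1
    fn: int  # observed 1, predicted 0
    tp: int  # observed 1, predicted 1

    def accuracy(self) -> float:
        return 100.0 * (self.tp + self.tn) / (self.tp + self.tn + self.fp + self.fn)

    def sensitivity(self) -> float:
        # share of at-risk students correctly flagged
        return 100.0 * self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # share of not-at-risk students correctly cleared
        return 100.0 * self.tn / (self.tn + self.fp)

logistic = Confusion(tn=230, fp=0, fn=3, tp=67)      # binary logistic regression table
discriminant = Confusion(tn=230, fp=0, fn=4, tp=66)  # discriminant classification results

for name, cm in [("logistic", logistic), ("discriminant", discriminant)]:
    print(f"{name}: accuracy {cm.accuracy():.1f}%, "
          f"sensitivity {cm.sensitivity():.1f}%, specificity {cm.specificity():.1f}%")
```

The logistic model misclassifies 3 of the 70 at-risk students and the discriminant model 4, giving overall accuracies of 99.0% and about 98.7% respectively (the latter derived from the counts); neither model flags any student who is not at risk.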

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Georgakopoulos, I.; Piromalis, D.; Ntanos, S.; Zakopoulos, V.; Makrygiannis, P. A Prediction Model for Remote Lab Courses Designed upon the Principles of Education for Sustainable Development. Sustainability 2023, 15, 5473. https://doi.org/10.3390/su15065473
