Artificial Intelligence and Public Values: Value Impacts and Governance in the Public Sector

Abstract

1. Introduction

2. Artificial Intelligence Systems and Public Values

2.1. Artificial Intelligence as Intelligent Systems

2.2. Public Values and Artificial Intelligence Systems in the Public Sector

3. Artificial-Intelligence-Affected Public Values and Artificial Intelligence Governance Challenges and Solutions

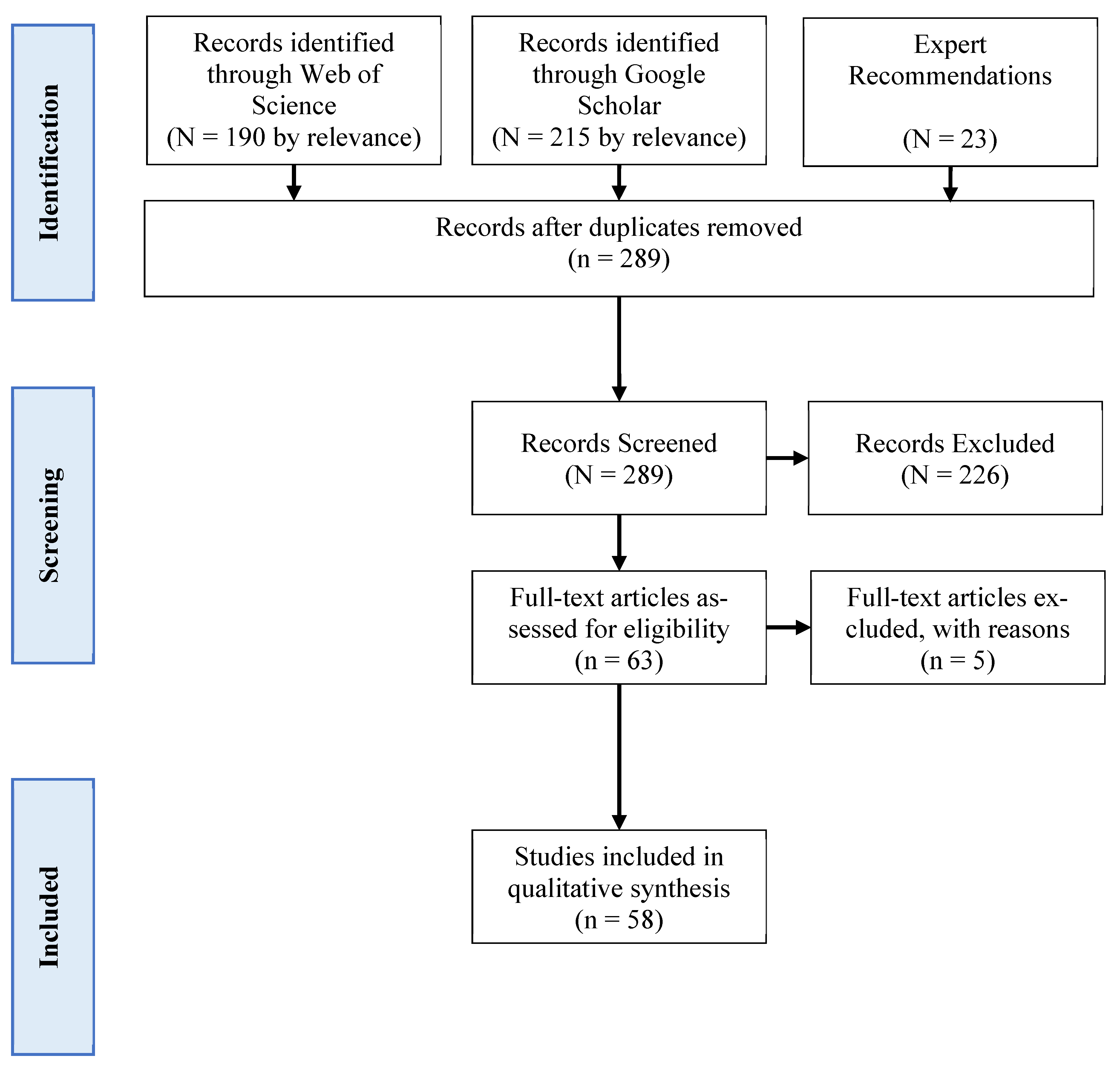

3.1. Systematic Literature Review

3.2. Public Values and Artificial Intelligence Systems

3.3. Governance Challenges

3.4. Governance Solutions

4. Perspectives of Government Employees on Artificial Intelligence Value Impacts and Governance Challenges and Solutions

4.1. Artificial Intelligence Governance and Public Values from the Government Employees’ Perspective

4.2. Public Value Impacts of Artificial Intelligence Use in Government

4.3. Governance Challenges of Artificial Intelligence Use in Government

4.4. Transparency and Participation as Governance Solutions to Artificial Intelligence Use in Government

5. Discussions

5.1. Implications of the Results from the Systematic Literature Review

5.2. Implications of the Results on the Perspective of Government Employees

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Butcher, J.; Beridze, I. What is the State of Artificial Intelligence Governance Globally? RUSI J. 2019, 164, 88–96.

- Engstrom, D.F.; Ho, D.E.; Sharkey, C.M.; Cuéllar, M.-F. Government by Algorithm: Artificial Intelligence in Federal Administrative Agencies; Administrative Conference of the United States: Washington, DC, USA, 2020.

- Wirtz, B.W.; Weyerer, J.C.; Geyer, C. Artificial Intelligence and the Public Sector—Applications and Challenges. Int. J. Public Adm. 2019, 42, 596–615.

- Desouza, K.C. Delivering Artificial Intelligence in Government: Challenges and Opportunities; IBM Center for the Business of Government: Washington, DC, USA, 2018.

- Government Accountability Office. Artificial Intelligence: Emerging Opportunities, Challenges, and Implications; Government Accountability Office: Washington, DC, USA, 2018; p. 95.

- Misuraca, G.; van Noordt, C. Overview of the Use and Impact of AI in Public Services in the EU; JRC120399; Publications Office of the European Union: Luxembourg, 2020.

- Frederickson, G.H.; Smith, K.; Larimer, C.W.; Licari, M.J. Public Administration Theory Primer, 3rd ed.; Westview Press: Boulder, CO, USA, 2016.

- Janssen, M.; Kuk, G. The Challenges and Limits of Big Data Algorithms in Technocratic Governance. Gov. Inf. Q. 2016, 33, 371–377.

- Fountain, J.E. The moon, the ghetto and artificial intelligence: Reducing systemic racism in computational algorithms. Gov. Inf. Q. 2022, 39, 101645.

- Eubanks, V. Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor; St. Martin’s Press: New York, NY, USA, 2017.

- European Political Strategy Centre. The Age of Artificial Intelligence. In Towards a European Strategy for Human-Centric Machines; European Commission: Brussels, Belgium, 2018.

- Wirtz, B.W.; Weyerer, J.C.; Sturm, B.J. The Dark Sides of Artificial Intelligence: An Integrated AI Governance Framework for Public Administration. Int. J. Public Adm. 2020, 43, 818–829.

- Bovens, M.; Zouridis, S. From Street-Level to System-Level Bureaucracies: How Information and Communication Technology is Transforming Administrative Discretion and Constitutional Control. Public Adm. Rev. 2002, 62, 174–184.

- Busch, P.A.; Henriksen, H.Z. Digital Discretion: A Systematic Literature Review of ICT and Street-level Discretion. Inf. Polity 2018, 23, 3–28.

- Young, M.M.; Bullock, J.; Lecy, J.D. Artificial Discretion as a Tool of Governance: A Framework for Understanding the Impact of Artificial Intelligence on Public Administration. Perspect. Public Manag. Gov. 2019, 2, 301–314.

- Vogl, T.M.; Seidelin, C.; Ganesh, B.; Bright, J. Algorithmic Bureaucracy. In Proceedings of the dg.o 2019: 20th Annual International Conference on Digital Government Research (dg.o 2019), Dubai, United Arab Emirates, 18 June 2019; pp. 148–153.

- Madhavan, R.; Kerr, J.A.; Corcos, A.R.; Isaacoff, B.P. Toward Trustworthy and Responsible Artificial Intelligence Policy Development. IEEE Intell. Syst. 2020, 35, 103–108.

- Fukuyama, F. What is Governance? Governance 2013, 26, 347–368.

- Smith, S.R.; Ingram, H. Policy Tools and Democracy. In The Tools of Government: A Guide to the New Governance; Salamon, L.M., Ed.; Oxford University Press: New York, NY, USA, 2002; pp. 565–584.

- Lynn, L.E., Jr.; Heinrich, C.J.; Hill, C.J. Studying Governance and Public Management: Challenges and Prospects. J. Public Adm. Res. Theory 2000, 10, 233–262.

- Chen, Y.-C. Managing Digital Governance: Issues, Challenges, and Solutions; Routledge: New York, NY, USA; Taylor & Francis Group: London, UK, 2017.

- Salamon, L.M. (Ed.) The Tools of Government: A Guide to the New Governance; Oxford University Press: Oxford, UK; New York, NY, USA, 2002.

- Shneiderman, B. Bridging the Gap Between Ethics and Practice. ACM Trans. Interact. Intell. Syst. 2020, 10, 1–31.

- Wirtz, B.W.; Müller, W.M. An integrated artificial intelligence framework for public management. Public Manag. Rev. 2019, 21, 1076–1100.

- Zuiderwijk, A.; Chen, Y.-C.; Salem, F. Implications of the use of artificial intelligence in public governance: A systematic literature review and a research agenda. Gov. Inf. Q. 2021, 38, 101577.

- Shin, D.; Fotiadis, A.; Yu, H. Prospectus and Limitations of Algorithmic Governance: An Ecological Evaluation of Algorithmic Trends. Digit. Policy Regul. Gov. 2019, 21, 369–383.

- Bannister, F.; Connolly, R. Administration by algorithm: A risk management framework. Inf. Polity 2020, 25, 471–490.

- Nilsson, N. The Quest for Artificial Intelligence; Cambridge University Press: Cambridge, UK, 2010.

- Stone, P.; Brooks, R.; Brynjolfsson, E.; Calo, R.; Etzioni, O.; Hager, G.; Hirschberg, J.; Kalyanakrishnan, S.; Kamar, E.; Kraus, S.; et al. Artificial Intelligence and Life in 2030; Stanford University: Stanford, CA, USA, 2016.

- Dwivedi, Y.K.; Hughes, L.; Ismagilova, E.; Aarts, G.; Coombs, C.; Crick, T.; Duan, Y.; Dwivedi, R.; Edwards, J.; Eirug, A.; et al. Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 2021, 57, 101994.

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Pearson India: Bengaluru, India, 2015.

- U.S. National Science Foundation. National Artificial Intelligence (AI) Research Institutes: Accelerating Research, Transforming Society, and Growing the American Workforce (Program Solicitation); NSF, Ed.; U.S. National Science Foundation: Alexandria, VA, USA, 2019.

- Bechmann, A.; Bowker, G.C. Unsupervised by any other name: Hidden layers of knowledge production in artificial intelligence on social media. Big Data Soc. 2019, 6, 205395171881956.

- Coglianese, C.; Lehr, D. Regulating by Robot: Administrative Decision Making in the Machine-Learning Era. Georget. Law J. 2017, 105, 1147.

- Henman, P. Improving public services using artificial intelligence: Possibilities, pitfalls, governance. Asia Pac. J. Public Adm. 2020, 42, 209–221.

- Davenport, T.; Kalakota, R. The potential for artificial intelligence in healthcare. Future Healthc. J. 2019, 6, 94–98.

- Bryson, J.; Sancino, A.; Benington, J.; Sørensen, E. Towards a multi-actor theory of public value cocreation. Public Manag. Rev. 2017, 19, 640–654.

- Nabatchi, T. Public Values Frames in Administration and Governance. Perspect. Public Manag. Gov. 2018, 1, 59–72.

- Bullock, J. Artificial Intelligence, Discretion, and Bureaucracy. Am. Rev. Public Adm. 2019, 49, 751–761.

- Barth, T.J.; Arnold, E. Artificial Intelligence and Administrative Discretion. Am. Rev. Public Adm. 1999, 29, 332–351.

- Bannister, F.; Connolly, R. ICT, Public Values and Transformative Government: A Framework and Programme for Research. Gov. Inf. Q. 2014, 31, 119–128.

- Pang, M.-S.; Lee, G.; Delone, W.H. IT Resources, Organizational Capabilities, and Value Creation in Public-Sector Organizations: A Public-value Management Perspective. J. Inf. Technol. 2014, 29, 187–205.

- Cordella, A.; Bonina, C.M. A Public Value Perspective for ICT enabled Public Sector Reforms: A Theoretical Reflection. Gov. Inf. Q. 2012, 29, 512–520.

- Chen, T.; Guo, W.; Gao, X.; Liang, Z. AI-based self-service technology in public service delivery: User experience and influencing factors. Gov. Inf. Q. 2020, 38, 101520.

- Raab, C.D. Information privacy, impact assessment, and the place of ethics. Comput. Law Secur. Rev. 2020, 37, 105404.

- Winfield, A.F.; Michael, K.; Pitt, J.; Evers, V. Machine Ethics: The Design and Governance of Ethical AI and Autonomous Systems [Scanning the Issue]. Proc. IEEE 2019, 107, 509–517.

- Holton, R.; Boyd, R. ‘Where are the people? What are they doing? Why are they doing it?’ (Mindell) Situating artificial intelligence within a socio-technical framework. J. Sociol. 2019, 57, 179–195.

- Latour, B. Reassembling the Social: An Introduction to Actor-Network-Theory; Oxford University Press: Oxford, UK, 2005.

- Janssen, M.; Brous, P.; Estevez, E.; Barbosa, L.S.; Janowski, T. Data governance: Organizing data for trustworthy Artificial Intelligence. Gov. Inf. Q. 2020, 37, 101493.

- Reddy, S.; Allan, S.; Coghlan, S.; Cooper, P. A governance model for the application of AI in health care. J. Am. Med. Inform. Assoc. 2020, 27, 491–497.

- Fountain, J. Building the Virtual State: Information Technology and Institutional Change; Brookings Institution Press: Washington, DC, USA, 2001.

- Desouza, K.C.; Dawson, G.S.; Chenok, D. Designing, developing, and deploying artificial intelligence systems: Lessons from and for the public sector. Bus. Horiz. 2020, 63, 205–213.

- Fatima, S.; Desouza, K.C.; Dawson, G.S. National strategic artificial intelligence plans: A multi-dimensional analysis. Econ. Anal. Policy 2020, 67, 178–194.

- Select Committee on Artificial Intelligence of the National Science & Technology Council. The National Artificial Intelligence Research and Development Strategic Plan: 2019 Update; Executive Office of the President: Washington, DC, USA, 2019.

- Bannister, F.; Connolly, R. Defining E-Governance. E Serv. J. 2012, 8, 3–25.

- Williamson, B. Governing software: Networks, databases and algorithmic power in the digital governance of public education. Learn. Media Technol. 2015, 40, 83–105.

- Williamson, B. Knowing public services: Cross-sector intermediaries and algorithmic governance in public sector reform. Public Policy Adm. 2014, 4, 292–312.

- Hsieh, H.-F.; Shannon, S.E. Three Approaches to Qualitative Content Analysis. Qual. Health Res. 2005, 15, 1277–1288.

- König, P.D.; Wenzelburger, G. Opportunity for renewal or disruptive force? How artificial intelligence alters democratic politics. Gov. Inf. Q. 2020, 37, 101489.

- Larsson, S. On the Governance of Artificial Intelligence through Ethics Guidelines. Asian J. Law Soc. 2020, 7, 437–451.

- Wirtz, B.W.; Weyerer, J.C.; Kehl, I. Governance of artificial intelligence: A risk and guideline-based integrative framework. Gov. Inf. Q. 2022, 39, 101685.

- Djeffal, C.; Siewert, M.B.; Wurster, S. Role of the state and responsibility in governing artificial intelligence: A comparative analysis of AI strategies. J. Eur. Public Policy 2022, 29, 1799–1821.

- Erman, E.; Furendal, M. The global governance of artificial intelligence: Some normative concerns. Moral Philos. Politics 2022, 9, 267–291.

- Floridi, L.; Cowls, J.; Beltrametti, M.; Chatila, R.; Chazerand, P.; Dignum, V.; Luetge, C.; Madelin, R.; Pagallo, U.; Rossi, F.; et al. AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations. Minds Mach. 2018, 28, 689–707.

- Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- Fisher, S.; Rosella, L.C. Priorities for successful use of artificial intelligence by public health organizations: A literature review. BMC Public Health 2022, 22, 2146.

- Gulson, K.N.; Webb, P.T. Mapping an emergent field of ‘computational education policy’: Policy rationalities, prediction and data in the age of Artificial Intelligence. Res. Educ. 2017, 98, 14–26.

- Coglianese, C.; Lehr, D. Transparency and Algorithmic Governance. Adm. Law Rev. 2019, 71, 1–56.

- Jimenez-Gomez, C.E.; Cano-Carrillo, J.; Falcone Lanas, F. Artificial Intelligence in Government. Computer 2020, 53, 23–27.

- Lauterbach, A. Artificial intelligence and policy: Quo vadis? Digit. Policy Regul. Gov. 2019, 21, 238–263.

- Lepri, B.; Staiano, J.; Sangokoya, D.; Letouzé, E.; Oliver, N. The Tyranny of Data? The Bright and Dark Sides of Data-Driven Decision-Making for Social Good; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 3–24.

- Lodders, A.; Paterson, J.M. Scrutinising COVIDSafe: Frameworks for evaluating digital contact tracing technologies. Altern. Law J. 2020, 45, 153–161.

- Montes, G.A.; Goertzel, B. Distributed, decentralized, and democratized artificial intelligence. Technol. Forecast. Soc. Chang. 2019, 141, 354–358.

- Palladino, N. A ‘biased’ emerging governance regime for artificial intelligence? How AI ethics get skewed moving from principles to practices. Telecommun. Policy 2022, 102479.

- Wallach, W.; Marchant, G. Toward the Agile and Comprehensive International Governance of AI and Robotics [point of view]. Proc. IEEE 2019, 107, 505–508.

- Butterworth, M. The ICO and artificial intelligence: The role of fairness in the GDPR framework. Comput. Law Secur. Rev. 2018, 34, 257–268.

- Kerr, A.; Barry, M.; Kelleher, J.D. Expectations of artificial intelligence and the performativity of ethics: Implications for communication governance. Big Data Soc. 2020, 7, 205395172091593.

- Pesapane, F.; Volonté, C.; Codari, M.; Sardanelli, F. Artificial intelligence as a medical device in radiology: Ethical and regulatory issues in Europe and the United States. Insights Into Imaging 2018, 9, 745–753.

- Calo, R. Artificial Intelligence Policy: A Primer and Roadmap. Univ. Bologna Law Rev. 2018, 3, 180–218.

- Campion, A.; Gasco-Hernandez, M.; Jankin Mikhaylov, S.; Esteve, M. Overcoming the Challenges of Collaboratively Adopting Artificial Intelligence in the Public Sector. Soc. Sci. Comput. Rev. 2020, 40, 462–477.

- Winter, J.S.; Davidson, E. Governance of artificial intelligence and personal health information. Digit. Policy Regul. Gov. 2019, 21, 280–290.

- Todolí-Signes, A. Algorithms, artificial intelligence and automated decisions concerning workers and the risks of discrimination: The necessary collective governance of data protection. Transfer 2019, 25, 465–481.

- Ho, C.W.L.; Ali, J.; Caals, K. Ensuring trustworthy use of artificial intelligence and big data analytics in health insurance. Bull. World Health Organ. 2020, 98, 263–269.

- Vanderelst, D.; Winfield, A. An architecture for ethical robots inspired by the simulation theory of cognition. Cogn. Syst. Res. 2018, 48, 56–66.

- Nishant, R.; Kennedy, M.; Corbett, J. Artificial intelligence for sustainability: Challenges, opportunities, and a research agenda. Int. J. Inf. Manag. 2020, 53, 102104.

- Meske, C.; Bunde, E.; Schneider, J.; Gersch, M. Explainable Artificial Intelligence: Objectives, Stakeholders, and Future Research Opportunities. Inf. Syst. Manag. 2020, 39, 53–63.

- Kim, E.-S. Deep learning and principal–agent problems of algorithmic governance: The new materialism perspective. Technol. Soc. 2020, 63, 101378.

- Linkov, I.; Trump, B.D.; Anklam, E.; Berube, D.; Boisseau, P.; Cummings, C.; Ferson, S.; Marie-Valentine, F.; Goldstein, B.; Hristozov, D.; et al. Comparative, collaborative, and integrative risk governance for emerging technologies. Environ. Syst. Decis. 2018, 38, 170–176.

- Engin, Z.; Treleaven, P. Algorithmic Government: Automating Public Services and Supporting Civil Servants in using Data Science Technologies. Comput. J. 2019, 62, 448–460.

- Sachs, J.D. Some Brief Reflections on Digital Technologies and Economic Development. Ethics Int. Aff. 2019, 33, 159–167.

- Popenici, S.A.D.; Kerr, S. Exploring the impact of artificial intelligence on teaching and learning in higher education. Res. Pract. Technol. Enhanc. Learn. 2017, 12, 22.

- Harrison, T.M.; Luna-Reyes, L.F. Cultivating Trustworthy Artificial Intelligence in Digital Government. Soc. Sci. Comput. Rev. 2022, 40, 494–511.

- Siau, K.; Wang, W. Building trust in artificial intelligence, machine learning, and robotics. Cut. Bus. Technol. J. 2018, 31, 47–53.

- Nazerdeylami, A.; Majidi, B.; Movaghar, A. Autonomous litter surveying and human activity monitoring for governance intelligence in coastal eco-cyber-physical systems. Ocean. Coast. Manag. 2021, 200, 105478.

- de Sousa, W.G.; de Melo, E.R.P.; Bermejo, P.H.D.S.; Farias, R.A.S.; Gomes, A.O. How and where is artificial intelligence in the public sector going? A literature review and research agenda. Gov. Inf. Q. 2019, 36, 101392.

- Mikhaylov, S.J.; Esteve, M.; Campion, A. Artificial Intelligence for the Public Sector: Opportunities and Challenges of Cross-sector Collaboration. Philos. Trans. R. Soc. A 2018, 376, 20170357.

- Sun, T.Q.; Medaglia, R. Mapping the challenges of Artificial Intelligence in the public sector: Evidence from public healthcare. Gov. Inf. Q. 2019, 36, 362–383.

- Stritch, J.M.; Pedersen, M.J.; Taggart, G. The Opportunities and Limitations of Using Mechanical Turk (MTURK) in Public Administration and Management Scholarship. Int. Public Manag. J. 2017, 20, 489–511.

- Marvel, J.D.; Girth, A.M. Citizen Attributions of Blame in Third-Party Governance. Public Adm. Rev. 2016, 76, 96–108.

- Pencheva, I.; Esteve, M.; Mikhaylov, S.J. Big Data and AI–A transformational shift for government: So, what next for research? Public Policy Adm. 2020, 35, 24–44.

- Mergel, I.; Edelmann, N.; Haug, N. Defining digital transformation: Results from expert interviews. Gov. Inf. Q. 2019, 36, 101385.

- Panagiotopoulos, P.; Klievink, B.; Cordella, A. Public value creation in digital government. Gov. Inf. Q. 2019, 36, 101421.

- Young, M.M.; Himmelreich, J.; Bullock, J.B.; Kim, K.-C. Artificial Intelligence and Administrative Evil. Perspect. Public Manag. Gov. 2021, 4, 244–258.

- Marsh, S. Councils Scrapping Use of Algorithms in Benefit and Welfare Decisions. The Guardian. 24 August 2020. Available online: https://www.theguardian.com/society/2020/aug/24/councils-scrapping-algorithms-benefit-welfare-decisions-concerns-bias (accessed on 12 January 2023).

- Government Accountability Office. Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities; Government Accountability Office: Washington, DC, USA, 2021; p. 112.

| Public Values | |
|---|---|
| Duty Orientation | Responsibility to citizens [1,12,23,35,59,60,61,62,63,64]; Compliance with laws [1,35] |
| Service Orientation | Transparency [1,17,35,50,60,63,65,66,67,68,69,70,71,72,73,74]; Effectiveness [15,23,39,75]; Efficiency [15,39] |
| Social Orientation | Accountability to public [1,12,15,35,49,50,61,63,66,69,71,72,74,76,77,78]; Privacy [3,12,49,50,60,64,74,79,80,81]; Equality of treatment and access [3,12,15,39,49,72,79,82]; Fairness [9,17,50,60,61,63,66,74,76]; Justice [35,60,64,79]; Due process [35]; Inclusiveness [23,74]; Security [64] |

| Governance Challenges | |
|---|---|
| Public Value Challenges | Lack of transparency [3,15,36,50,65,68,70,73,83]; Lack of accountability [1,12,15,24,36,59,61]; Privacy concerns [3,12,36,50,72,79,80,81]; Inequity concerns [3,12,73,77,79]; Lack of responsibility [1,3,12,61,64]; Safety concerns [3,12,84]; Malicious use of AI [1,77]; Moral dilemmas [3,12]; Social acceptance and trust concerns [3,12]; Administrative evil [9,39]; Cybersecurity risks [85]; Discrimination in recruitment, promotion, and dismissals in organizations [82]; Fairness concerns in data processing [76,86]; Lack of AI expertise and knowledge [3]; Lack of responsiveness [59]; Principal–agent problem [87]; Sustainability challenges [88]; Violation of laws [60] |
| Data Quality, Processing, and Outcome Challenges | Data quality and management [3,17,49,50,71,83,85,89]; AI rule-making concerns [3,12]; Lost control of AI [12,64]; Adverse impacts, difficulty of measuring the performance of AI, and uncertain human behavioral responses to AI-based interventions [85]; Financial feasibility [3]; Interaction problem with humans [12] |
| Societal Governance Challenges | Replacing human jobs [1,3,12,90,91]; Insufficient regulation and “soft laws” [75]; Replacing human discretion [39,64]; Value judgment concerns [3,12]; Authoritarian abuses [17]; Cross-sector collaboration hardship [70]; Power asymmetry [86]; Threatening autonomy [81] |

| Governance Solutions | |
|---|---|
| Public Values Solutions | Explainable AI [59,61,65,68,74,86]; Inclusion of more public values [50,60,66,71,72,74]; Ethical AI [46,61,74,77]; Distributed, decentralized, and democratized market [73]; Ethical agent [46]; Impacts on bureaucratic discretion [39]; Privacy by design [35]; Trustworthy AI [92,93] |
| Data Quality and Processing Solutions | Regulation [12,17,35,74,76,78,82,88]; Bias assessment [35,74]; Data audit [74,81]; Data-driven digital government [69]; Data-sharing agreement [81]; Human audition [61,74,94]; Independent quality assurance [35]; Oversight committee [74,81]; Recognition and removal of bias [83]; Understanding of AI [64] |
| Societal Governance Solutions | Collaborative governance [66,70,80,82,95]; Multilevel approach [49,85]; Artificial discretion analysis [15]; Facilitative leadership, alignment of goals and objectives, shared knowledge, socialization, expert insights, and strategies [74,96]; Governance coordinating committee [75]; Holistic industrywide solution with governmental involvement [23]; Integration of workflow and governance [50]; Levels of governance [23]; Pluralist approach [87]; Risk governance [88]; Stakeholder participation [74,83]; Systems dynamics approaches [85]; Task characteristics (complexity and uncertainty) [39] |

| | No Improvement | Modest | Substantial | Transformative | Substantial and Transformative Combined |
|---|---|---|---|---|---|
| Decision Making | 24 (7.43%) | 102 (31.58%) | 126 (39.01%) | 71 (21.98%) | 60.99% |
| Effectiveness | 12 (3.72%) | 100 (30.96%) | 154 (47.68%) | 57 (17.65%) | 65.3% |
| Efficiency | 9 (2.79%) | 63 (19.75%) | 147 (45.51%) | 104 (32.20%) | 77.7% |
| Accountability | 43 (13.31%) | 106 (32.82%) | 123 (38.08%) | 51 (15.79%) | 53.8% |
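
The combined column in the table above is the share of respondents who chose either "Substantial" or "Transformative". As a minimal sketch, assuming the response counts in the table are exact, that share can be recomputed from the counts alone. The names `improvement_counts` and `combined_share` are illustrative and do not come from the article, and the last digit can differ slightly from the printed figures depending on how the original values were rounded.

```python
# Response counts per value, in the column order of the table above:
# (No Improvement, Modest, Substantial, Transformative).
improvement_counts = {
    "Decision Making": (24, 102, 126, 71),
    "Effectiveness": (12, 100, 154, 57),
    "Efficiency": (9, 63, 147, 104),
    "Accountability": (43, 106, 123, 51),
}


def combined_share(row):
    """Percentage of respondents answering Substantial or Transformative."""
    total = sum(row)
    substantial, transformative = row[2], row[3]
    return 100 * (substantial + transformative) / total


shares = {value: combined_share(row) for value, row in improvement_counts.items()}

for value, share in shares.items():
    print(f"{value}: {share:.2f}% of {sum(improvement_counts[value])} respondents")
```

Each row sums to 323 respondents, which matches the total reported in the participation table later in the article.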

| | Development of AI in Government Is Likely to Negatively Impact Transparency Due to the Technical Nature of the Algorithm | Use of AI in My Government Agency Will Take Away Your Discretionary Authority | Goal Setting of AI Use in Government Cannot Fully Consider Societal Values beyond Technical Efficiency |
|---|---|---|---|
| 1 (Strongly Disagree) | 22 (6.81%) | 27 (8.36%) | 13 (4.02%) |
| 2 (Disagree) | 40 (12.38%) | 29 (8.98%) | 33 (10.22%) |
| 3 (Slightly Disagree) | 37 (11.46%) | 46 (14.24%) | 54 (16.72%) |
| 4 (Neutral) | 68 (21.05%) | 55 (17.03%) | 70 (21.67%) |
| 5 (Slightly Agree) | 57 (17.65%) | 69 (21.36%) | 64 (19.81%) |
| 6 (Agree) | 67 (20.74%) | 54 (16.72%) | 52 (16.10%) |
| 7 (Strongly Agree) | 32 (9.91%) | 43 (13.31%) | 37 (11.46%) |
| Mean | 4.32 | 4.37 | 4.37 |
| Median | 4 | 5 | 4 |
| Mode | 4 | 5 | 4 |
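
The mean, median, and mode rows in the table above follow directly from the frequency counts on the seven-point scale. The sketch below shows one way to recompute them, assuming the scale points are scored 1 to 7 as labeled. The dictionary keys and the helper `summarize` are hypothetical names chosen for illustration, not part of the article's method.

```python
from statistics import median, mode

# Counts for scale points 1 (Strongly Disagree) to 7 (Strongly Agree),
# copied from the three statement columns of the table above.
likert_counts = {
    "transparency": [22, 40, 37, 68, 57, 67, 32],
    "discretion": [27, 29, 46, 55, 69, 54, 43],
    "societal_values": [13, 33, 54, 70, 64, 52, 37],
}


def summarize(freq):
    """Expand a frequency list into individual responses and summarize them."""
    responses = [point for point, n in enumerate(freq, start=1) for _ in range(n)]
    return {
        "n": len(responses),
        "mean": round(sum(responses) / len(responses), 2),
        "median": median(responses),
        "mode": mode(responses),
    }


summary = {name: summarize(freq) for name, freq in likert_counts.items()}

for name, stats in summary.items():
    print(name, stats)
```

Expanding the counts into individual responses keeps the calculation easy to check by hand. With larger samples, a weighted mean and a cumulative count would give the same results without building the full list.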

| | Do You Agree That the Information on the Development of Algorithms Used in the AI System Should Be Made Available to Government Managers? | Do You Agree That the Information on the Development of Algorithms Used in the AI System Should Be Made Available to the General Public? | Do You Agree That Information about the Data That the AI System Uses Should Be Made Available to the Government Managers Responsible for the Service? | Do You Agree That Information about the Data That the AI System Uses Should Be Made Available to the General Public? |
|---|---|---|---|---|
| 1 (Strongly Disagree) | 2 (0.62%) | 12 (3.72%) | 4 (1.24%) | 5 (1.55%) |
| 2 (Disagree) | 13 (4.02%) | 23 (7.12%) | 7 (2.17%) | 22 (6.81%) |
| 3 (Slightly Disagree) | 18 (5.57%) | 12 (3.72%) | 10 (3.10%) | 27 (8.36%) |
| 4 (Neutral) | 49 (15.17%) | 68 (21.05%) | 43 (13.31%) | 63 (19.50%) |
| 5 (Slightly Agree) | 86 (26.63%) | 77 (23.84%) | 101 (31.27%) | 70 (21.67%) |
| 6 (Agree) | 86 (26.63%) | 75 (23.22%) | 92 (28.48%) | 71 (21.98%) |
| 7 (Strongly Agree) | 69 (21.36%) | 56 (17.34%) | 66 (20.43%) | 65 (20.12%) |
| Mean | 5.285 | 4.932 | 5.384 | 4.994 |
| Median | 5 | 5 | 5 | 5 |

| Stages | Public Officials: Count | Public Officials: Percentage | Members of the General Public: Count | Members of the General Public: Percentage |
|---|---|---|---|---|
| Goal Setting for Government AI Use | 47 | 14.55% | 47 | 14.55% |
| Development of the AI System | 87 | 26.93% | 68 | 21.05% |
| Use of AI Systems in Government Decisions | 50 | 15.48% | 83 | 25.70% |
| Impact Assessment of AI-Enabled Decisions | 23 | 7.12% | 48 | 14.86% |
| All of the Above | 116 | 35.91% | 77 | 23.84% |
| Total | 323 | 100% | 323 | 100% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.-C.; Ahn, M.J.; Wang, Y.-F. Artificial Intelligence and Public Values: Value Impacts and Governance in the Public Sector. Sustainability 2023, 15, 4796. https://doi.org/10.3390/su15064796
