SCDNet: Self-Calibrating Depth Network with Soft-Edge Reconstruction for Low-Light Image Enhancement

Abstract

1. Introduction

- (1)

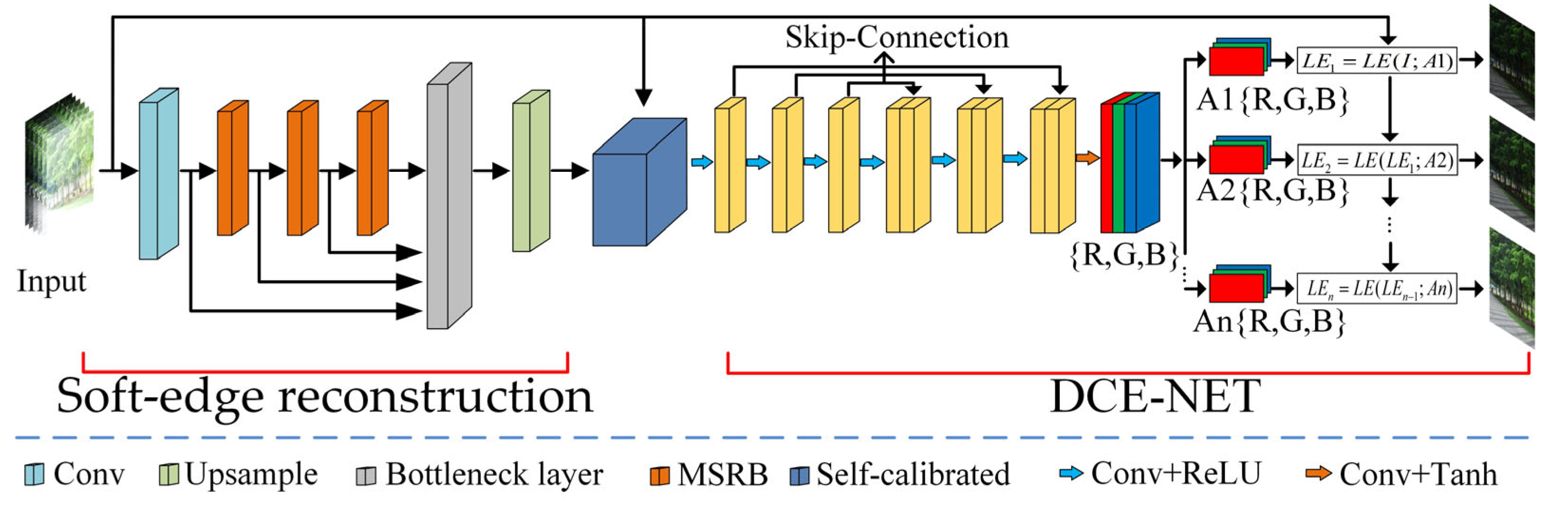

- We propose a self-calibrated iterative image enhancement method based on soft edge reconstruction. This method first softens the input image and then extracts the feature details, then the self-calibration module accelerates the convergence of the input and feeds it into the enhancement network, and finally iteratively enhances the low-light image according to the gloss curve to obtain a high-quality image. This method can not only better extract image details, but also accelerate the convergence speed of the network, making the output more efficient and stable.

- (2) To address texture loss and detail blur in low-light images, we introduce a soft-edge reconstruction module that detects image features at different scales. By softening the extracted image edges and reconstructing image details, the module recovers clear, sharp edge features.

- (3)

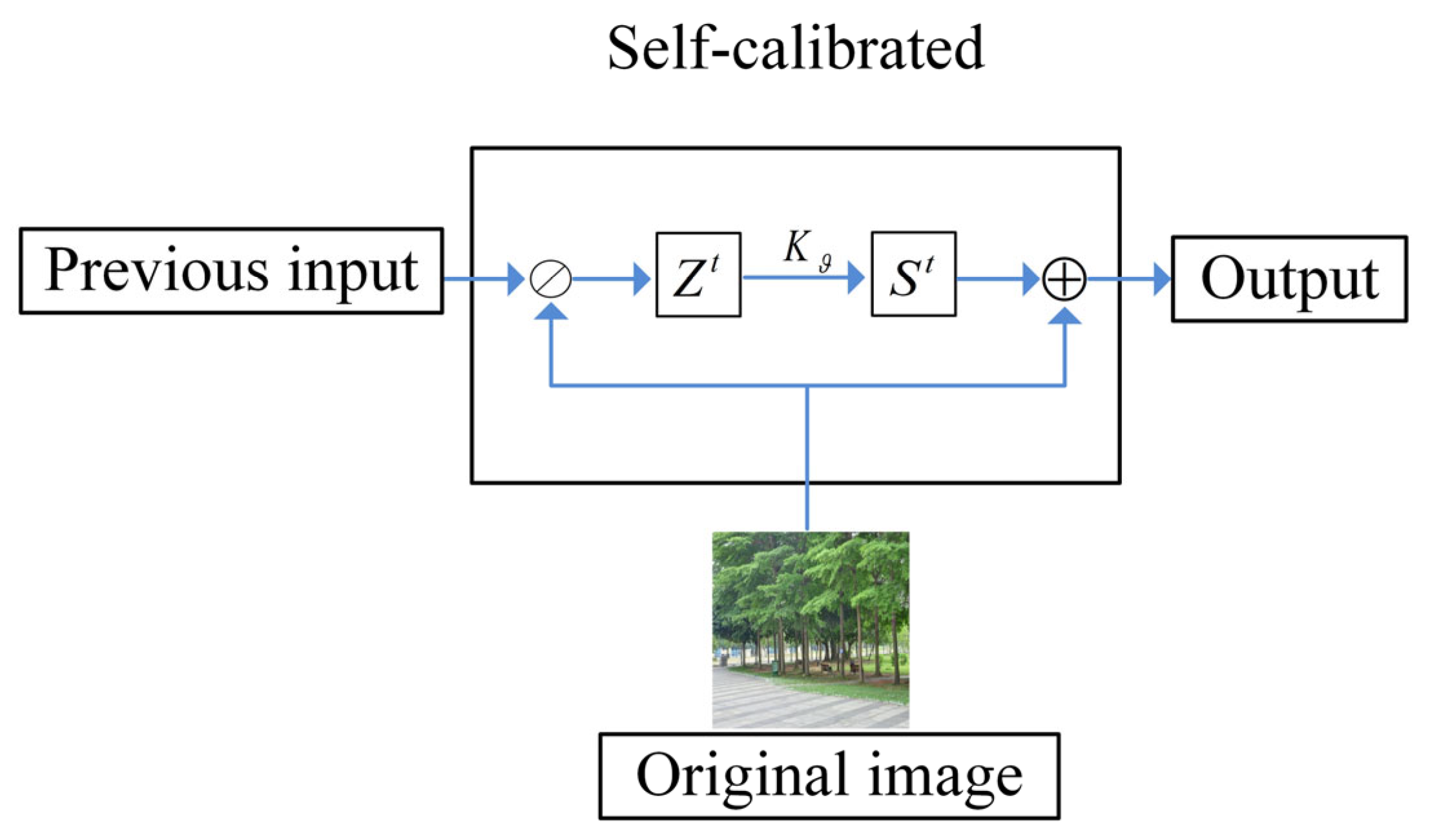

- This paper ensures exposure stability of the input image by introducing a self-calibration module to perform stepwise convergence of the image. Lightweight networks can significantly improve computational efficiency, thereby accelerating network convergence, which enhances image quality while reducing processing speed.
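The three contributions above describe one data flow: soften the input and extract edge detail, calibrate the exposure stepwise, then enhance iteratively with a curve. The NumPy sketch below illustrates that flow only, under loudly stated assumptions: the paper's learned modules are replaced by hand-written stand-ins, and every function name and parameter (`soft_edges`, `self_calibrate`, `target`, `rate`, `alpha`, `detail_gain`) is hypothetical. The soft-edge map is assumed to be the mean absolute difference between the image and its Gaussian blurs at several scales. The calibration is assumed to be a global gain that moves the mean intensity toward a target in damped steps. In place of the gloss curve, the code uses the quadratic light-enhancement curve LE(x) = x + αx(1 − x) of Zero-DCE [47] with a constant α.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D array using reflect padding."""
    radius = max(1, int(3 * sigma))
    xs = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(xs ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    # Blur rows, then columns; "valid" mode trims the padding back off.
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def soft_edges(gray: np.ndarray, sigmas=(1.0, 2.0)) -> np.ndarray:
    """Hypothetical multi-scale soft-edge map, normalized to [0, 1]."""
    maps = [np.abs(gray - gaussian_blur(gray, s)) for s in sigmas]
    edge = np.mean(maps, axis=0)
    return edge / (edge.max() + 1e-8)


def self_calibrate(img: np.ndarray, target=0.4, steps=3, rate=0.5) -> np.ndarray:
    """Hypothetical stepwise exposure calibration: each step applies a global gain
    that moves the mean intensity a fraction `rate` of the way toward `target`."""
    out = img.astype(np.float64)
    for _ in range(steps):
        mean = out.mean()
        desired = mean + rate * (target - mean)
        out = np.clip(out * desired / (mean + 1e-8), 0.0, 1.0)
    return out


def le_curve(x: np.ndarray, alpha: float, iterations=4) -> np.ndarray:
    """Zero-DCE-style curve LE(x) = x + alpha * x * (1 - x), applied repeatedly.
    For x in [0, 1] and alpha in [0, 1] the output stays in [0, 1]."""
    for _ in range(iterations):
        x = x + alpha * x * (1.0 - x)
    return x


def enhance(gray: np.ndarray, alpha=0.8, detail_gain=0.5) -> np.ndarray:
    """Toy pipeline: soften -> soft edges -> calibrate -> iterate curve -> re-inject detail."""
    base = gaussian_blur(gray, 1.0)   # softened image
    detail = gray - base              # signed high-frequency detail
    edges = soft_edges(gray)          # weights: restore detail mainly near edges
    enhanced = le_curve(self_calibrate(gray), alpha)
    return np.clip(enhanced + detail_gain * edges * detail, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dark = rng.uniform(0.0, 0.2, size=(32, 32))  # synthetic low-light image in [0, 1]
    out = enhance(dark)
    print(f"mean before: {dark.mean():.3f}, mean after: {out.mean():.3f}")
```

Re-injecting detail in proportion to the soft-edge map is one simple way to sharpen edges without amplifying noise in flat regions. In the actual network, both the edge weights and the per-pixel curve parameters would be learned rather than fixed.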

2. Proposed Method

2.1. Network Model

2.2. Soft-Edge Reconstruction Module

2.3. Self-Calibrated Module

2.4. Iteration Module

2.5. Loss Function

3. Experimental Results

3.1. Experimental Settings

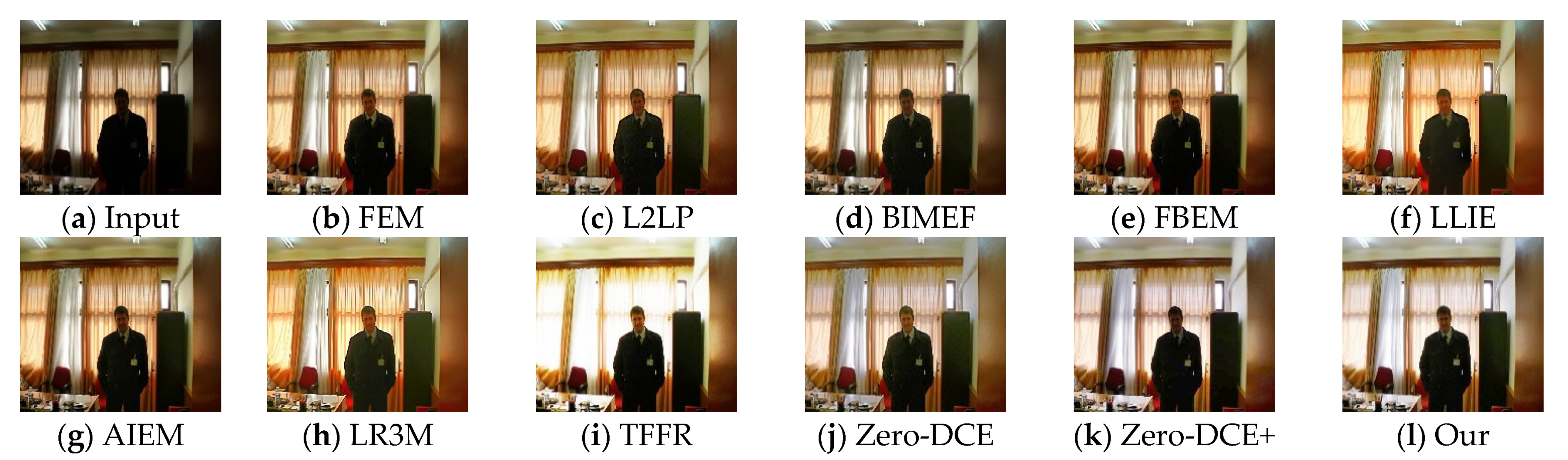

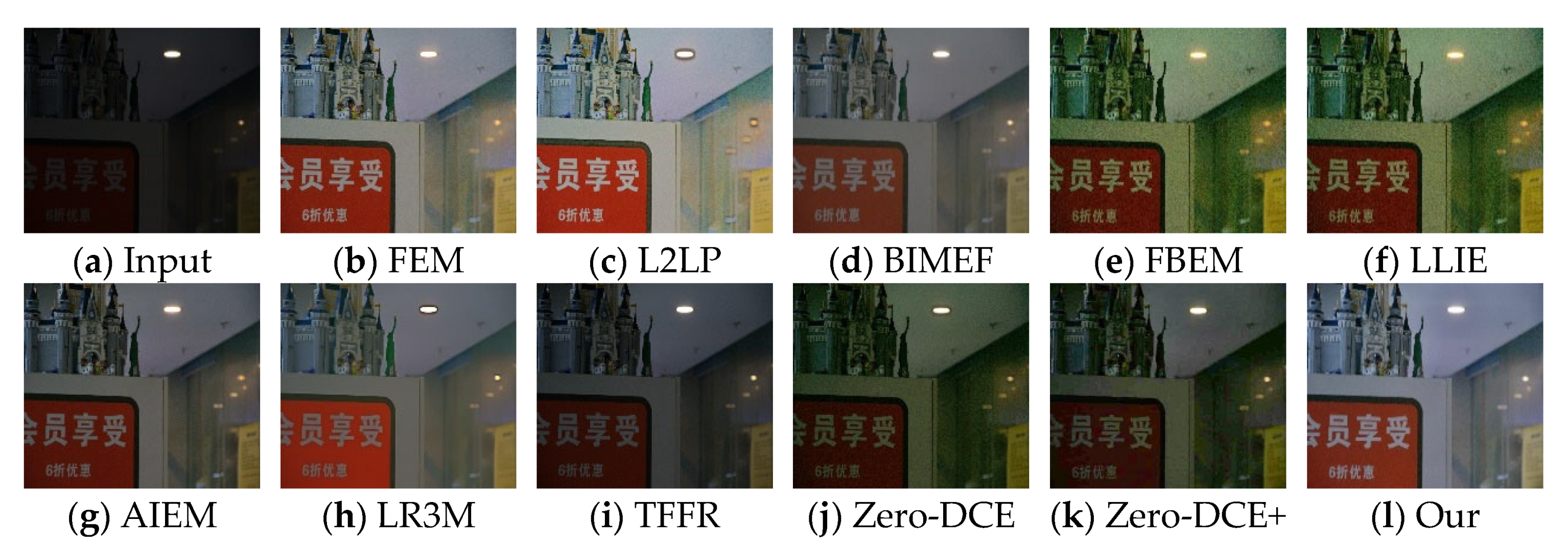

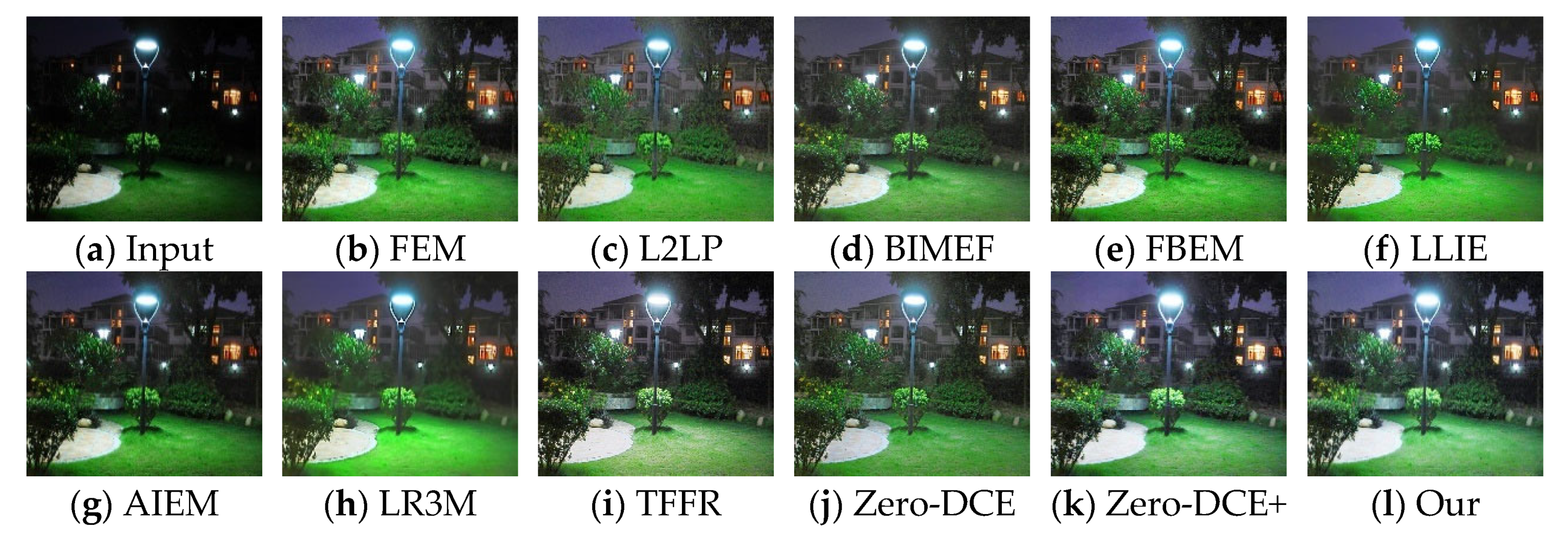

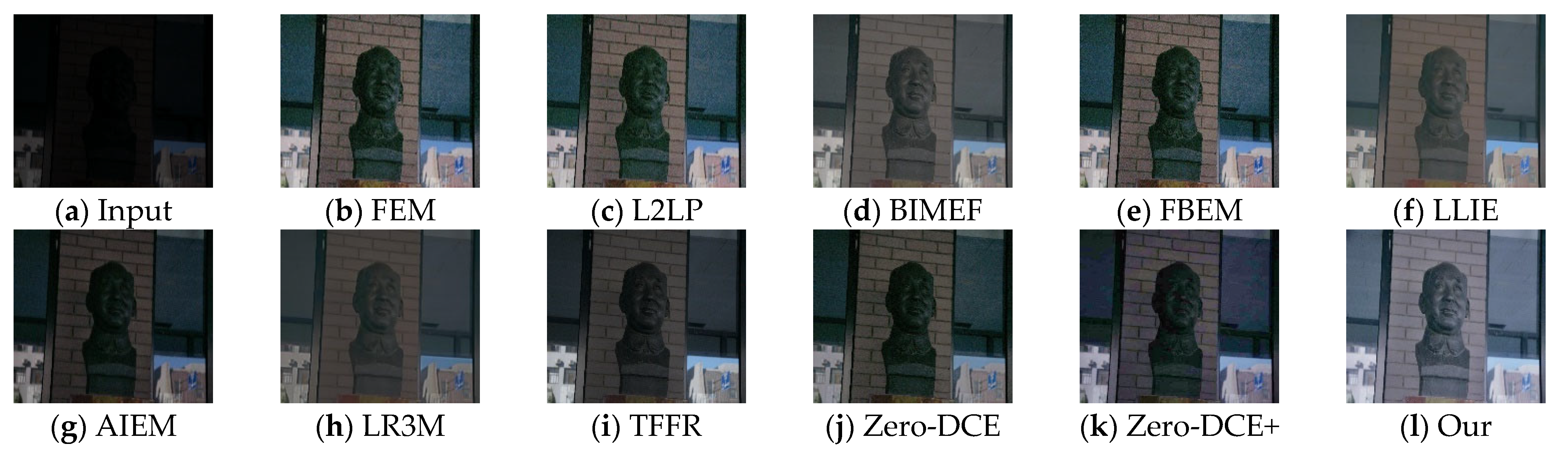

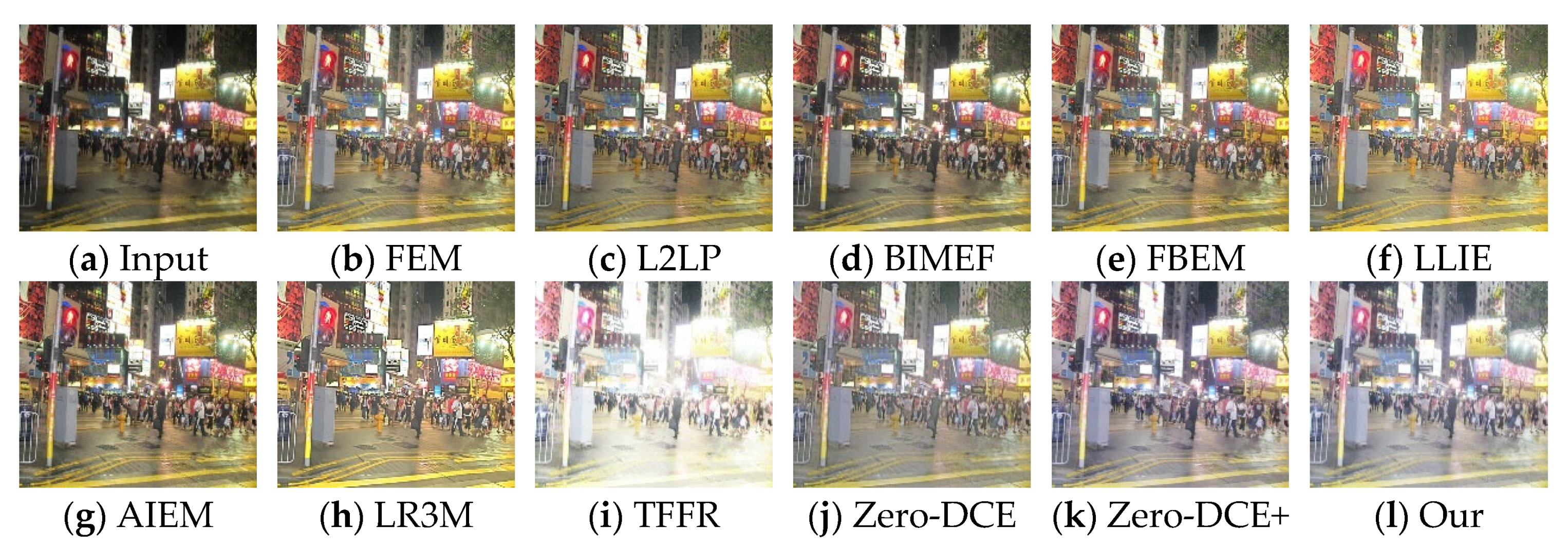

3.2. Subjective Evaluation

3.3. Objective Evaluation

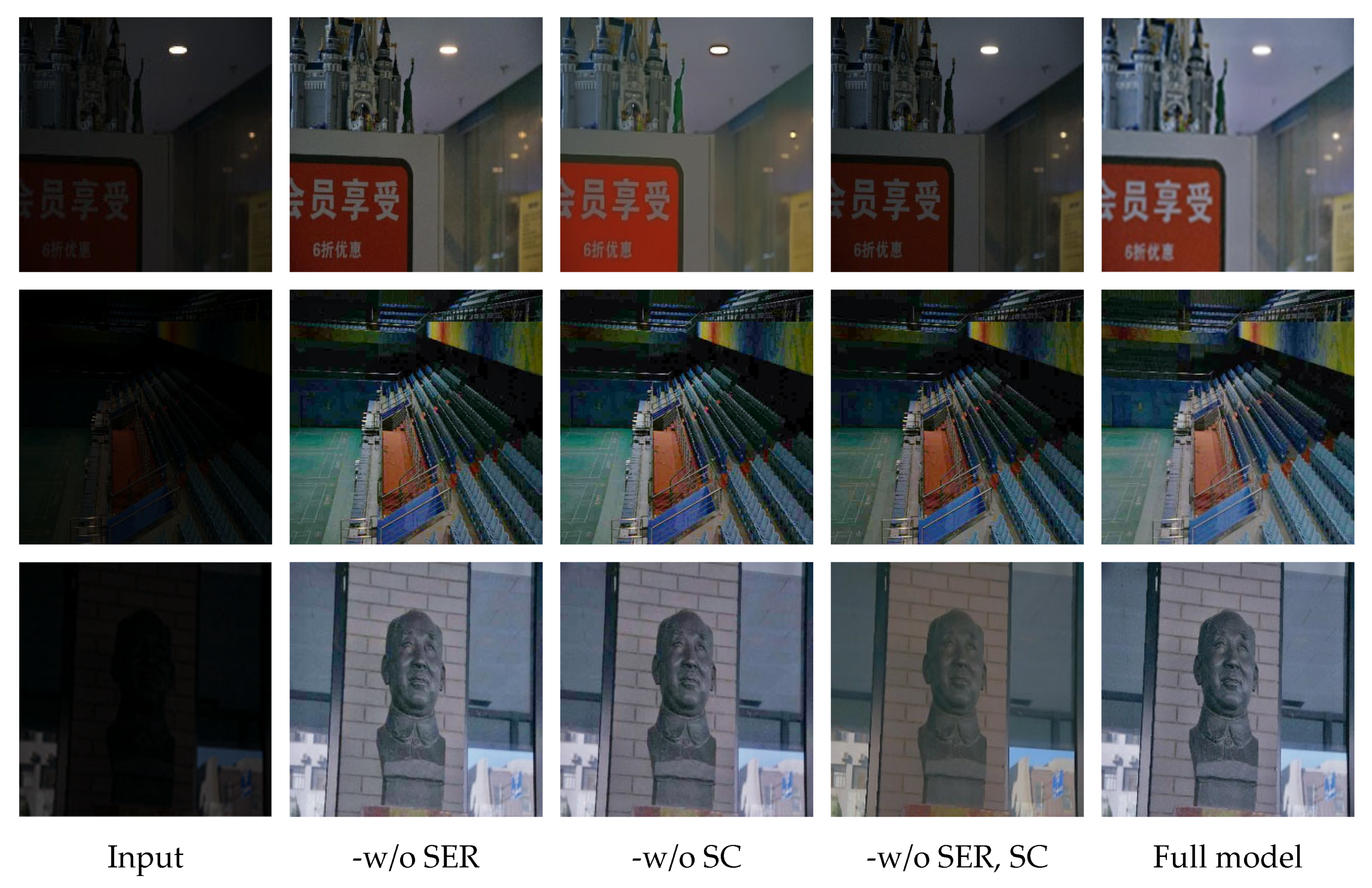

3.4. Ablation Study

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Hu, Z.; Yin, Z.; Qin, L.; Xu, F. A Novel Method of Fault Diagnosis for Injection Molding Systems Based on Improved VGG16 and Machine Vision. Sustainability 2022, 14, 14280.

- [2] Zhang, W.; Wang, Y.; Li, C. Underwater Image Enhancement by Attenuated Color Channel Correction and Detail Preserved Contrast Enhancement. IEEE J. Ocean. Eng. 2022, 47, 718–735.

- [3] Liu, Y.; Yan, Z.; Tan, J.; Li, Y. Multi-Purpose Oriented Single Nighttime Image Haze Removal Based on Unified Variational Retinex Model. IEEE Trans. Circuits Syst. Video Technol. 2022.

- [4] Li, J.; Zhang, X.; Feng, P. Detection Method of End-of-Life Mobile Phone Components Based on Image Processing. Sustainability 2022, 14, 12915.

- [5] Qi, Q.; Li, K.; Zheng, H.; Gao, X.; Hou, G.; Sun, K. SGUIE-Net: Semantic Attention Guided Underwater Image Enhancement with Multi-Scale Perception. IEEE Trans. Image Process. 2022, 31, 6816–6830.

- [6] Zhang, W.; Dong, L.; Xu, W. Retinex-Inspired Color Correction and Detail Preserved Fusion for Underwater Image Enhancement. Comput. Electron. Agric. 2022, 192, 106585.

- [7] Zhang, W.; Dong, L.; Zhang, T.; Xu, W. Enhancing Underwater Image via Color Correction and Bi-Interval Contrast Enhancement. Signal Process. Image Commun. 2021, 90, 116030.

- [8] Chen, L.; Zhang, S.; Wang, H.; Ma, P.; Ma, Z.; Duan, G. Deep USRNet Reconstruction Method Based on Combined Attention Mechanism. Sustainability 2022, 14, 14151.

- [9] Voronin, V.; Tokareva, S.; Semenishchev, E.; Agaian, S. Thermal Image Enhancement Algorithm Using Local and Global Logarithmic Transform Histogram Matching with Spatial Equalization. In Proceedings of the 2018 IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI), Las Vegas, NV, USA, 8–10 April 2018; pp. 5–8.

- [10] Ueda, Y.; Moriyama, D.; Koga, T.; Suetake, N. Histogram Specification-Based Image Enhancement for Backlit Image. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 958–962.

- [11] Pugazhenthi, A.; Kumar, L.S. Image Contrast Enhancement by Automatic Multi-Histogram Equalization for Satellite Images. In Proceedings of the 2017 Fourth International Conference on Signal Processing, Communication and Networking (ICSCN), Chennai, India, 16–18 March 2017; pp. 1–4.

- [12] Kong, X.-Y.; Liu, L.; Qian, Y.-S. Low-Light Image Enhancement via Poisson Noise Aware Retinex Model. IEEE Signal Process. Lett. 2021, 28, 1540–1544.

- [13] Xu, J.; Hou, Y.; Ren, D.; Liu, L.; Zhu, F.; Yu, M.; Wang, H.; Shao, L. STAR: A Structure and Texture Aware Retinex Model. IEEE Trans. Image Process. 2020, 29, 5022–5037.

- [14] Hao, S.; Han, X.; Guo, Y.; Xu, X.; Wang, M. Low-Light Image Enhancement with Semi-Decoupled Decomposition. IEEE Trans. Multimed. 2020, 22, 3025–3038.

- [15] Zhang, Z.; Su, Z.; Song, W.; Ning, K. Global Attention Super-Resolution Algorithm for Nature Image Edge Enhancement. Sustainability 2022, 14, 13865.

- [16] Cai, B.; Xu, X.; Guo, K.; Jia, K.; Hu, B.; Tao, D. A Joint Intrinsic-Extrinsic Prior Model for Retinex. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4000–4009.

- [17] Ren, Y.; Ying, Z.; Li, T.H.; Li, G. LECARM: Low-Light Image Enhancement Using the Camera Response Model. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 968–981.

- [18] Zhang, Q.; Yuan, G.; Xiao, C.; Zhu, L.; Zheng, W.-S. High-Quality Exposure Correction of Underexposed Photos. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 582–590.

- [19] Mariani, P.; Quincoces, I.; Haugholt, K.H.; Chardard, Y.; Visser, A.W.; Yates, C.; Piccinno, G.; Reali, G.; Risholm, P.; Thielemann, J.T. Range-Gated Imaging System for Underwater Monitoring in Ocean Environment. Sustainability 2018, 11, 162.

- [20] Shen, L.; Yue, Z.; Feng, F.; Chen, Q.; Liu, S.; Ma, J. MSR-net: Low-Light Image Enhancement Using Deep Convolutional Network. arXiv 2017, arXiv:1711.02488.

- [21] Guo, H.; Lu, T.; Wu, Y. Dynamic Low-Light Image Enhancement for Object Detection via End-to-End Training. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 5611–5618.

- [22] Al Sobbahi, R.; Tekli, J. Low-Light Homomorphic Filtering Network for Integrating Image Enhancement and Classification. Signal Process. Image Commun. 2022, 100, 116527.

- [23] Li, C.; Guo, J.; Porikli, F.; Pang, Y. LightenNet: A Convolutional Neural Network for Weakly Illuminated Image Enhancement. Pattern Recognit. Lett. 2018, 104, 15–22.

- [24] Ren, W.; Liu, S.; Ma, L.; Xu, Q.; Xu, X.; Cao, X.; Du, J.; Yang, M.-H. Low-Light Image Enhancement via a Deep Hybrid Network. IEEE Trans. Image Process. 2019, 28, 4364–4375.

- [25] Tao, L.; Zhu, C.; Xiang, G.; Li, Y.; Jia, H.; Xie, X. LLCNN: A Convolutional Neural Network for Low-Light Image Enhancement. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- [26] Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A Deep Autoencoder Approach to Natural Low-Light Image Enhancement. Pattern Recognit. 2017, 61, 650–662.

- [27] Yang, W.; Wang, S.; Fang, Y.; Wang, Y.; Liu, J. From Fidelity to Perceptual Quality: A Semi-Supervised Approach for Low-Light Image Enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3063–3072.

- [28] Zhang, W.; Zhuang, P.; Sun, H.-H.; Li, G.; Kwong, S.; Li, C. Underwater Image Enhancement via Minimal Color Loss and Locally Adaptive Contrast Enhancement. IEEE Trans. Image Process. 2022, 31, 3997–4010.

- [29] Zhuang, P.; Wu, J.; Porikli, F.; Li, C. Underwater Image Enhancement with Hyper-Laplacian Reflectance Priors. IEEE Trans. Image Process. 2022, 31, 5442–5455.

- [30] Liang, Z.; Ding, X.; Wang, Y.; Yan, X.; Fu, X. GUDCP: Generalization of Underwater Dark Channel Prior for Underwater Image Restoration. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4879–4884.

- [31] Al Sobbahi, R.; Tekli, J. Comparing Deep Learning Models for Low-Light Natural Scene Image Enhancement and Their Impact on Object Detection and Classification: Overview, Empirical Evaluation, and Challenges. Signal Process. Image Commun. 2022, 109, 116848.

- [32] Fang, F.; Li, J.; Zeng, T. Soft-Edge Assisted Network for Single Image Super-Resolution. IEEE Trans. Image Process. 2020, 29, 4656–4668.

- [33] Ma, L.; Ma, T.; Liu, R.; Fan, X.; Luo, Z. Toward Fast, Flexible, and Robust Low-Light Image Enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 5637–5646.

- [34] Li, C.; Guo, C.; Loy, C.C. Learning to Enhance Low-Light Image via Zero-Reference Deep Curve Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4225–4238.

- [35] Cai, J.; Gu, S.; Zhang, L. Learning a Deep Single Image Contrast Enhancer from Multi-Exposure Images. IEEE Trans. Image Process. 2018, 27, 2049–2062.

- [36] Lee, C.; Lee, C.; Kim, C.-S. Contrast Enhancement Based on Layered Difference Representation of 2D Histograms. IEEE Trans. Image Process. 2013, 22, 5372–5384.

- [37] Guo, X.; Li, Y.; Ling, H. LIME: Low-Light Image Enhancement via Illumination Map Estimation. IEEE Trans. Image Process. 2016, 26, 982–993.

- [38] Ma, K.; Zeng, K.; Wang, Z. Perceptual Quality Assessment for Multi-Exposure Image Fusion. IEEE Trans. Image Process. 2015, 24, 3345–3356.

- [39] Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep Retinex Decomposition for Low-Light Enhancement. arXiv 2018, arXiv:1808.04560.

- [40] Ren, X.; Yang, W.; Cheng, W.-H.; Liu, J. LR3M: Robust Low-Light Enhancement via Low-Rank Regularized Retinex Model. IEEE Trans. Image Process. 2020, 29, 5862–5876.

- [41] Fu, G.; Duan, L.; Xiao, C. A Hybrid L2−Lp Variational Model for Single Low-Light Image Enhancement with Bright Channel Prior. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1925–1929.

- [42] Ying, Z.; Li, G.; Gao, W. A Bio-Inspired Multi-Exposure Fusion Framework for Low-Light Image Enhancement. arXiv 2017, arXiv:1711.00591.

- [43] Fu, X.; Zeng, D.; Huang, Y.; Liao, Y.; Ding, X.; Paisley, J. A Fusion-Based Enhancing Method for Weakly Illuminated Images. Signal Process. 2016, 129, 82–96.

- [44] Wang, Q.; Fu, X.; Zhang, X.-P.; Ding, X. A Fusion-Based Method for Single Backlit Image Enhancement. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 4077–4081.

- [45] Li, M.; Liu, J.; Yang, W.; Sun, X.; Guo, Z. Structure-Revealing Low-Light Image Enhancement via Robust Retinex Model. IEEE Trans. Image Process. 2018, 27, 2828–2841.

- [46] Wang, W.; Chen, Z.; Yuan, X.; Wu, X. Adaptive Image Enhancement Method for Correcting Low-Illumination Images. Inf. Sci. 2019, 496, 25–41.

- [47] Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-Reference Deep Curve Estimation for Low-Light Image Enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1780–1789.

| Methods | IE ↑ | PSNR (dB) ↑ | SSIM ↑ | NRMSE ↓ | RMSE ↓ |
|---|---|---|---|---|---|
| LR3M [40] | 6.856 | 15.316 | 0.591 | 1.009 | 39.215 |
| L2LP [41] | 7.325 | 14.835 | 0.503 | 1.012 | 43.264 |
| BIMEF [42] | 7.235 | 15.635 | 0.492 | 1.695 | 43.586 |
| FEM [44] | 7.519 | 13.876 | 0.487 | 2.211 | 52.367 |
| FBEM [43] | 7.232 | 13.437 | 0.498 | 2.024 | 61.354 |
| LLIE [45] | 7.124 | 14.235 | 0.506 | 1.351 | 47.391 |
| AIEM [46] | 7.465 | 13.987 | 0.469 | 2.036 | 52.367 |
| TFFR [33] | 6.754 | 11.789 | 0.417 | 1.975 | 74.259 |
| Zero-DCE [47] | 7.544 | 13.849 | 0.478 | 1.857 | 52.152 |
| Zero-DCE+ [34] | 7.635 | 12.954 | 0.389 | 1.956 | 59.367 |
| Ours | 7.873 | 15.642 | 0.673 | 0.360 | 42.113 |
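The comparison tables report information entropy (IE), PSNR, SSIM, NRMSE and RMSE. The NumPy sketch below shows how the four simpler metrics are commonly computed for 8-bit images; SSIM is omitted for brevity. The exact conventions behind the reported numbers are assumptions here. IE is taken over a 256-bin grayscale histogram, PSNR uses a peak value of 255, and NRMSE divides RMSE by the root-mean-square of the reference image, which corresponds to the "euclidean" option of scikit-image's `normalized_root_mse`. Normalizing by the reference's range or mean instead would give different NRMSE values.

```python
import numpy as np


def information_entropy(img_u8: np.ndarray) -> float:
    """Shannon entropy (bits) of an 8-bit grayscale image's 256-bin histogram."""
    hist = np.bincount(img_u8.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]  # empty bins contribute 0 (0 * log 0 is taken as 0)
    return float(-(p * np.log2(p)).sum())


def rmse(ref: np.ndarray, test: np.ndarray) -> float:
    """Root-mean-square error, computed in float64 to avoid uint8 wrap-around."""
    diff = ref.astype(np.float64) - test.astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))


def nrmse(ref: np.ndarray, test: np.ndarray) -> float:
    """RMSE divided by the RMS of the reference (assumed 'euclidean' normalization)."""
    return rmse(ref, test) / float(np.sqrt(np.mean(ref.astype(np.float64) ** 2)))


def psnr(ref: np.ndarray, test: np.ndarray, data_range: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 20 * log10(data_range / RMSE)."""
    err = rmse(ref, test)
    return float("inf") if err == 0 else float(20.0 * np.log10(data_range / err))
```

IE is a no-reference measure: an image that uses all 256 gray levels equally reaches the maximum of 8 bits. PSNR, RMSE and NRMSE all require a normal-light reference image.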

| Methods | IE ↑ | PSNR (dB) ↑ | SSIM ↑ | NRMSE ↓ | RMSE ↓ |
|---|---|---|---|---|---|
| LR3M [40] | 6.657 | 14.909 | 0.531 | 1.175 | 44.529 |
| L2LP [41] | 6.960 | 14.083 | 0.534 | 1.348 | 46.694 |
| BIMEF [42] | 7.018 | 15.251 | 0.460 | 1.808 | 45.193 |
| FEM [44] | 7.318 | 13.493 | 0.404 | 2.623 | 57.106 |
| FBEM [43] | 7.018 | 13.232 | 0.472 | 2.131 | 69.154 |
| LLIE [45] | 6.966 | 13.983 | 0.452 | 1.877 | 51.743 |
| AIEM [46] | 7.256 | 13.520 | 0.395 | 2.542 | 58.878 |
| TFFR [33] | 6.353 | 11.089 | 0.367 | 2.183 | 76.629 |
| Zero-DCE [47] | 7.104 | 13.459 | 0.412 | 2.003 | 55.519 |
| Zero-DCE+ [34] | 7.152 | 12.352 | 0.359 | 2.274 | 62.720 |
| Ours | 7.832 | 15.194 | 0.655 | 0.434 | 44.840 |

| Methods | IE ↑ | PSNR (dB) ↑ | SSIM ↑ | NRMSE ↓ | RMSE ↓ |
|---|---|---|---|---|---|
| w/o SER | 7.179 | 11.078 | 0.321 | 2.531 | 72.924 |
| w/o SC | 7.176 | 10.838 | 0.313 | 2.640 | 74.772 |
| w/o SER, SC | 7.122 | 11.854 | 0.341 | 2.309 | 66.709 |
| Full model | 7.832 | 15.194 | 0.655 | 0.434 | 44.840 |

| Losses | IE ↑ | PSNR (dB) ↑ | SSIM ↑ | NRMSE ↓ | RMSE ↓ |
|---|---|---|---|---|---|
| | 7.514 | 14.297 | 0.395 | 0.725 | 54.648 |
| | 7.431 | 13.173 | 0.357 | 2.516 | 63.374 |
| | 7.145 | 12.572 | 0.403 | 1.363 | 46.729 |
| | 7.334 | 13.425 | 0.449 | 1.373 | 50.304 |
| (full loss) | 7.832 | 15.194 | 0.655 | 0.434 | 44.840 |

| Methods | Platform | Time | Methods | Platform | Time |
|---|---|---|---|---|---|
| LR3M [40] | Matlab | 0.579 | AIEM [46] | Matlab | 0.529 |
| L2LP [41] | Matlab | 0.076 | TFFR [33] | Python | 0.117 |
| BIMEF [42] | Matlab | 32.429 | Zero-DCE [47] | Python | 0.259 |
| FEM [44] | Matlab | 3.742 | Zero-DCE+ [34] | Python | 0.143 |
| FBEM [43] | Matlab | 0.246 | Ours | Python | 0.093 |
| LLIE [45] | Matlab | 0.932 | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qu, P.; Tian, Z.; Zhou, L.; Li, J.; Li, G.; Zhao, C. SCDNet: Self-Calibrating Depth Network with Soft-Edge Reconstruction for Low-Light Image Enhancement. Sustainability 2023, 15, 1029. https://doi.org/10.3390/su15021029


Chicago/Turabian style: Qu, Peixin, Zhen Tian, Ling Zhou, Jielin Li, Guohou Li, and Chenping Zhao. 2023. "SCDNet: Self-Calibrating Depth Network with Soft-Edge Reconstruction for Low-Light Image Enhancement." Sustainability 15, no. 2: 1029. https://doi.org/10.3390/su15021029
