1. Introduction

In today’s education, a key challenge is to provide students with equal opportunities that facilitate the acquisition of skills and competencies required in the modern labor market [1]. Recent research has highlighted agency as a crucial element of professionalism [2], which aligns closely with the principles of sustainable education. Many researchers have found agency to play an important role in developing personal well-being and a meaningful career [3,4], creativity and transformed practices of expert work (e.g., [5]), as well as coping with work–life changes and lifelong learning [6]. Despite being acknowledged as an established educational objective at the policy level, student agency development has not yet received enough attention, especially in higher education [1]. For instance, according to Trede et al. [7], university students are not well prepared for a world of work that demands agency, and universities give more attention to the acquisition of formal and theoretical knowledge.

Research on student agency follows several directions. For instance, some studies revolve around participatory structures and learning relations (e.g., [8,9]) and singular constructs or characteristics of beliefs or self-processes of students (e.g., [10,11]). Several other studies focus on the development of knowledge in micro-level learning interactions, with a focus on epistemic agency (e.g., [12]). Additionally, several recent literature reviews have addressed student agency (e.g., [13,14]). For example, Stenalt and Lassesen [14] conducted a systematic review of student agency and its connection to student learning in higher education.

One prospective way to support student agency and its foundations is through learning analytics (LA). LA aims to make use of learners’ data to understand, enhance, and optimize learning. According to Wise [15], one of the key features of LA is that it enables students to take an active role in their learning process and supports the development of their self-regulatory skills. For instance, LA methods could predict student performance in courses and feed back information about learners’ competencies, knowledge levels, misconceptions, motivation, engagement status, and more (e.g., [16]). Such support can potentially facilitate learners’ engagement, meta-cognition, and decision making, and it can also provide customizability, which would eventually contribute to student agency and empowerment. To this end, Chen and Zhang [17] indicate that supporting choice-making using LA could promote high-level epistemic agency and design mode thinking competencies. Wise et al. [18] propose that LA could foster student agency by providing options for the customization of learning goals. van Leeuwen et al. [19] summarize that LA dashboards for students differ from earlier feedback systems in several ways: instead of providing simple static feedback, dashboards often visualize complex processes, and feedback can be available not only after the learning activity but also on demand.

Despite this potential, some studies argue that LA might restrict student agency. For instance, Ochoa and Wise [20] state that a lack of co-creation in developing and interpreting LA could restrict student agency because students are not given opportunities to act as active agents in the interpretation process, which is required to identify actionable insights. Saarela et al. [21] and Prinsloo and Slade [22] argue that a lack of open practices (e.g., algorithm opaqueness) and issues related to data ownership in LA could undermine student ownership of analytics and trust as well as the empowerment of students as participants in their own learning.

Several literature reviews have investigated different aspects of learning analytics at different levels of education. The review closest to our study was conducted by Matcha et al. [23]. While shedding light on many existing challenges in learning analytics research, that review overlooks the agency of learning and deals with learning analytics only from the perspective of self-regulated learning. Even though supporting self-regulated learning could contribute to fostering agency [24], the two differ in that the agency of learning emphasizes students’ ability to actively enhance learning environments, contribute to knowledge development, or engage in innovation processes. Given the absence of a systematic review of LA in supporting student agency and the lack of consensus among researchers on whether LA can contribute to student agency, this research aims to explore the potential effects of LA in promoting student agency.

1.1. The Construct of Agency

There has been an increasing interest in agency within research revolving around lifelong learning, the workplace, and learning frameworks such as constructivism and sociocultural theories (e.g., [25,26,27,28]). Nonetheless, the construct originates in the conceptualization of agency in the social sciences (e.g., [29,30]), where it refers to the capability of individuals to engage in self-defined, deliberate, and meaningful actions in situations limited by contextual and structural relations and factors [31].

The social–cognitive psychology standpoint sees agency as a mediating factor from thoughts to actions that are related to an individual’s intentionality, self-processes, and self-reflection, as well as competence beliefs and self-efficacy (e.g., [32]). Constructivist viewpoints on agency mostly deal with the active actions of individuals in knowledge development and reorganization [33]. On the other hand, a sociocultural viewpoint places more emphasis on agency exhibiting itself through decision making and instances of action (e.g., [34]). Moreover, aside from cultural and social structures, a subject-centered sociocultural viewpoint on agency considers the purposes, meanings, and interpretations that individuals attribute to actions that are deemed crucial in promoting agency (e.g., [35]). Generally, while many researchers acknowledge the subjective standpoint of agency, they place more emphasis on the dynamic, contextually situated, and relationally constructed nature of agency (e.g., [26]). For instance, Jääskelä et al. [36] synthesize the existing literature across psychology, educational sciences, and social sciences to define agency as “a student’s experience of having access to or being empowered to act through personal, relational, and participatory resources, which allow him/her to engage in purposeful, intentional, and meaningful action and learning in study contexts”.

1.2. The Potential of Learning Analytics to Promote Student Agency

Several disciplines have studied the concept of agency, which generally refers to an individual’s capacity to take action and effect changes. According to many researchers, when it comes to the educational context, an increase in student agency and deeper learning leads to more effective pedagogical practices (e.g., [37,38]). For example, Lindgren and McDaniel [39] state that considering agency while designing course guidance and instructions can help engage students in challenging learning tasks and improve their learning. Vaughn [35] indicates that by cultivating agency, teachers can adopt a flexible approach to teaching that supports students’ social-emotional needs and better directs them toward thinking about their engagement in the learning process. According to Scardamalia and Bereiter [40], creating instructional environments that encourage learners to pose educationally valuable questions can enhance agency and contribute to the development of knowledge structures. Moreover, students’ opportunities to contribute to their educational environments and engage in participatory learning have been highlighted as means of augmenting agency (e.g., [41]).

One potential way to support student agency is through learning analytics. In this regard, LA dashboards are often employed to visualize study pathways and learning processes, thereby enhancing learners’ awareness and providing them with personalized feedback. LA holds the potential to facilitate the promotion of student agency in numerous ways. For example, it can provide options allowing for goal setting and reflection, supporting meta-cognitive activities, facilitating student decision and choice making based on the analytics, supporting productive engagement and discussion in the online discussion environment, enabling students to identify actionable insights by involving them in the design and creation of analytic tools, and many more. Despite its potential, LA methods and dashboards frequently neglect to consider the integration of theoretical knowledge about learning and student agency into their designs (e.g., [15,42]).

1.3. Research Questions

To fill these gaps and build upon existing work, we pose the following four research questions:

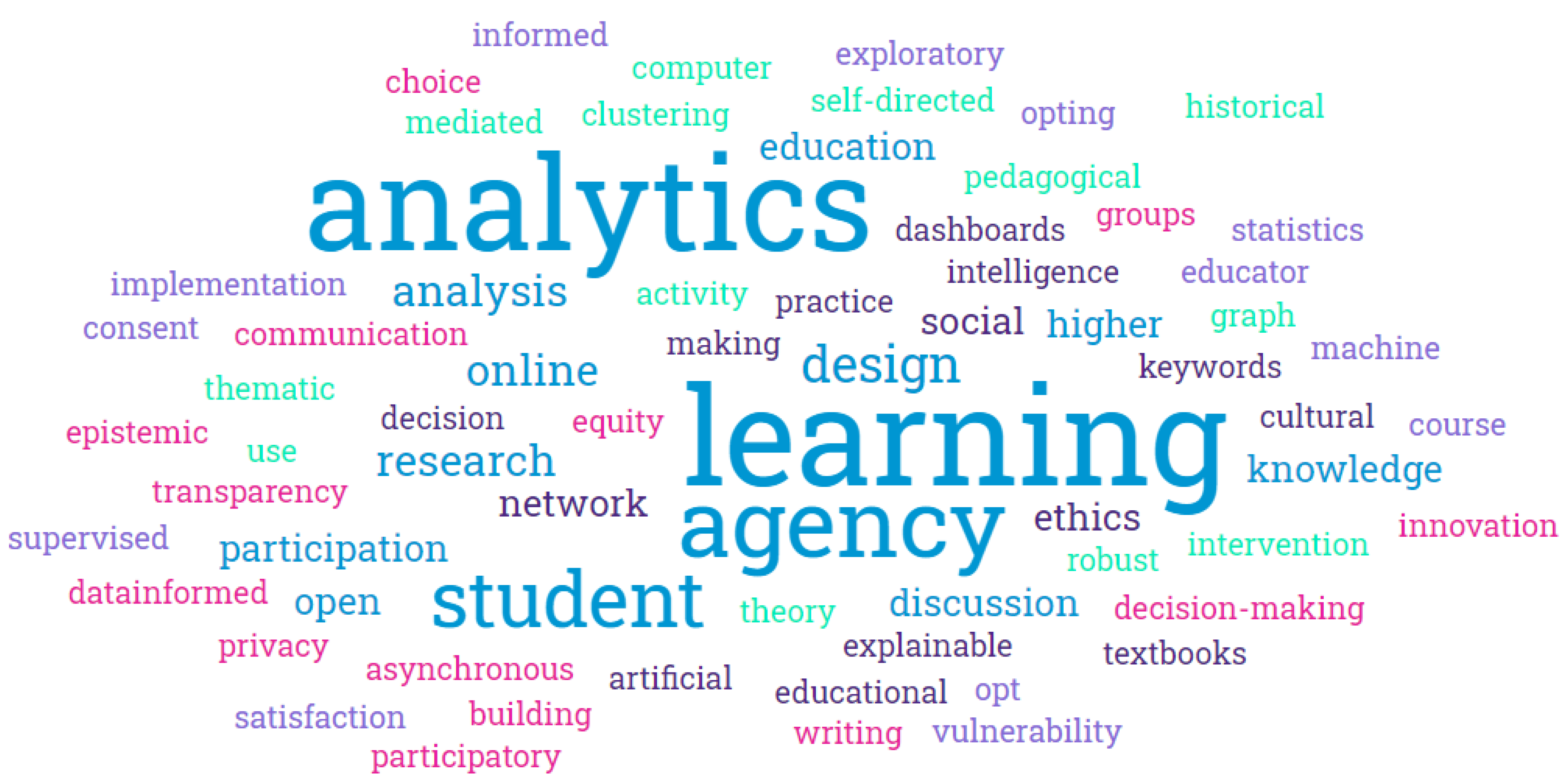

RQ1: What are the objectives for the application of learning analytics in the context of student agency?

RQ2: What types and methods of learning analytics have been employed to promote student agency?

RQ3: How can learning analytics more effectively support student agency?

RQ4: What majors and education levels were mostly targeted, and what types of data visualization were mostly employed by the LA dashboards or methods?

This article is structured as follows: Section 2 describes our systematic method, Section 3 presents the results, Section 4 provides the discussion (including existing challenges), and Section 5 presents the conclusions.

4. Discussion

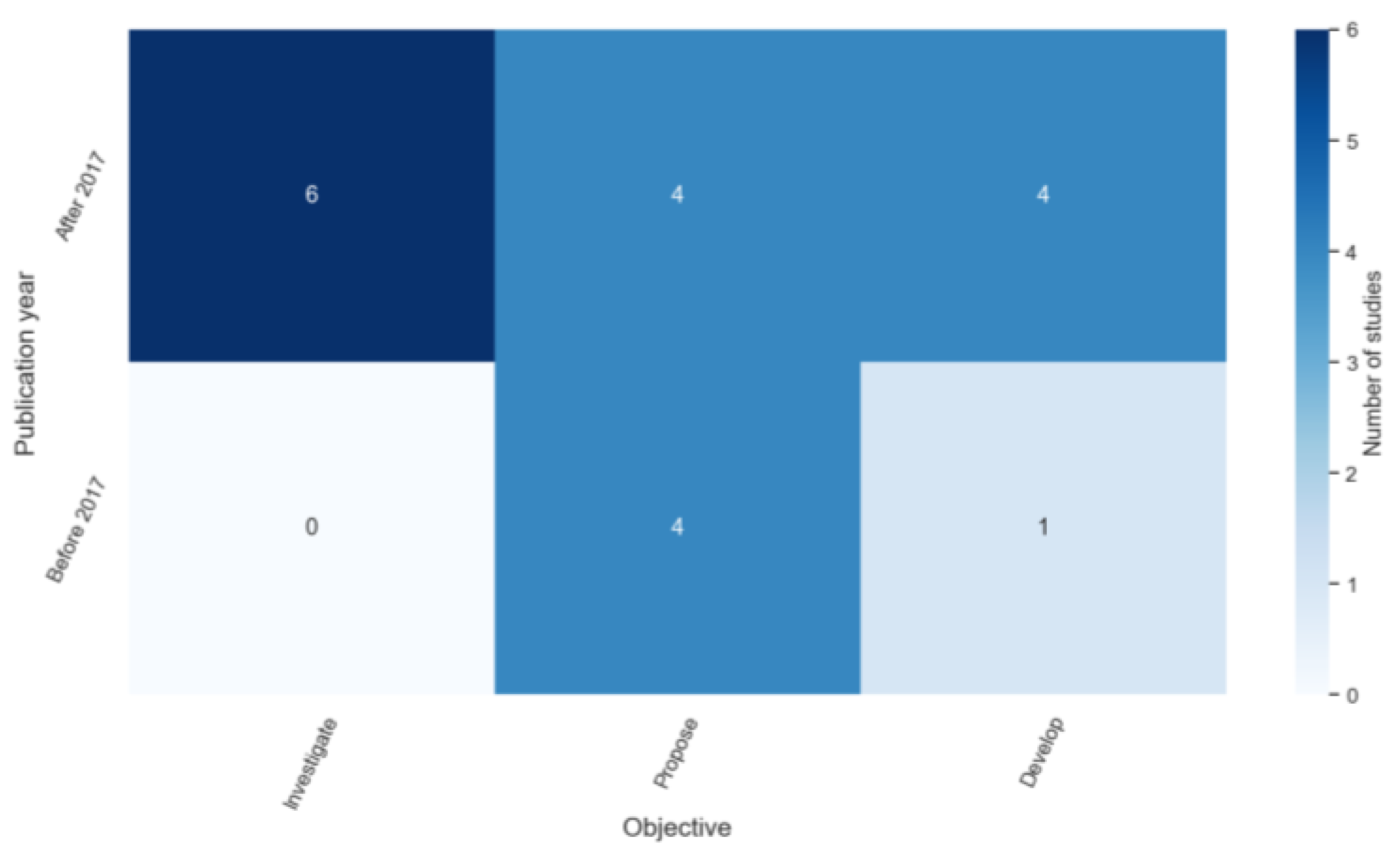

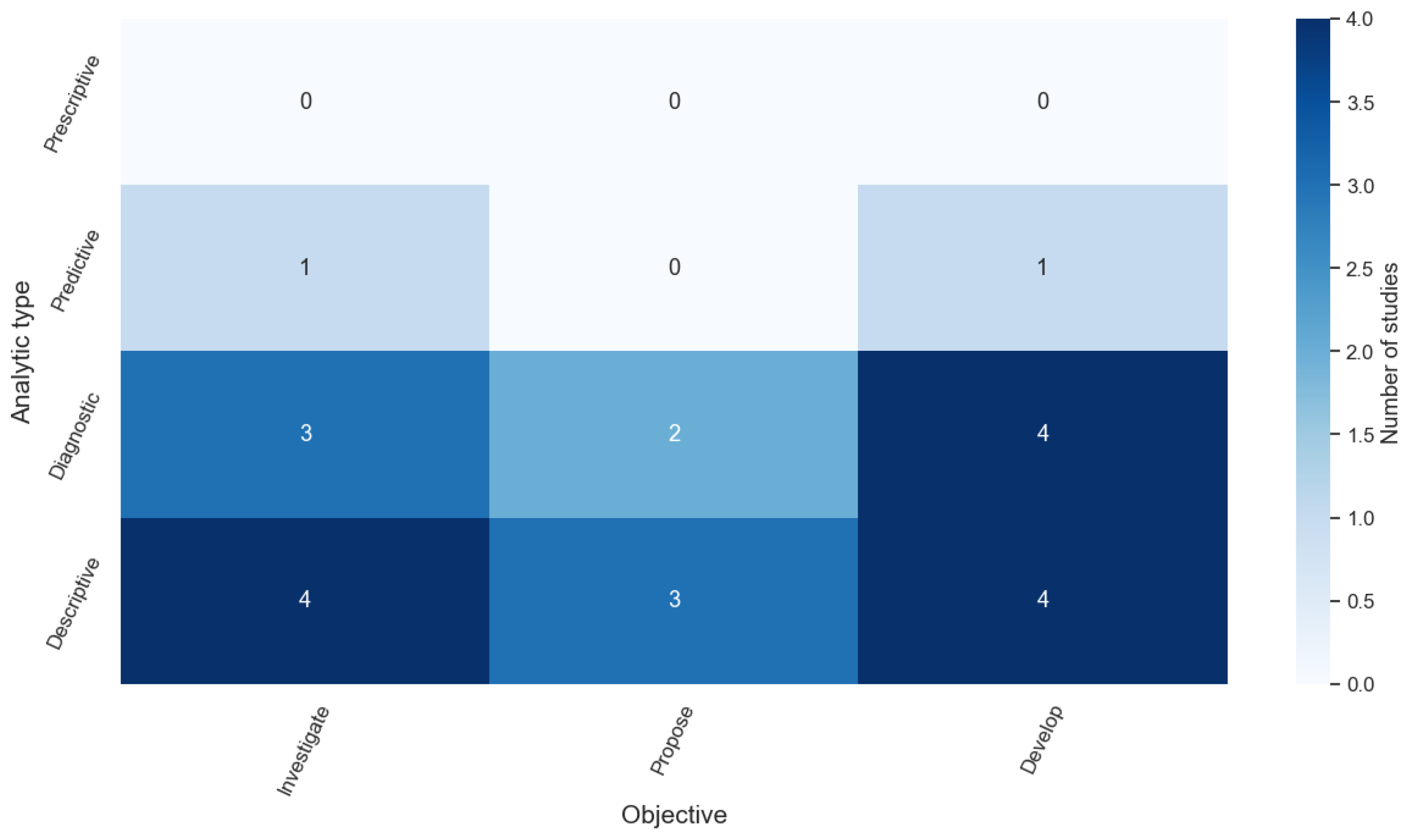

Concerning the first research question, our findings reveal that the included articles mainly aim to propose design principles or frameworks for LA methods or dashboards in supporting agency. The second and third most common objectives were, respectively, to investigate students’ experiences of agency or educators’ experiences of student agency in LA, and to develop LA methods and dashboards to support student agency. While some of the studies that investigated student or staff experiences of agency proposed design principles or frameworks that contribute to supporting student agency in learning analytics, none of those studies developed any new LA methods or dashboards. We also found that, compared with the period before 2017, more research on LA in supporting agency has appeared in the past five years and that all three objectives (investigating, proposing, and developing) have been covered by the LA research community.

Our findings from the second research question show that most of the included articles rely on descriptive and diagnostic analytics, paying less attention to predictive learning analytics and completely ignoring the potential of prescriptive learning analytics in supporting agency. Statistical analysis and data mining-related methods were mostly employed to cater for descriptive and diagnostic analytics. Moreover, we found that despite the potential of artificial intelligence and machine learning, they have been rarely used, and statistical methods are still widely preferred.
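To make the distinction between the four analytics types concrete, the following minimal Python sketch applies each of them to a small toy data set. It is an illustration only and is not drawn from any of the included studies: the data, the function names, and the choice of a straight-line model are hypothetical assumptions, and the data are deliberately noise-free so that the results are easy to check by hand.

```python
from statistics import mean

# Hypothetical toy data: weekly study hours and final scores for six students.
hours = [2.0, 4.0, 5.0, 7.0, 8.0, 10.0]
scores = [50.0, 58.0, 62.0, 70.0, 74.0, 82.0]


def descriptive(values):
    """Descriptive analytics: summarize what happened."""
    return {"mean": mean(values), "min": min(values), "max": max(values)}


def pearson(xs, ys):
    """Diagnostic analytics: how strongly two measures move together."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def fit_line(xs, ys):
    """Predictive analytics: least-squares line y = intercept + slope * x."""
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return my - slope * mx, slope


def prescribe(intercept, slope, target, options):
    """Prescriptive analytics: smallest candidate effort whose predicted
    score reaches the target, or None if no candidate does."""
    feasible = [h for h in sorted(options) if intercept + slope * h >= target]
    return feasible[0] if feasible else None


intercept, slope = fit_line(hours, scores)
suggestion = prescribe(intercept, slope, target=75.0, options=[6, 8, 9, 10])
```

The prescriptive step here simply inverts the fitted line, which implicitly treats the fitted relationship as causal. A real prescriptive LA system would need to justify that assumption and, in line with the design elements discussed in this review, leave the final choice to the student.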

Regarding the third research question, we found that nine main elements are important to consider in the design principles of LA methods/dashboards for supporting student agency. These include developing agency analytics, offering customization, embedding dashboards, meta-cognition support, decision-making support, engagement and discussion support, considering transparency and privacy, relying on learning sciences, and facilitating co-design. The most frequently recommended are to consider transparency and privacy, co-design, as well as developing agency analytics in LA methods/dashboards.

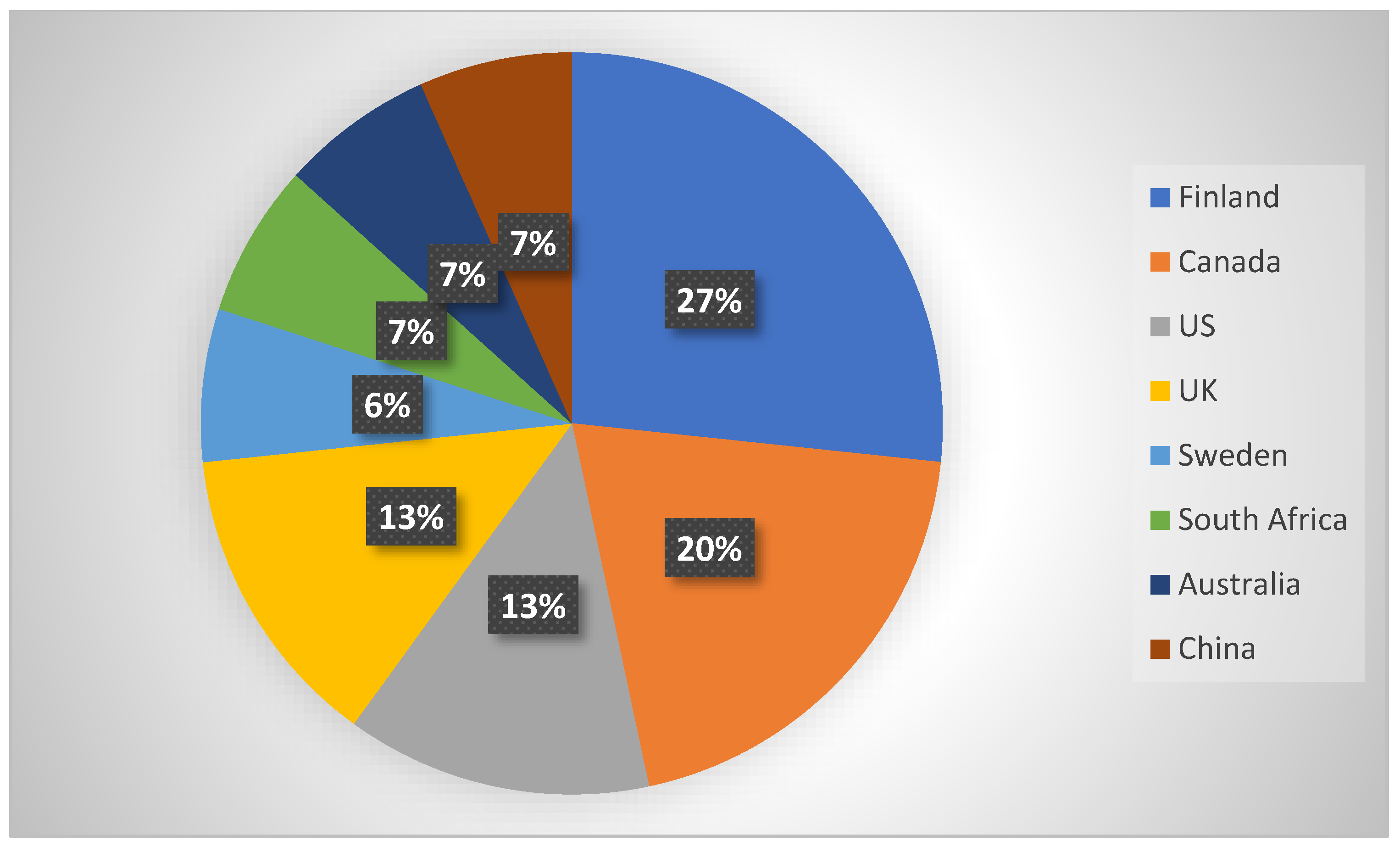

Concerning the last research question, our findings show that, surprisingly, no studies have considered the use of LA in supporting student agency in K–12 education (higher education has been the focus of the LA community thus far). Bar charts were the most frequently employed visualization type in the LA dashboards followed by textual, color coding, or summary tables. Also, most studies targeted promoting student agency using LA in social science and humanities majors. In addition, we have identified eight main challenges facing LA in supporting student agency according to our findings.

(1) General lack of LA research for supporting student agency. Despite the importance of student agency and its effects on the quality of education systems, as well as of LA in supporting student agency (e.g., [1,59,60]), this topic seems to have received too little attention. Our systematic search returned only 278 articles (including duplicates) from eight major databases dealing with LA and agency. More research is required to investigate the potential of different types and methods of LA in supporting student agency. Moreover, there is still a lack of proper frameworks or design principles for LA methods and dashboards to support student agency.

(2) Complete neglect of student agency for K–12. One of the most striking findings of this research is that no studies have yet investigated, proposed, or developed LA methods or dashboards for supporting student agency in K–12 education. Despite the OECD’s call for supporting student agency and its advantages for society and education [1], the potential of LA for fostering student agency has not been explored at this level. LA methods and dashboards can promote student agency through different means that eventually increase students’ engagement and motivation throughout the teaching and learning process, thus increasing their comprehension of content. According to our findings, some prospective ways that LA can foster student agency include enabling students to identify actionable insights, providing interventions to balance what students want and what is good for them, offering different types of analytics with explanations facilitating student decision and choice making, allowing for goal setting and reflection (supporting meta-cognitive activities in general), and many more.

(3) Open practices and data ownership problems of LA. Among the included studies, only one had developed LA methods that aim to reduce ethical issues, by developing an explainable predictive LA [21]. There is not enough developmental work in LA that supports agency while addressing privacy challenges such as anonymizing and deidentifying individuals, data transparency, and ownership. For instance, there are no LA-related studies in supporting student agency that include students in the transparency of the data process and the opaqueness of algorithms (open practices and data ownership). As argued by Prinsloo and Slade [22], to bring about student ownership of analytics and trust, the ethical adoption of LA requires empowering students as participants in their learning and increasing student agency.

(4) Lack of co-creation in developing LA. Even though co-creation is emphasized by several studies (e.g., [20,49,53]), there seem to be no studies that actually involve students and/or educators in the development and interpretation of LA for supporting agency. Involving students and educators in the development process of LA could promote the identification of actionable insights and even help bridge the gap between theory and practice. More specifically, it enables students to act as active agents in the interpretation process rather than having the data digested for them, which helps them identify what actions they could take next (translating their understanding into practical actions). Also, it can help take into account how students may change their behavior as a result of using the dashboard.

(5) Not enough attention toward tracing student agency using log data. The majority of LA methods relied on self-reported answers of students collected through assessment tools for student agency (e.g., Jääskelä et al. [37]). One key potential of LA is its capability to benefit from students’ log data during the learning process and provide continuous and uninterrupted assessments that can further be used to support students and educators in agency. Unfortunately, of all the included studies, none had identified indicators of student agency during the learning process and benefited from these to provide different means of LA. For instance, the explainable agency analytics developed by Saarela et al. [21] mainly relies on students’ responses to their assessment tool to create agency profiles for students. Future works could take advantage of both log and self-reported data to develop more comprehensive LA methods and dashboards.

(6) Less attention toward predictive analytics and ignoring early predictive modeling. Of all included articles, only two considered predictive modeling in their learning analytics types. Most studies employed descriptive or diagnostic analytics. Interestingly, neither of the two articles took advantage of early predictive modeling; both merely used students’ answers to learner agency questionnaires to predict agency profiles of future students after they respond to the same assessment tools. According to Macfadyen and Dawson [61], the primary objective of predictive modeling is to make early predictions and provide timely intervention. In the context of education, the prediction of students’ performance and agency has the potential to enhance various educational systems (e.g., [62]). However, in practice, these predictive models struggle to make accurate and timely predictions during the initial stages of the learning process. If learners are only informed about their performance or agency profiles after completing a learning session without considering the element of time, it becomes challenging for educators or the educational system to offer personalized interventions that can prevent failure or enhance their learning agency.

(7) Neglecting the potential of prescriptive learning analytics. Another important finding was the complete neglect of prescriptive analytics and its potential to foster student agency. Prescriptive learning analytics aims to employ data-driven prescriptive analytics to interpret the internals of predictive LA models, explain their predictions for individual students, and accordingly generate evidence-based hints, feedback, etc. Despite its potential (see Susnjak [63]), no works have combined a developed predictive learning analytics model with the desired outcome for an individual student in order to prescribe an optimized input (course of actions) for achieving that outcome. For instance, such an LA type could guide students toward engaging in discussion with certain peers, revising a concept, attempting extra quizzes, solving specific practice examples, taking extra assignments, and so forth. Therefore, translating predictions into actionable insights is missing from the literature.

(8) General absence of explainable AI. Of all 15 included articles, only one developed interpretability for their model using a general explainer model (like Shapley) (Saarela et al. [21]). While such explainers are effective and successful in generating an approximation of the decisions, there is still a long way to go in the field if we want truly practical LA methods and dashboards to support students. Unfortunately, general explainer algorithms like Shapley and LIME suffer from serious challenges: (i) using a particular attribute to predict without that attribute appearing in the prediction explanation, (ii) producing unrealistic scenarios, and (iii) being unable to reason about the path to their decisions (e.g., [64,65]). According to this and many other related works in LA research (see [63]), most LA methods focus on the development of models that are more accurate and effective in their predictions. There is a general absence of explainable AI for LA usage. In general, LA methods and dashboards will have limited capabilities, and their uptake will remain constrained if they are not equipped with the ability to provide the reasoning behind their decisions/predictions as well as recommendations on proper pathways for moving forward.
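As a concrete illustration of what a Shapley-style explanation computes, the sketch below derives exact Shapley values for a single prediction by averaging each feature's marginal contribution over all feature orderings. It is a minimal example under stated assumptions and not the pipeline used by Saarela et al. [21]: the model `toy_agency_model`, the feature names, and the all-zero reference point are hypothetical, and practical explainer libraries approximate this computation because exhaustive enumeration is only feasible for a handful of features.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley attribution of predict(instance) - predict(baseline).

    Features that have not yet been added keep their baseline value.
    Every feature ordering is enumerated, so the cost grows factorially
    with the number of features.
    """
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)
        previous = predict(current)
        for name in order:
            # Switch this feature from its baseline to its actual value
            # and credit it with the resulting change in the prediction.
            current[name] = instance[name]
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


def toy_agency_model(x):
    """Hypothetical scoring model with one interaction term."""
    return (2.0 * x["self_efficacy"]
            + 1.0 * x["participation"]
            + 0.5 * x["self_efficacy"] * x["peer_support"])


student = {"self_efficacy": 4.0, "participation": 3.0, "peer_support": 2.0}
reference = {"self_efficacy": 0.0, "participation": 0.0, "peer_support": 0.0}

phi = shapley_values(toy_agency_model, student, reference)
```

In this toy case the interaction term is split evenly between `self_efficacy` and `peer_support`, and the attributions sum to the difference between the student's prediction and the reference prediction. The attribution describes how much each feature contributed, not which action the student should take next, which is one reason such explanations alone fall short of the actionable guidance discussed above.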

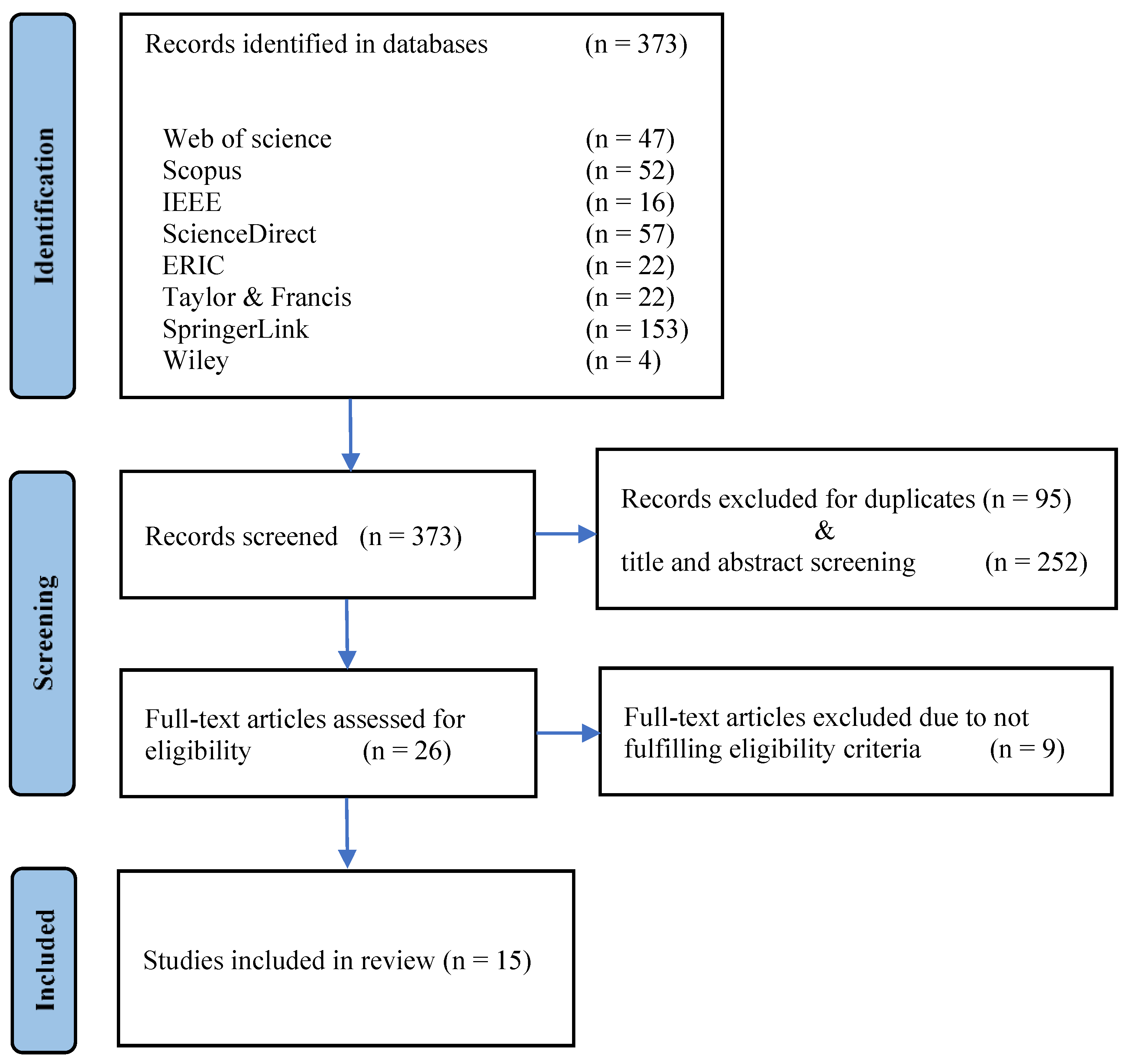

Limitations

We employed eligibility criteria to constrain our references. For instance, we only considered studies that properly dealt with the use of LA in supporting student agency. We did not consider studies published in books, theses, or dissertations. Moreover, we excluded articles with a focus on the agency of teachers or staff. Finally, studies that only implicitly revolve around student agency (e.g., through the lens of other concepts like self-regulation) were not considered. More specifically, only articles that explicitly used the term “agency” were included. Without this restriction, we would probably have been dealing with a larger number of articles.

5. Conclusions

This research examined 15 articles to answer four main research questions related to the use of LA in supporting student agency. Analysis of the included articles showed that most of the articles’ objectives were to investigate students’ or educators’ experiences with respect to agency, propose design principles or frameworks that enable LA methods/dashboards to support agency more effectively, and develop LA dashboards or methods to promote agency. Of those studies with the aims of investigating or proposing, none developed any new LA methods or dashboards for promoting student agency. Similarly, none of the studies with the development objective had first investigated student agency experiences and then used the findings to develop (evidence-based) LA methods and dashboards to support student agency. Further analysis revealed that, compared with the period before 2017, more research on LA in supporting agency has appeared in the past five years and that all three objectives (investigating, proposing, and developing) have been covered by the LA research community.

Regarding the second research question, our findings showed that the LA community overlooks the potential of predictive and prescriptive learning analytics in supporting agency and still relies mainly on descriptive and diagnostic analytics. To this end, statistical analysis and data mining-related methods were mostly employed to cater for descriptive and diagnostic analytics, while the potential of artificial intelligence and machine learning techniques has not been exploited. Further analysis revealed that more studies have considered investigating, proposing, and developing elements contributing to fostering student agency using descriptive analytics, followed by diagnostic analytics.

The findings of the third research question revealed nine main LA design elements for more effectively supporting student agency. Some examples include offering customization, meta-cognition support, engagement and discussion support, considering transparency and privacy, relying on learning sciences, and facilitating co-design. Of these nine, the most frequently recommended were to consider transparency and privacy, co-design, as well as developing agency analytics in LA methods/dashboards. For the fourth research question, we found that no studies have considered the use of LA in supporting student agency in K–12 education, and that bar charts were the most frequently employed visualization type in the LA dashboards, followed by textual, color coding, and summary tables. Also, the findings showed that most studies targeted promoting student agency using LA in social science and humanities majors. Further analysis showed that while textual and summary tables were investigated by some studies, no studies had actually employed them in developmental work for promoting agency using LA. Finally, our analysis highlighted eight main challenges facing LA in supporting student agency. These include the general lack of LA research for supporting student agency, a complete neglect of student agency for K–12, open practices and data ownership problems of LA, lack of co-creation in developing LA, not enough attention toward tracing student agency using log data, less attention toward predictive analytics and ignoring early predictive modeling, neglecting the potential of prescriptive learning analytics, and the general absence of explainable AI.

In conclusion, the analysis of the articles has provided valuable insights regarding the role of LA in fostering student agency. A key observation is the importance of researching and implementing ways to support student agency, recognizing the role of LA and AI. To ensure the maintenance of agency in a data-enriched environment, it is imperative to investigate the essential skills that learners and students must possess. This can be achieved by creating and testing models that combine LA with student agency, especially in K–12 education. Engaging in a co-creative approach that involves educators, students, and other stakeholders in the design and evaluation of LA systems promises to yield more effective and widely adopted tools. This collaborative approach not only guarantees the effectiveness of the tools developed but also enhances their widespread acceptance. By prioritizing a human-centered methodology in designing LA solutions, a direct link can be established between end-users and the developmental process. Consequently, this approach not only aligns the tools with pedagogical frameworks but also profoundly advances and reinforces student agency in a synergistic manner.