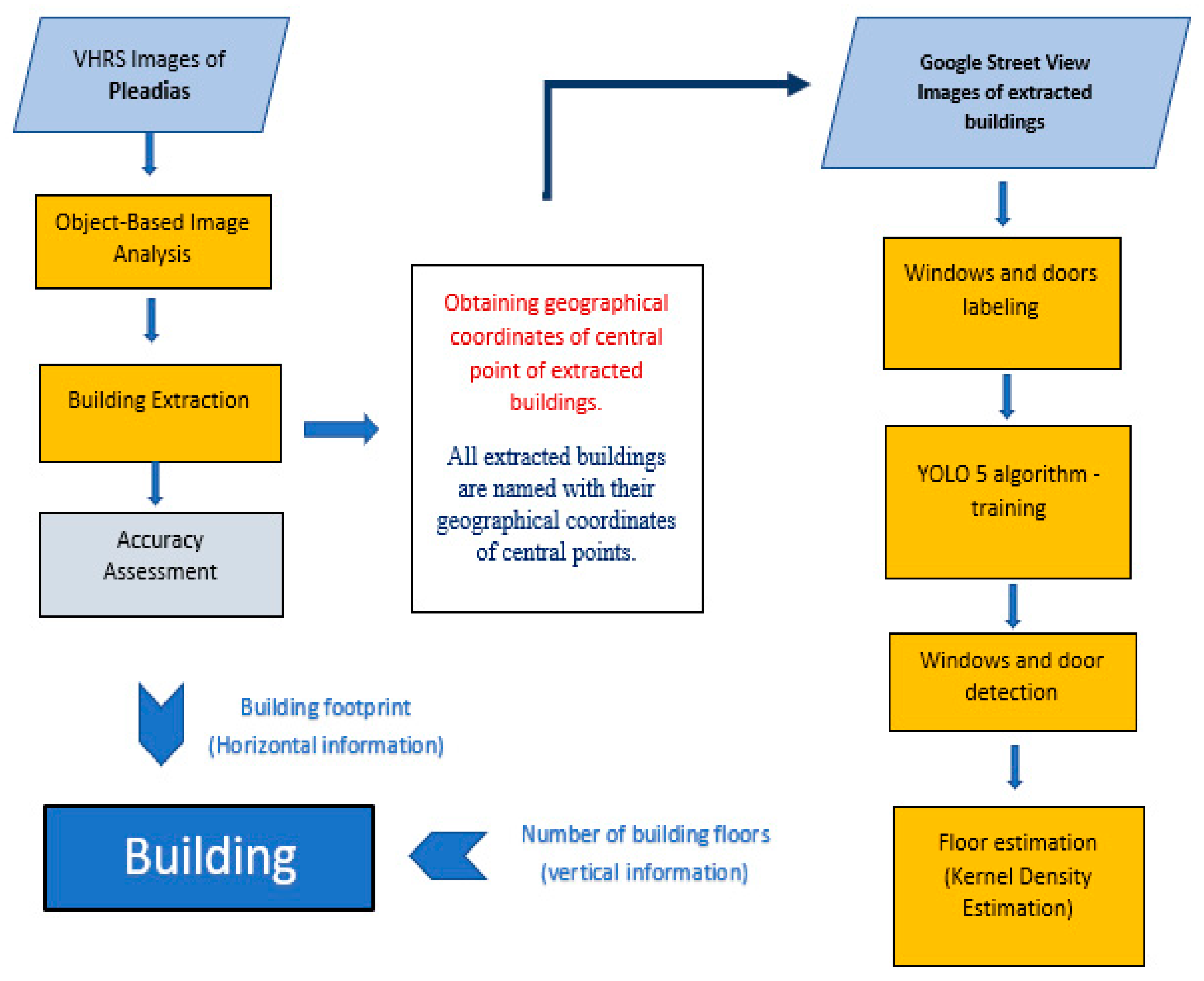

1.1.1. OBIA for Building Extraction from VHRS Images

One of the main challenges in satellite image analysis is obtaining accurate information about the shape, size, and structure of buildings within a short time [11]. High-resolution satellite (HRS) images make it possible to extract building boundaries at a given time and to analyze the spatial distribution of buildings over a given period. Studies using HRS images for semi-automated or automated building extraction have recently increased. However, building extraction from satellite images in urban applications is complicated [12,13] by the different sizes and shapes of buildings, varying roof textures, and obstructions around buildings [13]. Classification is the focal point of building extraction techniques, and classification methods can be grouped into two main types: pixel-based image analysis and object-based image analysis (OBIA). Whereas pixel-based classification uses only the spectral information of each pixel within the study area, object-based classification operates on image objects (groups of adjacent, similar pixels produced by segmentation) and therefore also considers their spatial, textural, and contextual information [13]. In this regard, the object-based method can be considered more advanced.
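The difference between the two feature views can be illustrated with a toy example. The following is a minimal sketch, not the method of any cited study: it assumes that a segmentation step has already grouped pixels into objects, represented by a hypothetical integer label array `segments`. A pixel-based classifier sees one spectral vector per pixel, whereas an object-based classifier can also use per-object statistics such as the mean value, a simple texture proxy (standard deviation), and the object area.

```python
import numpy as np

def pixel_features(image: np.ndarray) -> np.ndarray:
    """Pixel-based view: one feature vector (the spectral values) per pixel."""
    # image has shape (rows, cols, bands); flatten to (rows * cols, bands)
    return image.reshape(-1, image.shape[-1]).astype(float)

def object_features(image: np.ndarray, segments: np.ndarray) -> dict:
    """Object-based view: spectral, textural, and geometric features per segment.

    `segments` is a hypothetical label array (same rows/cols as `image`)
    assumed to come from an earlier segmentation step.
    """
    feats = {}
    for label in np.unique(segments):
        mask = segments == label
        pixels = image[mask].astype(float)        # shape (n_pixels, bands)
        feats[int(label)] = {
            "mean": pixels.mean(axis=0),          # spectral feature
            "std": pixels.std(axis=0),            # simple texture proxy
            "area": int(mask.sum()),              # geometric feature (pixel count)
        }
    return feats

# Toy 4x4 single-band image: a bright "roof" block on a darker background.
image = np.array([
    [10, 10, 10, 10],
    [10, 200, 210, 10],
    [10, 205, 195, 10],
    [10, 10, 10, 10],
], dtype=np.uint8)[..., np.newaxis]

# Assumed segmentation result: label 1 = roof object, label 0 = background.
segments = np.zeros((4, 4), dtype=int)
segments[1:3, 1:3] = 1

print(pixel_features(image).shape)        # (16, 1): 16 samples, 1 feature each
obj = object_features(image, segments)
print(obj[1]["mean"], obj[1]["area"])     # roof object: mean [202.5], area 4
```

In real OBIA workflows, the segmentation and the feature set are far richer; the point here is only that the unit of classification changes from the pixel to the object.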

Several previous studies have compared the performance of object-based and pixel-based analyses. Myint et al. examined whether object-based classification could accurately identify urban classes [14]. Using QuickBird images of central Phoenix, Arizona, they found that the object-based classifier achieved a high overall accuracy of 90.40%, whereas the maximum-likelihood classifier, a per-pixel classifier, achieved a lower overall accuracy of 67.60% [14]. Rittle et al. investigated the performance of object-based and pixel-based techniques for land cover mapping of the Alto Ribeira Tourist State Park in the Brazilian Atlantic rainforest region [15]. This comparative study showed that object-based classification provided higher accuracy (kappa index of 0.8687) than a hybrid per-pixel classification (kappa index of 0.2224) [15]. Gao and Mas investigated how well object-based image analysis classifies satellite images with varying spatial resolutions, comparing its results with those of the pixel-based method [16]. Their study showed that object-based image analysis achieved higher classification accuracy on images with higher spatial resolution [16].
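The studies above report overall accuracy and the kappa index, both of which are computed from a confusion matrix. The sketch below shows the standard Cohen's kappa calculation, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected by chance. The confusion matrix is invented for illustration and does not come from [14] or [15].

```python
import numpy as np

def overall_accuracy(cm: np.ndarray) -> float:
    """Share of samples on the diagonal, i.e., correctly classified."""
    return float(np.trace(cm) / cm.sum())

def cohens_kappa(cm: np.ndarray) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement.

    cm[i, j] = number of reference samples of class i predicted as class j.
    """
    n = cm.sum()
    p_o = np.trace(cm) / n                                   # observed agreement
    p_e = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n ** 2   # chance agreement
    return float((p_o - p_e) / (1 - p_e))

# Hypothetical 2-class confusion matrix (building vs. non-building).
cm = np.array([[45, 5],
               [10, 40]])
print(overall_accuracy(cm))         # 0.85
print(round(cohens_kappa(cm), 2))   # 0.7
```

Because kappa discounts chance agreement, it is typically lower than overall accuracy for the same matrix, which is why the two measures are not directly interchangeable when comparing studies.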

Many studies based on OBIA have produced high-quality building extraction results. Prathiba et al. proposed a method combining object-based nearest-neighbor classification with a rule-based classifier to extract building footprints from VHRS images [17]; it achieved an accuracy of over 82.5% [17]. Jamali et al. tested two automated building extraction approaches on aerial LiDAR point cloud and digital image datasets of Istanbul [18]. In terms of visual interpretation, the object-based automated technique produced better results than the threshold-based technique [18]. Gavankar and Ghosh applied an object-based automatic building extraction technique to pan-sharpened IKONOS multispectral images to obtain building footprints [13]. Their method extracted highly accurate building footprints without requiring training samples or a digital elevation model [13].

Other studies have used OBIA on UAV and satellite images to identify the physical characteristics of urban slum settlements [19] and to differentiate between formal and informal housing in built-up areas [20]. However, some alterations to formal buildings, such as adding floors without authorization, generally do not show the typical slum characteristics that would distinguish them from the formal urban environment. Therefore, common image analysis techniques that detect and classify formal and slum settlements are insufficient in such cases. Rather than classifying slums and formal housing, our study aimed to develop a rapid, automated approach for extracting and documenting all existing buildings from HRS images in order to verify and update public records.

1.1.2. YOLO Algorithm for the Extraction of Building Façade Elements from SV Images

Compared with traditional remote sensing, SV images contribute effectively to vertical urban research, helping to understand the spatial structure of streets, identify urban features, and extract building façade elements. Traditional aerial photogrammetry produces a two-dimensional image of the city through vertical (i.e., nadir) imaging [21]. Unlike nadir imaging, oblique imaging can accurately capture the specific details of urban structures [21]. Oblique aerial images from UAVs can provide meaningful information for building façade studies. However, they also require matching algorithms that cope with the particular characteristics of large-scale urban oblique aerial imagery, such as depth discontinuities, occlusions, shadows, low texture, and repetitive patterns [22]. A typical problem in UAV image analysis is that the building façade is not viewed frontally, so a geometric transformation must be applied [23].
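Because a façade is approximately planar, such a transformation is commonly modeled as a planar homography; the source does not specify which method [23] uses. The following is a minimal sketch under that assumption: four façade corners are picked in an oblique image (the coordinates are hypothetical), a 3x3 homography is estimated with the normalized direct linear transform (DLT), and the corners are mapped onto a fronto-parallel rectangle.

```python
import numpy as np

def apply_homography(H: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map (N, 2) points through a 3x3 homography H."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]   # divide by the homogeneous coordinate

def _normalization(pts: np.ndarray) -> np.ndarray:
    """Similarity transform to zero mean and average distance sqrt(2)."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2) / np.linalg.norm(pts - mean, axis=1).mean()
    return np.array([[scale, 0.0, -scale * mean[0]],
                     [0.0, scale, -scale * mean[1]],
                     [0.0, 0.0, 1.0]])

def estimate_homography(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Normalized DLT: estimate H with dst ~ H @ src from N >= 4 point pairs."""
    t_src, t_dst = _normalization(src), _normalization(dst)
    s, d = apply_homography(t_src, src), apply_homography(t_dst, dst)
    rows = []
    for (x, y), (u, v) in zip(s, d):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # Solution: right singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h_norm = vt[-1].reshape(3, 3)
    H = np.linalg.inv(t_dst) @ h_norm @ t_src   # undo both normalizations
    return H / H[2, 2]

# Hypothetical façade corners in an oblique image (TL, TR, BR, BL), in pixels.
src = np.array([[120, 80], [400, 130], [390, 420], [110, 460]], dtype=float)
# Target fronto-parallel rectangle, 300 px wide and 400 px tall.
dst = np.array([[0, 0], [300, 0], [300, 400], [0, 400]], dtype=float)

H = estimate_homography(src, dst)
print(np.round(apply_homography(H, src), 3))  # approximately the dst corners
```

In practice, OpenCV provides `cv2.getPerspectiveTransform` for the four-point case and `cv2.warpPerspective` to resample the whole image with the estimated matrix.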

Street view images (SVIs) can easily be used to collect critical information about urban areas at the street scale, and this new data source has become popular in many kinds of research. These studies have mainly focused on evaluating visual perceptions of urban streets and the quality of street spaces [9,24,25,26,27,28], detecting trees and plants in cities [29,30,31], exploring the climatic conditions and air pollution of cities [32,33,34], and assessing walkability in urban streets [35,36]. These efforts have shown that SVI analysis can produce comprehensive and reliable results for understanding human interactions with urban environments and outdoor dynamics.

Beyond cultural and social studies, building façade information can also serve technical purposes such as building reconstruction [10], structural health monitoring [23], façade defect detection [10], urban heritage identification, building energy performance analysis [37], and low-energy building design [37,38]. Object detection is central to SVI analysis for these technical purposes: it is used to identify and locate one or more types of objects in images [39,40,41]. Detecting building façade elements, a subtask of object detection, is an important problem in computer vision [10]. Multi-source data analyzed with machine learning and computer vision techniques have broadened the understanding of urban environments, and deep learning algorithms have recently been widely employed in object recognition and detail extraction [10].

Traditional detection approaches are often difficult to implement because of their high time complexity, low robustness, and strong scene dependency [42]. In recent years, target detection methods based on convolutional neural networks (CNNs) have provided satisfactory detection results [42], significantly improving both the efficiency and accuracy of target detection. Object detection methods based on deep neural architectures can be categorized as one-stage or two-stage detectors [43,44]. Classification and localization are the two main tasks in object detection, and two-stage algorithms were developed to address them [45]. R-CNN, Fast R-CNN, Faster R-CNN, SPP-Net, and Mask R-CNN are two-stage models that generate region proposals, classify them, and refine their positions [46]. In the first stage, they extract a set of candidate bounding boxes [45]. In the second stage, the CNN detector extracts features from the selected candidate boxes, and these features are then used for classification and bounding box regression [41]. One-stage detectors, including the Single Shot MultiBox Detector (SSD), RetinaNet, Fully Convolutional One-Stage (FCOS), DEtection TRansformer (DETR), and the You Only Look Once (YOLO) family, predict bounding boxes and compute their class probabilities with a single network [39]. When developing a model, it is crucial to balance computation speed and detection accuracy [42]. Two-stage algorithms provide accurate results but are slow and structurally complicated [45], whereas one-stage detection methods are simpler and better suited to fast object detection [41].
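Detectors of both families usually output many overlapping candidate boxes for the same object. As an illustration, the sketch below shows intersection over union (IoU) and greedy non-maximum suppression (NMS), a standard post-processing step in detectors such as YOLO, SSD, and Faster R-CNN (DETR is designed to work without it). Boxes are assumed to be in (x1, y1, x2, y2) pixel format; the detections and the 0.5 threshold are hypothetical.

```python
import numpy as np

def iou(box: np.ndarray, boxes: np.ndarray) -> np.ndarray:
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms(boxes: np.ndarray, scores: np.ndarray, iou_thresh: float = 0.5) -> list:
    """Greedy NMS: keep the best box, drop boxes overlapping it, repeat."""
    order = np.argsort(scores)[::-1]   # indices sorted by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_thresh]   # discard heavy overlaps
    return keep

# Three hypothetical window detections: the first two overlap heavily.
boxes = np.array([[10, 10, 50, 60],
                  [12, 12, 52, 62],
                  [100, 20, 140, 70]], dtype=float)
scores = np.array([0.90, 0.75, 0.80])
print(nms(boxes, scores))  # [0, 2]
```

The second box (IoU of about 0.84 with the first) is suppressed, while the distant third box survives; raising the threshold would keep more duplicates.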

YOLO is one of the best-known deep learning detection algorithms, and its single-stage architecture makes it particularly fast [47]. It treats classification and localization as a single regression problem [45]. YOLOv5, which builds on YOLOv4, is the most widely used detector in the YOLO series, and it also aims to improve the detection of small targets [37].
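To illustrate what treating detection as a single regression problem means, the sketch below follows YOLOv5-style head conventions as we understand them from the public YOLOv5 code: three output scales with strides 8, 16, and 32, three anchors per grid cell, and a vector of 5 + C values per anchor (four box offsets, one objectness score, C class scores). It counts the predictions for a 640 x 640 input and decodes one raw prediction into a box. The single "window" class and the 10 x 13 px anchor are illustrative assumptions, not settings from this study.

```python
import math

STRIDES = (8, 16, 32)     # assumed YOLOv5-style output strides
ANCHORS_PER_CELL = 3      # assumed number of anchors per grid cell per scale

def prediction_layout(img_size: int, num_classes: int):
    """Return (number of predictions, values per prediction) for one image."""
    cells = sum((img_size // s) ** 2 for s in STRIDES)
    return cells * ANCHORS_PER_CELL, 5 + num_classes

def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))

def decode(raw, cell_x, cell_y, stride, anchor_w, anchor_h):
    """Decode one raw prediction [tx, ty, tw, th, tobj, *tcls] into a box.

    Returns (center_x, center_y, width, height, objectness, class_probs)
    in input-image pixels, using YOLOv5-style offset equations.
    """
    tx, ty, tw, th, tobj, *tcls = raw
    cx = (2 * sigmoid(tx) - 0.5 + cell_x) * stride   # center offset within cell
    cy = (2 * sigmoid(ty) - 0.5 + cell_y) * stride
    w = (2 * sigmoid(tw)) ** 2 * anchor_w             # scale relative to anchor
    h = (2 * sigmoid(th)) ** 2 * anchor_h
    return cx, cy, w, h, sigmoid(tobj), [sigmoid(t) for t in tcls]

# One class ("window"), 640 x 640 input.
n_preds, n_values = prediction_layout(640, num_classes=1)
print(n_preds, n_values)  # 25200 6

# All-zero raw outputs at cell (3, 2) of the stride-8 grid, illustrative anchor.
box = decode([0.0] * 6, cell_x=3, cell_y=2, stride=8, anchor_w=10, anchor_h=13)
print(box[:4])  # (28.0, 20.0, 10.0, 13.0)
```

Box coordinates, objectness, and class scores for every grid cell come out of one forward pass, so no separate region-proposal stage is needed; this is the source of the speed advantage described above.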

The YOLO algorithm is widely used for target detection in many kinds of studies, such as estimating pedestrian positions from video recordings [48], identifying insect pests that affect agricultural production [49], discriminating between icebergs and ships [50], detecting and recognizing ships in complex-scene SAR images [51], bridge detection [52], underwater object detection [53], automatic roadside feature detection [54], and object detection in automated driving [55].

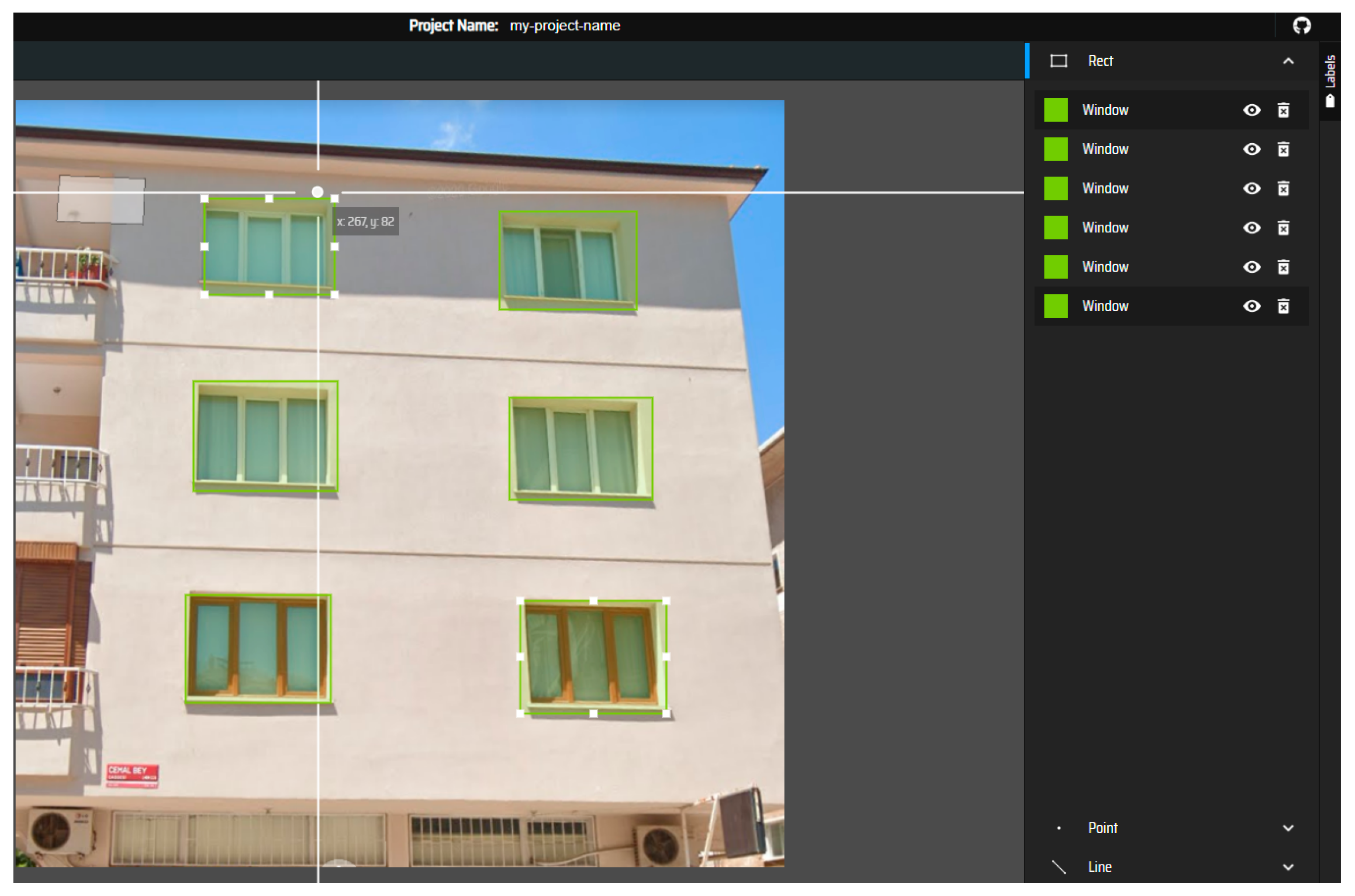

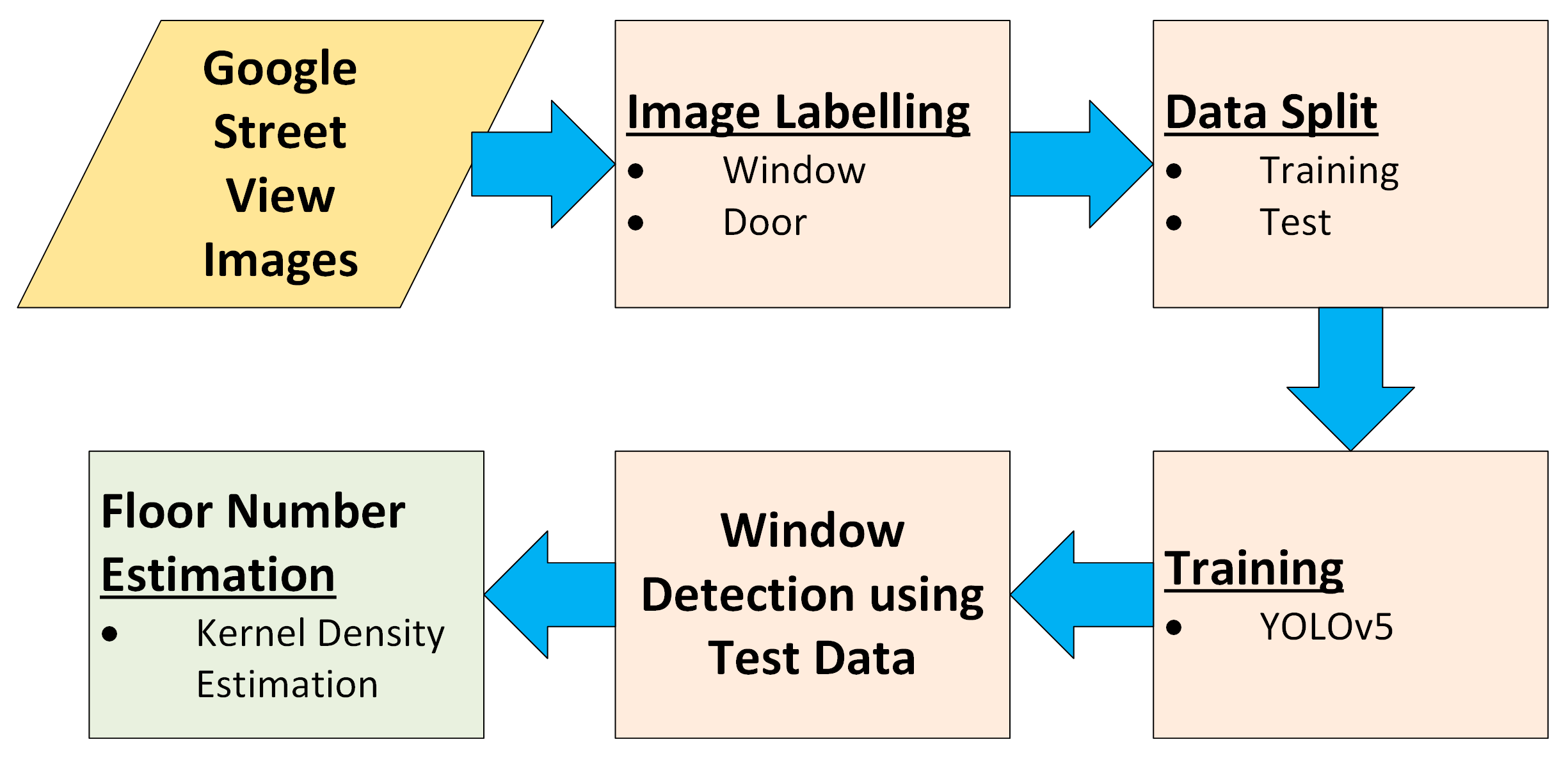

Some studies have examined YOLO algorithms for door and window detection. Bayomi et al. described a deep learning approach to extract window and door elements for use in analyses of building energy performance [37]. Zhang et al. developed the DenseNet SPP–YOLO algorithm, based on YOLOv3, to enable autonomous mobile robots to recognize doors and windows in unfamiliar environments [43]. Sezen et al. compared the performance of YOLOv3, YOLOv4, YOLOv5, and Faster R-CNN in detecting doors and windows [10]. Considering accuracy and speed together, their study showed that YOLOv5 may be suitable for extracting the door and window elements of buildings [10]. For this reason, YOLOv5 was chosen to perform window extraction in our study.