A Novel Multi-Scale Feature Fusion-Based 3SCNet for Building Crack Detection

Abstract

1. Introduction

- (1) The proposed study demonstrates a novel three-scale feature fusion model capable of producing high classification accuracy.

- (2) The SLIC and LBP images, along with the grey image, improve building crack detection with minimal loss.

- (3) The proposed model has fewer parameters and high sensitivity for building crack detection.

2. The Proposed Method

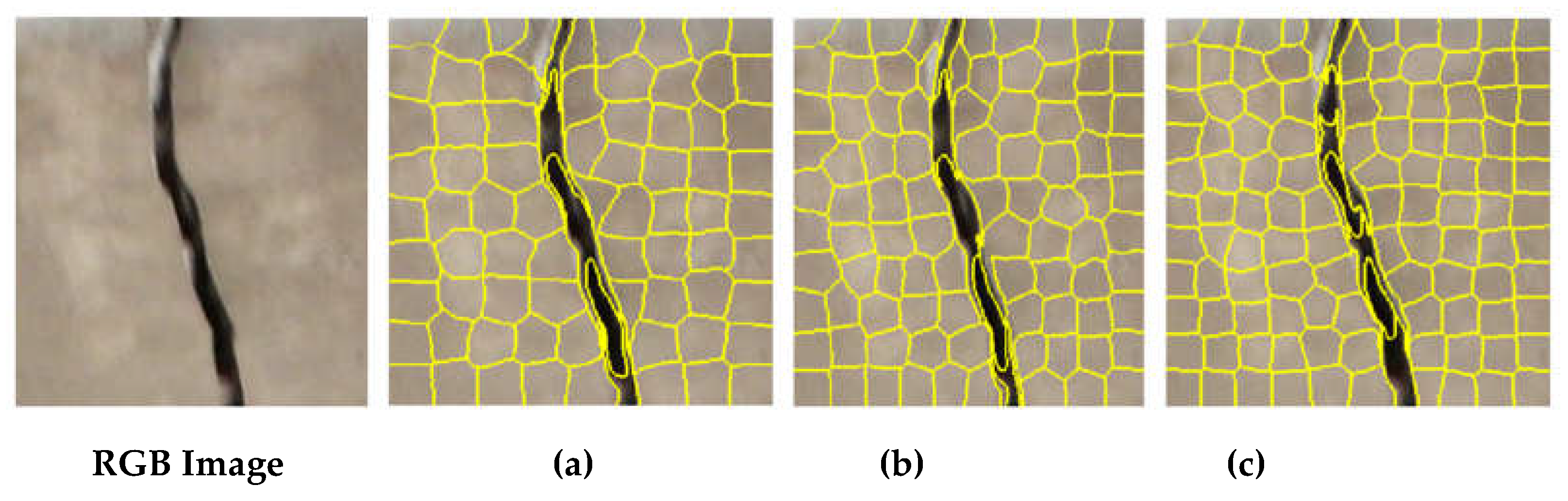

2.1. The SLIC Segmented Image Formation

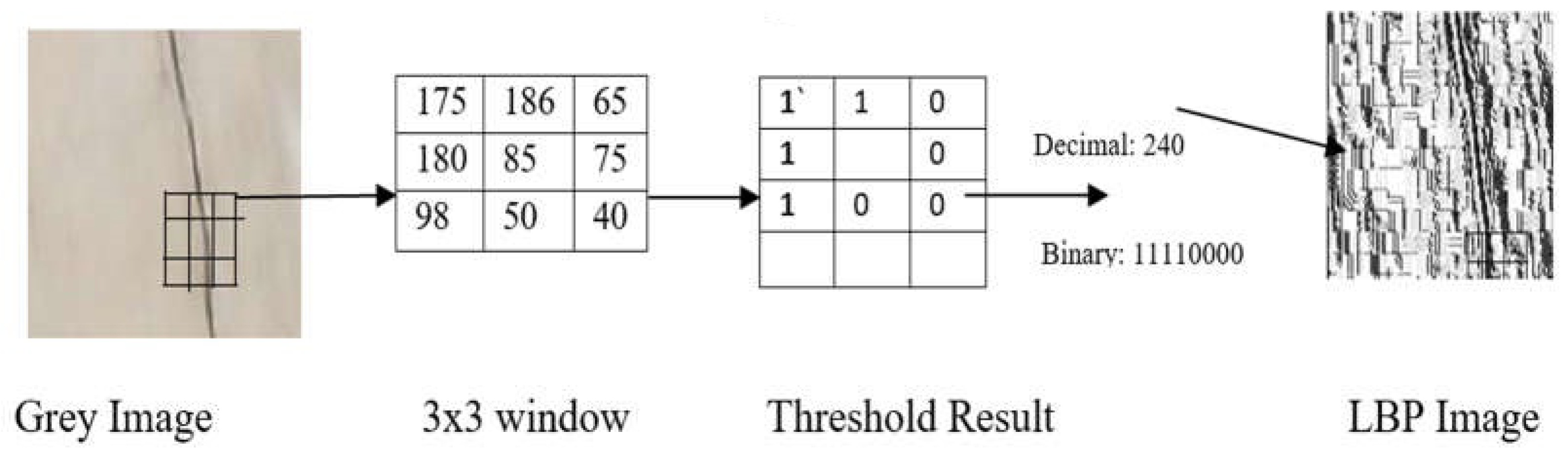
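SLIC (Achanta et al. [47]) groups pixels into superpixels by running k-means in a joint intensity and position space. Each cluster centre searches only a 2S × 2S window around itself, and the distance combines the intensity difference d_c with the spatial distance d_s scaled by the grid interval S and a compactness m: D = sqrt(d_c² + (d_s/S)² m²). The following is a minimal pure-Python sketch of a simplified SLIC for a greyscale image. The grid interval (`step`), compactness, iteration count, and the function name `slic_grey` are illustrative assumptions; the sketch omits the gradient-based seed adjustment and connectivity clean-up of the full algorithm, and it does not reproduce the SLIC settings used to form the 3SCNet input.

```python
import math


def slic_grey(image, step=4, compactness=10.0, iterations=5):
    """Simplified SLIC superpixels for a 2-D greyscale image (list of lists).

    Seeds lie on a regular grid with interval `step` (S); each centre searches
    only the pixels within `step` rows and columns of itself. Returns a label
    map with the same shape as `image`.
    """
    height, width = len(image), len(image[0])
    # Cluster centres are [intensity, row, col], seeded on a regular grid.
    centres = [
        [float(image[r][c]), float(r), float(c)]
        for r in range(step // 2, height, step)
        for c in range(step // 2, width, step)
    ]
    if not centres:
        raise ValueError("image is too small for the chosen step")

    labels = [[-1] * width for _ in range(height)]
    for _ in range(iterations):
        best = [[math.inf] * width for _ in range(height)]
        # Assignment step: each centre claims nearby pixels it is closest to.
        # Pixels outside every window keep their previous label.
        for k, (ci, cr, cc) in enumerate(centres):
            r0, r1 = max(0, int(cr) - step), min(height, int(cr) + step + 1)
            c0, c1 = max(0, int(cc) - step), min(width, int(cc) + step + 1)
            for r in range(r0, r1):
                for c in range(c0, c1):
                    d_intensity = image[r][c] - ci
                    d_space = math.hypot(r - cr, c - cc)
                    # SLIC distance: sqrt(d_c^2 + (d_s / S)^2 * m^2).
                    d = math.sqrt(d_intensity ** 2 + (d_space / step) ** 2 * compactness ** 2)
                    if d < best[r][c]:
                        best[r][c] = d
                        labels[r][c] = k
        # Update step: move each centre to the mean of its assigned pixels.
        sums = [[0.0, 0.0, 0.0, 0] for _ in centres]
        for r in range(height):
            for c in range(width):
                s = sums[labels[r][c]]
                s[0] += image[r][c]
                s[1] += r
                s[2] += c
                s[3] += 1
        for k, (si, sr, sc, n) in enumerate(sums):
            if n:  # a centre with no pixels stays where it is
                centres[k] = [si / n, sr / n, sc / n]
    return labels
```

On an 8 × 8 image whose left half is 0 and right half is 200, `slic_grey(image, step=4)` keeps the two halves in separate superpixels, because the intensity term dominates the spatial term. For real images a library implementation such as scikit-image's `skimage.segmentation.slic` is the practical choice; the sketch only shows the assignment and update steps.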

2.2. LBP Image Formation
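The basic LBP operator of Ojala et al. [48] thresholds the eight neighbours of each pixel against the centre value and reads the resulting bits as an 8-bit code. A minimal sketch follows, assuming a 3 × 3 neighbourhood, a clockwise bit order starting at the top-left neighbour, and zero codes on the image border; these choices and the function name `lbp_image` are illustrative assumptions rather than the configuration used for 3SCNet.

```python
def lbp_image(image):
    """Basic 3x3 local binary pattern of a 2-D greyscale image (list of lists).

    Border pixels, which lack a full neighbourhood, are set to 0.
    """
    height, width = len(image), len(image[0])
    # Neighbour offsets, clockwise from the top-left; offset i carries weight 2**i.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    out = [[0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            centre = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # A neighbour at least as bright as the centre sets its bit.
                if image[r + dr][c + dc] >= centre:
                    code |= 1 << bit
            out[r][c] = code
    return out
```

For the patch `[[6, 5, 2], [7, 6, 1], [9, 8, 7]]`, the top-left (6), bottom-right (7), bottom (8), bottom-left (9), and left (7) neighbours are at least the centre value 6, setting bits 0, 4, 5, 6, and 7, so the centre code is 1 + 16 + 32 + 64 + 128 = 241. Library versions such as scikit-image's `skimage.feature.local_binary_pattern` may use a different bit order, so their codes need not match this sketch numerically.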

2.3. Proposed 3SCNet Deep CNN Model

2.3.1. Local Response Normalization (LRN)
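LRN is commonly used in the across-channel form introduced with AlexNet, where each activation is divided by a term that grows with the energy of its neighbouring channels at the same spatial position: $b^i = a^i / \big(k + \alpha \sum_j (a^j)^2\big)^{\beta}$, with $j$ running over the $n$ channels centred on channel $i$. The sketch below assumes that form and AlexNet's published hyperparameters (n = 5, k = 2, α = 10⁻⁴, β = 0.75); whether 3SCNet uses the same values is an assumption, and the function name is hypothetical.

```python
def local_response_norm(activations, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Across-channel local response normalisation at one spatial position.

    `activations` holds the N channel values a^0 .. a^(N-1) at one (x, y).
    Channel i is divided by (k + alpha * S_i) ** beta, where S_i is the sum of
    squares over channels max(0, i - n//2) .. min(N - 1, i + n//2).
    """
    num_channels = len(activations)
    half = n // 2
    normalised = []
    for i, a_i in enumerate(activations):
        # Clip the channel window at the first and last channel.
        lo = max(0, i - half)
        hi = min(num_channels - 1, i + half)
        square_sum = sum(a * a for a in activations[lo:hi + 1])
        normalised.append(a_i / (k + alpha * square_sum) ** beta)
    return normalised
```

Framework implementations differ in small details; for example, PyTorch's `torch.nn.LocalResponseNorm` divides α by the window size, so its α is not directly interchangeable with the value above.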

2.3.2. Loss Function
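Algorithm 1 converts the network's logits into probabilities before the loss is computed. Assuming this is the usual softmax followed by categorical cross-entropy (the exact form of the equations it cites is an assumption here), the computation for the two-class problem can be sketched as below. The class indices (0 for non-crack, 1 for crack) and the function names are illustrative.

```python
import math


def softmax(logits):
    """Turn a list of logits into class probabilities that sum to 1."""
    # Subtracting the largest logit avoids overflow and leaves the result unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Categorical cross-entropy -log softmax(logits)[target] for one sample.

    Computed with the log-sum-exp form so that very confident logits do not
    produce log(0).
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def mean_loss(batch_logits, targets):
    """Average cross-entropy over a batch of (logits, integer class) pairs."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)
```

With equal logits `[0.0, 0.0]` the model is undecided, each class gets probability 0.5, and the loss is log 2 whichever class is correct.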

| Algorithm 1: Building crack detection using 3SCNet |

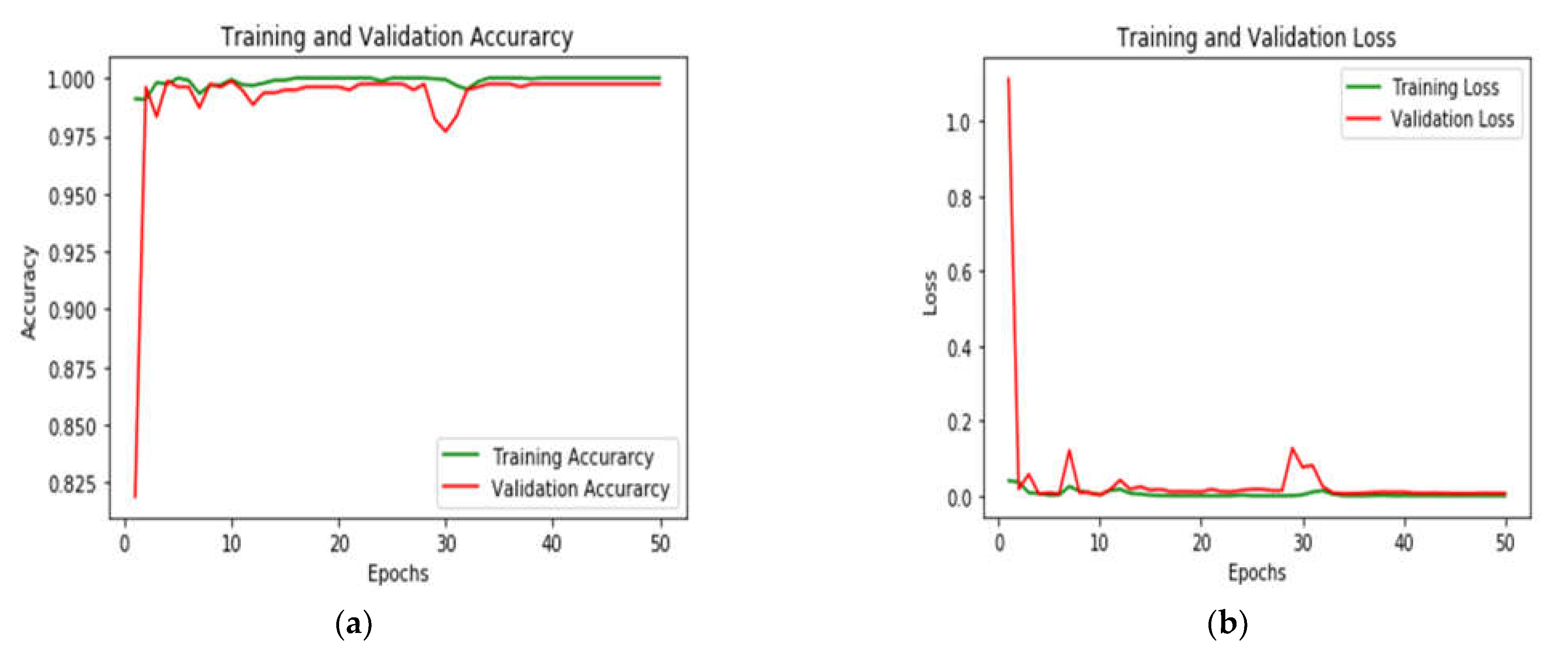

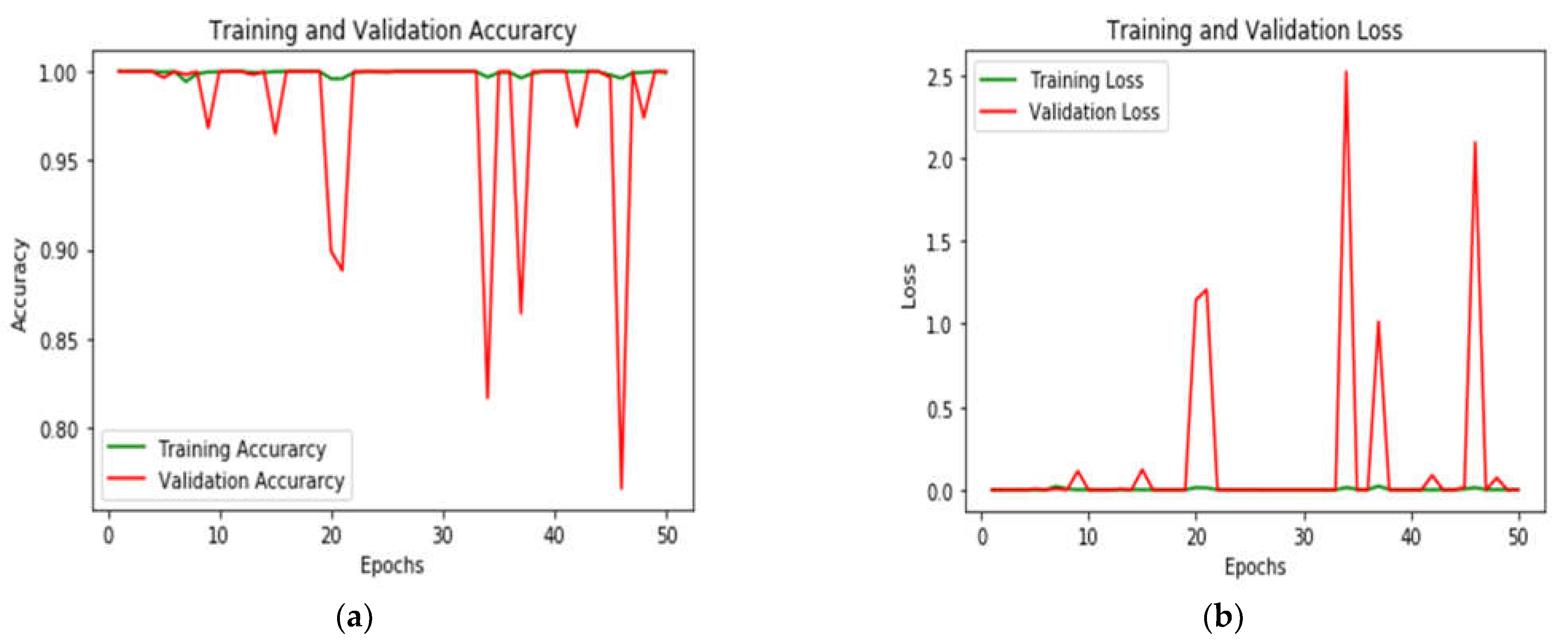

| Algorithm 1 1: Create a crack and non-crack image dataset 2: Convert RGB image to grey image 3: Find the SLIC image using the method discussed in Section 2.1 4: Find the LBP image using the method discussed in Section 2.2 5: for I = 1 to 50 train the 3SCNet (a): Input the SLIC, grey, and LBP images to 3SCNet (b): Apply Equation (7) and Equation (8) to convert logits into probability values 6: For J = 1 to 50 do (a) Find the training accuracy (b) Find the validation accuracy (c) Find the loss of the hybrid 3SCNet 7: Plot training and validation loss graph for the 50 epochs |
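Step 2 of the algorithm reduces each RGB image to a single grey channel. A common choice, assumed here because the conversion weights are not specified, is the ITU-R BT.601 luma weighting 0.299R + 0.587G + 0.114B. The sketch also includes a hypothetical helper, `stack_inputs`, that checks the grey, SLIC, and LBP maps share one shape before they are fed to the network together in step 5(a); pairing them per pixel is only an illustration, since how 3SCNet fuses the three inputs is defined by its architecture, not by this helper.

```python
def rgb_to_grey(rgb):
    """Convert an RGB image (rows of (r, g, b) tuples, 0-255) to grey levels.

    Uses the ITU-R BT.601 luma weights; results are rounded to integers.
    """
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row] for row in rgb]


def stack_inputs(grey, slic, lbp):
    """Pair the three per-pixel maps as (grey, slic, lbp) triples.

    Raises ValueError if the maps do not share the same height and width.
    """
    shapes = {(len(m), len(m[0])) for m in (grey, slic, lbp)}
    if len(shapes) != 1:
        raise ValueError(f"input maps differ in shape: {sorted(shapes)}")
    return [
        [(g, s, l) for g, s, l in zip(g_row, s_row, l_row)]
        for g_row, s_row, l_row in zip(grey, slic, lbp)
    ]
```

For example, pure red, green, and blue pixels map to grey levels 76, 150, and 29, reflecting the eye's greater sensitivity to green.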

3. Results

3.1. Dataset

3.2. The Performance Evaluation Mathematical Method

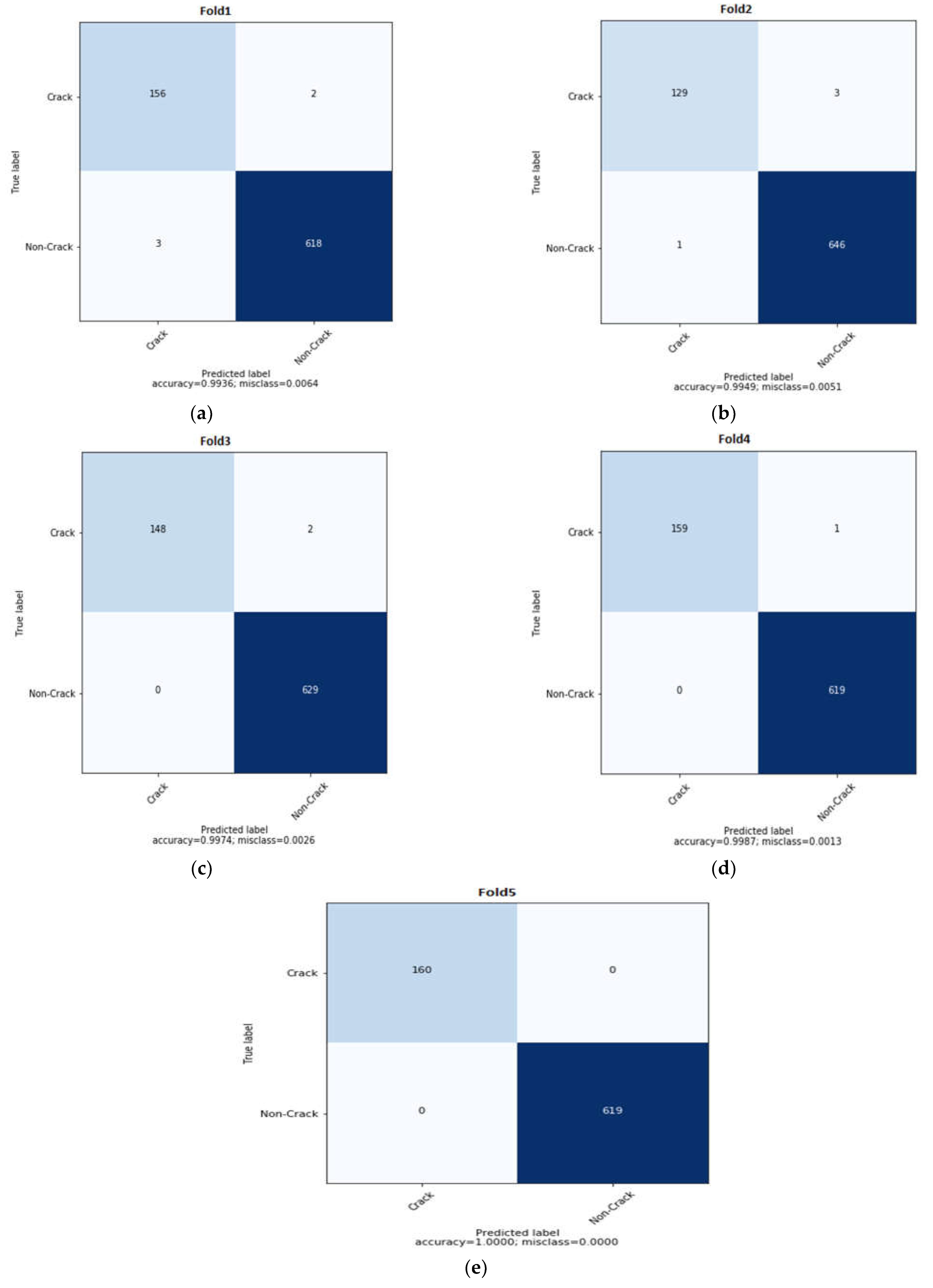

3.3. Building Crack Detection Using the SLIC, Greyscale, and LBP Image Input to 3SCNet

3.4. Building Crack Detection Using Only the Greyscale Image Input to 3SCNet

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Jarrett, K.; Kavukcuoglu, K.; Ranzato, M.A.; LeCun, Y. What is the best multi-stage architecture for object recognition? In Proceedings of the IEEE 12th International Conference on Computer Vision (ICCV), Kyoto, Japan, 29 September–2 October 2009; pp. 2146–2153.

2. Noh, Y.; Koo, D.; Kang, Y.M.; Park, D.G.; Lee, D.H. Automatic crack detection on concrete images using segmentation via fuzzy C-means clustering. In Proceedings of the 2017 IEEE International Conference on Applied System Innovation: Applied System Innovation for Modern Technology, ICASI 2017, Sapporo, Japan, 13–17 May 2017; pp. 877–880.

3. Koch, C.; Georgieva, K.; Kasireddy, V.; Akinci, B.; Fieguth, P. A review on computer vision based defect detection and condition assessment of concrete and asphalt civil infrastructure. Adv. Eng. Informatics 2015, 29, 196–210.

4. Kishore, K.; Gupta, N. Application of domestic & industrial waste materials in concrete: A review. Mater. Today Proc. 2020, 26, 2926–2931.

5. Tomar, R.; Kishore, K.; Parihar, H.S.; Gupta, N. A comprehensive study of waste coconut shell aggregate as raw material in concrete. Mater. Today Proc. 2020, 44, 437–443.

6. Nishikawa, T.; Yoshida, J.; Sugiyama, T.; Fujino, Y. Concrete Crack Detection by Multiple Sequential Image Filtering. Comput. Civ. Infrastruct. Eng. 2011, 27, 29–47.

7. Zaurin, R.; Catbas, F.N. Integration of computer imaging and sensor data for structural health monitoring of bridges. Smart Mater. Struct. 2009, 19, 015019.

8. Huang, X.; Yang, M.; Feng, L.; Gu, H.; Su, H.; Cui, X.; Cao, W. Crack detection study for hydraulic concrete using PPP-BOTDA. Smart Struct. Syst. 2017, 20, 75–83.

9. Kim, H.; Ahn, E.; Cho, S.; Shin, M.; Sim, S.H. Comparative analysis of image binarization methods for crack identification in concrete structures. Cem. Concr. Res. 2017, 99, 53–61.

10. Song, J.; Kim, S.; Liu, Z.; Quang, N.N.; Bien, F. A Real Time Nondestructive Crack Detection System for the Automotive Stamping Process. IEEE Trans. Instrum. Meas. 2016, 65, 2434–2441.

11. Le Bas, P.Y.; Anderson, B.E.; Remillieux, M.; Pieczonka, L.; Ulrich, T.J. Elasticity Nonlinear Diagnostic Method for Crack Detection and Depth Estimation. J. Acoust. Soc. Am. 2015, 138, 1836.

12. Budiansky, B.; O’Connell, R.J. Elastic Moduli of a Cracked Solid. Int. J. Solids Struct. 1976, 12, 81–97.

13. Aboudi, J. Stiffness reduction of cracked solids. Eng. Fract. Mech. 1987, 26, 637–650.

14. Dhital, D.; Lee, J.R. A Fully Non-Contact Ultrasonic Propagation Imaging System for Closed Surface Crack Evaluation. Exp. Mech. 2012, 52, 1111–1122.

15. Oliveira, H.; Correia, P.L. Automatic road crack detection and characterization. IEEE Trans. Intell. Transp. Syst. 2013, 14, 155–168.

16. Gupta, N.; Kishore, K.; Saxena, K.K.; Joshi, T.C. Influence of industrial by-products on the behavior of geopolymer concrete for sustainable development. Indian J. Eng. Mater. Sci. 2021, 28, 433–445.

17. Kishore, K.; Gupta, N. Experimental Analysis on Comparison of Compressive Strength Prepared with Steel Tin Cans and Steel Fibre. Int. J. Res. Appl. Sci. Eng. Technol. 2019, 7, 169–172.

18. Shukla, A.; Kishore, K.; Gupta, N. Mechanical properties of cement mortar with Lime & Rice hush ash. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1116, 012025.

19. Chalioris, C.E.; Kytinou, V.K.; Voutetaki, M.E.; Karayannis, C.G. Flexural damage diagnosis in reinforced concrete beams using a wireless admittance monitoring system—Tests and finite element analysis. Sensors 2021, 21, 1.

20. Chalioris, C.E.; Voutetaki, M.E.; Liolios, A.A. Structural health monitoring of seismically vulnerable RC frames under lateral cyclic loading. Earthq. Struct. 2020, 19, 29–44.

21. Jahanshahi, M.R.; Jazizadeh, F.; Masri, S.F.; Becerik-Gerber, B. Unsupervised Approach for Autonomous Pavement-Defect Detection and Quantification Using an Inexpensive Depth Sensor. J. Comput. Civ. Eng. 2013, 27, 743–754.

22. Fujita, Y.; Hamamoto, Y. A robust automatic crack detection method from noisy concrete surfaces. Mach. Vis. Appl. 2011, 22, 245–254.

23. Zhang, Y. The design of glass crack detection system based on image preprocessing technology. In Proceedings of the 2014 IEEE 7th Joint International Information Technology and Artificial Intelligence Conference, ITAIC 2014, Chongqing, China, 20–21 December 2014; Volume 2014, pp. 39–42.

24. Broberg, Surface crack detection in welds using thermography. NDT&E Int. 2013, 57, 69–73.

25. Wang, P.; Huang, H. Comparison analysis on present image-based crack detection methods in concrete structures. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, CISP 2010, Yantai, China, 16–18 October 2010; Volume 5, pp. 2530–2533.

26. Cheng, H.D.; Shi, X.J.; Glazier, C. Real-Time Image Thresholding Based on Sample Space Reduction and Interpolation Approach. J. Comput. Civ. Eng. 2003, 17, 264–272.

27. Li, S.; Zhao, X. Image-Based Concrete Crack Detection Using Convolutional Neural Network and Exhaustive Search Technique. Adv. Civ. Eng. 2019, 2019, 1–12.

28. Yeum, C.M.; Dyke, S.J. Vision-Based Automated Crack Detection for Bridge Inspection. Comput. Civ. Infrastruct. Eng. 2015, 30, 759–770.

29. Zhang, W.; Zhang, Z.; Qi, D.; Liu, Y. Automatic crack detection and classification method for subway tunnel safety monitoring. Sensors 2014, 14, 19307–19328.

30. Rathor, S.; Agrawal, S. A robust model for domain recognition of acoustic communication using Bidirectional LSTM and deep neural network. Neural Comput. Appl. 2021, 33, 11223–11232.

31. Sharma, H.; Jalal, A.S. Visual question answering model based on graph neural network and contextual attention. Image Vis. Comput. 2021, 110, 104165.

32. Singh, L.K.; Garg, H.; Khanna, M. Performance evaluation of various deep learning based models for effective glaucoma evaluation using optical coherence tomography images. Multimedia Tools Appl. 2022, 81, 27737–27781.

33. Pant, G.; Yadav, D.P.; Gaur, A. ResNeXt convolution neural network topology-based deep learning model for identification and classification of Pediastrum. Algal Res. 2020, 48, 101932.

34. Yadav, D.P.; Rathor, S. Bone Fracture Detection and Classification using Deep Learning Approach. In Proceedings of the 2020 International Conference on Power Electronics & IoT Applications in Renewable Energy and Its Control (PARC), Uttar Pradesh, India, 28–29 February 2020; Volume 2020, pp. 282–285.

35. Saar, T.; Talvik, O. Automatic asphalt pavement crack detection and classification using neural networks. In Proceedings of the BEC 2010–2010 12th Biennial Baltic Electronics Conference, Tallinn, Estonia, 4–6 October 2010; pp. 345–348.

36. German, S.; Brilakis, I.; Desroches, R. Rapid entropy-based detection and properties measurement of concrete spalling with machine vision for post-earthquake safety assessments. Adv. Eng. Informatics 2012, 26, 846–858.

37. Prasanna, P.; Dana, K.J.; Gucunski, N.; Basily, B.B.; La, H.M.; Lim, R.S.; Parvardeh, H. Automated Crack Detection on Concrete Bridges. IEEE Trans. Autom. Sci. Eng. 2016, 13, 591–599.

38. Song, K.; Yan, Y. A noise robust method based on completed local binary patterns for hot-rolled steel strip surface defects. Appl. Surf. Sci. 2013, 285, 858–864.

39. Chen, F.C.; Jahanshahi, M.R. NB-CNN: Deep Learning-Based Crack Detection Using Convolutional Neural Network and Naïve Bayes Data Fusion. IEEE Trans. Ind. Electron. 2018, 65, 4392–4400.

40. Zhang, L.; Yang, F.; Zhang, Y.D.; Zhu, Y.J. Road Crack Detection Using Deep Convolutional Neural Network. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3708–3712.

41. Makantasis, K.; Protopapadakis, E.; Doulamis, A.; Doulamis, N.; Loupos, C. Deep Convolutional Neural Networks for efficient vision based tunnel inspection. In Proceedings of the 2015 IEEE 11th International Conference on Intelligent Computer Communication and Processing, ICCP, Cluj-Napoca, Romania, 3–5 September 2015; pp. 335–342.

42. Cha, Y.J.; Choi, W.; Büyüköztürk, O. Deep Learning-Based Crack Damage Detection Using Convolutional Neural Networks. Comput. Civ. Infrastruct. Eng. 2017, 00, 1–18.

43. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015-Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; pp. 1–14.

44. Badrinarayanan, V.; Handa, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Robust Semantic Pixel-Wise Labelling. arXiv 2015, arXiv:1505.07293.

45. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2015; Volume 9351, pp. 234–241.

46. Bang, S.; Park, S.; Kim, H.; Kim, H. Encoder–decoder network for pixel-level road crack detection in black-box images. Comput. Civ. Infrastruct. Eng. 2019, 34, 713–727.

47. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Susstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2281.

48. Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on feature distributions. Pattern Recognit. 1996, 29, 51–59.

49. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

50. Elhariri, E.; El-Bendary, N.; Taie, S. Historical_Building_Crack_2019. Mendeley Data, V1. 2020. Available online: https://data.mendeley.com/datasets/xfk99kpmj9/1 (accessed on 5 March 2022).

51. Design, L.; Zhu, J.; Zhang, C.; Qi, H.; Lu, Z. Vision-based defects detection for bridges using transfer learning and convolutional neural networks. Struct. Infrastruct. Eng. 2019, 16, 1–13.

52. Hung, P.D.; Su, N.T.; Diep, V.T. Surface Classification of Damaged Concrete Using Deep Convolutional Neural Network. Pattern Recognit. Image Anal. 2019, 29, 676–687.

53. Feng, C.; Zhang, H.; Wang, S.; Li, Y.; Wang, H.; Yan, F. Structural Damage Detection using Deep Convolutional Neural Network and Transfer Learning. KSCE J. Civ. Eng. 2019, 23, 4493–4502.

54. Hüthwohl, P.; Lu, R.; Brilakis, I. Multi-classifier for reinforced concrete bridge defects. Autom. Constr. 2019, 105, 102824.

55. Bukhsh, Z.A.; Jansen, N.; Saeed, A. Damage detection using in-domain and cross-domain transfer learning. Neural Comput. Appl. 2021, 33, 16921–16936.

56. Soni, A.N. Crack Detection in buildings using convolutional neural Network. J. Innov. Dev. Pharm. Tech. Res. 2019, 2, 54–59.

57. Dung, C.V.; Anh, L.D. Autonomous concrete crack detection using deep fully convolutional neural network. Autom. Constr. 2019, 99, 52–58.

58. Słoński, M. A comparison of deep convolutional neural networks for image-based detection of concrete surface cracks. Comput. Assist. Methods Eng. Sci. 2019, 26, 105–112.

59. Wang, Z.; Xu, G.; Ding, Y.; Wu, B.; Lu, G. A vision-based active learning convolutional neural network model for concrete surface crack detection. Adv. Struct. Eng. 2020, 23, 2952–2964.

60. Miao, P.; Srimahachota, T. Cost-effective system for detection and quantification of concrete surface cracks by combination of convolutional neural network and image processing techniques. Constr. Build. Mater. 2021, 293, 123549.

61. Kung, R.; Pan, N.; Wang, C.C.N.; Lee, P.C. Application of Deep Learning and Unmanned Aerial Vehicle on Building Maintenance. Adv. Civ. Eng. 2021, 2021, 1–12.

62. Loverdos, D.; Sarhosis, V. Automatic image-based brick segmentation and crack detection of masonry walls using machine learning. Autom. Constr. 2022, 140, 104389.

63. Elhariri, E.; El-Bendary, N.; Taie, S.A. Using hybrid filter-wrapper feature selection with multi-objective improved-salp optimization for crack severity recognition. IEEE Access 2020, 8, 84290–84315.

| Model | Parameters | Limitations |
|---|---|---|
| VGG16 | 33 × 10⁶ | High training time due to the large number of trainable parameters. |
| AlexNet | 24 × 10⁶ | This model cannot scan all features, and its computational cost is high. |
| ResNet50 | 23 × 10⁶ | This model is 50 layers deep, which makes it challenging to apply in real-time applications. |
| DenseNet-161 | 7.2 × 10⁶ | This model is small, but its performance is lower than that of other state-of-the-art models. |

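| Measures | Formula |
|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Precision | $\frac{TP}{TP + FP}$ |
| Recall (Sensitivity) | $\frac{TP}{TP + FN}$ |
| Specificity | $\frac{TN}{TN + FP}$ |
| F1-Score | $\frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ |
| MCC | $\frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$ |

where:

- TP (true positive): an actual crack sample that the model also classifies as crack.

- TN (true negative): an actual non-crack sample that the model also classifies as non-crack.

- FP (false positive): an actual non-crack sample that the model classifies as crack.

- FN (false negative): an actual crack sample that the model classifies as non-crack.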
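The evaluation measures can be computed directly from the four confusion-matrix counts, with crack as the positive class. A minimal sketch follows; the function name and dictionary keys are illustrative, and F1 is written in the equivalent count form 2TP / (2TP + FP + FN) of the harmonic mean of precision and recall.

```python
import math


def classification_metrics(tp, tn, fp, fn):
    """Evaluation measures from confusion-matrix counts, crack = positive class.

    tp: crack predicted as crack      tn: non-crack predicted as non-crack
    fp: non-crack predicted as crack  fn: crack predicted as non-crack
    Assumes tp + fn, tn + fp, and tp + fp are all non-zero.
    """
    sensitivity = tp / (tp + fn)  # also called recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    # Harmonic mean of precision and recall, written in count form.
    f1 = 2 * tp / (2 * tp + fp + fn)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # MCC is conventionally set to 0 when any marginal total is zero.
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "accuracy": accuracy,
        "mcc": mcc,
    }
```

For instance, with 90 TP, 80 TN, 10 FP, and 20 FN, accuracy is 170/200 = 0.85 and precision is 90/100 = 0.9; multiplying by 100 gives the percentages used in the result tables.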

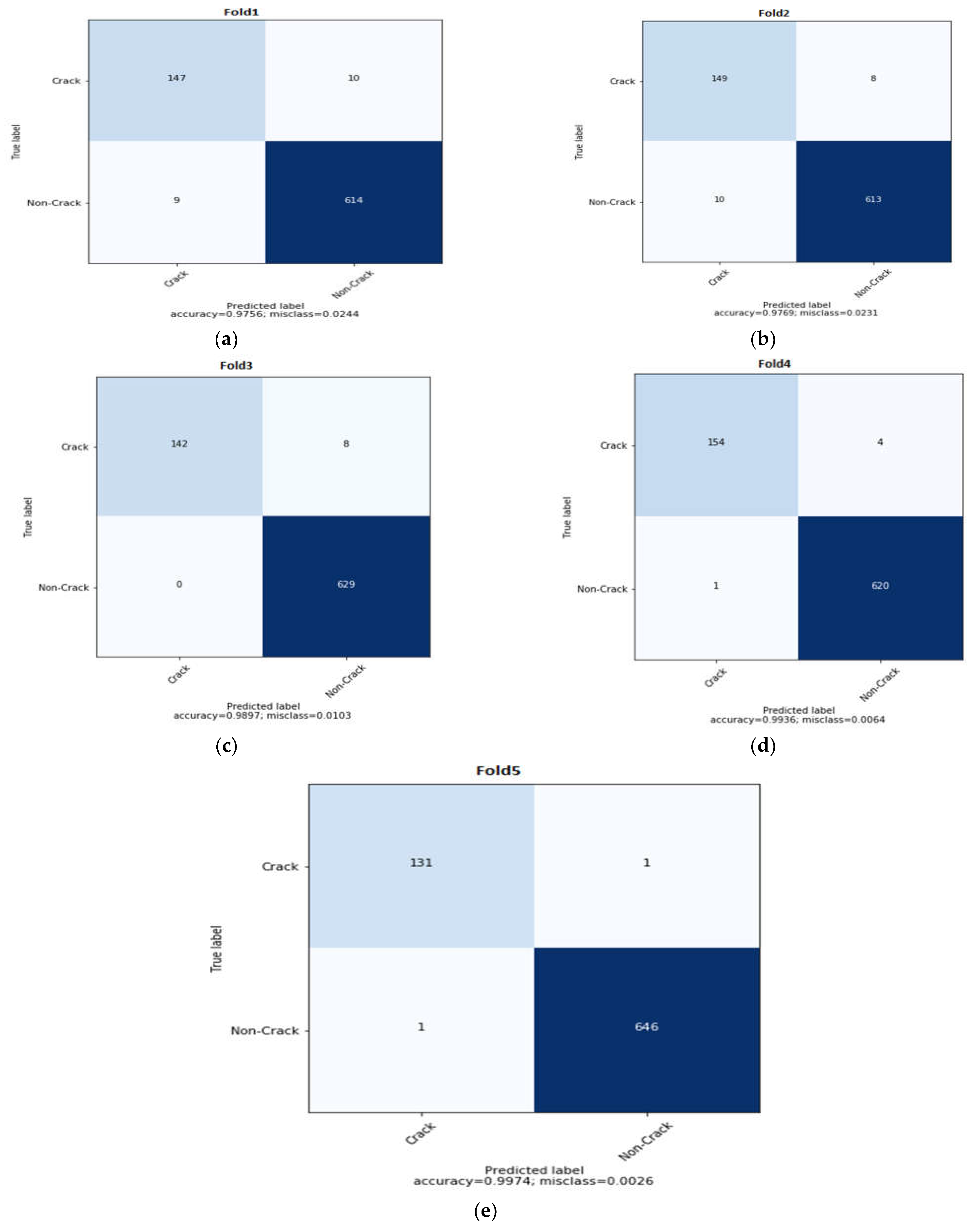

| Folds | Sensitivity (%) | Specificity (%) | Precision (%) | F1-Score (%) | Accuracy (%) | MCC (%) |
|---|---|---|---|---|---|---|
| Fold 1 | 98.11 | 99.68 | 98.73 | 98.42 | 99.36 | 98.02 |
| Fold 2 | 99.23 | 99.54 | 97.73 | 98.47 | 99.49 | 98.17 |
| Fold 3 | 100 | 99.68 | 98.67 | 99.33 | 99.74 | 99.17 |
| Fold 4 | 100 | 99.84 | 99.38 | 99.69 | 99.87 | 99.61 |
| Fold 5 | 100 | 100 | 100 | 100 | 100 | 100 |
| Average | 99.47 | 99.75 | 98.90 | 99.18 | 99.69 | 98.99 |
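The Average row is the arithmetic mean of the five folds. Recomputing it from the per-fold values in the table above reproduces every reported average to two decimals; the dictionary and helper names below are illustrative.

```python
# Per-fold results (%) copied from the five-fold table above.
FOLDS = {
    "Sensitivity": [98.11, 99.23, 100, 100, 100],
    "Specificity": [99.68, 99.54, 99.68, 99.84, 100],
    "Precision": [98.73, 97.73, 98.67, 99.38, 100],
    "F1-Score": [98.42, 98.47, 99.33, 99.69, 100],
    "Accuracy": [99.36, 99.49, 99.74, 99.87, 100],
    "MCC": [98.02, 98.17, 99.17, 99.61, 100],
}


def fold_averages(folds):
    """Return the arithmetic mean of each metric, rounded to two decimals."""
    return {name: round(sum(values) / len(values), 2) for name, values in folds.items()}


averages = fold_averages(FOLDS)
```

For example, the sensitivity mean is (98.11 + 99.23 + 100 + 100 + 100) / 5 = 99.468, which rounds to the reported 99.47.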

| Folds | Sensitivity (%) | Specificity (%) | Precision (%) | F1-Score (%) | Accuracy (%) | MCC (%) |
|---|---|---|---|---|---|---|
| Fold 1 | 94.23 | 98.40 | 93.63 | 93.93 | 97.56 | 92.41 |
| Fold 2 | 93.71 | 98.71 | 94.90 | 94.30 | 97.69 | 92.86 |
| Fold 3 | 100 | 98.74 | 94.67 | 97.26 | 98.97 | 96.68 |
| Fold 4 | 99.35 | 99.36 | 97.47 | 98.40 | 99.36 | 98.01 |
| Fold 5 | 99.24 | 99.85 | 99.24 | 99.24 | 99.74 | 99.09 |
| Average | 97.30 | 99.01 | 95.98 | 96.26 | 98.66 | 95.81 |

| Method | Sensitivity (%) | Specificity (%) | Precision (%) | F1-Score (%) | Accuracy (%) | MCC (%) |
|---|---|---|---|---|---|---|
| 3SCNet+Grey | 97.30 | 99.01 | 95.98 | 96.26 | 98.66 | 95.81 |
| 3SCNet+Grey+SLIC+LBP | 99.47 | 99.75 | 98.90 | 99.18 | 99.69 | 98.99 |

| Study | Model | Dataset | Accuracy (%) |
|---|---|---|---|
| Design et al. [51] | Pretrained Inception V3 | 435 images | 97.8 |
| Hung et al. [52] | DCNN | 636 images | 92.29 |
| Feng et al. [53] | Pretrained Inception V3 | 435 images | 96.8 |
| Hüthwohl et al. [54] | Inception V3 | 2545 images | 96.8 |
| Bukhsh et al. [55] | InceptionV3, VGG16, ResNet50 | 1028 images | 86.9 |
| Soni et al. [56] | VGG16 | 40,000 images | 90 |
| Dung et al. [57] | DCNN | 40,000 images | 90 |
| Słoński et al. [58] | VGG16 | 56,400 images | 94 |
| Wang et al. [59] | Pretrained AlexNet | 1350 images | 95.56 |
| Miao et al. [60] | GoogLeNet | 23 images | 96.69 |
| Kung et al. [61] | VGG16 | 3500 images | 92.27 |
| Loverdos et al. [62] | CNN | 2814 images | 96.86 |
| Proposed 3SCNet+Grey | 3SCNet | 3896 images | 98.66 |
| Proposed 3SCNet+Grey+SLIC+LBP | 3SCNet | 3896 images | 99.69 |

| Study | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) |
|---|---|---|---|---|
| Elhariri et al. [63] | 99.07 | 93.52 | 96.22 | 96.84 |
| Proposed 3SCNet+Grey | 95.98 | 97.30 | 96.26 | 98.66 |
| Proposed 3SCNet+Grey+SLIC+LBP | 98.90 | 99.47 | 99.18 | 99.69 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yadav, D.P.; Kishore, K.; Gaur, A.; Kumar, A.; Singh, K.U.; Singh, T.; Swarup, C. A Novel Multi-Scale Feature Fusion-Based 3SCNet for Building Crack Detection. Sustainability 2022, 14, 16179. https://doi.org/10.3390/su142316179


Chicago/Turabian Style: Yadav, Dhirendra Prasad, Kamal Kishore, Ashish Gaur, Ankit Kumar, Kamred Udham Singh, Teekam Singh, and Chetan Swarup. 2022. "A Novel Multi-Scale Feature Fusion-Based 3SCNet for Building Crack Detection" Sustainability 14, no. 23: 16179. https://doi.org/10.3390/su142316179
