Pedagogical Design of K-12 Artificial Intelligence Education: A Systematic Review

Abstract

1. Introduction

1.1. Interdisciplinary Nature of K-12 AI Education

1.2. Existing Reviews of K-12 AI Education

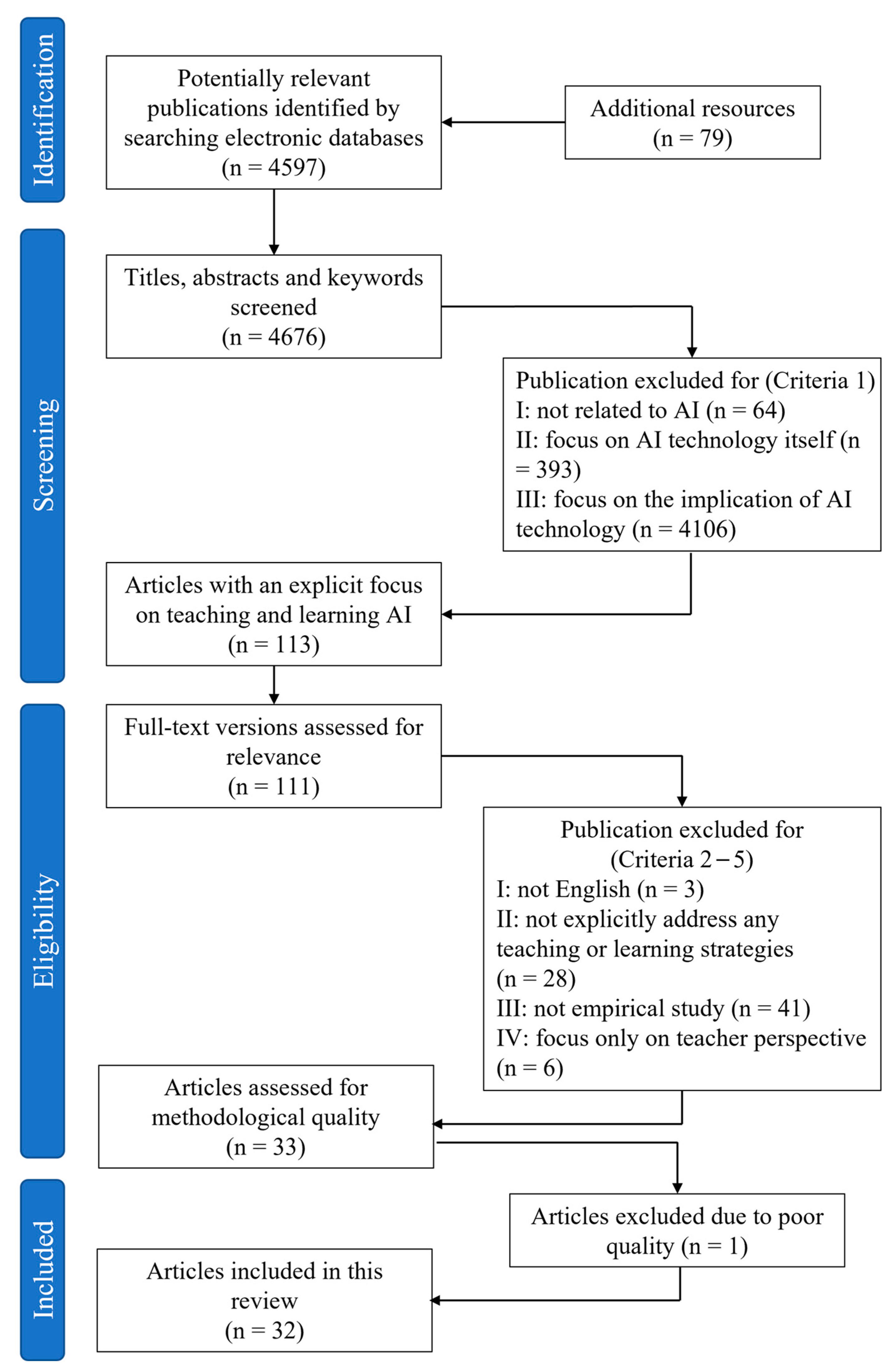

2. Method

2.1. Literature Search

2.2. Inclusion and Exclusion Criteria

2.3. Data Coding

3. Results

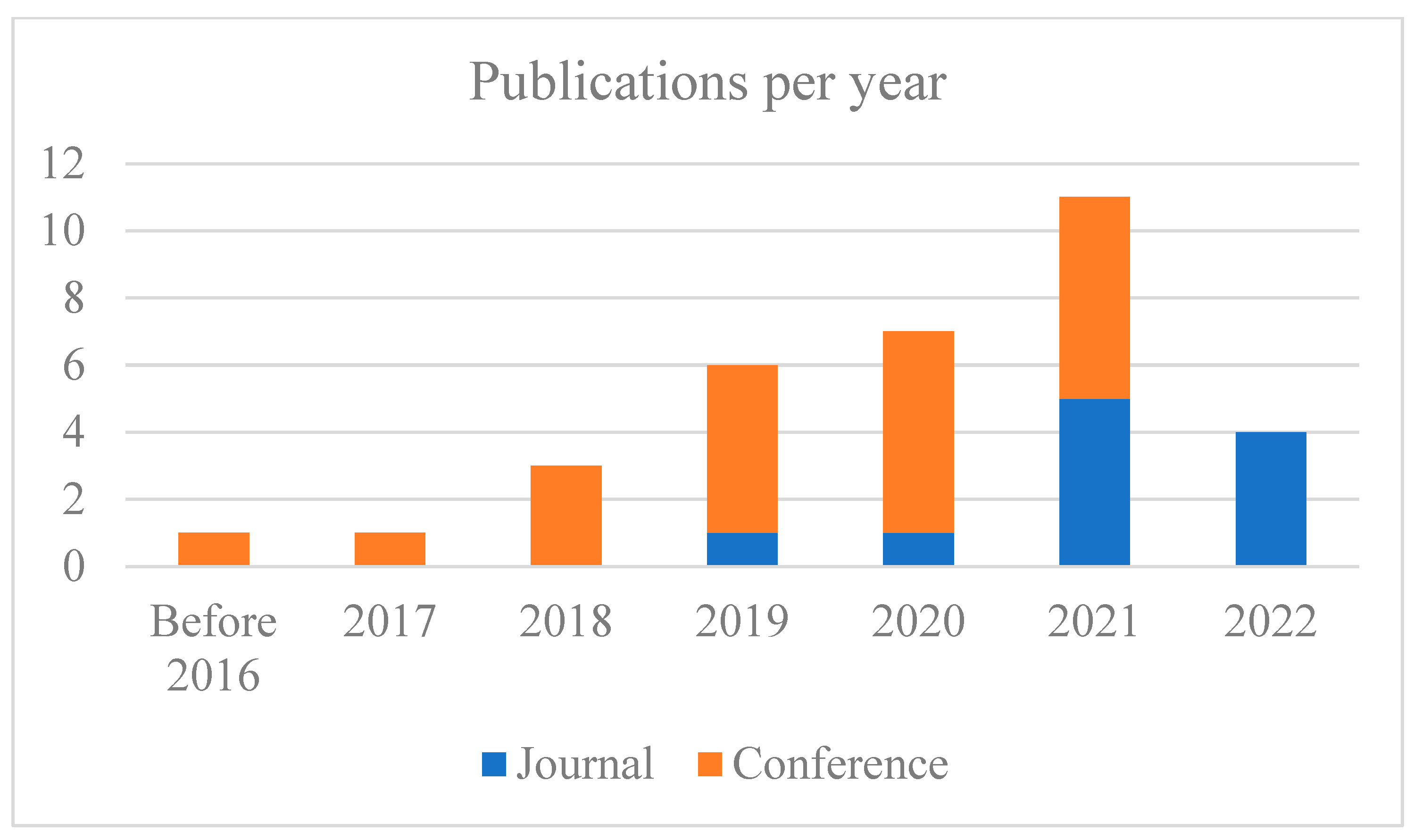

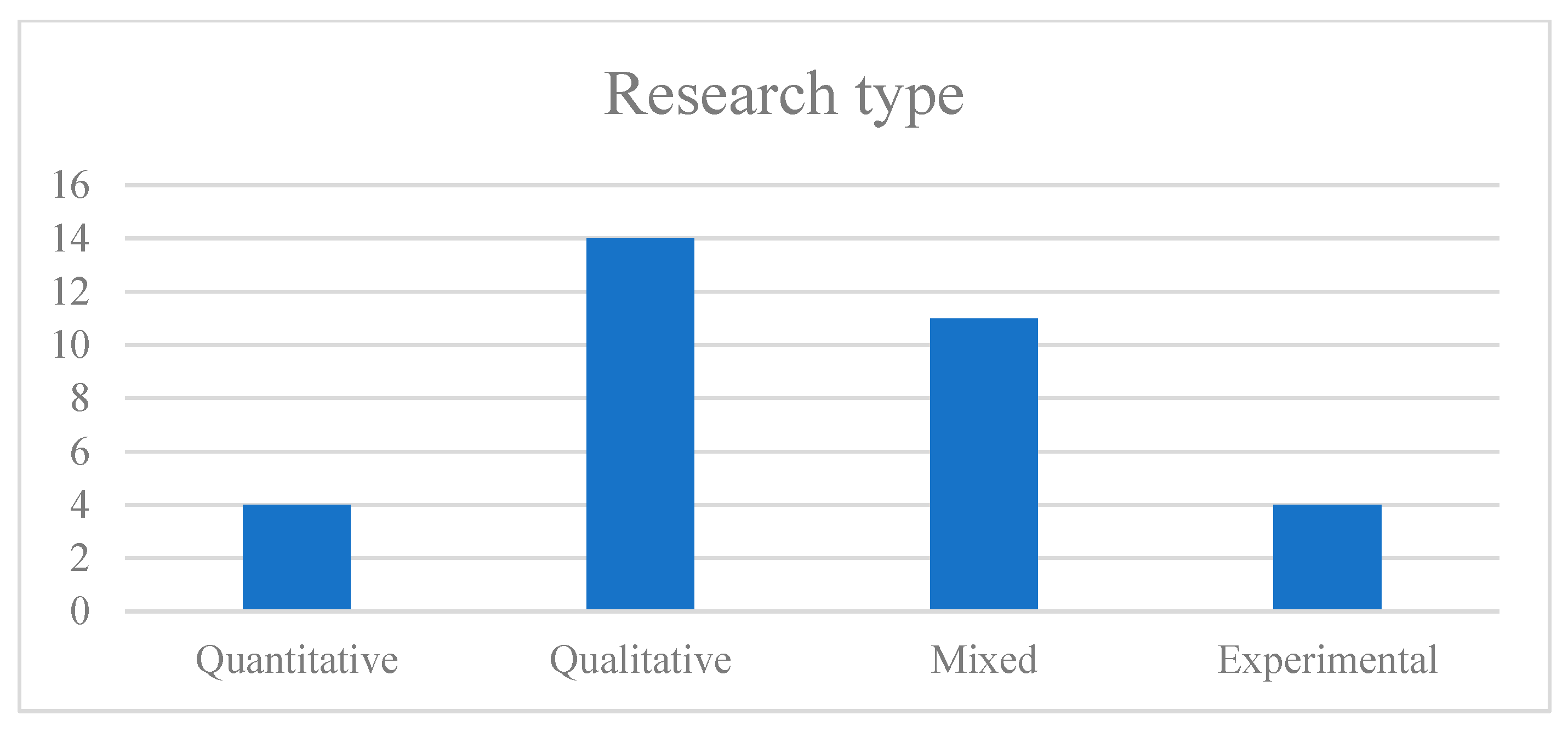

3.1. Status of Research on Teaching AI in the K-12 Context

3.2. Pedagogical Characteristics of Reported AI Teaching Units

3.2.1. Scale (Target Audience, Setting, and Duration) of the Teaching Unit

Target Audience

Setting

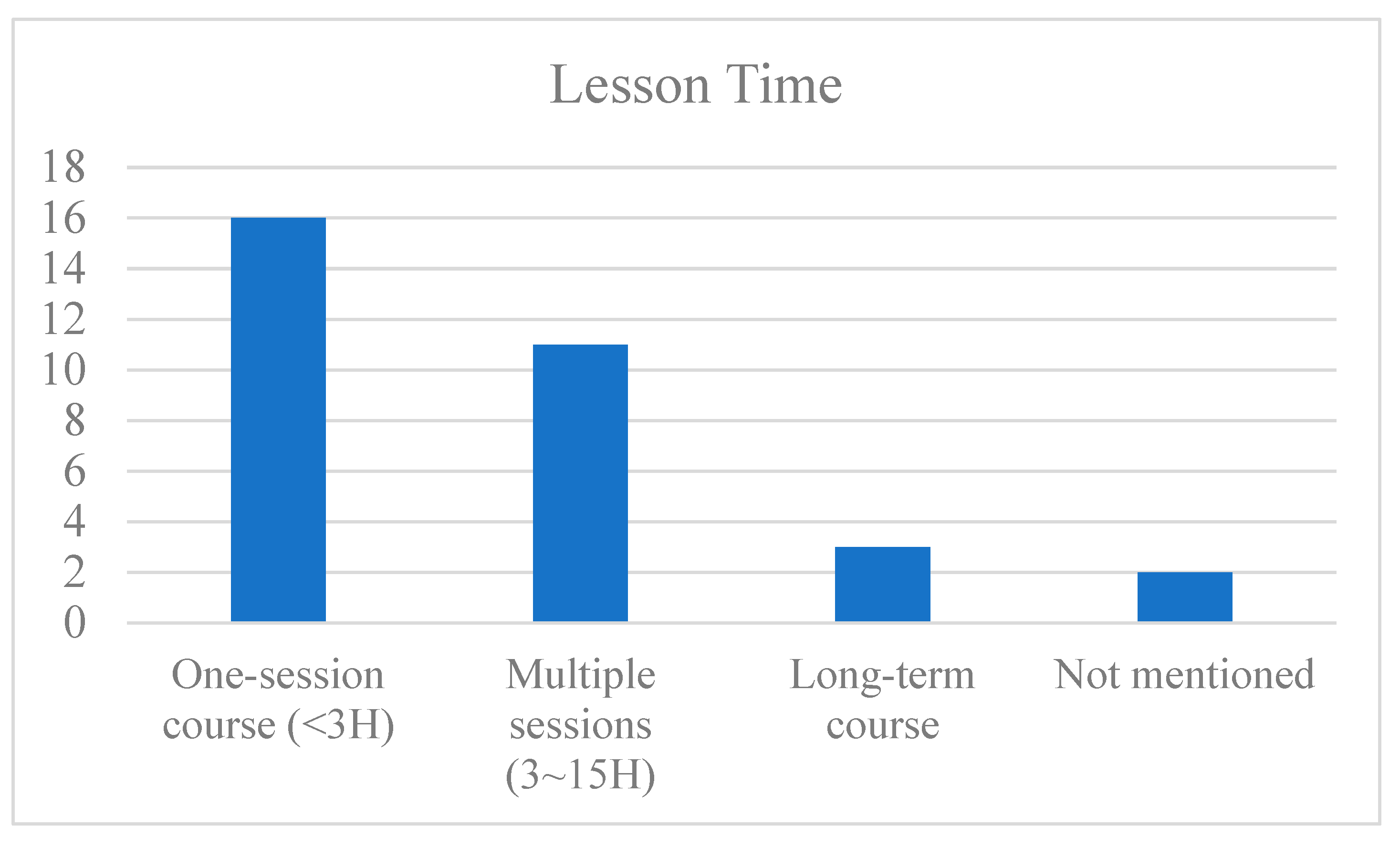

Duration

3.2.2. Learning Content, Tools and Materials, and Prior Knowledge Prerequisites of the Teaching Units

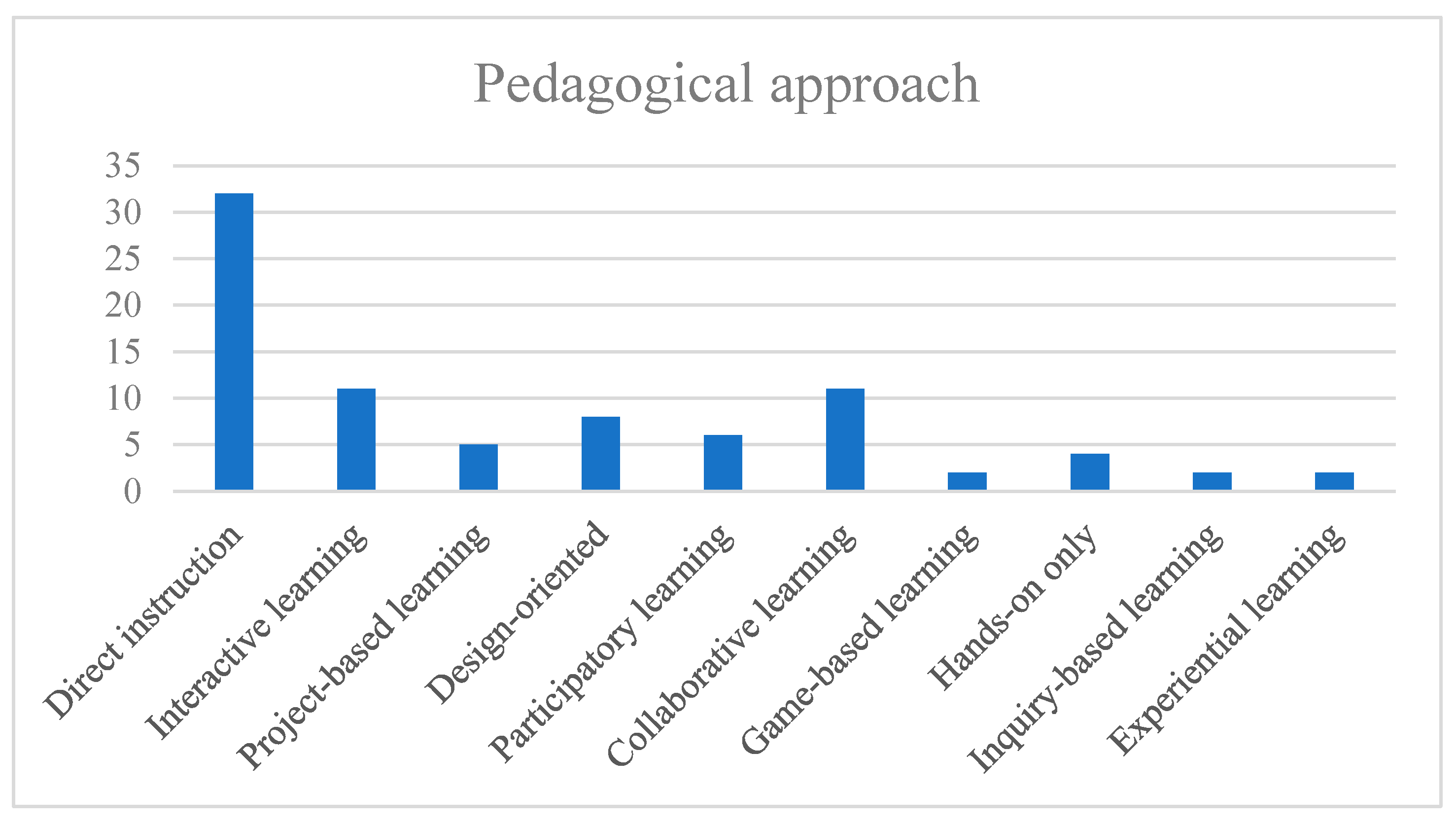

3.2.3. Learning Theory, Pedagogical Approach, and T&L Activities of the Teaching Units

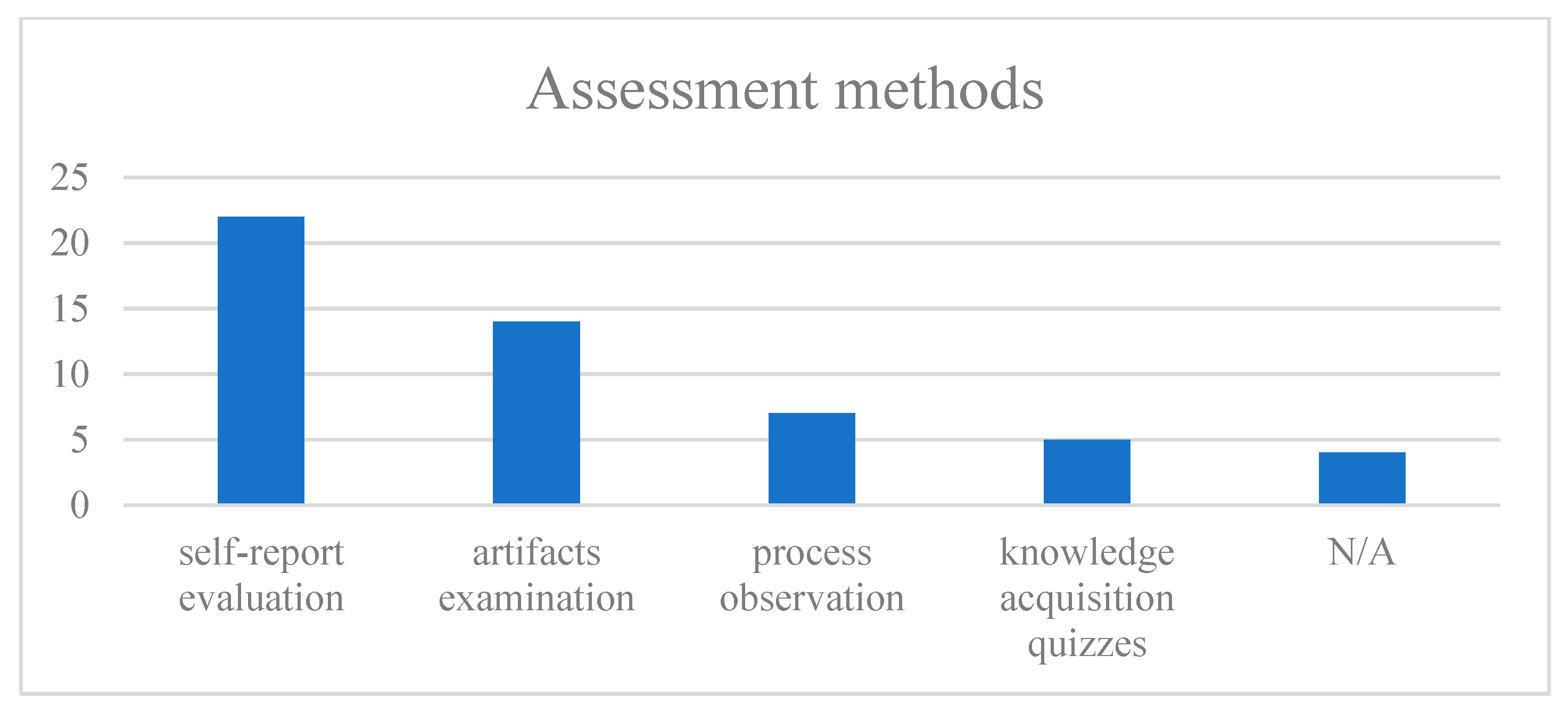

3.3. Assessments and Learning Outcomes of the Reported Teaching Units

4. Discussion and Future Directions

4.1. Main Findings

4.2. Selected Exemplary Designs

4.3. Strengths and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Authors and Year | Country/Region | Age Level | Lesson Duration | Pedagogical Approach | Assessment |
|---|---|---|---|---|---|
| Henry et al. (2021) [9] | Belgium | Middle school + primary school | 1 Session; 2–4 h | Role-playing game: children alternate between the roles of developers, testers, and AI | Open questions: personal definition of AI |
| Van Brummelen et al. (2021) [47] | United Kingdom | Grade 6–12 (middle school) | 5 Sessions; 13–15 h | Direct instruction | Questionnaire; Students’ artifacts |
| Vartiainen et al. (2021) [40] | Finland | Age 12–13 | 3 Sessions; 8–9 h | Design-oriented pedagogy; Collaborative learning | Products; Process observation |
| Bilstrup et al. (2020) [56] | Denmark | Age 16–20 | 1 Session; ~3 h | Design as a learning approach | Artifact analysis |
| Lin et al. (2020) [60] | USA | Age 8–10 | 1 Session; ~2 h | Interactive learning | Five-item open question assessment; Self-evaluation questionnaire |
| Norouzi et al. (2020) [53] | USA | Secondary school students | 1 Month; ~80 h | Collaborative learning; Instruction transitioned away from objectivist (basic knowledge) strategies to constructivist strategies (project) | Questionnaire for knowledge acquisition; Questionnaire for self-evaluation |
| Vartiainen et al. (2020) [41] | Finland | Age 12–13 | 3 Sessions; 8–9 h | Design-oriented pedagogy emphasizing open-ended, real-life learning tasks | Products; Process observation |
| Wan et al. (2020) [48] | USA | Age 15–17 | 1 Session; ~3 h | Design space involves data visualization; hands-on exploration; collaborative learning | Questionnaire for knowledge acquisition |
| Toivonen et al. (2019) [42] | Finland | Age 12–13 | 3 Sessions; 8–9 h | Meta design approach: children as designers and creators in the evolving process of learning; Project-based learning | Tests; group discussion; artifacts; interviews |
| Hitron et al. (2019) [49] | Israel | Age 10–13 | 1 Session | Learning by design approach; Experience; Predefined structured support | Observation; Interview |
| Mariescu-Istodor & Jormanainen (2019) [33] | Romania | Age 13–19 | 1 Session; ~2 h | Collaborative learning | Questionnaire (motivation); Self-assessment (perceived competence) |
| Estevez et al. (2019) [52] | Spain | Age 16–17 | 1 Session; ~2 h | Direct instruction; Hands-on practice; Collaborative learning | N/A |
| Williams et al. (2019a) [38] | USA | Age 4–6 | 1 Session; ~2 h (designed); 2–4 days in total | Interactive learning; Collaborative learning | Perception of robots questionnaire; Theory of mind assessment |
| Williams et al. (2019b) [8] | USA | Age 4–6 | 1 Session; ~2 h (designed); 2–4 days in total | Interactive learning; Collaborative learning | Multiple-choice questions for AI knowledge |
| Druga et al. (2019) [50] | USA, Germany, Denmark, Sweden | Age 7–9; Age 10–12 | 1 Session; ~2 h | Interactive learning | AI perception questionnaire |
| Hitron et al. (2018) [51] | Israel | Age 10–12 | 1 Session | Interactive learning | Artifact analysis |
| Sakulkueakulsuk et al. (2018) [55] | Thailand | Grade 7–9 (middle school) | 3 Sessions; 9 h | Participatory learning; Four Ps of Creative Learning (Projects, Passion, Play, and Peers); PBL, GBL, CL | AI: product evaluation; Other: self-report survey (learning experiences and the adoption of new learning and thinking processes) |
| Woodward et al. (2018) [66] | USA | Age 7–12 | 4 Sessions; 6–8 h | Cooperative inquiry; Codesign | N/A |
| Srikant & Aggarwal (2017) [37] | India | Age 10–15 | 1 Session | Direct instruction; Hands-on practice; Cognitive-based task design | N/A |
| Burgsteiner et al. (2016) [54] | Euro | Grade 9–11 (secondary school) | 7 Sessions; 14 h | Theoretical and hands-on components; Group work | Self-assessment questionnaire |
| Vartiainen et al. (2020) [39] | Finland | Age 3–9 | ~1 h | Participatory learning | N/A |
| Druga & Ko (2021) [90] | USA | Age 7–12 | N/M | Project-based learning | Observation; Questionnaire (for perception) |
| Tseng et al. (2021) [45] | USA & Japan | Age 8–14 | ~2 h | Direct instruction; Project-based learning; Design-oriented learning | Survey about knowledge of ML |
| Shamir (2021) [46] | Israel | Age 12 | 6-Day course | Participatory learning; Interactive learning | Artifact analysis; Course questionnaire (multiple choice) |
| Zhang et al. (2022) [34] | N/M | Grade 7–9 (middle school) | >25 h | Interactive learning; Collaborative learning; Participatory learning | AI concept inventory (Good example) |
| Hsu et al. (2022) [67] | N/M | Grade 7 (middle school) | 6-Week curriculum | Experiential learning (interactive learning) vs. cycle of doing projects (direct instruction) | Course questionnaire (multiple choice) |
| Lee et al. (2021) [65] | N/M | Age 8–11 | N/M | Game-based learning; Problem-based learning; Collaborative learning | Pre/post assessment of AI concepts, ethics, life science |
| Kaspersen et al. (2021) [43] | Denmark | Age 17–20 | 6 interventions (sessions); ~10 h | Project-based learning; Collaborative learning; Participatory learning (social science) | Observation |
| Fernandez-Martinez et al. (2021) [44] | Spain | Grade 8/Grade 10 | 2 Sessions; 3–4 h | Individual work; Direct instruction; Interactive learning | Quiz with open and multiple-choice questions |
| Melsion et al. (2021) [36] | N/M | Age 10–14 | <30 min | Direct instruction; Interactive learning | Questions evaluating understanding of ML and bias: multiple-choice, open-ended, Likert scale |
| Ng et al. (2022) [35] | Hong Kong | Primary school students | 7 Sessions + self-create workshop | Digital story writing; Inquiry-based learning: five phases (orientation, conceptualization, investigation, conclusion, and discussion) | Posttest about AI knowledge |
| Hsu et al. (2021) [64] | Taiwan | Grade 5 | 9 Sessions (9 weeks) | Game-based learning; Learning in making (Robots); Experiential learning | Learning effectiveness test |

References

1. Jennings, C. Artificial Intelligence: Rise of the Lightspeed Learners; Rowman & Littlefield: Lanham, MD, USA, 2019.
2. Trustworthy Artificial Intelligence (AI) in Education: Promises and Challenges. Available online: https://www.oecd.org/education/trustworthy-artificial-intelligence-ai-in-education-a6c90fa9-en.htm (accessed on 25 October 2022).
3. Long, D.; Magerko, B. What is AI Literacy? Competencies and Design Considerations. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 21 April 2020; pp. 1–16.
4. Ho, J.W.; Scadding, M.; Kong, S.C.; Andone, D.; Biswas, G.; Hoppe, H.U.; Hsu, T.C. Classroom Activities for Teaching Artificial Intelligence to Primary School Students. In Proceedings of the International Conference on Computational Thinking Education, Hong Kong, China, 12–15 June 2019; pp. 157–159.
5. Kandlhofer, M.; Steinbauer, G.; Hirschmugl-Gaisch, S.; Huber, P. Artificial Intelligence and Computer Science in Education: From Kindergarten to University. In Proceedings of the IEEE Frontiers in Education Conference (FIE), Erie, PA, USA, 12–15 October 2016; pp. 1–9.
6. Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000366994 (accessed on 25 October 2022).
7. Su, J.; Yang, W. Artificial Intelligence in early childhood education: A scoping review. Comput. Educ. Artif. Intell. 2022, 3, 100049.
8. Williams, R.; Park, H.W.; Oh, L.; Breazeal, C. Popbots: Designing an Artificial Intelligence Curriculum for Early Childhood Education. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9729–9736.
9. Henry, J.; Hernalesteen, A.; Collard, A.-S. Teaching artificial intelligence to K-12 through a role-playing game questioning the Intelligence Concept. KI-Künstliche Intell. 2021, 35, 171–179.
10. Piaget, J. Intellectual evolution from adolescence to adulthood. Hum. Dev. 1972, 15, 1–12.
11. Vygotsky, L. Interaction between learning and development. Read. Dev. Child. 1978, 23, 34–41.
12. Spector, J.M.; Anderson, T.M. Integrated and Holistic Perspectives on Learning, Instruction and Technology; Kluwer Academic Publishers: Boston, MA, USA, 2000.
13. Bahar, M. Misconceptions in biology education and conceptual change strategies. Educ. Sci. Theory Pract. 2003, 3, 55–64.
14. D’Silva, I. Active learning. J. Educ. Adm. Policy Stud. 2010, 6, 77–82.
15. Makgato, M. Identifying constructivist methodologies and pedagogic content knowledge in the teaching and learning of Technology. Procedia-Soc. Behav. Sci. 2012, 47, 1398–1402.
16. Papert, S.; Solomon, C.; Soloway, E.; Spohrer, J.C. Twenty Things to do with a Computer. In Studying the Novice Programmer; Psychology Press: Hove, UK, 1971; pp. 3–28.
17. Marques, L.S.; Gresse von Wangenheim, C.; Hauck, J.C. Teaching machine learning in school: A systematic mapping of the state of the art. Inform. Educ. 2020, 19, 283–321.
18. Touretzky, D.; Gardner-McCune, C.; Martin, F.; Seehorn, D. Envisioning AI for K-12: What Should Every Child Know About AI? In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 17 July 2019; pp. 9795–9799.
19. Zhou, X.; Van Brummelen, J.; Lin, P. Designing AI learning experiences for K-12: Emerging works, future opportunities and a design framework. arXiv 2020, arXiv:2009.10228.
20. Sanusi, I.T.; Oyelere, S.S. Pedagogies of Machine Learning in K-12 Context. In Proceedings of the 2020 IEEE Frontiers in Education Conference (FIE), Uppsala, Sweden, 21–24 October 2020; pp. 1–8.
21. Gresse von Wangenheim, C.; Hauck, J.C.; Pacheco, F.S.; Bertonceli Bueno, M.F. Visual tools for teaching machine learning in K-12: A ten-year systematic mapping. Educ. Inf. Technol. 2021, 26, 5733–5778.
22. Su, J.; Zhong, Y.; Ng, D.T. A meta-review of literature on educational approaches for teaching AI at the K-12 levels in the Asia-Pacific region. Comput. Educ. Artif. Intell. 2022, 3, 100065.
23. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 151, 264–269.
24. Hill, H.C.; Rowan, B.; Ball, D.L. Effects of teachers’ mathematical knowledge for teaching on student achievement. Am. Educ. Res. J. 2005, 42, 371–406.
25. Branch, R.M. Instructional Design: The ADDIE Approach; Springer Science & Business Media: New York, NY, USA, 2009.
26. Gerlach, V.S.; Ely, D.P. Teaching & Media: A Systematic Approach, 2nd ed.; Prentice-Hall Incorporated: Englewood Cliffs, NJ, USA, 1980.
27. Susilana, R.; Hutagalung, F.; Sutisna, M.R. Students’ perceptions toward online learning in higher education in Indonesia during COVID-19 pandemic. Elem. Educ. Online 2020, 19, 9–19.
28. Yusnita, I.; Maskur, R.; Suherman, S. Modifikasi model Pembelajaran Gerlach Dan Ely Melalui Integrasi Nilai-Nilai Keislaman Sebagai upaya meningkatkan Kemampuan Representasi matematis. Al-Jabar J. Pendidik. Mat. 2016, 7, 29–38.
29. Setiawati, R.; Netriwati, N.; Nasution, S.P. DESAIN model Pembelajaran Gerlach Dan Ely Yang Berciri nilai-nilai ke-islaman Untuk Meningkatkan Kemampuan Komunikasi Matematis. AKSIOMA J. Program Studi Pendidik. Mat. 2018, 7, 371.
30. Surur, A.M. Gerlach and Ely’s learning model: How to implement it to online learning for statistics course. Edumatika J. Ris. Pendidik. Mat. 2022, 4, 174–188.
31. Tuckett, A.G. Applying thematic analysis theory to practice: A researcher’s experience. Contemp. Nurse 2005, 19, 75–87.
32. Dai, Y.; Lu, S.; Liu, A. Student pathways to understanding the Global Virtual Teams: An Ethnographic Study. Interact. Learn. Environ. 2019, 27, 3–14.
33. Mariescu-Istodor, R.; Jormanainen, I. Machine Learning for High School Students. In Proceedings of the 19th Koli Calling International Conference on Computing Education Research, New York, NY, USA, 21–24 November 2019; pp. 1–9.
34. Zhang, H.; Lee, I.; Ali, S.; DiPaola, D.; Cheng, Y.; Breazeal, C. Integrating ethics and career futures with technical learning to promote AI literacy for middle school students: An exploratory study. Int. J. Artif. Intell. Educ. 2022, 1, 1–35.
35. Ng, D.T.; Luo, W.; Chan, H.M.; Chu, S.K. Using digital story writing as a pedagogy to develop AI literacy among primary students. Comput. Educ. Artif. Intell. 2022, 3, 100054.
36. Melsión, G.I.; Torre, I.; Vidal, E.; Leite, I. Using explainability to help children understand gender bias in AI. In Proceedings of the Interaction Design and Children, Athens, Greece, 24–30 June 2021; pp. 87–99.
37. Srikant, S.; Aggarwal, V. Introducing Data Science to School Kids. In Proceedings of the 2017 ACM SIGCSE Technical Symposium on Computer Science Education, Seattle, WA, USA, 8–11 March 2017; pp. 561–566.
38. Williams, R.; Park, H.W.; Breazeal, C. A is for Artificial Intelligence. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–11.
39. Vartiainen, H.; Tedre, M.; Valtonen, T. Learning machine learning with very young children: Who is teaching whom? Int. J. Child-Comput. Interact. 2020, 25, 100182.
40. Vartiainen, H.; Toivonen, T.; Jormanainen, I.; Kahila, J.; Tedre, M.; Valtonen, T. Machine learning for middle schoolers: Learning through data-driven design. Int. J. Child-Comput. Interact. 2021, 29, 100281.
41. Vartiainen, H.; Toivonen, T.; Jormanainen, I.; Kahila, J.; Tedre, M.; Valtonen, T. Machine Learning for Middle-Schoolers: Children as Designers of Machine-Learning Apps. In Proceedings of the 2020 IEEE Frontiers in Education Conference (FIE), Uppsala, Sweden, 21–24 October 2020; pp. 1–9.
42. Toivonen, T.; Jormanainen, I.; Kahila, J.; Tedre, M.; Valtonen, T.; Vartiainen, H. Co-designing Machine Learning Apps in K-12 with Primary School Children. In Proceedings of the IEEE 20th International Conference on Advanced Learning Technologies (ICALT), Tartu, Estonia, 6–9 July 2020; pp. 308–310.
43. Kaspersen, M.H.; Bilstrup, K.-E.K.; Van Mechelen, M.; Hjorth, A.; Bouvin, N.O.; Petersen, M.G. VotestratesML: A High School Learning Tool for Exploring Machine Learning and its Societal Implications. In Proceedings of the FabLearn Europe/MakeEd 2021-An International Conference on Computing, Design and Making in Education, St. Gallen, Switzerland, 2–3 June 2021; pp. 1–10.
44. Fernández-Martínez, C.; Hernán-Losada, I.; Fernández, A. Early introduction of AI in Spanish Middle Schools. A motivational study. KI-Künstliche Intell. 2021, 35, 163–170.
45. Tseng, T.; Murai, Y.; Freed, N.; Gelosi, D.; Ta, T.D.; Kawahara, Y. PlushPal: Storytelling with Interactive Plush Toys and Machine Learning. In Proceedings of the Interaction Design and Children, Athens, Greece, 24–30 June 2021; pp. 236–245.
46. Shamir, G.; Levin, I. Neural network construction practices in elementary school. KI-Künstliche Intell. 2021, 35, 181–189.
47. Van Brummelen, J.; Heng, T.; Tabunshchyk, V. Teaching Tech to Talk: K-12 Conversational Artificial Intelligence Literacy Curriculum and Development Tools. In Proceedings of the AAAI Conference on Artificial Intelligence 2021, Online, 2–9 February 2021; Volume 35, pp. 15655–15663.
48. Wan, X.; Zhou, X.; Ye, Z.; Mortensen, C.K.; Bai, Z. Smileycluster: Supporting accessible machine learning in K-12 scientific discovery. In Proceedings of the Interaction Design and Children Conference, London, UK, 21–24 June 2020; pp. 23–35.
49. Hitron, T.; Orlev, Y.; Wald, I.; Shamir, A.; Erel, H.; Zuckerman, O. Can Children Understand Machine Learning Concepts? In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 2 May 2019; pp. 1–11.
50. Druga, S.; Vu, S.T.; Likhith, E.; Qiu, T. Inclusive AI Literacy for Kids Around the World. In Proceedings of the FabLearn 2019, New York, NY, USA, 9–10 March 2019; pp. 104–111.
51. Hitron, T.; Wald, I.; Erel, H.; Zuckerman, O. Introducing Children to Machine Learning Concepts through Hands-On Experience. In Proceedings of the 17th ACM Conference on Interaction Design and Children, Trondheim, Norway, 19–22 June 2018; pp. 563–568.
52. Estevez, J.; Garate, G.; Grana, M. Gentle introduction to artificial intelligence for high-school students using scratch. IEEE Access 2019, 7, 179027–179036.
53. Norouzi, N.; Chaturvedi, S.; Rutledge, M. Lessons Learned from Teaching Machine Learning and Natural Language Processing to High School Students. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 3 April 2020; Volume 34, pp. 13397–13403.
54. Burgsteiner, H.; Kandlhofer, M.; Steinbauer, G. Irobot: Teaching the Basics of Artificial Intelligence in High Schools. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 5 May 2016; Volume 30.
55. Sakulkueakulsuk, B.; Witoon, S.; Ngarmkajornwiwat, P.; Pataranutaporn, P.; Surareungchai, W.; Pataranutaporn, P.; Subsoontorn, P. Kids making AI: Integrating Machine Learning, Gamification, and Social Context in STEM Education. In Proceedings of the 2018 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Wollongong, Australia, 4–7 December 2018; pp. 1005–1010.
56. Bilstrup, K.-E.K.; Kaspersen, M.H.; Petersen, M.G. Staging Reflections on Ethical Dilemmas in Machine Learning. In Proceedings of the 2020 ACM Designing Interactive Systems Conference, Eindhoven, The Netherlands, 3 July 2020; pp. 1211–1222.
57. Lane, D. Machine Learning for Kids: A Project-Based Introduction to Artificial Intelligence; No Starch Press: San Francisco, CA, USA, 2021.
58. Carney, M.; Webster, B.; Alvarado, I.; Phillips, K.; Howell, N.; Griffith, J.; Jongejan, J.; Pitaru, A.; Chen, A. Teachable Machine: Approachable Web-Based Tool for Exploring Machine Learning Classification. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25 April 2020; pp. 1–8.
59. Abadi, M. Tensorflow: Learning Functions at Scale. In Proceedings of the 21st ACM SIGPLAN International Conference on Functional Programming, Nara, Japan, 4 September 2016; p. 1.
60. Lin, P.; Van Brummelen, J.; Lukin, G.; Williams, R.; Breazeal, C. Zhorai: Designing a Conversational Agent for Children to Explore Machine Learning Concepts. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 3 April 2020; Volume 34, pp. 13381–13388.
61. Krapfl, J.E. Behaviorism and society. Behav. Anal. 2016, 39, 123–129.
62. Abramson, C. Problems of teaching the behaviorist perspective in the Cognitive Revolution. Behav. Sci. 2013, 3, 55–71.
63. Mandler, G. Origins of the cognitive (r)evolution. J. Hist. Behav. Sci. 2002, 38, 339–353.
64. Hsu, T.-C.; Abelson, H.; Lao, N.; Tseng, Y.-H.; Lin, Y.-T. Behavioral-pattern exploration and development of an instructional tool for young children to learn AI. Comput. Educ. Artif. Intell. 2021, 2, 100012.
65. Lee, S.; Mott, B.; Ottenbreit-Leftwich, A.; Scribner, A.; Taylor, S.; Park, K.; Rowe, J.; Glazewski, K.; Hmelo-Silver, C.E.; Lester, J. AI-Infused Collaborative Inquiry in Upper Elementary School: A Game-Based Learning Approach. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 18 May 2021; Volume 35, pp. 15591–15599.
66. Woodward, J.; McFadden, Z.; Shiver, N.; Ben-hayon, A.; Yip, J.C.; Anthony, L. Using Co-design to Examine How Children Conceptualize Intelligent Interfaces. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, Canada, 21–26 April 2018; pp. 1–14.
67. Hsu, T.C.; Abelson, H.; Van Brummelen, J. The effects on secondary school students of applying experiential learning to the Conversational AI Learning Curriculum. Int. Rev. Res. Open Distrib. Learn. 2022, 23, 82–103.
68. Libarkin, J.C.; Kurdziel, J.P. Research methodologies in science education: The Qualitative-Quantitative Debate. J. Geosci. Educ. 2002, 50, 78–86.
69. Global Education Monitoring Report 2020: Inclusion and Education-All Means All; United Nations Educational, Scientific and Cultural Organization: Paris, France, 2021; p. S.l.
70. Resnick, M.; Silverman, B. Some Reflections on Designing Construction Kits for Kids. In Proceedings of the 2005 Conference on Interaction Design and Children-IDC, Boulder, CO, USA, 8–10 June 2005; pp. 117–122.
71. Sorva, J. Visual Program Simulation in Introductory Programming Education; Aalto Univ. School of Science: Espoo, Finland, 2012.
72. Steinbauer, G.; Kandlhofer, M.; Chklovski, T.; Heintz, F.; Koenig, S. A differentiated discussion about AI education K-12. KI-Künstliche Intell. 2021, 35, 131–137.
73. Guzdial, M. Learner-Centered Computing Education for Computer Science Majors. In Synthesis Lectures on Human-Centered Informatics; Springer: New York, NY, USA, 2016; pp. 83–94.
74. Papert, S. Mindstorms: Children, Computers, and Powerful Ideas; Harvester Press: Brighton, UK, 1980.
75. Palesuvaran, P.; Karpathy, A. (Tesla): CVPR 2021 Workshop on Autonomous Vehicles. Available online: https://pharath.github.io/self%20driving/Karpathy-CVPR-2021/ (accessed on 26 October 2022).
76. Koehler, M.J.; Mishra, P. What Is Technological Pedagogical Content Knowledge? Contemporary Issues in Technology and Teacher Education; Society for Information Technology & Teacher Education: Waynesville, NC, USA, 2009; Volume 9.
77. Valiant, L. Probably Approximately Correct Nature’s Algorithms for Learning and Prospering in a Complex World; Basic Books, A Member of the Perseus Books Group: New York, NY, USA, 2014.
78. Jong, M.S.-Y.; Geng, J.; Chai, C.S.; Lin, P.-Y. Development and predictive validity of the Computational Thinking Disposition Questionnaire. Sustainability 2020, 12, 4459.
79. Chiu, T.K.; Meng, H.; Chai, C.-S.; King, I.; Wong, S.; Yam, Y. Creation and evaluation of a pretertiary Artificial Intelligence (AI) curriculum. IEEE Trans. Educ. 2022, 65, 30–39.
80. Lehrer, R.; Pritchard, C. Symbolizing Space into Being. Symbolizing, Modeling and Tool Use in Mathematics Education; Springer: New York, NY, USA, 2002; pp. 59–86.
81. Druga, S. Growing Up with AI: Cognimates: From Coding to Teaching Machines. Doctoral Dissertation, Massachusetts Institute of Technology, Cambridge, MA, USA, 2018.
82. Evangelista, I.; Blesio, G.; Benatti, E. Why Are We Not Teaching Machine Learning at High School? A Proposal. In Proceedings of the 2018 World Engineering Education Forum-Global Engineering Deans Council (WEEF-GEDC), Albuquerque, NM, USA, 12 November 2018; pp. 1–6.
83. Reyes, A.A.; Elkin, C.; Niyaz, Q.; Yang, X.; Paheding, S.; Devabhaktuni, V.K. A Preliminary Work on Visualization-Based Education Tool for High School Machine Learning Education. In Proceedings of the 2020 IEEE Integrated STEM Education Conference (ISEC), Online, 1 August 2020; pp. 1–5.
84. Gong, X.; Wu, Y.; Ye, Z.; Liu, X. Artificial Intelligence Course Design: Istream-based Visual Cognitive Smart Vehicles. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26 June 2018; pp. 1731–1735.
85. Chiu, T.K. A holistic approach to the design of Artificial Intelligence (AI) education for K-12 schools. TechTrends 2021, 65, 796–807.
86. Zhang, X.; Tlili, A.; Shubeck, K.; Hu, X.; Huang, R.; Zhu, L. Teachers’ adoption of an open and interactive e-book for teaching K-12 students artificial intelligence: A mixed methods inquiry. Smart Learn. Environ. 2021, 8, 34.
87. Sabuncuoglu, A. Designing One Year Curriculum to Teach Artificial Intelligence for Middle School. In Proceedings of the 2020 ACM Conference on Innovation and Technology in Computer Science Education, Trondheim, Norway, 15–19 June 2020; pp. 96–102.
88. Jong, M.S.Y.; Shang, J.J.; Lee, F.L.; Lee, J.H.M.; Law, H.Y. Learning online: A comparative study of a game-based situated learning approach and a traditional web-based learning approach. In Lecture Notes in Computer Science: Technologies for e-Learning and Digital Entertainment; Pan, Z., Aylett, R., Diener, H., Jin, X., Gobel, S., Li, L., Eds.; Springer: New York, NY, USA, 2006; Volume 3942, pp. 541–551.
89. Li, X.; Jiang, M.C.Y.; Jong, M.S.Y.; Zhang, X.; Chai, C.S. Understanding medical students’ perceptions of and behavioral intentions toward learning artificial intelligence. Int. J. Environ. Res. Public Health 2022, 17, 8733.
90. Druga, S.; Ko, A.J. How do children’s perceptions of machine intelligence change when training and coding smart programs? In Proceedings of the IDC ′21: Interaction Design and Children, Athens, Greece, 24–30 June 2021; pp. 49–61.

| AI Terms | Education Terms | School Level Terms |
|---|---|---|
| machine learning OR artificial intelligence OR deep learning OR neural network OR AI | teaching OR learning OR education OR curriculum OR curricula OR pedagog OR instruct | K-12 OR kid OR child OR primary OR elementary OR secondary OR middle OR high school OR pretertiary |
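The three term groups in the table above can be assembled into a single boolean search string. The following is a minimal sketch, not the authors' actual query: it assumes the groups are combined with AND and that multi-word phrases are quoted, neither of which the table states, and the function names `or_group` and `build_query` are hypothetical. Individual databases may require different syntax.

```python
# Term groups copied verbatim from the search-term table.
AI_TERMS = ["machine learning", "artificial intelligence", "deep learning",
            "neural network", "AI"]
EDUCATION_TERMS = ["teaching", "learning", "education", "curriculum",
                   "curricula", "pedagog", "instruct"]
SCHOOL_LEVEL_TERMS = ["K-12", "kid", "child", "primary", "elementary",
                      "secondary", "middle", "high school", "pretertiary"]


def or_group(terms):
    """Join terms with OR inside parentheses, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups):
    """Combine the OR-groups with AND (an assumption; the table lists only the groups)."""
    return " AND ".join(or_group(g) for g in groups)


query = build_query(AI_TERMS, EDUCATION_TERMS, SCHOOL_LEVEL_TERMS)
print(query)
```

Keeping each column as a separate list makes it straightforward to add or drop a term and regenerate the string for each database searched.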

| Model Used in Current Study | | Gerlach and Ely’s Model |
|---|---|---|
| Learning theory; Pedagogical approach | | Determination of strategy |
| Special T&L activity | | Determination of strategy |
| Learning content | | Specification of content |
| Scale | Target audience | Organization of groups |
| Scale | Course duration | Allocation of time |
| Scale | Setting | Allocation of space |
| Teaching resources | | Selection of resources |
| Prior knowledge prerequisites | | Assessment of entering behaviors |
| Aims and objectives | | Specification of objectives |
| Assessment and learning outcome | | Evaluation of performance |
| Assessment and learning outcome | | Analysis of feedback |

| Categories | Code List | Example |
|---|---|---|
| General information | Author, title, year, country | |
| | Publication type | Conference/Journal |
| | Project/course name (if any) | AI4Future |
| Research information | RQs | |
| | Target audience | Secondary school |
| | Sample size | |
| | Type of research | Qualitative |
| | Data source | Survey |
| Pedagogical design | Aims and objectives | |
| | T&L setting | Classroom |
| | Project/course duration | One day (3 h) |
| | Learning content | |
| | Pedagogical approach | PBL |
| | Theories | Constructionism |
| | Prior knowledge prerequisites | Scratch |
| | Special T&L activities | Unplugged |
| | Materials and tools | Robot |
| | Evaluation method | Self-evaluation |
| | Learning outcome | |
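The coding scheme above can be read as a record type with one field per code. The sketch below is one possible representation, assuming a Python dataclass; the class name `CodedStudy`, the field names, and the helper `missing_codes` are hypothetical and are not taken from the review. The example values are the illustrative entries from the table's Example column, and the author and title are placeholders.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional


@dataclass
class CodedStudy:
    # General information
    author: str
    title: str
    year: int
    country: Optional[str] = None
    publication_type: Optional[str] = None  # "Conference" or "Journal"
    project_name: Optional[str] = None
    # Research information
    research_questions: List[str] = field(default_factory=list)
    target_audience: Optional[str] = None
    sample_size: Optional[int] = None
    research_type: Optional[str] = None
    data_source: Optional[str] = None
    # Pedagogical design
    aims_and_objectives: Optional[str] = None
    setting: Optional[str] = None
    duration: Optional[str] = None
    learning_content: Optional[str] = None
    pedagogical_approaches: List[str] = field(default_factory=list)
    theories: List[str] = field(default_factory=list)
    prior_knowledge: Optional[str] = None
    special_activities: List[str] = field(default_factory=list)
    materials_and_tools: List[str] = field(default_factory=list)
    evaluation_method: Optional[str] = None
    learning_outcome: Optional[str] = None


def missing_codes(study):
    """Return the names of codes left empty, i.e. candidates for an N/M entry."""
    empty = []
    for f in fields(study):
        value = getattr(study, f.name)
        if value is None or value == []:
            empty.append(f.name)
    return empty


# Placeholder record filled with the table's example values (not a real study).
example = CodedStudy(
    author="Placeholder et al.", title="Placeholder title", year=2022,
    publication_type="Journal", project_name="AI4Future",
    target_audience="Secondary school", research_type="Qualitative",
    data_source="Survey", setting="Classroom", duration="One day (3 h)",
    pedagogical_approaches=["PBL"], theories=["Constructionism"],
    prior_knowledge="Scratch", special_activities=["Unplugged"],
    materials_and_tools=["Robot"], evaluation_method="Self-evaluation",
)
print(missing_codes(example))
```

Listing the empty fields per record gives a quick check on which codes a study did not report before it is entered in a summary table.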

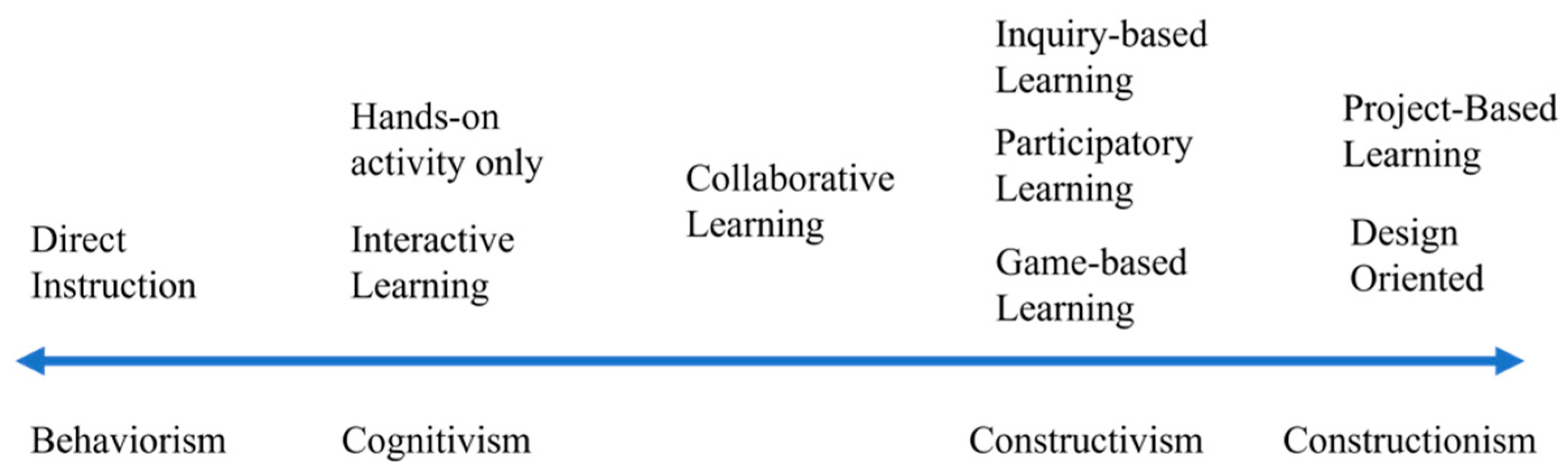

| Theory | Description | Implications |
|---|---|---|
| Behaviorism | This theory focuses solely on observable behavior, holding that actions are shaped by environmental stimuli [61]. It discounts mental activities such as cognition and emotion, which are regarded as too subjective [62]. | Direct instruction is prioritized. Feedback is provided on answers and quizzes. |
| Cognitivism | This theory emphasizes the processing and storage of information in the human brain [63]. Popular guiding theories in education include information processing theory, cognitive load theory, and metacognitive learning theory. Relevant learning strategies include outcome prediction, research step planning, time management, decision-making, and alternate strategy use when a search fails. | Design of lessons and materials is based on communicative language teaching. The different mental processes of novice and expert problem-solving are discussed. |
| (Social) Constructivism | This theory focuses on learners constructing their own understanding, including rules and mental models, of new knowledge or phenomena by activating and reflecting on their prior knowledge. | Active learning is prioritized. Learning is enhanced through social interaction. Authentic and real-world problems are employed. |
| Constructionism | This theory, based on constructivism, holds that learning is most effective when people actively construct tangible objects in the real world. | Project-based learning is prioritized. Students learn by doing (making). Artifacts are constructed. |

| Pedagogical Approach | Description in the Context of Teaching AI |
|---|---|
| Direct instruction | Teachers present the target knowledge through lectures, videos, and demonstrations. |
| Hands-on activity only | Students experience or explore tools and materials but are not involved in constructing them. |
| Interactive learning | Students engage in part of the construction of the AI or ML process, but they cannot necessarily define their own projects or problems. |
| Collaborative learning | Students conduct group work or paired work. |
| Inquiry-based learning | Students set their own learning goals, ask their own questions, and attempt to solve problems. However, they do not necessarily construct artifacts or products. |
| Game-based learning | Students learn through educational games. |
| Participatory learning | Students interact with their peers and experiment with different roles. |
| Project-based learning | Students learn by participating in the development of a project, typically involving artifact construction with the objective of solving a real-life problem. |
| Design-oriented learning | Students focus on the design element, with open-ended problems; children design their own projects instead of being assigned problems or projects. |
| Experiential learning | Students experience, reflect, think, and act in the learning process. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yue, M.; Jong, M.S.-Y.; Dai, Y. Pedagogical Design of K-12 Artificial Intelligence Education: A Systematic Review. Sustainability 2022, 14, 15620. https://doi.org/10.3390/su142315620
