Curve-Aware Model Predictive Control (C-MPC) Trajectory Tracking for Automated Guided Vehicle (AGV) over On-Road, In-Door, and Agricultural-Land

Abstract

1. Introduction

1.1. Problem Analysis and Motivation

1.2. Related Work

1.3. Contribution

- The proposed curve finding algorithm locates curves in Google Maps data and extracts the properties of each curve (curve radius, starting and ending points, and speed limit);

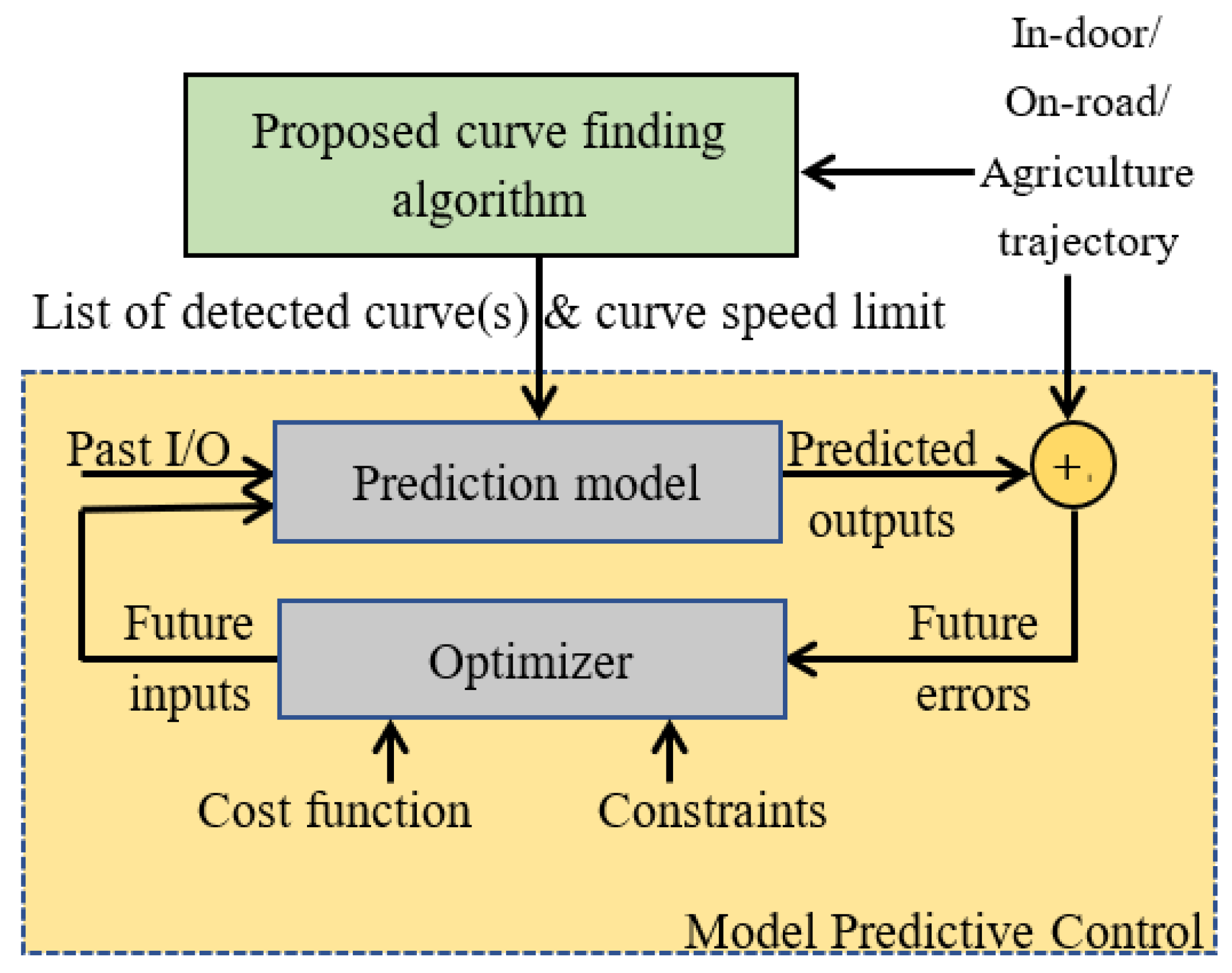

- The Model Predictive Control (MPC) technique is employed in the path tracking algorithm to improve awareness of oncoming curves. The MPC prediction model is loaded with the list of upcoming curves on the course and generates a future path that accounts for curvature. The enhanced MPC algorithm reduces the AGV speed according to the curve speed limit;

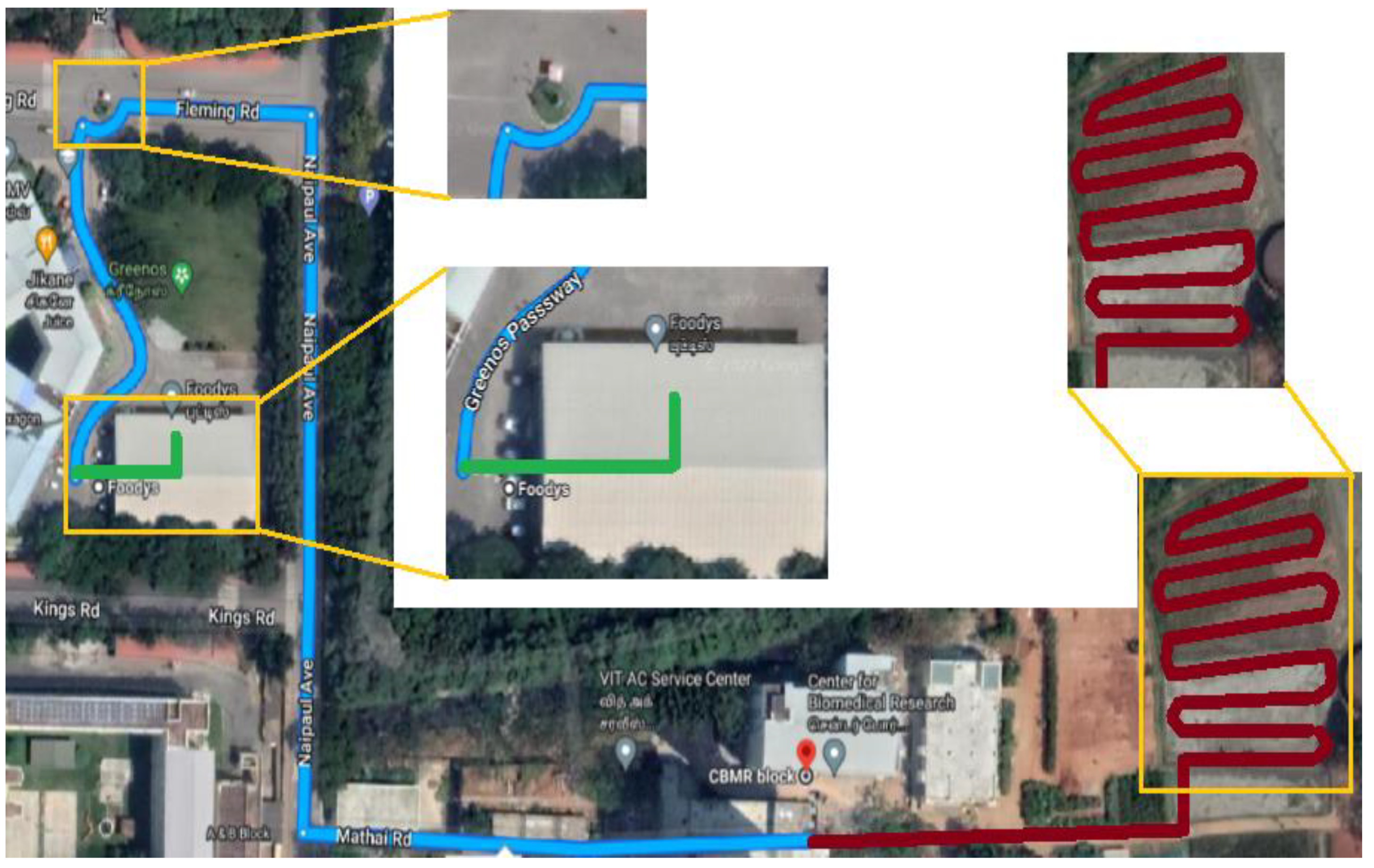

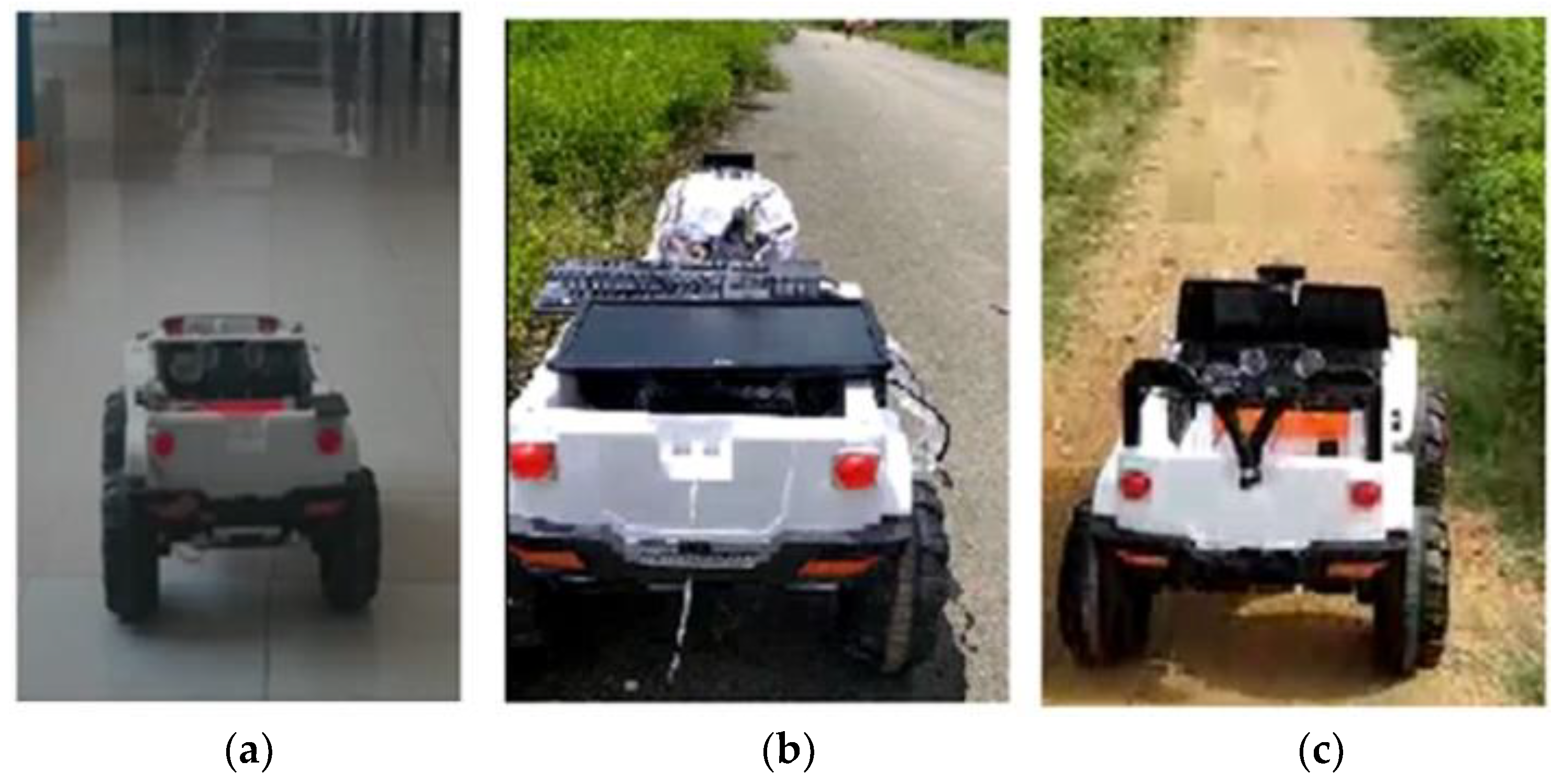

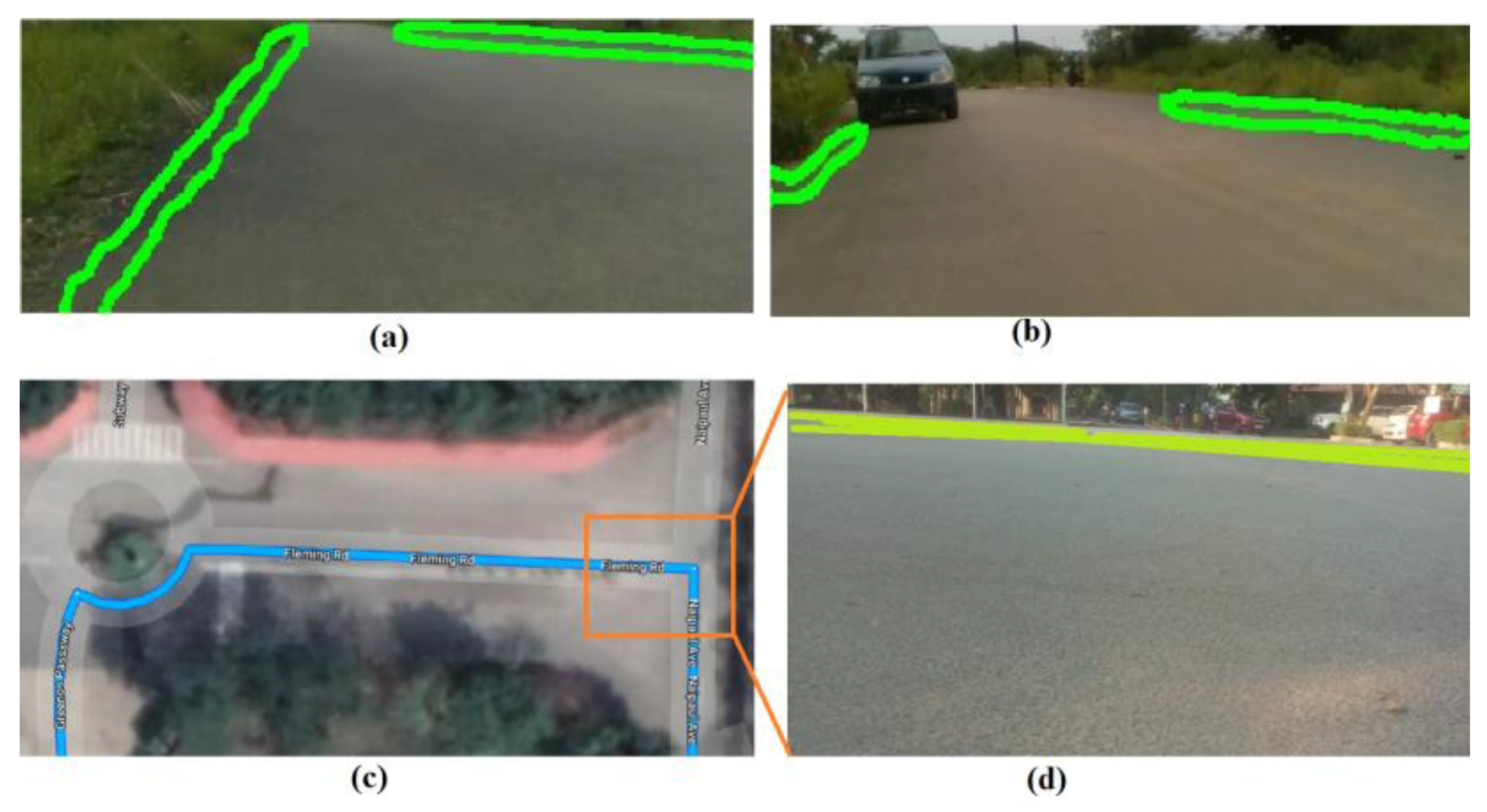

- In addition, practical experiments on the in-door, on-road, and agricultural paths depicted in Figure 1 are carried out to ensure that the proposed system is feasible.

2. Proposed Model

2.1. Automated Guided Vehicle (AGV) Architecture

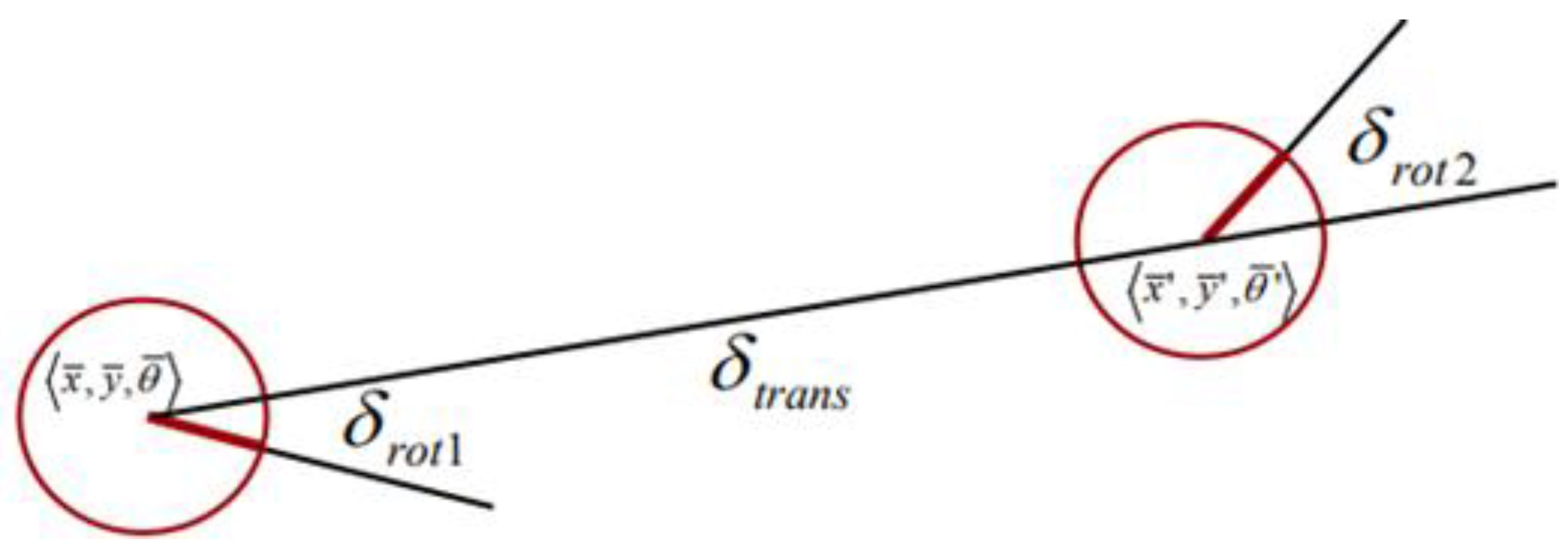

2.2. Odometry Motion Model [34]

2.3. Noise Model for Odometry

2.4. Non-GPS Location Update

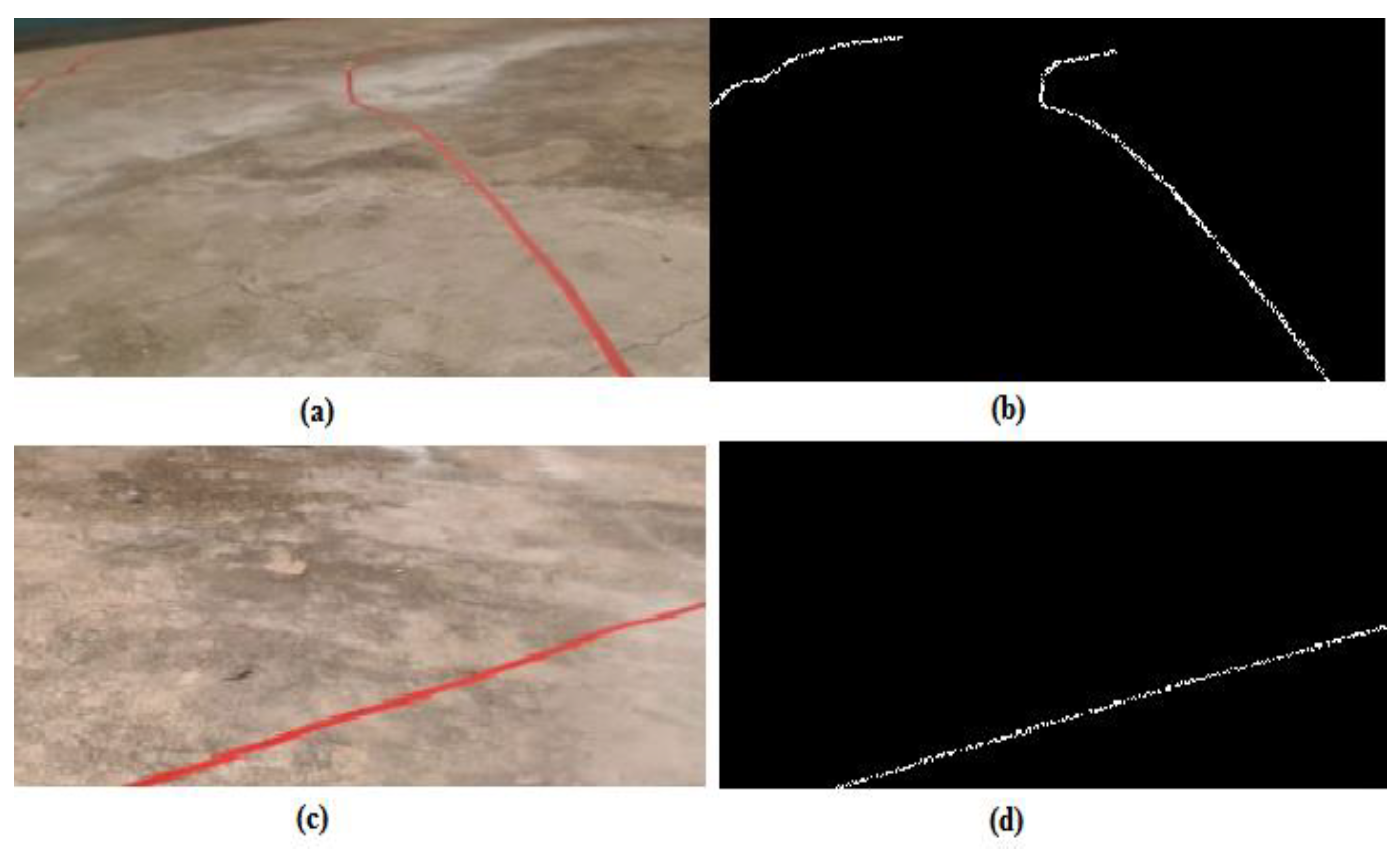

2.5. Trajectory (Path) Creation

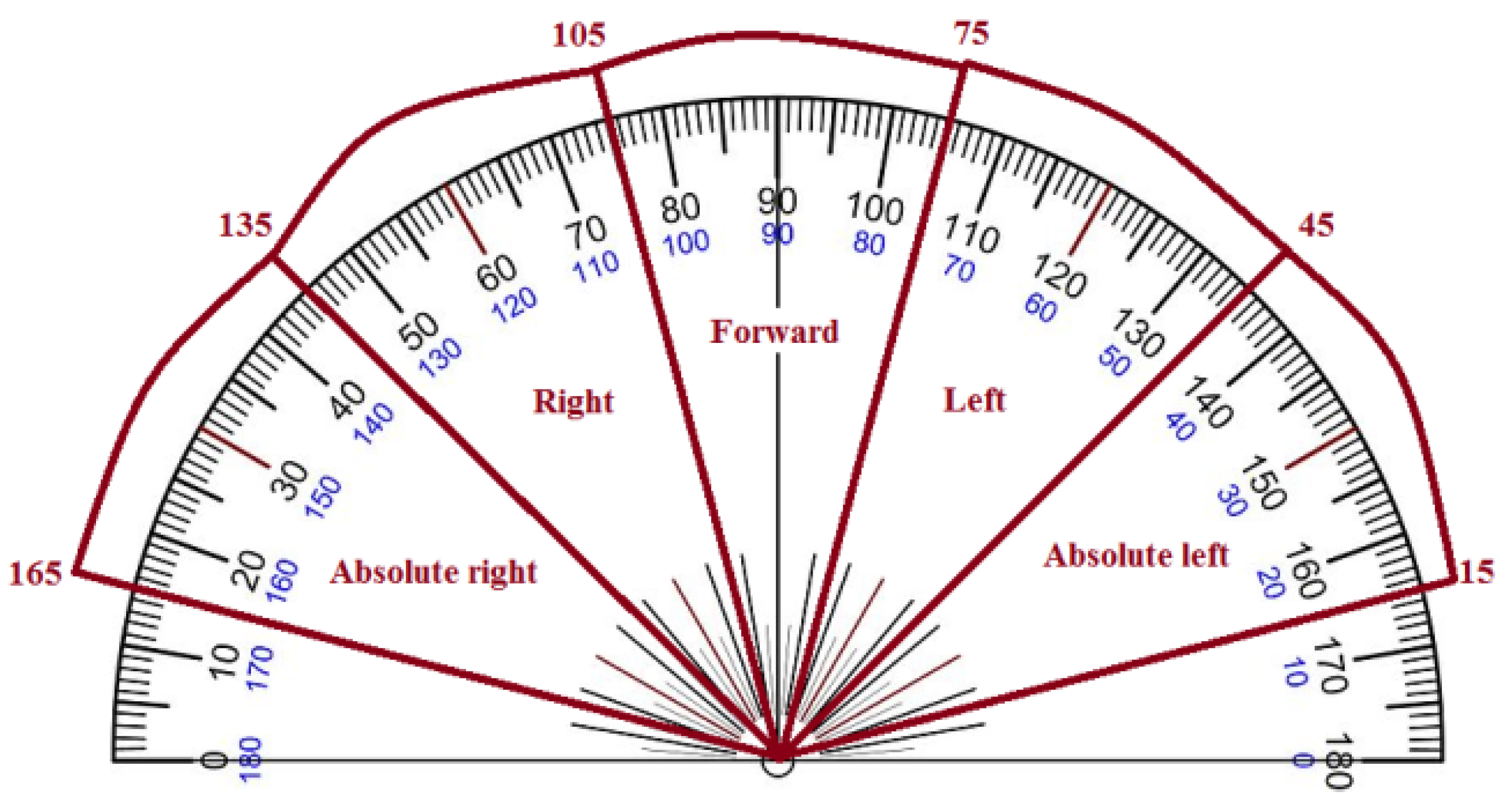

2.6. Proposed Curve Finding Algorithm

Algorithm 1: Curve finding algorithm.

Input: GPS waypoints, radius in meters

Output: list of found curves and their properties

    Function curve_finding(radius_meters)
        curve_List = [], curve_distance = 0, total_radius = 0, count = 0
        for each waypoint (with its next two waypoints) from curve_points do
            x = distance of waypoints 1 and 2
            y = distance of waypoints 2 and 3
            z = distance of waypoints 1 and 3
            curve_radius = (x ∗ y ∗ z) / sqrt((x + y + z) ∗ (y + z − x) ∗ (z + x − y) ∗ (x + y − z))
            if curve_radius ≤ radius_meters then
                curve_distance = curve_distance + (x + y)
                total_radius = total_radius + curve_radius
                count = count + 1
            else if count > 0 then
                radius = total_radius / count
                append [radius, curve_distance] to curve_List
                curve_distance = 0, total_radius = 0, count = 0
        return curve_List
    end Function

    list_curve_points = curve_finding(radius_meters = 200)  # 200 m radius
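The radius test in Algorithm 1 is the circumradius of the triangle formed by three consecutive waypoints. The following is a minimal Python sketch of that idea, not the authors' implementation. It assumes the GPS waypoints have already been projected to planar (x, y) coordinates in meters, and the names `circumradius`, `find_curves`, and `_summarise` are hypothetical. Unlike the listing, it measures curve length as the sum of the segments between the first and last waypoint of the curve, so that overlapping triples are not counted twice.

```python
import math


def circumradius(p1, p2, p3):
    """Radius of the circle through three planar points (math.inf if collinear)."""
    x = math.dist(p1, p2)
    y = math.dist(p2, p3)
    z = math.dist(p1, p3)
    # By Heron's formula this product equals 16 * (triangle area)^2.
    area_term = (x + y + z) * (y + z - x) * (z + x - y) * (x + y - z)
    if area_term <= 0.0:
        return math.inf
    return (x * y * z) / math.sqrt(area_term)


def _summarise(points, first, last, radii):
    """Describe one curve spanning points[first] .. points[last]."""
    length = sum(math.dist(points[k], points[k + 1]) for k in range(first, last))
    return {
        "radius": sum(radii) / len(radii),  # mean radius over the curve
        "length": length,                   # summed segment lengths, m
        "start": points[first],
        "end": points[last],
    }


def find_curves(points, max_radius_m):
    """Group consecutive waypoint triples whose radius is within max_radius_m."""
    curves = []
    radii = []      # radii of the triples in the curve currently being built
    start_idx = 0
    for i in range(len(points) - 2):
        r = circumradius(points[i], points[i + 1], points[i + 2])
        if r <= max_radius_m:
            if not radii:
                start_idx = i
            radii.append(r)
        elif radii:
            # The last curved triple started at i - 1, so it ended at i + 1.
            curves.append(_summarise(points, start_idx, i + 1, radii))
            radii = []
    if radii:  # the path ended while still inside a curve
        curves.append(_summarise(points, start_idx, len(points) - 1, radii))
    return curves
```

Calling `find_curves(waypoints_m, 200.0)` mirrors the 200 m threshold used in the listing. Real GPS traces are noisy, so some smoothing or a minimum curve length would likely be needed before the triples are evaluated.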

2.7. Curve-Aware MPC (C-MPC) Design

Algorithm 2: Curve-aware prediction model algorithm.

Input: list of curve points, list of curve safe alert points

Output: the predicted control output

Abbreviations: state: current vehicle state; T: prediction horizon value

    function Prediction_model(list_of_curve_properties, curve_alert, T)
        for curve ∈ list_of_curve_properties do
            for i in range T:
                if (state.x < curve_start.x) and (state.y < curve_start.y) then:
                    if (state.x > curve_alert.x) and (state.y > curve_alert.y) then:
                        if (state.v > curve_speed_limit) then:
                            state.v = state.v ∗ 0.25
                future[0, i] = state.x    # x value
                future[1, i] = state.y    # y value
                future[2, i] = state.v    # velocity value
                future[3, i] = state.yaw  # yaw value
        return future
    end Function
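The slow-down rule in Algorithm 2 can be illustrated with a short Python sketch. This is a sketch under stated assumptions rather than the authors' controller: `State`, `Curve`, and `predict` are hypothetical names, the alert zone is modeled as a fixed distance from the curve starting point instead of the per-axis coordinate comparison in the listing, and the state is rolled forward with a constant-heading motion update that the listing does not show.

```python
import math
from dataclasses import dataclass, replace


@dataclass
class State:
    x: float    # position, m
    y: float    # position, m
    v: float    # speed, m/s
    yaw: float  # heading, rad


@dataclass
class Curve:
    start: tuple        # (x, y) of the curve starting point, m
    speed_limit: float  # curve speed limit, m/s


def predict(state, curves, horizon, dt=0.2, alert_distance=5.0, slow_factor=0.25):
    """Roll the state forward, slowing down inside a curve's alert zone."""
    s = replace(state)  # work on a copy so the caller's state is untouched
    future = [[0.0] * horizon for _ in range(4)]  # rows: x, y, v, yaw
    for i in range(horizon):
        for curve in curves:
            # Assumption: "near" means within alert_distance of the curve start.
            near = math.dist((s.x, s.y), curve.start) <= alert_distance
            if near and s.v > curve.speed_limit:
                s.v *= slow_factor  # same 0.25 factor as in Algorithm 2
        future[0][i], future[1][i] = s.x, s.y
        future[2][i], future[3][i] = s.v, s.yaw
        # Constant-heading motion update (assumption; no steering input here).
        s.x += s.v * math.cos(s.yaw) * dt
        s.y += s.v * math.sin(s.yaw) * dt
    return future
```

The distance-based alert test works for any direction of travel, but it also triggers just after the curve start has been passed. A full controller would feed the predicted speeds into the MPC cost or constraints rather than overwrite the state directly.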

3. Results

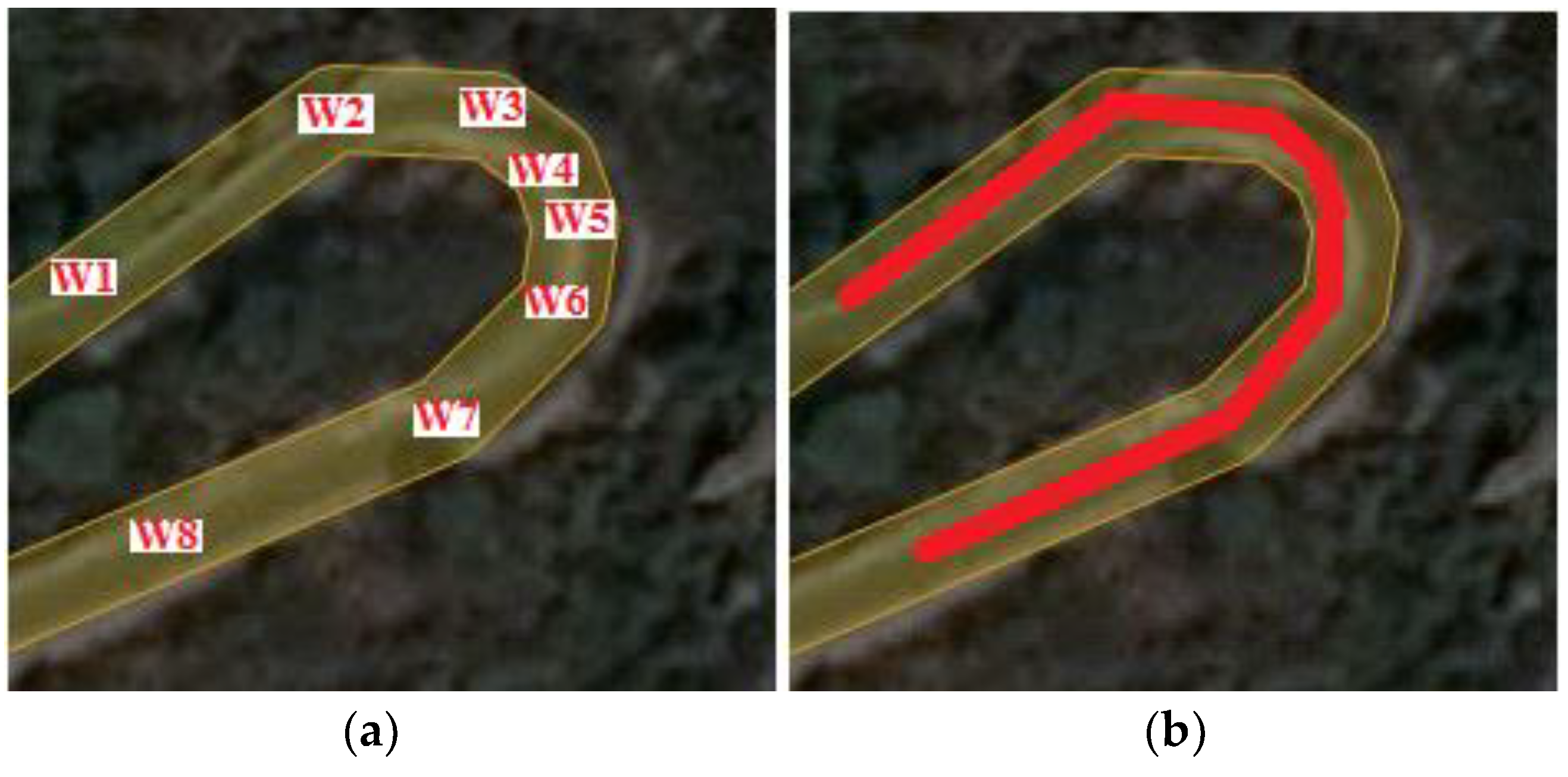

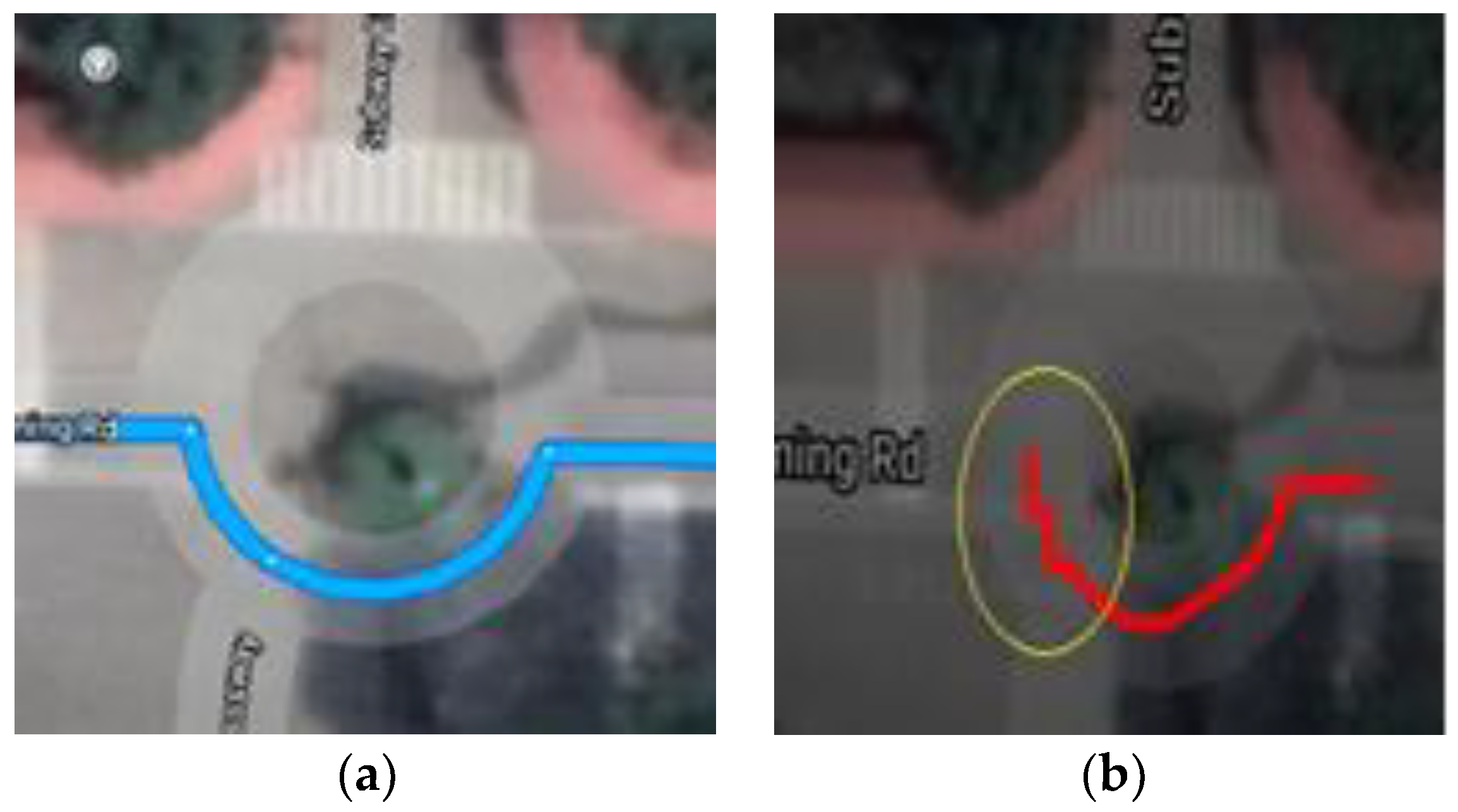

3.1. Proposed Curve Finding Method

3.2. Metrics for Navigation Results

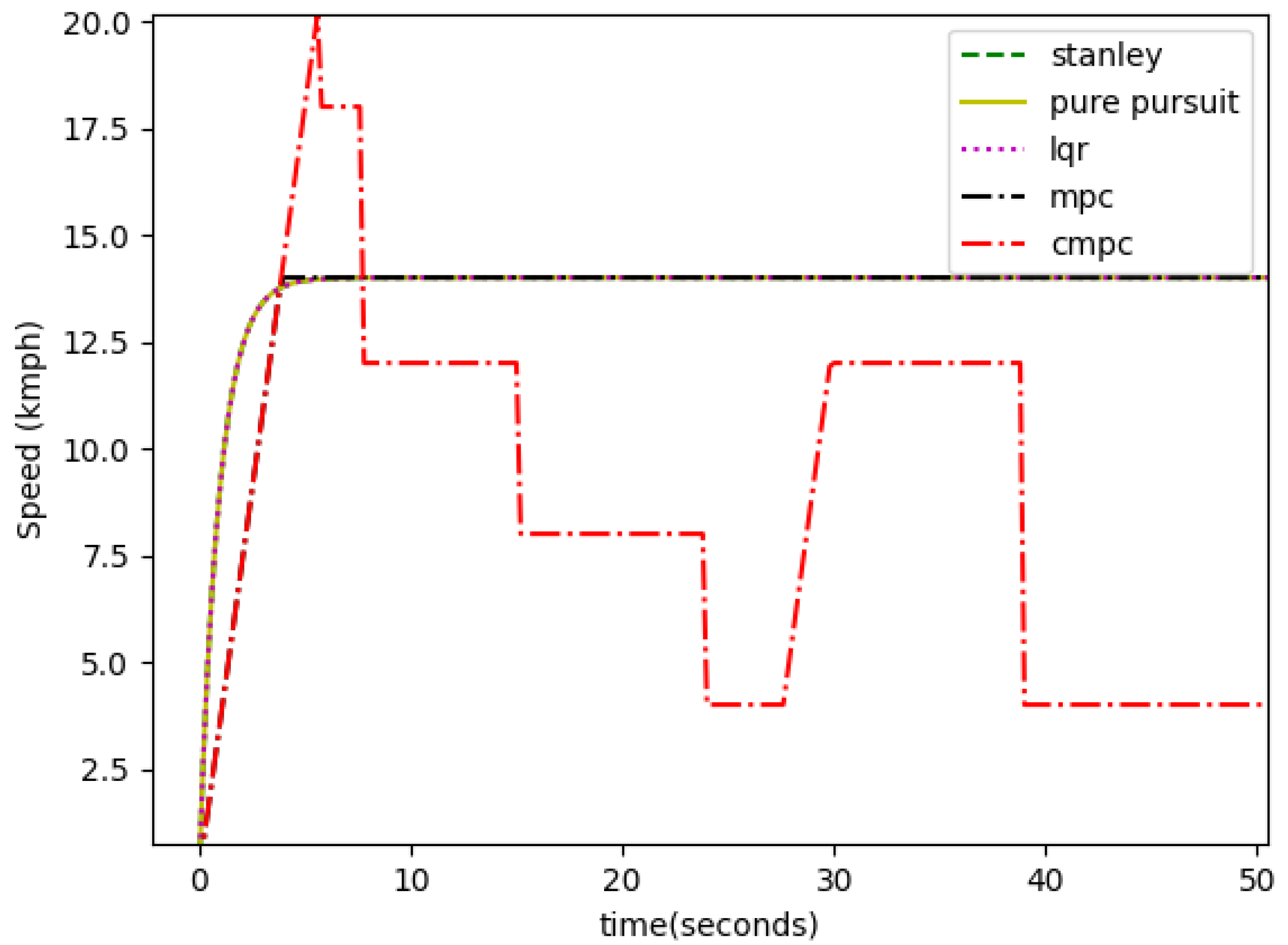

3.3. Curve-Aware MPC Result

3.4. Simulation Setup and Results

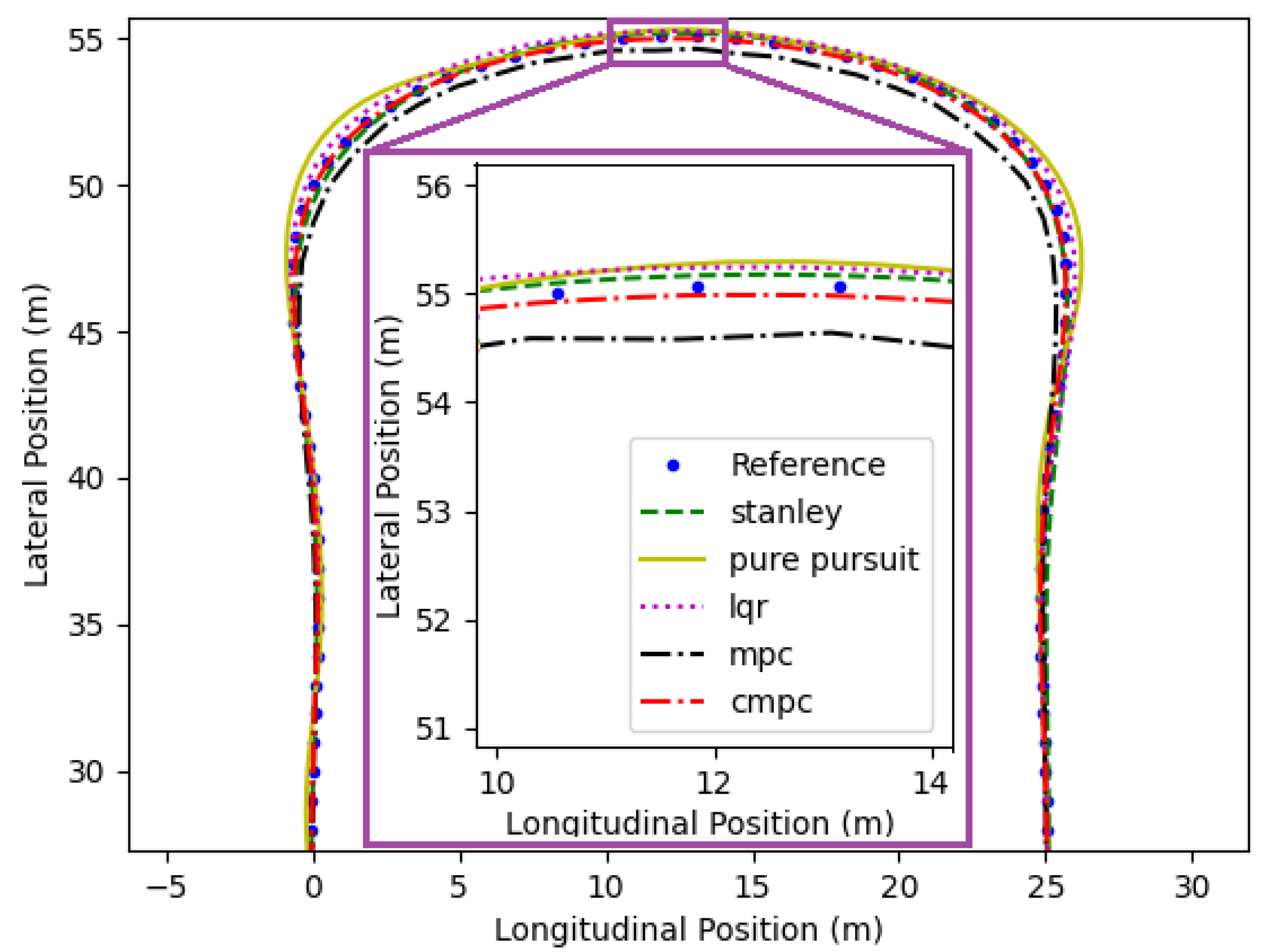

- Test-bed 1:

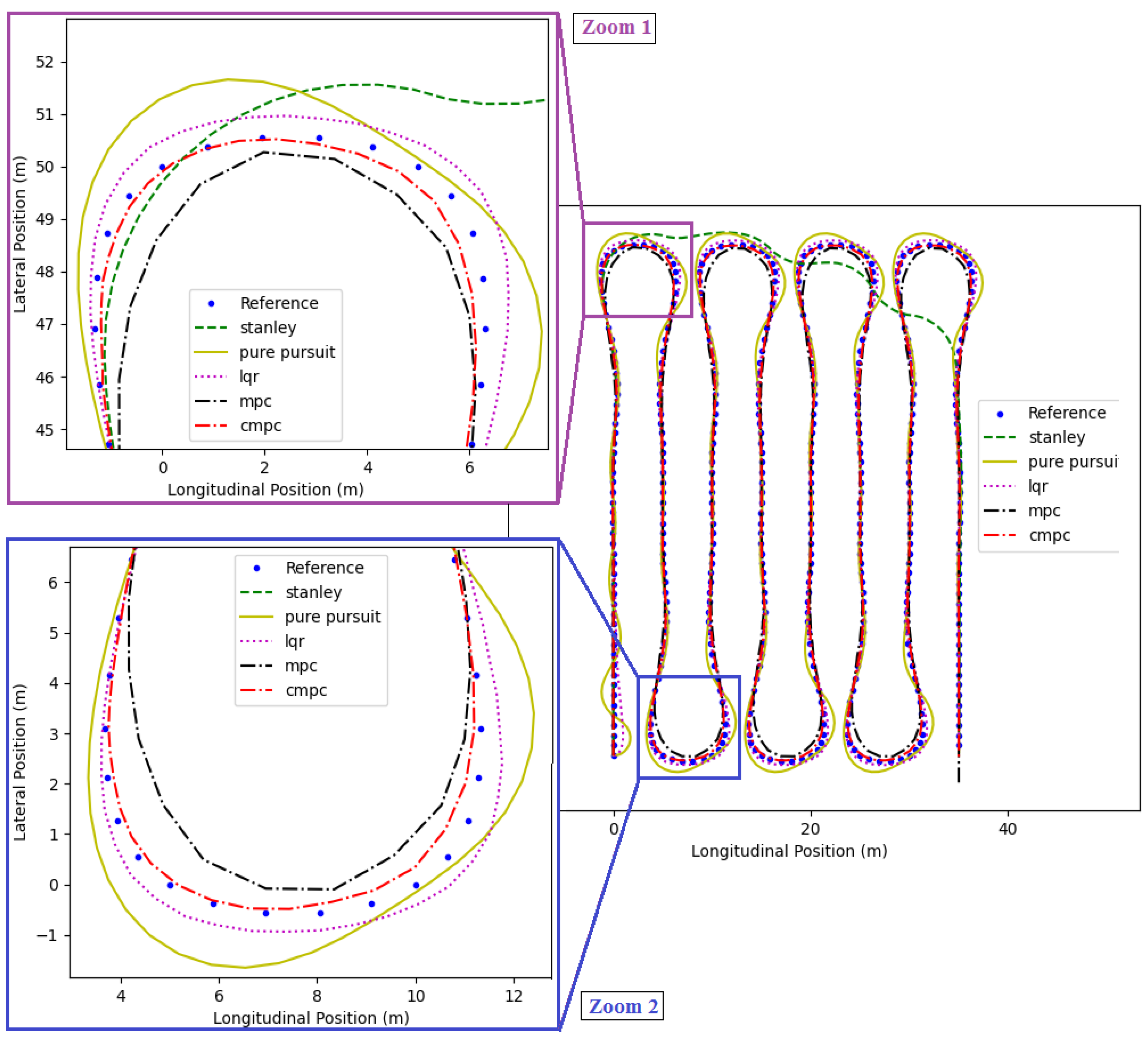

- Test-bed 2:

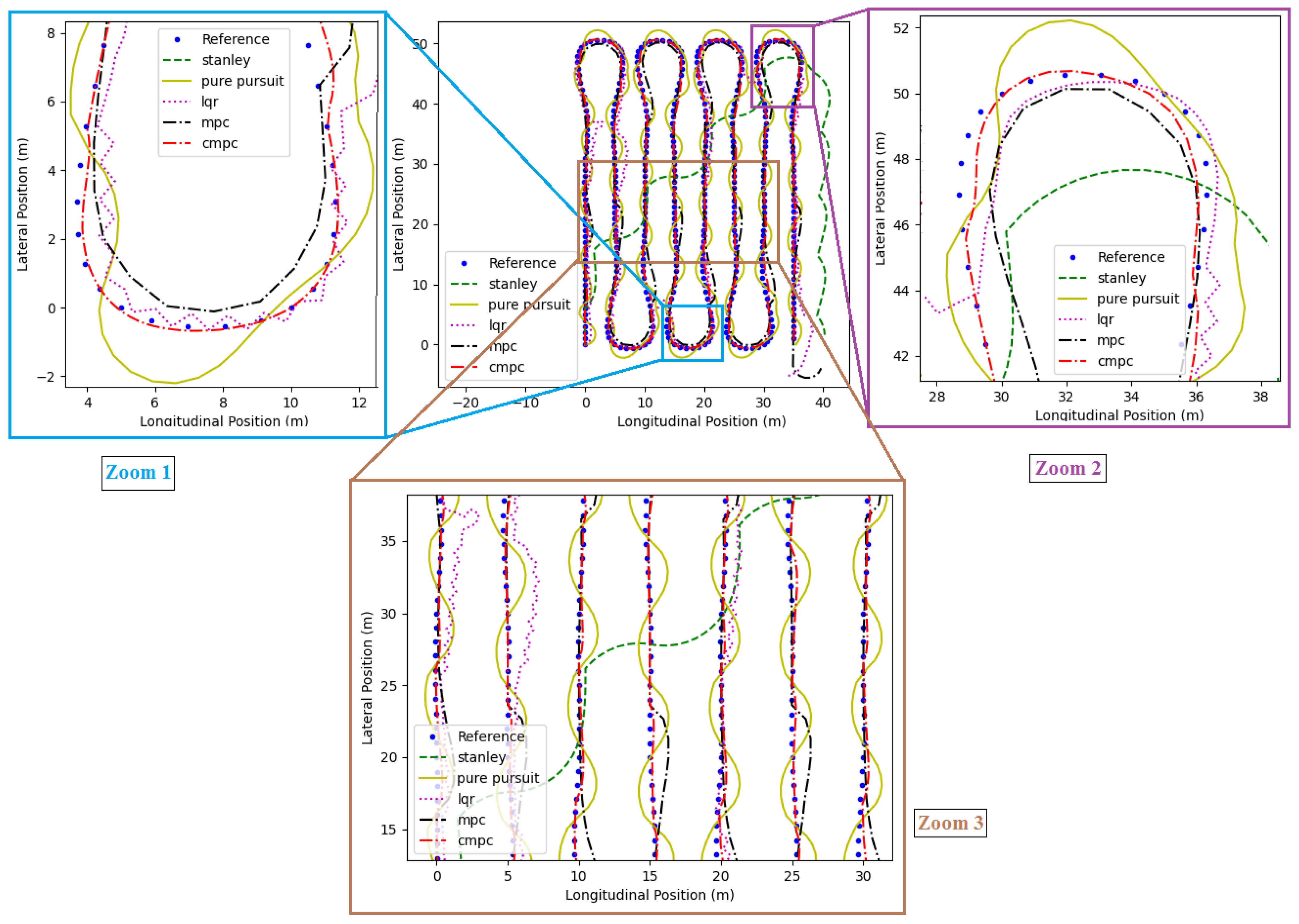

- Test-bed 3:

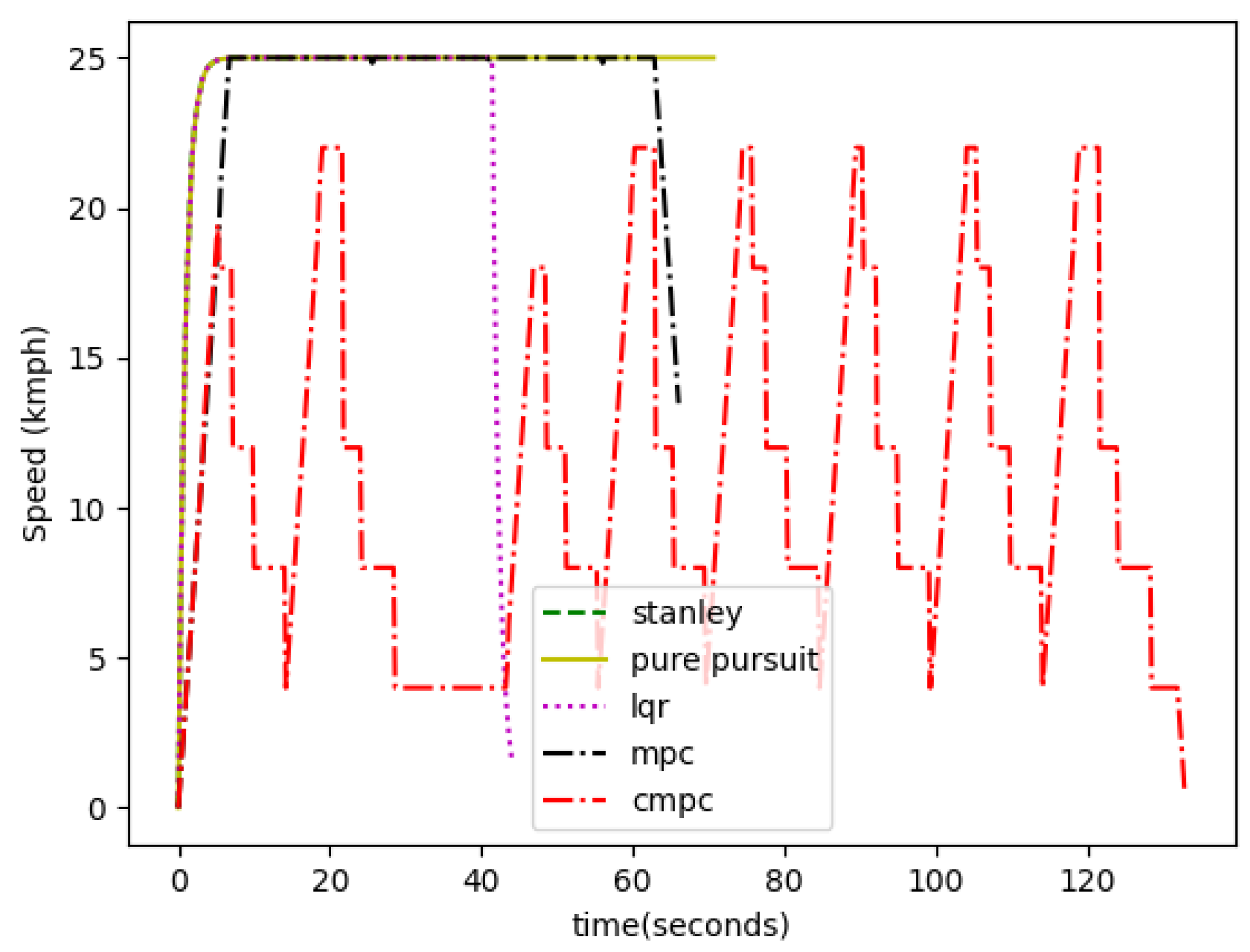

- Test-bed 4:

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Motroni, A.; Buffi, A.; Nepa, P. Forklift Tracking: Industry 4.0 Implementation in Large-Scale Warehouses through UWB Sensor Fusion. Appl. Sci. 2021, 11, 10607.

2. Stetter, R. A Fuzzy Virtual Actuator for Automated Guided Vehicles. Sensors 2020, 20, 4154.

3. Teso-Fz-Betoño, D.; Zulueta, E.; Fernandez-Gamiz, U.; Aramendia, I.; Uriarte, I. A Free Navigation of an AGV to a Non-Static Target with Obstacle Avoidance. Electronics 2019, 8, 159.

4. Ito, S.; Hiratsuka, S.; Ohta, M.; Matsubara, H.; Ogawa, M. Small Imaging Depth LIDAR and DCNN-Based Localization for Automated Guided Vehicle. Sensors 2018, 18, 177.

5. Saputra, R.P.; Rijanto, E. Automatic Guided Vehicles System and Its Coordination Control for Containers Terminal Logistics Application. arXiv 2021, arXiv:2104.08331.

6. Gonzalez-de-Santos, P.; Fernández, R.; Sepúlveda, D.; Navas, E.; Emmi, L.; Armada, M. Field Robots for Intelligent Farms—Inhering Features from Industry. Agronomy 2020, 10, 1638.

7. Gu, Y.; Li, Z.; Zhang, Z.; Li, J.; Chen, L. Path Tracking Control of Field Information-Collecting Robot Based on Improved Convolutional Neural Network Algorithm. Sensors 2020, 20, 797.

8. Gul, F.; Rahiman, W.; Nazli Alhady, S.S. A Comprehensive Study for Robot Navigation Techniques. Cogent Eng. 2019, 6, 1632046.

9. Moshayedi, A.J.; Jinsong, L.; Liao, L. AGV (automated guided vehicle) robot: Mission and obstacles in design and performance. J. Simul. Anal. Nov. Technol. Mech. Eng. 2019, 12, 5–18.

10. Bechtel, M.G.; Mcellhiney, E.; Kim, M.; Yun, H. DeepPicar: A Low-Cost Deep Neural Network-Based Autonomous Car. In Proceedings of the 2018 IEEE 24th International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA), Hokkaido, Japan, 28–31 August 2018.

11. Wang, S.; Chen, X.; Ding, G.; Li, Y.; Xu, W.; Zhao, Q.; Gong, Y.; Song, Q. A Lightweight Localization Strategy for LiDAR-Guided Autonomous Robots with Artificial Landmarks. Sensors 2021, 21, 4479.

12. Zhang, S.; Wang, Y.; Zhu, Z.; Li, Z.; Du, Y.; Mao, E. Tractor Path Tracking Control Based on Binocular Vision. Inf. Process. Agric. 2018, 5, 422–432.

13. Akhshirsh, G.S.; Al-Salihi, N.K.; Hamid, O.H. A Cost-Effective GPS-Aided Autonomous Guided Vehicle for Global Path Planning. Bull. Electr. Eng. Inform. 2021, 10, 650–657.

14. Hodson, T.O. Root-mean-square error (RMSE) or mean absolute error (MAE): When to use them or not. Geosci. Model Dev. 2022, 15, 5481–5487.

15. Aghi, D.; Cerrato, S.; Mazzia, V.; Chiaberge, M. Deep Semantic Segmentation at the Edge for Autonomous Navigation in Vineyard Rows. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021.

16. Puppim de Oliveira, D.; Pereira Neves Dos Reis, W.; Morandin Junior, O. A Qualitative Analysis of a USB Camera for AGV Control. Sensors 2019, 19, 4111.

17. Nguyen, P.T.-T.; Yan, S.-W.; Liao, J.-F.; Kuo, C.-H. Autonomous Mobile Robot Navigation in Sparse LiDAR Feature Environments. Appl. Sci. 2021, 11, 5963.

18. Wu, X.; Sun, C.; Zou, T.; Xiao, H.; Wang, L.; Zhai, J. Intelligent Path Recognition against Image Noises for Vision Guidance of Automated Guided Vehicles in a Complex Workspace. Appl. Sci. 2019, 9, 4108.

19. Han, J.-H.; Kim, H.-W. Lane Detection Algorithm Using LRF for Autonomous Navigation of Mobile Robot. Appl. Sci. 2021, 11, 6229.

20. Bi, S.; Yuan, C.; Liu, C.; Cheng, J.; Wang, W.; Cai, Y. A Survey of Low-Cost 3D Laser Scanning Technology. Appl. Sci. 2021, 11, 3938.

21. Medina Sánchez, C.; Zella, M.; Capitán, J.; Marrón, P.J. From Perception to Navigation in Environments with Persons: An Indoor Evaluation of the State of the Art. Sensors 2022, 22, 1191.

22. Suzen, A.A.; Duman, B.; Sen, B. Benchmark Analysis of Jetson TX2, Jetson Nano and Raspberry PI Using Deep-CNN. In Proceedings of the 2020 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 26–28 June 2020.

23. Bengochea-Guevara, J.M.; Conesa-Muñoz, J.; Andújar, D.; Ribeiro, A. Merge Fuzzy Visual Servoing and GPS-Based Planning to Obtain a Proper Navigation Behavior for a Small Crop-Inspection Robot. Sensors 2016, 16, 276.

24. Liu, Z.; Zheng, W.; Wang, N.; Lyu, Z.; Zhang, W. Trajectory Tracking Control of Agricultural Vehicles Based on Disturbance Test. Int. J. Agric. Biol. Eng. 2020, 13, 138–145.

25. Han, J.-H.; Park, C.-H.; Kwon, J.H.; Lee, J.; Kim, T.S.; Jang, Y.Y. Performance Evaluation of Autonomous Driving Control Algorithm for a Crawler-Type Agricultural Vehicle Based on Low-Cost Multi-Sensor Fusion Positioning. Appl. Sci. 2020, 10, 4667.

26. Li, Z.; Chitturi, M.V.; Bill, A.R.; Noyce, D.A. Automated Identification and Extraction of Horizontal Curve Information from Geographic Information System Roadway Maps. Transp. Res. Rec. 2012, 2291, 80–92.

27. Bíl, M.; Andrášik, R.; Sedoník, J.; Cícha, V. ROCA-An ArcGIS toolbox for road alignment identification and horizontal curve radii computation. PLoS ONE 2018, 13, e0208407.

28. Ge, J.; Pei, H.; Yao, D.; Zhang, Y. A Robust Path Tracking Algorithm for Connected and Automated Vehicles under I-VICS. Transp. Res. Interdiscip. Perspect. 2021, 9, 100314.

29. Wang, L.; Zhai, Z.; Zhu, Z.; Mao, E. Path Tracking Control of an Autonomous Tractor Using Improved Stanley Controller Optimized with Multiple-Population Genetic Algorithm. Actuators 2022, 11, 22.

30. Yang, T.; Bai, Z.; Li, Z.; Feng, N.; Chen, L. Intelligent Vehicle Lateral Control Method Based on Feedforward + Predictive LQR Algorithm. Actuators 2021, 10, 228.

31. Chen, J.; Shi, Y. Stochastic Model Predictive Control Framework for Resilient Cyber-Physical Systems: Review and Perspectives. Philos. Trans. A Math. Phys. Eng. Sci. 2021, 379, 20200371.

32. Huang, Z.; Li, H.; Li, W.; Liu, J.; Huang, C.; Yang, Z.; Fang, W. A New Trajectory Tracking Algorithm for Autonomous Vehicles Based on Model Predictive Control. Sensors 2021, 21, 7165.

33. Jeong, Y.; Yim, S. Model Predictive Control-Based Integrated Path Tracking and Velocity Control for Autonomous Vehicle with Four-Wheel Independent Steering and Driving. Electronics 2021, 10, 2812.

34. Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; MIT Press: London, UK, 2005; ISBN 9780262201629.

35. IRC:73-1980; Geometric Design Standards for Rural (Non-Urban) Highways. Indian Roads Congress: New Delhi, India, 1990.

36. IRC:38-1988; Guidelines for Design of Horizontal Curves for Highways and Design Tables. Indian Roads Congress: New Delhi, India, 1989.

37. Available online: https://github.com/AtsushiSakai/PythonRobotics (accessed on 17 December 2021).

38. Lannelongue, L.; Grealey, J.; Inouye, M. Green Algorithms: Quantifying the Carbon Footprint of Computation. Adv. Sci. 2021, 8, 2100707.

39. Dev, K.; Xiao, Y.; Gadekallu, T.R.; Corchado, J.M.; Han, G.; Magarini, M. Guest Editorial Special Issue on Green Communication and Networking for Connected and Autonomous Vehicles. IEEE Trans. Green Commun. Netw. 2022, 6, 1260–1266.

40. Dulebenets, M. A diploid evolutionary algorithm for sustainable truck scheduling at a cross-docking facility. Sustainability 2018, 10, 1333.

41. Kavoosi, M.; Dulebenets, M.A.; Abioye, O.F.; Pasha, J.; Wang, H.; Chi, H. An augmented self-adaptive parameter control in evolutionary computation: A case study for the berth scheduling problem. Adv. Eng. Inform. 2019, 42, 100972.

42. Dulebenets, M.A. An Adaptive Polyploid Memetic Algorithm for scheduling trucks at a cross-docking terminal. Inf. Sci. 2021, 565, 390–421.

43. Pasha, J.; Nwodu, A.L.; Fathollahi-Fard, A.M.; Tian, G.; Li, Z.; Wang, H.; Dulebenets, M.A. Exact and metaheuristic algorithms for the vehicle routing problem with a factory-in-a-box in multi-objective settings. Adv. Eng. Inform. 2022, 52, 101623.

44. Tripathy, B.K.; Reddy Maddikunta, P.K.; Pham, Q.V.; Gadekallu, T.R.; Dev, K.; Pandya, S.; ElHalawany, B.M. Harris Hawk Optimization: A Survey on Variants and Applications. Comput. Intell. Neurosci. 2022, 2022, 2218594.

45. Ravi, C.; Tigga, A.; Reddy, G.T.; Hakak, S.; Alazab, M. Driver Identification Using Optimized Deep Learning Model in Smart Transportation. ACM Trans. Internet Technol. 2020.

46. Hakak, S.; Gadekallu, T.R.; Ramu, S.P.; Maddikunta, P.K.R.; de Alwis, C.; Liyanage, M. Autonomous Vehicles in 5G and Beyond: A Survey. arXiv 2022, arXiv:2207.10510.

| S.no | Radius of Curve Range | IRC Speed Limit for Vehicles | Declared Speed Limit for AGV | Curve Alert Location |
|---|---|---|---|---|
| 1 | 50–100 m | 20 km/h | 3 km/h | 5 m before the curve starting point |
| 2 | 70–150 m | 25 km/h | 5 km/h | 5 m before the curve starting point |
| 3 | 100–200 m | 30 km/h | 7 km/h | 5 m before the curve starting point |
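The mapping from curve radius to AGV speed limit in the table above can be expressed as a small lookup. The sketch below is illustrative only: the names `CURVE_SPEED_TABLE`, `ALERT_DISTANCE_M`, and `agv_speed_limit_kmh` are hypothetical. The radius ranges in the table overlap (for example, 80 m falls in both the first and second rows), and the source does not say how overlaps are resolved, so the sketch assumes the lowest matching limit applies.

```python
# Rows copied from the table: (min radius m, max radius m,
# IRC vehicle limit km/h, declared AGV limit km/h).
CURVE_SPEED_TABLE = [
    (50.0, 100.0, 20.0, 3.0),
    (70.0, 150.0, 25.0, 5.0),
    (100.0, 200.0, 30.0, 7.0),
]

# The alert point is 5 m before the curve starting point for every row.
ALERT_DISTANCE_M = 5.0


def agv_speed_limit_kmh(radius_m):
    """Return the declared AGV limit for a curve radius, or None if no row matches.

    Assumption: where ranges overlap, the most conservative (lowest) limit wins.
    """
    matches = [
        agv_limit
        for min_r, max_r, _irc_limit, agv_limit in CURVE_SPEED_TABLE
        if min_r <= radius_m <= max_r
    ]
    return min(matches) if matches else None
```

For instance, `agv_speed_limit_kmh(120.0)` matches the second and third rows and returns the lower value, 5.0 km/h. Radii outside 50–200 m return `None`, leaving the caller to decide whether any limit applies.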

| S.no | Origin & Destination | Total Distance of Path | Total No. of Curves Existing | Total No. of Curves Identified | Type 1 Error | Type 2 Error | Noise Corrected | TIIR | Total No. of Curves Identified by Reference [26] | Performance Delay (Milliseconds) | Performance Delay by Reference [27] (Milliseconds) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| a | 12.969096, 79.158385 & 12.968991, 79.158133 | 30 m | 2 | 2 | 0 | 0 | 1 | 0.00 | 3 | 53 | 1245 |
| b | 12.968970, 79.158103 & 12.969291, 79.158617 | 200 m | 3 | 3 | 0 | 1 | 1 | 0.33 | 4 | 355 | 8217 |
| c | 12.968467, 79.160714 & 12.969006, 79.161010 | 100 m | 8 | 8 | 1 (25%) | 4 | 10 | 0.5 | 18 | 177 | 4108 |

| S.no | Mobile Robot Speed Set | Curve Speed Limit | RMSE of Lateral Error (m), MPC | RMSE of Lateral Error (m), C-MPC | RMSE of Longitudinal Error (m), MPC | RMSE of Longitudinal Error (m), C-MPC |
|---|---|---|---|---|---|---|
| a | 7 km/h | 3 km/h | 2.27 | 0.85 | 1.86 | 0.62 |
| b | 15 km/h | 7 km/h | 3.82 | 0.98 | 1.15 | 0.54 |
| c | 10 km/h | 3 km/h | 5.63 | 1.03 | 1.60 | 0.92 |
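The tables report tracking accuracy as the root-mean-square error (RMSE) of the lateral and longitudinal errors. The following Python sketch shows one common way to compute these quantities. It is an assumption rather than the authors' evaluation code: the position error is split into components along and across the reference heading, and the names `tracking_errors` and `rmse` are hypothetical.

```python
import math


def tracking_errors(position, reference, reference_yaw):
    """Split the position error into (lateral, longitudinal) parts.

    Assumption: longitudinal is measured along the reference heading and
    lateral is measured perpendicular to it (positive to the left).
    """
    dx = position[0] - reference[0]
    dy = position[1] - reference[1]
    longitudinal = dx * math.cos(reference_yaw) + dy * math.sin(reference_yaw)
    lateral = -dx * math.sin(reference_yaw) + dy * math.cos(reference_yaw)
    return lateral, longitudinal


def rmse(errors):
    """Root-mean-square of a non-empty sequence of error samples."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))
```

In use, `tracking_errors` would be evaluated at every logged time step against the nearest reference waypoint, and `rmse` would then be applied separately to the lateral and the longitudinal series.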

| S.no | Path Tracking Algorithms | RMSE of Lateral Error (m) | RMSE of Longitudinal Error (m) |
|---|---|---|---|
| 1 | Pure Pursuit | 0.22 | 0.37 |
| 2 | Stanley | 1.98 | 4.57 |
| 3 | LQR | 0.07 | 0.10 |
| 4 | MPC | 1.49 | 0.41 |
| 5 | Proposed C-MPC | 0.12 | 0.30 |

| S.no | Path Tracking Algorithms | RMSE of Lateral Error (m) | RMSE of Longitudinal Error (m) |
|---|---|---|---|
| 1 | Pure Pursuit | 0.23 | 0.37 |
| 2 | Stanley | 0.84 | 1.26 |
| 3 | LQR | 0.15 | 0.37 |
| 4 | MPC | 0.32 | 2.78 |
| 5 | Proposed C-MPC | 0.06 | 0.36 |

| S.no | Path Tracking Algorithms | RMSE of Lateral Error (m) | RMSE of Longitudinal Error (m) |
|---|---|---|---|
| 1 | Pure Pursuit | 0.21 | 0.81 |
| 2 | Stanley (failed) | 1.37 | 4.01 |
| 3 | LQR | 0.08 | 0.73 |
| 4 | MPC | 0.30 | 1.80 |
| 5 | Proposed C-MPC | 0.07 | 0.62 |

| S.no | Path Tracking Algorithms | RMSE of Lateral Error (m) | RMSE of Longitudinal Error (m) |
|---|---|---|---|
| 1 | Pure Pursuit | 1.76 | 1.82 |
| 2 | Stanley (failed) | 11.71 | 3.44 |
| 3 | LQR | 0.84 | 1.51 |
| 4 | MPC | 0.71 | 2.77 |
| 5 | Proposed C-MPC | 0.09 | 0.69 |

| S.no | Methods | Navigation Type | Purpose | Curvature Method | Cost of Navigation |
|---|---|---|---|---|---|
| 1 | Ref. [19] | Vision | On-road navigation | Image processing-based road edge features are extracted and calculated for a curvature or straight line. AGV moves at a constant speed. | High (embedded board and camera, 3D laser beam is high cost) [19,20,22] |
| 2 | Ref. [16] | Tape and vision | In-door navigation | Image processing-based tape features are extracted and calculated for a curvature or straight line. AGV moves at a constant speed. | Moderate (depends on embedded board, camera, and amount of floor fixing tape required) [20,22] |
| 3 | Ref. [23] | Vision | Agricultural navigation | Image processing-based crop row features are extracted and calculated for a curvature or straight line. AGV moves at a constant speed. | Moderate (depends on embedded board, camera, and other sensors cost) [20,22] |
| 4 | Proposed model | Google map data | On-road, in-door, and agricultural navigation | The proposed curve finding method extracts the curve from generated trajectory (path) and reduces mobile robot speed before reaching the curve starting point. AGV moves at variable speed. | Low (using low-cost embedded board) [22] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Manikandan, S.; Kaliyaperumal, G.; Hakak, S.; Gadekallu, T.R. Curve-Aware Model Predictive Control (C-MPC) Trajectory Tracking for Automated Guided Vehicle (AGV) over On-Road, In-Door, and Agricultural-Land. Sustainability 2022, 14, 12021. https://doi.org/10.3390/su141912021
