Deep Learning Approach for the Detection of Noise Type in Ancient Images

Abstract

1. Introduction

2. Literature Survey

- To remove noise from an image without damaging its content, the type of noise must be detected first.

- The system should also detect the type of noise irrespective of the content of the image.

| Paper | Type of Detection | Technique Used | Dataset | Accuracy (%) |
|---|---|---|---|---|
| [9] | Classification of murals | Multichannel separable network model (MCSN) | China Dunhuang murals | 88.16 |
| [16] | Biological images | Deep learning | Wood boards | 93 |
| [18] | COVID-19 | Deep CNN-LSTM | X-ray images | 99.4 |
| [37] | Living or non-living things | VGG16 | ImageNet | 99.8 |
| [38] | Cloud shape | CNN and FDM | 200 actual photos of real scenes | 94 |
| [39] | Image detection | Two-stage training | USPS, ILSVRC2012, MNIST, SVHN, CIFAR10, CIFAR100 | 98 |
| Proposed System Architecture | Noise type | Wavelet transform and CNN | Ancient mural images | 99.25 |

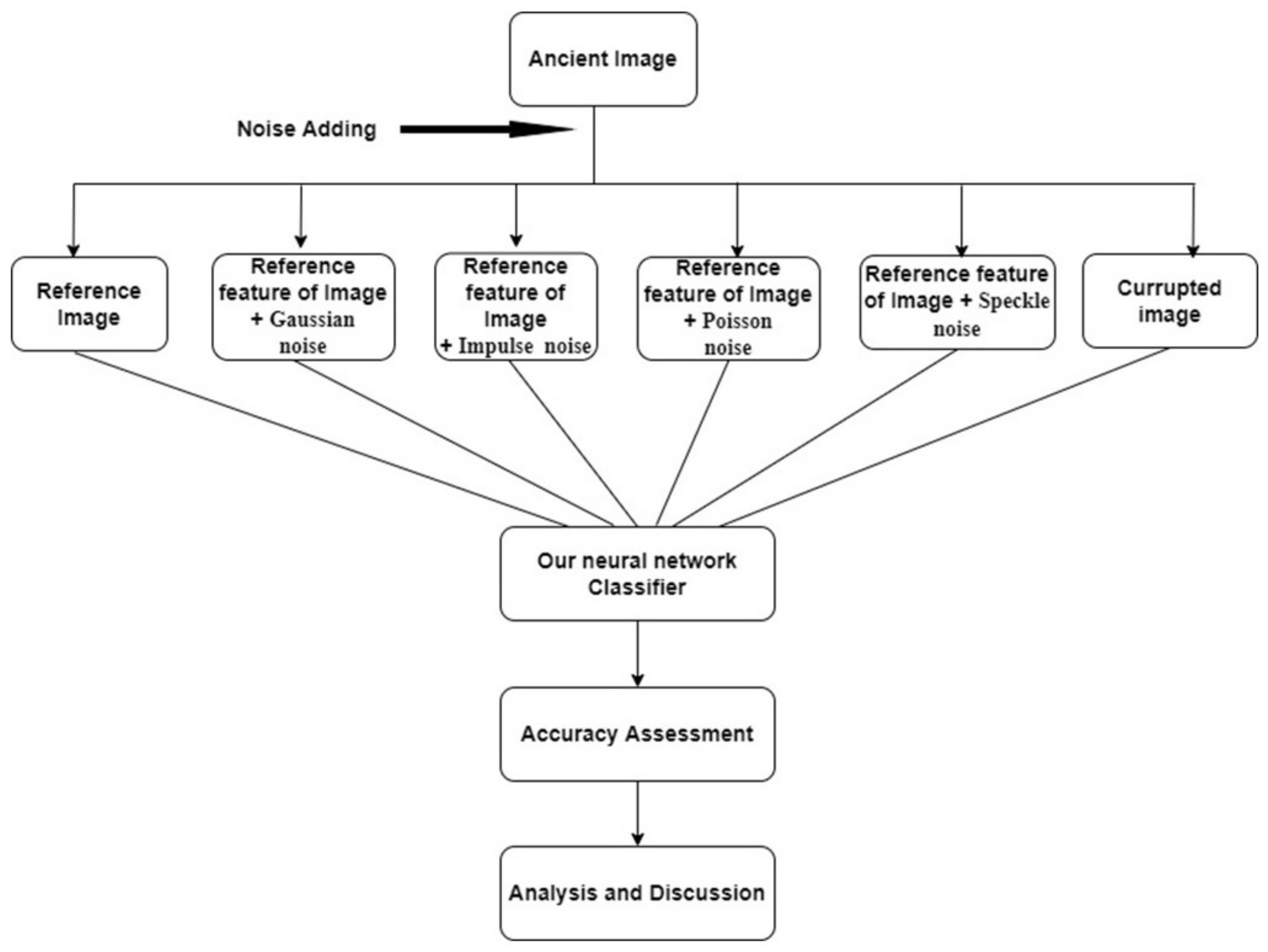

3. Proposed Noise Identification

4. Algorithm Steps and Processes

- Step 1: Acquire ancient images from the dataset.

- Step 2: Decompose each image using the wavelet transform and extract the features [43].

- Step 3: Reduce the dimensionality of the features.

- Step 4: Pass the features to the convolutional neural network.

- Step 5: Flatten the pooled features.

- Step 6: Classify the noise type.
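Steps 2 and 3 can be illustrated with a minimal sketch. It assumes a Haar wavelet (equivalent to db1), a grayscale image stored as nested lists with dimensions divisible by 2 at every level, and a hypothetical feature summary (mean absolute value and mean energy per sub-band). The function names `haar_dwt2`, `band_features`, and `wavelet_features` are illustrative and do not come from the paper, which does not specify its feature set or reduction method.

```python
from typing import List, Tuple

Image = List[List[float]]


def haar_dwt2(img: Image) -> Tuple[Image, Image, Image, Image]:
    """One level of an orthonormal 2D Haar transform.

    Returns four sub-bands, each half the height and width of the input:
    the approximation plus three detail bands.
    """
    rows, cols = len(img), len(img[0])
    if rows % 2 or cols % 2:
        raise ValueError("image height and width must be even")
    approx, row_diff, col_diff, diag = [], [], [], []
    for r in range(0, rows, 2):
        a_row, rd_row, cd_row, dg_row = [], [], [], []
        for c in range(0, cols, 2):
            tl, tr = img[r][c], img[r][c + 1]          # top-left, top-right
            bl, br = img[r + 1][c], img[r + 1][c + 1]  # bottom-left, bottom-right
            a_row.append((tl + tr + bl + br) / 2.0)    # local average (approximation)
            rd_row.append((tl + tr - bl - br) / 2.0)   # top rows minus bottom rows
            cd_row.append((tl - tr + bl - br) / 2.0)   # left columns minus right columns
            dg_row.append((tl - tr - bl + br) / 2.0)   # diagonal difference
        approx.append(a_row)
        row_diff.append(rd_row)
        col_diff.append(cd_row)
        diag.append(dg_row)
    return approx, row_diff, col_diff, diag


def band_features(band: Image) -> List[float]:
    """Summarise a sub-band by two numbers (an assumed, simple reduction)."""
    values = [v for row in band for v in row]
    n = len(values)
    mean_abs = sum(abs(v) for v in values) / n
    energy = sum(v * v for v in values) / n
    return [mean_abs, energy]


def wavelet_features(img: Image, levels: int = 2) -> List[float]:
    """Decompose `levels` times and collect per-band summary features."""
    features: List[float] = []
    approx = img
    for _ in range(levels):
        approx, row_diff, col_diff, diag = haar_dwt2(approx)
        for band in (row_diff, col_diff, diag):
            features.extend(band_features(band))
    features.extend(band_features(approx))  # final approximation band
    return features


if __name__ == "__main__":
    # Hypothetical 4x4 test image: a smooth ramp with one impulse-like outlier.
    image = [[float(r + c) for c in range(4)] for r in range(4)]
    image[1][2] = 255.0
    print(wavelet_features(image, levels=2))
```

Noise tends to concentrate in the detail bands, so their statistics give a compact vector that a classifier such as the CNN in Step 4 could consume. A production pipeline would more likely use a wavelet library and the Daubechies orders reported in the results.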

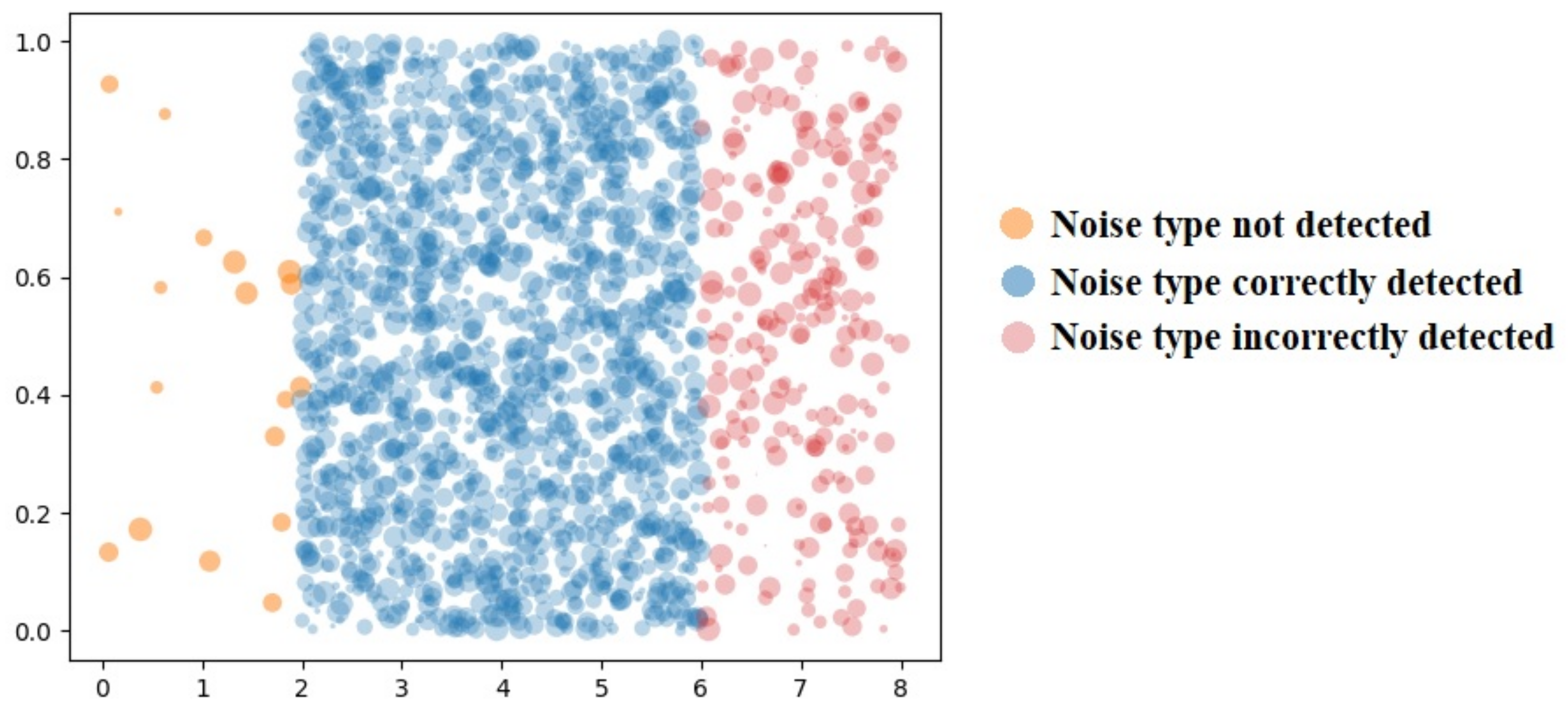

5. Results and Discussion

5.1. Comparative Methods

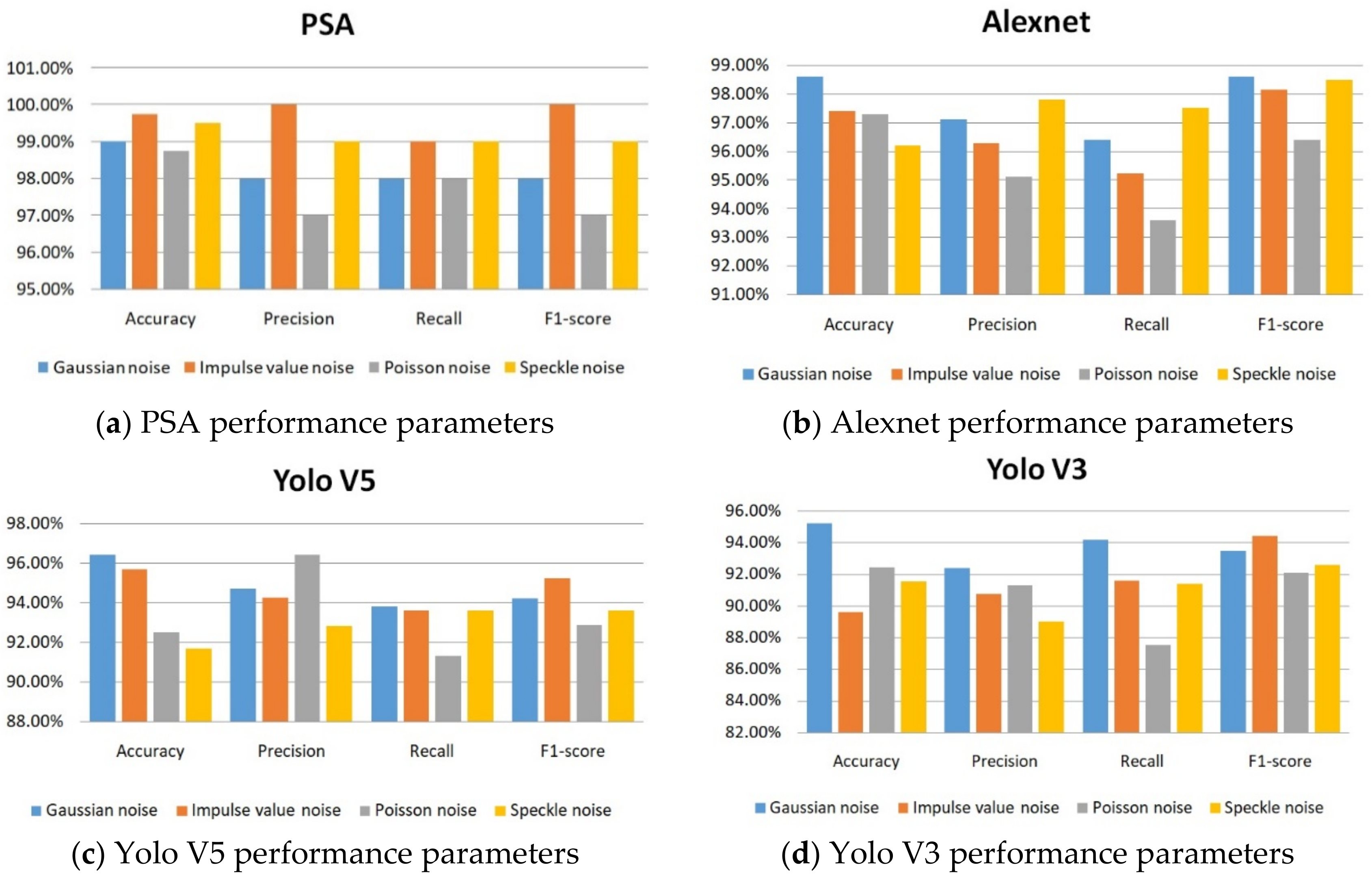

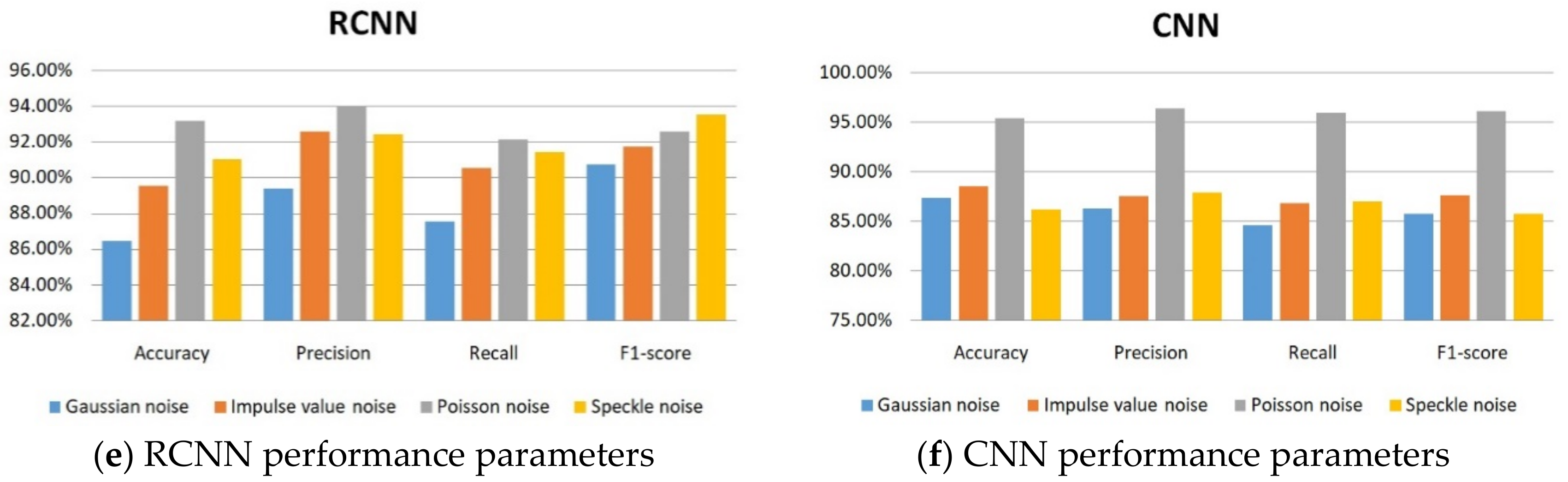

5.2. Comparative Analysis

5.3. Comparative Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Reeves, S.J. Image Restoration: Fundamentals of Image Restoration. In Academic Press Library in Signal Processing; Elsevier: Amsterdam, The Netherlands, 2014; Volume 4, pp. 165–192.

2. Pawar, P.Y.; Ainapure, B.S. Image Restoration using Recent Techniques: A Survey. Des. Eng. 2021, 7, 13050–13062.

3. Maru, M.; Parikh, M.C. Image Restoration Techniques: A Survey. Int. J. Comput. Appl. 2017, 160, 15–19.

4. Cao, J.; Zhang, Z.; Zhao, A.; Cui, H.; Zhang, Q. Ancient mural restoration based on a modified generative adversarial network. Herit. Sci. 2020, 8, 7.

5. Zeng, Y.; Gong, Y.; Zeng, X. Controllable digital restoration of ancient paintings using convolutional neural network and nearest neighbor. Pattern Recognit. Lett. 2020, 133, 158–164.

6. Li, H. Restoration method of ancient mural image defect information based on neighborhood filtering. J. Comput. Methods Sci. Eng. 2021, 21, 747–762.

7. Bayerová, T. Buddhist Wall Paintings at Nako Monastery, North India: Changing of the Technology throughout Centuries. Stud. Conserv. 2018, 63, 171–188.

8. Mol, V.R.; Maheswari, P.U. The digital reconstruction of degraded ancient temple murals using dynamic mask generation and an extended exemplar-based region-filling algorithm. Herit. Sci. 2021, 9, 137.

9. Cao, J.; Jia, Y.; Chen, H.; Yan, M.; Chen, Z. Ancient mural classification methods based on a multichannel separable network. Herit. Sci. 2021, 9, 88.

10. Cao, J.; Li, Y.; Zhang, Q.; Cui, H. Restoration of an ancient temple mural by a local search algorithm of an adaptive sample block. Herit. Sci. 2019, 7, 39.

11. Boyat, A.K.; Joshi, B.K. A review paper: Noise models in digital image processing. arXiv 2015, arXiv:1505.03489.

12. Owotogbe, J.S.; Ibiyemi, T.S.; Adu, B.A. A comprehensive review on various types of noise in image processing. Int. J. Sci. Eng. Res. 2019, 10, 388–393.

13. Kaur, S. Noise types and various removal techniques. Int. J. Adv. Res. Electron. Commun. Eng. (IJARECE) 2015, 4, 226–230.

14. Halse, M.M.; Puranik, S.V. A Review Paper: Study of Various Types of Noises in Digital Images. Int. J. Eng. Trends Technol. (IJETT) 2018.

15. Karibasappa, K.G.; Hiremath, S.; Karibasappa, K. Neural network based noise identification in digital images. Assoc. Comput. Electron. Electr. Eng. Int. J. Netw. Secur. 2011, 2, 28–31.

16. Affonso, C.; Rossi, A.L.D.; Vieira, F.H.A.; de Leon Ferreira, A.C.P. Deep learning for biological image classification. Expert Syst. Appl. 2017, 85, 114–122.

17. Chai, J.; Zeng, H.; Li, A.; Ngai, E.W. Deep learning in computer vision: A critical review of emerging techniques and application scenarios. Mach. Learn. Appl. 2021, 6, 100134.

18. Islam, Z.; Islam, M.; Asraf, A. A combined deep CNN-LSTM network for the detection of novel coronavirus (COVID-19) using X-ray images. Inform. Med. Unlocked 2020, 20, 100412.

19. Wang, P.; Fan, E.; Wang, P. Comparative analysis of image classification algorithms based on traditional machine learning and deep learning. Pattern Recognit. Lett. 2021, 141, 61–67.

20. Wang, Q.; Ma, J.; Yu, S.; Tan, L. Noise Detection and image denoising based on fractional calculus. Chaos Solitons Fractals 2020, 131, 109463.

21. Liufu, Y.; Jin, L.; Xu, J.; Xiao, X.; Fu, D. Reformative noise-immune neural network for equality-constrained optimization applied to image target detection. IEEE Trans. Emerg. Top. Comput. 2021, 10, 973–984.

22. Yadav, K.; Mohan, D.; Parihar, A.S. Image Detection in Noisy Images. In Proceedings of the 2021 5th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 6–8 May 2021; IEEE: New York, NY, USA, 2021; pp. 917–923.

23. Li, K.; Zhou, W.; Li, H.; Anastasio, M.A. Assessing the impact of deep neural network-based image denoising on binary signal detection tasks. IEEE Trans. Med. Imaging 2021, 40, 2295–2305.

24. Hellassa, S.; Souag-Gamane, D. Improving a stochastic multi-site generation model of daily rainfall using discrete wavelet de-noising: A case study to a semi-arid region. Arab. J. Geosci. 2019, 12, 53.

25. Xia, H.; Montresor, S.; Picart, P.; Guo, R.; Li, J. Comparative analysis for combination of unwrapping and de-noising of phase data with high speckle decorrelation noise. Opt. Lasers Eng. 2018, 107, 71–77.

26. Pimpalkhute, V.A.; Page, R.; Kothari, A.; Bhurchandi, K.M.; Kamble, V.M. Digital image noise estimation using DWT coefficients. IEEE Trans. Image Processing 2021, 30, 1962–1972.

27. Langampol, K.; Wasayangkool, K.; Srisomboon, K.; Lee, W. Applied Switching Bilateral Filter for Color Images under Mixed Noise. In Proceedings of the 2021 9th International Electrical Engineering Congress (iEECON), Pattaya, Thailand, 10–12 March 2021; IEEE: New York, NY, USA, 2021; pp. 424–427.

28. Singh, A.; Sethi, G.; Kalra, G.S. Spatially adaptive image denoising via enhanced noise detection method for grayscale and color images. IEEE Access 2020, 8, 112985–113002.

29. Gil Sierra, D.G.; Sogamoso, K.V.A.; Cuchango, H.E.E. Integration of an adaptive cellular automaton and a cellular neural network for the impulsive noise suppression and edge detection in digital images. In Proceedings of the 2019 IEEE Colombian Conference on Applications in Computational Intelligence (ColCACI), Barranquilla, Colombia, 5–7 June 2019; IEEE: New York, NY, USA, 2019; pp. 1–6.

30. Joshi, P.; Prakash, S. Image quality assessment based on noise detection. In Proceedings of the 2014 International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 20–21 February 2014; IEEE: New York, NY, USA, 2014; pp. 755–759.

31. Maragatham, G.; Roomi, S.M.; Vasuki, P. Noise Detection in Images using Moments. Res. J. Appl. Sci. Eng. Technol. 2015, 10, 307–314.

32. Santhanam, T.; Radhika, S. A Novel Approach to Classify Noises in Images Using Artificial Neural Network. 2010. Available online: https://www.researchgate.net/publication/47554429_A_Novel_Approach_to_Classify_Noises_in_Images_Using_Artificial_Neural_Network (accessed on 22 July 2022).

33. Karibasappa, K.G.; Karibasappa, K. AI based automated identification and estimation of noise in digital images. In Advances in Intelligent Informatics; Springer: Cham, Switzerland, 2015; pp. 49–60.

34. Khaw, H.Y.; Soon, F.C.; Chuah, J.H.; Chow, C. Image noise types recognition using convolutional neural network with principal components analysis. IET Image Process. 2017, 11, 1238–1245.

35. Hiremath, S.; Rani, A.S. A Concise Report on Image Types, Image File Format and Noise Model for Image Preprocessing. 2020. Available online: https://www.irjet.net/archives/V7/i8/IRJET-V7I8852.pdf (accessed on 22 July 2022).

36. Karimi, D.; Dou, H.; Warfield, S.K.; Gholipour, A. Deep learning with noisy labels: Exploring techniques and remedies in medical image analysis. Med. Image Anal. 2020, 65, 101759.

37. Tiwari, V.; Pandey, C.; Dwivedi, A.; Yadav, V. Image classification using deep neural network. In Proceedings of the 2020 2nd International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), Greater Noida, India, 18–19 December 2020; IEEE: New York, NY, USA, 2020; pp. 730–733.

38. Zhao, M.; Chang, C.H.; Xie, W.; Xie, Z.; Hu, J. Cloud shape classification system based on multi-channel CNN and improved FDM. IEEE Access 2020, 8, 44111–44124.

39. Zheng, Q.; Yang, M.; Yang, J.; Zhang, Q.; Zhang, X. Improvement of generalization ability of deep CNN via implicit regularization in two-stage training process. IEEE Access 2018, 6, 15844–15869.

40. Zheng, Q.; Zhao, P.; Li, Y.; Wang, H.; Yang, Y. Spectrum interference-based two-level data augmentation method in deep learning for automatic modulation classification. Neural Comput. Appl. 2021, 33, 7723–7745.

41. Ladkat, A.S.; Date, A.A.; Inamdar, S.S. Development and comparison of serial and parallel image processing algorithms. In Proceedings of the 2016 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–27 August 2016; pp. 1–4.

42. Boonprong, S.; Cao, C.; Chen, W.; Ni, X.; Xu, M.; Acharya, B.K. The classification of noise-afflicted remotely sensed data using three machine-learning techniques: Effect of different levels and types of noise on accuracy. ISPRS Int. J. Geo-Inf. 2018, 7, 274.

43. Subashini, P.; Bharathi, P.T. Automatic noise identification in images using statistical features. Int. J. Comput. Sci. Technol. 2011, 2, 467–471.

44. Masood, S.; Jaffar, M.A.; Hussain, A. Noise Type Identification Using Machine Learning. 2014. Available online: https://pdfs.semanticscholar.org/6049/ee6606315cb6bb8f4db26cd5d1da5e1fb873.pdf (accessed on 22 July 2022).

45. Krishnamoorthy, T.V.; Reddy, G.U. Noise detection using higher order statistical method for satellite images. Int. J. Electron. Eng. Res. 2017, 9, 29–36.

46. Mutlag, W.K.; Ali, S.K.; Aydam, Z.M.; Taher, B.H. Feature extraction methods: A review. J. Phys. Conf. Ser. 2020, 1591, 012028.

47. Powers, D.M.W. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

48. Sasaki, Y. The truth of the F-measure. Teach Tutor Mater 2007, 1, 1–5.

49. Vakili, M.; Ghamsari, M.; Rezaei, M. Performance analysis and comparison of machine and deep learning algorithms for IoT data classification. arXiv 2020, arXiv:2001.09636.

50. AlZoman, R.; Alenazi, M. A comparative study of traffic classification techniques for smart city networks. Sensors 2021, 21, 4677.

51. Li, W.; Liu, H.; Wang, Y.; Li, Z.; Jia, Y.; Gui, G. Deep Learning-Based Classification Methods for Remote Sensing Images in Urban Built-Up Areas. IEEE Access 2019, 7, 36274–36284.

| Abbreviation | Definition |
|---|---|
| PSA | Proposed System Architecture |
| DWT | Discrete Wavelet Transform |
| ND | Noise Detection |
| IR | Image Restoration |
| PDF | Probability Density Function |
| ANN | Artificial Neural Network |
| RDN | Residual Dense Network |
| PSNR | Peak Signal-to-Noise Ratio |
| IO | Ideal Observer |
| AMT | Automatic Machine Translation |
| FDM | Frame Difference Method |
| AI | Artificial Intelligence |
| WT | Wavelet Transform |
| RCNN | Region-based Convolutional Neural Network |

| Wavelet Level | db Value | Accuracy (%) |
|---|---|---|
| 2 | 1 | 89.23 |
| 2 | 2 | 92.34 |
| 2 | 3 | 95.23 |
| 2 | 4 | 93.12 |
| 2 | 5 | 92.59 |
| 3 | 1 | 94.93 |
| 3 | 2 | 92.34 |
| 3 | 3 | 95.23 |
| 3 | 4 | 97.23 |
| 3 | 5 | 98.93 |
| 4 | 1 | 96.34 |
| 4 | 2 | 95.23 |
| 4 | 3 | 97.52 |
| 4 | 4 | 98.29 |
| 4 | 5 | 97.42 |

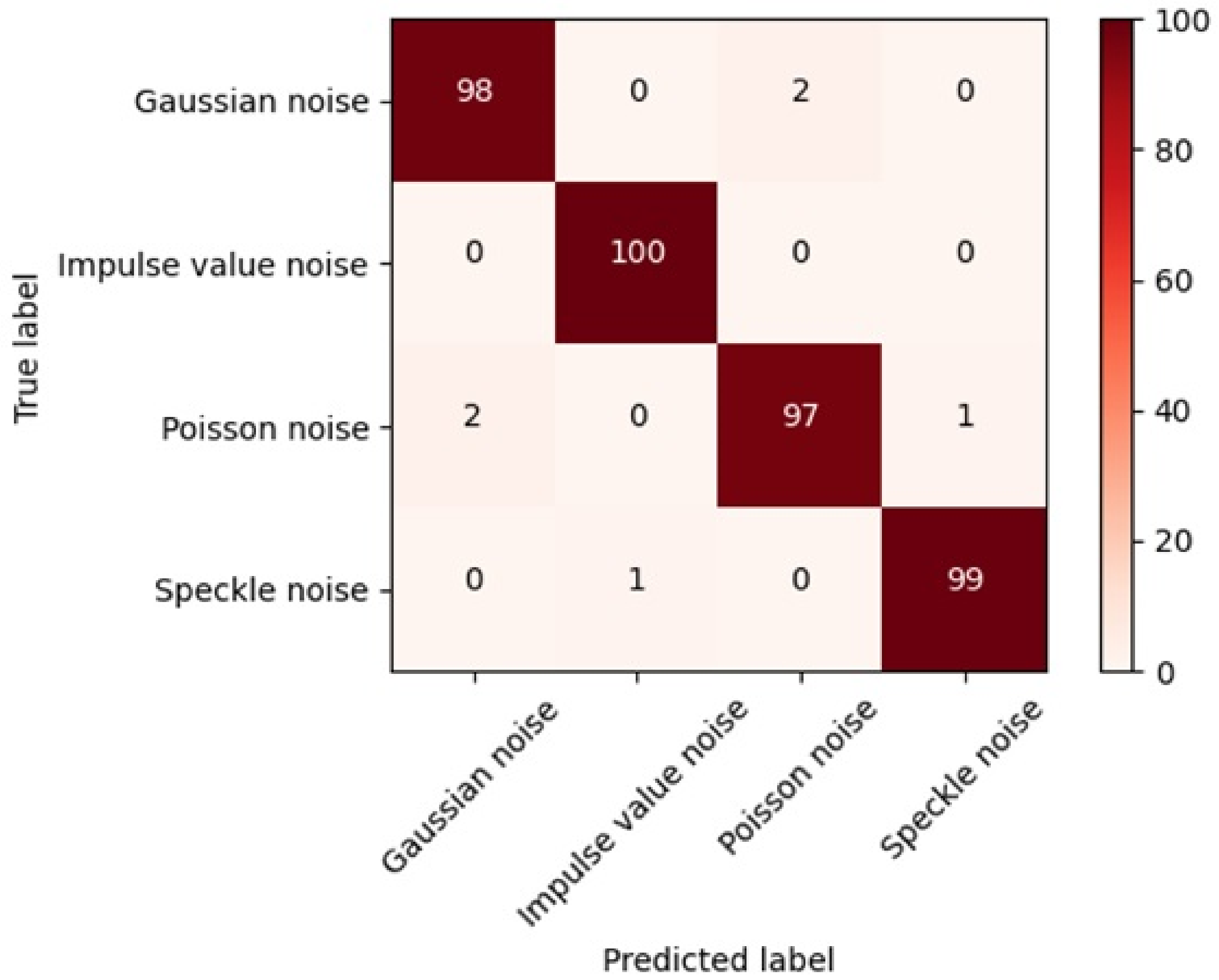

| Class Name | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Poisson noise | 98.75% | 97% | 98% | 98% |
| Speckle noise | 99.50% | 99% | 99% | 100% |
| Gaussian noise | 99% | 98% | 98% | 97% |
| Impulse value noise | 99.75% | 100% | 99% | 99% |
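Per-class figures of this kind are typically derived from a confusion matrix. The sketch below shows the standard definitions, assuming one-vs-rest accuracy per class. The function name `per_class_metrics` and the counts in the example matrix are hypothetical and are not the paper's data.

```python
from typing import Dict, List


def per_class_metrics(confusion: List[List[int]],
                      labels: List[str]) -> Dict[str, Dict[str, float]]:
    """Compute one-vs-rest accuracy, precision, recall and F1 for each class.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    total = sum(sum(row) for row in confusion)
    metrics: Dict[str, Dict[str, float]] = {}
    for i, label in enumerate(labels):
        tp = confusion[i][i]
        fn = sum(confusion[i]) - tp                  # true class i, predicted otherwise
        fp = sum(row[i] for row in confusion) - tp   # predicted i, true class differs
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # F1 is the harmonic mean of precision and recall.
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[label] = {
            "accuracy": (tp + tn) / total,
            "precision": precision,
            "recall": recall,
            "f1": f1,
        }
    return metrics


if __name__ == "__main__":
    # Hypothetical counts for illustration only (100 test images per class).
    labels = ["Poisson", "Speckle", "Gaussian", "Impulse"]
    confusion = [
        [97, 1, 2, 0],
        [0, 99, 1, 0],
        [3, 0, 97, 0],
        [0, 1, 0, 99],
    ]
    for name, values in per_class_metrics(confusion, labels).items():
        print(name, {k: round(v, 4) for k, v in values.items()})
```

Because F1 is a harmonic mean, it always lies between precision and recall, which gives a quick sanity check on any reported row.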

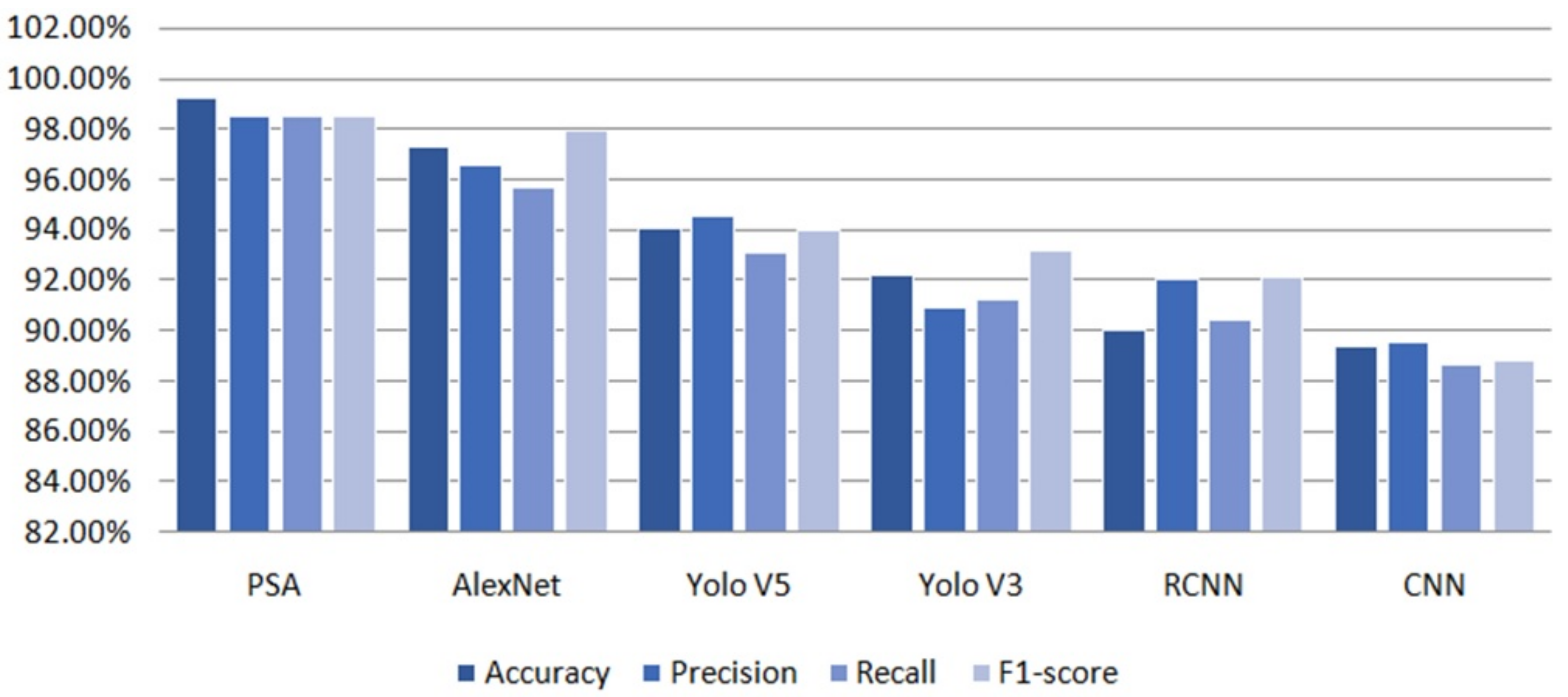

| Algorithm | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| PSA | 99.25% | 98.50% | 98.50% | 98.50% |
| AlexNet | 97.30% | 96.60% | 95.70% | 97.93% |
| Yolo V5 | 94.09% | 94.58% | 93.10% | 94% |
| Yolo V3 | 92.24% | 90.88% | 91.20% | 93.17% |
| RCNN | 90.03% | 92.08% | 90.39% | 92.13% |
| CNN | 89.39% | 89.53% | 88.62% | 88.82% |
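The PSA row is numerically consistent with an unweighted (macro) average of the four per-class rows reported in the per-class table. This is an observation from the published numbers rather than something the source states, and the helper name `macro_average` is illustrative.

```python
from typing import Dict, Tuple

# Per-class PSA values (accuracy, precision, recall, F1) in percent,
# copied from the per-class results table.
PER_CLASS: Dict[str, Tuple[float, float, float, float]] = {
    "Poisson noise": (98.75, 97.0, 98.0, 98.0),
    "Speckle noise": (99.50, 99.0, 99.0, 100.0),
    "Gaussian noise": (99.00, 98.0, 98.0, 97.0),
    "Impulse value noise": (99.75, 100.0, 99.0, 99.0),
}


def macro_average(rows: Dict[str, Tuple[float, ...]]) -> Tuple[float, ...]:
    """Unweighted mean of each metric column across classes."""
    n = len(rows)
    return tuple(sum(column) / n for column in zip(*rows.values()))


if __name__ == "__main__":
    print(macro_average(PER_CLASS))  # (99.25, 98.5, 98.5, 98.5)
```

Macro-averaging weights every noise class equally, which is reasonable when the classes are balanced in the test set.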

| Number of Hidden Layers | Accuracy (%) |
|---|---|
| 1 | 86.23 |
| 2 | 87.43 |
| 3 | 89.56 |
| 4 | 90.88 |
| 5 | 92.66 |
| 6 | 94.09 |
| 7 | 95.69 |
| 8 | 97.29 |
| 9 | 98.93 |
| 10 | 97.29 |
| 11 | 96.23 |

| Algorithm | Class Name | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| PSA | Gaussian noise | 99.00% | 98.00% | 98.00% | 98.00% |
| PSA | Impulse value noise | 99.75% | 100% | 99.00% | 100% |
| PSA | Poisson noise | 98.75% | 97.00% | 98.00% | 97.00% |
| PSA | Speckle noise | 99.50% | 99.00% | 99.00% | 99.00% |
| AlexNet | Gaussian noise | 98.62% | 97.13% | 96.42% | 98.62% |
| AlexNet | Impulse value noise | 97.41% | 96.30% | 95.23% | 98.17% |
| AlexNet | Poisson noise | 97.32% | 95.14% | 93.62% | 96.42% |
| AlexNet | Speckle noise | 96.21% | 97.83% | 97.53% | 98.50% |
| Yolo V5 | Gaussian noise | 96.43% | 94.72% | 93.84% | 94.24% |
| Yolo V5 | Impulse value noise | 95.72% | 94.28% | 93.63% | 95.24% |
| Yolo V5 | Poisson noise | 92.52% | 96.46% | 91.30% | 92.88% |
| Yolo V5 | Speckle noise | 91.69% | 92.84% | 93.61% | 93.62% |
| Yolo V3 | Gaussian noise | 95.24% | 92.43% | 94.20% | 93.53% |
| Yolo V3 | Impulse value noise | 89.63% | 90.75% | 91.64% | 94.45% |
| Yolo V3 | Poisson noise | 92.48% | 91.30% | 87.53% | 92.12% |
| Yolo V3 | Speckle noise | 91.59% | 89.03% | 91.41% | 92.60% |
| RCNN | Gaussian noise | 86.43% | 89.35% | 87.53% | 90.70% |
| RCNN | Impulse value noise | 89.53% | 92.54% | 90.51% | 91.73% |
| RCNN | Poisson noise | 93.15% | 94.02% | 92.09% | 92.56% |
| RCNN | Speckle noise | 91.00% | 92.42% | 91.42% | 93.51% |
| CNN | Gaussian noise | 87.35% | 86.25% | 84.62% | 85.73% |
| CNN | Impulse value noise | 88.53% | 87.53% | 86.83% | 87.62% |
| CNN | Poisson noise | 95.42% | 96.42% | 96.00% | 96.19% |
| CNN | Speckle noise | 86.24% | 87.92% | 87.03% | 85.72% |

| Image Size | PSA Time (Seconds) | PSA Required Memory for Processing (KB) |
|---|---|---|
| 50 KB | 0.0001 | 12 |
| 100 KB | 0.0001 | 16 |
| 200 KB | 0.000294 | 25 |
| 500 KB | 0.000784 | 39 |
| 750 KB | 0.001862 | 48 |
| 1 MB | 0.1274 | 74 |
| 5 MB | 1.0388 | 91 |
| 10 MB | 2.764 | 128 |
| 15 MB | 2.9543 | 381 |

| Configuration: CPU/GPU | Configuration: Processor | Configuration: RAM | Required Time (Seconds) |
|---|---|---|---|
| CPU | i3 | 8 GB | 0.393 |
| CPU | i5 | 8 GB | 0.292 |
| CPU | i7 | 8 GB | 0.286 |
| GPU | Nvidia K80 | 24 GB | 0.003 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pawar, P.; Ainapure, B.; Rashid, M.; Ahmad, N.; Alotaibi, A.; Alshamrani, S.S. Deep Learning Approach for the Detection of Noise Type in Ancient Images. Sustainability 2022, 14, 11786. https://doi.org/10.3390/su141811786