Abstract

Information asymmetry arising from agency problems pervades corporate financial status, financial information, and financial reports, and may seriously jeopardize the sustainability of corporate operations and the proper functioning of capital markets. In this era of big data and artificial intelligence, deep learning is being applied to many different domains. This study examines both the financial data and non-financial data of TWSE/TPEx listed companies in 2001–2019 by sampling a total of 153 companies, consisting of 51 companies reporting financial statement fraud and 102 companies not reporting financial statement fraud. Two deep learning algorithms, the recurrent neural network (RNN) and long short-term memory (LSTM), are used to construct financial statement fraud detection models. The empirical results suggest that the LSTM model outperforms the RNN model in all performance indicators. The LSTM model achieves an accuracy of 94.88%, accuracy being the most frequently used performance indicator.

1. Introduction

The origin of information asymmetry is found in the lemon market theory proposed in 1970 by Akerlof [1], the 2001 Nobel laureate in economics. In a free market, it should be easy to strike a deal between the buyer and the seller with payment and delivery, but this is often not the reality. As the theory posits, information asymmetry about the product is the main reason. Akerlof uses the secondhand car market as an example. Only the seller knows whether the car is good or not, and the buyer has no idea. A buyer who has been cheated would then tell others, and as a result, all buyers would only be willing to make low price offers for secondhand cars so as to mitigate risks and avoid losses. This in turn makes sellers of genuinely good secondhand cars less willing to trade than sellers of a “lemon” (American slang for an inferior product), because the price is simply too low. The overly low price also discourages sellers from providing quality products. Consequently, low-quality goods flood the market and high-quality goods are squeezed out. In the end, the secondhand market shrinks or even disappears. Information asymmetry among market participants causes adverse selection and moral hazard, and bad products drive out good ones, much as bad money drives out good money.

Information asymmetry is commonplace in the capital markets due to agency problems [2]. This is particularly the case with financial statements, in which companies present their operating results, corporate health, and capacity for sustainable development. As senior managers possess information that others do not, it is difficult for shareholders, creditors, employees, and other stakeholders to understand the true picture of the company’s financials until the company sinks into financial distress or declares bankruptcy [3]. This may compromise the public’s confidence in the financial system [4] or even the capital market [5,6]. It runs counter to the health, development, and sustainability of the global capital market [7].

Financial statements are the basic reports that reflect the financial status and operating results of a company. They are also the primary reference for decision making by investors, shareholders, creditors, employees, other stakeholders, and other users of accounting and financial reporting information [8,9,10,11]. The faithful representation of a company’s operating result and financial status in financial statements is indeed a form of corporate social responsibility to society and the capital market.

The Enron scandal in the U.S. in 2001 and the global financial crisis spanning 2008–2009 severely damaged the world economy. One of the main causes of these events was the failure of CPAs and auditors through issuances of inaccurate audit reports [3,12]. There has also been much financial statement fraud in the Taiwan capital market. Examples include PEWC, Procomp Informatics, Infodisc Technology, Summit Technology, and ABIT Computer in 2004, Rebar in 2007, XPEC Entertainment in 2016, and Pharmally and TOPBI in 2020–2021.

According to the 2020 Global Study on Occupational Fraud and Abuse published by the U.S. Association of Certified Fraud Examiners [13], financial statement fraud occurs at a lower frequency than asset misappropriation and corruption but causes higher economic losses than other types of fraud. The integration of the international capital markets and the complexity of today’s economic models have given rise to a growing variety of fraudulent practices. Fraud prevention is becoming more and more difficult. Therefore, the effective reduction and prevention of financial statement fraud are imperative.

CPAs or auditors are sometimes involved in fraud. The Enron scandal in the U.S. in 2001 is the most obvious case in point. Enron Corporation’s external auditor, Arthur Andersen, was headquartered in Chicago and was among the Big Five accounting firms in the world at that time. Following the scandal, parts of Arthur Andersen were acquired by Ernst & Young (EY) and parts by PricewaterhouseCoopers (PwC). Arthur Andersen was accused of applying reckless audit standards due to a conflict of interest arising from the huge fees paid by Enron. In 2000, Arthur Andersen received USD 25 million in audit fees and USD 27 million in consulting fees from Enron. The audit method was questioned because of a lack of appropriate audits of Enron’s revenue recognition and insufficient knowledge in auditing special purpose entities (SPEs), derivatives, and other accounting topics [14].

After the Enron fraud in 2001, the U.S. Congress passed the Sarbanes–Oxley Act (SOX Act) in 2002 to protect the rights of the investing public and the functioning of capital markets. The SOX Act mainly covers the strengthening of regulatory oversight, corporate governance, and CPA independence. It also enhances the legal liability of company CEOs, CFOs, and external CPAs. The SOX Act requires U.S. listed companies to ensure the effectiveness of internal control procedures and requires auditors to attest to management’s internal control assessment. The SOX Act seeks to increase the robustness of financial reporting procedures in order to reduce financial statement fraud.

Under the 2002 SOX Act, the Public Company Accounting Oversight Board (PCAOB) was established as the regulator of CPAs auditing the financial statements of public companies in the U.S. CPAs and auditors are strictly required to avoid willful or material negligence and to refrain from directly or indirectly assisting management in financial statement fraud. Subsequently, the American Institute of Certified Public Accountants (AICPA) published the Statement on Auditing Standards No. 99 (SAS 99): Consideration of Fraud in a Financial Statement Audit, to foster accuracy and reliability of financial reporting and disclosure. Under the regulation of the Sarbanes–Oxley Act and SAS 99, CPAs and auditors must gather adequate and appropriate evidence to determine the issuance of audit reports and opinions on the audited company as a going concern. To deter and avoid fraud in financial statements, the board should also establish an effective management system and fraud prevention mechanism [9].

Taiwan implemented “Consideration of Fraud in a Financial Statement Audit” Auditing Standards Bulletin No. 43 [15] in 2006. Bulletin No. 43 sets out specific regulations on the audits conducted by CPAs and auditors, in order to ensure effective audits on errors in financial reporting and to prevent material and unfaithful representation of financial statements due to fraud. Audit teams should discuss the possibility of management seeking to manipulate earnings and devise audit procedures and measures accordingly. Auditing Standards Bulletin No. 74 [16], effective at the end of 2020, was formulated in reference to the International Standard on Auditing 240 (ISA 240) on the same topic of auditors’ responsibilities relating to fraud in the audit of financial statements. It replaced “Consideration of Fraud in a Financial Statement Audit” Auditing Standards Bulletin No. 43 released in 2006. According to Auditing Standards Bulletin No. 74, financial statement fraud involves willful and unfaithful representation, including deliberate and erroneous amounts, omissions, or negligence in disclosure, so as to deceive users of financial statements. To mislead the users of financial statements and influence the perception of corporate performance and profitability, management may attempt to manipulate earnings and conduct fraudulent financial reporting.

Earnings manipulation typically starts with small and inappropriate adjustments to assumptions or changes to judgement. This escalates to financial statement fraud due to pressure and incentives. Financial statement fraud comes in a number of forms: (1) manipulation, forgery, or tampering of accounting records or relevant evidence; (2) intentional and unfaithful statement or deliberate omission of transactions, events, or other material information; and (3) willful and erroneous use, recognition, measurement, classification, expression, or disclosures of relevant accounting principles. The reasons contributing to fraud can be classified into three categories: (1) incentives or pressure; (2) opportunities and attitudes; and (3) behavioral rationalization. This is the fraud triangle people are familiar with. In addition to audits carried out according to generally accepted auditing principles, auditors have the responsibility to exercise professional skepticism throughout the audit process, take into account the possibility of management breaching control, and perceive fraud that can slip through effective audit procedures for errors.

Article 9 of Auditing Standards Bulletin No. 74 says, “Regarding the failure of the audited parties in compliance (such as committing fraud), auditors may have extra responsibility under laws and regulations or relevant occupational ethics, or the laws and regulations or the relevant occupational ethics may have different requirements from this or other bulletins or outside the scope of this or other bulletins.” Compared with Auditing Standards Bulletin No. 43, Auditing Standards Bulletin No. 74 requires auditors, in the event of identified or suspected fraud, to confirm whether laws or relevant occupational ethics demand that: (1) CPAs should report to appropriate competent authorities; and (2) CPAs should honor responsibilities when it is appropriate to report to appropriate competent authorities. In addition to the definition of fraud characteristics and the responsibilities of preventing and detecting fraud, Auditing Standards Bulletin No. 74 also emphasizes the exercise of professional skepticism by CPAs and auditors and the discussion within audit teams. The exercise of professional skepticism requires auditors to continuously question the information and audit evidence obtained for any material and unfaithful representation due to fraud. This includes consideration of the reliability of the information as audit evidence and identification of the elements in control operations concerning the control over the preparation and maintenance of such information. Due to the unique nature of fraud, the exercise of professional skepticism is particularly important in the consideration of the risks of material and unfaithful representations. Even if experience tells auditors that management and governance units are honest and reliable, there may still be material and unfaithful representations as a result of fraud. Auditors should exercise professional skepticism throughout the entire audit process.

“Audit Reports on Financial Statements” Auditing Standards Bulletin No. 57 [17], effective in 2016, primarily regulates the responsibility of CPAs in forming audit opinions on financial statements and the format and contents of the audit reports issued on the basis of audit results. According to Auditing Standards Bulletin No. 57, CPAs should form audit opinions based on whether the financial statements are prepared, in all material aspects, in accordance with the applicable financial reporting framework. To form audit opinions, CPAs should conclude whether reasonable assurance has been obtained that the financial statements are free of material unfaithful representations due to fraud or errors. When a CPA concludes that the financial statements follow the applicable financial reporting framework in all material aspects, the CPA should issue an unqualified audit opinion. The circumstances where modified opinions must be issued are regulated and guided in detail in “Modified Audit Opinions” Auditing Standards Bulletin No. 59 [18], effective in 2018.

When auditing the general-purpose financial statements of TWSE/TPEx listed companies, CPAs should also observe the regulations in “Communication of Key Audit Matters for Auditing Reports” Auditing Standards Bulletin No. 58 [19], effective in 2018, regarding the communication of key audit matters in audit reports.

The COVID-19 global crisis brought rising numbers of infections and deaths, and its impact on companies’ performance indicators, on the sustainability and sustainable development of capital markets, and on information asymmetry has been severe and unprecedented. Some literature points out that the impact of COVID-19 includes economic recession, stock market turmoil, rising unemployment, depression in travel and related industries, the collapse of medical systems, and corporate financial distress or bankruptcy [20,21,22,23,24]. Because of these severe impacts, corporate performance has worsened, and the sustainability and sustainable development of capital markets have been negatively affected. Information is likely to become more opaque, and information asymmetry more pronounced. Furthermore, COVID-19 has changed the nature of work and the way people go to work. Many companies have adopted remote work, and performance indicators must be changed accordingly. The harm and the economic and commercial losses caused by COVID-19 are likely to increase the motivation for, and the incidence of, financial statement fraud. CPAs and auditors should be more careful during the audit process, especially for physical inventory counts and important confirmations. In 2020, the Financial Examination Bureau in Taiwan published “Matters of Attention for Remote Auditing of Financial Reports for Publicly Issued Companies” due to the impact of COVID-19 on audits. The purpose of these matters of attention is to remind auditors to make use of digital technology to assist in obtaining appropriate audit evidence. However, this does not replace audit procedures, and CPAs may not refer to the matters of attention as a basis for audit opinions.

The UN Global Compact proposed the concept of ESG (environment, social, governance) in 2004, which is regarded as an indicator for evaluating the operation of a company. There are three main aspects of ESG: (1) environmental protection, covering greenhouse gas emissions, water and sewage management, biodiversity, and other environmental pollution prevention and control; (2) social responsibility, covering customer welfare, labor relations, diversity and inclusiveness, and other aspects of the stakeholders affected by the industry; and (3) corporate governance, covering business ethics, competitive behavior, supply chain management, and other matters related to company stability and reputation. Due to the challenges of the current business environment, green and sustainable finance, corporate social responsibility, and financial and non-financial performance are attracting widespread attention. In addition, green and sustainable finance, corporate social responsibility, and intellectual and human capital have become core issues for measuring organizational success, competitive advantage, and market influence [25]. If a company’s operators and top management pay more attention to corporate social responsibility and uphold conscientious and sustainable operation, the occurrence of financial statement fraud will also be reduced.

Financial statement fraud may be a precursor to financial distress or bankruptcy of an enterprise, and has a great impact on macro and business activity levels, especially the listed companies. The macro impact includes: capital markets, financial markets, and even the country’s overall economy and GDP. The impact at the business activity level includes: the sustainable operation of the company and the interests of the stakeholders (shareholders, creditors, customers, suppliers, employees, and social relations).

Governments around the world have formulated stricter regulations to prevent financial statement fraud, requiring company boards to establish effective internal control and fraud prevention mechanisms and requiring CPAs and auditors to carry out audits carefully. However, financial statement fraud still emerges from time to time. It is imperative to find an effective model to detect financial statement fraud so that CPAs and auditors can use this tool as assistance for better decision-making and issuance of accurate audit reports and opinions. This also avoids losses due to audit failures. One after another, corporate frauds compromise the credibility of CPAs and affect the reliability of financial reporting principles and audit standards and the effectiveness of regulatory regimes. Addressing this is a responsibility shared by practitioners and academics.

To tackle the shortcomings of the traditional approach to financial statement fraud detection and to embrace the era of big data and artificial intelligence, the use of deep learning algorithms to detect financial statement fraud appears to be the way to go, but there is limited literature. Deep learning algorithms can quickly and effectively handle large amounts of data and are powerful tools for modeling. This study thus aims to use the recurrent neural network (RNN) and long short-term memory (LSTM), two powerful and advantageous deep learning algorithms, to construct a detection model for financial statement fraud. Both financial and non-financial variables (also known as corporate governance variables) are used. The purpose is to establish an effective detection model for financial statement fraud, so as to enhance the awareness of financial statement users in potential fraud and detect the signs of financial statement fraud. This model is expected to reduce the losses due to financial statement fraud and maintain the sustainable development of capital markets.

2. Related Works

Some studies in the literature indicate that financial statement fraud is increasingly serious [8,9,10,11,26,27,28]. What is worse is that criminals are getting better at circumventing regulatory schemes, and fraud is evolving into greater complexity [29]. In fact, most financial statement fraud is implemented with the awareness or consent of management [30]. Ill-intended managers seek to manipulate earnings by committing financial statement fraud [31].

Except for major shareholders sitting on the board, most shareholders cannot directly participate in corporate operations and have no choice but to entrust the company to management. This inevitably creates information asymmetry and insufficient transparency due to agency problems. At this juncture, everything depends on the ethical standards of managers, the robust auditing of financial statements, and the issuance of faithful audit reports and opinions by CPAs (certified public accountants) and auditors. Except for CPAs who collude with management by issuing untruthful audit reports and opinions, most CPAs adhere to occupational ethics, relevant accounting and audit standards, and financial laws and regulations. However, audit failure occurs from time to time. This seriously undermines the reputation of CPAs and may lead to claims by investors for compensation or even criminal liability. Many business decisions depend on the accuracy of financial statements, given the lack of available resources for complete due diligence. In fact, the penalties on perpetrators are not a sufficient deterrent in many regulatory jurisdictions [32].

The detection of financial statement fraud is difficult and challenging [33]. The traditional approaches to the detection of financial statement fraud are regression analysis, discriminant analysis, cluster analysis, and factor analysis. Hamal and Senvar [34] suggest that the detection of financial statement fraud requires sophisticated analytical tools and techniques, rather than the traditional methods adopted by decision-makers like auditors. However, there is no one-size-fits-all method for the detection of financial statement fraud according to the literature. Craja, Kim, and Lessmann [35] indicate that the models constructed with machine learning techniques can effectively detect financial statement fraud, keep up with the constant evolution of financial reporting fraudulent behavior, and adopt the newest technology to respond. Gupta and Mehta [36] also prove that the detection models built with machine learning techniques boast higher accuracy than traditional methods. Most of the early studies on the detection of financial statement fraud refer to financial ratios as the research variable. Current research widely uses both financial ratios and non-financial variables (or corporate governance variables), given the advocacy and emphasis of corporate governance by practitioners [6,8,10,28,32,37,38,39].

Different from studies relying on traditional statistical methods, some literature uses data mining and machine learning techniques for the detection of financial statement fraud. By learning from massive amounts of data, these techniques produce more accurate classifications and predictions than traditional statistical methods [9,10,40]. Moreover, data mining and machine learning techniques do not need the assumptions required by traditional statistics and can effectively handle non-linear problems [9]. For example, methods such as Artificial Neural Network (ANN), Support Vector Machine (SVM), Decision Tree (DT), and Bayesian Belief Network (BBN) are being used to detect financial statement fraud [7,8,10,28,32,34,38,40,41]. In the era of artificial intelligence, deep learning techniques are used by studies for the detection of financial statement fraud [35,42]. Compared to conventional machine learning, deep learning can better process massive volumes of data and has greater predictive power [43]. While deep learning is based on neural networks, it uses more layers than conventional neural networks and can effectively capture features and handle complex issues. Compared to conventional machine learning, it can more effectively detect financial statement fraud [35].

3. Materials and Methods

This study samples a total of 153 TWSE/TPEx listed companies in 2001–2019. The sample pool consists of 51 companies reporting financial statement fraud and 102 companies not reporting financial statement fraud. Two deep learning algorithms (i.e., recurrent neural network (RNN) and long short-term memory (LSTM)) are used to construct the detection model for financial statement fraud based on financial data and non-financial data. Both RNN and LSTM can quickly and effectively process large data volumes and are ideal for model construction [35,42].

3.1. Research Subjects and Data Sources

This study samples the TWSE/TPEx listed companies in 2001–2019. After the deletion of incomplete data, 153 companies in total are sampled. This includes 51 companies reporting financial statement fraud (the publication of untruthful financial statements) and 102 companies not reporting financial statement fraud (FSF:non-FSF = 1:2). Both financial data and non-financial data are sourced from the Taiwan Economic Journal (TEJ). The sampled industries are summarized in Table 1.

Table 1.

Summary of sampled industries.

3.2. Research Variables

3.2.1. Dependent Variable

The dependent variable is a dummy variable, taking on 1 for companies reporting financial statement fraud and 0 for companies not reporting financial statement fraud.

3.2.2. Independent Variables (Research Variables)

This study selects a total of 18 variables frequently used to measure financial statement fraud. They consist of 14 financial variables and 4 non-financial variables (also known as corporate governance variables). The research variables are summarized in Table 2:

Table 2.

Research variables.

3.3. Methods

This study uses the recurrent neural network (RNN) and long short-term memory (LSTM), which are two powerful and advantageous deep learning algorithms.

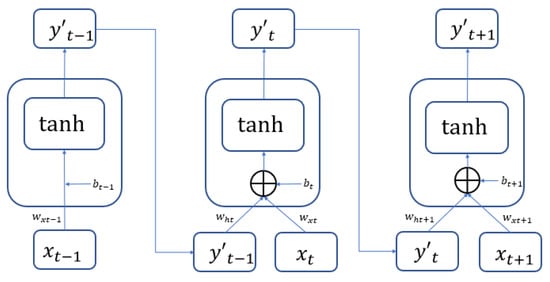

3.3.1. Recurrent Neural Network

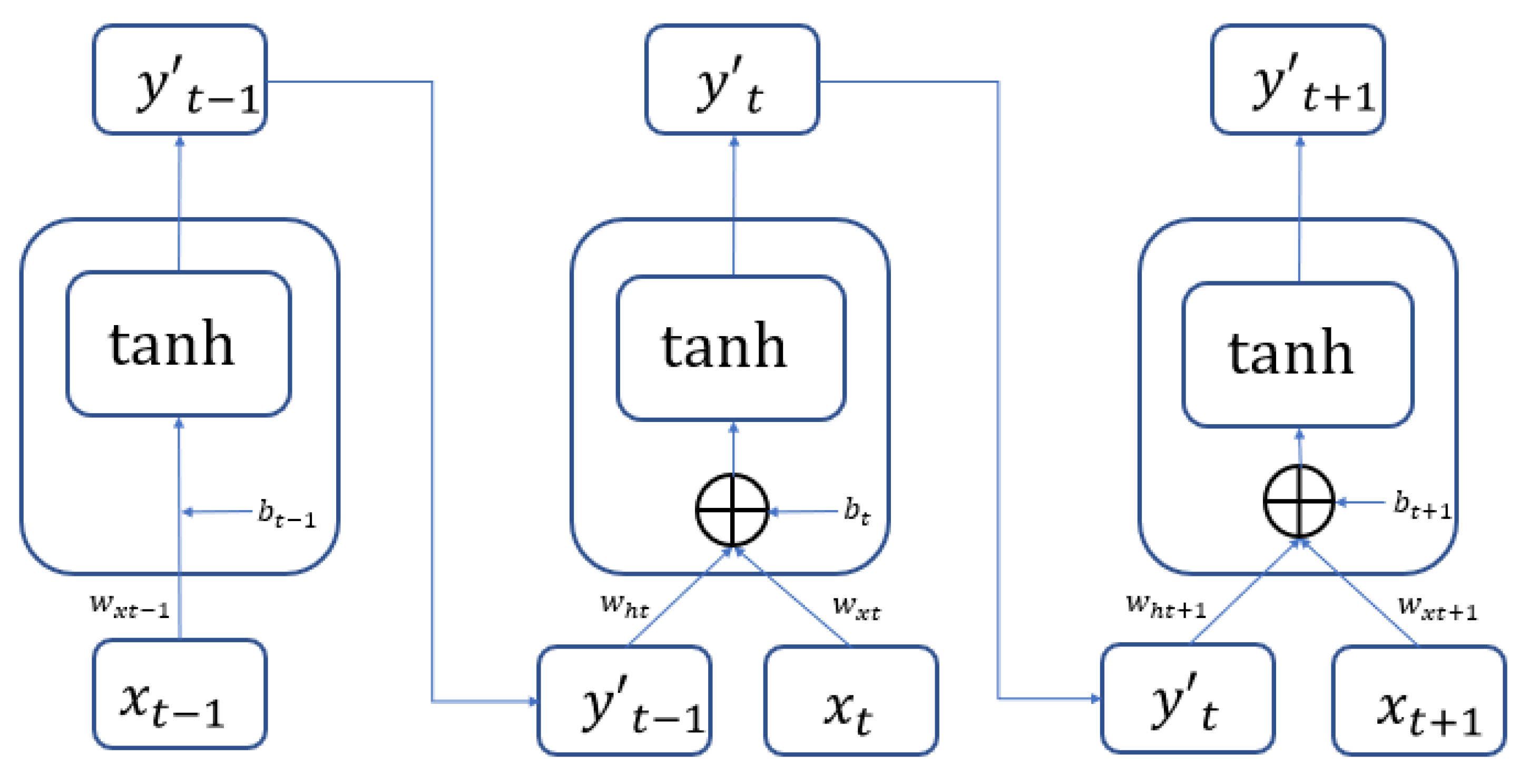

The recurrent neural network (RNN) is currently one of the most popular deep learning network structures and is often used for processing data with a sequential nature. RNN is good at capturing the relations between data points and can produce predictions on sequential data with higher accuracy. Important information from the past is preserved in the modeling process as an input variable to be computed together with current data. The computing architecture is illustrated in Figure 1. The computing result for the t-th data point is stored in a hidden state, which serves as an input variable. This input variable and the (t + 1)-th data point are introduced into the hidden layer together for computation. The activation function used in the hidden layer of the recurrent neural network is the hyperbolic tangent function (tanh). This is expressed in Equation (1) below.
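As an illustration of the recurrence described above, the following is a minimal sketch of the textbook RNN update, h_t = tanh(W_x x_t + W_h h_(t−1) + b). The weight names `W_x`, `W_h`, and `b` and the functions `rnn_step` and `rnn_forward` are illustrative assumptions and are not taken from this study’s implementation.

```python
import math


def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One recurrent step: h_t = tanh(W_x x_t + W_h h_prev + b).

    Vectors are plain lists and matrices are lists of rows
    (hypothetical layout chosen for this sketch).
    """
    h_t = []
    for i in range(len(b)):
        pre = b[i]
        pre += sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))
        pre += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(pre))
    return h_t


def rnn_forward(sequence, W_x, W_h, b):
    """Run the recurrence over a sequence, starting from a zero hidden state."""
    h = [0.0] * len(b)
    for x_t in sequence:
        # The hidden state from step t is fed back in with the (t + 1)-th input.
        h = rnn_step(x_t, h, W_x, W_h, b)
    return h
```

With a single hidden unit, `rnn_forward([[0.5], [0.0]], [[1.0]], [[1.0]], [0.0])` returns `tanh(tanh(0.5))`, which shows how the first input still influences the state after the second step.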

Figure 1.

The principle and structure of RNN.

The RNN is usually used to describe sequences with temporal dynamic behavior. The state is circulated and transmitted within the network itself, so the network can accept a wider range of time-sequential inputs. Different from feedforward neural networks, RNN places a greater emphasis on network feedback. In both academic and practical applications, RNN has demonstrated good results in solving problems such as speech-to-text conversion and translation. RNN is often used to model video sequences and the dependence relations between these sequences. The advantage lies in the capability of encoding a video into a fixed-length representation, which RNN decoders can then adapt to different tasks. RNN can also be used to model dependence in the spatial domain of images. This requires adopting certain rules for traversing the two-dimensional surface in order to form the sequence that the RNN processes. The multi-dimensional RNN model is the representative model. RNN has also been used for cross-modal retrieval of text and video descriptions. Recurrency, the concept introduced by the RNN, is an innovation compared to other architectures such as convolutional neural networks (CNNs).

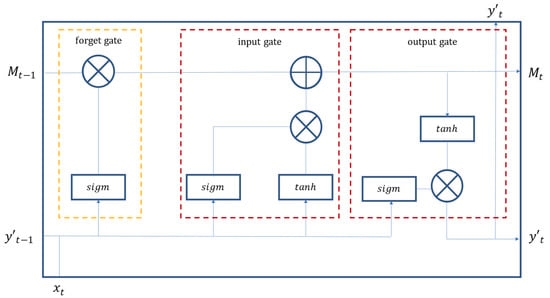

3.3.2. Long Short-Term Memory

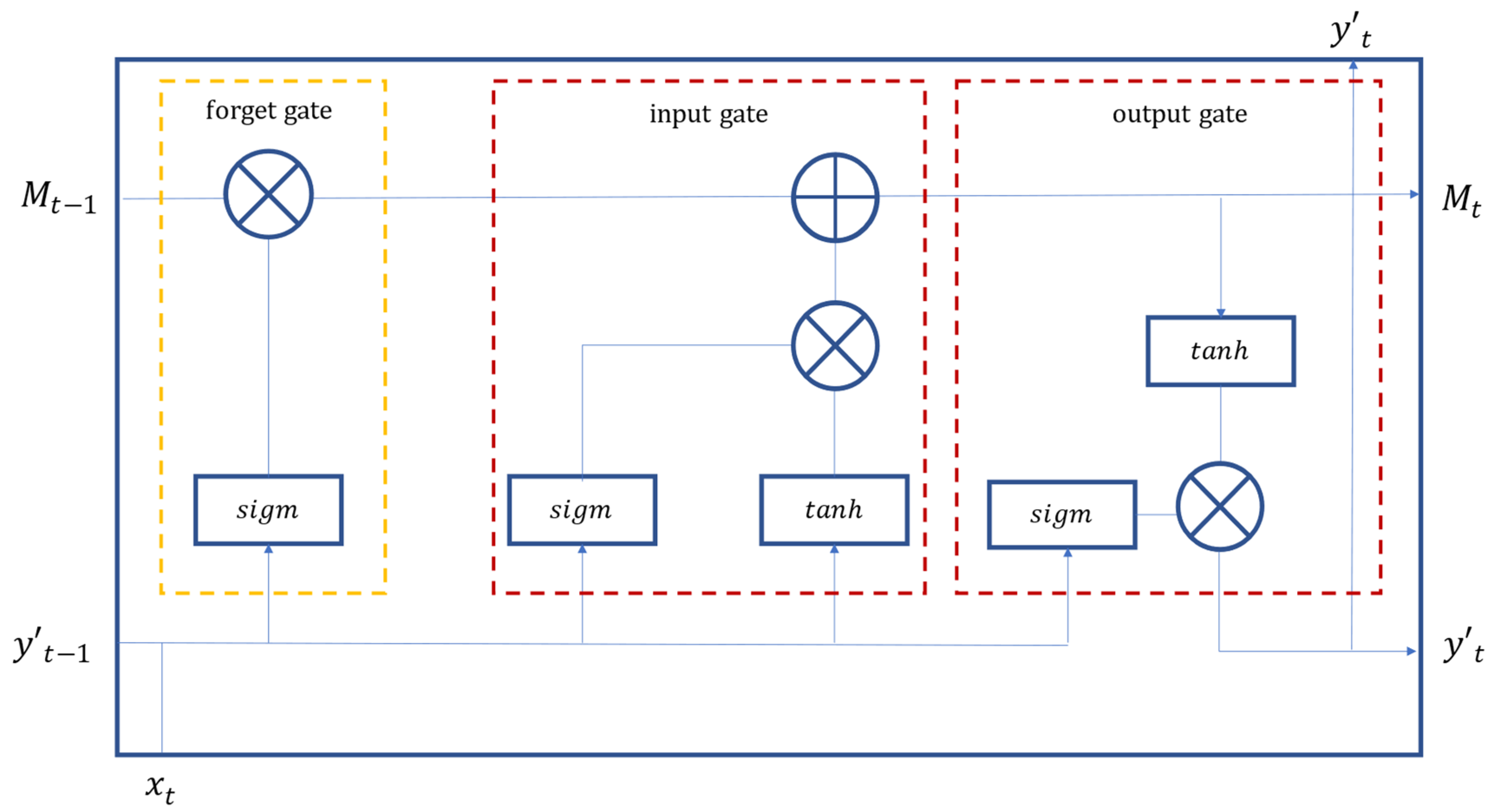

The long short-term memory (LSTM) is an extension of the RNN based on the improvement proposed by Hochreiter and Schmidhuber [44] in 1997 to address the potentially poor long-term memory of the RNN. LSTM is innovative in adding three steps (i.e., forget, update, and input) to the neurons, which greatly enhances long-term memory performance. Building on the RNN, LSTM replaces the neurons in the hidden layer with a gate control mechanism and memory cells, so as to resolve the vanishing gradient problem and the poor long-term memory of the RNN. The gate control mechanism consists of the Forget Gate, Input Gate, and Output Gate, as shown in Figure 2. The Forget Gate determines the portion of the previous period’s memory status to be forgotten in the current period, as expressed in Equation (2). The Input Gate determines how much of the current input variable and the previous output is written to the memory status, as expressed in Equation (3). The completion of the update is expressed in Equation (4). The Output Gate determines the output information from the memory status, as expressed in Equation (5). The final calculation of the output value is expressed in Equation (6).
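The gate mechanism can be sketched with the commonly used LSTM formulation: the forget gate f, input gate i, and output gate o are sigmoid units, a tanh candidate g proposes new memory content, the memory status is updated as c_t = f · c_(t−1) + i · g, and the output is h_t = o · tanh(c_t). The symbols, the parameter layout, and the function names below are assumptions made for illustration; they may not match the notation of Equations (2)–(6) exactly.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, U, b, x_t, h_prev):
    """Return W x_t + U h_prev + b as a list (matrices are lists of rows)."""
    return [
        sum(w * x for w, x in zip(W[i], x_t))
        + sum(u * h for u, h in zip(U[i], h_prev))
        + b[i]
        for i in range(len(b))
    ]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step; params maps "f", "i", "g", "o" to (W, U, b) tuples.

    The dictionary layout is a hypothetical choice for this sketch.
    """
    f = [sigmoid(z) for z in affine(*params["f"], x_t, h_prev)]    # forget gate
    i = [sigmoid(z) for z in affine(*params["i"], x_t, h_prev)]    # input gate
    g = [math.tanh(z) for z in affine(*params["g"], x_t, h_prev)]  # candidate memory
    o = [sigmoid(z) for z in affine(*params["o"], x_t, h_prev)]    # output gate
    # Memory update: keep part of the old memory, add part of the candidate.
    c_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # Output: the output gate filters the squashed memory status.
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, c_t)]
    return h_t, c_t
```

Because the memory status is carried forward additively rather than being passed through tanh at every step, gradients along it decay more slowly, which is the usual explanation for the improved long-term memory.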

Figure 2.

The principle and structure of LSTM.

The LSTM also consists of repeated and interconnected units. However, the design of the internal units is more sophisticated, as their components interact with each other. RNN and LSTM are powerful in that they accept sequences of either single or multiple vectors as input or output. When a fixed-size vector input yields a single output vector, the network can handle classification problems. If multiple outputs are possible from one set of inputs, the network can handle tasks such as automatic text labeling of images. Moreover, both RNN and LSTM train quickly and achieve high accuracy, owing to their effectiveness in the input, processing, stabilization, and modeling of large data volumes.

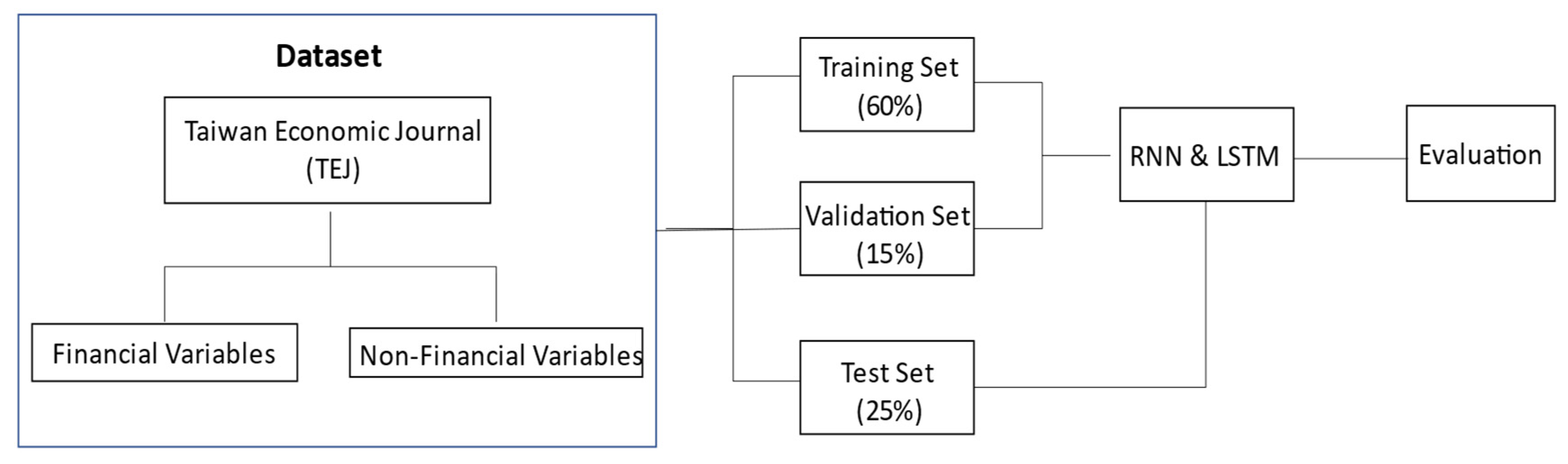

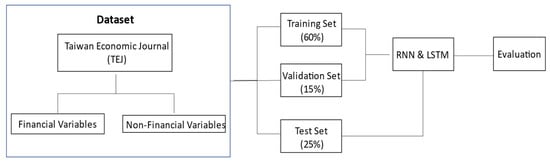

3.4. Sampling and Research Process

This study samples a total of 153 companies, consisting of 51 companies reporting financial statement fraud (the publication of untruthful financial statements) and 102 companies not reporting financial statement fraud. Both financial and non-financial data are sourced. In the process of constructing detection models with RNN and LSTM, 60% (= 80% × 75%) of the data are randomly sampled as the training dataset. The best prediction model is derived with constant optimization in the learning process. The validation dataset consists of a randomly sampled 15% (= 20% × 75%) of the total data and is used to validate the constructed model and to determine convergence in order to stop training. The remaining 25% of the data are used as the test dataset, to assess the generalization capability of the model. The research design and process flows are illustrated in Figure 3.
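The two-stage split can be sketched as follows: 25% of the observations are first held out for testing, and 20% of the remainder is then set aside for validation, leaving roughly 60% for training. The function name `split_indices`, the fixed seed, and the use of `round` for fractional counts are assumptions of this sketch, so the resulting subset sizes may differ slightly from those in the study.

```python
import random


def split_indices(n_samples, test_frac=0.25, val_frac=0.20, seed=0):
    """Shuffle row indices once and cut them into train/validation/test parts.

    Sampling is without replacement, so no row appears in two subsets.
    """
    rng = random.Random(seed)
    indices = list(range(n_samples))
    rng.shuffle(indices)
    n_test = round(n_samples * test_frac)            # 25% of all rows
    n_val = round((n_samples - n_test) * val_frac)   # 20% of the remaining 75%
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test


train, val, test = split_indices(153)
print(len(train), len(val), len(test))
```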

Figure 3.

Research design and process flows.

4. Empirical Results

According to Section 3.4 Sampling and Research Process, the empirical results of this study based on the RNN model and the LSTM model are as follows.

For the algorithms, programming, and coding of this study, please see Appendix A.

4.1. Validation for Modeling

Validation is important to modeling. This study adopts a number of measures to ensure model validity.

First, the raw data are normalized and standardized, so that all values are between 0 and 1. Normalization and standardization provide two benefits to machine learning algorithms: (1) acceleration of convergence speed; and (2) enhancement of model detection accuracy.
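A minimal sketch of scaling one variable into the range from 0 to 1 is shown below, assuming min-max scaling is the method intended; the function name `min_max_scale` and the handling of constant columns are illustrative choices rather than details from this study.

```python
def min_max_scale(column):
    """Rescale a list of numbers linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # A constant column carries no information; map it to 0 to avoid division by zero.
        return [0.0 for _ in column]
    return [(value - lo) / (hi - lo) for value in column]


print(min_max_scale([2.0, 4.0, 6.0]))
```

In practice, the minimum and maximum are usually computed on the training data only and then reused for the validation and test data, so that no information leaks from the held-out sets.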

Second, data are randomly sampled without replacement, to avoid bias due to repeatedly sampled data. In the process of RNN and LSTM modeling, 60% (= 80% × 75%) of the data are randomly sampled as the training dataset for parameter fitting in the learning process. The best detection model is established via constant optimization. The validation dataset consists of a randomly sampled 15% (= 20% × 75%) of the data and is used to validate the model and monitor convergence. Hyper-parameters are adjusted to avoid overfitting and to determine the stopping point of training. The remaining 25% of the data serve as the test dataset to assess model generalization and detection capabilities after 250 epochs.

Third, the Adaptive Moment Estimation (Adam) optimizer built into TensorFlow is used to optimize the model parameters in this study. The hyper-parameter settings are: learning rate = 0.001, beta 1 = 0.9, beta 2 = 0.999, epsilon = 1 × 10⁻⁷, batch size = 3, epochs = 250, validation_split = 0.2, dropout = 0.25, activation = Sigmoid and ReLU.
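To show what the Adam settings control, the following sketch applies the update rule from the original Adam algorithm to a single scalar parameter, using the learning rate, beta 1, beta 2, and epsilon values listed above as defaults. It is a plain-Python illustration, not TensorFlow code, and library implementations may place epsilon slightly differently; the function name `adam_step` is hypothetical.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """Apply one Adam update to a scalar parameter.

    m and v are running averages of the gradient and squared gradient;
    t is the 1-based step count used for bias correction.
    """
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    m_hat = m / (1.0 - beta1 ** t)   # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)   # bias-corrected second moment
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Usage: minimise f(theta) = theta**2, whose gradient is 2 * theta.
theta, m, v = 1.0, 0.0, 0.0
for t in range(1, 251):
    theta, m, v = adam_step(theta, 2.0 * theta, m, v, t)
```

Each early step moves the parameter by roughly the learning rate regardless of the gradient's magnitude, which is why a small learning rate such as 0.001 gives gradual, stable progress.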

Finally, a loss function is deployed for model optimization. Multiple performance metrics are referenced to assess the model performance. These metrics include those derived from the confusion matrix (accuracy, precision, sensitivity (recall), specificity, and F1 score), the Type I and Type II error rates, and the ROC curve/AUC.

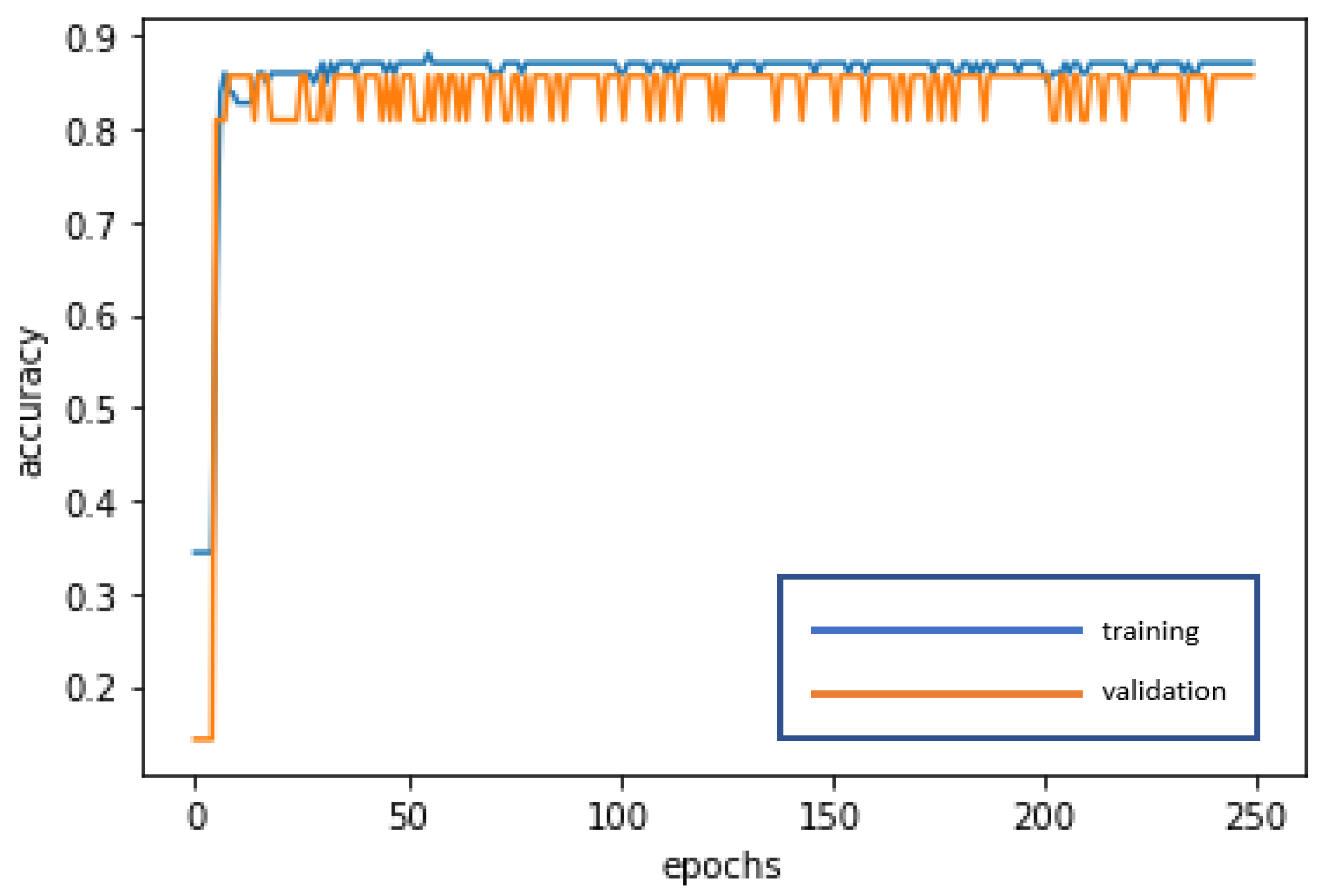

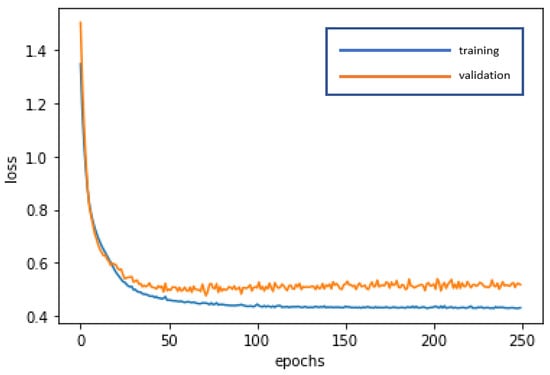

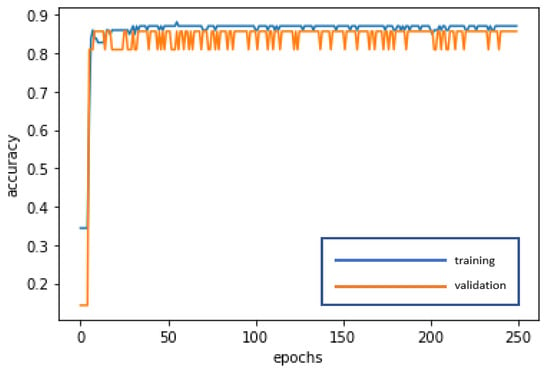

4.2. RNN Modeling and the RNN Model

The RNN is used for deep learning and training to achieve stabilization and establish the optimal model. The loss curve becomes smoother, the gradient drops, and the accuracy improves along with training. The training of 250 epochs only takes 0.3 seconds. The training dataset and the validation dataset exhibit accuracy of 87.10% and 85.71%, respectively. The test dataset is also used to test model stability with an accuracy of 87.18%. The limited difference in the results with the training dataset and the validation dataset demonstrates model stability and goodness-of-fit (no overfitting). The model accuracy rates are summarized in Table 3. Figure 4 and Figure 5 present the loss function graph and the accuracy graph of the modeling process. Type I error rate and Type II error rate of the test dataset are 5.13% and 7.69%, respectively. These levels are not considered high.

Table 3. Accuracy of the RNN model.

Figure 4. Loss function graph of the RNN modeling process.

Figure 5. Accuracy graph of the RNN modeling process.

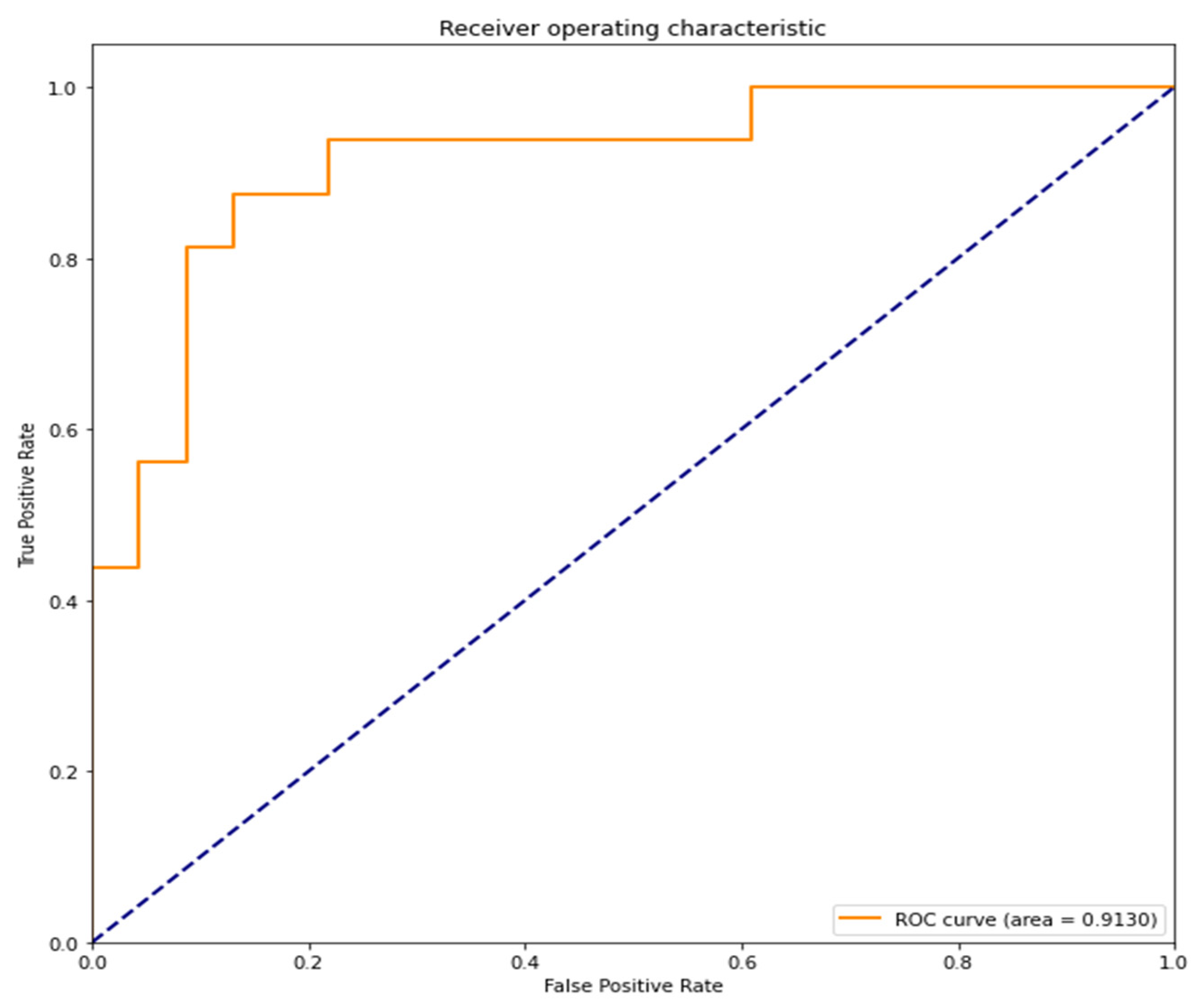

The performance metrics derived from the confusion matrix of the RNN model are as follows: accuracy = 87.18%, precision = 86.67%, sensitivity (recall) = 81.25%, specificity = 91.30%, and F1-score = 83.87%. These values suggest good model performance.
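The relationship between confusion-matrix counts and these percentages can be sketched as follows. The counts used here are an assumption: they are not reported in the text, and were picked so that the resulting percentages match the RNN figures above for a 39-company test set (153 companies minus the 114 retained by the 75% split in Appendix A). The function name and dictionary keys are likewise hypothetical.

```python
def classification_metrics(tp, fp, tn, fn):
    """Derive the usual binary-classification metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                      # sensitivity
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
        "false_positive_share": fp / total,      # false positives as a share of all test companies
        "false_negative_share": fn / total,      # false negatives as a share of all test companies
    }


# Assumed counts (not reported in the text); fraud is the positive class.
rnn = classification_metrics(tp=13, fp=2, tn=21, fn=3)
for name, value in rnn.items():
    print(f"{name}: {value:.2%}")
```

Under these assumed counts, the two misclassification shares come out at 5.13% and 7.69%, which would coincide with the reported Type I and Type II error rates if those rates are expressed as a share of all test companies; that reading is an inference, not something the text states.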

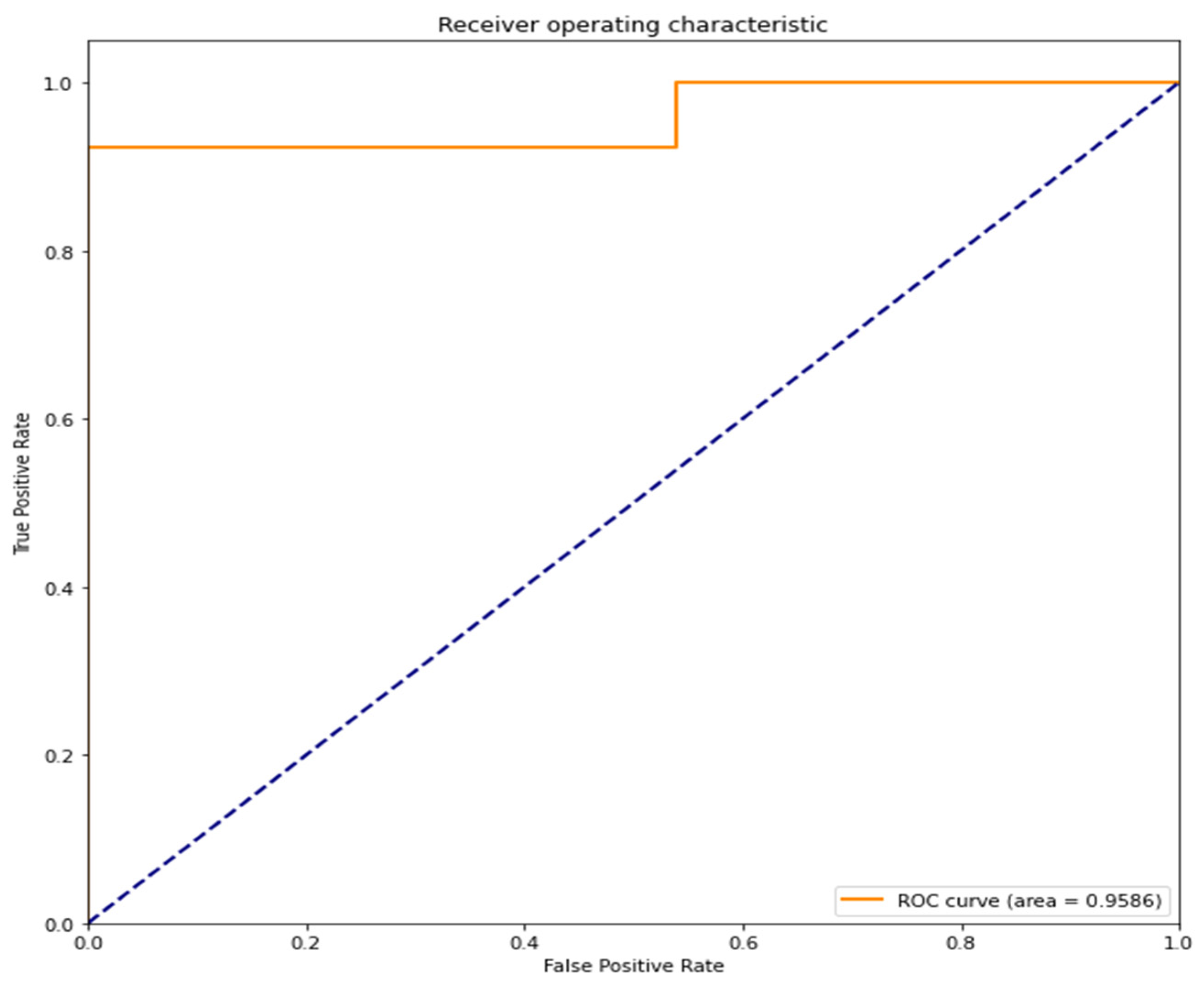

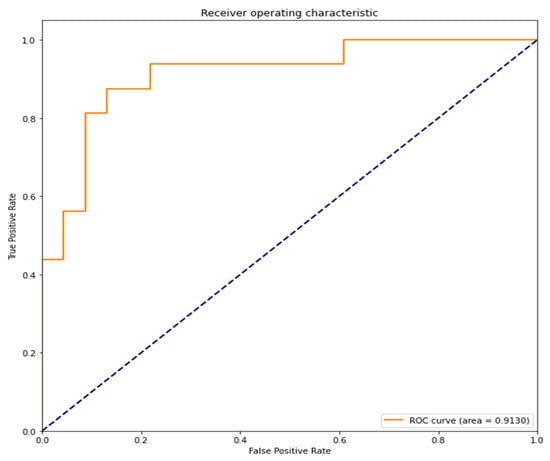

The area under the curve (AUC) of the receiver operating characteristic curve (ROC curve) is 0.9130 (91.30%). A value close to 1 indicates high accuracy (Figure 6).

Figure 6. ROC curve and AUC of the RNN model.

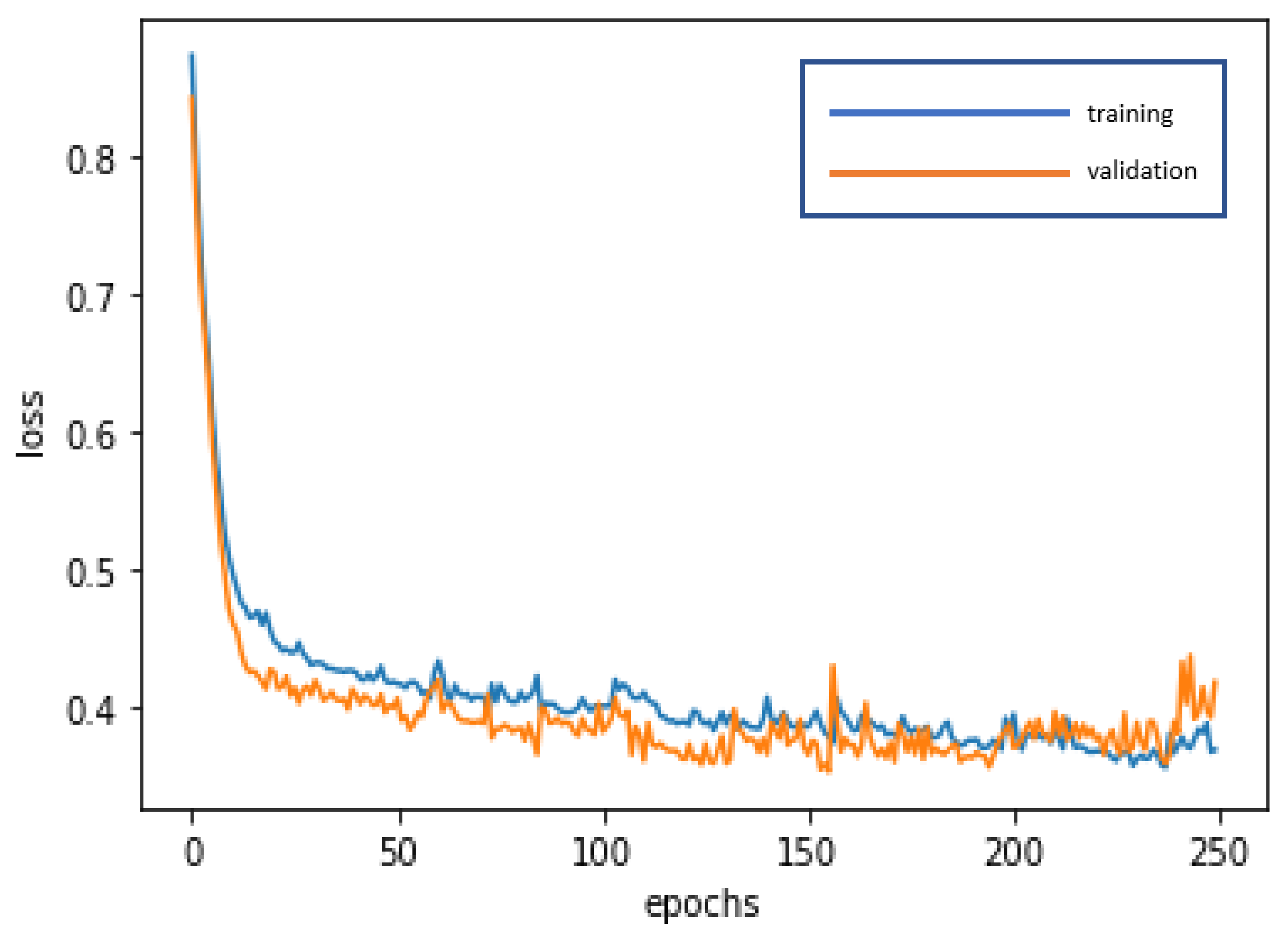

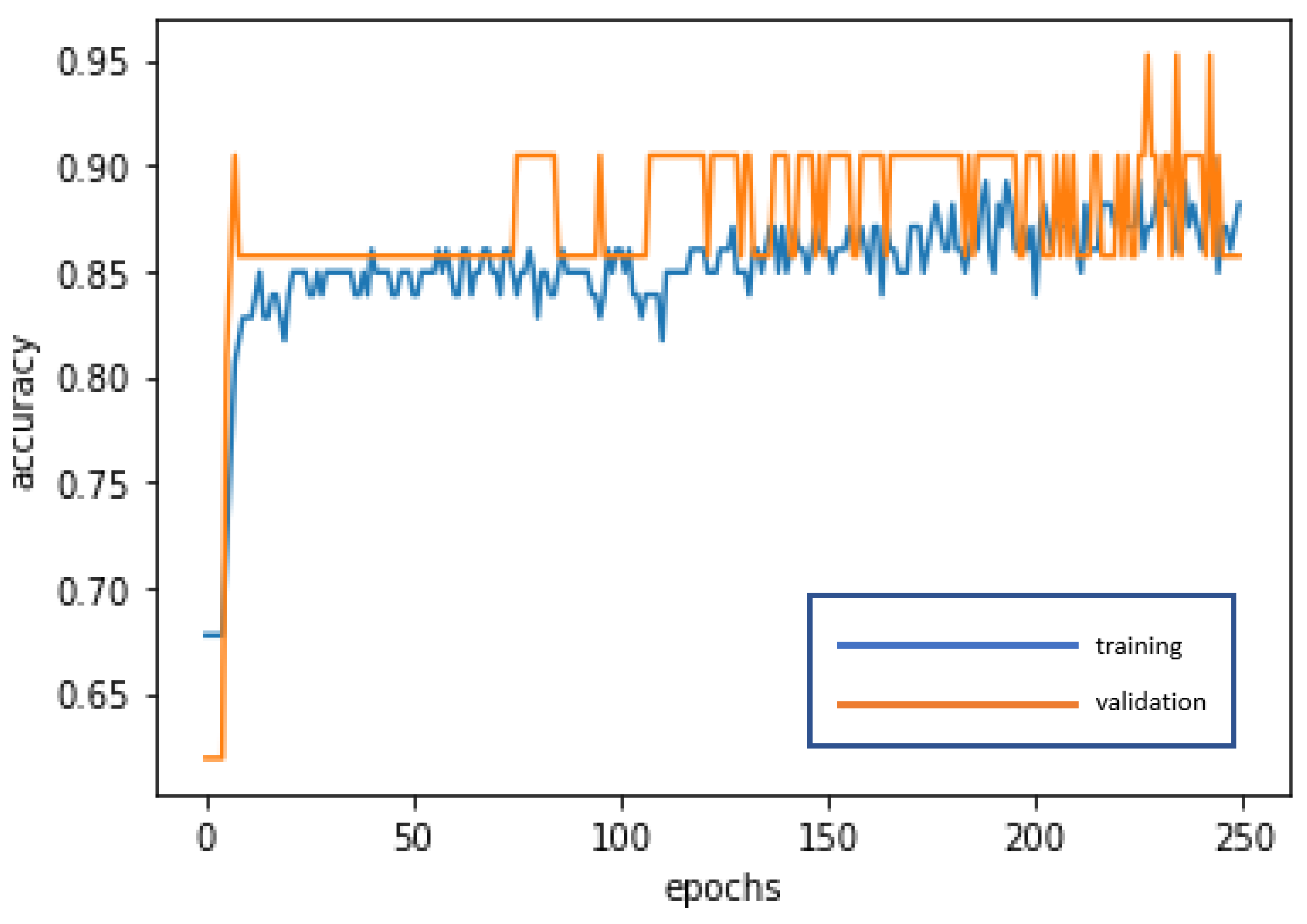

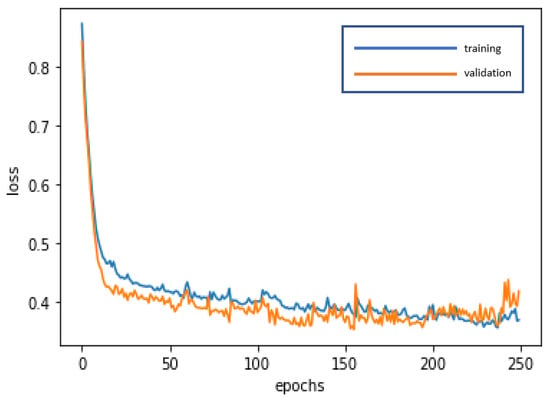

4.3. LSTM Modeling and the LSTM Model

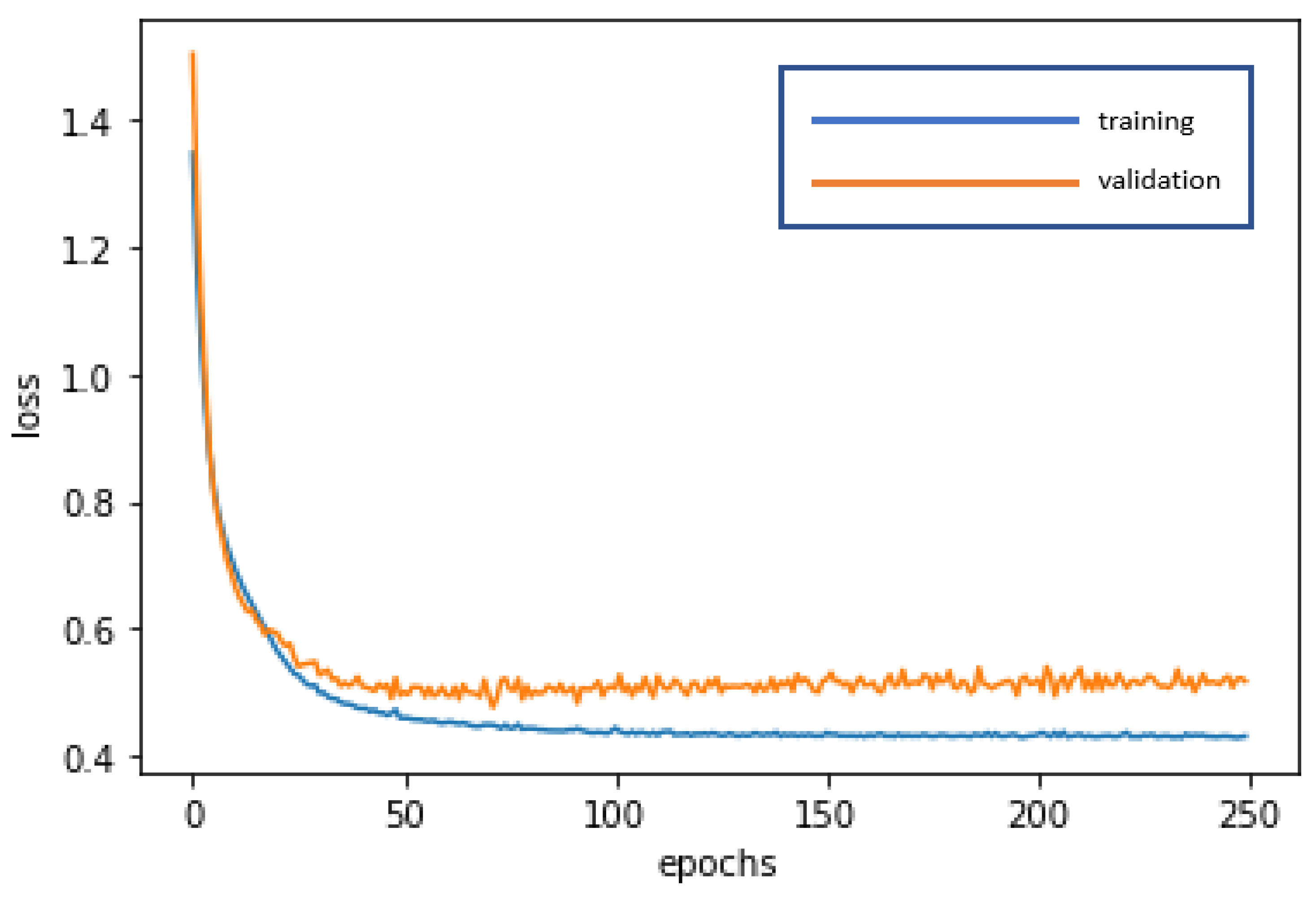

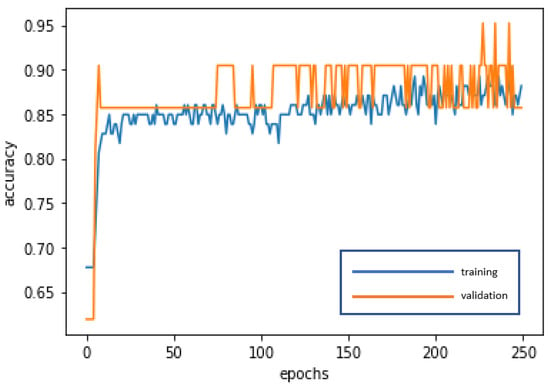

The LSTM is used for deep learning and training to achieve stabilization and establish the optimal model. The loss curve becomes smoother, the gradient drops, and the accuracy increases along with training. The training of 250 epochs only takes 1.5 seconds. The training dataset and the validation dataset report accuracy of 88.17% and 85.71%, respectively. The test dataset used to test model stability has an accuracy of 94.88%. The model is highly stable and has goodness-of-fit (no obvious overfitting). The model accuracy rates are summarized in Table 4. Figure 7 and Figure 8 present the loss function graph and the accuracy graph of the modeling process. Type I error rate and Type II error rate of the test dataset are 2.56% and 2.56%, respectively. These levels are considered fairly low.

Table 4. Accuracy of the LSTM model.

Figure 7. Loss function graph of the LSTM modeling process.

Figure 8. Accuracy graph of the LSTM modeling process.

The performance metrics derived from the confusion matrix of the LSTM model are as follows: accuracy = 94.88%, precision = 92.31%, sensitivity (recall) = 92.31%, specificity = 96.15%, and F1-score = 92.31%. These values suggest great model performance.

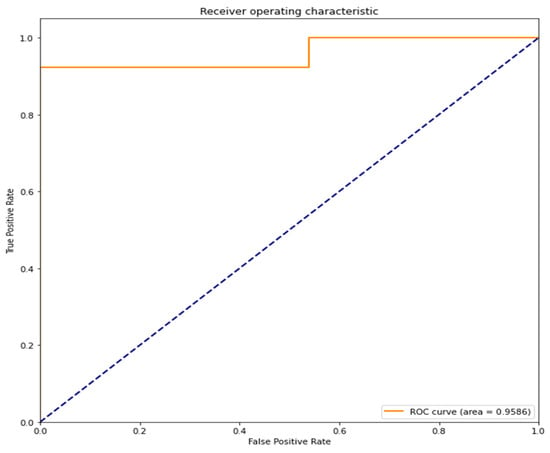

The area under the curve (AUC) of the receiver operating characteristic curve (ROC curve) is 0.9586 (95.86%). A value close to 1 indicates high accuracy (Figure 9).

Figure 9. ROC curve and AUC of the LSTM model.

5. Discussion

RNN and LSTM are two popular and representative deep learning algorithms. LSTM addresses the possible poor performance of long-term memory of RNN models. LSTM is innovative for the addition of three steps (i.e., forget, update, and input) in the neurons, so as to dramatically enhance long-term memory performance. Both RNN and LSTM can quickly and effectively process large data volumes and establish models. They both have good capabilities in learning, optimization, and prediction (detection). In addition, validation is important to modeling.
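The gating idea behind LSTM can be made concrete with a minimal sketch of a single cell step. This follows the standard textbook formulation (forget, input, and output gates plus a candidate value), which is an assumption about how the steps named above map onto the cell; the function `lstm_cell_step`, the scalar states, and the weights are hypothetical and chosen only to keep the example runnable without external libraries.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_cell_step(x, h_prev, c_prev, w):
    """One step of a standard LSTM cell with scalar input, hidden state, and cell state.

    `w` maps each gate name to a (w_x, w_h, bias) tuple.
    """
    def pre(name):
        w_x, w_h, b = w[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))         # how much of the old cell state to keep
    i = sigmoid(pre("input"))          # how much of the new candidate to admit
    g = math.tanh(pre("candidate"))    # candidate value for the cell state
    o = sigmoid(pre("output"))         # how much of the cell state to expose
    c = f * c_prev + i * g             # cell-state update (long-term memory)
    h = o * math.tanh(c)               # new hidden state (short-term output)
    return h, c


# Hypothetical weights, chosen only for illustration.
weights = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.4, 0.2, 0.0),
    "candidate": (0.9, 0.3, 0.0),
    "output": (0.6, 0.1, 0.0),
}
h, c = 0.0, 0.0
for x in [0.2, 0.7, 0.1]:
    h, c = lstm_cell_step(x, h, c, weights)
    print(round(h, 4), round(c, 4))
```

The additive cell-state update is what lets information persist across many steps, whereas a plain RNN overwrites its hidden state at every step.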

This study adopts a number of measures to ensure model validity as follows. (1) The raw data are normalized and standardized so that all the data values are between 0 and 1. (2) Random sampling is performed without replacement, in order to avoid bias due to repeated data. (3) Adaptive Moment Estimation (Adam), the optimizer built into TensorFlow, is used to optimize the model parameters. (4) Multiple performance metrics are referenced to evaluate the model performance.

This paper selects a total of 18 variables frequently used to measure financial statement fraud. They consist of 14 financial variables and 4 non-financial variables (also known as corporate governance variables). RNN and LSTM are used for deep learning and optimization to establish an optimal model. The modeling process starts with a random sampling of 60% of the data as the training dataset. The model parameters are fitted to this dataset in the learning process, and the best prediction model is derived with constant optimization. The validation dataset consists of a randomly sampled 15% of the total data and is used to check convergence and the stability of accuracy. Hyper-parameters are adjusted to avoid overfitting. The remaining 25% of the data is used as the test dataset, to assess the generalization and detection capability of the model (i.e., the performance of the model).

The empirical results indicate that the LSTM model achieves a detection accuracy of 94.88% on the test dataset, which is better than the 87.18% achieved by the RNN model. Both models produce an accuracy rate higher than 85%. These are strong results by social science standards [10,11]. As far as error rates are concerned, the Type I and Type II error rates of the LSTM model are both 2.56%, lower than the RNN model’s Type I error rate of 5.13% and Type II error rate of 7.69%. The Type I error rate indicates the probability of predicting fraud for non-fraudulent companies. The Type II error rate indicates the probability of predicting no fraud for fraudulent companies. Type II errors cause the heaviest losses for financial statement users.

To strengthen the assessment of model performance and stability, this paper incorporates the confusion matrix and the ROC curve/AUC indicators. The metrics derived from the confusion matrix of the LSTM model are as follows: accuracy = 94.88%, precision = 92.31%, sensitivity (recall) = 92.31%, specificity = 96.15%, and F1-score = 92.31%. The values suggest great model performance. The corresponding metrics of the RNN model are as follows: accuracy = 87.18%, precision = 86.67%, sensitivity (recall) = 81.25%, specificity = 91.30%, and F1-score = 83.87%. These numbers also indicate good model performance. However, the LSTM model outperforms the RNN model in detection on every one of these metrics.

ROC curve/AUC is often used as a performance metric for binary classification models. An ROC curve plots sensitivity (the true positive rate) against 1 − specificity (the false positive rate) across classification thresholds. The closer the area under the curve (AUC) is to 1, the better the model separates the two classes. The LSTM model reports an AUC value of 0.9586 (95.86%). This is very close to 1, indicating high accuracy. It is also better than the RNN model’s AUC value of 0.9130 (91.30%). Both models perform well.
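One way to build intuition for AUC is its equivalent pairwise reading: it equals the share of (fraudulent, non-fraudulent) pairs in which the fraudulent company receives the higher predicted score. The sketch below computes it that way in plain Python; the function `roc_auc`, the labels, and the scores are hypothetical, and the study itself computes AUC with scikit-learn (Appendix A).

```python
def roc_auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count one half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical labels (1 = fraud) and predicted fraud probabilities.
labels = [0, 0, 1, 1]
scores = [0.10, 0.40, 0.35, 0.80]
print(roc_auc(labels, scores))
```

In this toy case three of the four pairs are ordered correctly, so the AUC is 0.75; a value of 0.5 would correspond to random ranking and 1.0 to perfect separation.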

Table 5 summarizes the performance of the RNN model and the LSTM model in the detection of financial statement fraud. Both models achieve good results. The two models constructed by this study are suitable for the detection of financial statement fraud. That said, LSTM outperforms the RNN model.

Table 5. Summary of performance indicators.

6. Conclusions and Suggestions

Information asymmetry is commonplace in capital markets due to agency problems. This is particularly the case with financial statements in which companies present operating results, corporate health, and their ability for sustainable development. Except for a few shareholders involved in company operations, it is difficult for other stakeholders to understand the true picture of the company’s financials until the company sinks into financial distress or declares bankruptcy. This undermines the public’s confidence in the financial system and the capital market. It also damages the health, development, and sustainability of the global capital market. All governments in the world have formulated more stringent regulations to prevent fraud, advocated corporate governance, and required company boards to establish an effective internal control and fraud prevention mechanism. CPAs and auditors are also strictly required to adhere to occupational ethics, relevant accounting and auditing standards, and financial regulations, in order to act as gatekeepers by using audit reports and audit opinions to reduce the likelihood of financial statement fraud. However, financial statement fraud still occurs from time to time. It is imperative to find an effective model to detect financial statement fraud so that CPAs and auditors can use this tool as assistance.

To tackle the shortcomings of the traditional approach to financial statement fraud detection and to embrace the era of big data and artificial intelligence, the use of deep learning algorithms to detect financial statement fraud is the proper way to go, but there is limited relevant literature. Hence, this study uses a recurrent neural network (RNN) and long short-term memory (LSTM), which are two powerful and advantageous deep learning algorithms. Both financial and non-financial data of 153 TWSE/TPEx listed companies in 2001–2019 are utilized for deep learning and training, until the model is stabilized as the optimal model.

To ensure model validity and the effectiveness of the model’s generalization and prediction capabilities, this study refers to multiple performance indicators, including the confusion matrix (accuracy, precision, sensitivity (recall), specificity, and F1-score), Type I and Type II error rates, and ROC curve/AUC values to compare the performance of the two models. The results show that the LSTM model outperforms the RNN model, reaching 94.88% accuracy (the most frequently used performance metric). The two models constructed by this study perform well and are suitable for the detection of financial statement fraud. This research thus contributes to academic research and capital market practices in relation to fraud prevention.

This study urges CPAs, management teams, and financial regulatory bodies to fulfill their auditing and oversight responsibilities and to support companies in maintaining robust corporate governance and internal control. CPAs should issue a “qualified opinion”, “disclaimer of opinion”, or “adverse opinion” when they have doubts about financial information or physical asset inventory. Management should act with morality and integrity and serve as good role models accountable to all stakeholders, without falsifying financial data or financial reports. Financial regulatory bodies should ensure that laws and regulations are robust and up to date. Strict control should be exercised on companies and CPAs by keeping a close eye on any illegal or inappropriate behavior, in order to protect the rights of the investing public and the sustainability of the capital markets.

This study makes the following recommendations for future research: To begin, deep learning algorithms are a concrete representation of academic research methods in the age of artificial intelligence; researchers may also use other deep learning algorithms, such as DNN, DBN, CNN, CDBN, and GRU, to construct financial statement fraud detection models. Second, as research variables, incorporate indicators of economic growth or recession (such as GDP) or other macroeconomic variables [45]. Third, consider using additional econometric models, such as QARDL-ECM, to address specific economic and financial asymmetry issues, such as long- and short-term asymmetry, as well as asymmetry between different financial markets [46]. Fourth, in addition to the commonly used financial variables, it is necessary to consider non-financial variables when evaluating financial statement fraud. Finally, financial and non-financial variables should be adjusted to reflect different economic conditions, capital markets, financial regulations, and corporate types.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data can be provided upon request from the corresponding author.

Acknowledgments

The author would like to thank the editors and the anonymous reviewers of this journal.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Algorithms, Programming, and Coding

Appendix A.1. Preparation for Model Construction/Modeling

Appendix A.1.1. Importing Libraries

import tensorflow as tf

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dropout

from keras.layers import Input, Dense, SimpleRNN, RNN

from keras import regularizers

from numpy import ndarray

from sklearn.preprocessing import MinMaxScaler

from tensorflow import keras

from keras.layers import Activation, Embedding

from keras.preprocessing import sequence

from sklearn.model_selection import train_test_split

from sklearn.model_selection import StratifiedKFold

Appendix A.1.2. Data Access

df = pd.read_excel("dataset.xlsx")

df.shape

Appendix A.1.3. Data Pre-Treatment

Random Data Splitting

df_num = df.shape[0]

indexes = np.random.permutation(df_num)

train_indexes = indexes[:int(df_num *0.75)]

test_indexes = indexes[int(df_num *0.75):]

train_df = df.loc[train_indexes]

test_df= df.loc[test_indexes]

Data Normalization and Standardization

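# Fit the scaler on the full dataset, then scale the split frames used in the following steps

scaler = MinMaxScaler(feature_range = (0, 1))

scaler.fit(df)

train_df = pd.DataFrame(scaler.transform(train_df), columns = df.columns)

test_df = pd.DataFrame(scaler.transform(test_df), columns = df.columns)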

Definition of Independent Variables and Dependent Variables

x_train = np.array(train_df.drop('FSF',axis = 'columns'))

y_train = np.array(train_df['FSF'])

x_test = np.array(test_df.drop('FSF',axis = 'columns'))

y_test = np.array(test_df['FSF'])

Data Matrix Reshaping to Meet with Model Input Requirements

x_train = np.reshape(x_train, (x_train.shape[0],1, x_train.shape[1]))

x_test = np.reshape(x_test, (x_test.shape[0],1, x_test.shape[1]))

Appendix A.2. Model Construction/Modeling

Appendix A.2.1. RNN

model = Sequential()

model.add(SimpleRNN(16, kernel_regularizer = regularizers.l2(0.01),input_shape = (x_train.shape[1], x_train.shape[2]), return_sequences = True))

model.add(Dense(8,kernel_regularizer = regularizers.l2(0.01),activation = 'sigmoid'))

model.add(Dense(1,kernel_regularizer = regularizers.l2(0.01),activation = 'sigmoid'))

model.compile(loss = "binary_crossentropy", optimizer = "adam",metrics = ['accuracy'])

Appendix A.2.2. LSTM

model = Sequential()

model.add(LSTM(14, kernel_regularizer = regularizers.l2(0.007),input_shape = (x_train.shape[1], x_train.shape[2]), return_sequences = True))

model.add(Dense(7,kernel_regularizer = regularizers.l2(0.007),activation = 'relu'))

model.add(Dense(1,kernel_regularizer = regularizers.l2(0.007),activation = 'sigmoid'))

model.compile(loss = "binary_crossentropy", optimizer = "adam",metrics = ['accuracy'])

Appendix A.2.3. Callbacks to Store the Optimal Model

checkpoint_filepath = '.\\tmp\\checkpoint'

model_checkpoint_callback = [tf.keras.callbacks.ModelCheckpoint(filepath = checkpoint_filepath, save_weights_only = True)]

Appendix A.2.4. Training and Randomly Sampling 20% Data (= 15% of all data) as Validation Dataset

history = model.fit(x_train, y_train, epochs = 250, batch_size = 3, validation_split = 0.2, callbacks = model_checkpoint_callback)

Appendix A.2.5. Test Dataset to Complete Model Training

t_loss,t_acc = model.evaluate(x_test,y_test)

Appendix A.2.6. Exporting of Actual Values

print(y_test)

Appendix A.2.7. Exporting of Predicted Values

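# Threshold the sigmoid output at 0.5 to obtain class labels (predict_classes is not available in recent Keras releases)

y_pred = (model.predict(x_test) > 0.5).astype("int32")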

print(y_pred)

Appendix A.2.8. Modeling Performance

Loss Value

plt.plot(history.history['loss'],label = 'train')

plt.plot(history.history['val_loss'],label = 'validation')

plt.ylabel('loss')

plt.xlabel('epochs')

plt.legend()

plt.show()

Accuracy

plt.plot(history.history['accuracy'],label = 'train')

plt.plot(history.history['val_accuracy'],label = 'validation')

plt.ylabel('accuracy')

plt.xlabel('epochs')

plt.legend()

plt.show()

Confusion Matrix and ROC Curve

from sklearn.metrics import confusion_matrix, roc_curve, auc

from sklearn import metrics

preds_prob = model.predict(x_test)

preds_prob = preds_prob.reshape(-1,1)

# Threshold the predicted probabilities at 0.5 to obtain class labels

preds = (preds_prob > 0.5).astype("int32")

labels = y_test

labels = labels.reshape(-1,1)

fpr, tpr, threshold = roc_curve(labels, preds_prob)

print(metrics.classification_report(labels,preds))

print(metrics.confusion_matrix(labels,preds))

roc_auc = auc(fpr,tpr)

lw = 2

plt.figure(figsize = (10,10))

plt.plot(fpr, tpr, color = 'darkorange', lw = lw, label = 'ROC curve (area = %0.4f)' % roc_auc)

plt.plot([0, 1], [0, 1], color = 'navy', lw = lw, linestyle = '--')

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Receiver operating characteristic')

plt.legend(loc = "lower right")

plt.show()

References

- Akerlof, G.A. The market for “lemons”: Quality uncertainty and the market mechanism. Q. J. Econ. 1970, 84, 488–500.

- Jensen, M.C.; Meckling, W.H. Theory of the firm: Managerial behavior, agency costs, and ownership structure. J. Financ. Econ. 1976, 3, 305–360.

- Jan, C.L. Financial information asymmetry: Using deep learning algorithms to predict financial distress. Symmetry 2021, 13, 443.

- Dechow, P.; Ge, M.W.; Larson, C.R.; Sloan, R.G. Predicting material accounting misstatements. Contemp. Account. Res. 2011, 28, 17–82.

- Cunha, P.R.; Silva, J.O.D.; Fernandes, F.C. Pesquisas sobre a lei Sarbanes-Oxley: Uma análise dos journals em língua inglesa. Enfoque Reflexão Contábil 2013, 32, 37–51.

- Martins, O.S.; Júnior, R.V. The influence of corporate governance on the mitigation of fraudulent financial reporting. Rev. Bus. Manag. 2020, 22, 65–84.

- Omidi, M.; Min, Q.; Moradinaftchali, V.; Piri, M. The efficacy of predictive methods in financial statement fraud. Discrete Dyn. Nat. Soc. 2019, 2019, 4989140.

- Yeh, C.C.; Chi, D.J.; Lin, T.Y.; Chiu, S.H. A hybrid detecting fraudulent financial statements model using rough set theory and support vector machines. Cyb. Sys. 2016, 47, 261–276.

- Jan, C.L. An effective financial statements fraud detection model for the sustainable development of financial markets: Evidence from Taiwan. Sustainability 2018, 10, 513.

- Chi, D.J.; Chu, C.C.; Chen, D. Applying support vector machine, C5.0, and CHAID to the detection of financial statements frauds. Lect. Notes Comput. Sci. 2019, 11645, 327–336.

- Chen, S. An effective going concern prediction model for the sustainability of enterprises and capital market development. Appl. Econ. 2019, 51, 3376–3388.

- Sanoran, K. Auditors’ going concern reporting accuracy during and after the global financial crisis. J. Contemp. Account. Econ. 2018, 14, 164–178.

- Report to the Nations Editorial Board. 2020 Global Study on Occupational Fraud and Abuse; Association of Certified Fraud Examiners: Austin, TX, USA, 2020.

- Healy, P.M.; Palepu, K.G. The fall of Enron. J. Econ. Perspect. 2003, 17, 3–26.

- Auditing Standard Committee. Statements on Auditing Standards (SASs) No. 43; Accounting Research and Development Foundation: Taipei, Taiwan, 2006.

- Auditing Standard Committee. Statements on Auditing Standards (SASs) No. 74; Accounting Research and Development Foundation: Taipei, Taiwan, 2020.

- Auditing Standard Committee. Statements on Auditing Standards (SASs) No. 57; Accounting Research and Development Foundation: Taipei, Taiwan, 2018.

- Auditing Standard Committee. Statements on Auditing Standards (SASs) No. 59; Accounting Research and Development Foundation: Taipei, Taiwan, 2018.

- Auditing Standard Committee. Statements on Auditing Standards (SASs) No. 58; Accounting Research and Development Foundation: Taipei, Taiwan, 2018.

- Khan, M.A.; Vivek, V.; Nabi, M.K.; Khojah, M.; Tahir, M. Students’ perception towards E-learning during COVID-19 pandemic in India: An empirical study. Sustainability 2021, 13, 57.

- Hwang, J.; Kim, H. The effects of expected benefits on image, desire, and behavioral intentions in the field of drone food delivery services after the outbreak of COVID-19. Sustainability 2021, 13, 117.

- Cai, G.; Hong, Y.; Xu, L.; Gao, W.; Wang, K.; Chi, X. An evaluation of green ryokans through a tourism accommodation survey and customer-satisfaction-related CASBEE–IPA after COVID-19 pandemic. Sustainability 2021, 13, 145.

- Radulescu, C.V.; Ladaru, G.-R.; Burlacu, S.; Constantin, F.; Ioanas, C.; Petre, I.L. Impact of the COVID-19 pandemic on the Romanian labor market. Sustainability 2021, 13, 271.

- Sung, Y.-A.; Kim, K.-W.; Kwon, H.-J. Big data analysis of Korean travelers’ behavior in the post-COVID-19 era. Sustainability 2021, 13, 310.

- Popescu, C.R.G.; Popescu, G.N. An exploratory study based on a questionnaire concerning green and sustainable finance, corporate social responsibility, and performance: Evidence from the Romanian business environment. J. Risk Financ. Manag. 2019, 12, 162.

- Humpherys, S.L.; Moffitt, K.C.; Burns, M.B.; Burgoon, J.K.; Felix, W.F. Identification of fraudulent financial statements using linguistic credibility analysis. Decis. Support Syst. 2011, 50, 585–594.

- Kamarudin, K.A.; Ismail, W.A.W.; Mustapha, W.A.H.W. Aggressive financial reporting and corporate fraud. Procedia Soc. Behav. Sci. 2012, 65, 638–643.

- Goo, Y.J.; Chi, D.J.; Shen, Z.D. Improving the prediction of going concern of Taiwanese listed companies using a hybrid of LASSO with data mining techniques. SpringerPlus 2016, 5, 539.

- Dorminey, J.; Fleming, A.S.; Kranacher, M.J.; Riley, R.A. The evolution of fraud theory. Issues Account. Educ. 2012, 27, 555–579.

- Chui, L.; Pike, B. Auditors’ responsibility for fraud detection: New wine in old bottles? J. Forensic Investig. Account. 2013, 56, 204–233.

- Papik, M.; Papikova, L. Detection models for unintentional financial restatements. J. Bus. Econ. Manag. 2020, 21, 64–86.

- Gepp, A.; Kumar, K.; Bhattacharya, S. Lifting the numbers game: Identifying key input variables and a best-performing model to detect financial statement fraud. Account. Financ. 2020, 1–38.

- Yao, J.; Zhang, J.; Wang, L. A financial statement fraud detection model based on hybrid data mining methods. In Proceedings of the 2018 International Conference on Artificial Intelligence and Big Data, Chengdu, China, 26–28 May 2018; pp. 57–61.

- Hamal, S.; Senvar, O. Comparing performances and effectiveness of machine learning classifiers in detecting financial accounting fraud for Turkish SMEs. Int. J. Comput. Intell. Syst. 2021, 14, 769–782.

- Craja, P.; Kim, A.; Lessmann, S. Deep learning for detecting financial statement fraud. Decis. Support Syst. 2020, 139, 113421.

- Gupta, S.; Mehta, S.K. Data mining-based financial statement fraud detection: Systematic literature review and meta-analysis to estimate data sample mapping of fraudulent companies against non-fraudulent companies. Glob. Bus. Rev. 2021, 1–24.

- Chen, S.; Goo, Y.J.; Shen, Z.D. A hybrid approach of stepwise regression, logistic regression, support vector machine, and decision tree for forecasting fraudulent financial statements. Sci. World J. 2014, 2014, 968712.

- Chen, S. Detection of fraudulent financial statements using the hybrid data mining approach. SpringerPlus 2016, 5, 89.

- Karpoff, J.M.; Koester, A.; Lee, D.S.; Martin, G.S. Proxies and databases in financial misconduct research. Account. Rev. 2017, 92, 129–163.

- Lin, C.; Chiu, A.; Huang, S.Y.; Yen, D.C. Detecting the financial statement fraud: The analysis of the differences between data mining techniques and experts’ judgments. Knowl. Based Syst. 2015, 89, 459–470.

- Seemakurthi, P.; Zhang, S.; Qi, Y. Detection of fraudulent financial reports with machine learning techniques. In Proceedings of the 2015 Systems and Information Engineering Design Symposium, Taipei, Taiwan, 9–11 April 2015; pp. 358–361.

- Sun, Y.; Wu, Y.; Xu, Y. Using an Ensemble LSTM Model for Financial Statement Fraud Detection. In Proceedings of the 24th Pacific Asia Conference on Information Systems, PACIS 2020 Proceedings, Dubai, UAE, 22–24 June 2020; p. 144.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Tsagkanos, G.A. Stock market development and income inequality. J. Econ. Stud. 2017, 44, 87–98.

- Vartholomatou, K.; Pendaraki, K.; Tsagkanos, A. Corporate bonds, exchange rates and business strategy. Int. J. Bank. Account. Financ. 2021, 12, 97–117.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).