Abstract

The response of most educational institutions to the health crisis triggered by the COVID-19 pandemic was the adoption of emergency remote teaching and assessment. This paper aims to evaluate students’ satisfaction with assessment activities in a Romanian university and to identify elements of sustainable assessment for the post-pandemic period. A collaborative research strategy was developed in which students were invited as co-researchers, both for data collection, by distributing an online questionnaire, and for the interpretation of the results in a focus group. Factor analysis of the survey responses extracted two pillars underlying students’ appraisal of remote assessment activities: “knowledge” and “leisure and stress”. The focus group discussion showed that the research helped participants process and reason about their experience with remote assessment activities in the summer of 2020. Students missed their academic rituals and interactions with peers and teachers. Despite their enthusiasm for technological innovation and the benefits brought by computer-assisted assessment, students are inclined towards preserving human evaluators, preferably their familiar teachers, in educational settings resembling pre-pandemic academic life. A sustainable, resilient model of education needs to retain the features that students, as examinees, identify as acceptable.

1. Introduction

The public health crisis triggered by the COVID-19 pandemic dramatically changed all sectors of social life. Following the lead of the World Health Organization (WHO), some governments implemented emergency measures such as lockdowns, social distancing, the wearing of facemasks, and self-isolation of people exposed to possible infection, and recommended online solutions for all activities that could be carried out remotely [1]. The educational system was deeply challenged: according to UNESCO statistics, the lockdown in early 2020 sent home over 1.37 billion students at all levels of education [2]. Moving instruction online had been discussed for some time before the pandemic and was considered beneficial because of the flexibility of teaching and learning anywhere, anytime. The speed of the shift in early 2020 was nevertheless challenging for many actors in traditional, face-to-face educational environments, whose ability to overcome the challenges of the pandemic depended not only on the availability of technological infrastructure, but also on their willingness and readiness to embrace digital solutions. At that stage in the management of the health crisis, WHO, UNICEF, and the International Federation of Red Cross and Red Crescent Societies (IFRC) issued guidance on the prevention and control of COVID-19 in schools, recommending a risk-based approach to organizing educational activities [3]. Policymakers, health specialists, educational stakeholders, and the media shared the concern that the disruption of educational processes would deepen the gap between high-income countries and low- and lower-middle-income countries.
The ability of countries to pursue sustainability goals and work towards reducing disparities was severely challenged. For the education sector alone (SDG 4: ensure inclusive and equitable quality education and promote lifelong learning opportunities for all) [4], the estimated annual financing gap for achieving SDG 4 in low- and lower-middle-income countries between 2020 and 2030 had risen to US$148 billion [5]. Before the pandemic, technology enthusiasts called upon education to vigorously adopt the Internet and media-rich applications instead of familiar, conventional classroom-based solutions, so that students who cannot attend classes could receive the same educational opportunities as students anchored in face-to-face learning environments [6]. As studies and reports have highlighted on numerous occasions, the lockdown in spring 2020 showed that online education and remote learning still face numerous challenges, varying in intensity and nature from one region of the world to another [5].

1.1. Impact of COVID-19 on Higher Education

The WHO declared the COVID-19 outbreak a Public Health Emergency of International Concern on 30 January 2020, and a pandemic on 11 March 2020. In April 2020, UNICEF expressed concern regarding the integrity of the academic year, raising questions about the fate of schooling, educational outcomes, and exams. For instance, of 84 surveyed countries, 58 had postponed or rescheduled exams, 23 had introduced alternative methods such as online or home-based testing, 22 had maintained exams, and in 11 exams had been cancelled altogether (these categories are not mutually exclusive) [7]. In universities, all face-to-face teaching and traditional examinations were initially suspended, and solutions were sought for graduation exams, so as to help students graduate on time with a quality-assured degree without posing health risks to students or their examiners [7]. The World Bank, which closely monitors education as a driver of development and social sustainability, also expressed the concern that “a failure to sustain effective tertiary systems can lead to perilous social upheavals, as youth fall outside the education system, unable to engage in active learning and uncertain about the future of their education and prospects” [8]. The obvious choice for universities was to go online, especially since online education, flipped classroom activities, and e-learning had been debated and used for quite some time [9]. However, as numerous studies have highlighted, many students enrolled in traditional (face-to-face) programs turned out to be inexperienced online learners and had to deal with serious challenges, both in attending courses and course-related activities and, especially, in assessment activities [8,10,11,12,13,14,15]. As Schiavio, Biasutti, and Antonini Philippe point out, students and teachers may face problems when changes between settings occur suddenly, and a creative educational approach is then required [16].
Both while adapting to these settings and in retrospect, students encounter and recall the difficulties as well as the positive outcomes of their experiences in exceptional educational contexts, such as those created by the COVID-19 pandemic [16].

More than a year after COVID-19 was declared a pandemic, educators, communities of practice, and researchers still hold mixed views on the resilience of education systems and of their response to the crisis. The initial phase of “rapid transition to remote teaching and learning”, as Barbour and colleagues tentatively labeled it, was followed by the hasty development of tools for course navigation and by a focus on the quality of course delivery and on contingency planning [17]. A sense of uncertainty prevailed at the end of the 2019–2020 academic year, as the continued turmoil made it difficult to anticipate whether instruction should stay ready for a return to face-to-face education or whether decisions should be rooted in the realities of the pandemic. The 2020–2021 academic year raised hopes for the emergence of the new normal [17], as the spread of COVID-19 appeared to decelerate and be contained by interventions. Educational institutions are returning at least partially to face-to-face activities, as teachers and students alike have accumulated experience in using digital tools to foster knowledge acquisition and production. While the impact of COVID-19 on education is predominantly described in terms of disruption and setbacks [6,7,8,11,12,15], attempts to reframe the situation on a more optimistic note can also be traced. The pandemic is presented as an unusual driver of modernization, in the sense of accelerating digitalization [18,19], especially because investment in technological infrastructure and teachers’ experience of delivering classes in digital formats helped overcome some of the barriers to the large-scale introduction of digital technologies in educational settings.
Against this background, the present research aims to gauge students’ satisfaction with a single dimension of academic life during the COVID-19 pandemic, namely assessment activities. In a student-centered educational paradigm, students’ voices can help clarify examinees’ perceptions of assessment activities and identify features worth carrying into post-pandemic pedagogy, so as to make learner assessment more sustainable.

1.2. Literature Review

In a higher education context, assessment refers to judging students’ performance by awarding a score or mark that reflects the quality and extent of their achievement, and by providing qualitative feedback [20]. It is viewed as a central feature of education and is often presented as a pivotal element in modernizing education systems, since it affects how teaching and learning take place [9,21,22,23]. Assessment serves multiple purposes. For students, it is one of the main sources of feedback on their performance. It is an instrument of measurement, evaluating student knowledge, abilities, and/or skills. It also serves standardization purposes, since grades are used to signal progress. Lastly, assessment is a tool for certification, enabling potential employers to understand an individual’s academic achievements [9].

The learning and teaching literature urges scholars to reflect on assessment, to innovate and root curricular design in solid, realistic, and evolving assessment practices [24,25], to develop communities of practice [25], and to move beyond conventional assessment procedures [21]. A special place in research and practice on assessment is held by David Boud, whose work on sustainable assessment is widely cited in the educational literature [26,27]. He drew attention to the fact that assessment practices in higher education institutions often “tend not to equip students well for the processes of effective learning in a learning society” [26] and defined the notion of sustainable assessment. In his words, higher education assessment must be sustainable, in that it “meets the needs of the present and prepares students to meet their future learning needs” [26], producing learners who are better able to cope with the changes they will experience in their working lives [27]. Adaptability is critical for future professionals, who must quickly grasp new trends in the labor market and maintain their employability in a world that is changing at a rapid pace [22,28].

Innovation is not always easy to implement: student satisfaction with novel forms of assessment, such as portfolios, reflective papers, self- and peer assessment, computer-based assessment, take-home exams, or open-book exams, depends largely on the capacity of educators to provide sufficient detail, preparation, and stress-reducing activities ahead of the assessment [29,30]. In addition, studies on technology and assessment show that the perspective primarily represented has been that of the test maker rather than the test taker [31]. Evidence indicates that computer adaptive testing (CAT) improves the quality of assessment [31], although there is still a need to raise stakeholders’ awareness of how innovative tools can motivate and recognize authentic learning, to enhance teaching and learning practices by adapting digital assessment models to learners’ needs, to develop open-access platforms with e-assessment tools, and to conduct research on the effectiveness of innovative assessment practices [22].

The outbreak of the COVID-19 pandemic placed enormous pressure on higher education systems worldwide. Although many universities, following the trend towards digitalization, had developed e-learning capabilities and gained experience in distance education, online course delivery, and remote examination, the sudden transition to online education took them by surprise. There is an inclination to view the emergency measures undertaken by universities to deliver educational activities online as a grand experiment leading to the rapid digitalization of education, but this view ignores the temporary and somewhat hasty nature of the process [32]. The educational literature abounds in advice to plan the environment and tools for learning and assessment carefully from the outset [33,34], urging teachers to incorporate all assessment criteria in the curriculum and to make this information visible to students at the beginning of each course [20]. The specific conditions of the COVID-19 pandemic made it impossible to organize assessment activities as presented in the curriculum, so universities had to adopt emergency remote assessment plans, building upon lessons learned from e-learning practices.

In Romania, the first attempts to implement distance learning platforms date back to 1995, and by 2007, 58% of Romanian higher education institutions reported using e-learning solutions in their current activities [35]. Some of them made use of the educational offers provided by Microsoft and Google, while others chose to develop online courses on a Moodle platform or to create their own virtual campus [35]. This experience and infrastructure proved useful during the outbreak of the pandemic, when all educational activities had to continue remotely. For the K–12 system, the Romanian Ministry of Education made specific provisions. Universities, owing to their academic freedom, could tailor their response to the pandemic according to their infrastructure, expertise, resources, and leadership, while still implementing the general rules of social distancing, health-related measures, and remote work [36].

What happened in spring 2020 was a temporary shift of instructional delivery to an alternate mode due to crisis circumstances, better described as emergency remote teaching (ERT) than as online education [32]. ERT involves fully remote teaching solutions for instruction or education (including assessment) that would otherwise be delivered face-to-face or as blended or hybrid courses, and that will return to the face-to-face format once the crisis or emergency has abated [32]. First-response studies on ERT showed that “assessment was deprioritized in initial planning” and that “teachers saw assessment expectations as unstable or unfair during a crisis” [37]. Furthermore, “assessment activities were constrained by technical, time-related, and even regulatory factors” [38], so universities had to make the necessary adjustments [10] according to their experience, local conditions, and technological capabilities [39]. UNESCO warns that mechanisms are needed to strengthen the resilience of higher education institutions in the face of future crises, with special attention to developing the technical, technological, and pedagogical capacities to use non-face-to-face methodologies appropriately, as well as the ability to monitor students, particularly the most vulnerable, in all types of educational activities, including assessment [11,40]. Researchers inclined to see the silver lining in the ERT experience state that universities will most probably carry valuable lessons into post-pandemic practice, leading to the modernization of educational and assessment practices [41]. A 2020 evaluation of the online education sector showed that it accounts for roughly 2% of the global higher education sector (worth USD 2.2 trillion). It is likely that the “online higher education sector is ready for the much-needed disruption” [42] and for capitalizing on the digital resilience built during the pandemic.
Digitally literate students declare that they are ready to embrace a model that values their skills and ease in navigating online material, to move beyond the status of passive recipients of knowledge transmitted by instructors, and to engage actively in inquiry and identify steps that can help them reach their intellectual potential and academic expectations [43].

Most of the literature on the response of higher education institutions to the COVID-19 pandemic builds upon the reflections and/or opinions of policymakers [2,3,11,12,40] or teachers [10,13,14,19,24,44]. Student voices are less present, although students, as educational partners, are the ones assessed during such unusual times and have to manage technological, subjective, and educational challenges [45]. Students enrolled in face-to-face programs faced the difficult task of adapting to a new educational environment. According to a study on the e-learning education system in Romania during the COVID-19 pandemic, only 9% of students had previous experience with e-learning [36].

This research aims to contribute to the literature on emergency remote assessment during the COVID-19 crisis with evidence gathered from students themselves. Our study focuses on student perceptions of assessment experiences at Politehnica University Timisoara, Romania, endeavoring to identify features of sustainable assessment models to be implemented as early as the 2020–2021 academic year, but more vigorously in the post-pandemic period, when lessons learned from the emergency response to the crisis can best be put to use and built into resilience plans. Politehnica University Timisoara is one of the 12 universities in Romania rated as advanced research and education universities by the national Ministry of Education and Technology [46]. During the peak of the pandemic, it rapidly expanded its existing personalized virtual campus, providing tutorials and support for both teachers and students [36,47]. The research does not seek an appraisal of the technical tools available to students during the lockdown, but rather their perceptions of one segment of the learning process, the end-term exams, which served as feedback on their ability to absorb new knowledge and to demonstrate skills and abilities in solving academic tasks in the virtual environment. In doing so, it aims to contribute to the body of literature on students’ evaluation of the sudden shift in pedagogical offerings caused by the COVID-19 health emergency [16] and to glimpse into the future, anticipating the acceptability of change in the education system in the “new normal” [17], at least in assessment activities.

The objectives set forth by the research team are:

- Research objective 1 (RO1): Students’ overall appraisal of the end-term assessments during the COVID-19 pandemic;

- Research objective 2 (RO2): Mapping students’ perception of the exams, along intrinsic or extrinsic parameters of the assessments;

- Research objective 3 (RO3): Identifying the main factors that influenced students’ answers to the parametric questions;

- Research objective 4 (RO4): Determining students’ appraisal of future trends in evaluation/assessment, based on their experiences during the COVID-19 pandemic.

2. Materials and Methods

Our approach is collaborative: students were invited as co-researchers, which gave them the opportunity to offer an insider perspective on the topic under scrutiny and to give voice, in the interpretation stage, to the community or group being researched [48] (p. 599). Together, the senior researchers and participants worked towards conclusions, engaging in dialogue and offering feedback.

Additionally, involving participants as co-researchers gives researchers the opportunity to draw on participants’ experiences and knowledge to learn about and discuss the research. Students as co-researchers helped equalize the power relations between teacher and student during the research, bringing authenticity and insider perspectives to the process. The overall goal of the research was not only to benefit the researchers’ community of practice, but also to help participants develop a greater understanding of their social situation, process the crisis that turned them into distance learners, and see research techniques and challenges in action. With this aim in mind, we conducted a study in which the subjects of the research were involved in collecting data and discussing/interpreting results. In this study, students’ views and ideas were not replaced by theoretical considerations, but carefully transcribed and reported in their own wording [49]. This dialogic perspective goes beyond identifying student voices by collecting data from surveys and/or focus groups and then speaking about or for students as data sources. Instead, the present research engaged students as fellow researchers, enquirers, and makers of meaning [50].

Depending on their educational philosophy [51,52] and exercising academic freedom, teachers could organize assessment activities according to the specifics of the subject taught, the availability of technology, their personal experience, and the university’s guidelines for carrying out exams in a remote educational setting [53]. For the purpose of this study, the authors did not distinguish between the different educational philosophies or practices of the teachers, but looked for the assessment features perceived by students in the online environment in the first half of 2020.

Data were collected between 15 and 30 July 2020, during the specialized practical training of second-year students in Communication and Public Relations at the Faculty of Communication Sciences, Politehnica University Timisoara.

The research strategy was based on the following scenario:

- Building research instruments: Questionnaire and guide for focus group discussions;

- Online distribution of the questionnaire to students in Politehnica University Timisoara, Romania, enrolled in traditional (face-to-face) programs;

- Building the sample of respondents and a database of student opinions;

- Coding of responses obtained for an open-ended question;

- Data analysis with SPSS, version 20.0;

- Factor analysis of the results;

- Discussion of the results in a focus group of students who took part in the study and in data interpretation.

A total of 15 students participated voluntarily in the research. As investigators, they had to distribute the link to the online questionnaire, hosted on ISondaje.ro, a Romanian online platform for data gathering used in sociological research. The questionnaire contained four opinion questions and three factual questions (Appendix A). One of the opinion items was an open-ended question asking for word associations. The questionnaire was not formally pre-tested, but the senior researchers administered it to a pilot sample of 20 students to estimate the response time, to ensure that future respondents would understand the wording of the questions, and to anticipate whether data collection was feasible.

Student investigators were given the responsibility of distributing the link to assigned faculties via any online channel they chose (e-mail to intranet groups or student leagues, Facebook, personal connections, etc.), according to their experience and preferences [54]. Furthermore, they were invited to participate in a focus group to comment on the survey results and to reflect on the research skills developed while distributing the questionnaire and interpreting the results. The invitation was extended to all co-researchers, but only 9 students took part in the focus group discussion, owing to constraints on their availability to meet via Zoom and to synchronize their calendars with the schedule proposed by the senior researchers. For the survey, respondents’ anonymity was ensured, and students could opt out of the study.

The matrix for the research strategy is presented in Table 1 below.

Table 1.

Matrix for the collaborative research strategy.

The sample is a convenience sample: 345 students responded to the survey, of whom 124 were male (35.9%) and 221 were female (64.1%) (Table 2).

Table 2.

Distribution by gender.

An attempt was made to reach all 10 faculties of the university, but success in eliciting responses depended on the student researchers’ capacity to make the questionnaire visible and attractive, as shown in Table 3 below:

Table 3.

Distribution of respondents by faculty.

Responses from one faculty, Mechanical Engineering, could not be validated because some respondents abandoned the questionnaire or chose not to take part in the study. The distribution of students by study year is balanced, as presented in Table 4 below:

Table 4.

Distribution of respondents by study year.

Co-researchers could draw lessons on the efficiency of online questionnaire distribution, on response rates, and on respondents’ readiness to complete a questionnaire, thus deepening their understanding of social science research and practicing their research skills in view of the bachelor’s thesis they were to complete the following year.

3. Results

The survey results are presented below, together with their interpretation in the focus group and excerpts from the most representative answers. Data processing and visualization were carried out, and conclusions drawn, by the senior researchers of this study. The senior researchers also moderated the focus group, ensuring balanced and fair participation of all those involved. All the results were presented to the focus group for examination and comment (Appendix B). The discussions were recorded, and the senior researchers extracted instances of participants’ appraisal of the research results.

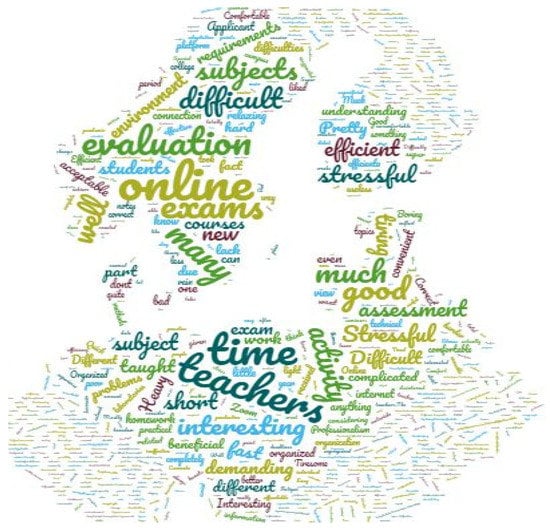

To obtain, in a trustworthy manner, students’ overall appraisal of the assessment period during the COVID-19 pandemic (RO1), respondents were asked to describe in three words or three sentences the assessment activities undertaken online during the pandemic; word association items are used in research to jumpstart reflection and critical thinking [55,56]. The free-form nature of the task led to a large variety of ideas, with more than 1000 variants in the collected answers. The senior researchers coded the responses and generated a word cloud, sizing each concept according to how often it occurred in the responses [56]. The original cloud was in Romanian; an English version of the visual was generated for publication. Studies show that in online inquiry, students are more proficient at inferring meaning from visual representations that avoid information overload while helping them focus on relevant ideas. Xie and Lin argue that word clouds are useful for representing student knowledge for formative and summative assessment purposes and provide an excellent starting point for the analysis and coding of qualitative data, especially on the Internet [56,57].

The word cloud generated from the survey responses to Q1 was presented in the focus group as an icebreaker for interpreting the research results.

The most common words highlighted in the cloud include: online (26 occurrences), time (23), teachers (19), evaluations (14), exams (14), many (13), good (12), much (12), activity (11), difficult (11), subjects (11), well (11), interesting (10), stressful (9), evaluation (8), and effective (8) (Figure 1).

Figure 1.

Students’ perception of the assessment activities during the pandemic.
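The counting step behind a frequency-based word cloud such as Figure 1 can be sketched as follows. This is a minimal illustration only: the authors do not specify their coding rules or visualization tool, so the tokenization (lower-cased letter runs, diacritics included), the optional stop-word list, and the linear mapping from frequency to font size are all assumptions, and the sample responses are hypothetical, not survey data.

```python
import re
from collections import Counter

# Hypothetical answers standing in for the Q1 word associations;
# these are NOT the survey data reported in the paper.
responses = [
    "online, stressful, time",
    "good teachers, online exams",
    "difficult, much time",
]


def word_frequencies(texts, stopwords=frozenset()):
    """Count lower-cased word tokens across all responses, skipping stop words.

    [^\\W\\d_]+ matches runs of Unicode letters, so Romanian diacritics
    such as ă or ș are kept inside words.
    """
    counts = Counter()
    for text in texts:
        for token in re.findall(r"[^\W\d_]+", text.lower()):
            if token not in stopwords:
                counts[token] += 1
    return counts


def font_sizes(counts, min_size=10.0, max_size=48.0):
    """Scale each word's count linearly into [min_size, max_size] (assumed sizing rule)."""
    if not counts:
        return {}
    lo, hi = min(counts.values()), max(counts.values())
    span = (hi - lo) or 1  # avoid division by zero when all counts are equal
    return {
        word: min_size + (count - lo) * (max_size - min_size) / span
        for word, count in counts.items()
    }


freqs = word_frequencies(responses)
print(freqs.most_common(2))  # [('online', 2), ('time', 2)]
print(font_sizes(freqs)["online"])  # 48.0
```

Because the sketch does no lemmatization, inflected forms such as "evaluation" and "evaluations" are counted separately, which is consistent with the separate counts reported above.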

The results indicate a mixed perception that has not yet settled into a clear frame. Students had not yet cognitively processed the educational activities carried out during emergency remote teaching and end-term assessment. This mixed perception can be explained by the unexpected character of the educational activity, which shifted abruptly online without warning or preparation. The courses (along with seminars, labs, and practical activities) went online and, for the first time, all assessment activities were transferred from face-to-face practice to the virtual environment. All stakeholders were confused, since the duration of emergency remote education was impossible to predict. Students faced the challenge of adapting to new online tools and to an environment uncharted from the point of view of academic life. One of the students in the focus group suggested that respondents displayed frustration both with the experience of sudden online education and with the lockdown period itself.

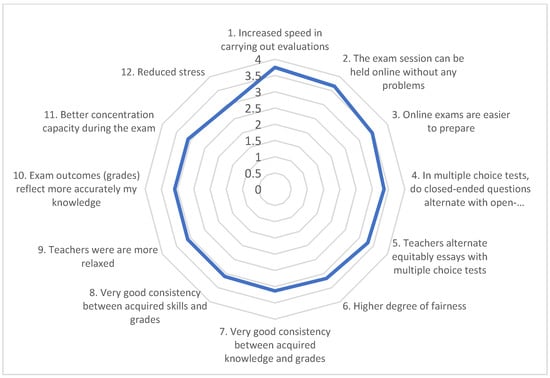

To map students’ perception of the exams along intrinsic or extrinsic parameters of the assessments (RO2), respondents were asked to appraise the assessment activities in the pandemic period on a 5-point Likert scale (from total disagreement to total agreement), with respect to 12 parameters (Appendix A). The mean scores, in descending order, are shown in Figure 2 below.

Figure 2.

Students’ appraisal of the features identified for assessment activities.

3.1. Quantitative Analysis of the Emergency Remote Assessment Parameters

The results are rather positive. Only one parameter, the stress felt during the exam (m = 2.93), has a mean below the midpoint of the scale. This suggests that, even in the online environment, end-term exams trigger, beyond their value as assessment, stress due to fear of failure or of an unwanted grade. For the remaining parameters, the means lie above the midpoint of the scale: faster conduct of evaluations (m = 3.75); the exam session can be held online without problems (m = 3.66); online exams are easier to prepare for (m = 3.46); when multiple-choice tests are used, closed-ended and open-ended questions alternate (m = 3.36); teachers fairly alternate essays with multiple-choice tests (m = 3.30); greater accuracy (m = 3.17); very good consistency between grades obtained and knowledge acquired (m = 3.13); very good consistency between grades obtained and skills acquired (m = 3.10); teachers were more relaxed (m = 3.10); exam results more accurately reflect my knowledge (m = 3.09); and better concentration during exams (m = 3.09). These scores indicate that respondents appraised the assessment carried out during this period positively overall, considering online assessment to be good, with a tendency towards very good; all means except the one for stress lie between the scale midpoint (3) and 4.
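The descriptive step used in this section, computing a mean per item, ranking the items in descending order as in Figure 2, and flagging any mean below the scale midpoint, can be sketched as below. The study itself used SPSS; this Python sketch is only illustrative, assumes responses coded 1 (total disagreement) to 5 (total agreement), and uses hypothetical item labels and scores, none of which are survey data.

```python
from statistics import mean

SCALE_MIDPOINT = 3  # midpoint of a 1-5 Likert scale

# Hypothetical Likert scores (1 = total disagreement, 5 = total agreement);
# the item names are illustrative labels, not the questionnaire wording.
responses = {
    "faster_evaluation": [4, 4, 3, 4],
    "exam_stress": [3, 2, 3, 3],
    "online_session_ok": [4, 3, 4, 4],
}


def rank_items(data):
    """Return (item, mean score) pairs sorted from highest to lowest mean."""
    means = {item: mean(scores) for item, scores in data.items()}
    # sorted() is stable, so items with equal means keep their input order.
    return sorted(means.items(), key=lambda pair: pair[1], reverse=True)


def below_midpoint(ranked, midpoint=SCALE_MIDPOINT):
    """List the items whose mean falls below the scale midpoint."""
    return [item for item, m in ranked if m < midpoint]


ranked = rank_items(responses)
for item, m in ranked:
    print(f"{item}: m = {m:.2f}")
print(below_midpoint(ranked))  # ['exam_stress']
```

A mean below the midpoint only says that respondents, on average, leaned towards disagreeing with the statement as worded in the questionnaire.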

3.2. Qualitative Analysis of the Assessment Parameters, in the Focus Group

Students were ambivalent when evaluating emergency remote assessment. In the focus group, they nuanced the opinions expressed by offering additional explanations: “Online exams have been much more simplified… to say, much easier than if we were in college”; “it was something different and it caught our attention”; “it’s greater comfort to work from home and not to commute so much”; and “it was simpler, easier because we were alone. There was no teacher sitting next to us, face to face, to stress us out”. In other words, the home environment brought many students more comfort and, at the same time, reduced stress by removing the teacher’s authority from close proximity. It is possible that teachers strove to choose exam items/questions that were easier to address, to prevent social stress from escalating and to diminish exam-related stress [6,13,14,15,38,39], but the present research did not explore this aspect further.

3.3. Quantitative Analysis of the Extrinsic Parameters Pertaining to the Emergency Remote Assessment

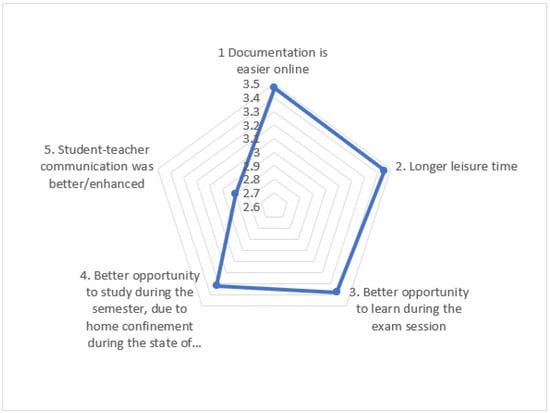

The appraisal of the end-term assessment during the pandemic was also carried out from the perspective of the following side effects related to assessment activities (Figure 3):

Figure 3.

Associated features with the assessment activities during the pandemic period.

- Documentation is easier online (m = 3.47);

- Leisure time is longer (m = 3.45);

- We have the ability to learn better during the end-term exam session (m = 3.38);

- Better opportunity to study during the semester, due to home confinement during the state of emergency lockdown (m = 3.32);

- Student-teacher communication was better/enhanced (m = 2.90).

The responses indicate that students were dissatisfied with their communication with teachers, the parameter recording the lowest average (below 3, the midpoint of the measurement scale). All the other aspects are rated positively.

3.4. Qualitative Analysis of the Extrinsic Parameters Pertaining to Emergency Remote Assessment

Students in the focus group interpreted the results and offered several valuable comments. For the parameter indicating that documentation is easier online than in pre-pandemic conditions (m = 3.47), the students’ interpretation was that “anyway we inform ourselves more from online sources than from books from the library”. The obligation to work online forced them to expand their search skills and refine their ability to identify useful documentation on the Internet.

Regarding longer leisure time (m = 3.45), students explained: “(we had more time) because we stayed indoors and we didn’t have to go to college, or waste any more time”. However, this interpretation needs refining, and some opinions asserted in the focus group are worthy of attention. The students say that “the time we used to focus on projects and homework was longer, because it took more to find information” or that the homework tasks “were also a little more complicated, because we had to do the work from home. We weren’t used to work from the computer, from home…” Staying at home, students did not benefit from the advice of teachers in preparing projects, and the apparent leisure time gained by staying indoors and not commuting to college was redistributed to homework that took longer to complete. In addition, during the state of emergency, when travel was limited for all, time management could no longer follow habitual patterns. Students admitted their concern in phrases such as the following: “There was a pandemic and we were stressed on one hand that we didn’t know how long it was going to take, and on the other, that we could not get out to fresh air, to hang out with friends and colleagues”. Another student said that “it really was a period…well, of anxiety and the situation in society at the time played a very important role, psychologically, for any student. Frankly, for me, it was awfully hard. I can’t say I was afraid of the virus itself, the restriction of the freedom of movement bothered me”. For others, the course of life was temporarily disrupted: “All my plans have been messed up. It was a very big disappointment, then, at the time, because I had to give up a lot of projects that I worked on and that I was very involved in up to that point and boom… All of a sudden, they’re gone. It was harder to assimilate, so to speak, to accept”.
Therefore, the extra leisure time was only apparently more extensive. Part of the additional time was used up by school-related tasks. Additionally, time was subjectively compressed by social anxiety and by the disappointment of being unable to pursue certain ongoing projects. The responses indicate a generational appraisal of the situation. Both adaptive and maladaptive forms of relationship between students and teachers were registered [58], while peer interactions were restructured in unpredicted ways.

Another important aspect to discuss is student-teacher communication (m = 2.90). Although one of the students responded that being “online brought us so close to the teachers, that is, I felt it so”, the vast majority complained of a decrease in educational communication. Students participating in the interpretation of the results consider that in the case of online courses “we were not always attentive or very involved… because we were able to turn off the camera and everything, and do other activities” or “a lot of communication was carried out through e-mails and messages…”, and “the teachers were very busy, they probably had a lot of emails and less time to check correspondence and then the answers came later”. Other points of view are also symptomatic of students’ appraisal of this parameter: “It’s altogether different when we talk face to face versus online”; “it was a big difference… when it went online”; “well, I don’t know, I… I’d rather come to college and talk face-to-face than now, when we communicate via e-mails or online classes/zoom”; or “there are such problems as a bad Internet connection or teachers do not get along with some students”; “you also have the reluctance to interrupt the teacher. Isn’t it annoying to interrupt everyone and ask what you lost?” Classes scheduled for the second semester of the academic year 2019–2020 started in Romania directly in the pandemic period, with some new teachers whom students had never met in person. Occasionally this led to awkward situations and to numerous hiccups in establishing a relationship with teachers, who were not always able to grasp the meaning of students’ messages. There was also embarrassment caused by the fact that students did not always have access to computers or other electronic devices and a working Internet connection. Another comment offered by one of the students was nostalgic: “When coming to college and the course was over, you could stay and ask the teacher. That’s right…” Another technical disruption identified was the “havoc that occurs when 3–4 people activate their microphone simultaneously, so it’s total chaos. I don’t think anyone can handle this. It’s because of the lack of live visual contact, I think”, said one of the students in the results interpretation group. Another student believes that, “We haven’t even seen each other. Not even the expressions. Very few people had an opinion (online). Or we did, but we didn’t have the guts to say we did. As others have said it, difficulties with the internet, and the microphone, and so on. And with understanding all that… At any time, we could interrupt the teacher in the middle of a course and ask questions, but online many had reservations to do so”.

Face-to-face educational communication has its own set of rules and rituals, acquired over the years of schooling. Shifting to online schooling required adapting and creating new rules that students and teachers had to build together, as also highlighted by Antonini Philippe, Schiavio, and Biasutti, who present the case of the teacher-student dyad [58]. There was little time for such co-creation. However, students were not the only ones who encountered difficulties: teachers reported uneasiness linked to their ability to use sometimes unfamiliar teaching tools, as well as to the burden of responsibility attached to their social position. On top of all this, teachers had to deal with their own pandemic-related stress [39].

3.5. Quantitative Analysis of the Factors Influencing Students’ Responses

To identify the main factors that influenced students’ answers to the parametric questions (RO3), an exploratory factor analysis was carried out. Bartlett’s test of sphericity yields a chi-square value of 2429.143 with 91 degrees of freedom and an associated probability p < 0.01, indicating that the correlation matrix differs significantly from an identity matrix and that the data set is therefore suitable for exploratory factor analysis (Table 5). The responses to the questionnaire were then analyzed to identify the main factors influencing students’ perceptions of the online assessment. Table 6 shows the Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy, whose value of 0.920 is close to 1, indicating very good sampling adequacy. Two factors were extracted from the 14 variables. Following the Kaiser criterion, only factors with eigenvalues greater than 1 were retained, i.e., factors with more explanatory power than a single standardized variable.
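As a sketch of how the two suitability checks reported above are usually computed, the Python code below implements Bartlett’s test of sphericity, χ² = −(n − 1 − (2p + 5)/6)·ln|R| with p(p − 1)/2 degrees of freedom, and the overall KMO measure from a correlation matrix R. It is an illustration under stated assumptions, not the software used in the study: the function names are hypothetical, the sample size n is passed in because it is not restated in this section, and the demonstration uses simulated responses rather than the survey data. For p = 14 items the degrees of freedom come out as 91, matching the reported value.

```python
import numpy as np


def bartlett_sphericity(R: np.ndarray, n: int) -> tuple[float, int]:
    """Bartlett's test of sphericity for a p x p correlation matrix R (n respondents).

    Returns the chi-square statistic and its degrees of freedom, p(p - 1) / 2.
    """
    p = R.shape[0]
    # slogdet is numerically safer than np.log(np.linalg.det(R)).
    _, logdet = np.linalg.slogdet(R)
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return float(chi2), df


def kmo(R: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    S = np.linalg.inv(R)
    d = np.sqrt(np.diag(S))
    # Partial correlations (anti-image) derived from the inverse correlation matrix.
    partial = -S / np.outer(d, d)
    off_diag = ~np.eye(R.shape[0], dtype=bool)
    r2 = np.sum(R[off_diag] ** 2)
    a2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + a2))


# Demonstration on SIMULATED data: 300 hypothetical respondents, 14 items
# driven by two latent factors (7 items each) plus random noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 2))
pattern = np.zeros((14, 2))
pattern[:7, 0] = 0.7
pattern[7:, 1] = 0.7
items = latent @ pattern.T + 0.5 * rng.normal(size=(300, 14))
R = np.corrcoef(items, rowvar=False)

chi2, df = bartlett_sphericity(R, n=300)
print(f"chi2 = {chi2:.1f}, df = {df}, KMO = {kmo(R):.3f}")
```

Note that KMO is a descriptive index between 0 and 1 rather than a significance test; the p-value belongs to Bartlett’s chi-square.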

Table 5.

Results with the two factors extracted.

Table 6.

Value of the Kaiser–Meyer–Olkin measure of sampling adequacy, used to verify the suitability of the data for factor analysis.

According to Table 7 below, only two factors with eigenvalues greater than 1 were extracted, i.e., factors with more explanatory power than a single variable. The remaining factors, whose eigenvalues are below 1, explain less variance than a single variable and were not retained. After rotation, the first factor accounts for 28.722% of the variance and the second for 28.622%. Together, the two factors explain 57.344% of the variance, which we consider a satisfactory percentage for our study.
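The Kaiser criterion and the variance percentages can be illustrated with a short Python sketch. It assumes the criterion is applied to the eigenvalues of the item correlation matrix (the extraction method is not named in this section); each eigenvalue divided by the number of items p then gives that component’s share of the total variance, because the trace of a correlation matrix equals p. The function name is hypothetical and the demonstration matrix is artificial. Assuming an orthogonal rotation, the rotation redistributes variance between the retained factors but leaves their combined share unchanged, which is why the rotated percentages can simply be added.

```python
import numpy as np


def kaiser_retained(R: np.ndarray) -> tuple[int, np.ndarray]:
    """Apply the Kaiser rule (eigenvalue > 1) to a correlation matrix R.

    Returns the number of retained components and the percentage of total
    variance carried by every component, largest first.
    """
    eigvals = np.linalg.eigvalsh(R)[::-1]  # eigvalsh returns ascending order
    pct = 100 * eigvals / R.shape[0]       # trace(R) equals the number of items
    return int(np.sum(eigvals > 1)), pct


# Artificial 14-item matrix: two blocks of 7 items, within-block r = 0.6.
R = np.kron(np.eye(2), np.full((7, 7), 0.6)) + 0.4 * np.eye(14)
n_retained, pct = kaiser_retained(R)
print(n_retained, pct[:2].round(3))  # two eigenvalues of 4.6, the rest 0.4

# Arithmetic check on the rotated shares reported in the text.
total = 28.722 + 28.622
print(round(total, 3))
```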

Table 7.

Saturations of variable-values in each extracted factor, obtained after factor rotation.

Variables are ordered in Table 7 according to their saturations (loadings) on the extracted factors. Factor 1, which we labeled the Knowledge Factor, loads the following questionnaire items, with the saturations shown: knowledge–grades matching, 0.836; grades–skills matching, 0.809; teachers alternate essays with multiple choice tests, 0.728; the results better reflect my knowledge, 0.709; greater accuracy, 0.699; and tests alternating closed- and open-ended questions, 0.652.

Factor 2, labeled the Leisure and Stress Factor, loads the following questionnaire items: longer leisure time, 0.757; the opportunity to learn better during the exam session, 0.742; less stress, 0.742; the opportunity to learn better during the semester, 0.719; better concentration during exams, 0.693; online exams easier to prepare, 0.657; better documentation capacity online, 0.541; and teachers feeling more relaxed during exams, 0.467. The presence in this factor of the opportunity to learn better during the semester (course attendance, seminars, as well as lab and project work; 0.719) and of better concentration during exams (0.693) can be associated with the reassuring effect of the familiar context, i.e., the perceived safety of the respondents’ homes.
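The section does not name the rotation method; varimax is the most common orthogonal choice, so the Python sketch below assumes it. It rotates an unrotated loading matrix so as to maximize the variance of the squared loadings within each factor, then assigns each item to the factor on which it loads most heavily, which is how items end up grouped under one factor or the other. The function names are hypothetical and the demonstration loadings are artificial, not those in Table 7. Because the rotation is orthogonal, each item’s communality (the row sum of squared loadings) is unchanged.

```python
import numpy as np


def varimax(loadings: np.ndarray, max_iter: int = 500, tol: float = 1e-10) -> np.ndarray:
    """Orthogonal varimax rotation of a p x k loading matrix."""
    p, k = loadings.shape
    rotation = np.eye(k)
    objective = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Gradient of the varimax criterion; the SVD projects it onto the
        # closest orthogonal matrix, which becomes the updated rotation.
        target = rotated ** 3 - rotated @ np.diag(np.sum(rotated ** 2, axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_objective = float(np.sum(s))
        # Stop once the criterion no longer improves noticeably.
        if objective > 0 and new_objective < objective * (1 + tol):
            break
        objective = new_objective
    return loadings @ rotation


def assign_items(rotated: np.ndarray) -> np.ndarray:
    """Index of the factor on which each item has its largest absolute loading."""
    return np.argmax(np.abs(rotated), axis=1)


# Artificial simple-structure loadings, deliberately rotated by 30 degrees.
simple = np.array([[0.8, 0.0], [0.7, 0.0], [0.6, 0.0],
                   [0.0, 0.8], [0.0, 0.7], [0.0, 0.6]])
theta = np.deg2rad(30)
turn = np.array([[np.cos(theta), -np.sin(theta)],
                 [np.sin(theta), np.cos(theta)]])
unrotated = simple @ turn
rotated = varimax(unrotated)
print(assign_items(rotated))
print(np.round(np.abs(rotated), 3))
```

Varimax recovers the simple structure only up to the order and sign of the factors, which is why the sketch compares absolute loadings.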

The two factors extracted by the factor analysis indicate that the students included in the study were influenced, on the one hand, by the accumulated knowledge, including the fairness of its evaluation, and, on the other hand, by the perceived longer leisure time and reduced stress associated with the online assessment methods.

The senior researchers computed Cronbach’s alpha for the 14 items included in the factor analysis to verify their internal consistency, obtaining a reliability coefficient of 0.91. This value is considered good, being higher than the minimum acceptable level of 0.7 for internal consistency [43] (pp. 242–244).
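Cronbach’s alpha for k items is α = k/(k − 1) · (1 − Σσᵢ²/σ_T²), where σᵢ² is the variance of item i and σ_T² is the variance of respondents’ total scores. The Python sketch below computes it from a respondents-by-items score matrix. The function name and the toy scores are hypothetical; sample variances (ddof = 1) are assumed, although the ratio Σσᵢ²/σ_T² is the same for either variance convention as long as it is applied consistently.

```python
import numpy as np


def cronbach_alpha(scores: np.ndarray) -> float:
    """Cronbach's alpha for an (n_respondents x k_items) matrix of item scores."""
    k = scores.shape[1]
    item_variances = scores.var(axis=0, ddof=1).sum()   # sum of per-item variances
    total_variance = scores.sum(axis=1).var(ddof=1)     # variance of total scores
    return float(k / (k - 1) * (1 - item_variances / total_variance))


# Toy example: three respondents, two items.
toy = np.array([[1, 2], [2, 1], [3, 3]])
print(round(cronbach_alpha(toy), 4))  # -> 0.6667
```

Against the 0.7 threshold cited above, this toy scale would fall just short, whereas the reported 0.91 clears it comfortably.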

3.6. Qualitative Analysis of the Factors Influencing Students’ Responses

From the perspective of the two extracted factors, the students involved in interpreting the results offered the following explanations: “Stress, because there are several subjects to learn. Relaxation, because we could close the camera and do other things during this time”; “on the stress side, it depends on each person, how they divide their time”; and “if I prepared my exam, it did not seem so difficult, if not, I know then I find it extremely difficult to pass, to solve the exercises or the homework”. The student analysts said that time management and adaptation to a different kind of effort were the key to overcoming the stressful moments of the evaluation period during the pandemic. The additional knowledge was explained as follows: “I really ended up doing a lot more new things that I didn’t think I’d be able to do. I discovered myself like this, some skills…”.

The relaxation-stress duality is explained by the following answers: “The stress of the exams was not so great because the examination setting was more relaxing. But since I was getting projects, projects, projects, from every teacher, I was very stressed. I felt like I’d never finish them in time”. Another respondent commented: “I think the comfort of home is definitely extra when it comes to examination and doing homework, i.e., it’s different, it’s much more relaxing. It’s the environment you come from. You’re the native of the place. You know the traps around here”. Personal explanations were also given about the lack of separation between home life, schoolwork, and domestic chores, which became intertwined and non-compartmentalized. In this sense a student states that “when I am at home, my mother tells me go shopping or, help her too… Well, yes, but I have homework, or I have an exam in half an hour. Forget about time!” Another student stated that “we squeeze our brains and rush away from what we have to do. When you see yourself at home and you say you have nothing to do, or you don’t have to do anything right now because you’re at home”; “relaxation at home is a pain”; “when you’re at home you don’t hit anything. You’re at home”; “a message on campus doesn’t hurt as much as when the teacher calls himself and looks at you that, oh well—You didn’t hand in the homework. It’s like… it’s different. And in front of the other colleagues, you feel…”.

In conclusion, beyond all the negative aspects mentioned, students also identified the positive side of this forced adaptation for the future: “Let’s look at it from another perspective; that teachers at this college have been good to us. The experience is not bad. That’s kind of… This future is about ONLINE. Whoever wants to be active in this field needs to keep up. And we’ve got the stress and the relaxation, all of it. I’ve kind of seen what work is like in this field. And being young, we can work and travel. Not necessarily sitting at home, like it was in this pandemic… We can go to the seaside and do your job from there. You know when you’re sitting on the beach and you know when you’re going to join a meeting. The glass is also half full…”.

3.7. Quantitative Analysis of the Students’ Appraisal, Regarding the Features Acceptable to Be Carried out into the Post-Pandemic Assessment Practices

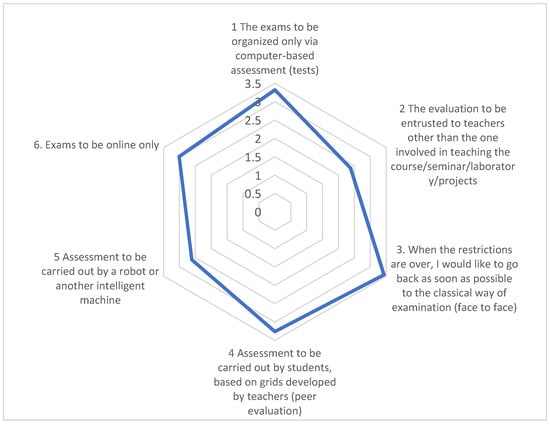

Finally, to determine students’ appraisal of future trends in evaluation/assessment, based on their experiences during the COVID-19 pandemic (RO4), students were asked a question with several possible variants of how evaluation might develop. In descending order (according to Table 8), the results are as follows: return to the classical method of examination, m = 3.53; evaluation in examinations to be carried out only by computer tests, m = 3.32; assessment to be carried out by students on the basis of scales developed by teachers, m = 3.26; evaluation in exams to be carried out only online, m = 3.01; assessment to be carried out by a robot or any other intelligent machine, m = 2.61; and evaluation in examinations to be carried out by a teacher other than that of the course/seminar/laboratory/project, m = 2.38.
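The ranking can be reproduced directly from the six means reported above. The short Python sketch below sorts them in descending order; the option labels are abbreviated paraphrases of the questionnaire items, while the means are those given in the text (Table 8).

```python
# Means reported in the text for the post-pandemic assessment options (RO4).
options = {
    "classical examination": 3.53,
    "computer tests only": 3.32,
    "peer assessment on teacher-made scales": 3.26,
    "online exams only": 3.01,
    "teacher other than the course teacher": 2.38,
    "robot or other intelligent machine": 2.61,
}

# Sort by mean, highest first.
ranked = sorted(options.items(), key=lambda item: item[1], reverse=True)
for label, mean in ranked:
    print(f"{mean:.2f}  {label}")
```

Sorted this way, assessment by a teacher other than the course teacher (2.38) ranks below assessment by a robot or other intelligent machine (2.61).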

Table 8.

Students’ readiness to accept changes in the assessment activities in the post-pandemic period.

3.8. Qualitative Analysis of the Students’ Appraisal, Regarding the Features Acceptable to Be Carried out into the Post-Pandemic Assessment Practices

Students want to return to normal face-to-face activity, but in a safe and fair environment. They also rate positively computer-based tests and assessment by their fellow students on the basis of scales developed by the teacher. Interestingly, assessment by another teacher (m = 2.38) and assessment by a robot or other intelligent machine (m = 2.61) both score low. This suggests that students feel comfortable with human interaction (colleagues rather than robots, their familiar class teacher rather than other teachers). It is difficult to understand whether such an attitude is a sign of immature reasoning, a refusal to face a tougher competitive environment, unpreparedness for novel assessment methods, or some other reason. The result resonates with the remarks of Struyven and Devesa [29], who commented on the need to introduce new forms of assessment thoroughly and to prepare students for them, since students may react negatively to unfamiliar or new experiences in an academic setting.

Asked to offer their opinion on possible developments in the evaluation activity, students favored a human-led evaluation, rather than a technical or robotic one (Figure 4).

Figure 4.

Students’ readiness to accept changes in the assessment activities.

Student responses showed that, once restrictions are over, they would like to return to the classic way of examination as soon as possible because: “We were used to it”, “we miss the coffee before the exam”, “because we socialize before the exam and after the exam we expect…”, or “we have a seat next to a friend in the exam”, and after the exam “we ask each other ‘What did you do there? What did you write?’ To check if we answered correctly”. The ritual form of relation established and rehearsed in face-to-face evaluation was no longer in place in online evaluations. Students did not even call each other after the exam to exchange opinions or share emotions. This result also resonates with the findings of similar studies: Buttler, George, and Bruggemann indicate that students wish to return to university [39].

The preference for exams to be carried out only via computer tests is explained by a variety of reasons: “Maybe because it is happening faster”, “I think it is time for Romania to evolve to move forward a little more. Let’s not stay the same as in communism with schools and education”, “this prepares us for a job in the field”, “update to the present day”, “it was a little more convenient this method of evaluation online. I think. I took the exam and received the results immediately. It was better this way”, and “we also had the opportunity to do projects and work on them over time”. Online evaluation is undoubtedly faster than the classical variant, and computer-based examination resonates with students’ digital skills [31].

The preference for assessment carried out by students on the basis of grids developed by the teachers was explained, among other things, as follows: “Probably they are those who want to become teachers and this can prepare them for further activities” or “and if there are more evaluators (students) the results are received more quickly. They’re more lenient than teachers”, “I’m… that’s what (s)he said about there, to give him additional 0.50 for the grade there…”, and “… (s)he’s a student like me”.

4. Discussion and Conclusions

The research journey requires a moment of closure and appraisal, and a scrutiny of possible continuations of the work. The present research unfolded aspects identified jointly by senior researchers and by students acting as researchers. At the time of this research, the COVID crisis that led to ERT had begun less than a year earlier, and the growing volume of literature on this topic is beginning to shed light on its dynamics. There is now enough content for researchers to begin reaching consensus on certain aspects of this shift. The focus of the present article is to unveil students’ understanding of educational processes during the pandemic and to identify pillars for incorporating sustainability features in post-pandemic assessment activities.

The current literature on sustainable evaluation [26,27] is a ‘synthesis of the past’ with a focus on the training needs of the future. If students are steered towards coping with future challenges and displaying readiness to overcome unpredicted obstacles and risks, they will build the resilience to treat crisis situations (such as the pandemic) as opportunities and co-create solutions in a creative and productive manner [39]. Thus, lessons learned from the research can be incorporated into post-pandemic education, which should not be a swift return to the pre-pandemic status quo, but an enriched and resilient practice, incorporating experiences and solutions built during the COVID-19 crisis.

The research aimed to extract students’ overall appraisal of the end-term assessments during the COVID-19 pandemic (RO1). The students’ initial appreciation of their experience with academic life during lockdown and isolation due to the COVID-19 pandemic, expressed through word association and represented in the word cloud (Figure 1), reflected general confusion. The X-ray probing of students’ perceptions through undirected opinions revealed bewilderment, revolt, frustration, and perhaps anarchy, mirroring a sense of change. Words frequently used by students included: Online, exams, difficulty, evaluation, much and many, etc., i.e., paraphrasing a Romanian expression, “to all of us it is difficult, but now it’s hard to all at once”. As one of the respondents in the focus group stated, “For education, the main command was adaptation. Whoever manages to go through the periods of change much faster, manages to stay in the lead. Hence a directive for the education system to be prepared to deal with the changes and possible crises that will come”. Assessment activities can generate dissatisfaction, frustration, and anxiety [24], and the health crisis and concerns of 2020 amplified anxiety for most students [6,11].

Mapping students’ perception of the exams along intrinsic and extrinsic parameters of the assessments (RO2) nuanced students’ responses and offered insights into the examinees’ appraisal of their experience with end-term exams. The intrinsic and extrinsic parameters pertaining to end-term assessment during the early stage of the pandemic, as perceived by the surveyed students, indicate that while the examinees were stressed because of the novel examination environment (virtual, instead of the traditional classroom), they identified a certain simplification, or reduced complexity, of the exams. The parameter that stands out is the perceived speed of evaluation, m = 3.75, an aspect positively received by the respondents, who built upon experiences prior to the end-term exams in the summer of 2020. In addition, another feature highly appraised by the respondents was that exams seemed easier to prepare, m = 3.46. The reasons behind students’ perceptions can be analyzed from two perspectives. On the one hand, the domestic/family environment that replaced the institutional one made many of the students (especially the shy individuals) feel more relaxed. On the other hand, faced with their own stress generated by the pandemic, teachers approached teaching differently, including student evaluation activities. The assessment may have been set at lower standards so as not to add further stress to the social stress [7,8]. Technology-mediated education, this “corpus callosum” of our days, did not allow for full communication between examiners and examinees and forces a revisiting of the classical approach to evaluation. A transitional period is needed in which technology, on the one hand, and the teaching body, on the other, establish an appropriate package of teaching-assessment tools under the new conditions.
As Katz and Gorin rightfully stated, an increased use and exploration of computer support for assessment of CL is needed and feasible [31], but more thorough preparation of assessment tools and methods needs to be put in place. The effect of technology use on assessments has been shown to be both positive and negative for examinees and examiners. Compared with traditional assessments, computer-mediated assessment allows for more authentic, complex, and interactive assessments that more closely mimic real-world performances in ways that many examinees have come to expect [31]. However, the remote assessment activities revealed the need to scrutinize more closely the inferences that teachers traditionally made about examinees.

The analysis of some aspects pertaining to evaluation, such as the increased possibility of documentation, the time devoted to study, or teacher-student communication, also revealed features less often analyzed in the appraisal of assessment activities. Documentation was carried out online, as students used to do in the pre-pandemic period, but the impossibility of borrowing physical resources (books, guides, standards, etc.) from the library demanded additional effort, which had to be compensated for by searching for additional online resources, especially because documentation had to be done from home. Some of the students found the activity tedious and tiresome because of the lack of appropriate devices and Internet connection. A simple mobile phone is not useful as an interface for documentation from virtual libraries. Many Romanian universities, like Politehnica Timisoara, where the research was carried out, had to purchase computers expeditiously or offered students with low financial means the possibility of temporarily borrowing digital devices. In our opinion, the education of the future needs to undergo substantial changes, not only in providing quality digital content and procedures for the teaching-learning process, but also in facilitating teachers’ and students’ access to devices, to complete the technological infrastructure that can sustain more computer-based education. For instance, university libraries could offer their subscribers, in addition to documents and research and education resources, the devices that allow for accessing information from home, in a virtual environment. Similarly, TV signal providers could allow for transforming television into a tool displaying content from the virtual libraries of the future. Low-quality Internet connection was another problem, raised particularly by students residing in rural areas.
However, technological infrastructure is a matter of national investment, which depends on the financial strength of each country. In face-to-face education, the university has the means and capacity to offer a continuous, good-quality Internet connection, but for remote, off-campus teaching, unfortunately, there was little that the university could do. This topic remains open for post-pandemic measures, to be discussed with the Ministry of Education and with the government working group in charge of the digitalization of Romania.

The existence of extra leisure time proved to be a relative matter. The stay-at-home policy during the lockdown in March, April, and May 2020 eliminated commuting to and from college, and added extra personal time to students and teachers alike. However, this extra time was redistributed towards completing homework, documentation, and solving household chores. There was also a need for a more judicious use of time and a clear-cut division of the time devoted to educational activities, to personal time, to relaxation, and/or to family time. In the pre-pandemic period, the landmarks were traced by going to college, to the library, to the gym, to some favorite place to meet friends, to the mall, etc. During the lockdown, barriers suddenly disappeared and everything had to be managed from within the four walls of one’s home. Students felt inclined to procrastinate, overwhelmed by the task of managing their own time.

Technology-mediated teacher-student communication was also a challenge for both sides. The teacher had to resort to various training strategies to which (s)he was not necessarily accustomed, and students had to extract from online messages content and instructions similar to those provided in direct (face-to-face) meetings. Teachers had to cope with the absence of students’ faces, which normally provide feedback and signal the presence of the partner and main recipient of educational actions, and students had to overcome emotional barriers to asking questions or eliciting clarifications. Our findings converge with those of Patricia Aguilera-Hermida, who concluded that flexibility, tolerance, and communication needed to be common factors during remote classes. Due to the pandemic, many professors had to use new tools without having the possibility to prepare students for them [59]. Based on the students’ responses, it was clear that students’ self-efficacy was not at its peak. Students needed a lot of encouragement, reassurance, and positive feedback to remain tuned to the educational process. Technological shortcomings, which no longer allowed interactions similar to direct meetings, compounded these difficulties.

The research also aimed at identifying the main factors that influenced students’ answers to the parametric questions (RO3) concerning assessment activities during the pandemic period. The factor analysis of the variables highlighted two factors that influenced the respondents’ answers: Factor 1, labeled the Knowledge Factor, and Factor 2, the Leisure and Stress Factor. The first factor accounts for 28.722% and the second for 28.622% of the variance. With a total of 57.344% of the variance, the two factors proved to be, in a nutshell, the “price of knowledge” paid by students during the pandemic. Factor 1, knowledge, is the main reason for any young person’s presence in the university, while the energy consumed for this translates into the stress/relaxation ratio expressed by Factor 2.

Knowledge acquisition has been a constant focus for all participants in the educational process. Digital technology, applications, and their usage were the touchstones for all. Classical learning has moved into the background, while technology-assisted teaching has become technology-mediated learning. As one of the students enrolled in the interpretation group pointed out, online life for work (and not only for leisure) is a step towards the future, even a means by which students were forced “to probe the future”.

The results indicate that the pandemic-related stress was easier to overcome from home, due to the surrounding familiar objects and persons. The stress was caused by the unknown situation, related to adaptation to the restrictions generated by the spread of COVID-19. On the other hand, adaptation to (new/digital) technology, with the stress it entails, was long overdue, because the future is moving towards an increasingly platformed, digital society, and digital does not only mean leisure, relaxation, or commerce, but also learning, instruction, and work.

Finally, the research team set as an important objective of the study the determination of students’ acceptance of certain features of evaluation/assessment, rooted in their experiences during the COVID-19 pandemic (RO4), to be maintained in post-pandemic education, thus anticipating trends in evaluation that benefit from students’ support. Regarding these trends, the pandemic period clearly steers the process towards an increased implementation of digital solutions. Student responses to the survey and their comments in the focus group indicate that in the short run they wish to return to pre-pandemic normality. In the medium and long term, a technology-assisted assessment is desired, as many voices in the educational arena claimed even before the health crisis [21,22,24], and it can build upon experiences accumulated during ERT [39]. Asked about their preferences regarding assessment activities in the post-pandemic situation, respondents indicated that the in presentia, classical model is the preferred one, m = 3.53 being the highest average among all response variants. Students also held the opinion that the complete withdrawal of the teacher from assessment is not desirable, at least not at this stage, a result reflected by m = 2.61, the second lowest mean in the proposed list of changes to be implemented in education when the crisis is over. Human presence and touch in the assessment process thus prove necessary. While being open to novelty and to retaining, in the post-pandemic educational model, features of the e-learning practiced during the lockdown of spring 2020, students imagine a future in which at least some familiar features of face-to-face academic encounters are present [60], for instance through blended learning, m-learning, or some other model developed to best fit a generation of students that experienced a crisis as profound as the one caused by the COVID-19 pandemic.

The final discussion with the students involved in the research showed that participating in the investigation and in the discussion helped them process and reason through their experience with remote assessment activities in the summer of 2020. Not only did they make sense of the educational path travelled during the peak of the pandemic, but the research also empowered them to understand the process, to express their readiness for or rejection of changes in future exams, and to voice a better-informed point of view in consultations concerning the organization of educational activities in 2021 and beyond. Despite their enthusiasm for technological innovation and for the benefits of computer-assisted assessment, students are inclined to preserve human evaluators, preferably their familiar teachers, in educational settings resembling pre-crisis academic life. Students missed their academic rituals and interactions with peers and teachers, and they expressed the need for a well-prepared approach to introducing novel forms of assessment.

The participatory model embraced in the present research, which builds upon engaging students as co-researchers, encourages epistemic agency, that is, the capacity to construct legitimate knowledge within the student-centered education paradigm. Students and teachers need each other and must work as active partners if the process is to be worthwhile or successful; as Fielding compellingly argued, the strength of dialogue lies in its mutuality [50]. The COVID-19 crisis tested the university’s resilience and its capacity to overcome sudden obstacles. Once the health threat of the pandemic is over, the experience accumulated during the crisis needs to be incorporated into the fabric of the education system. Prior to COVID-19, campuses had been closed due to natural disasters such as wildfires, hurricanes, and earthquakes [17], and some universities already had emergency plans actionable in the case of health threats. This was not the case for Romanian universities, which had not faced such challenges, at least not in the last century. The present research contributes to rationalizing the COVID-19-related emergency educational responses of one particular university and proposes that the lessons learned in 2020 be incorporated into a sustainable, resilient model of education, in which assessment activities are improved based on features identified as acceptable by students as examinees.

5. Limitations and Future Extension of the Study

When the research strategy for this study was developed, professional journals had not yet published findings on ERT during the COVID-19 crisis. Organizational responses to the crisis had been proposed by national bodies (ministries of education) and by international organizations interested in the topic (WHO, UNESCO, and the World Bank). Since then, the literature on ERT during the pandemic has grown enough to provide better insights for future studies. The unique contributions of the present study include the students’ appraisal of emergency remote assessment and their readiness to maintain certain features of remote assessment in the post-pandemic model. Future studies may explore how student input is voiced in the organizational context, whether and how the identified features become part of the new educational practice in the same university, and how satisfied students are with assessment activities in online and offline contexts.

Further, this study reflects the pandemic situation in one university only. The research instrument should be tested on other samples to verify its predictive validity and to deepen the analysis by carrying out a confirmatory factor analysis (CFA). Professional communities of practice can benefit from corroborating the present findings with similar studies in other technical universities, on the one hand, and with studies carried out in comprehensive universities, on the other. The questionnaire method should also be corroborated by individual or group interviews. The senior researchers involved in the present study partially compensated for these shortcomings by inviting students to interpret the results in a focus group, thus placing the examinees’ opinions at the center of the research, with a clear understanding that the findings reflect the respondents’ first reaction to a major, unpredicted, and profound challenge in their academic life.

Author Contributions

Conceptualization, M.C.-B. and G.-M.D.; methodology, M.C.-B. and G.-M.D.; formal analysis, G.-M.D.; investigation, M.C.-B. and G.-M.D.; writing—original draft preparation, M.C.-B. and G.-M.D.; writing—review and editing, M.C.-B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author G.-M.D.

Acknowledgments

The authors wish to express their gratitude to the students involved as co-researchers, who contributed to data collection and focus group discussions. Further, the authors are grateful to the anonymous reviewers, whose comments helped improve the clarity of the present article.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Questionnaire: Assessment Activities during the Pandemic Period (2020)

Q1. Please define in three words/three sentences the assessment activities undertaken online during the pandemic period.

Q2. Please rate to what extent you agree with the features displayed by assessment activities during the pandemic, considering the parameters listed below (choose only one response for each parameter):

| Parameters of the Evaluation during the Pandemic Period | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
| --- | --- | --- | --- | --- | --- |
| 2.1. Increased speed in carrying out evaluations | 1 | 2 | 3 | 4 | 5 |
| 2.2. Higher degree of fairness | 1 | 2 | 3 | 4 | 5 |
| 2.3. Very good consistency between acquired knowledge and grades | 1 | 2 | 3 | 4 | 5 |
| 2.4. Very good consistency between acquired skills and grades | 1 | 2 | 3 | 4 | 5 |
| 2.5. Reduced stress | 1 | 2 | 3 | 4 | 5 |
| 2.6. Better concentration capacity during the exam | 1 | 2 | 3 | 4 | 5 |
| 2.7. Teachers are more relaxed | 1 | 2 | 3 | 4 | 5 |
| 2.8. Teachers alternate essays and multiple-choice tests equitably | 1 | 2 | 3 | 4 | 5 |
| 2.9. In multiple-choice tests, closed-ended questions alternate with open-ended questions | 1 | 2 | 3 | 4 | 5 |
| 2.10. The exam session can be held online without any problems | 1 | 2 | 3 | 4 | 5 |
| 2.11. Exam outcomes (grades) reflect my knowledge more accurately | 1 | 2 | 3 | 4 | 5 |

Q3. Please rate to what extent you agree with the following.

| Extrinsic Parameters of the Evaluation during the Pandemic Period | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
| --- | --- | --- | --- | --- | --- |
| 3.1. Better documentation (online) | 1 | 2 | 3 | 4 | 5 |
| 3.2. Longer leisure time | 1 | 2 | 3 | 4 | 5 |
| 3.3. Better opportunity to learn during the exam session | 1 | 2 | 3 | 4 | 5 |
| 3.4. Better opportunity to study during the semester (course attendance, seminar, lab, project, etc.), because we had to stay indoors | 1 | 2 | 3 | 4 | 5 |
| 3.5. Better/more complete student-teacher communication | 1 | 2 | 3 | 4 | 5 |

Q4. Please rate to what extent you would agree with the assessment activities undergoing each of the following changes:

| Proposed Changes | Very Large Extent | Large Extent | Some Extent | Little Extent | Very Little Extent/Not at All |
| --- | --- | --- | --- | --- | --- |
| 4.1. The exams to be organized only via computer-based assessment (tests) | 1 | 2 | 3 | 4 | 5 |
| 4.2. The evaluation to be entrusted to teachers other than the one involved in teaching the course/seminar/laboratory/projects | 1 | 2 | 3 | 4 | 5 |
| 4.3. Assessment to be carried out by students, based on grids developed by teachers (peer evaluation) | 1 | 2 | 3 | 4 | 5 |
| 4.4. Assessment to be carried out by a robot or another intelligent machine | 1 | 2 | 3 | 4 | 5 |
| 4.5. Exams to be online only | 1 | 2 | 3 | 4 | 5 |
| 4.6. When the restrictions are over, I would like to go back as soon as possible to the classical way of examination (face-to-face/in presentia) | 1 | 2 | 3 | 4 | 5 |

Q5. Faculty:

- Automation and Computing (AC);

- Electronics, Telecommunications, and Information Technologies (ETC);

- Electrical and Power Engineering (ET);

- Mechanical Engineering (MEC);

- Industrial Chemistry and Environmental Engineering (Ch);

- Management in Production and Transportation (MPT);

- Communication Sciences (SC);

- Architecture and City Planning (ARH);

- Civil Engineering (C-TII);

- Engineering, Hunedoara Campus (Ing. HD).

Q6. Year of study:

- Year I;

- Year II;

- Year III;

- Year IV;

- Year V;

- Year VI.

Q7. Gender:

- Male;

- Female.

Appendix B

Guide for focus-group discussions (Zoom meeting).

- Step 1—Brief presentation of the research (aim, objectives, procedure);

- Step 2—Presentation of results;

- Step 3—Discussions.

- Q1 resulted in the image presented in the word cloud (Figure 1). How do you interpret the results? Do you agree that these concepts dominated the period (March–June 2020)?

- For Q2 your colleagues were asked to rate the assessment activities during the pandemic, according to a list of parameters. See results (Appendix A). What is your opinion/interpretation of the order of parameters in the tables?

- Q3 asked for the ranking of extrinsic parameters (list of items in Appendix A). Does the ranking resonate with you? Can you elaborate?

- Q4 asked for respondents’ acceptance of certain experimented features of online/computer-assisted assessment to be preserved in the post-pandemic period (list of items in Appendix A). Do you believe that these features can innovate assessment practices? Do you anticipate that they will increase students’ satisfaction with the assessment process and outcomes?

- Q2 and Q3 were subjected to factor analysis to unveil the embedded factors influencing respondents in shaping their appraisal of the end-of-term assessment (Table 5, Table 6, Table 7 and Table 8). The identified factors boil down to knowledge, and leisure and stress. What is your interpretation of the knowledge factor? What about the dichotomy of leisure vs. stress?

- What did you learn from participating in the research? Did you find the survey to be a useful tool for your graduation thesis preparation? What about the focus group?

References

- World Health Organization. WHO Timeline—COVID-19. 2020. Available online: https://www.who.int/news-room/detail/27-04-2020-who-timeline---covid-19 (accessed on 27 January 2021).

- UNESCO. Education: From Disruption to Recovery. March 2020. Available online: https://en.unesco.org/news/137-billion-students-now-home-covid-19-school-closures-expand-ministers-scale-multimedia (accessed on 27 January 2021).

- World Health Organization. Considerations for School-Related Public Health Measures in the Context of COVID-19: Annex to Considerations in Adjusting Public Health and Social Measures in the Context of COVID-19. 10 May 2020. Available online: https://apps.who.int/iris/handle/10665/332052 (accessed on 2 February 2021).

- United Nations. Transforming Our World: The 2030 Agenda for Sustainable Development. 2015. Available online: https://sdgs.un.org/2030agenda (accessed on 27 January 2021).

- UNESCO. Policy Paper 42. Act Now: Reduce the Impact of COVID-19 on the Cost of Achieving SDG 4, ED/GEM/MRT/2020/PP/42REV. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000374163 (accessed on 3 December 2020).

- Wu, J.; Guo, S.; Huang, H.; Liu, W.; Xiang, Y. Information and Communications Technologies for Sustainable Development Goals: State-of-the-Art, Needs and Perspectives. IEEE Commun. Surv. Tutor. 2018, 20, 2389–2406.

- UNESCO. Exams and Assessments in COVID-19 Crisis: Fairness at the Centre. 10 April 2020. Available online: https://en.unesco.org/news/exams-and-assessments-covid-19-crisis-fairness-centre (accessed on 3 December 2020).

- World Bank. The COVID-19 Crisis Response: Supporting Tertiary Education for Continuity, Adaptation, and Innovation; World Bank: Washington, DC, USA, 2020; Available online: https://openknowledge.worldbank.org/handle/10986/34571 (accessed on 5 January 2021).

- Fry, H.; Ketteridge, S.; Marshall, S. A Handbook for Teaching and Learning in Higher Education: Enhancing Academic Practice, 3rd ed.; Routledge: New York, NY, USA; London, UK, 2009; ISBN 0-203-89141-4.

- Gatti, T.; Helm, F.; Huskobla, G.; Maciejowska, D.; McGeever, B.; Pincemin, J.-M. Practices at Coimbra Group Universities in Response to the COVID-19: A Collective Reflection on the Present and Future of Higher Education in Europe, The Coimbra Group. Available online: https://www.coimbra-group.eu/wp-content/uploads/Final-Report-Practices-at-CG-Universities-in-response-to-the-COVID19.pdf (accessed on 2 October 2020).

- UNESCO; IESALC. COVID-19 and Higher Education: Today and Tomorrow. Impact Analysis, Policy Responses and Recommendations, UNESCO IESALC. April 2020. Available online: http://www.iesalc.unesco.org/en/wp-content/uploads/2020/04/COVID-19-EN090420-2.pdf (accessed on 27 July 2020).

- Farnell, T.; Skledar Matijević, A.; Šćukanec Schmidt, N. The Impact of COVID-19 on Higher Education: A Review of Emerging Evidence; NESET Report; Publications Office of the European Union: Luxembourg, 2021.

- Gamage, K.A.A.; de Silva, E.K.; Gunawardhana, N. Online Delivery and Assessment during COVID-19: Safeguarding Academic Integrity. Educ. Sci. 2020, 10, 301.