Confirmatory Factor Analysis of a Questionnaire for Evaluating Online Training in the Workplace

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Instrument

2.3. Process

2.4. Data Analysis

3. Results

3.1. Item Description

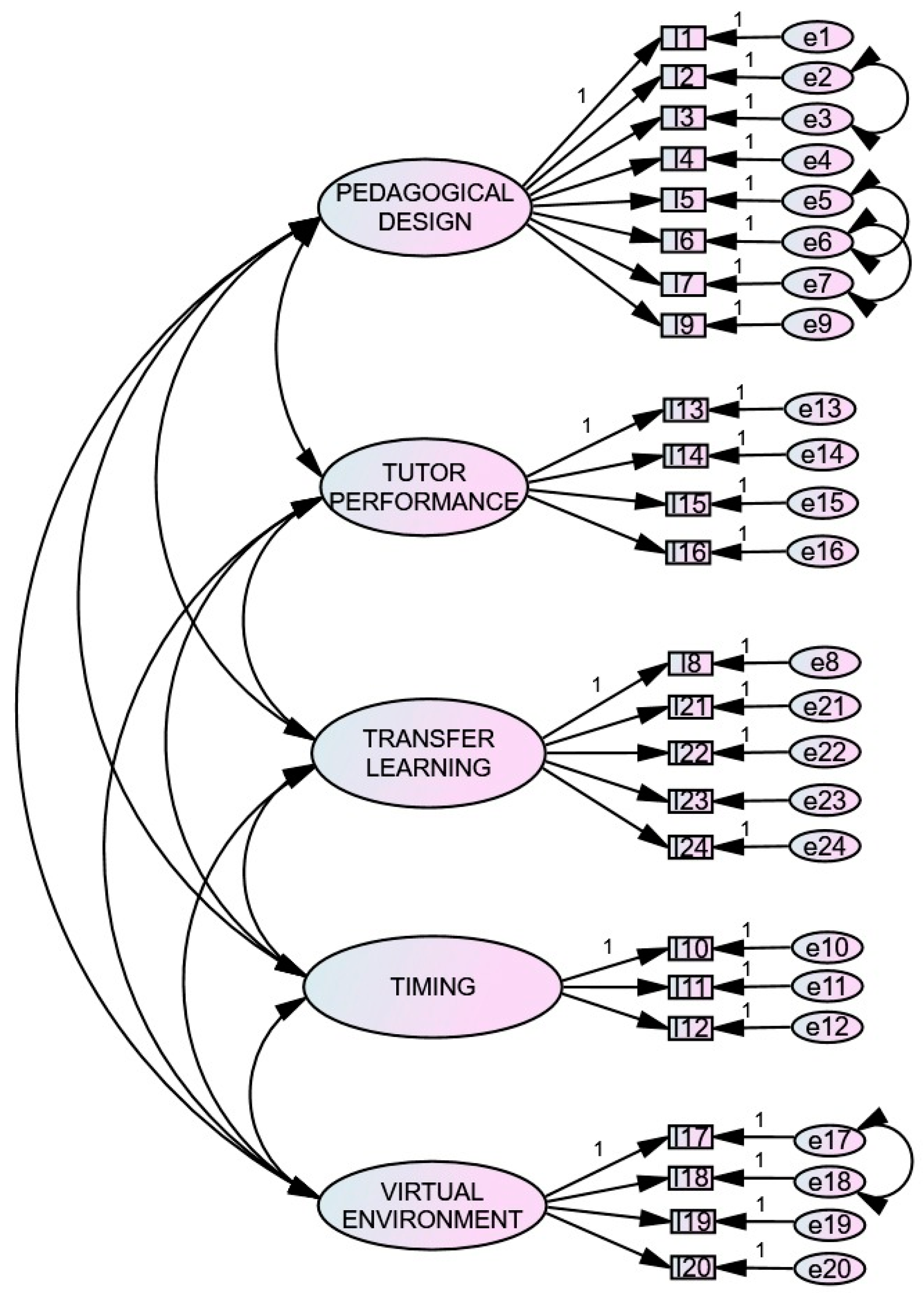

3.2. Factor Structure

3.3. Reliability

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References


| Factors | Items |
|---|---|
| Pedagogical design | 1, 2, 3, 4, 5, 6, 7, 9 |
| Tutor performance | 13, 14, 15, 16 |
| Virtual environment design | 17, 18, 19, 20 |
| Timing | 10, 11, 12 |
| Transfer of learning | 8, 21, 22, 23, 24 |
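The factor-to-item assignment above encodes the hypothesized five-factor structure: 24 items, each loading on exactly one factor, with item 8 grouped under transfer of learning rather than pedagogical design. The sketch below stores that assignment as a Python dictionary, checks that every item is used exactly once, and emits lavaan-style syntax (`Factor =~ item + item`). The item labels `i1` to `i24` and the lavaan-style output are illustrative assumptions; the source does not state which SEM software was used.

```python
# Hypothetical sketch: the factor-to-item assignment from the table above,
# stored as a dict and turned into lavaan-style CFA syntax ("factor =~ items").
FACTORS = {
    "Pedagogical design": [1, 2, 3, 4, 5, 6, 7, 9],
    "Tutor performance": [13, 14, 15, 16],
    "Virtual environment design": [17, 18, 19, 20],
    "Timing": [10, 11, 12],
    "Transfer of learning": [8, 21, 22, 23, 24],
}


def check_assignment(factors, n_items=24):
    """Return True if every item 1..n_items loads on exactly one factor."""
    assigned = [item for items in factors.values() for item in items]
    return sorted(assigned) == list(range(1, n_items + 1))


def to_lavaan_syntax(factors):
    """Build one 'Latent =~ i1 + i2 + ...' line per factor.

    Latent names have spaces removed so each is a single identifier;
    the item labels (i1, i2, ...) are hypothetical.
    """
    lines = []
    for name, items in factors.items():
        latent = name.title().replace(" ", "")
        indicators = " + ".join(f"i{n}" for n in items)
        lines.append(f"{latent} =~ {indicators}")
    return "\n".join(lines)


if __name__ == "__main__":
    assert check_assignment(FACTORS)
    print(to_lavaan_syntax(FACTORS))
```

Keeping the assignment in one data structure means the specification string, the item count check, and any later per-factor computation all read from the same source.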

| Item | Mean | Standard Deviation | Skewness | Kurtosis |
|---|---|---|---|---|
| 1. The objectives developed met my needs. | 3.27 | 0.61 | −0.789 | 2.212 |
| 2. The objectives of the course were fully met. | 3.25 | 0.60 | −0.637 | 1.839 |
| 3. The content of the course corresponded to the proposed objectives. | 3.26 | 0.57 | −0.470 | 1.789 |
| 4. There was clear coordination between the content sections of the course. | 3.27 | 0.57 | −0.269 | 0.656 |
| 5. The content was presented clearly. | 3.23 | 0.64 | −0.578 | 0.916 |
| 6. The method used in the course was adequate for acquiring the desired competences. | 3.20 | 0.63 | −0.541 | 1.046 |
| 7. The method used involved the application of the acquired knowledge. | 3.15 | 0.61 | −0.491 | 1.441 |
| 8. The method that was developed involved the resolution of real problems in my professional practice. | 3.17 | 0.61 | −0.434 | 0.696 |
| 9. The course materials were useful and provided adequate information. | 3.15 | 0.60 | −0.558 | 1.666 |
| 10. The duration of the course was adequate. | 3.12 | 0.68 | −0.394 | 0.769 |
| 11. The time scheduled in the course for working on course content was adequate. | 3.16 | 0.57 | −0.396 | 0.874 |
| 12. The time scheduled in the course to execute the activities/tasks was adequate. | 3.14 | 0.57 | −0.356 | 0.800 |
| 13. The course tutor used the results of the assessments to guide the learning of the course participants. | 3.13 | 0.57 | −0.591 | 0.209 |
| 14. The tutor guided me according to my specific needs. | 3.35 | 0.67 | −0.718 | 0.779 |
| 15. The tutor resolved existing doubts, using all available resources. | 3.34 | 0.70 | −0.955 | 1.350 |
| 16. Communication with the tutor was fluid. | 3.06 | 0.59 | −0.971 | 1.055 |
| 17. The structure of the platform was clear, logical, and well organized. | 2.93 | 0.65 | −0.618 | 0.463 |
| 18. I could easily access the different sites of the course. | 2.85 | 0.61 | −0.674 | 0.309 |
| 19. The communication channels available to course participants were adequate. | 3.01 | 0.54 | −0.605 | 0.815 |
| 20. The resources offered by the platform were useful and sufficient to manage my self-learning. | 3.11 | 0.58 | −0.401 | 0.312 |
| 21. The lessons learned in the course seemed easy to apply to my daily practice. | 3.29 | 0.64 | −0.344 | 0.566 |
| 22. The course presented us with potential applications of the learning to our professional field. | 3.35 | 0.64 | −0.316 | 1.651 |
| 23. I am applying or intend to apply partially/totally the content acquired in the course. | 3.32 | 0.61 | −0.208 | 0.711 |
| 24. The course contributed to my professional development. | 3.29 | 0.61 | −0.328 | 0.029 |
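Each row of the table above summarizes one item's response distribution. As a minimal sketch of how such a row can be computed, the function below returns the mean, sample standard deviation, skewness, and excess kurtosis of one item. It assumes the bias-adjusted estimators that SPSS reports (often written G1 and G2); the source does not say which estimator produced the table, and the responses in the usage line are hypothetical values on an assumed 1 to 4 scale.

```python
import math


def describe(scores):
    """Mean, sample SD, adjusted skewness (G1) and adjusted excess kurtosis (G2).

    Assumes the bias-adjusted Fisher-Pearson estimators used by SPSS.
    Requires at least four observations with non-zero variance.
    """
    n = len(scores)
    if n < 4:
        raise ValueError("need at least 4 observations")
    mean = sum(scores) / n
    dev = [x - mean for x in scores]
    m2 = sum(d ** 2 for d in dev) / n  # biased second central moment
    if m2 == 0:
        raise ValueError("all responses are identical; shape is undefined")
    m3 = sum(d ** 3 for d in dev) / n
    m4 = sum(d ** 4 for d in dev) / n
    sd = math.sqrt(m2 * n / (n - 1))  # sample (n - 1) standard deviation
    g1 = m3 / m2 ** 1.5               # unadjusted skewness
    g2 = m4 / m2 ** 2 - 3.0           # unadjusted excess kurtosis
    skew = g1 * math.sqrt(n * (n - 1)) / (n - 2)
    kurt = ((n + 1) * g2 + 6) * (n - 1) / ((n - 2) * (n - 3))
    return mean, sd, skew, kurt


if __name__ == "__main__":
    # Hypothetical responses to a single item.
    print(describe([3, 3, 4, 2, 3, 4, 3, 1]))
```

Negative skewness, as in every row of the table, means responses pile up toward the top of the scale with a longer tail toward low ratings.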

| Model | χ²/df | CFI | GFI | RMR | RMSEA (90% CI) |
|---|---|---|---|---|---|
| Single factor | 10.826 | 0.693 | 0.621 | 0.035 | 0.145 (0.140–0.150) |
| Five factors | 3.130 | 0.936 | 0.873 | 0.019 | 0.067 (0.062–0.073) |
| Five factors with EC | 2.272 | 0.962 | 0.911 | 0.018 | 0.052 (0.046–0.058) |
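RMSEA depends on the chi-square statistic, its degrees of freedom, and the sample size N, so the χ²/df column alone does not determine it. The sketch below writes the usual point estimate in both forms. It assumes the N − 1 denominator (some programs use N), it does not compute the 90% confidence interval (that requires inverting the noncentral chi-square distribution), and the sample size in the usage line is hypothetical.

```python
import math


def rmsea(chi2, df, n):
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n greater than 1")
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def rmsea_from_ratio(ratio, n):
    """The same estimate expressed through the reported chi2/df ratio."""
    if n <= 1:
        raise ValueError("n must be greater than 1")
    return math.sqrt(max(ratio - 1.0, 0.0) / (n - 1))


if __name__ == "__main__":
    # Hypothetical N; the table's chi2/df for the final model is 2.272.
    print(rmsea_from_ratio(2.272, 500))
```

The ratio form makes the ordering in the table easy to read: a smaller χ²/df gives a smaller RMSEA for the same sample, and any ratio at or below 1 yields an RMSEA of 0.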

| Items | Loading | R² |
|---|---|---|
| Pedagogical design | | |
| 1. The objectives developed met my needs. | 0.754 | 0.569 |
| 2. The objectives of the course were fully met. | 0.823 | 0.677 |
| 3. The content of the course corresponded to the proposed objectives. | 0.825 | 0.681 |
| 4. There was clear coordination between the content sections of the course. | 0.843 | 0.711 |
| 5. The content was presented clearly. | 0.753 | 0.567 |
| 6. The method used in the course was adequate for acquiring the desired competences. | 0.770 | 0.593 |
| 7. The method used involved the application of the acquired knowledge. | 0.780 | 0.608 |
| 9. The course materials were useful and provided adequate information. | 0.728 | 0.530 |
| Tutor performance | | |
| 13. The course tutor used the results of the assessments to guide the learning of the course participants. | 0.762 | 0.581 |
| 14. The tutor guided me according to my specific needs. | 0.888 | 0.789 |
| 15. The tutor resolved existing doubts, using all available resources. | 0.919 | 0.845 |
| 16. Communication with the tutor was fluid. | 0.849 | 0.721 |
| Virtual environment design | | |
| 17. The structure of the platform was clear, logical, and well organized. | 0.767 | 0.588 |
| 18. I could easily access the different sites of the course. | 0.752 | 0.566 |
| 19. The communication channels available to course participants were adequate. | 0.858 | 0.736 |
| 20. The resources offered by the platform were useful and sufficient to manage my self-learning. | 0.879 | 0.773 |
| Timing | | |
| 10. The duration of the course was adequate. | 0.739 | 0.546 |
| 11. The time scheduled in the course for working on course content was adequate. | 0.958 | 0.918 |
| 12. The time scheduled in the course to execute the activities/tasks was adequate. | 0.894 | 0.799 |
| Transfer of learning | | |
| 8. The method that was developed involved the resolution of real problems in my professional practice. | 0.715 | 0.511 |
| 21. The lessons learned in the course seemed easy to apply to my daily practice. | 0.592 | 0.350 |
| 22. The course presented us with potential applications of the learning to our professional field. | 0.700 | 0.490 |
| 23. I am applying or intend to apply partially/totally the content acquired in the course. | 0.630 | 0.397 |
| 24. The course contributed to my professional development. | 0.618 | 0.382 |
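In the table above, each R² equals the square of the item's loading (for example, 0.754² ≈ 0.569 for item 1). That is what a standardized solution with one factor per item implies: R² is the proportion of the item's variance explained by its factor. The sketch below transcribes the table and checks the relationship for all 24 items; reading the loadings as standardized is an inference from this relationship rather than something the source states.

```python
# Loadings and R2 values transcribed from the table above: item -> (loading, R2).
LOADINGS = {
    1: (0.754, 0.569), 2: (0.823, 0.677), 3: (0.825, 0.681),
    4: (0.843, 0.711), 5: (0.753, 0.567), 6: (0.770, 0.593),
    7: (0.780, 0.608), 9: (0.728, 0.530),
    13: (0.762, 0.581), 14: (0.888, 0.789), 15: (0.919, 0.845),
    16: (0.849, 0.721),
    17: (0.767, 0.588), 18: (0.752, 0.566), 19: (0.858, 0.736),
    20: (0.879, 0.773),
    10: (0.739, 0.546), 11: (0.958, 0.918), 12: (0.894, 0.799),
    8: (0.715, 0.511), 21: (0.592, 0.350), 22: (0.700, 0.490),
    23: (0.630, 0.397), 24: (0.618, 0.382),
}


def r_squared(loading):
    """Variance explained by the factor: the squared standardized loading."""
    return loading ** 2


def mismatches(table, places=3):
    """Items whose reported R2 differs from the rounded squared loading."""
    return [
        item
        for item, (lam, r2) in table.items()
        if abs(round(r_squared(lam), places) - r2) > 1e-9
    ]


if __name__ == "__main__":
    print(mismatches(LOADINGS))  # an empty list means every row is consistent
```

The same arithmetic shows why the transfer-of-learning items stand out: loadings near 0.6 leave roughly 60% of each item's variance unexplained by the factor.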

| | Pedagogical design | Tutor performance | Virtual environment design | Timing |
|---|---|---|---|---|
| Pedagogical design | | | | |
| Tutor performance | 0.684 | | | |
| Virtual environment design | 0.728 | 0.733 | | |
| Timing | 0.616 | 0.483 | 0.512 | |
| Transfer of learning | 0.727 | 0.562 | 0.606 | 0.481 |
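The factor correlation table gives only the lower triangle. For an admissible CFA solution the full latent correlation matrix must be positive definite, so one quick sanity check is to rebuild the symmetric matrix and attempt a Cholesky factorization. The sketch below does this in plain Python and also reports the most strongly correlated pair of factors; the check is an illustration added here, not a step reported in the source.

```python
import math

NAMES = ["Pedagogical design", "Tutor performance",
         "Virtual environment design", "Timing", "Transfer of learning"]

# Lower triangle of the factor correlation table above, row by row.
LOWER = [
    [],
    [0.684],
    [0.728, 0.733],
    [0.616, 0.483, 0.512],
    [0.727, 0.562, 0.606, 0.481],
]


def full_matrix(lower):
    """Expand a lower triangle into a symmetric matrix with a unit diagonal."""
    k = len(lower)
    m = [[1.0] * k for _ in range(k)]
    for i, row in enumerate(lower):
        for j, r in enumerate(row):
            m[i][j] = m[j][i] = r
    return m


def is_positive_definite(m):
    """Cholesky factorization of a symmetric matrix succeeds iff it is PD."""
    k = len(m)
    chol = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(i + 1):
            s = m[i][j] - sum(chol[i][p] * chol[j][p] for p in range(j))
            if i == j:
                if s <= 0.0:
                    return False  # non-positive pivot: not positive definite
                chol[i][i] = math.sqrt(s)
            else:
                chol[i][j] = s / chol[j][j]
    return True


def strongest_pair(lower, names):
    """Return (r, factor_a, factor_b) for the largest off-diagonal correlation."""
    return max((r, names[i], names[j])
               for i, row in enumerate(lower) for j, r in enumerate(row))


if __name__ == "__main__":
    print(is_positive_definite(full_matrix(LOWER)))
    print(strongest_pair(LOWER, NAMES))
```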

| Subscales | Number of Items | Cronbach’s Alpha |
|---|---|---|
| Pedagogical design | 8 | 0.93 |
| Tutor performance | 4 | 0.91 |
| Virtual environment design | 4 | 0.90 |
| Timing | 3 | 0.89 |
| Transfer of learning | 5 | 0.78 |
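The subscale reliabilities above are Cronbach's alpha coefficients. As a minimal sketch of the standard formula, α = k/(k − 1) · (1 − Σσᵢ²/σ²_total), the function below takes one list of scores per item. It assumes complete data (no missing responses) and sample variances throughout, and the scores in the usage line are hypothetical because item-level data are not included here.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item-score columns (one list per item).

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals),
    using sample (n - 1) variances and assuming no missing responses.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items need the same number of respondents")
    totals = [sum(col[r] for col in items) for r in range(n)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / total_var)


if __name__ == "__main__":
    # Hypothetical scores: three items answered by four respondents.
    print(round(cronbach_alpha([[3, 4, 3, 2], [3, 4, 4, 2], [2, 4, 3, 3]]), 2))
```

Because the formula rises with k when inter-item covariances are held constant, the 8-item pedagogical design subscale and the 3-item timing subscale are not directly comparable on alpha alone.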

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rodríguez-Santero, J.; Torres-Gordillo, J.J.; Gil-Flores, J. Confirmatory Factor Analysis of a Questionnaire for Evaluating Online Training in the Workplace. Sustainability 2020, 12, 4629. https://doi.org/10.3390/su12114629
