Tunnel Surface Settlement Forecasting with Ensemble Learning

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. Selection of Base Prediction Models

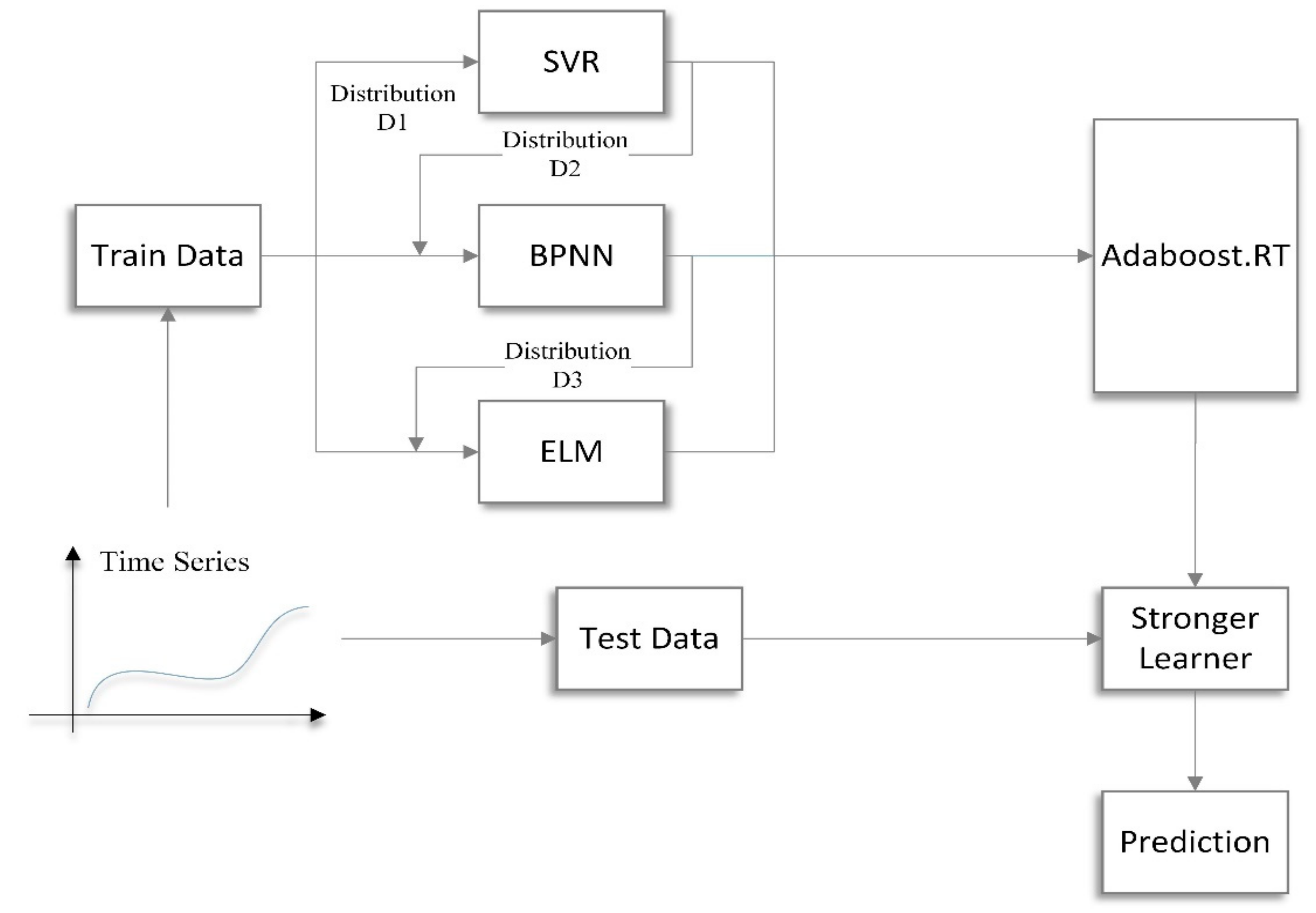

3.2. The Proposed Method

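Algorithm 1. A customized AdaBoost.RT algorithm for tunnel settlement forecasting

Input: a training dataset M of m examples $(x_1, y_1), \ldots, (x_m, y_m)$; a weak learning algorithm (base learner) $l$; an integer $T$ specifying the number of iterations (machines); and a threshold $\phi$ for distinguishing correct from incorrect predictions.

Initialization: machine number (iteration) $t = 1$; sample distribution $D_t(i) = 1/m$ for all $i$; error rate $\varepsilon_t = 0$.

Iteration: while $t \le T$:

- Step 1: Call the base learner $l$, providing it with the distribution $D_t$.
- Step 2: Build a regression model $f_t(x) \rightarrow y$.
- Step 3: Calculate the absolute relative error of each training example as $\mathrm{ARE}_t(i) = \left| \frac{f_t(x_i) - y_i}{y_i} \right|$.
- Step 4: Calculate the error rate of $f_t$ as $\varepsilon_t = \sum_{i:\, \mathrm{ARE}_t(i) > \phi} D_t(i)$.
- Step 5: Set $\beta_t = \varepsilon_t^{h}$, where $h = 1$, 2, or 3 (linear, square, or cubic).
- Step 6: Update the distribution as $D_{t+1}(i) = \frac{D_t(i)}{Z_t} \times \beta_t$ if $\mathrm{ARE}_t(i) \le \phi$, and $D_{t+1}(i) = \frac{D_t(i)}{Z_t}$ otherwise, where $Z_t$ is a normalization factor chosen so that $D_{t+1}$ sums to 1.
- Step 7: Adjust $\phi$ according to the algorithm proposed in [31].
- Step 8: Set $t = t + 1$.

Output: the ensemble learner $f_{\mathrm{fin}}(x) = \frac{\sum_{t} \log(1/\beta_t)\, f_t(x)}{\sum_{t} \log(1/\beta_t)}$.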
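The steps of the AdaBoost.RT loop can be sketched in Python as below. This is a minimal, hypothetical illustration of standard AdaBoost.RT, not the authors' code, and it rests on several assumptions: the threshold $\phi$ is held fixed (the adaptive adjustment of Step 7 from [31] is not reproduced); $D_t$ is passed to the base learner as sample weights rather than used for resampling; and the base learner `fit_weighted_line` is a toy weighted least-squares line standing in for SVR, BPNN, or ELM. The clamp on $\varepsilon_t$ is an added guard, not part of the algorithm, that keeps $0 < \beta_t < 1$ so that $\log(1/\beta_t)$ stays finite.

```python
import math
from typing import Callable, List, Sequence, Tuple

Model = Callable[[float], float]
Learner = Callable[[Sequence[float], Sequence[float], Sequence[float]], Model]


def fit_weighted_line(xs: Sequence[float], ys: Sequence[float],
                      w: Sequence[float]) -> Model:
    """Hypothetical toy base learner: weighted least-squares line y = a + b*x."""
    sw = sum(w)
    mx = sum(wi * x for wi, x in zip(w, xs)) / sw
    my = sum(wi * y for wi, y in zip(w, ys)) / sw
    sxx = sum(wi * (x - mx) ** 2 for wi, x in zip(w, xs))
    sxy = sum(wi * (x - mx) * (y - my) for wi, x, y in zip(w, xs, ys))
    b = sxy / sxx if sxx > 0 else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def adaboost_rt(xs: Sequence[float], ys: Sequence[float], fit: Learner,
                T: int = 10, phi: float = 0.05,
                h: int = 2) -> Tuple[Model, List[float]]:
    """AdaBoost.RT with a FIXED threshold phi (Step 7 is not implemented)."""
    if h not in (1, 2, 3):
        raise ValueError("h must be 1 (linear), 2 (square) or 3 (cubic)")
    m = len(xs)
    D = [1.0 / m] * m                      # Initialization: D_1(i) = 1/m
    models: List[Model] = []
    betas: List[float] = []
    for _ in range(T):                     # t = 1, ..., T
        f = fit(xs, ys, D)                 # Steps 1-2: train f_t under D_t
        # Step 3: absolute relative error (assumes every y_i != 0)
        are = [abs((f(x) - y) / y) for x, y in zip(xs, ys)]
        # Step 4: error rate = total weight of examples with ARE > phi
        eps = sum(d for d, e in zip(D, are) if e > phi)
        # Added guard (not in the algorithm): keeps 0 < beta_t < 1
        eps = min(max(eps, 1e-10), 1.0 - 1e-10)
        beta = eps ** h                    # Step 5
        # Step 6: shrink weights of "correct" examples, renormalise by Z_t
        D = [d * (beta if e <= phi else 1.0) for d, e in zip(D, are)]
        z = sum(D)
        D = [d / z for d in D]
        models.append(f)
        betas.append(beta)
    alphas = [math.log(1.0 / b) for b in betas]
    total = sum(alphas)

    def predict(x: float) -> float:
        # Output: average of f_t(x) weighted by log(1 / beta_t)
        return sum(a * f(x) for a, f in zip(alphas, models)) / total

    return predict, betas


xs = [float(x) for x in range(1, 11)]
ys = [2.0 + 3.0 * x for x in xs]          # toy noise-free data
predict, betas = adaboost_rt(xs, ys, fit_weighted_line, T=5)
print(round(predict(20.0), 6))            # 62.0
```

Because every machine receives the same weighting rule, swapping in a real regressor only requires a function with the same `(xs, ys, weights) -> model` shape; the boosting loop itself does not change.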

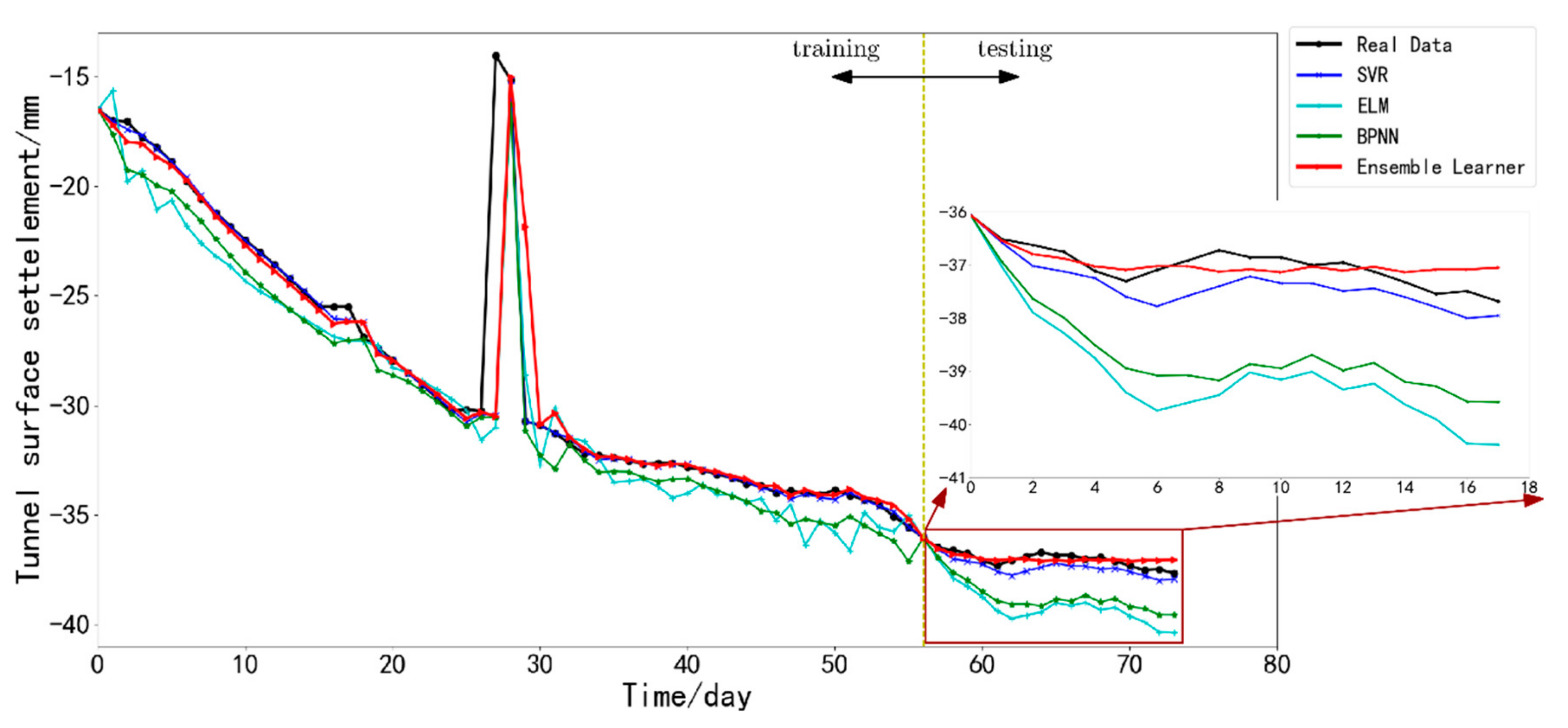

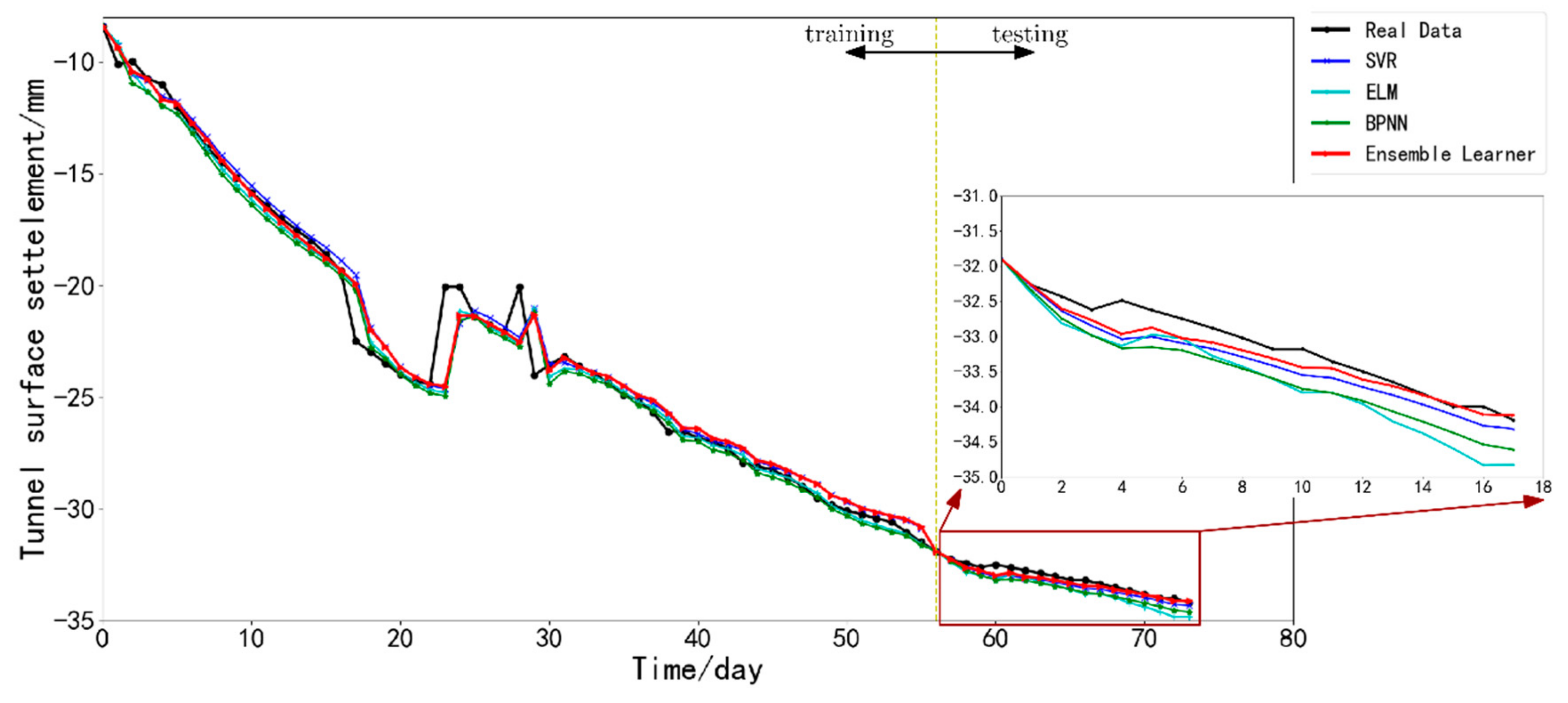

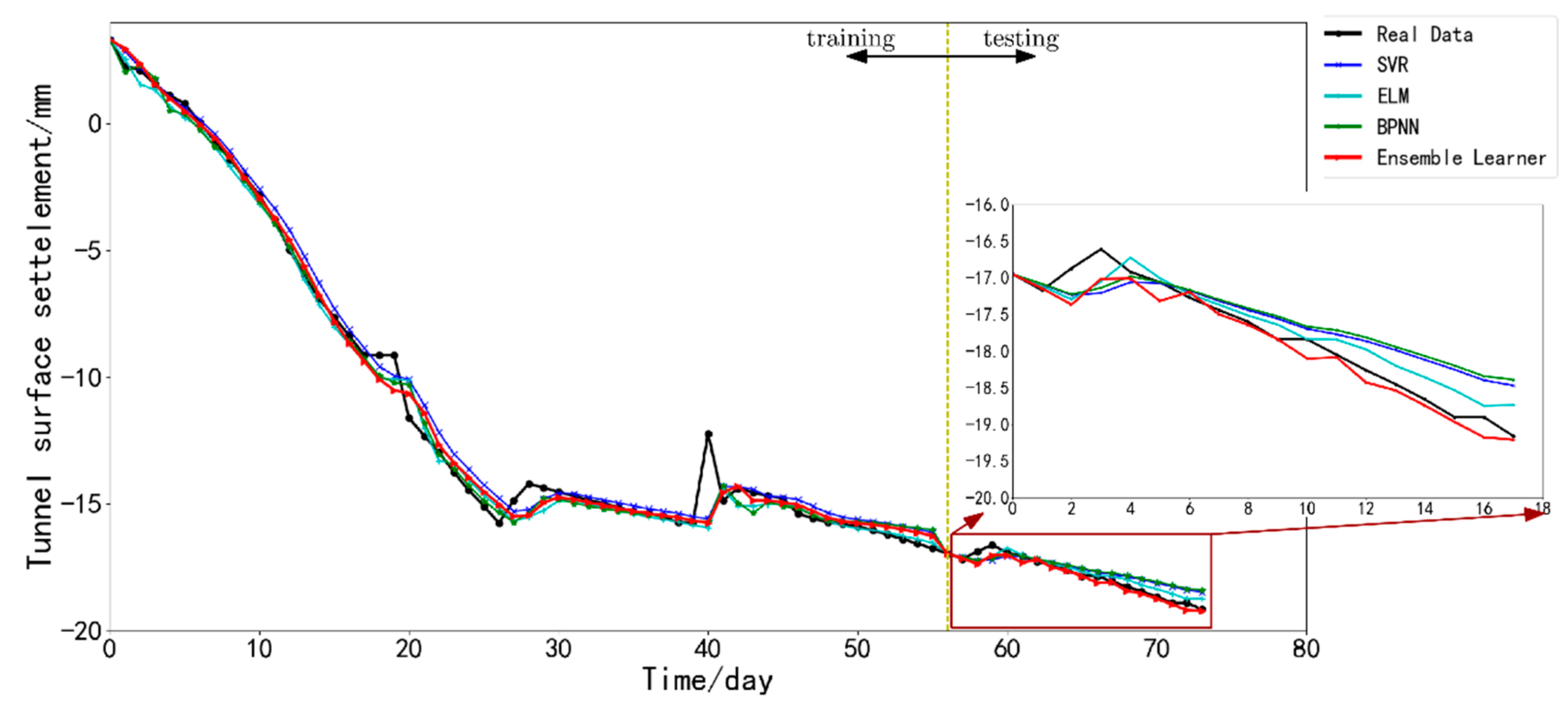

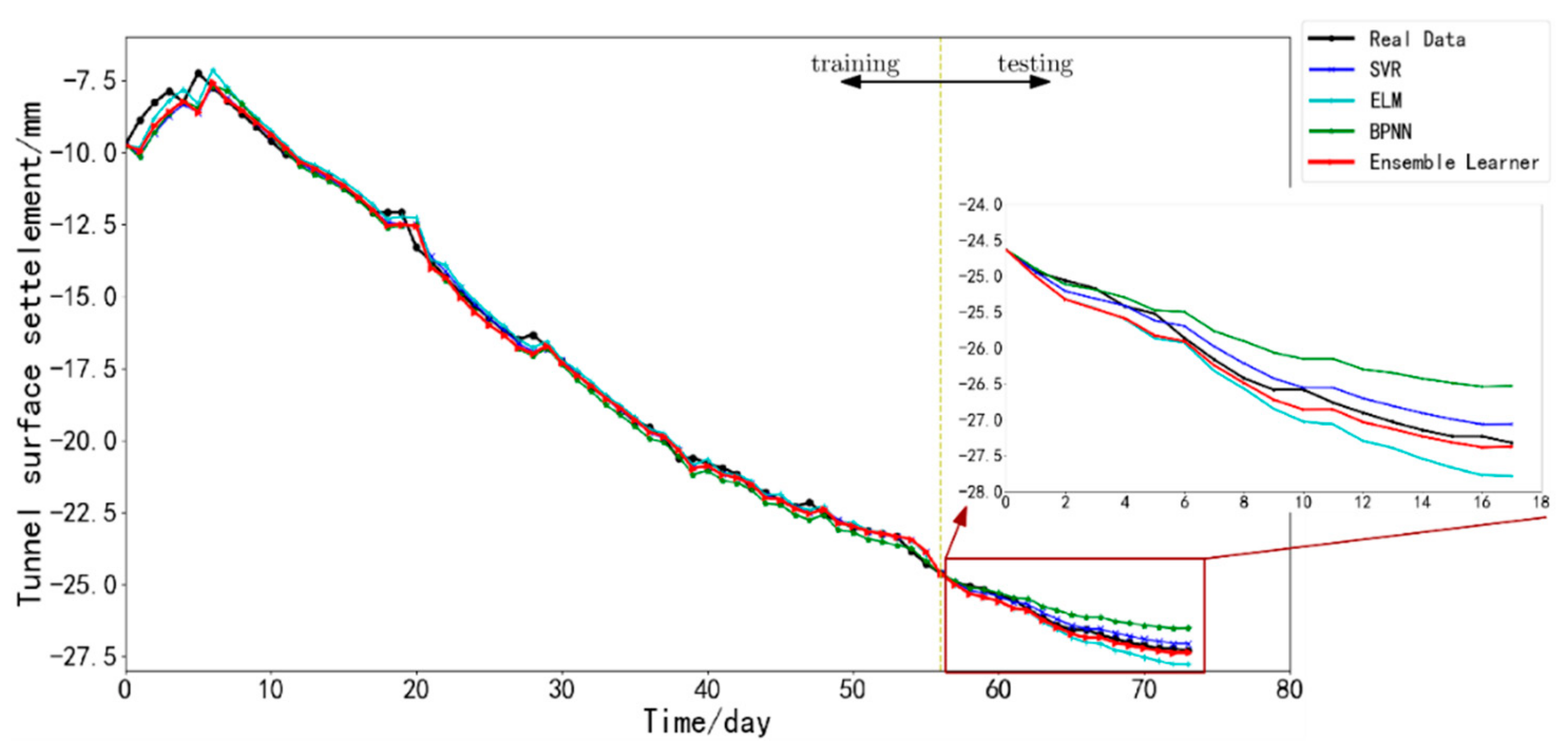

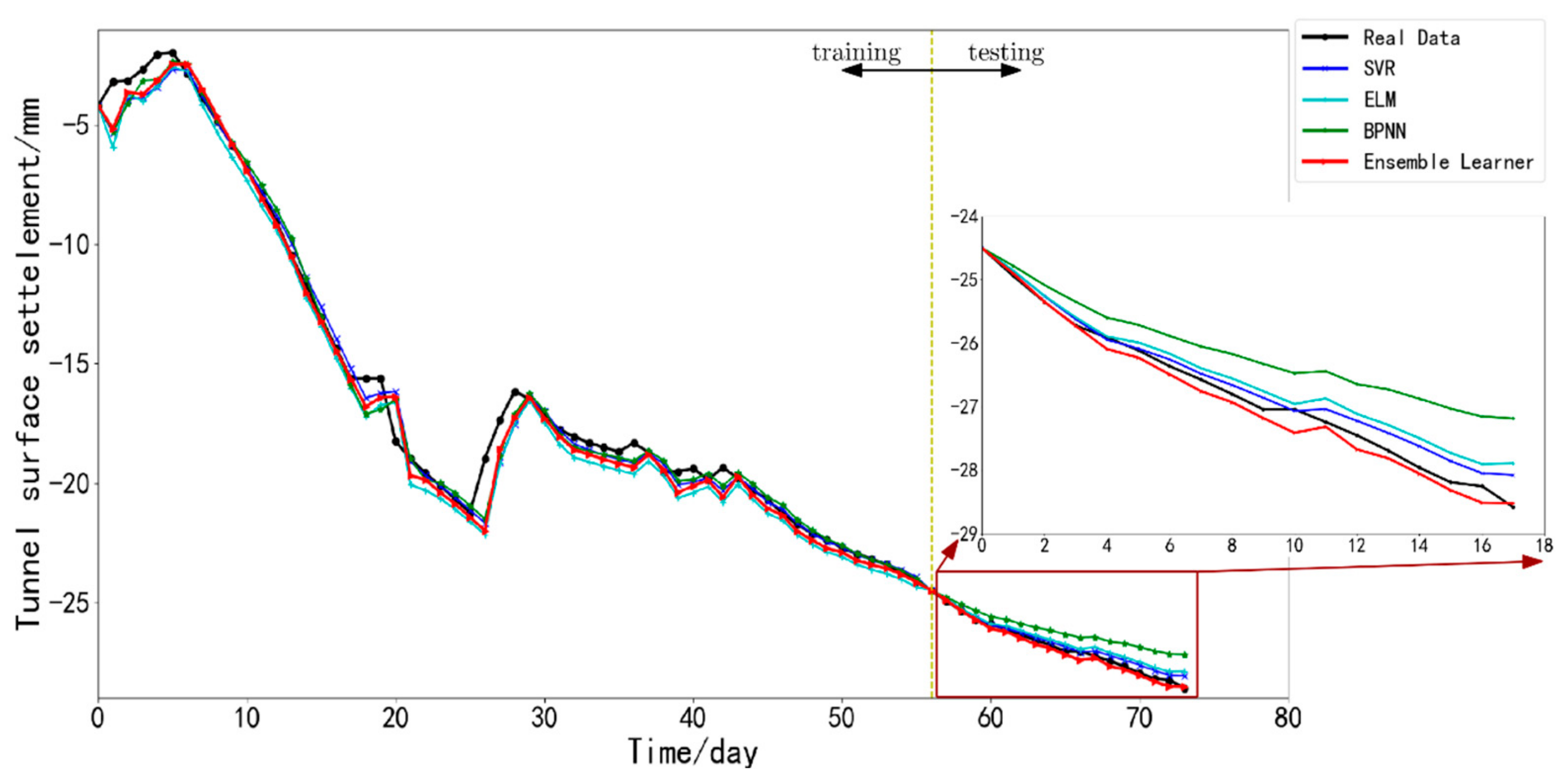

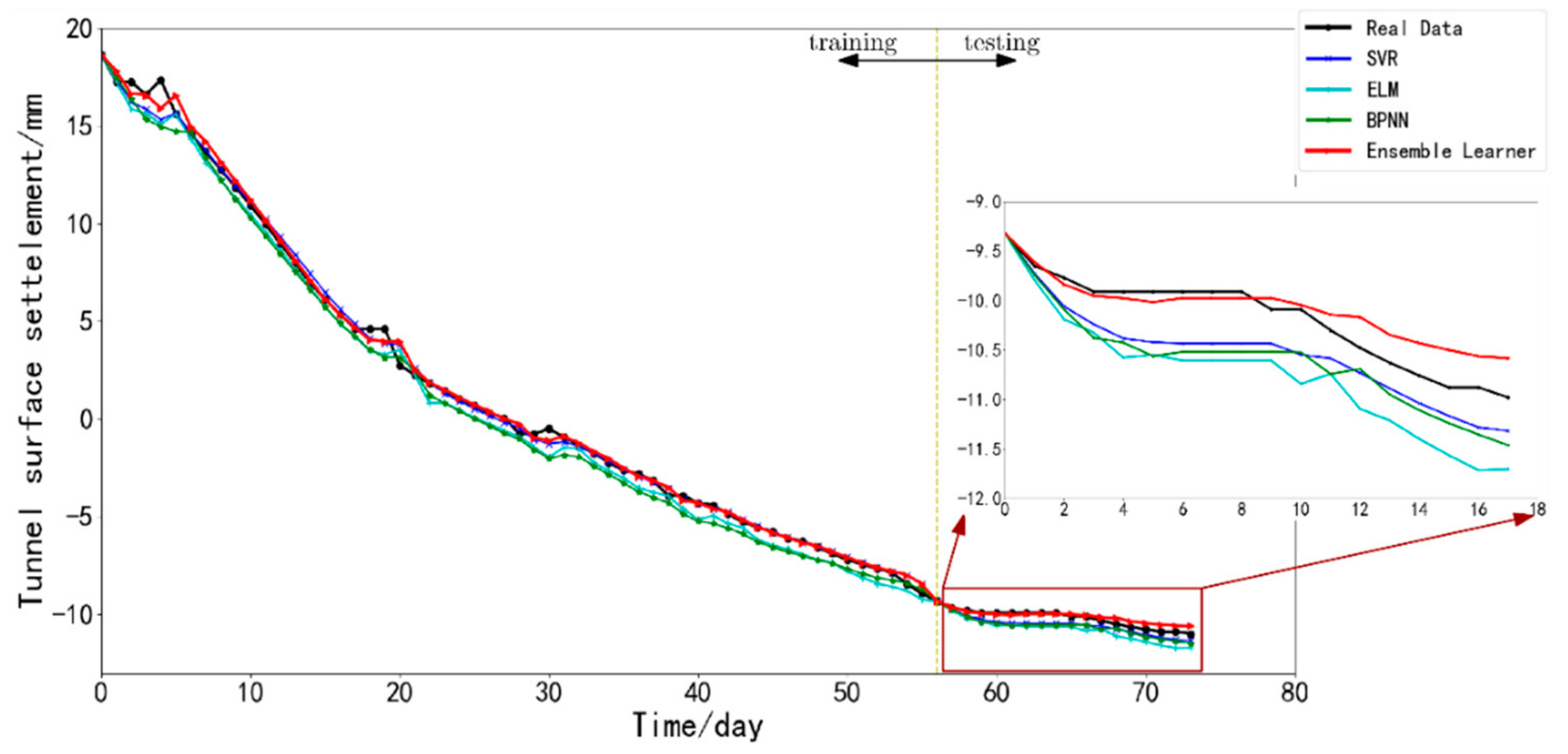

4. Results

5. Conclusions, Limitations, and Future Works

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Zhang, L.; Wu, X.; Ding, L.; Skibniewski, M.J. A novel model for risk assessment of adjacent buildings in tunneling environments. Build. Environ. 2013, 65, 185–194.

2. Hu, M.; Feng, X.; Ji, Z.; Yan, K.; Zhou, S. A novel computational approach for discord search with local recurrence rates in multivariate time series. Inf. Sci. 2019, 477, 220–233.

3. Yan, K.; Wang, X.; Du, Y.; Jin, N.; Huang, H.; Zhou, H. Multi-Step Short-Term Power Consumption Forecasting with a Hybrid Deep Learning Strategy. Energies 2018, 11, 3089.

4. Li, H.Z.; Guo, S.; Li, C.J.; Sun, J.Q. A hybrid annual power load forecasting model based on generalized regression neural network with fruit fly optimization algorithm. Knowl. Based Syst. 2013, 37, 378–387.

5. Hu, M.; Li, W.; Yan, K.; Ji, Z.; Hu, H. Modern Machine Learning Techniques for Univariate Tunnel Settlement Forecasting: A Comparative Study. Math. Probl. Eng. 2019, 2019.

6. Yan, K.; Li, W.; Ji, Z.; Qi, M.; Du, Y. A Hybrid LSTM Neural Network for Energy Consumption Forecasting of Individual Households. IEEE Access 2019, 7, 157633–157642.

7. Yan, X.; Cui, B.; Xu, Y.; Shi, P.; Wang, Z. A Method of Information Protection for Collaborative Deep Learning under GAN Model Attack. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019.

8. Moghaddam, M.A.; Golmezergi, R.; Kolahan, F. Multi-variable measurements and optimization of GMAW parameters for API-X42 steel alloy using a hybrid BPNN–PSO approach. Measurement 2016, 92, 279–287.

9. Yan, K.; Ji, Z.; Lu, H.; Huang, J.; Shen, W.; Xue, Y. Fast and accurate classification of time series data using extended ELM: Application in fault diagnosis of air handling units. IEEE Trans. Syst. Man Cybern. Syst. 2017, 49, 1349–1356.

10. Yan, K.; Zhong, C.; Ji, Z.; Huang, J. Semi-supervised learning for early detection and diagnosis of various air handling unit faults. Energy Build. 2018, 181, 75–83.

11. Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

12. Xu, Y.; Gao, W.; Zeng, Q.; Wang, G.; Ren, J.; Zhang, Y. A feasible fuzzy-extended attribute-based access control technique. Secur. Commun. Netw. 2018, 2018.

13. Fang, Y.S.; Wu, C.T.; Chen, S.F.; Liu, C. An estimation of subsurface settlement due to shield tunneling. Tunn. Undergr. Space Technol. 2014, 44, 121–129.

14. Islam, M.N.; Gnanendran, C.T. Elastic-viscoplastic model for clays: Development, validation, and application. J. Eng. Mech. 2017, 143, 04017121.

15. Mei, G.; Zhao, X.; Wang, Z. Settlement prediction under linearly loading condition. Mar. Georesour. Geotechnol. 2015, 33, 92–97.

16. Hu, M.; Ji, Z.; Yan, K.; Guo, Y.; Feng, X.; Gong, J.; Dong, L. Detecting anomalies in time series data via a meta-feature based approach. IEEE Access 2018, 6, 27760–27776.

17. Ocak, I.; Seker, S.E. Calculation of surface settlements caused by EPBM tunneling using artificial neural network, SVM, and Gaussian processes. Environ. Earth Sci. 2013, 70, 1263–1276.

18. Azadi, M.; Pourakbar, S.; Kashfi, A. Assessment of optimum settlement of structure adjacent urban tunnel by using neural network methods. Tunn. Undergr. Space Technol. 2013, 37, 1–9.

19. Moghaddasi, M.R.; Noorian-Bidgoli, M. ICA-ANN, ANN and multiple regression models for prediction of surface settlement caused by tunneling. Tunn. Undergr. Space Technol. 2018, 79, 197–209.

20. Yan, K.; Ji, Z.; Shen, W. Online fault detection methods for chillers combining extended kalman filter and recursive one-class SVM. Neurocomputing 2017, 228, 205–212.

21. Yan, K.; Ma, L.; Dai, Y.; Shen, W.; Ji, Z.; Xie, D. Cost-sensitive and sequential feature selection for chiller fault detection and diagnosis. Int. J. Refrig. 2018, 86, 401–409.

22. Du, Y.; Yan, K.; Ren, Z.; Xiao, W. Designing localized MPPT for PV systems using fuzzy-weighted extreme learning machine. Energies 2018, 11, 2615.

23. Lu, H.; Meng, Y.; Yan, K.; Xue, Y.; Gao, Z. Classifying Non-linear Gene Expression Data Using a Novel Hybrid Rotation Forest Method. In International Conference on Intelligent Computing, Proceedings of the 13th International Conference, ICIC 2017, Liverpool, UK, 7–10 August 2017; Springer: Cham, Switzerland, 2017.

24. Zhong, C.; Yan, K.; Dai, Y.; Jin, N.; Lou, B. Energy Efficiency Solutions for Buildings: Automated Fault Diagnosis of Air Handling Units Using Generative Adversarial Networks. Energies 2019, 12, 527.

25. Tang, L.; Yu, L.; Wang, S.; Li, J.; Wang, S. A novel hybrid ensemble learning paradigm for nuclear energy consumption forecasting. Appl. Energy 2012, 93, 432–443.

26. Li, S.; Wang, P.; Goel, L. A novel wavelet-based ensemble method for short-term load forecasting with hybrid neural networks and feature selection. IEEE Trans. Power Syst. 2015, 31, 1788–1798.

27. Wang, H.Z.; Li, G.Q.; Wang, G.B.; Peng, J.C.; Jiang, H.; Liu, Y.T. Deep learning based ensemble approach for probabilistic wind power forecasting. Appl. Energy 2017, 188, 56–70.

28. Drucker, H. Improving regressors using boosting techniques. In Proceedings of the Fourteenth International Conference on Machine Learning ICML, Nashville, TN, USA, 8–12 July 1997; Volume 97, pp. 107–115.

29. Solomatine, D.P.; Shrestha, D.L. AdaBoost.RT: A boosting algorithm for regression problems. In Proceedings of the 2004 IEEE International Joint Conference on Neural Networks (IEEE Cat. No. 04CH37541), Budapest, Hungary, 25–29 July 2004; Volume 2, pp. 1163–1168.

30. Yan, K.; Lu, H. Evaluating ensemble learning impact on gene selection for automated cancer diagnosis. In International Workshop on Health Intelligence; Springer: Cham, Switzerland, 2019; pp. 183–186.

31. Zhang, P.; Yang, Z. A robust AdaBoost.RT based ensemble extreme learning machine. Math. Probl. Eng. 2015, 2015.

| Point | Proposed RMSE | Proposed MAE | Proposed MAPE (%) | SVR RMSE | SVR MAE | SVR MAPE (%) | BPNN RMSE | BPNN MAE | BPNN MAPE (%) | ELM RMSE | ELM MAE | ELM MAPE (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 181 | 0.2641 | 0.2041 | 0.5488 | 0.4158 | 0.3684 | 0.9950 | 1.7444 | 1.6349 | 4.4094 | 2.1531 | 2.0166 | 5.4360 |
| 182 | 0.2945 | 0.2470 | 1.2853 | 0.3675 | 0.3113 | 1.6157 | 0.3807 | 0.3168 | 1.6391 | 0.3067 | 0.2548 | 1.3304 |
| 184 | 0.2001 | 0.1587 | 0.4796 | 0.3960 | 0.3653 | 1.1017 | 0.4342 | 0.4027 | 1.2115 | 0.5547 | 0.5169 | 1.5559 |
| 188 | 0.1953 | 0.1370 | 0.7826 | 0.3773 | 0.3071 | 1.6968 | 0.4038 | 0.3261 | 1.7950 | 0.2463 | 0.1985 | 1.1090 |
| 189 | 0.1597 | 0.1325 | 0.5083 | 0.1702 | 0.1484 | 0.5587 | 0.4949 | 0.4084 | 1.5241 | 0.3200 | 0.2825 | 1.0639 |
| 190 | 0.1375 | 0.0867 | 0.3058 | 0.1610 | 0.1075 | 0.3793 | 0.1455 | 0.0973 | 0.3448 | 0.1650 | 0.1166 | 0.4132 |
| 210 | 0.1530 | 0.1239 | 0.4873 | 0.3358 | 0.2157 | 0.8560 | 0.2274 | 0.1741 | 0.6898 | 0.2254 | 0.1786 | 0.7066 |
| 225 | 0.1821 | 0.1473 | 0.3585 | 0.1986 | 0.1587 | 0.3862 | 0.2822 | 0.2132 | 0.5193 | 0.2624 | 0.1974 | 0.4808 |
| 235 | 0.2619 | 0.1979 | 0.9103 | 0.3779 | 0.3272 | 1.4988 | 0.5462 | 0.4614 | 2.1122 | 0.2867 | 0.2498 | 1.1457 |
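The comparison tables report RMSE, MAE, and MAPE (%) for each point, but the metric formulas are not restated in this section. The helpers below are a minimal sketch assuming the standard definitions (MAPE expressed in percent, matching the "(%)" column label); the function names and the toy values are illustrative only and are not data from the paper.

```python
import math
from typing import Sequence


def rmse(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y))


def mae(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)


def mape(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Mean absolute percentage error in percent (assumes every y != 0)."""
    return 100.0 * sum(abs((a - b) / a) for a, b in zip(y, y_hat)) / len(y)


observed = [1.0, 2.0, 4.0]     # toy values, not data from the paper
predicted = [1.0, 2.0, 5.0]
print(round(rmse(observed, predicted), 4),
      round(mae(observed, predicted), 4),
      round(mape(observed, predicted), 4))   # 0.5774 0.3333 8.3333
```

RMSE squares each residual before averaging, so a single large miss (here the last point) weighs more heavily in RMSE than in MAE; MAPE additionally scales each error by the observed value.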

| Point Number | Proposed RMSE | Proposed MAE | Proposed MAPE (%) | AdaBoost.RT + SVR RMSE | AdaBoost.RT + SVR MAE | AdaBoost.RT + SVR MAPE (%) | AdaBoost.RT + BPNN RMSE | AdaBoost.RT + BPNN MAE | AdaBoost.RT + BPNN MAPE (%) | AdaBoost.RT + ELM RMSE | AdaBoost.RT + ELM MAE | AdaBoost.RT + ELM MAPE (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 181 | 0.2641 | 0.2041 | 0.5488 | 0.3901 | 0.3395 | 0.9179 | 0.4201 | 0.3616 | 0.9723 | 1.2479 | 1.1167 | 3.0138 |
| 182 | 0.2945 | 0.2470 | 1.2853 | 0.3082 | 0.2645 | 1.3765 | 0.3019 | 0.2629 | 1.3661 | 0.3305 | 0.2862 | 1.4857 |
| 184 | 0.2001 | 0.1587 | 0.4796 | 0.1943 | 0.1618 | 0.4901 | 0.2392 | 0.1847 | 0.5615 | 0.4215 | 0.3913 | 1.1773 |
| 188 | 0.1953 | 0.1370 | 0.7826 | 0.2193 | 0.1569 | 0.8887 | 0.2981 | 0.2406 | 1.3338 | 0.1976 | 0.1475 | 0.8324 |
| 189 | 0.1597 | 0.1325 | 0.5083 | 0.2609 | 0.2131 | 0.8072 | 0.3616 | 0.2963 | 1.1064 | 0.3674 | 0.3031 | 1.1320 |
| 190 | 0.1375 | 0.0867 | 0.3058 | 0.1700 | 0.1168 | 0.4126 | 0.1569 | 0.1190 | 0.4271 | 0.1424 | 0.0933 | 0.3305 |
| 210 | 0.1530 | 0.1239 | 0.4873 | 0.2158 | 0.1829 | 0.7137 | 0.1884 | 0.1538 | 0.6063 | 0.1665 | 0.1410 | 0.5518 |
| 225 | 0.1821 | 0.1473 | 0.3585 | 0.1969 | 0.1565 | 0.3811 | 0.2090 | 0.1620 | 0.3945 | 0.2142 | 0.1649 | 0.4011 |
| 235 | 0.2619 | 0.1979 | 0.9103 | 0.3590 | 0.3061 | 1.3999 | 0.3327 | 0.2865 | 1.3114 | 0.2701 | 0.2362 | 1.0834 |
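One way to summarize the comparison against the other AdaBoost.RT variants is to count, for each metric, the points at which the proposed method has the strictly lowest error. The script below is a small illustrative sketch: the values are transcribed from the table above, and the short model keys (`RT+SVR` and so on) and the helper `count_wins` are this example's own names.

```python
from typing import Dict, List, Tuple

METRICS = ("RMSE", "MAE", "MAPE (%)")
Row = Dict[str, Tuple[float, float, float]]

# Transcribed from the table above: point -> model -> (RMSE, MAE, MAPE %)
TABLE: Dict[int, Row] = {
    181: {"Proposed": (0.2641, 0.2041, 0.5488), "RT+SVR": (0.3901, 0.3395, 0.9179),
          "RT+BPNN": (0.4201, 0.3616, 0.9723), "RT+ELM": (1.2479, 1.1167, 3.0138)},
    182: {"Proposed": (0.2945, 0.2470, 1.2853), "RT+SVR": (0.3082, 0.2645, 1.3765),
          "RT+BPNN": (0.3019, 0.2629, 1.3661), "RT+ELM": (0.3305, 0.2862, 1.4857)},
    184: {"Proposed": (0.2001, 0.1587, 0.4796), "RT+SVR": (0.1943, 0.1618, 0.4901),
          "RT+BPNN": (0.2392, 0.1847, 0.5615), "RT+ELM": (0.4215, 0.3913, 1.1773)},
    188: {"Proposed": (0.1953, 0.1370, 0.7826), "RT+SVR": (0.2193, 0.1569, 0.8887),
          "RT+BPNN": (0.2981, 0.2406, 1.3338), "RT+ELM": (0.1976, 0.1475, 0.8324)},
    189: {"Proposed": (0.1597, 0.1325, 0.5083), "RT+SVR": (0.2609, 0.2131, 0.8072),
          "RT+BPNN": (0.3616, 0.2963, 1.1064), "RT+ELM": (0.3674, 0.3031, 1.1320)},
    190: {"Proposed": (0.1375, 0.0867, 0.3058), "RT+SVR": (0.1700, 0.1168, 0.4126),
          "RT+BPNN": (0.1569, 0.1190, 0.4271), "RT+ELM": (0.1424, 0.0933, 0.3305)},
    210: {"Proposed": (0.1530, 0.1239, 0.4873), "RT+SVR": (0.2158, 0.1829, 0.7137),
          "RT+BPNN": (0.1884, 0.1538, 0.6063), "RT+ELM": (0.1665, 0.1410, 0.5518)},
    225: {"Proposed": (0.1821, 0.1473, 0.3585), "RT+SVR": (0.1969, 0.1565, 0.3811),
          "RT+BPNN": (0.2090, 0.1620, 0.3945), "RT+ELM": (0.2142, 0.1649, 0.4011)},
    235: {"Proposed": (0.2619, 0.1979, 0.9103), "RT+SVR": (0.3590, 0.3061, 1.3999),
          "RT+BPNN": (0.3327, 0.2865, 1.3114), "RT+ELM": (0.2701, 0.2362, 1.0834)},
}


def count_wins(table: Dict[int, Row], target: str = "Proposed"):
    """Per metric, count points where `target` is strictly lowest; list the rest."""
    wins = {m: 0 for m in METRICS}
    misses: Dict[str, List[int]] = {m: [] for m in METRICS}
    for point, row in sorted(table.items()):
        for k, metric in enumerate(METRICS):
            best_other = min(v[k] for name, v in row.items() if name != target)
            if row[target][k] < best_other:
                wins[metric] += 1
            else:
                misses[metric].append(point)
    return wins, misses


wins, misses = count_wins(TABLE)
print(wins)    # {'RMSE': 8, 'MAE': 9, 'MAPE (%)': 9}
print(misses)  # {'RMSE': [184], 'MAE': [], 'MAPE (%)': []}
```

The single miss is point 184, where AdaBoost.RT + SVR has a slightly lower RMSE (0.1943 versus 0.2001), while the proposed method still has the lower MAE and MAPE at that point.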

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yan, K.; Dai, Y.; Xu, M.; Mo, Y. Tunnel Surface Settlement Forecasting with Ensemble Learning. Sustainability 2020, 12, 232. https://doi.org/10.3390/su12010232