Patient Voice and Treatment Nonadherence in Cancer Care: A Scoping Review of Sentiment Analysis

Abstract

1. Introduction

Aim and Research Questions

2. Method

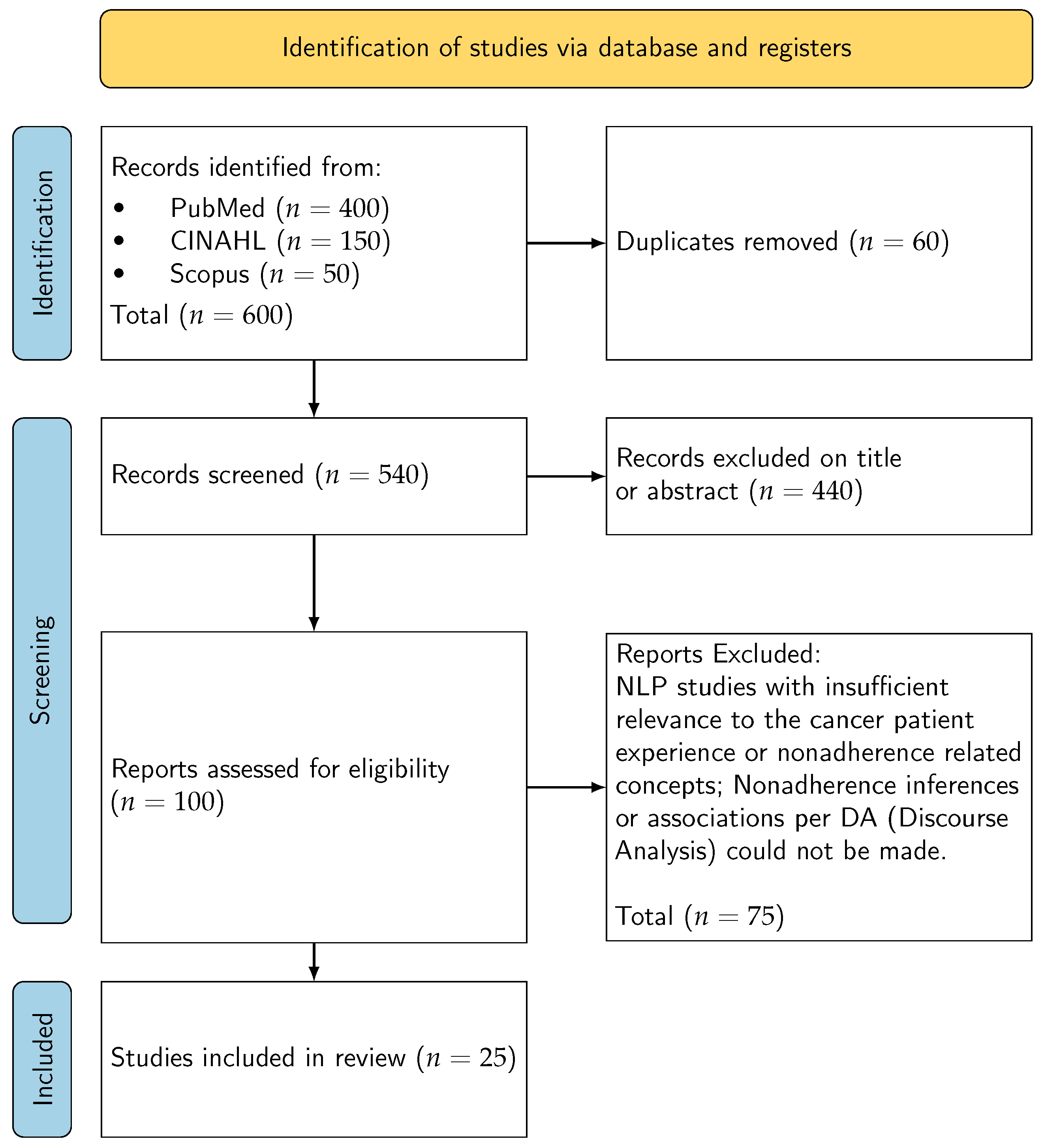

2.1. Design and Reporting

2.2. Operational Definitions

2.3. Eligibility Criteria

2.3.1. Inclusion

2.3.2. Operational Definitions

2.3.3. Exclusion

2.4. Information Sources and Search Strategy

- Concept A (cancer): (cancer* OR neoplasm* OR oncolog*)

- Concept B (NLP/SA): (“natural language processing” OR “text mining” OR sentiment OR emotion* OR classifier* OR lexicon* OR transformer*)

- Concept C (patient voice/platforms): (“social media” OR Twitter OR Reddit OR forum* OR blog* OR “patient review” OR “free text”)

- Concept D (adherence/concordance): (adheren* OR nonadheren* OR concordan* OR complian* OR persistence OR discontinu* OR “shared decision*”)
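This section does not spell out how the four concept blocks are combined. The conventional approach, assumed here, is to join the synonyms within each concept with OR and to join the concepts with AND. The Python sketch below builds such a query string from the listed terms. It uses a generic Boolean syntax and does not model any database's field tags or truncation rules, so treat it as an illustration rather than the search that was actually run.

```python
# Minimal sketch: assemble a generic Boolean query from the concept blocks.
# Assumption: terms within a concept are OR-ed and concepts are AND-ed;
# database-specific field tags and truncation behaviour are not modelled.

CONCEPTS: dict[str, list[str]] = {
    "A_cancer": ["cancer*", "neoplasm*", "oncolog*"],
    "B_nlp_sa": ['"natural language processing"', '"text mining"', "sentiment",
                 "emotion*", "classifier*", "lexicon*", "transformer*"],
    "C_patient_voice": ['"social media"', "Twitter", "Reddit", "forum*", "blog*",
                        '"patient review"', '"free text"'],
    "D_adherence": ["adheren*", "nonadheren*", "concordan*", "complian*",
                    "persistence", "discontinu*", '"shared decision*"'],
}


def or_block(terms: list[str]) -> str:
    """Join one concept's synonyms with OR inside parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(concepts: dict[str, list[str]]) -> str:
    """Combine every concept block with AND, in dictionary order."""
    return " AND ".join(or_block(terms) for terms in concepts.values())


if __name__ == "__main__":
    print(build_query(CONCEPTS))
```

Each concept is a separate dictionary entry. To test how much one block (for example Concept D) narrows the results, pass a smaller dictionary to `build_query`.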

Automation Sensitivity

2.5. Study Selection

2.6. Data Charting

2.7. Critical Appraisal

2.8. Ethical Considerations for Online Patient-Generated Data

2.9. Synthesis Approach

2.10. Synthesis Without Meta-analysis (SWiM)

3. Results

3.1. Study Selection and Overview

3.2. Characteristics of Included Studies

3.3. Reliability and Validation of NLP Methods

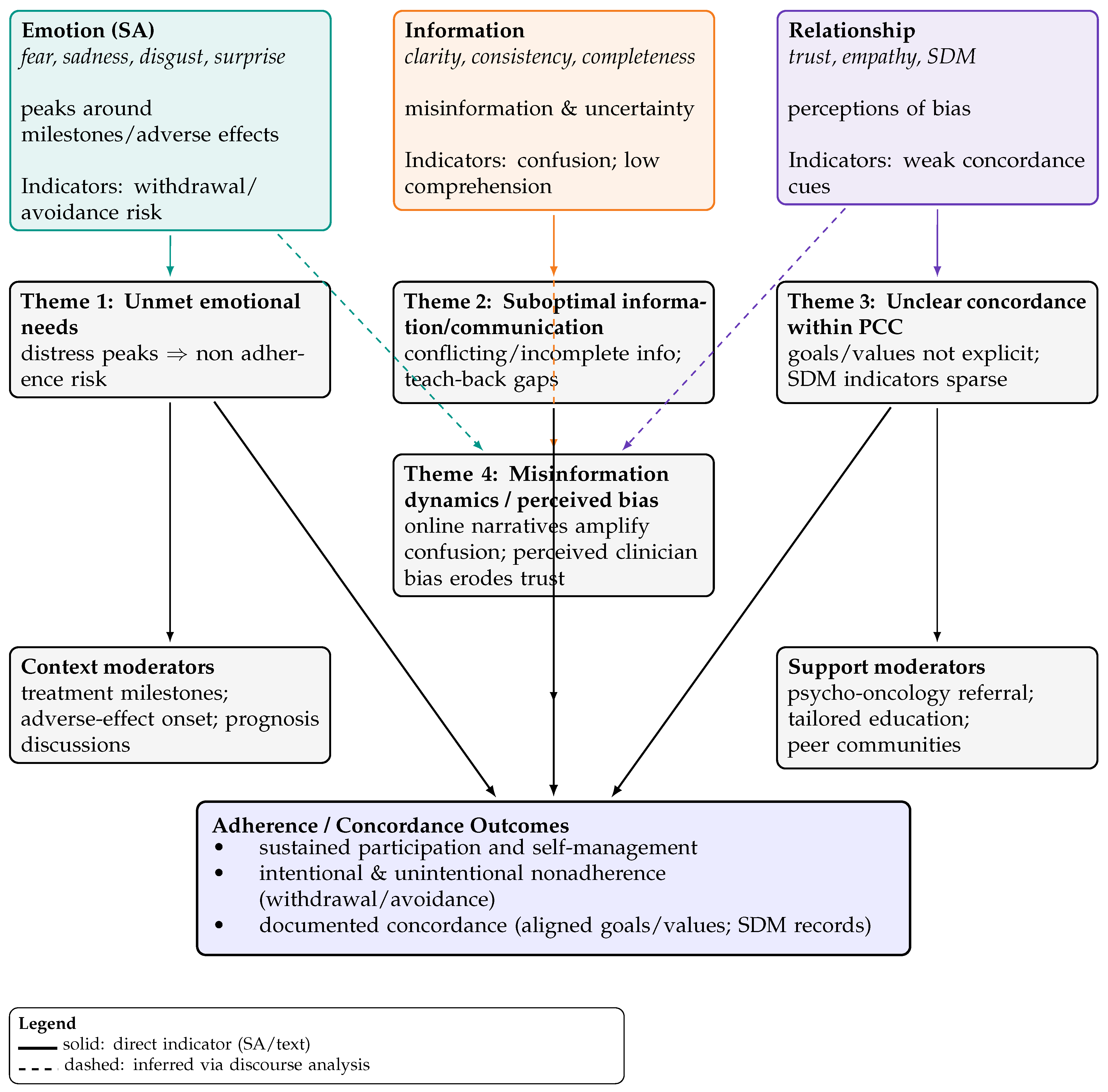

3.4. Emergent Patient-Side Themes

3.5. Theme Prevalence Across Studies

3.6. Narrative Summary

4. Discussion

4.1. Linking Findings to Review Questions

4.2. Conceptual Contributions

4.3. Platform and Methodological Insights

4.4. Interpretation of Findings

4.5. Implications for Practice

4.6. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Public Involvement Statement

Guidelines and Standards Statement

Use of Artificial Intelligence

References

1. Belcher, S.M.; Mackler, E.; Muluneh, B.; Ginex, P.K.; Anderson, M.K.; Bettencourt, E.; DasGupta, R.K.; Elliott, J.; Hall, E.; Karlin, M.; et al. ONS Guidelines™ to Support Patient Adherence to Oral Anticancer Medications. Oncol. Nurs. Forum 2022, 49, 279–295.

2. Sabaté, E.; WHO. Adherence to Long-Term Therapies: Evidence for Action; World Health Organization: Geneva, Switzerland, 2003; 198p. Available online: https://www.google.com.au/books/edition/Adherence_to_Long_term_Therapies/kcYUTH8rPiwC?hl=en&gbpv=0 (accessed on 1 July 2023).

3. Brown, M.T.; Bussell, J.K. Medication adherence: WHO cares? Mayo Clin. Proc. 2011, 86, 304–314.

4. Wreyford, L.; Gururajan, R.; Zhou, X. When can cancer patient treatment nonadherence be considered intentional or unintentional? A scoping review. Unkn. J. 2023, 18, e0282180.

5. Chung, E.H.; Mebane, S.; Harris, B.S.; White, E.; Acharya, K.S. Oncofertility research pitfall? Recall bias in young adult cancer survivors. F&S Rep. 2023, 4, 98–103.

6. Felipe, D.; David, E.; Mario, M.; Qiao, W. Bias in patient satisfaction surveys: A threat to measuring healthcare quality. BMJ Glob. Health 2018, 3, e000694.

7. Lindberg, P.; Netter, P.; Koller, M.; Steinger, B.; Klinkhammer-Schalke, M. Breast cancer survivors’ recollection of their quality of life: Identifying determinants of recall bias in a longitudinal population-based trial. PLoS ONE 2017, 12, e0171519.

8. Shah, A.M.; Yan, X.; Tariq, S.; Ali, M. What patients like or dislike in physicians: Analyzing drivers of patient satisfaction and dissatisfaction using a digital topic modeling approach. Inf. Process. Manag. 2021, 58, 102516.

9. Nawab, K.; Ramsey, G.; Schreiber, R. Natural Language Processing to Extract Meaningful Information from Patient Experience Feedback. Appl. Clin. Inform. 2020, 11, 242–252.

10. Ng, J.; Luk, B. Patient satisfaction: Concept analysis in the healthcare context. Patient Educ. Couns. 2019, 102, 790–796.

11. Lasala, R.; Santoleri, F. Association between adherence to oral therapies in cancer patients and clinical outcome: A systematic review of the literature. Br. J. Clin. Pharmacol. 2022, 88, 1999–2018.

12. Yoder, A.K.; Dong, E.; Yu, X.; Echeverria, A.; Sharma, S.; Montealegre, J.; Ludwig, M.S. Effect of Quality of Life on Radiation Adherence for Patients with Cervical Cancer in an Urban Safety Net Health System. Int. J. Radiat. Oncol. Biol. Phys. 2023, 116, 182–190.

13. Clark, J.; Jones, T.; Alapati, A.; Ukandu, D.; Danforth, D.; Dodds, D. A Sentiment Analysis of Breast Cancer Treatment Experiences and Healthcare Perceptions Across Twitter. arXiv 2018, arXiv:1805.09959.

14. Turpen, T.; Matthews, L.; Matthews, S.G.; Guney, E. Beneath the surface of talking about physicians: A statistical model of language for patient experience comments. Patient Exp. J. 2019, 6, 51–58.

15. Lai, D.M.; Rayson, P.; Payne, S.; Liu, Y. Analysing Emotions in Cancer Narratives: A Corpus-Driven Approach. In Proceedings of the First Workshop on Patient-Oriented Language Processing (CL4Health) @ LREC-COLING 2024, Torino, Italy; ELRA: Paris, France; ICCL: Cham, Switzerland, 2024; pp. 73–83.

16. Mishra, M.V.; Bennett, M.; Vincent, A.; Lee, O.T.; Lallas, C.D.; Trabulsi, E.J.; Gomella, L.G.; Dicker, A.P.; Showalter, T.N. Identifying barriers to patient acceptance of active surveillance: Content analysis of online patient communications. PLoS ONE 2013, 8, e68563.

17. Yin, Z.; Malin, B.; Warner, J.; Hsueh, P.; Chen, C. The Power of the Patient Voice: Learning Indicators of Treatment Adherence from an Online Breast Cancer Forum. In Proceedings of the Eleventh International AAAI Conference on Web and Social Media, Montreal, QC, Canada, 15–18 May 2017.

18. Babel, R.; Taneja, R.; Mondello, M.; Monaco, A.; Donde, S. Artificial Intelligence Solutions to Increase Medication Adherence in Patients with Non-communicable Diseases. Front. Digit. Health 2021, 3, 669869.

19. Robinson, S.; Vicha, E. Twitter Sentiment at the Hospital and Patient Level as a Measure of Pediatric Patient Experience. Open J. Pediatr. 2021, 11, 706–722.

20. Sim, J.A.; Huang, X.; Horan, M.R.; Baker, J.N.; Huang, I.C. Using natural language processing to analyze unstructured patient-reported outcomes data derived from electronic health records for cancer populations: A systematic review. Expert Rev. Pharmacoecon. Outcomes Res. 2024, 24, 467–475.

21. Li, C.; Fu, J.; Lai, J.; Sun, L.; Zhou, C.; Li, W.; Jian, B.; Deng, S.; Zhang, Y.; Guo, Z.; et al. Construction of an Emotional Lexicon of Patients with Breast Cancer: Development and Sentiment Analysis. J. Med. Internet Res. 2023, 25, e44897.

22. Chichua, M.; Filipponi, C.; Mazzoni, D.; Pravettoni, G. The emotional side of taking part in a cancer clinical trial. PLoS ONE 2023, 18, e0284268.

23. Freedman, R.A.; Viswanath, K.; Vaz-Luis, I.; Keating, N.L. Learning from social media: Utilizing advanced data extraction techniques to understand barriers to breast cancer treatment. Breast Cancer Res. Treat. 2016, 158, 395–405.

24. Verberne, S.; Batenburg, A.; Sanders, R.; van Eenbergen, M.; Das, E.; Lambooij, M.S. Analyzing Empowerment Processes Among Cancer Patients in an Online Community: A Text Mining Approach. JMIR Cancer 2019, 5, e9887.

25. Greene, S.M.; Tuzzio, L.; Cherkin, D. A framework for making patient-centered care front and center. Perm. J. 2012, 16, 49–53.

26. Robinson, J.H.; Callister, L.C.; Berry, J.A.; Dearing, K.A. Patient-centered care and adherence: Definitions and applications to improve outcomes. J. Am. Acad. Nurs. Pract. 2008, 20, 600–607.

27. Fix, G.M.; VanDeusen Lukas, C.; Bolton, R.E.; Hill, J.N.; Mueller, N.; LaVela, S.L.; Bokhour, B.G. Patient-centred care is a way of doing things: How healthcare employees conceptualize patient-centred care. Health Expect. 2018, 21, 300–307.

28. Mead, H.; Wang, Y.; Cleary, S.; Arem, H.; Pratt-Chapman, L.M. Defining a patient-centered approach to cancer survivorship care: Development of the patient centered survivorship care index (PC-SCI). BMC Health Serv. Res. 2021, 21, 1353.

29. Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.; Horsley, T.; Weeks, L.; et al. PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Ann. Intern. Med. 2018, 169, 467–473.

30. Australian Commission on Safety and Quality in Health Care. Australian Charter of Healthcare Rights, 2nd ed.; Australian Commission on Safety and Quality in Health Care: Sydney, Australia, 2020. Available online: https://www.safetyandquality.gov.au/publications-and-resources/resource-library/australian-charter-healthcare-rights-second-edition-a4-accessible (accessed on 1 July 2023).

31. Moralejo, D.; Ogunremi, T.; Dunn, K. Critical Appraisal Toolkit (CAT) for assessing multiple types of evidence. Can. Commun. Dis. Rep. 2017, 43, 176–181.

32. Skeat, J.; Roddam, H. The qual-CAT: Applying a rapid review approach to qualitative research to support clinical decision-making in speech-language pathology practice. Evid.-Based Commun. Assess. Interv. 2019, 13, 3–14.

33. CASP. Critical Appraisal Skills Programme; CASP UK-OAP Ltd.: Oxford, UK, 2023. Available online: https://casp-uk.net/casp-tools-checklists/ (accessed on 23 September 2024).

34. Cho, J.E.; Tang, N.; Pitaro, N.; Bai, H.; Cooke, P.V.; Arvind, V. Sentiment Analysis of Online Patient-Written Reviews of Vascular Surgeons. Ann. Vasc. Surg. 2023, 88, 249–255.

35. Masiero, M.; Spada, G.E.; Fragale, E.; Pezzolato, M.; Munzone, E.; Sanchini, V.; Pietrobon, R.; Teixeira, L.; Valencia, M.; Machiavelli, A.; et al. Adherence to oral anticancer treatments: Network and sentiment analysis exploring perceived internal and external determinants in patients with metastatic breast cancer. Support. Care Cancer 2024, 32, 458.

36. Meksawasdichai, S.; Lerksuthirat, T.; Ongphiphadhanakul, B.; Sriphrapradang, C. Perspectives and Experiences of Patients with Thyroid Cancer at a Global Level: Retrospective Descriptive Study of Twitter Data. JMIR Cancer 2023, 9, e48786.

37. Podina, I.R.; Bucur, A.-M.; Todea, D.; Fodor, L.; Luca, A.; Dinu, L.P.; Boian, R.F. Mental health at different stages of cancer survival: A natural language processing study of Reddit posts. Front. Psychol. 2023, 14, 1150227.

38. Mazza, M.; Piperis, M.; Aasaithambi, S.; Chauhan, J.; Sagkriotis, A.; Vieira, C. Social Media Listening to Understand the Lived Experience of Individuals in Europe With Metastatic Breast Cancer: A Systematic Search and Content Analysis Study. Front. Oncol. 2022, 12, 863641.

39. Watanabe, T.; Yada, S.; Aramaki, E.; Yajima, H.; Kizaki, H.; Hori, S. Extracting Multiple Worries from Breast Cancer Patient Blogs Using Multilabel Classification with the Natural Language Processing Model Bidirectional Encoder Representations from Transformers: Infodemiology Study of Blogs. JMIR Cancer 2022, 8, e37840.

40. Cercato, M.C.; Colella, E.; Fabi, A.; Bertazzi, I.; Giardina, B.G.; Di Ridolfi, P.; Mondati, M.; Petitti, P.; Bigiarini, L.; Scarinci, V.; et al. Narrative medicine: Feasibility of a digital narrative diary application in oncology. J. Int. Med. Res. 2021, 50, 1–12.

41. Vehviläinen-Julkunen, K.; Turpeinen, S.; Kvist, T.; Ryden-Kortelainen, M.; Nelimarkka, S.; Enshaeifar, S.; Faithfull, S. Experience of Ambulatory Cancer Care: Understanding Patients’ Perspectives of Quality Using Sentiment Analysis. Cancer Nurs. 2021, 44, E331–E338.

42. Law, E.H.; Auil, M.J.; Spears, P.A.; Berg, K.; Winnette, R. Voice Analysis of Cancer Experiences Among Patients with Breast Cancer: VOICE-BC. J. Patient Exp. 2021, 8, 23743735211048058.

43. Yerrapragada, G.; Siadimas, A.; Babaeian, A.; Sharma, V.; O’Neill, T.J. Machine Learning to Predict Tamoxifen Nonadherence Among US Commercially Insured Patients with Metastatic Breast Cancer. JCO Clin. Cancer Inform. 2021, 5, 814–825.

44. Moraliyage, H.; De Silva, D.; Ranasinghe, W.; Adikari, A.; Alahakoon, D.; Prasad, R.; Lawrentschuk, N.; Bolton, D. Cancer in Lockdown: Impact of the COVID-19 Pandemic on Patients with Cancer. Oncologist 2021, 26, e342–e344.

45. Balakrishnan, A.; Idicula, S.M.; Jones, J. Deep learning-based analysis of sentiment dynamics in online cancer community forums: An experience. Health Inform. J. 2021, 27, 14604582211007537.

46. Arditi, C.; Walther, D.; Gilles, I.; Lesage, S.; Griesser, A.-C.; Bienvenu, C.; Eicher, M.; Peytremann-Bridevaux, I. Computer-assisted textual analysis of free-text comments in the Swiss Cancer Patient Experiences (SCAPE) survey. BMC Health Serv. Res. 2020, 20, 1029.

47. Adikari, A.; Ranasinghe, W.; de Silva, D.; Alahakoon, D.; Prasad, R.; Lawrentschuk, N.; Bolton, D. Can online support groups address psychological morbidity of cancer patients? An AI-based investigation of prostate cancer trajectories. PLoS ONE 2020, 15, e0229361.

48. Mikal, J.; Hurst, S.; Conway, M. Codifying Online Social Support for Breast Cancer Patients: Retrospective Qualitative Assessment. J. Med. Internet Res. 2019, 21, e12880.

49. Elbers, L.A.; Minot, J.R.; Gramling, R.; Brophy, M.T.; Do, N.V. Sentiment analysis of medical record notes for lung cancer patients at the Department of Veterans Affairs. PLoS ONE 2023, 18, e0280931.

50. Patel, M.R.; Friese, C.R.; Mendelsohn-Victor, K.; Fauer, A.J.; Ghosh, B.; Ramakrishnan, A.; Bedard, L.; Griggs, J.J.; Manojlovich, M. Clinician Perspectives on Electronic Health Records, Communication, and Patient Safety Across Diverse Medical Oncology Practices. J. Oncol. Pract. 2019, 15, e529–e536.

| Author and Year | Method | Data Sources | Sample | Results | NLP Technique | Nonadherence/Inference |
|---|---|---|---|---|---|---|
| Clark et al., 2018 [13] | Quantitative | Twitter | ∼5.3 million “breast cancer”-related tweets. CAT: Strength = Moderate; Quality = Medium | Invisible patient-reported outcomes (iPROs) captured; positive experiences shared; fear of legislation causing loss of coverage. | Supervised machine learning combined with NLP | Method of Inference: |
| Turpen et al., 2019 [14] | Analysis of patient comments from surveys | Anonymous patient satisfaction surveys | 25,161 surveys. CAT: Strength = Moderate; Quality = Medium | Statistically significant differences in the language used for higher- vs. lower-rated physicians. | Frequency of 300 pre-selected n-grams | Method of Inference: |
| Lai et al., 2024 [15] | Quantitative (corpus-driven emotion analysis) | Cancer narratives corpus | — | Corpus-driven analysis of emotions in cancer narratives highlights emotion categories relevant to adherence/concordance. | Corpus linguistics + NLP | Method of Inference: |
| Mishra et al., 2013 [16] | Qualitative | Online conversations (prostate cancer patients) | 34 websites; 3499 online conversations. CAT: Strength = Weak; Quality = Low | Patients often believed specialist information was biased. | NLP to identify content and sentiment; ML; internal data dictionary | Method of Inference: |
| Yin et al., 2017 [17] | Quantitative | Breastcancer.org | Retrospective study of 130,000 posts from 10,000 patients over 9 years. CAT: Strength = Moderate; Quality = Medium | Results correlated with traditional adherence surveys; emotions and personality traits were readily detected online; the scale of online data increases relevance. | Logistic regression (ML); emotion analysis | Method of Inference: |
| Li et al., 2023 [21] | Qualitative | Weibo (Chinese social media platform) | 150 written materials; 17 interviews; 6689 posts/comments. CAT: Strength = Weak; Quality = Low | An emotional lexicon with fine-grained categories offers a new perspective for recognizing emotions and needs and enables tailored emotional management plans. | Emotional lexicon; manual annotation (two general lexicons + a breast cancer-specific lexicon) | Method of Inference: |
| Chichua et al., 2023 [22] | Quantitative | Public posts about clinical trials; Reddit communities | 129 cancer patients; 112 caregivers. CAT: Strength = Moderate; Quality = Medium | Fear was the most prevalent emotion identified. | Keywords; NRC Emotion Lexicon; sentiment analysis | Method of Inference: |
| Freedman et al., 2016 [23] | Qualitative | Message boards; blogs; topical sites; content-sharing sites; social networks | 1,024,041 social media posts about breast cancer treatment. CAT: Strength = Moderate; Quality = Medium | Fear was the most common emotional sentiment expressed. | Machine learning (ConsumerSphere software) | Method of Inference: |
| Verberne et al., 2019 [24] | Posts labelled for empowerment and psychological processes | Forum for cancer patients and relatives | 5534 messages in 1708 threads by 2071 users. CAT: Strength = Weak; Quality = Medium | The need for informational support exceeded the need for emotional support. | LIWC | Method of Inference: |
| Cho et al., 2023 [34] | Quantitative (sentiment analysis and machine learning) | Society for Vascular Surgery (SVS) member directory cross-referenced with a patient–physician review website | 1799 vascular surgeons. CAT: Strength = Weak; Quality = Medium | The positivity/negativity of reviews related largely to words associated with the patient–doctor experience and pain. | Word-frequency assessments; multivariable analyses | Method of Inference: |
| Masiero et al., 2024 [35] | Qualitative | Division of Senology, European Institute of Oncology | 19 female patients with metastatic breast cancer. CAT: Strength = Moderate; Quality = Medium | Themes: individual clinical pathway; barriers to adherence; resources for adherence; perception of new technologies. | Word-cloud plots; network analysis; sentiment analysis | Method of Inference: |
| Meksawasdichai et al., 2023 [36] | Quantitative | Retrospective tweets relevant to thyroid cancer | 13,135 tweets. CAT: Strength = Moderate; Quality = Low | Twitter may provide an opportunity to improve patient–physician engagement or serve as a research data source. | Twitter scraping; sentiment analysis | Method of Inference: |
| Podina et al., 2023 [37] | Mixed methods | Reddit | 187 users; 72,524 posts. CAT: Strength = Moderate; Quality = Medium | Short-term survivors are more likely than long-term survivors to experience depression; support for daily needs is lacking. | Lexicon and machine learning | Method of Inference: |
| Mazza et al., 2022 [38] | Non-interventional retrospective analysis of public social media | Social media posts (Twitter; patient forums) | 76,456 conversations during 2018–2020. CAT: Strength = Moderate; Quality = Medium | Twitter was the most common platform; 61% of posts were authored by patients, 15% by friends/family, and 14% by caregivers. | Predefined search string; content analysis | Method of Inference: |
| Watanabe et al., 2022 [39] | Quantitative | Blog posts by patients with breast cancer in Japan | 2272 blog posts. CAT: Strength = Moderate; Quality = Medium | Results can help identify worries and provide timely social support. | BERT (context-aware NLP) | Method of Inference: |
| Cercato et al., 2021 [40] | Qualitative (focus groups; thematic analysis) | National Cancer Institute (Rome) | 31 cancer patients. CAT: Strength = Weak; Quality = Medium | Digital narrative medicine could improve the patient–oncologist relationship through greater patient input. | Narrative prompts for patients | Method of Inference: |
| Vehviläinen-Julkunen et al., 2021 [41] | Mixed methods (survey sentiment analysis + focus groups) | National Cancer Patient Survey data; focus groups | 92 participants (average age >65 years) and 7 focus groups (31 patients). CAT: Strength = Moderate; Quality = Medium | Automated NLP sentiment analysis, supported by focus groups, informed the initial thematic analysis. | Automated sentiment analysis algorithm + focus groups | Method of Inference: |
| Law et al., 2021 [42] | Observational (voice/text analysis) | Online breast cancer forums | ∼15,000 posts; 3906 unique users. CAT: Strength = Moderate; Quality = Medium | Engagement scores ranked relationships with healthcare professionals (HCPs) highly; information needs were extremely high. | Lexicon-based analysis | Method of Inference: |
| Yerrapragada et al., 2021 [43] | Machine learning (insurance claims; population-based) | Commercial claims and encounters; Medicare claims | 3022 breast cancer patients. CAT: Strength = Moderate; Quality = High | 48% of patients were nonadherent to tamoxifen. | Algorithms trained to predict nonadherence from claims features | Method of Inference: |
| Moraliyage et al., 2021 [44] | Mixed methods (quantitative ML/qualitative NLP) | Online support groups; Twitter | 2,469,822 tweets and 21,800 patient discussions. CAT: Strength = Moderate; Quality = Medium | Cancer patients’ information needs, expectations, mental health, and emotional wellbeing can be extracted. | PRIME on Twitter; machine learning algorithms | Method of Inference: |
| Balakrishnan et al., 2021 [45] | Quantitative | Breastcancer.org (3 OHS forums) | 150,000 posts. CAT: Strength = Moderate; Quality = Medium | Deep learning outperformed conventional machine learning approaches. | Co-training: word embedding and sentiment embedding; domain-dependent lexicon | Method of Inference: |
| Shah et al., 2021 [8] | Quantitative | National Health Service subsidiary website | 53,724 online reviews of 3372 doctors. CAT: Strength = Moderate; Quality = Medium | Unstructured text mining identified key topics of satisfaction and dissatisfaction. | Sentic-LDA topic model; text mining | Method of Inference: |
| Arditi et al., 2020 [46] | Cross-sectional survey (free-text comments) | Swiss Cancer Patient Experiences (SCAPE) survey | 844 patient comments. CAT: Strength = Moderate; Quality = Medium | Free text lets patients express themselves “in their own words”, enhancing patient-centred care. | Computer-assisted textual analysis; manual expert corpus formatting | Method of Inference: |
| Adikari et al., 2020 [47] | Quantitative/Qualitative | Conversations in ten international online cancer support groups (OCSGs) | 18,496 patients; 277,805 conversations. CAT: Strength = Moderate; Quality = Medium | Patients who joined before treatment showed improved emotions; long-term participation increased wellbeing; negative emotions were lower after 12 months than post-treatment. | Validated AI techniques and NLP framework | Method of Inference: |
| Mikal et al., 2019 [48] | Qualitative | | 30 breast cancer survivors; ∼100,000 lines of data. CAT: Strength = Weak; Quality = Medium | The coding schema identified social support exchange at diagnosis and at transition off therapy; social media support buffers stress. | Systematic coding (retrospective) | Method of Inference: |
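Several included studies score text against a dictionary or lexicon: the NRC Emotion Lexicon in Chichua et al. [22], LIWC in Verberne et al. [24], and a breast cancer-specific emotional lexicon in Li et al. [21]. The basic idea is that each word maps to zero or more emotion categories, and a post's profile is the number of matches per category. The Python sketch below illustrates this with a tiny hypothetical lexicon. It is not the NRC lexicon or LIWC, and it ignores negation, multiword expressions, and intensity weighting.

```python
import re
from collections import Counter
from typing import Optional

# Hypothetical toy lexicon for illustration only; the included studies used
# curated resources such as the NRC Emotion Lexicon or LIWC dictionaries.
TOY_LEXICON: dict[str, set[str]] = {
    "scared": {"fear"},
    "afraid": {"fear"},
    "worried": {"fear"},
    "hopeless": {"sadness"},
    "cry": {"sadness"},
    "nauseous": {"disgust"},
    "grateful": {"joy"},
    "relieved": {"joy"},
}

# Lower-case word tokens, keeping apostrophes inside contractions.
TOKEN_RE = re.compile(r"[a-z']+")


def emotion_profile(text: str, lexicon: dict[str, set[str]] = TOY_LEXICON) -> Counter:
    """Count lexicon hits per emotion category (no negation handling)."""
    counts: Counter = Counter()
    for token in TOKEN_RE.findall(text.lower()):
        for emotion in lexicon.get(token, ()):
            counts[emotion] += 1
    return counts


def dominant_emotion(posts: list[str]) -> Optional[str]:
    """Sum profiles over a corpus and return the most frequent emotion, if any."""
    total: Counter = Counter()
    for post in posts:
        total.update(emotion_profile(post))
    return total.most_common(1)[0][0] if total else None
```

For example, `dominant_emotion(["I'm scared the tablets will make me nauseous", "So worried about the next scan", "Relieved today"])` sums to two fear hits, one disgust hit and one joy hit, and returns `"fear"`. Raw counts grow with post length. LIWC, for instance, reports category hits as a percentage of total words, and dividing each count by the token total would add the same normalisation here.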

| Theme | % of Selected Sources |
|---|---|
| Unmet emotional needs (distress, fear, sadness, disgust, surprise) [15,17,22,23] | 41% |
| Suboptimal information and communication (conflicting/insufficient information; perceived bias) [18,23,24] | 50% |
| Concordance within patient-centred care (PCC) unclear (limited indicators of goal alignment and shared decision making) [25,26,28] | 55% |
| Online misinformation dynamics/perceived clinician bias [23,24,36] | (subset; qualitatively frequent) |

| Emotion Signal | Likely Behaviour | Concordance-Oriented Response |
|---|---|---|
| Fear/anxiety | Delay, dose skipping | Shared decision making (SDM) team talk: acknowledge fear; distress screen. Option talk: clarify risks. Decision talk: values clarification. |
| Sadness/hopelessness | Withdrawal from self-care | Psycho-oncology referral; follow-up within 72 h; problem-solving support. |
| Disgust (related to adverse events, AEs) | Refusal/cessation | AE management options; normalize toxicity; reframe goals. |
| Confusion/surprise | Inconsistent adherence | Teach-back; simplified plan; bilingual materials. |
| Positive emotions | Sustained engagement | Reinforce self-efficacy; document concordance; milestone planning. |
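The emotion-to-response table could, in principle, be encoded as a simple lookup. An emotion label produced by a classifier would then be routed to the matching concordance-oriented prompts. The Python sketch below is hypothetical. The label keys, the alias mapping, and the idea of automated routing are assumptions for illustration, not a validated clinical decision tool described by the review. Only the behaviour and response text is taken from the table.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ConcordancePrompt:
    likely_behaviour: str
    responses: tuple[str, ...]


# Rows transcribed from the table; the short label keys are hypothetical.
RESPONSE_TABLE: dict[str, ConcordancePrompt] = {
    "fear": ConcordancePrompt("Delay, dose skipping", (
        "SDM team talk: acknowledge fear; distress screen",
        "Option talk: clarify risks",
        "Decision talk: values clarification",
    )),
    "sadness": ConcordancePrompt("Withdrawal from self-care", (
        "Psycho-oncology referral", "Follow-up within 72 h", "Problem-solving support",
    )),
    "disgust": ConcordancePrompt("Refusal/cessation", (
        "AE management options", "Normalize toxicity", "Reframe goals",
    )),
    "confusion": ConcordancePrompt("Inconsistent adherence", (
        "Teach-back", "Simplified plan", "Bilingual materials",
    )),
    "positive": ConcordancePrompt("Sustained engagement", (
        "Reinforce self-efficacy", "Document concordance", "Milestone planning",
    )),
}

# Hypothetical aliases so paired labels in the table share one row.
ALIASES = {"anxiety": "fear", "hopelessness": "sadness", "surprise": "confusion"}


def prompts_for(label: str) -> Optional[ConcordancePrompt]:
    """Return the table row for an emotion label, or None if it is unmapped."""
    key = label.strip().lower()
    return RESPONSE_TABLE.get(ALIASES.get(key, key))
```

Returning `None` for unmapped labels (for example, anger) leaves those cases to clinician judgement. The lookup does not guess a row for them.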

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Share and Cite

Wreyford, L.; Gururajan, R.; Zhou, X.; Higgins, N. Patient Voice and Treatment Nonadherence in Cancer Care: A Scoping Review of Sentiment Analysis. Nurs. Rep. 2026, 16, 18. https://doi.org/10.3390/nursrep16010018

Wreyford L, Gururajan R, Zhou X, Higgins N. Patient Voice and Treatment Nonadherence in Cancer Care: A Scoping Review of Sentiment Analysis. Nursing Reports. 2026; 16(1):18. https://doi.org/10.3390/nursrep16010018

Chicago/Turabian Style: Wreyford, Leon, Raj Gururajan, Xujuan Zhou, and Niall Higgins. 2026. "Patient Voice and Treatment Nonadherence in Cancer Care: A Scoping Review of Sentiment Analysis" Nursing Reports 16, no. 1: 18. https://doi.org/10.3390/nursrep16010018

APA Style: Wreyford, L., Gururajan, R., Zhou, X., & Higgins, N. (2026). Patient Voice and Treatment Nonadherence in Cancer Care: A Scoping Review of Sentiment Analysis. Nursing Reports, 16(1), 18. https://doi.org/10.3390/nursrep16010018