1. Introduction

During the coronavirus (SARS-CoV-2) pandemic, health professionals worldwide were exposed to a high risk of infection. This risk was greater at the beginning of the pandemic, when the mechanisms of transmission were not yet fully understood. As the virus spread worldwide over the following months, health services adapted by issuing protection guidelines to reduce workers’ risk of infection. These guidelines covered various types of personal protective equipment (PPE), each of which hides essential parts of the wearer’s face. Surgical masks, goggles or face shields, gowns and, for specific procedures, respirators (i.e., N95, FFP2 or equivalent) were recommended [1]. In some settings, especially intensive care units, more complex equipment has been used, such as powered air-purifying respirators (PAPRs) [2,3]. All this equipment can reduce non-verbal communication based on facial expressions [4,5], both among the professionals who use it and between health professionals and patients.

Health professionals were strongly affected, not only by exposure to physical dangers but also by stress, which negatively impacted their mental health [6,7,8,9,10]. Adequate communication skills have been shown to be fundamental in preventing burnout syndrome among health professionals [11]. The communication of emotions, and their detection by the rest of the work team, could lead to the early activation of mutual support behaviours in situations of continued stress. Emotions can be shared verbally or detected, consciously or unconsciously [12], through non-verbal cues, principally facial expressions. However, because of the measures that restrict personal contact in healthcare environments and the physical barrier presented by PPE, the detection of others’ emotions could be altered, especially the interpretation of facial expressions through this equipment [13].

The objective of the present study was to analyse how emotions conveyed through facial expressions are interpreted when the face is covered by different types of protective equipment, and to identify which emotions are easier or more difficult to interpret with each type of PPE. The study’s main hypothesis was that PPE, by hiding part of the face, impedes the correct interpretation of the wearer’s emotions.

Emotions are generated as reactions to important events; irrelevant events do not produce emotion [14]. Emotions result in motivational states, activate the body for action and produce recognisable facial expressions. Although emotions generate feelings, they differ from feelings in having a direct connection with the world: emotions are expressed and can be recognised by others [15]. This is the social dimension of emotion: it can be expressed verbally or non-verbally and recognised by another human being, which is essential in communication and interaction between people [16].

Another critical aspect is the involuntary character of emotion: put simply, emotions are automatic. Emotions have an adaptive value, which is linked to the expression of a feeling in response to an important event. If a person recognises the expression of emotion in another, she or he can infer how the other is feeling and adjust her or his behaviour accordingly (for example, by consoling or supporting someone who is sad). In the healthcare context, interpreting the emotions of patients and co-workers is important. On many occasions, it underpins the activation of support behaviours and mutual care, especially under the work overload currently found in healthcare settings [17,18].

Facial recognition of emotion is the primary way to know an individual’s emotional state and consequently to activate an appropriate affective response [19]. Whether the recognition of emotions is innate and universal is still debated. Many prototypical universal emotions have been described. The classic work by Paul Ekman [20,21], which described six emotions present in every human culture (anger, fear, disgust, surprise, sadness and happiness), has been the basis of almost all research on the facial recognition of emotions. Specific software has now been developed that enables computers to recognise emotional patterns in human faces [22].

Very recently, up to 16 recognisable facial expressions have been associated with emotions that can occur in any social context in any part of the world [23]; however, it seems that only four universal basic emotions can be recognised clearly and immediately, without any context to provide further information [24]. The latter study postulated that facial signals evolve from biologically basic, minimal information to socially specific, complex information. Accordingly, the signalling dynamics allow four categories of emotion to be discriminated, rather than the six that have prevailed until now. In line with this, fear and surprise, and anger and disgust, are frequently misread as each other. Whereas the facial expressions of happiness and sadness differ from start to finish, fear and surprise share an early signal before they are fully produced: fully opened eyes. Likewise, disgust and anger share early facial signals, such as a wrinkled nose, which are observed just before the emotion is shown.

For this reason, the present work focused on these four prototypical basic units of emotion (happiness, sadness, fear/surprise and disgust/anger), following the approach proposed by Ekman in 1970 [20] of discerning facial expressions from images, adapted here to the context of personal protective equipment. The working hypothesis of this study was that the reduced visibility of the face caused by a personal protective device would interfere with the participants’ perception of basic emotions.

2. Materials and Methods

A cross-sectional, descriptive observational study was designed in which volunteers evaluated photographs [20,25,26]. We followed the STROBE reporting guidelines (see Appendix A).

2.1. Participants, Procedure and Data Collection

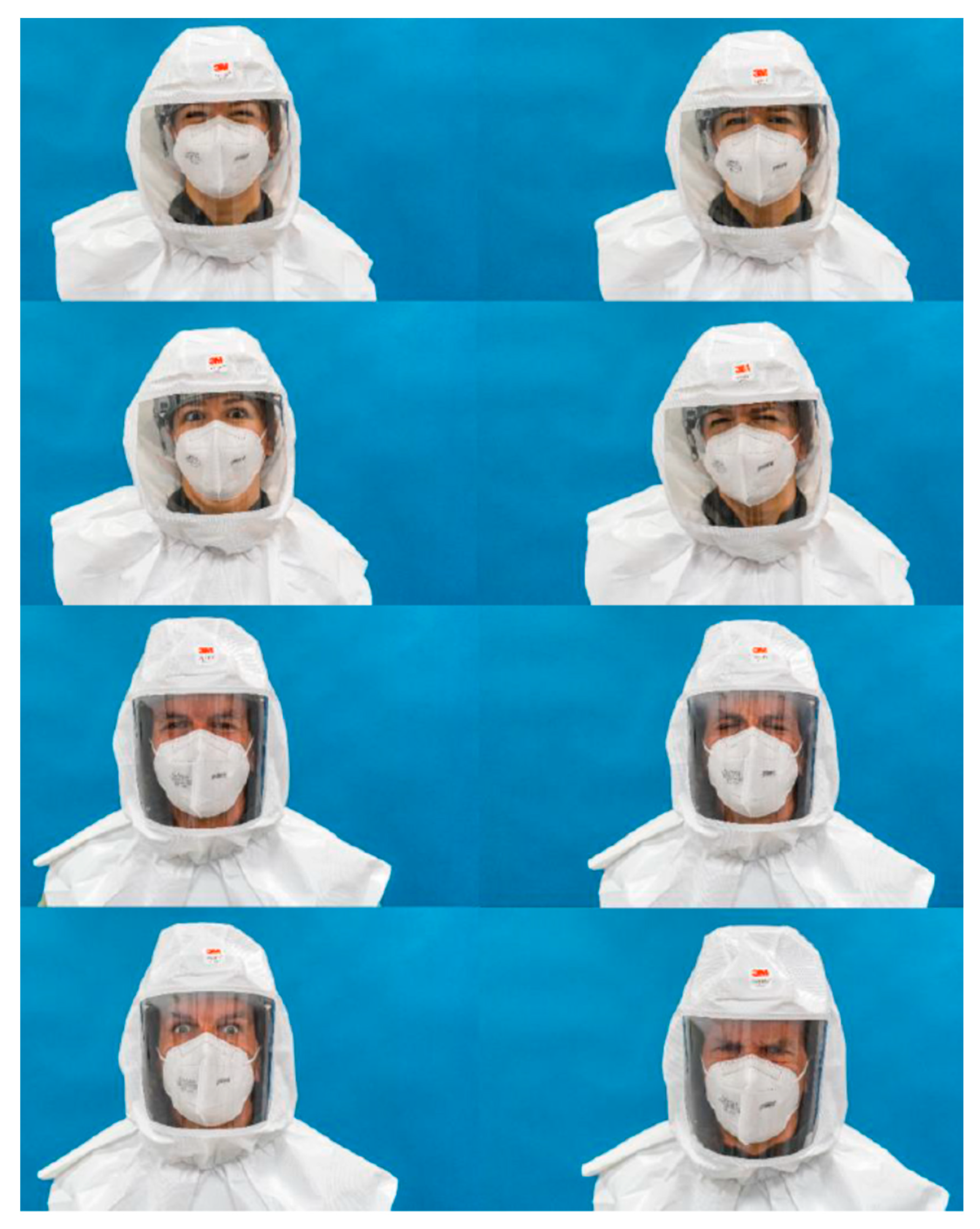

The study was conducted with volunteer participants who met the inclusion criteria (individuals older than 13 without cognitive limitations). Participants had to recognise the emotions of actors in photographs that were displayed in random order in an online form. Thirty-two high-resolution photographs were taken of two actors, a Caucasian man and a Caucasian woman, who appeared both without PPE and wearing different types of PPE that partially covered their faces. The PPE chosen were an FFP2 mask; protective overalls (PO) covering the body, with a mask and protection goggles; and, finally, a powered air-purifying respirator (PAPR). The actors portrayed four universal basic emotions (happiness, sadness, fear/surprise and disgust/anger), which are not easily confused with one another [24].

To portray the emotions, the actors had an adaptation period and used the prototypical images from similar validated studies as the gold standard [26].

Figure 1, Figure 2, Figure 3 and Figure 4 show the actors’ photographs by type of PPE and emotion.

The 32 photographs were inserted into an online form (Google Forms®), which, aside from explaining the objective of the study, collected sociodemographic information and asked participants for their consent (the form is available at https://forms.gle/rVxCBWSsWNr49udEA, accessed on 7 July 2021). The photographs appeared in a random order each time the questionnaire was opened. For each photograph, participants were asked to identify the emotion conveyed by the actor or actress by choosing one of five response options: the four emotions studied (happiness, sadness, fear/surprise and disgust/anger) or “I am not sure”. At the end of the questionnaire, two questions were asked: (1) With which device did you have the most difficulty identifying emotions? and (2) Which part of the face do you consider most relevant for interpreting others’ emotions?
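The stimulus set and its per-session randomisation can be pictured with a minimal Python sketch. It assumes that the 32 photographs are a full crossing of 2 actors × 4 PPE conditions (no PPE, FFP2, PO and PAPR, the conditions compared in the Results) × 4 emotions; all names (`ACTORS`, `STIMULI`, `session_order`, etc.) are hypothetical, and the sketch does not reproduce how Google Forms orders items internally.

```python
import itertools
import random

# Hypothetical labels. The full 2 x 4 x 4 crossing is an assumption inferred
# from the four conditions compared in the Results, not a documented design file.
ACTORS = ("actor", "actress")
PPE_CONDITIONS = ("no PPE", "FFP2", "PO", "PAPR")
EMOTIONS = ("happiness", "sadness", "fear/surprise", "disgust/anger")
RESPONSE_OPTIONS = EMOTIONS + ("I am not sure",)  # five options per photograph

# Every (actor, PPE condition, emotion) combination: 2 * 4 * 4 = 32 photographs.
STIMULI = list(itertools.product(ACTORS, PPE_CONDITIONS, EMOTIONS))


def session_order(rng=None):
    """Return a new random ordering of the 32 stimuli for one questionnaire session."""
    if rng is None:
        rng = random.Random()
    order = list(STIMULI)  # copy so the master list keeps its original order
    rng.shuffle(order)     # shuffle the copy in place
    return order


if __name__ == "__main__":
    first = session_order(random.Random(1))
    second = session_order(random.Random(2))
    # Both sessions contain the same 32 photographs, in (usually) different orders.
    print(len(first), sorted(first) == sorted(second))
```

Seeding `random.Random` only makes the example reproducible; a live questionnaire would draw a fresh order for every session.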

For the selection of participants, we took into account the innate human ability to recognise primary forms of affective expression (any person can recognise the emotions of others) [27]. The questionnaire was distributed through social networks and email groups throughout Spain, and responses were collected over a 3-month period, from July to September 2021. Participants were selected by convenience (no probability sampling was used). The study was approved by the Ethics Committee of the Catholic University of Murcia (Spain) on 27 July 2020 (reference number: CE072002).

2.2. Analysis

Data were collected through the online questionnaire described in Section 2.1. The sociodemographic variables collected were gender (male/female), age (in years), city/country of origin and level of education (basic: mandatory secondary education; medium: high school/practical training; high: university).

Student’s t-test was used to test for statistically significant differences in means between the categories of dichotomous variables (sex and nationality: Spanish/other), and one-way ANOVA was used for variables with three or more categories. Post hoc comparisons used the Bonferroni test when variances were equal and Tamhane’s test when they were not. Categorical variables were described as frequencies and percentages (%). To compare the data, three score variables were created to measure the correct identification of emotions in the photographs, with one point awarded for each response that matched the emotion portrayed:

- (1) Score per emotion, with a maximum score of 8.
- (2) Score by type of PPE, with a maximum score of 8.
- (3) Total score per individual, obtained by adding all correct identifications, with a maximum score of 32.
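The three scores can be computed as in the following minimal Python sketch. It assumes that each participant answered 32 photographs indexed by actor, PPE condition and emotion, so that each emotion appears 2 × 4 = 8 times and each PPE condition 2 × 4 = 8 times, which gives the stated maxima. The function `score_participant`, the labels and the response format are hypothetical.

```python
import itertools

# Hypothetical labels for the assumed 2 x 4 x 4 design.
ACTORS = ("actor", "actress")
PPE_CONDITIONS = ("no PPE", "FFP2", "PO", "PAPR")
EMOTIONS = ("happiness", "sadness", "fear/surprise", "disgust/anger")


def score_participant(answers):
    """Score one participant.

    `answers` maps (actor, ppe, emotion) -> the option the participant chose.
    Returns (score per emotion, score per PPE condition, total score).
    """
    per_emotion = {e: 0 for e in EMOTIONS}     # max 8 each: 2 actors x 4 PPE conditions
    per_ppe = {p: 0 for p in PPE_CONDITIONS}    # max 8 each: 2 actors x 4 emotions
    for (_actor, ppe, emotion), chosen in answers.items():
        if chosen == emotion:                   # one point per correct identification
            per_emotion[emotion] += 1
            per_ppe[ppe] += 1
    total = sum(per_emotion.values())           # max 32
    return per_emotion, per_ppe, total


if __name__ == "__main__":
    # Hypothetical participant who is correct everywhere except for sadness with the PO.
    answers = {key: key[2] for key in itertools.product(ACTORS, PPE_CONDITIONS, EMOTIONS)}
    answers[("actor", "PO", "sadness")] = "disgust/anger"
    answers[("actress", "PO", "sadness")] = "I am not sure"
    print(score_participant(answers))
```

In this sketch an “I am not sure” response simply earns no point, consistent with awarding a point only when the response matches the emotion portrayed.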

Data were processed and analysed with the statistical package IBM SPSS® v.22.0 for Windows.
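To show what the tests compare, the following minimal Python sketch computes the pooled-variance Student’s t statistic, the one-way ANOVA F statistic and a Bonferroni adjustment of p-values using only the standard library. It is an illustration under textbook definitions, not the SPSS procedure used in the study; it returns test statistics only, since p-values require the t and F distributions (available, for example, through `scipy.stats.ttest_ind` and `scipy.stats.f_oneway` in SciPy). Tamhane’s test for unequal variances is not sketched, and the function names are hypothetical.

```python
from statistics import mean, variance


def student_t(a, b):
    """Pooled-variance (Student's) two-sample t statistic, assuming equal variances."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    se = (pooled * (1 / na + 1 / nb)) ** 0.5  # standard error of the mean difference
    return (mean(a) - mean(b)) / se


def anova_f(groups):
    """One-way ANOVA F statistic: between-group mean square / within-group mean square."""
    values = [x for g in groups for x in g]
    grand = mean(values)
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


if __name__ == "__main__":
    a, b = [1, 2, 3], [4, 5, 6]  # toy data, not study data
    print(student_t(a, b), anova_f([a, b]), bonferroni([0.01, 0.02, 0.5]))
```

With two groups, the ANOVA F statistic equals the square of Student’s t, which gives a quick consistency check: for the toy groups above, t² = F = 13.5.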

3. Results

Of the 702 responses obtained, seven were excluded because participant consent was not provided and five because the participants were underage. Thus, the responses of 690 individuals were analysed (n = 690). The participants’ mean age was 41 years (SD 12.97); 473 (69%) were women and 665 (96%) were Spanish. As for the level of education, 79% had a university degree, 16% had a high school/practical training qualification and 5% had a basic level of education. For analysis, participants were divided into four age groups: 18 years or younger; 19–40 (young adults); 41–60 (middle-aged adults); and older than 60. According to these groupings, the sample comprised 10 individuals aged 18 or younger, 310 aged 19–40, 306 aged 41–60 and 56 older than 60.
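The age grouping can be expressed as a small Python function. It assumes integer ages in years and places 18-year-olds in the youngest group, as implied by the later reference to participants “aged 18 years or younger”; the function name and labels are hypothetical.

```python
from collections import Counter


def age_group(age):
    """Assign an integer age in years to one of the four study age groups (hypothetical labels)."""
    if age <= 18:
        return "18 or younger"
    if age <= 40:
        return "19-40 (young adults)"
    if age <= 60:
        return "41-60 (middle-aged adults)"
    return "older than 60"


if __name__ == "__main__":
    ages = [17, 18, 25, 40, 41, 59, 60, 61, 72]  # illustrative ages, not study data
    print(Counter(age_group(a) for a in ages))
```

Writing the boundaries as inclusive upper limits makes explicit that every integer age falls into exactly one group.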

After viewing the images, participants best recognised happiness and fear, followed by anger, regardless of the PPE used (Table 1). Sadness was the most difficult emotion to recognise, regardless of age: participants were often unsure when trying to identify it and frequently confused it with anger (p = 0.047, Student’s t-test with Bonferroni correction).

By gender, statistically significant differences were found in the identification of all emotions except happiness, with women obtaining a higher mean total score (24.95 ± 3.41) than men (23.93 ± 3.6) (Table 2). In addition, women recognised emotions better than men when the actor or actress was wearing a PAPR (p = 0.013) or an FFP2 mask (p < 0.001) (Table 3).

No statistically significant differences in emotion recognition by type of PPE were found between Spanish and foreign participants.

By age group, statistically significant differences were found for the emotions, except for sadness (happiness: F = 5.48, p = 0.001; sadness: F = 2.83, p = 0.038; fear: F = 2.65, p = 0.048; anger: F = 6.67, p < 0.001). In general, the 19–40 years group recognised emotions better than the 41–60 years group (mean difference: 1.01, p = 0.002) and those older than 60 (mean difference: 1.38, p = 0.037). More specifically, the 19–40 years group recognised anger better than the 41–60 years group (mean difference: 0.43, p = 0.001) and those older than 60 (mean difference: 0.60, p = 0.013), and recognised happiness better than the 41–60 years group (mean difference: 0.22, p = 0.049). Regarding the type of PPE, age groups differed for the PAPR (F = 5.55, p = 0.001) and without PPE (F = 4.26, p = 0.005), but not for the PO (F = 1.44, p = 0.231) or the FFP2 mask (F = 2.34, p = 0.072). The 19–40 years group recognised emotions better with the PAPR than the 41–60 years group (mean difference: 0.38, p = 0.003) and those older than 60 (mean difference: 0.59, p = 0.017); they also recognised emotions better without PPE than the 41–60 years group (mean difference: 0.19, p = 0.041).

Concerning the level of education, the results showed statistically significant differences in emotion recognition when the actors wore the PAPR (F = 5.21, p = 0.006), the PO (F = 3.39, p = 0.034) or the FFP2 mask (F = 3.04, p = 0.048), and when no PPE was used (F = 11.28, p < 0.001). In general, participants with a higher level of education were better at recognising emotions than those with lower education levels. Participants with a university education were better at recognising all the emotions, with significant differences relative to participants with a basic level of education when the actors wore the PAPR (mean difference: 0.60, p = 0.028) or the PO (mean difference: 0.53, p = 0.028), and when no PPE was used (mean difference: 0.47, p = 0.006). Compared with participants with a medium level of education (high school), those with higher education (university) were better at recognising emotions when the actors did not wear any PPE (mean difference: 0.37, p < 0.001). The group of participants aged 18 years or younger did not differ from the rest of the participants in the recognition of emotions.

When asked which part of the face was most significant in the expression of emotions, 80% of participants indicated the eyes, eyebrows and degree of eye widening, and 20% indicated the mouth (smiles, grimaces, etc.). Comparisons between these groups by type of PPE gave the following results: PAPR: F = 5.78, p = 0.003; PO: F = 1.59, p = 0.204; FFP2: F = 4.89, p = 0.008; no PPE: F = 0.88, p = 0.417. Both groups obtained similar scores when PPE was absent (7.89 vs. 7.79). However, when PPE was present, participants who prioritised the eyes obtained better scores than those who prioritised the mouth in the recognition of happiness (mean difference: 0.52, p < 0.001), with the PAPR (mean difference: 0.37, p = 0.019) and with the FFP2 mask (mean difference: 0.36, p = 0.017).

Participants reported that recognising emotions was most challenging when the actors wore the PAPR. However, the lowest scores were obtained when the actors wore the PO (5.42 ± 1.22), followed by the PAPR (5.83 ± 1.38). Among the PPE conditions, the best scores were obtained for the FFP2 mask images (6.57 ± 1.20). Without PPE, the mean score was 6.82 ± 0.91, out of a maximum of 8 points.

4. Discussion

The main findings of the present study show that the ability to interpret emotions through facial expressions is reduced when individuals wear different types of PPE. This is to be expected: even without conducting research, one could deduce that any obstacle that reduces the visibility of facial expressions could, in theory, influence the recognition of those expressions by others. In the present work, we studied how this influence manifests in a large sample of individuals who viewed images of actors wearing different types of PPE.

The variables considered when collecting and interpreting the data gave us a broad view of which emotions were easier or more difficult to recognise, and of the influence of age and gender on interpreting others’ emotions. The level of education also influenced how participants recognised the actors’ emotions. In particular, we analysed the impact of the type of PPE when participants tried to recognise the emotions of the people wearing it.

Several recent studies [28,29] have described how physical barriers that hide the face interfere with the interpretation of verbal and non-verbal cues in human interaction. In our study, the emotions that were easiest to recognise were happiness and fear/surprise, in every type of photograph evaluated. One study found that the eyes were less important than the mouth for recognising happiness [30], but a more recent investigation found that wearing a mask may increase the recruitment of the eyes during smiling, activating the orbicularis oculi muscle when smiling with a mask [31]. In our study, the eyes and the surrounding area were the only facial elements available for conveying expressions, except in the images in which the actors did not wear any PPE. The emotion that was most difficult to recognise was sadness, which was often confused with anger, as has been previously reported [32]. The fact that sadness was the most poorly recognised emotion in people wearing PPE has implications for clinical practice, especially for mutual support between professionals subjected to high levels of stress.

The pandemic has led to a significant increase in stress and work overload for health professionals [7,33,34]. For support behaviours between co-workers to be activated [35], negative emotions must either be expressed by the person who feels exhausted, stressed or sad, or be detected by others. Difficulty in recognising sadness could therefore impede the development of mutual support behaviours within the work team, particularly towards individuals who do not talk explicitly about their emotions. Moreover, it has been suggested that the impact of masks on interpreting emotions could be even greater in natural settings, because in everyday interactions we invest less attention and time in looking for emotional cues on others’ faces [32].

Another interesting finding of our study was that women identified emotions better than men, especially when the actress or actor wore a PAPR or FFP2 mask. This finding relates to emotional intelligence, defined as the ability of individuals to recognise their own emotions and those of others [36]. Although it may sound stereotypical, many studies [37,38,39,40,41] argue that women are better at recognising emotions in others because they have been socialised to be more empathetic and perceptive. Neuroscience studies [42,43] have shown larger areas dedicated to the processing of emotions in women’s brains, although these differences could also result from socialisation. Furthermore, other studies have shown that gender differences in emotional intelligence decrease substantially or disappear when age is considered as a mediator, and that age accounts for a higher percentage of the variance in emotional intelligence [44]. In Spain, 72% of currently employed health professionals are women, as are 84% of registered nurses [45]. This could have implications for emotion recognition among predominantly female health professionals wearing PPE, and adds a gender perspective to the problem of emotion recognition and the implementation of mutual support behaviours.

With regard to age, the group best able to recognise emotions was the 19–40-year group, both in general (without PPE) and with the PAPR. The ability to recognise emotions seems to decrease in older age, as evidenced by the decreased stimulation observed in older people when facing emotional situations [46], which is consistent with our results. As for the level of education, participants with a higher level of education, especially university graduates, were better at recognising emotions in every situation.

Regarding the type of PPE, participants subjectively reported the greatest difficulty with the PAPR (when asked with which device they found it hardest to identify emotions). However, the lowest scores were obtained with the PO and the highest with the FFP2 mask. These results suggest that greater exposure of the face leads to better interpretation of emotions by others.

As for the facial areas that participants considered fundamental for recognising emotions, the eyes were chosen most often. This agrees with previous work showing that the eyes and mouth are the facial elements most involved in recognising others’ emotions, with reliance on the eyes for sadness and fear and on the mouth for disgust and happiness [30].

One limitation of this study is that it was restricted to Spanish speakers, which may limit its external validity. In addition, no probability sampling was carried out, and the sample was chosen by convenience. However, all analyses were conducted with a sample of 690 participants at a significance level of 0.05; therefore, for a test using the whole sample, even a small effect size (d = 0.2) would be detected with a power of 0.9995.
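The power figure can be checked with a short Python sketch based on the normal approximation to the t-test (two-sided α = 0.05). Under this approximation, the reported 0.9995 corresponds to a one-sample or paired design using all 690 participants (noncentrality d√n); for two independent groups, the noncentrality becomes d√(n₁n₂/(n₁ + n₂)). The function names are hypothetical, and exact t-based power would differ slightly from these approximate values.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def power_two_sided(ncp, alpha=0.05):
    """Normal-approximation power of a two-sided test with noncentrality `ncp`."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    # Probability of rejecting in either tail when the standardised shift is ncp.
    return STD_NORMAL.cdf(ncp - z_crit) + STD_NORMAL.cdf(-ncp - z_crit)


def power_one_sample(d, n, alpha=0.05):
    """One-sample or paired design with n observations: ncp = d * sqrt(n)."""
    return power_two_sided(d * n ** 0.5, alpha)


def power_two_groups(d, n1, n2, alpha=0.05):
    """Two independent groups: ncp = d * sqrt(n1 * n2 / (n1 + n2))."""
    return power_two_sided(d * (n1 * n2 / (n1 + n2)) ** 0.5, alpha)


if __name__ == "__main__":
    print(f"{power_one_sample(0.2, 690):.4f}")       # whole sample, d = 0.2
    print(f"{power_two_groups(0.2, 473, 217):.2f}")  # 473 women vs 217 men
```

Under these assumptions, the first call gives approximately 0.9995, whereas an unbalanced comparison of 473 women and 217 men gives roughly 0.68, so the power of an individual between-group test depends on how the sample is split.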

5. Conclusions

The results of this study provide evidence that PPE, which is commonly used by health professionals in times of high infection risk, interferes with the recognition of emotions, with the PO interfering the most and the FFP2 mask the least. The best-recognised emotions were happiness and fear/surprise, while the least recognised emotion was sadness. Women, participants with higher education and young adults (19–40 years) were better at identifying emotions.

Relevance for Clinical Practice

While the recognition of co-workers’ emotions could be affected by the use of PPE, especially with regard to negative emotions, we believe that interaction with patients wearing masks should also be taken into account. As an implication for clinical practice, patients’ feelings are often not verbalised and are perceived by professionals through non-verbal cues, a perception that could be limited by the use of PPE. We propose establishing protocols for detecting emotions that include open questions showing the professional’s interest in patients’ emotions. This aspect is fundamental to the work of healthcare professionals [47].

Health professionals must receive training related to emotional intelligence and verbal expression of emotions, so that systems of mutual support between co-workers can be activated as soon as possible.

Detecting emotions in professional nursing practice is fundamental, especially in stressful situations such as those experienced during the pandemic, which required, more than ever, empathetic attitudes and behaviours.

The use of PPE should be adapted to each situation and should be based on scientific evidence, to allow for adequate interpersonal communication.

Employers and health institutions must be aware of the factors that impede the adequate detection of emotions and must implement actions to prevent negative emotional states and to detect them as early as possible, especially when personal protective equipment artificially alters normal non-verbal communication.

Author Contributions

Conceptualization, methodology and data collection: A.R.-R., J.A.G.-M. and J.L.D.-A.; data analysis: M.J.P.-J. and V.A.-L.; writing–original draft: A.R.-R., J.L.D.-A., M.J.P.-J., J.A.G.-M. and V.A.-L.; supervision: I.L.-C.-G., A.R.-R. and J.L.D.-A.; writing—review and editing: I.L.-C.-G., A.R.-R., J.L.D.-A., M.J.P.-J., J.A.G.-M. and V.A.-L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by UCAM (Catholic University of Murcia), grant number PMAFI-ACPE-I.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board/Ethics Committee of UCAM (dated 27 July 2020, and with reference number CE072002).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data are available on request from the corresponding authors.

Acknowledgments

Thanks to all the people who have contributed their time, especially the simulation technicians (Pedro, Vicente and Luis) and the actors (Laura and David), for their valuable and selfless collaboration in this project.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. STROBE Reporting Guidelines Checklist

Table A1.

STROBE Statement—checklist of items that should be included in reports of observational studies.


| Section/Topic | Item No. | Recommendation | Page No. | Line with Relevant Text from Manuscript |
|---|---|---|---|---|
| Title and abstract | 1 | (a) Indicate the study’s design with a commonly used term in the title or the abstract | 1 | 4 |
| | | (b) Provide in the abstract an informative and balanced summary of what was done and what was found | 1 | 11–31 |
| Introduction | | | | |
| Background/rationale | 2 | Explain the scientific background and rationale for the investigation being reported | 1–2 | 41–110 |
| Objectives | 3 | State specific objectives, including any prespecified hypotheses | 3 | 110–113 |
| Methods | | | | |
| Study design | 4 | Present key elements of study design early in the paper | 3 | 115–117 |
| Setting | 5 | Describe the setting, locations, and relevant dates, including periods of recruitment, exposure, follow-up, and data collection | 8 | 119–170 |
| Participants | 6 | (a) Cross-sectional study: give the eligibility criteria, and the sources and methods of selection of participants | 8 | 147–149 |
| Variables | 7 | Clearly define all outcomes, exposures, predictors, potential confounders, and effect modifiers. Give diagnostic criteria, if applicable | 8 | 165–171 |
| Data sources/measurement | 8 * | For each variable of interest, give sources of data and details of methods of assessment (measurement). Describe comparability of assessment methods if there is more than one group | 8 | 171–176 |
| Bias | 9 | Describe any efforts to address potential sources of bias | 7 | 149–153 |
| Study size | 10 | Explain how the study size was arrived at | 8 | 198 |
| Quantitative variables | 11 | Explain how quantitative variables were handled in the analyses. If applicable, describe which groupings were chosen and why | 8 | 171–190 |
| Statistical methods | 12 | (a) Describe all statistical methods, including those used to control for confounding | 8 | 171–190 |
| | | (b) Describe any methods used to examine subgroups and interactions | 8 | 157–164 |
| | | (c) Explain how missing data were addressed | - | |
| | | (d) If applicable, describe analytical methods taking account of sampling strategy | - | |
| | | (e) Describe any sensitivity analyses | - | |
| Results | | | | |
| Participants | 13 * | (a) Report numbers of individuals at each stage of study, e.g., numbers potentially eligible, examined for eligibility, confirmed eligible, included in the study, completing follow-up, and analysed | 8 | 198–205 |
| | | (b) Give reasons for non-participation at each stage | - | |
| | | (c) Consider use of a flow diagram | - | |
| Descriptive data | 14 * | (a) Give characteristics of study participants (e.g., demographic, clinical, social) and information on exposures and potential confounders | 8 | 198–205 |
| | | (b) Indicate number of participants with missing data for each variable of interest | - | |
| Outcome data | 15 * | Cross-sectional study: report numbers of outcome events or summary measures | 9–10 | Table 1, Table 2 and Table 3 |
| Main results | 16 | (a) Give unadjusted estimates and, if applicable, confounder-adjusted estimates and their precision (e.g., 95% confidence interval). Make clear which confounders were adjusted for and why they were included | 9–11 | |
| | | (b) Report category boundaries when continuous variables were categorized | 8 | 181–185 |
| | | (c) If relevant, consider translating estimates of relative risk into absolute risk for a meaningful time period | - | |
| Other analyses | 17 | Report other analyses done, e.g., analyses of subgroups and interactions, and sensitivity analyses | - | |
| Discussion | | | | |
| Key results | 18 | Summarise key results with reference to study objectives | 12, 13 | 272–353 |
| Limitations | 19 | Discuss limitations of the study, taking into account sources of potential bias or imprecision. Discuss both direction and magnitude of any potential bias | 13 | 349–353 |
| Interpretation | 20 | Give a cautious overall interpretation of results considering objectives, limitations, multiplicity of analyses, results from similar studies, and other relevant evidence | 11–12 | |
| Generalisability | 21 | Discuss the generalisability (external validity) of the study results | 13 | 355–385 |
| Other information | | | | |
| Funding | 22 | Give the source of funding and the role of the funders for the present study and, if applicable, for the original study on which the present article is based | 14 | 392–393 |

References

1. World Health Organization. Rational Use of Personal Protective Equipment (PPE) for Coronavirus Disease (COVID-19), Interim Guidance. 19 March 2020. Available online: https://covid19-evidence.paho.org/handle/20.500.12663/840 (accessed on 14 July 2021).

2. Licina, A.; Silvers, A.; Stuart, R. Use of powered air-purifying respirator (PAPR) by healthcare workers for preventing highly infectious viral diseases—A systematic review of evidence. Syst. Rev. 2020, 9, 173.

3. Roberts, V. To PAPR or not to PAPR? Can. J. Respir. Ther. 2014, 50, 87–90.

4. Marra, A.; Buonanno, P.; Vargas, M.; Iacovazzo, C.; Ely, E.W.; Servillo, G. How COVID-19 pandemic changed our communication with families: Losing nonverbal cues. Crit. Care 2020, 24, 297.

5. Mheidly, N.; Fares, M.Y.; Zalzale, H.; Fares, J. Effect of Face Masks on Interpersonal Communication During the COVID-19 Pandemic. Front. Public Health 2020, 8, 582191.

6. Badahdah, A.; Khamis, F.; Al Mahyijari, N.; Al Balushi, M.; Al Hatmi, H.; Al Salmi, I.; Albulushi, Z.; Al Noomani, J. The mental health of health care workers in Oman during the COVID-19 pandemic. Int. J. Soc. Psychiatry 2020, 67, 90–95.

7. Leal Costa, C.; Diaz Agea, J.L.; Ruzafa-Martínez, M.; Ramos-Morcillo, A.J. Work-related stress amongst health professionals in a pandemic. An. Sist. Sanit. Navar. 2021, 44, 123–124.

8. d’Ettorre, G.; Ceccarelli, G.; Santinelli, L.; Vassalini, P.; Innocenti, G.P.; Alessandri, F.; Koukopoulos, A.E.; Russo, A.; d’Ettorre, G.; Tarsitani, L. Post-Traumatic Stress Symptoms in Healthcare Workers Dealing with the COVID-19 Pandemic: A Systematic Review. Int. J. Environ. Res. Public Health 2021, 18, 601.

9. Kumar, A.; Nayar, K.R. COVID 19 and its mental health consequences. J. Ment. Health 2020, 30, 1–2.

10. Xiang, Y.-T.; Yang, Y.; Li, W.; Zhang, L.; Zhang, Q.; Cheung, T.; Ng, C.H. Timely mental health care for the 2019 novel coronavirus outbreak is urgently needed. Lancet Psychiatry 2020, 7, 228–229.

11. Leal-Costa, C.; Díaz-Agea, J.; Tirado-González, S.; Rodríguez-Marín, J.; Hofstadt-Román, C.V.-D. Communication skills: A preventive factor in Burnout syndrome in health professionals. An. Sist. Sanit. Navar. 2015, 38, 213–223.

12. Michael, J. Shared Emotions and Joint Action. Rev. Philos. Psychol. 2011, 2, 355–373.

13. Marini, M.; Ansani, A.; Paglieri, F.; Caruana, F.; Viola, M. The impact of facemasks on emotion recognition, trust attribution and re-identification. Sci. Rep. 2021, 11, 5577.

14. Lazarus, R.S. Emotion and Adaptation; Oxford University Press: Oxford, UK, 1991; 572p.

15. Emotion—APA Dictionary of Psychology [Internet]. Available online: https://dictionary.apa.org/emotion (accessed on 22 July 2021).

16. Reeve, J. Understanding Motivation and Emotion; John Wiley & Sons: Hoboken, NJ, USA, 2014; 648p.

17. Romero, C.S.; Delgado, C.; Catalá, J.; Ferrer, C.; Errando, C.; Iftimi, A.; Benito, A.; de Andrés, J.; Otero, M.; The PSIMCOV group. COVID-19 psychological impact in 3109 healthcare workers in Spain: The PSIMCOV group. Psychol. Med. 2020, 52, 188–194.

18. Zhang, Y.; Wei, L.; Li, H.; Pan, Y.; Wang, J.; Li, Q.; Wu, Q.; Wei, H. The Psychological Change Process of Frontline Nurses Caring for Patients with COVID-19 during Its Outbreak. Issues Ment. Health Nurs. 2020, 41, 525–530.

19. Lottridge, D.; Chignell, M.; Jovicic, A. Affective Interaction: Understanding, Evaluating, and Designing for Human Emotion. Rev. Hum. Factors Ergon. 2011, 7, 197–217.

20. Ekman, P. Universal facial expressions of emotion. Calif. Ment. Health Res. Dig. 1970, 8, 151–158.

21. Ekman, P.; Friesen, W.V. Constants across cultures in the face and emotion. J. Pers. Soc. Psychol. 1971, 17, 124–129.

22. Kosti, R.; Alvarez, J.M.; Recasens, A.; Lapedriza, A. Emotion Recognition in Context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017; pp. 1667–1675. Available online: https://openaccess.thecvf.com/content_cvpr_2017/html/Kosti_Emotion_Recognition_in_CVPR_2017_paper.html (accessed on 12 August 2022).

23. Cowen, A.S.; Keltner, D.; Schroff, F.; Jou, B.; Adam, H.; Prasad, G. Sixteen facial expressions occur in similar contexts worldwide. Nature 2021, 589, 251–257.

24. Jack, R.E.; Garrod, O.G.B.; Schyns, P.G. Dynamic Facial Expressions of Emotion Transmit an Evolving Hierarchy of Signals over Time. Curr. Biol. 2014, 24, 187–192.

25. Ekman, P.; Oster, H. Facial Expressions of Emotion. Annu. Rev. Psychol. 1979, 30, 527–554.

26. Young, A.W.; Perrett, D.; Calder, A.; Sprengelmeyer, R.; Ekman, P. Facial Expressions of Emotion: Stimuli and Test (FEEST). University of St Andrews [Internet]. 2002. Available online: https://risweb.st-andrews.ac.uk/portal/en/researchoutput/facial-expressions-of-emotion-stimuli-and-test-feest(9fe02161-64a6-41f3-88e6-23240d4538bb)/export.html (accessed on 27 July 2021).

27. McArthur, L.Z.; Baron, R.M. Toward an ecological theory of social perception. Psychol. Rev. 1983, 90, 215–238.

28. Bani, M.; Russo, S.; Ardenghi, S.; Rampoldi, G.; Wickline, V.; Nowicki, S.; Strepparava, M.G. Behind the Mask: Emotion Recognition in Healthcare Students. Med. Sci. Educ. 2021, 31, 1273–1277.

29. Barrick, E.M.; Thornton, M.A.; Tamir, D.I. Mask exposure during COVID-19 changes emotional face processing. PLoS ONE 2021, 16, e0258470.

30. Wegrzyn, M.; Vogt, M.; Kireclioglu, B.; Schneider, J.; Kissler, J. Mapping the emotional face. How individual face parts contribute to successful emotion recognition. PLoS ONE 2017, 12, e0177239. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5426715/ (accessed on 5 November 2021).

31. Okazaki, S.; Yamanami, H.; Nakagawa, F.; Takuwa, N.; Kawabata Duncan, K.J. Mask wearing increases eye involvement during smiling: A facial EMG study. Sci. Rep. 2021, 11, 20370. Available online: https://www.nature.com/articles/s41598-021-99872-y (accessed on 8 August 2022).

32. Carbon, C.-C. Wearing Face Masks Strongly Confuses Counterparts in Reading Emotions. Front. Psychol. 2020, 11, 566886.

33. Braquehais, M.D.; Vargas-Cáceres, S.; Gómez-Durán, E.; Nieva, G.; Valero, S.; Casas, M.; Bruguera, E. The impact of the COVID-19 pandemic on the mental health of healthcare professionals. QJM Int. J. Med. 2020, 113, 613–617.

34. Tsamakis, K.; Rizos, E.; Manolis, A.J.; Chaidou, S.; Kympouropoulos, S.; Spartalis, E.; Spandidos, D.A.; Tsiptsios, D.; Triantafyllis, A.S. [Comment] COVID-19 pandemic and its impact on mental health of healthcare professionals. Exp. Ther. Med. 2020, 19, 3451–3453. Available online: https://www.spandidos-publications.com/10.3892/etm.2020.8646 (accessed on 5 November 2021).

35. Clancy, C.M.; Tornberg, D.N. TeamSTEPPS: Assuring Optimal Teamwork in Clinical Settings. Am. J. Med. Qual. 2007, 22, 214–217.

36. Salovey, P.; Grewal, D. The Science of Emotional Intelligence. Curr. Dir. Psychol. Sci. 2005, 14, 281–285.

37. Argyle, M. The Psychology of Interpersonal Behaviour; Penguin UK: London, UK, 1994; 284p.

38. Baron, R.A.; Byrne, D. Psicología Social [Social Psychology]; Pearson Educación: London, UK, 2005; 577p.

39. Hargie, O.; Saunders, C.; Dickson, D. Social Skills in Interpersonal Communication; Psychology Press: London, UK, 1994; 390p.

40. Lafferty, J. The Relationships between Gender, Empathy, and Aggressive Behaviors among Early Adolescents [Internet]. 2004. Available online: https://www.proquest.com/openview/ebe226ffd6813ce80757ed397c73a2b9/1?pq-origsite=gscholar&cbl=18750&diss=y (accessed on 7 November 2021).

41. Landazabal, M.G. A comparative analysis of empathy in childhood and adolescence: Gender differences and associated socio-emotional variables. Int. J. Psychol. Psychol. Ther. 2009, 9, 217–235. Available online: https://dialnet.unirioja.es/servlet/articulo?codigo=2992452 (accessed on 7 November 2021).

42. Baron-Cohen, S. The Essential Difference: Men, Women and the Extreme Male Brain; Penguin UK: London, UK, 2004; 239p.

43. Baron-Cohen, S. The Essential Difference: The Truth about the Male and Female Brain; Penguin UK: London, UK, 2003.

44. Fernández-Berrocal, P.; Cabello, R.; Castillo, R.; Extremera, N. Gender Differences in Emotional Intelligence: The Mediating Effect of Age. Behav. Psychol. 2012, 20, 77–89.

45. INE. Instituto Nacional de Estadística [Internet]. INE. Available online: https://ine.es/index.htm (accessed on 13 August 2022).

46. Márquez-González, M.; de Trocóniz, M.I.F.; Cerrato, I.M.; Baltar, A.L. Experiencia y regulación emocional a lo largo de la etapa adulta del ciclo vital: Análisis comparativo en tres grupos de edad. [Emotional experience and regulation across the adult lifespan: Comparative analysis in three age groups]. Psicothema 2008, 20, 616–622.

47. Blue, A.V.; Chessman, A.W.; Gilbert, G.; Mainous, A. Responding to patients’ emotions: Important for standardized patient satisfaction. Fam. Med. 2000, 32, 326–330.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).