An Ontology-Based Expert System Approach for Hearing Aid Fitting in a Chaotic Environment

Abstract

1. Introduction

2. Materials and Methods

- Data collection and complaint modeling. Review a dataset of patient complaints to identify common auditory issues. Exclude ambiguous terms and categorize the complaints based on key characteristics.

- Development of the self-assessment questionnaire. Design a self-assessment questionnaire to capture patient complaints.

- Design of the fitting guide. Consult with experts to identify potential hearing aid solutions for each complaint. Classify the complaints into solution-based categories for ease of fitting recommendation.

- Construction of the HAFO ontology. Build the Hearing Aid Fitting Ontology (HAFO) to map patient complaints to hearing aid fitting rules and parameters.

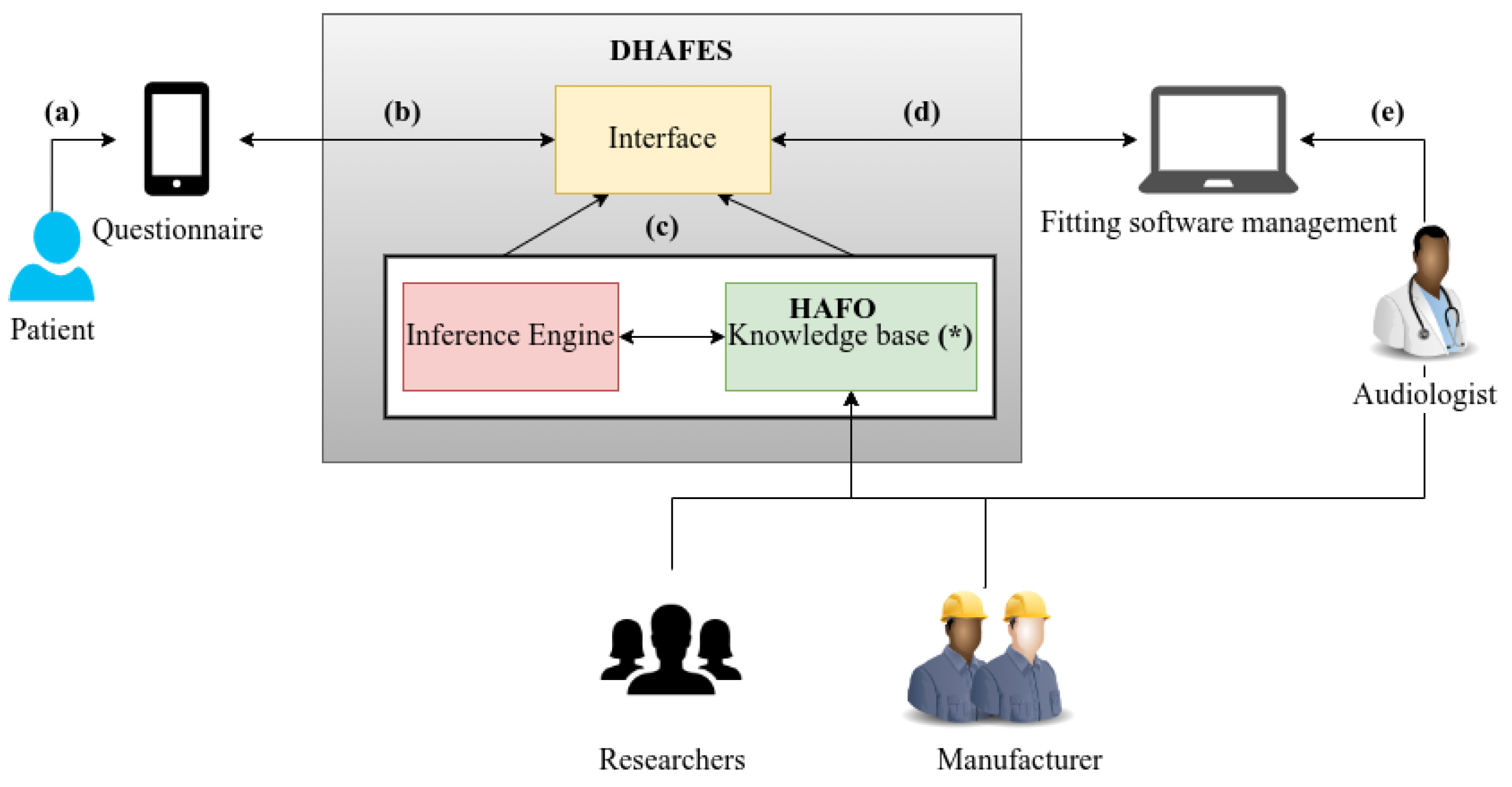

- Implementation of the DHAFES system. Develop the DHAFES system to automatically generate hearing aid fitting recommendations based on the HAFO ontology, allowing audiologists to review and adjust the suggestions.

2.1. Data Collection and Complaint Modeling

- Cause: This describes the patient’s difficulty (e.g., “I cannot hear well”, “I hear a lot of noise”);

- Specification: This indicates if the problem is encountered with particular sounds (e.g., loud sounds or soft sounds) and if so, which ones;

- Environment: This indicates the environment in which the problem was encountered (very quiet, quiet, noisy, and very noisy). We made the following considerations according to Paul and association JNA [31]: very quiet (0–20 dB), quiet (25–60 dB), noisy (65–80 dB), very noisy (90–140 dB);

- Activity: This indicates the activity the patient was engaged in when he/she encountered the problem (e.g., conversation with someone/in a group/on the phone, etc.);

- Frequency: This indicates the number of times the patient encountered the problem (rarely, occasionally, often, usually, almost always, always);

- Discomfort level: This indicates the degree to which this problem bothers the patient (tolerable, annoying, unbearable).
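
The six characteristics above can be read as a small record type. The sketch below is illustrative only: the class and function names are hypothetical and not taken from DHAFES, and the only values drawn from the text are the four environment bands. Because those bands leave gaps (for example between 20 and 25 dB), the classifier returns `None` for levels that fall in a gap rather than guessing.

```python
from dataclasses import dataclass
from typing import Optional

# Sound-level bands in dB for each environment, as listed in the text.
ENVIRONMENT_BANDS = {
    "very quiet": (0, 20),
    "quiet": (25, 60),
    "noisy": (65, 80),
    "very noisy": (90, 140),
}


def classify_environment(level_db: float) -> Optional[str]:
    """Return the environment label for a sound level, or None when the
    level falls in a gap between bands or outside 0-140 dB."""
    for label, (low, high) in ENVIRONMENT_BANDS.items():
        if low <= level_db <= high:
            return label
    return None


@dataclass(frozen=True)
class Complaint:
    """One complaint described by the six characteristics (hypothetical type)."""
    cause: str
    specification: str
    environment: str
    activity: str
    frequency: str
    discomfort: str

    def describe(self) -> str:
        # Build a one-line summary from the six fields.
        return (
            f"{self.cause} ({self.specification}), {self.environment} environment, "
            f"{self.activity}; {self.frequency}, {self.discomfort}"
        )
```

A level of 70 dB would be labeled "noisy", while 22 dB has no label under the published bands.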

2.2. Development of the Self-Questionnaire

2.3. Design of the Fitting Guide

- Experts’ hearing care experiences. Modeling complaints according to six characteristics yields 5040 possible complaints. We asked hearing care professionals to select from these the coherent complaints commonly reported by wearers and to associate fitting solutions with them. Based on expert consultations, we classified these solutions into six categories:

- Recommendation (R): This tells the patients what they can do to fix or alleviate the situation. For example, increasing or decreasing the volume of the hearing aid;

- Physical adjustment (AP): This includes situations when the complaint refers to a change in the characteristics or physical elements of the prosthesis. For example, changing the tube, cleaning the prosthesis;

- Electroacoustic adjustment (AE): This solution requires the knowledge of the parameter to be modified and the operation to be applied to this parameter (increase the global gain, decrease the low-frequency gain, etc);

- Therapy (T): It is used when the complaint refers to an intrinsic characteristic of the patient, such as speech comprehension problems like “I hear but I do not understand”;

- Consultation (C): This applies when the information contained in the complaint is not sufficient to determine an adjustment;

- Accommodation (AC): This situation arises when no immediate solution can be found and the patient must adapt to it. As noted by AuditionSanté [33], the use of hearing aids may require an adjustment period.

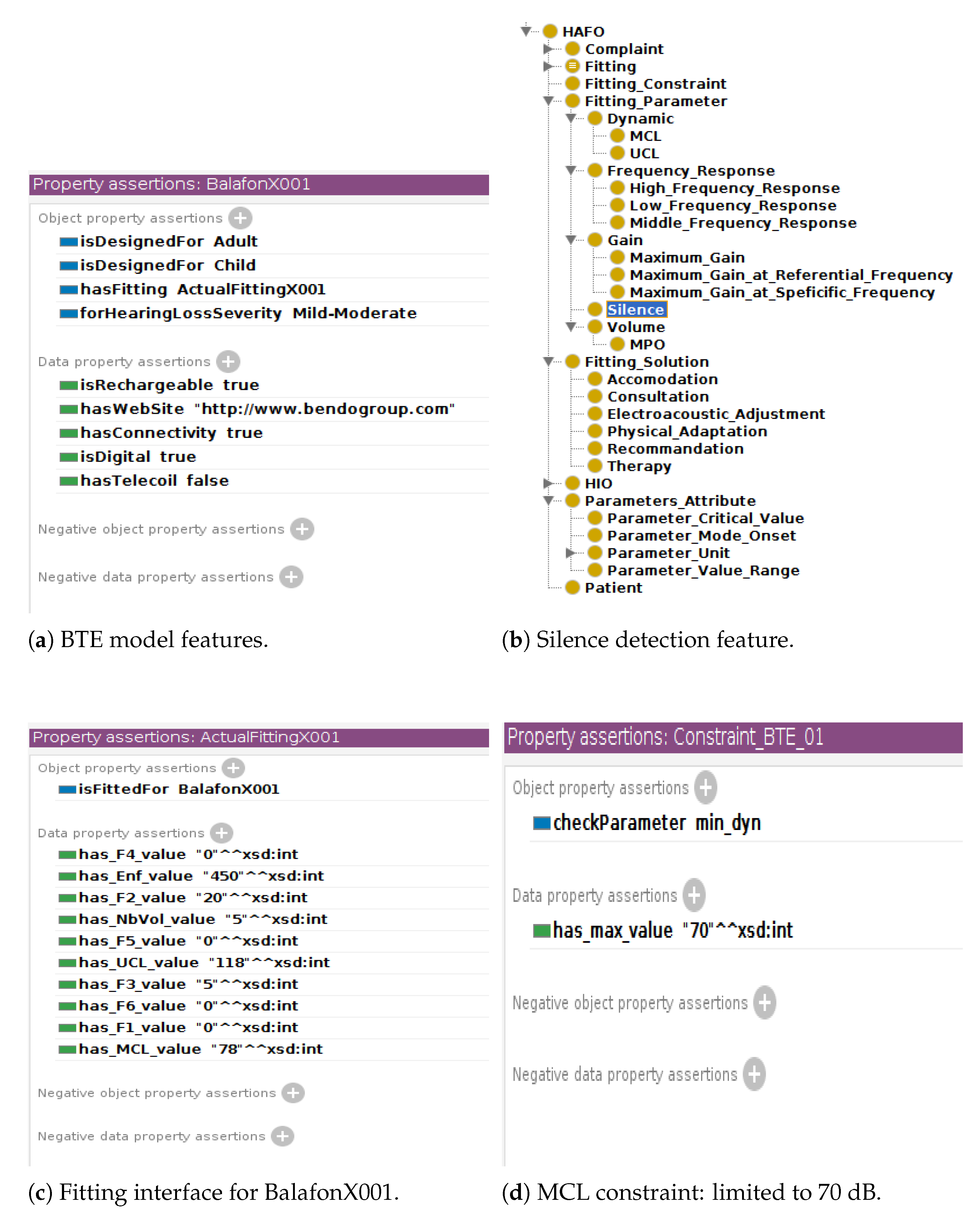

Audiologists were instructed to prioritize solutions for each complaint. In clinical settings, they would attempt the first solution, and if the patient remained dissatisfied, they would proceed to the next solution, continuing this process until the patient was satisfied. Complaints with identical solutions were grouped into an equivalence class, while unresolved complaints were categorized into a “consultation” class. For electroacoustic adjustments, audiologists were also asked to specify which fitting parameters to modify, such as frequency response, compression characteristics, or noise reduction. Following this procedure, 33 complaint classes were identified, each associated with a specific solution. This forms the basis of our fitting guide, an excerpt of which is presented in Table 1. For the full fitting guide, see Appendix B.

In the guide, the field labeled Command contains the values decrease − and increase +, indicating the operation to apply when adjusting the fitting. For example, for the class described as “loud sound in a quiet environment” (see Table 1, line 11), the corresponding solution is “decrease MCL”. However, in order to decrease the gain, it is important to consider the specific constraints of the hearing aid (e.g., the maximum and minimum values) and the extent to which the gain should be adjusted to achieve a noticeable change. While the first piece of information is provided by the manufacturer, the second can be drawn from the hearing care professional’s experience and/or relevant scientific research.

- Manufacturer’s recommendations. The manufacturers of the Balafon hearing aid have provided detailed recommendations for each hearing aid parameter, including default values, critical values, constraints, recommended variations, and relevant use cases. For instance, the permissible range for MCL (Most Comfortable Loudness) is 0–70 dB. Figure A2b,d in Appendix C.2 describes how this manufacturer’s information is used.

- Research results. A review of the literature was conducted to identify studies addressing hearing aid parameter adjustment methods. This initial investigation focused on works related to frequency gain, as reported by Caswell-Midwinter and Whitmer [34], Jenstad et al. [35], Dirks et al. [36], and Caswell-Midwinter and Whitmer [37], as well as studies on dynamic compression by Franck et al. [18] and Anderson et al. [17]. Each study was classified according to the relevant parameter, experimental conditions, and reported outcomes, such as recommended adjustments. Based on the findings, a noticeable change in frequency gain requires a variation of 4–12 dB. Depending on the clinical context, the applied gain may correspond to the minimum, maximum, or average of these values. For example, a solution for the complaint “loud sound in quiet environment” may involve “decrease MCL by 6 dB”. These findings were integrated into HAFO (available at https://github.com/annekevs/hafo (accessed on 26 March 2025)), the domain ontology developed for hearing aid fitting.
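
The way the three sources combine (a command from the guide, a step size from the literature, and a permissible range from the manufacturer) can be sketched as follows. This is a minimal illustration, not the DHAFES implementation: the function names and the `policy` argument are hypothetical, and the only figures taken from the text are the 4–12 dB noticeable range and the 0–70 dB MCL range.

```python
from statistics import mean

# Range of gain change reported as noticeable in the reviewed studies (dB).
NOTICEABLE_STEP_DB = (4.0, 12.0)
# Permissible MCL range stated by the manufacturer (dB).
MCL_RANGE_DB = (0.0, 70.0)


def choose_step(policy: str) -> float:
    """Pick the minimum, maximum, or average noticeable step."""
    low, high = NOTICEABLE_STEP_DB
    steps = {"min": low, "max": high, "mean": mean((low, high))}
    return steps[policy]


def adjust(current: float, command: str, step: float, limits=MCL_RANGE_DB) -> float:
    """Apply an increase/decrease command and clamp to the device limits."""
    if command not in ("increase", "decrease"):
        raise ValueError(f"unknown command: {command!r}")
    delta = step if command == "increase" else -step
    low, high = limits
    # Clamping keeps the recommendation inside what the device allows.
    return max(low, min(high, current + delta))
```

For example, `adjust(50, "decrease", 6)` gives 44 dB, while an increase of 8 dB from 66 dB is capped at the 70 dB limit.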

2.4. Construction of the HAFO Ontology

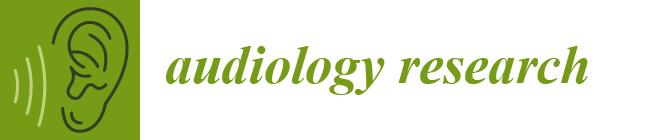

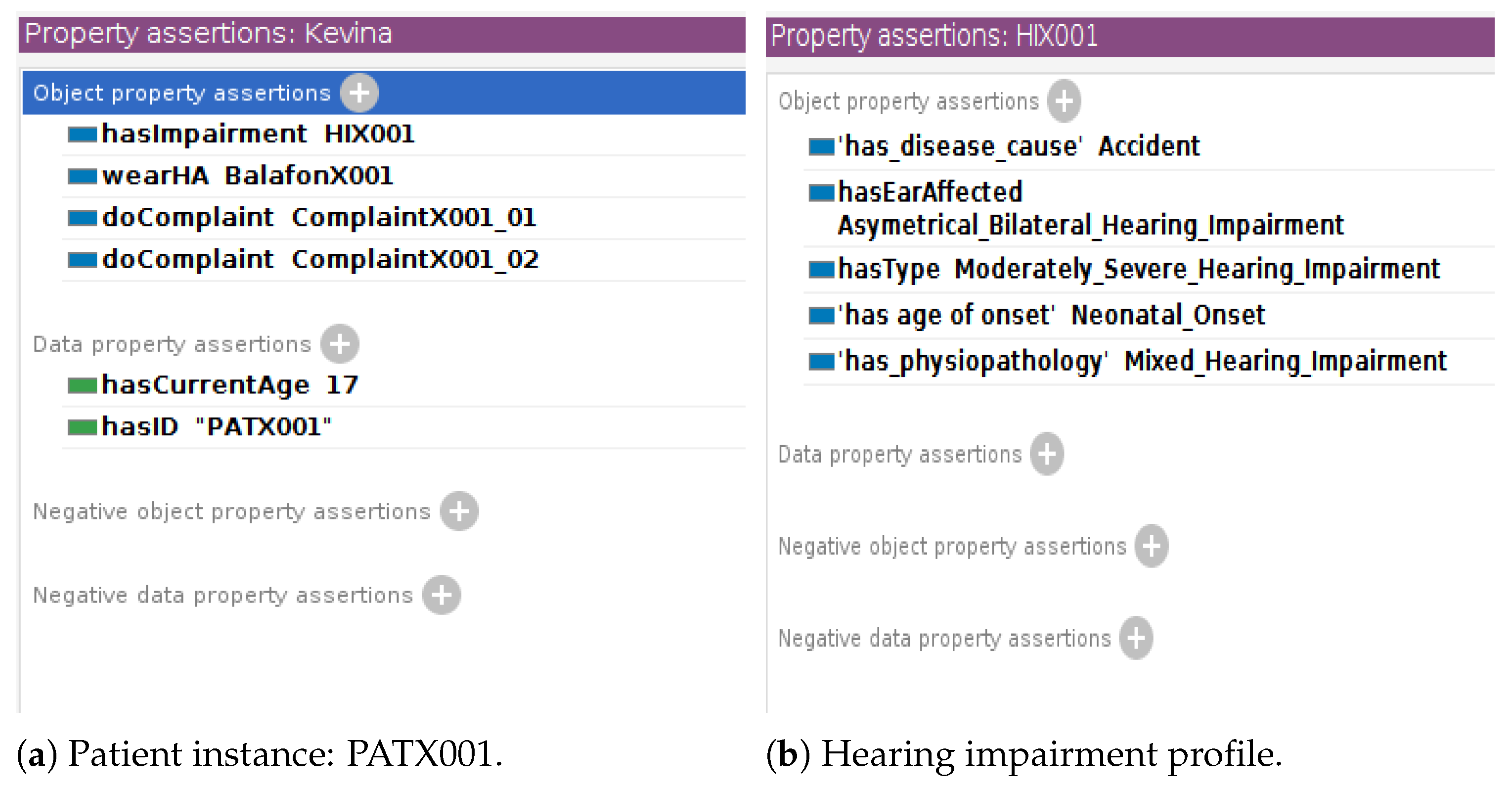

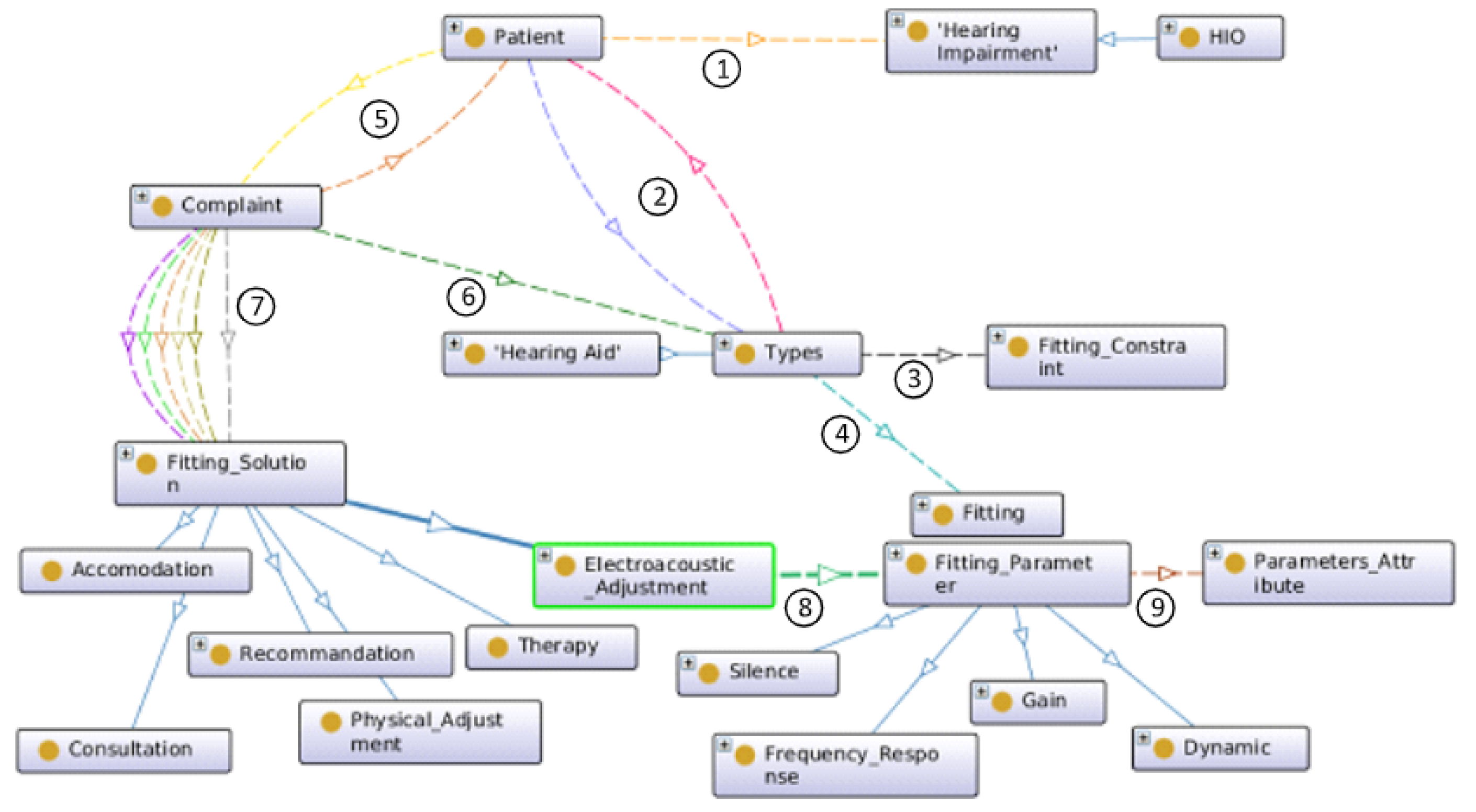

- HAFO design. The concepts related to hearing aid fitting mainly involve the patient, the complaint, and the fitting parameters of the hearing aid to be modified. The domain knowledge of hearing impairment is based on the Hearing Impairment Ontology developed by Hotchkiss et al. [23], while the commercial description of the hearing aid is provided by the Hearing Aid Ontology designed by Napoli-Spatafora [24]. Figure 1 gives a graphical overview of the concepts related to the fitting of hearing aids. A patient has ① hearing loss and uses a hearing aid as ② treatment. Each hearing aid has ③ fitting constraints. For example, body hearing aids (traditional large hearing aids worn on the body) have a wider range of amplification than Behind-The-Ear (BTE) aids. A hearing aid type has ④ a current fitting. A patient submits ⑤ a complaint. A complaint is characterized by the following: description, cause, specificity, environment, activity, frequency, and degree of discomfort. A complaint is related to ⑥ a hearing aid type. Each patient complaint is associated with one or more ⑦ fitting solutions (consultation, recommendation, physical adjustment, therapy, accommodation, and electroacoustic adjustment) depending on their degree of priority. The electroacoustic adjustment consists of a modification of the ⑧ adjustment parameters (or electroacoustic characteristics) of the hearing aid. Each parameter has its own ⑨ specific attributes (value range, mode, default value, unit, etc.).

- HAFO implementation. The HAFO ontology was developed in English using OWL 2 to define concepts and relationships for interoperability. Protégé (http://protege.stanford.edu (accessed on 26 March 2025)) 5.5.0 was used to create the ontology, while SWRL provided rule-based logic derived from the fitting guide in Section 2.3. The inference engine Pellet (https://www.w3.org/2001/sw/wiki/Pellet (accessed on 26 March 2025)) was employed to infer implicit relationships. Pellet performs reasoning tasks such as consistency checking, classification, and query answering by processing the ontology’s defined relationships. This ensures that the system’s recommendations are logically consistent with HAFO, supporting dynamic adaptation to various fitting scenarios. For instance, Pellet can suggest adjustments like increasing microphone sensitivity or enhancing noise reduction for complaints about hearing in noise. OntoGraf was used for visualizing the ontology structure.
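
To make the relations ① to ⑨ easier to follow, the sketch below writes them as plain subject, predicate, object triples and walks them with a breadth-first search. It is only a reading aid under stated assumptions: apart from `doComplaint`, which appears in Appendix C.3, the predicate and class names are hypothetical paraphrases of the design description and are not the identifiers used in the published HAFO files. No OWL tooling or reasoner is involved.

```python
from collections import deque

# Hypothetical triples paraphrasing relations 1-9 of the HAFO design.
TRIPLES = [
    ("Patient", "hasHearingLoss", "HearingImpairment"),           # 1
    ("Patient", "usesTreatment", "HearingAid"),                   # 2
    ("HearingAid", "hasFittingConstraint", "FittingConstraint"),  # 3
    ("HearingAid", "hasCurrentFitting", "Fitting"),               # 4
    ("Patient", "doComplaint", "Complaint"),                      # 5
    ("Complaint", "concernsHearingAid", "HearingAid"),            # 6
    ("Complaint", "hasSolution", "FittingSolution"),              # 7
    ("ElectroacousticAdjustment", "modifies", "FittingParameter"),  # 8
    ("FittingParameter", "hasAttribute", "ParameterAttribute"),   # 9
]


def objects_of(subject: str) -> list:
    """Concepts directly linked from the given subject, sorted by name."""
    return sorted({o for s, _, o in TRIPLES if s == subject})


def reachable(start: str, goal: str) -> bool:
    """Breadth-first search along the directed relations."""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for s, _, o in TRIPLES:
            if s == node and o not in seen:
                seen.add(o)
                queue.append(o)
    return False
```

In this reading, a fitting solution is reachable from a patient only through a complaint, which matches the design described above.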

2.5. DHAFES System Architecture

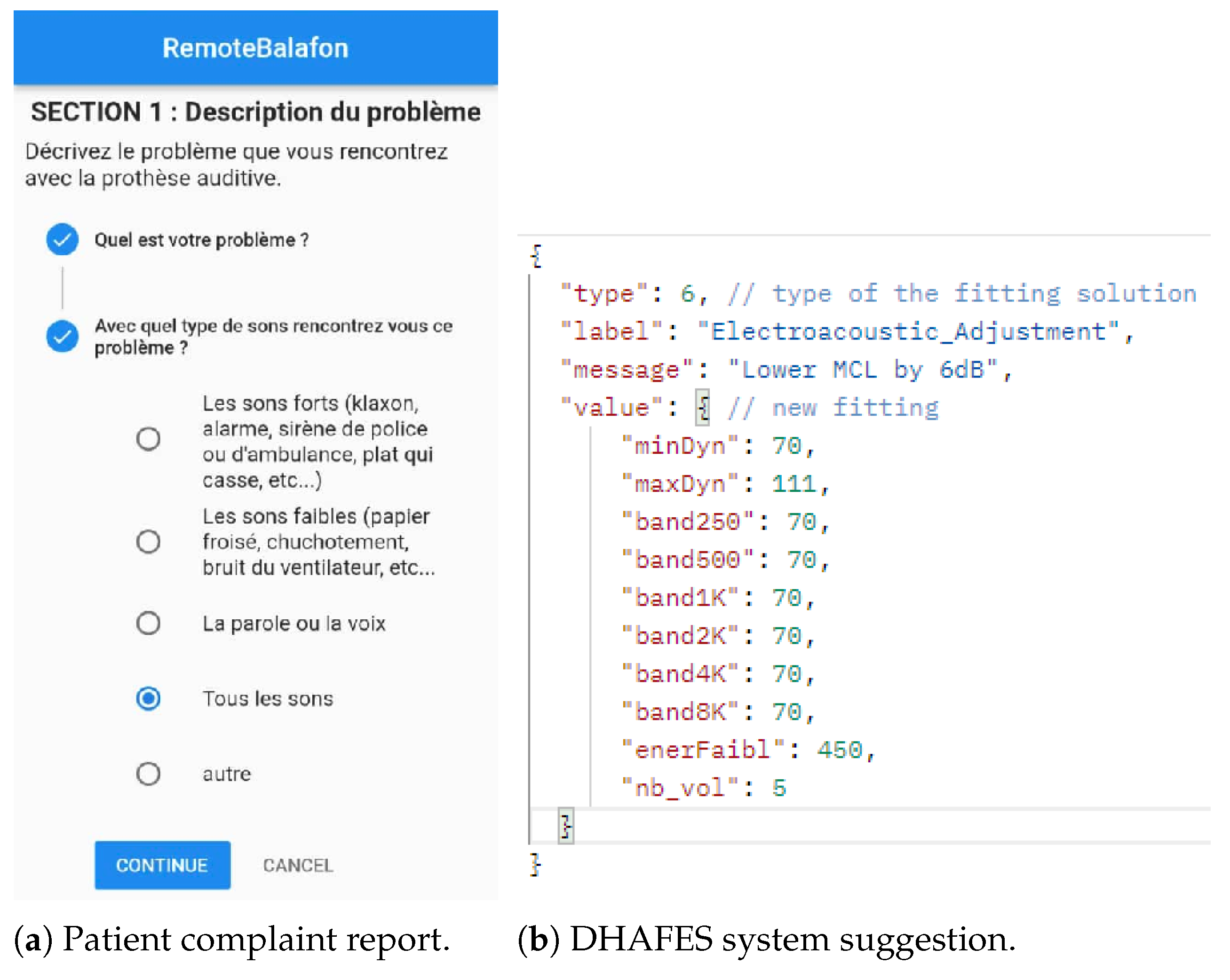

- Step 1: Patient interaction and complaint collection. Patients interact with DHAFES through a bilingual mobile interface, completing the structured, expert-validated questionnaire designed in Section 2.2.

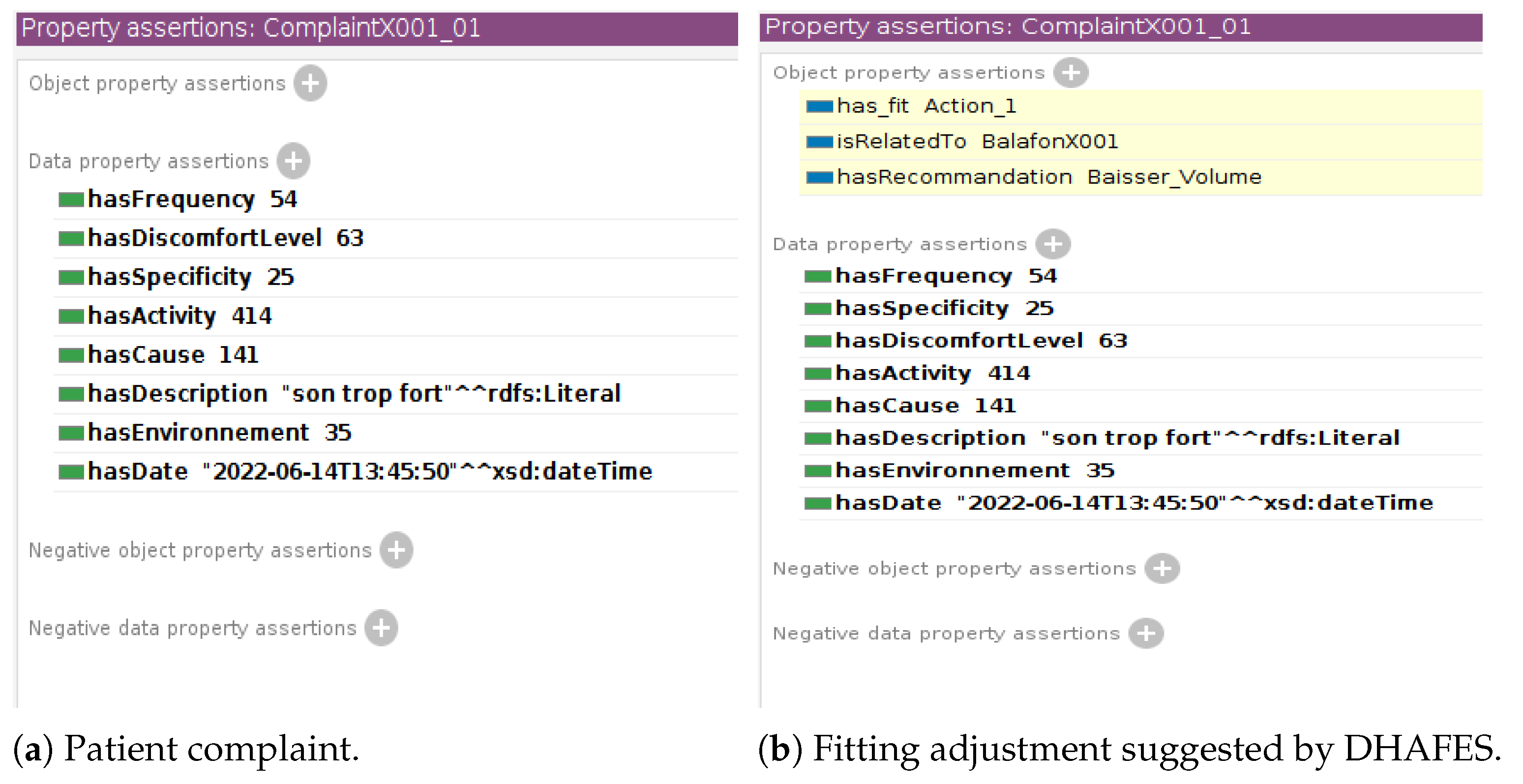

- Step 2: Ontology-based inference and recommendation generation. Once the questionnaire is submitted, DHAFES converts the patient’s structured responses into an ontological query, runs a semantic reasoning engine (Pellet) over the HAFO ontology to determine which complaint class(es) the input falls under (e.g., feedback, speech-in-noise, volume discomfort), and applies predefined expert rules to generate recommendations and parameter adjustments. Each complaint class is mapped to a specific response strategy, such as electroacoustic adjustment (e.g., reduce gain at certain frequencies), physical adjustment (e.g., change ear tip), recommendation (e.g., better placement of the hearing aid), or referral or therapy (e.g., auditory training or ENT consultation).

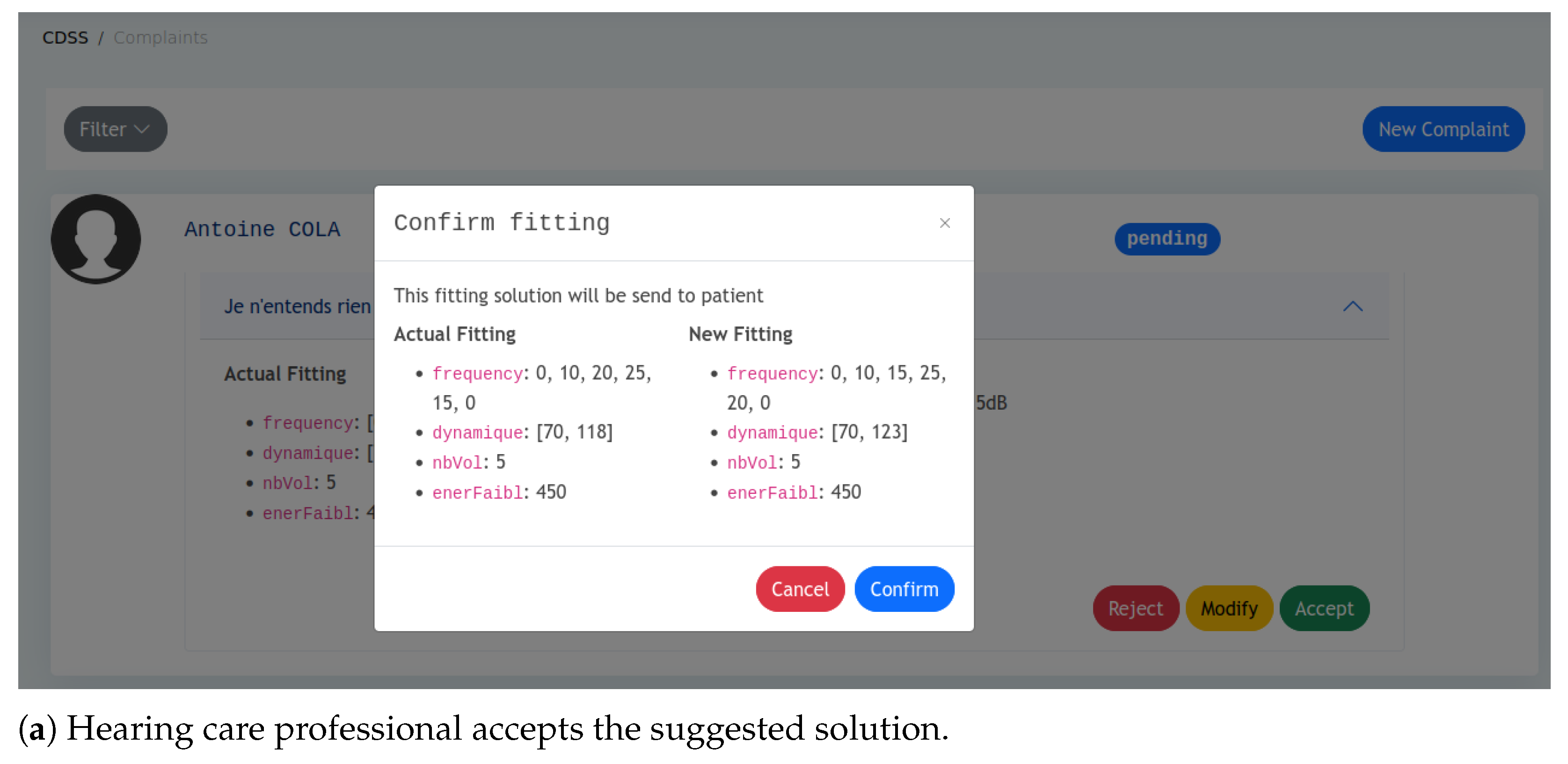

- Step 3: Audiologist review and remote fitting. All recommendations are reviewed by an audiologist via the Fitting Management Software interface. The audiologist can approve, modify, request more information, or suggest clinical referral. Once approved, adjustments are applied to the hearing aid, including in remote settings, ensuring clinical oversight. After the adjustment, the patient is prompted to rate their satisfaction during follow-up. These feedback points are logged to track resolution rates per complaint class, flag complaints needing ontology refinement, and support continuous improvement of DHAFES rules via expert review.

- Step 4: Feedback and system evolution. Post-adjustment, patients can rate their satisfaction. Expert analysis of this feedback informs updates to the HAFO ontology. Although DHAFES is not self-learning, it evolves through expert input, clinical collaboration, manufacturer guidance, and research. The system is designed to be robust, explainable, and operable without constant internet access or local audiology services.
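
The class-matching part of Step 2 can be illustrated without a reasoner. The sketch below uses rows 3, 4, 5, and 33 of the fitting guide in Appendix B and assumes that a complaint is assigned to the most specific matching class first, then to the environment-independent class, and finally to "other" (consultation). That fallback order is an assumption made for illustration; the actual system derives the class through Pellet and SWRL rules, and the function name is hypothetical.

```python
from typing import Optional, Tuple

# (cause, environment or None for "any", class number, solution category),
# copied from rows 3-5 of the fitting guide.
GUIDE = [
    ("whistling", "quiet", 3, "AP"),
    ("whistling", "noisy", 4, "AP"),
    ("whistling", None, 5, "EA"),
]
# Row 33 ("other") maps to consultation.
FALLBACK = (33, "C")


def match_class(cause: str, environment: Optional[str]) -> Tuple[int, str]:
    """Return (class number, solution category) for a complaint."""
    # Classes that name both the cause and this exact environment.
    specific = [(n, s) for c, e, n, s in GUIDE
                if c == cause and e is not None and e == environment]
    # Classes that name the cause only.
    general = [(n, s) for c, e, n, s in GUIDE if c == cause and e is None]
    for candidates in (specific, general):
        if candidates:
            return candidates[0]
    return FALLBACK
```

With these rows, whistling in a quiet environment maps to class 3 (physical adjustment), whistling in a very noisy environment falls back to class 5 (electroacoustic adjustment), and an unlisted cause is routed to consultation.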

3. Results

- Step 1: HAFO verification. This step aims to verify that the ontology includes all the necessary parameters for the Balafon hearing aid. If any are missing, new concepts must be added. For example, one of the features of the Balafon hearing aid is silence detection, which is managed by the parameter enerFaibl. We therefore added the “Silence” class as a subclass of the “Fitting Parameter” class of the ontology (see Figure A2b in Appendix C.2).

- Step 2: Instance creation. In this step, we create permanent instances that the inference engine uses to process queries. These instances cover hearing aid models (BTE, body hearing aid), parameters, adjustment constraints (related to device limitations), etc. For instance, the BTE model of the Balafon hearing aid limits MCL to 70 dB despite the typical 0–120 dB range (see Figure A2d in Appendix C.2). Such constraints ensure that recommendations remain within device capabilities. Other instances related to Patient, Hearing Impairment, Hearing Aid, Complaint, and Fitting can be temporary and are created dynamically during the prediction.

- Step 3: Rules update. If necessary, adjustment rules (derived from the fitting guide developed in Section 2.3) can be updated based on the patient’s complaints, parameter ranges, and adjustment constraints. An example of a rule, written in SWRL, is provided in Appendix C.3. Additional rules can be added based on patient characteristics such as age, gender, and demographics, with the system automatically inferring the appropriate adjustments. The result of this step is HAFO-Balafon, a fully customized version of HAFO for the Balafon hearing aid. This knowledge base is stored in DHAFES, allowing the RemoteBalafon Platform to make API calls for adjustment recommendations.

- Step 4: Integration. The DHAFES expert system was integrated with the existing remote fitting platform called RemoteBalafon, and the resulting physical architecture is shown in Figure 3. The digital questionnaire is integrated into the m-Health application on the patient side. When a patient reports a complaint, the encoded data are transmitted to both the audiologist’s web platform and the DHAFES system. DHAFES then performs inference and returns the recommended adjustments along with an explanation of the reasoning behind them to the audiologist.

4. Discussion

4.1. Strengths and Clinical Impact

4.2. DHAFES vs. AI/ML Models

4.3. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Self-Evaluation Questionnaire

Appendix A.1. Section 1: Describe the Problem You Are Facing

- I do not hear anything.

- I hear a lot of noise:

  - The sound of rain or wind.

  - Ambient noises.

  - Buzzing, grinding noises.

  - Whistling sounds.

  - Other:__________________________

- I hear but do not understand.

- I do not hear well because:

  - The sound is too loud.

  - The sound is too weak.

  - The sound cuts out intermittently.

  - The sound is strange, unnatural.

  - The sound is distorted.

  - The sound is muffled.

  - The sound is metallic.

  - The sound is painful.

  - Other:__________________________

- I hear an echo.

- Other:__________________________

- Loud sounds (horn, alarm, siren, dish breaking, running water, door slamming, etc.).

- Soft sounds (paper rustling, fan noise, etc.).

- Speech or voice.

- My own voice.

- Men’s voices (deep pitch).

- Women’s or children’s voices (high pitch).

- Any type of voice.

- All sounds.

- Other:__________________________

Appendix A.2. Section 2: Describe the Situation Where You Experienced This Problem

- Very quiet (recording studio, etc.).

- Quiet (residential area, office, etc.).

- Noisy (playground, restaurant, traffic, party, etc.).

- Very noisy (bar, market, concert, packed stadium, etc.).

- Any environment.

- Other:__________________________

- Talking.

- Talking to someone.

- Talking in a group.

- Talking on the phone.

- Listening to the radio, TV, or a movie.

- Attending a class, conference, or seminar.

- Listening to music.

- Doing anything.

- Other:__________________________

Appendix A.3. Section 3: Frequency and Discomfort Level

- Never.

- Rarely.

- Occasionally.

- Sometimes.

- Often.

- Very often.

- Always.

- Bearable.

- Annoying.

- Unbearable.

Appendix A.4. Summary: Complaint Description

- Sample 1 (coherent): When I am in a quiet place, I have difficulty conversing with someone because the sound cuts out. I experience this problem all the time, and I find it unbearable.

- Sample 2 (incoherent): When I’m in a quiet place, listening to the radio, my voice makes a lot of noise.

- Yes.

- Almost.

- No.

Appendix B. Fitting Guide

| N° | Complaint | Solution | Category | Parameter | Command | Recommendation |
|---|---|---|---|---|---|---|
| 1 | not hearing sounds | C | | | | The hearing aid may be in standby mode. Restart (turn off and on) the hearing aid and check the battery level. Ensure that your hearing aid is on. |
| 2 | wind noise in quiet environment | EA | dynamic | MCL | decrease− | Do not worry, this is the intrinsic noise of the hearing aid. |
| 3 | whistling in quiet environment | AP | | | | Check that your hearing aid is properly positioned in the ear. |
| 4 | whistling in noisy environment | AP | | | | Check that your hearing aid is properly positioned. |
| 5 | whistling | EA | dynamic | UCL | decrease− | Lower the volume. |
| 6 | noise in quiet environment | EA | dynamic | MCL | decrease− | Lower the volume. |
| 7 | noise in noisy environment | EA | volume | nb_vol | increase+ | Lower the volume. |
| 8 | hearing but not understanding | T | | | | Follow a therapy. Please make an appointment with an audiologist. |
| 9 | loud sounds are very loud | EA | dynamic | UCL | decrease− | Lower the volume. |
| 10 | soft sounds loud in quiet environment | EA | dynamic | MCL | decrease− | Lower the volume. |
| 11 | loud sound in quiet environment | EA | dynamic | UCL | decrease− | Lower the volume. |
| 12 | loud sound in noisy environment | C | | | | Lower the volume, reduce sensitivity. |
| 13 | loud sound | EA | dynamic | MCL | decrease−− | Lower the volume. |
| 14 | loud sounds are faint in a quiet environment | EA | dynamic | UCL | increase++ | Increase the volume. |
| 15 | loud sounds are faint in noisy environment | EA | dynamic | MCL | decrease−− | |
| 16 | loud sounds are soft | EA | dynamic | UCL | increase++ | Increase the volume. |
| 17 | weak sounds are soft in quiet environment | EA | dynamic | MCL | increase+ | Increase the volume. |
| 18 | weak sounds are soft in noisy environment | R | | | | Ask the speaker to speak louder. |
| 19 | weak sounds are very soft | EA | dynamic | MCL | increase+ | Increase the volume. |
| 20 | soft sound in quiet environment | EA | dynamic | MCL | increase++ | |
| 21 | soft sound in noisy environment | R | | | | Lower the volume, ask the speaker to speak louder. |
| 22 | soft sound | EA | dynamic | MCL | increase++ | |
| 23 | sound cutting in quiet environment | EA | silence | enerFaibl | decrease−− | |
| 24 | sound cutting in noisy environment | EA | silence | enerFaibl | decrease−−−− | |
| 25 | sound cutting | EA | silence | enerFaibl | decrease−− | |
| 26 | distorted sound | EA | frequency | medium | Make speech intelligible. | |
| 27 | muffled sound | EA | frequency | low and medium | Attenuate low frequencies, amplify mid frequencies. | |
| 28 | painful sound (high-pitched sound) | EA | frequency | high | decrease− | |
| 29 | loud male voices in quiet environment | EA | frequency | low | decrease− | |
| 30 | loud children’s voices in quiet environment | EA | frequency | high | decrease− | |
| 31 | weak sounds are loud | EA | dynamic | MCL | decrease− | Lower the volume. |
| 32 | I hear but I do not understand + specific voice | C | | | | |
| 33 | other | C | | | | |

- MCL (Most Comfortable Level): The volume level at which sound is perceived as comfortable for listening.

- UCL (Uncomfortable Loudness Level): The threshold at which sound becomes intolerably loud.

- Frequency: This governs the adjustment of sound pitch, affecting the amplification of low, mid, or high frequencies to enhance speech clarity and listening comfort.

- enerFaibl: A parameter regulating silence detection.

- nb_vol: A control for adjusting the hearing aid’s overall volume output.
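
The Command column of the guide combines a direction with one or more `+` or `−` marks. A small parser, sketched below, separates the two. Treating the number of marks as a relative magnitude (for example `decrease−−−−` being a larger change than `decrease−−`) is an assumption made here for illustration; the guide itself does not define the marks. The function name is hypothetical, and the parser does not check that the sign of the marks agrees with the direction word.

```python
import re
from typing import Tuple

# Direction word followed by zero or more marks. The guide uses the
# Unicode minus sign (U+2212); the ASCII hyphen is accepted as well.
_COMMAND = re.compile(r"^(increase|decrease)([+\-\u2212]*)$")


def parse_command(text: str) -> Tuple[str, int]:
    """Split a guide command into (direction, number of marks)."""
    match = _COMMAND.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognised command: {text!r}")
    direction, marks = match.groups()
    return direction, len(marks)
```

Free-text entries in the Command column, such as the ones in rows 26 and 27, are rejected with a `ValueError` and would need separate handling.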

Appendix C. DHAFES Entities

Appendix C.1. HAFO Patient’s Characteristics

Appendix C.2. Manufacturer’s Brand

Appendix C.3. DHAFES Rules, Inference, and Path Explanation

| # | Explanation | Justification |
|---|---|---|
| 1 | ComplaintX001_01 hasSpecificity 25 | YES |
| 2 | ComplaintX001_01 hasCause 141 | YES |
| 3 | doComplaint Domain Patient | NO |
| 4 | ComplaintX001_01 hasEnvironnement 35 | YES |
| 5 | PATX001 doComplaint ComplaintX001_01 | YES |
| 6 | hasCause(?com, ?cau), Patient(?p), equal(?env, 35), doComplaint(?p, ?com), hasSpecificity(?com, ?spec), equal(?cau, 141), hasEnvironnement(?com, ?env), equal(?spec, 25) ⇒ has_fit(?com, Action_1), hasRecommandation(?com, Baisser_Volume) | YES |
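
The rule in row 6 is a conjunction of conditions on one complaint followed by two conclusions. The sketch below mirrors that structure in plain Python to show how the explanation rows 1, 2, and 4 feed the rule. It is not how Pellet evaluates SWRL; the dataclass, the dictionary layout, and the function name are hypothetical, while the codes (141, 25, 35) and the conclusion names are copied from the table.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ComplaintFacts:
    """Asserted facts about one complaint, using the coded values."""
    identifier: str
    cause: int
    specificity: int
    environment: int


# Antecedent and consequent of the rule shown in row 6.
RULE_1 = {
    "if": {"cause": 141, "specificity": 25, "environment": 35},
    "then": {"has_fit": "Action_1", "hasRecommandation": "Baisser_Volume"},
}


def apply_rule(rule: dict, complaint: ComplaintFacts) -> Dict[str, str]:
    """Return the rule's conclusions if every condition holds, else {}."""
    conditions = rule["if"]
    if all(getattr(complaint, name) == value for name, value in conditions.items()):
        return dict(rule["then"])
    return {}
```

Applied to `ComplaintX001_01` with cause 141, specificity 25, and environment 35, the rule yields both conclusions; changing any one code leaves the result empty.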

Appendix D. DHAFES—Fitting Platform

References

- World Health Organization. World Report on Hearing; World Health Organization: Geneva, Switzerland, 2021.

- Balling, L.W.; Mølgaard, L.L.; Townend, O.; Nielsen, J.B.B. The Collaboration between Hearing Aid Users and Artificial Intelligence to Optimize Sound. Semin. Hear. 2021, 42, 282–294.

- Wonkam Tingang, E.; Noubiap, J.J.; Fokouo, J.V.; Oluwole, O.G.; Nguefack, S.; Chimusa, E.R.; Wonkam, A. Hearing Impairment Overview in Africa: The Case of Cameroon. Genes 2020, 2, 233.

- Angley, G.P.; Schnittker, J.A.; Tharpe, A.M. Remote hearing aid support: The next frontier. J. Am. Acad. Audiol. 2017, 28, 893–900.

- Kim, J.; Jeon, S.; Kim, D.; Shin, Y. A Review of Contemporary Teleaudiology: Literature Review, Technology, and Considerations for Practicing. J. Audiol. Otol. 2021, 25, 1–7.

- Fabry, D.A.; Bhowmik, A.K. Improving Speech Understanding and Monitoring Health with Hearing Aids Using Artificial Intelligence and Embedded Sensors. Semin. Hear. 2021, 42, 295–308.

- Rapoport, N.; Pavelchek, C.; Michelson, A.P.; Shew, M.A. Artificial Intelligence in Otology and Neurotology. Otolaryngol. Clin. N. Am. 2024, 57, 791–802.

- Sutton, R.T.; Pincock, D.; Baumgart, D.C.; Sadowski, D.C.; Fedorak, R.N.; Kroeker, K.I. An overview of clinical decision support systems: Benefits, risks, and strategies for success. NPJ Digit. Med. 2020, 3, 17.

- Jenstad, L.; Van Tasell, D.; Chiquita, E. Hearing aid troubleshooting based on patients’ descriptions. Am. J. Audiol. 2003, 14, 34760.

- Thielemans, T.; Pans, D.; Chenault, M.; Anteunis, L. Hearing aid fine-tuning based on Dutch descriptions. Int. J. Audiol. 2017, 56, 507–515.

- Chiriboga, L.F. Hearing aids: What are the most recurrent complaints from users and their possible relationship with fine tuning? Audiol. Commun. Res. 2021, 27, e2550.

- ASHA. Guidelines for hearing aid fitting for adults. Am. J. Audiol. 1998, 7, 5–13.

- Valente, M.; Benson, D.; Chisolm, T.; Citron, D.; Hampton, D.; Loavenbruck, A.; Ricketts, T.; Solodar, H.; Sweetow, R. Guidelines for the Audiologic Management of Adult Hearing Impairment Task Force Members; American Academy of Audiology: Danbury, CT, USA, 2007.

- Anderson, M.C.; Arehart, K.H.; Souza, P.E. Survey of Current Practice in the Fitting and Fine-Tuning of Common Signal-Processing Features in Hearing Aids for Adults. J. Am. Acad. Audiol. 2018, 29, 118–124.

- Froehlich, M.; Branda, E.; Apel, D. Signia TeleCare Facilitates Improvements in Hearing Aid Fitting Outcomes. 2019. Available online: https://www.audiologyonline.com/articles/signia-telecare-facilitates-improvements-in-24096 (accessed on 8 August 2022).

- Liang, R.; Guo, R.; Xi, J.; Xie, Y.; Zhao, L. Self-Fitting Algorithm for Digital Hearing Aid Based on Interactive Evolutionary Computation and Expert System. Appl. Sci. 2017, 7, 272.

- Anderson, M.C.; Arehart, K.H.; Souza, P.E. Acoustical and Perceptual Comparison of Noise Reduction and Compression in Hearing Aids. J. Speech Lang. Hear. Res. 2015, 58, 1363–1376.

- Franck, B.A.; van Kreveld-Bos, C.S.; Dreschler, W.A.; Verschuure, H. Evaluation of spectral enhancement in hearing aids, combined with phonemic compression. J. Acoust. Soc. Am. 1999, 106, 1452–1464.

- Matentzoglu, N.; Malone, J.; Mungall, C. MIRO: Guidelines for minimum information for the reporting of an ontology. J. Biomed. Semant. 2018, 9, 6.

- Haque, A.K.M.B.; Arifuzzaman, B.M.; Siddik, S.A.N.; Kalam, A.; Shahjahan, T.S.; Saleena, T.S.; Alam, M.; Islam, M.R.; Ahmmed, F.; Hossain, M.J. Semantic Web in Healthcare: A Systematic Literature Review of Application, Research Gap, and Future Research Avenues. Int. J. Clin. Pract. 2022, 2022, 6807484.

- Rubin, D.; Shah, N.; Noy, N. Biomedical ontologies: A functional perspective. Briefings Bioinform. 2008, 9, 75–90.

- Robin, C.R.R.; Jeyalaksmi, M.S. Cochlear Implantation Domain Knowledge Representation and Recommendation. Ann. Rom. Soc. Cell Biol. 2021, 25, 13719. Available online: http://annalsofrscb.ro/index.php/journal/article/view/8183 (accessed on 26 March 2025).

- Hotchkiss, J.; Manyisa, N.; Mawuli Adadey, S.; Oluwole, O.G.; Wonkam, E.; Mnika, K.; Yalcouye, A.; Nembaware, V.; Haendel, M.; Vasilevsky, N.; et al. The Hearing Impairment Ontology: A Tool for Unifying Hearing Impairment Knowledge to Enhance Collaborative Research. Genes 2019, 10, 960.

- Napoli-Spatafora, M.A. HAO: Hearing Aid Ontology. In Proceedings of the International Conference on Biomedical Ontologies, Bozen-Bolzano, Italy, 16–18 September 2021; Available online: http://ceur-ws.org/Vol-3073/paper12.pdf (accessed on 26 March 2025).

- Cox, R.M.; Alexander, G.C. The Abbreviated Profile of Hearing Aid Benefit. Ear Hear. 1995, 16, 176–186.

- Newman, C.W.; Weinstein, B.E. The Hearing Handicap Inventory for the Elderly as a measure of hearing aid benefit. Ear Hear. 1988, 9, 81–85.

- Newman, C.W.; Weinstein, B.E.; Jacobson, G.P.; Hug, G.A. The Hearing Handicap Inventory for Adults: Psychometric adequacy and audiometric correlates. Ear Hear. 1990, 11, 430–433.

- Gatehouse, S. Glasgow hearing aid benefit profile: Derivation and validation of a client-centered outcome measure for hearing aid services. J. Am. Acad. Audiol. 1999, 10, 80–103.

- Laboratories, N.A. COSI—Client Oriented Scale of Improvement. 2022. Available online: https://www.nal.gov.au/nal_products/cosi (accessed on 26 March 2025).

- Schum, D.J. Responses of elderly hearing aid users on the hearing aid performance inventory. J. Am. Acad. Audiol. 1992, 3, 308–314.

- Paul, Z. Les Guides d’Informations de l’Association JNA: Échelle des Décibels et Anatomie de l’Oreille; Japanese Nursing Association: Tokyo, Japan, 2018.

- Gatehouse, S.; Noble, W. The Speech, Spatial and Qualities of Hearing Scale (SSQ). Int. J. Audiol. 2004, 43, 85–99.

- AuditionSanté. Vivre Avec Des Appareils Auditifs. 2022. Available online: https://www.auditionsante.fr/experience-avec-appareils-auditifs/ (accessed on 26 March 2025).

- Caswell-Midwinter, B.; Whitmer, W. Discrimination of Gain Increments in Speech. Trends Hear. 2019, 23.

- Jenstad, L.; Bagatto, M.; Seewald, R.; Scollie, S.; Cornelisse, L.; Scicluna, R. Evaluation of the Desired Sensation Level [Input/Output] Algorithm for Adults with Hearing Loss: The Acceptable Range for Amplified Conversational Speech. Ear Hear. 2007, 28, 793–811.

- Dirks, D.; Ahlstrom, J.; Noffsinger, P. Preferred frequency response for two- and three-channel amplification systems. J. Rehabil. Res. Dev. 1993, 30, 305–317.

- Caswell-Midwinter, B.; Whitmer, W.M. The perceptual limitations of troubleshooting hearing-aids based on patients’ descriptions. Int. J. Audiol. 2020, 60, 427–437.

- Fokouo, J.V.F.; Ngounou, G.M.; Nyeki, A.-R.N.; Choffor-Nchinda, E.; Dalil, A.B.; Ngom, E.G.S.M.; Vokwely, J.E.E.; Nti, A.B.A.; Kom, M.; Njock, L.R. Hearing Assessment of Medical Equipment for Health Facilities in Cameroon. New Horizons Med. Med. Res. 2022, 9, 139–150.

- Sadeghi, Z.; Alizadehsani, R.; CIFCI, M.A.; Kausar, S.; Rehman, R.; Mahanta, P.; Bora, P.K.; Almasri, A.; Alkhawaldeh, R.S.; Hussain, S.; et al. A review of Explainable Artificial Intelligence in healthcare. Comput. Electr. Eng. 2024, 118, 109370.

- Tagne, J.F.; Burns, K.; O’Brein, T.; Chapman, W.; Cornell, P.; Huckvale, K.; Ameen, I.; Bishop, J.; Buccheri, A.; Reid, J.; et al. Challenges for Remote Patient Monitoring Programs in Rural and Regional Areas: A Qualitative Study. BMC Health Serv. Res. 2025, 25, 374.

- Chodosh, J.; Blustein, J. Hearing assessment—The challenges and opportunities of self report. J. Am. Geriatr. Soc. 2022, 70, 386–388.

- Sandström, J.; Swanepoel, D.; Laurent, C.; Umefjord, G.; Lundberg, T. Accuracy and Reliability of Smartphone Self-Test Audiometry in Community Clinics in Low Income Settings: A Comparative Study. Ann. Otol. Rhinol. Laryngol. 2020, 129, 578–584.

| N° | Complaint | Solution | Category | Parameter | Command | Recommendation |
|---|---|---|---|---|---|---|
| 1 | not hearing sounds | C | | | | The hearing aid may be in standby mode. Restart (turn off and on) the hearing aid and check the battery level. Ensure that your hearing aid is on. |
| 2 | wind noise in quiet environment | EA | dynamic | MCL | decrease− | Do not worry, this is the intrinsic noise of the hearing aid. |
| 3 | whistling in quiet environment | AP | | | | Check that your hearing aid is properly positioned in the ear. |
| 4 | whistling in noisy environment | AP | | | | Check that your hearing aid is properly positioned. |
| 5 | whistling | EA | dynamic | UCL | decrease− | Lower the volume. |
| 6 | noise in quiet environment | EA | dynamic | MCL | decrease− | Lower the volume. |
| 7 | noise in noisy environment | EA | volume | nb_vol | increase+ | Lower the volume. |
| 8 | hearing but not understanding | T | | | | Follow a therapy. Please make an appointment with an audiologist. |
| 9 | loud sounds | EA | dynamic | UCL | decrease− | Lower the volume. |
| 10 | soft sounds loud in quiet environment | EA | dynamic | MCL | decrease− | Lower the volume. |
| 11 | loud sound in quiet environment | EA | dynamic | UCL | decrease− | Lower the volume. |
| 12 | loud sound in noisy environment | C | | | | Lower the volume, reduce sensitivity. |
| 13 | loud sound | EA | dynamic | MCL | decrease−− | Lower the volume. |
| 14 | loud soft sounds in quiet environment | EA | dynamic | UCL | increase++ | Increase the volume. |
| 15 | loud soft sounds in noisy environment | EA | dynamic | MCL | decrease−− | |
| 16 | loud soft sounds | EA | dynamic | UCL | increase++ | Increase the volume. |
| 17 | soft sounds soft in quiet environment | EA | dynamic | MCL | increase+ | Increase the volume. |
| 19 | soft sounds soft | EA | dynamic | MCL | increase+ | Increase the volume. |
| 20 | soft sound in quiet environment | EA | dynamic | MCL | increase++ | |
| 23 | sound cutting in quiet environment | EA | silence | enerFaibl | decrease−− | |
| 24 | sound cutting in noisy environment | EA | silence | enerFaibl | decrease−−−− | |
| 25 | sound cutting | EA | silence | enerFaibl | decrease−− | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ngounou, G.M.; Chana, A.M.; Batchakui, B.; Nguen, K.A.; Fokouo Fogha, J.V. An Ontology-Based Expert System Approach for Hearing Aid Fitting in a Chaotic Environment. Audiol. Res. 2025, 15, 39. https://doi.org/10.3390/audiolres15020039
