An Adaptive Weight Collaborative Driving Strategy Based on Stackelberg Game Theory

Abstract

1. Introduction

2. Modeling of the Vehicle Dynamics and Human–Machine Steering Controllers

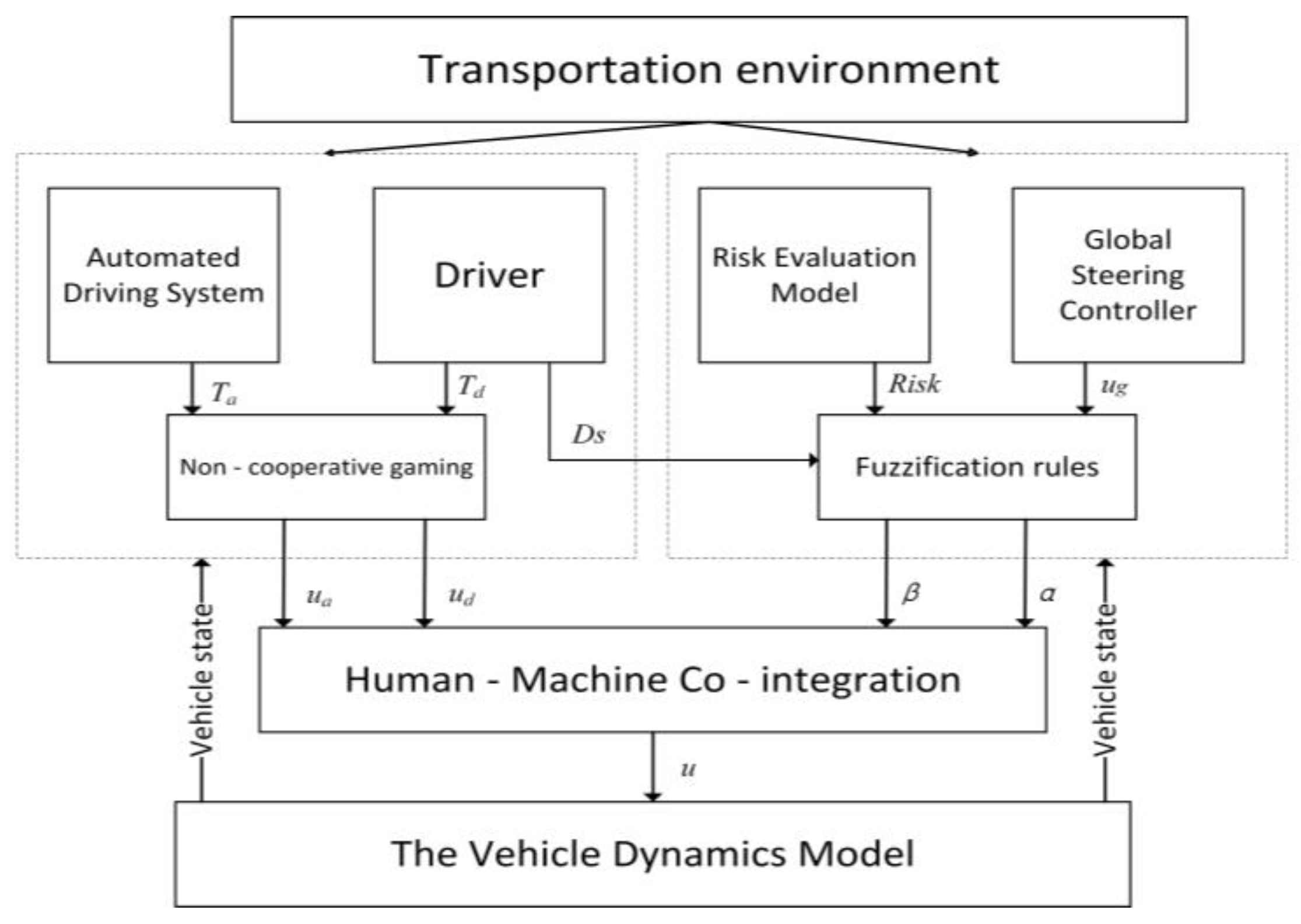

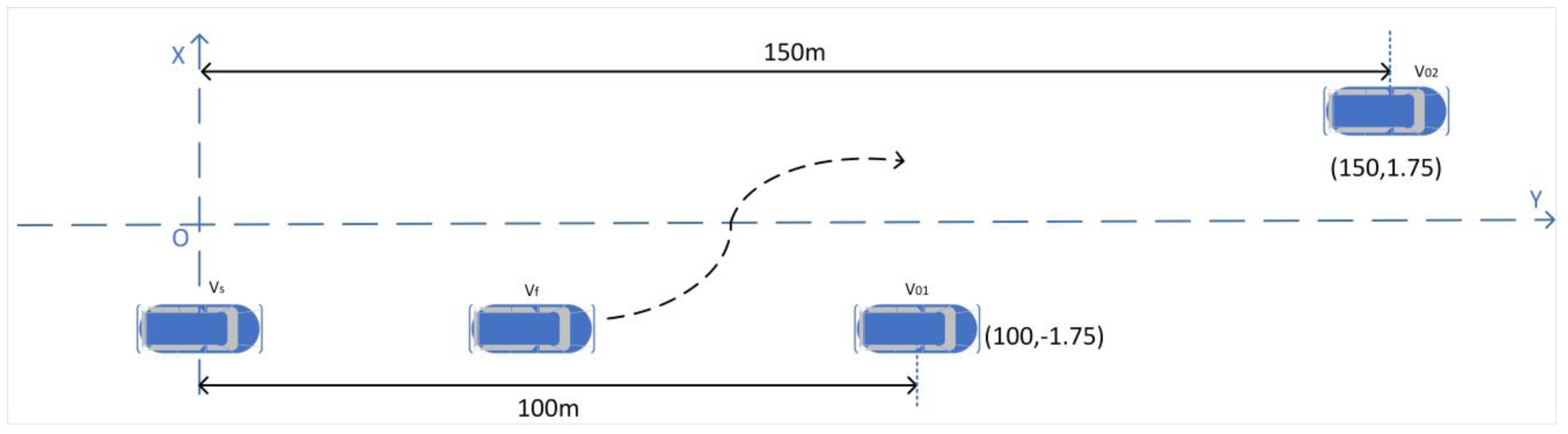

2.1. The Control Architecture

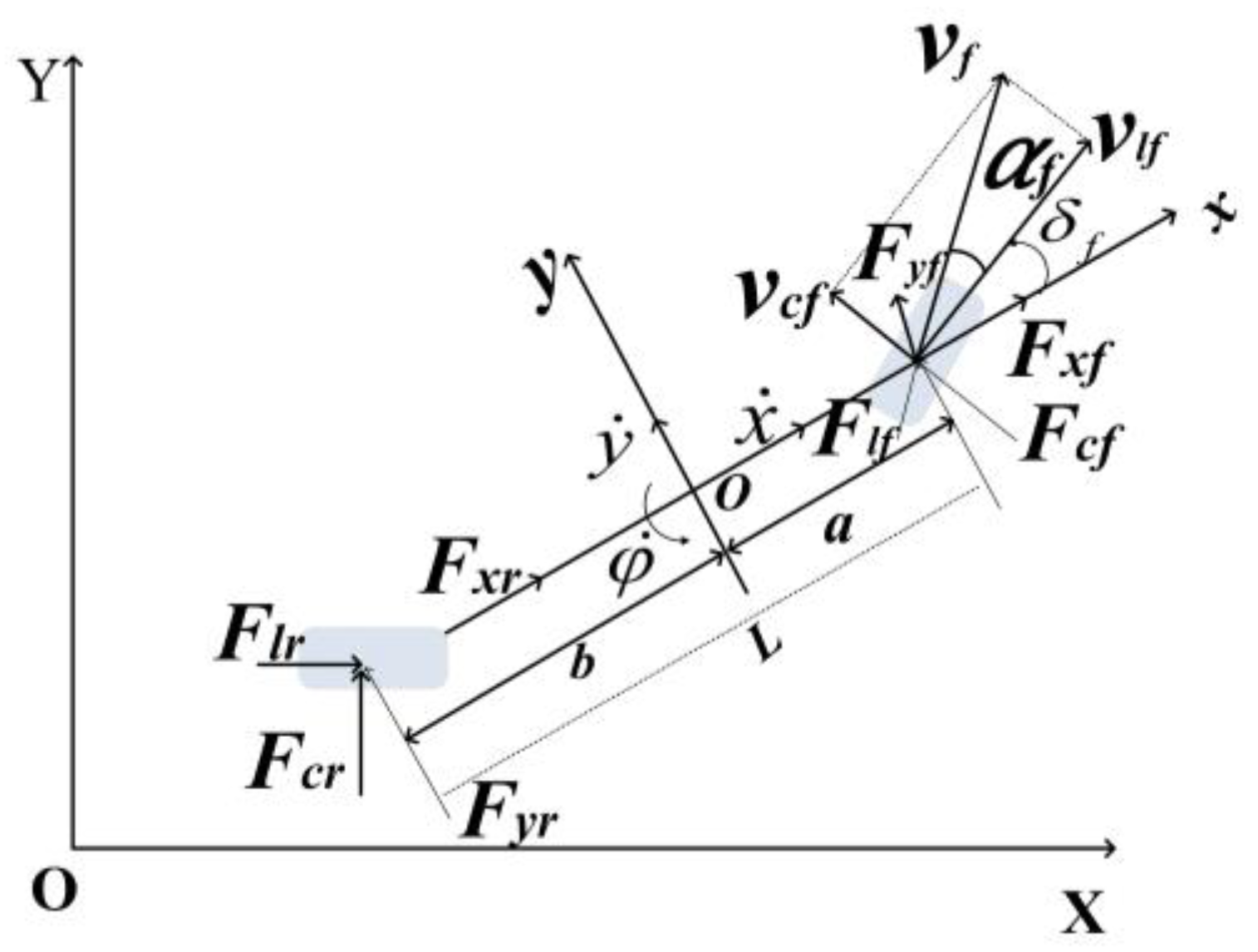

2.2. Modeling of the Vehicle Dynamics

2.3. The Driver Model

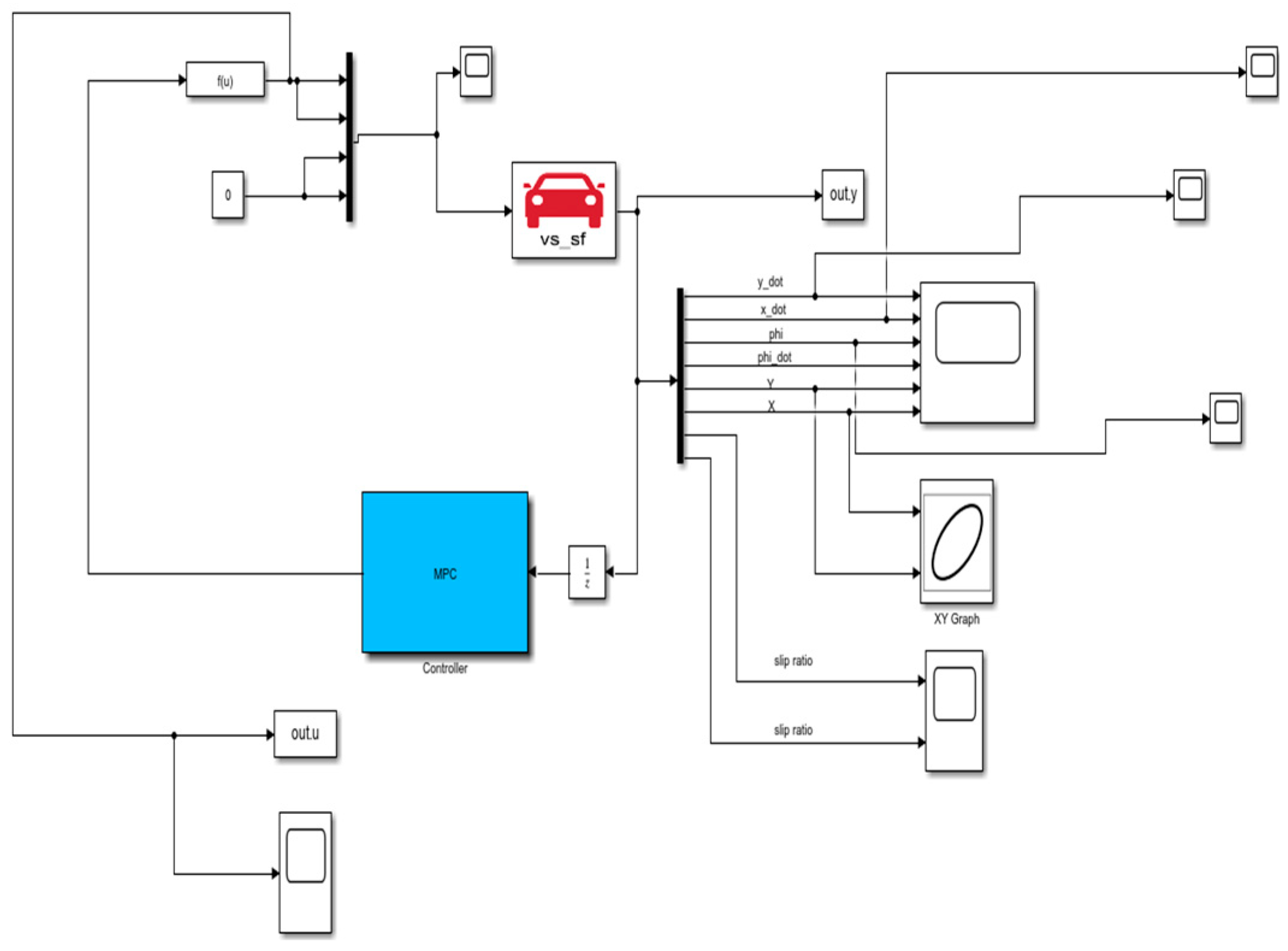

2.4. A Transverse Controller Based on Linear Time-Varying Model Predictive Control

3. The Leader–Follower Game-Based Optimal Control Strategy

3.1. A Solution to the Leader–Follower Game

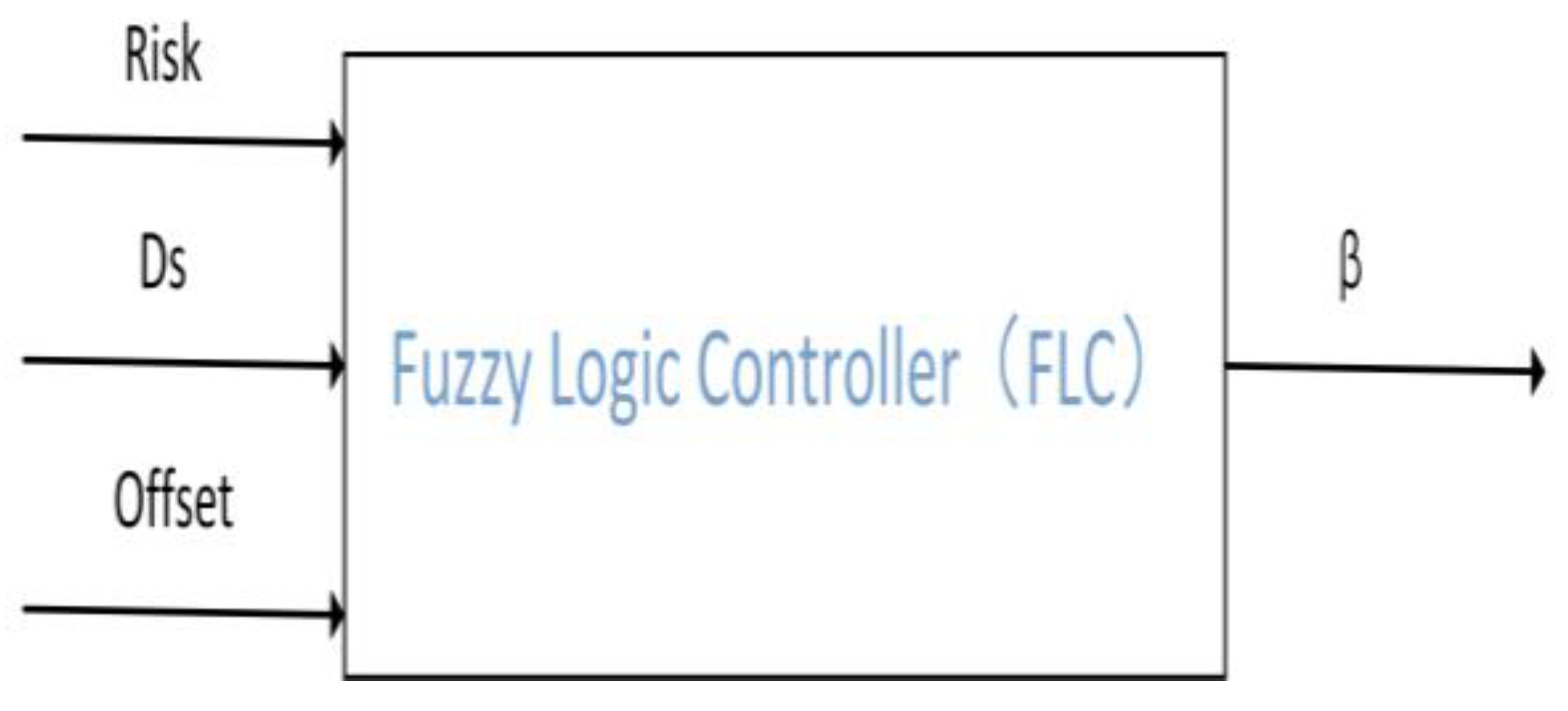

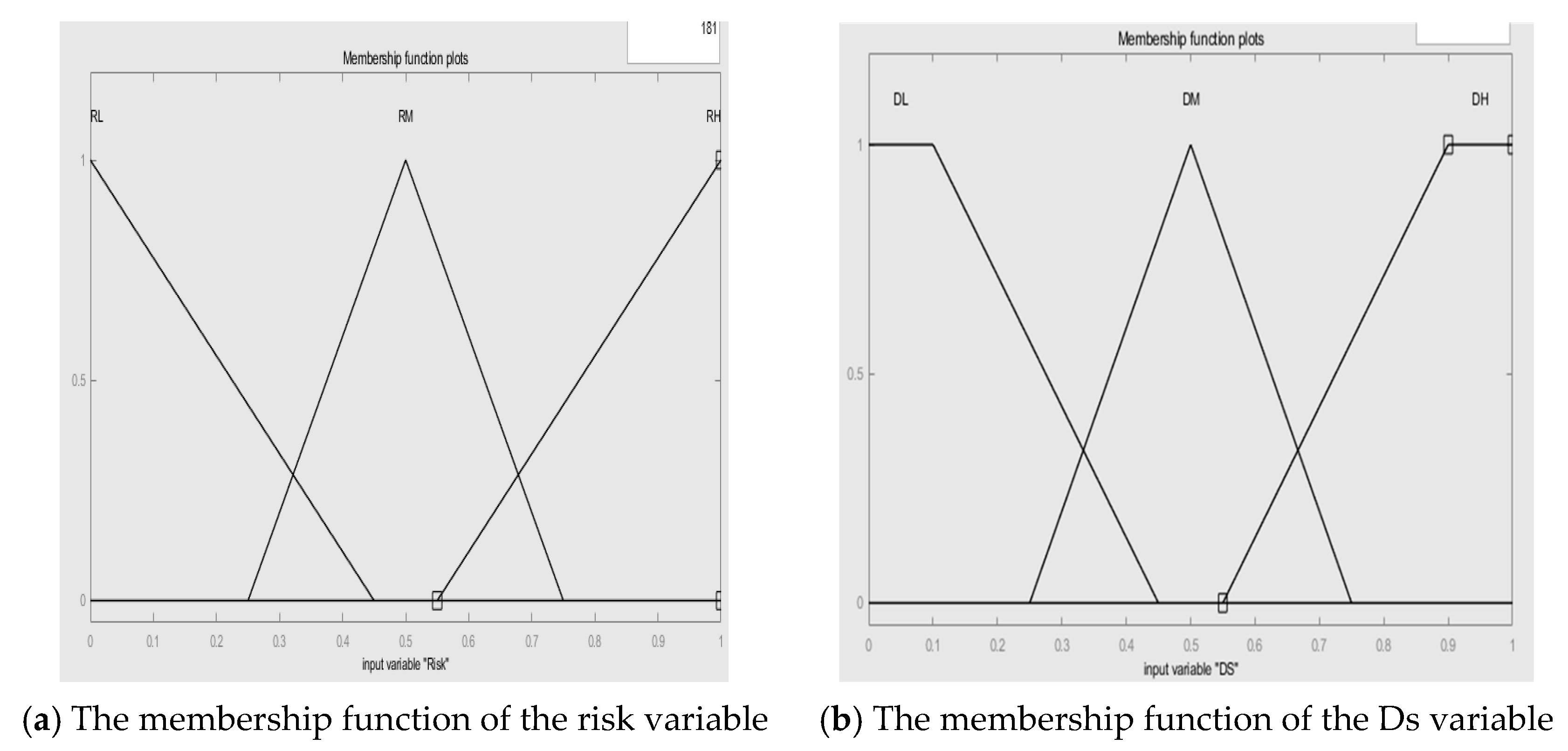

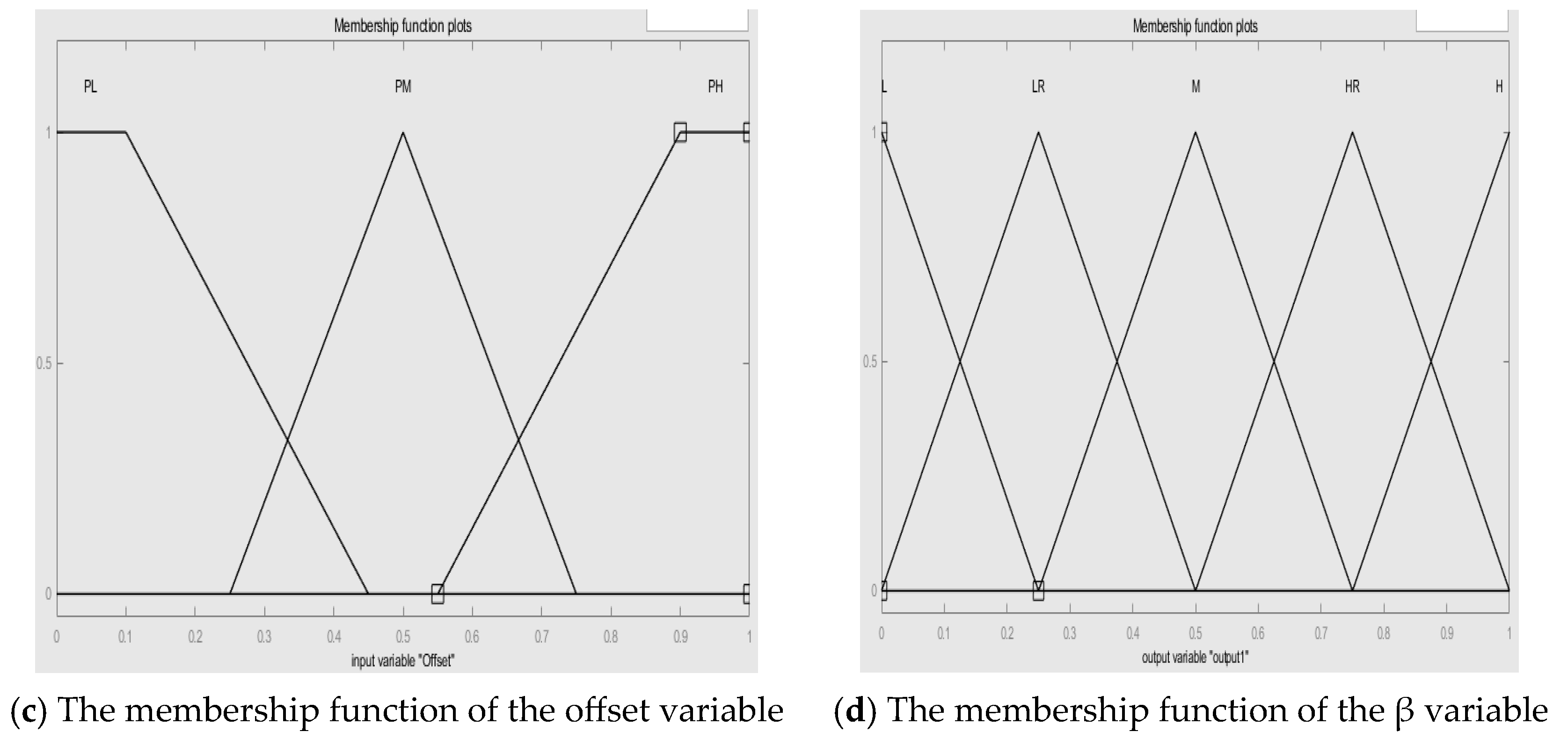

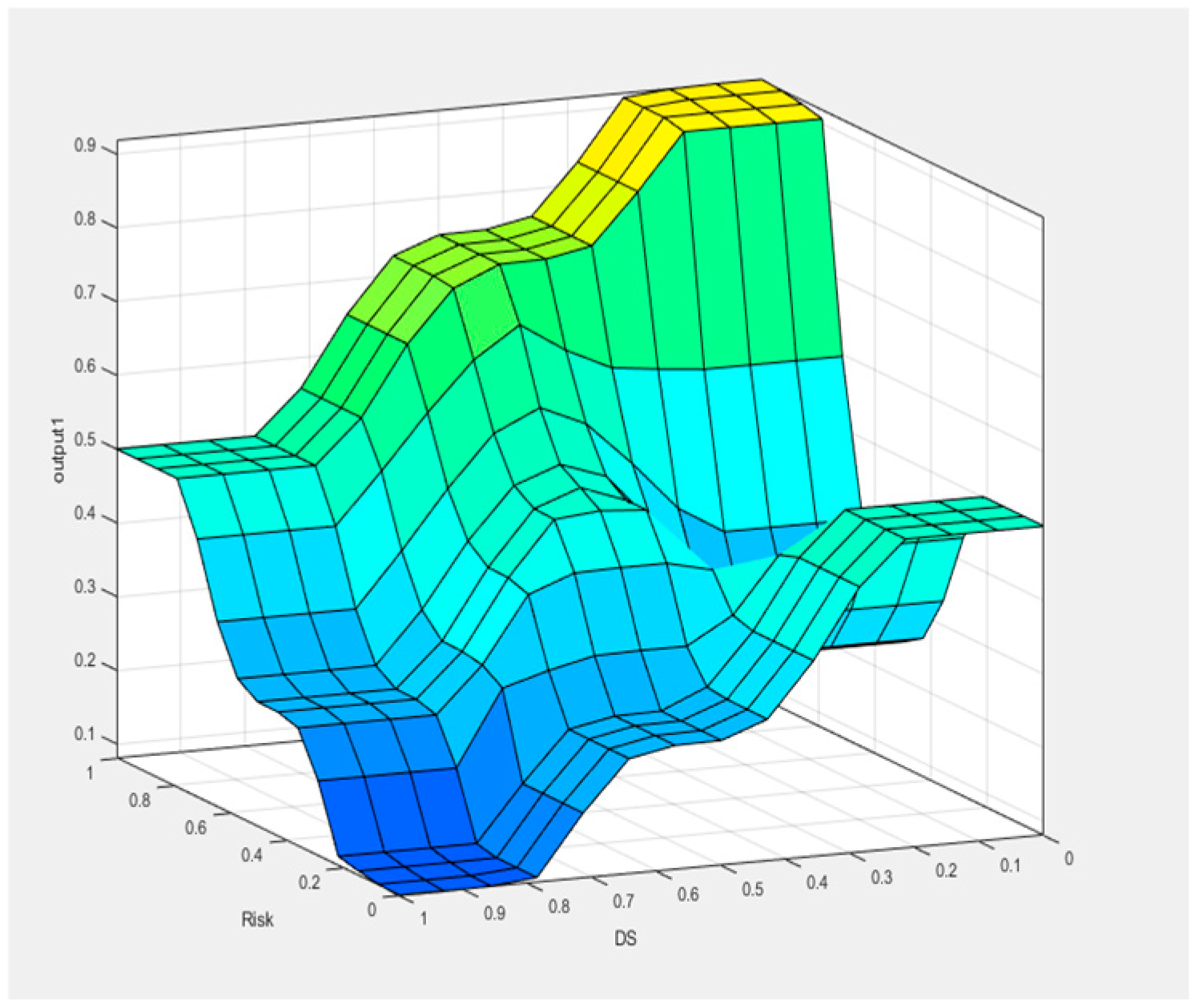

3.2. The Adaptive Weight Fuzzy Decision-Making Model

3.2.1. Assessment of Environmental Risks

3.2.2. The Deviation in Vehicle Control

3.2.3. Dynamic Weight Adjustment of Driving Authority Based on Fuzzy Rules

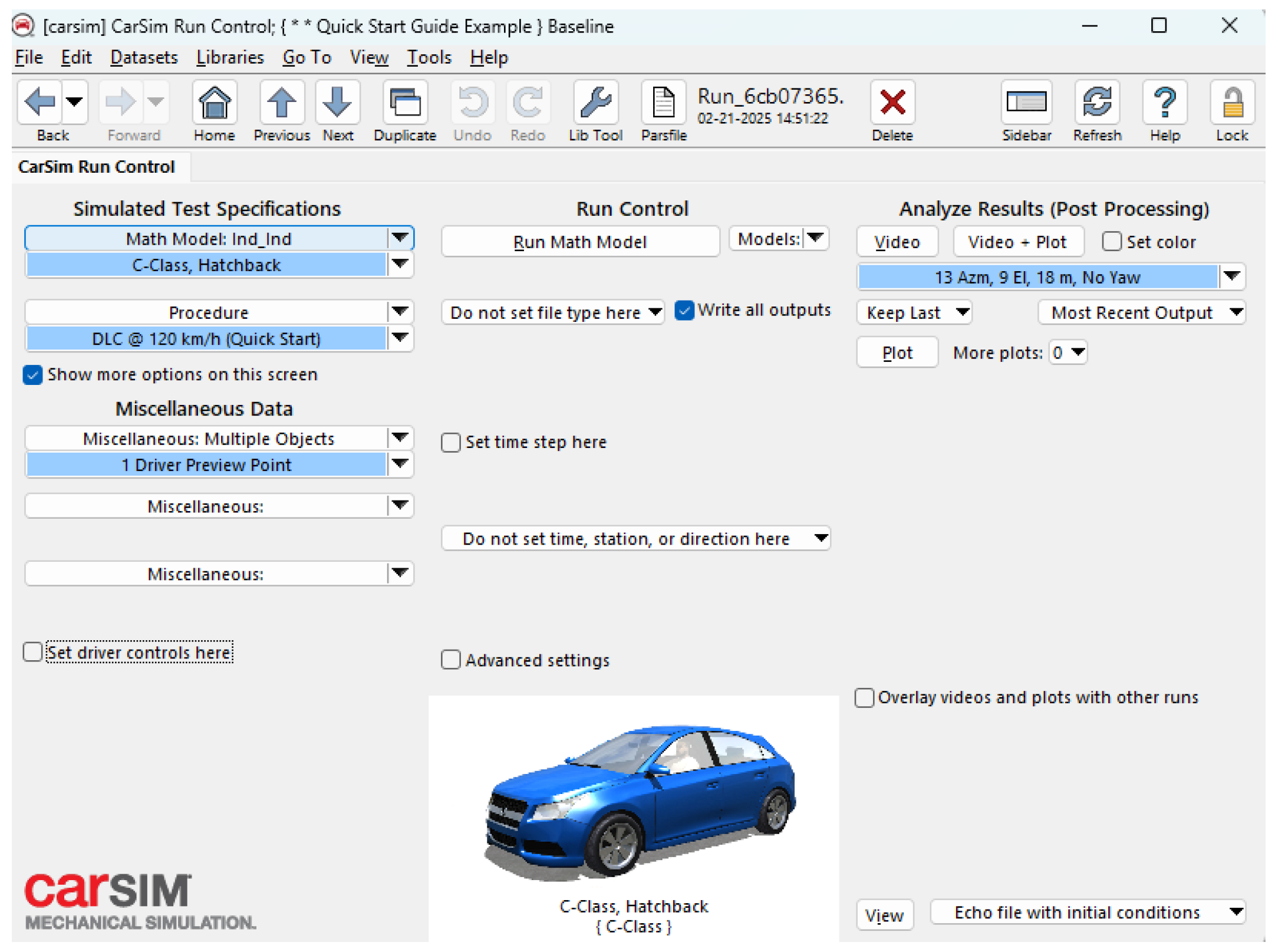

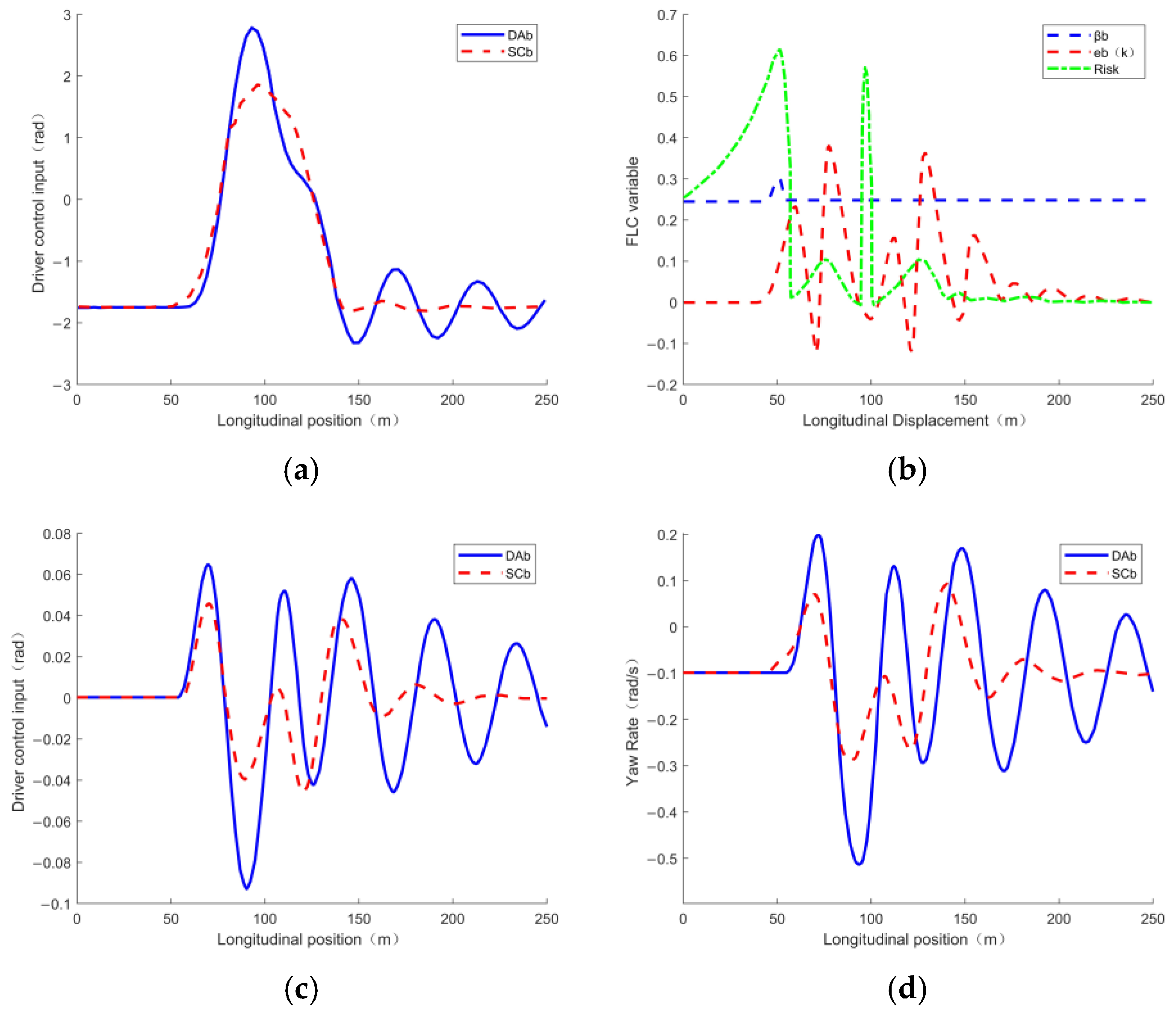

4. Simulation Results and Their Analysis

5. General Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Parameter | Driver A | Driver B |
|---|---|---|
| | 1 | 0.9 |
| | 0.1 | 0.2 |
| | 0.2 | 0.3 |
| | 1 | 0.6 |

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, Z.; Zhao, J.; Zheng, J.; Liu, H. An Adaptive Weight Collaborative Driving Strategy Based on Stackelberg Game Theory. World Electr. Veh. J. 2025, 16, 386. https://doi.org/10.3390/wevj16070386
