Methods for Indirect Treatment Comparison: Results from a Systematic Literature Review

Abstract

1. Introduction

Background and Rationale

2. Methods

2.1. Search Strategy and Selection Criteria

2.2. Data Extraction and Synthesis

3. Results

3.1. Identification of Articles

3.2. Description of the Included Articles

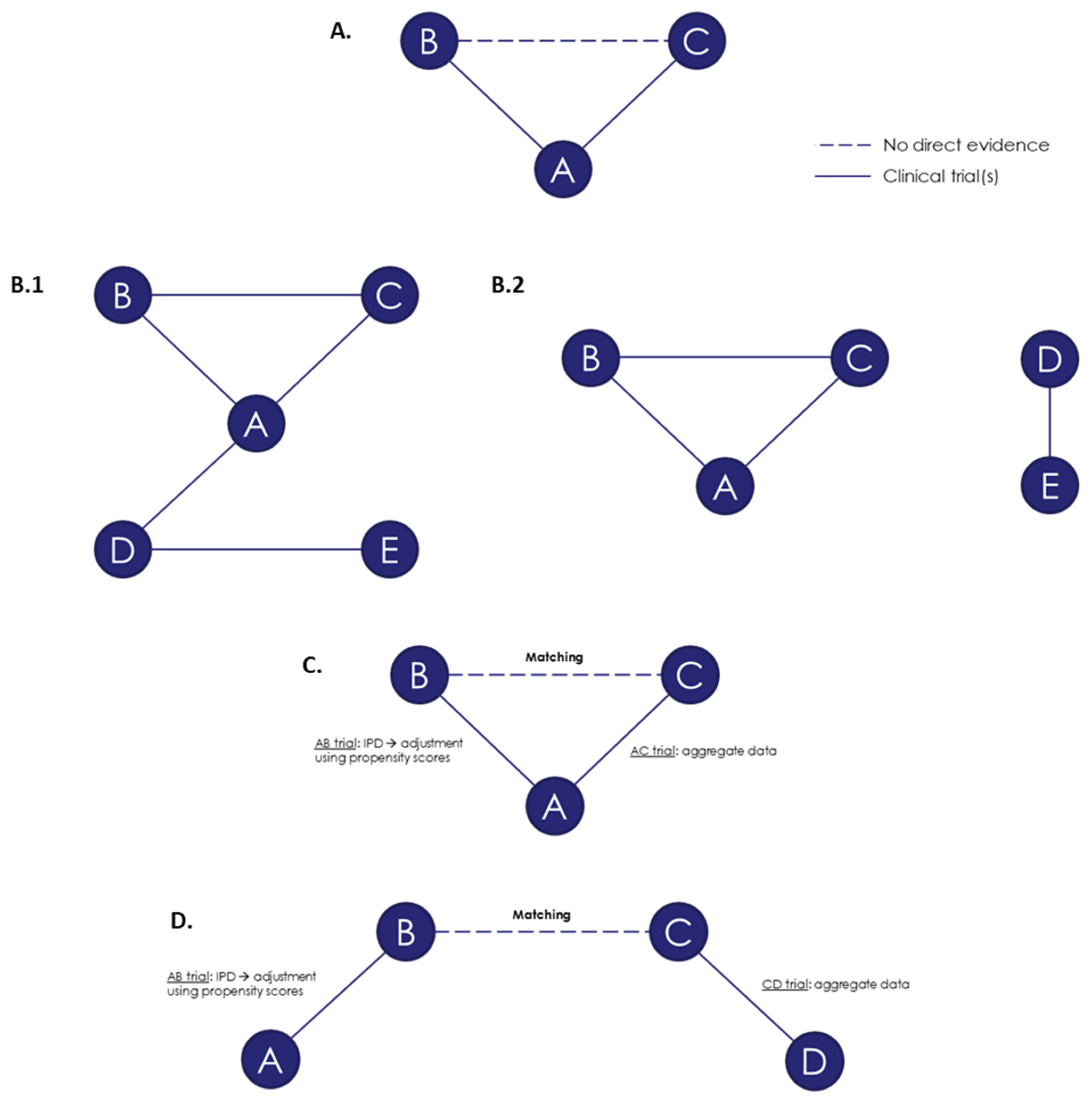

3.3. Summary of the Methods for ITC Techniques

3.3.1. The Bucher Method for Adjusted ITC

3.3.2. NMA

3.4. Population-Adjusted Methods for Indirect Comparisons

3.4.1. MAIC

3.4.2. STC

3.4.3. Comparison of MAIC and STC

3.4.4. NMR

3.4.5. PS-Based Techniques

PSM

PSW

3.5. Additional Statistical Considerations

3.5.1. Fixed-Effects and Random-Effects Approaches

3.5.2. Frequentist Versus Bayesian Approach

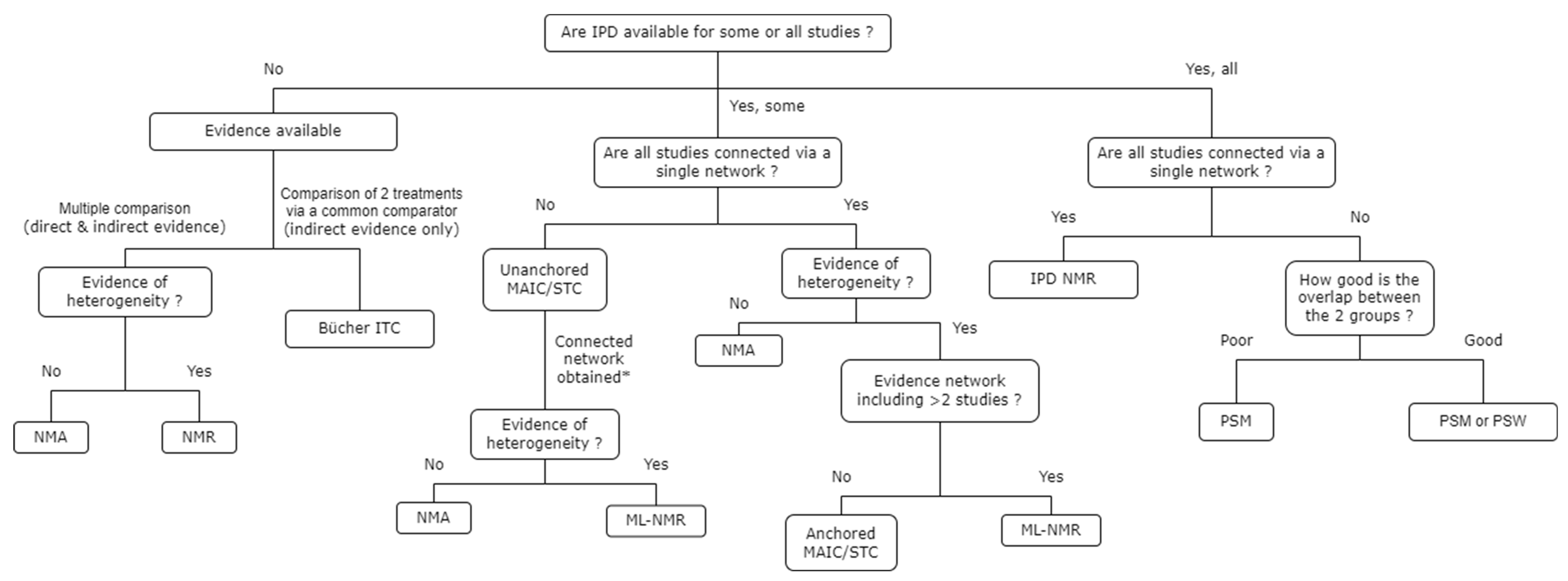

3.6. Choice of ITC Techniques

4. Discussion

Study Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


| Inclusion criteria |

|

| Exclusion criteria |

|

| Assumptions | |
|---|---|
| Homogeneity | No variation in the treatment effect between trials within a pairwise comparison, i.e., for each pairwise comparison, the relative efficacy of each treatment is the same across all trials. This is induced by the similarity of trials (in terms of study design, patient characteristics, treatments and outcomes measured) concerning the relevant treatment effect for each pairwise comparison. |
| Similarity or transitivity | Similarity of all the trials that contribute to an ITC in terms of study design, patient characteristics, treatments, and outcomes measured. This relies on the similarity of trials with regard to TEMs that may impact the relevant treatment effect between pairwise comparisons that contribute to an ITC. |
| Consistency | No variation in the treatment effect between pairwise comparisons, therefore leading to the same treatment effect produced by direct and indirect estimates. Consistency is equal to transitivity across a simple triangular loop. |
| Exchangeability | Combination of similarity, homogeneity and consistency assumptions. |
| Connectivity | Existence of common comparators to connect the network. |
| Constancy of treatment effect | Treatment effects are constant across trial populations: constancy of relative effects (NMA); conditional constancy of relative effects (anchored population-adjusted indirect comparison); conditional constancy of absolute effects (unanchored population-adjusted indirect comparison). |
| Other definitions | |
| Treatment effect modifier (TEM) | Patient or study characteristic that influences the treatment effect on a clinical outcome (impacts the relative treatment effect). |
| Prognostic factor | Patient or study characteristic that influences clinical outcomes, regardless of the intervention and comparator (impacts the absolute treatment effect). |
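As an illustration of the consistency assumption defined above, the following minimal sketch computes an adjusted indirect estimate of treatment A versus treatment B through a common comparator C, in the manner of the Bucher method, and compares it with a direct estimate using a simple z-statistic. All numerical inputs are hypothetical log hazard ratios chosen for illustration, and the function names are assumptions of this sketch rather than part of any published software.

```python
import math


def bucher_indirect(d_ac, se_ac, d_bc, se_bc):
    """Adjusted indirect estimate of A vs B via common comparator C.

    Inputs are relative effects on an additive scale (e.g., log hazard
    ratios) with their standard errors. The two trials are assumed to be
    independent, so the variances add.
    """
    d_ab = d_ac - d_bc
    se_ab = math.sqrt(se_ac ** 2 + se_bc ** 2)
    return d_ab, se_ab


def inconsistency_z(d_direct, se_direct, d_indirect, se_indirect):
    """z-statistic for the difference between direct and indirect estimates."""
    diff = d_direct - d_indirect
    se_diff = math.sqrt(se_direct ** 2 + se_indirect ** 2)
    return diff / se_diff


# Hypothetical inputs (log hazard ratios), for illustration only.
d_ab, se_ab = bucher_indirect(d_ac=-0.50, se_ac=0.15, d_bc=-0.20, se_bc=0.20)
ci_95 = (d_ab - 1.96 * se_ab, d_ab + 1.96 * se_ab)

# Hypothetical direct A vs B estimate, used only to illustrate the check.
z = inconsistency_z(d_direct=-0.25, se_direct=0.18,
                    d_indirect=d_ab, se_indirect=se_ab)

print(f"Indirect log HR A vs B: {d_ab:.2f} (SE {se_ab:.2f})")
print(f"95% CI: ({ci_95[0]:.2f}, {ci_95[1]:.2f})")
print(f"Direct vs indirect z-statistic: {z:.2f}")
```

A z-statistic well inside ±1.96 gives no evidence against consistency in this toy example, although such a check typically has low power when the contributing trials are small.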

| ITC methods | Bucher ITC | NMA | MAIC | STC | NMR | PSM | IPTW |
|---|---|---|---|---|---|---|---|
| Technique type | Standard | Standard | Population-adjusted | Population-adjusted | Population-adjusted | Population-adjusted | Population-adjusted |
| Number of treatments compared | 2 | Unlimited | 2 | 2 | Unlimited | 2 | 2 |
| Need for IPD | No | No | Yes, for at least one trial | Yes, for at least one trial | No for NMR; yes for ML-NMR | Yes, for all trials | Yes, for all trials |
| Possible inclusion of single-arm trials | No | No | Yes | Yes | No | Yes | Yes |
| Requires a connected network | Yes | Yes | No | No | Yes | No | No |
| Allows random- and fixed-effect approaches | NA | Yes | NA | NA | Yes | NA | NA |
| Allows the inclusion of any type of outcomes | Yes | Yes | Yes | Yes | No for TTE | Yes | Yes |
| Assumptions required | | | | | | | |
| Homogeneity | Yes | Yes | Yes | Yes | Yes a | Yes | Yes |
| Similarity | Yes | Yes | No | No | No | No | No |
| Consistency | NA | Yes | No | No | Yes | NA | NA |
| Constancy of TE b | Yes | Yes | Yes | Yes | Yes | No | No |
| Other | Independence between pairwise comparisons | / | No unobserved prognostic factors or TEM | No unobserved prognostic factors or TEM | No unobserved prognostic factors or TEM | No unobserved prognostic factors or TEM | No unobserved prognostic factors or TEM |

| Strengths | / | Unlimited number of trials |

|

|

|

| |

| Limitations |

| Does not adjust for TEM |

| Rarely feasible, as it requires an important number of trials | Biased estimates if unobserved prognostic factors and TEM | ||
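To make the MAIC column of the table more concrete, the sketch below estimates method-of-moments weights for individual patient data (IPD) so that the weighted mean of a single covariate matches the mean reported for an aggregate-data trial. It is a simplified, single-covariate illustration under stated assumptions: the patient ages and the target mean are invented, the function names are hypothetical, and Newton's method is used here only as one convenient way to minimise the convex objective.

```python
import math


def maic_weights(x, target_mean, tol=1e-10, max_iter=100):
    """Method-of-moments MAIC weights for one covariate.

    Minimises Q(a) = sum_i exp(a * (x_i - target_mean)) by Newton's method.
    At the minimum, the weighted mean of x equals target_mean.
    """
    centered = [xi - target_mean for xi in x]
    a = 0.0
    for _ in range(max_iter):
        e = [math.exp(a * c) for c in centered]
        grad = sum(c * ei for c, ei in zip(centered, e))
        hess = sum(c * c * ei for c, ei in zip(centered, e))
        step = grad / hess
        a -= step
        if abs(step) < tol:
            break
    return [math.exp(a * c) for c in centered]


def effective_sample_size(weights):
    """Approximate effective sample size: (sum w)^2 / sum w^2."""
    return sum(weights) ** 2 / sum(w * w for w in weights)


# Hypothetical IPD ages and a hypothetical aggregate mean age to match.
ages = [45, 50, 52, 58, 60, 63, 67, 70]
target = 62.0

w = maic_weights(ages, target)
weighted_mean = sum(wi * xi for wi, xi in zip(w, ages)) / sum(w)
ess = effective_sample_size(w)

print(f"Weighted mean age: {weighted_mean:.3f}")
print(f"Effective sample size: {ess:.2f} of {len(ages)} patients")
```

The effective sample size is always at most the number of patients, and a large drop signals poor overlap between the two trial populations. In practice several covariates would be matched jointly, which this sketch does not attempt.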

| | Frequentist | Bayesian |
|---|---|---|
| Probability | Probability of the data given a hypothesis (likelihood). A 95% CI is constructed so that, if the trial were repeated 100 times, about 95 of the resulting intervals would contain the true parameter value | Conditional probabilities: probability of a hypothesis given the data and the prior distribution of the parameter. A 95% CrI contains the true parameter value with 95% probability, given the data and the prior |
| Uncertainty | Unknown parameters are assumed to be fixed, and data are repeatedly taken from random samples | Unknown parameters are treated probabilistically and estimated based on simulations |
| Prior information | None | Prior distributions are specified for the treatment effect, and possibly for between-study heterogeneity; non-informative priors can be chosen so as not to influence the results (results driven by the data only) |
| Interpretation | Point estimate and dispersion (CI) around it | Ranking, probabilities of being best, second best, etc. |
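The contrast in the table can be illustrated with a small numerical sketch. Assuming a normally distributed effect estimate with a known standard error, and a normal prior (a conjugate model chosen purely for simplicity, not a method described in the review), the code below computes a frequentist 95% CI and a Bayesian 95% CrI. The estimate, standard error, and prior settings are hypothetical.

```python
import math
from statistics import NormalDist


def frequentist_ci(estimate, se, level=0.95):
    """Wald confidence interval based on the normal approximation."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z * se, estimate + z * se


def bayesian_posterior(estimate, se, prior_mean, prior_sd):
    """Posterior for a normal likelihood with a normal prior (conjugate)."""
    prior_precision = 1 / prior_sd ** 2
    data_precision = 1 / se ** 2
    post_var = 1 / (prior_precision + data_precision)
    post_mean = post_var * (prior_precision * prior_mean
                            + data_precision * estimate)
    return NormalDist(post_mean, math.sqrt(post_var))


# Hypothetical log hazard ratio and standard error.
estimate, se = -0.30, 0.25

ci = frequentist_ci(estimate, se)

# Vague (near non-informative) prior: results are driven by the data.
posterior = bayesian_posterior(estimate, se, prior_mean=0.0, prior_sd=100.0)
cri = (posterior.inv_cdf(0.025), posterior.inv_cdf(0.975))

# Direct probability statement, available only in the Bayesian framework.
prob_benefit = posterior.cdf(0.0)  # P(log HR < 0 | data, prior)

print(f"95% CI : ({ci[0]:.3f}, {ci[1]:.3f})")
print(f"95% CrI: ({cri[0]:.3f}, {cri[1]:.3f})")
print(f"Posterior probability of benefit: {prob_benefit:.3f}")
```

With a vague prior the two intervals are numerically almost identical, but only the Bayesian result supports a direct probability statement about the parameter, which is what underlies rankings and probabilities of being best.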


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Macabeo, B.; Quenéchdu, A.; Aballéa, S.; François, C.; Boyer, L.; Laramée, P. Methods for Indirect Treatment Comparison: Results from a Systematic Literature Review. J. Mark. Access Health Policy 2024, 12, 58-80. https://doi.org/10.3390/jmahp12020006