3. Double Boomerang Watermarking

In this Section, the principles of the tracing watermarking procedure and the metrics employed for the QoS assessment are introduced. Although copyright protection was the very first application of watermarking, different uses have recently been proposed in the literature. Fingerprinting, broadcast monitoring, data authentication, multimedia indexing and content-based retrieval [17]-[19] are only a few of the new applications where watermarking can be usefully employed. When these techniques are used to preserve copyright ownership and to prevent unauthorized data duplication, the embedded watermark should be detectable. Usually, these techniques use robust watermarking, which means that the embedded watermark is supposed to be detectable (i.e. the watermark used for copyright protection should not allow the use of the watermarked content until it has been correctly extracted). Indeed, there are a number of desirable characteristics that a watermark should exhibit [20]. At the least, an image watermark should comply with the following two basic requirements: first, the digital watermark should not be noticeable to the viewer (i.e. transparency of the digital watermark); second, the digital watermark should still be present in the image after distortion and be detectable by the watermark detector (i.e. robustness of the digital watermark to image processing). The key to the watermarking technique is a compromise between the two aforementioned requirements. Recently, an unconventional use of fragile watermarking has been proposed to blindly estimate the quality of service of a communication link [5], and for QoS provisioning and control purposes [2]. The rationale behind the approach is that the alterations suffered by the watermark are likely to be suffered by the data, since they follow the same communication link. Therefore, the watermark degradation can be used to evaluate the alterations endured by the data, obtaining a QoS index of the communication link (as explained in principle in Fig. 1).

Figure 1. Idea of tracing watermarking: the watermark degradations can be used to trace the alterations endured by the data.


At the receiving side, the watermark is extracted and compared with its original counterpart. Spatial spread-spectrum techniques perform the watermark embedding. In practice, the watermark (a narrow-band, low-energy signal) is spread over the image (a larger-bandwidth signal) so that the watermark energy contribution in each host frequency bin is negligible, which makes the watermark nearly imperceptible. Following the same methodological approach of [5] and referring to the block scheme of Fig. 2, each of a set of uncorrelated pseudo-random noise (PN) matrices (one per frame and known to the receiver) is multiplied by the reference watermark (one for the whole transmission session and known to the receiver). The product of the original watermark and the i-th PN matrix is the spread version of the watermark to be embedded in the i-th frame. The embedding is performed in the DCT (discrete cosine transform) domain, where Fi[k1, k2] = DCT{fi[k1, k2]} is the DCT of the i-th frame, Φ is the region of middle-high frequencies of the image in the DCT domain, β determines the watermark strength and Fi(w)[k1, k2] is the DCT of the i-th watermarked frame. By increasing the value of β, the mark becomes more evident and a visual degradation of the image (or video) occurs. Conversely, by decreasing its value, the mark can be easily removed by the coder and/or by channel errors. Therefore, in the application scenario of our simulation trials, the scaling factor β has been chosen as a compromise between the two aforementioned requirements. The i-th watermarked frame is then obtained by performing the IDCT (inverse DCT) of Fi(w)[k1, k2]. Finally, the whole sequence is MPEG-coded and then transmitted through a noisy channel.
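The spreading and embedding steps described above can be illustrated with a minimal Python sketch. It rests on several assumptions that are not stated in the text: the mark is added to the DCT coefficients (an additive rule, as is common in spread-spectrum watermarking), the watermark and the PN matrices take values in {-1, +1}, and Φ is a rectangular block whose top-left corner is given by a hypothetical `origin` parameter. All function names are illustrative.

```python
import math
import random

def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that C applied to x gives the 1-D DCT of x."""
    rows = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos(math.pi * (2 * j + 1) * k / (2 * n))
                     for j in range(n)])
    return rows

def matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(col) for col in zip(*a)]

def dct2(frame):
    """2-D DCT of a frame: C_rows * frame * C_cols^T."""
    cr, cc = dct_matrix(len(frame)), dct_matrix(len(frame[0]))
    return matmul(matmul(cr, frame), transpose(cc))

def idct2(coeffs):
    """Inverse 2-D DCT: C_rows^T * coeffs * C_cols."""
    cr, cc = dct_matrix(len(coeffs)), dct_matrix(len(coeffs[0]))
    return matmul(matmul(transpose(cr), coeffs), cc)

def pn_matrix(rows, cols, seed):
    """Pseudo-random +/-1 matrix; the seed plays the role of the per-frame key."""
    rng = random.Random(seed)
    return [[rng.choice((-1, 1)) for _ in range(cols)] for _ in range(rows)]

def spread(watermark, pn):
    """Element-wise product of the reference watermark and one PN matrix."""
    return [[w * p for w, p in zip(w_row, p_row)]
            for w_row, p_row in zip(watermark, pn)]

def embed(frame, watermark, seed, beta, origin):
    """Add beta * spread watermark to the DCT coefficients inside the block
    starting at `origin` (assumed shape of the region), then go back to pixels."""
    coeffs = dct2(frame)
    ws = spread(watermark, pn_matrix(len(watermark), len(watermark[0]), seed))
    r0, c0 = origin
    for i, row in enumerate(ws):
        for j, value in enumerate(row):
            coeffs[r0 + i][c0 + j] += beta * value
    return idct2(coeffs)
```

A larger `beta` makes the added term dominate the host coefficients (more visible, more robust), while a smaller one leaves the frame almost untouched, which mirrors the trade-off discussed for β.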

The receiver implements video decoding as well as watermark detection. In fact, once the video stream has been decoded, a matched filter extracts the (known) watermark from the DCT of each n-th received I-frame of the sequence. The estimated watermark is despread with the known PN matrix and matched to the reference one. The matched filter is tuned to the particular embedding procedure, so that it can be matched to the randomly spread watermark only. It is assumed that the receiver knows the initial spatial application point of the mark in the DCT domain. The QoS is evaluated by comparing the extracted watermark with the original one. In particular, its mean-square error (MSE) is evaluated as an index of the effective degradation of the provided QoS. A possible index of the degradation, referred to below as metric (2), is simply obtained by calculating the mean of the error energy between the original and the extracted watermark over the received frames, where n = 1, ..., M is the current frame index.
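As a rough illustration of this index, the following Python sketch averages the error energy between the reference watermark and the watermark extracted from each of the M frames. The per-pixel normalization and the function names are assumptions made for the example; the text only states that the mean of the error energy is taken.

```python
def frame_error_energy(original, extracted):
    """Mean squared difference between two equally sized watermark matrices."""
    pixels = len(original) * len(original[0])
    total = sum((o - e) ** 2
                for o_row, e_row in zip(original, extracted)
                for o, e in zip(o_row, e_row))
    return total / pixels

def watermark_mse(original, extracted_per_frame):
    """Average the per-frame error energy over the M received frames."""
    m = len(extracted_per_frame)
    return sum(frame_error_energy(original, w) for w in extracted_per_frame) / m
```

For a watermark with values in {-1, +1}, a single flipped pixel in a 2-by-2 mark contributes an error energy of 4/4 = 1 for that frame, and an untouched frame contributes 0.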

It is worth noting that the metric (2), which is evaluated using the estimated watermarks over the M transmitted frames, is employed to provide a quality assessment of the received video after the MPEG coding/transmission process. In particular, such a QoS index can be usefully employed for a number of different purposes in mobile multimedia communications, such as: control feedback to the sending user on the effective quality of the link; detailed information to the operator for billing purposes; and diagnostic information to the operator about the communication link status.

Although sophisticated perceptual video quality metrics could be used for quality assessment purposes in multimedia communications (see, for instance, [21]), the MSE between the estimated watermark and the original one is used in this contribution as a proof of concept of the objective cooperative method proposed here, and shown by extensive simulation trials in Section 4.

Figure 2. Principle scheme of the double boomerang approach for QoS purposes.


4. Simulation Results and Discussion

In this Section, some experimental results characterizing the effectiveness of the proposed method are presented. The dimensions of the video sequences employed in our experiments have been chosen in order to simulate a multimedia service in a UMTS scenario, i.e. typical 3G video calls. Therefore, QCIF (144x176) video sequences, which match well the limited dimensions of a mobile terminal’s display, have been employed and a frame rate of 15 fps has been chosen. The marked video is then transmitted over noisy channels, simulated by Poisson generators of random transmission errors. Specifically, wireless channels characterized by different levels of bit error rate (BER) have been designed, considering customers near to and far from the base station (BS). We take into account the near-far effect typical of cellular systems: when the user is near the BS, the uplink has been considered worse than the downlink in terms of BER, while, when the user is far from the BS, the uplink has been considered better than the downlink (i.e. the mobile transmits with much more power to compensate for the distance from the BS). For users close to the BS, we have imposed an uplink BER of 10⁻⁴ and a downlink BER of 10⁻⁵, while for users far from the BS, the uplink BER has been set to 10⁻⁴ and the downlink BER to 10⁻³. Our simulation trials are intended to test the effectiveness of the double boomerang approach, which can be used for error control purposes in, e.g., Hybrid ARQ techniques in UMTS applications. Four situations are simulated:

– two mobile agents far from the BS (scenario 1);

– two mobile agents near the BS (scenario 2);

– the first mobile agent is near the BS while the second is far from it (scenario 3);

– the first mobile agent is far from the BS while the second is near it (scenario 4).
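The channel settings listed above can be collected in a small Python sketch. Note the assumptions: independent per-bit flips stand in for the Poisson error generator of the text, each link is treated as a binary symmetric channel, and a one-way path is modeled as the sender's uplink followed by the receiver's downlink. The names `LINK_BER`, `SCENARIOS`, `cascade`, `path_ber` and `flip_bits` are illustrative only.

```python
import random

# (uplink BER, downlink BER) by position relative to the BS, as given in the text
LINK_BER = {"near": (1e-4, 1e-5), "far": (1e-4, 1e-3)}

# Position of the (first, second) mobile agent in each simulated scenario
SCENARIOS = {1: ("far", "far"), 2: ("near", "near"),
             3: ("near", "far"), 4: ("far", "near")}

def cascade(p1, p2):
    """BER of two independent binary symmetric links in series:
    a bit arrives wrong only if exactly one of the two links flips it."""
    return p1 * (1 - p2) + p2 * (1 - p1)

def path_ber(sender, receiver):
    """Sender's uplink to the BS followed by the receiver's downlink (assumed path)."""
    return cascade(LINK_BER[sender][0], LINK_BER[receiver][1])

def flip_bits(bits, ber, rng):
    """Flip each bit independently with probability `ber`."""
    return [b ^ (rng.random() < ber) for b in bits]

if __name__ == "__main__":
    for number, (first, second) in SCENARIOS.items():
        print(number, path_ber(first, second), path_ber(second, first))
```

Running the module prints, for each scenario, the resulting error rate of the two one-way paths under these assumptions, which makes the asymmetry of scenarios 3 and 4 easy to see.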

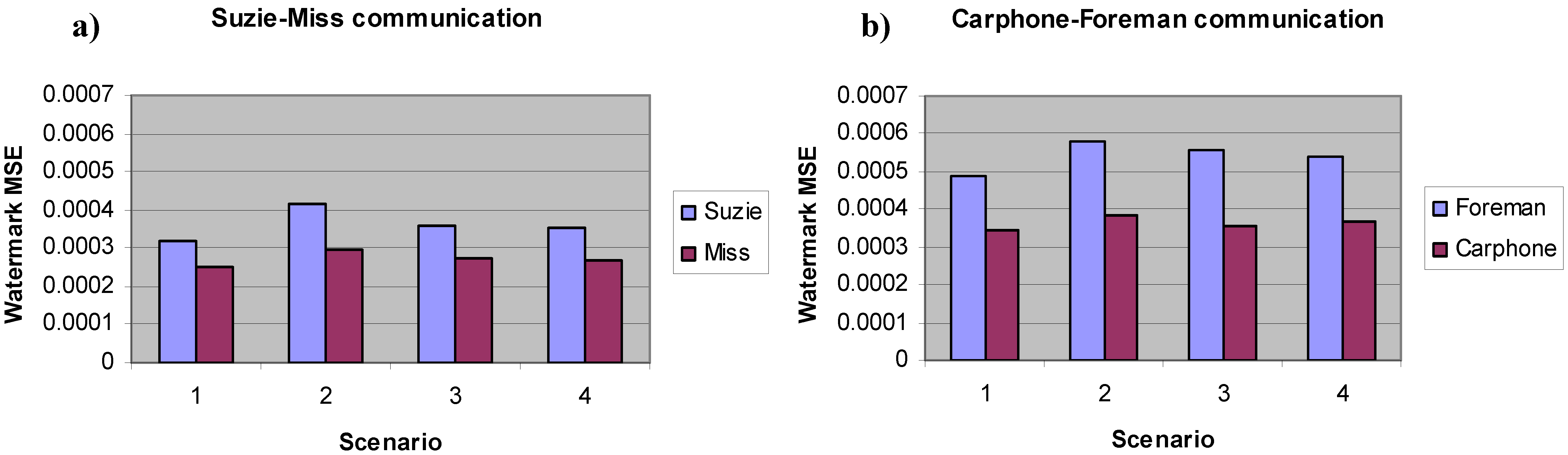

Figure 3. Watermark MSE versus the different scenarios in the case of the video-communication between: (a) Suzie and Miss America; (b) Carphone and Foreman.


In all the following simulations, we have used the common test video sequences Carphone, Foreman, Miss America and Suzie, all MPEG-4 coded. In particular, we have supposed two cases: a video call between the users Carphone and Foreman and another video call between Miss America and Suzie. We have first verified that with this double boomerang approach it is still possible to trace the alterations endured by the data in the communication link. Then, we have evaluated an empirical relationship (obtained with the videos under investigation) between the MSE of the watermark and that of the multimedia content transmitted through the network, as a proof of concept of the objective QoS assessment method proposed here. Hence, we have used four different video sequences and, in particular, each MSE value of our simulation trials is obtained after 106 Monte Carlo runs. Moreover, we have evaluated the confidence level of our simulations, verifying that the confidence interval varies from ±3% up to ±10% depending on the operating scenario under investigation. In particular, we have verified that the confidence interval varies (as expected) with the BER of the channel and hence is strictly connected to the link quality of the considered scenario: in fact, the confidence level becomes relevant when considering the worst channel conditions (i.e. scenarios 3 and 4), and it is in such conditions that the minimum MSE of the watermark may not correspond to the minimum MSE of the video (as will be shown in the following graphs).
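A relative confidence interval of the kind quoted above (±3% to ±10%) could be estimated from the MSE values of the individual Monte Carlo runs along the following lines. This is only a sketch: the normal approximation, the 95% level (z = 1.96) and the function name are assumptions, since the text does not specify how the interval was computed.

```python
import math
import statistics

def relative_ci_half_width(samples, z=1.96):
    """Half-width of the confidence interval of the mean, as a fraction of the mean
    (normal approximation; z = 1.96 corresponds to a 95% level)."""
    mean = statistics.fmean(samples)
    standard_error = statistics.stdev(samples) / math.sqrt(len(samples))
    return z * standard_error / mean
```

A returned value of 0.03 would correspond to an interval of ±3% around the mean MSE.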

Figure 3(a) shows the watermark MSE of the video communication between Miss America and Suzie, while Figure 3(b) depicts the watermark MSE of the Carphone-Foreman video communication. The MSE metric is evaluated using (2), comparing the received watermark (embedded using the double boomerang approach explained in Section 3) with its original counterpart, i.e. the original frame suitably adapted (cropped and binarized). It has to be noted that the MSE behavior is a direct consequence of the QoS of the network. In fact, the communication link is supposed to have the best quality in the first case (scenario 1), the worst quality in the second case (scenario 2) and intermediate quality otherwise (scenarios 3 and 4). In other words, the degradations suffered by the watermark during the communication track the QoS (i.e. the BER) of the link: it can be easily seen from Figure 3 that the watermark is highly corrupted in the second scenario (corresponding to the worst BER), while it is slightly damaged in the first scenario (corresponding to the best BER). In the third and fourth scenarios, the MSE is at an intermediate level because the BER of the channel has an intermediate value between those of the first and second scenarios.

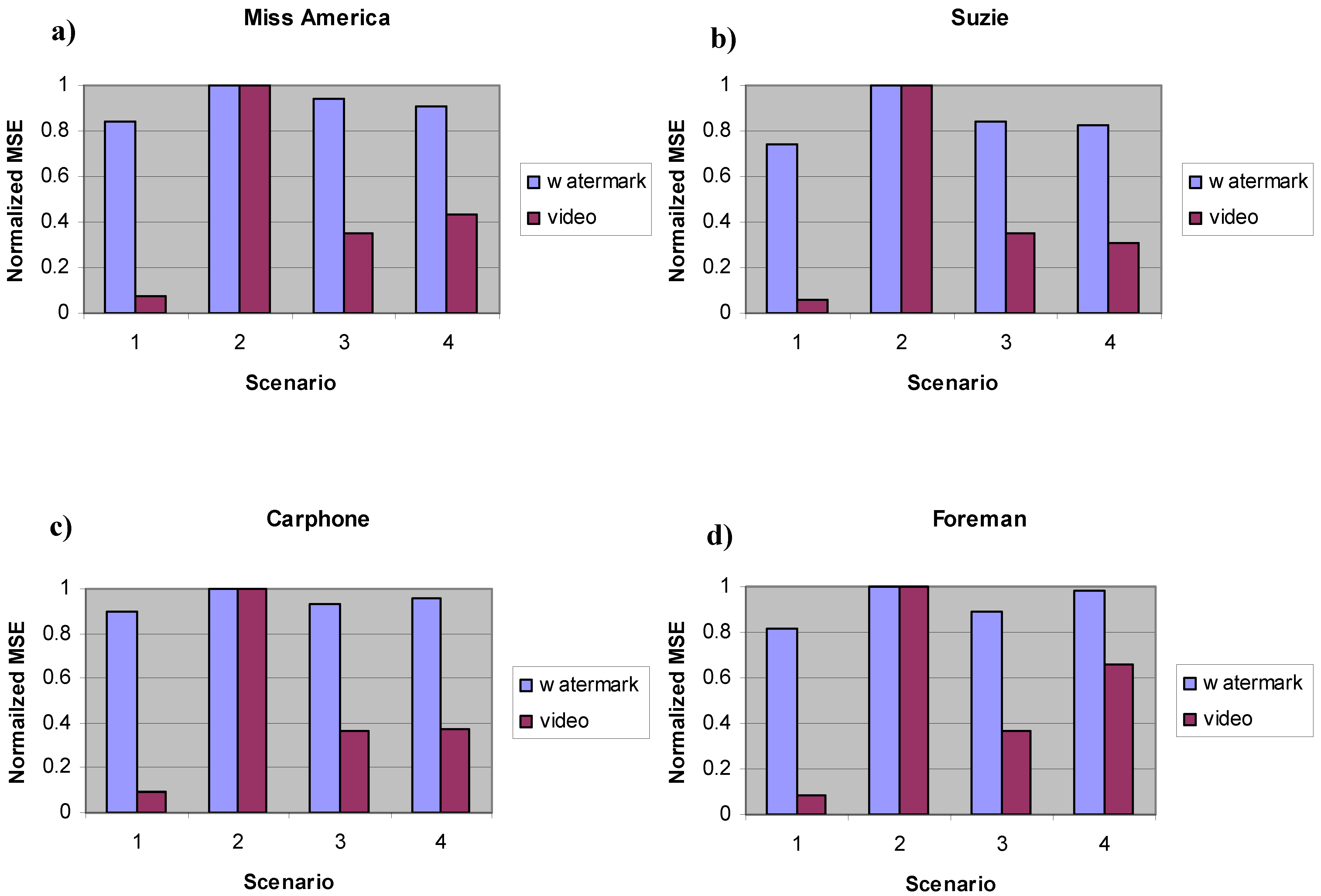

Figure 4. MSE (normalized to 1) versus the different scenarios of the double boomerang watermark in the case of: (a) Miss America (b) Suzie (c) Carphone (d) Foreman video-sequences.


In order to characterize the ability of the proposed method to provide a quality measure of the transmission of the multimedia content through the network, Figure 4 shows the MSE of both the received video and the watermark for the four operating scenarios (i.e. for different BERs of the transmission channel). As before, in our simulation trials the communication link (i.e. the channel BER) is supposed to have the best quality in scenario 1, the worst quality in scenario 2 and intermediate quality in scenarios 3 and 4. Once again, it can be easily seen from the figure that the watermark is highly corrupted in the second scenario (corresponding to the worst BER), while it is slightly damaged in the first scenario (corresponding to the best BER). In the third and fourth scenarios, the MSE is at an intermediate level because the BER of the channel has an intermediate value between those of the first and second scenarios. It is not surprising that there are cases, shown in Fig. 4, where the minimum mean square error of the watermark does not closely follow the minimum mean square error of the video trace per se. This is a consequence of the particular scenario (i.e. the BER of the channel) under investigation. In fact, this situation happens only in scenarios 3 and 4, which are characterized by an intermediate quality level between the other scenarios: since the transmission errors introduced by the channel are modeled as a random Poisson process, characterized by a parametric probability of symbol error (i.e. proportional to the BER), operating in scenarios 3 and 4 means having two different quality levels for the downlink and uplink channels. Moreover, it has to be underlined that these two scenarios are characterized by a confidence level that is greater than that of the other two scenarios. The consequence is that the watermark degradations closely follow those of the video (constrained between the higher and lower performance bounds), but the minimum of the video MSE may no longer correspond to the minimum of the watermark MSE, since the quality of the uplink and downlink channels can be different. Conversely, the first and second scenarios represent the (upper and lower) bounds of the system performance, characterized by the same channel quality for both the uplink and downlink paths. As said before, these two scenarios are characterized by a confidence level that is lower than that of the other two scenarios. The consequence is that the watermark degradations closely follow those of the video, since they are transmitted over the same (uplink and downlink) noisy channels, and hence the minimum mean square error of the watermark corresponds to the minimum mean square error of the video trace per se.

To fully understand the relation between the multimedia content and the watermark, we compare the MSE of the watermark with the MSE of the video after the double boomerang trajectory. It has to be remembered that the video-sequence of every active user travels on the communication link one time as video (transparent mode), one time as watermark (hidden mode). Hence, the watermark suffers twice the degradations of the communication link, due to the boomerang path. It is worth noting that the MSE of the video-sequences

increases when the BER

increases: this is in accordance with the degradation that the videos suffer at increasing BER. In addition, the quality degradation of the extracted watermark embedded into the host video has the same behaviour of the one affecting the video itself, as shown in terms of MSE in

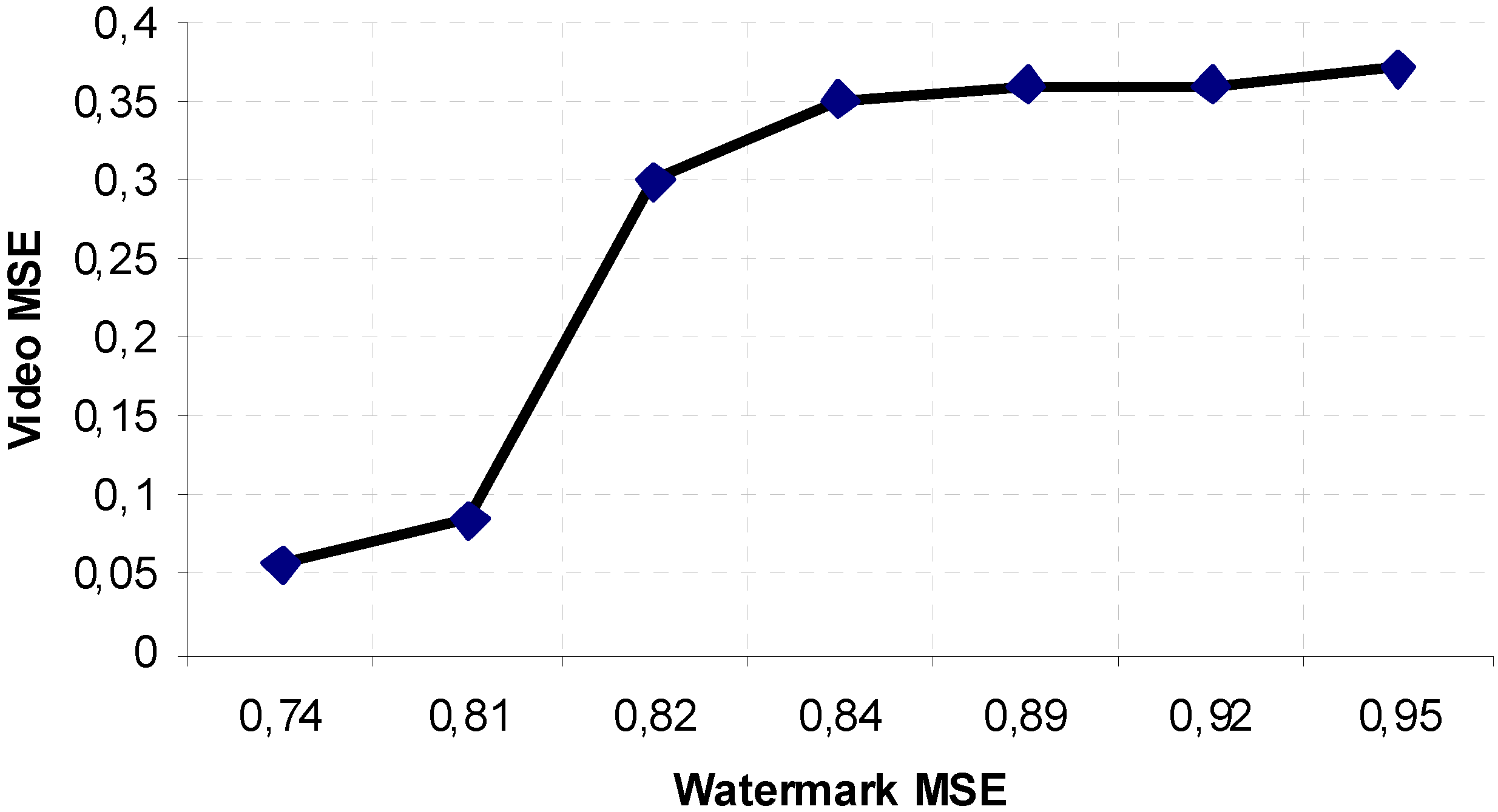

Figure 4. Comparing these experimental results, it is now possible to evaluate an empirical relationship between the MSE of the boomerang watermark and the one of the host multimedia content, as shown in

Figure 5. In particular, the values of the curve have been selected considering only the cases of

Fig. 4 where the watermark degradations closely follow the ones of the video. Hence, the values in

Fig. 5 have been obtained exploiting the values of the four videos under investigation only in the first and second scenarios,

i.e. considering the (

asymptotic)performance of the system in the best and worst case, respectively.

Figure 5. Relationship between watermark and video degradation in terms of MSE for the analyzed cases and scenarios under investigation.


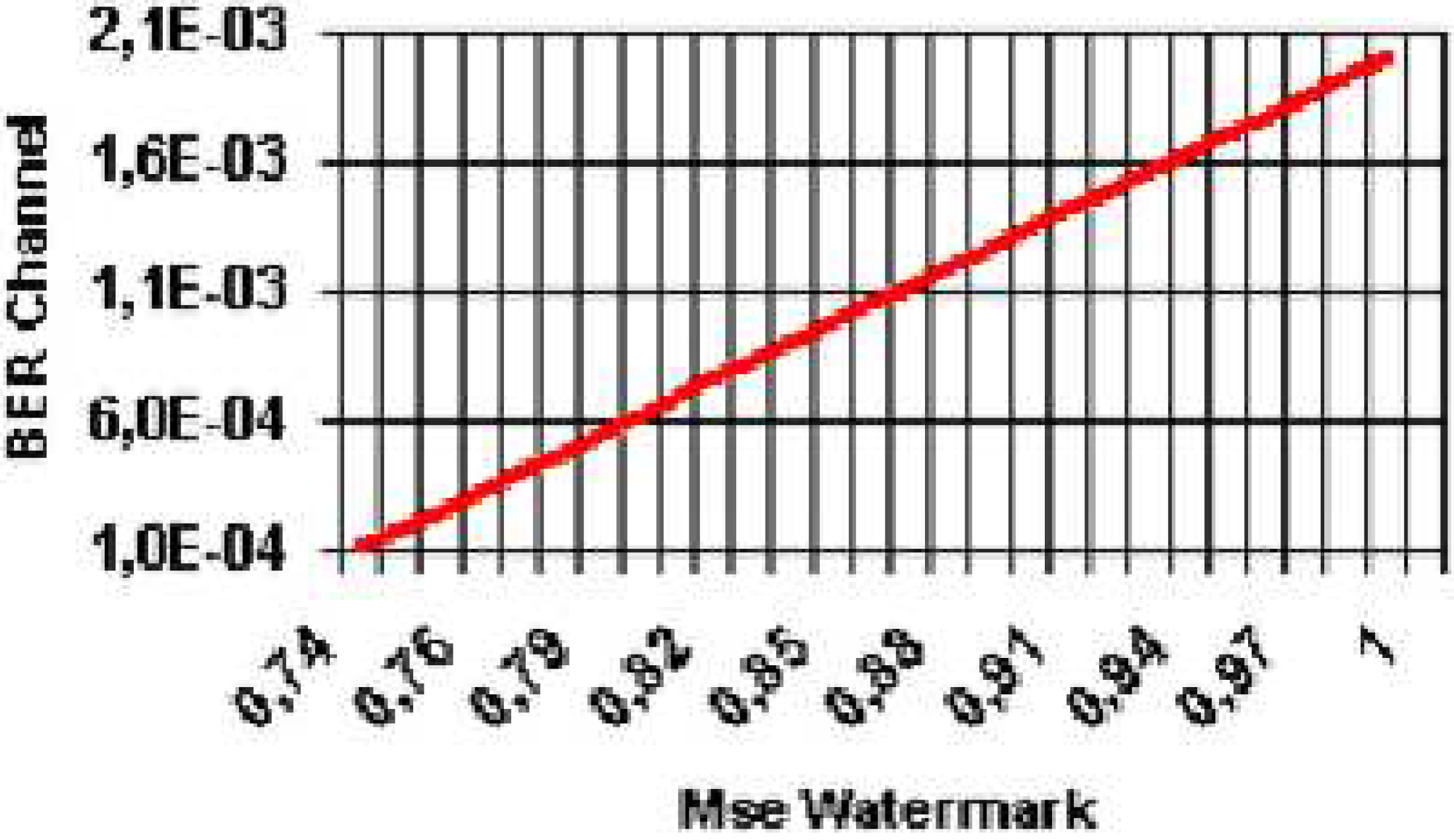

As can be easily seen in Fig. 5, the graph shows only very large values of the watermark MSE, and this is a consequence of the operating scenarios used in our research. In fact, considering the quality of the communication channel used in the four scenarios, we can evaluate the relationship between the watermark MSE and the channel BER. This relationship is represented in Fig. 6, where it is clearly visible that for the minimum BER of 10⁻⁴ the watermark MSE already takes a large value (equal to 0.74), while for the worst BER of more than 10⁻³ the watermark MSE is even larger (almost equal to 1). Hence, these are the values of interest for the mark MSE used in this work. Moreover, here we are interested in evaluating an objective relationship between the mark and the video (i.e. we are using only the MSE as a quality metric) and, considering that the watermark is a narrow-band, low-energy signal, large values of the watermark MSE are needed to appreciate alterations of the objective video quality. Future research will be devoted to analyzing subjective indicators of video quality, investigating how lower values of the watermark MSE are reflected in video alterations.

Figure 6. Relationship between the channel BER and the watermark MSE for the analyzed cases and scenarios under investigation.


It has to be underlined that if other videos (i.e., cases or scenarios) were used, the curves in Fig. 5 and Fig. 6 would be characterized by a different behavior. Nonetheless, even if sophisticated quality metrics or cumbersome relationships could be used for quality assessment purposes in multimedia communications, the MSE between the estimated watermark and the original one is used in this contribution as a proof of concept of the objective method proposed here. This means that it would always be possible to trace the alterations endured by the data by tracing the alterations of the embedded mark, with a relationship that depends on the transmitted videos and on the scenarios under investigation.

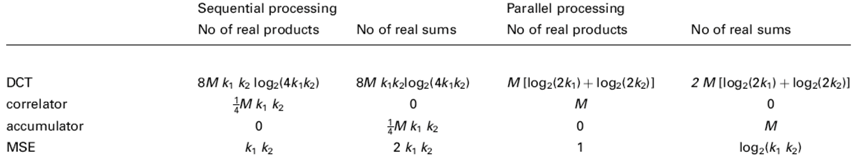

Let us now focus on the computational complexity of the proposed technique, by analyzing the number of operations performed by the MS for the QoS assessment. According to Fig. 2, the floating point operations (flops) to be implemented by the MS processor are: first, the computation of the k1-by-k2 DCT of each frame; second, the correlation with the spread version of the watermark made of ¼ k1k2 pixels (i.e. the original watermark pre-multiplied by one known PN matrix, different frame by frame); third, the accumulation of the detected mark averaged over M frames; last, the final evaluation of the MSE of the detected mark at the end of the procedure. The whole computation can be implemented by either sequential or parallel processing, depending on the available hardware. The approximate computing time needed by the QoS assessment procedure (from M frames of k1 by k2 pixels), operating on either sequential or parallel processors on a mobile device, is reported in Table 1 in terms of the time required by the number of flops. For the sake of compactness, we have reported only the number of real products and sums, assuming that:

– in the sequential implementation, the time of one complex product is the same as that of four real products and two real sums, whereas the time of one complex sum is equivalent to that of two real sums;

– in the parallel implementation, the time of one complex product is the same as that of one real product and one real sum, whereas the time of one complex sum is equivalent to that of one real sum.
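The two conversion rules above can be expressed as a small Python helper that turns counts of complex operations into equivalent real products and real sums. The table `REAL_OPS` and the function `real_op_time` are hypothetical names introduced for this sketch; the operation counts passed in would come from the specific DCT and correlation implementation, which is not detailed here.

```python
# Equivalent (real products, real sums) for one complex operation, per the two rules
REAL_OPS = {
    "sequential": {"product": (4, 2), "sum": (0, 2)},
    "parallel": {"product": (1, 1), "sum": (0, 1)},
}

def real_op_time(complex_products, complex_sums, mode):
    """Return the equivalent number of (real products, real sums) for the given mode."""
    prod_as_products, prod_as_sums = REAL_OPS[mode]["product"]
    sum_as_products, sum_as_sums = REAL_OPS[mode]["sum"]
    real_products = complex_products * prod_as_products + complex_sums * sum_as_products
    real_sums = complex_products * prod_as_sums + complex_sums * sum_as_sums
    return real_products, real_sums
```

For example, 10 complex products and 5 complex sums cost the time of 40 real products and 30 real sums sequentially, but only 10 real products and 15 real sums in parallel.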

Table 1. Approximate number of the flops-time required for the QoS detection on a mobile device.


![Futureinternet 02 00060 i001]()

It has to be noted that in all the aforementioned cases the DCT computation is the most time-consuming step: the DCT processing alone accounts for 98.8% of the operations in the sequential implementation and 92.2% in the parallel implementation. The large advantage of parallel computing stems from the fact that the DCT computation is implemented by means of the fast Fourier transform (FFT). In fact, the computational complexity of the FFT (over N data) is of the order of N log2 N, whereas it reduces to the order of log2 N with parallel processing schemes. It is the opinion of the authors that the implementation of the proposed technique is going to be feasible on a mobile device, as the MS will shortly host large processing capabilities (including hardware DCT co-processors) because of the monotonically decreasing cost of very-large-scale integration as well as the ever-increasing running speed. Therefore the complexity of the QoS evaluation procedure presented in this paper appears negligible in comparison with real-time MPEG decoding and adaptive on-board array processing.
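To give a feeling for the two orders of magnitude quoted above, the following Python sketch evaluates N log2 N and log2 N for a given N. Taking N as the number of pixels of one QCIF frame (144 x 176 = 25344) is an assumption made for illustration, and constant factors are ignored, so the numbers are orders of growth rather than actual operation counts.

```python
import math

def fft_order(n, parallel=False):
    """Order of the FFT cost over n data: n*log2(n) sequentially, log2(n) in parallel."""
    return math.log2(n) if parallel else n * math.log2(n)

if __name__ == "__main__":
    n = 144 * 176  # pixels in one QCIF frame (assumed choice of N)
    print(fft_order(n), fft_order(n, parallel=True))
```

Under these orders of growth, the ratio between the sequential and the parallel cost is N itself.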

The obtained results prove the sensitivity of the watermarking quality index to the actual quality for given target quality levels and show the capability of this network-aware processing of multimedia content to trace the alterations suffered by the data through the network. Hence, the advantages of distributing the management process can be summarized as: (i) an easier and more precise localization of the cause of QoS problems, (ii) better knowledge of local situations, (iii) a lower complexity for a single QoS agent and (iv) an increase in possible actions.