Deep Learning Approaches for Automatic Livestock Detection in UAV Imagery: State-of-the-Art and Future Directions

Abstract

1. Introduction

- Reviewing deep learning object detection techniques, their prior applications to UAV-based livestock monitoring, and the challenges of aerial livestock detection.

- Presenting a case study that evaluates representative models on a custom UAV cattle dataset, detailing the experimental setup, evaluation experiments, and analysis of the results.

- Providing insights and recommendations for future research on livestock detection.

2. Related Work

2.1. Deep Learning-Based Object Detection Techniques

2.1.1. R-CNN-Based

- R-CNN [16] was among the first methods to successfully apply deep learning to object detection, substantially improving accuracy over traditional methods; however, it was slow because it ran the CNN separately on each of roughly 2000 region proposals, repeating computation across overlapping regions. Successive R-CNN-based models, including Fast R-CNN [18] and Faster R-CNN [19], streamlined this process.

- Fast R-CNN [18] improves on R-CNN by running the convolutional backbone over the entire image only once, producing a feature map that is shared by all proposals. Instead of processing each region proposal separately, it uses a region-of-interest (RoI) pooling layer to extract a fixed-size feature vector from the shared feature map for every proposal.

- Faster R-CNN [19] adds a Region Proposal Network (RPN) that generates region proposals directly from the feature maps, replacing the slower Selective Search algorithm [36]. Because the RPN and the detection network share convolutional layers, the whole detector can be trained jointly and runs much faster, while retaining the high accuracy of two-stage detection.

- The region-based fully convolutional network (R-FCN) [37] replaces the per-region subnetwork that follows RoI pooling with position-sensitive score maps, so that nearly all computation is shared across the image, reducing the cost per region while maintaining accuracy.

- Mask R-CNN [17] extends Faster R-CNN with a mask-prediction branch and replaces RoI pooling with RoIAlign, which avoids coordinate quantization and thus preserves spatial alignment, improving mask accuracy.
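The difference between the RoI pooling of Fast R-CNN and the RoIAlign of Mask R-CNN is easiest to see on a toy feature map. The sketch below is a simplified, single-channel illustration in plain Python, not the torchvision implementation (`torchvision.ops.roi_pool` and `torchvision.ops.roi_align` provide production versions). It assumes RoI coordinates are already divided by the backbone stride, produces a 2 × 2 output instead of the 7 × 7 grid used with VGG16 in Fast R-CNN, treats cell centres as lying at integer coordinates, and has RoIAlign average 2 × 2 bilinear samples per bin.

```python
import math
from typing import List, Tuple

FeatureMap = List[List[float]]            # single channel, indexed fmap[y][x]
RoI = Tuple[float, float, float, float]   # (x1, y1, x2, y2) in feature-map coordinates


def roi_pool(fmap: FeatureMap, roi: RoI, out: int = 2) -> List[List[float]]:
    """Fast R-CNN-style RoI max pooling with integer quantization of the RoI and its bins."""
    # Quantization 1: snap the RoI to the nearest integer cells (halves round up).
    x1, y1, x2, y2 = (int(math.floor(v + 0.5)) for v in roi)
    h, w = max(y2 - y1, 1), max(x2 - x1, 1)
    pooled = []
    for i in range(out):
        # Quantization 2: bin edges use floor for the start and ceil for the end.
        ys, ye = y1 + (i * h) // out, y1 + math.ceil((i + 1) * h / out)
        row = []
        for j in range(out):
            xs, xe = x1 + (j * w) // out, x1 + math.ceil((j + 1) * w / out)
            row.append(max(fmap[y][x] for y in range(ys, ye) for x in range(xs, xe)))
        pooled.append(row)
    return pooled


def bilinear(fmap: FeatureMap, x: float, y: float) -> float:
    """Bilinear interpolation at a continuous location (assumes cell centres at integer coordinates)."""
    h, w = len(fmap), len(fmap[0])
    x, y = min(max(x, 0.0), w - 1.0), min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0
    top = fmap[y0][x0] * (1 - dx) + fmap[y0][x1] * dx
    bottom = fmap[y1][x0] * (1 - dx) + fmap[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def roi_align(fmap: FeatureMap, roi: RoI, out: int = 2, samples: int = 2) -> List[List[float]]:
    """Mask R-CNN-style RoIAlign: no rounding; average samples x samples bilinear points per bin."""
    x1, y1, x2, y2 = roi
    bin_w, bin_h = (x2 - x1) / out, (y2 - y1) / out
    pooled = []
    for i in range(out):
        row = []
        for j in range(out):
            points = [
                bilinear(fmap,
                         x1 + (j + (sx + 0.5) / samples) * bin_w,
                         y1 + (i + (sy + 0.5) / samples) * bin_h)
                for sy in range(samples) for sx in range(samples)
            ]
            row.append(sum(points) / len(points))
        pooled.append(row)
    return pooled
```

On a 4 × 4 map whose values are `x + 10 * y`, an RoI of (0.6, 0.6, 2.4, 2.4) is snapped by `roi_pool` to a single cell, so all four bins return 11, whereas `roi_align` returns four distinct values (11.55, 12.45, 20.55, 21.45). For livestock that cover only a few feature-map cells, such rounding can shift features by a sizable fraction of the animal.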

2.1.2. YOLO

- YOLOv1 [20] introduced the core concept of grid-based, single-pass detection but struggled with localization accuracy and with detecting small objects.

- YOLOv2 (YOLO9000) [21] improved accuracy and recall through batch normalization, anchor boxes, and multi-scale training.

- YOLOv3 [22] adopted a deeper backbone with residual connections and a feature-pyramid-style design that predicts at three scales, improving multi-scale detection and robustness for small objects.

- YOLOv8 [42] adopts a more modular and flexible design featuring anchor-free detection and dynamic (task-aligned) label assignment, improving generalization and performing well across various benchmarks.
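The output layouts of these YOLO generations can be illustrated with a few lines of arithmetic. The sketch encodes published design choices: YOLOv1 divides a 448 × 448 input into a 7 × 7 grid whose cells each predict two boxes and 20 PASCAL VOC class probabilities; YOLOv3 predicts with three anchors per location at strides 32, 16 and 8; and YOLOv8 predicts, for each grid point, the distances from the cell centre to the four box edges in units of the stride. The function names are hypothetical helpers rather than part of any YOLO codebase, and YOLOv8's distribution-based distance regression is reduced here to plain distances.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in input-image pixels


def yolov1_cell(cx: float, cy: float, img_size: int = 448, s: int = 7) -> Tuple[int, int]:
    """(row, col) of the S x S grid cell containing an object's centre; that cell is responsible for it."""
    row = min(int(cy * s / img_size), s - 1)
    col = min(int(cx * s / img_size), s - 1)
    return row, col


def yolov1_output_shape(s: int = 7, b: int = 2, c: int = 20) -> Tuple[int, int, int]:
    """Each cell predicts B boxes (x, y, w, h, confidence) plus C class probabilities."""
    return (s, s, b * 5 + c)


def yolov3_num_boxes(img_size: int = 416, strides: Tuple[int, ...] = (32, 16, 8),
                     anchors_per_scale: int = 3) -> int:
    """Candidate boxes over YOLOv3's three output scales (three anchors per grid location)."""
    return sum(anchors_per_scale * (img_size // stride) ** 2 for stride in strides)


def yolov8_decode(gx: int, gy: int, ltrb: Tuple[float, float, float, float], stride: int) -> Box:
    """Anchor-free decoding: predicted distances (in stride units) from the cell centre
    to the left, top, right and bottom edges are converted to pixel corners."""
    cx, cy = gx + 0.5, gy + 0.5
    left, top, right, bottom = ltrb
    return ((cx - left) * stride, (cy - top) * stride, (cx + right) * stride, (cy + bottom) * stride)
```

Two cattle centred at (200, 210) and (230, 240) in a 448 × 448 YOLOv1 input both fall into cell (3, 3) and must share that cell's two box slots and single class distribution, one concrete reason why the original YOLO struggled with small objects in groups. A 416 × 416 YOLOv3 input, by contrast, yields 10,647 candidate boxes across its three scales, which are then filtered by confidence and non-maximum suppression.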

2.1.3. Anchor-Based

2.1.4. DETR (DEtection TRansformer)

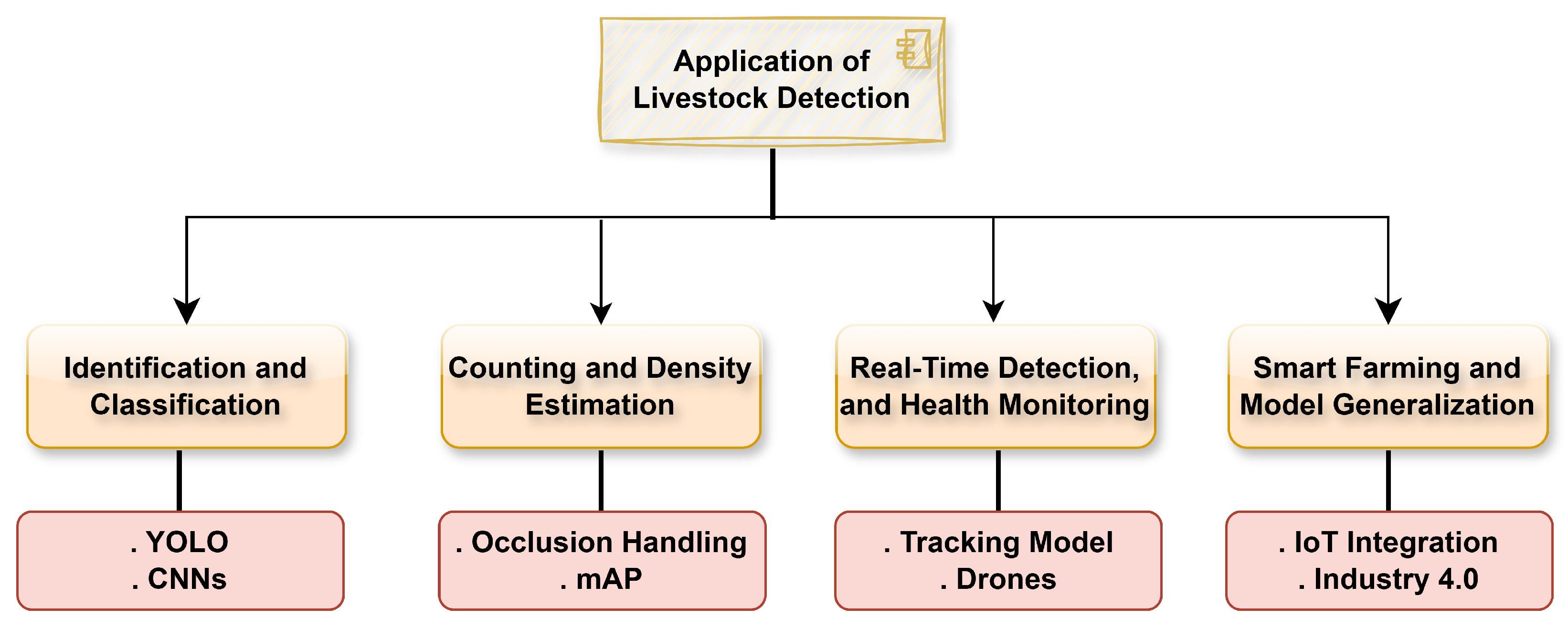

2.2. Applications in Aerial Livestock Detection

2.2.1. Livestock Identification and Classification

2.2.2. Livestock Counting and Density Estimation

2.2.3. Real-Time Detection, Surveillance, and Health Monitoring

2.2.4. Smart Farming, Ecosystem Monitoring, and Model Generalization

- Small object size: Livestock often occupy only a few pixels in high-altitude imagery, reducing feature richness and detection accuracy.

- Occlusion and overlapping: Animals may be hidden by vegetation, terrain, or each other, complicating object separation and identification.

- Variable lighting and weather conditions: Changes in sunlight, shadows, cloud cover, or fog can degrade image quality and model reliability.

- Posture and orientation variability: Animals appear in different poses and at different angles, requiring models to generalize across a wide range of visual patterns.

- Background complexity: Natural landscapes such as grasslands, forests, or drylands introduce visual clutter that can lead to false positives.

- Limited annotated datasets: High-quality, labeled aerial livestock datasets are scarce, limiting supervised learning and model generalization.

- Domain adaptability: Models trained in one region may not perform well elsewhere without retraining or adaptation to the new domain.

- Real-time inference constraints: UAV-based systems require efficient models that can run on limited hardware while maintaining high accuracy.

- Counting and tracking errors: Double counting, missed detections, and dynamic group movement can distort population estimates and monitoring.
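The small-object challenge can be quantified with the standard ground sampling distance (GSD) relation for a nadir-pointing camera, GSD = (sensor width × altitude) / (focal length × image width). The sketch below is illustrative only: the camera values (13.2 mm sensor width, 8.8 mm focal length, 5472 px image width, roughly in the range of a 1-inch-sensor drone camera), the 100 m altitude, and the 2 m body length of a cow are assumptions, not parameters of the dataset used in this study.

```python
def gsd_cm_per_px(sensor_width_mm: float, focal_length_mm: float,
                  image_width_px: int, altitude_m: float) -> float:
    """Ground sampling distance (cm per pixel) for a camera pointing straight down."""
    return (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)


def object_length_px(object_length_m: float, gsd_cm: float, resize_factor: float = 1.0) -> float:
    """Approximate on-image length of an object, optionally after resizing the frame."""
    return object_length_m * 100.0 / gsd_cm * resize_factor


# Hypothetical camera and animal size, for illustration only.
gsd = gsd_cm_per_px(sensor_width_mm=13.2, focal_length_mm=8.8, image_width_px=5472, altitude_m=100.0)
full_res = object_length_px(2.0, gsd)                    # about 73 px in the original frame
network_input = object_length_px(2.0, gsd, 640 / 5472)  # about 8.5 px after resizing to 640 px wide
print(f"GSD {gsd:.2f} cm/px, cow length {full_res:.1f} px (full) vs {network_input:.1f} px (640 input)")
```

Under these assumptions the GSD is about 2.74 cm/px, so a 2 m animal spans roughly 73 px in the full-resolution frame but only about 8.5 px once the frame is downscaled to a 640 px-wide network input. This is why tiling or higher input resolutions are often considered for aerial livestock detection.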

3. Case Study: Evaluation of the Object Detection Models on a Custom UAV Cattle Dataset

3.1. Data Collection and Pre-Processing

3.2. Evaluation Metrics

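- mAP@0.5: Mean average precision in which a detection counts as a true positive when its intersection over union (IoU) with a ground-truth box is at least 0.5, a relatively lenient localization criterion.

- mAP@0.5:0.95: A stricter metric that averages mAP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05.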
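To make the two metrics concrete, the sketch below computes IoU, the average precision (AP) at a single IoU threshold, and the mean over the ten thresholds used by mAP@0.5:0.95. It is a simplified illustration, not the evaluator used in the experiments: it assumes one image and one class (so mAP equals AP), matches detections greedily in descending score order, and uses all-point interpolation, whereas COCO-style tools interpolate at 101 recall points.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]   # (x1, y1, x2, y2)
Detection = Tuple[float, Box]              # (confidence score, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: Sequence[Detection], gts: Sequence[Box], thr: float) -> float:
    """AP at one IoU threshold for a single image and a single class."""
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    hits: List[int] = []
    for _, box in sorted(dets, key=lambda d: d[0], reverse=True):
        # Match to the best-overlapping ground truth that is still unmatched.
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= thr:
            matched[best_j] = True
            hits.append(1)  # true positive
        else:
            hits.append(0)  # false positive: duplicate or poorly localized
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # All-point interpolation: area under the monotone precision envelope.
    ap, prev_recall = 0.0, 0.0
    for k in range(len(hits)):
        ap += (recalls[k] - prev_recall) * max(precisions[k:])
        prev_recall = recalls[k]
    return ap


def map_50_95(dets: Sequence[Detection], gts: Sequence[Box]) -> float:
    """Mean AP over the IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)
```

With two ground-truth animals, one perfectly placed detection, one detection offset by a single pixel (IoU of about 0.82), and one false alarm, AP is 1.0 at IoU 0.5 but only 0.5 at 0.85 and above, so mAP@0.5:0.95 falls to 0.85. This illustrates why mAP@0.5:0.95 values are much lower than mAP@0.5 values in the result tables.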

3.3. Experiments and Evaluation

3.3.1. Experiment I—YOLOv12 Variants Evaluation

3.3.2. Experiment II—YOLO Family Comparison

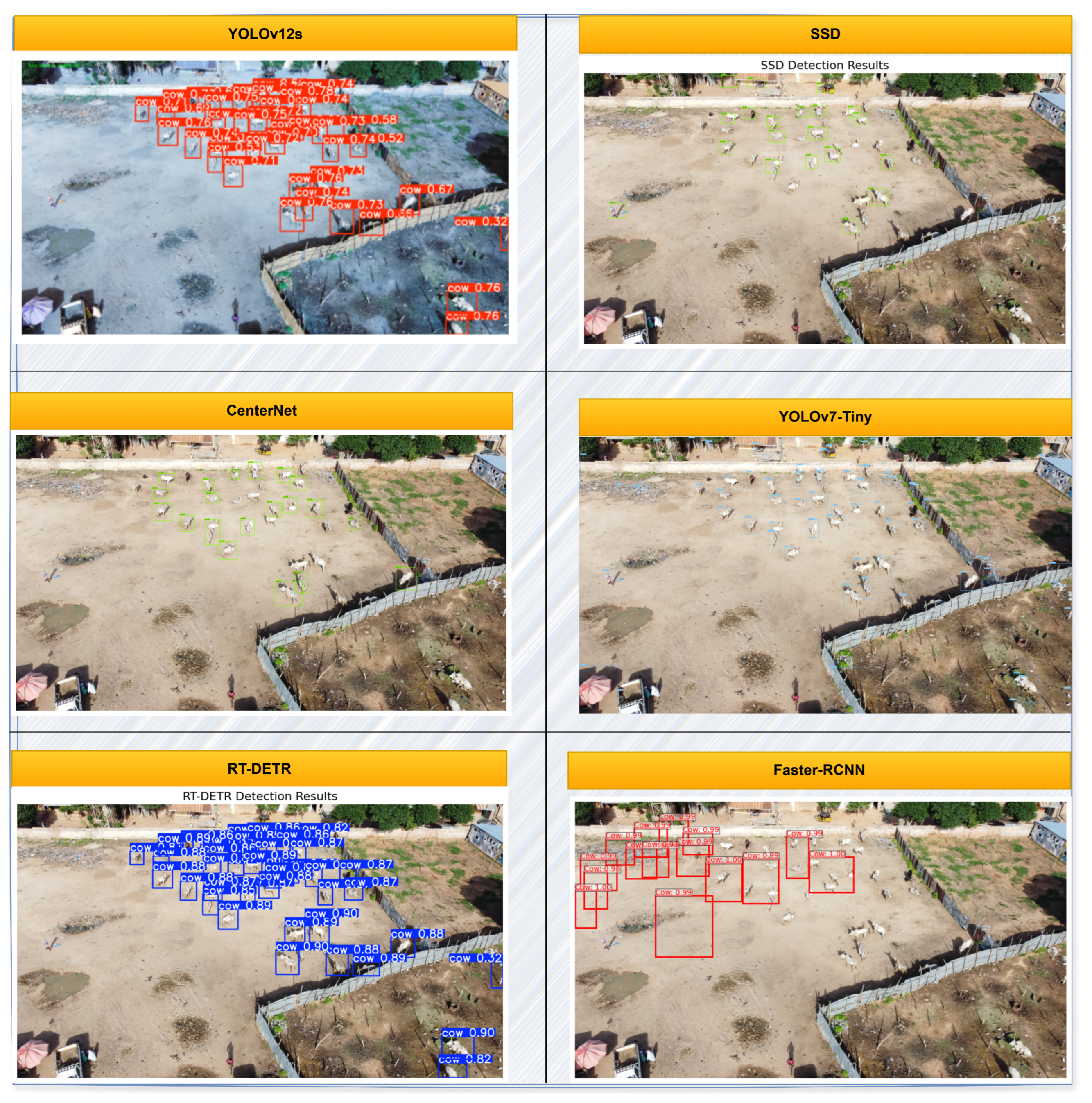

3.3.3. Experiment III—Benchmarking Against Other Models

- SSD (Single Shot MultiBox Detector) [23]: a classical one-stage detector, included as a baseline for real-time object detection.

- Faster R-CNN [19]: a two-stage detector known for high accuracy, providing a comparison against precision-focused architectures.

- YOLOv7-tiny: a lightweight variant of YOLOv7 [41] optimized for resource-constrained environments, offering fast inference at the cost of reduced accuracy.

- CenterNet [75]: an anchor-free, keypoint-based detector that represents each object by its center point, which makes it a relevant candidate for small animals in aerial livestock imagery.

- RT-DETR (Real-Time DEtection TRansformer) [76]: a recent transformer-based detector balancing accuracy and efficiency, included to assess the potential of transformer-based detectors for UAV-based livestock monitoring.
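The benchmarked detectors also differ in how they remove duplicate detections, which directly affects livestock counts. SSD, Faster R-CNN and YOLOv7-tiny rely on box-level non-maximum suppression (NMS); CenterNet instead keeps heatmap cells that are the maximum of their 3 × 3 neighbourhood; RT-DETR is designed to be NMS-free. The sketch below shows the first two in plain Python. The 0.5 IoU threshold and the 0.1 heatmap threshold are illustrative assumptions, while the top-100 default follows the CenterNet paper.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float], iou_thr: float = 0.5) -> List[int]:
    """Box-level NMS: visit boxes by descending score and keep those that do not
    overlap an already-kept box by more than iou_thr. Returns kept indices."""
    keep: List[int] = []
    for i in sorted(range(len(boxes)), key=lambda k: scores[k], reverse=True):
        if all(iou(boxes[i], boxes[k]) <= iou_thr for k in keep):
            keep.append(i)
    return keep


def centernet_peaks(hm: List[List[float]], k: int = 100,
                    thr: float = 0.1) -> List[Tuple[float, int, int]]:
    """CenterNet-style decoding: a heatmap cell is a detection if it equals the maximum
    of its 3x3 neighbourhood and reaches thr; returns the top-k as (score, row, col)."""
    h, w = len(hm), len(hm[0])
    peaks = []
    for y in range(h):
        for x in range(w):
            window = [hm[yy][xx]
                      for yy in range(max(0, y - 1), min(h, y + 2))
                      for xx in range(max(0, x - 1), min(w, x + 2))]
            if hm[y][x] >= thr and hm[y][x] == max(window):
                peaks.append((hm[y][x], y, x))
    return sorted(peaks, reverse=True)[:k]
```

In dense herds the NMS threshold is a trade-off: if the boxes of two adjacent animals overlap by more than the threshold, the lower-scoring one is suppressed and the herd is undercounted, whereas a threshold that is too high lets duplicates through and inflates the count.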

3.4. Results Analysis

3.4.1. Performance of YOLOv12 Variants

3.4.2. Performance Comparison Within the YOLO Family

3.4.3. Performance Against Other Models

4. Discussion and Future Research Directions

- Domain adaptation and transfer learning to improve model generalization across diverse environments.

- Multi-modal data fusion, incorporating RGB, thermal, and multispectral imagery for enhanced health monitoring and behavior analysis.

- Onboard AI acceleration using edge TPUs or lightweight transformer backbones for more efficient UAV processing.

- Autonomous UAV flight planning integrated with a digital twin to dynamically adjust flight routes based on real-time livestock distribution and anomalies.

- Integrating UAV-based detection with digital twin technology could transform livestock management by enabling proactive decision-making, reducing operational costs, and improving animal welfare.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Said, M.I. The role of the livestock farming industry in supporting the global agricultural industry. In Agricultural Development in Asia—Potential Use of Nano-Materials and Nano-Technology; IntechOpen: London, UK, 2022.

- Qian, M.; Qian, C.; Yu, W. Chapter 11—Edge intelligence in smart agriculture CPS. In Edge Intelligence in Cyber-Physical Systems; Yu, W., Ed.; Intelligent Data-Centric Systems; Academic Press: Cambridge, MA, USA, 2025; pp. 265–291.

- FAO. World Food and Agriculture: FAO Statistical Pocketbook 2015; FAO: Rome, Italy, 2015.

- Aidara-Kane, A.; Angulo, F.J.; Conly, J.M.; Minato, Y.; Silbergeld, E.K.; McEwen, S.A.; Collignon, P.J.; on behalf of the WHO Guideline Development Group. World Health Organization (WHO) guidelines on use of medically important antimicrobials in food-producing animals. Antimicrob. Resist. Infect. Control 2018, 7, 7.

- Jebali, C.; Kouki, A. A proposed prototype for cattle monitoring system using RFID. In Proceedings of the 2018 International Flexible Electronics Technology Conference (IFETC), Ottawa, ON, Canada, 7–9 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–3.

- Sarwar, F.; Pasang, T.; Griffin, A. Survey of livestock counting and tracking methods. Nusant. Sci. Technol. Proc. 2020, 150–157.

- Chamoso, P.; Raveane, W.; Parra, V.; González, A. UAVs applied to the counting and monitoring of animals. In Proceedings of the Ambient Intelligence-Software and Applications: 5th International Symposium on Ambient Intelligence, University of Salamanca, Salamanca, Spain, 4–6 June 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 71–80.

- Liang, H.; Gao, W.; Nguyen, J.H.; Orpilla, M.F.; Yu, W. Internet of Things Data Collection Using Unmanned Aerial Vehicles in Infrastructure Free Environments. IEEE Access 2020, 8, 3932–3944.

- Chrétien, L.P.; Théau, J.; Ménard, P. Visible and thermal infrared remote sensing for the detection of white-tailed deer using an unmanned aerial system. Wildl. Soc. Bull. 2016, 40, 181–191.

- Ji, W.; Luo, Y.; Liao, Y.; Wu, W.; Wei, X.; Yang, Y.; He, X.Z.; Shen, Y.; Ma, Q.; Yi, S.; et al. UAV assisted livestock distribution monitoring and quantification: A low-cost and high-precision solution. Animals 2023, 13, 3069.

- Hatcher, W.G.; Yu, W. A Survey of Deep Learning: Platforms, Applications and Emerging Research Trends. IEEE Access 2018, 6, 24411–24432.

- Liang, F.; Yu, W.; Liu, X.; Griffith, D.; Golmie, N. Toward Edge-Based Deep Learning in Industrial Internet of Things. IEEE Internet Things J. 2020, 7, 4329–4341.

- Song, J.; Guo, Y.; Yu, W. Chapter 10—Edge intelligence in smart home CPS. In Edge Intelligence in Cyber-Physical Systems; Yu, W., Ed.; Intelligent Data-Centric Systems; Academic Press: Cambridge, MA, USA, 2025; pp. 247–264.

- Idama, G.; Guo, Y.; Yu, W. QATFP-YOLO: Optimizing Object Detection on Non-GPU Devices with YOLO Using Quantization-Aware Training and Filter Pruning. In Proceedings of the 2024 33rd International Conference on Computer Communications and Networks (ICCCN), Kailua-Kona, HI, USA, 29–31 July 2024; pp. 1–6.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 142–158.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 390–391.

- Beal, J.; Kim, E.; Tzeng, E.; Park, D.H.; Zhai, A.; Kislyuk, D. Toward transformer-based object detection. arXiv 2020, arXiv:2012.09958.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: A simple and strong anchor-free object detector. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1922–1933.

- Wang, Y.; Zhang, X.; Yang, T.; Sun, J. Anchor DETR: Query design for transformer-based detector. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36, pp. 2567–2575.

- Alanezi, M.A.; Shahriar, M.S.; Hasan, M.B.; Ahmed, S.; Sha’aban, Y.A.; Bouchekara, H.R. Livestock management with unmanned aerial vehicles: A review. IEEE Access 2022, 10, 45001–45028.

- Kellenberger, B.; Volpi, M.; Tuia, D. Fast animal detection in UAV images using convolutional neural networks. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 866–869.

- Brown, J.; Qiao, Y.; Clark, C.; Lomax, S.; Rafique, K.; Sukkarieh, S. Automated aerial animal detection when spatial resolution conditions are varied. Comput. Electron. Agric. 2022, 193, 106689.

- Lee, J.; Bang, J.; Yang, S.I. Object detection with sliding window in images including multiple similar objects. In Proceedings of the 2017 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 18–20 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 803–806.

- Islam, S.; Abdullah-Al-Ka, M. Orientation Robust Object Detection Using Histogram of Oriented Gradients. Ph.D. Thesis, East West University, Dhaka, Bangladesh, 2017.

- Burger, W.; Burge, M.J. Scale-invariant feature transform (SIFT). In Digital Image Processing: An Algorithmic Introduction; Springer: Berlin/Heidelberg, Germany, 2022; pp. 709–763.

- Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.Y. DINO: DETR with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605.

- Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. arXiv 2016.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Jocher, G.; Stoken, A.; Borovec, J.; Changyu, L.; Hogan, A.; Diaconu, L.; Poznanski, J.; Yu, L.; Rai, P.; Ferriday, R.; et al. ultralytics/yolov5: v3.0. Zenodo. 2020. Available online: https://docs.ultralytics.com/models/yolov5/ (accessed on 12 September 2025).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8. 2023. Available online: https://docs.ultralytics.com/models/yolov8/#key-features-of-yolov8 (accessed on 12 September 2025).

- Wang, C.Y.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information; Springer: Cham, Switzerland, 2024.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

- Jocher, G.; Qiu, J. Ultralytics YOLO11. 2024. Available online: https://docs.ultralytics.com/models/yolo11/ (accessed on 12 September 2025).

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 16965–16974.

- Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Comput. Electron. Agric. 2024, 223, 109090.

- Das, M.; Ferreira, G.; Chen, C. A model generalization study in localizing indoor Cows with COw LOcalization (COLO) dataset. arXiv 2024, arXiv:2407.20372.

- Nascimento, J.C.; Marques, J.S. Performance evaluation of object detection algorithms for video surveillance. IEEE Trans. Multimed. 2006, 8, 761–774.

- Simhambhatla, R.; Okiah, K.; Kuchkula, S.; Slater, R. Self-driving cars: Evaluation of deep learning techniques for object detection in different driving conditions. SMU Data Sci. Rev. 2019, 2, 23.

- Ragab, M.G.; Abdulkadir, S.J.; Muneer, A.; Alqushaibi, A.; Sumiea, E.H.; Qureshi, R.; Al-Selwi, S.M.; Alhussian, H. A comprehensive systematic review of YOLO for medical object detection (2018 to 2023). IEEE Access 2024, 12, 57815–57836.

- Gowri, A.; Yovan, I.; Jebaseelan, S.S.; Selvasofia, S.A.; Nandhana, N. Satellite Image Based Animal Identification System Using Deep Learning Assisted Remote Sensing Strategy. In Proceedings of the 2024 International Conference on Advances in Computing, Communication and Applied Informatics (ACCAI), Chennai, India, 9–10 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Gaurav, A.; Gupta, B.B.; Chui, K.T.; Arya, V. Unmanned Aerial Vehicle-Based Animal Detection via Hybrid CNN and LSTM Model. In Proceedings of the ICC 2024—IEEE International Conference on Communications, Denver, CO, USA, 9–13 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 2586–2591.

- Martha, G.W.; Mwangi, R.W.; Aramvith, S.; Rimiru, R. Comparing Deep Learning Object Detection Methods for Real-Time Cow Detection. In Proceedings of the TENCON 2023—2023 IEEE Region 10 Conference (TENCON), Chiang Mai, Thailand, 31 October–3 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1187–1192.

- Mücher, C.; Los, S.; Franke, G.; Kamphuis, C. Detection, identification and posture recognition of cattle with satellites, aerial photography and UAVs using deep learning techniques. Int. J. Remote Sens. 2022, 43, 2377–2392.

- Gunawan, T.S.; Ismail, I.M.M.; Kartiwi, M.; Ismail, N. Performance Comparison of Various YOLO Architectures on Object Detection of UAV Images. In Proceedings of the 2022 IEEE 8th International Conference on Smart Instrumentation, Measurement and Applications (ICSIMA), Melaka, Malaysia, 26–28 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 257–261.

- Li, Y.; Gou, X.; Zuo, H.; Zhang, M. A Multi-scale Cattle Individual Identification Method Based on CMT Module and Attention Mechanism. In Proceedings of the 2024 7th International Conference on Computer Information Science and Application Technology (CISAT), Hangzhou, China, 12–14 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 336–341.

- Ismail, M.S.; Samad, R.; Pebrianti, D.; Mustafa, M.; Abdullah, N.R.H. Comparative Analysis of Deep Learning Models for Sheep Detection in Aerial Imagery. In Proceedings of the 2024 9th International Conference on Mechatronics Engineering (ICOM), Kuala Lumpur, Malaysia, 13–14 August 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 234–239.

- Lishchynskyi, M.; Cheng, I.; Nikolaidis, I. Trajectory Agnostic Livestock Counting Through UAV Imaging. In Proceedings of the 2024 IEEE 21st International Conference on Mobile Ad-Hoc and Smart Systems (MASS), Seoul, Republic of Korea, 23–24 September 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 682–687.

- Fang, C.; Li, C.; Yang, P.; Kong, S.; Han, Y.; Huang, X.; Niu, J. Enhancing livestock detection: An efficient model based on YOLOv8. Appl. Sci. 2024, 14, 4809.

- Ocholla, I.A.; Pellikka, P.; Karanja, F.; Vuorinne, I.; Väisänen, T.; Boitt, M.; Heiskanen, J. Livestock Detection and Counting in Kenyan Rangelands Using Aerial Imagery and Deep Learning Techniques. Remote Sens. 2024, 16, 2929.

- Rivas, A.; Chamoso, P.; González-Briones, A.; Corchado, J.M. Detection of cattle using drones and convolutional neural networks. Sensors 2018, 18, 2048.

- Robinson, C.; Ortiz, A.; Hughey, L.; Stabach, J.A.; Ferres, J.M.L. Detecting cattle and elk in the wild from space. arXiv 2021, arXiv:2106.15448.

- Wang, W.; Xie, M.; Jiang, C.; Zheng, Z.; Bian, H. Cow Detection Model Based on Improved YOLOv5. In Proceedings of the 2024 39th Youth Academic Annual Conference of Chinese Association of Automation (YAC), Dalian, China, 7–9 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1697–1701.

- Myint, B.B.; Onizuka, T.; Tin, P.; Aikawa, M.; Kobayashi, I.; Zin, T.T. Development of a real-time cattle lameness detection system using a single side-view camera. Sci. Rep. 2024, 14, 13734.

- Han, L.; Tao, P.; Martin, R.R. Livestock detection in aerial images using a fully convolutional network. Comput. Vis. Media 2019, 5, 221–228.

- Barrios, D.B.; Valente, J.; van Langevelde, F. Monitoring mammalian herbivores via convolutional neural networks implemented on thermal UAV imagery. Comput. Electron. Agric. 2024, 218, 108713.

- Noe, S.M.; Zin, T.T.; Tin, P.; Kobayashi, I. Precision Livestock Tracking: Advancements in Black Cattle Monitoring for Sustainable Agriculture. J. Signal Process. 2023, 28, 179–182.

- Kurniadi, F.A.; Setianingsih, C.; Syaputra, R.E. Innovation in Livestock Surveillance: Applying the YOLO Algorithm to UAV Imagery and Videography. In Proceedings of the 2023 IEEE 9th International Conference on Smart Instrumentation, Measurement and Applications (ICSIMA), Kuala Lumpur, Malaysia, 17–18 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 246–251.

- Asdikian, J.P.H.; Li, M.; Maier, G. Performance evaluation of YOLOv8 and YOLOv9 on custom dataset with color space augmentation for Real-time Wildlife detection at the Edge. In Proceedings of the 2024 IEEE 10th International Conference on Network Softwarization (NetSoft), Saint Louis, MO, USA, 24–28 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 55–60.

- Xu, Z.; Wang, T.; Skidmore, A.K.; Lamprey, R. A review of deep learning techniques for detecting animals in aerial and satellite images. Int. J. Appl. Earth Obs. Geoinf. 2024, 128, 103732.

- Tian, M.; Zhao, F.; Chen, N.; Zhang, D.; Li, J.; Li, H. Application of Convolutional Neural Network in Livestock Target Detection of IoT Images. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Nanjing, China, 29–31 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1146–1151.

- Korkmaz, A.; Agdas, M.T.; Kosunalp, S.; Iliev, T.; Stoyanov, I. Detection of Threats to Farm Animals Using Deep Learning Models: A Comparative Study. Appl. Sci. 2024, 14, 6098.

- A Deep Learning-Based Model for the Detection and Tracking of Animals for Safety and Management of Financial Loss. J. Electr. Syst. 2024, 20, 14–20.

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850.

- Lv, W.; Zhao, Y.; Chang, Q.; Huang, K.; Wang, G.; Liu, Y. RTDETRv2: All-in-One Detection Transformer Beats YOLO and DINO. arXiv 2024, arXiv:2407.17140.

- Qian, C.; Guo, Y.; Hussaini, A.; Musa, A.; Sai, A.; Yu, W. A New Layer Structure of Cyber-Physical Systems under the Era of Digital Twin. ACM Trans. Internet Technol. 2024; accepted.

| Epochs | Batch Size | Image Size | Momentum | Learning Rate | Close Mosaic | Workers |
|---|---|---|---|---|---|---|
| 100 | 16 | - | 0.937 | 0.01 | 10 | 8 |
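The hyperparameters above map naturally onto keyword arguments of the Ultralytics `train()` method (`epochs`, `batch`, `momentum`, `lr0`, `close_mosaic`, `workers`). The sketch below assumes that the experiments used the Ultralytics framework and that the learning-rate column denotes the initial learning rate `lr0`; neither is stated in the table. The image size is not reported, so `imgsz` is omitted and Ultralytics falls back to its default. The helper `train_detector`, the weights file, and the dataset YAML are hypothetical placeholders.

```python
# Hyperparameters from the training table, expressed as Ultralytics train() keyword arguments.
TRAIN_ARGS = {
    "epochs": 100,
    "batch": 16,
    "momentum": 0.937,
    "lr0": 0.01,          # assumed: the table's learning rate is the initial rate
    "close_mosaic": 10,   # disable mosaic augmentation for the final 10 epochs
    "workers": 8,         # dataloader worker processes
}


def train_detector(weights: str, data_yaml: str):
    """Fine-tune an Ultralytics detector; weights and data_yaml are placeholders."""
    # Imported lazily so TRAIN_ARGS can be inspected without the package installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

A call such as `train_detector("yolo12s.pt", "cattle.yaml")` would then fine-tune a small YOLOv12 checkpoint on a dataset described by a hypothetical `cattle.yaml` file. Keeping the arguments in one dictionary makes it easy to apply identical settings to every YOLO variant being compared.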

| Model | Year | Anchor | Innovations | Strengths | Limitations |
|---|---|---|---|---|---|
| YOLOv5 | 2020 | Anchor-based | Scalable variants (n–x); PyTorch implementation | Widely adopted; lightweight for edge devices | Weak small-object detection; no transformer features |
| YOLOv7 | 2022 | Anchor-based | E-ELAN module; re-parameterization | High accuracy; efficient CNN | Still anchor-based; less suited for UAV small-object detection |
| YOLOv8 | 2023 | Anchor-free | Decoupled head; flexible input sizes | Strong small-object detection; real-time inference | Needs fine-tuning; unstable in dense livestock clusters |
| YOLOv12 | 2025 | Anchor-free + attention-centric | R-ELAN; FlashAttention integration | State-of-the-art accuracy; balances speed and robustness | Large models are heavy for UAV edge hardware |

| Model | Precision | Recall | F1 Score | mAP@0.5 | mAP@0.5:0.95 | Parameters (M) | FLOPs (G) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|
| YOLOv12-n | 0.96 | 0.93 | 0.94 | 0.97 | 0.63 | 2.57 | 6.3 | 4.5 |
| YOLOv12-s | 0.96 | 0.93 | 0.95 | 0.97 | 0.63 | 9.23 | 21.2 | 8.1 |
| YOLOv12-m | 0.95 | 0.95 | 0.95 | 0.98 | 0.66 | 20.1 | 67.1 | 14.9 |
| YOLOv12-l | 0.95 | 0.94 | 0.95 | 0.98 | 0.65 | 26.34 | 88.5 | 4.5 |
| YOLOv12-x | 0.95 | 0.95 | 0.96 | 0.98 | 0.67 | 59.04 | 198.5 | 6.8 |
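The F1 column is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Recomputing it from the rounded two-decimal precision and recall in the table can differ from the reported F1 in the second decimal place (for example, 0.96 and 0.93 give about 0.945, while YOLOv12-s reports 0.95), presumably because the reported scores were computed from unrounded values. The helper name below is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision/recall pairs copied from the YOLOv12 variant table (rounded values).
for name, p, r in [("YOLOv12-n", 0.96, 0.93), ("YOLOv12-m", 0.95, 0.95), ("YOLOv12-x", 0.95, 0.95)]:
    print(f"{name}: F1 from rounded P/R = {f1_score(p, r):.3f}")
```

Because F1 is a harmonic mean, it stays close to the smaller of the two inputs, so a model with high precision but low recall (such as CenterNet in the benchmark table) receives a markedly lower F1 than its precision alone would suggest.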

| Model | Precision | Recall | F1 Score | mAP@0.5 | mAP@0.5:0.95 | Parameters (M) | FLOPs (G) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5-s | 0.96 | 0.94 | 0.95 | 0.98 | 0.64 | 7.01 | 15.8 | 102.5 |
| YOLOv7 | 0.97 | 0.96 | 0.96 | 0.99 | 0.64 | 37.19 | 105.1 | 90.7 |
| YOLOv8-s | 0.95 | 0.96 | 0.96 | 0.98 | 0.66 | 11.13 | 28.4 | 0.9 |
| YOLOv12-s | 0.96 | 0.93 | 0.95 | 0.97 | 0.63 | 9.23 | 21.2 | 8.1 |

| Model | Precision | Recall | F1 Score | mAP@0.5 | mAP@0.5:0.95 | Parameters (M) | FLOPs (G) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|
| SSD (MobileNet V2) | 0.62 | 0.65 | 0.63 | 0.59 | 0.21 | 25.12 | 88.2 | 115.86 |
| Faster R-CNN (ResNet50-FPN) | 0.84 | 0.94 | 0.89 | 0.94 | 0.63 | 41.53 | 216.58 | 28.06 |
| CenterNet (ResNet50 V1 FPN) | 0.93 | 0.62 | 0.74 | 0.93 | 0.57 | 25.25 | - | 1051.96 |
| YOLOv7-tiny | 0.86 | 0.71 | 0.78 | 0.83 | 0.33 | 6.02 | 13.2 | 120.1 |
| RT-DETR | 0.95 | 0.94 | 0.95 | 0.97 | 0.65 | 31.9 | 103.4 | 3.2 |
| YOLOv12-s | 0.96 | 0.93 | 0.95 | 0.97 | 0.63 | 9.23 | 21.2 | 8.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Adam, M.; Song, J.; Yu, W.; Li, Q. Deep Learning Approaches for Automatic Livestock Detection in UAV Imagery: State-of-the-Art and Future Directions. Future Internet 2025, 17, 431. https://doi.org/10.3390/fi17090431

Adam M, Song J, Yu W, Li Q. Deep Learning Approaches for Automatic Livestock Detection in UAV Imagery: State-of-the-Art and Future Directions. Future Internet. 2025; 17(9):431. https://doi.org/10.3390/fi17090431

Chicago/Turabian Style: Adam, Muhammad, Jianchao Song, Wei Yu, and Qingqing Li. 2025. "Deep Learning Approaches for Automatic Livestock Detection in UAV Imagery: State-of-the-Art and Future Directions" Future Internet 17, no. 9: 431. https://doi.org/10.3390/fi17090431

APA Style: Adam, M., Song, J., Yu, W., & Li, Q. (2025). Deep Learning Approaches for Automatic Livestock Detection in UAV Imagery: State-of-the-Art and Future Directions. Future Internet, 17(9), 431. https://doi.org/10.3390/fi17090431