1. Introduction

The exponential growth of the web and the proliferation of large-scale web applications have intensified the demand for efficient data access mechanisms. These systems must serve massive user bases and process high volumes of information under stringent latency constraints. The resulting client–server traffic often causes significant access delays, particularly when many users request the same resources within short time intervals. To mitigate these delays and improve user-perceived response times, various optimization techniques have been developed over the last two decades.

Among these techniques, web caching is one of the most widely adopted and remains a cornerstone: it reduces redundant network traffic, increases effective bandwidth, alleviates server load, and shortens response times. By storing precomputed responses in strategically distributed cache nodes, web caching minimizes repeated backend processing. Its effectiveness, however, is constrained by finite storage capacity, which requires intelligent admission and replacement decisions that prioritize entries likely to be reused.

A critical factor in designing such systems is user behavior, which has two key characteristics. First, web queries often follow a Zipf-like distribution, in which a small subset of requests dominates in frequency, creating the potential for cache node imbalance [1,2]. The skewness of this distribution, characterized by its exponent, directly affects the optimal allocation between static and dynamic cache sections: higher skew increases the relative benefit of dedicating capacity to long-term popular items. Second, query popularity varies over time; from a temporal perspective, user queries can be classified as permanent (consistently popular), periodic (popular on specific dates), or burst (experiencing a sudden, unexpected demand surge) [3]. Burst queries, in particular, can dramatically increase request volume and degrade system performance.
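As a concrete illustration of skew, the following Python sketch computes a Zipf-like popularity distribution, $p(r) \propto r^{-\alpha}$ for rank $r$, and the share of requests captured by the $k$ most popular queries. The catalogue size, the exponents, and the helper names `zipf_pmf` and `top_k_share` are illustrative assumptions, not values or code taken from the query logs analyzed later.

```python
def zipf_pmf(n, alpha):
    """Probabilities for ranks 1..n under a Zipf-like law p(r) proportional to r**(-alpha)."""
    weights = [r ** -alpha for r in range(1, n + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def top_k_share(n, alpha, k):
    """Fraction of all requests that target the k most popular of n queries."""
    return sum(zipf_pmf(n, alpha)[:k])


if __name__ == "__main__":
    # Hypothetical catalogue of 100,000 distinct queries; the top 1,000 are 1% of it.
    for alpha in (0.6, 0.8, 1.0):
        print(f"alpha={alpha}: top-1000 share = {top_k_share(100_000, alpha, 1_000):.3f}")
```

Under these assumptions, raising $\alpha$ concentrates a larger share of requests in the head of the distribution, which is the effect that makes a larger static section more attractive for highly skewed workloads.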

To address these challenges, our proposed architecture partitions the cache into three sections that correspond directly to these user behaviors: a static section for permanent queries, a dynamic section for transiently popular queries (such as periodic ones), and a burst section for sudden demand surges. Burst queries are detected in real time by a statistical monitoring mechanism adapted from [4] and prioritized for immediate admission. The design was evaluated through simulation experiments on real-world query logs, using the Least Recently Used (LRU) and Least Frequently Used (LFU) replacement policies within the architecture.

As baselines, the architecture was compared against standard LRU and LFU, in line with current literature that recognizes these strategies as persistent baselines in both research and production environments [5]. In contrast to existing hybrid and multi-tier caching designs [6,7], this approach integrates user-behavior modeling with burst detection into a modular, three-section framework that is directly extensible to distributed deployments.

The results show that integrating user-behavior modeling with cache segmentation and burst detection yields consistent improvements in cache hit rates across diverse cache sizes, with particularly notable gains in smaller-capacity configurations. Beyond hit rate improvements, the architecture is designed to be extensible to distributed and hybrid caching environments without fundamental design changes.

The remainder of this paper is organized as follows.

Section 2 presents background and related work, incorporating recent advances in multi-tier and machine learning-based caching.

Section 3 details the proposed architecture and its algorithms.

Section 4 describes the simulation setup and reports the results.

Section 5 discusses the results in relation to prior approaches, and the paper closes with implications, limitations, and directions for future research.

2. Background and Related Work

Large-scale web search engines (WSEs) and other high-traffic web applications must process massive volumes of data while coordinating thousands of interconnected servers across geographically distributed data centers [8,9,10]. Caching mechanisms are essential to reduce backend access, mitigate latency, and improve system throughput. An effective caching system must balance several goals at once: low latency, robustness, transparency, scalability, load balancing, support for heterogeneous requests, and operational simplicity.

Cache Policies and Content Volatility. Caching strategies can be categorized by content volatility into static and dynamic designs. Static caches store frequently accessed, relatively stable content, identified by analyzing historical request records (e.g., frequency, number of distinct users, click-through rates, and long-term popularity). In contrast, dynamic caches manage content with transient demand, frequently updating to reflect evolving request patterns. Efficient cache management under limited capacity requires effective admission and replacement policies tailored to these volatility profiles.

The challenge of optimizing cache eviction has produced a wide range of strategies, as cataloged in comprehensive surveys such as the one by ElAarag [11]. These works typically group policies into categories such as recency-based, frequency-based, and function-based, and provide extensive quantitative comparisons. A key takeaway from this body of work is that foundational algorithms such as LRU are often significantly outperformed by more sophisticated, context-aware policies. Such surveys have also explored machine learning, using neural networks to predict the future utility of cache items from traffic patterns. Our work contributes to this field not by proposing a new eviction policy but by introducing a complementary architectural segmentation that can potentially enhance the performance of any underlying policy.

Replacement strategies vary in complexity. Widely adopted methods such as Least Recently Used (LRU) and Least Frequently Used (LFU) offer predictable behavior and low overhead, remaining standard baselines in both research and production environments.
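To fix terminology for the rest of the paper, the sketch below shows minimal LRU and LFU caches and the hit-rate metric, assuming unit-size entries so that capacity is counted in entries (the same assumption used in Section 4.1). The LFU variant keeps access counts only while an entry is resident (sometimes called in-cache LFU) and breaks ties by insertion time; these choices and the class names are assumptions for illustration, not the exact implementations used in the experiments.

```python
from collections import OrderedDict


class LRUCache:
    """Least Recently Used: evicts the entry whose last access is oldest."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()  # front = least recently used

    def access(self, key):
        """Return True on a hit; on a miss, insert the key, evicting if full."""
        if key in self.entries:
            self.entries.move_to_end(key)
            return True
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)
        self.entries[key] = None
        return False


class LFUCache:
    """In-cache Least Frequently Used: counts are kept only while an entry is resident."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.counts = {}    # key -> accesses since insertion
        self.inserted = {}  # key -> insertion time, used to break ties
        self.clock = 0

    def access(self, key):
        """Return True on a hit; on a miss, insert the key, evicting if full."""
        self.clock += 1
        if key in self.counts:
            self.counts[key] += 1
            return True
        if len(self.counts) >= self.capacity:
            # O(n) scan for clarity; production implementations use O(1) frequency lists.
            victim = min(self.counts, key=lambda k: (self.counts[k], self.inserted[k]))
            del self.counts[victim], self.inserted[victim]
        self.counts[key] = 1
        self.inserted[key] = self.clock
        return False


def hit_rate(cache, trace):
    """Fraction of requests in `trace` served from `cache`."""
    hits = sum(cache.access(q) for q in trace)
    return hits / len(trace) if trace else 0.0
```

On the five-request trace `["a", "a", "b", "c", "a"]` with two slots, LRU evicts the frequently used `a` when `c` arrives, whereas LFU keeps it, so the two baselines already diverge on very small workloads.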

In contrast, adaptive multi-tier architectures, such as Quality of Service-aware (QoS-aware) variants extending the Adaptive Replacement Cache (ARC), and machine learning-based replacement policies leveraging deep reinforcement learning [12] have recently emerged to support tiered caching with service differentiation. Additionally, adaptive allocation via deep Q-networks (DQN) has shown promising hit-rate gains, especially in edge and cloud contexts [13,14].

Beyond the conventional LRU and LFU policies, researchers have proposed more sophisticated strategies, often borrowing principles from other areas of computer science, to overcome their well-known limitations. A notable example is the Least Recently/Frequently-Used (LRFU) policy, introduced by Lee et al. for file system buffer caches, which blends LRU and LFU into a single framework by assigning adjustable weights to recency and frequency [15,16]. Varying this weighting yields a spectrum of policies that can be adapted to different workload characteristics.
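The recency/frequency blend of LRFU can be sketched in a few lines. Following the formulation by Lee et al., each block carries a Combined Recency and Frequency (CRF) value built from the weighing function $F(x) = (1/2)^{\lambda x}$ with $\lambda \in [0, 1]$; $\lambda = 0$ reduces to LFU and $\lambda = 1$ to LRU. The sketch below is a simplified, unoptimized illustration (it scans all keys on eviction instead of using the heap-based structure of the original work), and its class and method names are hypothetical.

```python
class LRFUCache:
    """Simplified LRFU: each resident key keeps a Combined Recency and Frequency (CRF) value."""

    def __init__(self, capacity, lam):
        self.capacity = capacity
        self.lam = lam   # 0 behaves like LFU, 1 behaves like LRU
        self.crf = {}    # key -> CRF value at the time of its last reference
        self.last = {}   # key -> time of its last reference
        self.now = 0

    def _weight(self, age):
        """Weighing function F(x) = (1/2)^(lambda * x)."""
        return 0.5 ** (self.lam * age)

    def _crf_now(self, key):
        """CRF value of `key` decayed to the current time."""
        return self._weight(self.now - self.last[key]) * self.crf[key]

    def access(self, key):
        """Return True on a hit; on a miss, insert the key, evicting the smallest CRF if full."""
        self.now += 1
        if key in self.crf:
            # F(0) = 1 for the new reference plus the decayed past references.
            self.crf[key] = 1.0 + self._crf_now(key)
            self.last[key] = self.now
            return True
        if len(self.crf) >= self.capacity:
            victim = min(self.crf, key=self._crf_now)
            del self.crf[victim], self.last[victim]
        self.crf[key] = 1.0
        self.last[key] = self.now
        return False
```

Intermediate values of $\lambda$ trade off the two behaviors; this extra parameter is also what makes LRFU harder to use as a neutral baseline.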

Another important advancement in replacement policies is the Re-Reference Interval Prediction (RRIP) framework proposed by Jaleel et al. [17], which addresses the poor performance of LRU in the Last-Level Cache (LLC) on workloads with scans or distant re-reference patterns. RRIP associates a re-reference prediction value (RRPV) with each cache block, estimating how soon it will be reused. Newly inserted blocks are typically assigned a long re-reference interval (a high RRPV), which makes the policy resistant to cache pollution from scans. The framework includes a static version (SRRIP) and a dynamic version (DRRIP) that adapts to changing workload characteristics. RRIP has been shown to achieve significant throughput improvements over LRU with minimal hardware overhead, making it a powerful alternative.
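A single cache set managed by SRRIP can be sketched as follows, assuming $M = 2$ bits per RRPV, the hit-priority update (an RRPV is reset to 0 on a hit), and insertion with a long re-reference interval (an RRPV of $2^M - 2$). DRRIP's set dueling between SRRIP and bimodal insertion is omitted, and the class name is hypothetical.

```python
class SRRIPSet:
    """One cache set under Static RRIP with M-bit re-reference prediction values."""

    def __init__(self, ways, m_bits=2):
        self.ways = ways
        self.distant = (1 << m_bits) - 1  # RRPV meaning "re-referenced in the distant future"
        self.rrpv = {}                     # tag -> RRPV, in insertion order

    def access(self, tag):
        """Return True on a hit; on a miss, insert `tag`, evicting a block if the set is full."""
        if tag in self.rrpv:
            self.rrpv[tag] = 0             # hit priority: predict near-immediate reuse
            return True
        if len(self.rrpv) >= self.ways:
            while True:
                victim = next((t for t, v in self.rrpv.items() if v == self.distant), None)
                if victim is not None:
                    break
                for t in self.rrpv:        # no distant block yet: age every block
                    self.rrpv[t] += 1
            del self.rrpv[victim]
        self.rrpv[tag] = self.distant - 1  # insert with a long re-reference interval
        return False
```

On a short scan, a block that has already been reused survives longer than it would under LRU, because new scan blocks enter with a high RRPV and are aged out first.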

Another family of techniques, originally devised for processor memory caches, relies on score-based replacement. The SCORE policy [18] assigns an initial score to newly inserted cache lines, increases the score on hits, and decreases it otherwise. When eviction is required, items with scores below a given threshold are selected for removal. Although these methods provide greater flexibility, their effectiveness for web applications is not guaranteed, as their performance often hinges on careful parameter tuning for workloads that differ from their original target.
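The score-based mechanism described above can be sketched generically. The Python code below is not the published SCORE algorithm; it is a hypothetical policy that follows only the description in this paragraph: entries start with an initial score, gain score on hits, and lose score on every access to another entry. On eviction, the least recently used entry whose score is below the threshold is removed, and when none is below it the lowest-scoring entry is removed instead (both choices are assumptions). All parameter values are illustrative and are precisely the kind of knobs that require tuning for a web workload.

```python
from collections import OrderedDict


class ScoreCache:
    """Hypothetical score-based replacement following the textual description only."""

    def __init__(self, capacity, initial=8, hit_bonus=4, penalty=1, threshold=4):
        self.capacity = capacity
        self.initial = initial      # score given to a newly inserted entry
        self.hit_bonus = hit_bonus  # added to an entry's score on a hit
        self.penalty = penalty      # subtracted from every other entry on each access
        self.threshold = threshold  # entries below this score are preferred victims
        self.score = OrderedDict()  # front = least recently used

    def access(self, key):
        """Return True on a hit; on a miss, insert the key, evicting if full."""
        hit = key in self.score
        for k in list(self.score):
            if k != key:
                self.score[k] -= self.penalty
        if hit:
            self.score[key] += self.hit_bonus
            self.score.move_to_end(key)
            return True
        if len(self.score) >= self.capacity:
            # Oldest entry below the threshold; otherwise fall back to the lowest score.
            victim = next((k for k, s in self.score.items() if s < self.threshold), None)
            if victim is None:
                victim = min(self.score, key=self.score.get)
            del self.score[victim]
        self.score[key] = self.initial
        return False
```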

Score-based principles have also been applied directly to web caching. Hasslinger et al. [19] propose the Score-Gated LRU (SG-LRU) policy, in which an incoming object is admitted into the cache only if its score, determined by factors such as access frequency, object size, or other heuristics, exceeds that of the lowest-scoring item currently stored. This method combines the simplicity and low overhead of traditional LRU with a more selective, score-driven admission mechanism, offering a flexible and effective solution for web caching scenarios.

Recent research also highlights the need to adapt caching strategies to evolving network conditions and cloud infrastructures. In [20], the authors argue that, in modern high-speed networks, latency has superseded bandwidth as the primary performance bottleneck; they therefore propose a protocol-level optimization in which servers proactively provide validation tokens, eliminating the round-trip time (RTT) associated with re-validation requests. Other empirical studies have focused on the practical impact of caching in cloud environments. The authors of [21] investigated whether latency caused by the geographical distance between a cloud-hosted application and its users would degrade performance to an unusable level. Their study on a geographically distributed AWS environment quantifies the performance improvements achievable by combining two caching techniques: dynamic caching of database query results using Redis, and static edge caching that renders pages near the end user. Their key finding is that this multi-layered caching significantly mitigates latency, with performance gains correlating directly with cache expiration times. While these approaches focus on protocol-level latency and infrastructure-level performance, respectively, they share a common theme with our work: the need for intelligent, context-aware caching. Our architecture contributes a distinct perspective by basing segmentation not on infrastructure or protocol optimizations but on the behavioral patterns of user queries themselves, which directly influence cache content and value.

Cache architectures are typically organized as hierarchical, distributed, or hybrid systems [22]. Hierarchical designs, structured as parent–child tiers, can introduce latency and storage redundancy. Distributed systems eliminate intermediaries, enabling direct access across nodes and improving fault tolerance and load balancing. Hybrid and multi-level architectures combine these elements, storing content by type or volatility across tiers [23,24,25,26]. Recent surveys confirm the performance advantages of such designs in modern high-throughput web systems [27].

Our proposed behavior-aware architecture extends the multi-level paradigm by introducing a dedicated burst section with real-time detection of demand surges. This paradigm was previously explored in designs such as ResIn [8], which prioritizes result caching and index pruning based on query frequency and computational cost, and the two-level cache in [23], which separates a static cache for persistently popular content from a dynamic cache for frequently updated items. The additional burst layer addresses short-term volatility that conventional multi-level schemes do not explicitly isolate, thereby complementing prior work. While static/dynamic segmentation has been examined before [8,23,24,25,26,28], to our knowledge the combination of burst handling with such segmentation in a single operational framework has not been studied empirically with real-world logs. Recent ML-based approaches (e.g., deep RL models) deliver high hit rates, but they often impose significant computational overhead and require extensive training pipelines [12,29]. Our architecture instead provides structural gains that are orthogonal to the choice of replacement policy, with lower complexity and full experimental reproducibility.

Given the increasing complexity of modern cache replacement strategies, a key methodological choice in this study was the selection of baseline policies. The central contribution of this work is architectural, namely the segmentation of the cache based on user behavior, and it operates independently of the replacement policy used within each segment. To isolate the impact of this segmentation, we intentionally employed two fundamental and widely adopted replacement policies: LRU and LFU. These algorithms are well established in both academic research and production systems [5], making them suitable for baseline comparisons. More flexible, multi-parameter approaches, such as LRFU [15,16], which adaptively balances recency and frequency, as well as score-based and machine learning-based methods, would have introduced additional tuning complexity and confounding factors, making it harder to attribute observed gains to the segmentation itself. By demonstrating substantial gains with standard baseline policies, we provide a more transparent validation of the proposed segmentation framework.

3. Proposed Cache Architecture

The proposed cache architecture is designed to optimize the performance of large-scale web applications through a behavior-aware segmentation into three dedicated sections: static, dynamic, and burst. This segmentation is based on query reuse patterns derived from the analysis of real-world query logs, enabling the system to adapt efficiently to heterogeneous and time-varying request behaviors. Unlike conventional static/dynamic partitioning, the design incorporates a dedicated burst section, populated by a mechanism that detects sudden query surges in real time.

The static section retains responses to queries exhibiting long-term stability in demand, as identified through offline analysis of historical access logs. This is a conventional approach in static and static-dynamic caching models for web search engines [28,30]. The dynamic section stores responses to queries with transient popularity, that is, queries whose demand is significant but decays within a bounded time window. The burst section provides short-term storage for queries experiencing sudden demand surges, detected through a statistical monitoring process that compares recent query arrival rates against historical baselines. This burst-handling mechanism allows the system to serve temporary spikes without overloading backend servers or prematurely displacing high-value static or dynamic entries [4].

Each cache section is governed by tailored admission, eviction, and old-entry elimination policies, selected to maximize the hit ratio under the constraints of limited capacity. The choice of policies reflects a balance between implementation simplicity, predictable performance, and adaptability to workload changes. These policies are summarized in Table 1.

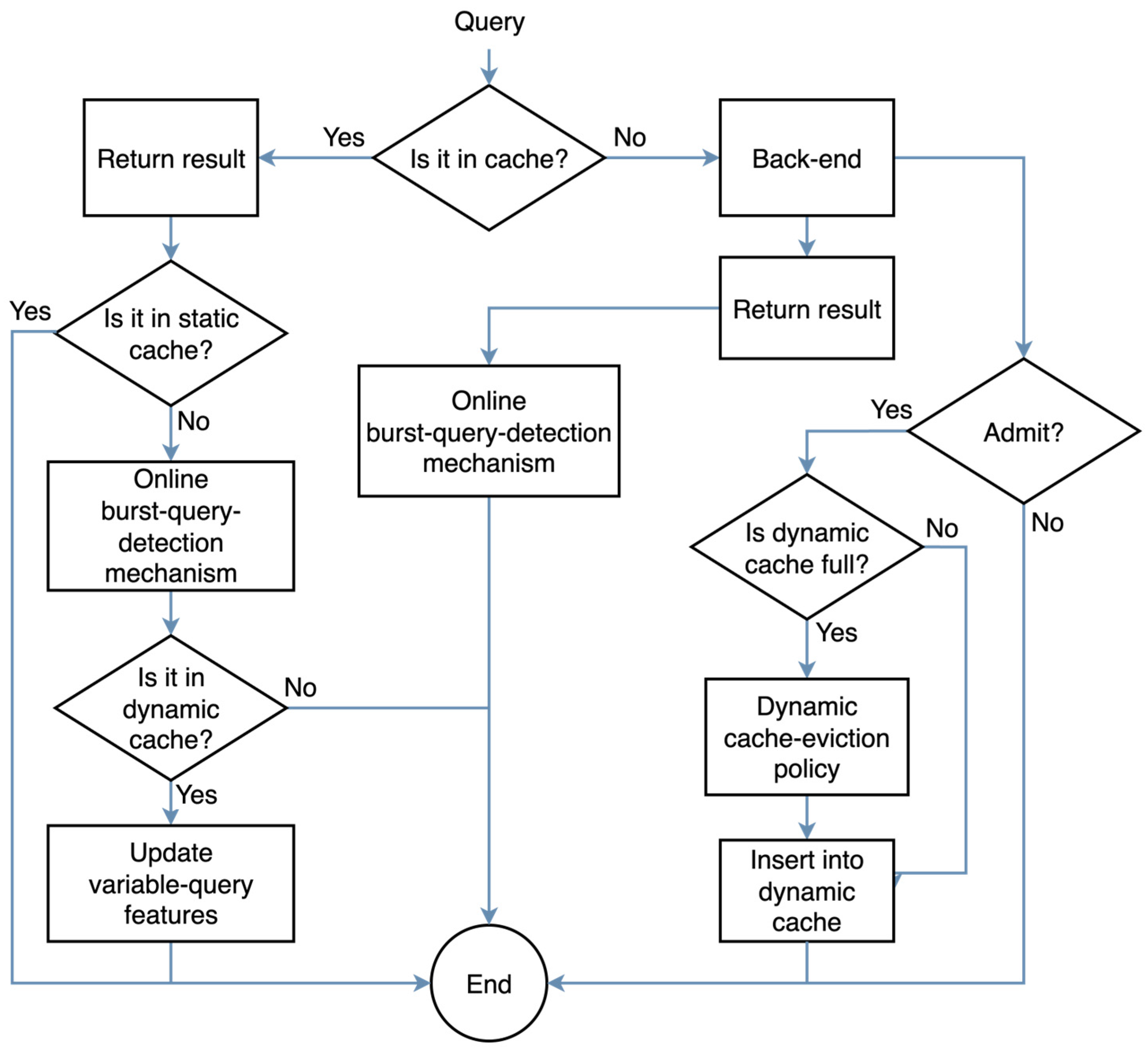

The operational flow for handling an incoming query is depicted in the flowchart in Figure 1. The process begins by checking whether the query result is already stored in any of the three cache sections. If a cache hit occurs, the result is returned immediately, and metadata is updated if the query resides in the dynamic cache. On a cache miss, the request is forwarded to the backend. The system then evaluates the new result for admission into the dynamic cache, applying the corresponding admission and eviction policies if necessary. In parallel, all queries not found in the static cache are fed into the online burst detection mechanism to identify potential demand surges. This entire process is formalized in Algorithm 1.

| Algorithm 1. Flow of operations of the proposed cache architecture |

Inputs: TF: log queries, ce: static cache, cd: dynamic cache, cr: burst cache, cv_max: maximum size of the variable cache.

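Because the flow above spans three sections, a compact sketch may help. The Python code below is an illustrative reading of the description in this section, not the authors' Algorithm 1. It assumes a lookup order of static, burst, then dynamic; admission of every miss into the dynamic section with LRU eviction; and a shared budget `cv_max` for the dynamic and burst sections (as in the configurations of Section 4.1). The `backend` and `on_non_static_query` callables are hypothetical hooks standing in for the result server and the burst detector.

```python
from collections import OrderedDict


class SegmentedCache:
    """Illustrative three-section cache following the query flow described in Section 3."""

    def __init__(self, static_results, cv_max, backend, on_non_static_query):
        self.ce = dict(static_results)  # static section, filled offline
        self.cd = OrderedDict()         # dynamic section, front = least recently used
        self.cr = {}                    # burst section, filled by the burst detector
        self.cv_max = cv_max            # shared budget of dynamic + burst entries
        self.backend = backend          # hypothetical hook: query -> result
        self.on_non_static_query = on_non_static_query  # hypothetical detector hook

    def get(self, q):
        """Return (result, hit) for query q."""
        if q in self.ce:
            return self.ce[q], True
        self.on_non_static_query(q)     # every non-static query feeds burst detection
        if q in self.cr:
            return self.cr[q], True
        if q in self.cd:
            self.cd.move_to_end(q)      # update dynamic-section recency metadata
            return self.cd[q], True
        result = self.backend(q)        # miss: forward to the backend
        self._admit_dynamic(q, result)
        return result, False

    def _admit_dynamic(self, q, result):
        # Assumption: admit every miss, evicting LRU dynamic entries to make room.
        while self.cd and len(self.cd) + len(self.cr) >= self.cv_max:
            self.cd.popitem(last=False)
        if len(self.cd) + len(self.cr) < self.cv_max:
            self.cd[q] = result


if __name__ == "__main__":
    observed = []
    cache = SegmentedCache({"weather": "W"}, cv_max=2, backend=str.upper,
                           on_non_static_query=observed.append)
    for query in ["weather", "news", "news", "maps"]:
        print(query, cache.get(query))
```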

By combining static, dynamic, and burst sections in a unified framework, this architecture provides a more responsive allocation of cache resources to content with diverse reuse patterns, including transient spikes that conventional designs may fail to capture efficiently. This approach maintains compatibility with established replacement policies such as LRU and LFU, ensuring reproducibility in simulations and practical deployability in systems where the integration of machine learning-based or complex multi-tier policies is not feasible due to data or implementation constraints.

3.1. Burst Detection Mechanism

The burst detection mechanism is designed to identify queries undergoing a statistically significant surge in demand, enabling their proactive admission into the burst section. The detection process operates by monitoring incoming queries in real time and comparing their short-term arrival rate against a baseline derived from historical query logs. When the observed rate exceeds the baseline by a predefined threshold—expressed as a multiple of the standard deviation of historical rates—the query is classified as a burst and placed into the burst cache.

By isolating burst queries in a dedicated cache segment, the system responds immediately to short-term popularity spikes, preventing unnecessary backend load and avoiding premature eviction of valuable entries in the static and dynamic sections. This targeted allocation is particularly relevant for workloads exhibiting flash-crowd behavior or sudden trends, where rapid changes in query demand would otherwise degrade hit rates.

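The procedure is formalized in Algorithm 2, which provides a reproducible pseudocode description of the detection logic. Let $\overline{\mathit{q.series}}$ and $\sigma(\mathit{q.series})$ denote the moving average and standard deviation of a query's per-interval frequencies. The threshold condition $\mathit{q.freq} \geq \overline{\mathit{q.series}} + 0.5\,\sigma(\mathit{q.series})$ identifies a statistically significant increase over the historical average. The conditions $\sigma(\mathit{q.series}) / \overline{\mathit{q.series}} > 0.3$ and $\mathit{q.freq} > 100$ act as filters that ensure the query has sufficient volatility and volume, avoiding false positives from low-traffic queries.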
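The three conditions can be expressed as a single predicate. In the sketch below, `history` holds a query's counts in previous monitoring intervals; the assumption that the moving window also includes the current interval, the use of the population standard deviation, and the function name `is_burst` are ours, chosen so that a query with near-zero past activity can still qualify. Defaults follow the values reported in Section 4.1 ($x = 0.5$, $r = 0.3$, minimum frequency 100).

```python
from statistics import mean, pstdev


def is_burst(freq, history, x=0.5, r=0.3, min_freq=100):
    """Return True if a query's count in the current interval qualifies as a burst.

    freq: occurrences of the query in the current monitoring interval.
    history: the query's counts in previous intervals of the moving window.
    """
    if freq <= min_freq:                # volume filter (q.freq > 100 in the text)
        return False
    series = list(history) + [freq]     # assumption: window includes the current interval
    mu = mean(series)                   # moving average; positive because freq > 0
    sigma = pstdev(series)
    # Deviation test and volatility (coefficient of variation) test.
    return freq >= mu + x * sigma and sigma / mu > r
```

Lowering `min_freq` to 50, as in the sensitivity experiment of Section 4.2, only relaxes the volume filter; the deviation and volatility tests are unchanged.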

| Algorithm 2. Burst detection mechanism |

Inputs: SS: monitoring structure of t top-k terms, cr: burst cache.

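Algorithm 2 operates over consecutive monitoring intervals. The following sketch shows one way to organize that loop, under explicit assumptions: exact per-interval counting with `collections.Counter` (a production deployment would more likely rely on a bounded-memory top-k structure), a hypothetical per-query window of past counts, and zero-filled history for queries that were not tracked before. The class name `BurstMonitor` and its parameters are illustrative.

```python
from collections import Counter, deque
from statistics import mean, pstdev


class BurstMonitor:
    """Interval-by-interval burst monitoring (illustrative; exact counts, unbounded tracking)."""

    def __init__(self, k=1000, window=10, x=0.5, r=0.3, min_freq=100):
        self.k, self.window = k, window
        self.x, self.r, self.min_freq = x, r, min_freq
        self.history = {}   # query -> deque of counts in past intervals
        self.intervals = 0  # number of intervals processed so far

    def _history_of(self, q):
        if q not in self.history:
            # Assumption: an untracked query had zero occurrences in earlier intervals.
            self.history[q] = deque([0] * min(self.intervals, self.window), maxlen=self.window)
        return self.history[q]

    def end_interval(self, queries):
        """Process one interval of queries; return (top-k queries, queries flagged as bursts)."""
        counts = Counter(queries)
        top_k = [q for q, _ in counts.most_common(self.k)]
        bursts = []
        for q in top_k:
            freq = counts[q]
            series = list(self._history_of(q)) + [freq]
            mu, sigma = mean(series), pstdev(series)
            # Volume test first, so mu > 0 whenever the ratio is evaluated.
            if freq > self.min_freq and freq >= mu + self.x * sigma and sigma / mu > self.r:
                bursts.append(q)
        for q, past in self.history.items():
            past.append(counts.get(q, 0))  # record this interval for every tracked query
        self.intervals += 1
        return set(top_k), bursts
```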

The detection threshold is a tunable parameter, allowing the system to balance sensitivity (detecting genuine bursts quickly) against precision (avoiding false positives). In our evaluation, this parameter was selected empirically based on simulations using real-world query logs, ensuring that the mechanism reflects actual demand patterns rather than synthetic workload assumptions.

3.2. Mechanism to Enter Burst Cache

The burst cache admission mechanism is triggered whenever the burst detection module classifies a query as experiencing a statistically significant demand surge. This process ensures that such queries are admitted into the burst section for immediate reuse during their high-demand window, without displacing long-term or moderately popular entries from other sections.

Upon detection, if the burst cache has available capacity, the query result is stored immediately. If the burst cache is full, the section’s dedicated replacement policy—optimized for short-lived high-demand content—is applied to evict the least valuable entry according to predefined criteria. This policy differs from those of the static and dynamic caches, reflecting the volatile nature of burst workloads.

The complete admission logic is formalized in Algorithm 3, which outlines the sequence from detection to storage, including cache lookup, insertion, and eviction steps.

| Algorithm 3. Mechanism for a query to enter the burst cache |

Inputs: t: terms declared to be bursts, cr: burst cache, cd: dynamic cache, cv_max: maximum size of the variable cache.

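The admission step can be sketched as a single function. This is a hypothetical reading of the description above, not the authors' Algorithm 3: it assumes that the dynamic and burst sections share the `cv_max` budget, that a query already in the dynamic section is moved rather than duplicated, and that when no burst entry exists to evict, the least recently used dynamic entry is evicted instead. The victim inside the burst section is chosen with the criterion of Section 3.3 (lowest recent frequency relative to baseline demand); the `recent` and `baseline` maps are assumed inputs.

```python
from collections import OrderedDict


def admit_burst(q, result, cr, cd, cv_max, recent, baseline):
    """Admit a query flagged as a burst into the burst section `cr`; return any evicted key.

    cr: burst section (dict); cd: dynamic section (OrderedDict, front = least recently used).
    cv_max: shared capacity of the dynamic and burst sections, in entries.
    recent / baseline: hypothetical maps from query to its latest and moving-average counts.
    """
    if q in cr:
        return None                    # already resident: nothing to do
    cd.pop(q, None)                    # assumption: move rather than duplicate
    evicted = None
    if len(cr) + len(cd) >= cv_max:
        if cr:
            # Lowest recent frequency relative to baseline demand.
            evicted = min(cr, key=lambda k: recent.get(k, 0) / max(baseline.get(k, 0), 1e-9))
            del cr[evicted]
        else:
            evicted, _ = cd.popitem(last=False)  # assumption: fall back to dynamic LRU
    cr[q] = result
    return evicted


if __name__ == "__main__":
    burst, dynamic = {"old": "r0"}, OrderedDict(d1="r1")
    print(admit_burst("new", "r2", burst, dynamic, cv_max=2,
                      recent={"old": 5}, baseline={"old": 200}))
```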

By rapidly admitting detected bursts into the dedicated section, the mechanism improves hit rate during high-demand intervals, reduces backend load, and ensures that sudden popularity spikes are leveraged rather than penalized by generic replacement policies. The effectiveness of this process was confirmed in simulation experiments using real-world query logs, demonstrating measurable hit-rate gains under varying cache sizes and workloads.

3.3. Burst Query Replacement Policy

The burst query replacement policy governs the eviction of entries from the burst cache when capacity is reached and a newly detected burst query must be inserted. Its design prioritizes retaining entries that remain within their statistically determined high-demand window, ensuring that the burst section holds only actively valuable content.

When multiple candidates for removal are identified, the policy selects for eviction the entry with the lowest recent access frequency relative to its baseline demand level. This criterion reflects the volatile and short-lived nature of burst content, where demand decay can occur within minutes or hours.

The full procedure is formalized in Algorithm 4, detailing the steps for identifying eviction candidates, computing recent access statistics, and performing the replacement. An entry is evicted from the burst cache if it is no longer in the top-k queries and its Time-to-Live (TTL) counter expires. The TTL is set to 60 min when a query first leaves the top-k list. If it re-enters the top-k, its TTL is disabled. If the TTL reaches zero, the entry is moved to the dynamic cache.

| Algorithm 4. Burst query replacement policy mechanism |

Inputs: t: terms declared to be bursts, cr: burst cache, cd: dynamic cache, TTL: time-to-live of the query.

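The TTL rule can be sketched as a function applied once per monitoring interval. Assuming 60 s intervals, a TTL of 60 intervals corresponds to the 60 min used in this paper; with the countdown below, an entry is moved to the dynamic section after 60 consecutive intervals outside the top-k list, and its countdown is cleared whenever it re-enters that list. The function and parameter names are hypothetical, and how the dynamic section makes room for a demoted entry is left out.

```python
def age_burst_entries(cr, cd, ttl, top_k, ttl_intervals=60):
    """Apply the TTL rule for one monitoring interval; return the demoted queries.

    cr: burst section (query -> cached result).
    cd: dynamic section (any mapping); demoted entries are moved here.
    ttl: query -> remaining intervals, present only while a countdown is running.
    top_k: set of queries in the current interval's top-k list.
    """
    demoted = []
    for q in list(cr):
        if q in top_k:
            ttl.pop(q, None)           # back in the top-k: TTL disabled
            continue
        # Start the countdown when the query first leaves the top-k, then decrement.
        ttl[q] = ttl.get(q, ttl_intervals) - 1
        if ttl[q] <= 0:
            cd[q] = cr.pop(q)          # TTL expired: move to the dynamic cache
            del ttl[q]
            demoted.append(q)
    return demoted
```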

By ensuring that only actively in-demand queries remain in the burst cache, this policy sustains a high hit rate, minimizes wasted capacity, and supports the broader behavior-aware architecture in adapting to rapid demand fluctuations. The effectiveness of this approach was validated through simulation experiments using real-world query logs, where it contributed to measurable improvements in hit ratio compared to baseline caching strategies.

4. Results

The effectiveness of the proposed architecture was evaluated through simulation experiments using real-world query logs. The analysis focuses on cache hit rate as the primary performance metric, comparing the proposed architecture (ACP) against the baseline LRU and LFU replacement policies across multiple cache sizes.

4.1. Experimental Setup

Dataset: The experiments were conducted using a real-world query log from a commercial search engine, covering the complete month of August 2012. The dataset comprises approximately 1.5 billion queries, with around 480 million distinct queries issued globally by a general-purpose user base, without restrictions to specific application domains, geographic regions, or languages. Approximately 78 million of these were new queries not observed in previous months, and close to 77% of distinct queries were singletons, appearing only once. The query frequency distribution follows a Zipf-like pattern, with the most frequent query accounting for about 2.6% of all requests, and the maximum achievable hit rate estimated at around 75%. This dataset is not publicly available due to commercial and privacy restrictions, but a detailed characterization is reported in [4].

To populate the static cache, permanent queries were identified using the most frequent requests from a similar trace from the preceding month (July 2012), simulating a realistic scenario where historical data are used to configure the cache.
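A minimal sketch of this step follows, assuming the previous month's trace is available as an iterable of query strings and that frequency alone ranks candidates (Section 2 also mentions signals such as distinct users and click-through rates, which are omitted here). The function name and the toy log are hypothetical.

```python
from collections import Counter


def build_static_section(previous_log, capacity):
    """Return the `capacity` most frequent queries in a previous period's log.

    Counter.most_common orders equal counts by first occurrence in the log.
    """
    return [q for q, _ in Counter(previous_log).most_common(capacity)]


if __name__ == "__main__":
    july_log = ["weather", "news", "weather", "maps", "news", "weather"]  # toy stand-in
    print(build_static_section(july_log, capacity=2))
```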

In all experiments, we explicitly assume a uniform item size. Under this assumption, cache capacity is expressed in terms of the number of entries rather than bytes, which enables isolating the effects of replacement policies from those of object size variability. In real-world deployments, web objects have heterogeneous sizes, and memory management is implemented in discrete units (e.g., words, pages, or fixed-size blocks), rather than at the bit level. In such scenarios, size-aware caching policies—capable of accounting for per-object size when admitting and evicting entries—often achieve higher hit rates than size-agnostic algorithms such as LRU. Incorporating variable object sizes into the evaluation framework is an important direction for future work, as discussed in the Conclusions and Future Work section.

Architecture Configuration: For each total cache size, two distinct distribution strategies were evaluated to analyze the impact of resource allocation. The total capacity was partitioned between the static cache and a shared dynamic-burst space, which flexibly accommodates both dynamic and burst queries. The two configurations tested were as follows:

A 30% static/70% dynamic-burst split, prioritizing space for transient and burst queries.

A 50% static/50% dynamic-burst split, creating an even balance between permanent and variable content.

These distributions were chosen to assess the architecture’s performance under different assumptions about the prevalence of permanent versus transient query patterns.
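The capacity split itself is simple arithmetic, shown here for completeness. Capacities are counted in entries under the uniform-size assumption above; the function name is hypothetical, and the total sizes in the example loop are illustrative rather than the cache sizes used in the experiments.

```python
def split_capacity(total_entries, static_fraction):
    """Return (static, dynamic_burst) entry counts for a total cache budget."""
    static = round(total_entries * static_fraction)
    return static, total_entries - static


# The two configurations described above, keyed by their static/dynamic-burst split.
CONFIGS = {"30/70": 0.3, "50/50": 0.5}

if __name__ == "__main__":
    for total in (10_000, 100_000):
        for name, fraction in CONFIGS.items():
            print(name, total, split_capacity(total, fraction))
```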

Simulation Parameters: The key parameters for the burst detection mechanism were configured in line with the analysis presented in [4]. The query log was processed in discrete monitoring intervals of 60 s each. Within each interval, the system identified the most frequent queries (top-k), with k = 1000.

A query was identified as a burst if it met the following three criteria simultaneously:

A minimum frequency of 100 within the interval.

A current frequency at least 0.5 standard deviations above its moving average (x = 0.5).

A moving coefficient of variation greater than 0.3 (r > 0.3).

The Time-to-Live (TTL) for the burst cache replacement policy, which is initiated once a query is no longer in the top-k list, was set to 60 monitoring intervals (equivalent to 60 min).

The parameters for the burst detection mechanism were initially guided by the extensive empirical analysis in [4], which provided a robust baseline for this type of workload. That study also established an upper bound for achievable hit rates of approximately 75% in large-scale web search caches, a limit imposed by the proportion of singleton queries; this contextualizes the improvements reported in our results. To ensure their suitability for our specific dataset, we validated these settings and, as discussed in our results, performed a sensitivity analysis by varying the minimum frequency threshold for burst detection. While a fully exhaustive grid search of the parameter space was beyond the scope of this study, which focuses on the architectural proposal, the selected values proved to be effective. We have identified a more comprehensive multi-parameter optimization as a key direction for future work.

4.2. Performance Evaluation

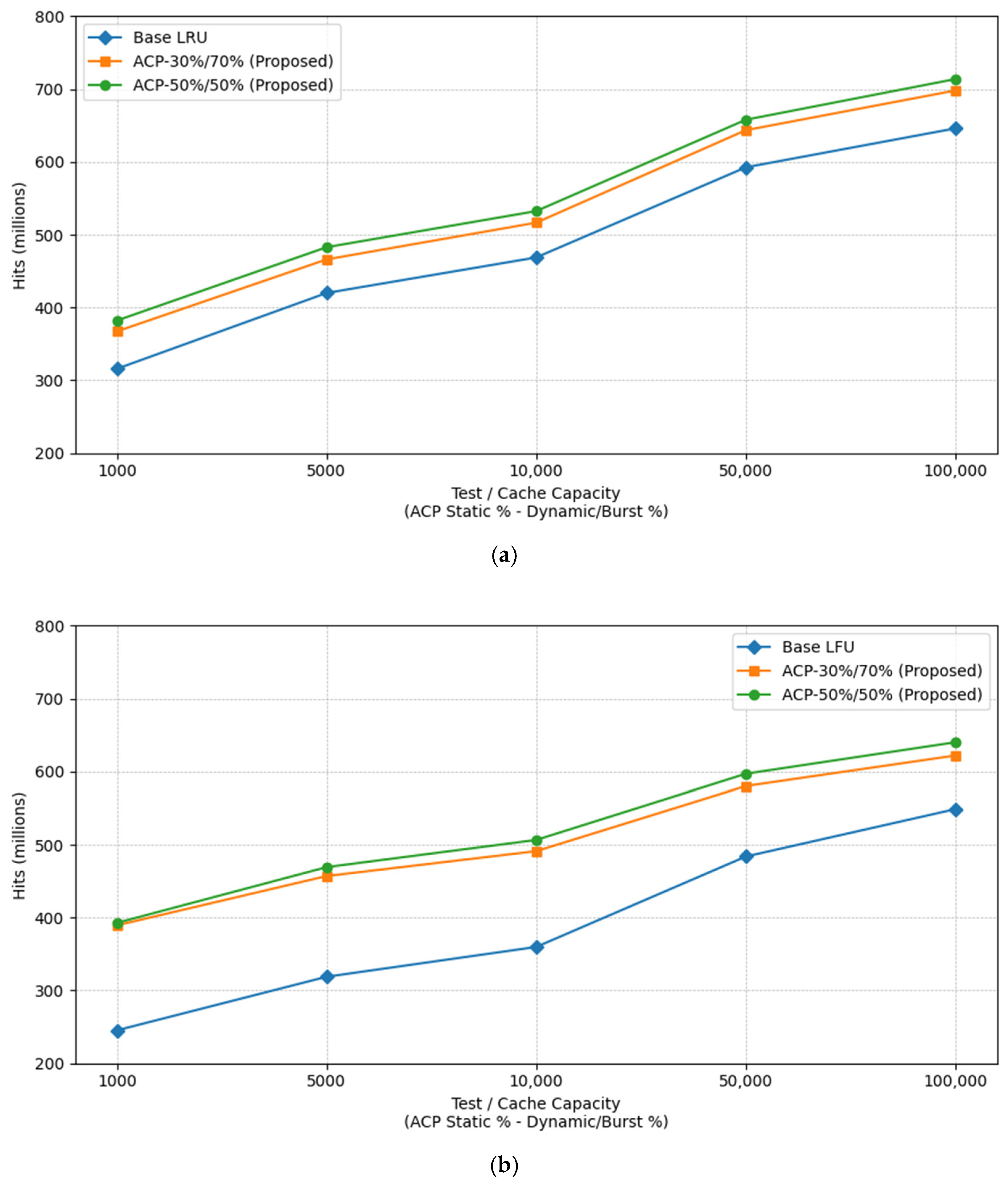

The results show that the proposed architecture consistently achieves higher hit rates than the baseline policies. As summarized in Table 2, the improvements are particularly significant in smaller cache configurations and demonstrate the effectiveness of segmenting queries by behavior. The gains are driven by the architecture’s ability to protect long-term popular items in the static cache while efficiently managing temporary demand spikes in the burst cache.

The overall performance trends are visualized in Figure 2: Figure 2a presents the results for the LRU baseline and the proposed architecture with LRU integration, while Figure 2b shows the same comparison for LFU. A complete numerical breakdown for all tested configurations is available in Tables 3 and 4.

The relative improvement is more pronounced for LFU than for LRU. This can be explained by the interaction between LFU’s frequency-based eviction policy and the architecture’s segmentation: LFU benefits more from the isolation of burst queries, as this prevents high-frequency but short-lived entries from prematurely displacing longer-term valuable items. LRU, being recency-based, is less sensitive to this effect but still benefits from the separation of static and dynamic content.
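This pollution effect can be reproduced on a toy trace. The sketch below is a hypothetical example, not data from the evaluated logs: a steady query is followed by a short burst and then by two steady queries that alternate. Under plain in-cache LFU with two slots, the burst query accumulates a high count and keeps its slot after demand ends, so the steady queries thrash; if the burst is served by a separate section (modeled simply by removing it from the LFU section's trace), the steady queries hit almost every time. Only hits on the post-burst steady requests are compared, and the function name is illustrative.

```python
def lfu_hit_flags(trace, capacity):
    """Return one boolean per request: True if it hit an in-cache LFU cache.

    Ties on access count are broken by evicting the earliest-inserted entry.
    """
    freq, inserted, flags = {}, {}, []
    for t, q in enumerate(trace):
        if q in freq:
            freq[q] += 1
            flags.append(True)
            continue
        if len(freq) >= capacity:
            victim = min(freq, key=lambda k: (freq[k], inserted[k]))
            del freq[victim], inserted[victim]
        freq[q], inserted[q] = 1, t
        flags.append(False)
    return flags


# Hypothetical toy trace.
before = ["s1"] * 3
burst = ["flash"] * 10
after = ["s1", "s2"] * 3

# Plain LFU: "flash" competes with the steady queries for the same two slots.
plain = sum(lfu_hit_flags(before + burst + after, capacity=2)[-len(after):])
# Burst isolated: the LFU section never sees "flash".
isolated = sum(lfu_hit_flags(before + after, capacity=2)[-len(after):])

if __name__ == "__main__":
    print("post-burst hits, plain LFU:", plain, "of", len(after))
    print("post-burst hits, burst isolated:", isolated, "of", len(after))
```

In this toy setting, plain LFU serves 1 of the 6 post-burst requests from cache, whereas the isolated configuration serves 5, which illustrates on a very small scale the mechanism proposed above for LFU's larger relative gain.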

Further experiments underscored this unique sensitivity of the LFU policy to the architecture’s parameters. When the minimum frequency threshold for the burst detection mechanism was lowered from 100 to 50, the ACP-LFU configuration showed a notable increase in cache hits, especially in smaller cache setups. In contrast, the same modification had a negligible effect on the ACP-LRU configuration. This suggests that LFU is highly responsive to a more aggressive burst detection strategy that captures emerging trends earlier and highlights a key avenue for future work: the dynamic tuning of detection thresholds based on the chosen replacement policy.

4.3. Analysis of Burst Query Lifecycles

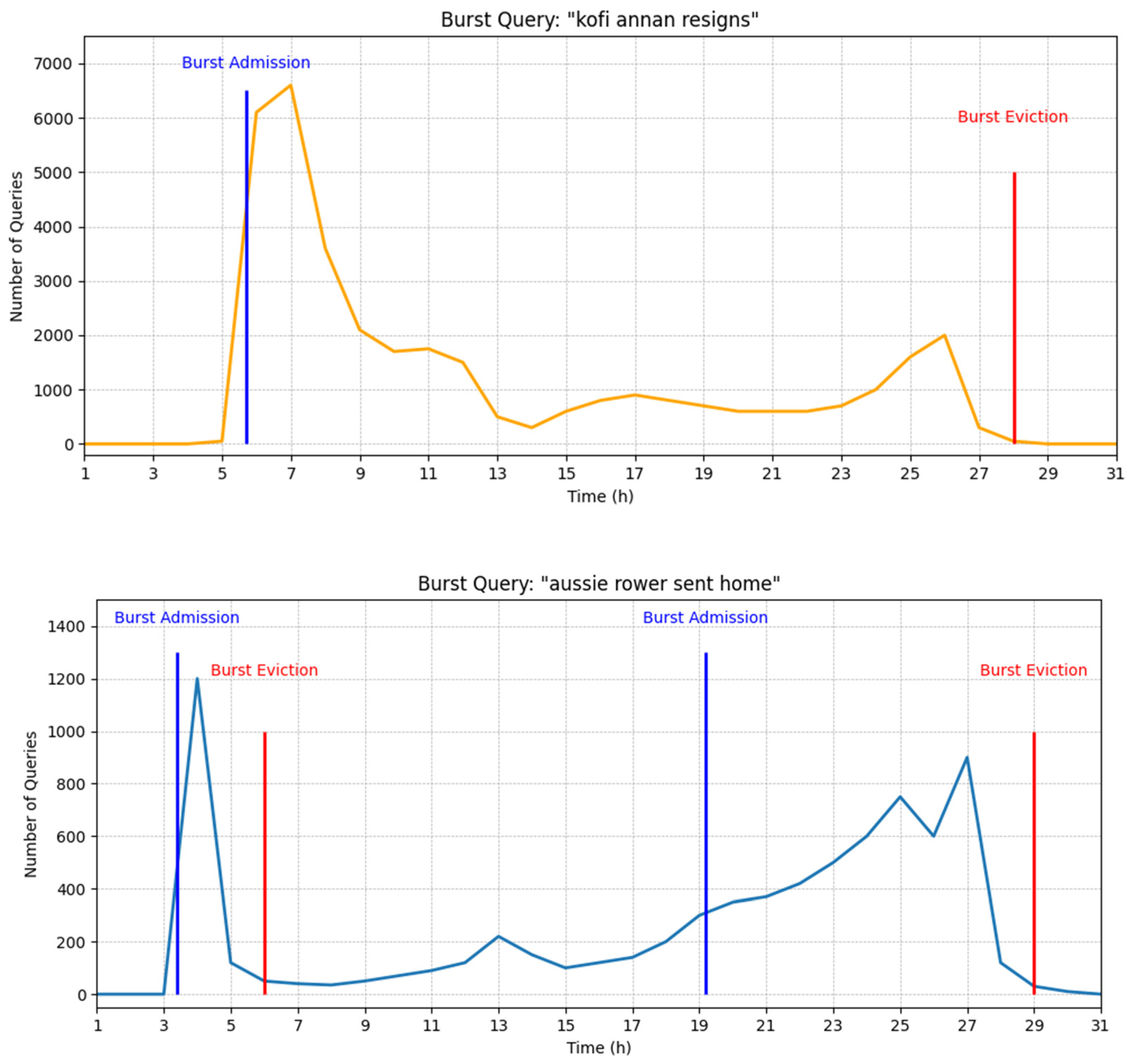

To illustrate the practical behavior of the burst handling mechanism, Figure 3 shows the lifecycle of two queries, “Kofi Annan resigns” and “Aussie rower sent home”, which were identified as bursts during the simulation. Both queries exhibit a characteristic pattern: an initial period of near-zero activity followed by an abrupt and significant surge in user requests.

Our detection mechanism successfully identified these surges in real time, admitting them directly into the burst cache. For instance, “Kofi Annan resigns” was admitted at interval 5 (“Burst Admission” marker). The queries remained in the burst cache, serving a high volume of requests and contributing to the hit rate during their peak popularity.

Crucially, the architecture also demonstrates efficient eviction. Once a query’s popularity waned and it no longer appeared in the top-k most frequent queries for a continuous period (defined by a 60 min Time-to-Live, or TTL), the replacement policy removed it from the burst section. As shown by the “Burst Eviction” markers in Figure 3, this timely removal prevents stale entries from occupying valuable cache space, freeing it up for new, emerging bursts. This qualitative example demonstrates how the architecture’s segmentation and specialized policies work in tandem to maximize hit rates during periods of volatile demand.

The burst section plays a critical role in these improvements. By admitting queries detected as bursts into a dedicated segment, the system prevents them from competing directly with static and dynamic content, reducing cache pollution. The burst query replacement policy ensures that only actively popular burst entries are retained, further sustaining the hit rate during high-demand periods.

Machine learning-based cache replacement policies and similar multi-tier architectures were not evaluated because their implementation code and datasets were unavailable, which made a reproducible comparison under our workload traces infeasible. The chosen baselines (LRU and LFU) are widely recognized in both academic literature and industrial deployments, ensuring that the reported gains can be independently verified.

Overall, the proposed architecture delivers measurable performance gains without requiring complex or opaque replacement policies, making it practical for deployment in large-scale web applications where interpretability, reproducibility, and low overhead are essential.

5. Discussion

The proposed architecture demonstrated consistent improvements over baseline caching strategies, with gains observed across all tested cache sizes and for both LRU- and LFU-based configurations.

The results indicate that the segmentation into static, dynamic, and burst sections was a primary factor in the observed improvement. Each section isolates content based on access behavior, reducing cache pollution and optimizing replacement decisions. The burst section, in particular, prevents short-term demand spikes from displacing long-term valuable entries in other sections.
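The isolation argument can be made concrete with a minimal Python sketch that routes admissions to separate sections. With this routing, a burst admission can never evict a dynamic entry. The sketch assumes:

- a read-only static section loaded from historical logs;
- an LRU dynamic section;
- a boolean `is_burst` flag supplied by the burst detector;
- a simple oldest-first capacity bound for the burst section, standing in for the TTL-based burst replacement policy.

The lookup order (static, burst, dynamic) and the name `SegmentedCache` are hypothetical choices, not the paper's code.

```python
from collections import OrderedDict


class SegmentedCache:
    """Hypothetical static/dynamic/burst cache; sections never evict each other."""

    def __init__(self, static_entries: dict, dynamic_capacity: int, burst_capacity: int):
        self.static = dict(static_entries)  # read-only, filled from historical logs
        self.dynamic = OrderedDict()        # LRU order: least recent first
        self.burst = OrderedDict()          # insertion order: oldest first
        self.dynamic_capacity = dynamic_capacity
        self.burst_capacity = burst_capacity

    def lookup(self, query: str):
        """Check static, then burst, then dynamic; return None on a miss."""
        if query in self.static:
            return self.static[query]
        if query in self.burst:
            return self.burst[query]
        if query in self.dynamic:
            self.dynamic.move_to_end(query)  # refresh recency on a hit
            return self.dynamic[query]
        return None

    def admit(self, query: str, result: object, is_burst: bool) -> None:
        """Store a missed query in the burst or dynamic section only."""
        if query in self.static:
            return
        if is_burst:
            self.dynamic.pop(query, None)  # avoid keeping a duplicate copy
            section, capacity = self.burst, self.burst_capacity
        else:
            section, capacity = self.dynamic, self.dynamic_capacity
        section[query] = result
        section.move_to_end(query)
        if len(section) > capacity:
            section.popitem(last=False)  # evict only from the same section


cache = SegmentedCache({"weather": "static-page"}, dynamic_capacity=2, burst_capacity=1)
cache.admit("a", "page-a", is_burst=False)
cache.admit("b", "page-b", is_burst=False)
cache.admit("spike", "page-spike", is_burst=True)      # lands in the burst section
cache.admit("spike-2", "page-spike-2", is_burst=True)  # displaces "spike" only
print(cache.lookup("a"), cache.lookup("b"), cache.lookup("spike"))
# prints: page-a page-b None
```

In a single unsegmented LRU cache of the same total size, the two burst admissions would have pushed out "a" and "b". Here they only compete with each other.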

Compared to more advanced machine learning-based replacement strategies [12,13,14,29] and existing hybrid designs [6,7], which optimize objectives such as hit rate, average latency, and transfer cost using supervised or reinforcement learning models, our approach requires neither a prior training phase nor high-complexity online parameter tuning. In particular, refs. [11,28] apply multi-agent deep reinforcement learning to optimize storage in distributed and edge environments. Refs. [12,13] explore adaptive multi-tier replacement strategies and content classification techniques based on neural networks and deep learning, with optimization objectives including hit ratio, latency, energy efficiency, and data freshness. Predictive mid-tier frameworks such as ChronoCache [27] combine historical query patterns with short-term predictive models to prefetch anticipated future results. Our design, by contrast, is reactive: it detects and prioritizes bursts as they emerge in real time, enabling immediate adaptation to unforeseen demand spikes. This reactive approach reduces dependence on stable demand patterns, a critical factor in environments with high query volatility, and avoids the overhead of maintaining continuously updated predictive models.

Our evaluation did not include ML-based strategies due to the lack of publicly available implementations and datasets, which would be required for reproducible experiments with our real-world query logs. In addition, many ML-based methods rely on workload-specific training data, limiting portability across different deployment contexts. LRU and LFU were chosen as widely recognized and reproducible baselines.

A key advantage of our architecture is its modularity: the behavioral segmentation is orthogonal to the underlying replacement policy. This allows our design to complement advanced policies. For instance, an ML-based replacement algorithm could be applied independently within the dynamic cache partition, potentially combining the benefits of both approaches.
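Because the segmentation is orthogonal to the replacement policy, the policy can be treated as an injected component of a cache partition. The minimal Python sketch below assumes a small hypothetical interface (`touch`, `victim`, `remove`) and plugs LRU and LFU into the same `DynamicSection`. Wrapping an ML-based policy behind the same interface is an assumption about how integration could work, not something evaluated in this work.

```python
from collections import Counter, OrderedDict
from typing import Protocol


class ReplacementPolicy(Protocol):
    """Hypothetical interface that any replacement policy must expose."""
    def touch(self, key: str) -> None: ...   # record a hit or an insertion
    def victim(self) -> str: ...             # key to evict when the section is full
    def remove(self, key: str) -> None: ...  # forget an evicted key


class LRUPolicy:
    def __init__(self) -> None:
        self._order = OrderedDict()  # least recently used first

    def touch(self, key: str) -> None:
        self._order[key] = None
        self._order.move_to_end(key)

    def victim(self) -> str:
        return next(iter(self._order))

    def remove(self, key: str) -> None:
        self._order.pop(key, None)


class LFUPolicy:
    def __init__(self) -> None:
        self._freq = Counter()  # ties go to the earliest-inserted key

    def touch(self, key: str) -> None:
        self._freq[key] += 1

    def victim(self) -> str:
        return min(self._freq, key=self._freq.__getitem__)

    def remove(self, key: str) -> None:
        self._freq.pop(key, None)


class DynamicSection:
    """Hypothetical dynamic partition with an injected replacement policy."""

    def __init__(self, capacity: int, policy: ReplacementPolicy) -> None:
        self.capacity, self.policy, self.data = capacity, policy, {}

    def get(self, key: str):
        if key not in self.data:
            return None
        self.policy.touch(key)
        return self.data[key]

    def put(self, key: str, value: object) -> None:
        if key not in self.data and len(self.data) >= self.capacity:
            evicted = self.policy.victim()
            self.policy.remove(evicted)
            del self.data[evicted]
        self.data[key] = value
        self.policy.touch(key)


for policy in (LRUPolicy(), LFUPolicy()):
    section = DynamicSection(capacity=2, policy=policy)
    section.put("a", 1)
    section.get("a")
    section.get("a")
    section.put("b", 2)
    section.put("c", 3)  # section is full: the policy picks the victim
    print(type(policy).__name__, sorted(section.data))
# prints:
# LRUPolicy ['b', 'c']
# LFUPolicy ['a', 'c']
```

The section's code is identical in both runs; only the injected policy changes which entry is evicted. Any alternative policy would need to expose the same three methods.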

The experiments were based on simulations using real-world query logs. While this approach allows for controlled, repeatable evaluations, it does not capture all aspects of a live deployment. Validation in operational environments will be important to assess the architecture’s behavior under network latency, concurrent request patterns, and heterogeneous resource constraints.

6. Conclusions and Future Work

This paper presented a behavior-aware caching architecture for large-scale web applications, designed to optimize hit rate by segmenting the cache into static, dynamic, and burst sections.

The architecture improves performance over widely used baseline strategies, achieving up to 10.8% improvement when combined with LRU and up to 36% when combined with LFU, as determined by simulation experiments using real-world query logs.

Our results confirm that a simple and modular segmentation strategy can yield reproducible performance gains without the complexity of ML-based replacement strategies or the high implementation cost of advanced multi-tier designs.

Based on the findings of this article, we have identified several promising directions for extending and enhancing the proposed architecture. First, a comprehensive sensitivity analysis will systematically evaluate the impact of the burst detection parameters and identify optimal configurations for different workloads. An important extension of this analysis will be an adaptive mechanism that adjusts these detection thresholds in real time in response to shifts in workload characteristics or in the underlying replacement policy. Second, we plan to design and evaluate a dynamic resizing algorithm that allocates space among the static, dynamic, and burst sections based on traffic volatility. Third, a critical next step is to extend the model to variable-sized cache items by incorporating and evaluating size-aware replacement algorithms, which is essential for reflecting real-world conditions. Fourth, given the architecture’s modularity, we will investigate its integration with more advanced replacement policies such as ARC, CAR, or selected ML-based methods. Finally, to complement these simulation-based findings, we will validate the architecture’s performance in a live operational environment to assess the impact of real-world factors such as network latency and concurrent request patterns.