Intercepting and Monitoring Potentially Malicious Payloads with Web Honeypots

Abstract

1. Introduction

2. State of the Art

2.1. Web Application Honeypots

- To design a honeypot focused on analysis of HTTP activity.

- The exposed web application should be as realistic as possible, ideally a fully fledged web application that offers varied interaction to the users.

- Integrated monitoring capabilities to perform queries over the logged HTTP activity.

- The honeypot infrastructure should be web application-agnostic: replacing the web application with any other choice should initially work as expected, provided the logs are kept in the same locations.

- Geared towards either a certain service or to a collection of services.

- Limited to a single technology for the HTTP service.

- Focused on detecting certain vulnerabilities.

- Loosely coupled components enabling future customization of the infrastructure.

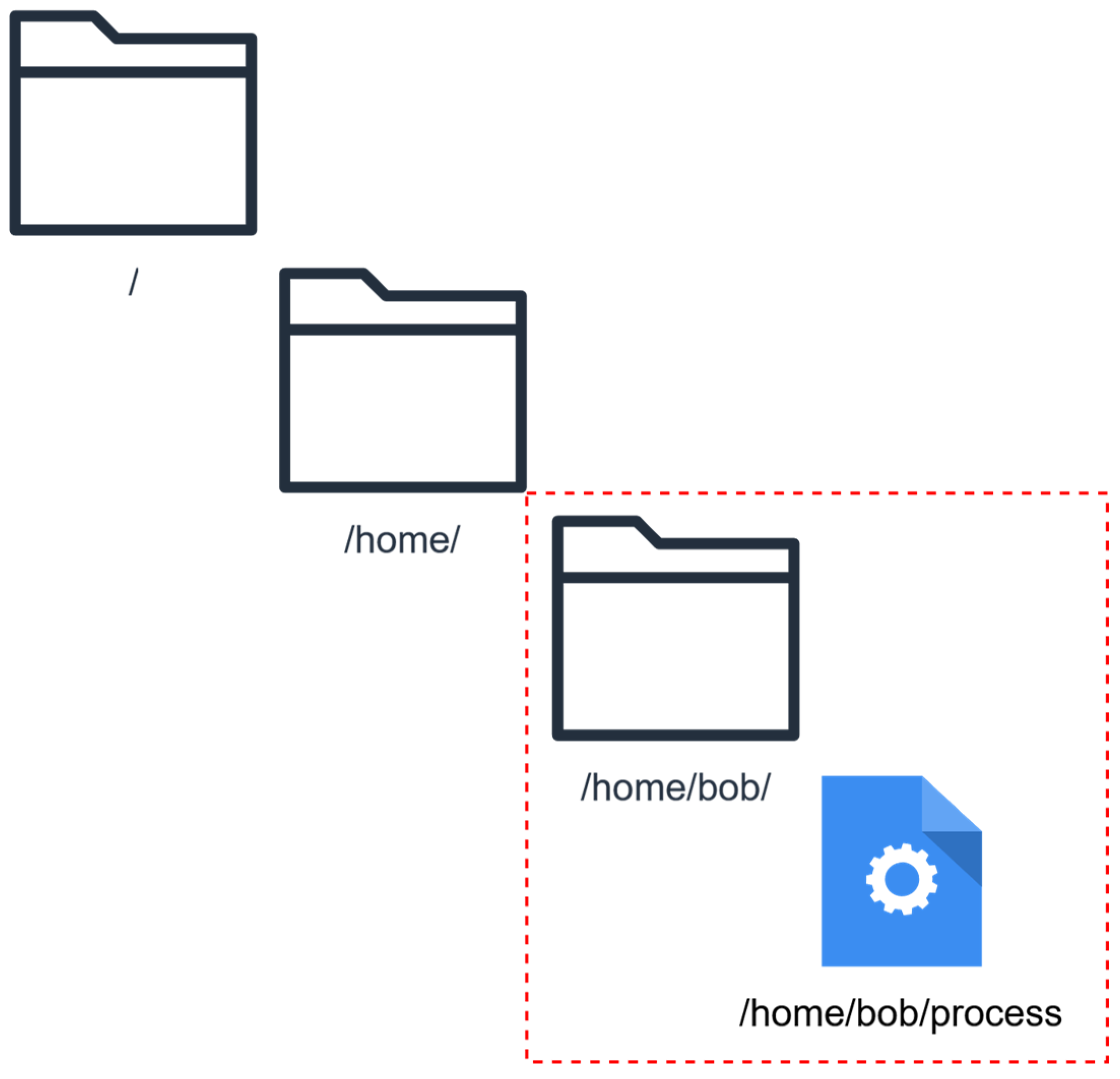

2.2. Infrastructure Security Aspects

- Filesystem.

- Network.

- Processes.

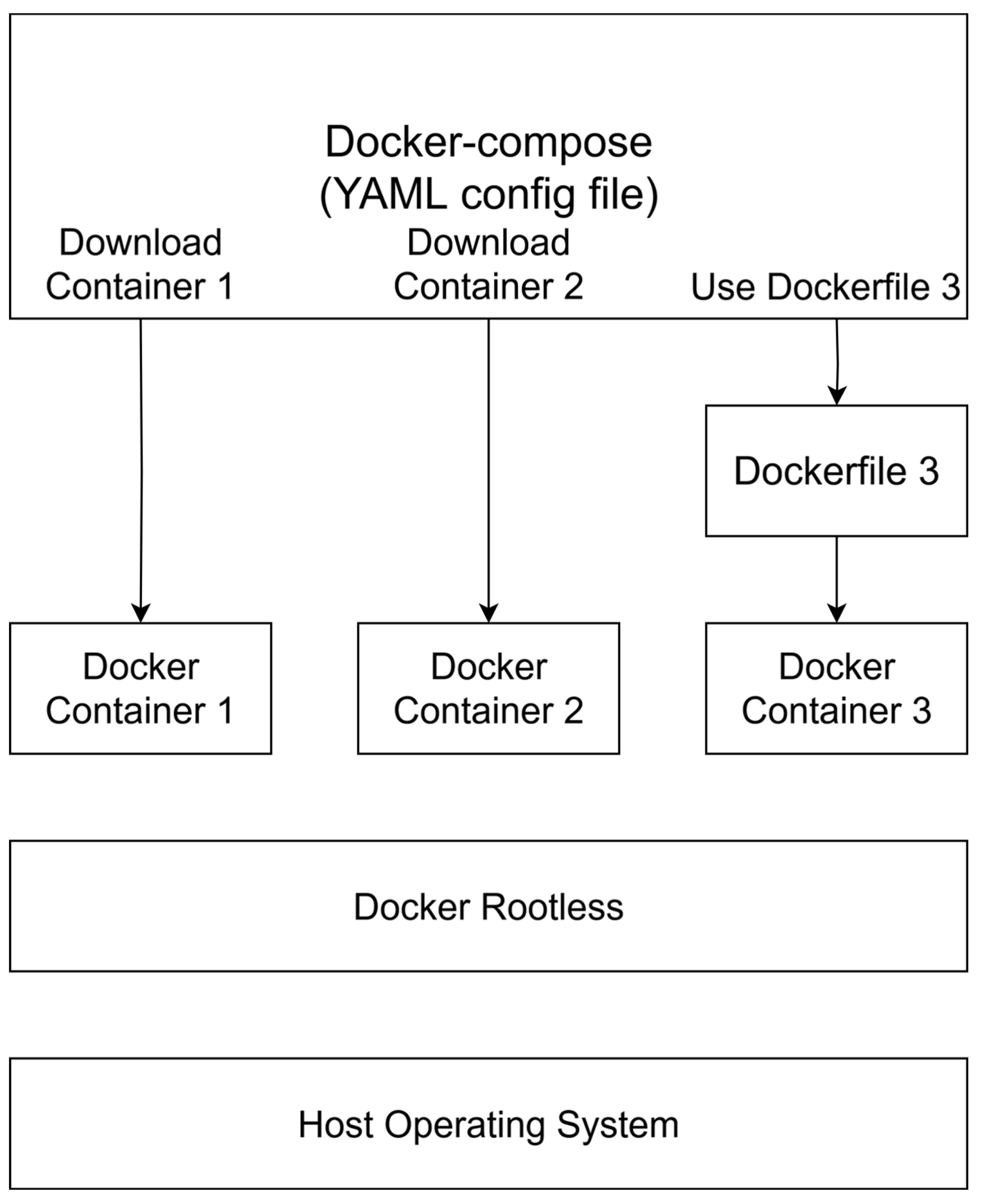

- Cross-platform operability to deploy on a wide range of underlying host operating systems.

- Automated deployments, most often instantiated by running a single script or a few commands.

- Reproducible builds to create fresh environments if older ones get compromised.

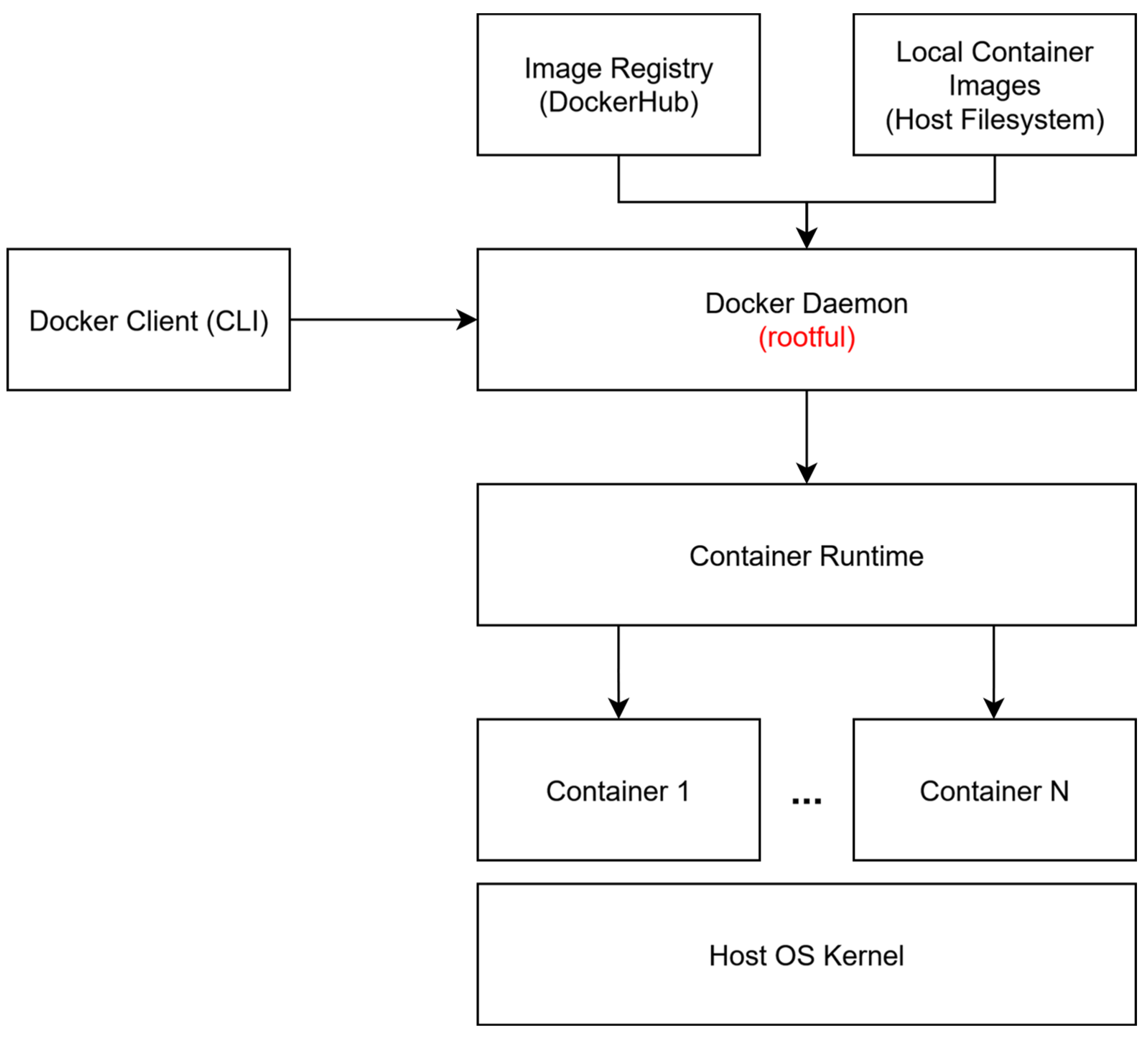

- Enhanced security via isolation of resources to ensure that the attacked web app does not compromise the rest of the infrastructure.

- Docker.

- Podman.

- LXC.

2.3. Logging Aspects

2.4. Correlate the Logs

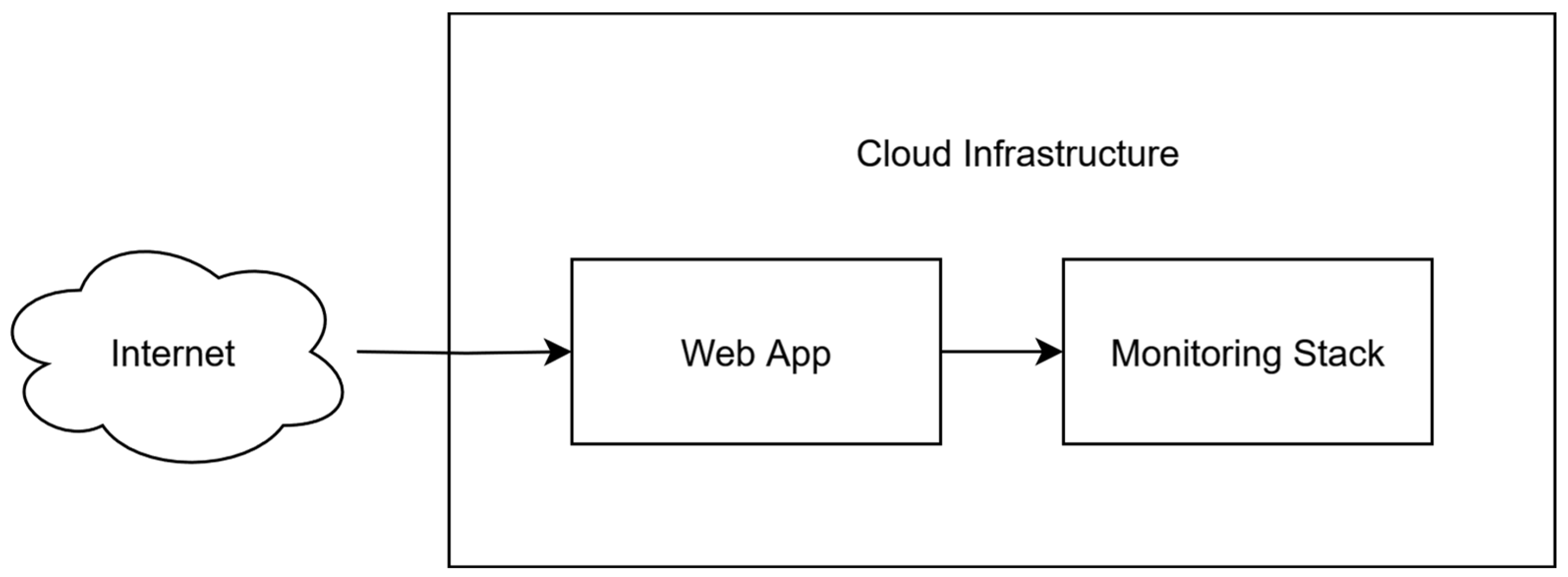

3. Proposed Solution Design

3.1. Deployment Technologies

- The honeypot is reachable from the Internet.

- Attackers will most certainly interact with the honeypot at some point.

- The honeypot will be scanned for vulnerabilities.

- The honeypot might use software that will become vulnerable in the future.

- Reproduce previous builds.

- Update current builds.

- Script the deployment of new builds to be as efficient as possible.

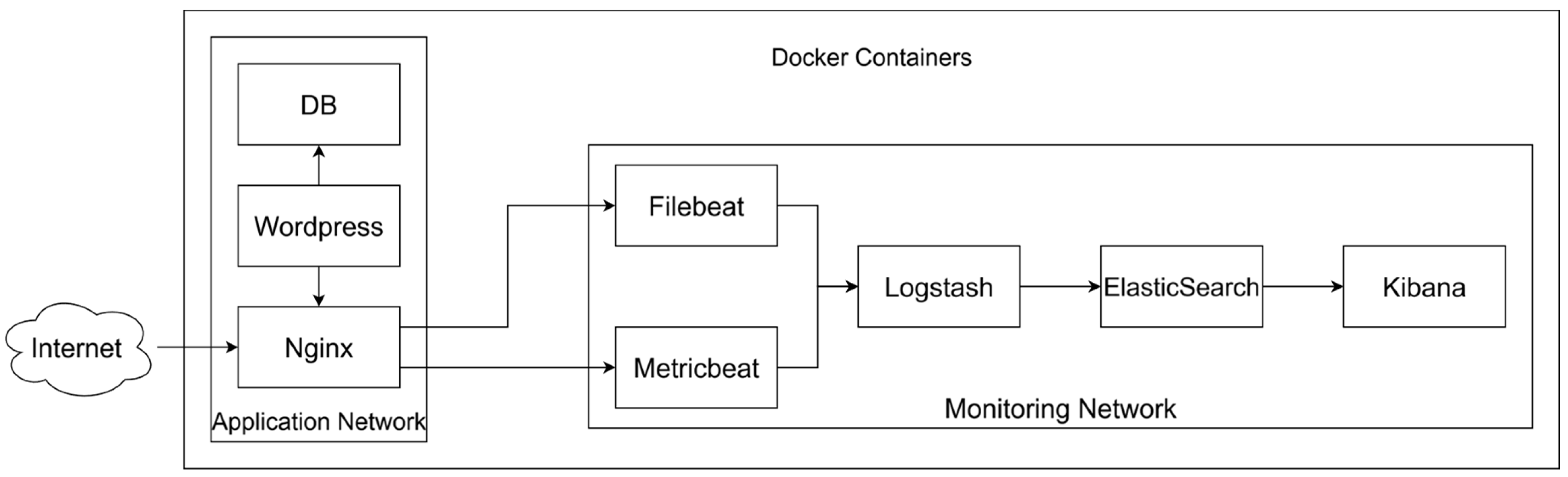

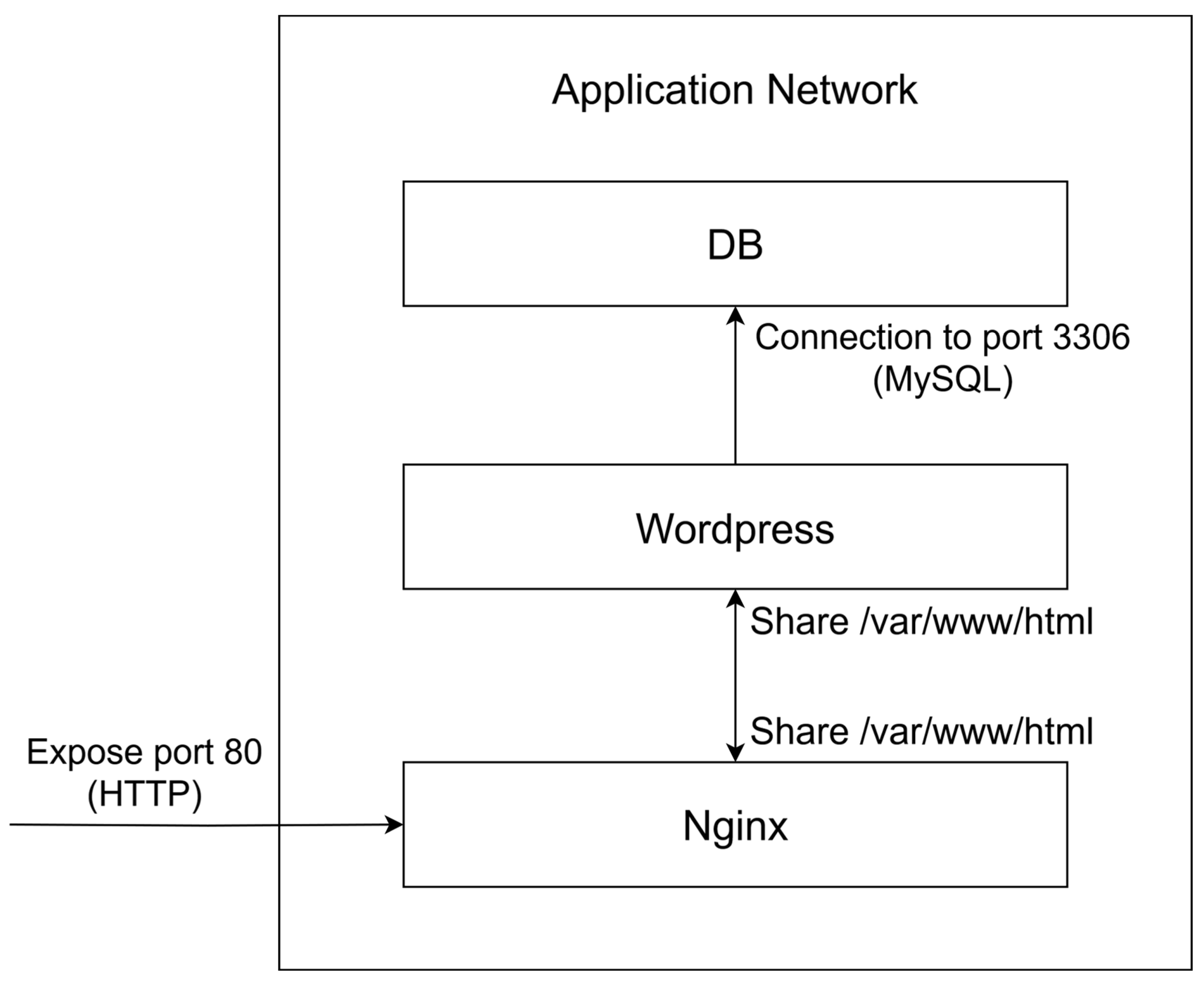

3.2. The Application Network

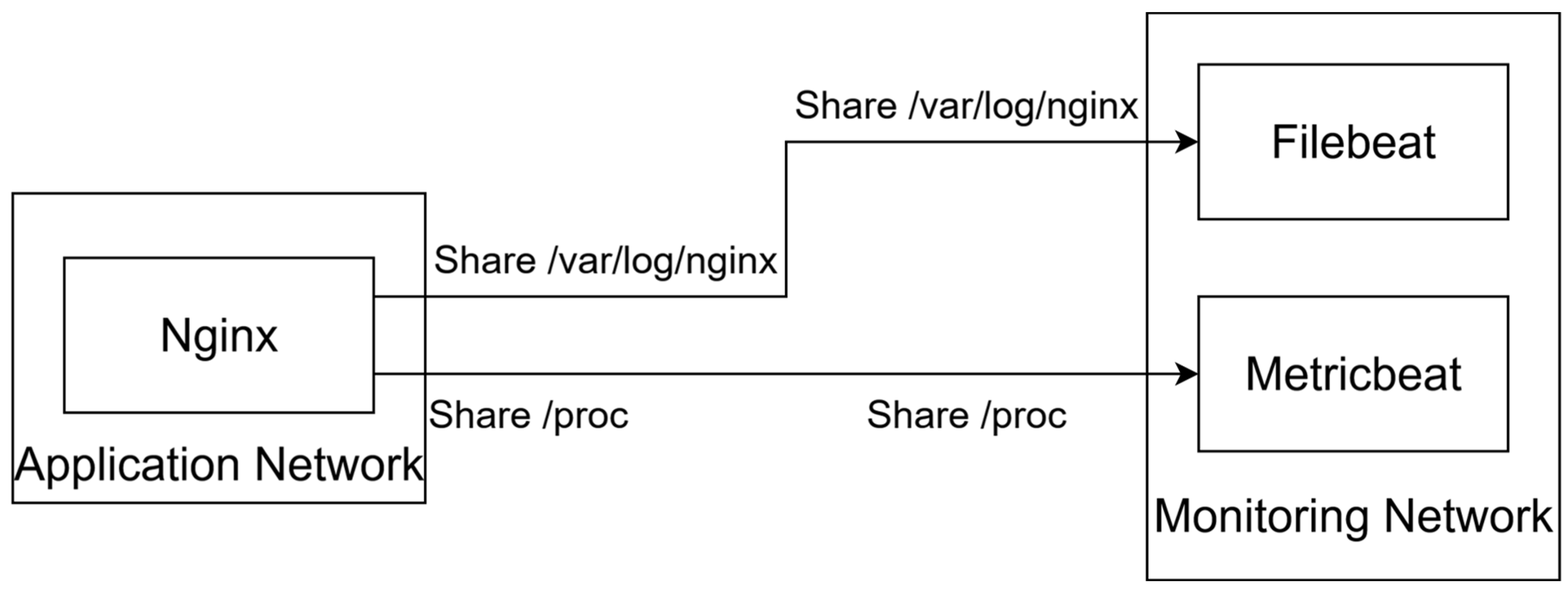

3.3. The Log Shipping Process

- Nginx container and the Filebeat container.

- Host operating system and Metricbeat container.

- /var/log/nginx is shared from the Nginx container to the Filebeat container so that Filebeat can collect the access.log and error.log files and ship them onward.

- /proc is shared from the host operating system to the Metricbeat container so that system metrics can be shipped onward.

3.4. The Monitoring Network

- Logstash: Aggregates logs from various sources (Metricbeat, Filebeat) and sends them to Elasticsearch.

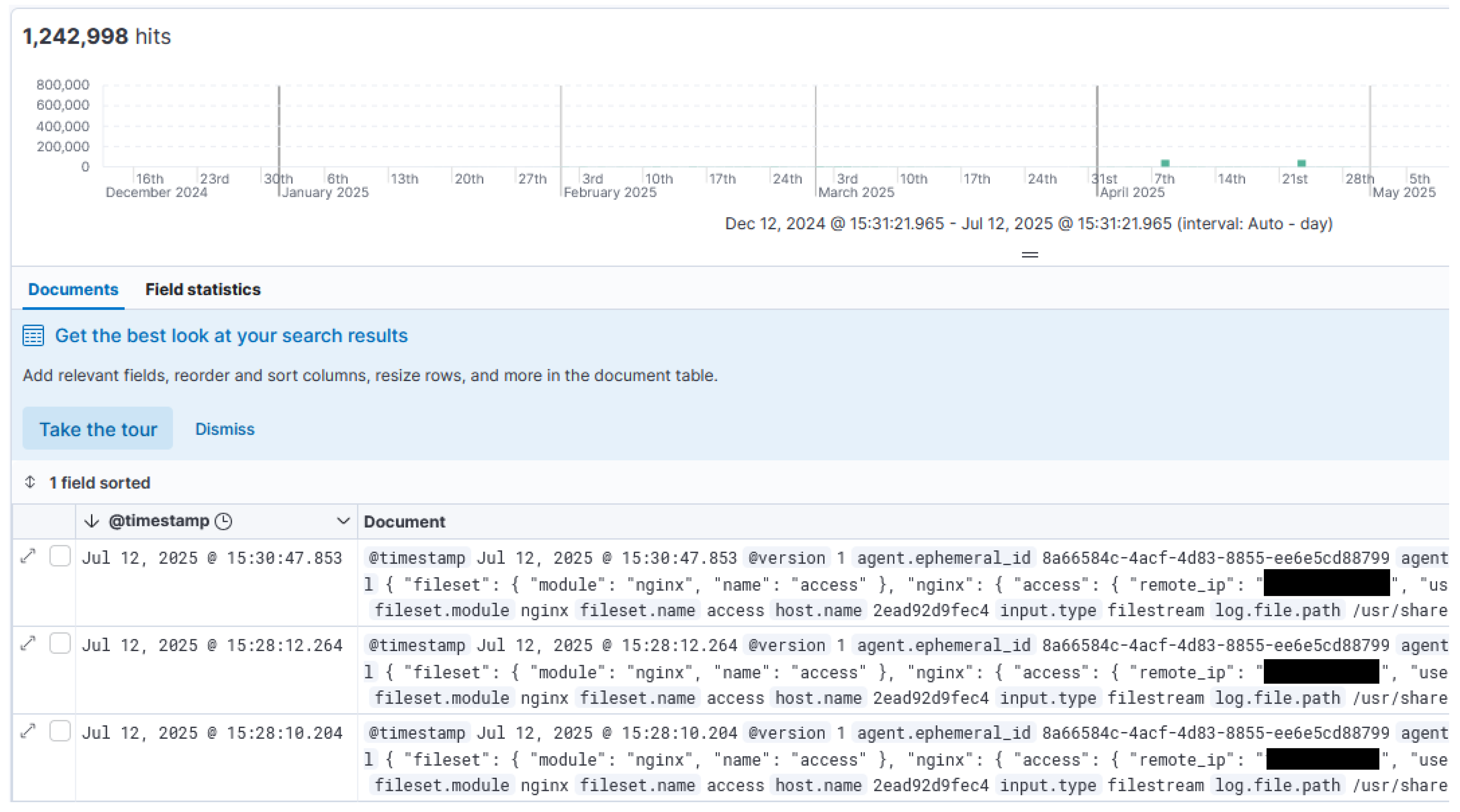

- Elasticsearch: Enables easy exploration and querying of data that is already collected and stored in the database.

- Kibana: Enables easy visualization of data.

- Filter access logs from Nginx.

- Enrich logs (e.g., via geolocation of source IPs).

- Transform the access logs into JSON for better control in Kibana.
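The transformation step listed above can be illustrated outside Logstash. The following is a minimal Python sketch, not the Logstash pipeline used in this work: it assumes the access-log fields have already been extracted into a flat dictionary and nests them under `nginx.access`, reusing key names from the sample document in Appendix A. The function name `to_nested_json` and the flat input keys are hypothetical.

```python
import json


def to_nested_json(flat: dict) -> str:
    """Nest flat access-log fields under nginx.access and serialize to JSON.

    Key names mirror the sample document in Appendix A; the flat input
    layout is an assumption made for this sketch.
    """
    document = {
        "fileset": {"module": "nginx", "name": "access"},
        "nginx": {
            "access": {
                "remote_ip": flat["remote_ip"],
                "user_name": flat.get("user_name", ""),
                "time": flat["time"],
                "method": flat["method"],
                "url": flat["url"],
                "http_protocol": flat["http_protocol"],
                "response_code": flat["response_code"],
                "body_sent": {"bytes": flat["body_bytes"]},
            }
        },
    }
    return json.dumps(document, indent=2)


# Illustrative input only; values follow the sample log line in Section 4.1.
example = {
    "remote_ip": "127.0.0.1",
    "user_name": "Bob",
    "time": "08/Jul/2025:14:45:34 +0000",
    "method": "GET",
    "url": "/",
    "http_protocol": "HTTP/1.1",
    "response_code": "301",
    "body_bytes": "207",
}
print(to_nested_json(example))
```

Nested keys of this kind are what make field paths such as `nginx.access.url` addressable in Kibana queries.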

3.5. Design Advantages

- Network segregation between Application Network and Monitoring Network.

- Filesystem segregation between containers, apart from instances where shared volumes are used for specific resources on purpose.

- Process isolation between main processes (web server, database, etc.) since each container runs processes in separate isolated namespaces.

- Nginx and WordPress.

- Nginx and Filebeat.

- Host operating system and Metricbeat.

- TCP port 80 exposes the web application to the Internet.

- TCP port 5601 (Kibana) is exposed only on the localhost interface of the host operating system, as exposing it to the Internet would be dangerous.

- TCP port 22 exposes the SSH service to the Internet and provides management access to log into the host operating system and interact with the infrastructure; SSH authentication is conducted via public keys and password authentication is disabled, thus increasing security.

4. Implementation Details

- Port 80 exposes a web application and this is the main entry point for attackers.

- Port 22 is secured via Public Key Authentication and provides management access to the underlying Cloud VM where everything is deployed.

- Access to the Kibana UI is achieved by forwarding port 5601 through an SSH tunnel.

- The web application is purposely created to be attacked and even successfully exploited if possible.

- Damage is contained within the internal networks and certain filesystem sandboxes.

- In case of a compromise, the disaster recovery plan can be carried out by running a script.

4.1. Log Analysis

- 127.0.0.1 - Bob [08/Jul/2025:14:45:34 +0000] "GET / HTTP/1.1" 301 207

- 127.0.0.1: Source IP address of the client.

- -: Client identity field; the hyphen indicates that no information about the user identity is available, and the field is usually unreliable.

- Bob: User ID of the client, determined via HTTP authentication; shown as - if not present.

- [08/Jul/2025:14:45:34 +0000]: Timestamp of the request.

- "GET / HTTP/1.1": HTTP request method (GET), request path or endpoint requested by the user (/), and request protocol (HTTP/1.1).

- 301: HTTP Response Code.

- 207: Size, in bytes, of the response returned by the server.
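The field breakdown above maps directly onto a regular expression. The sketch below is an illustration under stated assumptions rather than the parsing used in this work: the pattern covers only the seven fields described above, and the names `LOG_PATTERN` and `parse_access_line` are hypothetical. Lines whose request part does not consist of method, path, and protocol are returned as `None`, which is worth handling explicitly on a honeypot because malformed requests are common there.

```python
import re
from typing import Optional

# Fields: client IP, identity, user ID, [timestamp], "request", status, size.
LOG_PATTERN = re.compile(
    r'(?P<remote_ip>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_access_line(line: str) -> Optional[dict]:
    """Split one access-log line into named fields, or return None."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Convert numeric fields; a "-" size is treated as zero bytes.
    fields["status"] = int(fields["status"])
    fields["size"] = 0 if fields["size"] == "-" else int(fields["size"])
    return fields


sample = '127.0.0.1 - Bob [08/Jul/2025:14:45:34 +0000] "GET / HTTP/1.1" 301 207'
print(parse_access_line(sample))
```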

- HTTP Verb: We mostly looked at GET requests as the main target for identifying Directory Discovery/Directory Brute-Forcing, although POST requests were also analyzed.

- Resource Path: This is the first relevant field for the purpose of our research, as it details the path that the client requests.

- URL Parameters: Both parameters and the value of those parameters are used very frequently to send malicious payloads to the server or even test for certain vulnerabilities.

- Host HTTP Request Header: The host of the request might sometimes be malformed and might contain relevant payloads that need to be inspected. This is especially important if there is no hostname associated with the honeypot and might hint at attempts to discover hostnames if the attacker assumed that the honeypot was actually a reverse proxy.

- User Agent: The user agent might sometimes reveal that the request is issued by a known Internet scanner, in which case we would not inspect it further.

- HTTP Response Code: A 404 response would mean that the client tried to access a non-existing resource and this behavior might qualify as Directory Discovery/Directory Brute-Forcing. Any response in the 4XX class could signal a potentially malicious request that was not fulfilled by the server.

- Source IP: No geoblocking measures were put in place to restrict traffic based on geographical criteria; however, this field was used for statistical purposes.

- WHOIS: The IP would be verified through a WHOIS service to see if there is any information about the ownership of the IP.

- ASN Analysis: The Autonomous System Number containing the IP would be checked to see if the geoip module correctly assigned the country and then the IP ownership details would be cross-checked with those originating from the WHOIS service.

- If the Source IP issued a malicious request, but it is owned by a major cloud provider, then we might not propagate it via threat intelligence sharing.
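The 404-based heuristic for Directory Discovery/Directory Brute-Forcing described above can be sketched as a simple per-IP counter. This is a minimal illustration, not the procedure used in this work: the threshold value, the scanner user-agent marker, and the record keys (`remote_ip`, `method`, `status`, `agent`) are all hypothetical, and the IP addresses in the usage example come from documentation ranges.

```python
from collections import Counter
from typing import Iterable, Set

# Hypothetical placeholder; a real deployment would list known scanner agents.
KNOWN_SCANNER_MARKERS = ("example-scanner",)
# Hypothetical cut-off for the number of 404 responses per source IP.
NOT_FOUND_THRESHOLD = 20


def flag_directory_discovery(
    records: Iterable[dict], threshold: int = NOT_FOUND_THRESHOLD
) -> Set[str]:
    """Return source IPs with at least `threshold` GET requests answered 404."""
    not_found = Counter()
    for record in records:
        agent = record.get("agent", "").lower()
        # Requests from known Internet scanners are not inspected further.
        if any(marker in agent for marker in KNOWN_SCANNER_MARKERS):
            continue
        if record["method"] == "GET" and record["status"] == 404:
            not_found[record["remote_ip"]] += 1
    return {ip for ip, count in not_found.items() if count >= threshold}


records = (
    [{"remote_ip": "192.0.2.10", "method": "GET", "status": 404, "agent": ""}] * 25
    + [{"remote_ip": "198.51.100.7", "method": "GET", "status": 404, "agent": ""}] * 3
    + [{"remote_ip": "203.0.113.5", "method": "GET", "status": 404,
        "agent": "Example-Scanner/1.0"}] * 30
)
print(flag_directory_discovery(records))
```

Flagged addresses would still go through the WHOIS and ASN checks listed above before any decision about threat intelligence sharing.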

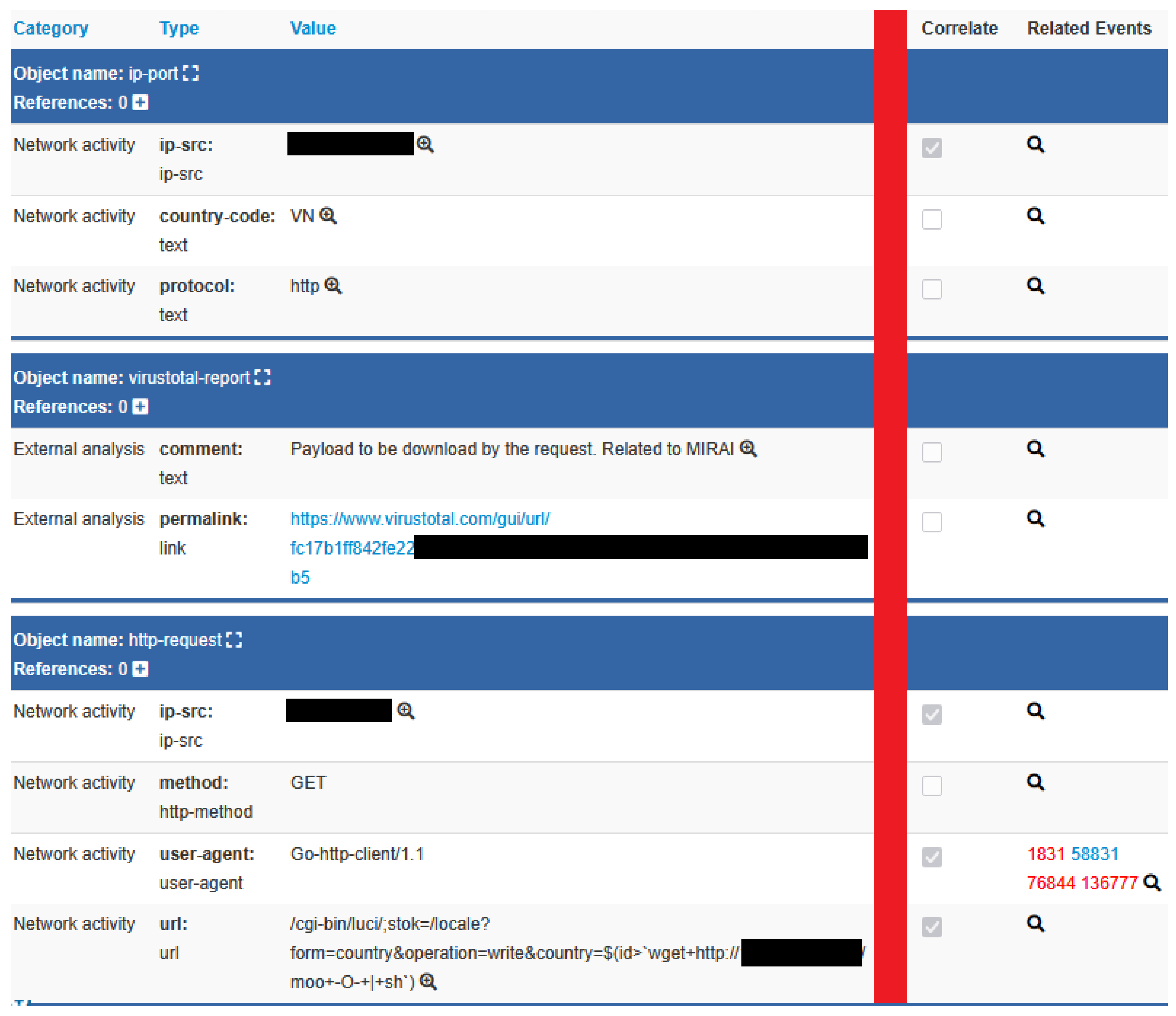

4.2. Threat Intelligence Sharing

5. Testing and Evaluation

5.1. Experimental Setup

5.2. Research Results

- `nginx.access.url.keyword : *..* or nginx.access.url.keyword : *%2e%2e* or nginx.access.url.keyword : *%252e%252e* or nginx.access.url.keyword : *%u002e%u002e*`

- `nginx.access.url.keyword : *curl* or nginx.access.url.keyword : *wget* or nginx.access.url.keyword : *certutil* or nginx.access.url.keyword : *whoami* or nginx.access.url.keyword : *hostname* or nginx.access.url.keyword : *sysinfo* or nginx.access.url.keyword : *chmod* or nginx.access.url.keyword : *php\://*`
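The same substring matching can be reproduced offline on exported URLs. The sketch below is an assumption-laden illustration, not part of the deployed infrastructure: the marker lists are copied from the queries above, while the function name `classify_url` and the labels `path_traversal` and `command_injection` are hypothetical names chosen to match the attack classes in the results table. The sketch lowercases the URL before matching, which is a choice made here and may match more requests than the dashboard queries do.

```python
from typing import Set

# Substrings taken from the dashboard queries above.
PATH_TRAVERSAL_MARKERS = ("..", "%2e%2e", "%252e%252e", "%u002e%u002e")
COMMAND_MARKERS = (
    "curl", "wget", "certutil", "whoami",
    "hostname", "sysinfo", "chmod", "php://",
)


def classify_url(url: str) -> Set[str]:
    """Return the (hypothetical) attack-class labels whose markers appear in url."""
    lowered = url.lower()
    labels = set()
    if any(marker in lowered for marker in PATH_TRAVERSAL_MARKERS):
        labels.add("path_traversal")
    if any(marker in lowered for marker in COMMAND_MARKERS):
        labels.add("command_injection")
    return labels


for candidate in (
    "/cgi-bin/%2E%2E/%2E%2E/etc/passwd",
    "/?cmd=wget+http://example.invalid/x",
    "/index.php",
):
    print(candidate, sorted(classify_url(candidate)))
```

Plain substring matching of this kind produces false positives (for example, a legitimate path that happens to contain `hostname`), so matches are candidates for manual review rather than confirmed attacks.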

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

    {
      "fileset": {
        "module": "nginx",
        "name": "access"
      },
      "nginx": {
        "access": {
          "remote_ip": "<redacted>",
          "user_name": "",
          "time": "08/Jul/2025:14:45:34 +0000",
          "method": "GET",
          "host": "<redacted>",
          "url": "/",
          "http_protocol": "HTTP/1.1",
          "response_code": "301",
          "body_sent": {
            "bytes": "5"
          },
          "referrer": "",
          "agent": ""
        },
        "request": "GET / HTTP/1.1",
        "connection": "506126",
        "pipe": ".",
        "connection_requests": "1",
        "time": {
          "iso8601": "2025-07-08T14:45:34+00:00",
          "msec": "1751985934.778",
          "request": "0.008"
        },
        "bytes": {
          "request_length": "43",
          "body_sent": "5",
          "sent": "277"
        },
        "http": {
          "x_forwarded_for": "",
          "x_forwarded_proto": "",
          "x_real_ip": "",
          "x_scheme": ""
        },
        "upstream": {
          "addr": "<redacted>:9000",
          "status": "301",
          "response_time": "0.007",
          "connect_time": "0.000",
          "header_time": "0.007"
        }
      }
    }

| Criteria / Candidate | DShield | SNARE | OpenCanary | T-Pot |
|---|---|---|---|---|
| Services | SSH, Telnet, HTTP | HTTP | 10+ services | 10+ honeypots |
| Single HTTP Technology | YES | YES | YES | NO |
| Certain vulnerabilities | NO | NO | YES | NO |
| Loosely coupled | NO | YES | NO | YES |

| Attack Classes | Supported/Only Queries Needed | Extra Implementation Needed |
|---|---|---|
| Remote Command Execution | Supported | NO |
| Directory Discovery | Supported | NO |
| Targeted Vulnerability Scanning | Supported | NO |
| SQL Injection | Only Queries Needed | NO |
| Cross-Site Scripting | Only Queries Needed | NO |
| Server-Side Request Forgery | Only Queries Needed | NO |
| Template Injection | Only Queries Needed | NO |
| HTTP/2 | *Only Queries Needed | YES |
| Deserialization | Not Supported | YES |
| POST Body Payloads | Not Supported | YES |
| WebSocket Anomalies | Not Supported | YES |

| Country | % Requests |
|---|---|
| US | 55.04% |
| CA | 10.35% |
| Others | 9.86% |
| GB | 9.47% |
| RU | 9% |
| DE | 6.26% |

| Attack Class | % of Total Requests |
|---|---|
| Content Discovery | 35.85% |
| Path Traversal | 0.427% |
| Command Injection | 0.0926% |

| Honeypot | Queries via Dashboard | Attack Class Assignation |
|---|---|---|
| Our Research | YES | Via Queries |
| DShield | YES | Via Queries |
| SNARE (via TANNER) | NO | Automatic Via Scripts |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Visalom, R.-M.; Mihăilescu, M.-E.; Rughiniș, R.; Țurcanu, D. Intercepting and Monitoring Potentially Malicious Payloads with Web Honeypots. Future Internet 2025, 17, 422. https://doi.org/10.3390/fi17090422
