Intelligent Edge Computing and Machine Learning: A Survey of Optimization and Applications

Abstract

1. Introduction

2. Machine Learning Background

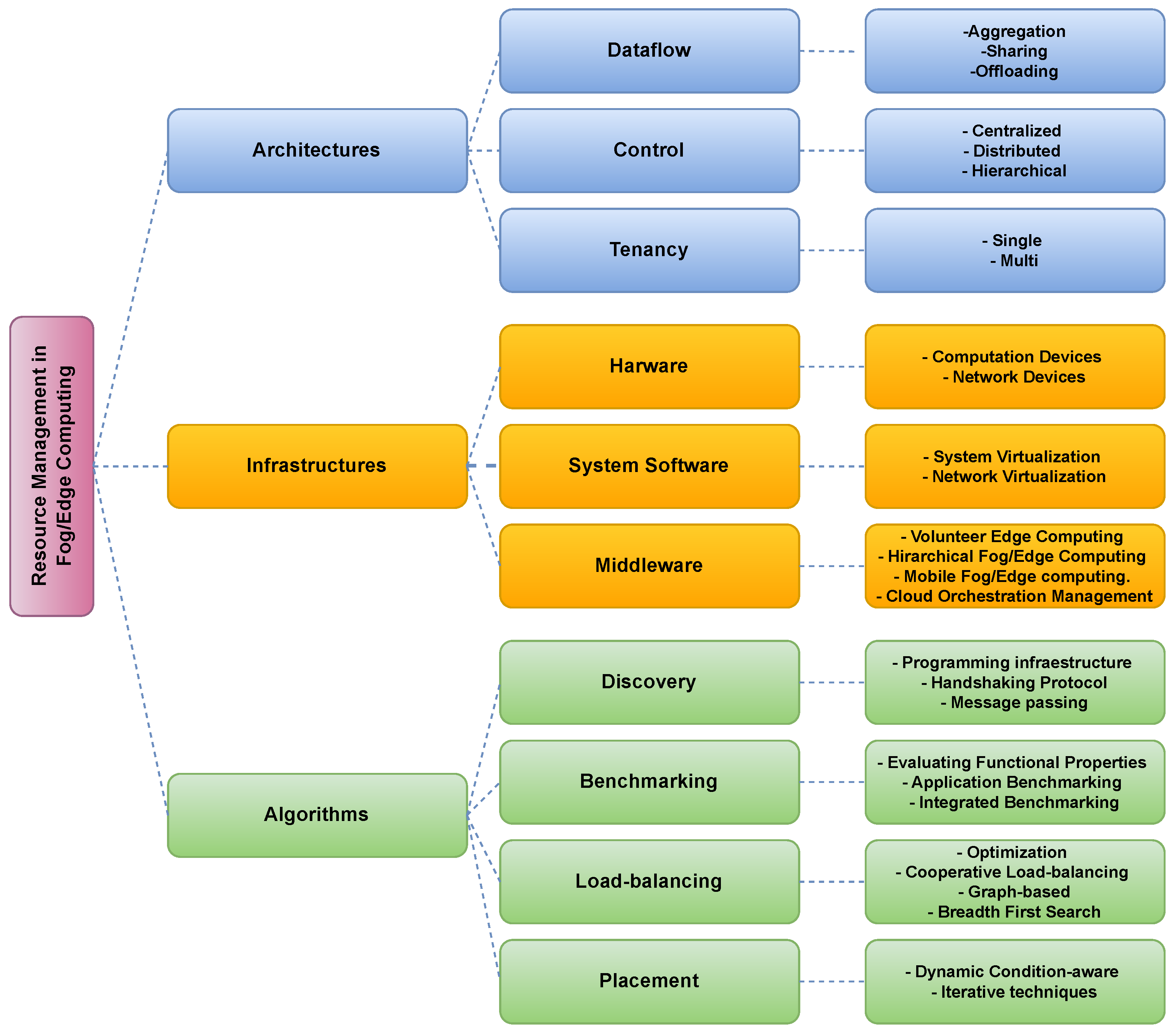

2.1. Intelligent Model Optimization at the Edge

2.1.1. Intelligent Pruning Techniques

2.1.2. Intelligent Quantization for Network Applications

- Post-training quantization: This approach reduces the precision of weights and activations after model training. Reported precision levels include 8-bit variants [90], 4-bit variants [91,92], 2-bit quantization [93], and 1-bit quantization (BitNet variants) [13,28]; the 1-bit variants replace matrix multiplication with integer addition. Although simple to apply at deployment time, this method can reduce accuracy. It takes two forms:

  - Quantizing only weights;

  - Quantizing both weights and activations [94].

- Quantization-aware training: This applies quantization during model training and typically preserves accuracy better than post-training quantization. The technique inserts simulated quantization operations into training, using automated tools from the TensorFlow and PyTorch libraries [89].
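As a minimal sketch of the weights-only post-training case, the snippet below applies symmetric per-tensor 8-bit quantization to a short list of weights and dequantizes them again to expose the rounding error. The helper names, the symmetric single-scale scheme, and the example values are illustrative assumptions, not the procedure of any cited work or of the TensorFlow or PyTorch tooling.

```python
def quantize_symmetric(weights, num_bits=8):
    """Map floats to signed integers with one shared scale (hypothetical helper).

    Assumes num_bits >= 2; 1-bit schemes need a different formulation.
    """
    qmax = 2 ** (num_bits - 1) - 1              # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from integer levels."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.03, 0.88]             # made-up example values
q, scale = quantize_symmetric(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("integer levels:", q)
print("scale:", scale, "max abs error:", max_error)
```

With round-to-nearest, the per-weight error is bounded by half the scale, which is why accuracy loss grows as the bit width shrinks and the scale becomes coarser.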

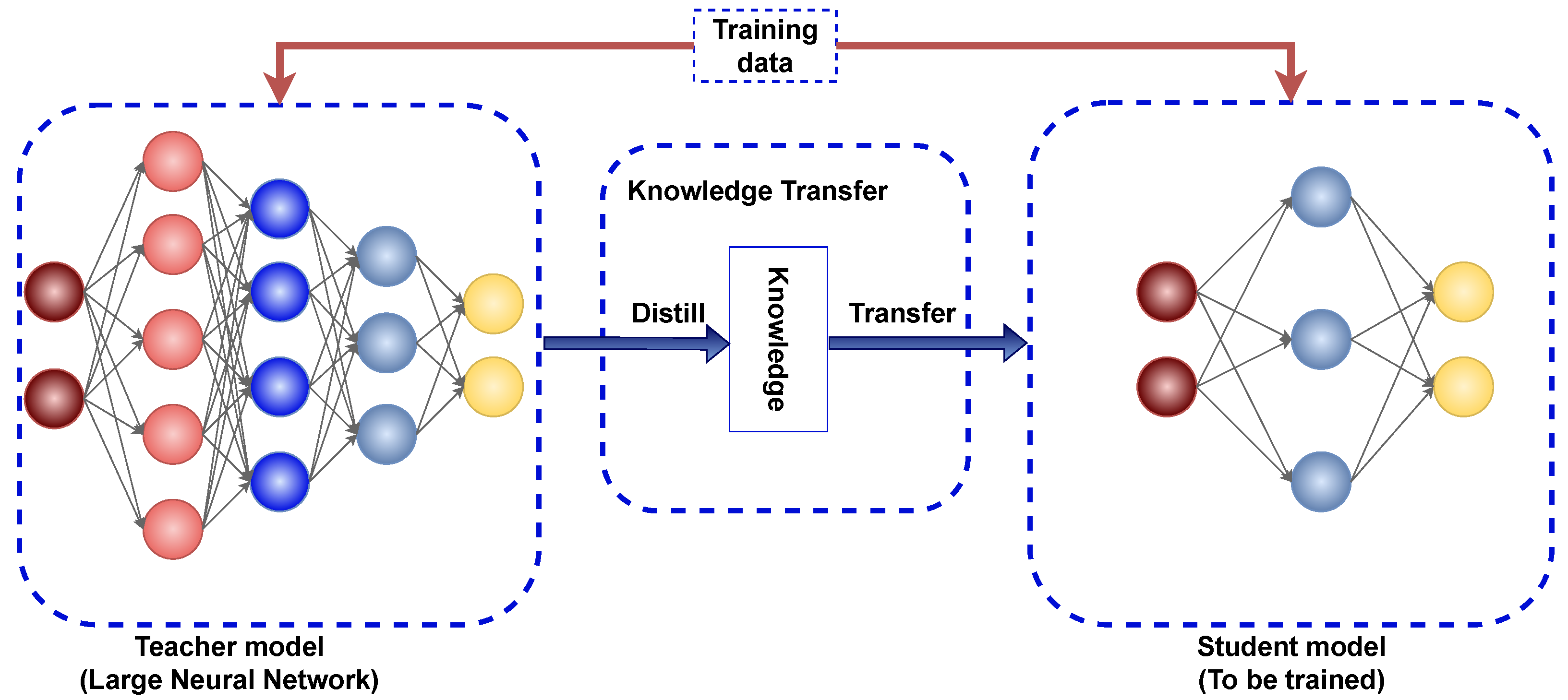

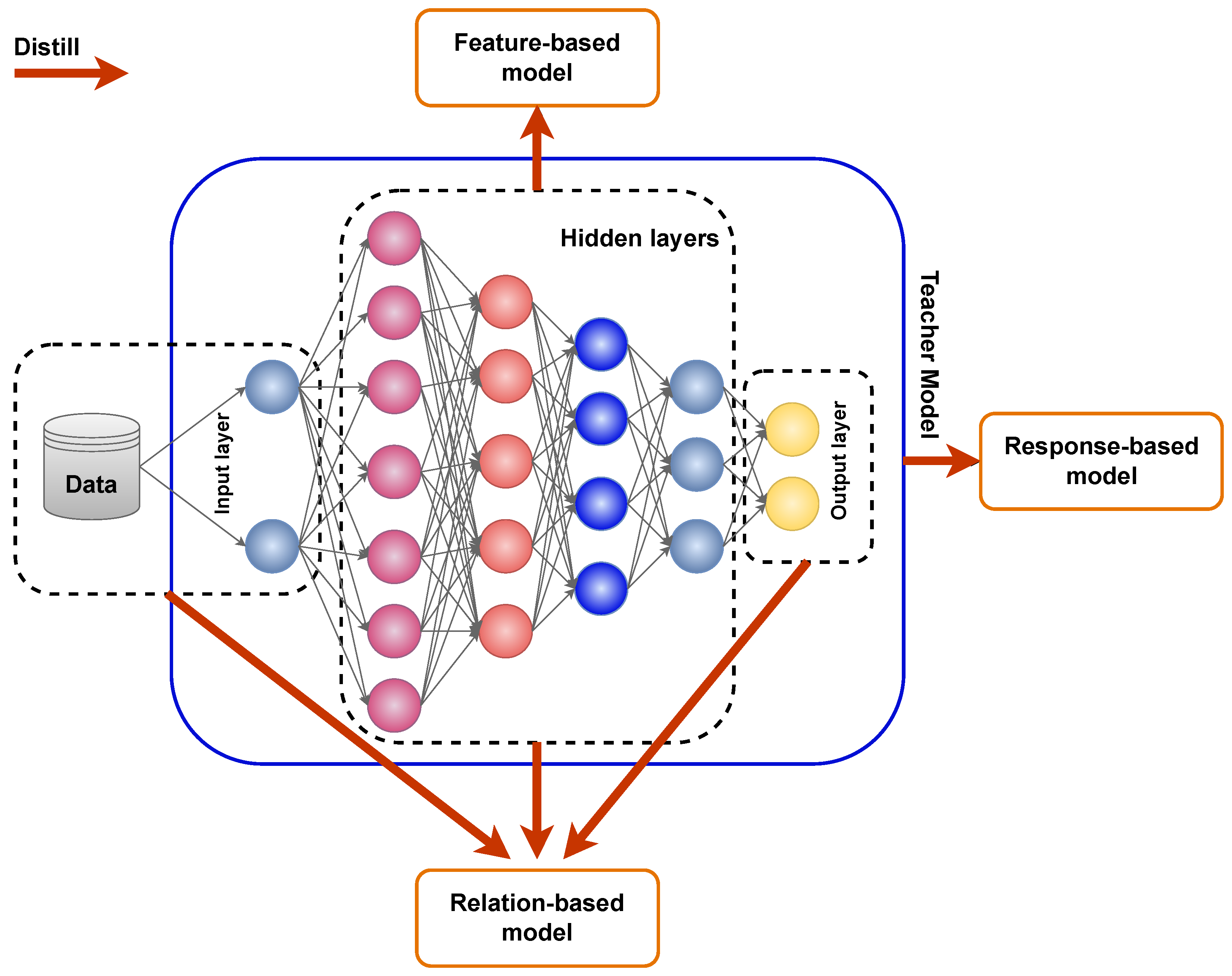

2.1.3. Knowledge Distillation for Intelligent Edge Networks

- Response-based knowledge: The student model learns from the teacher's predictions; the distillation loss penalizes differences between teacher and student logits [95].

- Feature-based knowledge: The student is trained to reduce discrepancies between its intermediate-layer features and those of the teacher, so that it emulates the teacher's neuron activations [96].

- Relational knowledge: The student learns relationships among feature maps, for example through similarity matrices, capturing feature correlations across multiple representations [97].
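The response-based case can be illustrated with a small, self-contained sketch. It assumes the common temperature-softened formulation, in which the loss is the KL divergence between teacher and student output distributions scaled by the squared temperature; the logits, the temperature value, and the function names are illustrative assumptions rather than the setup of the cited works.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.2]          # made-up logits for three classes
close_student = [3.8, 1.1, 0.3]
far_student = [0.5, 3.0, 1.0]

print("close student loss:", distillation_loss(teacher, close_student))
print("far student loss:  ", distillation_loss(teacher, far_student))
```

A student whose logits track the teacher's yields a loss near zero, while a student that ranks the classes differently is penalized more heavily.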

2.1.4. Low-Rank Decomposition Methods for Intelligent Networks

- QLoRA [26] stores the frozen pre-trained weights in a 4-bit quantized format and trains LoRA adapters on top of them, dequantizing the weights to a higher-precision data type for computation. This significantly reduces memory usage for intelligent edge networks while maintaining training effectiveness.

- QA-LoRA [25] combines quantization and fine-tuning of LoRA parameters, balancing adapter and quantization parameters through group-wise operators for distributed network optimization.

- DoRA [27] enhances LoRA by decomposing pre-trained weights into magnitude and direction components, focusing on directional adaptation to improve scalability and learning capacity while reducing training overhead for intelligent network applications.
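To make the low-rank idea behind these variants concrete, the sketch below counts the trainable parameters of a rank-r update B·A against a full d×k weight matrix, applies such an update to a tiny matrix, and splits a matrix into per-column magnitudes and unit-norm directions in the spirit of DoRA. The matrix sizes, the rank, and all helper names are illustrative assumptions; this is not the reference implementation of any of the cited methods.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_trainable_params(d, k, r):
    """Parameters in B (d x r) plus A (r x k)."""
    return r * (d + k)


def lora_effective_weight(w, b, a):
    """Frozen weight W plus the low-rank update B @ A."""
    delta = matmul(b, a)
    return [[wij + dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def magnitude_direction(w):
    """Split W into per-column magnitudes and unit-norm column directions.

    Assumes no column of W is all zeros.
    """
    cols = list(zip(*w))
    magnitudes = [math.sqrt(sum(v * v for v in col)) for col in cols]
    direction = [[w[i][j] / magnitudes[j] for j in range(len(cols))]
                 for i in range(len(w))]
    return magnitudes, direction


full = 4096 * 4096
low_rank = lora_trainable_params(4096, 4096, r=8)
print(full, low_rank, full // low_rank)       # 16777216 65536 256

w = [[3.0, 0.0], [4.0, 2.0]]
b = [[1.0], [0.0]]                            # d x r with r = 1
a = [[0.5, 0.5]]                              # r x k
print(lora_effective_weight(w, b, a))         # [[3.5, 0.5], [4.0, 2.0]]

magnitudes, direction = magnitude_direction(w)
print(magnitudes)                             # [5.0, 2.0]
```

For a 4096×4096 layer, a rank-8 update trains 256 times fewer parameters than full fine-tuning, which is the source of the memory savings these methods build on.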

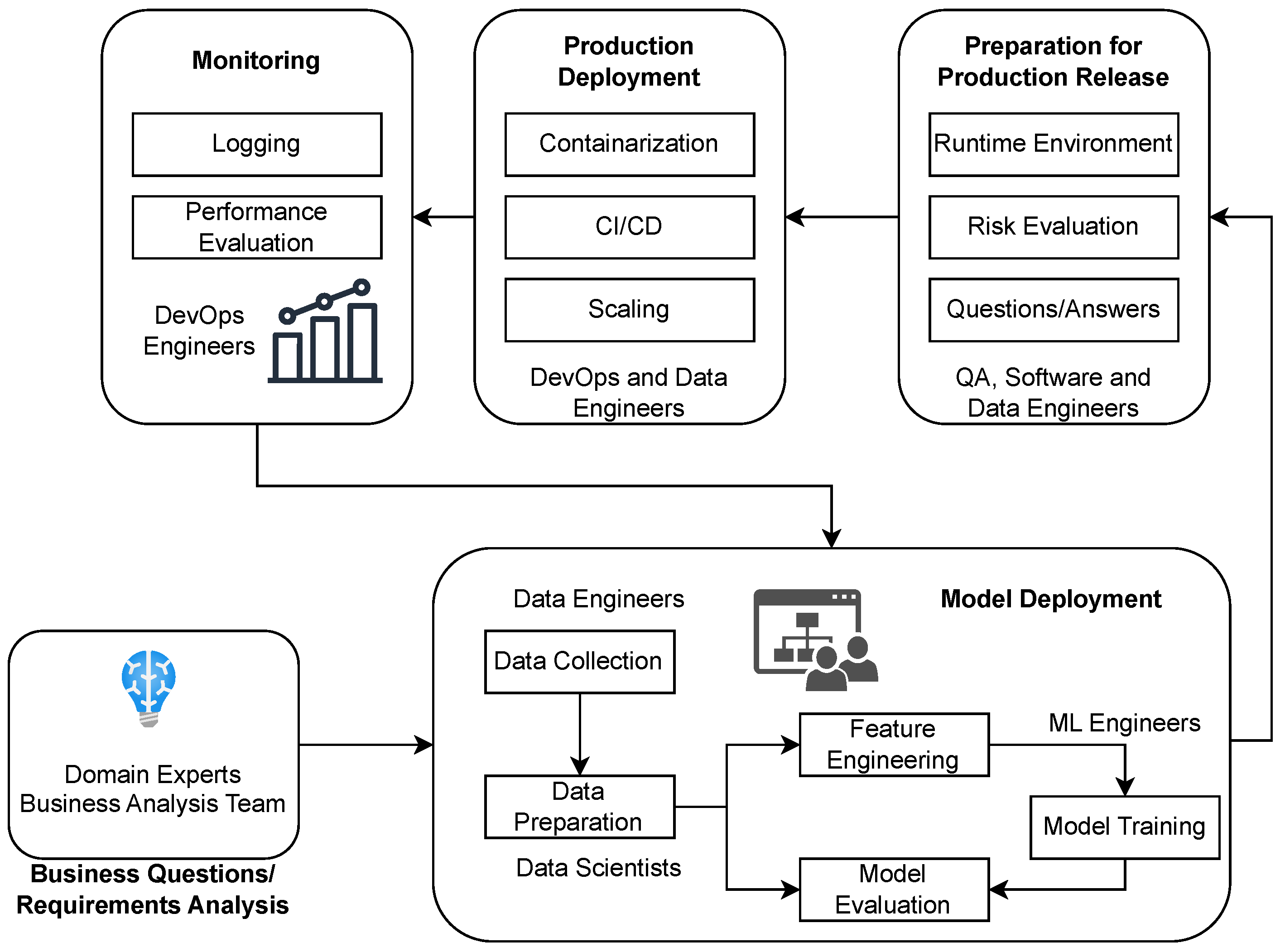

2.2. Intelligent MLOps at the Edge

2.2.1. Intelligent MLOps Pillars and Goals

- Intelligent Model Deployment and Experimentation: Simplifies model creation and deployment by optimizing data procedures and verifying that intelligent models function as intended in real-world network environments.

- Intelligent Model Monitoring: Monitors model performance across various network situations, recognizing data drift and limiting risks associated with incorrect predictions in distributed environments.

- Intelligent Production Deployment: Automates critical operations including model upgrades, troubleshooting, approval, updates, and scalability for seamless integration into operational network settings.

- Preparation for Intelligent Production Release: Includes version control, automated documentation, update tracking, and risk assessment, ensuring seamless model releases in network environments.
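The monitoring pillar can be illustrated with a deliberately simple drift check. The sketch flags drift when the mean of a recent window of a feature departs from a reference window by more than a chosen number of standard errors; the rule, the threshold, and the sample values are assumptions made for illustration, and production monitoring tools typically rely on richer statistical tests.

```python
import statistics


def mean_shift_drift(reference, recent, threshold=3.0):
    """Return (drift_flag, z_score) for a mean shift between two windows.

    Assumes the reference window has non-zero variance.
    """
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.stdev(reference)
    std_error = ref_std / len(recent) ** 0.5
    z = abs(statistics.fmean(recent) - ref_mean) / std_error
    return z > threshold, z


# Made-up sensor readings: a reference window and two recent windows.
reference = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.3, 9.7]
stable = [10.1, 9.9, 10.0, 10.2]
shifted = [11.5, 11.8, 11.6, 11.9]

print("stable window: ", mean_shift_drift(reference, stable))
print("shifted window:", mean_shift_drift(reference, shifted))
```

A check of this kind could run on the edge node itself and trigger a retraining or rollback step in the deployment pipeline when the flag is raised.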

2.2.2. Intelligent MLOps Tools for Network Applications

2.3. Intelligent AI Degradation and Data Drifts in Network Environments

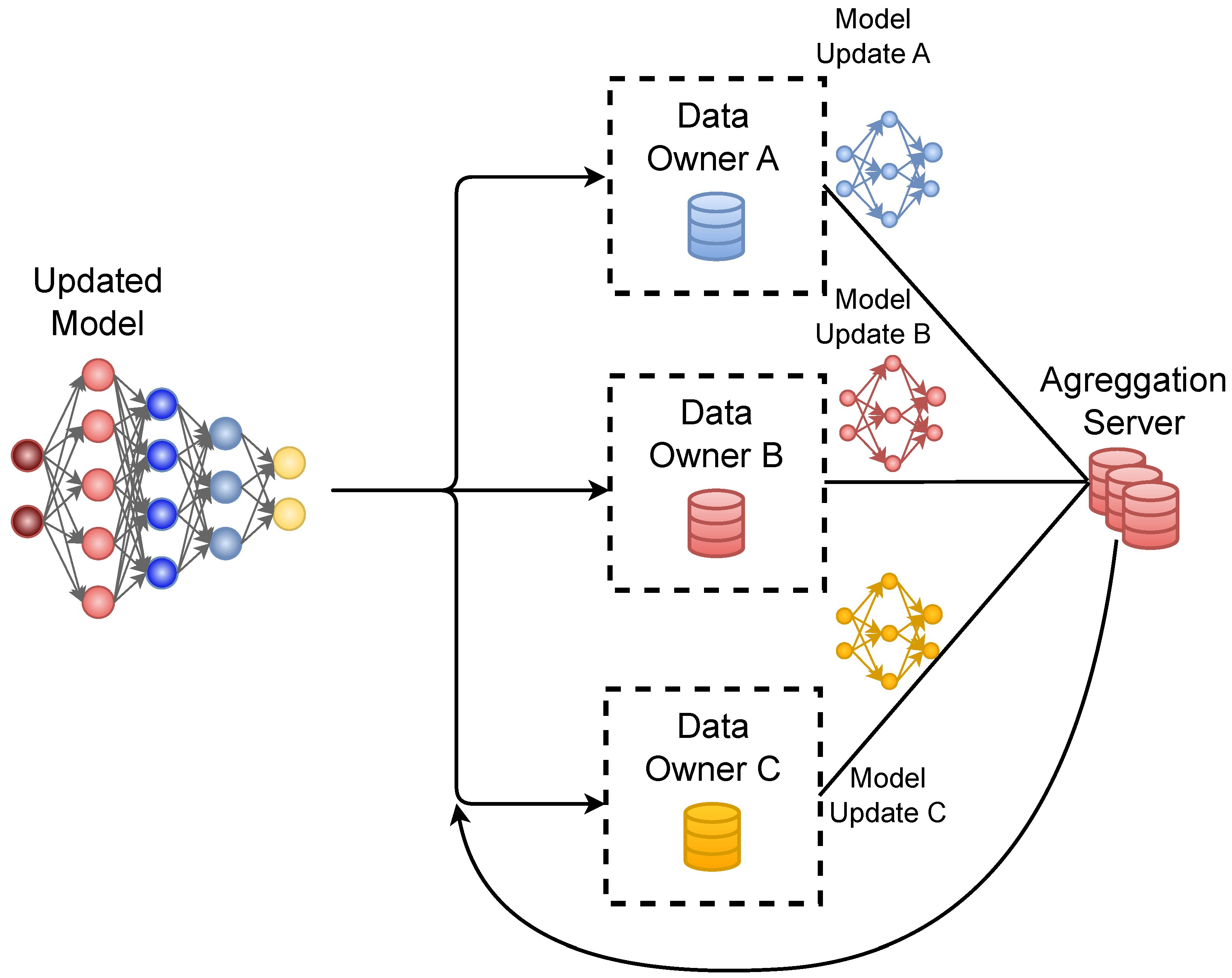

2.4. Intelligent Federated Learning at the Edge

- Horizontal-only frameworks: User-friendly APIs like Flower and FLUTE [136] emphasize simplicity for intelligent network applications.
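In the horizontal setting, clients hold different samples over the same feature space, and a server aggregates their locally trained parameters. The sketch below shows the sample-weighted averaging step of federated averaging [17] on plain Python lists. It does not use the Flower or FLUTE APIs; the function name and the toy client data are assumptions for illustration.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local sample counts."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Three hypothetical clients, each with a two-parameter model.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [10, 30, 60]                 # local training-set sizes

global_weights = federated_average(clients, sizes)
print(global_weights)                # [4.0, 5.0]
```

Clients with more local data pull the global model further toward their parameters, which is the main design choice separating this scheme from an unweighted mean.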

2.5. Performance Evaluation Metrics for Intelligent Edge AI

- Computational metrics form the foundation for evaluating edge AI performance. Latency is measured as the time from input to output completion, mathematically expressed as $L = t_{\text{output}} - t_{\text{input}}$, where processing delays are critical for real-time applications. Throughput quantifies system capacity as the number of inference operations completed per unit time: $\text{Throughput} = N_{\text{inferences}} / T_{\text{total}}$. Inference time specifically measures the duration required for model prediction on input data [143,144].

- Resource utilization metrics assess system efficiency through energy consumption measurement, typically expressed as energy per inference operation $E_{\text{inference}} = P_{\text{avg}} \times t_{\text{inference}}$, where $P_{\text{avg}}$ represents average power consumption and $t_{\text{inference}}$ the inference time [145,146]. Memory utilization is quantified as $U_{\text{mem}} = (M_{\text{used}} / M_{\text{total}}) \times 100\%$, while CPU and GPU utilization percentages indicate processing resource efficiency [147,148].

- Model quality metrics ensure intelligent edge systems maintain acceptable accuracy levels. These metrics are highly dependent on the task performed by the AI models. For classification tasks, for instance, they include $\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$, $\text{Precision} = \frac{TP}{TP + FP}$, $\text{Recall} = \frac{TP}{TP + FN}$, and $\text{F1} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$, where TP, TN, FP, and FN represent true positives, true negatives, false positives, and false negatives, respectively [3,6].

- System-level metrics evaluate operational characteristics, including availability measured as $\text{Availability} = \frac{MTBF}{MTBF + MTTR}$, where MTBF is mean time between failures and MTTR is mean time to repair. Scalability metrics assess system performance under varying loads, while reliability quantifies system stability over extended operation periods [37].

- Standardized evaluation frameworks provide consistent benchmarking approaches. MLPerf, for instance, serves as an industry-standard benchmark suite for measuring AI inference performance across diverse hardware platforms, and supports edge-specific benchmarks including MLPerf Inference Edge and MLPerf Mobile for comprehensive system evaluation [141,149]. These frameworks enable fair comparison across different edge AI implementations while supporting reproducible research and development efforts in intelligent edge computing environments.
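The metrics in this list can be computed with a few lines of Python. The sketch below assumes the standard definitions: latency as elapsed time per call, throughput as completed inferences per second, energy as average power times inference time, the usual confusion-matrix ratios, and availability as MTBF / (MTBF + MTTR). The function names, the stand-in model, and all numeric inputs are hypothetical.

```python
import time


def measure_latency_and_throughput(infer, inputs):
    """Return (mean latency in seconds, inferences per second)."""
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        infer(x)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    return sum(latencies) / len(latencies), len(inputs) / elapsed


def energy_per_inference(avg_power_watts, inference_seconds):
    """Energy in joules: average power multiplied by inference time."""
    return avg_power_watts * inference_seconds


def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def availability(mtbf_hours, mttr_hours):
    """Fraction of time the system is operational."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def fake_model(x):
    """Stand-in for a real inference call."""
    return sum(range(1000)) + x


latency, throughput = measure_latency_and_throughput(fake_model, list(range(50)))
print("mean latency (s):", latency, "throughput (inf/s):", throughput)
print(classification_metrics(tp=40, tn=45, fp=5, fn=10))
print("availability:", availability(990.0, 10.0))            # 0.99
print("energy per inference (J):", energy_per_inference(5.0, 0.02))
```

On real hardware, the average power figure would come from a power meter or on-board sensor rather than a constant, and latency would usually be reported as percentiles in addition to the mean.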

3. Intelligent Edge ML Use Cases and Application Domains for Next-Generation Networks

| Domain | Key Characteristics | Requirements | Main Challenges |
|---|---|---|---|
| Agriculture | Precision farming, crop tracking, weather prediction | Low power/wide coverage, weather resistance, real-time data | Rural connectivity, harsh conditions, cost |
| Energy | Smart grid, predictive maintenance, load balancing | High reliability, real-time decisions, system integration | Safety, regulatory compliance, scalability |
| Healthcare | Patient monitoring, diagnostics, wearables, emergency response | Ultra-low latency, high accuracy, privacy | Data privacy, life-critical accuracy, device size |
| Manufacturing | Quality control, predictive maintenance, robotics, supply chain | Real-time processing, high precision, system integration | Harsh environments, legacy systems, minimal downtime |
| Transportation | Autonomous vehicles, traffic management, fleet optimization | Ultra-low latency, high reliability/safety, real-time coordination | Safety, regulatory approval, infrastructure integration |
| Retail | Inventory, analytics, recommendations, checkout | Customer privacy, real-time analytics, scalability, cost | Privacy concerns, behavior patterns, POS integration |
| Smart Cities | Traffic/environmental monitoring, public safety | Wide area deployment, interoperability, scalability | Infrastructure complexity, data integration, public acceptance |
| Finance | Fraud detection, trading, risk assessment, automation | Ultra-low latency, high security, real-time processing | Regulatory demands, security threats, high-frequency decisions |

3.1. Intelligent Energy Management for Network Applications

3.2. Intelligent Smart Agriculture in Network Environments

3.3. Intelligent Smart Cities for Next-Generation Networks

- Quantity and quality of available data for intelligent city networks;

- Low-latency requirements for city edge nodes, varying by the specific functions required for each network application [37].

3.4. Intelligent Healthcare Networks

3.5. Intelligent Smart Industry

3.6. Intelligent Internet of Vehicles

3.7. Intelligent Smart Environment

3.8. Intelligent Operating Systems for Network Applications

4. State-of-the-Art Intelligent Edge ML Solutions for Next-Generation Network Applications

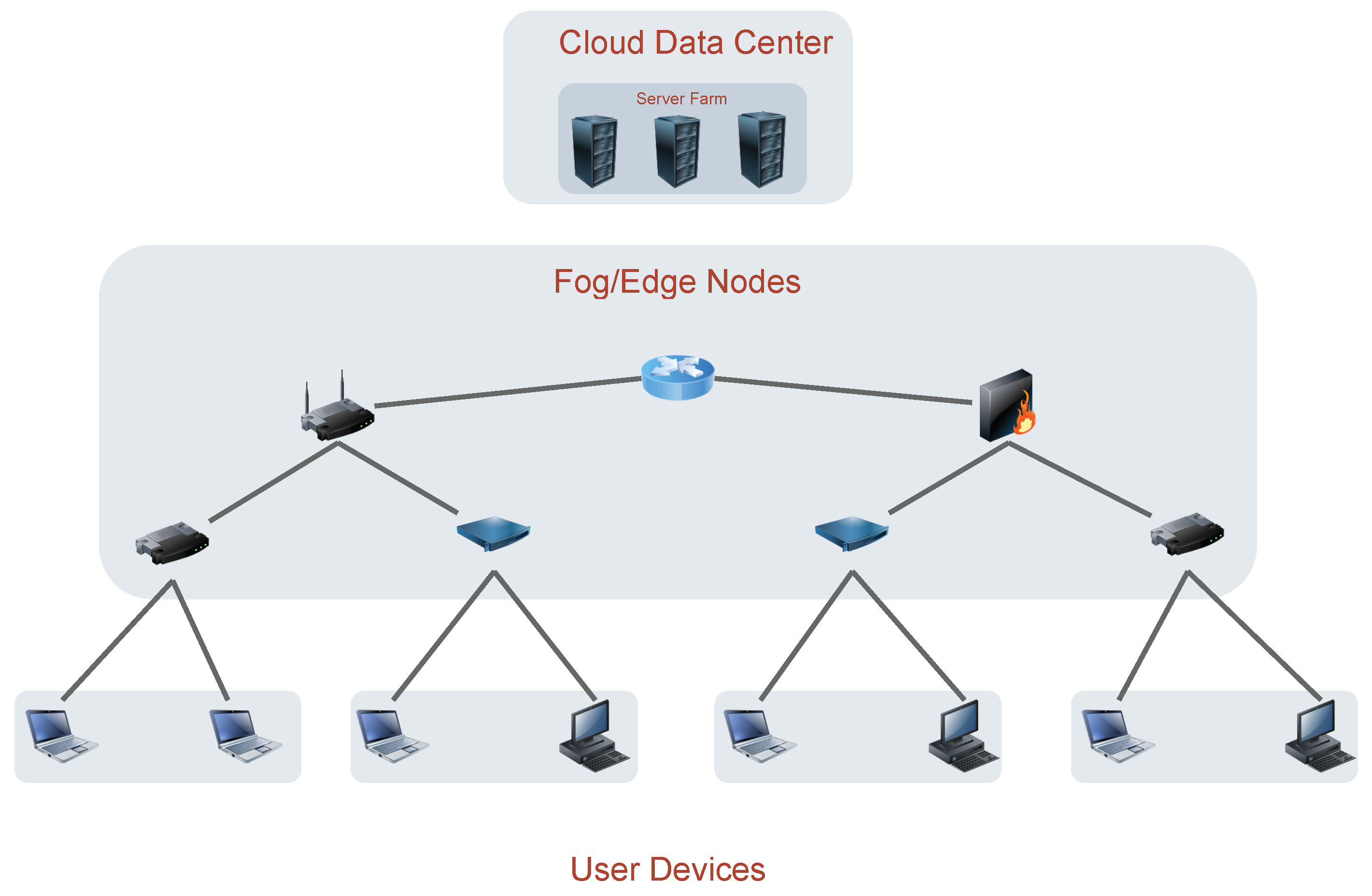

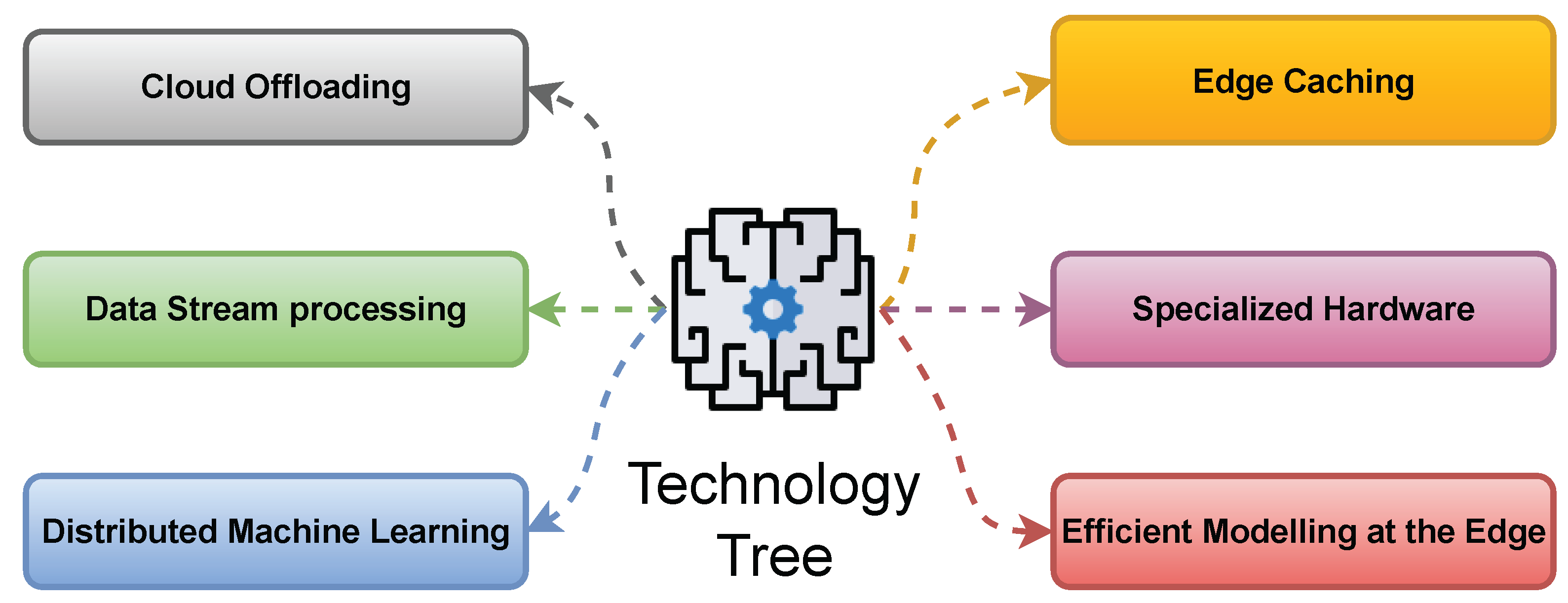

4.1. Intelligent Cloud Offloading for Network Applications

4.2. Intelligent Edge Caching for Network Environments

4.3. Intelligent Data Stream Processing for Network Applications

4.4. Intelligent Distributed Machine Learning for Networks

4.5. Intelligent Efficient Modeling for Network Edge Applications

4.6. Intelligent Specialized Hardware for Network Applications

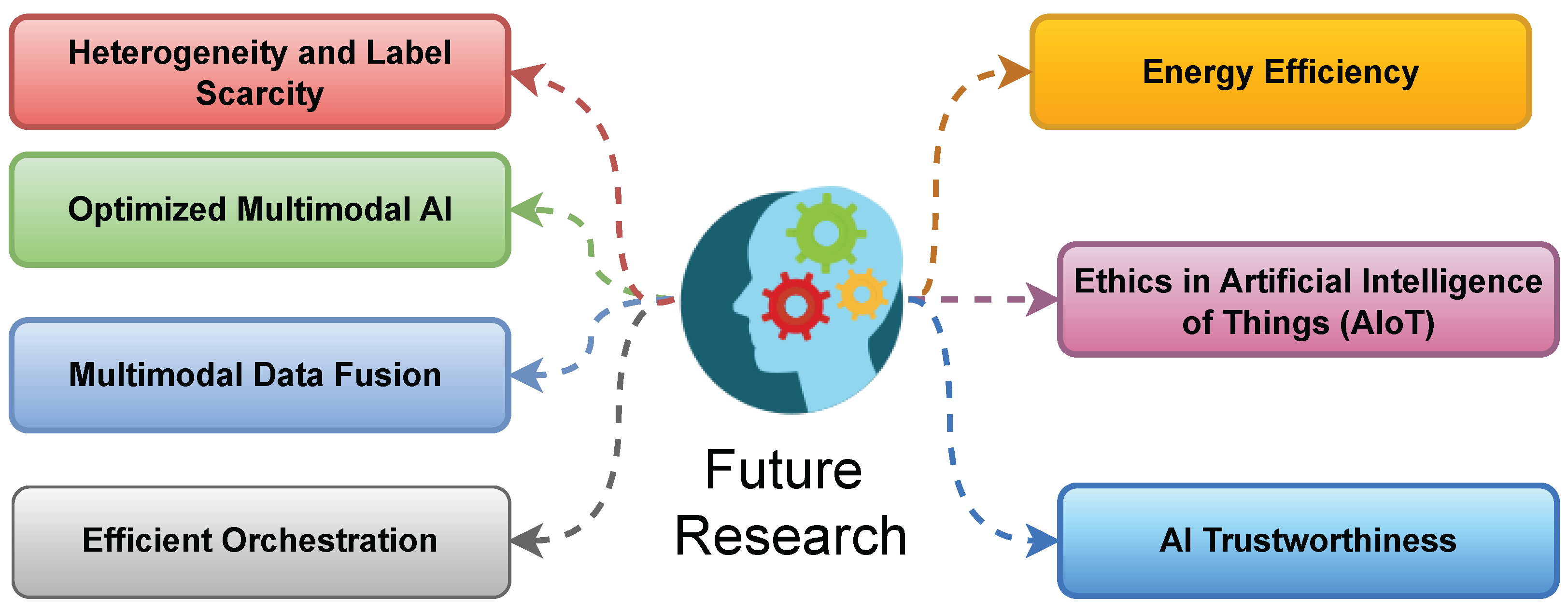

5. Research Challenges and Future Directions in Edge Machine Learning

5.1. Intelligent Heterogeneity and Label Scarcity in Network Environments

5.2. Intelligent Optimized Multimodal AI for Network Applications

5.3. Intelligent Multimodal Data Alignment and Fusion for Network Applications

- Early Fusion for Network Applications: This integrates low-level features from multiple modalities by concatenating or merging them into unified representations, enabling models to exploit cross-modal correlations for intelligent edge computing [267]. It has been successfully applied in semantic video analysis, audio–visual fusion, and healthcare applications in network environments.

- Late Fusion for Network Environments: This integrates classification outcomes from independently trained modality-specific models, providing flexibility for heterogeneous data in intelligent network applications [51,268]. Common applications include multimedia data analysis, health monitoring, and stress detection systems in next-generation networks.

- Hybrid Fusion for Intelligent Networks: This combines early and late fusion by integrating intermediate representations and final outputs, leveraging the strengths of both approaches for network applications [269]. Applications include emotion recognition, vehicle re-identification, and healthcare IoT-based multimodal fusion in intelligent network environments.
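The three strategies differ mainly in where the modalities are combined, which a short sketch can make concrete. Early fusion concatenates per-modality feature vectors before a single model sees them, late fusion averages the class probabilities of independently trained models, and the hybrid variant shown here simply averages a joint model's output with the late-fused result. The function names, the equal default weights, and the toy numbers are assumptions; real systems usually learn the fusion weights or fuse intermediate representations.

```python
def early_fusion(*feature_vectors):
    """Concatenate low-level features from all modalities into one vector."""
    fused = []
    for vec in feature_vectors:
        fused.extend(vec)
    return fused


def late_fusion(prob_vectors, weights=None):
    """Weighted average of class probabilities from per-modality models."""
    if weights is None:
        weights = [1.0 / len(prob_vectors)] * len(prob_vectors)
    num_classes = len(prob_vectors[0])
    return [sum(w * p[c] for w, p in zip(weights, prob_vectors))
            for c in range(num_classes)]


def hybrid_fusion(joint_probs, unimodal_probs):
    """Combine a joint (early-fused) model output with late-fused outputs."""
    return late_fusion([joint_probs, late_fusion(unimodal_probs)])


# Made-up features and class probabilities for two modalities.
audio_features = [0.1, 0.9]
video_features = [0.5, 0.2, 0.7]
audio_probs = [0.7, 0.3]
video_probs = [0.4, 0.6]
joint_probs = [0.6, 0.4]          # output of a model fed the fused features

print(early_fusion(audio_features, video_features))
print(late_fusion([audio_probs, video_probs]))
print(hybrid_fusion(joint_probs, [audio_probs, video_probs]))
```

Late fusion tolerates a missing modality by dropping its probability vector and renormalizing the weights, whereas early fusion requires every feature vector to be present at inference time.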

5.4. Intelligent Efficient Orchestration for Network Applications

5.5. Intelligent Energy Efficiency and Infrastructure Optimization for Networks

5.6. Intelligent Ethics in AIoT for Network Applications

5.7. Intelligent AI Trustworthiness for Network Applications

5.8. Intelligent Edge ML Challenges to Solutions Mapping for Networks

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence with Edge Computing. Proc. IEEE 2019, 107, 1738–1762.

- Shi, Y.; Yang, K.; Jiang, T.; Zhang, J.; Letaief, K.B. Communication-Efficient Edge AI: Algorithms and Systems. IEEE Commun. Surv. Tutor. 2020, 22, 2167–2191.

- Deng, S.; Zhao, H.; Fang, W.; Yin, J.; Dustdar, S.; Zomaya, A.Y. Edge Intelligence: The Confluence of Edge Computing and Artificial Intelligence. IEEE Internet Things J. 2020, 7, 7457–7469.

- Wang, J.; Pan, J.; Esposito, F.; Calyam, P.; Yang, Z.; Mohapatra, P. Edge cloud offloading algorithms: Issues, methods, and perspectives. ACM Comput. Surv. 2019, 52, 23.

- Xu, D.; Li, T.; Li, Y.; Su, X.; Tarkoma, S.; Jiang, T.; Crowcroft, J.; Hui, P. Edge Intelligence: Architectures, Challenges, and Applications. arXiv 2020, arXiv:2003.12172.

- Hong, C.H.; Varghese, B. Resource management in fog/edge computing: A survey on architectures, infrastructure, and algorithms. ACM Comput. Surv. (CSUR) 2019, 52, 97.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Gan, Z.; Li, L.; Li, C.; Wang, L.; Liu, Z.; Gao, J. Vision-language pre-training: Basics, recent advances, and future trends. Found. Trends® Comput. Graph. Vis. 2022, 14, 163–352.

- Chu, X.; Qiao, L.; Lin, X.; Xu, S.; Yang, Y.; Hu, Y.; Wei, F.; Zhang, X.; Zhang, B.; Wei, X.; et al. MobileVLM: A fast, reproducible and strong vision language assistant for mobile devices. arXiv 2023, arXiv:2312.16886.

- Chu, X.; Qiao, L.; Zhang, X.; Xu, S.; Wei, F.; Yang, Y.; Sun, X.; Hu, Y.; Lin, X.; Zhang, B.; et al. MobileVLM V2: Faster and Stronger Baseline for Vision Language Model. arXiv 2024, arXiv:2402.03766.

- Ma, S.; Wang, H.; Ma, L.; Wang, L.; Wang, W.; Huang, S.; Dong, L.; Wang, R.; Xue, J.; Wei, F. The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits. arXiv 2024, arXiv:2402.17764.

- Bernabé-Sánchez, I.; Fernández, A.; Billhardt, H.; Ossowski, S. Problem Detection in the Edge of IoT Applications. IJIMAI 2023, 8.

- Makhija, D.; Han, X.; Ho, N.; Ghosh, J. Architecture Agnostic Federated Learning for Neural Networks. arXiv 2022, arXiv:2202.07757.

- Jiang, C.; Fan, T.; Gao, H.; Shi, W.; Liu, L.; Cérin, C.; Wan, J. Energy aware edge computing: A survey. Comput. Commun. 2020, 151, 556–580.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A.Y. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the Artificial Intelligence and Statistics, PMLR, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Bonawitz, K.; Eichner, H.; Grieskamp, W.; Huba, D.; Ingerman, A.; Ivanov, V.; Kiddon, C.; Konečný, J.; Mazzocchi, S.; McMahan, H.B.; et al. Towards Federated Learning at Scale: System Design. arXiv 2019, arXiv:1902.01046.

- Filho, C.P.; Marques, E.; Chang, V.; dos Santos, L.; Bernardini, F.; Pires, P.F.; Ochi, L.; Delicato, F.C. A Systematic Literature Review on Distributed Machine Learning in Edge Computing. Sensors 2022, 22, 2665.

- Plastiras, G.; Terzi, M.; Kyrkou, C.; Theocharides, T. Edge Intelligence: Challenges and Opportunities of Near-Sensor Machine Learning Applications. In Proceedings of the International Conference on Application-Specific Systems, Architectures and Processors, Milano, Italy, 10–12 July 2018.

- Wang, X.; Li, J.; Ning, Z.; Song, Q.; Guo, L.; Guo, S.; Obaidat, M.S. Wireless Powered Mobile Edge Computing Networks: A Survey. ACM Comput. Surv. 2022, 55, 263.

- Han, S.; Liu, X.; Mao, H.; Pu, J.; Pedram, A.; Horowitz, M.A.; Dally, W.J. EIE: Efficient Inference Engine on Compressed Deep Neural Network. In Proceedings of the 2016 43rd International Symposium on Computer Architecture, Seoul, Republic of Korea, 18–22 June 2016; pp. 243–254.

- Sze, V.; Chen, Y.H.; Yang, T.J.; Emer, J.S. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. Proc. IEEE 2017, 105, 2295–2329.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. arXiv 2021, arXiv:2106.09685.

- Xu, Y.; Xie, L.; Gu, X.; Chen, X.; Chang, H.; Zhang, H.; Chen, Z.; Zhang, X.; Tian, Q. QA-LoRA: Quantization-aware low-rank adaptation of large language models. arXiv 2023, arXiv:2309.14717.

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient finetuning of quantized LLMs. arXiv 2023, arXiv:2305.14314.

- Liu, S.Y.; Wang, C.Y.; Yin, H.; Molchanov, P.; Wang, Y.C.F.; Cheng, K.T.; Chen, M.H. DoRA: Weight-Decomposed Low-Rank Adaptation. arXiv 2024, arXiv:2402.09353.

- Wang, H.; Ma, S.; Dong, L.; Huang, S.; Wang, H.; Ma, L.; Yang, F.; Wang, R.; Wu, Y.; Wei, F. BitNet: Scaling 1-bit transformers for large language models. arXiv 2023, arXiv:2310.11453.

- Alemdar, H.; Leroy, V.; Prost-Boucle, A.; Pétrot, F. Ternary neural networks for resource-efficient AI applications. In Proceedings of the IEEE 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 2547–2554.

- Courbariaux, M.; Hubara, I.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized neural networks: Training deep neural networks with weights and activations constrained to +1 or −1. arXiv 2016, arXiv:1602.02830.

- Gou, J.; Yu, B.; Maybank, S.J.; Tao, D. Knowledge Distillation: A Survey. Int. J. Comput. Vis. 2020, 129, 1789–1819.

- Hahm, O.; Baccelli, E.; Petersen, H.; Tsiftes, N. Operating Systems for Low-End Devices in the Internet of Things: A Survey. IEEE Internet Things J. 2016, 3, 720–734.

- Pope, R.; Douglas, S.; Chowdhery, A.; Devlin, J.; Bradbury, J.; Levskaya, A.; Heek, J.; Xiao, K.; Agrawal, S.; Dean, J. Efficiently Scaling Transformer Inference. arXiv 2022, arXiv:2211.05102.

- Wang, J.; Zhu, X.; Zhang, J.; Cao, B.; Bao, W.; Yu, P.S. Not Just Privacy: Improving Performance of Private Deep Learning in Mobile Cloud. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, London, UK, 19–23 August 2018; pp. 2407–2416.

- Allhoff, F.; Henschke, A. The Internet of Things: Foundational ethical issues. Internet Things 2018, 1–2, 55–66.

- Iftikhar, S.; Gill, S.S.; Song, C.; Xu, M.; Aslanpour, M.S.; Toosi, A.N.; Du, J.; Wu, H.; Ghosh, S.; Chowdhury, D.; et al. AI-based fog and edge computing: A systematic review, taxonomy and future directions. arXiv 2023, arXiv:022.100674.

- Hamdan, S.; Ayyash, M.; Almajali, S. Edge-Computing Architectures for Internet of Things Applications: A Survey. Sensors 2020, 20, 6441.

- Rodrigues, T.K.; Suto, K.; Nishiyama, H.; Liu, J.; Kato, N. Machine Learning Meets Computation and Communication Control in Evolving Edge and Cloud: Challenges and Future Perspective. IEEE Commun. Surv. Tutor. 2020, 22, 38–67.

- Wang, X.; Han, Y.; Leung, V.C.; Niyato, D.; Yan, X.; Chen, X. Convergence of Edge Computing and Deep Learning: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2020, 22, 869–904.

- Singh, R.; Sukapuram, R.; Chakraborty, S. A survey of mobility-aware Multi-access Edge Computing: Challenges, use cases and future directions. Ad Hoc Netw. 2023, 140, 103044.

- Verbraeken, J.; Wolting, M.; Katzy, J.; Kloppenburg, J.; Verbelen, T.; Rellermeyer, J.S. A Survey on Distributed Machine Learning. ACM Comput. Surv. (CSUR) 2020, 53, 30.

- Yu, W.; Liang, F.; He, X.; Hatcher, W.G.; Lu, C.; Lin, J.; Yang, X. A Survey on the Edge Computing for the Internet of Things. IEEE Access 2017, 6, 6900–6919.

- Mao, Y.; Yu, X.; Huang, K.; Zhang, Y.J.A.; Zhang, J. Green edge AI: A contemporary survey. Proc. IEEE 2024, 112, 880–911.

- Rodriguez, M.A.; Buyya, R. Container-based cluster orchestration systems: A taxonomy and future directions. Softw. Pract. Exp. 2019, 49, 698–719.

- Zhong, Z.; Xu, M.; Rodriguez, M.A.; Xu, C.; Buyya, R. Machine Learning-based Orchestration of Containers: A Taxonomy and Future Directions. ACM Comput. Surv. 2022, 54, 217.

- Casalicchio, E. Container Orchestration: A Survey. In EAI/Springer Innovations in Communication and Computing; Springer: Cham, Switzerland, 2019; pp. 221–235.

- Isah, H.; Abughofa, T.; Mahfuz, S.; Ajerla, D.; Zulkernine, F.; Khan, S. A survey of distributed data stream processing frameworks. IEEE Access 2019, 7, 154300–154316.

- Baltrušaitis, T.; Ahuja, C.; Morency, L.P. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 423–443.

- Barua, A.; Ahmed, M.U.; Begum, S. A Systematic Literature Review on Multimodal Machine Learning: Applications, Challenges, Gaps and Future Directions. IEEE Access 2023, 11, 14804–14831.

- Yin, S.; Fu, C.; Zhao, S.; Li, K.; Sun, X.; Xu, T.; Chen, E. A Survey on Multimodal Large Language Models. arXiv 2023, arXiv:2306.13549.

- Bayoudh, K.; Knani, R.; Hamdaoui, F.; Mtibaa, A. A survey on deep multimodal learning for computer vision: Advances, trends, applications, and datasets. Vis. Comput. 2022, 38, 2939–2970.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

- Liu, S.; Guo, B.; Fang, C.; Wang, Z.; Luo, S.; Zhou, Z.; Yu, Z. Enabling Resource-Efficient AIoT System With Cross-Level Optimization: A Survey. IEEE Commun. Surv. Tutor. 2023, 26, 389–427.

- Surianarayanan, C.; Lawrence, J.J.; Chelliah, P.R.; Prakash, E.; Hewage, C. A Survey on Optimization Techniques for Edge Artificial Intelligence (AI). Sensors 2023, 23, 1279.

- Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Vincent Poor, H. Federated Learning for Internet of Things: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2021, 23, 1622–1658.

- Wu, J.; Drew, S.; Dong, F.; Zhu, Z.; Zhou, J. Topology-aware Federated Learning in Edge Computing: A Comprehensive Survey. J. ACM 2023, 37, 35.

- Kar, B.; Yahya, W.; Lin, Y.D.; Ali, A. Offloading using Traditional Optimization and Machine Learning in Federated Cloud-Edge-Fog Systems: A Survey. IEEE Commun. Surv. Tutor. 2023, 25, 1199–1226.

- Gama, J. A survey on learning from data streams: Current and future trends. Prog. Artif. Intell. 2012, 1, 45–55.

- Suárez-Cetrulo, A.L.; Quintana, D.; Cervantes, A. A survey on machine learning for recurring concept drifting data streams. Expert Syst. Appl. 2023, 213, 118934.

- Baruah, R.D.; Angelov, P. Evolving fuzzy systems for data streams: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 461–476.

- Krawczyk, B.; Minku, L.L.; Gama, J.; Stefanowski, J.; Woźniak, M. Ensemble learning for data stream analysis: A survey. Inf. Fusion 2017, 37, 132–156.

- Steinberger, J.; Schehlmann, L.; Abt, S.; Baier, H. Anomaly detection and mitigation at Internet scale: A survey. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2013; Volume 7943, pp. 49–60.

- Martí, J.; Queralt, A.; Gasull, D.; Barceló, A.; Costa, J.J.; Cortes, T. Dataclay: A distributed data store for effective inter-player data sharing. J. Syst. Softw. 2017, 131, 129–145.

- Gholami, A.; Kim, S.; Dong, Z.; Yao, Z.; Mahoney, M.W.; Keutzer, K. A Survey of Quantization Methods for Efficient Neural Network Inference. arXiv 2021, arXiv:2103.13630.

- Zhao, H.X.; Magoulès, F. A review on the prediction of building energy consumption. Renew. Sustain. Energy Rev. 2012, 16, 3586–3592.

- Gans, W.; Alberini, A.; Longo, A. Smart meter devices and the effect of feedback on residential electricity consumption: Evidence from a natural experiment in Northern Ireland. Energy Econ. 2013, 36, 729–743.

- Rausser, G.; Strielkowski, W.; Štreimikienė, D. Smart meters and household electricity consumption: A case study in Ireland. Energy Environ. 2017, 29, 131–146.

- Cao, K.; Liu, Y.; Meng, G.; Sun, Q. An Overview on Edge Computing Research. IEEE Access 2020, 8, 85714–85728.

- Liu, Y.; Yu, W.; Dillon, T.; Rahayu, W.; Li, M. Empowering IoT Predictive Maintenance Solutions with AI: A Distributed System for Manufacturing Plant-Wide Monitoring. IEEE Trans. Ind. Informat. 2022, 18, 1345–1354.

- Babaghayou, M.; Chaib, N.; Lagraa, N.; Ferrag, M.A.; Maglaras, L. A Safety-Aware Location Privacy-Preserving IoV Scheme with Road Congestion-Estimation in Mobile Edge Computing. Sensors 2023, 23, 531.

- Maheshwari, S.; Zhang, W.; Seskar, I.; Zhang, Y.; Raychaudhuri, D. EdgeDrive: Supporting Advanced Driver Assistance Systems using Mobile Edge Clouds Networks. In Proceedings of the IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Paris, France, 29 April–2 May 2019.

- Liu, L.; Zhao, M.; Yu, M.; Jan, M.A.; Lan, D.; Taherkordi, A. Mobility-Aware Multi-Hop Task Offloading for Autonomous Driving in Vehicular Edge Computing and Networks. IEEE Trans. Intell. Transport. Syst. 2022, 24, 2169–2182.

- Leroux, S.; Simoens, P.; Lootus, M.; Thakore, K.; Sharma, A. TinyMLOps: Operational Challenges for Widespread Edge AI Adoption. In Proceedings of the 2022 IEEE 36th International Parallel and Distributed Processing Symposium Workshops, Lyon, France, 30 May–3 June 2022; pp. 1003–1010.

- Symeonidis, G.; Nerantzis, E.; Kazakis, A.; Papakostas, G.A. MLOps—Definitions, Tools and Challenges. In Proceedings of the 2022 IEEE 12th Annual Computing and Communication Workshop and Conference, Las Vegas, NV, USA, 26–29 January 2022; pp. 453–460.

- Pustokhina, I.V.; Pustokhin, D.A.; Gupta, D.; Khanna, A.; Shankar, K.; Nguyen, G.N. An Effective Training Scheme for Deep Neural Network in Edge Computing Enabled Internet of Medical Things (IoMT) Systems. IEEE Access 2020, 8, 107112–107123.

- Sudharsan, B.; Patel, P.; Breslin, J.; Ali, M.I.; Mitra, K.; Dustdar, S.; Rana, O.; Jayaraman, P.P.; Ranjan, R. Toward Distributed, Global, Deep Learning Using IoT Devices. IEEE Internet Comput. 2021, 25, 6–12.

- Paganini, M.; Forde, J. Streamlining tensor and network pruning in PyTorch. arXiv 2020, arXiv:2004.13770.

- Xu, C.; Gao, W.; Li, T.; Bai, N.; Li, G.; Zhang, Y. Teacher-student collaborative knowledge distillation for image classification. Appl. Intell. 2022, 53, 1997–2009.

- Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016—Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

- Liu, Z.; Li, J.; Shen, Z.; Huang, G.; Yan, S.; Zhang, C. Learning Efficient Convolutional Networks through Network Slimming. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2755–2763.

- Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and training of neural networks for efficient integer-arithmetic-only inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2704–2713.

- Choi, J.; Wang, Z.; Venkataramani, S.; Chuang, P.I.J.; Srinivasan, V.; Gopalakrishnan, K. PACT: Parameterized Clipping Activation for Quantized Neural Networks. arXiv 2018, arXiv:1805.06085.

- Wang, K.; Liu, Z.; Lin, Y.; Lin, J.; Han, S. HAQ: Hardware-Aware Automated Quantization with Mixed Precision. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8604–8612.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

- Jain, A.; Bhattacharya, S.; Masuda, M.; Sharma, V.; Wang, Y. Efficient Execution of Quantized Deep Learning Models: A Compiler Approach. arXiv 2020, arXiv:2006.10226.

- Jaderberg, M.; Vedaldi, A.; Zisserman, A. Speeding up convolutional neural networks with low rank expansions. arXiv 2014, arXiv:1405.3866.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Anubhav Singh, R.B. Mobile Deep Learning with TensorFlow Lite, ML Kit and Flutter: Build Scalable Real-World Projects to Implement End-to-End Neural Networks on Android and iOS; Packt Publishing Ltd.: Mumbai, India, 2020.

- Krishnamoorthi, R. Quantizing deep convolutional networks for efficient inference: A whitepaper. arXiv 2018, arXiv:1806.08342.

- Xiao, G.; Lin, J.; Seznec, M.; Wu, H.; Demouth, J.; Han, S. SmoothQuant: Accurate and efficient post-training quantization for large language models. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 38087–38099.

- Frantar, E.; Ashkboos, S.; Hoefler, T.; Alistarh, D. OPTQ: Accurate quantization for generative pre-trained transformers. In Proceedings of the 11th International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

- Lin, J.; Tang, J.; Tang, H.; Yang, S.; Dang, X.; Han, S. AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration. arXiv 2023, arXiv:2306.00978.

- Chee, J.; Cai, Y.; Kuleshov, V.; De Sa, C.M. QuIP: 2-bit quantization of large language models with guarantees. Adv. Neural Inf. Process. Syst. 2023, 36, 4396–4429.

- Sung, W.; Shin, S.; Hwang, K. Resiliency of Deep Neural Networks under Quantization. arXiv 2015, arXiv:1511.06488.

- Chen, G.; Choi, W.; Yu, X.; Han, T.; Chandraker, M. Learning Efficient Object Detection Models with Knowledge Distillation; Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Bengio, Y.; Courville, A.; Vincent, P. Representation Learning: A Review and New Perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

- Yim, J.; Joo, D.; Bae, J.; Kim, J. A Gift from Knowledge Distillation: Fast Optimization, Network Minimization and Transfer Learning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7130–7138.

- Fang, G.; Song, J.; Shen, C.; Wang, X.; Chen, D.; Song, M. Data-Free Adversarial Distillation. arXiv 2020, arXiv:1912.11006.

- Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019, arXiv:1910.01108.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

- Lee, S.; Song, B.C. Graph-based Knowledge Distillation by Multi-head Attention Network. In Proceedings of the 30th British Machine Vision Conference 2019, BMVC 2019, Cardiff, UK, 9–12 September 2019. [Google Scholar]

- Polino, A.; Pascanu, R.; Alistarh, D. Model compression via distillation and quantization. In Proceedings of the 6th International Conference on Learning Representations, ICLR 2018—Conference Track Proceedings, Vancouver, BC, Canada, 30 April–3 May 2018. [Google Scholar]

- Chuang, Y.S.; Su, S.Y.; Chen, Y.N. Lifelong Language Knowledge Distillation. In Proceedings of the EMNLP 2020—2020 Conference on Empirical Methods in Natural Language Processing, Online Event, 16–20 November 2020; pp. 2914–2924. [Google Scholar] [CrossRef]

- Li, C.; Farkhoor, H.; Liu, R.; Yosinski, J. Measuring the intrinsic dimension of objective landscapes. arXiv 2018, arXiv:1804.08838. [Google Scholar] [CrossRef]

- Aghajanyan, A.; Zettlemoyer, L.; Gupta, S. Intrinsic dimensionality explains the effectiveness of language model fine-tuning. arXiv 2020, arXiv:2012.13255. [Google Scholar] [CrossRef]

- Xu, L.; Xie, H.; Qin, S.Z.J.; Tao, X.; Wang, F.L. Parameter-efficient fine-tuning methods for pretrained language models: A critical review and assessment. arXiv 2023, arXiv:2312.12148. [Google Scholar]

- Sculley, D.; Holt, G.; Golovin, D.; Davydov, E.; Phillips, T.; Ebner, D.; Chaudhary, V.; Young, M.; Crespo, J.F.; Dennison, D. Hidden Technical Debt in Machine Learning Systems; Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2015. [Google Scholar]

- Ruf, P.; Madan, M.; Reich, C.; Ould-Abdeslam, D. Demystifying MLOps and presenting a recipe for the selection of open-source tools. Appl. Sci. 2021, 11, 8861. [Google Scholar] [CrossRef]

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646. [Google Scholar] [CrossRef]

- Raj, E.; Buffoni, D.; Westerlund, M.; Ahola, K. Edge MLOps: An Automation Framework for AIoT Applications. In Proceedings of the 2021 IEEE International Conference on Cloud Engineering, IC2E 2021, San Francisco, CA, USA, 22 November 2021; pp. 191–200. [Google Scholar] [CrossRef]

- John, M.M.; Olsson, H.H.; Bosch, J. Towards MLOps: A Framework and Maturity Model. In Proceedings of the 2021 47th Euromicro Conference on Software Engineering and Advanced Applications, SEAA 2021, Palermo, Italy, 3 September 2021; pp. 334–341. [Google Scholar] [CrossRef]

- Tamburri, D.A. Sustainable MLOps: Trends and Challenges. In Proceedings of the 2020 22nd International Symposium on Symbolic and Numeric Algorithms for Scientific Computing, SYNASC 2020, Timisoara, Romania, 1–4 September 2020; pp. 17–23. [Google Scholar] [CrossRef]

- Kreuzberger, D.; Kühl, N.; Hirschl, S. Machine Learning Operations (MLOps): Overview, Definition, and Architecture. IEEE Access 2023, 11, 31866–31879. [Google Scholar] [CrossRef]

- Zaharia, M.; Chen, A.; Davidson, A.; Ghodsi, A.; Hong, S.A.; Konwinski, A.; Murching, S.; Nykodym, T.; Ogilvie, P.; Parkhe, M.; et al. Accelerating the Machine Learning Lifecycle with MLflow. IEEE Data Eng. Bull. 2018, 41, 39–45. [Google Scholar]

- Bisong, E. Kubeflow and Kubeflow Pipelines. In Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners; Springer: Berlin/Heidelberg, Germany, 2019; pp. 671–685. [Google Scholar]

- Bodor, A.; Hnida, M.; Najima, D. MLOps: Overview of current state and future directions. In Proceedings of the International Conference on Smart City Applications; Springer: Berlin/Heidelberg, Germany, 2022; pp. 156–165. [Google Scholar]

- Chaves, A.J.; Martín, C.; Díaz, M. The orchestration of Machine Learning frameworks with data streams and GPU acceleration in Kafka-ML: A deep-learning performance comparative. Expert Syst. 2023, 41, e13287. [Google Scholar] [CrossRef]

- Klaise, J.; Van Looveren, A.; Cox, C.; Vacanti, G.; Coca, A. Monitoring and explainability of models in production. arXiv 2020, arXiv:2007.06299. [Google Scholar] [CrossRef]

- Woźniak, A.P.; Milczarek, M.; Woźniak, J. MLOps Components, Tools, Process, and Metrics: A Systematic Literature Review. IEEE Access 2025, 13, 22166–22175. [Google Scholar] [CrossRef]

- Barry, M.; Bifet, A.; Billy, J.L. StreamAI: Dealing with Challenges of Continual Learning Systems for Serving AI in Production. In Proceedings of the 2023 IEEE/ACM 45th International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP), Melbourne, Australia, 14 May 2023; pp. 134–137. [Google Scholar] [CrossRef]

- Vela, D.; Sharp, A.; Zhang, R.; Nguyen, T.; Hoang, A.; Pianykh, O.S. Temporal quality degradation in AI models. Sci. Rep. 2022, 12, 11654. [Google Scholar] [CrossRef]

- Lobo, J.L.; Laña, I.; Osaba, E.; Del Ser, J. On the Connection between Concept Drift and Uncertainty in Industrial Artificial Intelligence. arXiv 2023, arXiv:2303.07940. [Google Scholar] [CrossRef]

- Montiel, J.; Halford, M.; Mastelini, S.M.; Bolmier, G.; Sourty, R.; Vaysse, R.; Zouitine, A.; Gomes, H.M.; Read, J.; Abdessalem, T.; et al. River: Machine learning for streaming data in Python. J. Mach. Learn. Res. 2021, 22, 4945–4952. [Google Scholar]

- Ordóñez, S.A.C.; Samanta, J.; Suárez-Cetrulo, A.L.; Carbajo, R.S. Adaptive Machine Learning for Resource-Constrained Environments. In Proceedings of the Discovering Drift Phenomena in Evolving Landscapes; Piangerelli, M., Prenkaj, B., Rotalinti, Y., Joshi, A., Stilo, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 3–19. [Google Scholar]

- Yang, Q.; Liu, Y.; Cheng, Y.; Kang, Y.; Chen, T.; Yu, H. Introduction. In Federated Learning; Springer International Publishing: Cham, Switzerland, 2020; pp. 1–15. [Google Scholar] [CrossRef] [Green Version]

- Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated Optimization in Heterogeneous Networks. Proc. Mach. Learn. Syst. 2020, 2, 429–450. [Google Scholar]

- Arivazhagan, M.G.; Aggarwal, V.; Singh, A.K.; Choudhary, S. Federated Learning with Personalization Layers. arXiv 2019, arXiv:1912.00818. [Google Scholar] [CrossRef]

- Reddi, S.; Charles, Z.; Zaheer, M.; Garrett, Z.; Rush, K.; Konečný, J.; Kumar, S.; McMahan, H.B. Adaptive Federated Optimization. arXiv 2021, arXiv:2003.00295. [Google Scholar] [CrossRef]

- Thapa, C.; Arachchige, P.C.M.; Camtepe, S.; Sun, L. SplitFed: When Federated Learning Meets Split Learning. Proc. AAAI Conf. Artif. Intell. 2022, 36, 8485–8493. [Google Scholar] [CrossRef]

- Zhang, Z.; He, N.; Li, D.; Gao, H.; Gao, T.; Zhou, C. Federated transfer learning for disaster classification in social computing networks. J. Saf. Sci. Resil. 2022, 3, 15–23. [Google Scholar] [CrossRef]

- Zhu, H.; Jin, Y. Multi-objective Evolutionary Federated Learning. arXiv 2019, arXiv:1812.07478. [Google Scholar] [CrossRef]

- Qi, L.; Hu, C.; Zhang, X.; Khosravi, M.R.; Sharma, S.; Pang, S.; Wang, T. Privacy-Aware Data Fusion and Prediction with Spatial-Temporal Context for Smart City Industrial Environment. IEEE Trans. Ind. Informat. 2021, 17, 4159–4167. [Google Scholar] [CrossRef]

- Liu, X.; Shi, T.; Xie, C.; Li, Q.; Hu, K.; Kim, H.; Xu, X.; Li, B.; Song, D. UniFed: A Benchmark for Federated Learning Frameworks. arXiv 2022, arXiv:2207.10308. [Google Scholar]

- Kholod, I.; Yanaki, E.; Fomichev, D.; Shalugin, E.; Novikova, E.; Filippov, E.; Nordlund, M. Open-Source Federated Learning Frameworks for IoT: A Comparative Review and Analysis. Sensors 2021, 21, 167. [Google Scholar] [CrossRef] [PubMed]

- He, C.; Li, S.; So, J.; Zeng, X.; Zhang, M.; Wang, H.; Wang, X.; Vepakomma, P.; Singh, A.; Qiu, H.; et al. FedML: A Research Library and Benchmark for Federated Machine Learning. arXiv 2020, arXiv:2007.13518. [Google Scholar] [CrossRef]

- Garcia, M.H.; Manoel, A.; Diaz, D.M.; Mireshghallah, F.; Sim, R.; Dimitriadis, D. FLUTE: A Scalable, Extensible Framework for High-Performance Federated Learning Simulations. arXiv 2022, arXiv:2203.13789. [Google Scholar] [CrossRef]

- Knott, B.; Venkataraman, S.; Hannun, A.; Sengupta, S.; Ibrahim, M.; van der Maaten, L. CrypTen: Secure Multi-Party Computation Meets Machine Learning. arXiv 2022, arXiv:2109.00984. [Google Scholar]

- Li, Q.; Wu, Z.; Cai, Y.; Yung, C.M.; Fu, T.; He, B. FedTree: A federated learning system for trees. Proc. Mach. Learn. Syst. 2023, 5, 89–103. [Google Scholar]

- Roth, H.R.; Cheng, Y.; Wen, Y.; Yang, I.; Xu, Z.; Hsieh, Y.T.; Kersten, K.; Harouni, A.; Zhao, C.; Lu, K.; et al. NVIDIA FLARE: Federated Learning from Simulation to Real-World. arXiv 2022, arXiv:2210.13291. [Google Scholar]

- Ziller, A.; Trask, A.; Lopardo, A.; Szymkow, B.; Wagner, B.; Bluemke, E.; Nounahon, J.M.; Passerat-Palmbach, J.; Prakash, K.; Rose, N.; et al. PySyft: A library for easy federated learning. In Federated Learning Systems: Towards Next-Generation AI; Springer: Berlin/Heidelberg, Germany, 2021; pp. 111–139. [Google Scholar]

- Reddi, V.J.; Cheng, C.; Kanter, D.; Mattson, P.; Schmuelling, G.; Wu, C.J.; Anderson, B.; Breughe, M.; Charlebois, M.; Chou, W.; et al. MLPerf inference benchmark. In Proceedings of the 2020 ACM/IEEE 47th Annual International Symposium on Computer Architecture (ISCA), 30 May–3 June 2020; pp. 446–459. [Google Scholar]

- Varghese, B.; Wang, N.; Bermbach, D.; Hong, C.H.; Lara, E.D.; Shi, W.; Stewart, C. A survey on edge performance benchmarking. ACM Comput. Surv. (CSUR) 2021, 54, 66. [Google Scholar] [CrossRef]

- Mattson, P.; Cheng, C.; Diamos, G.; Coleman, C.; Micikevicius, P.; Patterson, D.; Tang, H.; Wei, G.Y.; Bailis, P.; Bittorf, V.; et al. MLPerf training benchmark. Proc. Mach. Learn. Syst. 2020, 2, 336–349. [Google Scholar]

- Saeed, E.; Coutinho, R.W. Performance evaluation of edge computing models for internet of things. In Proceedings of the 12th ACM International Symposium on Design and Analysis of Intelligent Vehicular Networks and Applications, Montreal, QC, Canada, 24–28 October 2022; pp. 63–69. [Google Scholar]

- Tu, X.; Mallik, A.; Chen, D.; Han, K.; Altintas, O.; Wang, H.; Xie, J. Unveiling energy efficiency in deep learning: Measurement, prediction, and scoring across edge devices. In Proceedings of the Eighth ACM/IEEE Symposium on Edge Computing, Wilmington, DE, USA, 6–9 December 2023; pp. 80–93. [Google Scholar]

- Fan, T.; Qiu, Y.; Jiang, C.; Wan, J. Energy aware edge computing: A survey. In Proceedings of the International Workshop on High Performance Computing for Advanced Modeling and Simulation in Nuclear Energy and Environmental Science, Beijing, China, 12 June 2018; pp. 79–91. [Google Scholar]

- Aslanpour, M.S.; Gill, S.S.; Toosi, A.N. Performance evaluation metrics for cloud, fog and edge computing: A review, taxonomy, benchmarks and standards for future research. Internet Things 2020, 12, 100273. [Google Scholar] [CrossRef]

- Caiazza, C.; Luconi, V.; Vecchio, A. Saving energy on smartphones through edge computing: An experimental evaluation. In Proceedings of the ACM SIGCOMM Workshop on Networked Sensing Systems for a Sustainable Society, Antipolis, France, 22 August 2022; pp. 20–25. [Google Scholar]

- Reddi, V.J.; Cheng, C.; Kanter, D.; Mattson, P.; Schmuelling, G.; Wu, C.J. The vision behind MLPerf: Understanding AI inference performance. IEEE Micro 2021, 41, 10–18. [Google Scholar] [CrossRef]

- Vo, T.; Dave, P.; Bajpai, G.; Kashef, R. Edge, Fog, and Cloud Computing: An Overview on Challenges and Applications. arXiv 2022, arXiv:2211.01863. [Google Scholar] [CrossRef]

- Singh, P.P.; Khosla, P.K.; Mittal, M. Energy conservation in IoT-based smart home and its automation. Stud. Syst. Decis. Control 2019, 206, 155–177. [Google Scholar] [CrossRef]

- Dong, P.; Ge, J.; Wang, X.; Guo, S. Collaborative Edge Computing for Social Internet of Things: Applications, Solutions, and Challenges. IEEE Trans. Comput. Soc. Syst. 2022, 9, 291–301. [Google Scholar] [CrossRef]

- Alharbi, H.A.; Aldossary, M. Energy-Efficient Edge-Fog-Cloud Architecture for IoT-Based Smart Agriculture Environment. IEEE Access 2021, 9, 110480–110492. [Google Scholar] [CrossRef]

- Rathi, V.K.; Rajput, N.K.; Mishra, S.; Grover, B.A.; Tiwari, P.; Jaiswal, A.K.; Hossain, M.S. An edge AI-enabled IoT healthcare monitoring system for smart cities. Comput. Electr. Eng. 2021, 96, 107524. [Google Scholar] [CrossRef]

- Kamruzzaman, M.M. New Opportunities, Challenges, and Applications of Edge-AI for Connected Healthcare in Smart Cities. In Proceedings of the 2021 IEEE Globecom Workshops, GC Wkshps 2021, Madrid, Spain, 7–11 December 2021. [Google Scholar] [CrossRef]

- Ullah, Z.; Al-Turjman, F.; Mostarda, L.; Gagliardi, R. Applications of Artificial Intelligence and Machine learning in smart cities. Comput. Commun. 2020, 154, 313–323. [Google Scholar] [CrossRef]

- Fagnant, D.J.; Kockelman, K. Preparing a nation for autonomous vehicles: Opportunities, barriers and policy recommendations. Transp. Res. Part A Policy Pract. 2015, 77, 167–181. [Google Scholar] [CrossRef]

- Zonta, T.; da Costa, C.A.; da Rosa Righi, R.; de Lima, M.J.; da Trindade, E.S.; Li, G.P. Predictive maintenance in the Industry 4.0: A systematic literature review. Comput. Ind. Eng. 2020, 150, 106889. [Google Scholar] [CrossRef]

- Sodhro, A.H.; Gurtov, A.; Zahid, N.; Pirbhulal, S.; Wang, L.; Rahman, M.M.U.; Imran, M.A.; Abbasi, Q.H. Toward Convergence of AI and IoT for Energy-Efficient Communication in Smart Homes. IEEE Internet Things J. 2021, 8, 9664–9671. [Google Scholar] [CrossRef]

- Saad Al-Sumaiti, A.; Ahmed, M.H.; Salama, M.M. Smart Home Activities: A Literature Review. Electr. Power Compon. Syst. 2014, 42, 294–305. [Google Scholar] [CrossRef]

- Zhang, S.; Zhang, H. A review of wireless sensor networks and its applications. In Proceedings of the IEEE International Conference on Automation and Logistics, ICAL, Zhengzhou, China, 15–17 August 2012; pp. 386–389. [Google Scholar] [CrossRef]

- Ray, P.P. Internet of things for smart agriculture: Technologies, practices and future direction. J. Ambient Intell. Smart Environ. 2017, 9, 395–420. [Google Scholar] [CrossRef]

- Chen, C.J.; Huang, Y.Y.; Li, Y.S.; Chen, Y.C.; Chang, C.Y.; Huang, Y.M. Identification of Fruit Tree Pests with Deep Learning on Embedded Drone to Achieve Accurate Pesticide Spraying. IEEE Access 2021, 9, 21986–21997. [Google Scholar] [CrossRef]

- Liu, Y.; Wang, Y.S.; Xu, S.P.; Hu, W.W.; Wu, Y.J. Design and Implementation of Online Monitoring System for Soil Salinity and Alkalinity in Yangtze River Delta Tideland. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Industrial Design, Guangzhou, China, 28–30 May 2021; pp. 521–526. [Google Scholar] [CrossRef]

- Sakthi, U.; Rose, J.D. Smart agricultural knowledge discovery system using IoT technology and fog computing. In Proceedings of the 3rd International Conference on Smart Systems and Inventive Technology, ICSSIT, Tirunelveli, India, 20–22 August 2020; pp. 48–53. [Google Scholar] [CrossRef]

- Lynggaard, P.; Skouby, K.E. Deploying 5G-Technologies in Smart City and Smart Home Wireless Sensor Networks with Interferences. Wirel. Pers. Commun. 2015, 81, 1399–1413. [Google Scholar] [CrossRef]

- Ullah, I.; Baharom, M.N.; Ahmad, H.; Wahid, F.; Luqman, H.M.; Zainal, Z.; Das, B. Smart Lightning Detection System for Smart-City Infrastructure Using Artificial Neural Network. Wirel. Pers. Commun. 2019, 106, 1743–1766. [Google Scholar] [CrossRef]

- Neirotti, P.; De Marco, A.; Cagliano, A.C.; Mangano, G.; Scorrano, F. Current trends in Smart City initiatives: Some stylised facts. Cities 2014, 38, 25–36. [Google Scholar] [CrossRef]

- Morello, R.; Mukhopadhyay, S.C.; Liu, Z.; Slomovitz, D.; Samantaray, S.R. Advances on sensing technologies for smart cities and power grids: A review. IEEE Sens. J. 2017, 17, 7596–7610. [Google Scholar] [CrossRef]

- Mainetti, L.; Patrono, L.; Stefanizzi, M.L.; Vergallo, R. A Smart Parking System based on IoT protocols and emerging enabling technologies. In Proceedings of the IEEE World Forum on Internet of Things, WF-IoT, Milan, Italy, 14–16 December 2015; pp. 764–769. [Google Scholar] [CrossRef]

- Bhardwaj, A.; Goundar, S. IoT enabled Smart Fog Computing for Vehicular Traffic Control. EAI Endorsed Trans. Internet Things 2019, 5, e3. [Google Scholar] [CrossRef]

- Sapienza, M.; Guardo, E.; Cavallo, M.; La Torre, G.; Leombruno, G.; Tomarchio, O. Solving Critical Events through Mobile Edge Computing: An Approach for Smart Cities. In Proceedings of the 2016 IEEE International Conference on Smart Computing SMARTCOMP, St. Louis, MO, USA, 18–20 May 2016. [Google Scholar] [CrossRef]

- Sigwele, T.; Hu, Y.F.; Ali, M.; Hou, J.; Susanto, M.; Fitriawan, H. Intelligent and Energy Efficient Mobile Smartphone Gateway for Healthcare Smart Devices Based on 5G. In Proceedings of the 2018 IEEE Global Communications Conference, GLOBECOM, Abu Dhabi, United Arab Emirates, 9–13 December 2018. [Google Scholar] [CrossRef]

- Varghese, B.; Wang, N.; Barbhuiya, S.; Kilpatrick, P.; Nikolopoulos, D.S. Challenges and Opportunities in Edge Computing. In Proceedings of the 2016 IEEE International Conference on Smart Cloud SmartCloud, New York, NY, USA, 18–20 November 2016; pp. 20–26. [Google Scholar]

- Haleem, A.; Javaid, M.; Singh, R.P.; Suman, R. Telemedicine for healthcare: Capabilities, features, barriers, and applications. Sens. Int. 2021, 2, 100117. [Google Scholar] [CrossRef]

- Klonoff, D.C. Fog Computing and Edge Computing Architectures for Processing Data From Diabetes Devices Connected to the Medical Internet of Things. J. Diabetes Sci. Technol. 2017, 11, 647–652. [Google Scholar] [CrossRef]

- Hartmann, M.; Hashmi, U.S.; Imran, A. Edge computing in smart health care systems: Review, challenges, and research directions. Trans. Emerg. Telecommun. Technol. 2022, 33, e3710. [Google Scholar] [CrossRef]

- Kaur, A.; Jasuja, A. Health monitoring based on IoT using Raspberry PI. In Proceedings of the IEEE International Conference on Computing, Communication and Automation, ICCCA, Greater Noida, India, 5–6 May 2017; pp. 1335–1340. [Google Scholar] [CrossRef]

- Zheng, H.; Paiva, A.R.; Gurciullo, C.S. Advancing from Predictive Maintenance to Intelligent Maintenance with AI and IIoT. arXiv 2020, arXiv:2009.00351. [Google Scholar] [CrossRef]

- Hafeez, T.; Xu, L.; McArdle, G. Edge intelligence for data handling and predictive maintenance in IIoT. IEEE Access 2021, 9, 49355–49371. [Google Scholar] [CrossRef]

- Li, L.; Ota, K.; Dong, M. Deep Learning for Smart Industry: Efficient Manufacture Inspection System with Fog Computing. IEEE Trans. Ind. Informat. 2018, 14, 4665–4673. [Google Scholar] [CrossRef]

- Cui, M.; Zhong, S.; Li, B.; Chen, X.; Huang, K. Offloading Autonomous Driving Services via Edge Computing. IEEE Internet Things J. 2020, 7, 10535–10547. [Google Scholar] [CrossRef]

- Agarwal, P.; Mittal, M.; Ahmed, J.; Idrees, S.M. (Eds.) Smart Technologies for Energy and Environmental Sustainability; Springer: Berlin/Heidelberg, Germany, 2022. [Google Scholar] [CrossRef]

- Su, X.; Liu, X.; Motlagh, N.H.; Cao, J.; Su, P.; Pellikka, P.; Liu, Y.; Petaja, T.; Kulmala, M.; Hui, P.; et al. Intelligent and Scalable Air Quality Monitoring with 5G Edge. IEEE Internet Comput. 2021, 25, 35–44. [Google Scholar] [CrossRef]

- Moursi, A.S.; El-Fishawy, N.; Djahel, S.; Shouman, M.A. An IoT enabled system for enhanced air quality monitoring and prediction on the edge. Complex Intell. Syst. 2021, 7, 2923–2947. [Google Scholar] [CrossRef] [PubMed]

- Bhardwaj, A.; Dagar, V.; Khan, M.O.; Aggarwal, A.; Alvarado, R.; Kumar, M.; Irfan, M.; Proshad, R. Smart IoT and Machine Learning-based Framework for Water Quality Assessment and Device Component Monitoring. Environ. Sci. Pollut. Res. 2022, 29, 46018–46036. [Google Scholar] [CrossRef]

- Mei, G.; Xu, N.; Qin, J.; Wang, B.; Qi, P. A Survey of Internet of Things (IoT) for Geohazard Prevention: Applications, Technologies, and Challenges. IEEE Internet Things J. 2020, 7, 4371–4386. [Google Scholar] [CrossRef]

- Ananthi, J.; Sengottaiyan, N.; Anbukaruppusamy, S.; Upreti, K.; Dubey, A.K. Forest fire prediction using IoT and deep learning. Int. J. Adv. Technol. Eng. Explor. 2022, 9, 246–256. [Google Scholar] [CrossRef]

- Huang, X.R.; Chen, W.H.; Pai, W.Y.; Huang, G.Z.; Hu, W.C.; Chen, L.B. An AI Edge Computing-Based Robotic Automatic Guided Vehicle System for Cleaning Garbage. In Proceedings of the 2022 IEEE 4th Global Conference on Life Sciences and Technologies, Osaka, Japan, 7–9 March 2022; pp. 446–447. [Google Scholar] [CrossRef]

- Morabito, R. Virtualization on internet of things edge devices with container technologies: A performance evaluation. IEEE Access 2017, 5, 8835–8850. [Google Scholar] [CrossRef]

- Levis, P.; Madden, S.; Polastre, J.; Szewczyk, R.; Whitehouse, K.; Woo, A.; Gay, D.; Hill, J.; Welsh, M.; Brewer, E.; et al. TinyOS: An operating system for sensor networks. In Ambient Intelligence; Springer: Berlin/Heidelberg, Germany, 2005; pp. 115–148. [Google Scholar] [CrossRef]

- Manzalini, A.; Crespi, N. An edge operating system enabling anything-as-a-service. IEEE Commun. Mag. 2016, 54, 62–67. [Google Scholar] [CrossRef]

- Li, B.; Fan, H.; Gao, Y.; Dong, W. ThingSpire OS: A WebAssembly-based IoT operating system for cloud-edge integration. In Proceedings of the MobiSys 2021 19th Annual International Conference on Mobile Systems, Applications, and Services, Virtual, 24 June–2 July 2021; pp. 487–488. [Google Scholar] [CrossRef]

- Silva, M.; Cerdeira, D.; Pinto, S.; Gomes, T. Operating Systems for Internet of Things Low-End Devices: Analysis and Benchmarking. IEEE Internet Things J. 2019, 6, 10375–10383. [Google Scholar] [CrossRef]

- Baccelli, E.; Gundogan, C.; Hahm, O.; Kietzmann, P.; Lenders, M.S.; Petersen, H.; Schleiser, K.; Schmidt, T.C.; Wahlisch, M. RIOT: An Open Source Operating System for Low-End Embedded Devices in the IoT. IEEE Internet Things J. 2018, 5, 4428–4440. [Google Scholar] [CrossRef]

- Borgohain, T.; Kumar, U.; Sanyal, S. Survey of Operating Systems for the IoT Environment. arXiv 2015, arXiv:1504.02517. [Google Scholar] [CrossRef]

- Nakano, W.; Shinohara, Y.; Ishiura, N. Full Hardware Implementation of FreeRTOS-Based Real-Time Systems. In Proceedings of the IEEE Region 10 Annual International Conference, Auckland, New Zealand, 7–10 December 2021; pp. 435–440. [Google Scholar] [CrossRef]

- Borges, M.; Paiva, S.; Santos, A.; Gaspar, B.; Cabral, J. Azure RTOS ThreadX Design for Low-End NB-IoT Device. In Proceedings of the 2020 IEEE 2nd International Conference on Societal Automation (SA) 2021, Madeira, Portugal, 9–11 September 2020; pp. 1–8. [Google Scholar] [CrossRef]

- Sarwar Murshed, M.G.; Murphy, C.; Hou, D.; Khan, N.; Ananthanarayanan, G.; Hussain, F. Machine Learning at the Network Edge: A Survey. ACM Comput. Surv. 2022, 54, 170. [Google Scholar] [CrossRef]

- Wang, S.; Tuor, T.; Salonidis, T.; Leung, K.K.; Makaya, C.; He, T.; Chan, K. When Edge Meets Learning: Adaptive Control for Resource-Constrained Distributed Machine Learning. In Proceedings of the IEEE INFOCOM, Honolulu, HI, USA, 15–19 April 2018; pp. 63–71. [Google Scholar] [CrossRef]

- Gupta, C.; Suggala, A.S.; Goyal, A.; Simhadri, H.V.; Paranjape, B.; Kumar, A.; Goyal, S.; Udupa, R.; Varma, M.; Jain, P. ProtoNN: Compressed and Accurate kNN for Resource-scarce Devices. Int. Conf. Mach. Learn. 2017, 70, 1331–1340. [Google Scholar]

- Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the ACM Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191. [Google Scholar] [CrossRef]

- Mankins, J.C. Technology Readiness Levels; NASA: Washington, DC, USA, 1995. [Google Scholar]

- Benotmane, M.; Elhari, K.; Kabbaj, A. A review & analysis of current IoT maturity & readiness models and novel proposal. Sci. Afr. 2023, 21, e01748. [Google Scholar] [CrossRef]

- Kosta, S.; Aucinas, A.; Hui, P.; Mortier, R.; Zhang, X. ThinkAir: Dynamic resource allocation and parallel execution in the cloud for mobile code offloading. In Proceedings of the IEEE INFOCOM, Orlando, FL, USA, 25–30 March 2012; pp. 945–953. [Google Scholar] [CrossRef]

- Chun, B.G.; Ihm, S.; Maniatis, P.; Naik, M.; Patti, A. CloneCloud: Elastic execution between mobile device and cloud. In Proceedings of the Sixth Conference on Computer Systems, New York, NY, USA, 10 April 2011; pp. 301–314. [Google Scholar] [CrossRef]

- Li, H.; Ota, K.; Dong, M. Learning IoT in Edge: Deep Learning for the Internet of Things with Edge Computing. IEEE Netw. 2018, 32, 96–101. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, H.; Wu, C.; Mao, S.; Ji, Y.; Bennis, M. Optimized computation offloading performance in virtual edge computing systems via deep reinforcement learning. IEEE Internet Things J. 2019, 6, 4005–4018. [Google Scholar] [CrossRef]

- Lei, L.; Xu, H.; Xiong, X.; Zheng, K.; Xiang, W.; Wang, X. Multiuser Resource Control With Deep Reinforcement Learning in IoT Edge Computing. IEEE Internet Things J. 2019, 6, 10119–10133. [Google Scholar] [CrossRef]

- Xu, X.; Li, H.; Xu, W.; Liu, Z.; Yao, L.; Dai, F. Artificial Intelligence for Edge Service Optimization in Internet of Vehicles: A Survey; Technical Report; Tsinghua University Press (TUP): Beijing, China, 2022. [Google Scholar] [CrossRef]

- Chiu, T.C.; Shih, Y.Y.; Pang, A.C.; Wang, C.S.; Weng, W.; Chou, C.T. Semisupervised Distributed Learning with Non-IID Data for AIoT Service Platform. IEEE Internet Things J. 2020, 7, 9266–9277. [Google Scholar] [CrossRef]

- Zhou, X.; Bilal, M.; Dou, R.; Rodrigues, J.J.P.C.; Zhao, Q.; Dai, J.; Xu, X. Edge Computation Offloading With Content Caching in 6G-Enabled IoV. IEEE Trans. Intell. Transport. Syst. 2023, 25, 2733–2747. [Google Scholar] [CrossRef]

- Zhang, K.; Leng, S.; He, Y.; Maharjan, S.; Zhang, Y. Cooperative Content Caching in 5G Networks with Mobile Edge Computing. IEEE Wirel. Commun. 2018, 25, 80–87. [Google Scholar] [CrossRef]

- Duan, S.; Zhang, D.; Wang, Y.; Li, L.; Zhang, Y. JointRec: A Deep-Learning-Based Joint Cloud Video Recommendation Framework for Mobile IoT. IEEE Internet Things J. 2020, 7, 1655–1666. [Google Scholar] [CrossRef]

- Deng, Y.; Han, T.; Ansari, N. FedVision: Federated Video Analytics With Edge Computing. IEEE Open J. Comput. Soc. 2020, 1, 62–72. [Google Scholar] [CrossRef]

- Zyrianoff, I.; Gigli, L.; Montori, F.; Sciullo, L.; Kamienski, C.; Di Felice, M. Cache-it: A distributed architecture for proactive edge caching in heterogeneous IoT scenarios. Ad Hoc Netw. 2024, 156, 103413. [Google Scholar] [CrossRef]

- Wu, Z.; Sun, S.; Wang, Y.; Liu, M.; Xu, K.; Wang, W.; Jiang, X.; Gao, B.; Lu, J. FedCache: A Knowledge Cache-Driven Federated Learning Architecture for Personalized Edge Intelligence. IEEE Trans. Mobile Comput. 2024, 23, 9368–9382. [Google Scholar] [CrossRef]

- Davidson, A.; Goldberg, I.; Sullivan, N.; Tankersley, G.; Valsorda, F. Privacy Pass: Bypassing internet challenges anonymously. Proc. Priv. Enhancing Technol. 2018, 3, 164–180. [Google Scholar] [CrossRef]

- Gaber, M.M.; Zaslavsky, A.; Krishnaswamy, S. Mining data streams. ACM SIGMOD Rec. 2005, 34, 18–26. [Google Scholar] [CrossRef]

- Cheng, B.; Papageorgiou, A.; Cirillo, F.; Kovacs, E. GeeLytics: Geo-distributed edge analytics for large scale IoT systems based on dynamic topology. In Proceedings of the IEEE World Forum on Internet of Things, WF-IoT 2015, Milan, Italy, 14–16 December 2015; pp. 565–570. [Google Scholar] [CrossRef]

- Ananthanarayanan, G.; Bahl, P.; Bodik, P.; Chintalapudi, K.; Philipose, M.; Ravindranath, L.; Sinha, S. Real-Time Video Analytics: The Killer App for Edge Computing. Computer 2017, 50, 58–67. [Google Scholar] [CrossRef]

- Dias de Assunção, M.; da Silva Veith, A.; Buyya, R. Distributed data stream processing and edge computing: A survey on resource elasticity and future directions. J. Netw. Comput. Appl. 2018, 103, 1–17. [Google Scholar] [CrossRef]

- Garg, N. Apache Kafka; Packt Publishing: Birmingham, UK, 2013. [Google Scholar]

- Song, F.; Tomov, S.; Dongarra, J. Enabling and scaling matrix computations on heterogeneous multi-core and multi-GPU systems. In Proceedings of the International Conference on Supercomputing, Salt Lake City, UT, USA, 15 January 2013; pp. 365–375. [Google Scholar] [CrossRef]

- Qureshi, K.N.; Iftikhar, A.; Bhatti, S.N.; Piccialli, F.; Giampaolo, F.; Jeon, G. Trust management and evaluation for edge intelligence in the Internet of Things. Eng. Appl. Artif. Intell. 2020, 94, 103756. [Google Scholar] [CrossRef]

- Al-Rakhami, M.; Alsahli, M.; Hassan, M.M.; Alamri, A.; Guerrieri, A.; Fortino, G. Cost Efficient Edge Intelligence Framework Using Docker Containers. In Proceedings of the 2018 IEEE 16th Intl Conf on Dependable, Autonomic and Secure Computing, 16th Intl Conf on Pervasive Intelligence and Computing, 4th Intl Conf on Big Data Intelligence and Computing and Cyber Science and Technology Congress (DASC/PiCom/DataCom/CyberSciTech), Athens, Greece, 12–15 August 2018; pp. 800–807. [Google Scholar] [CrossRef]

- Mendez, J.; Bierzynski, K.; Cuéllar, M.P.; Morales, D.P. Edge Intelligence: Concepts, Architectures, Applications, and Future Directions. ACM Trans. Embed. Comput. Syst. (TECS) 2022, 21, 48. [Google Scholar] [CrossRef]

- Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog computing and its role in the internet of things. In Proceedings of the MCC’12 1st ACM Mobile Cloud Computing Workshop, Helsinki, Finland, 17 August 2012; pp. 13–15. [Google Scholar] [CrossRef]

- Yang, J.; Yuan, Q.; Chen, S.; He, H.; Jiang, X.; Tan, X. Cooperative Task Offloading for Mobile Edge Computing Based on Multi-Agent Deep Reinforcement Learning. IEEE Trans. Netw. Serv. Manag. 2023, 20, 3205–3219. [Google Scholar] [CrossRef]

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6848–6856. [Google Scholar] [CrossRef]

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. arXiv 2018, arXiv:1807.11164. [Google Scholar]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861. [Google Scholar] [CrossRef]

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360. [Google Scholar]

- Rahmani, A.M.; Haider, A.; Moghaddasi, K.; Gharehchopogh, F.S.; Aurangzeb, K.; Liu, Z.; Hosseinzadeh, M. Self-learning adaptive power management scheme for energy-efficient IoT-MEC systems using soft actor-critic algorithm. Internet Things 2025, 31, 101587. [Google Scholar] [CrossRef]

- Zhang, X.; Hou, D.; Xiong, Z.; Liu, Y.; Wang, S.; Li, Y. EALLR: Energy-aware low-latency routing data driven model in mobile edge computing. IEEE Trans. Consum. Electron. 2024, 71, 6612–6626. [Google Scholar] [CrossRef]

- Moghaddasi, K.; Rajabi, S.; Gharehchopogh, F.S.; Ghaffari, A. An advanced deep reinforcement learning algorithm for three-layer D2D-edge-cloud computing architecture for efficient task offloading in the Internet of Things. Sustain. Comput. Informatics Syst. 2024, 43, 100992. [Google Scholar] [CrossRef]

- Martin, C.; Garrido, D.; Diaz, M.; Rubio, B. From the edge to the cloud: Enabling reliable IoT applications. In Proceedings of the 2019 International Conference on Future Internet of Things and Cloud, FiCloud 2019, Istanbul, Turkey, 26–28 August 2019; pp. 17–22. [Google Scholar] [CrossRef]

- Grover, J.; Garimella, R.M. Reliable and Fault-Tolerant IoT-Edge Architecture. In Proceedings of the IEEE SENSORS, New Delhi, India, 28–31 October 2018; pp. 1–4. [Google Scholar] [CrossRef]

- Mohammadi, V.; Rahmani, A.M.; Darwesh, A.; Sahafi, A. Fault tolerance in fog-based Social Internet of Things. Knowl. Based Syst. 2023, 265, 110376. [Google Scholar] [CrossRef]

- Tan, T.; Cao, G. FastVA: Deep learning video analytics through edge processing and NPU in mobile. In Proceedings of the IEEE INFOCOM 2020-IEEE Conference on Computer Communications, Virtual, 6–9 July 2020; pp. 1947–1956. [Google Scholar]

- Indirli, F.; Ornstein, A.C.; Desoli, G.; Buschini, A.; Silvano, C.; Zaccaria, V. Layer-wise Exploration of a Neural Processing Unit Compiler’s Optimization Space. In Proceedings of the 2024 10th International Conference on Computer Technology Applications, Vienna, Austria, 15–17 May 2024; pp. 20–26. [Google Scholar]

- Rico, A.; Pareek, S.; Cabezas, J.; Clarke, D.; Ozgul, B.; Barat, F.; Fu, Y.; Münz, S.; Stuart, D.; Schlangen, P.; et al. AMD XDNA™ NPU in Ryzen™ AI Processors. IEEE Micro 2024, 44, 73–82.

- Lee, E.; Sung, M.; Jang, S.J.; Park, J.; Lee, S.S. Memory-centric architecture of neural processing unit for edge device. In Proceedings of the IEEE 2021 18th International SoC Design Conference (ISOCC), Jeju Island, Republic of Korea, 6–9 October 2021; pp. 240–241.

- Shahid, A.; Mushtaq, M. A Survey Comparing Specialized Hardware and Evolution in TPUs for Neural Networks. In Proceedings of the 2020 23rd IEEE International Multi-Topic Conference, INMIC 2020, Bahawalpur, Pakistan, 5–7 November 2020.

- Jo, J.; Jeong, S.; Kang, P. Benchmarking GPU-accelerated edge devices. In Proceedings of the 2020 IEEE International Conference on Big Data and Smart Computing, BigComp 2020, Busan, Republic of Korea, 19–22 February 2020; pp. 117–120.

- Seshadri, K.; Akin, B.; Laudon, J.; Narayanaswami, R.; Yazdanbakhsh, A. An Evaluation of Edge TPU Accelerators for Convolutional Neural Networks. In Proceedings of the 2022 IEEE International Symposium on Workload Characterization, IISWC 2022, Austin, TX, USA, 6–8 November 2022; pp. 79–91.

- Alwahedi, F.; Aldhaheri, A.; Ferrag, M.A.; Battah, A.; Tihanyi, N. Machine learning techniques for IoT security: Current research and future vision with generative AI and large language models. Internet Things Cyber-Phys. Syst. 2024, 4, 167–185.

- Cherbal, S.; Zier, A.; Hebal, S.; Louail, L.; Annane, B. Security in internet of things: A review on approaches based on blockchain, machine learning, cryptography, and quantum computing. J. Supercomput. 2024, 80, 3738–3816.

- Kang, P.; Somtham, A. An Evaluation of Modern Accelerator-Based Edge Devices for Object Detection Applications. Mathematics 2022, 10, 4299.

- Sufian, A.; You, C.; Dong, M. A deep transfer learning-based edge computing method for home health monitoring. In Proceedings of the IEEE 2021 55th Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 24–26 March 2021; pp. 1–6.

- Yang, J.; Zou, H.; Cao, S.; Chen, Z.; Xie, L. MobileDA: Toward edge-domain adaptation. IEEE Internet Things J. 2020, 7, 6909–6918.

- Qian, J.; Gochhayat, S.P.; Hansen, L.K. Distributed active learning strategies on edge computing. In Proceedings of the 2019 6th IEEE International Conference on Cyber Security and Cloud Computing (CSCloud), Paris, France, 21–23 June 2019; pp. 221–226.

- Zhu, G.; Liu, D.; Du, Y.; You, C.; Zhang, J.; Huang, K. Toward an Intelligent Edge: Wireless Communication Meets Machine Learning. IEEE Commun. Mag. 2020, 58, 19–25.

- Wang, W.; Lv, Q.; Yu, W.; Hong, W.; Qi, J.; Wang, Y.; Ji, J.; Yang, Z.; Zhao, L.; Song, X.; et al. CogVLM: Visual expert for pretrained language models. arXiv 2023, arXiv:2311.03079.

- Zhang, S.; Roller, S.; Goyal, N.; Artetxe, M.; Chen, M.; Chen, S.; Dewan, C.; Diab, M.; Li, X.; Lin, X.V.; et al. OPT: Open pre-trained transformer language models. arXiv 2022, arXiv:2205.01068.

- Chung, H.W.; Hou, L.; Longpre, S.; Zoph, B.; Tay, Y.; Fedus, W.; Li, Y.; Wang, X.; Dehghani, M.; Brahma, S.; et al. Scaling instruction-finetuned language models. arXiv 2022, arXiv:2210.11416.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual instruction tuning. Adv. Neural Inf. Process. Syst. 2023, 36, 34892–34916.

- Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv 2023, arXiv:2301.12597.

- Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 herd of models. arXiv 2024, arXiv:2407.21783.

- Lin, B.; Tang, Z.; Ye, Y.; Cui, J.; Zhu, B.; Jin, P.; Zhang, J.; Ning, M.; Yuan, L. MoE-LLaVA: Mixture of experts for large vision-language models. arXiv 2024, arXiv:2401.15947.

- Li, S.; Tang, H. Multimodal Alignment and Fusion: A Survey. arXiv 2024, arXiv:2411.17040.

- Li, J.; Selvaraju, R.; Gotmare, A.; Joty, S.; Xiong, C.; Hoi, S.C.H. Align before fuse: Vision and language representation learning with momentum distillation. Adv. Neural Inf. Process. Syst. 2021, 34, 9694–9705.

- Qin, J.; Xu, Y.; Lu, Z.; Zhang, X. Alternative Telescopic Displacement: An Efficient Multimodal Alignment Method. arXiv 2023, arXiv:2306.16950.

- Cajas, S.A.; Restrepo, D.; Moukheiber, D.; Kuo, K.T.; Wu, C.; Chicangana, D.S.G.; Paddo, A.R.; Moukheiber, M.; Moukheiber, L.; Moukheiber, S.; et al. A Multi-Modal Satellite Imagery Dataset for Public Health Analysis in Colombia; PhysioNet: Online, 2024.

- Lahat, D.; Adali, T.; Jutten, C. Multimodal data fusion: An overview of methods, challenges, and prospects. Proc. IEEE 2015, 103, 1449–1477.

- Castanedo, F. A review of data fusion techniques. Sci. World J. 2013, 2013, 704504.

- Snoek, C.G.; Worring, M.; Smeulders, A.W. Early versus late fusion in semantic video analysis. In Proceedings of the 13th Annual ACM International Conference on Multimedia, Singapore, 6–11 November 2005; pp. 399–402.

- Pereira, L.M.; Salazar, A.; Vergara, L. A comparative analysis of early and late fusion for the multimodal two-class problem. IEEE Access 2023, 11, 84283–84300.

- Akbari, H.; Yuan, L.; Qian, R.; Chuang, W.H.; Chang, S.F.; Cui, Y.; Gong, B. VATT: Transformers for multimodal self-supervised learning from raw video, audio and text. Adv. Neural Inf. Process. Syst. 2021, 34, 24206–24221.

- Zhou, H.Y.; Yu, Y.; Wang, C.; Zhang, S.; Gao, Y.; Pan, J.; Shao, J.; Lu, G.; Zhang, K.; Li, W. A transformer-based representation-learning model with unified processing of multimodal input for clinical diagnostics. Nat. Biomed. Eng. 2023, 7, 743–755.

- Khader, F.; Kather, J.N.; Müller-Franzes, G.; Wang, T.; Han, T.; Tayebi Arasteh, S.; Hamesch, K.; Bressem, K.; Haarburger, C.; Stegmaier, J.; et al. Medical transformer for multimodal survival prediction in intensive care: Integration of imaging and non-imaging data. Sci. Rep. 2023, 13, 10666.

- Nguyen, H.H.; Blaschko, M.B.; Saarakkala, S.; Tiulpin, A. Clinically-inspired multi-agent transformers for disease trajectory forecasting from multimodal data. IEEE Trans. Med. Imaging 2023, 43, 529–541.

- Garcia, J.; Masip-Bruin, X.; Giannopoulos, A.; Trakadas, P.; Cajas Ordoñez, S.A.; Samanta, J.; Suárez-Cetrulo, A.L.; Simón Carbajo, R.; Michalke, M.; Admela, J.; et al. ICOS: An Intelligent MetaOS for the Continuum. In Proceedings of the MECC ’25 2nd International Workshop on MetaOS for the Cloud-Edge-IoT Continuum, New York, NY, USA, 30 March–3 April 2025; pp. 53–59.

- Ren, J.; Yu, G.; Cai, Y.; He, Y. Latency optimization for resource allocation in mobile-edge computation offloading. IEEE Trans. Wirel. Commun. 2018, 17, 5506–5519.

- Zhu, S.; Ota, K.; Dong, M. Energy-Efficient Artificial Intelligence of Things With Intelligent Edge. IEEE Internet Things J. 2022, 9, 7525–7532.

- Mao, W.; Zhao, Z.; Chang, Z.; Min, G.; Gao, W. Energy-Efficient Industrial Internet of Things: Overview and Open Issues. IEEE Trans. Ind. Informat. 2021, 17, 7225–7237.

- Jobin, A.; Ienca, M.; Vayena, E. The global landscape of AI ethics guidelines. Nat. Mach. Intell. 2019, 1, 389–399.

- Kumar, A.; Zhuang, V.; Agarwal, R.; Su, Y.; Co-Reyes, J.D.; Singh, A.; Baumli, K.; Iqbal, S.; Bishop, C.; Roelofs, R.; et al. Training language models to self-correct via reinforcement learning. arXiv 2024, arXiv:2409.12917.

- Hagendorff, T. The Ethics of AI Ethics: An Evaluation of Guidelines. Minds Mach. 2020, 30, 99–120.

- Tsouparopoulos, T.; Koutsopoulos, I. Explainability and Continual Learning meet Federated Learning at the Network Edge. arXiv 2025, arXiv:2504.08536.

- Chen, T. All versus one: An empirical comparison on retrained and incremental machine learning for modeling performance of adaptable software. In Proceedings of the ICSE Workshop on Software Engineering for Adaptive and Self-Managing Systems, Montreal, QC, Canada, 25 May 2019; pp. 157–168.

- Aspis, M.; Ordónez, S.A.; Suárez-Cetrulo, A.L.; Carbajo, R.S. DriftMoE: A Mixture of Experts Approach to Handle Concept Drifts. arXiv 2025, arXiv:2507.18464.

| Technique | Memory Reduction | Accuracy Impact | Latency Improvement | Typical Use Case |
|---|---|---|---|---|
| Structured Pruning | 2×–10× smaller | 0.1–5% loss | 1.2×–3× faster | Hardware-friendly edge deployment |
| Unstructured Pruning | 5×–50× smaller | 1–8% loss | Limited improvement | Memory-constrained scenarios |
| INT8 Quantization | 4× smaller | 0.5–3% loss | 1.5×–3× faster (edge devices) | Mobile inference optimization |
| INT4/Binary Quantization | 8×–16× smaller | 2–15% loss | 2×–4× faster (specialized HW) | Ultra-low resource deployment |
| Knowledge Distillation | 2×–5× smaller | 0.5–3% loss | Proportional to compression | Model compression with accuracy retention |
| Low-Rank Factorization | 1.5×–4× smaller | 0.1–2% loss | 1.2×–2.5× faster | Fine-tuning large models |
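
The "4× smaller" figure for INT8 quantization in the table above follows from storage width alone: each 32-bit float weight is replaced by one 8-bit integer, plus a single shared scale factor. The sketch below illustrates that arithmetic with symmetric per-tensor quantization on random weights. It is a minimal illustration under stated assumptions, not the procedure used by any method cited in this survey; the function names `quantize_int8` and `dequantize` and the synthetic weights are hypothetical.

```python
import random
from array import array


def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to signed 8-bit ints.

    Assumption for illustration: one scale for the whole tensor, range [-127, 127].
    """
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    quantized = array(
        "b", (max(-127, min(127, round(w / scale))) for w in weights)
    )
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in quantized]


random.seed(0)
# Synthetic stand-in for a layer's weights, stored as 32-bit floats.
weights = array("f", (random.uniform(-1.0, 1.0) for _ in range(10_000)))

q, scale = quantize_int8(weights)

fp32_bytes = len(weights) * weights.itemsize  # 4 bytes per weight
int8_bytes = len(q) * q.itemsize              # 1 byte per weight
reduction = fp32_bytes / int8_bytes

# Rounding to the nearest code bounds the per-weight error by half a step.
max_error = max(abs(w - r) for w, r in zip(weights, dequantize(q, scale)))

print(f"memory reduction: {reduction:.1f}x")
print(f"max reconstruction error: {max_error:.5f} (step size {scale:.5f})")
```

The storage ratio is fixed by the data types, whereas the accuracy impact listed in the table depends on the model and data and cannot be read off a sketch like this one.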

| Platform | DV | HT | MEV | PV | CI/CD | MD | PM |
|---|---|---|---|---|---|---|---|
| AWS SageMaker | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| MLflow | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Kubeflow | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| DataRobot | ✓ | ✓ | ✓ | ✓ | | | |
| Iterative Enterprise | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| ClearML | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| MLReef | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Streamlit | ✓ | ✓ | ✓ | ✓ | | | |

| Tool | Ease of Use | Scale | Edge Compat | Best Use Case |
|---|---|---|---|---|
| MLflow | Moderate | Good | Variable | Strong tracking/registry. Edge: model format. |
| W&B | High | Excellent | Variable | Excellent viz/tracking. Edge: model format. |
| Comet ML | High | Excellent | Variable | Robust tracking. Edge: model format. |
| Kubeflow | Complex | Excellent | Moderate | K8s-native, powerful but complex. |
| BentoML | Moderate | Good | Good | Optimized serving; edge-suitable. |
| SageMaker | Mod-High | Excellent | Good | Comprehensive suite, edge manager. |
| Databricks | Mod-High | Excellent | Variable | Big data scaling. Edge: model format. |
| Streamlit | High | Moderate | Variable | Quick dashboards, interactive viz. |
| MLReef | Moderate | Good | Good/Var. | Full-stack: deploy and monitor models. |
| DVC | Moderate | Excellent | Limited | Git-like versioning, reproducible ML. |
| DataRobot | High | Excellent | Good/Var. | End-to-end AutoML, explainability. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cajas Ordóñez, S.A.; Samanta, J.; Suárez-Cetrulo, A.L.; Carbajo, R.S. Intelligent Edge Computing and Machine Learning: A Survey of Optimization and Applications. Future Internet 2025, 17, 417. https://doi.org/10.3390/fi17090417