1. Introduction

The continuous increase in the use of mobile communications and cellular networks has created challenges related to the growth in load demand [1]. This situation has generated the need to introduce specialized mechanisms to address these issues and prevent service quality degradation [2]. In this complex environment, artificial intelligence (AI) plays a significant role, particularly within the technology and telecommunication sectors [3].

Load balancing emerges as a fundamental tool for the implementation of mobile networks, especially in areas with a high user density. It involves optimizing aspects such as signal quality, traffic management, resource allocation and other parameters that could impact network performance [4]. Load balancing is a comprehensive field that involves a variety of meta-heuristic techniques and methods, including machine learning models, resource allocation algorithms and deep learning, among others [5].

Moreover, load balancing optimization is necessary to manage the unpredictable load conditions caused by uneven data usage and cell imbalances [6]. This approach seeks to improve operational efficiency, quality of service (QoS) and user experience by enabling networks to adapt dynamically and proactively to changing resource demand behaviors [7].

The introduction of 5G brings about highly complex network management scenarios, leading to the increased utilization of Self-Organizing Networks (SONs). As a result, many users who expect to maintain a certain quality of experience (QoE) are poorly served, and their user throughput is subjected to decision-making processes for adjusting classic parameters of the mobile network based on SON systems. The problem that arises when making network adjustments is that decisions are not based on user behavior, user distribution, location, and the multiple parameters that can be obtained from context awareness [8]. Given the near-zero latency requirements of 5G networks, SONs need to shift from reactive to proactive responses by exploiting the holistic context in addition to the traditional context [9].

Mobility robustness optimization (MRO) and mobility load balancing (MLB) are two distinct approaches to load balancing with different objectives. MLB is designed for macrocell scenarios and aims to address persistent congestion issues caused by factors like population growth in urban areas. On the other hand, MRO focuses on minimizing undesirable effects, such as dropped calls, resulting from the handover (HO) process that enables users to move freely within the network. Both concepts aim to enhance network efficiency and ensure equitable distribution of radio resources. They employ algorithms to balance the load of users among cells, effectively mitigating adverse effects [10].

Classic parameters such as handover margins (HOMs), time to trigger (TTT), antenna tilt angles, and power traffic sharing have been used for years in the processes of self-healing and load balancing in mobile networks and fuzzy logic controllers [11]. These systems have been widely adopted due to their low data processing overhead, and they can be combined with socially aware information to identify user crowds across event locations, thereby reducing data overload by leveraging knowledge of each user’s location in the radio scenario [12].
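To make the role of the HOM and TTT concrete, the following minimal sketch checks a commonly used trigger condition: a handover is initiated once the neighbor cell's received power exceeds that of the serving cell by at least the HOM for TTT consecutive measurement samples. The function name, the sample-based representation of TTT, and the power values are illustrative assumptions and are not taken from the cited works.

```python
from typing import Optional, Sequence

def handover_sample_index(serving_dbm: Sequence[float],
                          neighbor_dbm: Sequence[float],
                          hom_db: float,
                          ttt_samples: int) -> Optional[int]:
    """Return the sample index at which the handover fires, or None if it never does."""
    consecutive = 0
    for i, (s, n) in enumerate(zip(serving_dbm, neighbor_dbm)):
        if n - s >= hom_db:
            # Entry condition holds; count how long it has held without interruption.
            consecutive += 1
            if consecutive >= ttt_samples:
                return i
        else:
            # Condition broken: the TTT timer restarts.
            consecutive = 0
    return None

# Hypothetical received power traces (dBm) for a user moving toward the neighbor cell.
serving = [-90, -92, -94, -95, -96, -97]
neighbor = [-93, -90, -92, -91, -92, -92]

print(handover_sample_index(serving, neighbor, hom_db=3.0, ttt_samples=3))  # 5
print(handover_sample_index(serving, neighbor, hom_db=1.0, ttt_samples=3))  # 3
```

In this toy trace, lowering the HOM from 3 dB to 1 dB moves the handover two samples earlier, which is the lever that load balancing schemes use to push users out of a congested cell sooner.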

Machine learning-based algorithms have also played a significant role in recent years in automatic implementations for changes in classic and context-aware parameters. A typical implementation involves the use of fuzzy logic combined with the Q-learning algorithm to take actions that are less reliant on expert knowledge and more adapted to the real circumstances of the network, considering various possible outputs for the same input state [13].

This study defines three classes of algorithms by level of autonomy in next-generation mobile networks.

Automated algorithms (e.g., deep reinforcement learning) operate without human intervention, targeting latency-sensitive scenarios requiring millisecond decisions (e.g., URLLC for industrial automation, autonomous vehicles).

Semi-automated approaches combine predefined rules with ML-based adjustments, excelling in dynamic mMTC environments (e.g., stadiums, IoT densification) where operators adjust parameters based on real-time KPIs.

Hybrid systems integrate predictive AI with emergency heuristic solutions, making it possible to address volatile 5G/6G use cases, such as network segment reconfiguration or edge computing handoffs, where reliability-critical transitions require coordination between humans and AI.

Supervised learning techniques and deep reinforcement learning have also been implemented in predicting cellular data usage [14], taking into account mobility models adapted to human behavior or real traces obtained from the mobile network [15], as well as information from social sources such as calendars, Twitter and open databases, among others. Despite the considerable number of studies about load balancing in mobile networks [16], very few have conducted comparisons of automatic or semi-automatic control methods incorporating social awareness.

However, implementing a load balancing system is quite challenging due to the various possibilities of controllers, radio environment characteristics, traffic schemes, user mobility models and their adaptability to different social behaviors. Furthermore, an important limitation lies in maintaining the confidentiality of user location data, and calculating location using network-specific positioning techniques adds further overhead.

In this context, our objective is to conduct a systematic review of the literature focused on load balancing techniques. The main purpose of this review is to identify and examine the most-used techniques and to evaluate their feasibility across different study scenarios. Our intention is not limited to determining the most common practices in the field of load balancing in mobile networks but extends to verifying their applicability and performance in diverse case studies.

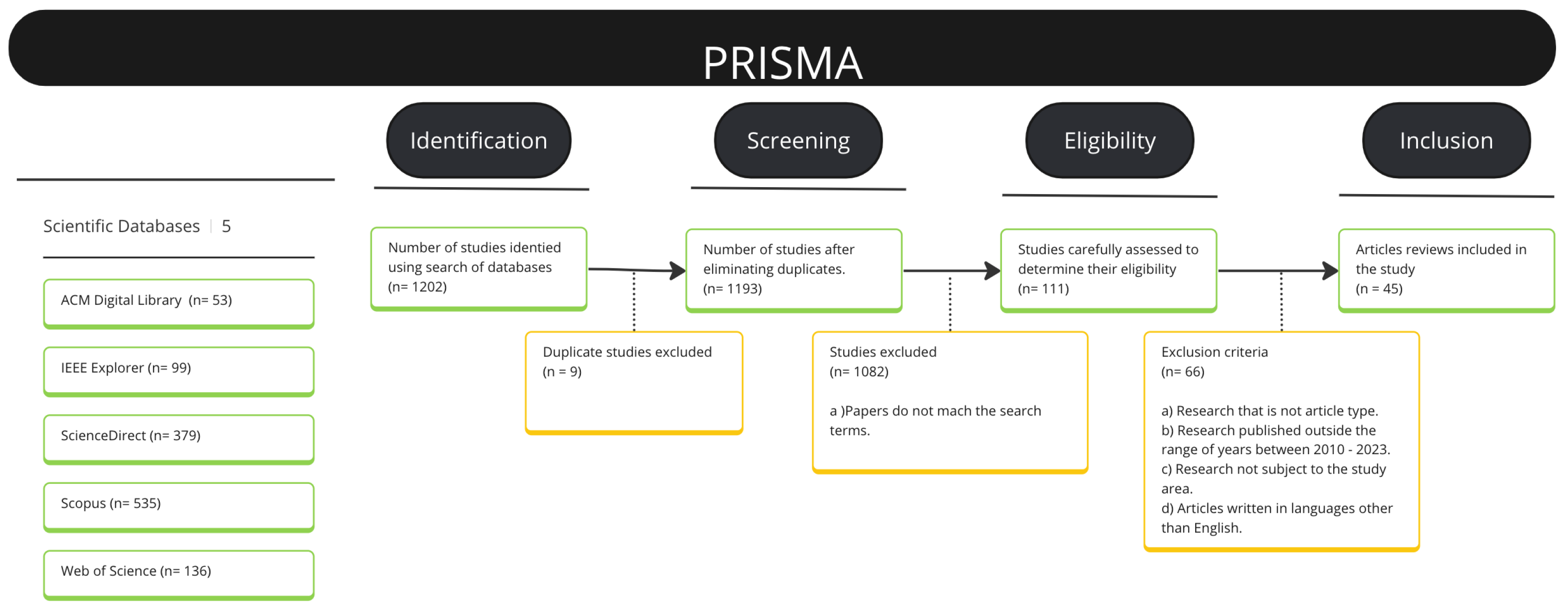

In addition, extensive research has been presented in several bibliographic sources focused on work related to optimization systems for load balancing in mobile networks. In this study, we use the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) technique [17], which involves the collection and synthesis of evidence from multiple studies to address specific research questions.

The remainder of this paper is divided into several sections.

Section 2 sets out the research objectives.

Section 3 addresses the methodology, in which the research questions are posed and the literature collected from various sources is presented, along with the inclusion and exclusion criteria following the PRISMA method.

Section 4 includes graphs summarizing certain properties of the studies, as well as a summary and analysis of each paper selected from the database.

Section 5 provides a discussion aiming to identify commonalities among the publications in relation to specific topics.

2. Research Objectives

Over the past several years, the optimization of load management in next-generation mobile networks has been pursued with the aim of enhancing the performance and QoS provided. Several applicable techniques have been identified for this purpose; however, most of them focus on describing possible heuristic or metaheuristic algorithms based predominantly on typical parameters obtained from conventional network management systems, without considering the dynamic and random behavior of the environments where mobile networks are deployed. For instance, in scenarios with user concentration hotspots, traditional algorithms do not account for social information or human behavior (such as mobility routes, dwell times, interactivity, most demanded applications, energy consumption, etc.). As a result, algorithms that overlook these sources of information may only partially solve the problem, leading to the omission of external factors that could help reduce excessive energy consumption or prioritize radio resource allocation. Therefore, it is crucial to identify which algorithms are currently being used and which ones can be adapted to integrate measurable parameters outside the mobile network. The purpose of this study is to conduct a systematic literature review (SLR) to identify and evaluate the most frequently used load balancing algorithms in mobile networks, and to use this as a foundation for adapting them with new parameters that more accurately represent real-world network deployment scenarios.

Finally, the significance of this study lies in classifying the types of algorithms according to their area of applicability, level of automation, and relevance within the structure of new radio resource management schemes.

3. Methodology

To determine the state-of-the-art studies, an SLR was performed following the methodology of PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) [18]. PRISMA provides a structured, transparent framework to minimize bias and ensure reproducibility in literature synthesis, which is critical for addressing complex, interdisciplinary topics like next-generation network optimization. Its four-phase workflow (identification, screening, eligibility and inclusion) guided the systematic evaluation of scientific publications, ensuring comprehensive coverage of emerging algorithms while avoiding selection bias [19].

The process began by defining the research question.

PRISMA’s emphasis on explicit search strategies ensured rigorous delimitation of the study scope. To align with this principle, key terms (Table 1) were operationalized with synonyms and abbreviations, covering technical parameters (e.g., latency, scalability) and sociotechnical factors (e.g., user mobility patterns, data demand fluctuations). Once the search terms had been defined, five multidisciplinary scientific databases aligned with the areas of study were selected to capture diverse perspectives:

Association for Computing Machinery (ACM) Digital Library (https://dl.acm.org/, accessed on 5 September 2024).

In this regard, the basic search string is depicted in Figure 1, considering that its structure may vary according to the specific features and limitations of each database.

Finally, the exclusion and inclusion criteria were defined to delineate the scope of articles to be evaluated:

Exclusion Criteria

- (a) Submissions that are not articles.
- (b) Studies published outside the time frame of 2014 to 2023.
- (c) Research unrelated to the field of study.
- (d) Articles written in languages other than English.
- (e) Duplicated articles.

Inclusion Criteria

- (a) Articles included at the discretion of the researchers.

After conducting a systematic search for articles across the various established databases, a total of 1202 base records were retrieved. These records underwent a content-based analysis to evaluate their relevance according to the search terms, resulting in the exclusion of 1082 records. Among the remaining 120 records, 9 were identified as duplicates, leaving 111 validated articles. Subsequently, 66 studies were excluded on the basis of the exclusion criteria, resulting in 45 articles that were considered to effectively address the research question, as evidenced in Figure 1.
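The record counts reported for the screening process can be checked with simple arithmetic. The short sketch below only re-derives the totals stated in the text; the variable names are illustrative.

```python
# Counts taken from the screening description in the text.
identified = 1202
excluded_by_content = 1082
duplicates = 9
excluded_by_criteria = 66

after_content_screening = identified - excluded_by_content  # 120
validated = after_content_screening - duplicates            # 111
included = validated - excluded_by_criteria                 # 45

print(after_content_screening, validated, included)  # 120 111 45
```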

In addition, a detailed discussion of the algorithmic principles of DL/RL, including mathematical formulations and architectural tradeoffs, is provided in Supplementary S1.

Also, the full mathematical specifications, assumption frameworks, and applicability limits of the regression-heuristic models are formalized in Supplementary S2.

4. Results

After the screening phase was completed, the resulting 45 papers were selected for in-depth analysis. The resulting study period spanned from 2014 to 2023, and can thus be regarded as involving novel and current research areas. It should be noted that the volume of papers on the subject has been increasing over the years, and most of the articles selected for the study were published in recent years (see Figure 2).

The selected papers were those that relate most to the research question that the authors are interested in solving and can be classified into various categories (which are not mutually exclusive, such that the same article may be included in several categories), as shown in Figure 3.

The results of this analysis are presented in the following sections.

4.1. Semi-Automatic

Social-Aware Load Balancing System for Crowds in Cellular Networks [20]: In this paper, the implementation of a social-aware load balancing system for cellular networks using fuzzy logic controllers in the context of crowded events is discussed. Through integrating social event data with traditional network and user data, the system optimizes load sharing between cells, resulting in improved load balancing without compromising signal quality. Simulation results demonstrated the effectiveness of the system in maintaining acceptable Signal-to-Interference-plus-Noise Ratio (SINR) levels in a 5G network scenario. This study highlighted the significance of considering user behaviors and network traffic correlation in addressing load imbalance challenges during mass events.
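As a rough illustration of how a fuzzy logic controller can map a load measurement to a parameter change, the sketch below implements a zero-order Sugeno-style controller that turns a normalized load difference between the serving and neighbor cells into a handover margin adjustment. The membership functions, rule consequents (±2 dB), and function names are assumptions chosen for readability; they do not reproduce the controller of the cited work, which also incorporates social event data.

```python
def clamp(x, lo, hi):
    return max(lo, min(hi, x))

def triangular(x, left, peak, right):
    """Triangular membership function with its maximum (1.0) at `peak`."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)

def hom_adjustment_db(load_difference):
    """Map (serving load - neighbor load), normalized to [-1, 1], to a HOM change in dB."""
    x = clamp(load_difference, -1.0, 1.0)
    # Fuzzification: degree to which each linguistic label applies.
    memberships = {
        "neighbor_busier": clamp((-x - 0.1) / 0.5, 0.0, 1.0),
        "balanced": triangular(x, -0.4, 0.0, 0.4),
        "serving_busier": clamp((x - 0.1) / 0.5, 0.0, 1.0),
    }
    # Rule consequents (assumed): raise the margin to retain users, lower it to offload.
    consequents_db = {"neighbor_busier": 2.0, "balanced": 0.0, "serving_busier": -2.0}
    # Defuzzification: weighted average of the rule outputs.
    total = sum(memberships.values())
    return sum(memberships[r] * consequents_db[r] for r in memberships) / total

for diff in (-1.0, 0.0, 0.25, 1.0):
    print(diff, round(hom_adjustment_db(diff), 3))
```

A controller of this kind needs only a handful of arithmetic operations per decision, which is consistent with the low processing overhead that makes fuzzy controllers attractive in this setting.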

Mobility Context Awareness to Improve Quality of Experience in Traffic Dense Cellular Networks [21]: In this paper, a system model designed to predict traffic severity at specific locations based on vehicular user activity was presented. It included algorithms for detecting vehicular clusters, predicting traffic jams and implementing proactive load balancing and small cell activation. The proposed strategies leverage mobility context-awareness to improve the quality of experience in traffic-dense cellular networks, addressing challenges related to high mobility and data demand. The simulation results demonstrated improvements in key performance indicators, energy consumption and significant reductions in user dropping and blocking, enhancing the overall user experience in high-load scenarios.

Context-Aware Handover Policies in HetNets [22]: In this paper, a Context-Aware Handover Policy (CAHP) to optimize handover decisions in Heterogeneous Networks (HetNets) was proposed and validated. The CAHP considers various context parameters, including the transmit power, path loss coefficients, UE speed and cell traffic load to improve user capacity and performance. The authors employed a theoretical model based on a Markov Chain to develop the optimal Time-to-Trigger (TTT) value and maximize the average performance of the user equipment (UE) in HetNet scenarios. This model considers transitions among neighboring cells and analyzes the impact of context parameters on handover decisions. Simulation results showed that the proposed CAHP outperforms standard handover processes with fixed TTT values in various scenarios. The CAHP enhances the average capacity of mobile users crossing different cells within the HetNet, demonstrating the effectiveness of context-awareness in enhancing handover performance.

Traffic-Aware Carrier Allocation [23]: This study introduced a two-step resource allocation algorithm designed to enhance service differentiation in heterogeneous traffic scenarios within wireless networks. The main goal of the algorithm is to prioritize inelastic traffic for licensed bands while directing elastic traffic to unlicensed bands, particularly under high traffic loads. The algorithm involves resource grouping and allocation, with primary carriers assigned using the Hungarian algorithm and secondary carriers allocated based on traffic loads and types. Simulation results demonstrate improved QoS for inelastic traffic, increased connection robustness, and enhanced system utility and QoS satisfaction, even under high traffic conditions. However, it was noted that the proposed approach could lead to resource wastage during periods of low traffic.
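The primary carrier step is an instance of the assignment problem: each user receives exactly one carrier so that the total cost is minimal. The Hungarian algorithm solves this in polynomial time; for a tiny instance, the exhaustive search sketched below reaches the same optimum and makes the objective explicit. The cost matrix and function name are hypothetical, and the search over permutations is a stand-in for illustration, not the Hungarian algorithm itself.

```python
from itertools import permutations

def best_assignment(cost):
    """Exhaustively find the one-to-one user-to-carrier assignment with minimum total cost."""
    n = len(cost)
    best_perm, best_total = None, float("inf")
    for perm in permutations(range(n)):
        # perm[u] is the carrier given to user u.
        total = sum(cost[u][perm[u]] for u in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return list(best_perm), best_total

# Hypothetical cost of serving user u (row) on carrier c (column), e.g. an inverse rate.
cost = [
    [4, 1, 3],
    [2, 0, 5],
    [3, 2, 2],
]
assignment, total = best_assignment(cost)
print(assignment, total)  # [1, 0, 2] 5
```

Exhaustive search grows factorially with the number of users, which is why a polynomial-time method is needed at realistic network sizes.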

Based on the Use Case of Load Balancing in Small-Cell Networks [24]: This study discussed the integration of context-aware algorithms in load balancing for cellular networks. It introduced the power traffic sharing (PTS) algorithm and a modified version, power load sharing (PLS). These algorithms leverage context information to enhance network performance and reduce user dissatisfaction. The study demonstrated the advantages of context-aware LB algorithms in optimizing network performance in dynamic scenarios. Additionally, a framework for context-aware self-optimization in small-cell networks was outlined, highlighting improved convergence time and user experience. The research emphasized the benefits of incorporating user context awareness in load balancing algorithms to enhance network performance and user satisfaction.

QoS Aware Predictive Radio Resource Management Approach Based on MIH Protocol [25]: In this paper, a QoS-aware predictive radio resource management approach based on the IEEE 802.21 Media Independent Handover (MIH) protocol for heterogeneous wireless networks was proposed. The approach seeks to enhance vertical handover processes and overall network efficiency through the integration of radio resource allocation in handover decisions and target network selection. The algorithm involves user classification, priority call indicators, resource allocation decisions and handover processes. It also features modules for monitoring handovers, estimating information, scheduling users and radio resource control. The approach estimates available resources in different networks, predicts handover events and manages interference to improve QoS. The scheduling process prioritizes users based on QoS requirements and channel conditions, with the goal of maximizing resource allocation while ensuring efficient bandwidth usage.

Context-Aware Group Buying in Ultra-Dense Small Cell Networks [26]: This study discussed the concept of Context-Aware Group Buying in small-cell networks (SCNs) to optimize resource allocation. It explored the relationship between context-awareness and group buying, emphasizing their roles in enhancing resource efficiency. Technologies such as spectrum management, energy efficiency and caching were discussed in this context. Case studies of spectrum auction and cooperative caching using the Context-Aware Group Buying (CAGB) mechanism were presented. Additionally, challenges in theoretical game models versus practical communication systems were highlighted.

Graph-Based Joint User-Centric Overlapped Clustering and Resource Allocation in Ultradense Networks [27]: In this paper, resource allocation in ultra-dense networks (UDNs) was discussed, focusing on user-centric overlapped clustering and limited orthogonal resource blocks (RBs). The proposed framework involves a novel distributed clustering solution and a graph-based resource allocation scheme to maximize spectral efficiency. The algorithm includes a two-stage process: initially, it sends requests to access points (APs) and compares throughput requests; subsequently, it yields a graph-based resource allocation solution using graph modeling and coloring. The algorithm efficiently allocates RBs to mitigate interference and maximize throughput and showed improved performance in terms of per area aggregated user rate and user rate when compared to traditional approaches.
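The graph coloring idea can be sketched as follows: users are vertices, an edge joins two users that would interfere if they shared an RB, and each color corresponds to one RB. The greedy heuristic below (highest-degree vertex first) is a generic coloring method assumed here for illustration; it is not the scheme of the cited paper and does not guarantee the minimum number of RBs.

```python
def allocate_resource_blocks(interference):
    """Greedy coloring. `interference` maps each user to the set of users it conflicts with."""
    allocation = {}
    # Process the most constrained users (highest degree) first; ties broken by name.
    for user in sorted(interference, key=lambda u: (-len(interference[u]), u)):
        used = {allocation[v] for v in interference[user] if v in allocation}
        rb = 0
        while rb in used:
            rb += 1  # smallest RB index not used by any conflicting neighbor
        allocation[user] = rb
    return allocation

# Hypothetical interference graph for four users.
graph = {
    "u1": {"u2", "u3"},
    "u2": {"u1", "u3"},
    "u3": {"u1", "u2", "u4"},
    "u4": {"u3"},
}
allocation = allocate_resource_blocks(graph)
print(allocation)  # {'u3': 0, 'u1': 1, 'u2': 2, 'u4': 1}
```

Users u1 and u4 do not interfere with each other, so they can reuse the same RB, which is how coloring improves reuse of a limited set of orthogonal resources.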

Energy Efficient Throughput Aware Traffic Load Balancing in Green Cellular Networks [28]: The main objective of this paper was to propose a dynamic point selection coordinated multipoint (DPS CoMP)-based load balancing paradigm for energy-efficient and throughput-aware traffic management in green cellular networks. The key focus was on maximizing renewable energy usage while minimizing grid energy consumption to improve network performance. Additionally, a SINR-based traffic steering algorithm was employed to optimize energy efficiency, throughput and radio efficiency. The use of actor–critic reinforcement learning approaches for resource allocation in HetNets with hybrid energy supply was also discussed. The results showed significant improvements in energy efficiency, throughput performance and spectral efficiency compared to conventional schemes.

A Self-Organizing Base Station Sleeping and User Association Strategy for Dense Cellular Networks [29]: This study presented a self-organizing strategy, called the Green Shadow Price User Association algorithm (GSPA), for the optimization of dense cellular networks by balancing energy consumption and user-perceived performance. It dynamically adjusts base station operation modes based on load measurements and SINR values and outperformed benchmarks in terms of power consumption and performance. It aims to make consistent decisions without prior optimization, reacting to changing load conditions. The approach considers traffic demands, user SINR values and power consumption to optimize user association and base station operation modes, and its effectiveness was demonstrated through simulations. The proposed GSPA adapts to varying traffic demands and network conditions and showed promising results in terms of reducing power consumption, minimizing service denials and enhancing user satisfaction.

Delay and Energy-Aware Load Balancing in Ultra-Dense Heterogeneous 5G Networks [30]: In this paper, an enhanced approach for load balancing in a cellular network was proposed, specifically focusing on performance, energy consumption and delay awareness in ultra-dense heterogeneous 5G networks with small cells and a macrocell. Both static and dynamic load balancing strategies were introduced to achieve this optimization. The static strategy involves a nonlinear convex optimization problem, while the dynamic strategy employs the Fixed-Point Iteration (FPI) approach. Additionally, a probabilistic strategy was proposed, where small cells independently serve flows with specific probabilities. Numerical results demonstrated that the dynamic strategy consistently surpasses the static strategy in terms of load balancing.
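A fixed-point iteration repeatedly applies an update map until the iterate stops changing. The toy sketch below uses this idea to find the fraction of traffic to offload from a macrocell to a small cell so that their mean delays are equal. The M/M/1 delay model, the damped update rule, the rates, and the function name are all assumptions made for illustration and are not the formulation of the cited paper.

```python
def balance_by_fixed_point(arrival_rate, mu_macro, mu_small,
                           step=0.5, tol=1e-9, max_iter=1000):
    """Iterate p <- g(p) until convergence; p is the fraction of traffic sent to the small cell.

    Assumes mu_macro > arrival_rate so that the macrocell is stable for every p in [0, 1].
    """
    p = 0.0
    # Keep the small cell strictly below saturation.
    p_max = min(1.0, 0.95 * mu_small / arrival_rate)
    for _ in range(max_iter):
        delay_macro = 1.0 / (mu_macro - (1.0 - p) * arrival_rate)  # M/M/1 mean delay
        delay_small = 1.0 / (mu_small - p * arrival_rate)
        # Shift traffic toward whichever cell currently has the lower delay.
        p_next = min(max(p + step * (delay_macro - delay_small), 0.0), p_max)
        if abs(p_next - p) < tol:
            return p_next
        p = p_next
    return p

p_star = balance_by_fixed_point(arrival_rate=8.0, mu_macro=10.0, mu_small=6.0)
print(round(p_star, 4))  # 0.25
```

For these rates, equal delays require equal spare capacity in both cells, which gives an offload fraction of 0.25; the iteration converges to that value without solving the balance equation in closed form.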

Towards Energy Efficient Load Balancing for Sustainable Green Wireless Networks Under Optimal Power Supply [31]: This study focused on enhancing load balancing and energy efficiency in green cellular networks powered by grid-tied solar PV/battery systems. The study utilized load balancing techniques, centralized resource allocation for cell zooming and cost modeling to optimize energy efficiency and reduce the carbon footprint. The results demonstrated improvements in throughput, energy efficiency and spectral efficiency in the grid-tied solar PV/battery system for LTE base stations. Load balancing algorithms further enhance energy efficiency, while cell zooming techniques improve spectral efficiency despite certain implementation challenges. Overall, the research highlighted the potential for green cellular networks to achieve sustainability and cost savings through the integration of renewable energy.

Mobility Management in HetNets [32]: In this paper, innovative context-aware mobility management procedures and scheduling methods for heterogeneous networks (HetNets) in cellular networks were proposed, aiming to enhance handover performance, throughput and fairness. The algorithms included reinforcement learning techniques for context-aware mobility management in small-cell networks, a multi-armed bandit (MAB)-based approach for system performance maximization in HetNets, a satisfaction-based learning approach to reduce handover failure probability and a context-aware scheduler for optimizing throughput in HetNets through dynamically adjusting resource allocation based on factors such as UE velocity and history. The results showed significant improvements in UE throughput, with gains of up to 80% when compared to classical methods, along with reduced handover failure probability and improved fairness in resource allocation among UE in a cell. Overall, the proposed approach outperformed classical mobility management approaches in terms of throughput, fairness and handover performance.
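In a multi-armed bandit formulation, a cell repeatedly picks one of several candidate settings and learns from the observed reward which setting performs best. The sketch below uses the standard UCB1 rule to choose among three hypothetical cell range expansion bias values; the bias values, the deterministic reward model, and the function name are assumptions for illustration and do not reproduce the learning scheme of the cited work.

```python
import math

def ucb1(reward_fn, n_arms, rounds):
    """Run UCB1 for `rounds` plays and return how often each arm was selected."""
    counts = [0] * n_arms
    sums = [0.0] * n_arms
    for t in range(1, rounds + 1):
        if t <= n_arms:
            arm = t - 1  # play every arm once to initialize its estimate
        else:
            # Empirical mean plus an exploration bonus that shrinks as an arm is played more.
            arm = max(range(n_arms),
                      key=lambda a: sums[a] / counts[a]
                      + math.sqrt(2.0 * math.log(t) / counts[a]))
        reward = reward_fn(arm)
        counts[arm] += 1
        sums[arm] += reward
    return counts

# Hypothetical normalized throughput obtained with each candidate bias value (dB).
bias_values_db = [0, 6, 12]
mean_reward = [0.3, 0.8, 0.5]

counts = ucb1(lambda arm: mean_reward[arm], n_arms=3, rounds=500)
best_bias_db = bias_values_db[counts.index(max(counts))]
print(counts, best_bias_db)
```

The exploration bonus ensures that poorly performing settings are still tried occasionally, so the learner can recover if network conditions change and a different setting becomes best.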

Channel Access-Aware User Association [33]: This study explored the impact of bias values and interference coordination on the spectral efficiency of different user association schemes in a heterogeneous network. It introduced a channel access-aware user association scheme for two-tier downlink cellular networks, highlighting the importance of optimal bias values and interference coordination in enhancing network performance and achieving fairness between macro and small-cell users.

Improving Load Balancing Techniques [34]: In this paper, several indoor positioning and optimization methods in femtocell networks were discussed, focusing on improving user experience, load balancing and network performance. It introduced an algorithm that prioritizes voice traffic, adjusts cell transmit power and optimizes femtocell coverage areas based on user location and received signal strength. The effectiveness of these algorithms in terms of improving network performance and user satisfaction was also evaluated through field tests and simulations.

A Novel, Efficient, Green and Real-Time Load Balancing Algorithm for 5G Network [35]: This study proposed a novel load balancing algorithm, called LPWLC (Load Prediction Weighted Least Connection), designed for 5G network measurement report-collecting clusters. The LPWLC algorithm leverages historical load data to predict server load and dynamically adjust server weights, aiming to enhance efficiency and scalability and reduce costs and power consumption compared to existing methods. Experimental results demonstrated significant improvements in transmission efficiency, load balancing and energy consumption. The algorithm was further evaluated in a simulation environment, showing reduced response times and power consumption savings.
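The general idea of combining load prediction with weighted least connection scheduling can be sketched as follows. Here the load forecast uses simple exponential smoothing and the weight is set to the predicted spare capacity; both choices, along with the function names and server data, are assumptions made for illustration, since the summary above does not specify the predictor or weight formula used by LPWLC.

```python
def predict_load(history, alpha=0.5):
    """Exponentially smoothed forecast from past utilization samples in [0, 1]."""
    forecast = history[0]
    for sample in history[1:]:
        forecast = alpha * sample + (1.0 - alpha) * forecast
    return forecast

def pick_server(servers, alpha=0.5):
    """Weighted least connection: choose the server minimizing connections / weight."""
    def score(name):
        info = servers[name]
        # Assumed weight: predicted spare capacity (floored to avoid division by zero).
        weight = max(1.0 - predict_load(info["load_history"], alpha), 1e-6)
        return info["connections"] / weight
    return min(servers, key=score)

# Hypothetical cluster state: active connections and recent utilization samples.
servers = {
    "s1": {"connections": 10, "load_history": [0.2, 0.3, 0.4]},
    "s2": {"connections": 8, "load_history": [0.6, 0.8, 0.9]},
    "s3": {"connections": 12, "load_history": [0.1, 0.1, 0.2]},
}
print(pick_server(servers))  # s3
```

Plain least connection scheduling would select s2 because it has the fewest connections, whereas the prediction-weighted rule avoids it because its load is forecast to be high.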

User-Driven Context-Aware Network Controlled RAT Selection for 5G Networks [36]: The main objective of this paper was to address the challenges posed by the increasing number of devices and data traffic in 5G networks. It focused on optimizing network performance through a context-aware RAT selection mechanism that integrates Wi-Fi with cellular networks, ensuring seamless handovers between different access technologies. The COmpAsS algorithm focuses on context acquisition, performance evaluation and adherence to 3GPP guidelines. It considers user preferences, triggers based on events and automatically adapts parameters based on network conditions to optimize QoS. The results indicated that COmpAsS outperformed the baseline algorithm in terms of throughput and delay, showcasing its effectiveness in optimizing RAT selection and enhancing network performance.

A New Fuzzy-Based Method for Energy-Aware Resource Allocation in Vehicular Cloud Computing [37]: The primary objective of this paper was to introduce a fuzzy-based approach for energy-conscious resource allocation in vehicular cloud computing, employing a nature-inspired algorithm. In particular, a fuzzy-based cuckoo search algorithm was employed for virtual cloud resource allocation, and its performance was compared against genetic algorithms, ant colony optimization and standard cuckoo search algorithms. The results indicated that the proposed method outperformed the other algorithms in terms of execution time, delay and makespan while exhibiting superior energy efficiency, SLA adherence and an average number of powered-off virtual machines.

Application Association and Load Balancing to Enhance Energy Efficiency in Heterogeneous Wireless Networks [38]: The main objective of this paper was to enhance energy efficiency in heterogeneous wireless networks through the proposal of a game-based analysis for applications and network characteristics. The study focused on load balancing, resource allocation and network selection based on application requirements in order to achieve energy efficiency and fairness in resource allocation. The algorithms used in the study included a weight-based rotation game algorithm for load balancing and BLNF and TBNF algorithms for load balancing in heterogeneous wireless networks. The proposed algorithms outperformed existing methods in terms of power consumption, with the TBNF algorithm showing significant improvements in reducing power consumption for transmission- and intermittent-bound applications.

4.2. Automatic

Context-Aware Proactive 5G Load Balancing and Optimization for Urban Areas [15]: This study discussed the application of data analytics, machine learning techniques and social media data for proactive load balancing and optimization of 5G networks in urban areas. Various frameworks and methods were proposed to detect network anomalies, forecast high-traffic areas during events and enhance network performance through the integration of context awareness and event detection. This study aimed to enhance network efficiency and capacity through the use of social network data analytics and proactive optimization strategies.

Machine Learning Aided Context-Aware Self-Healing Management [39]: In this paper, a self-healing scheme designed to address cell outages in small-cell networks using machine learning algorithms was proposed. The scheme includes a Small Cell Outage Detection (SCOD) mechanism that efficiently detects outages, even with limited key performance indicator (KPI) information, through the utilization of support vector data description (SVDD). It also incorporates a Small Cell Outage Compensation (SCOC) mechanism that optimizes resource allocation to mitigate the impact of outages while considering load balancing and user QoS requirements. The framework aims to automatically manage cell outages in ultra-dense small-cell networks, improving network performance and user satisfaction.

Context-Aware Mobility Management In HetNets [40]: This study evaluated different mobility management approaches in a HetNet scenario with picocell deployment. It proposed coordination-based and context-aware mobility management approaches for small-cell networks in HetNets using reinforcement learning. These methods utilize multi-armed bandit and satisfaction-based learning algorithms to optimize handover performance, UE throughput, load balancing, context-aware scheduling and fairness. Simulation results revealed significant improvements in UE throughput, handover probability, sum-rate and cell-edge UE throughput, when compared to classical mobility management methods.

Mobility Management in HetNets Consider on QOS and Improve Throughput [41]: This study discussed mobility management in HetNets, focusing on QoS and throughput improvement. A context-aware mobility management approach was proposed for small-cell networks, using reinforcement learning for optimal cell range extension and UE scheduling. This method aims to minimize handover failures and ping-pong effects, improving overall system capacity and fairness conditions. The method involves optimizing load balancing and scheduling based on context-aware scheduling, including short-term scheduling based on user speed and history, as well as long-term learning-based solutions for optimal performance. The algorithm combines reinforcement learning with context-aware scheduling for load balancing and scheduling in HetNets.

SON Conflict Resolution [42]: This study introduced SONCO, a solution for conflict resolution in Self-Organizing Networks (SONs) using reinforcement learning with state aggregation. SONCO optimizes parameter configurations in an SON through minimizing regret based on operator policies. The algorithm has a simplified state space and reduced complexity, compared to traditional methods, by employing a Markov Decision Process framework and state aggregation technique. Simulation results demonstrated the effectiveness of SONCO in resolving conflicts between SON functions, particularly in scenarios with conflicting update requests.

Delay-Oriented Scheduling in 5G [

43]: In this paper, a novel delay-oriented downlink scheduling framework based on deep reinforcement learning for 5G wireless networks was presented, which significantly outperformed existing approaches in terms of both tail delay and average delay.

Crowding Game and Deep Q-Networks [

44]: This study introduced dynamic resource allocation algorithms for Radio Access Network (RAN) slicing in 5G networks, focusing on Ultra-Reliable Low-Latency Communications (URLLC) and enhanced Mobile Broadband (eMBB) services. It compared a distributed crowding game-based algorithm with a centralized Deep Q-Network (DQN) algorithm for the optimization of resource allocation while meeting QoS requirements. The algorithms aim to improve resource utilization efficiency without compromising user performance. Performance evaluations showed that both approaches achieved similar results, with the distributed approach converging faster and the centralized approach being more adaptable. The algorithms outperformed both legacy and state-of-the-art methods, showcasing improvements in resource utilization and user performance.

Congestion Minimization of LTE Networks [

45]: In this paper, the use of deep learning combined with optimization algorithms to minimize congestion in LTE and LTE-A networks was discussed. Two algorithms—Block Coordinated Descent Simulated Annealing (BCDSA) and the genetic algorithm (GA)—were proposed to address the optimization problem through the adjustment of cell power and handover thresholds. The results showed that BCDSA is faster but has lower success rates compared to the GA, with real-time comparisons demonstrating reduced overhead and increased throughput without significant changes in signal strength. The study highlighted the advantages of the DL-BCDSA (Downlink–Block Coordinated Descent Simulated Annealing) algorithm in the network optimization context.

Mobility Load Management in Cellular Networks [

46]: This study proposed a deep reinforcement learning (RL) framework for load management in cellular networks, which controls the Cell Individual Offset (CIO) to improve downlink throughput and reduce the number of blocked users. The authors summarized their contributions as follows: reformulating the load balancing problem as a Markov Decision Process (MDP); defining a new state based on key performance indicators (KPIs); formulating a flexible optimization framework with different reward definitions; proposing RL agents for discrete and continuous action spaces; and building a realistic system-level simulator for LTE cellular networks. The proposed framework seeks to address the challenges of balancing traffic in cellular networks and optimizing network performance.
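To make the MDP formulation more concrete, the following minimal sketch casts a toy two-cell scenario as a tabular Q-learning problem. It is not the framework of the cited study: the state (number of users in cell A), the three actions (an offset change that moves one user out of cell A, keeps the split, or moves one user into cell A), the imbalance-based reward and all hyperparameters are illustrative assumptions.

```python
import random

N_USERS = 10            # assumed total number of users shared by two cells
ACTIONS = (-1, 0, 1)    # assumed effect of an offset change on cell A's user count


def step(users_a, action):
    """Apply an action and return (next state, reward)."""
    nxt = min(N_USERS, max(0, users_a + action))
    reward = -abs(nxt - (N_USERS - nxt))  # penalize the load imbalance
    return nxt, reward


def train(episodes=2000, horizon=20, alpha=0.5, gamma=0.9, eps=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_USERS + 1) for a in ACTIONS}
    for _ in range(episodes):
        s = rng.randint(0, N_USERS)  # random initial user split
        for _ in range(horizon):
            if rng.random() < eps:
                a = rng.choice(ACTIONS)                      # explore
            else:
                a = max(ACTIONS, key=lambda x: q[(s, x)])    # exploit
            s2, r = step(s, a)
            best_next = max(q[(s2, x)] for x in ACTIONS)
            q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])
            s = s2
    return q


def greedy_rollout(q, start, steps=10):
    """Follow the learned greedy policy and return the final state."""
    s = start
    for _ in range(steps):
        a = max(ACTIONS, key=lambda x: q[(s, x)])
        s, _ = step(s, a)
    return s


q_table = train()
print(greedy_rollout(q_table, start=9))  # final number of users in cell A
```

In this toy setting, the learned policy should drive any initial split toward an even distribution of users. A realistic agent would replace the single-integer state with KPI vectors and the lookup table with a function approximator.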

Deep Learning in Heterogeneous Wireless Networks [

47]: In this paper, the application of deep learning for radio resource allocation in 5G networks was discussed, specifically focusing on user associations in heterogeneous wireless networks. The authors proposed a deep learning-based user association model that employs a modified U-Net architecture to determine the association matrix between users and base stations. The model was trained using labeled data generated using a heuristic algorithm, with the training phase including pre-training and constraints on the number of base stations to which a user can be associated. The objective was to maximize the minimum achievable rate of users at the cell edge.

Efficient Resource Management in 6G [

48]: In this paper, the development of a future hybrid quantum deep learning model for 6G communication systems was discussed, aiming to enhance network slicing, load balancing and system availability. Through combining Convolutional Neural Network (CNN) and Recurrent Neural Network (RNN) architectures, the proposed model improves QoS performance, accurately allocates network slices, manages load balance in congested scenarios and provides backup solutions for slice failures.

A D2D Learning-Based Approach to LTE Traffic Offloading [

49]: This study focused on optimizing data dissemination in LTE networks through a joint multicast/D2D approach using reinforcement learning algorithms. The main objective was to minimize the number of resource blocks required for content delivery while ensuring reliable and efficient distribution. The algorithm, which is based on a multi-armed bandit framework, dynamically selects the optimal user allocation between multicast and D2D based on channel quality and mobility patterns. Results indicated significant resource savings of up to 90% at the base station, improved offloading performance and near-optimal configurations, outperforming fixed allocation strategies.
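As an illustration of how a bandit can choose between allocation options, the sketch below uses an epsilon-greedy strategy, one of the simplest multi-armed bandit policies. It is not the algorithm of the cited study: each arm stands for a hypothetical multicast/D2D user split, and the mean resource-block costs, the Gaussian noise model and the parameters are assumptions made only for this example.

```python
import random


def run_bandit(mean_cost, rounds=2000, eps=0.1, noise=5.0, seed=1):
    """Epsilon-greedy bandit; the reward is the negative resource-block cost."""
    rng = random.Random(seed)
    n_arms = len(mean_cost)
    pulls = [0] * n_arms
    avg_reward = [0.0] * n_arms
    for t in range(rounds):
        if t < n_arms:
            arm = t                          # try every arm once
        elif rng.random() < eps:
            arm = rng.randrange(n_arms)      # explore
        else:
            arm = max(range(n_arms), key=lambda i: avg_reward[i])  # exploit
        cost = rng.gauss(mean_cost[arm], noise)   # assumed noisy observation
        pulls[arm] += 1
        avg_reward[arm] += (-cost - avg_reward[arm]) / pulls[arm]  # running mean
    best = max(range(n_arms), key=lambda i: avg_reward[i])
    return best, pulls


# Hypothetical mean costs (resource blocks) for three multicast/D2D splits.
best_arm, pulls = run_bandit([60.0, 40.0, 55.0])
print(best_arm, pulls)
```

The arm with the lowest average cost ends up being selected most often, while the small exploration rate keeps the estimates of the other arms up to date if channel or mobility conditions change.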

Characterizing Throughput and Convergence Time in Dynamic Multi-Connectivity [

50]: The main objective of this paper was to optimize throughput in dynamic multi-RAT 5G systems through UE-centric learning-based algorithms. The study focused on network-assisted and collaborative learning mechanisms to enhance system performance in high-density deployments. Regarding the algorithms used, user-centric RL algorithms and collaborative learning mechanisms were employed to optimize RAT selection and throughput, while transfer learning techniques were utilized to enhance learning performance across different network scenarios. The proposed user-centric reinforcement learning approach for network selection showed promising results in terms of optimizing network performance, as demonstrated by the simulation results.

A Cost-Effective Trilateration-Based Radio Localization Algorithm [

51]: The primary aim of this paper was to introduce a trilateration-based outdoor positioning technique for estimating the locations of mobile terminals within cellular networks, employing machine learning and optimization methodologies. The study employed the k-nearest neighbors (k-NN) algorithm alongside optimization techniques such as Nelder–Mead, genetic algorithms and Sequential Least-Squares Programming (SLSQP), optimizing the neighbor count and implementing a K-D Tree search strategy to enhance computational efficiency while preserving accuracy. The results indicated that the proposed method, integrating k-NN with SLSQP, achieved notable accuracy and computational speed in predicting distances between users and base stations across diverse network conditions and environmental settings, surpassing benchmarks established through Nelder–Mead and genetic algorithm-based optimization, thus underscoring its efficacy in outdoor terminal positioning.
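The geometric core of trilateration can be sketched in a few lines. The example below is a simplification and not the method of the cited paper: instead of k-NN distance prediction followed by SLSQP, it assumes that the user-to-base-station distances are already known and solves the linearized least-squares problem in closed form. The station coordinates and the user position are hypothetical.

```python
import math


def trilaterate(stations, distances):
    """Estimate (x, y) from base station positions and measured distances.

    Subtracting the first circle equation from the others gives a linear
    system, which is solved here through its 2x2 normal equations.
    """
    (x1, y1), d1 = stations[0], distances[0]
    rows, rhs = [], []
    for (xi, yi), di in zip(stations[1:], distances[1:]):
        rows.append((2 * (xi - x1), 2 * (yi - y1)))
        rhs.append(xi**2 - x1**2 + yi**2 - y1**2 - di**2 + d1**2)
    s_aa = sum(a * a for a, _ in rows)
    s_ab = sum(a * b for a, b in rows)
    s_bb = sum(b * b for _, b in rows)
    t_a = sum(a * r for (a, _), r in zip(rows, rhs))
    t_b = sum(b * r for (_, b), r in zip(rows, rhs))
    det = s_aa * s_bb - s_ab * s_ab
    if abs(det) < 1e-12:
        raise ValueError("base stations are collinear; position is ambiguous")
    x = (t_a * s_bb - t_b * s_ab) / det
    y = (s_aa * t_b - s_ab * t_a) / det
    return x, y


# Hypothetical layout: four base stations and a user at (30, 40) metres.
stations = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0), (100.0, 100.0)]
true_pos = (30.0, 40.0)
distances = [math.hypot(true_pos[0] - x, true_pos[1] - y) for x, y in stations]
estimate = trilaterate(stations, distances)
print(estimate)
```

With noisy distance estimates, this closed-form solution is often used only as a starting point for an iterative optimizer such as those compared in the study.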

Revised Reinforcement Learning Based on Anchor Graph Hashing for Autonomous Cell Activation in Cloud-RANs [

52]: This study proposed a framework for autonomous cell activation and customized physical resource allocation in cloud radio access networks (C-RANs) using a revised reinforcement learning model. The main goal was to balance energy consumption and QoS in wireless networks. The algorithm effectively balances both energy consumption and user satisfaction, demonstrating improved QoS and minimized energy consumption.

Efficient and Reliable Hybrid Deep Learning-Enabled Model for Congestion Control [

53]: This study focused on enhancing the efficiency of 5G network slicing through the implementation of hybrid AI-based solutions. The main objective was to optimize load balancing and resource allocation and prevent network slice failure. A hybrid model that combines long short-term memory (LSTM) and support vector machine (SVM) algorithms was introduced to achieve accurate predictions and efficient resource utilization. The model effectively manages network traffic to avoid overloading and slice failure conditions and outperformed comparative algorithms in other performance metrics.

Joint User Association and Resource Allocation in HetNets Based on User Mobility Prediction [

54]: This study focused on tackling the dual challenge of user association and resource allocation in wireless networks, with a specific focus on HetNets featuring virtual small-cell (VSC) deployment, aiming to optimize network efficiency, fairness, system capacity and spectral efficiency through the use of novel algorithms and solutions. Key algorithms employed include the Multi-Agent Q-Learning (MAQL)-based algorithm, which optimizes user association and resource allocation by considering user load ratios, base station candidates and QoS requirements while utilizing deep Q-networks to efficiently manage large state and action spaces. The outcomes suggested that the proposed solutions, utilizing both decoupling and coupling approaches with MAQL and DQL algorithms, demonstrate good potential for VSC deployment to augment system capacity and spectral efficiency.

Deep Convolutional Neural Networks for Pedestrian Detection [

55]: This study focused on the utilization of Deep Convolutional Neural Networks for pedestrian detection—a critical task in the automotive, surveillance, and robotics fields, among others. Optimizations were proposed to enhance detection accuracy, achieve state-of-the-art performance and ensure efficient operations on modern hardware. The system was evaluated on an NVIDIA Jetson TK1 platform. The pedestrian detection system, based on a Convolutional Neural Network (CNN), surpassed alternative methods in terms of accuracy and computational complexity. The study emphasized the importance of large training datasets and backpropagation for CNNs in order to learn visual concepts effectively. Various optimization techniques, such as region proposals, fine-tuning, data preprocessing and region proposal scores, were employed to enhance pedestrian detection accuracy.

UAV Positioning for Throughput Maximization Using Deep Learning Approaches [

56]: The primary aim of this study was to optimize UAV positioning using deep learning-based methodologies—specifically, the multi-layer perceptron (MLP) and long short-term memory (LSTM) algorithms—to maximize user throughput in UAV-assisted wireless networks. A hybrid MLP–LSTM algorithm was employed for UAV positioning, integrating the data collection, preprocessing, training and testing phases utilizing DL approaches, K-means clustering and interpolation techniques. The obtained results indicate that the proposed hybrid MLP–LSTM algorithm surpasses the SVM, MLP and LSTM algorithms in terms of both accuracy and computational time, exhibiting superior accuracy, reduced error distributions and shorter training and testing durations compared to the individual MLP and LSTM algorithms.

Deep Reinforcement Learning for Dynamic Resource Allocation in High-Mobility 5G HetNet [

57]: The main objective of this paper was to propose deep reinforcement learning-based algorithms for adaptive TDD resource allocation in 5G HetNets, specifically focusing on dynamic uplink/downlink resource allocation in high-mobility scenarios. The proposed algorithms intelligently allocate TDD resources between uplink and downlink in a dynamic and heterogeneous 5G network, using deep Q-learning, deep neural networks, a Deep Belief Neural Network and online Q-learning for training and decision making. The simulation results revealed that the proposed algorithms significantly improve network performance in terms of throughput, packet loss rate and channel utilization when compared to conventional methods.

4.3. Hybrid

Proposed Algorithm for Transfer Decisions in LTE-A [

58]: This study proposed a regression model for optimizing the handoff process in mobile networks, specifically focusing on LTE and LTE-A based HetNets, with the aim of improving load balancing and overall network performance by using Regression Heuristics of Quality Metrics (RHQM) in the handover decision process. RHQM leverages regression heuristics to scale quality factors of the target cell during handover. Considering key parameters such as radio resources, signal quality metrics and load balancing capacity, the algorithm seeks to reduce the call blocking ratio, call dropping ratio, signaling cost, handover failures and radio link failures. Experimental studies showed that the algorithm outperforms existing handover optimization models, with significant improvements in the considered parameters, indicating the efficacy of the regression-based approach in optimizing handoff procedures in heterogeneous networks.

Backhaul-Aware and Context-Aware User-Cell Association [

59]: The main objective of this study was to address the user–BS association problem in 5G networks through introducing a User-Centric Backhaul (UCB) scheme that optimizes the usage of constrained backhaul networks while considering both backhaul and user context. The study developed and solved a multi-objective optimization problem for the UCB user-BS association. This problem was considered with the aim of finding the optimal association policy between users and candidate small cells to maximize throughput while adhering to capacity limits and user-defined QoS gaps. The implementation of UCB was discussed using Q-learning. The benefits of a context-aware and backhaul-aware user-cell association approach in enhancing network performance were demonstrated. By optimizing user–BS associations based on biased average received power and user priorities, the UCB scheme effectively enhances throughput while sustaining QoS requirements.

Spectral Efficient and Energy Aware Clustering in Cellular Networks [

60]: In this paper, clustering algorithms based on device-to-device communications were proposed to increase the capacity of cellular networks. These algorithms aim to reduce resource requirements, balance uplink and downlink traffic and address energy over-consumption using cluster heads. The algorithms—namely, eCORE, CaLB and CEEa—are designed for FDD LTE-A networks. eCORE focuses on improving spectral efficiency by creating conflict-free user clusters, while CaLB aims to balance the load between uplink and downlink traffic. CEEa limits energy over-consumption by setting thresholds for cluster head selection. The results showed that CaLB provides the highest capacity gain, while CEEa achieves better energy consumption performance. These algorithms aim to improve network capacity while considering energy efficiency and signaling overhead in D2D communications.

Joint Uplink and Downlink Cell Selection in Cognitive Small-Cell Heterogeneous Networks [

61]: In this paper, the optimization of energy efficiency in cognitive small-cell heterogeneous networks through a joint uplink and downlink cell selection approach was discussed, and a heuristic algorithm that efficiently associates user equipment (UE) by considering both access and backhaul energy demand was introduced. The algorithm aims to maximize network energy efficiency while maintaining UE QoS. It consists of two stages: initial candidate cell selection based on UE requirements and backhaul conditions, followed by the selection of the optimal cell based on backhaul load and available resources. The algorithm outperformed reference approaches in terms of energy efficiency, spectral efficiency and UE power consumption.
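The two-stage structure described above can be sketched as a filter followed by a ranking. The code below is only an illustration under stated assumptions: the `Cell` fields, the 0.9 backhaul-load threshold and the tie-breaking rule are hypothetical and do not reproduce the energy model of the cited paper.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    name: str
    free_rate_mbps: float   # rate the access link can still offer (assumed field)
    backhaul_load: float    # fraction of backhaul capacity in use, 0..1
    free_prbs: int          # unallocated physical resource blocks


def select_cell(cells, required_rate_mbps, max_backhaul_load=0.9):
    """Two-stage selection: filter candidate cells, then rank them."""
    # Stage 1: keep cells that meet the UE requirement and backhaul condition.
    candidates = [
        c for c in cells
        if c.free_rate_mbps >= required_rate_mbps
        and c.backhaul_load <= max_backhaul_load
    ]
    if not candidates:
        return None
    # Stage 2: prefer the lowest backhaul load, then the most free resources.
    return min(candidates, key=lambda c: (c.backhaul_load, -c.free_prbs))


# Hypothetical snapshot of four cells seen by one UE.
cells = [
    Cell("macro", 50.0, 0.95, 40),
    Cell("small_a", 20.0, 0.40, 10),
    Cell("small_b", 25.0, 0.40, 30),
    Cell("small_c", 5.0, 0.10, 50),
]
chosen = select_cell(cells, required_rate_mbps=10.0)
print(chosen.name if chosen else "no feasible cell")
```

Separating feasibility from ranking keeps the QoS requirement a hard constraint, so the second stage can only trade off backhaul load and resources among cells that already satisfy the UE.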

Swarm Intelligence for Next-Generation Networks [

62]: The main objective of this paper was to explore the application of Swarm Intelligence (SI) techniques in next-generation networks, especially in the context of wireless communications for 5G and 6G. The algorithms used included particle swarm optimization (PSO), ant colony optimization (ACO), the artificial bee colony algorithm, the firefly algorithm, the gray wolf optimizer and the whale optimization algorithm. Regarding the results, the use of SI techniques such as PSO and ACO showed promising results regarding the optimization of wireless communication systems, improving D2D transmission rate, user association, load balancing, MIMO detection, beamforming and channel estimation. SI techniques have been applied to enhance network security, UAV communication designs and clustering and routing in IoT networks within 5G networks, showcasing their potential in optimizing network performance and security.
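For readers unfamiliar with swarm intelligence, the following sketch shows a basic particle swarm optimizer applied to a toy load balancing objective. The three-cell scenario, the initial loads (0.9, 0.3 and 0.5), the variance-based objective and the PSO coefficients are assumptions chosen for illustration and are not taken from the surveyed paper.

```python
import random


def imbalance(x):
    """Variance of three cell loads after offloading x[0] and x[1] from the hot cell."""
    loads = [0.9 - x[0] - x[1], 0.3 + x[0], 0.5 + x[1]]
    mean = sum(loads) / 3
    return sum((load - mean) ** 2 for load in loads) / 3


def pso(objective, dim=2, lo=0.0, hi=0.9, particles=30, iters=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Basic global-best PSO with positions clamped to [lo, hi]."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(particles)]
    vel = [[0.0] * dim for _ in range(particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(iters):
        for i in range(particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # cognitive pull
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # social pull
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


best_split, best_value = pso(imbalance)
print(best_split, best_value)
```

In this toy problem, the swarm should converge toward the split that equalizes the three loads. Practical formulations would add capacity constraints and a more realistic link between the decision variables and the resulting cell loads.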

Load-Aware Self-Organizing User-Centric Dynamic CoMP Clustering for 5G Networks [

63]: In this paper, the authors introduced load-aware, user-centric Coordinated Multi-Point (CoMP) clustering algorithms for 5G networks, with the primary aim of enhancing spectral efficiency, load balancing and user satisfaction by dynamically adjusting cluster sizes in response to network conditions and user behavior. The clustering algorithm expands the cluster size in heavily loaded cells to maximize capacity and incorporates a re-clustering mechanism that migrates users from overloaded cells to less loaded ones, thereby reducing user dissatisfaction and increasing system throughput, which is particularly beneficial in dense deployment scenarios. The simulation results demonstrated significant gains in user satisfaction, load distribution and system throughput, effectively mitigating load imbalances in hotspot areas and improving cluster utilization in highly loaded cells.

5. Discussion

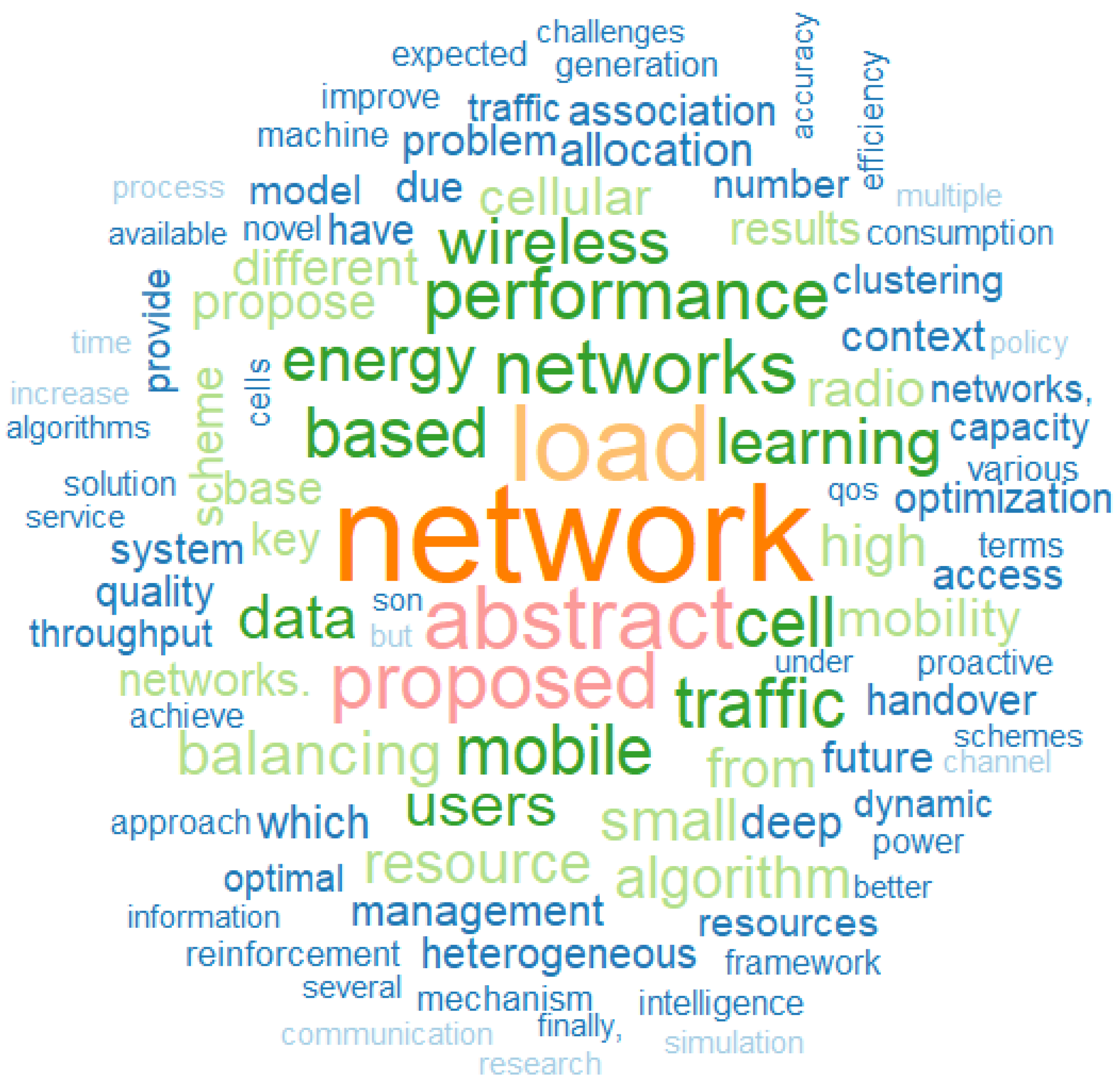

Most of the results are presented in international journals or have been presented at conferences and congresses. We can classify them into three main areas: (1) context awareness, (2) resource allocation, and (3) artificial intelligence. This is reasonable, since the study field considered in this research combines these three areas.

This article presents a classification of the articles based on their content and study area. The categories are as follows: Load Balancing (LB), Mobility Prediction (MP), Mobility Load Balancing (MLB), Resource Allocation (RA), Self-Organizing Networks (SONs), Long-Term Evolution (LTE) and Long Term Evolution—Advanced (LTE-A), 5G, Machine Learning (ML), Deep Learning (DL), Context-Aware (CA), Load Management (LM), and Handover (HO).

The categorization is detailed in

Table 2 and further visualized in

Figure 4 using a pie chart for a clearer representation.

Although there is a significant body of literature on the design of cellular networks, especially concerning load balancing in networks, the reality is that research on the application of artificial intelligence—specifically the use of both automatic and semi-automatic algorithms for the allocation of network resources—remains scarce. From the analyzed studies, the authors of this article clearly determined the type of algorithm (semi-automatic, automatic or hybrid) used in the papers. This information is summarized in

Table 3 and, for better visualization of the data, we present an associated bar plot in

Figure 5.

Load balancing in cellular networks involves multiple stages, so identifying which type of algorithm is best suited for each stage remains essential. In addition, the evolution of load balancing algorithms reflects the shifting performance priorities and architectural demands of mobile networks during the 4G to 5G transition (2014–2023).

Semi-automatic algorithms, which combine rule-based human intervention with threshold-driven automation, were instrumental in optimizing throughput and reliability for mature 4G deployments. For example, LTE-Advanced carrier aggregation strategies (2014–2018) achieved peak throughputs of 1 Gbps in urban macrocells by enabling operators to manually prioritize traffic sectors, although at the cost of limited adaptability to dynamic 5G requirements.

In contrast, fully automatic algorithms, driven by AI/ML frameworks, emerged as 5G standardization accelerated (post 2018), prioritizing ultra-low latency (<10 ms for URLLC) and energy efficiency. A case study [

46] demonstrated that reinforcement learning-based load balancers reduced base station energy consumption by 30% in ultra-dense networks while simultaneously enhancing scalability for IoT deployments. Hybrid algorithms bridged these eras, harmonizing legacy 4G infrastructure with 5G innovations.

For example, SDN-enabled hybrid approaches [

29] dynamically allocated traffic between 4G and 5G nodes, maintaining 85% spectral efficiency during network slicing trials. This taxonomy underscores a critical trade-off: while semi-automatic methods offered stability for 4G’s throughput-centric KPIs, automatic and hybrid paradigms unlocked the agility needed for 5G’s heterogeneous service demands.

Collectively, these algorithmic advancements highlight how load balancing strategies evolved from human-dependent optimization to intelligent, context-aware systems, mirroring the broader technological leap from 4G to 5G.

The taxonomy of load balancing algorithms and their alignment with evolving network demands is systematically summarized in

Table 4. This table synthesizes the interplay between semi-automatic, automatic and hybrid approaches, their prioritized performance indicators (e.g., throughput, latency, energy efficiency) and the techniques validated in real-world case studies (2014–2023).

Regarding the methodology used, it is worth highlighting that PRISMA provides a clear and systematic structure for conducting reviews, which enhances the quality and transparency of the study. Moreover, the traceability offered by the PRISMA flow diagram is a valuable asset for quickly identifying the amount of information and documentation available on the subject. However, the process can be lengthy and tedious, and bias may be introduced when adapting bibliographic references to the research topic. In addition, if there is limited documentation in the study area, the use of PRISMA may further restrict the available published literature. Finally, we have added a cost–benefit matrix (

Table 5) to analyze the advantages and disadvantages of AI adoption.

6. Conclusions

In recent years, there has been a significant wave of research on the design and dimensioning of mobile networks, especially with respect to load balancing techniques at the radio interface. However, there have been no comprehensive studies that address the application of artificial intelligence-based computational algorithms, whether automatic, semi-automatic or hybrid, in the load balancing of traffic and resources managed by mobile networks.

This article conducted an SLR following PRISMA as an analytical methodology. Out of 1202 identified papers, 45 were selected for further analysis. As a result, we presented an analysis of the state of the art at the intersection of context awareness, resource allocation and artificial intelligence, aiming to delineate the scope of research and identify any existing gaps.

By adhering to PRISMA’s documentation standards, we systematically recorded exclusion criteria, resolved discrepancies through consensus and generated a flow diagram (see

Figure 1) to visualize attrition rates. This approach not only strengthened the validity of findings but also enabled replicability in future studies on adaptive algorithms for socially influenced network environments.

The results revealed that, despite the relative scarcity of literature on the application of artificial intelligence-based computational algorithms, many types of algorithms are being used in the load balancing context.

Figure 4 shows the categorization of the studies based on their relation to the research topics, while

Figure 5 displays a bar plot indicating the number of semi-automatic (19), automatic (20) and hybrid (6) algorithms utilized in the analyzed studies.
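As a quick consistency check on the figures reported above, the short calculation below derives the share of each algorithm type among the 45 selected studies and the proportion of the 1202 identified papers that were retained. Only the counts come from this article; the variable names are illustrative.

```python
# Counts reported in this review.
counts = {"semi-automatic": 19, "automatic": 20, "hybrid": 6}
identified = 1202

total = sum(counts.values())  # should match the number of selected papers
shares = {kind: round(100 * n / total, 1) for kind, n in counts.items()}
selection_rate = round(100 * total / identified, 2)

print(total)           # 45
print(shares)          # {'semi-automatic': 42.2, 'automatic': 44.4, 'hybrid': 13.3}
print(selection_rate)  # 3.74
```

The three categories add up to the 45 selected papers, with automatic and semi-automatic approaches each accounting for a little over 40% and hybrid approaches for roughly 13%.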

In the course of carrying out this study, a series of more specific questions emerged, as follows:

What are the most suitable algorithms for radio resource re-allocation in next-generation mobile networks, based on machine learning models for load balancing?

What are the most suitable algorithms for radio resource re-allocation in next-generation mobile networks, based on machine learning models for localization?

What is the computational cost relating to the use of hybrid algorithms versus semi-automatic and/or automatic algorithms?

How can we classify semi-automatic, automatic and hybrid algorithms when applied to different context-aware approaches?

In addition, this study highlights the strategic importance of load balancing algorithms to address 5G optimization challenges such as latency reduction, dynamic resource allocation and scalability in dense user environments. Adaptive algorithmic frameworks, such as those that integrate network slicing and edge computing, are critical to enable mission-critical applications such as industrial automation and ultra-reliable communications. Our classification provides practical insights for balancing computational efficiency and quality of service in heterogeneous architectures.

In closing, we highlight five key trajectories for the development of load balancing algorithms. (1) Full automation will dominate in 5G/6G networks, driven by AI/ML for real-time adaptability. (2) Semi-automation will persist in high-risk scenarios (e.g., ethics, compliance). (3) Hybrid algorithms will continue to be transition enablers, reconciling legacy and next-generation infrastructures. (4) Context-aware automation will prioritize explainability in safety-critical domains. (5) Sustainability-driven automation will optimize energy efficiency in the context of green grid mandates.

Our analysis identifies inherent limitations of current load balancing algorithms that affect the feasibility of next-generation deployment. Automatic approaches incur latency penalties due to computational overhead during dynamic state transitions, while semi-automatic/hybrid methods exhibit accuracy degradation when high-quality training data is not available. These drawbacks, compounded by poor explainability and lack of alignment with latency targets, highlight the importance of trade-offs between algorithmic sophistication and operational deployability in 5G/6G networks.

Furthermore, case studies demonstrate the operational effectiveness of the proposed taxonomy. Automatic algorithms achieve fewer handover failures in smart factory URLLC scenarios, meeting low latency objectives. Semi-automatic algorithms resolve congestion in large scenarios (e.g., stadiums) with higher access success rates. In contrast, hybrid models ensure high reliability during critical network outage transitions in 5G testing.

While existing taxonomies classify load balancing algorithms by technical attributes, our framework introduces an operational autonomy axis aligned with zero-touch industrial paradigms. This reveals critical insights for the deployment of next-generation networks.

Our taxonomy transcends incremental evolution by revealing how autonomy-centric load balancing will pioneer 6G’s cognitive networks [

64]. Quantum-secure hybrids [

65] and holographic projection leveraging electromagnetic information theory [

66] will transform balancing into a physics-aware service. These disruptions—grounded in 3GPP Release 19 studies—position our framework as the governance backbone for zero-touch 6G ecosystems [

67].

Although this systematic review provides a comprehensive taxonomy of load balancing algorithms for next-generation networks, we acknowledge the limitation regarding experimental validation. The absence of an empirical testing framework is due to the conceptual nature of this work, focusing on classification and gap analysis rather than performance benchmarking.

However, this limitation reveals a fundamental research avenue: future studies should rigorously validate these algorithmic categories through controlled simulations and field testing. Specifically, we propose the following: comparative tests between algorithms in simulated ultra-dense urban scenarios (e.g., using NS-3/5G-LENA) with QoS metrics (latency, throughput, handover failure rate) as key variables [

68], followed by real-world validations through partnerships with mobile operators, deploying the best-performing algorithms in standalone 5G networks. These experiments will quantitatively establish the various deployment contexts while addressing practical constraints such as computational overhead and scalability. Our proposed experimental framework (see

Supplementary S3) employs 12 normalized KPIs, including energy per bit and PRB variance, to holistically evaluate the trade-offs between QoS, resource efficiency, and sustainability.
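To indicate how two of the KPIs mentioned above could be computed and normalized, a minimal sketch follows. The definitions used here (energy per bit as consumed energy divided by delivered bits, PRB variance as the population variance of per-cell PRB utilization, and min-max normalization across candidate algorithms) are common conventions assumed for illustration; they are not taken from the supplementary material, and the sample values are hypothetical.

```python
from statistics import pvariance


def energy_per_bit(energy_joules, bits_delivered):
    """Energy spent per delivered bit (J/bit); lower is better."""
    return energy_joules / bits_delivered


def prb_variance(prb_utilization):
    """Population variance of per-cell PRB utilization; lower means better balance."""
    return pvariance(prb_utilization)


def min_max(values):
    """Scale a list of KPI values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical measurements for two candidate algorithms.
runs = {
    "algo_a": {"energy": 120.0, "bits": 6e8, "prb": [0.9, 0.5, 0.4]},
    "algo_b": {"energy": 100.0, "bits": 4e8, "prb": [0.6, 0.6, 0.6]},
}
names = list(runs)
epb_norm = min_max([energy_per_bit(runs[n]["energy"], runs[n]["bits"]) for n in names])
var_norm = min_max([prb_variance(runs[n]["prb"]) for n in names])
print(dict(zip(names, zip(epb_norm, var_norm))))
```

In this invented example, one algorithm is more energy efficient while the other balances PRB usage better, which is the kind of trade-off a normalized multi-KPI comparison is meant to expose.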

Author Contributions

Conceptualization, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; methodology, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; software, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; validation, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; formal analysis, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; investigation, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; resources, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; data curation, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; writing (original draft), J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; writing (review and editing), J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; visualization, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; supervision, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; project administration, J.O.-A., C.S.-C., R.T., J.I.G. and S.F.; funding acquisition, J.O.-A., C.S.-C., R.T., J.I.G. and S.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Clarke, R. Expanding Mobile Wireless Capacity: The Challenges Presented by Technology and Economics. Telecommun. Policy 2013, 38, 693–708. [Google Scholar] [CrossRef]

- Kanellopoulos, D.; Sharma, V.K.; Panagiotakopoulos, T.; Kameas, A. Networking Architectures and Protocols for IoT Applications in Smart Cities: Recent Developments and Perspectives. Electronics 2023, 12, 2490. [Google Scholar] [CrossRef]

- Ochoa-Aldeán, J.; Silva-Cárdenas, C. A New Linear Model for the Calculation of Routing Metrics in 802.11s Using ns-3 and RStudio. Computers 2023, 12, 172. [Google Scholar] [CrossRef]

- Saad, W.K.; Shayea, I.; Alhammadi, A.; Sheikh, M.M.; El-Saleh, A.A. Handover and load balancing self-optimization models in 5G mobile networks. Eng. Sci. Technol. Int. J. 2023, 42, 101418. [Google Scholar] [CrossRef]

- Kaur, A.; Kaur, B.; Singh, P.; Devgan, M.; Toor, H. Load Balancing Optimization Based on Deep Learning Approach in Cloud Environment. Int. J. Inf. Technol. Comput. Sci. 2020, 12, 8–18. [Google Scholar] [CrossRef]

- Martínez, K.Y.; Torres, R.B.; Gonzalez, J.I.; Ochoa, J.G.; Fortes, S. Evaluation of Load Balancing Systems for Urban-Macro Mobile Network Based on Social Context. In Proceedings of the 2023 IEEE Seventh Ecuador Technical Chapters Meeting (ECTM), Ambato, Ecuador, 10–13 October 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Lawson, B.; Samson, D. Developing Innovation Capability in Organisations: A Dynamic Capabilities Approach. Int. J. Innov. Manag. 2001, 5, 377–400. [Google Scholar] [CrossRef]

- Fortes, S.; Aguilar Garcia, A.; Fernandez-Luque, J.A.; Garrido, A.; Barco, R. Context-Aware Self-Healing: User Equipment as the Main Source of Information for Small-Cell Indoor Networks. IEEE Veh. Technol. Mag. 2016, 11, 76–85. [Google Scholar] [CrossRef]

- Asghar, M.Z.; Nieminen, P.; Hämäläinen, S.; Ristaniemi, T.; Imran, M.A.; Hämäläinen, T. Towards proactive context-aware self-healing for 5G networks. Comput. Netw. 2017, 128, 5–13.

- Luengo, P.M.; Moreno, R.B. Optimization of Mobility Parameters Using Fuzzy Logic and Reinforcement Learning in Self-Organizing Networks; Servicio de Publicaciones y Divulgación Científica—Universidad de Málaga: Málaga, Spain, 2013.

- Rodríguez, J.; Bandera, I.D.L.; Muñoz, P.; Barco, R. Load balancing in a realistic urban scenario for LTE networks. In Proceedings of the IEEE Vehicular Technology Conference, Budapest, Hungary, 15–18 May 2011.

- Villegas, J.; Baena, E.; Fortes, S.; Barco, R. Social-Aware Forecasting for Cellular Networks Metrics. IEEE Commun. Lett. 2021, 25, 1931–1934.

- Muñoz, P.; Barco, R.; de la Bandera, I. Optimization of load balancing using fuzzy Q-Learning for next generation wireless networks. Expert Syst. Appl. 2013, 40, 984–994.

- Qin, Z.; Cao, F.; Yang, Y.; Wang, S.; Liu, Y.; Tan, C.; Zhang, D. CellPred: A Behavior-Aware Scheme for Cellular Data Usage Prediction. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 1–24.

- Ma, B.; Yang, B.; Zhu, Y.; Zhang, J. Context-Aware Proactive 5G Load Balancing and Optimization for Urban Areas. IEEE Access 2020, 8, 8405–8417.

- Fortes, S.; Palacios, D.; Serrano, I.; Barco, R. Applying Social Event Data for the Management of Cellular Networks. IEEE Commun. Mag. 2018, 56, 36–43.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097.

- Trifu, A.; Smîdu, E.; Badea, D.O.; Bulboacă, E.; Haralambie, V. Applying the PRISMA method for obtaining systematic reviews of occupational safety issues in literature search. MATEC Web Conf. 2022, 354, 00052.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Torres, R.; Fortes, S.; Baena, E.; Barco, R. Social-Aware Load Balancing System for Crowds in Cellular Networks. IEEE Access 2021, 9, 107812–107823.

- Kuruvatti, N.P.; Saavedra Molano, J.F.; Schotten, H.D. Mobility context awareness to improve Quality of Experience in traffic dense cellular networks. In Proceedings of the 2017 24th International Conference on Telecommunications (ICT), Limassol, Cyprus, 3–5 May 2017; pp. 1–7.

- Guidolin, F.; Pappalardo, I.; Zanella, A.; Zorzi, M. Context-Aware Handover Policies in HetNets. IEEE Trans. Wirel. Commun. 2016, 15, 1895–1906.

- Lee, H.; Vahid, S.; Moessner, K. Traffic-aware carrier allocation with aggregation for load balancing. In Proceedings of the 2017 European Conference on Networks and Communications (EuCNC), Oulu, Finland, 12–15 June 2017; pp. 1–6.

- Aguilar-Garcia, A.; Fortes, S.; Fernandez Duran, A.; Barco, R. Context-Aware Self-Optimization: Evolution Based on the Use Case of Load Balancing in Small-Cell Networks. IEEE Veh. Technol. Mag. 2016, 11, 86–95.

- Ali, K.B.; Zarai, F.; Khdhir, R.; Obaidat, M.S.; Kamoun, L. QoS Aware Predictive Radio Resource Management Approach Based on MIH Protocol. IEEE Syst. J. 2018, 12, 1862–1873.

- Zhang, Y.; Xu, Y.; Anpalagan, A.; Wu, Q.; Xu, Y.; Sun, Y.; Feng, S.; Luo, Y. Context-Aware Group Buying in Ultra-Dense Small Cell Networks: Unity Is Strength. IEEE Wirel. Commun. 2019, 26, 118–125.

- Lin, Y.; Zhang, R.; Li, C.; Yang, L.; Hanzo, L. Graph-Based Joint User-Centric Overlapped Clustering and Resource Allocation in Ultradense Networks. IEEE Trans. Veh. Technol. 2018, 67, 4440–4453.

- Jahid, A.; Alsharif, M.H.; Uthansakul, P.; Nebhen, J.; Aly, A.A. Energy Efficient Throughput Aware Traffic Load Balancing in Green Cellular Networks. IEEE Access 2021, 9, 90587–90602.

- Post, B.; Borst, S.; Van Den Berg, H. A self-organizing base station sleeping and user association strategy for dense cellular networks. Wirel. Netw. 2021, 27, 307–322.

- Taboada, I.; Aalto, S.; Lassila, P.; Liberal, F. Delay- and energy-aware load balancing in ultra-dense heterogeneous 5G networks. Trans. Emerg. Telecommun. Technol. 2017, 28, e3170.

- Hossain, M.S.; Jahid, A.; Islam, K.Z.; Alsharif, M.H.; Rahman, K.M.; Rahman, M.F.; Hossain, M.F. Towards Energy Efficient Load Balancing for Sustainable Green Wireless Networks Under Optimal Power Supply. IEEE Access 2020, 8, 200635–200654.

- Simsek, M.; Bennis, M.; Guvenc, I. Mobility management in HetNets: A learning-based perspective. EURASIP J. Wirel. Commun. Netw. 2015, 2015, 26.

- Siddique, U.; Tabassum, H.; Hossain, E.; Kim, D.I. Channel-Access-Aware User Association With Interference Coordination in Two-Tier Downlink Cellular Networks. IEEE Trans. Veh. Technol. 2016, 65, 5579–5594.

- Aguilar-Garcia, A.; Fortes, S.; Garrido, A.; Fernandez-Duran, A.; Barco, R. Improving load balancing techniques by location awareness at indoor femtocell networks. EURASIP J. Wirel. Commun. Netw. 2016, 2016, 201.

- Zhang, P.; Li, J.; Tang, Y.; Wang, H.; Cao, T. A Novel, Efficient, Green and Real-Time Load Balancing Algorithm for 5G Network Measurement Report Collecting Clusters. J. Circuits Syst. Comput. 2022, 31, 2250313.

- Barmpounakis, S.; Kaloxylos, A.; Spapis, P.; Alonistioti, N. Context-aware, user-driven, network-controlled RAT selection for 5G networks. Comput. Netw. 2017, 113, 124–147.

- Li, C.; Zuo, X.; Mohammed, A.S. A new fuzzy-based method for energy-aware resource allocation in vehicular cloud computing using a nature-inspired algorithm. Sustain. Comput. Inform. Syst. 2022, 36, 100806.

- Wen, Y.F.; Lien, T.H.; Lin, F.S. Application association and load balancing to enhance energy efficiency in heterogeneous wireless networks. Comput. Electr. Eng. 2018, 68, 348–365.

- Qin, M.; Yang, Q.; Cheng, N.; Zhou, H.; Rao, R.R.; Shen, X. Machine Learning Aided Context-Aware Self-Healing Management for Ultra Dense Networks With QoS Provisions. IEEE Trans. Veh. Technol. 2018, 67, 12339–12351.

- Simsek, M.; Bennis, M.; Guvenc, I. Context-aware mobility management in HetNets: A reinforcement learning approach. In Proceedings of the 2015 IEEE Wireless Communications and Networking Conference (WCNC), New Orleans, LA, USA, 9–12 March 2015; pp. 1536–1541.

- Niasar, F.A.; Aghdam, M.J.; Nabipour, M.; Momen, A. Mobility management in HetNets consider on QOS and improve Throughput. In Proceedings of the 2021 IEEE 11th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 27–30 January 2021; pp. 1354–1359.

- Iacoboaiea, O.C.; Sayrac, B.; Ben Jemaa, S.; Bianchi, P. SON conflict resolution using reinforcement learning with state aggregation. In Proceedings of the 4th Workshop on All Things Cellular: Operations, Applications & Challenges, Chicago, IL, USA, 22 August 2014; pp. 15–20.

- Hao, Y.; Li, F.; Zhao, C.; Yang, S. Delay-Oriented Scheduling in 5G Downlink Wireless Networks Based on Reinforcement Learning With Partial Observations. IEEE/ACM Trans. Netw. 2023, 31, 380–394.

- Saad, J.; Khawam, K.; Yassin, M.; Costanzo, S.; Boulos, K. Crowding Game and Deep Q-Networks for Dynamic RAN Slicing in 5G Networks. In Proceedings of the 20th ACM International Symposium on Mobility Management and Wireless Access, Montreal, QC, Canada, 24–28 October 2022; pp. 37–46.

- Albanna, A.; Yousefi’Zadeh, H. Congestion Minimization of LTE Networks: A Deep Learning Approach. IEEE/ACM Trans. Netw. 2020, 28, 347–359.

- Alsuhli, G.; Banawan, K.; Attiah, K.; Elezabi, A.; Seddik, K.; Gaber, A.; Zaki, M.; Gadallah, Y. Mobility Load Management in Cellular Networks: A Deep Reinforcement Learning Approach. IEEE Trans. Mob. Comput. 2021, 22, 1581–1598.

- Zhang, Y.; Xiong, L.; Yu, J. Deep Learning Based User Association in Heterogeneous Wireless Networks. IEEE Access 2020, 8, 197439–197447.

- Ashwin, M.; Alqahtani, A.S.; Mubarakali, A.; Sivakumar, B. Efficient resource management in 6G communication networks using hybrid quantum deep learning model. Comput. Electr. Eng. 2023, 106, 108565.

- Rebecchi, F.; Valerio, L.; Bruno, R.; Conan, V.; De Amorim, M.D.; Passarella, A. A joint multicast/D2D learning-based approach to LTE traffic offloading. Comput. Commun. 2015, 72, 26–37.

- Pirmagomedov, R.; Moltchanov, D.; Samuylov, A.; Orsino, A.; Torsner, J.; Andreev, S.; Koucheryavy, Y. Characterizing throughput and convergence time in dynamic multi-connectivity 5G deployments. Comput. Commun. 2022, 187, 45–58.

- Marques, J.P.P.; Cunha, D.C.; Harada, L.M.; Silva, L.N.; Silva, I.D. A cost-effective trilateration-based radio localization algorithm using machine learning and sequential least-square programming optimization. Comput. Commun. 2021, 177, 1–9.

- Sun, G.; Zhan, T.; Gordon Owusu, B.; Daniel, A.M.; Liu, G.; Jiang, W. Revised reinforcement learning based on anchor graph hashing for autonomous cell activation in cloud-RANs. Future Gener. Comput. Syst. 2020, 104, 60–73.

- Khan, S.; Hussain, A.; Nazir, S.; Khan, F.; Oad, A.; Alshehri, M.D. Efficient and reliable hybrid deep learning-enabled model for congestion control in 5G/6G networks. Comput. Commun. 2022, 182, 31–40.

- Cheng, Z.; Chen, N.; Liu, B.; Gao, Z.; Huang, L.; Du, X.; Guizani, M. Joint user association and resource allocation in HetNets based on user mobility prediction. Comput. Netw. 2020, 177, 107312.

- Tomè, D.; Monti, F.; Baroffio, L.; Bondi, L.; Tagliasacchi, M.; Tubaro, S. Deep Convolutional Neural Networks for pedestrian detection. Signal Process. Image Commun. 2016, 47, 482–489.

- Munaye, Y.Y.; Lin, H.-P.; Adege, A.B.; Tarekegn, G.B. UAV Positioning for Throughput Maximization Using Deep Learning Approaches. Sensors 2019, 19, 2775.

- Tang, F.; Zhou, Y.; Kato, N. Deep Reinforcement Learning for Dynamic Uplink/Downlink Resource Allocation in High Mobility 5G HetNet. IEEE J. Sel. Areas Commun. 2020, 38, 2773–2782.

- Mannem, K.; Rao, P.N.; Reddy, S.M. Handover Decision in LTE & LTE-A based Hetnets using Regression Heuristics of Quality Metrics (RHQM) for Optimal Load Balancing. In Proceedings of the 2020 Fourth International Conference on Inventive Systems and Control (ICISC), Coimbatore, India, 8–10 January 2020; pp. 732–739.

- Jaber, M.; Onireti, O.; Imran, M.A. Backhaul-Aware and Context-Aware User-Cell Association Approach. In Proceedings of the ICC 2019—2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–7.

- Kollias, G.; Adelantado, F.; Verikoukis, C. Spectral Efficient and Energy Aware Clustering in Cellular Networks. IEEE Trans. Veh. Technol. 2017, 66, 9263–9274.

- Mesodiakaki, A.; Adelantado, F.; Alonso, L.; Verikoukis, C. Joint uplink and downlink cell selection in cognitive small cell heterogeneous networks. In Proceedings of the 2014 IEEE Global Communications Conference, Austin, TX, USA, 8–12 December 2014; pp. 2643–2648.

- Pham, Q.V.; Nguyen, D.C.; Mirjalili, S.; Hoang, D.T.; Nguyen, D.N.; Pathirana, P.N.; Hwang, W.J. Swarm intelligence for next-generation networks: Recent advances and applications. J. Netw. Comput. Appl. 2021, 191, 103141.

- Bassoy, S.; Jaber, M.; Imran, M.A.; Xiao, P. Load Aware Self-Organising User-Centric Dynamic CoMP Clustering for 5G Networks. IEEE Access 2016, 4, 2895–2906.

- Hexa-X-II Consortium. D3.1: Expanded 6G Vision, Use Cases and Societal Values; Technical Report; European Commission: Paris, France, 2023.

- National Institute of Standards and Technology. FIPS 203 (Initial Public Draft): Module-Lattice-Based Key-Encapsulation Mechanism Standard; Federal Information Processing Standards Publication: Gaithersburg, MD, USA, 2023.

- International Telecommunication Union. M.2160: Framework and Overall Objectives of the Future Development of IMT for 2030 and Beyond; Recommendation, ITU Radiocommunication Sector: Geneva, Switzerland, 2023.

- 3rd Generation Partnership Project. TR 22.841: Study on Enhancement for Cyber-Physical Control Applications in Vertical Domains; Technical Report V19.0.0; 3GPP. 2024. Available online: https://portal.3gpp.org/desktopmodules/Specifications/SpecificationDetails.aspx?specificationId=3684 (accessed on 22 April 2025).

- Patriciello, N.; Lagen, S.; Bojovic, B.; Giupponi, L. An Integrated 5G/6G System-Level Simulator for ns-3: Design, Calibration, and Validation. IEEE Trans. Mob. Comput. 2023, 22, 6076–6091.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.