Abstract

Resource-constrained devices, including low-power Internet of Things (IoT) nodes, microcontrollers, and edge computing platforms, have increasingly become the focal point for deploying on-device intelligence. By integrating artificial intelligence (AI) closer to data sources, these systems aim to achieve faster responses, reduce bandwidth usage, and preserve privacy. Nevertheless, implementing AI in limited hardware environments poses substantial challenges in terms of computation, energy efficiency, model complexity, and reliability. This paper provides a comprehensive review of state-of-the-art methodologies, examining how recent advances in model compression, TinyML frameworks, and federated learning paradigms are enabling AI in tightly constrained devices. We highlight both established and emergent techniques for optimizing resource usage while addressing security, privacy, and ethical concerns. We then illustrate opportunities in key application domains—such as healthcare, smart cities, agriculture, and environmental monitoring—where localized intelligence on resource-limited devices can have broad societal impact. By exploring architectural co-design strategies, algorithmic innovations, and pressing research gaps, this paper offers a roadmap for future investigations and industrial applications of AI in resource-constrained devices.

1. Introduction

Artificial intelligence (AI) has seen extraordinary advances over the past decade, driven by improvements in deep neural network architectures, the availability of massive datasets, and the proliferation of specialized hardware accelerators [1]. As these technologies continue to mature, there is a growing interest in pushing AI capabilities beyond centralized data centers and cloud-based architectures to the very edge of the network, where data are initially generated and collected [2,3]. This shift toward edge or embedded AI is driven by a set of converging demands, including the need to reduce inference latency for real-time applications, the desire to cut bandwidth costs by transmitting less data to the cloud, and the imperative to preserve user privacy through localized data processing [4]. Despite these strong motivations, implementing AI models on resource-constrained devices remains a formidable challenge. Traditional deep learning pipelines typically rely on large-scale computing clusters, powered by graphics processing units (GPUs) or tensor processing units (TPUs), which deliver the memory and arithmetic throughput needed to train very large models and run inference with them [5].

In stark contrast, many IoT and embedded systems operate with only tens of kilobytes to a few megabytes of memory, minimal floating-point capabilities, and extremely tight energy budgets [6]. Consequently, directly porting standard AI methods to edge devices runs into severe computational bottlenecks, prompting researchers and practitioners to explore alternative approaches [7]. Model compression techniques, including quantization, pruning, and knowledge distillation, have emerged as key enablers in shrinking neural network footprints for edge deployment. Simultaneously, frameworks like TensorFlow Lite for Microcontrollers and microTVM are specifically designed to streamline inference on microcontrollers and other resource-limited hardware [8]. Furthermore, federated learning (FL) has opened avenues for collaborative, decentralized model training, which not only conserves bandwidth but also enhances data privacy by keeping sensitive information local [9]. However, challenges persist, including the need to balance model accuracy with hardware limitations, ensure robust performance under real-time constraints, and maintain data security against sophisticated adversaries. The key contributions of this manuscript are as follows:

- A comprehensive analysis of the fundamental challenges inherent in deploying AI on resource-constrained hardware, including computational limits, memory constraints, energy efficiency, real-time requirements, data security, and reliability;

- An in-depth examination of state-of-the-art methodologies and emergent techniques enabling TinyML, covering model compression strategies (quantization, pruning, knowledge distillation), specialized software frameworks, hardware accelerators, and distributed learning paradigms like federated learning;

- An illustration of the significant opportunities and transformative potential of resource-constrained AI across diverse application domains, including healthcare, smart cities, smart agriculture, and Industry 4.0, highlighting the unique demands of each context;

- A discussion of critical cross-cutting themes vital for responsible deployment, such as data security, privacy, ethical considerations, and environmental sustainability in the context of edge AI;

- Identification of pressing research gaps and future directions, offering a roadmap for advancing the field of TinyML and resource-constrained AI systems.

By systematically reviewing these aspects, this paper provides a holistic perspective on enabling AI at the edge and in embedded systems. The remainder of this paper is organized as follows: Section 2 provides background and motivation, Section 3 details the key challenges, Section 4 reviews emerging solutions and state-of-the-art approaches, Section 5 illustrates opportunities in key application domains, Section 6 outlines future directions, and Section 7 concludes the paper.

2. Background and Motivation

2.1. The Evolution of TinyML

The past decade has witnessed an extraordinary surge in AI capabilities, primarily fueled by breakthroughs in deep learning architectures, the availability of massive datasets, and the proliferation of powerful, centralized computing resources like GPUs and TPUs in data centers and cloud environments [1]. This cloud-centric model enabled unprecedented advances in complex tasks such as image recognition, natural language processing, and recommendation systems, processing vast amounts of data remotely.

However, the landscape of computing has been simultaneously transformed by the explosive growth of the Internet of Things (IoT). Billions of devices, ranging from simple sensors and actuators to complex embedded systems, are being deployed across diverse environments—homes, industries, cities, and remote natural settings [2,5,10]. These devices generate data at an unprecedented rate, creating a fundamental tension with the traditional cloud model. Transmitting all raw data to the cloud incurs significant bandwidth costs, introduces unacceptable latency for real-time applications, and raises substantial privacy concerns by centralizing sensitive information [4,11,12,13].

This tension has catalyzed a significant shift in the AI paradigm: moving intelligence closer to the data source—to the “edge” of the network [2]. Edge computing broadly encompasses processing data on local servers or gateways near the devices. Tiny machine learning (TinyML) represents a specific, increasingly vital subset of this edge AI movement, focusing explicitly on deploying machine learning models on ultra-low-power, resource-constrained microcontrollers and embedded systems [8,14,15]. These devices operate with severe limitations in terms of computational power (often lacking floating-point units), memory (kilobytes rather than gigabytes), and energy budgets (relying on batteries or energy harvesting) [15,16].

The evolution towards TinyML has been driven by several converging factors:

- The Proliferation of IoT Devices: The sheer volume and diversity of connected devices make a purely cloud-based approach economically and technically unsustainable for many applications [5,10].

- Demand for On-Device Intelligence: Applications like real-time anomaly detection in industrial machinery, immediate wake-word recognition in voice assistants, or continuous health monitoring on wearables require decisions to be made locally with minimal latency [4,17,18].

- Privacy and Security Imperatives: Processing sensitive data (e.g., health metrics, voice commands, location) directly on the device significantly enhances user privacy and reduces the risk of data breaches associated with cloud transmission and storage [12,13].

- Hardware Advancements: While still constrained, modern microcontrollers offer increasing computational capabilities, including specialized instructions or small accelerators suitable for integer-based AI operations [16]. The development of ultra-low-power specialized hardware such as application-specific integrated circuits (ASICs) and field-programmable gate arrays (FPGAs) tailored for AI inference on embedded systems has also been crucial [15,19].

- Software Framework Innovation: The emergence of specialized frameworks and toolchains like TensorFlow Lite for Microcontrollers, microTVM, and CMSIS-NN has abstracted away some of the low-level hardware complexities, making it feasible for developers to optimize and deploy models on these constrained platforms [8,20,21].

TinyML signifies a paradigm shift towards truly pervasive, distributed intelligence. It moves beyond simply collecting data at the edge to processing and acting on it locally, enabling new applications and fundamentally changing how we interact with technology and the environment. Its growing significance lies in its potential to unlock valuable insights from the vast stream of edge data while respecting the constraints imposed by the physical world: power, size, cost, latency, and privacy. This aligns with technological needs (efficient processing, distributed systems), societal demands (privacy, responsiveness), and environmental considerations (reduced data transmission energy, enabling smart agriculture and monitoring for sustainability) [12,22].

The transition described above, driven by the explosive growth of IoT devices and the inherent limitations of the traditional cloud computing model, can be clearly understood by comparing the characteristics of different AI deployment paradigms. As processing moves closer to the data source, design priorities shift dramatically from maximizing computational power to operating within stringent resource constraints. Table 1 provides a comparative overview of these paradigms, highlighting the key drivers and trade-offs at each stage of this evolution.

Table 1.

Evolution of AI deployment.

The limitations of sending data to the cloud, namely latency and privacy concerns, are driving a major shift in AI deployment, a progression summarized in Table 1. Cloud AI remains well suited to large-scale, compute-intensive tasks, while edge AI moves processing closer to the data source to reduce delays. TinyML extends this progression by embedding AI directly on resource-constrained devices. This offers near-instantaneous local processing, improved privacy because raw data can stay on the device, very low power consumption, and scalability to large fleets of devices, including in locations with intermittent or no connectivity. Realizing these advantages requires purpose-built hardware and highly efficient software, paving the way for ubiquitous intelligence.

2.2. Defining Resource-Constrained Devices

Resource-constrained devices are often microcontrollers or small single-board computers that exhibit stringent limitations in memory, compute capacity, and power supply [15]. These devices may offer anywhere from a few kilobytes to a few megabytes of RAM and rely on low-frequency CPUs, making them vastly less capable than typical smartphones or personal computers [31]. Many of them run on batteries or on energy-harvesting sources such as solar or kinetic energy, sometimes only intermittently, which further constrains how often (and how intensively) AI tasks can be executed. Network connectivity also varies widely in such setups, with some devices operating in remote or isolated locations that only occasionally synchronize data [32].

Hardware diversity compounds these challenges. Unlike the relatively homogeneous environments of cloud data centers, embedded systems exist in a fragmented ecosystem of different instruction sets, memory hierarchies, and peripheral configurations [13]. This heterogeneity forces developers to consider platform-specific optimizations to ensure that AI pipelines run efficiently [33]. The specific application also dictates the AI task’s complexity and associated resource needs. Detecting a simple wake word for voice control is computationally inexpensive, while predicting machine anomalies from real-time vibration data demands significant processing power and memory [34]. In each scenario, limited hardware capabilities demand specialized techniques for model design, training, and deployment to ensure that performance metrics—such as inference time, accuracy, and energy usage—meet application requirements [20].

The practical implementation of TinyML applications is deeply intertwined with the capabilities and limitations of the underlying hardware, a landscape marked by significant resource constraints and platform heterogeneity. To concretely illustrate this operational environment, Table 2 presents a selection of commonly used development boards prominent in the TinyML ecosystem. This table highlights the substantial diversity found across these platforms, detailing key specifications such as the microcontroller unit (MCU), CPU architecture, clock frequency, memory resources (flash and SRAM), and physical form factor. By also listing typical applications for each board, the table underscores the varied hardware requirements driven by different edge AI tasks and provides context for the platform-specific optimization challenges inherent in the field.

Table 2.

Examples of commonly used boards for TinyML projects.

2.3. Significance of On-Device AI

Integrating AI directly into constrained devices offers several compelling benefits that align with modern technological and societal needs [50]. One advantage is reduced inference latency, as local processing eliminates the round-trip delay of sending data to a central server, which is critical in real-time systems like drones or industrial robots [18]. Another benefit is improved privacy, as raw data can remain on the device, thereby minimizing exposure to potential eavesdropping or data breaches. This aspect is particularly important in sectors handling sensitive information, such as healthcare and finance, where data protection regulations and ethical considerations are paramount [25].

Additionally, bandwidth conservation emerges as an important incentive for on-device processing. As the volume of IoT data grows, transmitting these data continuously to a remote server can strain networks, particularly in rural or remote settings where connectivity is sporadic or expensive [11]. By performing key inferences locally, the system drastically reduces the volume of data that must be transmitted. In scenarios such as environmental monitoring or agricultural sensing, local AI can differentiate between normal and exceptional conditions, ensuring that only relevant data trigger an alert or a database update [12]. Beyond these technical motivations, on-device AI also paves the way for large-scale, distributed intelligence architectures, where billions of devices can each contribute to localized analysis, collectively forming a robust, decentralized network of computing resources [51].
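The local filtering idea can be illustrated with an event-triggered reporter: the device keeps running statistics of a sensor stream and transmits only readings that deviate strongly from recent behavior. This is a minimal sketch assuming a z-score threshold over Welford running statistics; the class name, threshold, and warm-up length are hypothetical choices, not a method taken from the cited works.

```python
import math


class EventTriggeredReporter:
    """Transmit a reading only when it deviates strongly from the running mean.

    Hypothetical illustration: running mean/variance via Welford's algorithm,
    with a z-score threshold deciding what counts as "exceptional".
    """

    def __init__(self, z_threshold=3.0, warmup=10):
        self.z_threshold = z_threshold  # assumed sensitivity setting
        self.warmup = warmup            # readings collected before judging anomalies
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                   # running sum of squared deviations

    def _update(self, x):
        # Welford's online update: O(1) memory and numerically stable.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def should_transmit(self, x):
        """Return True if x should be sent upstream, then fold it into the stats."""
        transmit = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.z_threshold:
                transmit = True
        self._update(x)
        return transmit


reporter = EventTriggeredReporter()
readings = [20.0 + 0.1 * (i % 5) for i in range(50)] + [35.0]
sent = [r for r in readings if reporter.should_transmit(r)]
print(sent)  # [35.0]: only the spike leaves the device
```

Folding flagged readings back into the statistics lets the baseline follow slow drift; a deployment could instead exclude them so that a sustained fault keeps triggering alerts.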

3. Key Challenges in AI for Constrained Devices

3.1. Computational and Memory Limitations

One of the most significant challenges in edge AI stems from the inherently limited computational resources available on these devices. Many microcontrollers lack hardware floating-point units, and hardware acceleration, where present, is typically limited to a few specialized instructions or small units [16]. Standard deep neural networks, which can contain millions or even billions of parameters, are highly impractical in this setting because of the sheer size of their weights and the cost of their arithmetic [18]. Memory constraints further exacerbate the problem, as loading large models into main memory can easily exceed the device's capacity. Even storing intermediate activations during inference can become a hurdle if the model is not carefully optimized [52].
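A back-of-the-envelope calculation shows why parameter count alone can rule a model out. The sketch below estimates weight storage for a given parameter count and bit width and compares it against a flash budget; the 1 MB budget and the parameter counts are hypothetical, and a real deployment also needs room for the runtime, application code, and model metadata.

```python
def weight_bytes(num_params, bits_per_weight):
    """Bytes needed to store the weights alone, rounded up to whole bytes."""
    return (num_params * bits_per_weight + 7) // 8


def fits(num_params, bits_per_weight, budget_bytes):
    """True if the weights fit the budget (runtime, code and metadata not counted)."""
    return weight_bytes(num_params, bits_per_weight) <= budget_bytes


FLASH_BUDGET = 1024 * 1024  # hypothetical: 1 MB of flash reserved for the model

for params in (250_000, 1_000_000, 5_000_000):
    for bits in (32, 8):
        size_kb = weight_bytes(params, bits) / 1024
        print(f"{params:>9,} params @ {bits:>2}-bit: {size_kb:9.1f} KB, "
              f"fits={fits(params, bits, FLASH_BUDGET)}")
```

Under these assumptions a one-million-parameter model needs about 4 MB as 32-bit floats but about 1 MB as 8-bit integers, which is the gap the compression techniques in Section 4.1 aim to close.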

These limitations place a ceiling on the complexity of algorithms that can be feasibly deployed on constrained hardware. For example, a device with a few hundred kilobytes of RAM might only be able to accommodate simple feedforward networks or highly compressed convolutional architectures [15]. If the model demands recurrent computations or multi-branch topologies, the overhead for storing hidden states and intermediate layer outputs might overwhelm the device’s resources [53]. Consequently, edge-focused AI has turned to novel compression and optimization techniques to ensure that the final compiled model fits within the device’s memory envelope while still achieving acceptable accuracy levels [32].
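Intermediate activations often dominate RAM usage. For a strictly sequential network in which each layer's input buffer can be released once its output is computed, a common estimate of peak activation memory is the largest input-plus-output pair across layers. The sketch below applies that estimate to a hypothetical small int8 CNN (the layer shapes are illustrative, not taken from the cited works); multi-branch topologies such as residual connections keep extra tensors alive and need a full liveness analysis instead.

```python
from math import prod


def tensor_bytes(shape, bytes_per_elem=1):
    """Size of one activation tensor; the default assumes int8 activations."""
    return prod(shape) * bytes_per_elem


def peak_activation_bytes(shapes, bytes_per_elem=1):
    """Peak RAM for a sequential chain of activation shapes.

    shapes[0] is the network input and shapes[i + 1] is the output of layer i.
    While layer i runs, only its input and output buffers must be resident.
    """
    return max(
        tensor_bytes(shapes[i], bytes_per_elem) + tensor_bytes(shapes[i + 1], bytes_per_elem)
        for i in range(len(shapes) - 1)
    )


# Hypothetical int8 CNN on a 96x96 grayscale image, shapes as (height, width, channels).
shapes = [(96, 96, 1), (48, 48, 16), (24, 24, 32), (12, 12, 64), (1, 1, 64), (1, 1, 2)]
print(peak_activation_bytes(shapes))  # 55296
```

For this hypothetical network the estimate is 55,296 bytes (54 KB), set by the second layer, where the 48 × 48 × 16 input and 24 × 24 × 32 output must coexist in memory.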

3.2. Energy Efficiency and Thermal Management

Energy efficiency is paramount in many edge deployments, especially in battery-powered or energy-harvesting scenarios. Executing computationally heavy AI tasks often leads to rapid battery drain, which not only shortens device lifespan but can also introduce thermal management challenges if components overheat [54]. The interplay between computation and power usage is complex: while simpler models require fewer multiply–accumulate operations, they might necessitate more frequent inference cycles to achieve real-time responsiveness. Designers must carefully weigh these trade-offs when deciding on an AI architecture or inference schedule [55].
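The trade-off between per-inference cost and inference frequency can be made concrete with a simple energy model in which average power equals energy per inference times inference rate. All figures below (energy per MAC, fixed per-inference overhead, MAC counts, and rates) are hypothetical, and measured values vary widely across devices.

```python
def energy_per_inference_uj(macs, nj_per_mac, overhead_uj):
    """Energy for one inference in microjoules: compute cost plus a fixed
    per-inference overhead (wake-up, sensor readout). Assumed linear model."""
    return macs * nj_per_mac / 1000.0 + overhead_uj


def average_power_uw(macs, nj_per_mac, overhead_uj, inferences_per_s):
    """Microjoules per inference times inferences per second gives microwatts."""
    return energy_per_inference_uj(macs, nj_per_mac, overhead_uj) * inferences_per_s


# Hypothetical: the small model must run 10x/s to react in time,
# while the larger, more accurate model can run once per second.
small = average_power_uw(macs=200_000, nj_per_mac=0.5, overhead_uj=50, inferences_per_s=10)
large = average_power_uw(macs=2_000_000, nj_per_mac=0.5, overhead_uj=50, inferences_per_s=1)
print(f"small model: {small:.0f} uW, large model: {large:.0f} uW")
```

Under these assumptions the smaller model consumes more average power (1500 µW versus 1050 µW) because it must run ten times as often and pays the fixed overhead on every run.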

Furthermore, certain embedded use cases demand continuous operation to provide always-on monitoring. This requirement can conflict with the desire to conserve power through extended sleep modes [56]. Hence, techniques such as dynamic voltage and frequency scaling, scheduling AI workloads to periods of ample power availability, and advanced power gating for idle subsystems are being explored [57]. These solutions aim to strike a balance between responsiveness and energy consumption, ensuring that the device can sustain its tasks for the desired operational duration. Thermal design also matters: sustained high-performance AI workloads can generate significant heat in tightly enclosed form factors, prompting the use of lightweight heat sinks or self-regulating workloads to avoid temperature-induced failures [19].
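The effect of sleep modes on lifetime can be estimated with a duty-cycle model: average current is the time-weighted mix of active and sleep current, and battery life is capacity divided by that average. The current figures, wake-up periods, and 1000 mAh capacity below are hypothetical, and the model ignores self-discharge, temperature effects, and regulator losses.

```python
def average_current_ma(active_ma, sleep_ma, active_s, period_s):
    """Time-weighted average current for a device that wakes once per period,
    stays active for active_s seconds, and sleeps for the remainder."""
    if not 0 < active_s <= period_s:
        raise ValueError("active time must be positive and fit within the period")
    duty = active_s / period_s
    return duty * active_ma + (1 - duty) * sleep_ma


def battery_life_days(capacity_mah, avg_ma):
    """Idealized lifetime: capacity divided by average draw, converted to days."""
    return capacity_mah / avg_ma / 24


# Hypothetical profile: 20 mA for 50 ms per wake-up (sense + infer), 5 uA asleep.
for period_s in (0.1, 1.0, 10.0):
    avg = average_current_ma(active_ma=20.0, sleep_ma=0.005, active_s=0.05, period_s=period_s)
    print(f"wake every {period_s:>4} s: {avg:.4f} mA average, "
          f"~{battery_life_days(1000, avg):.0f} days on 1000 mAh")
```

Lengthening the wake-up period reduces average current roughly in proportion until the sleep current starts to dominate, which is why low-leakage sleep modes and power gating matter for always-on designs.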

3.3. Real-Time Constraints

Real-time applications—such as robotics, autonomous systems, and certain industrial processes—add another layer of complexity to AI deployment on constrained devices. In these scenarios, decisions must be made within strict timing windows to ensure safe and effective operation [58]. Consider a robotic arm in a smart factory that must adapt its movements based on vision-based AI inferences: if the computations are delayed beyond a few milliseconds, the entire control loop could become unstable, leading to errors or safety issues. Balancing the intricacy of AI models, which often correlates with their predictive accuracy, against the system’s real-time requirements is a key challenge in edge AI [24].

Techniques to address this challenge typically involve model simplification or designing specialized hardware accelerators that can run inference quickly. Real-time operating systems (RTOSs) may also be employed to guarantee scheduling priorities for AI tasks, ensuring that they preempt lower-priority processes. In some instances, partial offloading strategies are used, where less time-critical workloads are sent to nearby edge servers or the cloud when feasible, while mission-critical tasks stay local [59]. Nevertheless, the margins for error are slim, and ensuring deterministic response times frequently pushes developers toward rigorous profiling and optimization at every stage of the pipeline, from data acquisition to final output [16].
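When an RTOS assigns fixed priorities by period (rate-monotonic scheduling), a classic sufficient test due to Liu and Layland checks whether total CPU utilization stays at or below n(2^(1/n) - 1) for n periodic tasks. The sketch below applies that test to a hypothetical task set that includes a periodic inference task. It assumes independent tasks, deadlines equal to periods, and measured worst-case execution times; failing the bound does not by itself prove the set unschedulable, since an exact response-time analysis may still admit it.

```python
def rm_utilization_bound(n):
    """Liu and Layland utilization bound for n tasks under rate-monotonic scheduling."""
    return n * (2 ** (1.0 / n) - 1)


def rm_sufficient_test(tasks):
    """tasks: list of (wcet_ms, period_ms). Returns (utilization, bound, passes)."""
    utilization = sum(c / t for c, t in tasks)
    bound = rm_utilization_bound(len(tasks))
    return utilization, bound, utilization <= bound


# Hypothetical task set: control loop, sensor sampling, and a TinyML inference.
tasks = [
    (1.0, 10.0),    # control loop: 1 ms every 10 ms
    (2.0, 20.0),    # sensor sampling: 2 ms every 20 ms
    (30.0, 100.0),  # inference: 30 ms worst case every 100 ms
]
u, bound, ok = rm_sufficient_test(tasks)
print(f"U = {u:.3f}, bound = {bound:.3f}, passes sufficient test: {ok}")
```

Profiling the inference task's worst-case execution time, rather than its average, is what makes such a check meaningful.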

3.4. Data Security and Privacy

Although on-device inference can enhance privacy by limiting data exposure, many security vulnerabilities still exist. Edge devices deployed “in the wild” are physically accessible and can be tampered with by adversaries who might extract sensitive information from stored models or training parameters [50]. Lightweight encryption and secure boot processes become essential for protecting both firmware integrity and any locally stored data. In more advanced scenarios, secure enclaves or hardware-based trusted execution environments (TEEs) can isolate sensitive computations from other system components [12].

Moreover, privacy is not solely a matter of data encryption. Complex, intelligent systems can inadvertently leak information through side channels or by revealing model outputs [60]. Federated learning, for instance, transmits model updates or gradients rather than raw data, but these gradients can sometimes be inverted or manipulated to expose sensitive details about the local dataset [61]. As a result, implementing techniques like differential privacy or homomorphic encryption in resource-constrained settings has become an active area of research. Balancing robust security with minimal overhead is challenging, as cryptographic operations themselves can be computationally expensive, consuming both energy and processing cycles that are already at a premium [62].
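One widely used way to limit what an individual update reveals is to clip each update to a fixed L2 norm and add Gaussian noise scaled to that norm, in the spirit of DP-SGD and differentially private federated averaging. The sketch below shows only this clipping-and-noising step, applied on the device; the clip norm and noise multiplier are hypothetical, many systems instead add noise once at the server after aggregation, and turning these parameters into a formal (ε, δ) guarantee requires a privacy accountant that is not shown.

```python
import math
import random


def clip_and_noise(update, clip_norm, noise_multiplier, rng=random):
    """Clip a model update to L2 norm <= clip_norm, then add Gaussian noise.

    The noise standard deviation is noise_multiplier * clip_norm, so the noise
    scales with the maximum influence any single update can have.
    """
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    sigma = noise_multiplier * clip_norm
    return [v * scale + rng.gauss(0.0, sigma) for v in update]


rng = random.Random(0)
local_update = [3.0, 4.0]  # L2 norm 5.0, larger than the clip norm below
private_update = clip_and_noise(local_update, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(private_update)
```

Both operations are cheap (one norm, one scaling, one random draw per parameter), which makes this step attractive on constrained hardware compared with heavier cryptographic alternatives.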

3.5. Model Generalization vs. Specialization

Another persistent dilemma is deciding between highly specialized models tailored to a single task and more generalized models that can handle multiple tasks or adapt to new conditions. Specialized models tend to be smaller and more efficient, since they incorporate only the parameters needed for a specific domain [29]. This approach can be ideal for devices that serve well-defined functions, such as a vibration sensor used exclusively for bearing fault detection. However, if the use case evolves—say, the sensor must also detect temperature anomalies—then a specialized model might not transfer well to the new requirement [63].

On the other hand, more generalized architectures can support multiple tasks but often at the cost of increased parameter counts and complexity, which can be prohibitive for constrained environments [64]. Transfer learning or incremental learning techniques that adapt a pre-trained model to new tasks on-device offer potential workarounds, but they introduce additional inference or fine-tuning overhead that might exceed the device’s resource budget [32]. Researchers continue to explore ways to construct models that are flexible yet compact, possibly through dynamic neural networks that can switch off unused modules or layers to meet different objectives [65].
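Early-exit networks are one concrete form of such dynamic models: intermediate classifiers let easy inputs leave after the first few stages, so later stages run only when needed. The sketch below uses toy stage and exit functions as hypothetical stand-ins for real layers; the confidence threshold trades accuracy against average compute.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_infer(x, stages, exits, threshold=0.9):
    """Run stages in order and stop at the first exit whose top-class probability
    reaches threshold. Returns (predicted_class, number_of_stages_executed).

    stages[i] maps features -> features; exits[i] maps features -> logits.
    The final exit is always accepted.
    """
    h = x
    for i, (stage, exit_head) in enumerate(zip(stages, exits)):
        h = stage(h)
        probs = softmax(exit_head(h))
        best = max(range(len(probs)), key=probs.__getitem__)
        if probs[best] >= threshold or i == len(stages) - 1:
            return best, i + 1


# Hypothetical toy model: each stage doubles the features; each exit reads them as logits.
stages = [lambda h: [2 * v for v in h]] * 3
exits = [lambda h: h] * 3

print(early_exit_infer([3.0, 0.0], stages, exits))  # (0, 1): confident after one stage
print(early_exit_infer([0.2, 0.0], stages, exits))  # (0, 3): runs all three stages
```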

3.6. Reliability and Fault Tolerance

Edge devices may operate in harsh or unpredictable conditions, such as extreme temperatures, vibrations, or exposure to moisture, which can degrade hardware components and sensors over time [66]. Ensuring consistent AI performance in these environments demands robust hardware design, but it also relies on fault-tolerant software that can handle partial failures gracefully. For instance, sensor readings may become noisy or intermittent, yet the AI system must still produce reliable inferences [29]. Traditional machine learning models trained on clean, consistent datasets may falter in such real-world conditions.

In response, developers employ redundancy strategies, sensor fusion, and error-correcting techniques to mitigate hardware-induced variability [67]. At the software layer, models can be trained with augmented data that simulate potential noise or missing channels, improving resilience during inference [68]. Another approach is to integrate continual learning methods that periodically retrain or fine-tune models in situ, helping the system adapt to evolving environmental conditions or device aging [9]. These strategies, while effective, add additional layers of complexity that must be balanced against already-constrained resources.
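A minimal sketch of the noise and missing-channel augmentation described above, applied to a hypothetical multichannel sensor window. The noise level and drop probability are illustrative and would normally be tuned to the failure modes observed in the field; zero-filling is one simple convention for a missing channel, and a model could instead receive an explicit mask.

```python
import random


def augment(sample, noise_std=0.05, drop_prob=0.1, rng=random):
    """Return a perturbed copy of a multichannel sample.

    sample: list of channels, each a list of floats. Every value gets Gaussian
    noise, and each whole channel is zeroed with probability drop_prob to mimic
    a failed or disconnected sensor.
    """
    augmented = []
    for channel in sample:
        if rng.random() < drop_prob:
            augmented.append([0.0] * len(channel))  # simulated missing channel
        else:
            augmented.append([v + rng.gauss(0.0, noise_std) for v in channel])
    return augmented


rng = random.Random(42)
# Hypothetical 3-axis accelerometer window with 4 samples per axis.
window = [[0.1, 0.2, 0.1, 0.0], [9.8, 9.7, 9.9, 9.8], [0.0, -0.1, 0.0, 0.1]]
training_batch = [augment(window, rng=rng) for _ in range(8)]
```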

4. Emerging Solutions and State-of-the-Art Approaches

4.1. Model Compression Techniques

Model compression stands at the forefront of enabling AI in resource-constrained environments by reducing the memory footprint and computational demands of neural networks [28]. Among these techniques, quantization is a widely adopted method that converts floating-point weights and activations to lower-bit representations (commonly 8-bit integers) to shrink the model and accelerate inference [69]; replacing 32-bit floating-point weights with 8-bit integers alone reduces weight storage by a factor of four. Advanced quantization approaches push precision even lower, exploring 4-bit or 1-bit representations, though such aggressive strategies can degrade accuracy if not carefully calibrated and retrained [12]. Tools like TensorFlow Lite provide built-in utilities for post-training quantization, which can drastically cut the size of pre-trained models, often with minimal accuracy loss [70].
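The affine (scale and zero-point) mapping behind 8-bit quantization can be written as q = clamp(round(x / s) + z, -128, 127), with dequantization x ≈ s(q - z). The sketch below derives s and z from a tensor's minimum and maximum, one common calibration choice among several; it is a hypothetical per-tensor illustration, not the exact procedure of any particular toolchain.

```python
def quant_params(values, qmin=-128, qmax=127):
    """Per-tensor asymmetric int8 parameters from the observed value range."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # range must include 0
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero tensors
    zero_point = int(round(qmin - lo / scale))
    return scale, max(qmin, min(qmax, zero_point))


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    # Python's round() is round-half-to-even; toolchains may round differently.
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    return [scale * (q - zero_point) for q in qvalues]


weights = [-0.52, -0.1, 0.0, 0.33, 0.98]
s, z = quant_params(weights)
q = quantize(weights, s, z)
recovered = dequantize(q, s, z)
max_err = max(abs(a - b) for a, b in zip(weights, recovered))
print(q, f"scale={s:.5f}", f"zero_point={z}", f"max_err={max_err:.5f}")
```

Keeping 0.0 exactly representable (through the integer zero point) matters because padding and ReLU outputs produce many exact zeros.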

Pruning and sparsity target the removal or zeroing out of redundant weights or channels within a network to reduce computation. Structured pruning, for instance, systematically eliminates entire filters in convolutional layers, leading to more regular sparsity patterns that can be more efficiently accelerated by specialized hardware [71]. Combined with quantization, pruning can reduce model size by an order of magnitude or more, making it feasible to deploy deep neural networks on microcontrollers with very limited memory resources. Knowledge distillation offers another powerful technique, in which a smaller “student” network learns to emulate the outputs of a larger “teacher” model, effectively capturing the teacher’s learned representations while operating on a fraction of the parameters [72]. Often, these techniques are combined in a holistic approach to maximize memory savings, striking a fine balance between a model’s resource footprint and inference accuracy [29].
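Two small sketches of the techniques above, written on plain Python lists rather than any framework's API and intended as hypothetical illustrations. The first performs structured pruning by ranking convolution filters by L1 norm and keeping the largest ones (one common criterion among several). The second computes a distillation loss as the KL divergence between temperature-softened teacher and student distributions, multiplied by T², a common convention that keeps gradient magnitudes comparable across temperatures; in practice this term is usually mixed with ordinary cross-entropy on the hard labels.

```python
import math


def l1_filter_prune(filters, keep_ratio):
    """Structured pruning: keep the filters with the largest L1 norms.

    filters: list of filters, each a flat list of weights.
    Returns indices of kept filters in their original order, so the next
    layer's input channels can be sliced to match.
    """
    n_keep = max(1, int(round(len(filters) * keep_ratio)))
    norms = [sum(abs(w) for w in f) for f in filters]
    ranked = sorted(range(len(filters)), key=lambda i: norms[i], reverse=True)
    return sorted(ranked[:n_keep])


def softmax(logits, temperature=1.0):
    scaled = [v / temperature for v in logits]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


filters = [[0.5, -0.4], [0.01, 0.02], [0.9, 0.1], [-0.03, 0.0]]
print(l1_filter_prune(filters, keep_ratio=0.5))  # [0, 2]
print(distillation_loss([2.0, 0.5, -1.0], [3.0, 0.2, -2.0]))
```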

Among the various model compression techniques that are vital for enabling AI on constrained hardware, quantization and pruning represent two foundational approaches, often used in conjunction. While both aim to reduce model size and computational cost, they operate via distinct mechanisms and entail different trade-offs regarding accuracy, hardware compatibility, and implementation complexity. To clarify their respective strengths and weaknesses, Table 3 offers a critical analysis comparing these methods across several key dimensions. This includes their typical impact on memory footprint, suitability for hardware acceleration, potential effects on model accuracy, deployment complexity, and achievable real-world speedups, thereby highlighting the essential considerations involved in selecting and applying these techniques for TinyML applications.

Table 3.

Critical analysis: quantization vs. pruning: accuracy vs. speed trade-offs.

4.2. TinyML Frameworks

The growing popularity of TinyML has spawned specialized frameworks and toolchains designed to streamline the deployment of machine learning models on resource-constrained devices. One of the most prominent examples is TensorFlow Lite for Microcontrollers, a pared-down inference engine that can execute neural network operations with a memory footprint on the order of tens of kilobytes [8]. By trimming unnecessary runtime features and relying on carefully optimized kernels, TensorFlow Lite for Microcontrollers can run on various microcontroller platforms, including ARM Cortex-M series CPUs and ESP32 boards. Another framework, microTVM, extends the TVM compiler infrastructure to facilitate automated code generation and hardware-specific optimizations, enabling developers to explore different back-end configurations [20].

Additionally, CMSIS-NN from ARM enhances the performance of neural network computations by providing hand-optimized kernels for common operations like convolution and activation functions. These frameworks typically require developers to conduct model training offline on a more capable system, after which the models undergo optimization steps like quantization or weight compression [21]. The resulting executables can then be flashed onto the embedded device. The synergy between these specialized libraries and carefully pruned or quantized models allows even limited systems to achieve meaningful AI functionality in applications like keyword spotting, anomaly detection, and basic computer vision tasks.
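Before flashing, the converted model is typically embedded in the firmware as a constant byte array; TensorFlow Lite for Microcontrollers examples commonly use `xxd -i` for this step. The following sketch produces an equivalent C++ source file from a model's bytes. The variable and file names are hypothetical, and the `alignas(16)` alignment is an assumption to check against the target runtime's documented requirement.

```python
def model_to_c_source(model_bytes, var_name="g_model", bytes_per_line=12):
    """Render raw model bytes as a C++ source file defining an aligned const array."""
    lines = [
        "// Auto-generated model data; do not edit by hand.",
        f"alignas(16) const unsigned char {var_name}[] = {{",
    ]
    for start in range(0, len(model_bytes), bytes_per_line):
        chunk = model_bytes[start:start + bytes_per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {var_name}_len = {len(model_bytes)};")
    return "\n".join(lines) + "\n"


# Usage with hypothetical file names:
# with open("model_int8.tflite", "rb") as f:
#     source = model_to_c_source(f.read())
# with open("model_data.cc", "w") as f:
#     f.write(source)
print(model_to_c_source(bytes(range(14)), bytes_per_line=8))
```

Storing the model as a `const` array lets the linker place it in flash, so only the runtime's working memory needs to come from scarce SRAM.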

Successfully deploying optimized machine learning models onto microcontrollers necessitates more than just model compression; it requires specialized software frameworks designed to manage inference within severe resource limitations. These frameworks provide runtime engines, optimized mathematical kernels, and often tools for model conversion and code generation tailored to embedded environments. To provide developers with an overview of the available toolchains, Table 4 presents a comparative analysis of popular TinyML frameworks, such as TensorFlow Lite for Microcontrollers, microTVM, and Edge Impulse. The table summarizes their core features and discusses their primary advantages (pros) and disadvantages (cons), offering insights into their suitability for different development workflows, target hardware platforms, and optimization requirements.

Table 4.

Benchmarking data: popular TinyML framework comparison.

4.3. Hardware Accelerators and Architectures

Although general-purpose microcontrollers have improved steadily, some deployments demand specialized hardware to meet strict performance and power goals. ASICs (application-specific integrated circuits) can be optimized around specific neural network layers or dataflow patterns to reduce overhead and deliver higher throughput per watt. By tailoring the memory hierarchy and arithmetic units to AI workloads, designers can minimize data movement, a major source of energy consumption. This leads to significant improvements in efficiency, although the development costs are non-trivial [19].

FPGA-based accelerators offer a reconfigurable alternative, enabling hardware-level parallelism without sacrificing programmability. Developers can adapt an FPGA’s logic blocks and interconnect to accelerate convolutional layers, matrix multiplications, or other computational bottlenecks. This adaptability is particularly useful for rapidly evolving AI workloads but can pose challenges in terms of design complexity and timing closure. A more radical direction involves in-memory computing—placing computation logic directly within memory arrays to drastically cut data transfer overhead [74].

Many microcontrollers lack dedicated MAC (multiply–accumulate) units or hardware accelerators, driving research into custom ASICs, FPGAs, and near-memory computing for TinyML workloads. Hardware-aware neural architecture search (NAS) can produce specialized topologies that conform to memory or MAC-operation constraints (e.g., TinyTNAS for time-series classification). Some designs adopt data-channel extension (DEX) to better utilize the limited parallelism in tiny AI accelerators, improving throughput [10]. RISC-V-based heterogeneous architectures with built-in co-processors for neural nets have also been proposed [75].
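At its simplest, hardware-aware search means rejecting candidate architectures that exceed the target's parameter or MAC budget and then choosing the best remaining candidate by some quality estimate. The sketch below exhaustively enumerates a tiny hypothetical space of plain CNN configurations, counts parameters and MACs analytically (convolutions only, ignoring pooling and any classifier head), and uses parameter count as a placeholder score in place of trained accuracy. It is not TinyTNAS or any other published NAS method, which use far richer search spaces and strategies.

```python
from itertools import product


def conv_cost(h, w, c_in, c_out, k=3):
    """Params and MACs of a k x k, stride-1, 'same'-padded conv layer with bias."""
    params = k * k * c_in * c_out + c_out
    macs = h * w * k * k * c_in * c_out
    return params, macs


def cnn_cost(input_hw, in_ch, base_width, depth):
    """Each block: conv (width doubles per block) followed by 2x2 pooling."""
    h = w = input_hw
    c_in, total_params, total_macs = in_ch, 0, 0
    for block in range(depth):
        c_out = base_width * 2 ** block
        p, m = conv_cost(h, w, c_in, c_out)
        total_params += p
        total_macs += m
        c_in, h, w = c_out, h // 2, w // 2
    return total_params, total_macs


def search(input_hw, in_ch, max_params, max_macs):
    """Return (score, base_width, depth, params, macs) of the best feasible candidate."""
    feasible = []
    for base_width, depth in product((4, 8, 16, 32), (1, 2, 3, 4)):
        params, macs = cnn_cost(input_hw, in_ch, base_width, depth)
        if params <= max_params and macs <= max_macs:
            # Placeholder score: a real search would train/evaluate or use a predictor.
            score = params
            feasible.append((score, base_width, depth, params, macs))
    return max(feasible) if feasible else None


print(search(32, 1, max_params=20_000, max_macs=2_000_000))
```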

Table 5 presents a comparison of various TinyML hardware platforms, highlighting their computing power, memory constraints, and suitability for machine learning applications at the edge.

Table 5.

Comparison of TinyML-compatible hardware platforms.

4.4. Federated Learning and Edge AI

Federated learning (FL) has emerged as a powerful technique for collaborative model training across distributed, resource-constrained devices without requiring raw data to leave each node [29]. In a typical FL setup, each device locally trains a model using its own dataset and periodically sends only model updates or gradients to a central server, which aggregates and updates a global model [27]. This architecture can significantly reduce the bandwidth burden associated with traditional centralized training and helps preserve privacy, as personal data rarely traverses the network in raw form [9]. However, many challenges remain, including dealing with heterogeneous hardware, unbalanced data distributions, and asynchronous update cycles.
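The server-side aggregation step is commonly implemented as federated averaging (FedAvg), in which each client's model is weighted by its number of local training samples. A minimal sketch over flat weight lists follows; sample weighting accounts for unbalanced dataset sizes but not for non-identically distributed data, and real systems add client sampling, secure aggregation, and straggler handling, none of which are shown.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation.

    client_updates: list of (num_samples, weights), where weights is a flat
    list of floats. Each client's weights count in proportion to its share
    of the total training samples.
    """
    total = sum(n for n, _ in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][1])
    global_weights = [0.0] * dim
    for n, weights in client_updates:
        if len(weights) != dim:
            raise ValueError("clients must share the same model shape")
        for i, w in enumerate(weights):
            global_weights[i] += (n / total) * w
    return global_weights


# Hypothetical round with three devices holding unequal amounts of data.
updates = [(100, [1.0, 0.0]), (300, [0.0, 1.0]), (600, [0.5, 0.5])]
print(federated_average(updates))  # approximately [0.4, 0.6]
```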

Beyond federated learning, the broader concept of edge AI seeks to partition intelligence tasks among end devices, edge servers, and the cloud in a hierarchical fashion [76]. Low-latency tasks or privacy-sensitive computations are handled locally, while more computationally demanding operations can be offloaded to powerful edge servers when necessary [60]. This flexible approach acknowledges the heterogeneity of device capabilities within an IoT ecosystem and allows for more complex collaborative scenarios [61]. For instance, certain data might be partially processed on a microcontroller and then encrypted and forwarded to an edge server for refined analysis [63]. The design of such distributed architectures involves careful orchestration of resource scheduling, data caching, and robust communication protocols [9].
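A minimal offloading policy compares on-device latency with the end-to-end remote path (round trip, upload time, and server-side inference) and never offloads privacy-sensitive work. The class, field names, and numbers below are hypothetical; practical schedulers would also weigh energy, server load, and link variability.

```python
from dataclasses import dataclass

@dataclass
class OffloadOption:
    """Hypothetical inputs to an offloading decision, measured or estimated at runtime."""
    local_latency_ms: float    # on-device inference time
    server_latency_ms: float   # inference time on the edge server
    payload_bytes: int         # size of the (possibly pre-processed) data to upload
    uplink_bytes_per_s: float  # current uplink throughput estimate
    rtt_ms: float              # network round-trip time

def should_offload(opt: OffloadOption, privacy_sensitive: bool) -> bool:
    """Offload only when allowed and when the remote path is faster end to end."""
    if privacy_sensitive:
        return False  # privacy-sensitive computation stays on the device
    transfer_ms = 1000.0 * opt.payload_bytes / opt.uplink_bytes_per_s
    remote_ms = opt.rtt_ms + transfer_ms + opt.server_latency_ms
    return remote_ms < opt.local_latency_ms

# A heavy model on a microcontroller vs. a small, fast local model.
heavy = OffloadOption(local_latency_ms=900, server_latency_ms=40,
                      payload_bytes=20_000, uplink_bytes_per_s=250_000, rtt_ms=30)
light = OffloadOption(local_latency_ms=15, server_latency_ms=2,
                      payload_bytes=2_000, uplink_bytes_per_s=250_000, rtt_ms=30)
print(should_offload(heavy, privacy_sensitive=False))  # True: 30 + 80 + 40 = 150 ms < 900 ms
print(should_offload(light, privacy_sensitive=False))  # False: the round trip alone exceeds 15 ms
```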

4.5. Security Mechanisms and Lightweight Encryption

Securing AI pipelines in resource-limited settings is an integral component of deploying edge intelligence at scale. While the focus is often on performance optimization, insufficient attention to security can expose critical infrastructures to malicious threats [24]. Lightweight cryptography protocols, which are specifically adapted to devices with small memory and energy budgets, have gained traction [69]. These protocols balance algorithmic complexity against security guarantees, keeping data encrypted during transmission and at rest with minimal overhead. For example, elliptic-curve cryptography (ECC) provides security comparable to RSA with much smaller keys (a 256-bit ECC key is generally considered roughly equivalent in strength to a 3072-bit RSA key), which lowers the computational, memory, and bandwidth cost of key exchange and digital signatures [70].

Beyond encryption, trusted execution environments (TEEs) such as ARM TrustZone or Intel SGX enable the creation of secure enclaves that isolate sensitive computations from the rest of the system [71]. In the context of AI, TEEs can hold model parameters and run inference inside the protected environment, shielding them from untrusted software running outside it. Additional layers of defense, like secure bootloaders, can ensure that only verified firmware is executed on the device, thwarting attempts to run malicious code. Implementing such measures requires a comprehensive approach that covers hardware, software, and network layers, all while respecting the fundamental constraints of power, memory, and compute capacity that characterize edge devices [12].
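The control flow of a secure bootloader can be sketched in a few lines: compute an authentication value over the firmware image, compare it in constant time with the expected one, and refuse to run on mismatch. Production secure boot typically verifies an asymmetric signature (for example ECDSA) against a public key held in immutable storage. Python's standard library has no signature primitive, so this sketch substitutes an HMAC-SHA256 tag with a hypothetical provisioned key purely to illustrate the check.

```python
import hashlib
import hmac

# Hypothetical device key. On real hardware it would live in protected storage
# (e.g. OTP fuses or a secure element), and a production bootloader would normally
# verify an asymmetric signature rather than a shared-key MAC.
DEVICE_KEY = b"example-provisioned-key"

def sign_firmware(image: bytes, key: bytes = DEVICE_KEY) -> bytes:
    """Build-server side: compute an authentication tag over the firmware image."""
    return hmac.new(key, image, hashlib.sha256).digest()

def verify_and_boot(image: bytes, tag: bytes, key: bytes = DEVICE_KEY) -> str:
    """Bootloader side: only hand control to firmware whose tag matches."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):  # constant-time comparison
        return "halt: firmware rejected"
    return "boot: firmware verified"

firmware = b"\x00\x01model-v2-weights"
tag = sign_firmware(firmware)
print(verify_and_boot(firmware, tag))            # boot: firmware verified
print(verify_and_boot(firmware + b"\x90", tag))  # halt: firmware rejected
```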

4.6. Software and System Optimizations

System-level optimizations that transcend individual models or frameworks also play a pivotal role. Embedded or real-time operating systems must provide efficient task scheduling to ensure that AI inference does not monopolize the CPU or block critical sensing routines [72]. Approaches like event-driven task scheduling or preemptive multitasking can coordinate tasks of different priorities, for example letting time-critical sensing routines preempt a long-running inference job while low-priority background tasks run only when the processor would otherwise be idle [17]. Dynamic power management, in which system components enter low-power states when idle, further helps to conserve energy in always-on monitoring applications. Moreover, techniques like sensor fusion can reduce redundant processing steps by combining complementary data streams, potentially lowering the overhead required for complex AI inference [55].
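The prioritization idea can be illustrated with a toy event-driven scheduler in which sensing tasks outrank inference, which in turn outranks background work, so that a pending sensor read is never queued behind inference. The priority levels and task names are hypothetical, and unlike a preemptive RTOS kernel this run-to-completion sketch cannot interrupt a task that is already running.

```python
import heapq
import itertools

class PriorityScheduler:
    """Toy run-to-completion scheduler: always run the highest-priority ready task next.

    Lower numbers mean higher priority; tasks of equal priority run in FIFO order.
    """

    def __init__(self):
        self._ready = []
        self._counter = itertools.count()  # tie-breaker that preserves posting order

    def post(self, priority: int, name: str, action) -> None:
        heapq.heappush(self._ready, (priority, next(self._counter), name, action))

    def run(self) -> list:
        order = []
        while self._ready:
            _, _, name, action = heapq.heappop(self._ready)
            action()
            order.append(name)
        return order

SENSOR, INFERENCE, BACKGROUND = 0, 1, 2  # hypothetical priority levels

sched = PriorityScheduler()
sched.post(BACKGROUND, "flush_log", lambda: None)
sched.post(INFERENCE, "run_model", lambda: None)
sched.post(SENSOR, "read_imu", lambda: None)
sched.post(SENSOR, "read_mic", lambda: None)
order = sched.run()
print(order)  # ['read_imu', 'read_mic', 'run_model', 'flush_log']
```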

Another emerging theme is the use of containerization and virtualization in resource-limited contexts, although these concepts remain largely uncharted territory in extremely constrained microcontroller environments [29]. Lightweight container solutions may one day permit multiple AI applications to coexist on a single embedded platform, each securely isolated to maintain reliability and data confidentiality. However, such advanced features typically come with non-trivial memory and CPU overhead, illustrating the perennial trade-off between functionality and resource usage [75]. Moving forward, bridging the gap between full-fledged operating systems and bare-metal solutions is likely to inspire new forms of specialized runtime environments optimized for AI at the edge [32].

5. Opportunities and Cross-Cutting Themes

5.1. Healthcare and Wearables

Healthcare stands as one of the most promising domains where resource-constrained AI can have a transformative impact [17]. Wearable devices, such as smartwatches, fitness bands, or specialized medical sensors, are increasingly incorporating machine learning capabilities to track vital signs, detect abnormalities, and offer personalized health recommendations [29]. By performing AI inference locally, these wearables can protect sensitive health information from being routinely transmitted to the cloud, significantly reducing privacy risks and potential regulatory hurdles [73]. For patients with chronic conditions like diabetes or cardiac arrhythmia, continuous on-device monitoring can detect early warning signs and trigger timely interventions.

Nevertheless, wearables must maintain battery life over extended periods to remain unobtrusive and user-friendly. Achieving this goal requires efficient AI architectures that can run quickly and minimize power drain [50]. Additionally, user comfort and adoption hinge on the devices’ physical form factor, which limits the potential for large heat sinks or bulky batteries. Innovations in model compression and hardware–software co-design can help address these constraints, as can synergy with other medical IoT components like smart patches or implantables [22]. Over time, these converging solutions may result in integrated healthcare ecosystems where personalized diagnostics and treatment plans are partly executed on ultra-low-power devices worn by patients in real-world settings [32].
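The battery-life argument reduces to simple arithmetic. Under a two-state current model with duty cycle D (the fraction of time spent active), the average current is I_avg = D * I_active + (1 - D) * I_sleep, and lifetime is battery capacity divided by I_avg. The sketch below uses hypothetical figures for a small wearable and ignores self-discharge, regulator losses, and temperature effects.

```python
def battery_life_hours(capacity_mah: float, active_ma: float, sleep_ma: float,
                       duty_cycle: float) -> float:
    """Estimate battery life from a two-state (active/sleep) current model.

    duty_cycle is the fraction of time the device is active (sensing + inference).
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in [0, 1]")
    avg_ma = duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma
    return capacity_mah / avg_ma

# Hypothetical wearable: 200 mAh cell, 10 mA while inferring, 0.01 mA asleep.
always_on = battery_life_hours(200, 10.0, 0.01, 1.0)   # 20 hours
duty_1pct = battery_life_hours(200, 10.0, 0.01, 0.01)  # about 1,820 hours (roughly 76 days)
print(round(always_on, 1), round(duty_1pct))
```

The example shows why efficient models matter twice: a faster model shortens each active burst, which directly lowers D and therefore the average current.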

5.2. Smart Cities and Infrastructure

City planners worldwide are increasingly relying on sensor networks to monitor and manage critical infrastructure, spanning traffic flow, waste management, air quality, and energy distribution [74]. Implementing AI at the edge within these urban ecosystems enables real-time decision-making, such as rerouting traffic after an accident or identifying energy waste in smart grids [3]. By embedding intelligence in streetlights, traffic signals, and other municipal assets, cities can adapt dynamically to evolving conditions, optimizing resource allocation and reducing operational costs. Furthermore, localized AI processing reduces the bandwidth needed for collecting vast volumes of raw sensor data, thus making large-scale sensor deployments more feasible [76].

Yet, the sheer scale of smart city infrastructures introduces complexities. Citywide sensor networks comprise thousands or even millions of devices with highly varied hardware profiles and communication methods [28]. Managing firmware updates, security patches, and consistent model deployment across such a heterogeneous landscape becomes a monumental undertaking [57]. Federated learning approaches might alleviate some challenges by enabling distributed model training, but guaranteeing reliability and security at such a scale also necessitates robust device identification, tamper-resistant hardware, and flexible policy enforcement [77]. If successfully implemented, resource-constrained AI in smart city environments can provide actionable insights, streamline municipal services, and promote sustainability [12].

5.3. Agriculture and Environmental Monitoring

Resource-constrained AI also has significant applications in smart agriculture, where low-cost sensors and drones track soil moisture, temperature, crop vitality, and pest levels. By analyzing these signals in situ, farmers can make data-driven decisions about irrigation schedules, pesticide use, and harvesting times, improving yields and minimizing waste [30]. Importantly, many farms are located in remote areas without stable internet connectivity, which makes local AI inference essential. Processing data locally can drastically reduce the volume of transmissions, limiting them to event triggers or summary statistics, and thus preserve battery life for devices powered by solar panels or other off-grid sources [78].
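Reporting summaries and events instead of raw samples can be sketched as follows: each block of raw readings is replaced by a (min, mean, max) triple, and a hypothetical dryness threshold turns the window mean into an irrigation event. The window length, threshold, and synthetic trace are assumptions; a deployed node would choose them per crop and sensor.

```python
from statistics import mean

def summarize_windows(samples, window: int):
    """Replace each complete block of `window` raw readings with one (min, mean, max) summary.

    A trailing partial window is not summarized; a node would hold it until complete.
    """
    summaries = []
    for start in range(0, len(samples) - window + 1, window):
        block = samples[start:start + window]
        summaries.append((min(block), mean(block), max(block)))
    return summaries

def needs_irrigation_alert(summary, dry_threshold: float) -> bool:
    """Event trigger: flag a window whose mean soil moisture fell below the threshold."""
    _, avg, _ = summary
    return avg < dry_threshold

# Hypothetical soil-moisture trace (percent), one reading per minute for two hours.
readings = [31.0] * 60 + [17.5] * 60
hourly = summarize_windows(readings, window=60)
alerts = [needs_irrigation_alert(s, dry_threshold=20.0) for s in hourly]
print(len(readings), "raw values ->", 3 * len(hourly), "summary values")  # 120 -> 6
print(alerts)  # [False, True]
```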

Similarly, environmental monitoring efforts depend on scattered sensors tracking air, water, or wildlife conditions across large geographic areas, including wilderness or marine habitats. Resource-constrained devices capable of on-site inference can detect pollution spikes, illegal deforestation, or changes in animal populations, alerting authorities only when anomalies occur [26]. This approach is more sustainable than continuously streaming high-resolution data, which may be impractical in areas with limited infrastructure [32]. Additionally, because intelligence is not solely centralized, distributed sensor networks remain functional when individual nodes go offline. By empowering local AI capabilities, environmental monitoring systems enable more immediate and effective conservation interventions while operating under constrained power and connectivity conditions [68].
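Alerting only on anomalies requires a detector whose memory footprint does not grow with the length of the stream. One simple choice, shown here as an assumption rather than a recommended method, is a z-score test against running statistics maintained with Welford's online algorithm. The threshold, warm-up length, and synthetic readings are hypothetical.

```python
import math

class StreamingAnomalyDetector:
    """Flag readings far from the running mean, using Welford's online algorithm.

    Only O(1) state (count, mean, M2) is kept, so no raw history is stored.
    """

    def __init__(self, z_threshold: float = 4.0, warmup: int = 30):
        if warmup < 2:
            raise ValueError("warmup must be at least 2 to estimate a variance")
        self.z_threshold = z_threshold
        self.warmup = warmup  # readings to observe before alerts are allowed
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0

    def update(self, x: float) -> bool:
        """Return True if x is anomalous relative to the readings seen so far."""
        anomalous = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            anomalous = std > 0 and abs(x - self.mean) / std > self.z_threshold
        if not anomalous:
            # Welford update; flagged readings are excluded so they do not skew the baseline.
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
        return anomalous

detector = StreamingAnomalyDetector(z_threshold=4.0, warmup=30)
readings = [10.0, 10.2, 9.8] * 20 + [25.0]  # synthetic trace with one pollution spike
alerts = [i for i, x in enumerate(readings) if detector.update(x)]
print(alerts)  # [60]
```

Because flagged readings are excluded from the baseline, a genuine and lasting shift in conditions would keep triggering alerts, so a deployed system would also need a re-baselining policy.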

5.4. Industry 4.0 and Predictive Maintenance

The concept of Industry 4.0 emphasizes automation, data exchange, and interconnectivity in manufacturing systems. Factories increasingly employ sensors for condition-based monitoring, quality control, and predictive maintenance, aiming to predict machine failures before they occur [78]. Embedding AI into these sensors or edge gateways can enable real-time anomaly detection, reducing unplanned downtime and increasing overall equipment effectiveness [74]. Instead of collecting vast amounts of raw vibration or acoustic signals from each machine, local AI can parse relevant features, identify outliers, and send only crucial insights to a centralized platform, thus lowering bandwidth demands [21].
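The feature extraction step can be sketched as follows: a window of accelerometer samples is reduced to a handful of condition indicators commonly used for rotating machinery (RMS, peak, crest factor, and kurtosis), so a 256-sample window becomes four numbers. The synthetic signals and the crest-factor alert threshold are hypothetical; real deployments tune features and thresholds per machine.

```python
import math

def vibration_features(window):
    """Compact condition indicators from one window of accelerometer samples."""
    n = len(window)
    mean = sum(window) / n
    centered = [x - mean for x in window]
    rms = math.sqrt(sum(c * c for c in centered) / n)
    peak = max(abs(c) for c in centered)
    var = rms * rms
    # Kurtosis rises when the signal contains sharp impulses (e.g. bearing defects).
    kurtosis = (sum(c ** 4 for c in centered) / n) / (var * var) if var > 0 else 0.0
    crest = peak / rms if rms > 0 else 0.0
    return {"rms": rms, "peak": peak, "crest_factor": crest, "kurtosis": kurtosis}

# Synthetic signals: a clean sine (4 full periods) and the same sine with periodic impacts.
healthy_window = [math.sin(2 * math.pi * k / 64) for k in range(256)]
faulty_window = [x + (3.0 if k % 64 == 10 else 0.0) for k, x in enumerate(healthy_window)]

CREST_ALERT = 3.0  # hypothetical threshold
healthy = vibration_features(healthy_window)
faulty = vibration_features(faulty_window)
healthy_alert = healthy["crest_factor"] > CREST_ALERT
faulty_alert = faulty["crest_factor"] > CREST_ALERT
print(round(healthy["crest_factor"], 3), healthy_alert)  # 1.414 False (sqrt(2) for a pure sine)
print(round(faulty["crest_factor"], 2), faulty_alert)
```

Only the feature dictionary, or just the alert flag, would need to leave the sensor, rather than all 256 raw samples per window.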

In practice, these environments are often harsh, with vibration, dust, and potential interference from other machinery. Resource-constrained AI hardware must be ruggedized and shielded against electromagnetic disturbances [75]. Moreover, the diversity of industrial protocols and hardware calls for flexible solutions capable of interfacing with legacy systems while delivering advanced AI. Intermittent connectivity or strict real-time control loops amplify the challenges. As with other domains, solutions for Industry 4.0 are gravitating toward compressed and efficient models, specialized accelerators, and co-design strategies that integrate the entire data pipeline, from sensor to analytics, under a unified optimization framework [23].

5.5. Ethical and Sustainability Considerations

While technical progress in embedded AI is crucial, ethical and sustainability dimensions cannot be overlooked. Data bias and representativeness pose major concerns, particularly when training or refining models on localized datasets that may not capture the diversity of real-world conditions [79]. This risk is aggravated if multiple edge devices gather skewed or incomplete data, inadvertently reinforcing biases in collaborative systems. Careful curation, federated training across devices that serve diverse populations, and ongoing validation of model outputs can mitigate this problem, though each step increases complexity [50].

Privacy also emerges as both a motivator and a challenge. On-device analysis does lower the risk of mass data collection, but it does not inherently solve transparency issues around what is being inferred and how decisions are made. Regulators and consumers alike demand clarity on how AI-based devices handle personal or environmental data. Furthermore, the surge in IoT device manufacturing raises environmental concerns, as large-scale hardware production consumes materials and energy [22]. Proponents of edge AI argue that locally processed data can significantly lower global data center energy usage; however, the net ecological impact depends on device lifecycles, e-waste handling, and responsible recycling [80]. Thus, a holistic perspective—integrating the entire product chain—is key to ensuring that AI in constrained devices yields net benefits to society and the planet [56].

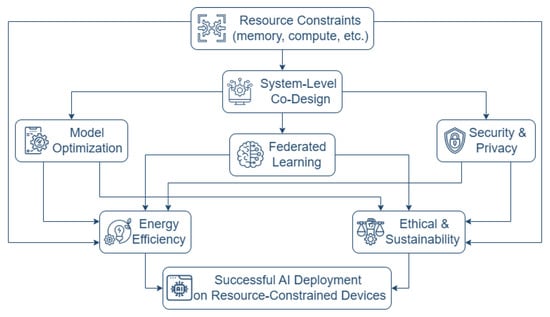

Understanding the interdependencies between resource limitations, technical solutions, application requirements, and broader societal concerns is crucial for advancing the field. Figure 1 provides a system-level perspective, illustrating how these various elements interact and contribute to the successful deployment of AI on resource-constrained devices, synthesizing the key challenges, solutions, and considerations discussed in the preceding sections.

Figure 1.

System-level perspective for TinyML deployment: an architectural flowchart outlining key challenges, solutions, and cross-cutting themes.

6. Future Directions

As AI deployment in resource-constrained settings accelerates, a plethora of research opportunities and practical challenges lies ahead. This section outlines several directions that hold promise for advancing the field.

6.1. Dynamic Model Adaptation and On-Device Training

The majority of edge AI solutions rely on static, pre-trained models that seldom adapt after deployment. However, real-world conditions are rarely static; input distributions can drift over time as environments change or user behaviors shift [61]. Therefore, techniques for dynamic model adaptation, such as runtime pruning or partial retraining, are expected to gain traction. By allowing models to adjust their parameters or structure based on real-time feedback, devices can maintain higher accuracy without frequent server communication [81]. Incremental and transfer learning on microcontrollers remain an active research frontier, because the training steps themselves can be computationally taxing. Nevertheless, approaches like federated or split learning could enable incremental updates, provided that the algorithms are carefully optimized for local hardware constraints [82].
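One common way to keep on-device training affordable, similar in spirit to online-learning approaches such as TinyOL, is to freeze the feature extractor and update only a small output layer with one stochastic gradient step per labelled sample. The sketch below uses a binary logistic-regression head on synthetic two-dimensional features; the data, the post-drift labelling rule, and the learning rate are assumptions.

```python
import math
import random

class OnlineOutputLayer:
    """Binary logistic-regression head trained one sample at a time.

    The feature extractor (e.g. a quantized CNN backbone) is assumed frozen;
    only these n_features + 1 parameters are updated on the device.
    """

    def __init__(self, n_features: int, lr: float = 0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, features) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, label: int) -> None:
        """One SGD step on the log-loss for a single labelled example."""
        error = self.predict_proba(features) - label  # d(log-loss)/dz
        for i, xi in enumerate(features):
            self.w[i] -= self.lr * error * xi
        self.b -= self.lr * error

random.seed(0)
head = OnlineOutputLayer(n_features=2)

def sample():
    x = [random.uniform(-1, 1), random.uniform(-1, 1)]
    return x, int(x[0] > x[1])  # hypothetical post-drift labelling rule

for _ in range(2000):  # labelled samples arrive one at a time; none are stored
    x, y = sample()
    head.update(x, y)

test = [sample() for _ in range(500)]
accuracy = sum((head.predict_proba(x) > 0.5) == bool(y) for x, y in test) / len(test)
print(f"accuracy after on-device updates: {accuracy:.2f}")
```

Memory cost is a few parameters plus one sample at a time, which is what makes this style of incremental update plausible on microcontrollers, at the price of adapting only the last layer.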

6.2. Co-Design of Hardware and Algorithms

Hardware–software co-design involves developing AI algorithms and hardware architectures synergistically, ensuring that each is optimized for the other [24]. Rather than designing a generic neural network and attempting to retrofit it for an embedded processor, co-design encourages selecting or inventing neural architectures that map efficiently onto specialized hardware blocks. Techniques like memory-centric computing or near-sensor intelligence can drastically cut energy usage by reducing data transfers [32]. For instance, certain convolutional neural network (CNN) layers can be implemented as streaming pipelines directly connected to sensor inputs, circumventing the need for large intermediate buffers [75]. As the AI ecosystem becomes increasingly heterogeneous, we anticipate novel instruction sets and domain-specific accelerators designed in tandem with lightweight neural models to catalyze breakthroughs in efficiency [19].
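The buffering argument behind streaming layers can be illustrated in software: a causal 1D convolution evaluated sample by sample needs to keep only the last K inputs for a K-tap kernel, whereas a batch implementation holds the whole input window. This Python sketch only illustrates that memory argument; a hardware pipeline would use a fixed-size ring buffer in registers or SRAM, and the kernel values are hypothetical.

```python
from collections import deque

class StreamingConv1D:
    """Causal 1D convolution computed one sample at a time.

    Only the most recent len(kernel) inputs are kept, rather than the whole
    input window a batch implementation needs.
    """

    def __init__(self, kernel, bias: float = 0.0):
        self.kernel = list(kernel)
        self.bias = bias
        # Pre-filled with zeros: the signal is assumed to be 0 before the first sample.
        self.history = deque([0.0] * len(self.kernel), maxlen=len(self.kernel))

    def push(self, sample: float) -> float:
        """Consume one sensor sample and emit y[n] = b + sum_k w[k] * x[n - k]."""
        self.history.append(sample)  # the oldest sample falls out automatically
        acc = self.bias
        for k, w in enumerate(self.kernel):
            acc += w * self.history[-1 - k]  # history[-1] is x[n]
        return acc

def batch_causal_conv(signal, kernel, bias: float = 0.0):
    """Reference version that needs the entire signal in memory."""
    return [bias + sum(w * signal[n - k] for k, w in enumerate(kernel) if n - k >= 0)
            for n in range(len(signal))]

conv = StreamingConv1D([0.5, 0.5])  # two-tap moving average (hypothetical kernel)
signal = [2.0, 4.0, 6.0]
outputs = [conv.push(x) for x in signal]
print(outputs)  # [1.0, 3.0, 5.0]
```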

6.3. Advanced Security and Trust Mechanisms

Securing AI workflows on constrained devices calls for continuous innovation in cryptography, secure enclaves, and tamper-evident hardware. One emerging trend is the exploration of homomorphic encryption, which allows computations to be performed on encrypted data without exposing raw information [12]. Although computationally heavy for large-scale networks, scaled-down variants of homomorphic encryption might become more feasible as specialized hardware accelerators evolve. Another area of interest is secure multiparty computation (MPC), which enables multiple parties to jointly compute functions over their data while keeping inputs private [24]. Applied to federated learning, MPC can further mitigate privacy risks when aggregating updates across untrusted devices. Keeping these security measures lightweight enough for microcontrollers is a central challenge [23].
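The aggregation use case can be illustrated with additive secret sharing, one of the basic building blocks of MPC. Each client splits its fixed-point-encoded update into random shares that sum to the true value modulo a prime, so each of several non-colluding aggregation servers sees only uniformly random numbers, yet combining the servers' totals reveals the sum. The modulus, fixed-point scale, and three-server setup are assumptions, and practical secure-aggregation protocols also handle client dropouts and malicious participants, which this sketch does not.

```python
import secrets

PRIME = 2**61 - 1   # field modulus; must exceed any aggregate magnitude (assumption)
SCALE = 10**6       # fixed-point scale for encoding float updates (assumption)

def encode(x: float) -> int:
    """Map a signed float to a field element via fixed-point encoding."""
    return round(x * SCALE) % PRIME

def decode(v: int) -> float:
    """Inverse of encode: the upper half of the field represents negative numbers."""
    signed = v if v <= PRIME // 2 else v - PRIME
    return signed / SCALE

def share(value: int, n_parties: int) -> list:
    """Split a field element into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares

def secure_sum(encoded_values, n_servers: int = 3) -> int:
    """Each client sends one share to each server; each server adds what it receives."""
    server_totals = [0] * n_servers
    for v in encoded_values:
        for j, s in enumerate(share(v, n_servers)):
            server_totals[j] = (server_totals[j] + s) % PRIME
    # Only the combination of all server totals is meaningful: it equals the overall sum.
    return sum(server_totals) % PRIME

updates = [0.25, -0.10, 0.05]  # one scalar update per client (hypothetical)
total = decode(secure_sum([encode(u) for u in updates]))
print(total)  # approximately 0.2
```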

6.4. Privacy-Preserving Learning at Scale

Federated learning has demonstrated the feasibility of distributed AI, yet scaling to millions or billions of edge devices can introduce performance bottlenecks, fairness concerns, and vulnerability to malicious updates [83]. Researchers are investigating novel aggregation algorithms, incentive mechanisms, and robust outlier detection strategies to sustain large-scale federated deployments [61]. Additionally, differential privacy frameworks provide formal guarantees that bound how much the aggregated model parameters can reveal about any individual data point, an especially relevant consideration for sensitive medical or personal data. Combining such techniques with compression and resource-aware scheduling is essential to ensure that large-scale federated learning remains computationally viable on highly constrained devices [29].
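The clipping-plus-noise step at the core of many differentially private training schemes can be sketched as follows. Clipping bounds any single client's influence on the aggregate, and Gaussian noise scaled to that bound masks the remainder. Here the noise is added on the device before upload (a local variant); DP-FedAvg-style central DP instead adds noise once to the server's sum of clipped updates. The clip norm, noise multiplier, and example update are hypothetical, and the sketch computes no formal privacy budget.

```python
import math
import random

def privatize_update(update, clip_norm: float, noise_multiplier: float, rng=None):
    """Clip an update to L2 norm <= clip_norm, then add Gaussian noise.

    The noise standard deviation is noise_multiplier * clip_norm, as in the
    Gaussian mechanism used by DP-SGD-style training. Converting these settings
    into a concrete (epsilon, delta) guarantee requires a privacy accountant,
    which is not shown.
    """
    rng = rng or random.Random()
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0  # bound each client's influence
    sigma = noise_multiplier * clip_norm
    return [u * scale + rng.gauss(0.0, sigma) for u in update]

raw = [3.0, 4.0]  # hypothetical model update with L2 norm 5
noisy = privatize_update(raw, clip_norm=1.0, noise_multiplier=1.1, rng=random.Random(42))
print(noisy)
```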

6.5. Resilience and Reliability for Real-World Environments

The operational environments for edge devices often include harsh elements, such as extreme weather, that gradually degrade hardware and sensor integrity [52]. Ensuring consistent AI performance in these environments demands robust hardware design, but it also relies on fault-tolerant software that can handle partial failures gracefully. For instance, sensor readings may become noisy or intermittent, yet the AI system must still produce reliable inferences [29]. Traditional machine learning models trained on clean, consistent datasets may falter in such real-world conditions. In response, developers employ redundancy strategies, sensor fusion, and error-correcting techniques to mitigate hardware-induced variability [61].
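Redundancy-based masking can be as simple as taking the median over redundant sensors and falling back to the last good value when all of them drop out. The sensor arrangement, values, and fallback policy below are hypothetical illustrations, not a prescribed design.

```python
from statistics import median

def fuse_redundant(readings, last_good=None):
    """Fuse readings from redundant sensors that measure the same quantity.

    None marks a sensor that did not respond. With three sensors, the median
    masks one stuck or wildly wrong sensor; if no sensor responds, the last
    good fused value is returned instead.
    """
    valid = [r for r in readings if r is not None]
    if not valid:
        return last_good
    return median(valid)

# Hypothetical triple-redundant temperature sensors (degrees C).
print(fuse_redundant([21.4, 21.6, 85.0]))                  # 21.6: stuck sensor is outvoted
print(fuse_redundant([None, 21.5, None]))                  # 21.5: degrade to the surviving sensor
print(fuse_redundant([None, None, None], last_good=21.5))  # 21.5: hold the last good value
```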

At the software layer, models can be trained with augmented data that simulate potential noise or missing channels, improving resilience during inference. Another approach is to integrate continual learning methods that periodically retrain or fine-tune models in situ, helping the system adapt to evolving environmental conditions or device aging [9]. These strategies, while effective, add complexity that must be balanced against already-constrained resources [59].
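The augmentation idea can be sketched as a function that corrupts clean training windows by zeroing whole channels (simulating a failed sensor) and adding Gaussian noise to the rest. The probabilities, noise level, and zero-filling convention are assumptions; other pipelines mark missing channels with an explicit mask instead.

```python
import random

def augment(sample, noise_std: float = 0.05, drop_prob: float = 0.1, rng=None):
    """Return a corrupted copy of a multi-channel training sample.

    sample is a list of channels, each a list of floats. Each channel is zeroed
    with probability drop_prob (a dead or disconnected sensor); surviving
    channels receive additive Gaussian noise (sensor noise).
    """
    rng = rng or random.Random()
    corrupted = []
    for channel in sample:
        if rng.random() < drop_prob:
            corrupted.append([0.0] * len(channel))
        else:
            corrupted.append([x + rng.gauss(0.0, noise_std) for x in channel])
    return corrupted

clean = [[1.0] * 8, [2.0] * 8, [3.0] * 8]  # hypothetical 3-axis sensor window
rng = random.Random(0)
training_batch = [augment(clean, noise_std=0.05, drop_prob=0.2, rng=rng) for _ in range(4)]
```

Each clean window can yield many corrupted variants during training, so the model sees realistic failure patterns without collecting extra data.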

6.6. Benchmarking and Standardization

As the field grows, the lack of universally accepted benchmarks and metrics for AI on constrained devices presents hurdles to reproducibility and fair evaluation [84]. While standard image classification or object detection benchmarks exist for large-scale networks, they do not necessarily reflect the unique constraints of TinyML contexts [20]. Moreover, measuring energy consumption, memory usage, and inference latency in a consistent manner can be challenging, given the diversity of hardware platforms. Initiatives like MLPerf Tiny, which focus on embedded inference, are steps in the right direction [32]. Further standardization efforts would promote transparent comparisons of compression methods, frameworks, and hardware designs, ultimately accelerating the pace of innovation in the ecosystem [23].
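Consistent measurement starts with a fixed protocol: warm-up runs, repeated timed runs, a robust statistic such as the median plus a tail percentile, and energy computed from an externally measured average power. The harness below is a host-side Python sketch of that protocol. On a microcontroller the timer would typically be a hardware cycle counter and the power figure would come from instrumentation on the supply rail; the 50 mW value and the toy model are placeholders.

```python
import statistics
import time
from typing import Callable, Optional

def benchmark(infer: Callable, sample, warmup: int = 10, runs: int = 100,
              avg_power_mw: Optional[float] = None) -> dict:
    """Median latency of infer(sample) and, optionally, estimated energy per inference.

    avg_power_mw must come from an external measurement of the board during
    inference (it is not derived here); energy is then power x time.
    """
    for _ in range(warmup):  # let caches, clocks, and lazy initialisation settle
        infer(sample)
    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    result = {
        "median_ms": statistics.median(latencies_ms),
        "p90_ms": statistics.quantiles(latencies_ms, n=10)[-1],  # tail latency for real-time use
    }
    if avg_power_mw is not None:
        result["energy_uj"] = avg_power_mw * result["median_ms"]  # mW x ms = microjoules
    return result

def toy_model(x):  # stand-in for a real inference call
    return sum(v * v for v in x)

report = benchmark(toy_model, list(range(1000)), avg_power_mw=50.0)
print(report)
```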

6.7. Opportunities and Future Outlook

The rapid expansion of the Internet of Things and the demand for real-time, privacy-preserving analytics have fueled the drive to incorporate artificial intelligence into resource-constrained devices. By migrating inference and, in some cases, training from centralized cloud servers to embedded microcontrollers, this paradigm promises to reduce latency, conserve bandwidth, and protect sensitive data [12]. However, these benefits come at the cost of formidable technical challenges, as typical deep learning pipelines must be significantly re-engineered to fit within tight memory, compute, and power budgets. Researchers and practitioners have responded with a surge of innovations, including model compression (quantization, pruning, knowledge distillation), TinyML frameworks, hardware accelerators, federated learning, and advanced security mechanisms [23].

This paper has explored the key challenges—spanning computational constraints, energy efficiency, real-time requirements, security, privacy, and reliability—that confront AI deployment in edge devices. We then reviewed state-of-the-art solutions, highlighting how the synergy of specialized architectures, optimized software stacks, and collaborative learning protocols makes on-device intelligence increasingly viable [24]. Moreover, by examining opportunities in healthcare, smart cities, agriculture, and Industry 4.0, we revealed the breadth of potential applications poised to benefit from localized AI inference. In parallel, we emphasized that ethical and sustainability considerations remain central to responsible AI adoption, particularly as billions of edge devices enter our homes, streets, and workplaces [28].

Looking ahead, future directions such as dynamic model adaptation, co-design of hardware and algorithms, advanced security mechanisms, large-scale privacy-preserving learning, and rigorous benchmarking offer fertile ground for continued progress [32]. The development of robust, low-power, and secure AI solutions capable of thriving under real-world conditions will require not only breakthroughs in algorithms and hardware but also interdisciplinary collaboration among data scientists, embedded systems engineers, ethicists, and policymakers [50]. Overcoming these challenges will pave the way for a new generation of pervasive, intelligent devices that seamlessly integrate into everyday life while preserving privacy, efficiency, and sustainability. In doing so, edge AI stands to transform industries and societies, enabling us to harness the power of machine intelligence wherever data are generated, even in the most constrained environments [23].

6.8. Key Insights for TinyML Researchers

- Embrace System-Level Co-Design: Model compression alone is insufficient; synergy between algorithms, firmware, and hardware is critical for meaningful speedups and reliability [19,24].

- Benchmark Under Real Constraints: True performance cannot be assessed without measuring energy consumption, latency, and memory usage against real application scenarios, especially under harsh or unpredictable conditions [23,32].

- Layered Security is Essential: On-device inference helps preserve privacy, but resource-starved devices also require robust encryption, secure enclaves, and trustworthy firmware pipelines to combat tampering or data leakage [12,24].

- Adaptation and Specialization: Balancing specialized models (extreme efficiency) with adaptive or multi-task approaches (flexibility) remains an open research question in resource-constrained AI [29,65].

- Ethical and Sustainable Deployments: Large-scale IoT expansions demand addressing fairness, bias, environmental impact, and responsible data management, ensuring TinyML’s long-term societal benefits [22].

7. Conclusions

The rapid expansion of the Internet of Things and the demand for real-time, privacy-preserving analytics have fueled the drive to incorporate on-device artificial intelligence in resource-constrained devices. By migrating inference—and, in some cases, training—from centralized cloud servers to embedded microcontrollers, this paradigm holds the promise of reducing latency, conserving bandwidth, and protecting sensitive data. However, these benefits entail overcoming a wide array of technical hurdles, from managing stringent memory and compute limits to securing physically exposed hardware and meeting real-time performance.

We reviewed the key challenges encountered in deploying AI on constrained hardware, focusing on computational limits, energy efficiency, real-time requirements, security, privacy, and reliability. We further examined the tools and techniques essential to addressing these constraints, including cutting-edge model compression, specialized TinyML frameworks, hardware accelerators, federated learning mechanisms, and advanced security approaches. Concrete application scenarios in healthcare, smart cities, agriculture, and industry highlight the transformative potential of embedded AI while also illustrating the unique demands placed upon these devices.

Ultimately, the future of TinyML depends not only on individual breakthroughs in compression or specialized hardware but also on system-level integration, wherein every layer—from neural architecture design to runtime scheduling and cryptographic defense—is tuned for synergy within extreme resource limits. The success of such holistic solutions will facilitate a new era of distributed AI that is both efficient and ethically grounded, extending sophisticated analytics to the remotest corners of our increasingly connected world.

Funding

This research received no external funding. The APC was funded by Escuela Politécnica Nacional.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Satyanarayanan, M. The emergence of edge computing. Computer 2017, 50, 30–39.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge computing: Vision and challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Li, S.; Da Xu, L.; Zhao, S. The internet of things: A survey. Inf. Syst. Front. 2015, 17, 243–259.

- Bayoudh, K. A survey of multimodal hybrid deep learning for computer vision: Architectures, applications, trends, and challenges. Inf. Fusion 2023, 105, 102217.

- Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and Huffman coding. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016, Conference Track Proceedings, San Juan, Puerto Rico, 2–4 May 2016.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of go with deep neural networks and tree search. Nature 2016, 529, 484–489.

- Warden, P.; Situnayake, D. TinyML: Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Micro-Controllers; O’Reilly Media: Sebastopol, CA, USA, 2019.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

- Xu, L.D.; He, W.; Li, S. Internet of Things in industries: A survey. IEEE Trans. Ind. Informat. 2014, 10, 2233–2243.

- Koomey, J.G. Growth in Data Center Electricity Use 2005 to 2010; Analytics Press: Oakland, CA, USA, 2011; Available online: https://alejandrobarros.com/wp-content/uploads/old/4363/Growth_in_Data_Center_Electricity_use_2005_to_2010.pdf (accessed on 3 February 2025).

- Dehrouyeh, F.; Yang, L.; Ajaei, F.B.; Shami, A. On TinyML and cybersecurity: Electric vehicle charging infrastructure use case. IEEE Access 2024, 12, 108703–108730.

- Shokri, R.; Shmatikov, V. Privacy-preserving deep learning. In Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security, Denver, CO, USA, 12–16 October 2015; pp. 1310–1321.

- Han, H.; Siebert, J. TinyML: A Systematic review and synthesis of existing research. In Proceedings of the 2022 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Jeju Island, Republic of Korea, 21–24 February 2022.

- Venkataramanaiah, S.K.; Ma, Y.; Yin, S.; Nurvithadhi, E.; Dasu, A.; Cao, Y.; Seo, J.-S. DeepX: A software accelerator for low-power deep learning inference on mobile devices. In Proceedings of the 15th International Conference on Information Processing in Sensor Networks (IPSN), Vienna, Austria, 11–14 April 2016; pp. 1–12.

- Reddi, V.J.; Plancher, B.; Kennedy, S.; Moroney, L.; Warden, P.; Suzuki, L.; Agarwal, A.; Banbury, C.; Banzi, M.; Bennett, M.; et al. Widening access to applied machine learning with TinyML. Harv. Data Sci. Rev. 2022, 4.

- Johnvictor, A.C.; Poonkodi, M.; Sankar, N.P.; Vs, T. TinyML-based lightweight AI healthcare mobile chatbot deployment. J. Multidiscip. Healthc. 2024, 17, 5091–5104.

- Chen, Y.; Krishna, T.; Emer, J.; Sze, V. Eyeriss: An energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE J. Solid-State Circuits 2017, 52, 127–138.

- Wiese, P.; İslamoğlu, G.; Scherer, M.; Macan, L.; Jung, V.J.; Burrello, A.; Conti, F.; Benini, L. Toward Attention-based TinyML: A heterogeneous accelerated architecture and automated deployment flow. IEEE Micro 2025, 1.

- Sudharsan, B.; Salerno, S.; Nguyen, D.-D.; Yahya, M.; Wahid, A.; Yadav, P.; Breslin, J.G.; Ali, M.I. TinyML Benchmark: Executing fully connected neural networks on commodity microcontrollers. In Proceedings of the 2021 IEEE World Forum on Internet of Things (WF-IoT), New Orleans, LA, USA, 14 June 2021–31 July 2021.

- Andalib, N.; Selimi, M. Exploring local and cloud-based training use cases for embedded Machine Learning. In Proceedings of the 2024 Mediterranean Conference on Embedded Computing (MECO), Budva, Montenegro, 11–14 June 2024.

- Tsoukas, V.; Boumpa, E.; Giannakas, G.; Kakarountas, A. A review of Machine Learning and TinyML in healthcare. In Proceedings of the Panhellenic Conference on Informatics, Volos, Greece, 26–28 November 2021.

- Elhanashi, A.; Dini, P.; Saponara, S.; Zheng, Q. Advancements in TinyML: Applications, limitations, and impact on IoT devices. Electronics 2024, 13, 3562.

- Zhang, Y.; Wijerathne, D.; Li, Z.; Mitra, T. Power-performance characterization of TinyML systems. In Proceedings of the 2022 IEEE International Conference on Computer Design (ICCD), Lake Tahoe, NV, USA, 23–26 October 2022.

- Bai, L.; Zhao, Y.; Huang, X. A CNN accelerator on FPGA using depthwise separable convolution. IEEE Trans. Circuits Syst. II Express Briefs 2018, 65, 1415–1419.

- Arpaia, P.; Capobianco, L.; Caputo, F.; Cioffi, A.; Esposito, A.; Isgrò, F.; Moccaldi, N.; Pau, D.; Siorpaes, D.; Toscano, E. Accurate energy measurements for Tiny Machine Learning workloads. In Proceedings of the 2024 IEEE International Workshop on Metrology for eXtended Reality, AI and Neural Engineering (MetroXRAINE), St Albans, UK, 21–23 October 2024.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated learning: Challenges, methods, and future directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

- Tsoukas, V.; Gkogkidis, A.; Boumpa, E.; Kakarountas, A. A Review on the emerging technology of TinyML. ACM Comput. Surv. 2024, 56, 1–37.

- Alajlan, N.; Ibrahim, D.M. TinyML: Adopting tiny machine learning in smart cities. J. Auton. Intell. 2024, 7.

- Yang, L.T.; Lei, S.; Jianing, C.; Amine, F.M.; Jun, W.; Edmond, N.; Kai, H. A survey on smart agriculture: Development modes, technologies, and security and privacy challenges. IEEE/CAA J. Autom. Sin. 2021, 8, 273–302.

- Ren, H.; Anicic, D.; Runkler, T.A. TinyOL: TinyML with Online-Learning on microcontrollers. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021.

- Schizas, N.; Karras, A.; Karras, C.; Sioutas, S. TinyML for Ultra-Low Power AI and large scale IoT deployments: A systematic review. Future Internet 2022, 14, 363.

- Pavan, M.; Caltabiano, A.; Roveri, M. On-device subject recognition in UWB-radar data with Tiny Machine Learning. In Proceedings of the CPS Summer School PhD Workshop 2022, Sardinia, Italy, 19–23 September 2022.

- Yousefpour, A.; Ishigaki, G.; Jue, J.P. Fog computing: Towards minimizing delay in the Internet of Things. In Proceedings of the IEEE International Conference on Edge Computing (EDGE), Honolulu, HI, USA, 25–30 June 2017; pp. 17–24.

- Adafruit. Adafruit EdgeBadge—TensorFlow Lite for Microcontrollers. 2025. Available online: https://www.adafruit.com/product/4400 (accessed on 5 February 2025).

- Arducam. Pico4ML-BLE TinyML Dev Kit User Manual. 2025. Available online: https://www.arducam.com/downloads/B0330-Pico4ML-BLE-User-Manual.pdf (accessed on 5 February 2025).

- Arduino. Arduino Nano 33 BLE Sense Datasheet. 2025. Available online: https://docs.arduino.cc/resources/datasheets/ABX00031-datasheet.pdf (accessed on 5 February 2025).

- STMicroelectronics. B-L475E-IOT01A-STM32L4 Discovery Kit IoT Node. 2025. Available online: https://www.st.com/en/evaluation-tools/b-l475e-iot01a.html (accessed on 5 February 2025).

- Espressif Systems. ESP32-S3-DevKitC-1. 2025. Available online: https://docs.espressif.com/projects/esp-dev-kits/en/latest/esp32s3/esp32-s3-devkitc-1/index.html (accessed on 5 February 2025).

- Himax. Himax WE-I Plus EVB Endpoint AI Development Board. 2025. Available online: https://www.sparkfun.com/himax-we-i-plus-evb-endpoint-ai-development-board.html (accessed on 5 February 2025).

- NVIDIA. Jetson Nano Developer Kit Downloads. 2025. Available online: https://developer.nvidia.com/embedded/downloads (accessed on 5 February 2025).

- Arduino. Portenta H7 Datasheet. 2025. Available online: https://docs.arduino.cc/resources/datasheets/ABX00042-ABX00045-ABX00046-datasheet.pdf (accessed on 5 February 2025).

- Raspberry Pi. Raspberry Pi 4 Model B Datasheet. 2025. Available online: https://datasheets.raspberrypi.com/rpi4/raspberry-pi-4-datasheet.pdf (accessed on 5 February 2025).

- Raspberry Pi. Raspberry Pi Pico Datasheet. 2025. Available online: https://datasheets.raspberrypi.com/pico/pico-datasheet.pdf (accessed on 5 February 2025).

- Seeed Studio. Get Started with Seeeduino XIAO. 2025. Available online: https://wiki.seeedstudio.com/Seeeduino-XIAO/ (accessed on 5 February 2025).

- Sony. Spresense Products. 2025. Available online: https://developer.sony.com/spresense/products (accessed on 5 February 2025).

- SparkFun. SparkFun Edge Hookup Guide. 2025. Available online: https://learn.sparkfun.com/tutorials/sparkfun-edge-hookup-guide/all (accessed on 5 February 2025).

- Syntiant Corp. Syntiant TinyML. 2025. Available online: https://www.digikey.com/en/products/detail/syntiant-corp/SYNTIANT-TINYML/15293343 (accessed on 5 February 2025).

- Seeed Studio. Get Started with Wio Terminal. 2025. Available online: https://wiki.seeedstudio.com/Wio-Terminal-Getting-Started/ (accessed on 5 February 2025).

- Abadade, Y.; Benamar, N.; Bagaa, M.; Chaoui, H. Empowering healthcare: TinyML for precise lung disease classification. Future Internet 2024, 16, 391. [Google Scholar] [CrossRef]

- Zaidi, S.A.R.; Hayajneh, A.M.; Hafeez, M.; Ahmed, Q.Z. Unlocking edge intelligence through Tiny Machine Learning (TinyML). IEEE Access 2022, 10, 100867–100877. [Google Scholar] [CrossRef]

- Nurvitadhi, E.; Venkatesh, G.; Sim, J.; Marr, D.; Huang, R.; Hock, J.O.G.; Liew, Y.T.; Srivatsan, K.; Moss, D.; Subhaschandra, S.; et al. Can FPGAs beat GPUs in accelerating next-generation deep neural networks? In Proceedings of the ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA), Monterey, CA, USA, 22–24 February 2017; pp. 5–14. [Google Scholar]

- Conde, J.; Munoz-Arcentales, A.; Alonso, L.; Salvachúa, J.; Huecas, G. Enhanced FIWARE-based architecture for cyber-physical systems with Tiny Machine Learning and Machine Learning operations: A case study on urban mobility systems. IEEE IT Prof. 2024, 26, 55–61. [Google Scholar] [CrossRef]

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar]

- Capogrosso, L.; Cunico, F.; Cheng, D.S.; Fummi, F.; Cristani, M. A Machine Learning-oriented survey on Tiny Machine learning. IEEE Access 2024, 12, 23406–23426. [Google Scholar] [CrossRef]

- Alaa, R.; Hussein, E.; Al-libawy, H. Object detection algorithms implementation on embedded devices: Challenges and suggested solutions. Kufa J. Eng. 2024, 15, 148–169. [Google Scholar] [CrossRef]

- Abadade, Y.; Temouden, A.; Bamoumen, H.; Benamar, N.; Chtouki, Y.; Hafid, A.S. A comprehensive survey on TinyML. IEEE Access 2023, 11, 96892–96922. [Google Scholar] [CrossRef]

- Datta, A.; Pal, A.; Marandi, R.; Chattaraj, N.; Nandi, S.; Saha, S. Real-time air quality predictions for smart cities using TinyML. In Proceedings of the International Conference on Distributed Computing and Networking, Chennai, India, 4–7 January 2024. [Google Scholar] [CrossRef]

- Rüb, M.; Tuchel, P.; Sikora, A.; Mueller-Gritschneder, D. A continual and incremental learning approach for TinyML on-device training using dataset distillation and model size adaption. In Proceedings of the 2024 IEEE International Conference on Industrial Cyber-Physical Systems (ICPS), St. Louis, MO, USA, 12–15 May 2024. [Google Scholar] [CrossRef]

- Karras, A.; Giannaros, A.; Karras, C.; Theodorakopoulos, L.; Mammassis, C.S.; Krimpas, G.A.; Sioutas, S. TinyML algorithms for big data management in large-scale IoT systems. Future Internet 2024, 16, 42. [Google Scholar] [CrossRef]

- Lin, J.; Zhu, L.; Chen, W.-M.; Wang, W.-C.; Han, S. Tiny Machine Learning: Progress and futures [Feature]. IEEE Circuits Syst. Mag. 2023, 23, 8–34. [Google Scholar] [CrossRef]

- Chen, T.; Moreau, T.; Jiang, Z.; Zheng, L.; Yan, E.; Cowan, M.; Shen, H.; Wang, L.; Hu, Y.; Ceze, L.; et al. TVM: End-to-end optimization stack for deep learning. In Proceedings of the 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI 18), Carlsbad, CA, USA, 8–10 October 2018; pp. 1–15. [Google Scholar]

- Oufettoul, H.; Chaibi, R.; Motahhir, S. TinyML applications, research challenges, and future research directions. In Proceedings of the 2024 International Conference on Learning Technologies & Technologies (LTT), London, UK, 17–18 April 2024. [Google Scholar] [CrossRef]

- Zhang, J.; Li, J. Improving the performance of OpenCL-based FPGA accelerator for convolutional neural network. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2019, 27, 2783–2794. [Google Scholar]

- Jaiswal, S.; Goli, R.; Kumar, A.; Seshadri, V.; Sharma, R. MinUn: Accurate ML inference on microcontrollers. In Proceedings of the ACM SIGPLAN Conference on Languages, Compilers, and Tools for Embedded Systems, San Diego, CA, USA, 14 June 2022. [Google Scholar] [CrossRef]

- Ovtcharov, K.; Ruwase, O.; Kim, J.-Y.; Fowers, J.; Strauss, K.; Chung, E.S. Accelerating Deep Convolutional Neural Networks Using Specialized Hardware. Microsoft Research: Redmond, WA, USA, White Paper, February 2015. Available online: https://www.microsoft.com/en-us/research/wp-content/uploads/2016/02/CNN20Whitepaper.pdf (accessed on 5 February 2025).

- Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. A survey on mobile edge computing: The communication perspective. IEEE Commun. Surv. Tuts. 2017, 19, 2322–2358. [Google Scholar] [CrossRef]

- Kim, K.; Jang, S.; Park, J.-H.; Lee, E.; Lee, S.-S. Lightweight and energy-efficient deep learning accelerator for real-time object detection on edge devices. Sensors 2023, 23, 1185. [Google Scholar] [CrossRef] [PubMed]

- Gallager, R.G. A perspective on multiaccess channels. IEEE Trans. Inf. Theory 1985, 31, 124–142. [Google Scholar] [CrossRef]

- Costan, V.; Devadas, S. Intel SGX Explained. Cryptology ePrint Archive, Paper 2016/086, 2016. Available online: https://eprint.iacr.org/2016/086 (accessed on 5 February 2025).

- King, S.; Nadal, S. PPCoin: Peer-to-Peer Crypto-Currency with Proof-of-Stake. Self-published white paper, 19 August 2012. Available online: https://decred.org/research/king2012.pdf (accessed on 5 February 2025).

- Al Faruque, M.A.; Vatanparvar, K. Energy management-as-a-service over fog computing platform. IEEE Internet Things J. 2016, 3, 161–169. [Google Scholar] [CrossRef]

- Azimi, I.; Anzanpour, A.; Rahmani, A.M.; Pahikkala, T.; Levorato, M.; Liljeberg, P.; Dutt, N. HiCH: Hierarchical fog-assisted computing architecture for healthcare IoT. ACM Trans. Embed. Comput. Syst. 2020, 19, 1–29. [Google Scholar] [CrossRef]

- Liu, Y.; Yang, C.; Jiang, L.; Xie, S.; Zhang, Y. Intelligent edge computing for IoT-based energy management in smart cities. IEEE Netw. 2019, 33, 111–117. [Google Scholar] [CrossRef]

- Xu, K.; Zhang, H.; Li, Y.; Zhang, Y.; Lai, R.; Liu, Y. An ultra-low power TinyML system for real-time visual processing at edge. IEEE Trans. Circuits Syst. II Express Briefs 2023, 70, 2640–2644. [Google Scholar] [CrossRef]

- Mach, P.; Becvar, Z. Mobile edge computing: A survey on architecture and computation offloading. IEEE Commun. Surv. Tuts. 2017, 19, 1628–1656. [Google Scholar] [CrossRef]

- Neethirajan, S. Recent advances in wearable sensors for animal health management. Sens. Bio-Sens. Res. 2017, 12, 15–29. [Google Scholar] [CrossRef]

- Yin, S.; Li, X.; Gao, H. Data-based techniques focused on modern industry: An overview. IEEE Trans. Ind. Electron. 2015, 62, 657–667. [Google Scholar] [CrossRef]

- Crawford, K. The Hidden Biases in Big Data. Harvard Business Review, 2013. Available online: https://hbr.org/2013/04/the-hidden-biases-in-big-data (accessed on 5 February 2025).

- Yang, T.-J.; Howard, A.; Chen, B.; Zhang, X.; Go, A.; Sandler, M.; Sze, V.; Adam, H. NetAdapt: Platform-aware neural network adaptation for mobile applications. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 285–300. [Google Scholar]

- Horowitz, M. Computing’s energy problem (and what we can do about it). In Proceedings of the IEEE International Solid-State Circuits Conference—Digest of Technical Papers (ISSCC), San Francisco, CA, USA, 9–13 February 2014; pp. 10–14. [Google Scholar]

- Gentry, C. Fully homomorphic encryption using ideal lattices. In Proceedings of the 41st Annual ACM Symposium on Theory of Computing, Bethesda, MD, USA, 31 May–2 June 2009; pp. 169–178. [Google Scholar]

- Signoretti, G.; Silva, M.; Andrade, P.; Silva, I.; Sisinni, E.; Ferrari, P. An evolving TinyML compression algorithm for IoT environments based on data eccentricity. Sensors 2021, 21, 4153. [Google Scholar] [CrossRef]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).