Federated XAI IDS: An Explainable and Safeguarding Privacy Approach to Detect Intrusion Combining Federated Learning and SHAP †

Abstract

1. Introduction

- To assess the efficacy of an intrusion detection system utilizing cutting-edge machine learning methodologies, federated learning for decentralized training, and explainable artificial intelligence for enhanced interpretability and transparency.

- To minimize computational overhead by utilizing federated learning, which allows decentralized data processing and eliminates the need for central data aggregation.

- To reduce the risk of single points of failure and centralized data breaches through the distributed, node-based architecture of federated learning.

- To ensure the reliability and trustworthiness of IDS predictions by employing explainable AI, which provides insights into model decisions and fosters greater user trust in automated systems.

2. Literature Review

3. Methodology

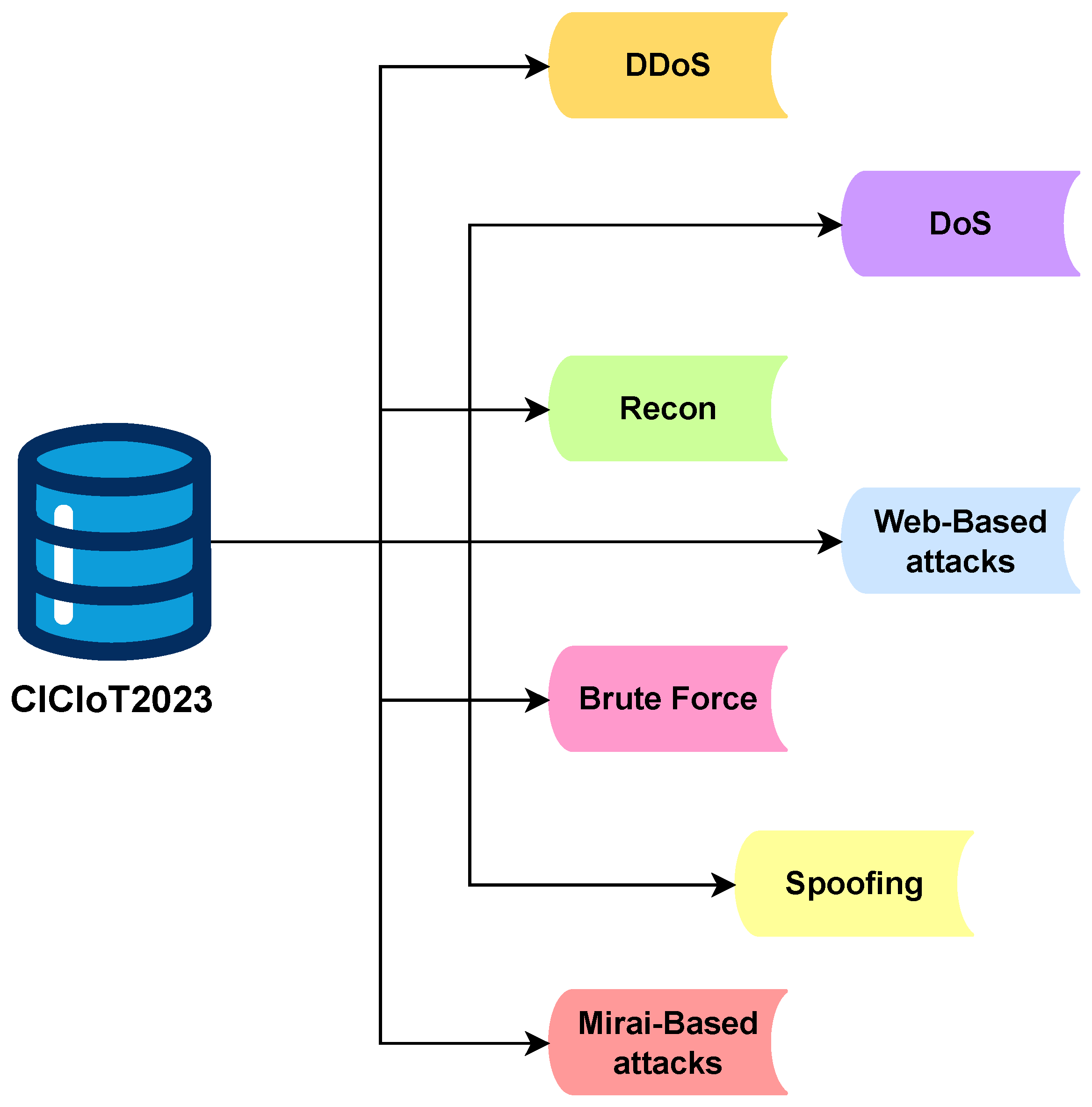

3.1. Data Set Compilation

- I. Distributed Denial of Service (DDoS): large-scale flooding attacks that target multiple IoT devices simultaneously to exhaust computational resources and disrupt network availability.

- II. Denial of Service (DoS): single-source attacks designed to overwhelm a specific IoT device, making it unresponsive to legitimate requests.

- III. Reconnaissance (Recon): passive and active network scanning techniques used to gather information about vulnerable IoT devices, services, and network configurations.

- IV. Web-based attacks: exploitation of security flaws in IoT web interfaces, including SQL injection, command injection, and cross-site scripting (XSS), to gain unauthorized access.

- V. Brute-force attacks: systematic password-guessing attacks against IoT authentication mechanisms to compromise credentials and gain illicit control over devices.

- VI. Spoofing attacks: identity-forging techniques, such as ARP and IP spoofing, used to masquerade as legitimate IoT entities in order to eavesdrop on or manipulate communications.

- VII. Mirai-based attacks: malware-driven attacks that use the Mirai botnet to exploit weaknesses in IoT device security, enabling large-scale infections and subsequent coordinated cyber attacks.

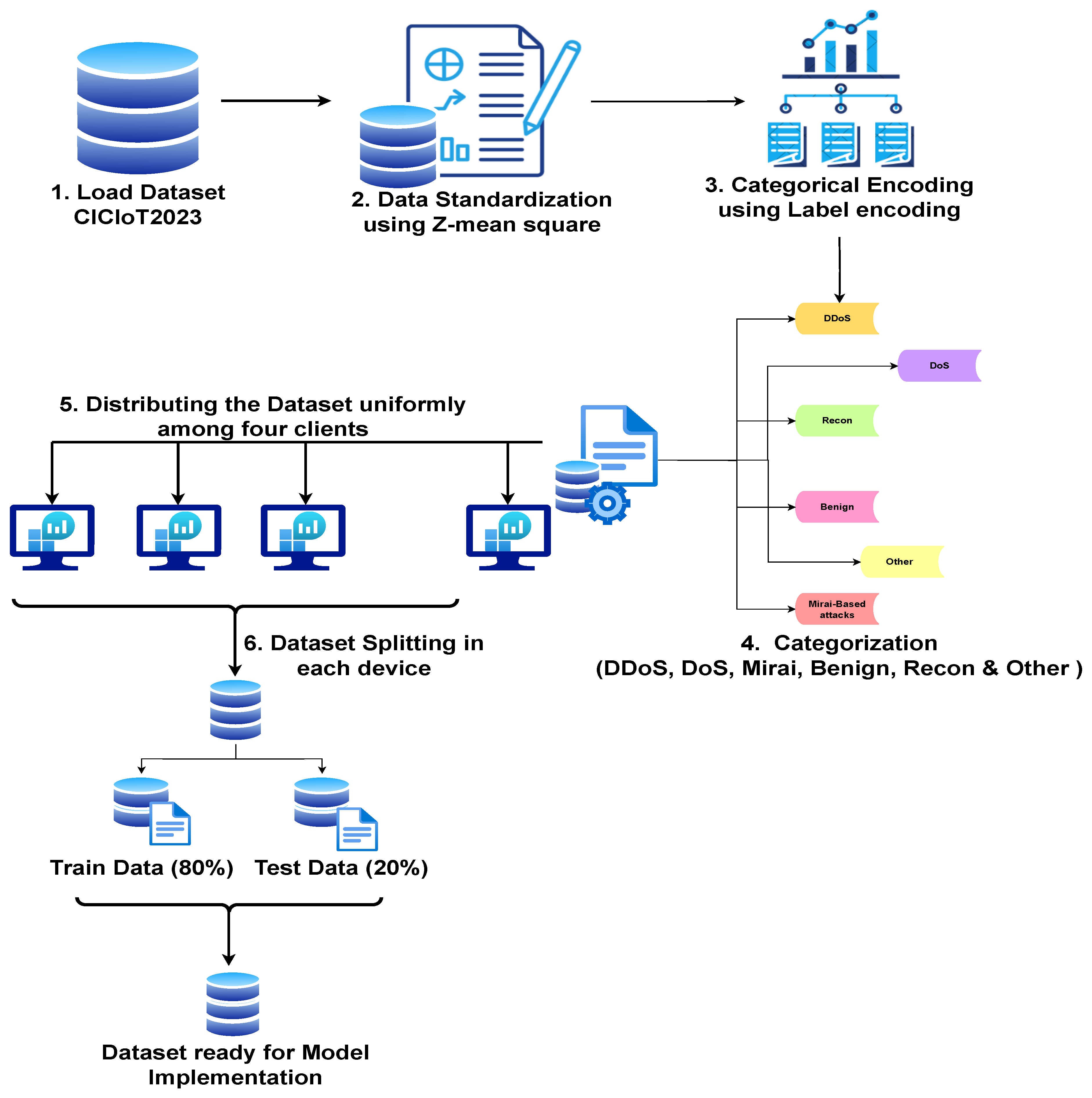

3.2. Data Investigation & Pre-Processing

3.2.1. Data-Type Correction

- a. Data standardization: Standardization is a transformation that rescales each numeric feature to a common scale, improving the quality of the data used in subsequent calculations. In this study, we used Z-score standardization, shown in Equation (1), which transforms each feature to have zero mean and unit variance:

$$Z = \frac{X - \mu}{\sigma} \tag{1}$$

Here:

- $Z$ denotes the standardized value,

- $X$ denotes the original data value,

- $\mu$ represents the mean of the data,

- $\sigma$ represents the standard deviation of the data.

- b. Categorical encoding: Machine learning algorithms require numerical input to perform mathematical operations, so categorical encoding converts categorical data, which consists of arbitrary labels or discrete values, into a numerical format. In this study, we used label encoding to convert the attack class labels to numeric values; for example, the label DDoS is mapped to class "0". Unlike one-hot encoding, which creates a separate binary (dummy) column for every category, label encoding represents each category with a single integer, which keeps the representation compact and the data processing efficient. Because these integers serve only as class identifiers for the target, their order does not imply any ranking among attack types, and each integer can be mapped back to its original label, preserving interpretability. The encoding is given in Equation (2):

$$f: C \rightarrow N \tag{2}$$

Here,

- $f$ denotes the function that maps categorical values to numeric values;

- $C$ represents the set of categorical data;

- $N$ represents the set of converted numerical data.
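The two pre-processing steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the helper names `fit_standardizer`, `standardize`, and `label_encode` and the toy data are hypothetical. It assumes the mean and standard deviation are estimated on the training split only and then reused for the test split, and it uses the population standard deviation (`ddof=0`), the same convention as scikit-learn's `StandardScaler`.

```python
import numpy as np


def fit_standardizer(X_train: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Estimate per-feature mean (mu) and standard deviation (sigma)."""
    mu = X_train.mean(axis=0)
    sigma = X_train.std(axis=0)  # population std (ddof=0)
    # Constant columns would otherwise cause division by zero.
    sigma = np.where(sigma == 0, 1.0, sigma)
    return mu, sigma


def standardize(X: np.ndarray, mu: np.ndarray, sigma: np.ndarray) -> np.ndarray:
    """Equation (1), applied column-wise: Z = (X - mu) / sigma."""
    return (X - mu) / sigma


def label_encode(labels: list[str]) -> tuple[np.ndarray, dict[str, int]]:
    """Map each class name to an integer in order of first appearance."""
    mapping: dict[str, int] = {}
    for label in labels:
        if label not in mapping:
            mapping[label] = len(mapping)
    encoded = np.array([mapping[label] for label in labels], dtype=np.int64)
    return encoded, mapping


# Hypothetical toy data: three flows with two numeric features each.
X_train = np.array([[10.0, 200.0], [20.0, 400.0], [30.0, 600.0]])
mu, sigma = fit_standardizer(X_train)
Z = standardize(X_train, mu, sigma)
print(Z.mean(axis=0), Z.std(axis=0))  # approximately [0, 0] and [1, 1]

y, classes = label_encode(["DDoS", "DoS", "DDoS", "Recon"])
print(y, classes)  # [0 1 0 2] {'DDoS': 0, 'DoS': 1, 'Recon': 2}

# Keep the inverse mapping so predictions can be reported as class names.
inverse = {code: name for name, code in classes.items()}
```

Numbering classes by first appearance is only one convention; scikit-learn's `LabelEncoder`, for instance, sorts the class names, so which attack receives class 0 depends on the encoder used.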

3.2.2. Class-Conversion

3.2.3. Data Set Splitting

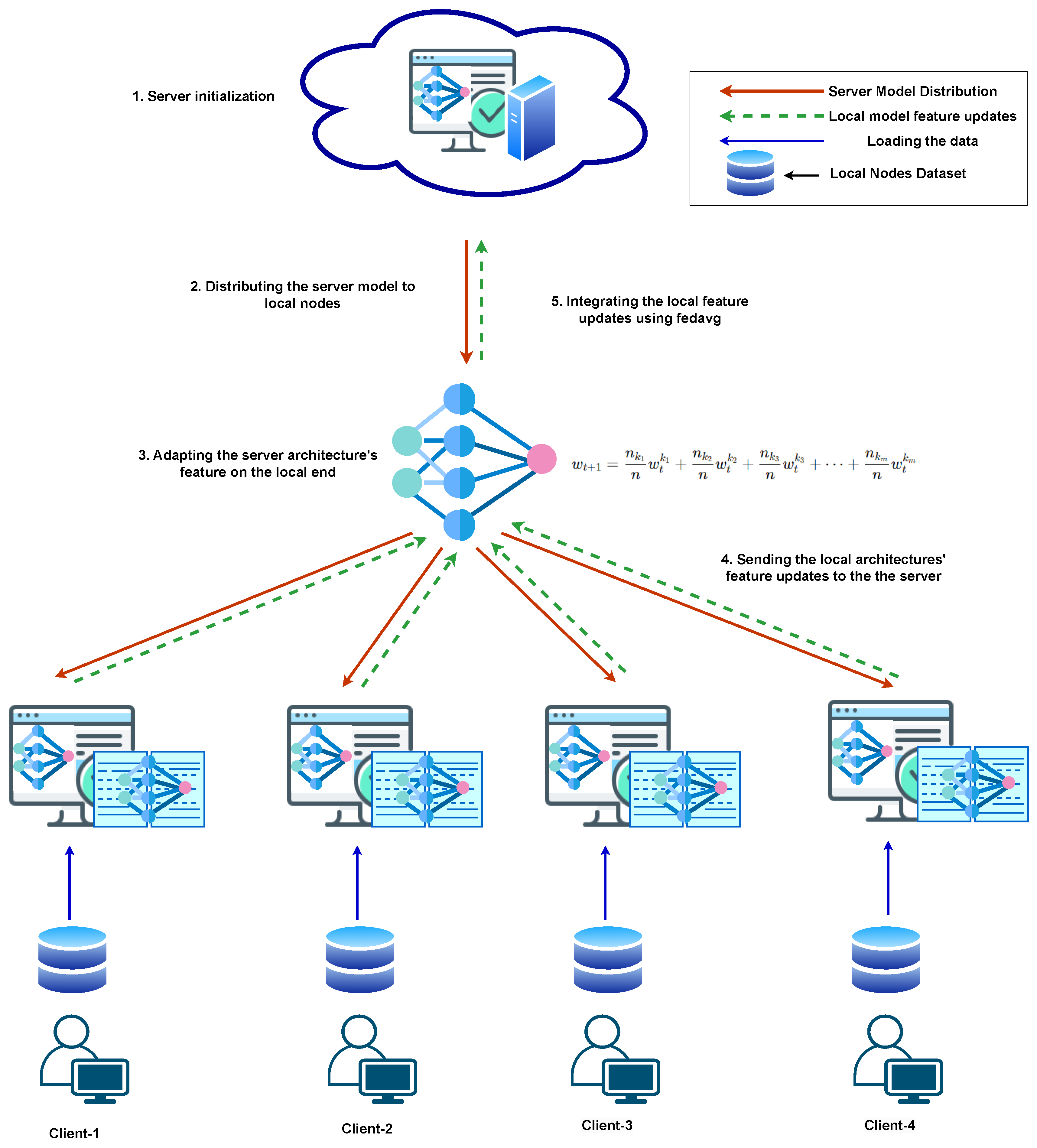

3.3. Proposed Framework

FedXAIIDS

- Initialization: In FL, initialization is the procedure of establishing the initial global model before training begins on the client endpoints. Our experiments began with this step: a central server defines the global model architecture and distributes it to all participating clients.

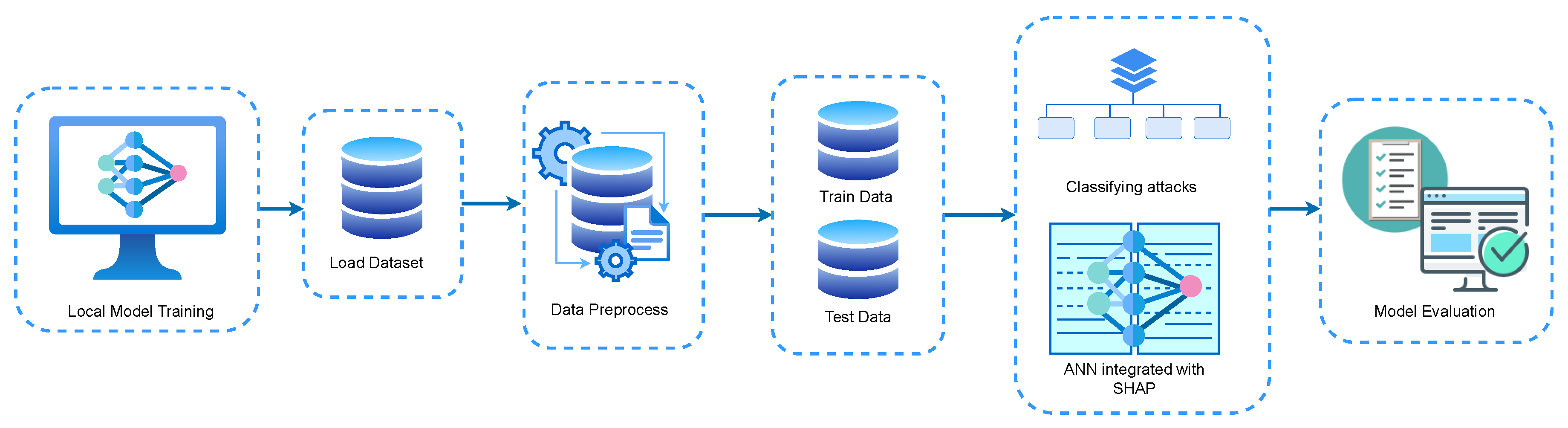

- Local model training: Next, upon receiving the global model, each client begins local training on its own data. At this stage, every participating client independently trains a copy of the global model on its local data set before sending its updates to the central server. The local architecture is depicted in Figure 4. Each client trains its own instance of the ANN, and its results are explained using SHAP. An ANN is loosely inspired by the structure of the human brain and consists of layers of interconnected neurons. In this work, the ANN is constructed as follows:

- (a) Input layer: The input layer of the artificial neural network (ANN) has 64 neurons representing the 64 features of the data set, with each neuron processing a corresponding feature. It uses the ReLU activation function, given in Equation (3):

$$\mathrm{ReLU}(x) = \max(0, x) \tag{3}$$

ReLU passes positive weighted inputs through unchanged and sets negative inputs to 0, which supports efficient learning and faster convergence. Because it is simple yet non-linear, ReLU produces sparse activations and scales well, making it suitable for complex tasks such as intrusion detection.

- (b) Dropout layers: Two dropout layers with a rate of 0.5 are used. During each training iteration, each dropout layer randomly sets 50% of its input units to zero, which helps reduce overfitting. This technique minimizes reliance on individual neurons, allowing the network to learn more robust features.

- (c) Hidden layers: The model has two hidden layers to improve learning and feature abstraction. The first hidden layer consists of 128 neurons with ReLU activation, which lets the network capture complex patterns through non-linear transformations. The second hidden layer has 64 neurons, also with ReLU activation, which reduces dimensionality while retaining abstraction for better computational efficiency. This layered structure balances learning capacity against processing speed, allowing deeper pattern identification and more effective generalization.

- (d) Output layer: The output layer contains six neurons, each corresponding to one of the six target classes in the classification task. It uses the softmax activation function, which transforms the raw outputs into a probability distribution over the classes. Softmax guarantees that the probabilities sum to 1 and assigns higher probability to more likely classes, so the model can simply predict the class with the highest probability; this makes it a popular choice for multi-class classification problems.

- (e) Loss function: The framework uses categorical cross-entropy as its loss function, which quantifies the disparity between the actual label distribution and the predicted probability distribution. This loss is standard for multi-class classification: it computes the negative log-likelihood of the actual class, so predictions that assign low probability to the correct class are penalized heavily. Minimizing this loss tunes the model so that its predicted probabilities align better with the actual labels, which improves its accuracy.

- (f) Optimizer: The model uses the Adam optimizer with an initial learning rate of 0.001, which combines momentum with adaptive learning rates for improved training. Adam adapts the learning rate of each individual parameter, which leads to faster convergence and robust performance across many types of problems; it is therefore widely used in deep learning models.
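The layer stack described in (a) to (f) can be illustrated with a NumPy forward pass. This is a sketch under stated assumptions rather than the authors' implementation: reading the 64-neuron "input layer" as a dense ReLU layer on 64 input features, the positions of the two dropout layers, the He weight initialization, and all function names are assumptions, and training with Adam is not shown. It demonstrates ReLU (Equation (3)), inverted dropout at rate 0.5 that is active only during training, a softmax output over six classes, and the categorical cross-entropy loss.

```python
import numpy as np

# Assumed layer widths: 64 input features -> 64 (ReLU) -> 128 (ReLU)
# -> 64 (ReLU) -> 6 (softmax). Dropout placement is an assumption.
LAYER_SIZES = [64, 64, 128, 64, 6]
DROPOUT_AFTER = {0, 1}  # indices of dense layers followed by dropout
DROPOUT_RATE = 0.5


def relu(x: np.ndarray) -> np.ndarray:
    """Equation (3): element-wise max(0, x)."""
    return np.maximum(0.0, x)


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max avoids overflow."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def dropout(x, rate, training, rng):
    """Inverted dropout: zero a `rate` fraction of units and rescale the rest.

    At inference time (training=False) the input passes through unchanged.
    """
    if not training:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


def init_params(rng):
    """He-normal weights (an assumption that suits ReLU) and zero biases."""
    params = []
    for fan_in, fan_out in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:]):
        W = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))
        params.append((W, np.zeros(fan_out)))
    return params


def forward(params, X, training=False, rng=None):
    """Return class probabilities of shape (batch, 6)."""
    h = X
    last = len(params) - 1
    for i, (W, b) in enumerate(params):
        z = h @ W + b
        if i == last:
            return softmax(z)
        h = relu(z)
        if i in DROPOUT_AFTER:
            h = dropout(h, DROPOUT_RATE, training, rng)
    raise ValueError("params must contain at least one layer")


def categorical_cross_entropy(probs, y_onehot, eps=1e-12):
    """Mean negative log-likelihood of the true class."""
    return float(-np.mean(np.sum(y_onehot * np.log(probs + eps), axis=1)))


rng = np.random.default_rng(0)
params = init_params(rng)
X = rng.normal(size=(8, 64))    # 8 hypothetical standardized flows
y = rng.integers(0, 6, size=8)  # hypothetical integer class labels
probs = forward(params, X, training=True, rng=rng)
loss = categorical_cross_entropy(probs, np.eye(6)[y])
print(probs.shape, round(loss, 4))
```

In a framework such as Keras, the same stack would typically be built from `Dense` and `Dropout` layers and compiled with `optimizer=Adam(learning_rate=0.001)` and `loss="categorical_crossentropy"`; the NumPy version simply makes each operation explicit.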

- Global aggregator: The central server aggregates the model updates from all clients into a unified global model using federated averaging (FedAvg), given in Equation (4). In this method, the server computes a weighted average of the client model parameters (weights), where each client's contribution is proportional to the size of its local data set. The method has proven effective in several fields, for example, a mobile healthcare application [40], IoT-based intrusion detection [41], and image classification with non-IID data [42]. FedAvg improves the global model by integrating diverse local insights while maintaining data privacy, making it a fundamental technique in FL [43]. The calculation is as follows [43]:

$$w_{t+1} = \sum_{k=1}^{K} \frac{n_k}{n} \, w_{t+1}^{k} \tag{4}$$

where:

- $w_{t+1}$ represents the new global model parameters;

- $K$ denotes the number of participating clients;

- $n_k$ is the number of local training samples for client $k$;

- $n = \sum_{k=1}^{K} n_k$ is the total number of training samples across all selected clients;

- $w_{t+1}^{k}$ represents the locally updated model parameters from client $k$ at round $t$.
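Equation (4) translates directly into code. The sketch below is a minimal NumPy version of the server-side aggregation step only; the function name `fedavg`, the representation of each client model as a list of per-layer arrays, and the sample counts in the example are hypothetical. Client-side training and communication are omitted.

```python
import numpy as np


def fedavg(client_weights, client_sizes):
    """Equation (4): w_{t+1} = sum_k (n_k / n) * w_{t+1}^k.

    client_weights: one list of per-layer parameter arrays per client;
                    all clients must share the same architecture.
    client_sizes:   local training-sample counts n_k, one per client.
    """
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need a non-empty list with one sample count per client")
    n = float(sum(client_sizes))  # total samples across selected clients
    global_weights = []
    for layer in range(len(client_weights[0])):
        # Weighted sum of this layer's parameters over all K clients.
        layer_avg = sum(
            (n_k / n) * weights[layer]
            for weights, n_k in zip(client_weights, client_sizes)
        )
        global_weights.append(layer_avg)
    return global_weights


# Four hypothetical clients, each holding a one-layer model.
clients = [
    [np.array([1.0, 2.0])],
    [np.array([3.0, 4.0])],
    [np.array([5.0, 6.0])],
    [np.array([7.0, 8.0])],
]
sizes = [100, 100, 200, 600]  # hypothetical local data-set sizes n_k
new_global = fedavg(clients, sizes)
print(new_global[0])  # approximately [5.6 6.6]
```

Weighting by $n_k$ means a client with more local data pulls the global model further toward its own parameters; when every $n_k$ is equal, FedAvg reduces to a plain mean of the client models.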

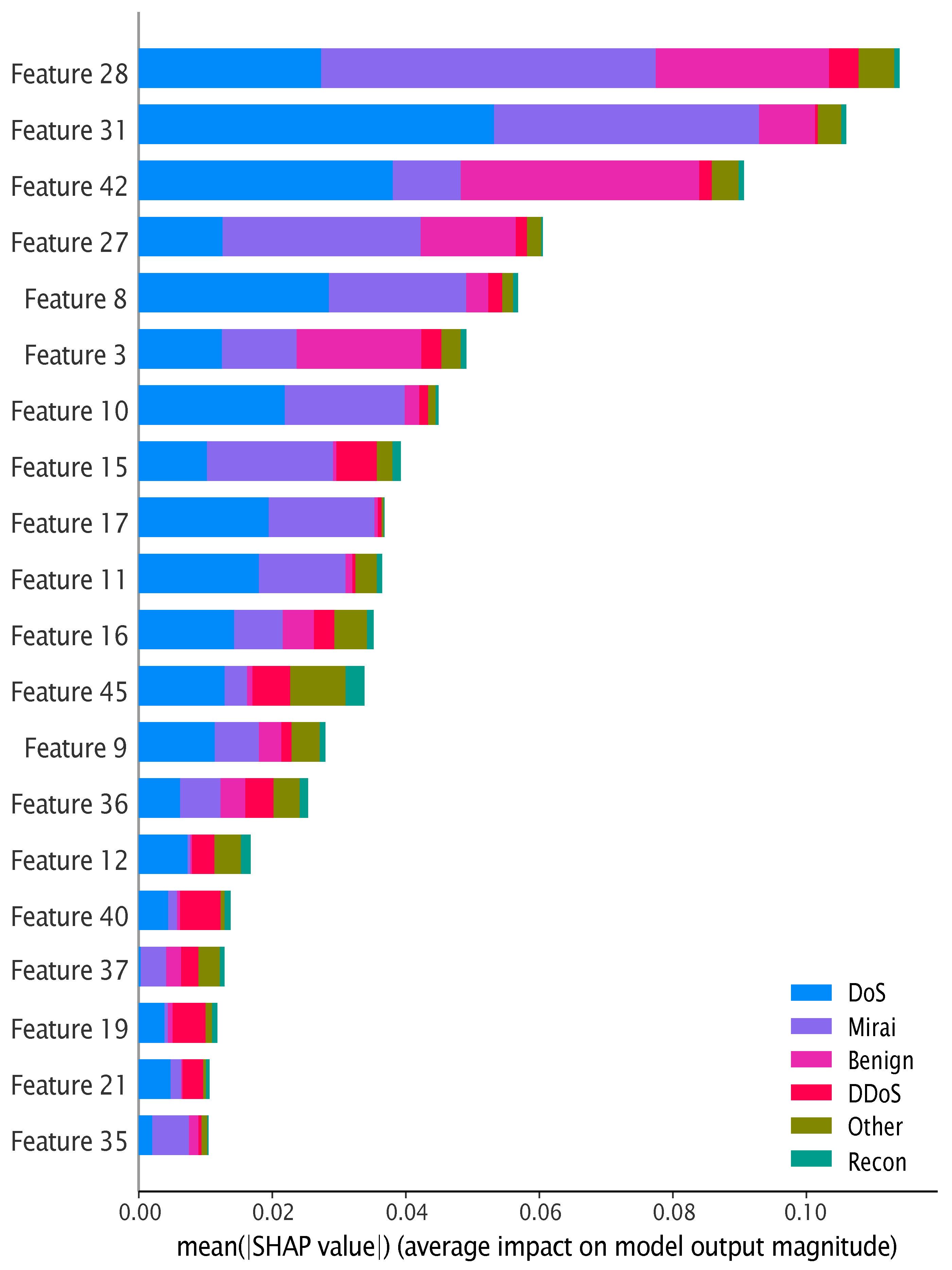

- Explainable AI (XAI) integration: Explainability is a crucial property when analyzing machine learning and deep learning models. In this experiment, the XAI technique SHAP was applied at the global end of the framework, after the results of all local models had been aggregated. SHAP is a model-agnostic technique that improves the interpretability of the model used in the experiment. It builds on Shapley values from cooperative game theory to calculate the contribution of each feature to the predicted result [9]. In this study, each feature of the data set is treated as a player in a cooperative game, and the prediction of the proposed framework is treated as the outcome of that game. SHAP then computes the Shapley value of each feature as its average marginal contribution across all possible combinations (coalitions) of features. The SHAP library was used to compute SHAP values for the test subset of the experimental data set, CICIoT2023. Specifically, Kernel SHAP, introduced in [9], was used to interpret the framework's predictions on CICIoT2023. This method provides insight into which features most strongly influence the output of the proposed framework.
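To make the coalition-averaging idea concrete, the sketch below computes exact Shapley values by enumerating every feature coalition. It is an illustration, not Kernel SHAP: Kernel SHAP approximates the same quantities with a weighted linear regression over sampled coalitions, because exact enumeration needs on the order of $2^n$ model evaluations and is infeasible for a data set with dozens of features. Here a feature outside a coalition is simply replaced by a single baseline value, whereas the SHAP library's `KernelExplainer` averages over a background data set; the function name `exact_shapley`, the baseline, and the toy model are assumptions.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def exact_shapley(
    f: Callable[[list[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley value of every feature of x for the model f.

    Features outside a coalition are set to their baseline value
    (an assumption; other "missing feature" conventions exist).
    """
    n = len(x)

    def value(coalition: set[int]) -> float:
        # Coalition members keep their real value; the rest use the baseline.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalitions S not containing i have 0..n-1 members
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition S.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z: list[float]) -> float:
    """Hypothetical stand-in for the classifier's score for one class."""
    return 2.0 * z[0] - 1.0 * z[1] + 0.5 * z[0] * z[2]


x = [1.0, 3.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = exact_shapley(toy_model, x, baseline)
print([round(p, 6) for p in phi])  # [2.5, -3.0, 0.5]
print(sum(phi), toy_model(x) - toy_model(baseline))
```

The result shows two properties that SHAP relies on: the values sum to the difference between the prediction and the baseline prediction (efficiency), and the interaction term $0.5\,z_0 z_2$ is split equally between features 0 and 2.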

3.4. Evaluation Metrics

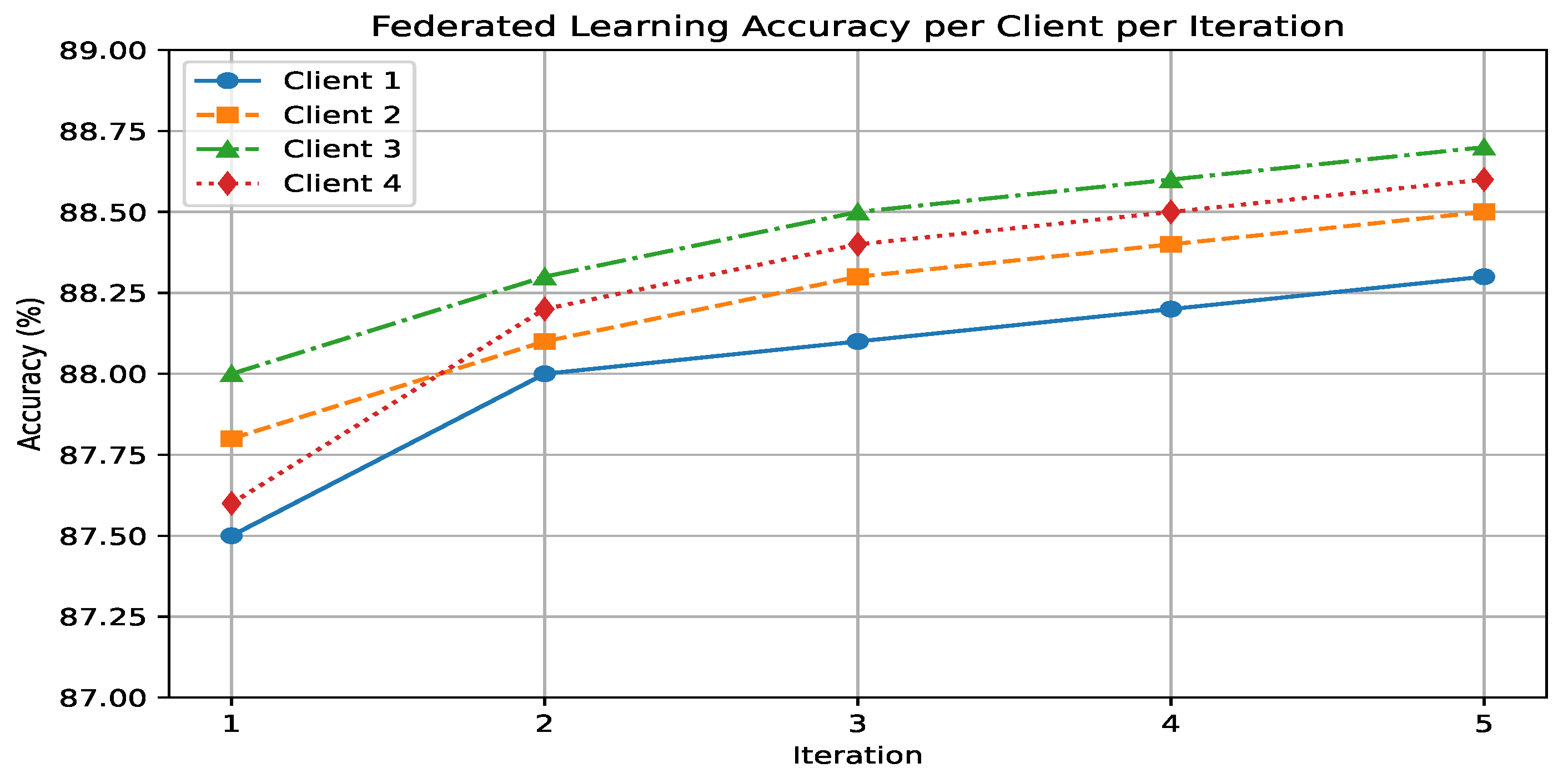

4. Experimental Results

FedXAIIDS

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IDS | Intrusion Detection System |
| FL | Federated Learning |
| XAI | eXplainable AI |
| AI | Artificial Intelligence |
| SHAP | SHapley Additive exPlanations |
| ML | Machine Learning |
| DL | Deep Learning |
| ANN | Artificial Neural Network |
| FedXAIIDS | Federated Explainable IDS |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |

References

- Wang, S.; Asif, M.; Shahzad, M.F.; Ashfaq, M. Data privacy and cybersecurity challenges in the digital transformation of the banking sector. Comput. Secur. 2024, 147, 104051.

- Otoum, Y.; Nayak, A. As-ids: Anomaly and signature based ids for the internet of things. J. Netw. Syst. Manag. 2021, 29, 23.

- Okoye, C.C.; Nwankwo, E.E.; Usman, F.O.; Mhlongo, N.Z.; Odeyemi, O.; Ike, C.U. Securing financial data storage: A review of cybersecurity challenges and solutions. Int. J. Sci. Res. Arch. 2024, 11, 1968–1983.

- Agrawal, S.; Sarkar, S.; Aouedi, O.; Yenduri, G.; Piamrat, K.; Alazab, M.; Bhattacharya, S.; Maddikunta, P.K.R.; Gadekallu, T.R. Federated learning for intrusion detection system: Concepts, challenges and future directions. Comput. Commun. 2022, 195, 346–361.

- Karatas, G.; Demir, O.; Sahingoz, O.K. Increasing the performance of machine learning-based IDSs on an imbalanced and up-to-date dataset. IEEE Access 2020, 8, 32150–32162.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the NIPS, Long Beach, CA, USA, 4–9 December 2017.

- Adadi, A.; Berrada, M. Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- Fatema, K.; Anannya, M.; Dey, S.K.; Su, C.; Mazumder, R. Securing Networks: A Deep Learning Approach with Explainable AI (XAI) and Federated Learning for Intrusion Detection. In Data Security and Privacy Protection; Springer: Berlin/Heidelberg, Germany, 2024; pp. 260–275.

- Lundberg, S. A unified approach to interpreting model predictions. arXiv 2017, arXiv:1705.07874.

- Dong, T.; Li, S.; Qiu, H.; Lu, J. An interpretable federated learning-based network intrusion detection framework. arXiv 2022, arXiv:2201.03134.

- Neto, E.C.P.; Dadkhah, S.; Ferreira, R.; Zohourian, A.; Lu, R.; Ghorbani, A.A. CICIoT2023: A real-time dataset and benchmark for large-scale attacks in IoT environment. Sensors 2023, 23, 5941.

- Hodo, E.; Bellekens, X.; Hamilton, A.; Tachtatzis, C.; Atkinson, R. Machine learning methods for cyber security intrusion detection: Overview, issues, and open challenges. Int. J. Commun. Syst. 2016, 29, 2056–2085.

- Li, W.; Liao, X.; Wang, W.; Zhang, W. Intrusion detection system based on SVM with feature selection. Int. J. Commun. Syst. 2012, 25, 934–945.

- Singh, G.; Singh, L. Network anomaly detection using hybrid decision tree. Int. J. Innov. Technol. Explor. Eng. IJITEE 2019, 8, 2451–2456.

- Yin, C.; Zhu, Y.; Fei, J.; He, X. A deep learning approach for intrusion detection using recurrent neural networks. IEEE Access 2017, 5, 21954–21961.

- Maseer, Z.K.; Yusof, R.; Bahaman, N.; Mostafa, S.A.; Foozy, C.F.M. Benchmarking of machine learning for anomaly based intrusion detection systems in the CICIDS2017 dataset. IEEE Access 2021, 9, 22351–22370.

- Sarhan, M.; Layeghy, S.; Portmann, M. Evaluating standard feature sets towards increased generalisability and explainability of ML-based network intrusion detection. Big Data Res. 2022, 30, 100359.

- Fatema, K.; Dey, S.K.; Bari, R.; Mazumder, R. A Novel Two-Stage Classification Architecture Integrating Machine Learning and Artificial Immune System for Intrusion Detection on Balanced Dataset. In Proceedings of the International Conference on Information and Communication Technology for Intelligent Systems, Las Vegas, NV, USA, 22–23 May 2024; Springer: Singapore, 2024; pp. 179–189.

- Nguyen, S.N.; Nguyen, V.Q.; Choi, J.; Kim, K. Design and implementation of intrusion detection system using convolutional neural network for DoS detection. In Proceedings of the 2nd International Conference on Machine Learning and Soft Computing, Phu Quoc Island, Vietnam, 2–4 February 2018; pp. 34–38.

- de Carvalho Bertoli, G.; Junior, L.A.P.; Saotome, O.; dos Santos, A.L. Generalizing intrusion detection for heterogeneous networks: A stacked-unsupervised federated learning approach. Comput. Secur. 2023, 127, 103106.

- Toldinas, J.; Venčkauskas, A.; Damaševičius, R.; Grigaliūnas, Š.; Morkevičius, N.; Baranauskas, E. A novel approach for network intrusion detection using multistage deep learning image recognition. Electronics 2021, 10, 1854.

- Markovic, T.; Leon, M.; Buffoni, D.; Punnekkat, S. Random forest based on federated learning for intrusion detection. In Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations, Hersonissos, Greece, 17–20 June 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 132–144.

- Lazzarini, R.; Tianfield, H.; Charissis, V. Federated learning for IoT intrusion detection. AI 2023, 4, 509–530.

- Torre, D.; Chennamaneni, A.; Jo, J.; Vyas, G.; Sabrsula, B. Toward Enhancing Privacy Preservation of a Federated Learning CNN Intrusion Detection System in IoT: Method and Empirical Study. ACM Trans. Softw. Eng. Methodol. 2025, 34, 1–48.

- Almadhor, A.; Altalbe, A.; Bouazzi, I.; Hejaili, A.A.; Kryvinska, N. Strengthening network DDOS attack detection in heterogeneous IoT environment with federated XAI learning approach. Sci. Rep. 2024, 14, 24322.

- Alsaleh, S.; Menai, M.E.B.; Al-Ahmadi, S. A Heterogeneity-Aware Semi-Decentralized Model for a Lightweight Intrusion Detection System for IoT Networks Based on Federated Learning and BiLSTM. Sensors 2025, 25, 1039.

- Bensaid, R.; Labraoui, N.; Ari, A.A.A.; Saidi, H.; Emati, J.H.M.; Maglaras, L. SA-FLIDS: Secure and authenticated federated learning-based intelligent network intrusion detection system for smart healthcare. PeerJ Comput. Sci. 2024, 10, e2414.

- Sun, S.; Sharma, P.; Nwodo, K.; Stavrou, A.; Wang, H. FedMADE: Robust Federated Learning for Intrusion Detection in IoT Networks Using a Dynamic Aggregation Method. In Proceedings of the International Conference on Information Security, Arlington, VA, USA, 23–25 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 286–306.

- Al-Imran, M.; Ripon, S.H. Network intrusion detection: An analytical assessment using deep learning and state-of-the-art machine learning models. Int. J. Comput. Intell. Syst. 2021, 14, 200.

- Arslan, R.S. FastTrafficAnalyzer: An efficient method for intrusion detection systems to analyze network traffic. Dicle Üniversitesi Mühendislik Fakültesi Mühendislik Dergisi 2021, 12, 565–572.

- Tonni, Z.A.; Mazumder, R. A Novel Feature Selection Technique for Intrusion Detection System Using RF-RFE and Bio-inspired Optimization. In Proceedings of the 2023 57th Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 22–24 March 2023; pp. 1–6.

- Haque, N.I.; Khalil, A.A.; Rahman, M.A.; Amini, M.H.; Ahamed, S.I. Biocad: Bio-inspired optimization for classification and anomaly detection in digital healthcare systems. In Proceedings of the 2021 IEEE International Conference on Digital Health (ICDH), Chicago, IL, USA, 5–10 September 2021; pp. 48–58.

- Aldhaheri, S.; Alghazzawi, D.; Cheng, L.; Alzahrani, B.; Al-Barakati, A. DeepDCA: Novel network-based detection of IoT attacks using artificial immune system. Appl. Sci. 2020, 10, 1909.

- Rashid, M.M.; Khan, S.U.; Eusufzai, F.; Redwan, M.A.; Sabuj, S.R.; Elsharief, M. A federated learning-based approach for improving intrusion detection in industrial internet of things networks. Network 2023, 3, 158–179.

- Liu, W.; Xu, X.; Wu, L.; Qi, L.; Jolfaei, A.; Ding, W.; Khosravi, M.R. Intrusion detection for maritime transportation systems with batch federated aggregation. IEEE Trans. Intell. Transp. Syst. 2022, 24, 2503–2514.

- Yaras, S.; Dener, M. IoT-Based Intrusion Detection System Using New Hybrid Deep Learning Algorithm. Electronics 2024, 13, 1053.

- Becerra-Suarez, F.L.; Tuesta-Monteza, V.A.; Mejia-Cabrera, H.I.; Arcila-Diaz, J. Performance Evaluation of Deep Learning Models for Classifying Cybersecurity Attacks in IoT Networks. Proc. Inform. 2024, 11, 32.

- Khan, M.M.; Alkhathami, M. Anomaly detection in IoT-based healthcare: Machine learning for enhanced security. Sci. Rep. 2024, 14, 5872.

- Boateng, A.; Odoom, C.; Mensah, E.T.; Fobi, S.M.; Maposa, D. Predictive Analysis of Misuse of Alcohol and Drugs using Machine Learning Algorithms: The Case of using an Imbalanced Dataset from South Africa. Appl. Math. 2023, 17, 261–271.

- Brisimi, T.S.; Chen, R.; Mela, T.; Olshevsky, A.; Paschalidis, I.C.; Shi, W. Federated learning of predictive models from federated electronic health records. Int. J. Med. Inform. 2018, 112, 59–67.

- Liu, Y.; Zhang, Y.; Kang, J.; Yu, R.; Deng, Q. Secure Federated Learning for Autonomous Driving. IEEE Trans. Veh. Technol. 2020, 69, 7131–7140.

- Zhao, Y.; Li, M.; Lai, L.; Suda, N.; Civin, D.; Chandra, V. Federated learning with non-iid data. arXiv 2018, arXiv:1806.00582.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Adamova, A.; Zhukabayeva, T.; Mukanova, Z.; Oralbekova, Z. Enhancing internet of things security against structured query language injection and brute force attacks through federated learning. Int. J. Electr. Comput. Eng. 2025, 15, 1187.

- Saadouni, R.; Gherbi, C.; Aliouat, Z.; Harbi, Y.; Khacha, A.; Mabed, H. Securing smart agriculture networks using bio-inspired feature selection and transfer learning for effective image-based intrusion detection. Internet Things 2025, 29, 101422.

- Chen, H.; Wang, Z.; Yang, S.; Luo, X.; He, D.; Chan, S. Intrusion detection using synaptic intelligent convolutional neural networks for dynamic Internet of Things environments. Alex. Eng. J. 2025, 111, 78–91.

- Shirley, J.J.; Priya, M. An Adaptive Intrusion Detection System for Evolving IoT Threats: An Autoencoder-FNN Fusion. IEEE Access 2025, 12, 4201–4217.

- Ji, R.; Selwal, A.; Kumar, N.; Padha, D. Cascading Bagging and Boosting Ensemble Methods for Intrusion Detection in Cyber-Physical Systems. Secur. Priv. 2025, 8, e497.

- Sakraoui, S.; Ahmim, A.; Derdour, M.; Ahmim, M.; Namane, S.; Dhaou, I.B. FBMP-IDS: FL-based blockchain-powered lightweight MPC-secured IDS for 6G networks. IEEE Access 2024, 12, 105887–105905.

- Gulzar, Q.; Mustafa, K. Enhancing network security in industrial IoT environments: A DeepCLG hybrid learning model for cyberattack detection. Int. J. Mach. Learn. Cybern. 2025, 16, 1–19.

- Wang, J.; Yang, K.; Li, M. NIDS-FGPA: A federated learning network intrusion detection algorithm based on secure aggregation of gradient similarity models. PLoS ONE 2024, 19, e0308639.

| Authors | FL Technique Used (Yes/No) | Framework | Limitation | Performance Evaluation |
|---|---|---|---|---|
| Nguyen et al. [19] | No | Employed a CNN architecture for classification. | Focused solely on detecting DoS attacks. | Achieved a 99.8% detection rate. |
| Bertoli et al. [20] | Yes | Constructed a stacked, unsupervised FL architecture that integrates an autoencoder with an energy flow classifier, enabling enhanced feature extraction and classification while maintaining privacy in a distributed learning environment. | Introduced a new privacy concern. | 90% success rate on CICIDS2018. |
| Toldinas et al. [21] | No | A preprocessing technique that combines a predetermined number of network flow feature records; three independent ML methodologies (federated transfer learning, traditional transfer learning, and federated learning) were applied to NIDS using deep learning for image classification. | Focused on detecting DDoS attacks. | 99.7% success rate. |
| Markovic et al. [22] | Yes | Implemented an FL model based on a shared random forest (RF), enabling multiple clients to learn collaboratively while safeguarding data privacy. | Does not perform well when applied to an entire data set. | 91.7% accuracy on CICIDS2017. |
| Lazzarini et al. [23] | Yes | Developed an IDS incorporating FL, with a shallow ANN as the local model and FedAvg as the aggregation method. | Computationally expensive, as MLP, DNN, CNN, and LSTM were integrated in the proposed framework. | 98.7% success rate on CICIDS2017. |

| Ref | Year | Federated Learning Applied | Method | Data Set | Performance Metrics (Accuracy) |
|---|---|---|---|---|---|
| A. Adamova et al. | 2025 | Yes | Implemented federated learning for detecting SQL injection and brute-force attacks. | CICIoT2023 | 100% accuracy in predicting SQL injection attacks and 98.25% accuracy for brute-force attacks [44]. |
| R. Saadouni et al. | 2025 | No | Incorporated VGG16 along with Binary Greylag Goose Optimization (BGGO) and a Random Forest classifier [45]. | CICIoT2023 | 99.41% accuracy for multiclass classification and 99.83% for binary classification. |
| H. Chen et al. | 2025 | No | Proposed transforming synaptic structures from 1D to 3D; the class-imbalance problem is mitigated by a novel loss calculation strategy. | CICIDS2017, CICIoT2023 | Achieved 88.48% on CICIDS2017 and 97.69% on CICIoT2023 [46]. |
| J. J. Shirley et al. | 2025 | No | Deployed an autoencoder combined with a feedforward neural network (AE-FNN) [47]. | CICIoT2023 | 99.55% accuracy in binary classification and 90.91% in multiclass classification. |
| R. Ji et al. | 2025 | No | Introduced a hybrid IDS for cyber-physical systems (CPSs) integrating AdaBoost and RF techniques [48]. | CICIoT2023 | Accuracy of 98.27%, with recall, precision, and F1-score all at 0.98, a false detection rate of 0.0006, and a testing time of 0.1563 s. |
| S. Sakraoui et al. | 2024 | Yes | Proposed a secure gradient-exchange algorithm using FL and blockchain, incorporating a 1D CNN and multi-head attention. | CICIoT2023 | Accuracy of 79.92%, detection rate of 77.41%, and false detection rate of 2.55% [49]. |
| Q. Gulzar et al. | 2025 | Yes | Introduced a hybrid learning infrastructure integrating CNN, LSTM, GRU, and a capsule network [50]. | CICIoT2023 and UNSW_NB15 | Accuracy of 99.82% on CICIoT2023 and 95.55% on UNSW_NB15. |
| D. Torre et al. | 2025 | Yes | Presented an FL-based IDS using a 1D-CNN incorporating differential privacy, Diffie–Hellman key exchange, and homomorphic encryption [24]. | TONIoT, IoT23, BoTIoT, CICIoT2023, CICIoMT2024, RTIoT2022, and Edge-IIoT | Achieved an estimated accuracy of 97.31% across the various data sets. |
| A. Almadhor et al. | 2024 | Yes | Proposed federated deep neural networks (FDNNs) combined with explainable AI (XAI) [25]. | DDoS-ICMP-Flood, DDoS-UDP-Flood, DDoS-TCP-Flood, DDoS-PSHACK-Flood, DDoS-SYN-Flood, DDoS-RSTFINFlood, DDoS-SynonymousIP-Flood, DoS-UDP-Flood, DoS-TCP-Flood, and DoS-SYN-Flood | Achieved 99.78% accuracy. |
| J. Wang et al. | 2024 | Yes | Proposed NIDS-FGPA, which uses Paillier encryption for secure training and GSA to optimize updates and reduce overhead. | Edge-IIoTset and CICIoT2023 | Accuracies of 94.5% and 99.2% on Edge-IIoTset and CICIoT2023, respectively [51]. |
| FedXAIIDS | 2025 | Yes | Federated XAI IDS (FedXAIIDS) uses federated learning (FL) and SHAP for a privacy-preserving, explainable IDS. An ANN is distributed across four federated clients and aggregated with FedAvg on CICIoT2023. | CICIoT2023 | SHAP enhances interpretability; the model achieved 88.4% training and 88.2% testing accuracy, balancing security, privacy, and trustworthiness. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fatema, K.; Dey, S.K.; Anannya, M.; Khan, R.T.; Rashid, M.M.; Su, C.; Mazumder, R. Federated XAI IDS: An Explainable and Safeguarding Privacy Approach to Detect Intrusion Combining Federated Learning and SHAP. Future Internet 2025, 17, 234. https://doi.org/10.3390/fi17060234
