C6EnPLS: A High-Performance Computing Job Dataset for the Analysis of Linear Solvers’ Power Consumption

Abstract

1. Introduction

- We present a novel execution framework that automates job launches under specific resource and scheduling constraints.

- We describe an extensive dataset of job runs covering several configuration dimensions, created through the aforementioned framework and made available to the scientific community. Each job run is reported together with the readings collected from a variety of sensors.

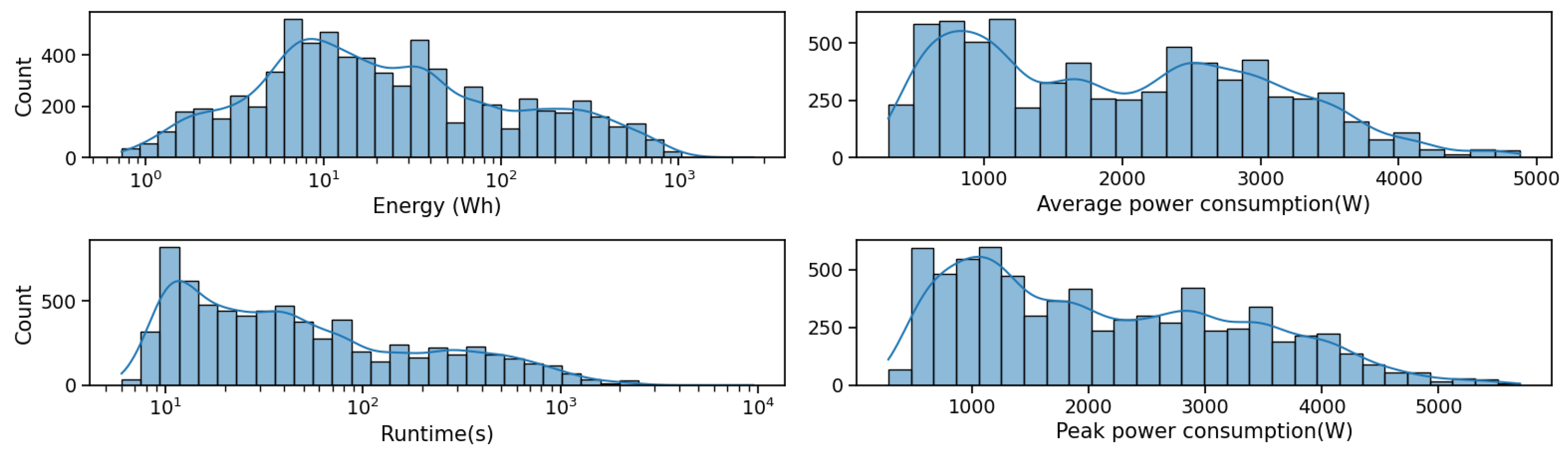

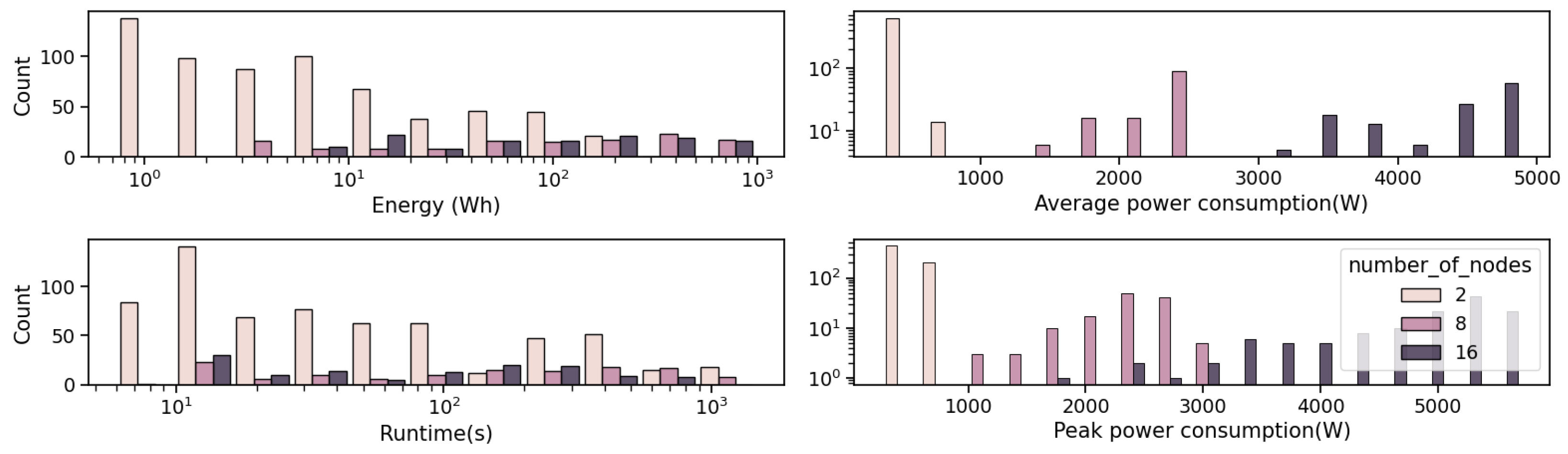

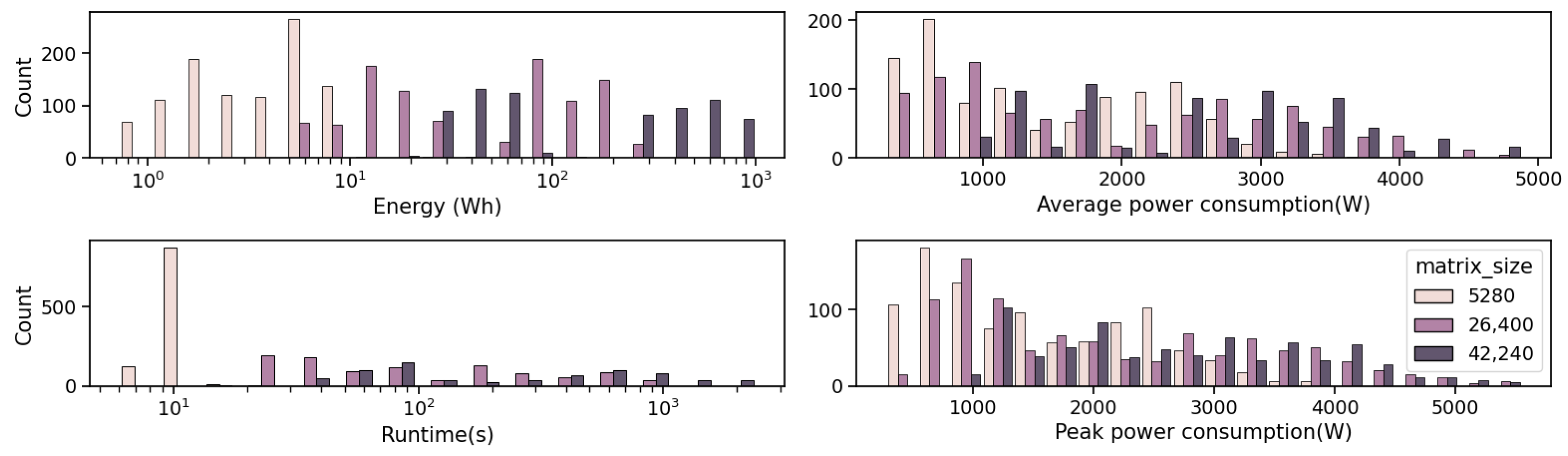

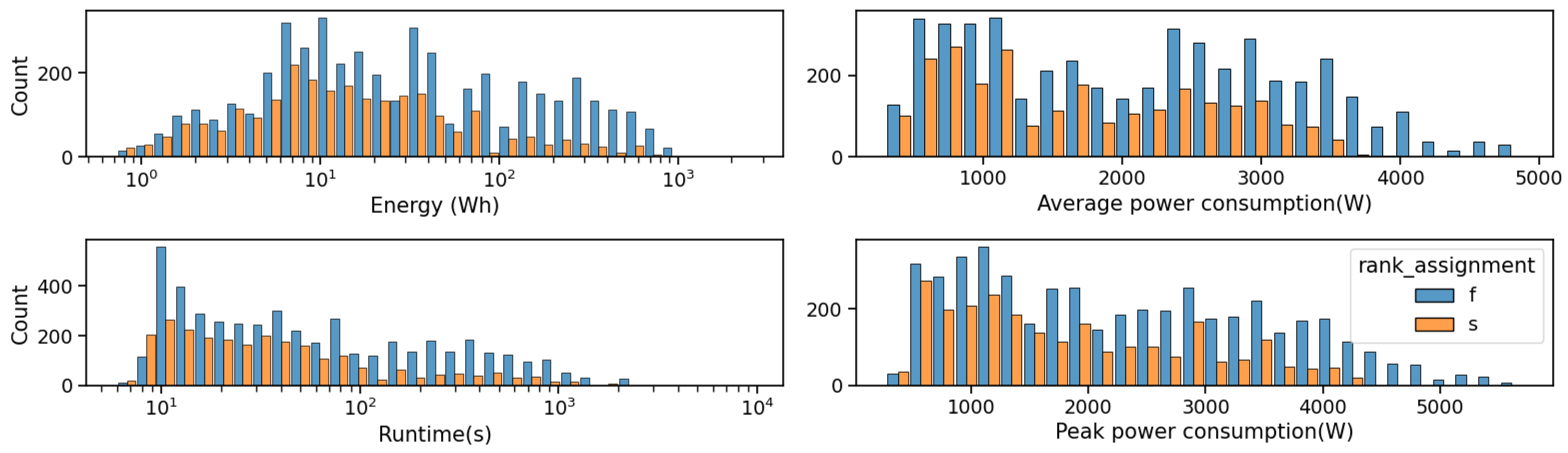

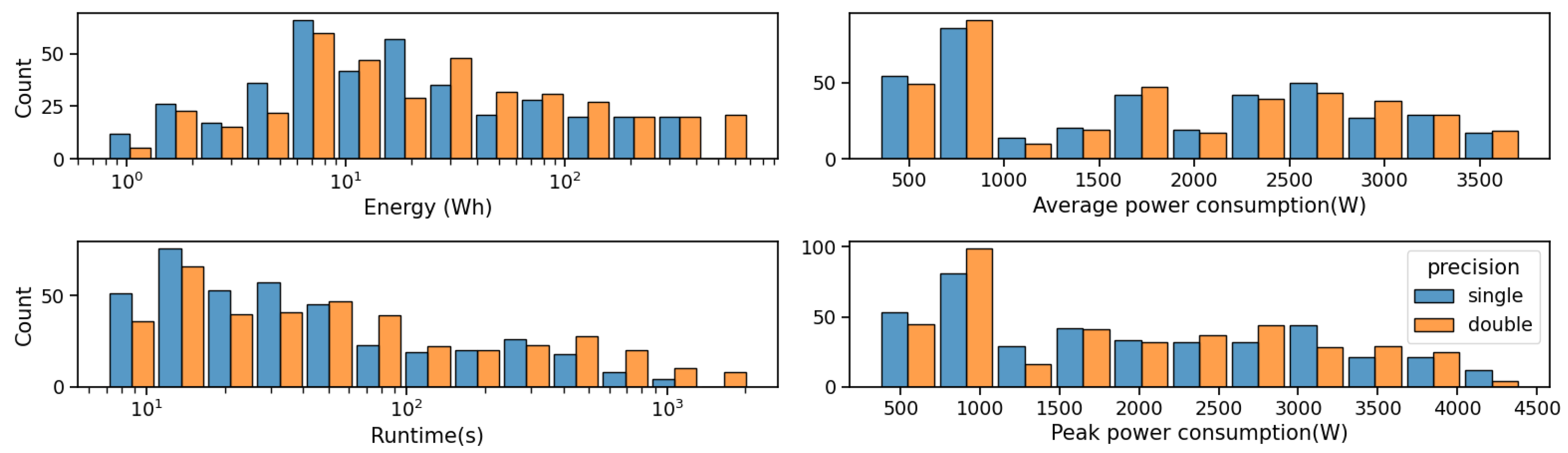

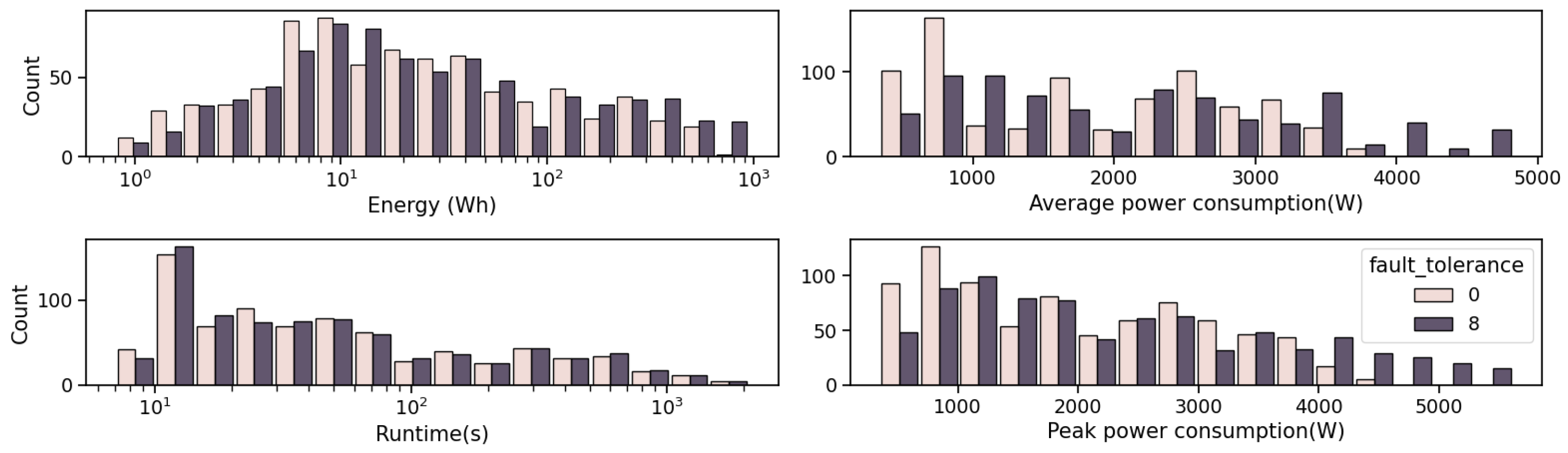

- We provide a first glance at the proposed data by analysing the distribution of energy-related targets with respect to other meaningful dataset dimensions.

- We present a preliminary experimental evaluation of the predictive capabilities of standard ML models trained on the proposed dataset. In this regard, we underline that proposing innovative ML models to analyse the data is outside the scope of this work. The evaluation is therefore intended as a first test of how effective the dataset is when used to train well-established ML methods.

2. Related Work

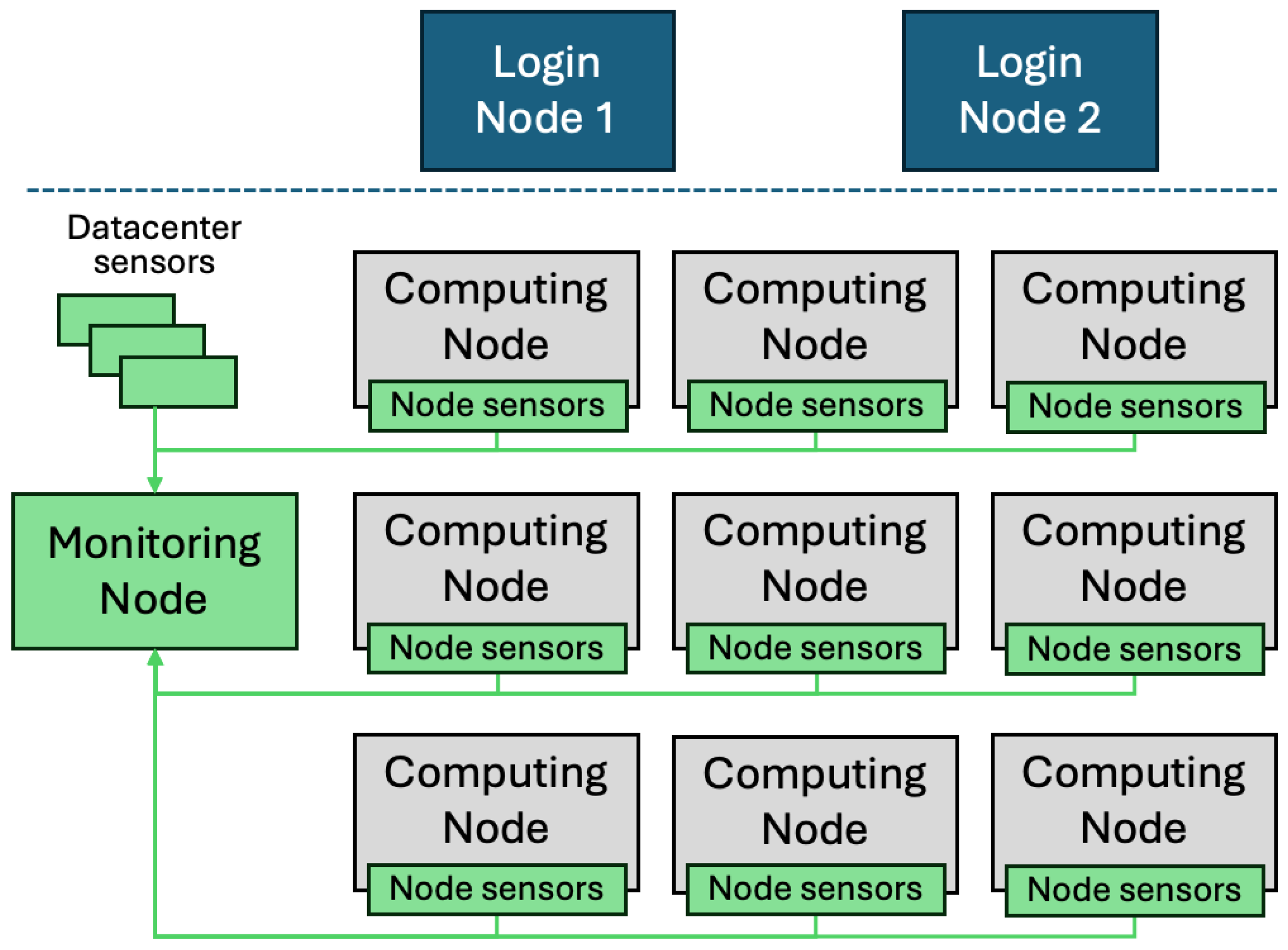

3. Execution Framework

- (R1) No other user may access or use the nodes employed by a job;

- (R2) The LSF job must use the minimum possible number of nodes;

- (R3) Jobs must be executed in sequence.
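
As an illustration of how requirements R1 to R3 might map onto an LSF submission, the following is a minimal sketch and not the framework described in this paper. It only builds a `bsub` command line without running it. The helper names, the default of 48 cores per node, and the `./solver` executable are assumptions made for the example; `-x` (exclusive execution), `-R "span[ptile=...]"` (processes per host) and `-w "ended(...)"` (job dependency) are standard `bsub` options, but whether exclusive mode is honoured depends on the queue configuration.

```python
import math


def min_nodes(total_processes: int, cores_per_node: int) -> int:
    """Smallest number of nodes able to host all processes (R2)."""
    return math.ceil(total_processes / cores_per_node)


def build_bsub_command(job_name, total_processes, executable,
                       cores_per_node=48, previous_job=None):
    """Build (but do not run) a bsub command line for one job.

    cores_per_node=48 is an assumption for this sketch, not a value
    taken from the framework itself.
    """
    # Packing as many processes as possible on each host yields the
    # minimum node count computed by min_nodes (R2).
    ptile = min(cores_per_node, total_processes)
    cmd = [
        "bsub",
        "-J", job_name,
        "-x",                           # exclusive use of the hosts (R1)
        "-n", str(total_processes),
        "-R", f"span[ptile={ptile}]",
    ]
    if previous_job is not None:
        # Start only after the previous job has ended (R3).
        cmd += ["-w", f"ended({previous_job})"]
    return cmd + list(executable)


# Hypothetical usage: chain two jobs so that they run one after the other.
first = build_bsub_command("job_1", 64, ["./solver"])
second = build_bsub_command("job_2", 100, ["./solver"], previous_job="job_1")
print(" ".join(second))
```

Using `ended(...)` rather than `done(...)` lets the sequence continue even when a job fails, which is one possible design choice for a long measurement campaign.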

4. Dataset Structure

- The LSF resource usage summary, which includes: CPU time, maximum and average memory utilisation, maximum number of processes, maximum number of threads, runtime, turnaround time, etc.

- The output of the tested algorithm, which includes: norm-wise relative error of the solution, powercap energy counters, runtime of the algorithm’s subparts (i.e., initialisation and execution), etc.

5. Statistical Analysis of the Dataset

6. Regressor Evaluation and Prediction Error Analysis

7. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Dimension | Description | Values |
|---|---|---|
| Job name | Job identification name | - |
| Matrix size | Rank of the input matrix | 5280, 10,560, 15,840, 21,120, 26,400, 31,680, 36,960, 42,240 |
| Calculation processes | Number of processes dedicated exclusively to the calculation of the system’s solution | 64, 100, 144, 256, 400, 484, 576, 768 |
| Nodes | Number of employed physical nodes | [1, ..., 16] |
| Algorithm | Considered linear solvers | IMe, ScaLAPACK |
| Precision | Numerical representation of real numbers | Single, double |
| Fault tolerance level | Number of faulty processes that can be handled | 0 (no fault tolerance), 1, 2, 4, 8 |
| Number of simulated faults | Number of faults to be simulated (and recovered) | 0, maximum fault tolerance level |
| Rank assignment | Way to assign ranks to computing processors | Span, fill |
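
To make the size of the configuration space concrete, the sketch below enumerates the full Cartesian grid implied by the table above. It is an illustrative assumption that every combination is admissible: the published dataset may contain only a subset, and the number of nodes is not enumerated because it follows from the process count and the rank assignment. The function and key names are hypothetical.

```python
from itertools import product

MATRIX_SIZES = [5280 * k for k in range(1, 9)]   # 5280, 10560, ..., 42240
CALC_PROCESSES = [64, 100, 144, 256, 400, 484, 576, 768]
ALGORITHMS = ["IMe", "ScaLAPACK"]
PRECISIONS = ["single", "double"]
FAULT_TOLERANCE_LEVELS = [0, 1, 2, 4, 8]
RANK_ASSIGNMENTS = ["span", "fill"]


def fault_options(ft_level):
    """Simulated faults are either 0 or the maximum the level can handle."""
    return [0] if ft_level == 0 else [0, ft_level]


def enumerate_configurations():
    """Yield one dict per point of the full (hypothetical) grid."""
    for size, procs, algo, prec, ft, rank in product(
            MATRIX_SIZES, CALC_PROCESSES, ALGORITHMS, PRECISIONS,
            FAULT_TOLERANCE_LEVELS, RANK_ASSIGNMENTS):
        for faults in fault_options(ft):
            yield {
                "matrix_size": size,
                "calc_processes": procs,
                "algorithm": algo,
                "precision": prec,
                "fault_tolerance": ft,
                "simulated_faults": faults,
                "rank_assignment": rank,
            }


configurations = list(enumerate_configurations())
print(len(configurations))
```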

| Field | Description |
|---|---|
| jobid | ID of the LSF job |
| nodename | Name of the physical node (generally a number) |
| timestamp_measure | Timestamp of the measurement, in Unix time |
| sys_power | Total instantaneous power measurement of the computing node, in watts |
| node_energy | Cumulative energy consumed by the node up to the time of reading, in kWh. Useful for computing the difference between two readings |
| delta_e | Difference between the previous and the current energy measurement for that node, in kWh |
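
The sensor fields above can be aggregated into per-job figures. The following is a minimal sketch under stated assumptions, not the procedure used to build the dataset: it assumes that readings are available as dictionaries keyed by the field names in the table, that every `delta_e` value of a job belongs to that job, and that the job-level average power can be approximated by summing the mean `sys_power` of each node. The function name `summarise_job` is hypothetical.

```python
from collections import defaultdict


def summarise_job(readings):
    """Aggregate the sensor readings of a single job.

    readings: iterable of dicts with the keys 'nodename',
    'sys_power' (W) and 'delta_e' (kWh).
    """
    energy_kwh = 0.0
    power_by_node = defaultdict(list)
    for reading in readings:
        energy_kwh += reading["delta_e"]
        power_by_node[reading["nodename"]].append(reading["sys_power"])
    # Mean power of each node, summed over the nodes used by the job.
    average_power_w = sum(
        sum(samples) / len(samples) for samples in power_by_node.values()
    )
    return {
        "nodes": len(power_by_node),
        "total_energy_wh": energy_kwh * 1000.0,   # kWh -> Wh
        "average_power_w": average_power_w,
    }


# Hypothetical readings: two nodes, two samples each.
sample = [
    {"nodename": "1", "sys_power": 300.0, "delta_e": 0.001},
    {"nodename": "1", "sys_power": 320.0, "delta_e": 0.001},
    {"nodename": "2", "sys_power": 280.0, "delta_e": 0.001},
    {"nodename": "2", "sys_power": 300.0, "delta_e": 0.001},
]
summary = summarise_job(sample)
print(summary)
```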

| Field | Mean | Std | Min | 25% | 50% | 75% | Max |
|---|---|---|---|---|---|---|---|
| Matrix size | 22,176 | 11,760.75 | 5280 | 10,560 | 21,120 | 31,680 | 42,240 |
| Calculation processes | 314 | 181.084 | 64 | 144 | 256 | 484 | 576 |
| Spare processes | 29.73 | 45.73 | 0 | 2 | 8 | 40 | 192 |
| Total processes | 343.73 | 195.41 | 64 | 148 | 320 | 492 | 768 |
| Nodes | 7.67 | 4.02 | 2 | 4 | 7 | 11 | 16 |
| Fault tolerance level | 3.33 | 2.7 | 0 | 1 | 2 | 4 | 8 |
| Simulated faults | 1.67 | 2.58 | 0 | 0 | 0 | 2 | 8 |
| Processes per socket | 10.99 | 11.11 | 0 | 0 | 8 | 23 | 24 |
| ScaLAPACK checkpoint | 4928 | 6762.64 | 0 | 0 | 0 | 10,560 | 21,120 |
| ScaLAPACK blocking factor | 10.38 | 11.63 | 0 | 0 | 0 | 24 | 25 |
| Total energy (Wh) | 77.73 | 144.39 | 0.73 | 6.88 | 17.69 | 69.4 | 2626.28 |
| Peak power (W) | 2131.32 | 1195.63 | 280 | 1120 | 1900 | 3030 | 5700 |
| Average power (W) | 1960.72 | 1064.42 | 304 | 990.10 | 1835.87 | 2813.40 | 4879.89 |
| Runtime (s) | 142.07 | 282.77 | 6 | 15 | 36 | 129.75 | 9481 |

| Target (value range) | Regressor | Best depth | RMSE | MAE | MAPE |
|---|---|---|---|---|---|
| Total energy (min 0.000470 kWh, max 0.938590 kWh) | Decision tree | 19 | 0.013 | 0.004 | 0.076 |
| | Random forest | 13 | 0.010 | 0.003 | 0.062 |
| | GBDT | 5 | 0.010 | 0.003 | 0.119 |
| Max power (min 150.000000 W, max 440.000000 W) | Decision tree | 10 | 12.676 | 7.966 | 0.024 |
| | Random forest | 11 | 10.241 | 6.947 | 0.020 |
| | GBDT | 6 | 10.339 | 7.089 | 0.021 |
| Mean power (min 115.000000 W, max 330.158420 W) | Decision tree | 9 | 7.297 | 5.188 | 0.024 |
| | Random forest | 11 | 6.530 | 4.632 | 0.022 |
| | GBDT | 5 | 5.942 | 4.319 | 0.020 |
| Runtime (min 6.000000 s, max 2097.000000 s) | Decision tree | 10 | 22.238 | 7.296 | 0.065 |
| | Random forest | 12 | 19.651 | 7.033 | 0.060 |
| | GBDT | 6 | 14.637 | 5.560 | 0.065 |
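
For readers who want to reproduce the error columns of the table above on their own predictions, the sketch below gives the usual definitions of RMSE, MAE and MAPE in plain Python. It assumes that MAPE is expressed as a fraction rather than a percentage, as the magnitudes in the table suggest; the sample values are invented for illustration and are not taken from the dataset.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error, in the unit of the target."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


def mae(y_true, y_pred):
    """Mean absolute error, in the unit of the target."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean absolute percentage error as a fraction (0.05 means 5%).

    Undefined when a true value is zero, so targets must be non-zero.
    """
    return sum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)) / len(y_true)


# Invented example: three power values in watts and their predictions.
y_true = [100.0, 200.0, 400.0]
y_pred = [110.0, 190.0, 400.0]
print(rmse(y_true, y_pred), mae(y_true, y_pred), mape(y_true, y_pred))
```

Because RMSE and MAE carry the unit of the target, only MAPE is directly comparable across the four targets in the table.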

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Artioli, M.; Borghesi, A.; Chinnici, M.; Ciampolini, A.; Colonna, M.; De Chiara, D.; Loreti, D. C6EnPLS: A High-Performance Computing Job Dataset for the Analysis of Linear Solvers’ Power Consumption. Future Internet 2025, 17, 203. https://doi.org/10.3390/fi17050203
