A3C-R: A QoS-Oriented Energy-Saving Routing Algorithm for Software-Defined Networks

Abstract

1. Introduction

- We examined the value and significance of SDN-oriented QoS and energy-saving routing schemes, reviewed the state of existing routing algorithms, and proposed A3C-R, an intelligent routing algorithm that accounts for both QoS and energy saving.

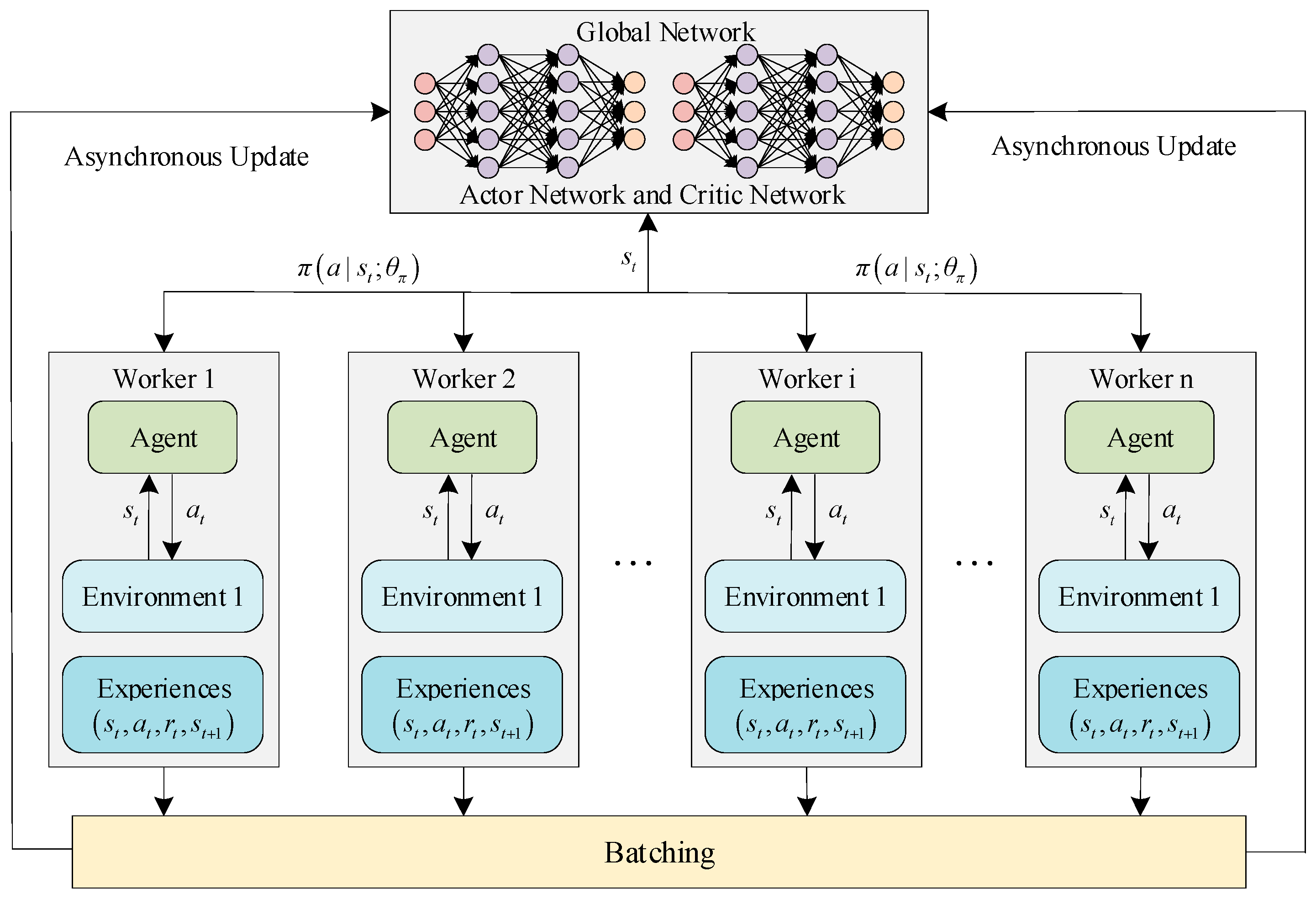

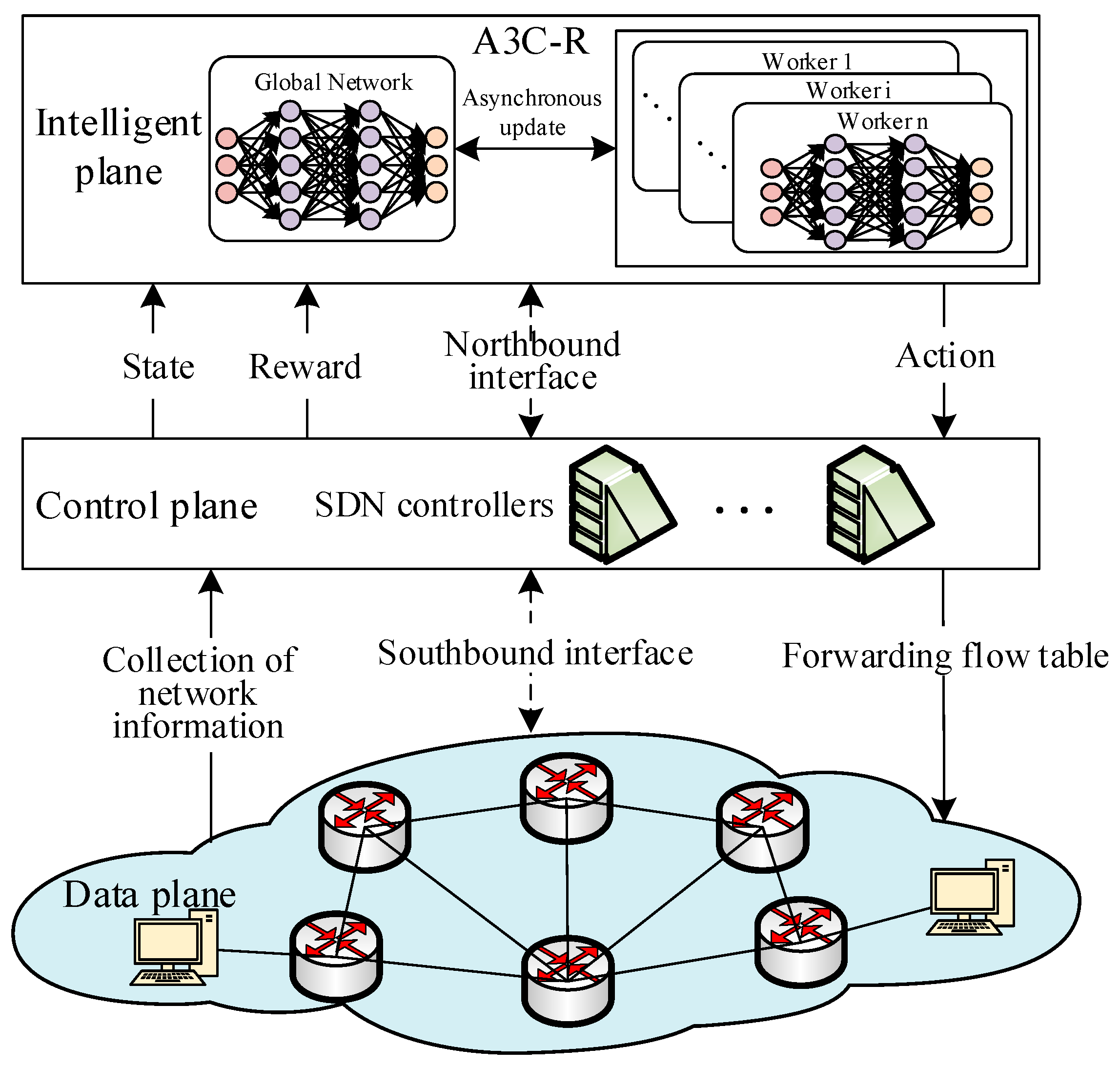

- We designed a routing optimization objective that accounts for both QoS and energy saving, and built the A3C-R training framework on A3C’s asynchronous training and advantage function. We also designed the state, action, and reward for the interaction between the intelligent routing agent and the network environment, and trained multiple agents with global parameter sharing to improve the algorithm’s efficiency and convergence.

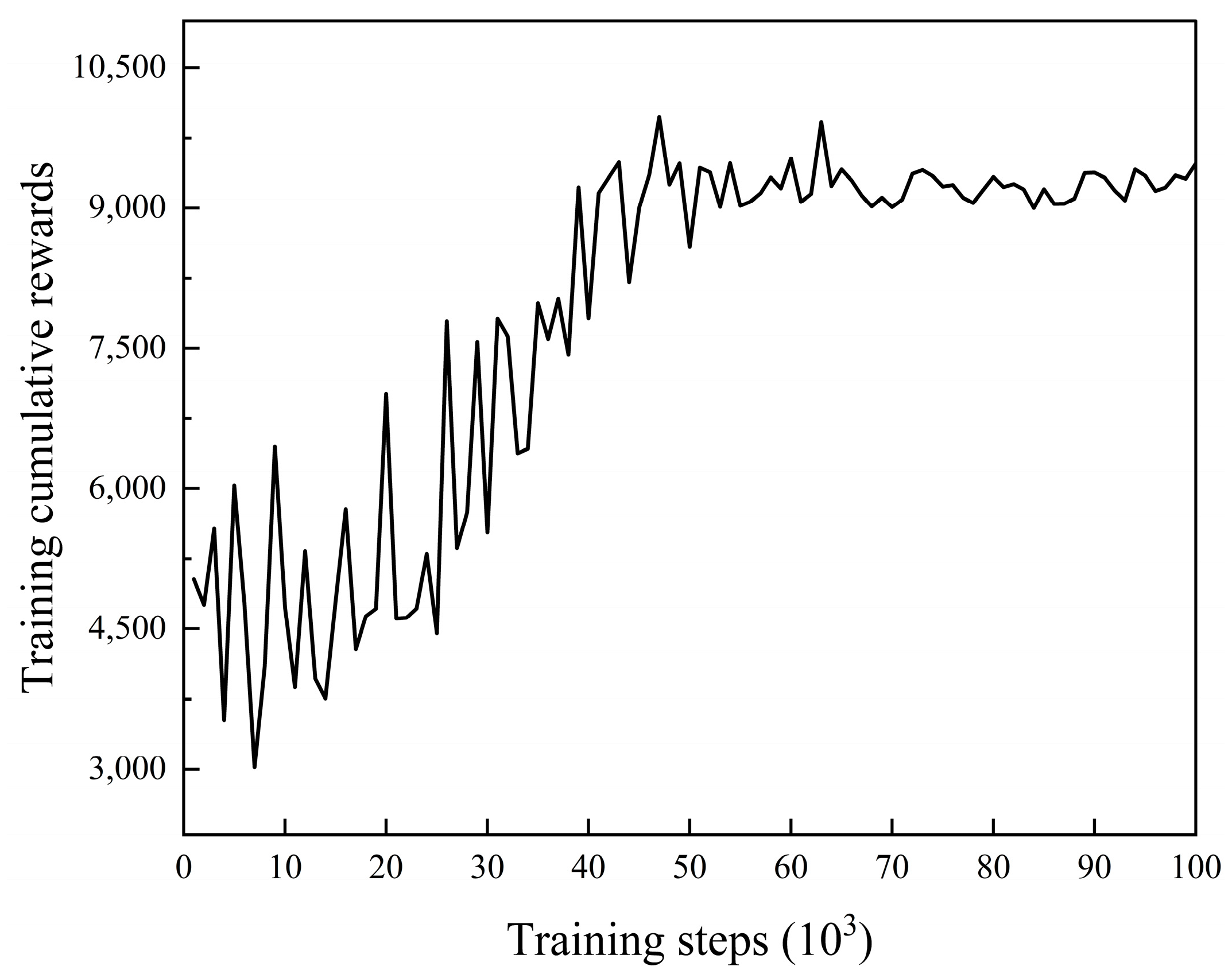

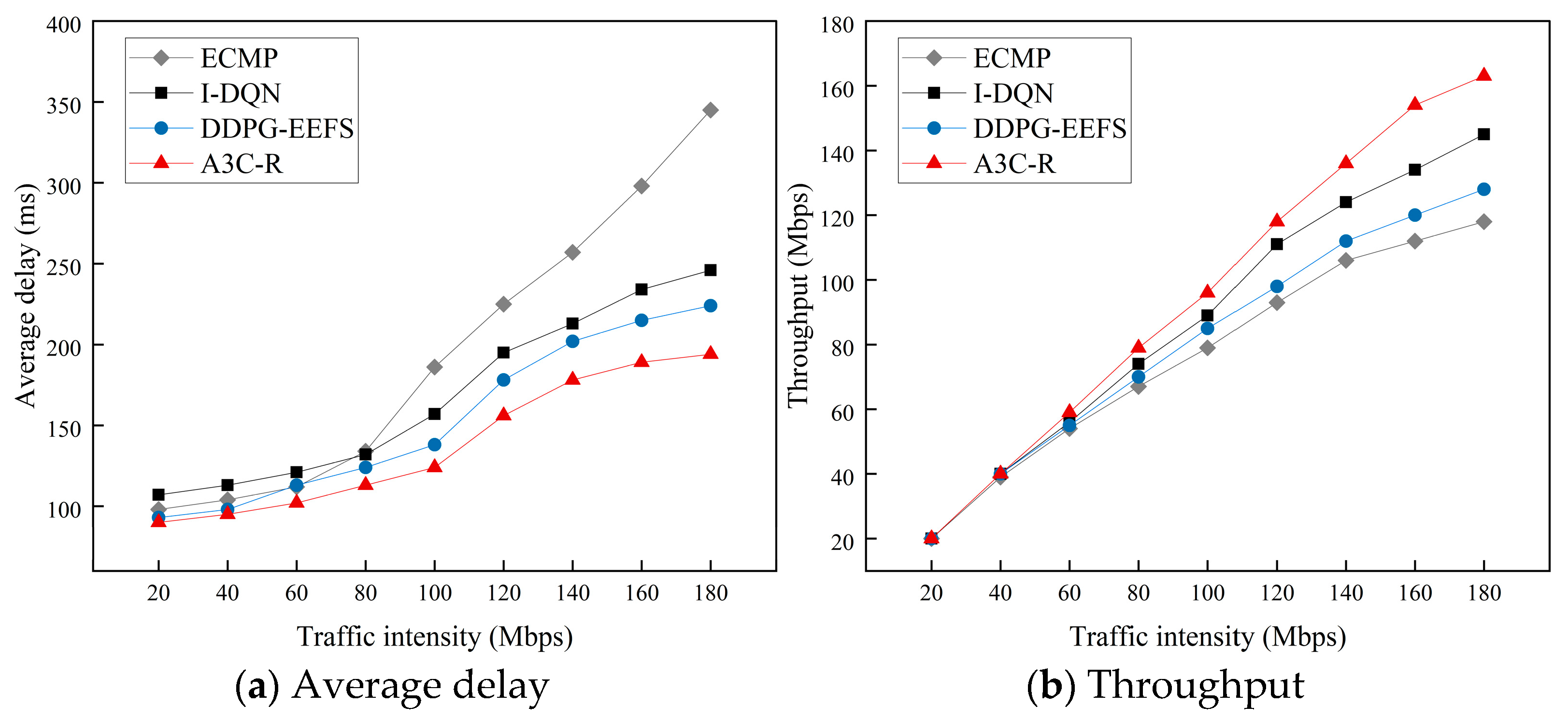

- We built a simulation environment and verified the convergence of the A3C-R routing algorithm, as well as its QoS and energy-saving optimization of flows under different network traffic loads.

2. Related Research

- Research status of QoS routing technology

- Research status of energy-saving routing technology

3. Modeling of QoS and Energy-Efficient Routing

3.1. Modeling of QoS Routing

- Delay

- Bandwidth

- Packet loss rate
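The three metrics above are commonly aggregated from per-link values to a path-level value. The following is a minimal sketch under the usual assumptions, not the model from this paper: delay is additive over links, bandwidth is limited by the bottleneck link, and link losses are independent. The function names and the sample values are hypothetical.

```python
from math import prod


def path_delay(link_delays):
    """End-to-end delay: per-link delays add up along the path."""
    return sum(link_delays)


def path_bandwidth(link_bandwidths):
    """Available path bandwidth: limited by the bottleneck (smallest) link."""
    return min(link_bandwidths)


def path_loss_rate(link_loss_rates):
    """End-to-end loss rate, assuming independent losses on each link.

    A packet is delivered only if every link delivers it.
    """
    return 1.0 - prod(1.0 - p for p in link_loss_rates)


# Hypothetical three-hop path (illustrative values only).
delays_ms = [2.0, 5.0, 3.0]
bandwidths_mbps = [100.0, 40.0, 80.0]
loss_rates = [0.01, 0.02, 0.0]

total_delay = path_delay(delays_ms)
bottleneck = path_bandwidth(bandwidths_mbps)
loss = path_loss_rate(loss_rates)
```

Because the three metrics compose differently (sum, minimum, product), a routing objective that mixes them typically normalizes each one before weighting.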

3.2. Modeling of Energy-Efficient Routing

4. QoS and Energy-Saving Routing Based on A3C

4.1. A3C-R Routing Framework
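The framework relies on A3C’s asynchronous training, in which several worker threads share one set of global parameters. The sketch below illustrates only that pattern on a toy problem: each worker copies the global parameter, computes a gradient locally, and pushes the update back. The class `GlobalParams`, the quadratic loss, and all hyperparameters are assumptions made for illustration; a lock is used here for simplicity, whereas A3C implementations often update without locking.

```python
import threading


class GlobalParams:
    """Shared parameter guarded by a lock (stand-in for the global network)."""

    def __init__(self, theta):
        self.theta = theta
        self.lock = threading.Lock()

    def snapshot(self):
        with self.lock:
            return self.theta

    def apply_gradient(self, grad, lr):
        with self.lock:
            self.theta -= lr * grad


def worker(shared, target, steps, lr):
    for _ in range(steps):
        local_theta = shared.snapshot()      # sync local copy from global
        grad = 2.0 * (local_theta - target)  # gradient of (theta - target)^2
        shared.apply_gradient(grad, lr)      # push the update asynchronously


def train(num_workers=4, steps=200, lr=0.05, target=3.0):
    shared = GlobalParams(theta=0.0)
    threads = [
        threading.Thread(target=worker, args=(shared, target, steps, lr))
        for _ in range(num_workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared.theta


final_theta = train()
```

Workers may apply gradients computed from a slightly stale copy of the parameter; with a small learning rate the shared value still settles near the toy target.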

4.2. Design of A3C-R Routing and Environment Interaction

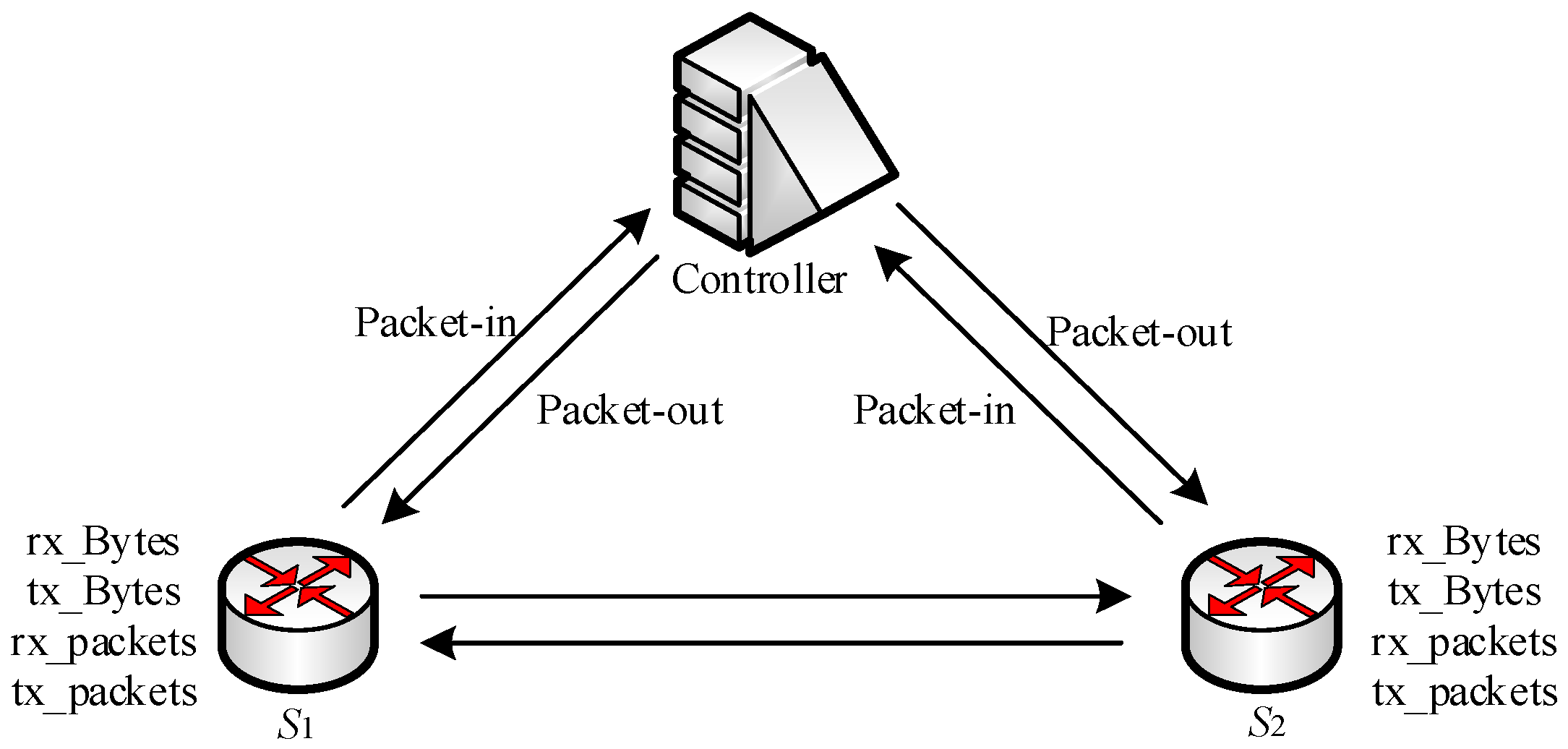

- State

- Action

- Reward
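Algorithm 1 lists the set of network link weights as its output. Assuming the action is such a weight assignment and that a flow is then routed along the least-weight path, the mapping from action to route can be sketched with Dijkstra's algorithm. The function `shortest_path`, the switch names, and the weights are hypothetical, and the use of shortest-path routing is an assumption rather than something stated in the text.

```python
import heapq


def shortest_path(link_weights, source, target):
    """Dijkstra over a dict {(u, v): weight} of directed links.

    Weights must be non-negative. Returns the node list of the
    least-weight path, or None if the target is unreachable.
    """
    graph = {}
    for (u, v), w in link_weights.items():
        graph.setdefault(u, []).append((v, w))

    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == target:
            break
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry, a shorter route was already found
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))

    if target not in dist:
        return None
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]


# Hypothetical link weights produced by the agent for a four-switch topology.
weights = {
    ("s1", "s2"): 1.0,
    ("s2", "s4"): 1.0,
    ("s1", "s3"): 0.5,
    ("s3", "s4"): 2.0,
}
route = shortest_path(weights, "s1", "s4")
```

Under this reading, lowering the weight of a link makes it more likely to carry traffic, so the agent steers flows indirectly through the weights rather than by choosing paths one by one.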

4.3. A3C-R Routing Algorithm Process

| Algorithm 1 A3C-R Routing Algorithm Process for QoS and Energy Saving |
| --- |
| Input: The set of network states |
| Output: The set of network link weights |
| Initialize: Critic parameter and Actor parameter of the global network. Critic parameter and Actor parameter of Worker thread network. |
| Initialize: Global shared counter , global maximum shared count , thread step size . |
| (1) Repeat: |
| (2) Reset , |
| (3) , |
| (4) Repeat |
| (5) The Worker thread executes |
| (6) Obtain |
| (7) , |
| (8) Until is the terminal state, or |
| (9) |
| (10) For do |
| (11) Calculate at each moment |
| (12) |
| (13) |
| (14) End For |
| (15) Global network asynchronous update , |
| (16) Until |
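Steps (10) to (14) of Algorithm 1 loop backward over the steps a Worker has collected and compute a quantity at each moment. Assuming the algorithm follows the standard A3C formulation, that quantity is the discounted n-step return, and the advantage is the return minus the Critic's value estimate. The sketch below shows only this computation; the names `n_step_returns`, `advantages`, `bootstrap_value`, and `gamma`, as well as the sample numbers, are assumptions.

```python
def n_step_returns(rewards, bootstrap_value, gamma):
    """Discounted returns for one rollout, computed backward in time.

    bootstrap_value is 0 if the rollout ended in a terminal state,
    otherwise the Critic's estimate for the last state reached.
    """
    returns = []
    R = bootstrap_value
    for r in reversed(rewards):
        R = r + gamma * R
        returns.append(R)
    returns.reverse()
    return returns


def advantages(returns, values):
    """Advantage at each step: n-step return minus the Critic's estimate."""
    return [R - v for R, v in zip(returns, values)]


# Hypothetical three-step rollout that ends in a terminal state.
rewards = [1.0, 0.0, 2.0]
values = [1.5, 1.0, 1.0]  # Critic estimates for the visited states

returns = n_step_returns(rewards, bootstrap_value=0.0, gamma=0.5)
adv = advantages(returns, values)
```

In the standard formulation the Actor gradient is weighted by this advantage and the Critic is trained to reduce its squared value, which is what the two accumulation steps inside the loop would correspond to.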

5. Experimental Evaluation

5.1. Experimental Environment and Comparison Algorithms

5.2. Convergence of Routing Algorithms

5.3. Performance Analysis of Routing Algorithms

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, S.; Song, R.; Zheng, X.; Huang, W.; Liu, H. A3C-R: A QoS-Oriented Energy-Saving Routing Algorithm for Software-Defined Networks. Future Internet 2025, 17, 158. https://doi.org/10.3390/fi17040158
