The Role of AI-Based Chatbots in Public Health Emergencies: A Narrative Review

Abstract

1. Introduction

2. Chatbots and Their Psychological Relevance for Public Health Communication

2.1. The ELIZA Effect and Its Implications in Public Health Communication

2.2. PARRY, Personification in Chatbots and Its Relevance in Public Health

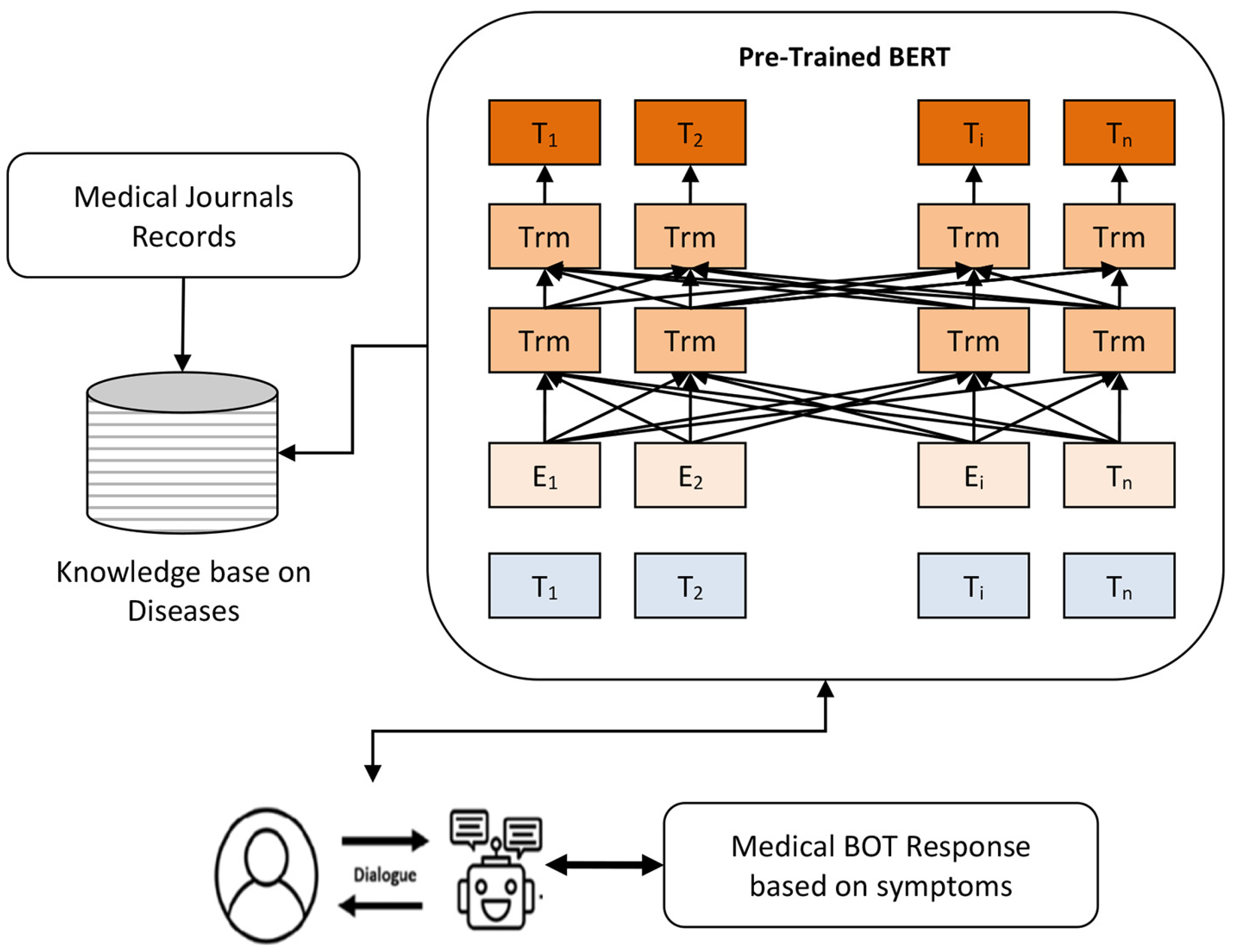

2.3. From Early Chatbots to Generative Pre-Trained Transformers

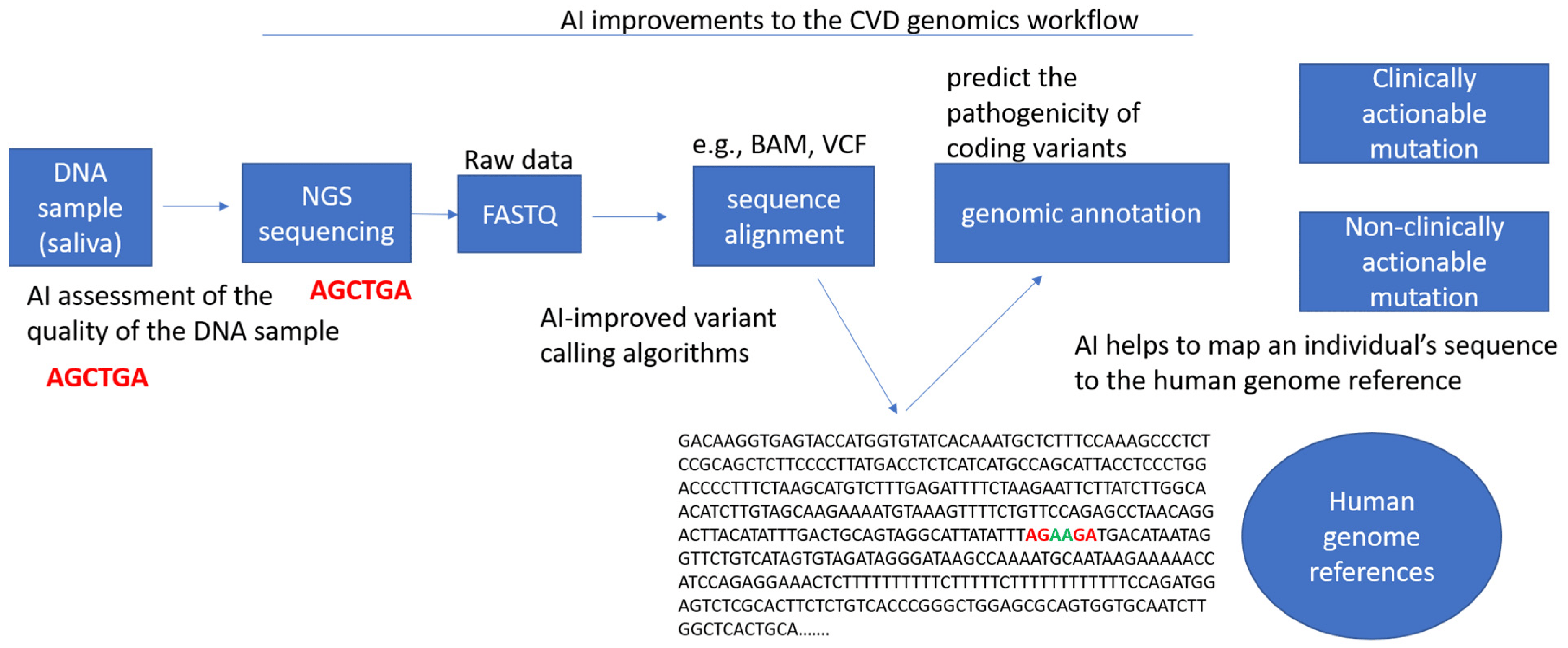

3. Artificial Intelligence in Genetics and Genomics

3.1. AI in Genetics and Computational Genomics

3.2. AI-Based Chatbots in Genomics

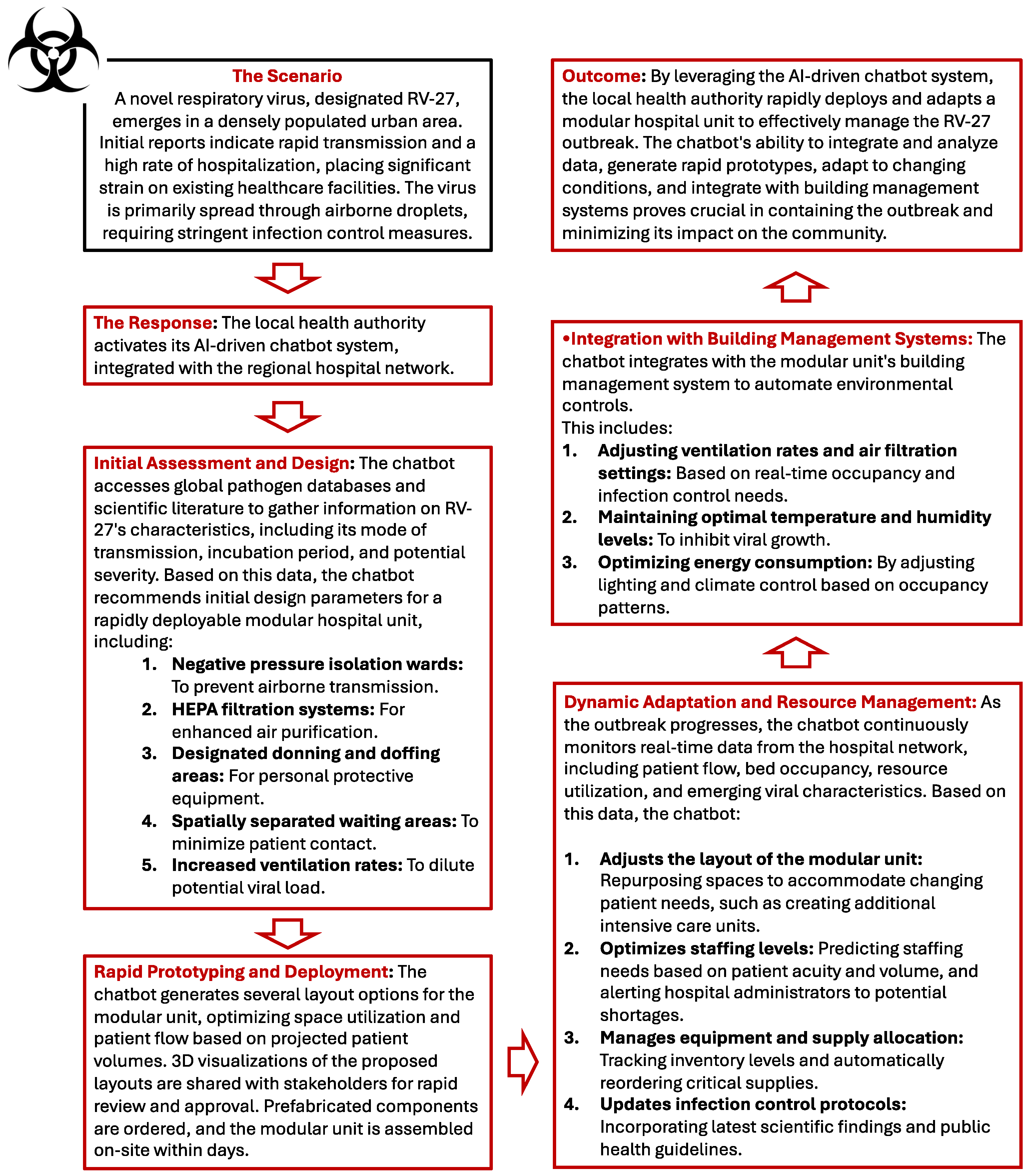

4. Leveraging AI-Driven Chatbots for Dynamically Adaptable Healthcare Spaces During Outbreaks

4.1. A Pragmatic Architectural Approach to the COVID-19 Response: The Lessons of the Pandemic

4.2. Post-Pandemic Architecture: Utilizing Digital Tools to Mitigate Airborne Transmission Risk

4.3. Building the Future of Outbreak Response: AI Chatbots and Adaptive Hospital Design

4.4. Example Scenario

5. Chatbots as Statistical Consultants in Infectious Disease Settings: Potential and Reliability

5.1. Potential of Chatbots as Statistical Advisors

5.2. Evaluating the Reliability of Chatbots in Recommending Statistical Techniques

1. Accuracy of recommendations: AI chatbots are not immune to mistakes and can offer incorrect statistical advice. Although ChatGPT may suggest well-known statistical methods, it does not always recommend the technique best suited to a specific dataset. For example, it might suggest a linear regression when, given the distribution of the data, a nonlinear model would be more appropriate. Studies have shown that artificial intelligence models, despite their sophistication, are often unable to fully understand the domain-specific requirements of medical or epidemiological datasets [85]. This limitation could lead to erroneous recommendations, especially when users are unfamiliar with the subtleties of statistical techniques.

2. Assumptions and limitations: Every statistical technique rests on assumptions, such as normality of the data distribution, independence of observations, or homoscedasticity. Chatbots do not always provide sufficient warning or detail about these assumptions, which can lead to the misuse of statistical methods. For example, recommending ANOVA (analysis of variance) without clarifying the need for homogeneity of variances could lead to erroneous results [86]. Human statisticians can exercise the nuanced judgment needed to adapt methods when the data show anomalies, something that current chatbots lack.

3. Interpretability and user understanding: Even when ChatGPT correctly identifies an appropriate statistical method, explaining why that technique should be used remains a challenge. Although the model can describe statistical concepts in general terms, it does not always provide clear guidance on why certain assumptions matter or how to interpret the results in a meaningful way. This limitation could be particularly problematic for users without a strong background in statistics. A chatbot might recommend logistic regression for analyzing binary outcomes but fail to guide the user through the necessary diagnostic checks, such as testing for multicollinearity or overfitting [87]. The absence of in-depth explanations could hinder the user’s ability to apply the recommended techniques effectively.

4. Dependence on training data: ChatGPT and similar models are trained on very large datasets, but these are not necessarily up to date with the latest advances in statistical theory or infectious disease epidemiology. This lag in knowledge could cause the chatbot to suggest outdated or less efficient methods. In addition, these models generate answers from probabilities derived from their training data, following patterns and correlations in the text they have seen. They do not reason about the statistical principles involved as a human expert would, and in that sense lack a true understanding of the problem at hand [88].

5. Risk of misinformation: One of the significant risks of using AI chatbots for statistical guidance is the potential for misinformation. ChatGPT, while very adept at generating human-like text, can sometimes produce “hallucinations”, that is, plausible but factually incorrect information [89]. In a statistical context, this could lead the chatbot to recommend a test or procedure that is not applicable to the user’s dataset or, in extreme cases, does not exist in the statistical literature. In public health, where data-driven decisions can affect millions of people, the consequences of such errors could be severe.
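Two of the checks mentioned in the list above, homogeneity of variances before ANOVA and multicollinearity before a regression with correlated predictors, can be screened automatically before a chatbot's suggestion is accepted. The Python sketch below is illustrative only and is not taken from the reviewed studies. The function names, the sample data, and both thresholds (a variance ratio of 4 and a variance inflation factor of 5) are assumptions chosen as common rules of thumb, and the VIF formula shown holds only for a model with exactly two predictors.

```python
import statistics


def variance_ratio(groups):
    """Largest sample variance across groups divided by the smallest.

    Assumes every group has at least two values and nonzero variance.
    """
    variances = [statistics.variance(g) for g in groups]
    return max(variances) / min(variances)


def vif_two_predictors(x1, x2):
    """Variance inflation factor for a model with exactly two predictors.

    In that special case VIF = 1 / (1 - r^2), where r is the Pearson
    correlation between the two predictors. Constant predictors are not
    handled here.
    """
    m1, m2 = statistics.mean(x1), statistics.mean(x2)
    sxy = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    sxx = sum((a - m1) ** 2 for a in x1)
    syy = sum((b - m2) ** 2 for b in x2)
    r_squared = sxy ** 2 / (sxx * syy)
    if r_squared >= 1.0:
        return float("inf")  # perfectly collinear predictors
    return 1.0 / (1.0 - r_squared)


def screen_assumptions(groups, x1, x2, max_var_ratio=4.0, max_vif=5.0):
    """Return a list of warnings; both thresholds are illustrative assumptions."""
    warnings = []
    if variance_ratio(groups) > max_var_ratio:
        warnings.append("unequal group variances: classical ANOVA may be unreliable")
    if vif_two_predictors(x1, x2) > max_vif:
        warnings.append("strongly correlated predictors: check multicollinearity")
    return warnings


# Made-up data for illustration only.
groups = [[10, 11, 9, 10, 10], [10, 20, 0, 15, 5], [9, 10, 11, 10, 10]]
x1 = [1, 2, 3, 4, 5]
x2 = [2, 4, 6, 8, 11]
for message in screen_assumptions(groups, x1, x2):
    print(message)
```

A screen of this kind does not replace a formal test or an expert's judgment. It only flags cases in which a recommended method deserves a second look before it is applied.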

5.3. Enhancing the Reliability of Chatbots: A Hybrid Approach

6. Clinical Insights from an Infectious Disease Specialist

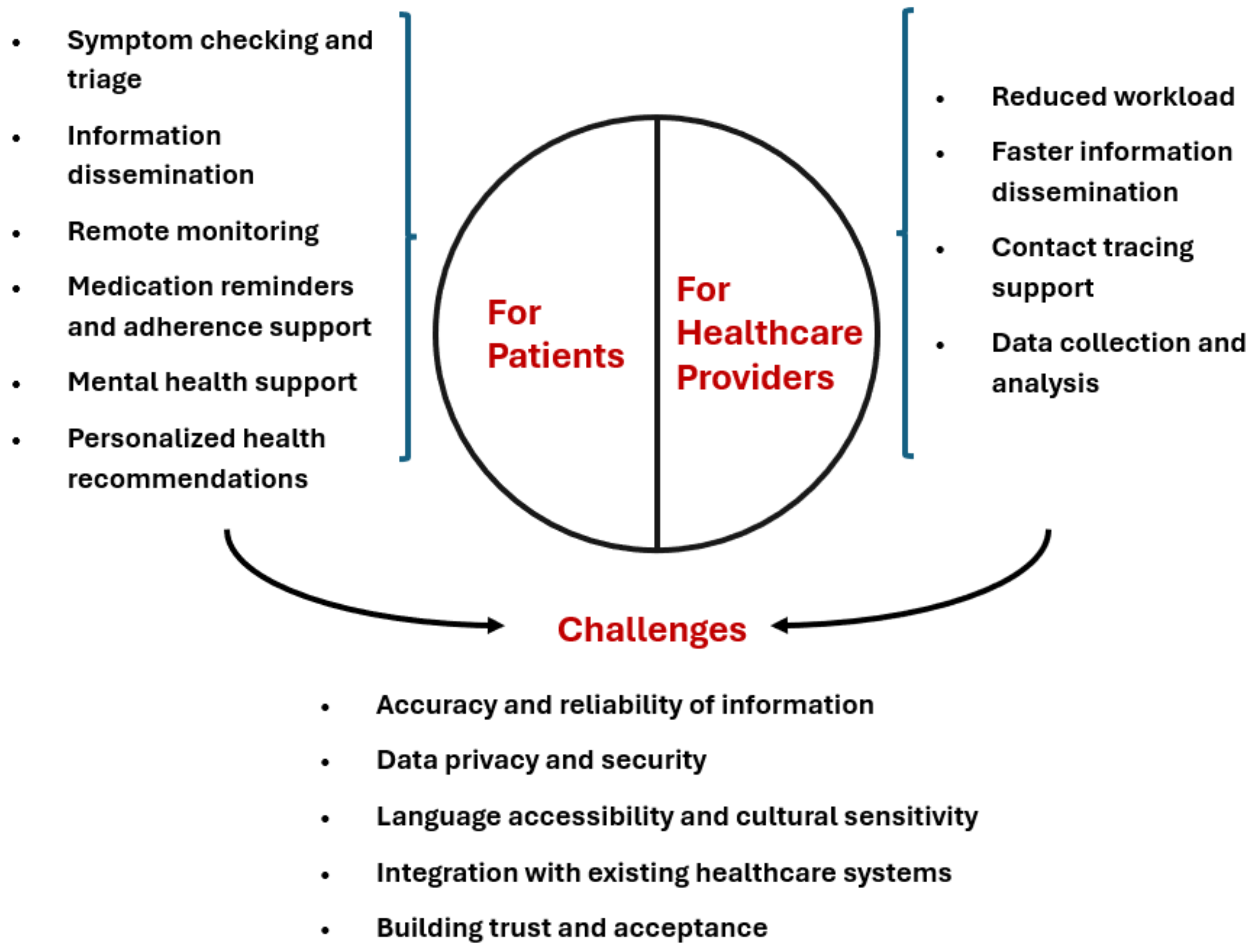

6.1. AI-Powered Chatbots for Clinical Management During Outbreaks

6.2. Enhancing Outbreak Response with Chatbots: Benefits and Challenges in Clinical Management of Patients

6.3. Future Directions and Research Needs

7. Challenges and Limitations of AI Chatbots in Infectious Disease Outbreaks

7.1. Unpredictability and Reliability Issues

7.2. Dissemination of Outdated Information

7.3. Amplification of Misinformation

7.4. Ethical and Accountability Concerns

7.5. Privacy and Data Security Risks

7.6. Ineffectiveness with Diverse Populations

7.7. Technical Limitations in Communication

7.8. Resource Allocation Concerns

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Managing Epidemics: Key Facts About Major Deadly Diseases, 2nd Edition. Available online: https://www.who.int/publications/i/item/9789240083196 (accessed on 28 August 2024).

- Fagherazzi, G.; Goetzinger, C.; Rashid, M.A.; Aguayo, G.A.; Huiart, L. Digital health strategies to fight COVID-19 worldwide: Challenges, recommendations, and a call for papers. J. Med. Internet Res. 2020, 22, e19284. [Google Scholar]

- Miner, A.S.; Laranjo, L.; Kocaballi, A.B. Chatbots in the fight against the COVID-19 pandemic. NPJ Digit. Med. 2020, 3, 65. [Google Scholar] [CrossRef] [PubMed]

- Watters, C.; Lemanski, M.K. Universal skepticism of ChatGPT: A review of early literature on chat generative pre-trained transformer. Front. Big Data 2023, 6, 1224976. [Google Scholar] [CrossRef]

- Gao, S.; He, L.; Chen, Y.; Li, D.; Lai, K. Public perception of artificial intelligence in medical care: Content analysis of social media. J. Med. Internet Res. 2020, 22, e16649. [Google Scholar]

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training; Technical report; OpenAI: San Francisco, CA, USA, 2018. [Google Scholar]

- Judson, T.J.; Odisho, A.Y.; Neinstein, A.B.; Chao, J.; Williams, A.; Miller, C.; Moriarty, T.; Gleason, N.; Intinarelli, G.; Gonzales, R. Rapid design and implementation of an integrated patient self-triage and self-scheduling tool for COVID-19. J. Am. Med. Inform. Assoc. 2020, 27, 860–866. [Google Scholar]

- Sezgin, E.; Huang, Y.; Ramtekkar, U.; Lin, S. Readiness for voice assistants to support healthcare delivery during a health crisis and pandemic. npj Digit. Med. 2020, 3, 122. [Google Scholar] [PubMed]

- Kocaballi, A.B.; Berkovsky, S.; Quiroz, J.C.; Laranjo, L.; Tong, H.L.; Rezazadegan, D.; Briatore, A.; Coiera, E. The personalization of conversational agents in health care: Systematic review. J. Med. Internet Res. 2019, 21, e15360. [Google Scholar] [PubMed]

- Brown, T.B. Language models are few-shot learners. arXiv 2020, arXiv:2005.14165. [Google Scholar]

- Nadarzynski, T.; Miles, O.; Cowie, A.; Ridge, D. Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: A mixed-methods study. Digit. Health 2019, 5, 2055207619871808. [Google Scholar]

- Jungmann, S.M.; Witthöft, M. Health anxiety, cyberchondria, and coping in the current COVID-19 pandemic: Which factors are related to coronavirus anxiety? J. Anxiety Disord. 2020, 73, 102239. [Google Scholar]

- Cuan-Baltazar, J.Y.; Muñoz-Perez, M.J.; Robledo-Vega, C.; Pérez-Zepeda, M.F.; Soto-Vega, E. Misinformation of COVID-19 on the internet: Infodemiology study. JMIR Public Health Surveill. 2020, 6, e18444. [Google Scholar] [CrossRef] [PubMed]

- Béranger, J. Big Data and Ethics: The Medical Datasphere; Elsevier: Amsterdam, The Netherlands, 2016. [Google Scholar]

- Palanica, A.; Flaschner, P.; Thommandram, A.; Li, M.; Fossat, Y. Physicians’ perceptions of chatbots in health care: Cross-sectional web-based survey. J. Med. Internet Res. 2019, 21, e12887. [Google Scholar]

- Weizenbaum, J. ELIZA—A computer program for the study of natural language communication between man and machine. Commun. ACM 1966, 9, 36–45. [Google Scholar]

- Dillon, S. The Eliza effect and its dangers: From demystification to gender critique. J. Cult. Res. 2020, 24, 1–15. [Google Scholar]

- De Duro, E.S.; Improta, R.; Stella, M. Introducing CounseLLMe: A dataset of simulated mental health dialogues for comparing LLMs like Haiku, LLaMAntino and ChatGPT against humans. Emerg. Trends Drugs Addict. Health 2024, 5, 100170. [Google Scholar]

- Frith, C.; Frith, U. Theory of mind. Curr. Biol. 2005, 15, R644–R645. [Google Scholar]

- Cummings, L. The “trust” heuristic: Arguments from authority in public health. Health Commun. 2014, 29, 1043–1056. [Google Scholar] [PubMed]

- Stella, M.; Vitevitch, M.S.; Botta, F. Cognitive Networks Extract Insights on COVID-19 Vaccines from English and Italian Popular Tweets: Anticipation, Logistics, Conspiracy and Loss of Trust. Big Data Cogn. Comput. 2022, 6, 52. [Google Scholar] [CrossRef]

- Johnson, D.; Grayson, K. Cognitive and affective trust in service relationships. J. Bus. Res. 2005, 58, 500–507. [Google Scholar] [CrossRef]

- Branda, F. Social impact: Trusting open science for future pandemic resilience. Soc. Impacts 2024, 3, 100058. [Google Scholar] [CrossRef]

- Kasperson, J.X.; Kasperson, R.E.; Pidgeon, N.; Slovic, P. The social amplification of risk: Assessing fifteen years of research and theory. In The Feeling of Risk; Routledge: London, UK, 2013; pp. 317–344. [Google Scholar]

- Devine, D.; Gaskell, J.; Jennings, W.; Stoker, G. Trust and the coronavirus pandemic: What are the consequences of and for trust? An early review of the literature. Political Stud. Rev. 2021, 19, 274–285. [Google Scholar] [CrossRef] [PubMed]

- Hall, M.A.; Dugan, E.; Zheng, B.; Mishra, A.K. Trust in physicians and medical institutions: What is it, can it be measured, and does it matter? Milbank Q. 2001, 79, 613–639. [Google Scholar] [PubMed]

- Colby, K.M.; Weber, S.; Hilf, F.D. Artificial paranoia. Artif. Intell. 1971, 2, 1–25. [Google Scholar] [CrossRef]

- Heiser, J.F.; Colby, K.M.; Faught, W.S.; Parkison, R.C. Can psychiatrists distinguish a computer simulation of paranoia from the real thing?: The limitations of turing-like tests as measures of the adequacy of simulations. J. Psychiatr. Res. 1979, 15, 149–162. [Google Scholar] [CrossRef]

- Bickmore, T.W.; Picard, R.W. Establishing and maintaining long-term human–computer relationships. ACM Trans. Comput.-Hum. Interact. (TOCHI) 2005, 12, 293–327. [Google Scholar]

- Trzebiński, W.; Claessens, T.; Buhmann, J.; De Waele, A.; Hendrickx, G.; Van Damme, P.; Daelemans, W.; Poels, K. The effects of expressing empathy/autonomy support using a COVID-19 vaccination chatbot: Experimental study in a sample of Belgian adults. JMIR Form. Res. 2023, 7, e41148. [Google Scholar]

- Fogg, B.J. Persuasive technology: Using computers to change what we think and do. Ubiquity 2002, 2002, 2. [Google Scholar] [CrossRef]

- Ciechanowski, L.; Przegalinska, A.; Magnuski, M.; Gloor, P. In the shades of the uncanny valley: An experimental study of human–chatbot interaction. Future Gener. Comput. Syst. 2019, 92, 539–548. [Google Scholar]

- Wallace, R.S. The anatomy of A.L.I.C.E. In Parsing the Turing Test; Springer: Berlin/Heidelberg, Germany, 2009; pp. 181–210. [Google Scholar]

- Zhou, C.; Li, Q.; Li, C.; Yu, J.; Liu, Y.; Wang, G.; Zhang, K.; Ji, C.; Yan, Q.; He, L.; et al. A comprehensive survey on pretrained foundation models: A history from bert to chatgpt. arXiv 2023, arXiv:2302.09419. [Google Scholar] [CrossRef]

- Li, J.; Dada, A.; Puladi, B.; Kleesiek, J.; Egger, J. ChatGPT in healthcare: A taxonomy and systematic review. Comput. Methods Programs Biomed. 2024, 245, 108013. [Google Scholar]

- Brunet-Gouet, E.; Vidal, N.; Roux, P. Can a Conversational Agent Pass Theory-of-Mind Tasks? A Case Study of ChatGPT with the Hinting, False Beliefs, and Strange Stories Paradigms. In Proceedings of the International Conference on Human and Artificial Rationalities, Paris, France, 19–22 September 2023; Springer: Cham, Switzerland, 2023; pp. 107–126. [Google Scholar]

- Stella, M.; Hills, T.T.; Kenett, Y.N. Using cognitive psychology to understand GPT-like models needs to extend beyond human biases. Proc. Natl. Acad. Sci. USA 2023, 120, e2312911120. [Google Scholar] [PubMed]

- Metze, K.; Morandin-Reis, R.C.; Lorand-Metze, I.; Florindo, J.B. Bibliographic research with ChatGPT may be misleading: The problem of hallucination. J. Pediatr. Surg. 2024, 59, 158. [Google Scholar] [CrossRef] [PubMed]

- Giuffrè, M.; You, K.; Shung, D.L. Evaluating ChatGPT in medical contexts: The imperative to guard against hallucinations and partial accuracies. Clin. Gastroenterol. Hepatol. 2024, 22, 1145–1146. [Google Scholar]

- Brashier, N.M. Do conspiracy theorists think too much or too little? Curr. Opin. Psychol. 2023, 49, 101504. [Google Scholar]

- Pugliese, N.; Wong, V.W.S.; Schattenberg, J.M.; Romero-Gomez, M.; Sebastiani, G.; Castera, L.; Hassan, C.; Manousou, P.; Miele, L.; Peck, R.; et al. Accuracy, reliability, and comprehensibility of ChatGPT-generated medical responses for patients with nonalcoholic fatty liver disease. Clin. Gastroenterol. Hepatol. 2024, 22, 886–889. [Google Scholar] [PubMed]

- Dixit, S.; Kumar, A.; Srinivasan, K.; Vincent, P.; Ramu Krishnan, N. Advancing genome editing with artificial intelligence: Opportunities, challenges, and future directions. Front. Bioeng. Biotechnol. 2024, 1, 1335901. [Google Scholar] [CrossRef]

- Vilhekar, R.S.; Rawekar, A. Artificial intelligence in genetics. Cureus 2024, 16, e52035. [Google Scholar]

- Abdelwahab, O.; Belzile, F.; Torkamaneh, D. Performance analysis of conventional and AI-based variant callers using short and long reads. BMC Bioinform. 2023, 24, 2956. [Google Scholar]

- Krzysztof, K.; Magda, M.; Przemysław, B.; Bernt, G.; Joanna, S. Exploring the impact of sequence context on errors in SNP genotype calling with whole genome sequencing data using AI-based autoencoder approach. NAR Genom. Bioinform. 2024, 6, lqae131. [Google Scholar]

- Vaz, J.; Balaji, S. Convolutional neural networks (CNNs): Concepts and applications in pharmacogenomics. Mol. Divers. 2021, 6, lqae131. [Google Scholar]

- Sebiha, C.; Pei, Z.; Atiyye, Z.; Mustafa, S.P.; Wenyin, B.; Oktay, I.K. Matching variants for functional characterization of genetic variants. G3 Genes Genomes Genet. 2023, 13, jkad227. [Google Scholar]

- Liu, Y.; Zhang, T.; You, N.; Wu, S.; Shen, N. MAGPIE: Accurate pathogenic prediction for multiple variant types using machine learning approach variants for functional characterization of genetic variants. Genome Med. 2024, 16, 3. [Google Scholar] [PubMed]

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly accurate protein structure prediction with AlphaFold. Nature 2021, 596, 583–589. [Google Scholar]

- Johnston, A.D.; Simões-Pires, C.A.; Thompson, T.V.; Suzuki, M.; Greally, J.M. Functional genetic variants can mediate their regulatory effects through alteration of transcription factor binding. Nat. Commun. 2019, 10, 3472. [Google Scholar] [PubMed]

- Frasca, M.; La Torre, D.; Repetto, M.; De Nicolò, V.; Pravettoni, G.; Cutica, I. Artificial intelligence applications to genomic data in cancer research: A review of recent trends and emerging areas. Discov. Anal. 2024, 2, 10. [Google Scholar]

- Qureshi, R.; Irfan, M.; Gondal, T.; Khan, S.; Wu, J.; Hadi, M.; Heymach, J.; Le, X.; Yan, H.; Alam, T. AI in drug discovery and its clinical relevance. Heliyon 2023, 9, e17575. [Google Scholar] [CrossRef]

- Manzoni, C.; Kia, D.A.; Vandrovcova, J.; Hardy, J.; Wood, N.W.; Lewis, P.A.; Ferrari, R. Genome, transcriptome and proteome: The rise of omics data and their integration in biomedical sciences. Brief. Bioinform. 2018, 19, 286–3025. [Google Scholar]

- Chen, F.; Zou, G.; Wu, Y.; Ou-Yang, L. Clustering single-cell multi-omics data via graph regularized multi-view ensemble learning. Bioinformatics 2024, 40, btae169. [Google Scholar]

- Miner, A.S.; Milstein, A.; Hancock Jefferey, T.K.C.; Johnson, K.; Choi, E.; Kaplin, S.; Venner, E.; Murugan, M.; Wang, Z.; Glicksberg, B.; et al. Artificial Intelligence and Cardiovascular Genetics. Life 2022, 12, 279. [Google Scholar] [CrossRef]

- Abe, S.; Tago, S.; Yokoyama, K.; Ogawa, M.; Takei, T.; Imoto, S.; Fuji, M. Explainable AI for Estimating Pathogenicity of Genetic Variants Using Large-Scale Knowledge Graphs. Cancers 2023, 15, 1118. [Google Scholar] [CrossRef]

- Birney, E.; Andrews, T.; Bevan, P.; Caccamo, M.; Chen, Y.; Clarke, L.; Coates, G.; Cuff, J.; Curwen, V.; Cutts, T.; et al. An overview of Ensembl. Genome Res. 2004, 14, 925–928. [Google Scholar]

- Al-Hilli, Z.; Noss, R.; Dickard, J.; Wei, W.; Chichura, A.; Wu, V.; Renicker, K.; Pederson, H.; Eng, C. A Randomized Trial Comparing the Effectiveness of Pre-test Genetic Counseling Using an Artificial Intelligence Automated Chatbot and Traditional In-person Genetic Counseling in Women Newly Diagnosed with Breast Cancer. Ann. Surg. Oncol. 2023, 30, 5990–5996. [Google Scholar] [PubMed]

- Floris, M.; Moschella, A.; Alcalay, M.; Montella, A.; Tirelli, M.; Fontana, L.; Idda, M. Pharmacogenetics in Italy: Current landscape and future prospects. Hum. Genom. 2024, 18, 78. [Google Scholar]

- Idda, M.; Zoledziewska, M.; Urru, S.; McInnes, G.; Bilotta, A.; Nuvoli, V.; Lodde, V.; Orrù, S.; Schlessinger, D.; Cucca, F.; et al. Genetic Variation among Pharmacogenes in the Sardinian Population. J. Mol. Sci. 2022, 23, 10058. [Google Scholar]

- Chakraborty, C.; Pal, S.; Bhattacharya, M.; Dash, S.; Lee, S. Overview of Chatbots with special emphasis on artificial intelligence-enabled ChatGPT in medical science. Front. Artif. Intell. 2023, 6, 1237704. [Google Scholar]

- Jeyaraman, M.; Balaji, S.; Jeyaraman, N.; Yadav, S. Unraveling the Ethical Enigma: Artificial Intelligence in Healthcare. Cureus 2023, 15, e43262. [Google Scholar]

- Li, J. Security Implications of AI Chatbots in Health Care. J. Med. Internet Res. 2023, 25, e47551. [Google Scholar]

- Mortice, Z. 5 Insights as Architects Lead Hospital Conversion for COVID-19. Autodesk 2020. Available online: https://www.autodesk.com/design-make/articles/hospital-conversion (accessed on 1 October 2024).

- AIA COVID-19 Task Force 1: Health Impact Briefing #2 COVID-19 Frontline Perspective Design Considerations to Reduce Risk and Support Patients and Providers in Facilities for COVID-19 Care. The American Institute of Architects 22 June 2020. Available online: https://content.aia.org/sites/default/files/2020-07/AIA_COVID_Frontline_Perspective.pdf (accessed on 1 October 2024).

- Cogley, B. AIA Task Force to Offer Advice on Converting Buildings into Healthcare Facilities-Dezeen 2020. Available online: https://www.dezeen.com/2020/03/27/aia-task-force-converting-buildings-into-healthcare-facilities/ (accessed on 1 October 2024).

- De Yong, S.; Rachmawati, M.; Defiana, I. Theorizing security-pandemic aspects and variables for post-pandemic architecture. Build. Environ. 2024, 258, 111579. [Google Scholar]

- Gan, V.J.; Luo, H.; Tan, Y.; Deng, M.; Kwok, H. BIM and data-driven predictive analysis of optimum thermal comfort for indoor environment. Sensors 2021, 21, 4401. [Google Scholar] [CrossRef]

- Yang, T.; White, M.; Lipson-Smith, R.; Shannon, M.M.; Latifi, M. Design Decision Support for Healthcare Architecture: A VR-Integrated Approach for Measuring User Perception. Buildings 2024, 14, 797. [Google Scholar] [CrossRef]

- Junaid, S.B.; Imam, A.A.; Balogun, A.O.; De Silva, L.C.; Surakat, Y.A.; Kumar, G.; Abdulkarim, M.; Shuaibu, A.N.; Garba, A.; Sahalu, Y.; et al. Recent advancements in emerging technologies for healthcare management systems: A survey. Healthcare 2022, 10, 1940. [Google Scholar] [CrossRef]

- Chrysikou, E.; Papadonikolaki, E.; Savvopoulou, E.; Tsiantou, E.; Klinke, C.A. Digital technologies and healthcare architects’ wellbeing in the National Health Service Estate of England during the pandemic. Front. Med. Technol. 2023, 5, 1212734. [Google Scholar]

- Service, R.F. Could chatbots help devise the next pandemic virus? Science 2023, 380, 1211. [Google Scholar]

- Cheng, K.; He, Y.; Li, C.; Xie, R.; Lu, Y.; Gu, S.; Wu, H. Talk with ChatGPT about the outbreak of Mpox in 2022: Reflections and suggestions from AI dimensions. Ann. Biomed. Eng. 2023, 51, 870–874. [Google Scholar] [PubMed]

- Remuzzi, A.; Remuzzi, G. COVID-19 and Italy: What next? Lancet 2020, 395, 1225–1228. [Google Scholar]

- Lauer, S.A.; Grantz, K.H.; Bi, Q.; Jones, F.K.; Zheng, Q.; Meredith, H.R.; Azman, A.S.; Reich, N.G.; Lessler, J. The Incubation Period of Coronavirus Disease 2019 (COVID-19) From Publicly Reported Confirmed Cases: Estimation and Application. Ann. Intern. Med. 2020, 172, 577–582. [Google Scholar] [CrossRef] [PubMed]

- Branda, F.; Pierini, M.; Mazzoli, S. Monkeypox: Early estimation of basic reproduction number R0 in Europe. J. Med. Virol. 2023, 95, e28270. [Google Scholar] [PubMed]

- Alavi, H.; Gordo-Gregorio, P.; Forcada, N.; Bayramova, A.; Edwards, D.J. AI-driven BIM integration for optimizing healthcare facility design. Buildings 2024, 14, 2354. [Google Scholar] [CrossRef]

- Kaliappan, S.; Maranan, R.; Malladi, A.; Yamsani, N. Optimizing Resource Allocation in Healthcare Systems for Efficient Pandemic Management using Machine Learning and Artificial Neural Networks. In Proceedings of the 2024 International Conference on Advancements in Smart, Secure and Intelligent Computing (ASSIC), Bhubaneswar, India, 27–29 January 2024; pp. 1–7. [Google Scholar] [CrossRef]

- Amiri, P.; Karahanna, E. Chatbot use cases in the COVID-19 public health response. J. Am. Med. Inform. Assoc. 2022, 29, 1000–1010. [Google Scholar] [CrossRef]

- Wang, C.W.; Hsu, B.Y.; Chen, D.Y. Chatbot applications in government frontline services: Leveraging artificial intelligence and data governance to reduce problems and increase effectiveness. Asia Pac. J. Public Adm. 2024, 46, 488–511. [Google Scholar]

- Chakraborty, S.; Paul, H.; Ghatak, S.; Pandey, S.K.; Kumar, A.; Singh, K.U.; Shah, M.A. An AI-based medical chatbot model for infectious disease prediction. IEEE Access 2022, 10, 128469–128483. [Google Scholar]

- Shumway, R.H.; Stoffer, D.S.; Stoffer, D.S. Time Series Analysis and Its Applications; Springer: Berlin/Heidelberg, Germany, 2000; Volume 3. [Google Scholar]

- Smith, C.M.; Le Comber, S.C.; Fry, H.; Bull, M.; Leach, S.; Hayward, A.C. Spatial methods for infectious disease outbreak investigations: Systematic literature review. Eurosurveillance 2015, 20, 30026. [Google Scholar]

- Jolliffe, I.T.; Cadima, J. Principal component analysis: A review and recent developments. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2016, 374, 20150202. [Google Scholar]

- Chen, S.; Yu, J.; Chamouni, S.; Wang, Y.; Li, Y. Integrating machine learning and artificial intelligence in life-course epidemiology: Pathways to innovative public health solutions. BMC Med. 2024, 22, 354. [Google Scholar]

- Field, A. Discovering Statistics Using IBM SPSS Statistics; Sage Publications Limited: London, UK, 2024. [Google Scholar]

- Harrell, F.E. Regression Modeling Strategies, 2nd ed.; Springer: New York, NY, USA, 2015. [Google Scholar]

- Bender, E.M.; Koller, A. Climbing towards NLU: On meaning, form, and understanding in the age of data. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 5185–5198. [Google Scholar]

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual Event, 3–10 March 2021; pp. 610–623. [Google Scholar]

- Krishnan, G.; Singh, S.; Pathania, M.; Gosavi, S.; Abhishek, S.; Parchani, A.; Dhar, M. Artificial intelligence in clinical medicine: Catalyzing a sustainable global healthcare paradigm. Front. Artif. Intell. 2023, 6, 1227091. [Google Scholar]

- Singh, J.; Sillerud, B.; Singh, A. Artificial intelligence, chatbots and ChatGPT in healthcare—Narrative review of historical evolution, current application, and change management approach to increase adoption. J. Med. Artif. Intell. 2023, 6, 30. [Google Scholar] [CrossRef]

- Van Melle, W. MYCIN: A knowledge-based consultation program for infectious disease diagnosis. Int. J. Man–Mach. Stud. 1978, 10, 313–322. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. [Google Scholar]

- Babu, A.; Boddu, S.B. Bert-based medical chatbot: Enhancing healthcare communication through natural language understanding. Explor. Res. Clin. Soc. Pharm. 2024, 13, 100419. [Google Scholar]

- Chiche, A.; Yitagesu, B. Part of speech tagging: A systematic review of deep learning and machine learning approaches. J. Big Data 2022, 9, 10. [Google Scholar]

- Yu, B.; Fan, Z. A comprehensive review of conditional random fields: Variants, hybrids and applications. Artif. Intell. Rev. 2020, 53, 4289–4333. [Google Scholar]

- Franke, M.; Degen, J. The softmax function: Properties, motivation, and interpretation. PsyArXiv 2023. [Google Scholar] [CrossRef]

- Maleki Varnosfaderani, S.; Forouzanfar, M. The role of AI in hospitals and clinics: Transforming healthcare in the 21st century. Bioengineering 2024, 11, 337. [Google Scholar] [CrossRef]

- Jadczyk, T.; Wojakowski, W.; Tendera, M.; Henry, T.D.; Egnaczyk, G.; Shreenivas, S. Artificial intelligence can improve patient management at the time of a pandemic: The role of voice technology. J. Med. Internet Res. 2021, 23, e22959. [Google Scholar] [CrossRef] [PubMed]

- Haque, A.; Chowdhury, M.N.U.R.; Soliman, H. Transforming chronic disease management with chatbots: Key use cases for personalized and cost-effective care. In Proceedings of the 2023 Sixth International Symposium on Computer, Consumer and Control (IS3C), Taichung, Taiwan, 30 June–3 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 367–370. [Google Scholar]

- Sun, G.; Zhou, Y.H. AI in healthcare: Navigating opportunities and challenges in digital communication. Front. Digit. Health 2023, 5, 1291132. [Google Scholar] [CrossRef] [PubMed]

- McKillop, M.; South, B.R.; Preininger, A.; Mason, M.; Jackson, G.P. Leveraging conversational technology to answer common COVID-19 questions. J. Am. Med. Inform. Assoc. 2021, 28, 850–855. [Google Scholar] [CrossRef]

- Cinelli, M.; Quattrociocchi, W.; Galeazzi, A.; Valensise, C.M.; Brugnoli, E.; Schmidt, A.L.; Zola, P.; Zollo, F.; Scala, A. The COVID-19 social media infodemic. Sci. Rep. 2020, 10, 16598. [Google Scholar] [CrossRef]

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of hallucination in natural language generation. ACM Comput. Surv. 2023, 55, 1–38. [Google Scholar] [CrossRef]

- Thorne, J.; Vlachos, A. Automated fact checking: Task formulations, methods and future directions. arXiv 2018, arXiv:1806.07687. [Google Scholar]

- Cabitza, F.; Campagner, A.; Malgieri, G.; Natali, C.; Schneeberger, D.; Stoeger, K.; Holzinger, A. Quod erat demonstrandum?—Towards a typology of the concept of explanation for the design of explainable AI. Expert Syst. Appl. 2023, 213, 118888. [Google Scholar] [CrossRef]

- Amershi, S.; Weld, D.; Vorvoreanu, M.; Fourney, A.; Nushi, B.; Collisson, P.; Suh, J.; Iqbal, S.; Bennett, P.N.; Inkpen, K.; et al. Guidelines for human-AI interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, 4–9 May 2019; pp. 1–13. [Google Scholar]

- Lee, J.; Lee, E.H.; Chae, D. eHealth literacy instruments: Systematic review of measurement properties. J. Med. Internet Res. 2021, 23, e30644. [Google Scholar] [CrossRef]

- Aljamaan, F.; Temsah, M.H.; Altamimi, I.; Al-Eyadhy, A.; Jamal, A.; Alhasan, K.; Mesallam, T.A.; Farahat, M.; Malki, K.H. Reference Hallucination Score for Medical Artificial Intelligence Chatbots: Development and Usability Study. JMIR Med. Inform. 2024, 12, e54345. [Google Scholar] [PubMed]

- Vishwanath, P.R.; Tiwari, S.; Naik, T.G.; Gupta, S.; Thai, D.N.; Zhao, W.; Kwon, S.; Ardulov, V.; Tarabishy, K.; McCallum, A.; et al. Faithfulness Hallucination Detection in Healthcare AI. In Proceedings of the Artificial Intelligence and Data Science for Healthcare: Bridging Data-Centric AI and People-Centric Healthcare, Barcelona, Spain, 29 June 2024; Available online: https://openreview.net/forum?id=6eMIzKFOpJ (accessed on 25 March 2025).

- Jobin, A.; Ienca, M.; Vayena, E. The global landscape of AI ethics guidelines. Nat. Mach. Intell. 2019, 1, 389–399. [Google Scholar]

- Liu, Q.; Zheng, Z.; Zheng, J.; Chen, Q.; Liu, G.; Chen, S.; Chu, B.; Zhu, H.; Akinwunmi, B.; Huang, J.; et al. Health communication through news media during the early stage of the COVID-19 outbreak in China: Digital topic modeling approach. J. Med. Internet Res. 2020, 22, e19118. [Google Scholar] [CrossRef]

- Peng, C.; Xia, F.; Naseriparsa, M.; Osborne, F. Knowledge graphs: Opportunities and challenges. Artif. Intell. Rev. 2023, 56, 13071–13102. [Google Scholar]

- Wu, L.; Cui, P.; Pei, J.; Zhao, L.; Guo, X. Graph neural networks: Foundation, frontiers and applications. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 14–18 August 2022; pp. 4840–4841. [Google Scholar]

- Pap, I.A.; Oniga, S. eHealth Assistant AI Chatbot Using a Large Language Model to Provide Personalized Answers through Secure Decentralized Communication. Sensors 2024, 24, 6140. [Google Scholar] [CrossRef] [PubMed]

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive nlp tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474. [Google Scholar]

- Fernández, I.; Aceta, C.; Fernandez, C.; Torres, M.I.; Etxalar, A.; Méndez, A.; Agirre, M.; Torralbo, M.; Del Pozo, A.; Agirre, J.; et al. Incremental Learning for Knowledge-Grounded Dialogue Systems in Industrial Scenarios. In Proceedings of the 25th Annual Meeting of the Special Interest Group on Discourse and Dialogue, Kyoto, Japan, 18–20 September 2024; pp. 92–102. [Google Scholar]

- Eysenbach, G. How to fight an infodemic: The four pillars of infodemic management. J. Med. Internet Res. 2020, 22, e21820. [Google Scholar]

- Ashktorab, Z.; Jain, M.; Liao, Q.V.; Weisz, J.D. Resilient chatbots: Repair strategy preferences for conversational breakdowns. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, 4–9 May 2019; pp. 1–12. [Google Scholar]

- Khurana, A.; Alamzadeh, P.; Chilana, P.K. ChatrEx: Designing explainable chatbot interfaces for enhancing usefulness, transparency, and trust. In Proceedings of the 2021 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC), St. Louis, MO, USA, 10–13 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–11. [Google Scholar]

- Dhaliwal, N. Validating software upgrades with AI: Ensuring DevOps, data integrity and accuracy using CI/CD pipelines. J. Basic Sci. Eng. 2020, 17, 1–10. Available online: https://www.researchgate.net/publication/381002098 (accessed on 25 March 2025).

- Wang, Y.; McKee, M.; Torbica, A.; Stuckler, D. Systematic literature review on the spread of health-related misinformation on social media. Soc. Sci. Med. 2019, 240, 112552.

- Adebesin, F.; Smuts, H.; Mawela, T.; Maramba, G.; Hattingh, M. The role of social media in health misinformation and disinformation during the COVID-19 pandemic: Bibliometric analysis. JMIR Infodemiology 2023, 3, e48620.

- Tasnim, S.; Hossain, M.M.; Mazumder, H. Impact of rumors and misinformation on COVID-19 in social media. J. Prev. Med. Public Health 2020, 53, 171–174.

- Zhou, X.; Zafarani, R. A survey of fake news: Fundamental theories, detection methods, and opportunities. ACM Comput. Surv. (CSUR) 2020, 53, 1–40.

- Kim, K.; Shin, S.; Kim, S.; Lee, E. The relation between eHealth literacy and health-related behaviors: Systematic review and meta-analysis. J. Med. Internet Res. 2023, 25, e40778.

- Graves, L. Understanding the Promise and Limits of Automated Fact-Checking; Reuters Institute for the Study of Journalism: Oxford, UK, 2018.

- Bondielli, A.; Marcelloni, F. A survey on fake news and rumour detection techniques. Inf. Sci. 2019, 497, 38–55.

- Longoni, C.; Bonezzi, A.; Morewedge, C.K. Resistance to medical artificial intelligence. J. Consum. Res. 2019, 46, 629–650.

- Shen, J.; Zhang, C.J.; Jiang, B.; Chen, J.; Song, J.; Liu, Z.; He, Z.; Wong, S.Y.; Fang, P.H.; Ming, W.K.; et al. Artificial intelligence versus clinicians in disease diagnosis: Systematic review. JMIR Med. Inform. 2019, 7, e10010.

- Dergaa, I.; Ben Saad, H.; Glenn, J.M.; Amamou, B.; Ben Aissa, M.; Guelmami, N.; Fekih-Romdhane, F.; Chamari, K. From tools to threats: A reflection on the impact of artificial-intelligence chatbots on cognitive health. Front. Psychol. 2024, 15, 1259845.

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F.; Giannotti, F.; Pedreschi, D. A survey of methods for explaining black box models. ACM Comput. Surv. (CSUR) 2018, 51, 1–42.

- Voigt, P.; Von dem Bussche, A. The EU General Data Protection Regulation (GDPR): A Practical Guide, 1st ed.; Springer International Publishing: Cham, Switzerland, 2017.

- World Health Organization. Ethics and Governance of Artificial Intelligence for Health. WHO Guidance; World Health Organization: Geneva, Switzerland, 2021.

- Glikson, E.; Woolley, A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020, 14, 627–660.

- Bryson, J.; Winfield, A. Standardizing ethical design for artificial intelligence and autonomous systems. Computer 2017, 50, 116–119.

- Goddard, K.; Roudsari, A.; Wyatt, J.C. Automation bias: A systematic review of frequency, effect mediators, and mitigators. J. Am. Med. Inform. Assoc. 2012, 19, 121–127.

- Thapa, C.; Camtepe, S. Precision health data: Requirements, challenges and existing techniques for data security and privacy. Comput. Biol. Med. 2021, 129, 104130.

- Abouelmehdi, K.; Beni-Hessane, A.; Khaloufi, H. Big healthcare data: Preserving security and privacy. J. Big Data 2018, 5, 1–18.

- Hasal, M.; Nowaková, J.; Ahmed Saghair, K.; Abdulla, H.; Snášel, V.; Ogiela, L. Chatbots: Security, privacy, data protection, and social aspects. Concurr. Comput. Pract. Exp. 2021, 33, e6426.

- Wangsa, K.; Karim, S.; Gide, E.; Elkhodr, M. A Systematic Review and Comprehensive Analysis of Pioneering AI Chatbot Models from Education to Healthcare: ChatGPT, Bard, Llama, Ernie and Grok. Future Internet 2024, 16, 219.

- Garcia Valencia, O.A.; Suppadungsuk, S.; Thongprayoon, C.; Miao, J.; Tangpanithandee, S.; Craici, I.M.; Cheungpasitporn, W. Ethical implications of chatbot utilization in nephrology. J. Pers. Med. 2023, 13, 1363.

- Yang, J.; Chen, Y.L.; Por, L.Y.; Ku, C.S. A systematic literature review of information security in chatbots. Appl. Sci. 2023, 13, 6355.

- Siponen, M.; Willison, R. Information security management standards: Problems and solutions. Inf. Manag. 2009, 46, 267–270.

- Abd-Alrazaq, A.A.; Alajlani, M.; Alalwan, A.A.; Bewick, B.M.; Gardner, P.; Househ, M. An overview of the features of chatbots in mental health: A scoping review. Int. J. Med. Inform. 2019, 132, 103978.

- Buolamwini, J.; Gebru, T. Gender shades: Intersectional accuracy disparities in commercial gender classification. In Proceedings of the Conference on Fairness, Accountability and Transparency, New York, NY, USA, 23–24 February 2018; PMLR: New York, NY, USA, 2018; pp. 77–91.

- Anastasopoulos, A.; Neubig, G. Should all cross-lingual embeddings speak English? arXiv 2019, arXiv:1911.03058.

- Conneau, A. Unsupervised cross-lingual representation learning at scale. arXiv 2019, arXiv:1911.02116.

- Huang, K.; Altosaar, J.; Ranganath, R. ClinicalBERT: Modeling clinical notes and predicting hospital readmission. arXiv 2019, arXiv:1904.05342.

- Mackert, M.; Mabry-Flynn, A.; Champlin, S.; Donovan, E.E.; Pounders, K. Health literacy and health information technology adoption: The potential for a new digital divide. J. Med. Internet Res. 2016, 18, e264.

- Spinuzzi, C. The methodology of participatory design. Tech. Commun. 2005, 52, 163–174.

- Miner, A.S.; Milstein, A.; Hancock, J.T. Talking to machines about personal mental health problems. JAMA 2017, 318, 1217–1218.

- Hirschberg, J.; Manning, C.D. Advances in natural language processing. Science 2015, 349, 261–266.

- Feine, J.; Morana, S.; Gnewuch, U. Measuring service encounter satisfaction with customer service chatbots using sentiment analysis. In Proceedings of the 14th International Conference on Wirtschaftsinformatik, Siegen, Germany, 24–27 February 2019.

- Laymouna, M.; Ma, Y.; Lessard, D.; Schuster, T.; Engler, K.; Lebouché, B. Roles, Users, Benefits, and Limitations of Chatbots in Health Care: Rapid Review. J. Med. Internet Res. 2024, 26, e56930.

- Han, B.; Baldwin, T. Lexical normalisation of short text messages: Makn sens a #twitter. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 368–378.

- Nikhil, G.; Yeligatla, D.R.; Chaparala, T.C.; Chalavadi, V.T.; Kaur, H.; Singh, V.K. An Analysis on Conversational AI: The Multimodal Frontier in Chatbot System Advancements. In Proceedings of the 2024 Second International Conference on Inventive Computing and Informatics (ICICI), Bangalore, India, 11–12 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 383–389.

- Jiang, J.; Karran, A.J.; Coursaris, C.K.; Léger, P.M.; Beringer, J. A situation awareness perspective on human-AI interaction: Tensions and opportunities. Int. J. Hum.–Comput. Interact. 2023, 39, 1789–1806.

- Zhang, R.W.; Liang, X.; Wu, S.H. When chatbots fail: Exploring user coping following a chatbots-induced service failure. Inf. Technol. People 2024, 37, 175–195.

- Følstad, A.; Brandtzæg, P.B. Chatbots and the new world of HCI. Interactions 2017, 24, 38–42.

- Kocielnik, R.; Xiao, L.; Avrahami, D.; Hsieh, G. Reflection companion: A conversational system for engaging users in reflection on physical activity. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 1–26.

- Følstad, A.; Araujo, T.; Law, E.L.C.; Brandtzaeg, P.B.; Papadopoulos, S.; Reis, L.; Baez, M.; Laban, G.; McAllister, P.; Ischen, C.; et al. Future directions for chatbot research: An interdisciplinary research agenda. Computing 2021, 103, 2915–2942.

- Benatar, S.; Brock, G. Global Health: Ethical Challenges; Cambridge University Press: Cambridge, UK, 2021.

- Vaidyam, A.N.; Wisniewski, H.; Halamka, J.D.; Keshavan, M.S.; Torous, J.B. Chatbots and conversational agents in mental health: A review of the psychiatric landscape. Can. J. Psychiatry 2019, 64, 456–464.

- Milne-Ives, M.; de Cock, C.; Lim, E.; Shehadeh, M.H.; de Pennington, N.; Mole, G.; Normando, E.; Meinert, E. The effectiveness of artificial intelligence conversational agents in health care: Systematic review. J. Med. Internet Res. 2020, 22, e20346.

- Martins, A.; Nunes, I.; Lapão, L.; Londral, A. Unlocking human-like conversations: Scoping review of automation techniques for personalized healthcare interventions using conversational agents. Int. J. Med. Inform. 2024, 185, 105385.

- Kostkova, P. Grand challenges in digital health. Grand Chall. Digit. Health 2015, 3, 134.

- Ren, R.; Zapata, M.; Castro, J.W.; Dieste, O.; Acuña, S.T. Experimentation for chatbot usability evaluation: A secondary study. IEEE Access 2022, 10, 12430–12464.

- Drummond, M.F.; Sculpher, M.J.; Claxton, K.; Stoddart, G.L.; Torrance, G.W. Methods for the Economic Evaluation of Health Care Programmes; Oxford University Press: Oxford, UK, 2015.

- Cameron, G.; Cameron, D.; Megaw, G.; Bond, R.R.; Mulvenna, M.; O’Neill, S.; Armour, C.; McTear, M. Best practices for designing chatbots in mental healthcare—A case study on iHelpr. In Proceedings of the British HCI Conference 2018, Belfast, UK, 2–6 July 2018; BCS Learning & Development Ltd.: Pimpri-Chinchwad, India, 2018.

- Bermeo-Giraldo, M.C.; Toro, O.N.P.; Arias, A.V.; Correa, P.A.R. Factors influencing the adoption of chatbots by healthcare users. J. Innov. Manag. 2023, 11, 75–94.

| Aspect | Key Points |
|---|---|
| Historical Chatbots | |
| Psychological Relevance | |
| Modern Chatbots (GPTs) | |
| Future Directions | |

| Strategy | Chatbot Role | Chatbot Functions |
|---|---|---|
| Data-Driven Design Parameters | Chatbots can be integrated with databases containing information on various pathogens, including their modes of transmission, incubation periods, and virulence. These data can inform the design parameters for outbreak-specific spaces. | |
| Rapid Prototyping and Space Planning | Chatbots can assist with rapid prototyping and space planning by generating layout options based on the specific needs of the outbreak. | |
| Real-Time Adaptation and Optimization | During an outbreak, the situation can change rapidly. Chatbots can continuously monitor data and adapt the design of the space accordingly. | |

| Capacity | Information Accuracy and Reliability |
|---|---|
| 1. | Unpredictability and Reliability Issues |
| 2. | Dissemination of Outdated Information |
| 3. | Amplification of Misinformation |
| 4. | Technical Limitations in Communication |
| Alignment | Ethical and Legal Concerns |
| 1. | Ethical and Accountability Concerns |
| 2. | Privacy and Data Security Risks |
| 3. | Ineffectiveness with Diverse Populations |
| 4. | Resource Allocation Concerns |
| 5. | Unpredictability and Reliability Issues |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Branda, F.; Stella, M.; Ceccarelli, C.; Cabitza, F.; Ceccarelli, G.; Maruotti, A.; Ciccozzi, M.; Scarpa, F. The Role of AI-Based Chatbots in Public Health Emergencies: A Narrative Review. Future Internet 2025, 17, 145. https://doi.org/10.3390/fi17040145