Abstract

The rapid emergence of infectious disease outbreaks has underscored the urgent need for effective communication tools to manage public health crises. Artificial Intelligence (AI)-based chatbots have become increasingly important in these situations, serving as critical resources to provide immediate and reliable information. This review examines the role of AI-based chatbots in public health emergencies, particularly during infectious disease outbreaks. By providing real-time responses to public inquiries, these chatbots help disseminate accurate information, correct misinformation, and reduce public anxiety. Furthermore, AI chatbots play a vital role in supporting healthcare systems by triaging inquiries, offering guidance on symptoms and preventive measures, and directing users to appropriate health services. This not only enhances public access to critical information but also helps alleviate the workload of healthcare professionals, allowing them to focus on more complex tasks. However, the implementation of AI-based chatbots is not without challenges. Issues such as the accuracy of information, user trust, and ethical considerations regarding data privacy are critical factors that need to be addressed to optimize their effectiveness. Additionally, the adaptability of these chatbots to rapidly evolving health scenarios is essential for their sustained relevance. Despite these challenges, the potential of AI-driven chatbots to transform public health communication during emergencies is significant. This review highlights the importance of continuous development and the integration of AI chatbots into public health strategies to enhance preparedness and response efforts during infectious disease outbreaks. Their role in providing accessible, accurate, and timely information makes them indispensable tools in modern public health emergency management.

1. Introduction

The world is witnessing an increasing frequency of infectious disease outbreaks, such as COVID-19, Ebola, and mpox, which have exposed significant gaps in public health communication and crisis management [1]. In these scenarios, the dissemination of timely and accurate information is critical to control the spread of disease and manage public anxiety. Digital health solutions [2] have emerged as essential tools to address these challenges, offering scalable, immediate, and reliable communication because traditional communication channels are often saturated during health emergencies. In this context, artificial intelligence (AI)-based chatbots have emerged as powerful tools to address the growing need for immediate and reliable responses during public health crises. These AI-driven systems, capable of engaging in human-like conversations, offer a scalable solution to handle increased public inquiries and provide crucial information around the clock [3]. Despite their potential, the adoption of AI chatbots in public health remains an ongoing challenge. Initially, the technology faced considerable resistance due to concerns about the accuracy of the information provided, ethical implications, and the risk of misuse. Early research highlighted AI’s limitations in comprehending complex human emotions and the dangers of misinformation, which could heighten public anxiety during health crises [4]. For example, Gao et al. [5] found that public trust in AI systems was initially low, driven by fears of biased or inaccurate responses. However, as the technology has advanced, so has its perceived utility. Innovations in natural language processing (NLP) and machine learning (ML) have greatly enhanced chatbots’ ability to generate accurate, contextually relevant responses [6].
Moreover, the COVID-19 pandemic acted as a turning point, accelerating the widespread adoption of AI chatbots as healthcare systems worldwide struggled to cope with the overwhelming demand for reliable information and support [7]. The shift from skepticism to optimism can be attributed to several factors. First, the demonstrated ability of chatbots to handle large volumes of inquiries in real-time has alleviated some of the initial concerns about their reliability [8]. Second, the integration of AI chatbots into existing healthcare systems has shown tangible benefits, such as reducing the workload on healthcare professionals and improving patient outcomes through timely information dissemination [9]. Finally, the development of more sophisticated models, such as GPT-4, has enhanced the ability of chatbots to understand and respond to complex queries, further building trust among users [10].

The potential of AI chatbots in public health emergencies is manifold. First, they serve as frontline communicators, offering real-time answers to citizens’ questions about symptoms, preventive measures, and local health guidelines [11]. This immediate access to information helps to alleviate public anxiety and promote adherence to health protocols, which is critical to curbing the spread of infectious diseases [12]. In addition, by providing accurate and consistent information, AI chatbots play a key role in countering the spread of misinformation, which has been identified as a significant challenge during health crises [13]. Beyond their role in public communication, AI chatbots also offer substantial support to healthcare systems. By sorting requests and providing initial guidance, these systems can help reduce the burden on healthcare providers, allowing them to focus on more complex cases and critical patient care [7]. This is especially valuable during the peak of epidemics, when healthcare resources are often limited. In addition, chatbots can help with data collection and analysis, providing health authorities with valuable information about public concerns and epidemic progression [8]. The implementation of artificial intelligence chatbots in healthcare emergencies, however, is not without its challenges. Ensuring the accuracy and reliability of the information provided by these systems is critical, as misinformation can have serious consequences in healthcare [9]. There are also privacy and data security concerns, particularly when dealing with sensitive health information [14]. In addition, the issue of public trust in AI systems remains a significant factor that can affect their effectiveness [5]. Despite these challenges, the potential benefits of AI chatbots in health emergency management are considerable. Their ability to provide immediate, scalable, and personalized responses makes them valuable tools in modern crisis communication strategies. 
As AI technology continues to advance, these systems are becoming more sophisticated, able to understand context, adapt to new information, and even detect emotional states to provide more empathetic responses [15].

In recent years, it has become clear that artificial intelligence chatbots are not just technological innovations, but essential tools in the fight against public health threats, bridging the gap between authorities and the public and ensuring that vital information reaches those who need it most, when they need it most. The ongoing development and integration of artificial intelligence chatbots into the public health infrastructure is a critical area of research and implementation. This review aims to explore the current state of the use of AI chatbots in public health emergencies, their benefits, challenges, and potential future developments, providing insights into how these technologies can be optimized to improve public health response efforts. By harnessing the power of AI in public health communication, we can potentially create more resilient and responsive health systems that can meet the complex demands of future public health emergencies.

In this paper, we systematically explore the role of artificial intelligence-based chatbots in public health emergencies, with a focus on infectious disease outbreaks. After a review of the psychological relevance of chatbots in health communication, we analyze their historical development, from early experiments such as ELIZA and PARRY to current advancements with generative artificial intelligence models (Section 2). Next, we examine the specific applications of these tools in genetics and genomics (Section 3), as well as their use for the dynamic management of healthcare spaces during epidemics (Section 4). The paper continues with an analysis of the potential of chatbots as statistical consultants in epidemiology (Section 5) and their reliability in clinical decision support (Section 6). Finally, we address the main challenges and limitations related to their use (Section 7), concluding with a discussion of future prospects and research needs to further improve the integration of these tools into public health strategies (Section 8).

2. Chatbots and Their Psychological Relevance for Public Health Communication

Chatbots have a long history within the domains of artificial intelligence and psychology, as summarized in Table 1. This section reviews historical (ELIZA, PARRY) and more recent (GPTs) contributions to the field. Focus is given to highlighting the psychological implications of chatbots for public health communication, in view of foundational and more recent scientific literature.

Table 1.

A brief outline of the conversational systems tested in psychological fields and relevant for public health communication.

2.1. The ELIZA Effect and Its Implications in Public Health Communication

ELIZA was a prototypical chatbot developed in the 1960s by Joseph Weizenbaum [16]. ELIZA mimicked a psychotherapist, engaging users in text-based conversations based on if–else statements and simple pattern matching [16]. Despite its simplicity, ELIZA demonstrated that machines could interact with humans while providing simple recommendations or elements of psychotherapeutic knowledge adapted to human requests [17]. In the psychological literature, it is known that the patient–psychotherapist dialogue is founded not only on knowledge but also on trust, engagement, care and empathy, which all promote a soothing environment [18]. Weizenbaum observed an “ELIZA effect”: Even when individuals were aware that ELIZA was a computer program, they still treated it as if it had genuine human qualities like intentions or emotions [16]. This phenomenon is linked with the theory of mind in developmental psychology [17,19]. A theory of mind is the cognitive ability to understand that others have thoughts, feelings, and perspectives, potentially different from one’s own. This ability typically develops in early childhood and is fundamental to human social interactions, enabling empathy, communication, and prediction of others’ behavior [19]. Despite knowing ELIZA’s mechanistic nature, users projected mental states onto the machine, assuming that ELIZA could understand and respond to their emotional cues. This projection, a manifestation of the ELIZA effect, illustrates that the theory of mind can extend to chatbots: Humans talking to even simple chatbots tend to ascribe mental states to them in order to make sense of their behavior.
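
The rule-based mechanism described above can be illustrated with a minimal sketch. The rules, the reflection table, and the names `RULES`, `reflect`, and `respond` are hypothetical and written only for illustration; they are not taken from Weizenbaum's original script, which was considerably richer.

```python
import re

# Hypothetical rules in the spirit of ELIZA; not Weizenbaum's original script.
# Each rule pairs a pattern with a reply template that reuses the captured text.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father|family)\b", re.IGNORECASE),
     "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."

# Swap first-person words for second-person ones so the echo reads as a reply.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}


def reflect(fragment):
    """Rewrite a captured fragment from the user's to the chatbot's point of view."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(utterance):
    """Return the reply of the first matching rule, or a generic prompt."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I feel anxious about my health."))
```

Even this small rule set returns a reply that appears to follow the user's concern, which hints at why users could attribute understanding to a program that only matches patterns and echoes text.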

The ELIZA effect and its theory-of-mind component have deep repercussions for public health communication. These psychological phenomena show that humans can engage psychologically with chatbots, ultimately building trustful rapports with them. In public health, trust is a critical emotion that underlies effective communication and the success of health interventions [20,21]. Public health communication can be considered as a service where users are gaining health knowledge from one or several sources. Extensive psychological research [22] has shown that trust in service relationships can arise from affective phenomena (trusting a person perceived as friendly) or cognitive phenomena (trusting someone after logical reasoning). Furthermore, several scientific studies have emphasized the importance of building trust between public health communicators and the public to ensure the effective dissemination of health information, e.g., open data [23], and the adoption of recommended health behaviors, e.g., face masking [21]. The studies by Kasperson and colleagues [24] highlight the concept of the social amplification of risk: the public’s perception of risk is significantly influenced by the trust they have in the source of information. When public health communicators are perceived as trustworthy, their messages are more likely to be accepted and acted upon by the public [24]. Trustworthiness, or its lack, can therefore either amplify or attenuate the public’s response to health risks, and a lack of trust can hamper engagement with health safety measures during a pandemic [25]. Hall and colleagues [26] emphasized that, beyond public health communication, higher trust was also found to be associated with higher satisfaction, better adherence to medical advice, and improved health outcomes. These elements underline how humans can place trust in chatbots, which could thus play a relevant role in building trustful, and hence more successful, communication campaigns about public health.

2.2. PARRY, Personification in Chatbots and Its Relevance in Public Health

PARRY [27] is a key example of personification in chatbots, i.e., the ability for chatbots to tune their responses according to a given set of human traits [18]. Developed in the 1970s, PARRY was designed to simulate a person with paranoid schizophrenia [27]. PARRY’s ability to generate responses that closely mirrored some symptoms of paranoia, like suspiciousness or delusional thinking, demonstrated that a computer could be programmed to replicate some complex human thought patterns and thus impersonate some human traits in conversation. These achievements were obtained through what were, at the time, significant advances in NLP, incorporating decision-making models and weighted responses that simulated paranoid reasoning [27]. The dialogues produced by PARRY were convincing enough to lead even trained psychiatrists to believe that PARRY’s quips came from human patients [28]. PARRY remains a historical example of how even simple chatbots can portray personifications of complex human features.
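
The idea of weighted responses driven by an internal state can be sketched as follows. This is a toy illustration under stated assumptions, not a reconstruction of PARRY: the trigger words, their weights, the thresholds, the canned replies, and the class name `ParanoidPersona` are all hypothetical.

```python
# Hypothetical trigger words and weights; PARRY's actual rule base is not reproduced here.
TRIGGER_WEIGHTS = {"police": 0.4, "hospital": 0.3, "crazy": 0.5, "friend": -0.2}


class ParanoidPersona:
    """Toy persona whose replies depend on an internal mistrust level in [0, 1]."""

    def __init__(self, mistrust=0.2):
        self.mistrust = mistrust

    def update(self, utterance):
        # Each trigger word raises or lowers mistrust by its (assumed) weight.
        for word in utterance.lower().split():
            self.mistrust += TRIGGER_WEIGHTS.get(word.strip(".,!?"), 0.0)
        # Keep the state within its bounds.
        self.mistrust = min(1.0, max(0.0, self.mistrust))

    def reply(self, utterance):
        # The same kind of question yields different replies as mistrust grows.
        self.update(utterance)
        if self.mistrust >= 0.7:
            return "Why do you want to know that? Who sent you?"
        if self.mistrust >= 0.4:
            return "I would rather not talk about that."
        return "I am doing all right, I suppose."


persona = ParanoidPersona()
for line in ["How are you today?", "Did the police visit you?", "Are you crazy?"]:
    print(persona.reply(line))
```

Because the state persists across turns, the persona grows more guarded as the conversation touches sensitive topics, which is the kind of trait-consistent behavior that personification refers to.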

In public health communication, personification is crucial for chatbots’ engagement and retention. Firstly, personification can make chatbots appear more relatable and approachable, fostering user engagement. According to Bickmore and Picard [29], users are more likely to engage in sustained interactions with chatbots perceived as having human-like characteristics, such as empathy and understanding. In public health contexts, where conveying information and promoting behavior change are key goals [23], increased engagement can enhance the effectiveness of health communication. For instance, a chatbot designed to encourage vaccination might be more persuasive if users feel it genuinely understands and cares about their concerns [30]. Secondly, chatbots with personified features may help improve user retention by making interactions more enjoyable and memorable. Studies suggest that when chatbots exhibit human-like behaviors, such as humor or even personalized conversations, users are more likely to return for subsequent interactions [31]. This is especially relevant in public health, where ongoing engagement can be critical for chronic disease management, health education, and behavior modification [20,30]. Personification in chatbots could be managed at the level of textual responses but also through other components, like avatars or animations. Importantly, the latter may also influence user engagement. For instance, Ciechanowski and colleagues [32] showed that simpler textual chatbots, without complex animations or avatars, induced in users less negative affect and less intense psychophysiological reactions than more complex chatbots with animated avatars. These findings indicate that not only text but also additional visual elements might influence user engagement with public health chatbots.

2.3. From Early Chatbots to Generative Pre-Trained Transformers

After ALICE (Artificial Linguistic Internet Computer Entity) [33], the real breakthrough for chatbots began with the advent of generative pre-trained transformers (GPTs) in the late 2010s [6]. GPTs enabled chatbots to generate more human-like text and understand context with greater accuracy. ELMo (Embeddings from Language Models), BERT (Bidirectional Encoder Representations from Transformers) and the more recent Large Language Models (LLMs), like GPT-4, all represent advanced architectures of artificial neural networks, trained on vast amounts of human texts and able to produce human-like responses [34]. In public health, modern chatbots are now being deployed to assist in disease surveillance, health education, and behavior change interventions [35], as discussed in the remainder of this review.

From a psychological perspective, LLM-based chatbots can be powerful for public health communication since they (i) are capable of personification [18], like PARRY, fostering engagement; (ii) can be subject to the ELIZA effect [35], fostering trust; and (iii) can display basic elements of a theory of mind [36]. However, LLMs are also complex systems, trained via reinforcement learning to closely reproduce human data [35]. Consequently, LLM-based chatbots also end up inheriting human biases [37]. In cognitive psychology, biases are deviations from common ways of combining intuitive and logical reasoning when processing knowledge [18]. Biases can be considered cognitive shortcuts reducing the effort required for processing information. For instance, confirmation bias makes it easier to accommodate novel knowledge if it confirms one’s own pre-existing information. In public health communication, LLMs displaying biases similar to human communicators’ might be considered a neutral addition to the system. However, LLMs also possess non-human biases, due to their artificial nature [37]: (i) myopic overconfidence, e.g., pushing through inaccurate reasoning while appearing overly self-confident, and (ii) hallucinations, i.e., attempting to satisfy users’ requests while interpolating over missing data from training knowledge. In public health contexts, hallucinations represent a deeply concerning phenomenon. Hallucinations might range from subtle, like fake but vaguely familiar scientific papers mentioned as sources backing up health facts [38], to outright disastrous, like recommending toxic levels of herbal remedies for clinical pathologies [39]. Hallucinations, like myopic overconfidence, stem from two key factors. On the one hand, the reinforcement learning used to train GPTs pushes LLMs to always provide an answer, even in the presence of faulty, missing, or inaccurate data [37].
On the other hand, LLMs and the chatbots based on them do not possess metacognitive skills for filtering out less reliable information. This is different from what humans can do, e.g., filtering out dubious information about vaccines coming from extremely unreliable sources [40]. This lack of metacognitive skills makes LLMs poor fact searchers and providers. Because of myopic overconfidence, LLM-based chatbots can produce recommendations that sound plausible but are ultimately false. For instance, Metze and colleagues [38] found that, while producing 50 small literature reviews on Chagas’ disease, GPT-3.5 produced references with major hallucinations in 86.7% of the cases. Also investigating GPT-3.5, recent studies [39,41] found that, for patients with metabolic dysfunction-associated steatotic liver disease, the LLM recommended herbal remedies that experts consider harmful. These concrete examples underline the grave consequences that hallucinations can have for LLMs’ performance as public health chatbots. Future developments of LLM-based chatbots for public health should keep capitalizing on the enormous potential for personification, trust creation and cognitive reasoning that LLMs provide, while addressing key issues originating from LLMs’ human and non-human biases. A synergy between psychology, computer science and public health communication would greatly push the scientific community towards such a promising future direction.

3. Artificial Intelligence in Genetics and Genomics

3.1. AI in Genetics and Computational Genomics

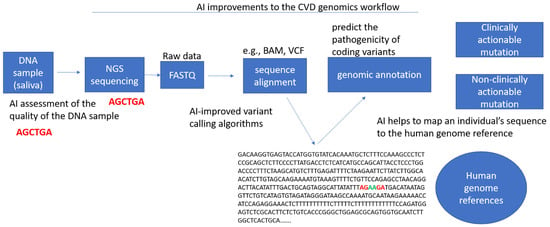

The convergence of artificial intelligence (AI) with genetic sciences has ushered in a new era of innovation in genomics, driven by the need to analyze vast quantities of biological data [42]. AI algorithms, especially those in the realms of ML and deep learning (DL), are pivotal in addressing the complex and multifaceted challenges posed by modern genomics [43]. With the advancement of high-throughput sequencing technologies, such as next-generation sequencing (NGS) and single-cell sequencing, the quantity of genomic data generated has increased exponentially. Interpreting this massive volume of data necessitates sophisticated computational tools that are capable of handling both the scale and complexity of the human genome, which is where AI systems have demonstrated transformative potential. A key application of AI in genomics is in variant calling. Genomic variants, such as single nucleotide polymorphisms (SNPs), insertions, deletions, and structural variants, are critical to understanding human disease, evolution, and genetic diversity [44]. Traditional approaches to variant calling, which rely heavily on statistical models, often struggle to distinguish true variants from sequencing errors, particularly in the repetitive or poorly characterized regions of the genome [45]. AI, and more specifically deep learning models such as convolutional neural networks (CNNs), has been successfully employed to overcome these limitations by learning complex patterns directly from raw sequencing data [46]. For example, Google DeepVariant (available at https://github.com/google/deepvariant, accessed on 5 February 2025) is an AI-powered tool that uses deep learning techniques to improve the accuracy of variant calling, significantly reducing error rates compared to traditional bioinformatics pipelines.
DeepVariant was trained on a diverse set of genomic data to predict genetic variants directly from sequencer reads, demonstrating how AI can surpass rule-based methods and enhance the fidelity of genomic data interpretation. Beyond variant calling, AI plays a crucial role in functional genomics, particularly in predicting the functional impact of genetic variants. Not all genetic variants are functionally significant; some may be benign, while others can have profound effects on gene regulation, protein structure, or cellular pathways [47]. AI models, such as those based on recurrent neural networks (RNNs) and attention mechanisms, have been used to predict the pathogenicity of variants [48]. These models are trained on annotated databases, like ClinVar (https://www.ncbi.nlm.nih.gov/clinvar/, accessed on 5 February 2025) and the Genome Aggregation Database (gnomAD) (https://gnomad.broadinstitute.org, accessed on 5 February 2025), which contain information on the clinical significance of known variants. By leveraging AI, it is possible to prioritize variants of unknown significance (VUSs) for further study, thus accelerating the identification of potential disease-causing mutations [42]. Additionally, AI-driven approaches like AlphaFold, developed by DeepMind, have revolutionized protein structure prediction, which is inherently linked to genomics [49]. AlphaFold’s ability to accurately predict 3D protein structures from amino acid sequences has opened new avenues in understanding how genetic variants affect protein folding and function, which is crucial for identifying novel drug targets and understanding the molecular basis of diseases. In the context of genome-wide association studies (GWAS), which aim to identify associations between genetic variants and traits or diseases, AI techniques have been invaluable in managing the immense computational burden associated with these studies. 
GWAS typically involve scanning millions of genetic variants across large populations to identify those associated with specific phenotypes. Traditional GWAS methods rely on linear models and statistical thresholds to detect significant associations, but these approaches may fail to capture complex interactions between genetic variants (epistasis) or between genes and environmental factors. AI, particularly in the form of deep learning and neural networks, can model these nonlinear interactions and capture subtle genetic effects that might be missed by conventional methods [50]. For instance, AI algorithms can integrate genomic data with other layers of biological data, such as transcriptomics, epigenomics, and proteomics, to provide a more holistic view of the genetic architecture underlying complex diseases like cancer, diabetes, and neurodegenerative disorders [42,51]. One of the most promising areas of AI application in genomics is drug discovery and development [52]. Genomic data play a critical role in identifying drug targets and understanding the genetic basis of diseases, and AI can accelerate this process by analyzing large-scale genomic datasets to identify patterns that human researchers might miss [42]. For example, AI models can be trained on genomic data from patients with a particular disease to identify common genetic variants or gene expression profiles that could be targeted by new drugs, or can be utilized to predict the pathogenicity of variants, map an individual’s sequence to genome references, and identify any clinically significant mutations (Figure 1). In addition to target identification, AI can also be used to predict how patients with different genetic backgrounds might respond to certain drugs, thus paving the way for more personalized treatments. This approach, often referred to as pharmacogenomics, is particularly important for diseases like cancer, where genetic mutations can influence drug efficacy and resistance. 
AI-driven insights from genomic data can help researchers develop targeted therapies that are more likely to be effective for specific patient populations, thereby reducing the time and cost associated with traditional drug discovery processes [51]. In addition to these specific applications, AI has become an integral part of data integration and interpretation in genomics. The wealth of genomic data generated by sequencing technologies is often accompanied by other types of omics data, such as transcriptomics (gene expression), proteomics (protein levels), and epigenomics (DNA methylation patterns) [53]. Integrating these diverse datasets is essential for gaining a comprehensive understanding of biological processes, but it is also extremely challenging due to the sheer volume and heterogeneity of the data. AI models, particularly those using unsupervised learning techniques, can help by identifying hidden patterns and relationships within the data that might not be apparent through traditional analytical methods. For example, AI-driven approaches can cluster similar samples based on multi-omic data, revealing subtypes of diseases that were previously unrecognized. This capability is especially valuable in cancer research, where tumors are often genetically heterogeneous, and identifying subtypes can lead to more effective, personalized treatment strategies [54].

Figure 1.

Example of the use of artificial intelligence to predict the pathogenicity of variants [55].
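
The point made in Section 3.1 about epistasis, namely that single-variant linear tests can miss interactions between variants, can be illustrated with a toy calculation. The cohort below is synthetic and fully hypothetical: two binary carrier statuses (`snp_a`, `snp_b`) and a phenotype that appears only when exactly one of the two variants is present. This is a didactic sketch, not a GWAS pipeline and not a deep learning model.

```python
from statistics import mean


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


# Synthetic cohort: every combination of two carrier statuses, 25 individuals each.
snp_a, snp_b = [], []
for a in (0, 1):
    for b in (0, 1):
        snp_a += [a] * 25
        snp_b += [b] * 25

# Purely epistatic phenotype: present only when exactly one variant is carried.
phenotype = [a ^ b for a, b in zip(snp_a, snp_b)]

# Interaction feature that a model restricted to single-variant effects never sees.
product = [a * b for a, b in zip(snp_a, snp_b)]

print("SNP A alone:", pearson(snp_a, phenotype))
print("SNP B alone:", pearson(snp_b, phenotype))
print("A x B term: ", round(pearson(product, phenotype), 3))
```

In this constructed case each variant on its own is uncorrelated with the phenotype, while the interaction term is not. Nonlinear models can, in principle, pick up such structure without the interaction term being specified in advance.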

3.2. AI-Based Chatbots in Genomics

AI-based chatbots are emerging as valuable tools in genomics, offering new ways to provide instant access to complex genomic information for both professionals and the general public. In the genomic context, these chatbots have significant potential in streamlining data interpretation, facilitating patient engagement, and supporting personalized medicine [56]. One of the primary applications of chatbots in genomics is to assist researchers and clinicians in interpreting genetic data. Given the complexity and volume of data generated by genome sequencing technologies, AI-powered chatbots can serve as intermediaries, enabling users to query genomic databases, receive summaries of variant pathogenicity, or retrieve information on gene–disease associations in real time [48]. By integrating with genomic databases such as ClinVar, gnomAD, and Ensembl, these chatbots can provide quick, reliable responses to questions about specific genetic variants, their clinical relevance, or population frequency. Such tools can be especially useful in clinical settings, where genetic counselors or healthcare providers may need to rapidly access information to make informed decisions about patient care [57]. In addition to aiding professionals, AI chatbots can play a transformative role in genetic counseling by interacting directly with patients. The increasing availability of direct-to-consumer genetic testing services, such as 23andMe (https://www.23andme.com/en-int/, accessed on 5 February 2025) and AncestryDNA (https://www.ancestry.com, accessed on 5 February 2025), has led to a surge in individuals seeking to understand their genetic information. AI-driven chatbots can act as digital genetic counselors, answering common questions about genetic reports, explaining the implications of certain genetic variants, and even guiding users through the steps they should take after receiving potentially concerning results [58]. 
For example, a chatbot could explain whether a particular genetic variant is linked to an increased risk of cancer or other inherited conditions and advise the user on follow-up actions, such as consulting with a genetic counselor or undergoing further testing. This democratizes access to genetic insights, particularly for individuals who may not have easy access to professional genetic counseling services. By making genomic information more accessible and understandable, chatbots can help reduce the anxiety and confusion that often accompany the interpretation of personal genetic data. Another area where AI-based chatbots are gaining traction is in supporting personalized medicine by helping patients and healthcare providers interpret genomic data in the context of treatment options. Pharmacogenomics, the study of how genes affect an individual’s response to drugs [59], is a key aspect of personalized medicine [60], and AI chatbots can play a crucial role in this field [61]. For example, a chatbot integrated with pharmacogenomic databases could help a physician determine whether a patient’s genetic profile suggests a potential adverse reaction to a specific medication or whether they might benefit from a particular drug therapy based on their genetic makeup. This capability could significantly enhance the decision-making process in real-time, providing immediate, personalized recommendations for medication regimens that are tailored to an individual’s genetic background. Furthermore, such chatbots could be designed to continually update and refine their knowledge base as new research and clinical trials provide more data, ensuring that patients and healthcare providers are working with the most current information available. Finally, AI-based chatbots also have the potential to address ethical concerns in genomics by promoting informed decision making and consent [62]. 
As genomic data become increasingly integrated into healthcare and research, ensuring that individuals understand the implications of sharing their genetic information is crucial. Chatbots can be programmed to explain the privacy risks, data-sharing policies, and potential consequences of genomic testing in simple, comprehensible language [63]. This empowers individuals to make informed choices about whether to participate in genomic studies or share their genetic data with healthcare providers. In research settings, chatbots could be used to facilitate the informed consent process, answering participant questions about genomic research protocols, data usage, and long-term storage, which can improve transparency and trust between researchers and participants. While the role of AI-driven chatbots in ensuring that patients understand the ethical and legal aspects of genomic data sharing is promising, particularly in the context of insurance and employment discrimination based on genetic information, further research is needed to explore the practical implementation of such functions. As the field of genomics evolves, chatbots may play a supportive role in helping individuals navigate the complex ethical landscape, but it remains to be seen how effectively they can address these concerns in real-world applications.
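
The database-backed question answering described in this section can be sketched in a few lines. Everything specific here is an assumption made for illustration: the local table `VARIANT_TABLE` stands in for a curated resource such as ClinVar, its identifiers, gene names, and labels are placeholders rather than real annotations, and no real database API is called.

```python
import re

# Hypothetical stand-in for a curated variant database.
# Identifiers, gene names, and labels are placeholders, not real annotations.
VARIANT_TABLE = {
    "rs0000001": {"gene": "GENE_A", "significance": "pathogenic"},
    "rs0000002": {"gene": "GENE_B", "significance": "uncertain significance"},
}

# rsID-style identifier: "rs" followed by digits.
RSID_PATTERN = re.compile(r"\brs\d+\b", re.IGNORECASE)


def answer(query):
    """Extract a variant identifier from free text and report the stored record."""
    match = RSID_PATTERN.search(query)
    if match is None:
        return "Please include a variant identifier such as an rsID."
    rsid = match.group(0).lower()
    record = VARIANT_TABLE.get(rsid)
    if record is None:
        # Decline rather than guess when the variant is not in the table.
        return f"No record found for {rsid}; please consult a genetic counselor."
    return (
        f"{rsid} in {record['gene']} is listed as {record['significance']}. "
        "Please discuss this result with a genetic counselor."
    )


print(answer("What does rs0000001 mean for me?"))
```

Restricting answers to stored records, and declining when no record exists, is one simple design choice for limiting the hallucination risks discussed in Section 2.3.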

4. Leveraging AI-Driven Chatbots for Dynamically Adaptable Healthcare Spaces During Outbreaks

4.1. A Pragmatic Architectural Approach to the COVID-19 Response: The Lessons of the Pandemic

Early in the 2020 COVID-19 pandemic, the rapid construction of modular hospitals in Wuhan, China, using prefabricated components and negative-pressure ventilation, demonstrated the critical role of rapidly deployable quarantine facilities [64]. As the pandemic reached the United States, the American Institute of Architects (AIA) convened a task force to develop guidelines for converting existing structures into COVID-19 hospitals [65]. This effort resulted in a checklist, integrating expertise from diverse fields, to facilitate the rapid adaptation of buildings, prioritizing functionality over esthetics [66]. The initial phase of the pandemic response highlighted the challenge of determining optimal building typologies for quarantine hospitals. Open-plan structures (e.g., arenas) and closed-plan structures (e.g., hotels) each presented distinct advantages and disadvantages regarding patient isolation and infection control. By late spring 2020, following the first wave, it became clear that an ad hoc approach to such large-scale epidemiological and public health events was insufficient. Future pandemic preparedness necessitates a shift towards formalized pop-up testing infrastructure, prefabricated hospitals, and the integration of pandemic-resilient features into residential design, such as decontamination zones [67]. A growing consensus emerged that architects must address the challenge of designing built environments capable of maintaining essential societal functions, including work and education, during future pandemics, thereby mitigating the need for widespread societal shutdowns [67]. Shaping the future of architectural design to accommodate the possibility of recurring pandemic events requires a structured, predictive approach, integrating microbiological and epidemiological variables into the design process [66].

4.2. Post-Pandemic Architecture: Utilizing Digital Tools to Mitigate Airborne Transmission Risk

The COVID-19 pandemic has underscored the crucial role of architectural design in mitigating the spread of airborne infections. Digital simulation techniques, including computer-aided design (CAD), computational fluid dynamics (CFD), and building information modeling (BIM), are increasingly employed to analyze the dynamics of viral particles within built environments [68,69]. These tools enable architects and engineers to model airflow patterns and assess the effectiveness of ventilation systems in reducing transmission risks. Collaboration between these disciplines facilitates the development of predictive models that inform the design of more resilient architecture, addressing critical knowledge gaps regarding airborne viral transmission and ventilation efficacy.

4.3. Building the Future of Outbreak Response: AI Chatbots and Adaptive Hospital Design

The rapid emergence and spread of infectious diseases necessitate agile and adaptable healthcare infrastructure capable of responding effectively to diverse pathogens and evolving outbreak dynamics. Traditional healthcare facility design often proves inflexible and slow to adapt, hindering the effective management of rapidly unfolding public health crises [70]. This underscores the need for innovative approaches that leverage technology to create dynamically adaptable spaces capable of meeting the unique challenges posed by different outbreaks [71]. AI-driven chatbots, with their ability to process vast amounts of data and generate real-time insights, offer a promising avenue for achieving this goal (Table 2) [72,73].

Table 2.

AI-driven chatbots for the rapid design of outbreak-specific context.

One of the potential benefits of using artificial intelligence-based chatbots in this context lies in their ability to integrate and analyze data from different sources. Linking chatbots to databases containing information on various pathogens—including modes of transmission, incubation periods, virulence, and susceptibility to different intervention measures—could support the definition of design parameters for spaces dedicated to managing epidemic outbreaks. Although chatbots cannot independently determine the transmissibility of a pathogen, they can be used to provide information from reliable sources, facilitating access to official guidelines on containment measures such as ventilation, isolation, and personal protective equipment. However, it is important to note that the reliability of such tools depends on the quality of the underlying data and their ability to interpret it. Because chatbots are probabilistic models of language, they may generate responses that appear plausible but are not necessarily correct. Therefore, their use should be considered a decision support rather than a definitive source, and any recommendations should be verified by experts in the field before being implemented.

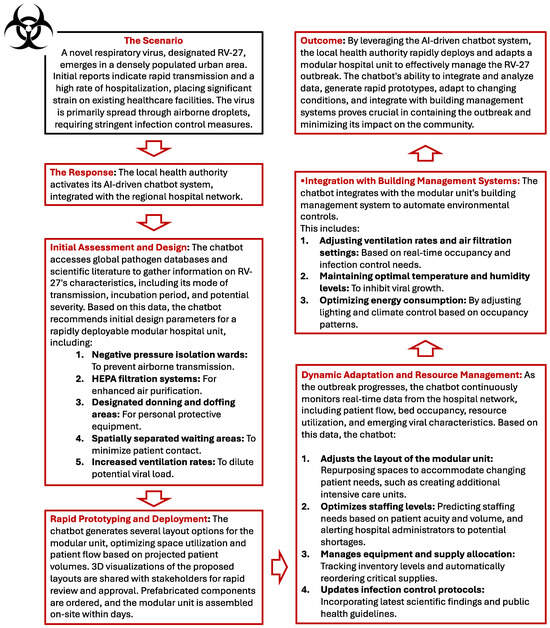

Beyond informing initial design parameters, AI-driven chatbots can play a crucial role in rapid prototyping and space planning. By leveraging algorithms capable of generating multiple layout options based on specific outbreak characteristics and projected patient volumes, chatbots can significantly accelerate the design process. This can involve recommending modular designs that are easily assembled and reconfigured, optimizing the space allocation for different functions such as triage, testing, and isolation, and even generating the 3D visualizations of proposed layouts for stakeholder review. This rapid prototyping capability is essential for the timely deployment of adaptable healthcare spaces during outbreaks.

The dynamic nature of outbreaks demands continuous monitoring and adaptation. AI-driven chatbots can excel in this area by analyzing real-time data on patient flow, resource utilization, and emerging pathogen characteristics. This information can be used to adjust the layout of healthcare spaces, optimize staffing levels and equipment allocation, and update infection control protocols as needed. For example, a chatbot can analyze patient flow data to identify bottlenecks and recommend adjustments to the layout to improve efficiency and minimize wait times. Similarly, real-time data on resource utilization can inform decisions regarding the allocation of medical personnel, equipment, and supplies.
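The bottleneck analysis described above can be illustrated with a minimal sketch. The station names, arrival counts, and capacities are hypothetical values chosen for illustration, and the utilization ratio (arrivals divided by capacity) is an assumed, simplified metric rather than a method taken from the cited literature.

```python
from dataclasses import dataclass


@dataclass
class Station:
    name: str
    arrivals_per_hour: float   # observed patients arriving per hour
    capacity_per_hour: float   # patients the station can process per hour

    @property
    def utilization(self) -> float:
        # Values at or above 1.0 mean demand meets or exceeds capacity.
        return self.arrivals_per_hour / self.capacity_per_hour


def find_bottlenecks(stations, threshold=1.0):
    """Return stations at or above the utilization threshold, busiest first."""
    overloaded = [s for s in stations if s.utilization >= threshold]
    return sorted(overloaded, key=lambda s: s.utilization, reverse=True)


# Hypothetical snapshot of patient flow, for illustration only.
stations = [
    Station("triage", 30, 40),
    Station("testing", 30, 20),
    Station("isolation intake", 10, 10),
]
bottlenecks = find_bottlenecks(stations)
for s in bottlenecks:
    print(f"{s.name}: utilization {s.utilization:.2f}")
```

In a deployed system, the flagged stations would be a prompt for human planners to review the layout, not an automatic reconfiguration.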

Furthermore, integrating chatbots with building management systems offers the potential for the automated control of environmental parameters. This can include adjusting ventilation rates and air filtration settings based on the specific needs of the outbreak, maintaining optimal temperature and humidity levels to inhibit pathogen growth, and optimizing energy consumption by adjusting lighting and climate control systems based on occupancy patterns. This level of integration can significantly enhance the efficiency and effectiveness of outbreak management.

4.4. Example Scenario

AI-driven chatbots can be valuable tools for rapidly designing and adapting spaces to manage different outbreaks, taking into account the specific characteristics of each pathogen (Figure 2).

Figure 2.

Example scenario of a hypothetical novel respiratory virus outbreak.

The chatbot can access data on the virus’s transmission characteristics and recommend a design that prioritizes airborne infection control measures, such as negative pressure rooms, HEPA filtration, and designated airflow patterns. As the outbreak progresses and more data become available, the chatbot can adapt the design to optimize patient flow, resource allocation, and infection control protocols (Figure 2). By leveraging AI-driven chatbots, we can create more responsive and adaptable healthcare spaces that are better equipped to manage the unique challenges of different outbreaks.

5. Chatbots as Statistical Consultants in Infectious Disease Settings: Potential and Reliability

In the field of infectious disease management, chatbots with advanced artificial intelligence, such as ChatGPT, are being studied not only as tools for disseminating information, but also to assist in data analysis and decision making. These artificial intelligence systems have the potential to guide public health officials, researchers, and even less experienced users through the complex process of selecting appropriate statistical techniques for epidemic data analysis. With the increasing use of large-scale data collection during epidemics, chatbots could provide accessible real-time advice on which statistical methods to employ based on the characteristics of the available data. However, the effectiveness and reliability of chatbots in this advisory role depend on several factors, including the accuracy of their recommendations, their interpretability, and their potential limitations.

5.1. Potential of Chatbots as Statistical Advisors

When faced with questions such as "I have this data set, what statistical method should I use?", chatbots such as ChatGPT can quickly generate plausible answers by leveraging their knowledge of general statistical principles. For example, if a user presents time series data on infection rates, the chatbot might suggest using techniques such as ARIMA (AutoRegressive Integrated Moving Average) models or exponential smoothing to predict future trends [82]. For spatial data involving epidemic outbreaks, the chatbot might recommend geospatial analysis tools such as kriging or spatial autocorrelation, which are useful for identifying disease clusters [83]. These recommendations can be useful, especially in situations where human expertise is not readily available, making chatbot-guided suggestions a scalable solution for public health workers during emergencies.
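To make the exponential smoothing suggestion concrete, the following is a minimal sketch of simple exponential smoothing in plain Python. The daily case counts and the smoothing factor `alpha` are hypothetical; a real analysis would typically use a dedicated statistics library and validate the forecast against held-out data.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation.

    level_t = alpha * y_t + (1 - alpha) * level_{t-1}, with level_0 = y_0.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    if not series:
        raise ValueError("series must not be empty")
    levels = [float(series[0])]
    for y in series[1:]:
        levels.append(alpha * y + (1 - alpha) * levels[-1])
    return levels


# Hypothetical daily case counts, for illustration only.
daily_cases = [10, 12, 15, 14, 20]
levels = simple_exponential_smoothing(daily_cases, alpha=0.5)

# Simple exponential smoothing gives a flat forecast: the last level.
one_step_forecast = levels[-1]
print(one_step_forecast)
```

Because this variant models neither trend nor seasonality, it suits only short-horizon forecasts of roughly stable series; a growing outbreak would call for a richer model such as ARIMA.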

One of the strengths of the chatbot is its ability to handle different types of data. If asked a question about how to handle multivariable datasets, the chatbot might suggest principal component analysis (PCA) to reduce data dimensionality and identify key factors driving disease progression [84]. Where the user is dealing with survey data from affected populations, ChatGPT could recommend logistic regression or chi-square tests to explore relationships between variables, such as symptoms and risk factors. This wide range of functionality could support decision making at various stages of the epidemic response, from initial surveillance to resource allocation and intervention evaluation.
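As an illustration of the chi-square test mentioned above, the sketch below computes the Pearson chi-square statistic for a contingency table in plain Python. The counts are hypothetical survey data, and the code assumes every row and column total is positive.

```python
def chi_square_statistic(table):
    """Pearson chi-square statistic for an r x c contingency table of counts.

    Assumes every row total and column total is greater than zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat


# Hypothetical survey counts, for illustration only.
# Rows: risk factor present / absent. Columns: symptomatic / asymptomatic.
table = [[30, 10], [20, 40]]
stat = chi_square_statistic(table)
dof = (len(table) - 1) * (len(table[0]) - 1)
print(f"chi-square = {stat:.3f}, degrees of freedom = {dof}")
```

With one degree of freedom, a statistic above 3.841 rejects independence at the 5% level. The test also presumes adequately large expected counts in each cell, which is the kind of assumption a user should confirm before acting on a chatbot's suggestion.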

5.2. Evaluating the Reliability of Chatbots in Recommending Statistical Techniques

Although artificial intelligence chatbots such as ChatGPT have considerable potential to offer statistical guidance, their reliability in providing accurate and contextually appropriate recommendations is a critical issue. Language models are trained on vast datasets, often encompassing a wide range of disciplines, including statistics. However, they lack a deep understanding of the specific nuances of a given dataset or the subtleties of research questions in infectious disease contexts. This raises several reliability issues:

1. Accuracy of recommendations: AI chatbots are not immune to making mistakes or offering incorrect statistical advice. Although ChatGPT may suggest well-known statistical methods, it does not always recommend the techniques best suited to a specific dataset. For example, it might suggest the use of a linear regression when, given the distribution of the data, a nonlinear model would be more appropriate. Studies have shown that artificial intelligence models, despite their sophistication, are often unable to fully understand the domain-specific requirements of medical or epidemiological datasets [85]. This limitation could lead to erroneous recommendations, especially when users are unfamiliar with the subtleties of statistical techniques.

2. Assumptions and limitations: Every statistical technique has inherent assumptions, such as the normality of data distribution, independence of observations, or homoscedasticity. Chatbots do not always provide sufficient warning or detail about these assumptions, potentially leading to the misuse of statistical methods. For example, recommending ANOVA (analysis of variance) without clarifying the need for homogeneity of variances could lead to erroneous analysis results [86]. In this regard, human statisticians possess the nuanced judgment necessary to adapt methods based on data anomalies, something that current chatbots lack.

3. Interpretability and user understanding: Even when ChatGPT correctly identifies an appropriate statistical method, explaining why a particular technique should be used remains a challenge. Although the model can describe statistical concepts in general terms, it does not always provide clear guidance on why certain assumptions are important or how to interpret the results in a meaningful way. This limitation could be particularly problematic for users who do not have a strong background in statistics. A chatbot might recommend logistic regression for analyzing binary outcomes, but not guide the user through the necessary diagnostic tests, such as testing for multicollinearity or overfitting [87]. The absence of in-depth explanations could hinder the user’s ability to effectively apply the recommended techniques.

4. Dependence on training data: ChatGPT and similar models are trained on large datasets that include vast amounts of information, but are not necessarily up-to-date with the latest advances in statistical theory or infectious disease epidemiology. This lag in knowledge could cause the chatbot to suggest outdated or less efficient methods. In addition, these models are based on probabilities derived from training data, which means they lack a true understanding of the problem at hand. They generate answers based on patterns and correlations in the data they have seen, but they do not reason about the statistical principles involved as a human expert would [88].

5. Risk of misinformation: One of the significant risks of using AI chatbots for statistical guidance is the potential for misinformation. ChatGPT, while very adept at generating human-like text, can sometimes produce “hallucinations”, generating plausible but factually incorrect information [89]. In a statistical context, this could lead the chatbot to recommend inappropriate or even nonexistent methods. For example, it might suggest a test or statistical procedure that is not applicable to the user’s dataset or, in extreme cases, does not exist in the statistical literature. In the world of public health, where data-driven decisions can impact millions of people, the consequences of such errors could be severe.
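Two of the issues listed above, unstated assumptions and nonexistent methods, suggest a simple safeguard, sketched here under stated assumptions. The `KNOWN_METHODS` table and the `review_recommendation` function are hypothetical names introduced for illustration; the table is deliberately tiny and limited to checks named in this section, so it is not a complete inventory of assumptions for either method.

```python
# Hypothetical allowlist mapping recognized methods to checks a user
# should confirm before relying on the recommendation.
KNOWN_METHODS = {
    "anova": [
        "homogeneity of variances",
        "independence of observations",
        "normality of data distribution",
    ],
    "logistic regression": [
        "test for multicollinearity",
        "check for overfitting",
    ],
}


def review_recommendation(method_name):
    """Attach required checks to a recognized method or flag an unknown one."""
    key = method_name.strip().lower()
    if key not in KNOWN_METHODS:
        # Unknown names may be hallucinated, so route them to a human expert.
        return {
            "method": method_name,
            "recognized": False,
            "checks": [],
            "action": "escalate to a statistician",
        }
    return {
        "method": method_name,
        "recognized": True,
        "checks": KNOWN_METHODS[key],
        "action": "verify listed checks before use",
    }


print(review_recommendation("ANOVA"))
print(review_recommendation("Zeta-quantile drift test"))  # made-up name
```

An allowlist of this kind cannot judge whether a recognized method fits the data; it only ensures that assumptions are surfaced and that unfamiliar method names are never passed through silently.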

5.3. Enhancing the Reliability of Chatbots: A Hybrid Approach

To mitigate the risks associated with chatbot recommendations, a human-in-the-loop approach is advisable, especially in high-risk settings such as infectious disease management. In this context, chatbots provide preliminary recommendations that human experts verify and refine, adding insights or corrections that improve the quality of the analysis. This hybrid approach balances the scalability and efficiency of AI with the critical judgment and domain-specific expertise of human statisticians. In addition, integrating real-time feedback systems that allow users to report inaccuracies or clarify uncertainties can help improve the chatbot’s performance over time, increasing both accuracy and user confidence. Regular updates of the chatbot’s training data can also ensure that it remains current with the latest developments in statistical methods and public health practices.
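The human-in-the-loop workflow described above can be sketched as a small review queue in which no chatbot suggestion is released until an expert has approved it. The class and field names are hypothetical, and a production system would also need authentication, audit logging, and persistent storage.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Recommendation:
    question: str
    suggestion: str
    status: str = "pending"              # pending -> approved | rejected
    reviewer_note: Optional[str] = None


class ReviewQueue:
    """Holds chatbot suggestions until a human expert reviews them."""

    def __init__(self) -> None:
        self._items: List[Recommendation] = []

    def submit(self, question: str, suggestion: str) -> Recommendation:
        rec = Recommendation(question, suggestion)
        self._items.append(rec)
        return rec

    def review(self, rec: Recommendation, approved: bool,
               note: Optional[str] = None) -> None:
        rec.status = "approved" if approved else "rejected"
        rec.reviewer_note = note

    def released(self) -> List[Recommendation]:
        # Only expert-approved suggestions ever reach the end user.
        return [r for r in self._items if r.status == "approved"]


queue = ReviewQueue()
first = queue.submit("Weekly infection counts by district", "ARIMA")
second = queue.submit("Binary outcome survey data", "linear regression")
queue.review(first, approved=True)
queue.review(second, approved=False, note="Use logistic regression instead.")
print([r.suggestion for r in queue.released()])
```

Reviewer notes on rejected items double as the feedback signal mentioned above, since they record where the chatbot's suggestion fell short.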

Future developments could focus on creating domain-specific chatbots trained on datasets directly relevant to public health and epidemiology. By incorporating knowledge about recent infectious disease outbreaks and advances in statistical methods adapted to these contexts, chatbots could become more reliable tools for public health professionals. This would also involve incorporating more sophisticated models for error detection, allowing the chatbot to flag potential risks in its recommendations and alert users to seek expert advice. Furthermore, collaboration with interdisciplinary teams that include statisticians, epidemiologists, and data scientists can help refine the chatbot’s capabilities. This collaboration can improve the chatbot’s ability to provide personalized recommendations that closely align with the specific needs of public health practitioners.

Artificial intelligence-based chatbots such as ChatGPT offer significant potential as statistical consultants in infectious disease settings, providing quick and accessible guidance on the use of appropriate analytical techniques. However, their recommendations should not be considered a substitute for expert judgment. Although these systems can suggest general methods, their limitations—such as lack of deep understanding, potential for error, and inability to explain complex statistical nuances—mean they should be used with caution. The future of chatbots in this role likely lies in hybrid models that combine the scalability of AI with the critical oversight of human experts, ensuring more reliable and contextually appropriate statistical advice. As public health continues to evolve in an increasingly data-driven world, the integration of these technologies can improve decision-making processes and, ultimately, health outcomes.

6. Clinical Insights from an Infectious Disease Specialist

6.1. AI-Powered Chatbots for Clinical Management During Outbreaks

Infectious disease outbreaks pose significant challenges to global health security, placing immense strain on healthcare systems. The rapid spread of infection, coupled with often-limited resources, necessitates efficient and timely patient management. Artificial intelligence-powered tools, particularly chatbots, offer a promising avenue for enhancing patient care and optimizing resource allocation during these critical periods. Chatbots can provide readily accessible information, facilitate preliminary triage, and enable remote monitoring, contributing to a more sustainable global healthcare paradigm [90].

Artificial intelligence is rapidly transforming healthcare delivery within hospitals and clinics. Its applications span various areas, from enhancing clinical decision making and streamlining operational efficiency to improving diagnostic accuracy and revolutionizing patient care. The evolution of AI has led to the development of increasingly sophisticated chatbots with diverse healthcare applications [91]. Historically, programs like MYCIN [92] aided physicians with differential diagnosis based on patient symptoms. Modern chatbots can play a crucial role during infectious disease outbreaks by providing up-to-date information about symptoms, prevention measures, and treatment options.

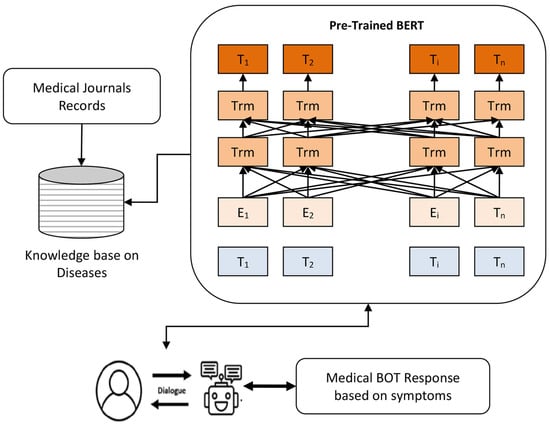

An example of such advanced AI technology is the BERT-based medical chatbot, which leverages Bidirectional Encoder Representations from Transformers (BERT) [93], a state-of-the-art deep learning model for natural language understanding (NLU). BERT, unlike traditional models that process text in a single direction (left-to-right or right-to-left), considers the context of a word by looking at both the words that come before and after it. This makes it highly effective in understanding the contextual meaning of sentences, especially in complex domains like medicine, where jargon and linguistic nuances are critical for accurate interpretation. Figure 3 illustrates the architecture of a BERT-based medical chatbot [94], where the BERT model processes user queries, extracts medical entities (such as symptoms or conditions), and generates relevant responses. Key components of the system include the user interface (frontend), which allows users to interact with the chatbot via text or voice input; the text processing module, which preprocesses queries by performing tokenization, stop word removal, stemming, lemmatization, and Part-of-Speech (PoS) tagging [95]; the BERT model, which parses text bidirectionally, recognizes medical entities and classifies user intent; context management, which maintains the history and context of the conversation to ensure consistent responses in complex interactions; the entity and intent recognition unit, which extracts medical entities and classifies intent using techniques such as Conditional Random Fields (CRFs) [96] and softmax [97]; dialogue management and response generation, which combines predefined templates, retrieval methods from a medical knowledge base, and generative templates to produce appropriate responses; the medical knowledge base, an up-to-date database with information on symptoms, conditions, treatments and guidelines, consulted by the chatbot to provide accurate advice; and finally, response delivery (backend), which sends the generated response to the user via the frontend interface, completing the interaction cycle.

Figure 3.

An example of a BERT-based medical chatbot [94].
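The softmax step used for intent classification in such an architecture can be illustrated with a short sketch. The intent labels and raw scores below are hypothetical stand-ins for the output of a classification layer; this is not the implementation of the system shown in Figure 3.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical intent labels and classifier scores, for illustration only.
INTENTS = ["symptom_check", "appointment", "general_info"]
logits = [2.0, 0.5, 0.1]

probs = softmax(logits)
predicted = INTENTS[probs.index(max(probs))]
print(predicted, [round(p, 3) for p in probs])
```

A dialogue manager could compare the highest probability with a confidence threshold and ask a clarifying question, or hand over to a human, when no intent clearly dominates.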

Modern chatbots can also facilitate appointment scheduling and answer frequently asked questions, reducing the burden on healthcare workers. More broadly, AI’s evolution in healthcare has been driven by advancements in machine learning and neural networks, coupled with increased computational power and data availability. These technologies are empowering healthcare professionals with tools to analyze patient data more effectively, leading to more precise diagnoses and personalized treatment plans. AI is also optimizing hospital operations by automating administrative tasks, improving patient flow, and enhancing resource allocation. In medical diagnostics, AI is accelerating image analysis in radiology and pathology, enabling faster and more accurate results [98].

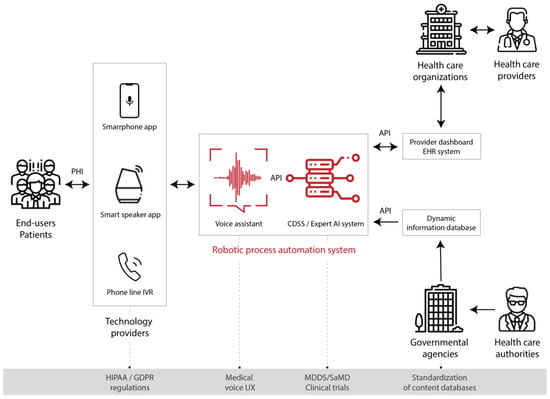

Furthermore, chatbots contribute significantly to telehealth delivery, encompassing remote patient monitoring, medication reminders, personalized health advice, and virtual assistance (https://www.irjmets.com/uploadedfiles/paper//issue_4_april_2024/53081/final/fin_irjmets1713334010.pdf, accessed on 9 March 2025), creating new possibilities for patient–physician interaction. For example, recent advancements in voice-based AI technologies, as highlighted by Jadczyk et al. [99], demonstrate the potential of voice chatbots to streamline healthcare delivery during pandemics. These systems can automate patient triage, provide real-time health information, and support remote monitoring, reducing the burden on healthcare providers and improving patient outcomes. Figure 4 illustrates the workflow of an AI-driven voice chatbot in healthcare delivery, showcasing its integration with clinical decision support systems and electronic health records. The workflow begins with the user (patient or healthcare provider) interacting with the chatbot through a voice interface, such as a smartphone or smart speaker. The chatbot processes the user’s input using NLP techniques and connects to a clinical decision support system (CDSS) to provide accurate, context-aware responses. The system then integrates the collected data with electronic health records (EHRs), enabling real-time updates and alerts for healthcare providers. This integration supports efficient patient triage, remote monitoring, and personalized care.

Figure 4.

Workflow of the AI-driven voice chatbot in healthcare delivery [99].

6.2. Enhancing Outbreak Response with Chatbots: Benefits and Challenges in Clinical Management of Patients

AI-powered chatbots can play a significant role in improving clinical management during infectious disease outbreaks. These tools offer tangible benefits for both patients and healthcare professionals by optimizing workflows and enhancing operational efficiency.

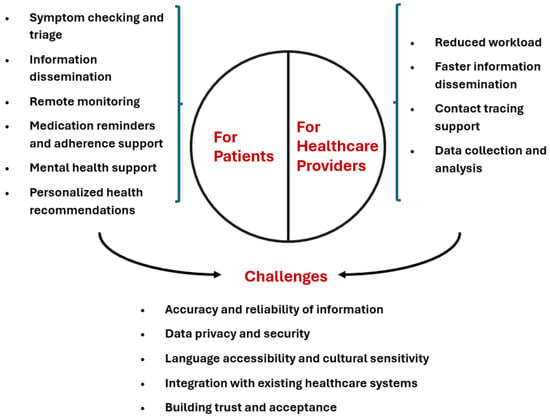

For patients, chatbots serve as an immediate source of information and support. They can guide users through symptom assessment and triage, helping them determine the appropriate level of care and potentially reducing unnecessary hospital visits [3]. This is particularly crucial during outbreaks, as it minimizes exposure risk and alleviates the burden on healthcare facilities. Additionally, chatbots can disseminate accurate and up-to-date information about the outbreak, counteracting misinformation and enabling individuals to make informed decisions [3]. For patients managing their illness remotely, chatbots can facilitate remote monitoring by tracking symptoms and vital signs, allowing for the early detection of any health deterioration [100]. They can also provide medication reminders, support treatment adherence, and offer basic mental health assistance to help manage the stress and anxiety often associated with public health emergencies [101]. Furthermore, by analyzing individual patient data, chatbots can generate personalized health recommendations tailored to specific needs.

Healthcare professionals also stand to benefit from chatbot integration. By handling routine inquiries, distributing important updates, and reinforcing clinical guidelines, chatbots can reduce the workload of medical staff, enabling them to focus on more complex cases and direct patient care [101]. Additionally, chatbots can assist with contact tracing efforts and collect valuable epidemiological data for research purposes.

However, to ensure responsible and effective deployment, several challenges must be addressed. The accuracy and reliability of chatbot-provided information must be continuously monitored [102]. Safeguarding data privacy and security is paramount, and chatbot interfaces should be designed to support linguistic accessibility and cultural sensitivity. Furthermore, seamless integration with existing healthcare systems is crucial for successful implementation. Building trust and acceptance among both patients and healthcare providers is another key factor in widespread adoption.

It is important to emphasize that chatbots are not intended to replace healthcare professionals but rather to complement existing healthcare strategies. Their integration can enhance the quality and efficiency of healthcare delivery during outbreaks by providing timely and targeted support (Figure 5).

Figure 5.

The role of chatbots in epidemic response.

6.3. Future Directions and Research Needs

Future research should focus on exploring the potential of AI-powered chatbots for handling more complex tasks, such as assisting with preliminary diagnosis and offering personalized treatment recommendations. A thorough evaluation of the effectiveness of chatbots in real-world outbreak scenarios is essential to assess their true impact on patient outcomes and the performance of healthcare systems. Ethical considerations regarding the use of chatbots, especially during public health emergencies, must be carefully addressed to ensure they are deployed responsibly and equitably. Despite these challenges, chatbots have considerable potential to transform patient management during infectious disease outbreaks. By providing timely and accurate information, facilitating remote monitoring, and alleviating the strain on healthcare systems, chatbots can contribute to more effective and equitable responses to public health crises. To fully realize their benefits, further research, careful implementation, and continuous evaluation are necessary. This will help maximize the advantages while minimizing the potential risks associated with the adoption of this emerging technology.

7. Challenges and Limitations of AI Chatbots in Infectious Disease Outbreaks

The integration of chatbots and large language models (LLMs) into healthcare communication during epidemics offers some notable advantages, such as innovative ways to collect and disseminate information and opportunities for resource optimization.

While these potential benefits can significantly support doctors, policymakers, and citizens by providing timely information and aiding in resource management, this class of software applications also presents important limitations and risks in the context of infectious diseases, which must be understood to allow for cautious and responsible implementation.

The challenges and risks discussed below are similar to those associated with medical and health issues affecting individuals. However, they are intensified by the scale of those impacted, the uncertainty surrounding the nature of symptoms, the relationship between symptoms and disease severity, and the ambiguity regarding modes of disease transmission and mitigation. In other words, what follows surveys problems that are known to arise when conversational agents are used to manage health issues but that are exacerbated by the contexts in which epidemics and pandemics occur, that is, contexts of heightened severity, spread, and uncertainty.

The main limitations and risks can be grouped into two broad ambits: capacity and alignment. Capacity refers to the intrinsic abilities of these systems in terms of their functions and skills. This domain encompasses quality dimensions such as accuracy, reliability, and effectiveness. Limitations in capacity involve technical shortcomings like misinformation propagation, the inability to interpret complex or ambiguous user inputs, and challenges in processing real-time updates. Essentially, capacity-related issues concern the tasks these AI systems cannot carry out as expected and the kinds of failure they can exhibit.

Alignment, on the other hand, pertains to the extent to which the capacities of these AI systems are coherent with the values, principles, and norms of the society and community they serve. This includes compliance with ethical standards, cultural sensitivities, and legal regulations such as data protection laws and medical practice guidelines. Limitations in alignment involve ethical dilemmas, privacy violations, lack of transparency, and potential misuse of technology. In essence, alignment-related issues concern shortcomings of these systems in adhering to societal expectations and legal requirements. For each ambit, we discuss four main challenges, as reported in Table 3. The following sections will explore each of these challenges in detail, highlighting the critical issues that emerge in infectious disease contexts.

Table 3.

Overview of issues related to AI-based chatbots in public health emergencies.

7.1. Unpredictability and Reliability Issues

The unpredictability and potential unreliability of LLMs, such as GPT-3 and GPT-4, raise significant challenges when used in decision support tools during health crises like pandemics. These models generate responses based on patterns from extensive datasets without true understanding, which can lead to “hallucinations”, that is, plausible yet factually incorrect information [89]. In critical situations, such misinformation can mislead individuals, potentially worsening disease spread. While LLMs offer scalable, immediate responses, their risks can undermine public trust and hinder effective health interventions [103].

Although improvements have been made to reduce inaccuracies [10], LLMs still struggle with maintaining factual consistency. Further research is needed to develop evaluation metrics and real-time verification systems to ensure reliability in high-stakes domains [104]. Enhancing training protocols with domain-specific data and adding real-time fact-checking algorithms are key technical measures to improve AI reliability [105]. Tools that promote AI explainability can also help identify and correct errors [106].

Beyond technical solutions, socio-technical measures are essential. Human-in-the-loop systems allow experts to validate AI outputs, reducing the risk of misinformation [107]. Educating users on AI’s limitations and establishing clear regulatory frameworks are also needed for responsible AI deployment in healthcare [108].

Research should focus on addressing AI hallucinations, enhancing real-time verification, and understanding how users interact with AI-generated health information [109,110]. Regulatory frameworks must evolve to ensure the safe, ethical deployment of AI in health crises [111].

7.2. Dissemination of Outdated Information

During health crises, AI chatbots that rely on outdated data risk providing incorrect guidance, leading to public confusion and non-compliance with current health measures [112]. AI models, especially LLMs, often require continuous updates to reflect the latest health guidelines, yet maintaining this real-time accuracy is technically challenging and resource-intensive. While dynamic knowledge graphs and incremental learning techniques show promise for keeping AI systems updated [113,114], gaps remain in ensuring the real-time integration of new data during fast-changing situations like pandemics.

Technical measures, such as integrating real-time data from authoritative sources via APIs, are necessary to ensure the accuracy of chatbot responses [115]. A modular AI design allows for more flexible updates by separating the knowledge base from the language model, which can significantly reduce the need for full retraining [116]. Incremental learning techniques can also enhance the system’s adaptability, enabling it to learn from new information without large-scale retraining [117].
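The modular design described above can be sketched, under simplifying assumptions, as a knowledge store that is updated independently of the component that phrases the reply. In the sketch below, `GuidanceEntry`, `KnowledgeBase`, `answer`, the 30-day freshness window, and the sample guidance text are hypothetical placeholders; the `phrase` callable stands in for a language model, and in a real deployment `upsert` might be driven by an API feed from a health authority.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class GuidanceEntry:
    topic: str
    text: str
    source: str
    last_reviewed: date


class KnowledgeBase:
    """Vetted guidance, stored separately from the language model."""

    def __init__(self) -> None:
        self._entries: Dict[str, GuidanceEntry] = {}

    def upsert(self, entry: GuidanceEntry) -> None:
        # Replacing an entry updates the chatbot's answers without retraining.
        self._entries[entry.topic] = entry

    def get(self, topic: str) -> Optional[GuidanceEntry]:
        return self._entries.get(topic)


def answer(topic: str, kb: KnowledgeBase, phrase: Callable[[str], str],
           today: date, max_age_days: int = 30) -> str:
    """Build a reply from the knowledge base and flag stale guidance."""
    entry = kb.get(topic)
    if entry is None:
        return ("No vetted guidance is available on this topic; "
                "please consult your local health authority.")
    reply = phrase(entry.text)
    reply += f" (Source: {entry.source}, reviewed {entry.last_reviewed.isoformat()}.)"
    if today - entry.last_reviewed > timedelta(days=max_age_days):
        reply += " This guidance may be out of date."
    return reply


if __name__ == "__main__":
    kb = KnowledgeBase()
    # Placeholder content, not real public health guidance.
    kb.upsert(GuidanceEntry("isolation", "Placeholder isolation guidance.",
                            "Example Health Agency", date(2024, 1, 1)))
    print(answer("isolation", kb, lambda text: text, today=date(2024, 3, 1)))
```

Because the reply always carries its source and review date, users can see when guidance was last checked, which also supports the transparency measures discussed in this section.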

Socio-technical interventions, such as partnerships with health authorities like the WHO and CDC, ensure that AI systems receive timely updates [118]. User feedback systems allow individuals to report outdated information, helping improve chatbot accuracy and accountability [119]. Additionally, notifying users when significant updates occur fosters transparency and trust [120].

Key research areas include improving real-time update integration and maintaining data consistency in AI models [121]. Understanding how outdated information affects user behavior and trust is essential for developing design strategies that promote engagement with accurate, up-to-date data [122].

7.3. Amplification of Misinformation

Epidemics often trigger an “infodemic”—an overabundance of information, including rumors and misinformation [118]. AI chatbots, if not properly monitored, can unintentionally amplify false information due to their perceived authority, leading to harmful behaviors and undermining public health efforts [123]. Detecting misinformation is challenging because it evolves rapidly and is often context-dependent. While machine learning algorithms for misinformation detection exist, their integration into conversational AI systems remains underexplored [124].

To mitigate misinformation, it is necessary to integrate advanced NLP models capable of identifying false information in chatbot responses [125]. Chatbots should also cross-reference trusted databases from health organizations to ensure the reliability of the information provided [105]. Socio-technical measures, such as user reporting mechanisms and transparent disclaimers, foster a collaborative and trustworthy environment [126]. Partnering with fact-checking organizations can ensure that chatbots remain up-to-date with verified corrections [127].

Key research areas include developing real-time misinformation detection in AI chatbots, understanding the impact of misinformation on user behavior, and maintaining user trust through transparent content moderation policies [128]. Ethical considerations also require collaboration with policymakers to ensure responsible chatbot deployment, emphasizing transparency and accountability [111].

7.4. Ethical and Accountability Concerns

The deployment of AI chatbots in healthcare during crises raises ethical and accountability concerns, as users may over-rely on them for medical advice, potentially leading to self-diagnosis and the avoidance of professional consultation [129]. When chatbots provide incorrect or misleading information, determining liability is complex, as the advice comes from an AI system rather than a human. Current frameworks do not sufficiently address these issues, leaving gaps in legal and ethical responsibility [130].

While AI chatbots offer scalable solutions for disseminating health information, they also pose risks such as misdiagnosis and data privacy breaches. Users tend to trust these systems, which increases the likelihood of over-reliance on chatbot advice [131]. Balancing the benefits of widespread access to health information with the potential harms requires a comprehensive approach that includes technical and socio-technical measures.

On the technical side, ensuring transparency in chatbot decision making is fundamental for building trust. Chatbots should provide clear explanations for their recommendations, and safety protocols should be in place to alert users to seek professional help when necessary [132]. Protecting user privacy through compliance with regulations like GDPR is also essential to maintain confidentiality and secure sensitive data [133].

Socio-technical interventions are equally important. Ethical guidelines tailored to AI in healthcare must be integrated into chatbot design to ensure patient safety and fairness [134]. Educating users about the limitations of AI chatbots can prevent over-reliance and reinforce the importance of consulting healthcare professionals when needed [135]. Clear accountability frameworks should define the responsibilities of AI developers and healthcare providers to ensure ethical compliance and legal clarity [136].

Research is needed to develop frameworks for determining accountability when AI chatbots cause harm and to explore how users’ over-reliance on AI affects their health outcomes [137]. Additionally, protecting user privacy while enabling personalized healthcare remains a critical challenge. Research into advanced encryption and anonymization techniques is essential for ensuring data security while providing personalized health solutions [138].

7.5. Privacy and Data Security Risks

The deployment of AI chatbots in healthcare often requires collecting personal health information (PHI) to provide tailored advice, raising significant privacy concerns [139]. Ensuring compliance with regulations such as HIPAA and GDPR is essential, but many chatbot platforms may lack the necessary infrastructure, posing risks to user data security [133]. The challenge is especially relevant during epidemics when individuals may share more sensitive data, increasing the potential for breaches that can lead to identity theft, discrimination, and a loss of trust in healthcare systems [63].

One of the key challenges is ensuring that chatbots collect only the minimum amount of data necessary to provide accurate responses. The principle of data minimization should be a cornerstone of chatbot design, ensuring that sensitive information is not unnecessarily stored or shared [140]. Additionally, end-to-end encryption and secure authentication protocols are critical to safeguarding PHI [141]. These technical measures must be complemented by user education, ensuring that individuals understand how their data are being used and what protections are in place [118].

Another important consideration is the transparency of data usage. Users should be informed about the types of data being collected, the purposes for which it will be used, and the entities with which it may be shared. This transparency is crucial for building trust, particularly in communities that may be skeptical of AI technologies [142]. Furthermore, real-time feedback mechanisms should be implemented to allow users to report privacy concerns or data breaches, enabling the continuous improvement of chatbot security measures [143].

The issue of data ownership also warrants attention. In many cases, the data collected by chatbots are stored and analyzed by third-party providers, raising questions about who ultimately controls this information. Clear guidelines and agreements must be established to ensure that data ownership remains with the individual or the healthcare provider, rather than the technology vendor [63].

Finally, the long-term storage of health data poses additional risks. While historical data can be valuable for research and improving chatbot performance, it also increases the potential for misuse. Policies must be put in place to ensure that data are anonymized and securely stored, with strict access controls to prevent unauthorized use [144].

To safeguard PHI, techniques like end-to-end encryption, anonymization, and secure authentication protocols are critical [141]. Anonymization reduces the risk of data breaches by ensuring that compromised data cannot be linked to individuals, while multi-factor authentication helps secure access to the chatbot system [143]. Regular security audits should be conducted to identify and mitigate vulnerabilities in the chatbot infrastructure [144].

Socio-technical strategies are also vital. Compliance with regulations like HIPAA and GDPR must be demonstrated, and users should be informed about data collection practices and asked for informed consent [142]. Adopting the principle of data minimization (collecting only what is necessary for the chatbot’s function) can limit exposure to sensitive data [140]. Educating users on how their data are protected and their rights under privacy laws is key to fostering trust [118].

Research is needed to explore how AI chatbots can deliver personalized advice while maintaining compliance with privacy regulations. Incorporating privacy by design from the outset ensures that privacy considerations are integrated at every stage of chatbot development [142]. Additionally, advanced techniques like federated learning and real-time encryption should be investigated to balance data utility and privacy without compromising chatbot performance [63].
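A minimal sketch of how data minimization might look at the point where a chatbot stores a conversation record is given below. It assumes a hypothetical allow-list of fields (`ALLOWED_FIELDS`) and replaces the user identifier with a keyed hash using Python's standard `hmac` and `hashlib` modules. Keyed hashing is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping, so this is a stand-in for the stronger techniques discussed above and not a compliance recipe. The function names and the sample record are illustrative assumptions.

```python
import hashlib
import hmac
from typing import Any, Dict

# Hypothetical allow-list: only fields the chatbot needs to give advice.
ALLOWED_FIELDS = frozenset({"age_band", "symptoms", "region"})


def minimize(record: Dict[str, Any]) -> Dict[str, Any]:
    """Drop every field that is not explicitly allowed."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_storage(user_id: str, record: Dict[str, Any],
                        secret_key: bytes) -> Dict[str, Any]:
    """Minimize the record and attach a pseudonymous reference."""
    stored = minimize(record)
    stored["user_ref"] = pseudonymize(user_id, secret_key)
    return stored


if __name__ == "__main__":
    key = b"demo-key-not-for-production"  # a real key would come from a secrets manager
    raw = {
        "name": "Jane Doe",            # fictional sample data
        "email": "jane@example.org",
        "age_band": "30-39",
        "symptoms": ["fever", "cough"],
        "region": "north",
    }
    print(prepare_for_storage("user-123", raw, key))
```

An allow-list is used instead of a block-list so that any new field added upstream is dropped by default until someone decides it is needed.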

7.6. Ineffectiveness with Diverse Populations

AI chatbots often fail to meet the needs of diverse populations due to linguistic, cultural, and socioeconomic limitations. These systems struggle to interpret input from non-native speakers and lack cultural context, leading to miscommunication and misinformation, particularly among marginalized communities [145]. This shortfall exacerbates existing health disparities, as these groups face barriers in accessing reliable health information, increasing their risk during epidemics.

The biases in AI models, stemming from non-representative training data, limit their effectiveness in underrepresented languages and cultural contexts [146]. While multilingual models and culturally adaptive systems have been developed, significant gaps remain in scaling these solutions and ensuring they meet the needs of marginalized communities [147]. To address this, expanding training datasets to include more diverse languages and developing speech recognition systems that accommodate different dialects are critical steps [148].

In addition to technical innovations, socio-technical measures are essential. Community-centered design approaches, which involve local populations in chatbot development, ensure that the technology is culturally relevant and accessible [149]. Collaborations with health agencies and local organizations can help tailor information dissemination strategies to meet the specific needs of different communities [150]. Furthermore, digital literacy programs are vital for enabling marginalized populations to effectively engage with AI chatbots, improving access to critical health information.

Key research areas include enhancing AI chatbots for low-resource languages, reducing bias, and assessing the public health impacts of communication barriers created by ineffective AI systems. Participatory design methods, which involve end-users in chatbot development, are essential for increasing engagement and trust among marginalized communities [151].

7.7. Technical Limitations in Communication

AI chatbots and LLMs face significant technical challenges in effectively communicating with users during health crises, often struggling with ambiguous queries, slang, and emotional cues. The misinterpretation of symptoms or concerns can result in inappropriate or unsafe advice, delaying necessary medical intervention [152]. This problem is exacerbated by limitations in NLP, as chatbots often fail to comprehend nuanced language or emotional states [153].

While transformer-based models like BERT and GPT-3 have improved language understanding, they still struggle with context-specific slang and emotion recognition [154]. Emotion recognition, a key area for improvement in healthcare chatbots, remains underexplored, and integrating it into real-world applications is challenging [155]. Expanding chatbot training datasets to include slang, colloquial language, and regional dialects is an important step for improving communication with diverse populations [156].

To address these challenges, adopting advanced NLP techniques and emotion recognition technologies is essential. Context-aware algorithms and multimodal systems that combine text analysis with speech and emotion recognition offer promising solutions for improving chatbot communication [157]. Human–AI collaboration is equally important: systems should allow human experts to intervene when a chatbot detects ambiguity or emotional distress, ensuring appropriate responses [158,159].

User education is also important; informing users about chatbot limitations and encouraging clear communication can reduce misunderstandings [160]. Feedback mechanisms that enable users to report errors can lead to continuous improvements in chatbot performance [161].

Research is needed to explore how AI chatbots can better interpret ambiguous queries and context-specific language, especially in healthcare. Developing domain-specific language models and integrating emotion recognition technologies are critical steps toward improving chatbot effectiveness in high-stakes health scenarios [48]. Interdisciplinary approaches from linguistics, psychology, and human–computer interaction will be vital for enhancing chatbot communication [162].

7.8. Resource Allocation Concerns