1. Introduction

Skeleton extraction, as a core task of object shape analysis, aims to extract an abstract representation from image data or point cloud data that characterizes the object’s geometric structure and topological connectivity. This compact skeletal structure not only preserves the key topological features of the original object’s shape (such as branches, loop structures, or hierarchical relationships), but also provides an essential description for understanding the object’s geometric attributes, motion patterns, and functional characteristics, serving as a bridge connecting low-level geometric data and high-level semantic understanding. Currently, skeleton extraction technology has been widely applied in many fields, such as shape recognition, 3D reconstruction [

1,

2,

3], segmentation [

4,

5], shape matching [

6,

7], pose estimation [

8,

9,

10], action recognition [

11], and agricultural cultivation management [

12].

In recent decades, researchers have conducted extensive research on object skeleton extraction [

13,

14,

15,

16,

17,

18]. Due to the lack of depth information, 2D images struggle to effectively describe object occlusion and overall structure [

19], limiting their application in complex scenes. With the development of 3D sensing technology, research has gradually shifted towards information-richer 3D point cloud data [

20,

21,

22]. Furthermore, to achieve complete skeleton modeling, how to reasonably topologically connect the extracted skeleton points is also one of the key issues, and related research has also been conducted on the effective connection of skeleton points [

23,

24,

25]. This paper will summarize the various aforementioned methods along with their advantages and limitations.

Skeleton extraction algorithms based on 2D images: In 2D images, the skeleton is represented as the object’s centerline extracted through thinning operations. Currently, skeleton thinning algorithms are mainly divided into two categories [

13,

14,

15]: iterative (pixel-based) and non-iterative (non-pixel-based). Iterative methods use pixels as the basic unit and extract the skeleton by progressively iterating to remove non-skeleton pixels from the image. This type of method has the advantages of algorithm simplicity and ease of implementation, but it heavily relies on the binarization quality of the image and is susceptible to noise. Furthermore, multiple iterative operations may also incur significant computational costs. Non-iterative methods are typically based on geometric or mathematical transformations, calculating global image information to directly generate the skeleton from a global perspective. This type of method mainly includes distance transform, curvature calculation, and watershed methods. These methods greatly reduce computational overhead, but they are prone to producing pseudo-skeletons for images with complex structures or multi-connected regions. Although 2D image data has been widely used in skeleton extraction tasks, its lack of depth information prevents it from reflecting the object’s 3D morphology in space. This easily leads to problems such as skeleton positioning deviation and loss of structural information, making it difficult to meet the demand for high-precision spatial structure reconstruction.

With the development of computer vision and 3D sensing and scanning technology, the acquisition of point clouds has become more convenient than ever before. 3D point clouds not only provide richer spatial information, but also faithfully represent the geometric morphology and detailed structure of objects, providing more detailed data support for skeleton extraction in complex scenes. Therefore, many researchers have applied 3D point cloud technology to skeleton extraction tasks. Performing object structure analysis in 3D space has higher robustness and accuracy. Currently, skeleton extraction algorithms based on 3D point clouds can be roughly divided into the following three categories:

Traditional Geometry-Based Skeleton Extraction Algorithms: This category of algorithms primarily relies on information such as geometric proximity, topological connectivity, and curvature to extract compact representations of target shapes. The core objective of these methods is to reduce redundancy while preserving the intrinsic structure of the original shape as much as possible, thereby enhancing the efficiency of shape analysis and processing. Among them, Cao et al. [

26] proposed a Laplacian-based contraction algorithm for skeleton extraction. By iteratively optimizing Laplacian smoothing constraints, it causes the point cloud to gradually contract inward while maintaining the overall topological structure, generating the object’s skeleton representation after multiple iterations. As this method requires constructing adjacency relationships using k-nearest neighbors (KNN) and a Laplacian matrix, it demands that the input point cloud model possesses sufficient point density and is noise-free. Consequently, Huang et al. [

27] proposed an L1-medial skeleton extraction algorithm for extracting and smoothing skeletons from point cloud models. This method can generate object skeletons from incomplete data through distance field optimization and global constraints. However, this approach only achieves favorable results when the object’s point cloud shape is simple, and the construction of the distance field requires voxelization, where the voxel resolution parameter directly impacts the result quality. Tagliasacchi et al. [

28] introduced a generalized Rotational Symmetry Axis (ROSA) method, which can effectively extract skeletons from incomplete point cloud models. Nonetheless, this method assumes the object shape is cylindrical and requires accurate point normals, while also necessitating preprocessing for noise and outliers, lacking generality for non-cylindrical object shapes. Jiang et al. [

29] proposed a graph-contraction-based skeleton extraction algorithm. This method treats the tree skeleton as a combination of edge and node relationships and defines a corresponding energy function. This function consists of a contraction term and a topology-preserving term that prevents the point cloud from contracting in local principal directions. The contraction term constraints the minimization of the sum of graph geodesic distances to the k-nearest geodesic neighbors. It then extracts the contracted tree skeleton points via downsampling and performs topological connections. However, this method involves high computational complexity as it requires recalculating graph geodesics at each iteration.

Although traditional geometric methods have achieved considerable success in skeleton extraction tasks at the current stage, in practical applications, they are sensitive to noise and sampling density, entail significant computational overhead, and require fine-tuning of parameters for different objects to obtain better skeleton extraction results. These limitations make them difficult to apply in complex 3D scenes. In recent years, with the development of deep learning technology, deep learning-based skeleton extraction methods have gradually become a research hotspot. Such methods utilize data-driven approaches for feature learning, extract structural information from point clouds, avoid tedious parameter adjustment, and possess stronger adaptability and generalization capabilities.

Methods based on supervised deep learning: Zhou et al. [

30] used the PointNet network combined with a ridge regression loss to extract features from human body point cloud data at the point level, achieving human skeleton point extraction under supervision. Qin et al. [

31] proposed a PointSkelCNN network based on an x-transformation operator, which converts 3D human skeleton extraction into offset vector regression and human body segmentation, and integrates a disambiguation strategy to improve the robustness of joint point regression, finally extracting the human skeleton. Vasileiadis et al. [

32] voxelized the human point cloud, extracted features through a 3DCNN network, and then compared them with 15 manually calibrated joint parts, outputting a 3D maximum likelihood map for each joint to obtain key points to complete the 3D pose estimation task. Arindam et al. [

33] proposed an Mmpose-NLP network, which extracts features from simulated radar point clouds between two consecutive frames after voxelization and aggregation using seq2seq, and further uses sparse categorical cross-entropy to predict the final skeleton points. However, the aforementioned skeleton extraction models mostly rely on keypoint labels in the point cloud data to supervise the model’s training and learning, assisting the model in completing the task of extracting skeleton points from the point cloud. However, annotating 3D data, whether choosing point-wise annotation or keypoint annotation, involves enormous workload and cost. In addition, 3D annotation lacks unified quantitative metric supervision. The definition of point-wise scope and keypoint by annotators is based on experience, which leads to strong subjectivity in the annotation results and an unguaranteed annotation quality, consequently affecting the model’s performance in subsequent training. Therefore, some researchers have also carried out a series of research studies on the problem of how to extract object skeleton points in unsupervised networks.

Methods based on unsupervised deep learning: Jakab et al. [

34] proposed a keypoint extraction network, KeypointDeformer, with a Siamese network as the main body. By introducing a farthest point keypoint regularization loss function, it treats the initialized farthest points as a noise prior on the keypoint locations. As the model trains, it minimizes the Chamfer distance between the predicted keypoint and the sampled points, enabling the predicted keypoint to be evenly distributed on the object’s surface. Shi et al. proposed an unsupervised aligned keypoint detector [

35] based on an autoencoder architecture, which predicts point-wise scores through the encoder and performs weighted averaging to obtain keypoint, and further proposed a Composite Chamfer Distance (CCD) loss function applied to constrain the generation locations of the keypoint. Lin et al. [

36] used PointNet as the basic architecture and proposed a joint loss function based on geometric transformations, achieving the extraction of object skeleton points. However, in the aforementioned literature, the feature extraction part mostly uses PointNet. This network aggressively max-pools all point feature maps into a single global feature, causing the local correlations between points not to be learned by the network, resulting in the loss of local features, and leading to the problem of skeleton point extraction deviation.

Skeleton point connection methods: In addition, most current deep learning methods mainly focus on the extraction of skeleton points. Regarding the problem of how to topologically connect the extracted skeleton points to form a skeleton, most rely on manual connection. Such an operation is not conducive to the automated processing and analysis of high-throughput data. Therefore, some researchers have conducted related research on the problem of achieving end-to-end automated connection of skeletons. Liu et al. [

37] proposed a network with FCGF as the backbone, converting the object skeleton extraction problem into a problem of corner localization and corner connection. Li et al. [

38] proposed a deep neural network, WireframeNet, that converts point clouds into wireframes. The network predicts a set of edge points from the original point cloud by learning geometric transformations, and finally analyzes the connectivity between the edge points to construct a complete wireframe structure. Luo et al. based on the [

39] model encoded sufficient raw point cloud contour information to predict the existence probability of potential connection points, and queried the probability of connection between two connection points. In the aforementioned research studies, the problem of connecting the points and edges of the skeleton is simplified into the problem of connecting the edge points and wireframes of polyhedra, and the skeleton structure extracted by these algorithms resembles a mesh grid shape, and does not possess the original topological structure of the object.

In summary, existing skeleton extraction methods exhibit significant limitations at various stages. Two-dimensional image-based methods lack depth information, making it difficult to represent complete spatial structures. Consequently, current research has gradually shifted towards 3D point cloud data; however, traditional 3D geometric methods rely on complex parameter settings and are highly sensitive to noise and sampling density. Supervised deep learning models have improved robustness to a certain extent, but they still require extensive manual annotation, incurring high costs and limited generalization capabilities. While unsupervised methods address the issue of high annotation costs, they may suffer from feature loss or skeleton point deviation during local structure modeling.

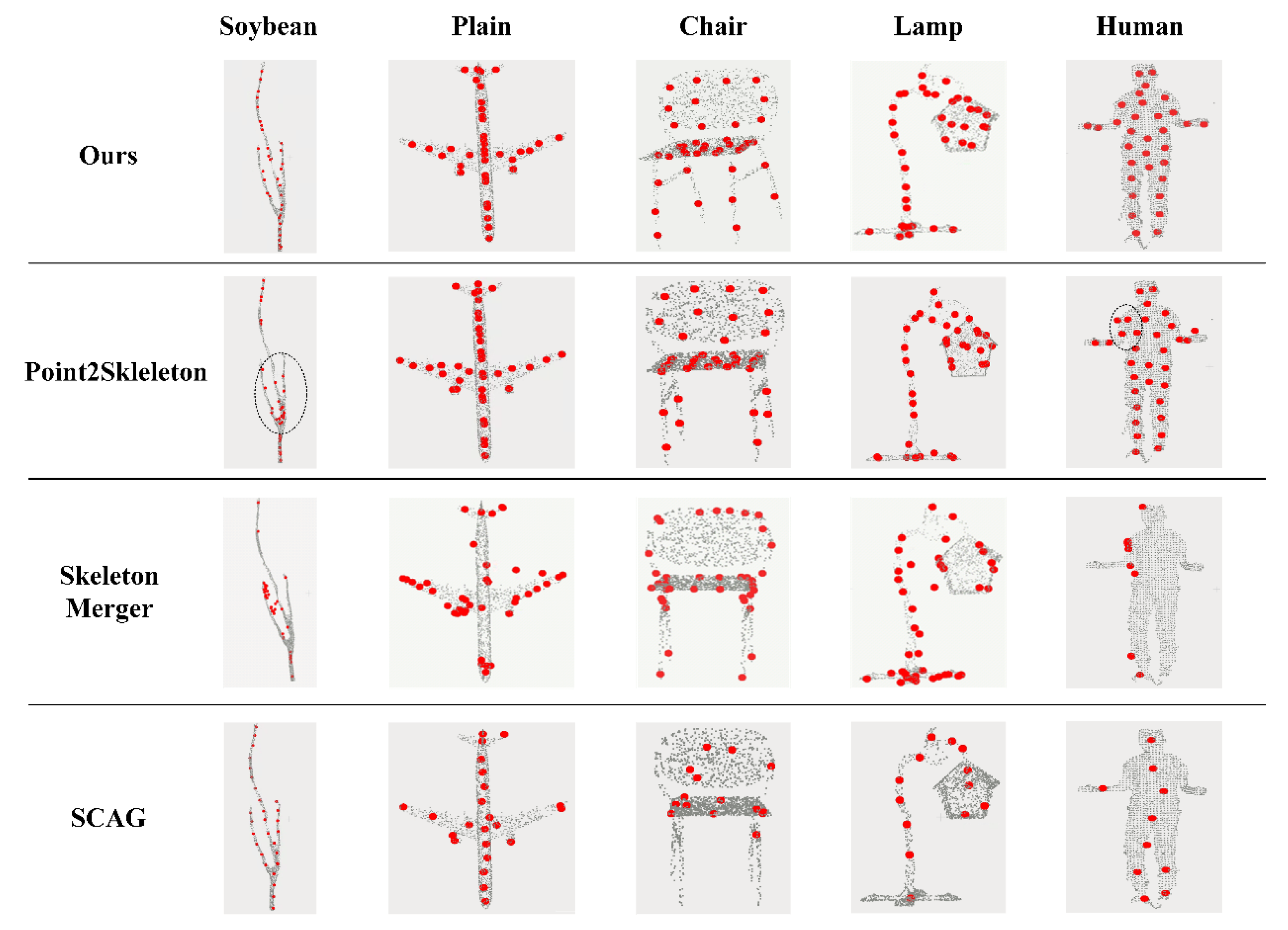

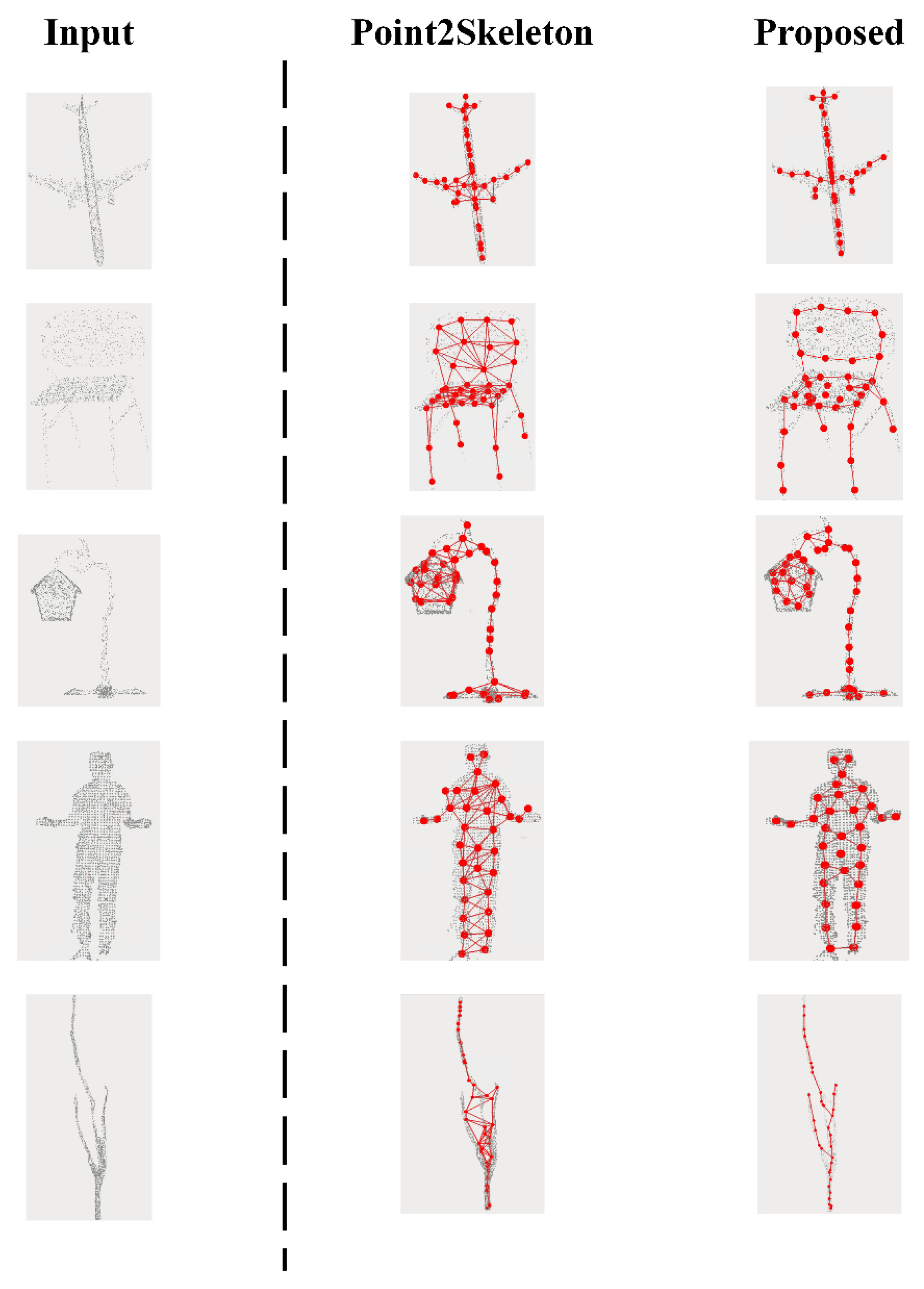

Addressing the common issues of skeleton point deviation and insufficient local structure representation in unsupervised methods, the Graph-SENet proposed in this paper introduces dynamic graph convolution to replace global pooling structures. This approach enables more effective modeling of local geometric features, demonstrating superior capability and specificity in feature modeling and topological recovery. regarding skeleton topological connection, many deep learning methods still rely on manual connection. Meanwhile, existing automated connection methods often simplify the skeleton connection problem into edge point or wireframe prediction. The resulting structures resemble polyhedral meshes rather than the original tree-like topological structure of the object, thus failing to meet the requirements for fine-grained structure analysis. Our method integrates a graph autoencoder with a direction vector-based cosine similarity thresholding mechanism to achieve the automatic prediction of connection relationships between skeleton points, thereby extracting high-quality tree-like semantic skeletons.

Therefore, the main innovations of the method proposed in this paper include the following points:

Aiming at the problems that deep learning methods for extracting skeleton points from point clouds require extensive annotated information, and that feature extraction suffers from information loss and data redundancy. This paper proposes a novel unsupervised skeleton point extraction network, Graph-SENet. By introducing a DGCNN into the encoder part of feature extraction and combining it with a multi-layer feature fusion strategy, the network’s ability to extract local neighborhood features from point cloud data is enhanced; and further proposes a novel point-to-point multi-dimensional feature unsupervised loss function to strengthen the network’s perceptual ability for skeleton point location extraction, achieving uniform extraction of object skeleton points under unsupervised conditions, significantly reducing the cost of manual annotation, effectively avoiding the impact of subjective manual annotation on model performance, and ensuring the objective consistency of the skeleton point extraction results.

Aiming at the problem that after current deep learning networks extract skeleton points from point clouds, they require manual topological connection to form a skeleton, making it difficult to achieve full end-to-end learning. This paper proposes a connection prediction network centered on a GAE and fused with the skeleton point extraction network, combining a prior connection strategy and a topological connection optimization strategy, to learn and optimize the potential skeleton connection relationships in the point cloud, achieving full process end-to-end learning from point cloud to skeleton, reducing the manual cost of skeleton point post-processing, and significantly improving the efficiency of skeleton extraction.

2. Materials and Methods

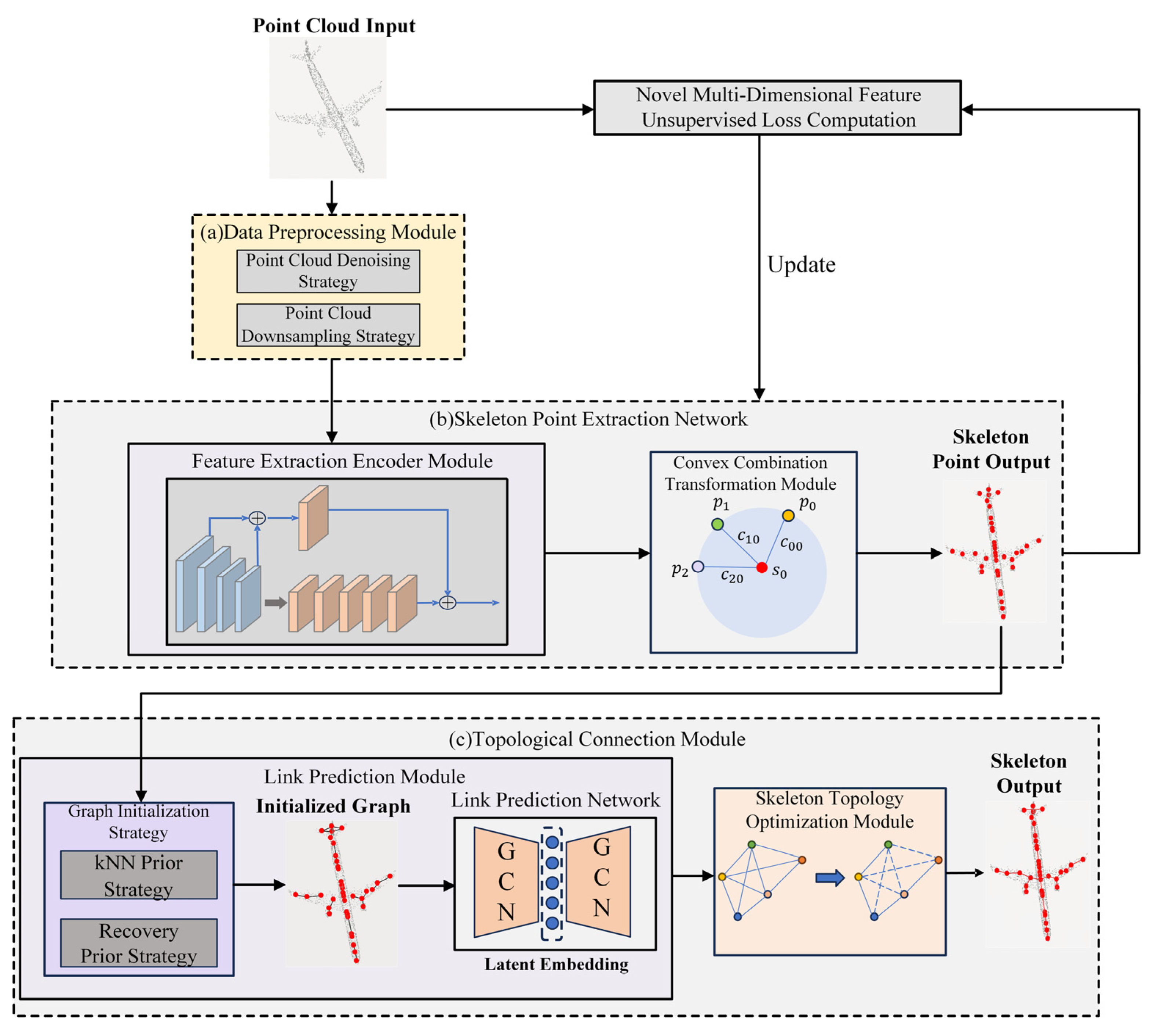

This paper starts from the spatial structural characteristics of 3D point clouds. Due to the fact that point clouds naturally possess a non-Euclidean data distribution form and geometric properties of local irregularity, this makes it difficult to directly apply traditional convolutional neural networks during modeling. In contrast, Graph Neural Network (GNN), by constructing graph structures, can flexibly represent the unstructured adjacency relationships in point clouds and effectively capture the local geometric connections between points during feature propagation; therefore, GNNs have become an ideal backbone choice for processing point cloud data. Based on the above analysis, this paper designs an unsupervised graph neural network model, Graph-SENet, which can accurately localize skeleton points from point cloud data and extract the object’s skeletal topological structure. The overall architecture for automated skeleton extraction using the method proposed in this paper is shown in

Figure 1. mainly including three modules: the point cloud preprocessing module, the skeleton point extraction network, and the topological connection module.

Data preprocessing module: This module consists of a point cloud data cleaning strategy and a data downsampling strategy. The function of point cloud data cleaning is to remove outliers, duplicate points, and background noise interference from the original point cloud, improve the point cloud quality, and ensure the effectiveness and robustness of subsequent network input; downsampling sparsifies the point cloud through methods such as random sampling to reduce computational complexity, while preserving the overall structural features of the object, ensuring the geometric consistency of subsequent skeleton point extraction.

Skeleton point extraction network: This network is composed of a feature extraction encoder module and a convex combination transformation module. The feature extraction encoder module mainly includes dynamic graph convolutional layers and multilayer perceptron layers, wherein, the dynamic graph convolutional layer reconstructs the graph structure in each forward propagation process based on the extracted point features; through the construction of dynamic graph neighborhoods, it can capture richer geometric relationships and structural features, providing more effective features for subsequent skeleton point classification; the multilayer perceptron is an artificial multi-convolutional layer, which is mainly responsible for further performing non-linear mapping based on the local features extracted by the graph convolution. The convex combination transformation module utilizes the potential sparse structural features in the point cloud to find optimal linear combination centers in the local neighborhoods of the original point cloud, enabling the network to focus on keypoint with more distinct features. Finally, the network achieves regression prediction from the preprocessed point cloud to the skeleton points through the constraint of the multi-dimensional unsupervised loss function.

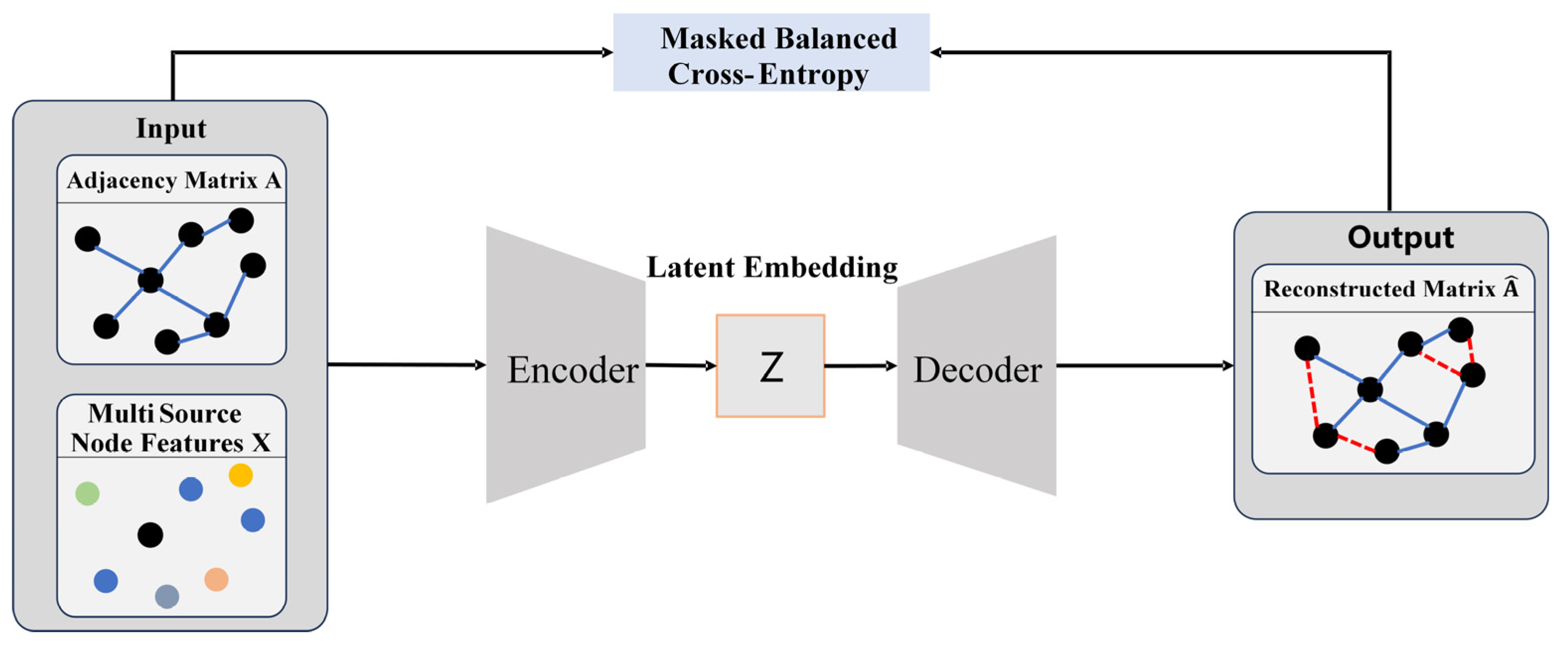

Topological connection module: This module consists of a connection prediction module and a skeleton topological connection optimization module. The connection prediction module is mainly composed of a graph initialization strategy and a connection prediction network. The graph initialization strategy constructs an initial adjacency graph through the K-nearest neighbors algorithm and the mapping between skeleton points and the clean point cloud, forming a basic graph structure representation to provide an initial input graph for the graph autoencoder. The connection prediction network, based on a graph autoencoder architecture, extracts the latent embedding (Latent Embedding) of skeleton points through the encoder, and uses a decoder to predict whether a connection relationship exists between any pair of points, thereby generating a candidate skeleton connection graph. The skeleton topological connection structure optimization module, on the basis of the preliminary connection graph, further introduces geometric priors for structural optimization, removing pseudo-connections, repairing missing connections, and improving the accuracy and coherence of the skeleton topological structure. Through the aforementioned joint network training, the algorithm proposed in this paper can achieve end-to-end accurate extraction of the object skeleton.

2.1. Data Preprocessing

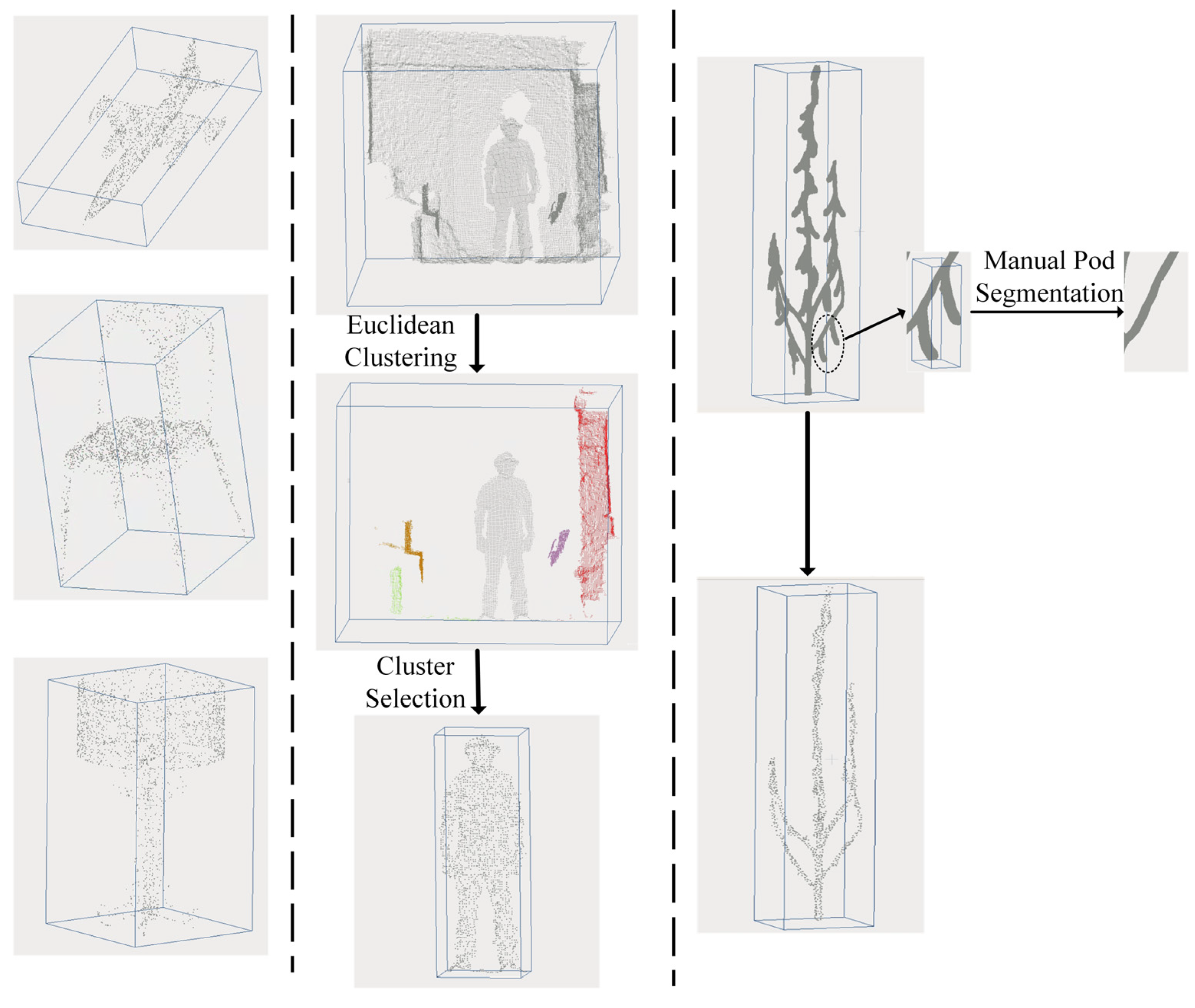

The datasets used in this experiment mainly include the following three, and

Figure 2 shows samples of the point cloud datasets from each dataset and their corresponding processing:

The general object dataset: ShapeNet [

40]. This is a large-scale annotated 3D point cloud dataset widely used in the fields of 3D vision and geometric deep learning. This dataset contains over 51,300 high-quality CAD models that have been manually created and cleaned. Specifically, following the method of et al. [

41], this paper selected 3 representative categories for research: chairs, airplanes, and table lamps. Since the 3D CAD objects were originally stored as meshes, we resampled the meshes into point clouds and uniformly downsampled them to 2000 points via random downsampling, covering a total of 8600 point clouds, with a training set/test set split ratio of 8:2.

The human body dataset: ITOP [

42]. This dataset is a 3D human pose estimation benchmark dataset constructed based on a ToF (Time-of-Flight) depth sensor, with its core data collected by a Microsoft Kinect v2 device. It specifically includes 15 different actions from 20 individuals. The depth sensor provides two depth image data acquisition viewpoints. One corresponds to the top viewpoint, while the other corresponds to the front viewpoint. To better extract the human limb skeleton, we used 8000 point cloud data generated from the front viewpoint acquisition as the research object, with the training set/test set split ratio also being 8:2. After performing point cloud reconstruction on the depth images collected by the depth sensor, we found that in addition to the human body, the point cloud data also contained a large amount of foreground and background noise point clouds. Therefore, this paper utilized a method based on threshold filtering and Euclidean clustering for point cloud data cleaning [

30] to segment the human body from the complex background noise, and uniformly downsampled the human body point clouds to 2000 points.

The soybean dataset: Soybean-MVS [

43]. This dataset consists of 102 3D soybean plant models reconstructed using Multi-View Stereo (MVS) technology. The dataset spans the entire soybean growth period, including five varieties, and color data is included in the point clouds (XYZRGB format). Although this dataset provides branch segmentation annotation data for the soybean dataset, for soybeans in the mature stage, researchers are more concerned with plant architecture parameters rather than segmentation parameters. However, the point clouds of soybean plants in the mature stage in this dataset have a large number of pod point clouds attached to the branches. For the skeleton extraction network, these pod point clouds introduce unnecessary noise effects. Therefore, we used CloudCompare [

44] software to manually segment the pods from the point clouds of 25 mature soybean plants to obtain clean soybean branch point cloud data, and uniformly downsampled them to 2000 points, where the training set/test set split ratio was also 8:2.

To address the issue of model robustness, we applied random downsampling to the point clouds across all datasets. The randomness of the sampling points simulates, to a certain extent, the incompleteness and non-uniformity inherent in real-world point cloud acquisition. This enables the model to learn the distribution characteristics of sparse and non-uniformly dense point clouds, thereby achieving robustness in extracting object skeletons from low-density or incomplete data.

2.2. Skeleton Point Extraction Network

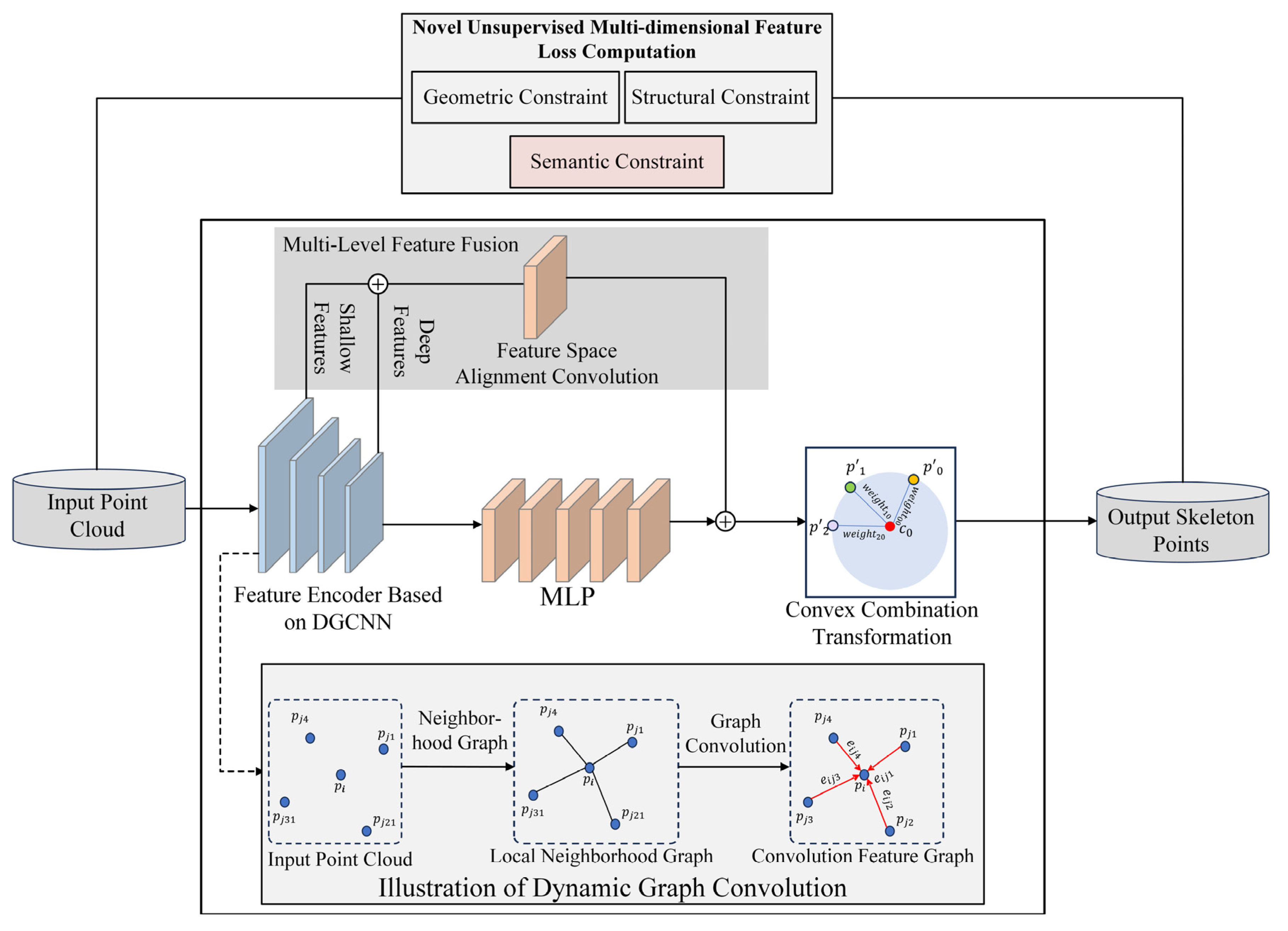

The training flowchart of the skeleton point extraction network is shown in

Figure 3. Among them, in the feature extraction encoder module part, this paper proposes a dynamic graph convolutional layer with stronger feature capture capabilities to adaptively and dynamically construct point cloud local geometric relationship features (introduced in

Section 2.2.1), and in the loss function part, a novel multi-dimensional feature unsupervised loss function is constructed to apply unsupervised constraints on the skeleton point extraction results from multiple dimensions such as geometric position, semantic features, and local structure, guiding the model to learn the latent representation of the skeleton structure from the original point cloud more effectively, constraining the refined object skeleton point extraction, and providing more accurate prior knowledge for subsequent skeleton connection (introduced in

Section 2.2.2). The improvement work for each part is introduced in detail below.

2.2.1. Dynamic Graph Convolution Based Feature Extraction Module

In traditional point cloud feature extractors, most deep learning networks employ PointNet for feature extraction. PointNet aggregates features based on global symmetric functions; although it has an advantage in maintaining input invariance, it results in a certain degree of feature loss. Furthermore, its ability to model the local structure of point clouds is weak, making it difficult to effectively capture complex local geometric information. Particularly in non-uniformly sampled or noisy actual point cloud scenarios, this often leads to a decrease in skeleton point extraction accuracy. Therefore, given that point clouds are essentially unstructured discrete data and lack explicit topological relationships, graph structures can explicitly model the adjacency and geometric connections between points, thereby endowing the discrete point cloud with a structured representation. This paper introduces a dynamic graph convolutional network (DGCNN) into the skeleton point extraction network as the backbone structure (as shown in

Figure 3), to enhance the model’s feature extraction capability.

In the DGCNN encoder, the input F-dimensional point cloud set is assumed to be denoted as

, where

denotes the

point of the point cloud, and

N is the total number of points in the input point cloud, and the simplest case is considered, where each point contains only the 3D coordinates

. When performing graph convolution, a local neighborhood map needs to be constructed for the point cloud (as shown in the “Illustration of Dynamic Graph Convolution” in

Figure 3). Therefore, a directed graph

is further assumed to represent the local structure of the point cloud, where

,

denotes vertices and edges, respectively. In this paper, the initial point cloud

is first constructed as a k-nearest neighbor graph

using the k-nearest neighbor algorithm, and the edge features

are extracted by the graph convolution operation, and finally the vertex outputs

are obtained by applying the channel symmetric aggregation operation on the edge features associated with all the edges emanating from each vertex as shown in Equations (1) and (2).

where the edge feature function in Equation (1) is an asymmetric edge function, which combines the global shape structure (captured by the coordinates of the patch center to

) and the local neighborhood information (captured by

), and

denotes the convolutional operation of the graph neural network; and

denotes as the output of the encoder’s convolutional feature maps after aggregating the edge features.

Furthermore, to further enhance the network’s expression capability for point cloud features, this paper introduces a multi-layer feature fusion strategy into the skeleton point extraction network, to fully integrate the local and global information extracted by different deep network layers. Specifically, this study selects the output features of the n-th layer and the (n + 4)-th layer of the dynamic graph convolution modules in the feature encoder (in this study, n is taken as 1), which, respectively, represent the shallow local structural information and the deep abstract semantic information of the point cloud. And after concatenating these two layers of features along the channel dimension, convolutional operations are used to compress and fuse the feature expression dimensions, enabling them to possess stronger representation capability and semantic consistency. Through this structured multi-layer feature fusion approach, the model is enabled to simultaneously capture the detailed features and global morphological features of the point cloud, significantly enhancing the expression capability and generalization ability of the skeleton point extraction network for complex structures. Especially when facing objects with large inter-class deformation or significant local structural changes, it can still maintain high robustness and prediction accuracy.

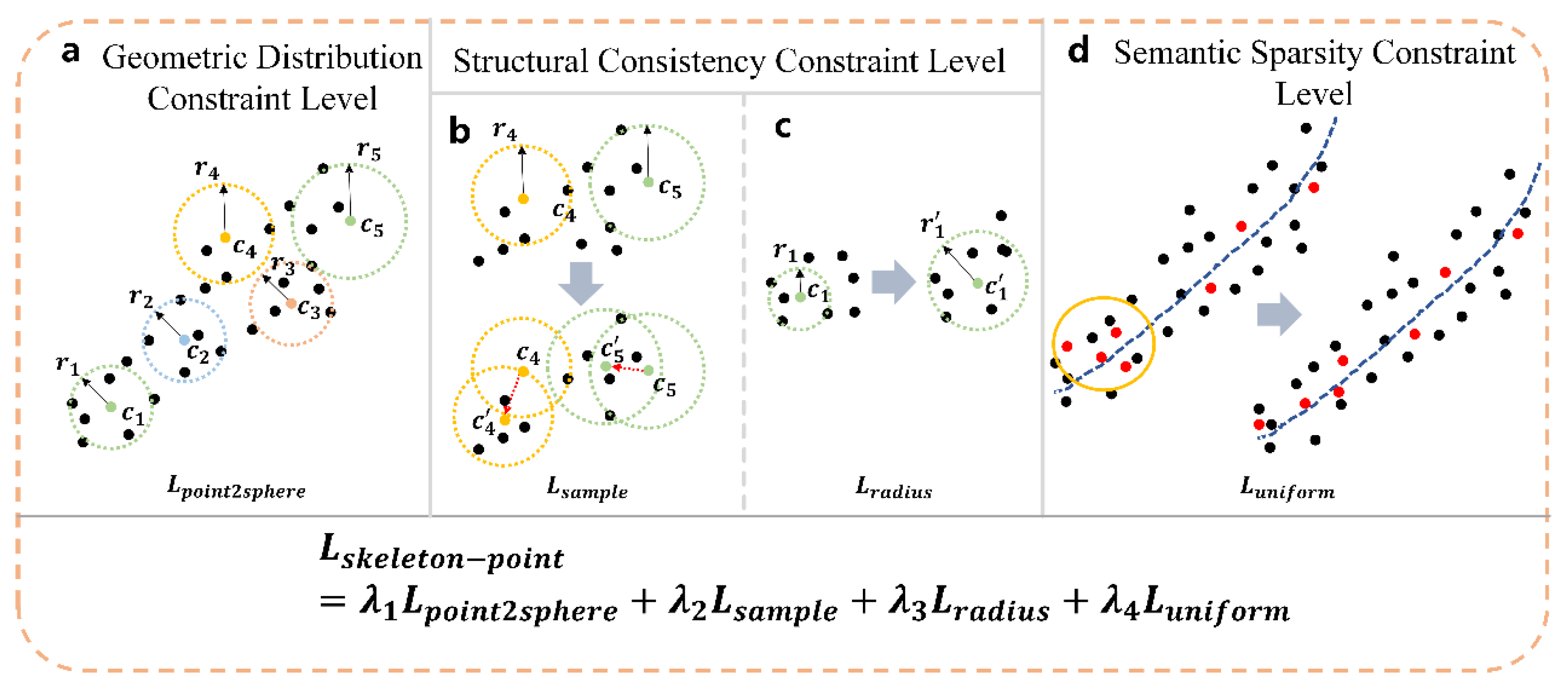

2.2.2. Unsupervised Loss Function Optimization Based on Multi-Dimensional Features

In the task of unsupervised skeleton point extraction, how to guide the network to accurately learn the skeletal structure within the point cloud, given the absence of manually annotated information, is the core difficulty in loss function design. Existing research [

36] constructs unsupervised loss functions from two aspects: geometric distribution constraint and structural consistency constraint. The former maintains geometric accuracy by measuring the degree of spatial fitness between the skeleton points and the original point cloud, while the latter enhances the coherence and structural stability of the skeleton points by introducing sampling consistency and radius regularization terms. Although these two types of constraints have improved the accuracy of skeleton extraction to a certain extent, problems such as dense distribution of skeleton points, structural redundancy, or excessive aggregation in regions often occur in actual skeleton point data, leading to insufficient overall discriminability and expressive clarity of the skeleton representation. To further address the aforementioned issues, this paper, based on the original loss function design, introduces a third key constraint dimension—semantic sparsity constraint—and further proposes an optimized unsupervised loss function with multi-dimensional feature fusion. This constraint, by constructing a uniformity loss term, performs global regulation on the spatial distribution of skeleton points, encouraging the skeleton points to exhibit a sparser, more uniform, and more discriminative distribution pattern in terms of structural semantics, thereby significantly enhancing the semantic clarity and structural expression capability of the skeleton point extraction.

Next, this paper will, starting from the loss terms designed at each level, sequentially introduce their calculation methods, constraint objectives, and their roles in the overall optimization process.

Given an input point cloud

, the goal is to predict a set of

skeleton points. To better cover the object’s shape, the network first predicts a set of skeleton balls

, whose centers are treated as candidate skeleton points

. Inspired by mathematical linear programming [

45], we propose to use convex combinations to describe the relationship between the input point cloud and the predicted skeleton points:

where

denotes the predicted skeleton points, and

denotes the predicted weights.

Furthermore, this paper represents the radii of all skeleton sphere as a linear combination of the nearest distances from all skeleton points

to the input points

, and

is denoted as the nearest distance from the input point cloud

to the output skeleton points

. The specific calculation formula is as follows:

Therefore, based on the above definitions of skeleton balls and skeleton points, the objective of this paper is transformed into designing a set of loss functions to constrain the network training to obtain the predicted convex combination weights .

First is the loss at the level of the geometric distribution constraint: the point2sphere loss (as shown in

Figure 4a). The procedure for predicting skeleton points in this paper is as follows: first, a set of skeleton spheres that can characterize and cover the object’s shape is predicted from the point cloud, and then the final skeleton points are estimated based on the skeleton sphere centers. Therefore, this paper first measures the skeleton sphere reconstruction error by explicitly optimizing the coordinates of the skeleton points and their radii:

where

denotes the predicted skeleton point,

denotes the input point,

denotes the radius of the skeleton point

, and

denotes the skeleton point closest to the input point

. The first term constrains each input point to lie on the surface of its nearest skeleton sphere, and the second term encourages each skeleton sphere to touch its nearest input point.

Then there is the loss at the level of the structural consistency constraint: the sampling consistency loss (as shown in

Figure 4b). Although the loss function

alone can make the structure of the skeleton points cover the input point cloud, this does not mean that the generated skeleton points can geometrically restore the original surface shape, i.e., a situation exists where the skeleton point positions and radii might allow the spheres to cover the point cloud, but their skeleton points exhibit large differences on the geometric surface. Therefore, this paper samples on the surface of each skeleton sphere to generate sampling points

used to represent the “approximate surface” of the object, and measures the Chamfer distance (CD) between the sampling points

and the input points

, to constrain the surface generated by the skeleton to also be consistent with the details of the point cloud geometry:

where sampling points

are generated by intersecting the sphere surface with 8 uniformly distributed directional vectors in 3D Cartesian coordinates. The bidirectional Chamfer Distance is used for better geometric alignment between sampled and original surface points.

Radius regularization loss (as shown in

Figure 4c), in practical applications, this paper finds that the inscribed spheres of 3D shapes are sensitive to surface noise, that is, a sphere can be inscribed in the space between several noise points, thereby producing a tiny radius. To focus on the underlying geometry, in order to achieve better abstraction and avoid instability, therefore, we use a radius regularization loss to encourage larger radii, reducing the influence of fine noise point interference in the point cloud:

Through the joint constraint of the above loss functions, the network can estimate the positions of the skeleton points from the original point cloud. However, the generated skeleton points will exhibit the phenomenon of regional aggregation, leading to unevenly generated skeleton points, which affects the subsequent skeleton connection effect. This paper adds a uniformity loss based on the semantic sparsity constraint (as shown in

Figure 4d) to the original loss function, in order to constrain the uniformity of the generated skeleton points.

First, based on the extracted skeleton points

c and the corresponding sphere radii

r, we iterate through each skeleton point and determine if redundancy exists in its surroundings. If redundancy exists, we calculate the number of skeleton points within its redundant range, and calculate the corresponding uniformity loss. Specifically, for each skeleton point

, we search for other skeleton points within its neighborhood sphere with radius

. If

is satisfied, the redundancy within the neighborhood is incremented by one. When the redundancy exceeds a set threshold, the skeleton point area within that region is recorded as redundant. Therefore, this study defines the redundant neighbor set as:

where, when

(which is an empirically set threshold), the region of this point is considered to have excessive density and redundancy. Next, the uniformity loss needs to be calculated for this region to optimize the skeleton point position distribution.

where

denotes the set of generated skeleton points;

denotes the Euclidean distance between the

-th neighboring skeleton points;

denotes the average of all neighboring distances, and

denotes the uniformity loss calculation result.

In summary, the total loss function for the skeleton point extraction network part,

, can be expressed as:

where

,

,

,

respectively represent the weighting coefficients for the

,

,

,

loss functions during the training process. In this study, we empirically set the weight ratios to

,

,

,

.

2.3. Topology Connection Module

In this part, the objective of this section is to predict connections for the extracted skeleton points to form a skeleton with a specific topological structure. Traditional skeleton connection methods rely on Euclidean distance or heuristic rules, have high algorithmic complexity, and perform poorly when handling complex topologies (such as loops, bifurcations, etc.). Therefore, in this section, this paper proposes a graph autoencoder connection prediction network based on a connection optimization strategy. This network utilizes an encoder–decoder architecture to further predict missing links by analyzing the correlations of skeleton points in the latent space. By combining reconstruction loss and an optimized connection strategy for the adjacency matrix, it optimizes the adjacency matrix, enhancing its ability to predict the connection relationships between skeleton points.

When using a graph autoencoder-based network to perform connection prediction on skeleton points, it is necessary to first construct a graph structure of the local neighborhood, to be used by the network to extract their high-dimensional latent space feature representations and to mine the inherent structures and mapping relationships within the data. Therefore, this paper adopts the following two graph neighborhood construction strategies for graph initialization.

k-nearest neighbors strategy, after extracting the skeleton points using the skeleton point extraction network, apply the k-nearest neighbors algorithm to the skeleton points, compute the k nearest neighboring nodes, and set the corresponding edges in the adjacency matrix to 1 (indicating a connection). Among them, this paper sets k = 1, indicating that each node is only connected to its nearest node.

Recovery priority strategy, given an input point and its two nearest skeleton points , , then a skeleton edge should exist connecting them. This is because in 3D point cloud or contour data, skeleton points are usually the center points of the object’s shape. An input point is usually located on the object’s surface, and its two nearest skeleton points are likely located at the two ends of the object’s internal “skeleton segment” corresponding to that point. Therefore, if is simultaneously close to and , this implies that and are also in the same local region of the object, and they should be connected in the true skeleton.

Based on the two graph initialization strategies above, this paper obtains an initial graph possessing partial connection links, the subsequent task is determined to be predicting the links that may exist in this graph, and making the overall links conform to the object’s shape structure to obtain the final object skeleton.

2.3.1. Graph Autoencoder-Based Connection Prediction

After performing the graph neighborhood construction operation on the extracted skeleton points, we obtain a series of discrete, undirected, and unweighted initial graphs

, where

denotes the

M skeleton points output by the skeleton point extraction network, and

denotes the set of edges. Furthermore, the graph

can be encoded using an adjacency matrix

to represent the edge connectivity in the graph structure. The network architecture of the graph autoencoder is shown in

Figure 5. In addition to the adjacency matrix

, the input data also requires node feature information. To effectively utilize the geometric correlation between the skeleton and the input point cloud points, this paper adopts a multi-source fusion approach to establish the node features, that is, the node features

are jointly represented by the skeleton point coordinates

, the skeleton sphere radius

, and the feature output

from the dynamic graph convolution, denoted as

.

The encoder part of the GAE is composed of a residual graph convolutional structure, mainly responsible for compressing node features into the latent embedding

. The decoder part of the GAE employs a vector inner product to measure the correlation of latent features to reconstruct a new adjacency matrix

, as shown in Equation (13):

When training the GAE network model, the objective of this paper is to enable the reconstructed adjacency matrix

to predict the unknown links in the initial adjacency matrix

. Therefore, this paper introduces masked balanced cross-entropy to supervise the GAE learning:

where

denotes the mask matrix, and the loss is calculated only among a specific set of candidate edges;

is the element-wise product, ensuring that only items within the mask’s scope participate in the loss calculation;

denotes the positive and negative sample weight coefficient, used to balance the training sample ratio between the existence and non-existence of edges;

denotes the input adjacency matrix;

denotes the adjacency matrix predicted and output by the model;

denotes the activation function.

2.3.2. Connection Optimization Strategy

In

Section 2.3.1, we utilized the GAE network to perform connection prediction on the initial graph input into the network. However, when utilizing the graph autoencoder network to output skeleton topological connections, a significant number of incorrect connections and self-loops occur. This type of connection structure is unreasonable and unnatural in the actual object skeleton structure. To ensure the generated skeleton conforms to the topological structure of a normal object, it is usually necessary to manually correct the incorrectly connected edges based on the object’s original shape. However, in practical application scenarios, the shape differences among different categories, or even different objects within the same category, are very significant. The workload of performing manual correction on a case-by-case basis is enormous and does not align with high-throughput automated data processing and analysis.

To solve the aforementioned connection error problem, this paper designs a connection optimization strategy based on cosine similarity threshold judgment. By relying on the directional distribution characteristics of edges in space, it filters out structurally unreasonable connections by matching the cosine similarity with predefined direction vectors. This method is capable of, without relying on manual intervention, effectively eliminating non-topologically consistent connected edges, while retaining connection relationships that align with the directionality of the true skeleton structure, thereby enhancing the topological naturalness and accuracy of the skeleton structure.

Point clouds are discrete points distributed in 3D space that represent the shape of an object, and any point in a 3D Cartesian coordinate system can be represented by a combination of several uncorrelated vectors. Therefore, the core idea of this strategy is: first, define eight uncorrelated direction vectors centered at the origin of the coordinate system in 3D space, which are

, where

is the unit vector component. Subsequently, for each connected edge, its normalized direction vector is calculated, and the cosine similarity between it and the eight aforementioned direction vectors is computed, thereby determining the spatial tendency of the edge. For each skeleton point, the number of direction vector categories corresponding to its connected edges is counted, and the reasonableness of its topological structure is judged based on this. When the connection directions of a certain point are too dense, it indicates that the connection structure of that point deviates from the natural skeleton directional characteristics, and should be regarded as a redundant connection structure. Within a tree-structured skeleton, each skeleton point typically connects geometrically to either two other points (serving as an intermediate node that links a preceding and a succeeding node) or three points (situated at a bifurcation point connecting the trunk and branches). Consequently, after the spatial tendency of the connecting edges is calculated, any excess edges should be regarded as redundant connections and pruned. Therefore, in this paper, the spatial tendency threshold for edges is set to 4. The specific implementation process of this optimization strategy is shown in Algorithm 1.

| Algorithm 1 Algorithm for skeleton edge screening strategy based on direction vector |

Input:

|

| Output: filtered adjacency matrix |

1: e ←

2: Define the direction vector: Directions ← [[e, e, e], [e, e, −e], [e, −e, e], [e, −e, −e], [−e, e, e], [−e, e, −e], [−e, −e, e], [−e, −e, −e]]

3: for b = 0 to B − 1 do

4: for i = 0 to N − 1 do

5: pt1 ← skel_xyz[b, i, :] current node position

6: Neighbors ← {j|[b, i, j] = 1}

7: if |Counts ← zeros(8)

8: for each j ∈ Neighbors do

9: pt2 = skel [b, j, :]

10: Edge = pt2 − pt1

11: , Directions)

12: Closest = argmax( Similarities)

13: Counts[Closest] = Counts[Closest] + 1

14: end for

15: Nums ← count(Counts > 0)

16: if Nums > 4 then

17: [b, i, :] ← 0

18: [b, :, i] ← 0

19: end if

20: end for

21: end for

22: ← max(, transpose(, 1, 2))

23: return |

This strategy fully considers the directional characteristics of 3D object skeleton connections. For objects with long-axis structures, the connecting edges usually extend along the principal axis direction; therefore, the correct connection relationships should be concentrated in a small number of spatial directions. In contrast, points with excessively discrete connection directions are usually caused by connection errors or prediction redundancy. Through the direction vector filtering mechanism, these unreasonable connections can be automatically identified and eliminated, thereby enhancing the structural expression capability and semantic consistency of the overall skeleton.

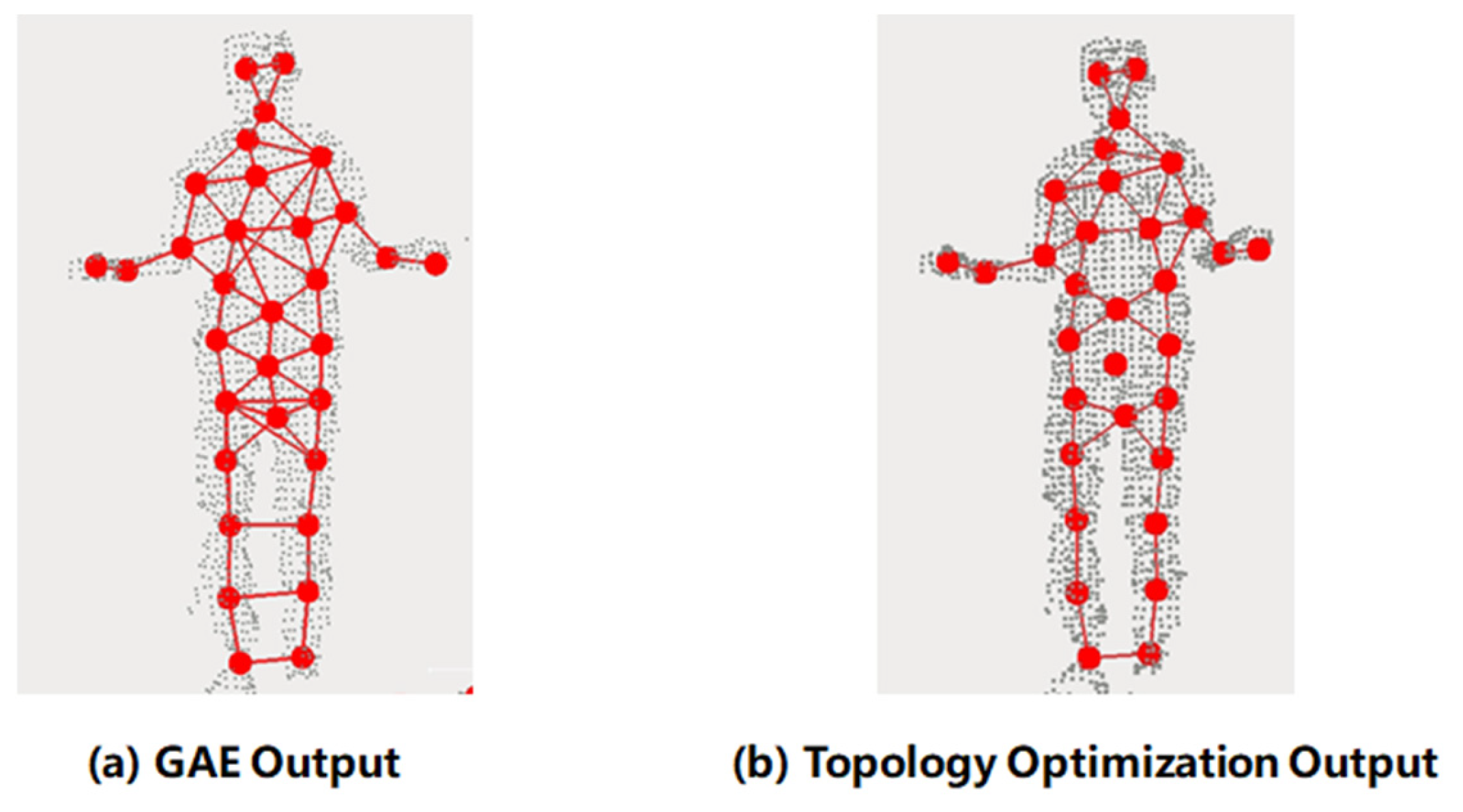

Figure 6 shows a comparison of the skeleton structures before and after the connection optimization strategy, based on the graph autoencoder’s prediction results. As shown in

Figure 6a, the edge connection results predicted by the GAE network contain a large number of redundant edge connections and self-loop structures;

Figure 6b shows the result optimized by the method in this paper, the main structure of the connections is clear, most of the erroneous redundant edge connections have been removed, and it possesses the ability to eliminate redundant points, significantly enhancing the topological reasonableness and shape expression capability of the skeleton.

4. Discussion and Conclusions

To address the prevalent issues in current deep learning networks for 3D skeleton extraction, such as high annotation costs, skeleton point deviations, and difficulties in structure recovery, this paper proposes Graph-SENet, an unsupervised learning-based graph neural network method for skeleton extraction, achieving the end-to-end automated generation of object skeletons from points to structures. By leveraging dynamic graph convolution and multi-level feature fusion, the proposed method effectively enhances the capability for local geometric structure modeling. Furthermore, it utilizes a multi-dimensional unsupervised loss to significantly strengthen the precision and robustness of skeleton point localization. Simultaneously, by combining a graph autoencoder with a topology optimization strategy, the method realizes the automatic prediction of skeleton connection relationships, yielding semantically consistent and structurally complete 3D skeletons. Experimental results indicate that Graph-SENet achieves the best Chamfer Distance (CD) and F1-scores across three cross-category datasets: ShapeNet, ITOP, and Soybean-MVS. Moreover, it maintains stable performance under unified hyperparameter settings, demonstrating significant advantages in precision, robustness, and cross-category generalization. These results suggest that the framework proposed in this paper not only effectively reduces the reliance on manual annotation for 3D skeleton extraction tasks but also achieves a performance breakthrough in structured skeleton recovery under unsupervised conditions, providing a reliable structural foundation for downstream 3D understanding and point cloud analysis tasks.

First, the method proposed in this paper still relies on the combination of weights for multi-dimensional feature losses during the training phase. Since optimal weight settings may vary across different object categories, this introduces a certain degree of hyperparameter sensitivity. Currently, the optimal weights are determined empirically; this process is time-consuming, and it is difficult to guarantee that each adjustment aligns with the direction of positive convergence. Therefore, future research could further explore mechanisms for the dynamic and adaptive adjustment of loss weights during the training process to reduce manual intervention, accelerate loss convergence, and enhance the robustness of the algorithm.

Second, based on the experimental results, it can be found that in the skeleton point extraction results, instances of redundant skeleton point extraction exist in certain regions, for example, the human upper limb regions and the chair backrest regions. The reason is that, compared to supervised learning, unsupervised learning lacks the supervision from manually annotated points that carry specific semantic meaning (annotation information). It can only extract points capable of representing that specific region through feature learning and loss function constraints. Although reducing the number of extracted skeleton points might ameliorate this situation, the possibility of incomplete skeleton point representation also exists. Therefore, in future work, enhancing skeleton point feature aggregation and shape representation is a possible direction for improvement.

Furthermore, point clouds in real-world scenes are often accompanied by issues such as noise, occlusion, outliers, and incomplete observations. Although sparsity has been introduced into the training data of this study through random downsampling, the robustness of the network under conditions of severe noise perturbation or extreme partial observation has not been further systematically evaluated. In future work, the applicability of the model in complex point cloud scenes can be enhanced by incorporating noise augmentation strategies and designing noise-invariant features.

Furthermore, in terms of practical application, the proposed method relies solely on a single forward pass during the inference phase. It requires neither complex post-processing nor manual intervention, exhibiting a high degree of automation, and can be directly integrated into existing point cloud processing pipelines. In field applications, simply feeding the object point cloud data collected by sensors into the model automatically generates skeleton points and their topological structures. This makes it suitable for tasks requiring immediate structural information, such as robot navigation, agricultural plant structure analysis, and rapid scene understanding. Simultaneously, we conducted a comparative statistical analysis of the computational cost, parameter size, and average test time against the comparison algorithms. The model complexity metrics of our method are 4.51 GFLOPs, 0.49 MB, and 0.708 s, respectively. Experimental results indicate that the proposed algorithm significantly outperforms the other two comparison algorithms in terms of both model complexity and real-time performance. This implies that Graph-SENet possesses the capability for real-time application in practical systems, holding significant importance for advancing 3D data analysis capabilities in fields such as autonomous driving, smart healthcare, and smart agriculture.