A Resilient Deep Learning Framework for Mobile Malware Detection: From Architecture to Deployment

Abstract

1. Introduction

- Diverse Security Threats: Mobile devices are vulnerable to theft, social engineering, and loss, all of which can expose sensitive data.

- Growing Data Sensitivity: Mobile devices store increasing amounts of personal, financial, and business data, making them prime targets.

- Evolving Malware Landscape: Attackers constantly develop new methods to bypass defenses [5].

- Real-Time Protection Needs: Always-connected devices require real-time protection, and traditional solutions often fall short [6]. How strict the real-time constraint is depends heavily on the device’s hardware characteristics and on the latency that is acceptable during interactive use.

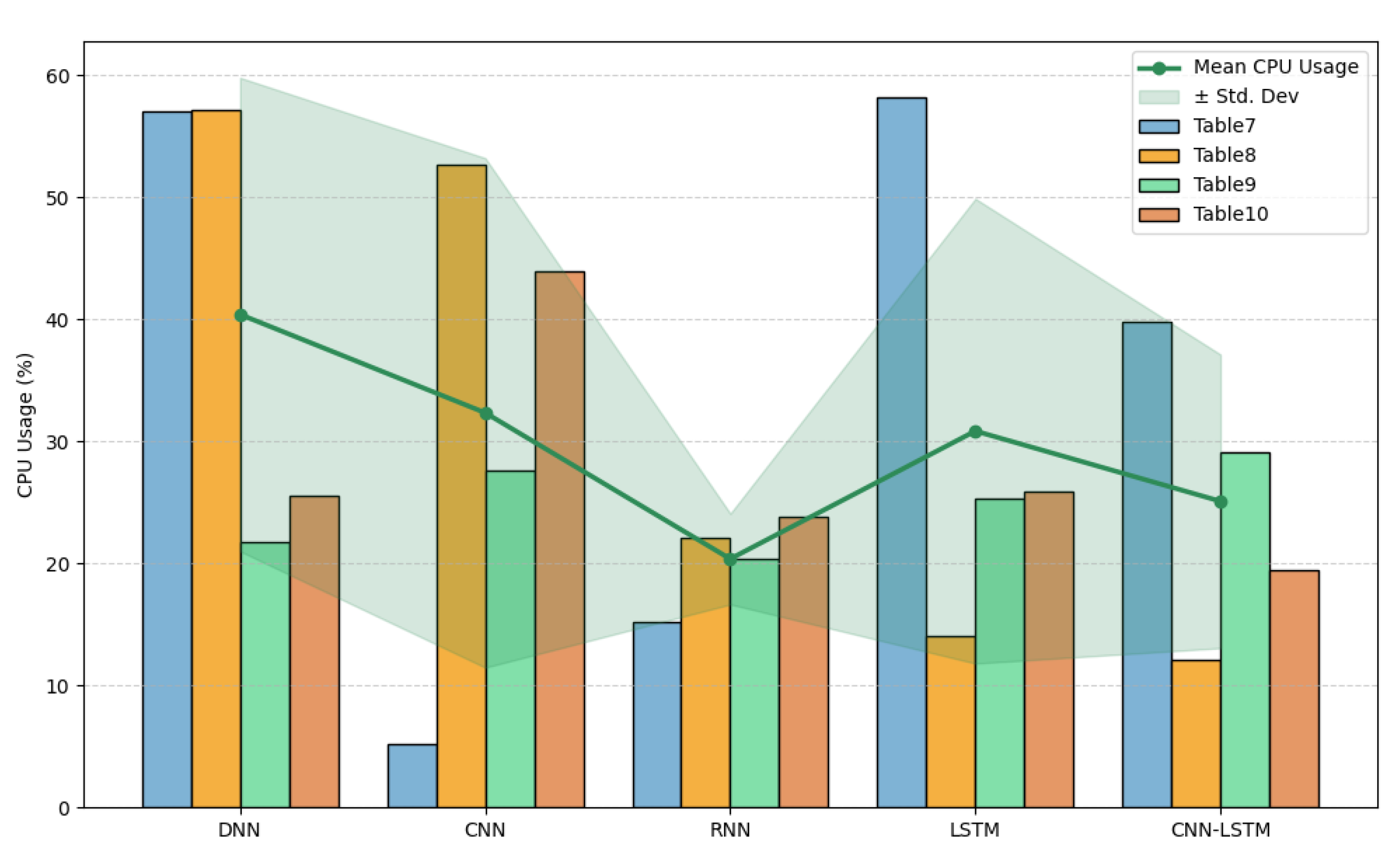

- It introduces a CPU-adaptive multi-model design that dynamically selects the most suitable neural network at runtime depending on system load.

- It conducts a structured evaluation of five deep learning architectures (DNN, CNN, RNN, LSTM, CNN-LSTM) across two preprocessing settings to explore the balance between dimensionality reduction and performance.

- It demonstrates a working prototype, implemented as a TensorFlow Lite Android app, confirming the framework’s robustness and scalability in real-world use.

2. Background and Related Work

2.1. Indicators of Compromise (IoCs)

- Atomic Indicators: Cannot be divided further without losing their significance; each can indicate on its own that a network is compromised.

- Computed Indicators: Derived from event information, e.g., hash values of malicious files.

- Behavioral Indicators: Represent adversary behaviors or tactics, helping detect hostile activity and, in some cases, attribute responsibility.

2.2. Mobile Malware Threats

2.3. Machine Learning Approaches for Malware Detection

2.4. Deep Learning Approaches for Malware Detection

2.5. Datasets Used in Literature

3. Proposed Mobile Malware Detection Framework

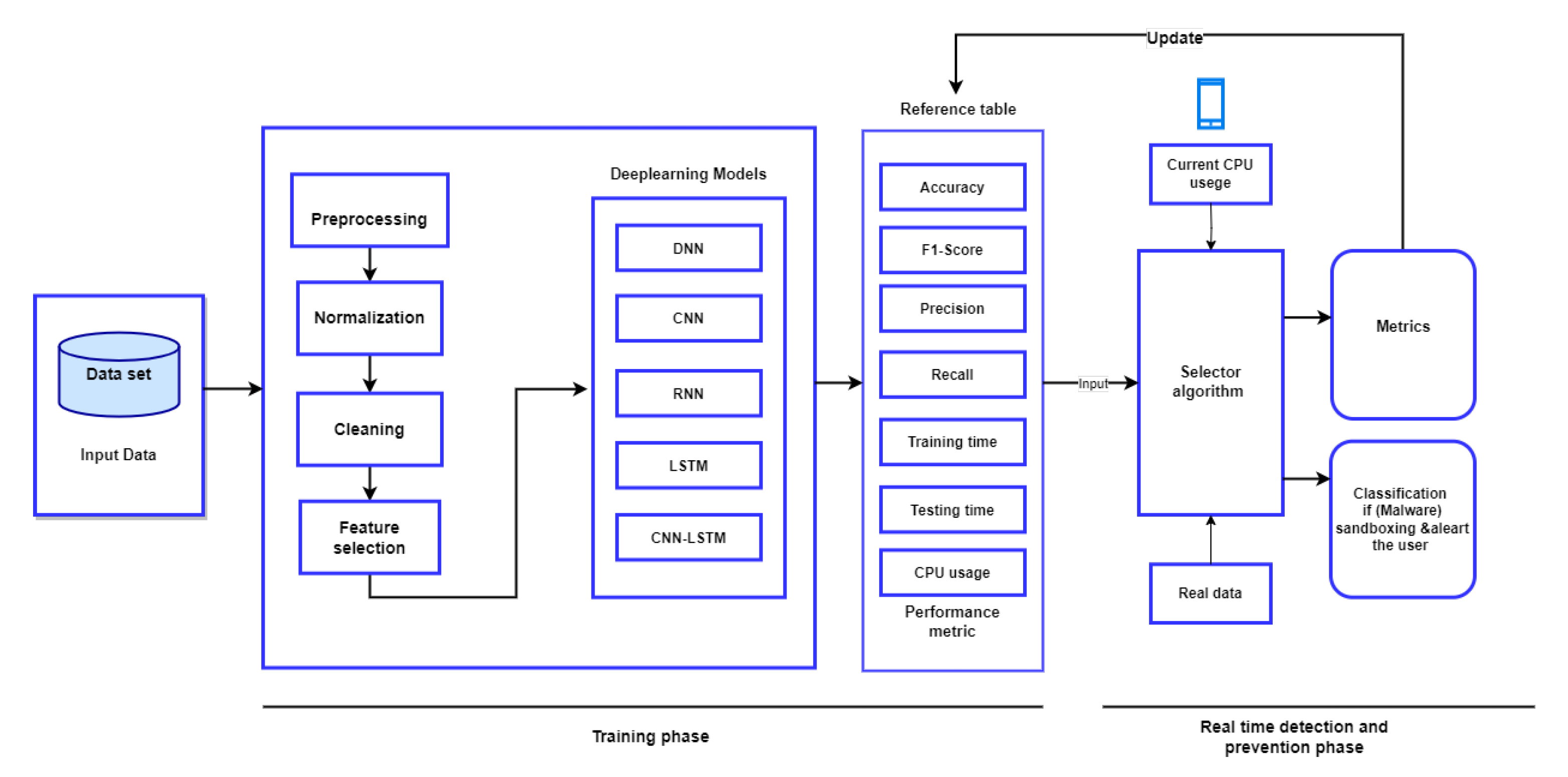

3.1. System Architecture Overview

3.2. System Components

3.2.1. Input Data Preprocessing, Normalization, and Feature Extraction

- Static features: Extracted from app code, including permissions, API calls, code structure, or opcodes.

- Dynamic features: Extracted from app behavior, such as system calls, network traffic, file system access, and user interface interactions.

- Data preprocessing: Cleaning, transforming, and integrating data to improve quality and make it suitable for analysis.

- Normalization: Scaling features to a standard range so that machine-learning algorithms work more effectively.

- Data cleaning: Handling duplicates, anomalies, and missing values through deletion, imputation, or transformation.

- Feature selection: Choosing a subset of features to reduce dimensionality and improve algorithm efficiency and performance [49].

- IoC-to-feature transformation: This process involves converting each Indicator of Compromise (IoC) into a form suitable for machine learning models. Each IoC becomes a model-ready representation before data ingestion. Static IoCs, including manifest permissions, API call signatures, intents, and command patterns, are converted into binary or one-hot encoded features, following established methods in Android malware datasets such as the 215-feature Drebin-derived dataset. Dynamic IoCs captured from runtime behavior—like API call traces, system or network activity, and execution-phase artifacts—are represented as temporal sequences or tabular flow features, which include about 80 network flow attributes documented in CIC-InvesAndMal2019 and adopted in systems such as DL-Droid. This transformation enables CNN and CNN-LSTM models to capture spatial and temporal structures through sequence or matrix representations, while RNN and LSTM models process sequential behavioral evidence efficiently.
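The static part of the IoC-to-feature transformation and the normalization step can be illustrated with a minimal sketch. It assumes a fixed vocabulary of static IoCs; the four entries in `VOCABULARY` and the helper names `encode_static_iocs` and `min_max_scale` are hypothetical and are not taken from the datasets or from the authors' code.

```python
from typing import Iterable, Sequence

# Hypothetical vocabulary of static IoCs. A real vocabulary (for example the
# 215 Drebin-derived features) would be fixed once from the training data.
VOCABULARY: Sequence[str] = (
    "android.permission.SEND_SMS",
    "android.permission.READ_CONTACTS",
    "android.intent.action.BOOT_COMPLETED",
    "Ljava/lang/Runtime;->exec",
)


def encode_static_iocs(
    observed: Iterable[str], vocabulary: Sequence[str] = VOCABULARY
) -> list[int]:
    """Return a binary vector with 1 where a vocabulary IoC was observed."""
    present = set(observed)
    return [1 if name in present else 0 for name in vocabulary]


def min_max_scale(column: Sequence[float]) -> list[float]:
    """Scale one numeric feature column to [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(value - lo) / (hi - lo) for value in column]


# IoCs outside the vocabulary are ignored rather than extending the vector.
app_iocs = ["android.permission.SEND_SMS", "Ljava/lang/Runtime;->exec", "unknown.indicator"]
print(encode_static_iocs(app_iocs))  # [1, 0, 0, 1]
print(min_max_scale([10.0, 20.0, 40.0]))
```

Keeping the vocabulary fixed means every app maps to a vector of the same length, which is what the dense and convolutional models expect as input.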

3.2.2. Model Selection Algorithm

- CPU < 20%: Use the best-performing model regardless of size.

- CPU 20–50%: Use a balanced model (performance vs. CPU).

- CPU 50–80%: Use a smaller model optimized for low CPU usage.

- CPU > 80%: Default to the lightweight DNN model to minimize resource consumption.

Algorithm 1. Adaptive Model Selector based on CPU Utilization and Latency Constraint
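The CPU bands listed above can be sketched as a small selector. This is an illustration under stated assumptions, not the authors' Algorithm 1: the bands are treated as contiguous (the balanced band is assumed to run up to 50%), "balanced" is approximated by the accuracy-to-CPU ratio, and the latency budget is a hypothetical parameter. The per-model figures are the accuracy, inference time, and CPU usage reported for the Android deployment in Section 6.2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    accuracy: float    # accuracy in percent
    latency_ms: float  # on-device inference time
    cpu_cost: float    # CPU usage in percent while running the model


# Figures from the deployment results reported in Section 6.2.
PROFILES = [
    ModelProfile("CNN", 98.5, 45, 48),
    ModelProfile("DNN", 91.3, 38, 42),
    ModelProfile("LSTM", 87.6, 105, 67),
    ModelProfile("CNN-LSTM", 94.2, 68, 55),
    ModelProfile("RNN", 83.9, 97, 63),
]


def select_model(
    cpu_percent: float,
    profiles: list[ModelProfile],
    max_latency_ms: float,
    fallback: str = "DNN",
) -> str:
    """Pick a model name for the current CPU load and latency budget."""
    if cpu_percent > 80:
        return fallback  # heavy load: always the lightweight DNN
    candidates = [p for p in profiles if p.latency_ms <= max_latency_ms]
    if not candidates:
        return fallback  # nothing meets the latency budget
    if cpu_percent < 20:
        chosen = max(candidates, key=lambda p: p.accuracy)  # best performer
    elif cpu_percent < 50:
        # Assumed definition of "balanced": accuracy per unit of CPU cost.
        chosen = max(candidates, key=lambda p: p.accuracy / p.cpu_cost)
    else:
        chosen = min(candidates, key=lambda p: p.cpu_cost)  # cheapest model
    return chosen.name


for load in (10, 35, 65, 95):
    print(load, select_model(load, PROFILES, max_latency_ms=100))
```

With these profiles the DNN wins both the balanced and the low-CPU bands because it has the lowest CPU cost; a different balance score would change the middle band only.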

3.3. Deep Learning Models Used

- CNN-LSTM model: CNN-LSTM hybrids combine convolutional layers for spatial feature extraction with LSTM layers for sequential processing, making them suitable for tasks involving sequential data with spatial characteristics [24].

Metrics and Real-Time Update

3.4. Classification and Response

4. Experimental Setup

4.1. Training Scenarios

- Scenario 1 (Without Feature Selection): In this setup, the entire feature set was used without applying any dimensionality reduction techniques. The dataset was divided into 85% training and 15% testing to ensure sufficient data for model learning while preserving a portion for unbiased evaluation. This scenario highlights how models behave when exposed to all available features, including potential redundancy and noise.

- Scenario 2 (With Feature Selection): In this setup, feature dimensionality was reduced prior to training using the SelectKBest method, which scores each feature with a univariate statistical test and keeps the k highest-scoring features. The dataset was split into 75% training and 25% testing, allowing more samples for evaluation compared to Scenario 1. For the Android dataset, the top 100 features were retained, while for the CIC dataset, the top 50 features were selected. This scenario investigates the impact of dimensionality reduction on both computational efficiency and predictive accuracy, emphasizing whether eliminating less informative features can improve generalization and reduce resource consumption.
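The idea behind Scenario 2 can be sketched in plain Python: score each feature, keep the top k, and project the data onto those features. The chi-square score used here is an assumption, since the text does not name the scoring function passed to SelectKBest; the function names and the toy data are hypothetical. The sketch fits the selection on the training split only, which is one common way to avoid leaking test information; the source does not state which order was used.

```python
import random


def chi2_scores(X, y):
    """Chi-square statistic of each non-negative feature against the class label."""
    n_samples, n_features = len(X), len(X[0])
    classes = sorted(set(y))
    scores = []
    for j in range(n_features):
        total = sum(row[j] for row in X)
        score = 0.0
        for c in classes:
            observed = sum(row[j] for row, label in zip(X, y) if label == c)
            expected = total * sum(1 for label in y if label == c) / n_samples
            if expected > 0:
                score += (observed - expected) ** 2 / expected
        scores.append(score)
    return scores


def top_k_indices(X, y, k):
    """Indices of the k highest-scoring features, returned in column order."""
    scores = chi2_scores(X, y)
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return sorted(ranked[:k])


def project(X, keep):
    """Keep only the selected feature columns."""
    return [[row[j] for j in keep] for row in X]


def split_train_test(X, y, test_fraction, seed=0):
    """Shuffle once and hold out a fraction of the samples for testing."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    n_test = round(len(X) * test_fraction)
    test, train = idx[:n_test], idx[n_test:]
    return ([X[i] for i in train], [X[i] for i in test],
            [y[i] for i in train], [y[i] for i in test])


# Toy data: feature 0 equals the label, feature 1 is constant, feature 2 alternates.
y = [1, 1, 1, 1, 0, 0, 0, 0]
X = [[label, 1, i % 2] for i, label in enumerate(y)]

X_train, X_test, y_train, y_test = split_train_test(X, y, test_fraction=0.25)
keep = top_k_indices(X_train, y_train, k=1)
X_train_sel, X_test_sel = project(X_train, keep), project(X_test, keep)
print(len(X_train_sel), len(X_test_sel), keep)
```

In practice the same steps would use k = 100 for the Android dataset and k = 50 for the CIC dataset, as stated above.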

4.2. Datasets

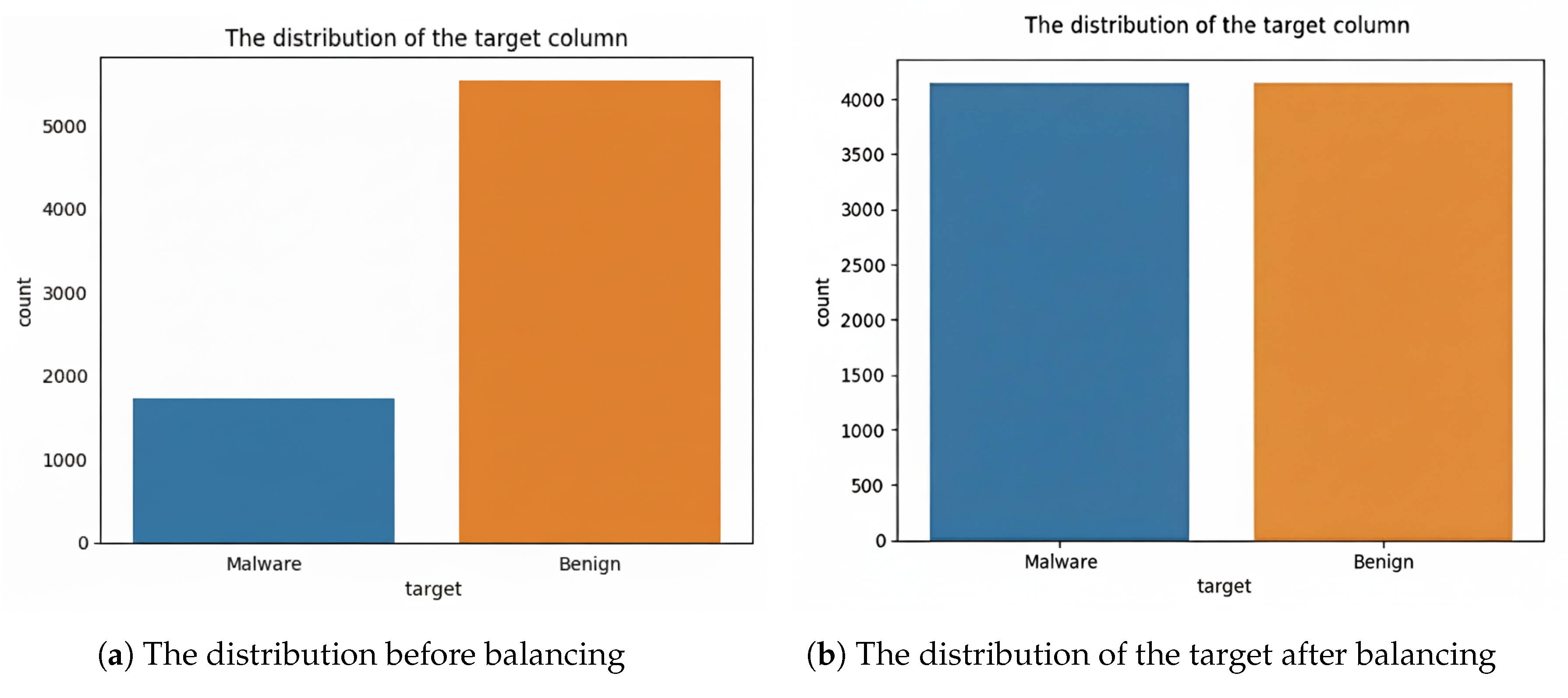

4.2.1. Android Malware Dataset for Machine Learning [48]

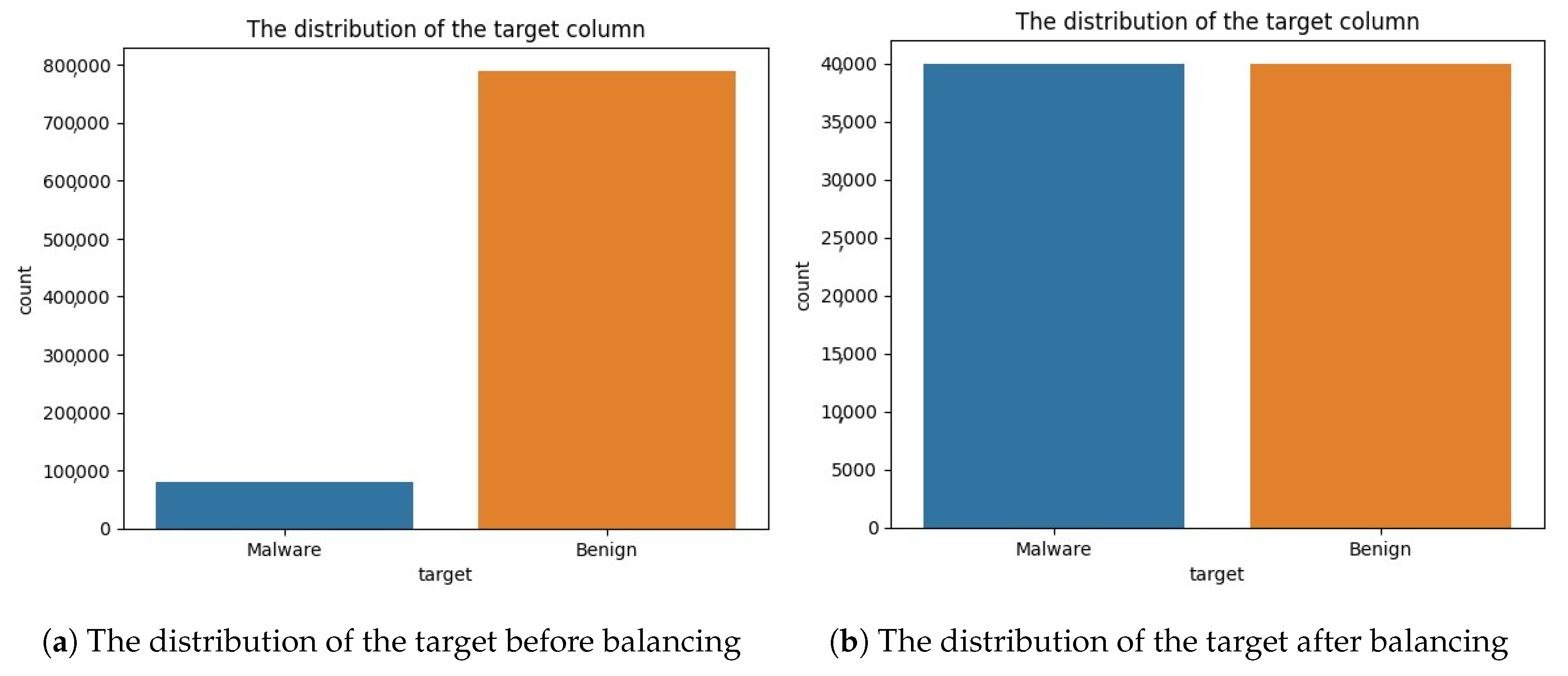

4.2.2. CIC-InvesAndMal2019 Dataset [44]

4.3. Data Preprocessing and Model Architectures

5. Results and Analysis

5.1. Computational Environment

5.2. Performance Evaluation

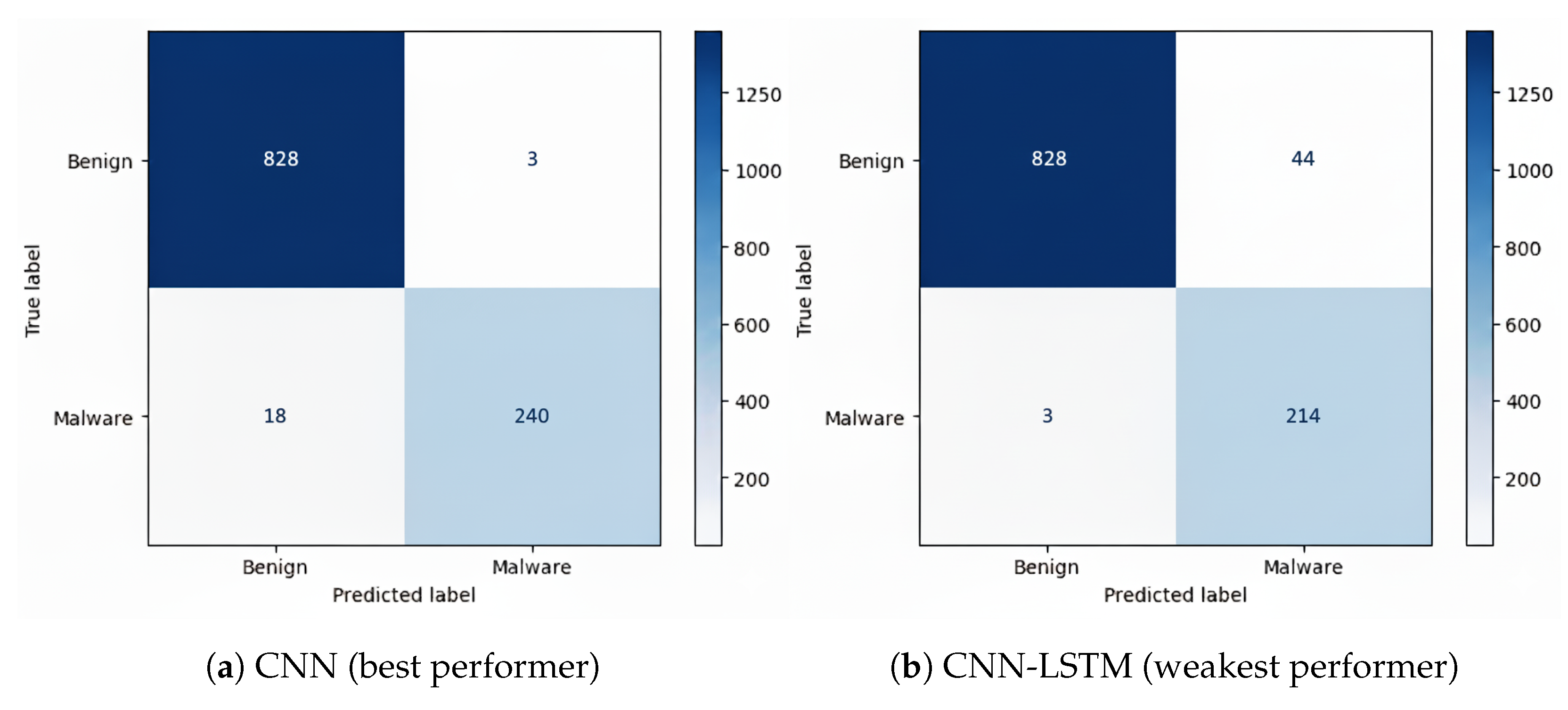

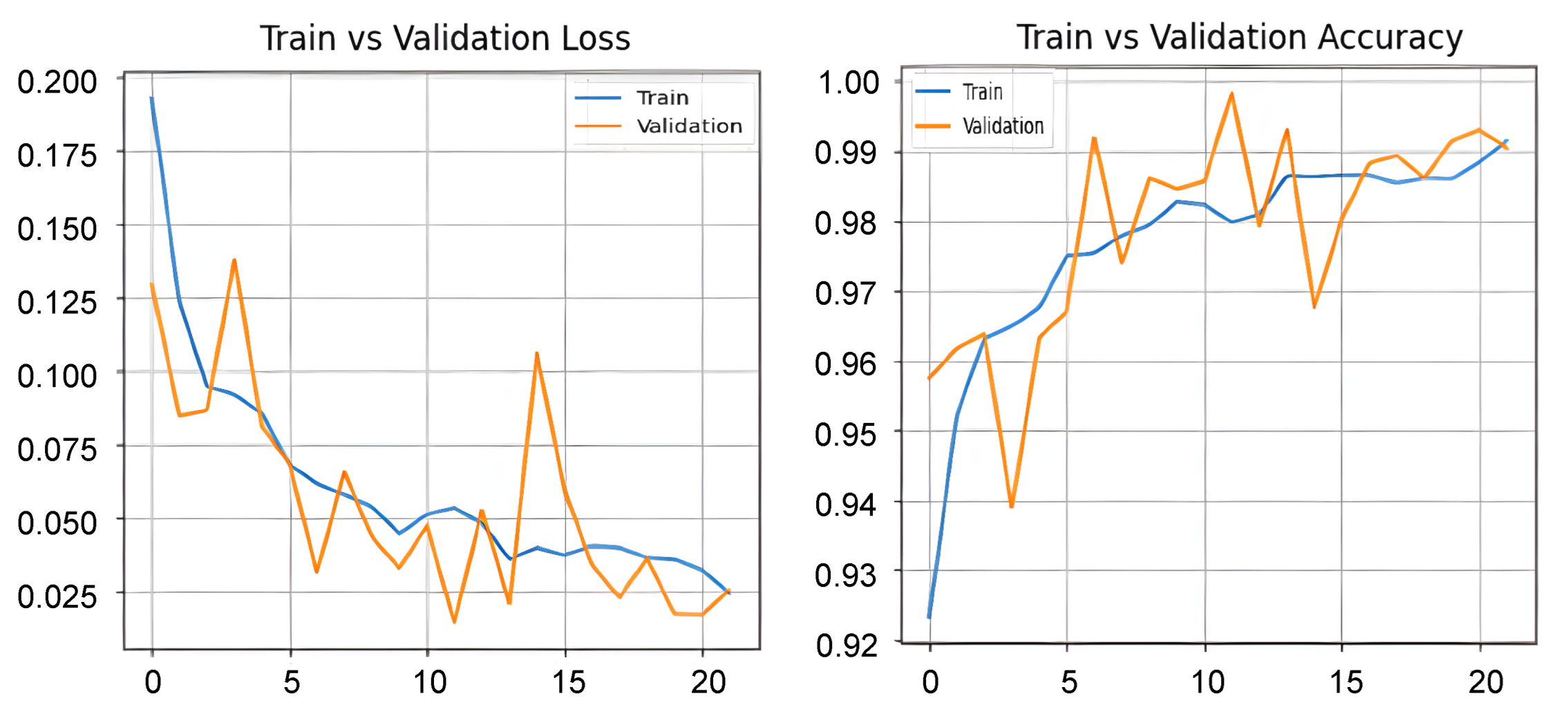

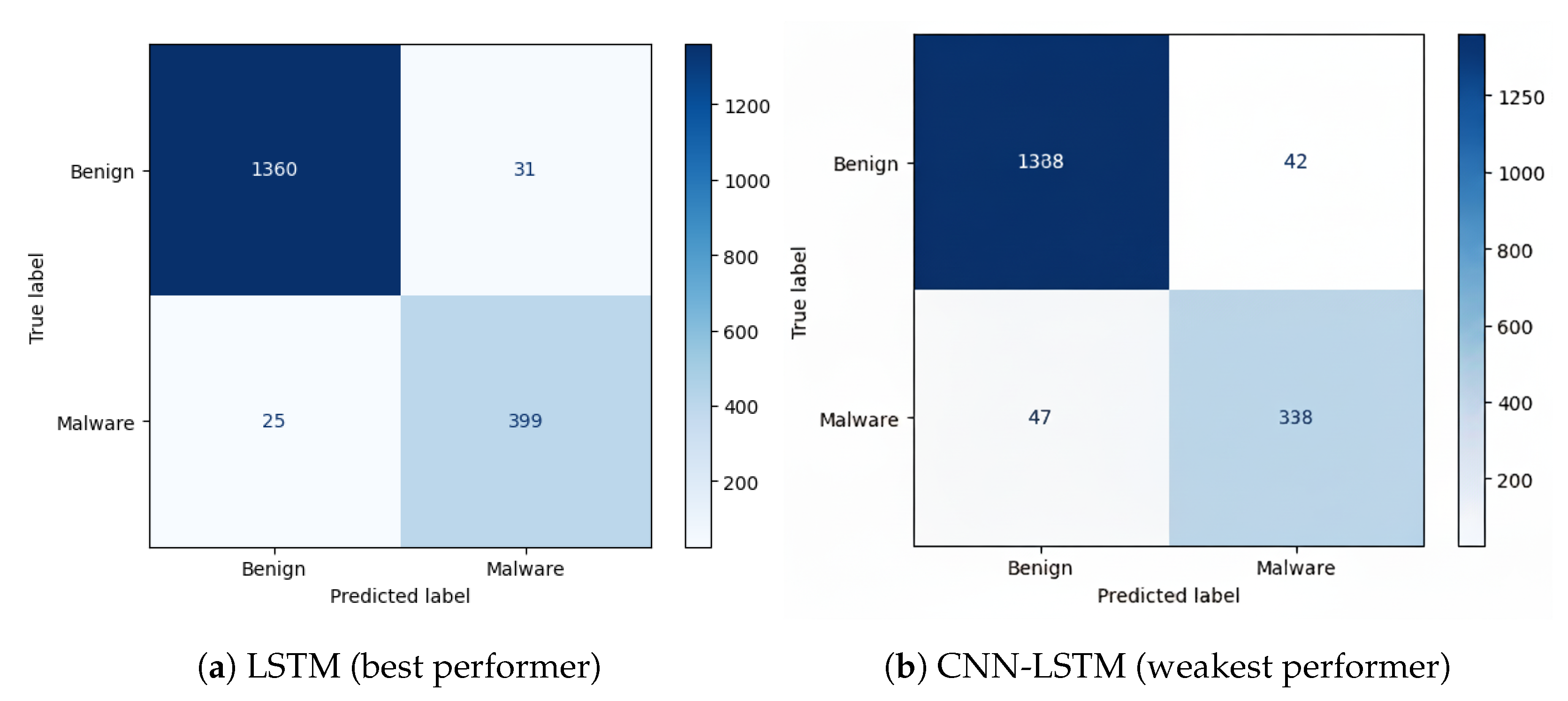

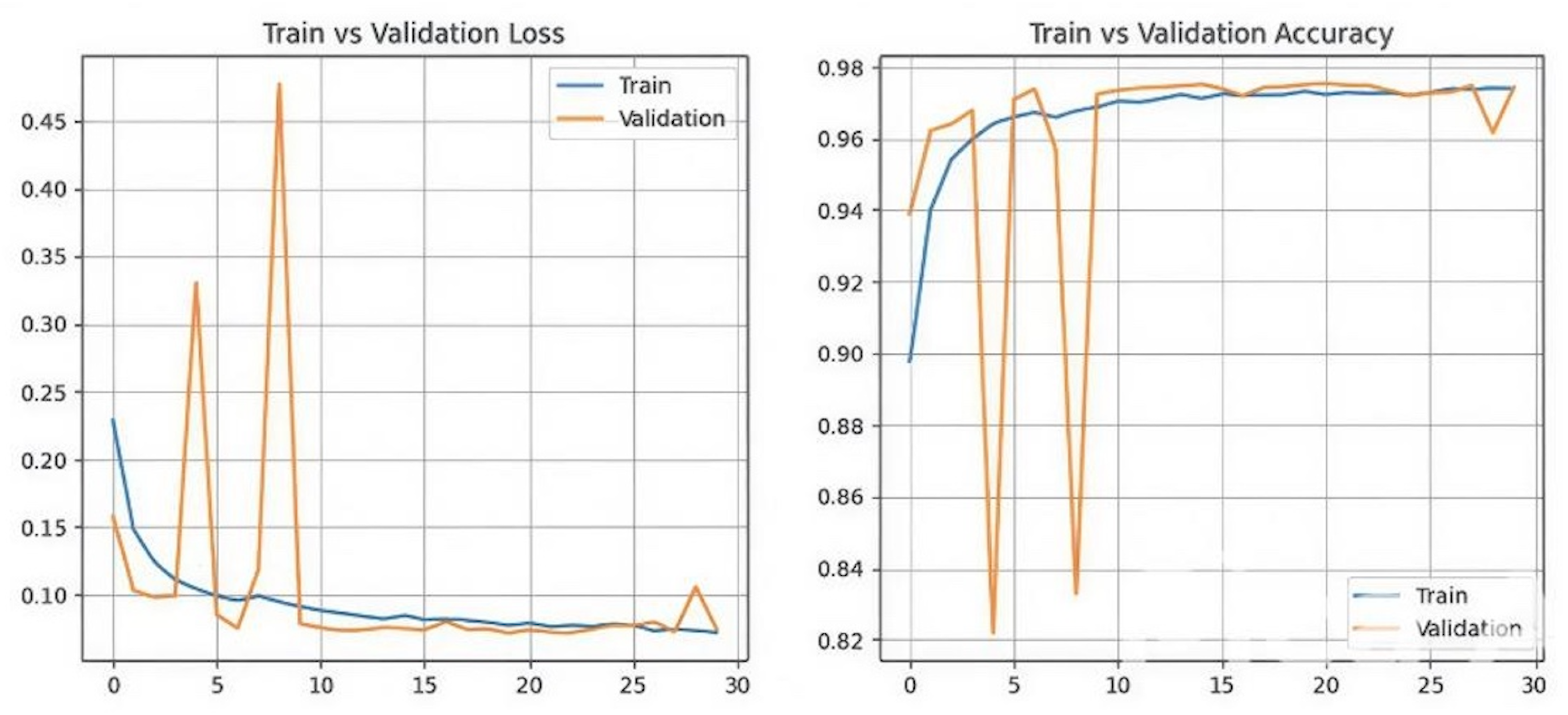

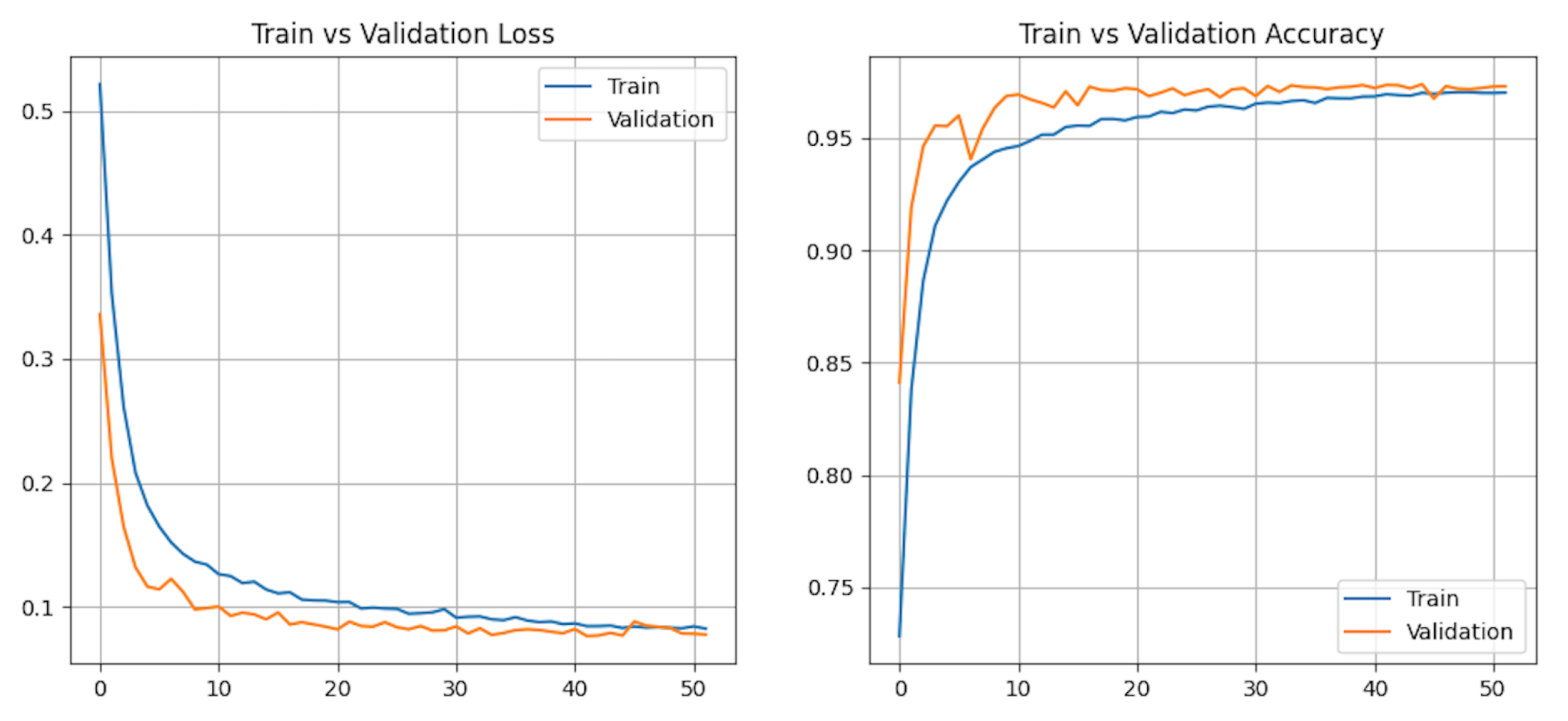

5.2.1. Android Malware Dataset

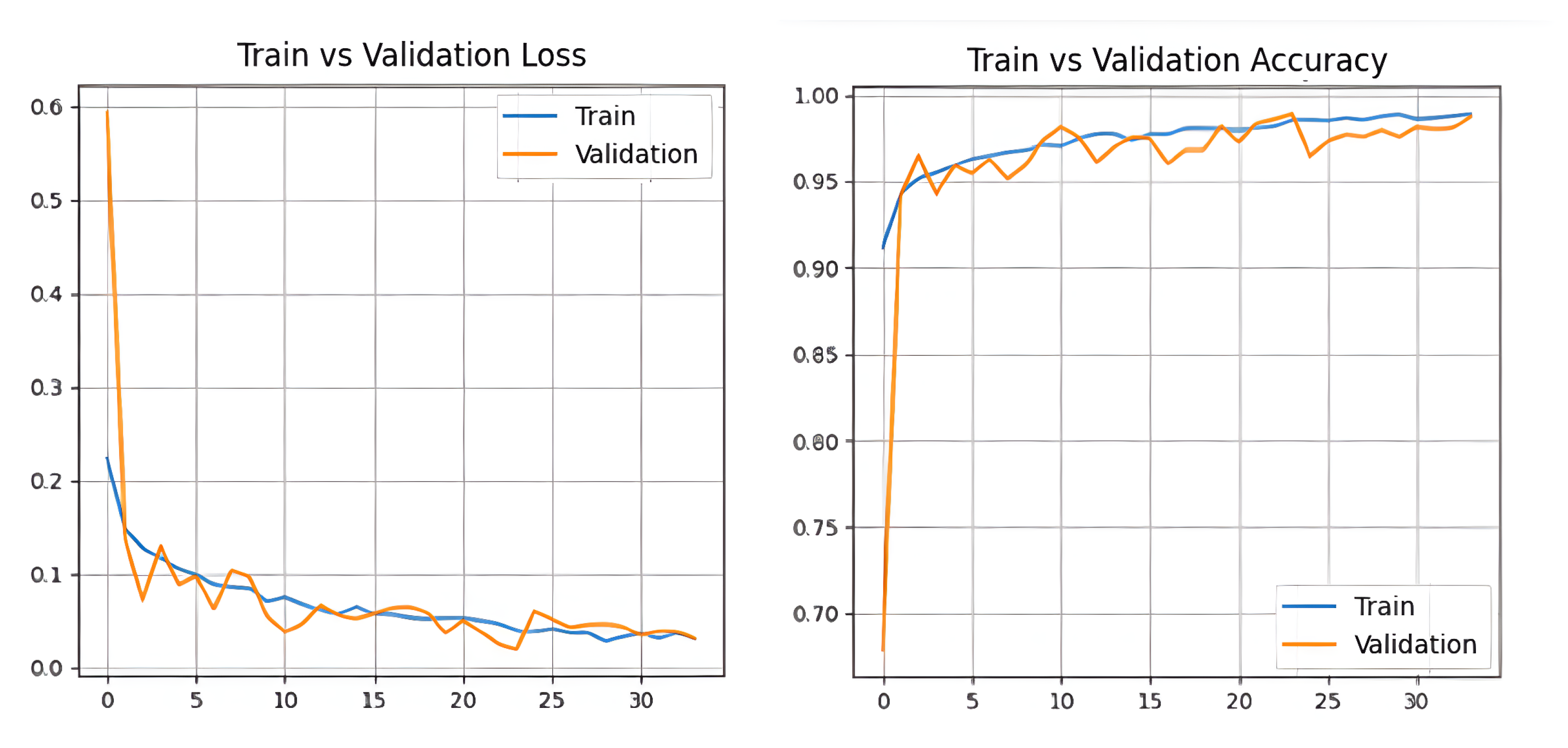

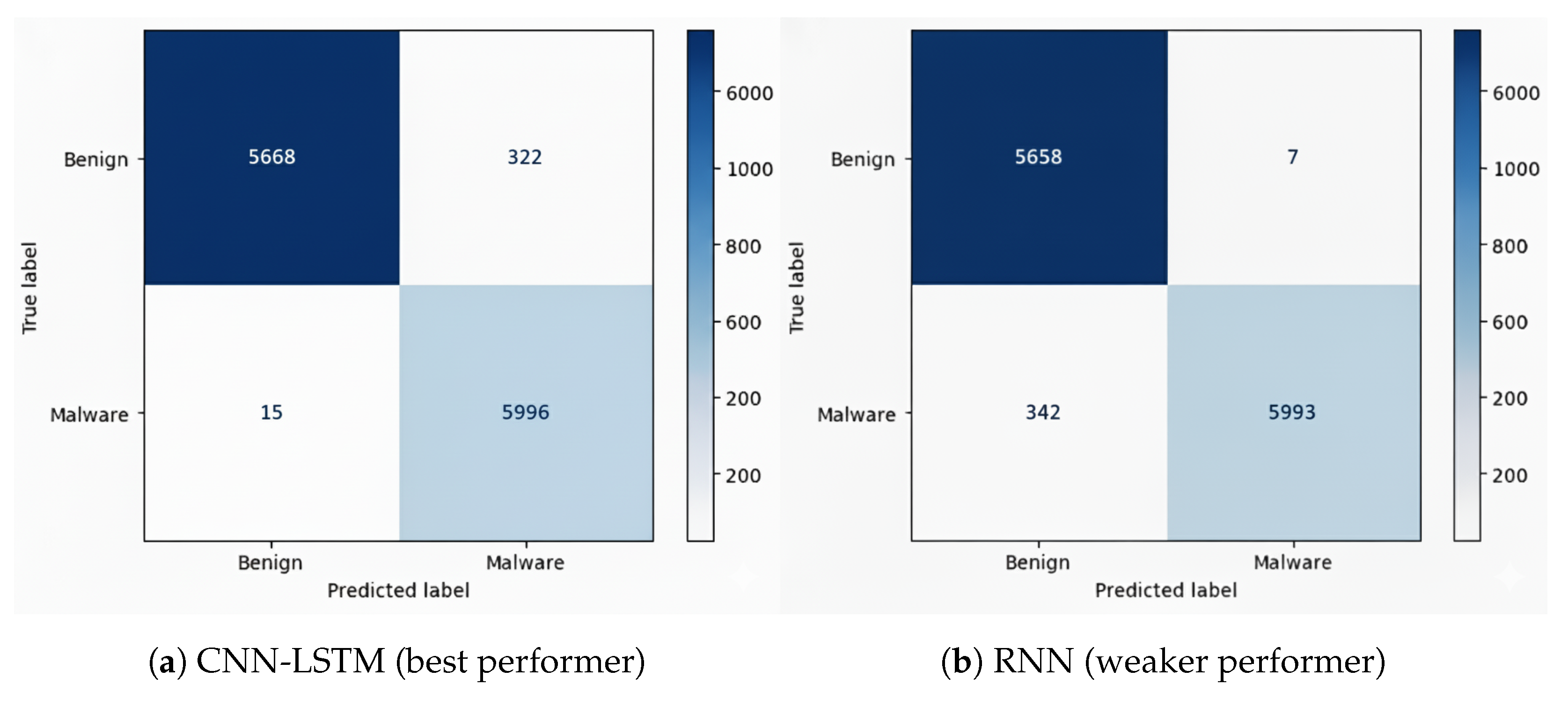

5.2.2. CIC-InvesAndMal2019 Dataset

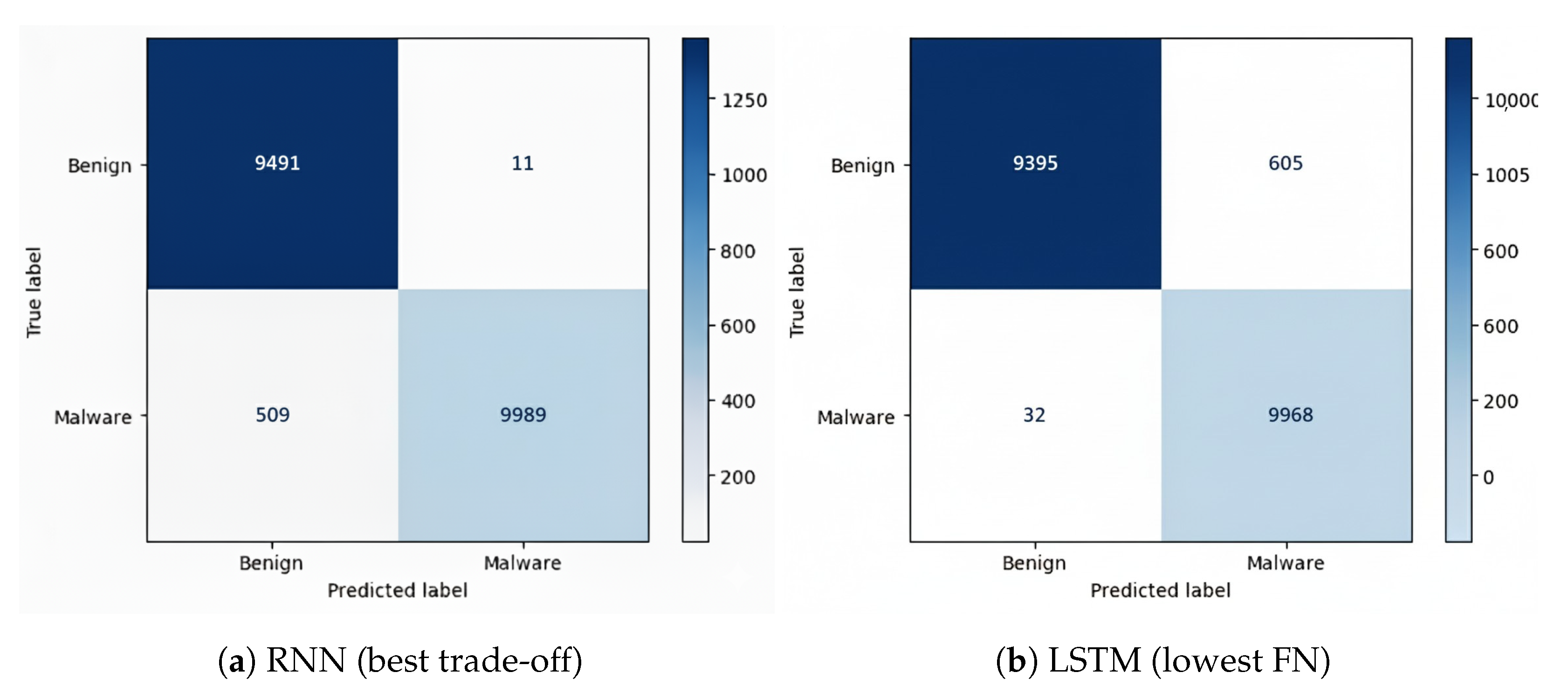

- RNN achieved the best overall balance, with the lowest FP (11) and relatively low FN (509).

- CNN also performed well, with the lowest FP (11) but higher FN (536).

- LSTM minimized FN (32), but at the cost of higher FP (505).

- DNN showed balanced but slightly weaker results compared to RNN and CNN.

- CNN-LSTM had higher FP (481) than most models, though FN (36) was comparable to LSTM.
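To make the trade-off between false positives and false negatives concrete, the following sketch derives precision, recall, and F1 from confusion-matrix counts. The FP and FN values are those listed above for the LSTM model; the true-positive count is hypothetical, because it is not reported in this section.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Compute precision, recall, and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp)  # share of malware alerts that are correct
    recall = tp / (tp + fn)     # share of malware samples that are caught
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# tp=9000 is a hypothetical count; fp=505 and fn=32 are the LSTM values above.
precision, recall, f1 = precision_recall_f1(tp=9000, fp=505, fn=32)
print(f"precision={precision:.4f} recall={recall:.4f} f1={f1:.4f}")
```

A model with few false negatives but many false positives, as in this example, shows high recall and lower precision, which is the pattern seen in the CIC results tables.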

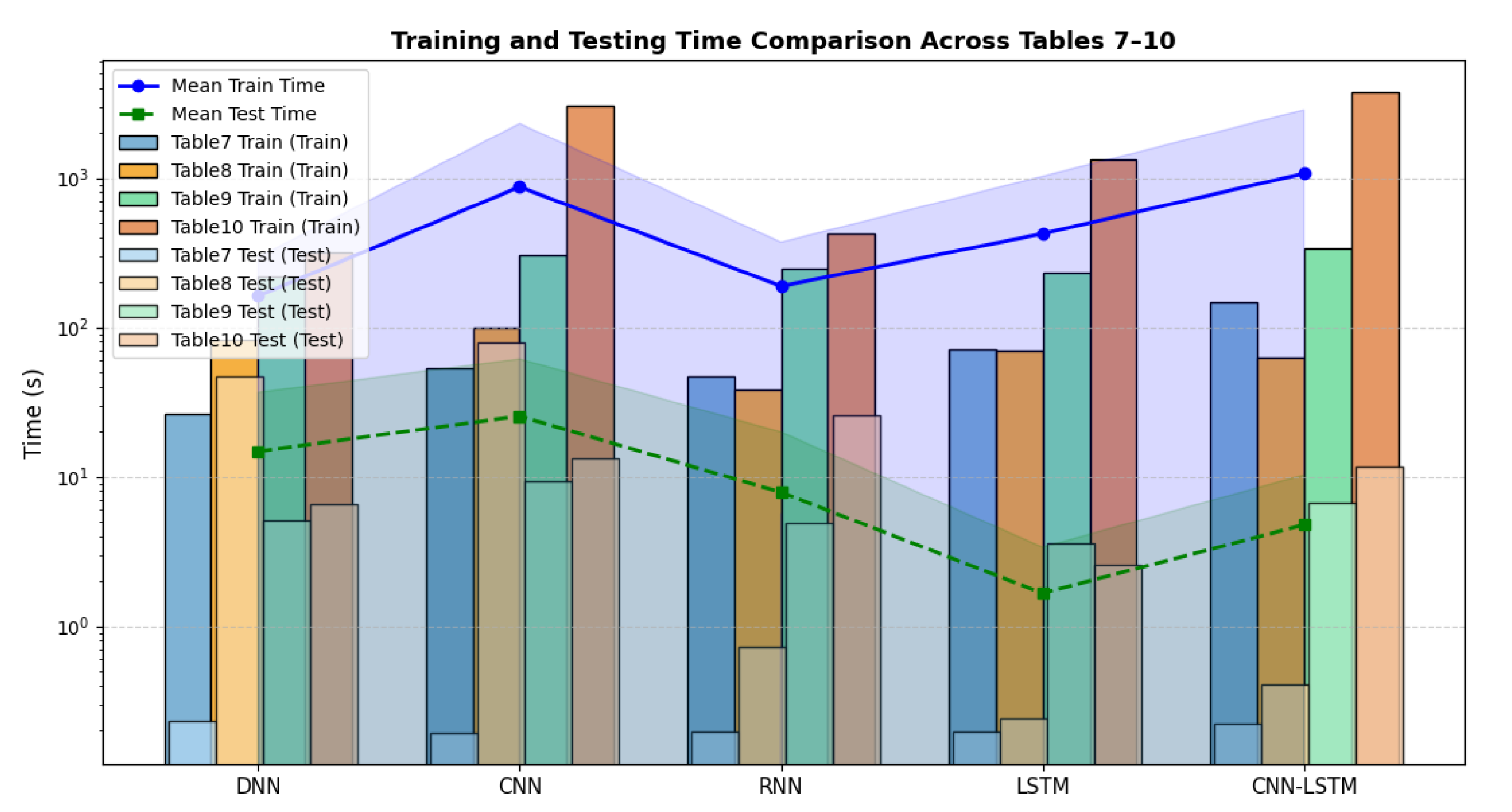

5.3. Statistical Reliability and Aggregated Performance Analysis

6. Android App Deployment

6.1. Model Conversion and Optimization

6.2. Deployment Results

- CNN: Achieved the best overall performance with an accuracy of 98.5% and a reasonable inference time of 45 ms, making it the most reliable model.

- DNN: Delivered faster inference (38 ms) but lower accuracy (91.3%), which was still acceptable, leading to a Pass outcome.

- LSTM: Produced the second-lowest accuracy (87.6%) and the slowest inference (105 ms), resulting in a Fail.

- CNN-LSTM: Balanced accuracy (94.2%) and inference time (68 ms), representing a suitable trade-off between speed and reliability.

- RNN: Showed unstable predictions with the lowest accuracy (83.9%) and high inference time (97 ms), leading to a Fail.

7. Discussion

8. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Indicators of Compromise | IoC |

| Deep Neural Network | DNN |

| Convolutional Neural Network | CNN |

| Long Short-Term Memory | LSTM |

| Application | app |

| CICAndMal2019 | CIC |

References

1. Khanachivskyi, O. How Many Apps Are in the Google Play Store in 2025? A Look at the Mobile Landscape. 2025. Available online: https://litslink.com/blog/how-many-apps-are-in-the-google-play-store (accessed on 10 September 2025).

2. Anees, A.; Hussain, I.; Khokhar, U.M.; Ahmed, F.; Shaukat, S. Machine learning and applied cryptography. Secur. Commun. Netw. 2022, 2022, 1–3.

3. Mbunge, E.; Muchemwa, B.; Batani, J.; Mbuyisa, N. A review of deep learning models to detect malware in Android applications. Cyber Secur. Appl. 2023, 1, 100014.

4. Preuveneers, D.; Joosen, W. Sharing machine learning models as indicators of compromise for cyber threat intelligence. J. Cybersecur. Priv. 2021, 1, 140–163.

5. Su, X.; Xiao, L.; Li, W.; Liu, X.; Li, K.C.; Liang, W. DroidPortrait: Android malware portrait construction based on multidimensional behavior analysis. Appl. Sci. 2020, 10, 3978.

6. Yerima, S.Y. High Accuracy Detection of Mobile Malware Using Machine Learning. Electronics 2023, 12, 1408.

7. Chowdhury, M.N.U.R.; Haque, A.; Soliman, H.; Hossen, M.S.; Fatima, T.; Ahmed, I. Android malware Detection using Machine learning: A Review. arXiv 2023, arXiv:2307.02412.

8. Augello, A.; De Paola, A.; Re, G.L. M2FD: Mobile malware federated detection under concept drift. Comput. Secur. 2025, 152, 104361.

9. Nayak, P.; Sharma, A. A review on machine learning in cryptography: Future perspective and application. In AIP Conference Proceedings; AIP Publishing LLC: Melville, NY, USA, 2025; Volume 3224, p. 020031.

10. Catakoglu, O.; Balduzzi, M.; Balzarotti, D. Automatic extraction of indicators of compromise for web applications. In Proceedings of the 25th International Conference on World Wide Web, Montreal, QC, Canada, 11–15 April 2016; pp. 333–343.

11. Zhou, S.; Long, Z.; Tan, L.; Guo, H. Automatic identification of indicators of compromise using neural-based sequence labelling. arXiv 2018, arXiv:1810.10156.

12. Asiri, M.; Saxena, N.; Gjomemo, R.; Burnap, P. Understanding indicators of compromise against cyber-attacks in industrial control systems: A security perspective. ACM Trans. Cyber-Phys. Syst. 2023, 7, 1–33.

13. Akremi, A. A forensic-driven data model for automatic vehicles events analysis. PeerJ Comput. Sci. 2022, 8, e841.

14. Rouached, M.; Akremi, A.; Macherki, M.; Kraiem, N. Policy-Based Smart Contracts Management for IoT Privacy Preservation. Future Internet 2024, 16, 452.

15. Liu, Y.; Tantithamthavorn, C.; Li, L.; Liu, Y. Deep learning for android malware defenses: A systematic literature review. ACM Comput. Surv. 2022, 55, 1–36.

16. Song, Y.; Zhang, D.; Wang, J.; Wang, Y.; Wang, Y.; Ding, P. Application of deep learning in malware detection: A review. J. Big Data 2025, 12, 99.

17. Garg, S.; Baliyan, N. Comparative analysis of Android and iOS from security viewpoint. Comput. Sci. Rev. 2021, 40, 100372.

18. Senanayake, J.; Kalutarage, H.; Al-Kadri, M.O. Android mobile malware detection using machine learning: A systematic review. Electronics 2021, 10, 1606.

19. Akremi, A.; Sallay, H.; Rouached, M. An efficient intrusion alerts miner for forensics readiness in high speed networks. Int. J. Inf. Secur. Priv. (IJISP) 2014, 8, 62–78.

20. Aslan, Ö.A.; Samet, R. A comprehensive review on malware detection approaches. IEEE Access 2020, 8, 6249–6271.

21. Panman de Wit, J.; Bucur, D.; van der Ham, J. Dynamic detection of mobile malware using smartphone data and machine learning. Digit. Threat. Res. Pract. (DTRAP) 2022, 3, 1–24.

22. Senanayake, J.; Kalutarage, H.; Al-Kadri, M.O.; Petrovski, A.; Piras, L. Android source code vulnerability detection: A systematic literature review. ACM Comput. Surv. 2023, 55, 1–37.

23. Alromaihi, N.; Rouached, M.; Akremi, A. Design and Analysis of an Effective Architecture for Machine Learning Based Intrusion Detection Systems. Network 2025, 5, 13.

24. Ucci, D.; Aniello, L.; Baldoni, R. Survey of machine learning techniques for malware analysis. Comput. Secur. 2019, 81, 123–147.

25. Kaur, A.; Jain, S.; Goel, S.; Dhiman, G. A review on machine-learning based code smell detection techniques in object-oriented software system(s). Recent Adv. Electr. Electron. Eng. (Former. Recent Patents Electr. Electron. Eng.) 2021, 14, 290–303.

26. Buczak, A.L.; Guven, E. A survey of data mining and machine learning methods for cyber security intrusion detection. IEEE Commun. Surv. Tutorials 2015, 18, 1153–1176.

27. Kolias, C.; Kambourakis, G.; Stavrou, A.; Voas, J. DDoS in the IoT: Mirai and other botnets. Computer 2017, 50, 80–84.

28. Meng, G.; Xue, Y.; Xu, Z.; Liu, Y.; Zhang, J.; Narayanan, A. Semantic modelling of android malware for effective malware comprehension, detection, and classification. In Proceedings of the 25th International Symposium on Software Testing and Analysis, Saarbrücken, Germany, 18–20 July 2016; pp. 306–317.

29. Onwuzurike, L.; Mariconti, E.; Andriotis, P.; Cristofaro, E.D.; Ross, G.; Stringhini, G. Mamadroid: Detecting android malware by building markov chains of behavioral models (extended version). ACM Trans. Priv. Secur. (TOPS) 2019, 22, 1–34.

30. Mahindru, A.; Sangal, A. FSDroid:-A feature selection technique to detect malware from Android using Machine Learning Techniques: FSDroid. Multimed. Tools Appl. 2021, 80, 13271–13323.

31. Hasan, H.; Ladani, B.T.; Zamani, B. MEGDroid: A model-driven event generation framework for dynamic android malware analysis. Inf. Softw. Technol. 2021, 135, 106569.

32. Akremi, A. Software security static analysis false alerts handling approaches. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 1–10.

33. Liu, L.; Wang, B. Automatic malware detection using deep learning based on static analysis. In Proceedings of the Data Science: Third International Conference of Pioneering Computer Scientists, Engineers and Educators, ICPCSEE 2017, Changsha, China, 22–24 September 2017; Proceedings, Part I. pp. 500–507.

34. Saxe, J.; Berlin, K. Deep neural network based malware detection using two dimensional binary program features. In Proceedings of the 2015 10th International Conference on Malicious and Unwanted Software (MALWARE), Fajardo, PR, USA, 20–22 October 2015; pp. 11–20.

35. Xu, K.; Li, Y.; Deng, R.H.; Chen, K. DeepRefiner: Multi-layer Android Malware Detection System Applying Deep Neural Networks. In Proceedings of the 2018 IEEE European Symposium on Security and Privacy, London, UK, 24–26 April 2018; pp. 473–487.

36. Ullah, F.; Srivastava, G.; Ullah, S. A malware detection system using a hybrid approach of multi-heads attention-based control flow traces and image visualization. J. Cloud Comput. 2022, 11, 1–21.

37. Amin, M.; Tanveer, T.A.; Tehseen, M.; Khan, M.; Khan, F.A.; Anwar, S. Static malware detection and attribution in android byte-code through an end-to-end deep system. Future Gener. Comput. Syst. 2020, 102, 112–126.

38. Oak, R.; Du, M.; Yan, D.; Takawale, H.; Amit, I. Malware detection on highly imbalanced data through sequence modeling. In Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 37–48.

39. Alzaylaee, M.K.; Yerima, S.Y.; Sezer, S. DL-Droid: Deep learning based android malware detection using real devices. Comput. Secur. 2020, 89, 101663.

40. Mahindru, A.; Sangal, A. MLDroid—framework for Android malware detection using machine learning techniques. Neural Comput. Appl. 2021, 33, 5183–5240.

41. Vu, L.N.; Jung, S. AdMat: A CNN-on-matrix approach to Android malware detection and classification. IEEE Access 2021, 9, 39680–39694.

42. Arp, D.; Spreitzenbarth, M.; Hubner, M.; Gascon, H.; Rieck, K.; Siemens, C. Drebin: Effective and explainable detection of android malware in your pocket. In Proceedings of the NDSS, San Diego, CA, USA, 23–26 February 2014; Volume 14, pp. 23–26.

43. Wei, F.; Li, Y.; Roy, S.; Ou, X.; Zhou, W. Deep ground truth analysis of current android malware. In Proceedings of the Detection of Intrusions and Malware, and Vulnerability Assessment: 14th International Conference, DIMVA 2017, Bonn, Germany, 6–7 July 2017; Proceedings 14. pp. 252–276.

44. Lashkari, A.H.; Kadir, A.F.A.; Taheri, L.; Ghorbani, A.A. Toward Developing a Systematic Approach to Generate Benchmark Android Malware Datasets and Classification. In Proceedings of the 2018 International Carnahan Conference on Security Technology (ICCST), Montreal, QC, Canada, 22–25 October 2018; pp. 1–7.

45. Chen, K.; Wang, P.; Lee, Y.; Wang, X.; Zhang, N.; Huang, H.; Zou, W.; Liu, P. Finding unknown malice in 10 seconds: Mass vetting for new threats at the google-play scale. In Proceedings of the 24th USENIX Security Symposium (USENIX Security 15), Washington, DC, USA, 12–14 August 2015; pp. 659–674.

46. Allix, K.; Bissyandé, T.F.; Klein, J.; Le Traon, Y. Androzoo: Collecting millions of android apps for the research community. In Proceedings of the 13th International Conference on Mining Software Repositories, Austin, TX, USA, 14–15 May 2016; pp. 468–471.

47. Yerima, S.Y.; Sezer, S. DroidFusion: A Novel Multilevel Classifier Fusion Approach for Android Malware Detection. IEEE Trans. Cybern. 2019, 49, 453–466.

48. Alomari, E.S.; Nuiaa, R.R.; Alyasseri, Z.A.A.; Mohammed, H.J.; Sani, N.S.; Esa, M.I.; Musawi, B.A. Malware detection using deep learning and correlation-based feature selection. Symmetry 2023, 15, 123.

49. Alexandropoulos, S.A.N.; Kotsiantis, S.B.; Vrahatis, M.N. Data preprocessing in predictive data mining. Knowl. Eng. Rev. 2019, 34, e1.

50. Dogan, A.; Birant, D. Machine learning and data mining in manufacturing. Expert Syst. Appl. 2021, 166, 114060.

51. Acikmese, Y.; Alptekin, S.E. Prediction of stress levels with LSTM and passive mobile sensors. Procedia Comput. Sci. 2019, 159, 658–667.

52. Almahmoud, M.; Alzu’bi, D.; Yaseen, Q. ReDroidDet: Android malware detection based on recurrent neural network. Procedia Comput. Sci. 2021, 184, 841–846.

53. Bulut, I.; Yavuz, A.G. Mobile malware detection using deep neural network. In Proceedings of the 2017 25th Signal Processing and Communications Applications Conference (SIU), Antalya, Turkey, 15–18 May 2017; pp. 1–4.

54. Kriegeskorte, N.; Golan, T. Neural network models and deep learning. Curr. Biol. 2019, 29, R231–R236.

55. Yeboah, P.N.; Baz Musah, H.B. NLP technique for malware detection using 1D CNN fusion model. Secur. Commun. Netw. 2022, 2022, 2957203.

56. Parameswaran Lakshmi, S. A lightweight 1-D CNN Model to Detect Android Malware on the Mobile Phone. Ph.D. Thesis, National College of Ireland, Dublin, Ireland, 2020.

57. Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

58. Lindemann, B.; Maschler, B.; Sahlab, N.; Weyrich, M. A survey on anomaly detection for technical systems using LSTM networks. Comput. Ind. 2021, 131, 103498.

59. Feizollah, A.; Anuar, N.B.; Salleh, R.; Wahab, A.W.A. A review on feature selection in mobile malware detection. Digit. Investig. 2015, 13, 22–37.

60. Wu, Y.; Li, M.; Zeng, Q.; Yang, T.; Wang, J.; Fang, Z.; Cheng, L. DroidRL: Feature selection for android malware detection with reinforcement learning. Comput. Secur. 2023, 128, 103126.

61. Mimura, M. Impact of benign sample size on binary classification accuracy. Expert Syst. Appl. 2023, 211, 118630.

62. Bisong, E. Introduction to Scikit-learn. In Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners; Apress: Berkeley, CA, USA, 2019; pp. 215–229.

63. McKinney, W. pandas: A foundational Python library for data analysis and statistics. Python High Perform. Sci. Comput. 2011, 14, 1–9.

64. Calik Bayazit, E.; Koray Sahingoz, O.; Dogan, B. Deep learning based malware detection for android systems: A Comparative Analysis. Teh. Vjesn. 2023, 30, 787–796.

65. Guan, J.; Jiang, X.; Mao, B. A method for class-imbalance learning in android malware detection. Electronics 2021, 10, 3124.

66. Akremi, A. ForensicTwin: Incorporating Digital Forensics Requirements Within a Digital Twin. Computers 2025, 14, 115.

| Author | Detection Approach | Feature Extraction Method | Dataset | ML Algorithm | Accuracy |

|---|---|---|---|---|---|

| [28] | Machine deterministic symbolism and semantic modeling for Android malware detection. | Opcode/bytecode analysis | Drebin | RF | 97% |

| [29] | Application call graphs modeled as Markov chains, extracting API call sequences with MaMaDroid. | API call analysis | Drebin, OldBenign | RF | 94% |

| [30] | Feature selection applied to static features for malware detection. | Requested permissions, API calls, intents, URLs, strings | 200,000 Android apps | LSSVM | 98.7% |

| [31] | Decompilation, model discovery, transformation, integration, and event generation. | Java class and intent analysis | AMD | MEGDroid | 91.6% |

| Author | Detection Approach | Feature Extraction Method | Dataset | Selected DL Models | Accuracy |

|---|---|---|---|---|---|

| [35] | Applying two different detection layers to achieve efficient detection and validating with DeepRefiner. | Opcode/bytecode analysis | Google Play, VirusShare, MassVet | LSTM | 97.74% |

| [36] | Combining ACGs with malware images as a new feature extraction method. | Bytecode analysis | CIC-InvesAndMal2019 | CNN | 99.27% |

| [37] | Malware attributes detected by vectorizing opcodes with one-hot encoding before applying DL. | Opcode analysis | AMD, VirusShare | BiLSTM | 99.9% |

| [38] | Created a simulated real-world dataset (imbalanced) to evaluate malware detection with BERT. | Opcode analysis | Google Play | LSTM | 91.9% |

| [39] | Used DynaLog to extract features from logs and DL-Droid for classification. | Code instrumentation analysis (Java) | Intel Security | DL | 99.6% |

| [40] | Feature selection for malware detection using ML/DL models. | Java classes, API calls at runtime, permissions | Android Permissions Dataset, Security and Computer Dataset | DL (MLDroid framework) | 98.8% |

| [41] | Characterizing apps as images, creating adjacency matrices, then applying CNN with the AdMat framework. | API calls, opcodes, information flow | Drebin, AMD | CNN | 98.2% |

| Dataset | Time of Collection | Malware Samples | Source |

|---|---|---|---|

| DREBIN | August 2010–October 2012 | 5560 | https://drebin.mlsec.org/ (accessed on 20 October 2025) |

| Android Malware Genome Project | August 2010–October 2011 | 1260 | http://www.malgenomeproject.org (accessed on 10 March 2025) |

| Contagio | December 2011–March 2013 | 1150 | https://contagiodump.blogspot.com |

| VirusShare | 2018–2020 | 4038 | https://virusshare.com (accessed on 10 March 2025) |

| CICAndMal2017 | 2017 | 365 | https://www.unb.ca/cic/datasets/andmal2017.html (accessed on 12 March 2025) |

| MassVet | 2015 | 127,429 | https://massvis.mit.edu (accessed on 12 March 2025) |

| VirusSign | 2011 | 146 | https://www.virussign.com (accessed on 12 March 2025) |

| VirusTotal | 2012–2018 | Not available | https://www.virustotal.com (accessed on 10 March 2025) |

| AndroZoo | Not available | 1,000,000 | https://androzoo.uni.lu (accessed on 14 March 2025) |

| Android Malware Dataset for ML | 2018 | 15,036 | https://www.kaggle.com (accessed on 14 March 2025) |

| Dataset | Scenario | Model (Layers) | Neurons | Epochs |

|---|---|---|---|---|

| Android | 1 | DNN: ReLU, 3 BatchNorm, Dropout(0.2, 0.3) | (32, 64, 128) | 100 |

| Android | 1 | CNN: ReLU, 2 Conv(32, 64; k = 5), 2 MaxPool(2), Flatten, BatchNorm, Dropout(0.3, 0.3) | (128, 200) | 100 |

| Android | 1 | RNN: RNN(128), tanh/ReLU, 3 BatchNorm, Flatten, Dropout(0.4) | (128, 200) | 100 |

| Android | 1 | LSTM: LSTM(256), tanh, 3 BatchNorm, Flatten, ReLU, Dropout(0.3, 0.3) | (64, 128) | 100 |

| Android | 1 | CNN-LSTM: ReLU, 2 Conv(64, 128; k = 4), 2 MaxPool(2), LSTM(64; tanh), 5 BatchNorm, Flatten, Dropout(0.4) | (128) | 100 |

| Android | 2 | DNN: ReLU, 3 BatchNorm, Dropout(0.5, 0.4) | (32, 64, 128) | 100 |

| Android | 2 | CNN: ReLU, 2 Conv(128, 128; k = 3), 2 MaxPool(2), Flatten, 5 BatchNorm, Dropout(0.5, 0.4) | (128, 256) | 100 |

| Android | 2 | RNN: RNN(256), tanh/ReLU, 2 BatchNorm, Flatten, Dropout(0.3) | (64, 128) | 100 |

| Android | 2 | LSTM: LSTM(200), tanh, 3 BatchNorm, Flatten, ReLU, Dropout(0.4, 0.3) | (128, 200) | 100 |

| Android | 2 | CNN-LSTM: ReLU, 2 Conv(128, 128; k = 4), 2 MaxPool(2), LSTM(200; tanh), 5 BatchNorm, Flatten, Dropout(0.4) | (128) | 100 |

| CIC | 1 | DNN: ReLU, 2 BatchNorm, Dropout(0.2, 0.3) | (32, 64, 128) | 100 |

| CIC | 1 | CNN: ReLU, 2 Conv(32, 64; k = 5), 2 MaxPool(2), 2 Flatten, BatchNorm, Dropout(0.2, 0.3) | (32, 64) | 100 |

| CIC | 1 | RNN: RNN(32), tanh/ReLU, 2 BatchNorm, 2 Flatten, Dropout(0.2, 0.3) | (32, 64) | 100 |

| CIC | 1 | LSTM: LSTM(64), tanh, BatchNorm, Flatten, Dropout(0.4, 0.4) | (32, 64) | 100 |

| CIC | 1 | CNN-LSTM: ReLU, 2 Conv(16, 32; k = 5), 2 MaxPool(2), LSTM(64; tanh), 2 BatchNorm, Flatten, Dropout(0.2) | (32) | 100 |

| CIC | 2 | DNN: ReLU, 2 BatchNorm, Dropout(0.3, 0.4) | (64, 128, 256) | 100 |

| CIC | 2 | CNN: ReLU, 3 Conv(64, 128, 200; k = 5), 2 MaxPool(2), Flatten, BatchNorm, Dropout(0.3, 0.4) | (64, 128) | 100 |

| CIC | 2 | RNN: RNN(256), tanh/ReLU, 2 BatchNorm, Flatten, Dropout(0.3, 0.4) | (64, 128) | 100 |

| CIC | 2 | LSTM: LSTM(256), tanh, BatchNorm, Flatten, Dropout(0.4, 0.4) | (64, 128) | 100 |

| CIC | 2 | CNN-LSTM: ReLU, 2 Conv(64, 128; k = 3), 2 MaxPool(2), LSTM(200; tanh), 3 BatchNorm, Flatten, Dropout(0.3) | (128) | 100 |

| Models | Accuracy | F1-Score | Precision | Recall | Training Time | Testing Time | CPU Usage |

|---|---|---|---|---|---|---|---|

| DNN | 97.98% | 98% | 98% | 98% | 26.42 s | 0.234 s | 57.10% |

| CNN | 98.07% | 98% | 98% | 98% | 53.1 s | 0.195 s | 5.20% |

| RNN | 98.00% | 98% | 98% | 98% | 47.01 s | 0.198 s | 15.20% |

| LSTM | 98.07% | 98% | 98% | 98% | 70.75 s | 0.199 s | 58.20% |

| CNN-LSTM | 96.00% | 96% | 96% | 96% | 145.97 s | 0.224 s | 39.80% |

| Models | Accuracy | F1-Score | Precision | Recall | Training Time | Testing Time | CPU Usage |

|---|---|---|---|---|---|---|---|

| DNN | 96.25% | 97% | 97% | 97% | 82.35 s | 47.24 s | 57.2% |

| CNN | 96.58% | 96% | 96% | 96% | 98.29 s | 78.75 s | 52.69% |

| RNN | 96% | 96% | 96% | 96% | 38.03 s | 0.722 s | 22.10% |

| LSTM | 96.86% | 97% | 97% | 97% | 70.38 s | 0.244 s | 14.0% |

| CNN-LSTM | 95.0% | 95.0% | 95.0% | 95.0% | 63.08 s | 0.4058 s | 12.09% |

| Models | Accuracy | F1-Score | Precision | Recall | Training Time | Testing Time | CPU Usage |

|---|---|---|---|---|---|---|---|

| DNN | 96.65% | 96.75% | 93.97% | 99.70% | 589.81 s | 37.54 s | 7.00% |

| CNN | 97.14% | 97.21% | 94.83% | 99.72% | 2631.84 s | 3.70 s | 29.00% |

| RNN | 97.09% | 97.17% | 94.60% | 99.88% | 416.69 s | 5.26 s | 23.29% |

| LSTM | 97.09% | 97.17% | 94.71% | 99.75% | 1155.06 s | 11.66 s | 26.10% |

| CNN-LSTM | 97.20% | 97.27% | 94.75% | 99.93% | 2594.23 s | 20.01 s | 16.70% |

| Models | Accuracy | F1-Score | Precision | Recall | Training Time | Testing Time | CPU Usage |

|---|---|---|---|---|---|---|---|

| DNN | 97.14% | 97.22% | 94.72% | 99.85% | 319.58 s | 6.53 s | 25.50% |

| CNN | 97.26% | 97.33% | 94.91% | 99.89% | 3015.96 s | 13.36 s | 43.90% |

| RNN | 97.40% | 97.46% | 95.15% | 99.89% | 420.63 s | 25.65 s | 23.79% |

| LSTM | 96.82% | 96.90% | 94.28% | 99.68% | 1316.91 s | 2.59 s | 25.90% |

| CNN-LSTM | 97.42% | 97.47% | 95.39% | 99.64% | 3727.84 s | 11.68 s | 19.40% |

| Statistic | Accuracy (%) | F1-Score (%) | Precision (%) | Recall (%) | Train Time (s) | Test Time (s) | CPU Usage (%) |

|---|---|---|---|---|---|---|---|

| Mean | 96.91 | 96.93 | 96.46 | 97.90 | 1112.18 | 25.31 | 26.37 |

| Std. dev. | 0.82 | 0.81 | 1.05 | 1.48 | 1020.25 | 12.05 | 15.64 |

| Model | Description | Expected Output | Actual Output | Accuracy (%) | Inference Time (ms) | CPU Usage (%) | Remarks | Pass/Fail |

|---|---|---|---|---|---|---|---|---|

| CNN | Input valid test image | Correct class label | Correct | 98.5 | 45 | 48 | Highest performance, best accuracy | [✓] Pass |

| DNN | Input valid test image | Correct class label | Mostly correct | 91.3 | 38 | 42 | Fastest but less accurate | [✓] Pass |

| LSTM | Input valid test image | Correct class label | Moderate | 87.6 | 105 | 67 | Lower accuracy, slowest | [✗] Fail |

| CNN-LSTM | Input valid test image | Correct class label | Near-correct | 94.2 | 68 | 55 | Balanced trade-off | [✓] Pass |

| RNN | Input valid test image | Correct class label | Fluctuates | 83.9 | 97 | 63 | Lowest accuracy, unstable predictions | [✗] Fail |

| Author | Methodology | Accuracy (%) | Feature Sel. | Test Time |

|---|---|---|---|---|

| [48] | Dense/LSTM | 98.30 | No | – |

| This Work | LSTM | 98.07 | No | 0.199 s |

| [48] | Dense/LSTM | 94.59 | Yes | – |

| This Work | LSTM | 96.86 | Yes | 0.244 s |

| Author | Methodology | Accuracy (%) | Feature Sel. | Test Time |

|---|---|---|---|---|

| [64] | LSTM | 98.80 | No | – |

| This Work | LSTM | 97.09 | No | 11.66 s |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alfaw, A.; Rouached, M.; Akremi, A. A Resilient Deep Learning Framework for Mobile Malware Detection: From Architecture to Deployment. Future Internet 2025, 17, 532. https://doi.org/10.3390/fi17120532
