MambaNet0: Mamba-Based Sustainable Cloud Resource Prediction Framework Towards Net Zero Goals

Abstract

1. Introduction

1.1. Motivation

1.2. Main Contributions

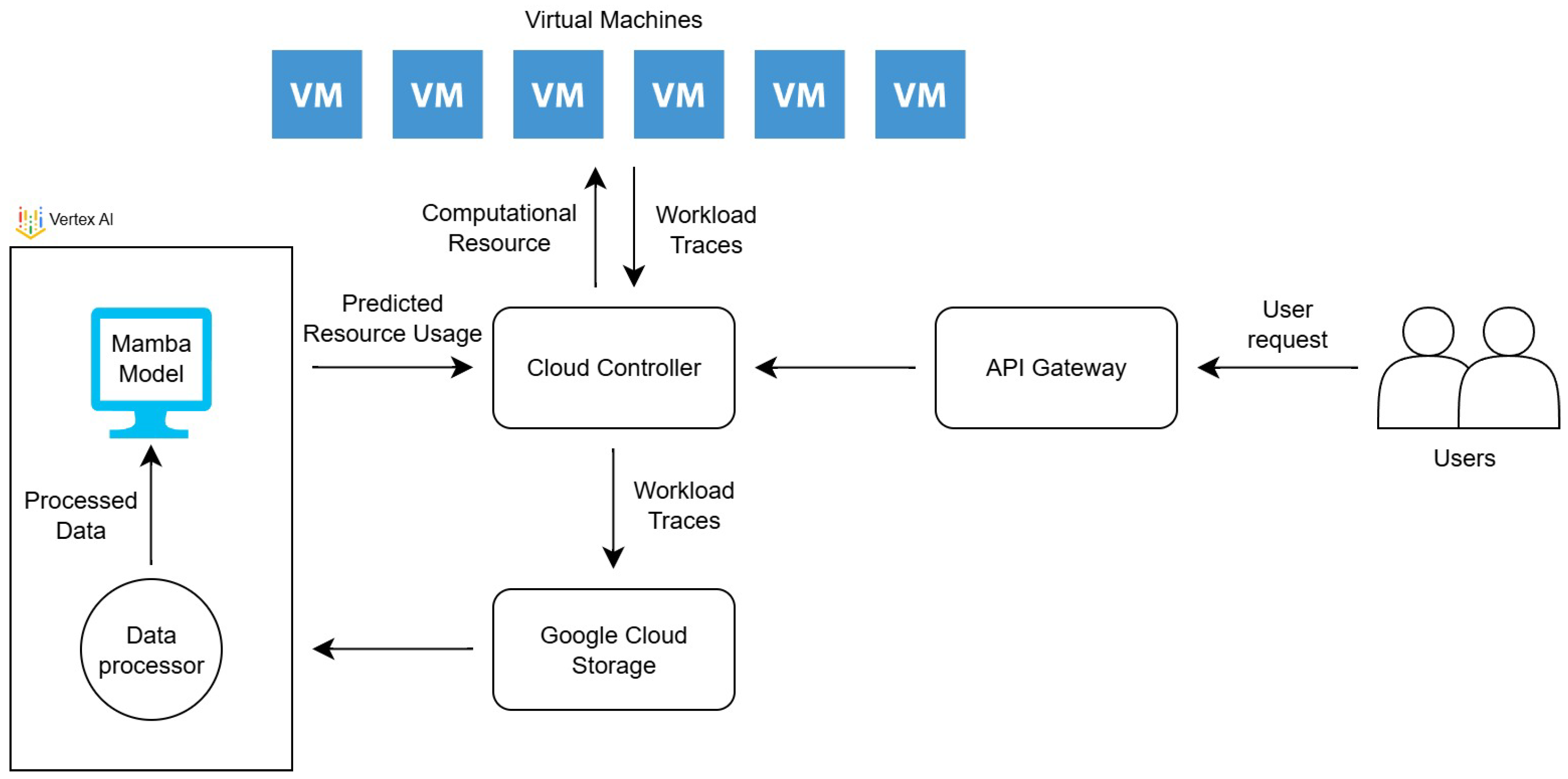

- To propose the MambaNet0 framework, which uses the Mamba model to forecast cloud resource usage so that resources can be provisioned more efficiently and energy waste reduced.

- To deploy and validate the framework on Google Cloud’s Vertex AI environment.

- To evaluate the performance of the proposed framework against baseline models (ARIMA, LSTM, and Chronos) using the MAE, RMSE, and SMAPE error metrics.

2. Related Work

Critical Analysis

3. Methodology: MambaNet0 Framework

3.1. Mamba

3.2. MambaNet0 Framework

3.3. Parameters and Configuration

3.4. Model Training Process

Algorithm 1. Pseudocode of MambaNet0’s training algorithm.

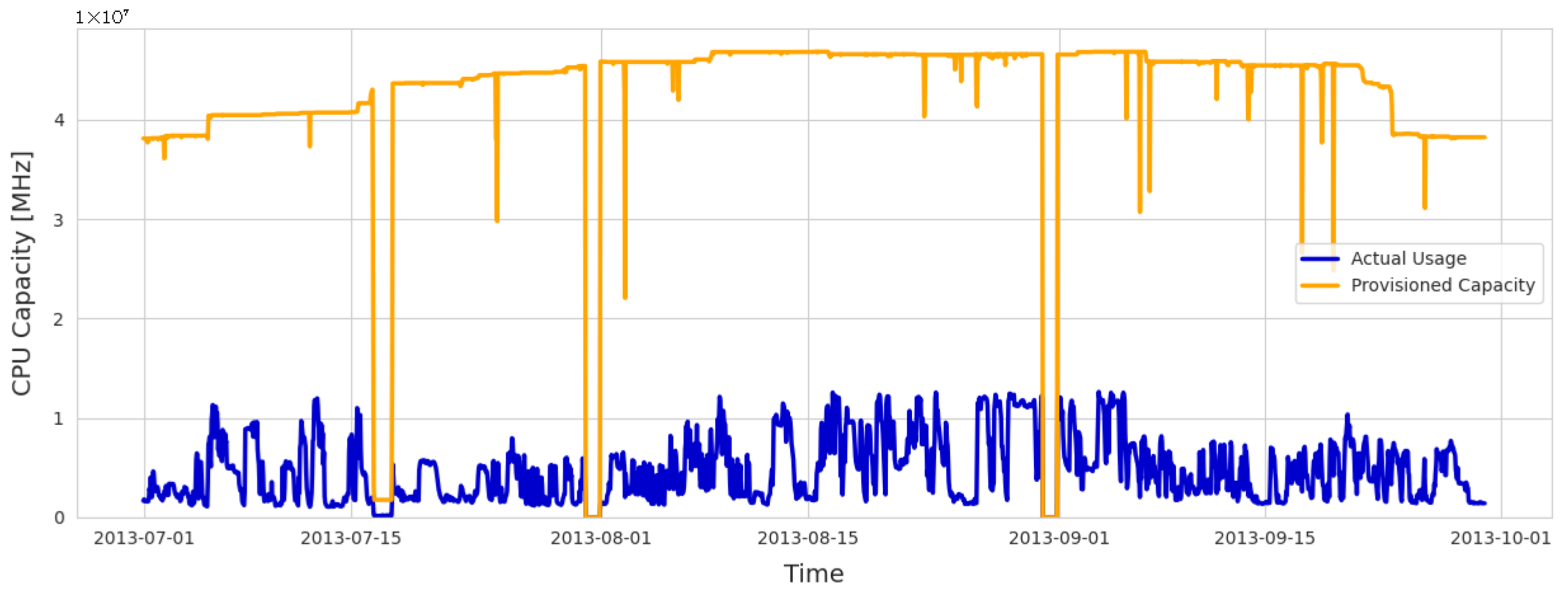

3.5. Dataset

3.6. Data Preprocessing

4. Performance Evaluation

4.1. Experimental Setup

4.2. Baseline Models

4.2.1. Autoregressive Integrated Moving Average (ARIMA)

4.2.2. Long Short-Term Memory (LSTM)

4.2.3. Chronos

4.3. Evaluation Metrics

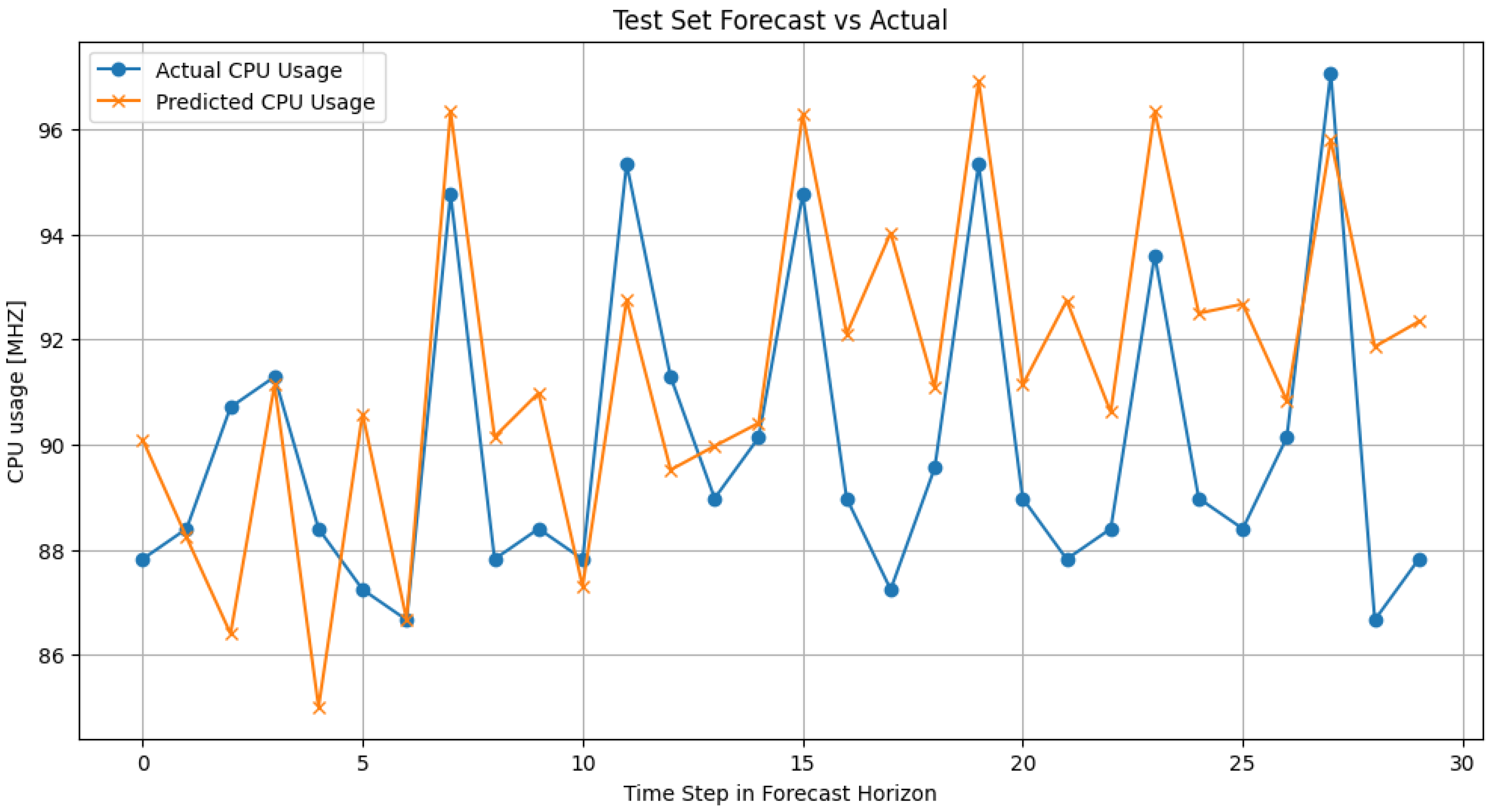
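
The comparison table lists MAE, RMSE, and SMAPE as the metrics reported for MambaNet0. As a minimal sketch of how these three quantities are commonly computed, the plain-Python functions below may help. The function names are illustrative, and the SMAPE variant shown (mean of absolute values in the denominator, zero-denominator points counted as zero error) is an assumption; other definitions exist, and the exact one used in the paper is not stated here.

```python
import math
from typing import Sequence


def _check(actual: Sequence[float], predicted: Sequence[float]) -> None:
    # Both series must be non-empty and aligned point by point.
    if len(actual) == 0 or len(actual) != len(predicted):
        raise ValueError("series must be non-empty and of equal length")


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error."""
    _check(actual, predicted)
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error."""
    _check(actual, predicted)
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


def smape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Symmetric mean absolute percentage error, in percent.

    Assumed variant: |a - p| / ((|a| + |p|) / 2), averaged over all points.
    Points where both values are zero contribute zero error.
    """
    _check(actual, predicted)
    total = 0.0
    for a, p in zip(actual, predicted):
        denom = (abs(a) + abs(p)) / 2
        if denom > 0:
            total += abs(a - p) / denom
    return 100.0 * total / len(actual)


if __name__ == "__main__":
    # Hypothetical CPU-usage values, for illustration only.
    y_true = [1.0, 2.0, 3.0]
    y_pred = [1.0, 2.0, 5.0]
    print(mae(y_true, y_pred), rmse(y_true, y_pred), smape(y_true, y_pred))
```

MAE and RMSE are expressed in the units of the target series, whereas SMAPE is scale-free, which is one reason the three are often reported together.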

4.4. Results

5. Conclusions and Future Directions

Future Directions

- The natural next step for this research is to integrate the MambaNet0 predictive framework with a resource allocation system. While this paper focuses on validating the model’s forecasting capabilities, a complete solution requires a system that acts on these predictions in the cloud environment. Future work could explore a resource allocation scheme, such as the online auction mechanism [31]. Such an integration could use MambaNet0’s predictions to improve cloud resource utilisation, reduce over-provisioning costs, and improve the social welfare of the resulting allocation.

- The framework’s generalisability could be assessed by evaluating its performance on diverse time-series datasets from various cloud providers, which would test its robustness and adaptability in real-world environments.

- The framework’s versatility could be further validated by extending its forecasting capabilities to other cloud resources, such as memory usage, disk I/O, and network traffic. This would test its effectiveness beyond CPU utilisation prediction.

- Moreover, the framework’s environmental impact could be quantified using a digital twin of a cloud environment. By simulating daily energy consumption based on MambaNet0’s forecasts, this approach would estimate potential reductions in energy waste and carbon emissions, directly assessing the framework’s contribution to cloud sustainability [32].

- The Mamba architecture’s flexibility presents an opportunity to create hybrid models. Integrating Mamba with complementary forecasting techniques could leverage their combined strengths to achieve even greater predictive accuracy.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Islam, R.; Patamsetti, V.; Gadhi, A.; Gondu, R.M.; Bandaru, C.M.; Kesani, S.C.; Abiona, O. The future of cloud computing: Benefits and challenges. Int. J. Commun. Netw. Syst. Sci. 2023, 16, 53–65.

2. Mistry, H.K.; Mavani, C.; Goswami, A.; Patel, R. The impact of cloud computing and AI on industry dynamics and competition. Educ. Adm. Theory Pract. 2024, 30, 797–804.

3. Galaz, V.; Centeno, M.A.; Callahan, P.W.; Causevic, A.; Patterson, T.; Brass, I.; Baum, S.; Farber, D.; Fischer, J.; Garcia, D.; et al. Artificial intelligence, systemic risks, and sustainability. Technol. Soc. 2021, 67, 101741.

4. Mahida, A. Comprehensive review on optimizing resource allocation in cloud computing for cost efficiency. J. Artif. Intell. Cloud Comput. 2022, 232, 2–4.

5. Yadav, R.; Zhang, W.; Kaiwartya, O.; Singh, P.R.; Elgendy, I.A.; Tian, Y.C. Adaptive energy-aware algorithms for minimizing energy consumption and SLA violation in cloud computing. IEEE Access 2018, 6, 55923–55936.

6. Velu, S.; Gill, S.S.; Murugesan, S.S.; Wu, H.; Li, X. CloudAIBus: A testbed for AI based cloud computing environments. Clust. Comput. 2024, 27, 11953–11981.

7. Wang, Y.; Yang, X. Cloud Computing Energy Consumption Prediction Based on Kernel Extreme Learning Machine Algorithm Improved by Vector Weighted Average Algorithm. In Proceedings of the 2025 5th International Conference on Artificial Intelligence and Industrial Technology Applications (AIITA), Xi’an, China, 28–30 March 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1355–1361.

8. Yadav, R.; Zhang, W.; Li, K.; Liu, C.; Laghari, A.A. Managing overloaded hosts for energy-efficiency in cloud data centers. Clust. Comput. 2021, 24, 2001–2015.

9. UK Government. Net Zero Strategy: Build Back Greener; Technical Report; UK Government: London, UK, 2021.

10. Zhou, Q.; Sheu, J.B. The use of Generative Artificial Intelligence (GenAI) in operations research: Review and future research agenda. J. Oper. Res. Soc. 2025, 1–21.

11. Lee, R. Large Language Models (LLMs) and Generative Artificial Intelligence (GenAI). In Natural Language Processing: A Textbook with Python Implementation; Springer: Singapore, 2025; pp. 241–273.

12. Gill, S.S.; Jackson, J.; Qadir, J.; Parlikad, A.K.; Alam, M.; Jarvis, L.; Fuller, S.; Morris, G.R.; Shah, R.; Zawahreh, Y.I.; et al. A Toolkit for Sustainable Educational Environment in the Modern AI Era: Guidelines for Mitigating the Misuse of GenAI in Assessments. TechRxiv 2025, 1–18.

13. Van Der Vlist, F.; Helmond, A.; Ferrari, F. Big AI: Cloud infrastructure dependence and the industrialisation of artificial intelligence. Big Data Soc. 2024, 11, 20539517241232630.

14. Egbuhuzor, N.S.; Ajayi, A.J.; Akhigbe, E.E.; Agbede, O.O. Leveraging AI and cloud solutions for energy efficiency in large-scale manufacturing. Int. J. Sci. Res. Arch. 2024, 13, 4170–4192.

15. Chen, Z.; Ma, M.; Li, T.; Wang, H.; Li, C. Long sequence time-series forecasting with deep learning: A survey. Inf. Fusion 2023, 97, 101819.

16. Prangon, N.F.; Wu, J. AI and computing horizons: Cloud and edge in the modern era. J. Sens. Actuator Netw. 2024, 13, 44.

17. Katal, A.; Dahiya, S.; Choudhury, T. Energy efficiency in cloud computing data centers: A survey on software technologies. Clust. Comput. 2023, 26, 1845–1875.

18. Wang, H.; Mathews, K.J.; Golec, M.; Gill, S.S.; Uhlig, S. AmazonAICloud: Proactive resource allocation using amazon chronos based time series model for sustainable cloud computing. Computing 2025, 107, 77.

19. Calheiros, R.N.; Masoumi, E.; Ranjan, R.; Buyya, R. Workload prediction using ARIMA model and its impact on cloud applications’ QoS. IEEE Trans. Cloud Comput. 2014, 3, 449–458.

20. Duggan, M.; Mason, K.; Duggan, J.; Howley, E.; Barrett, E. Predicting host CPU utilization in cloud computing using recurrent neural networks. In Proceedings of the 2017 12th International Conference for Internet Technology and Secured Transactions (ICITST), Cambridge, UK, 11–14 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 67–72.

21. Tang, X.; Liu, Q.; Dong, Y.; Han, J.; Zhang, Z. Fisher: An efficient container load prediction model with deep neural network in clouds. In Proceedings of the 2018 IEEE Intl Conf on Parallel & Distributed Processing with Applications, Ubiquitous Computing & Communications, Big Data & Cloud Computing, Social Computing & Networking, Sustainable Computing & Communications (ISPA/IUCC/BDCloud/SocialCom/SustainCom), Melbourne, Australia, 11–13 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 199–206.

22. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

23. Wang, Z.; Kong, F.; Feng, S.; Wang, M.; Yang, X.; Zhao, H.; Wang, D.; Zhang, Y. Is Mamba effective for time series forecasting? Neurocomputing 2025, 619, 129178.

24. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

25. Hodson, T.O.; Over, T.M.; Foks, S.S. Mean squared error, deconstructed. J. Adv. Model. Earth Syst. 2021, 13, e2021MS002681.

26. Introduction to Vertex AI. 2025. Available online: https://cloud.google.com/vertex-ai/docs/start/introduction-unified-platform (accessed on 27 July 2025).

27. Siami-Namini, S.; Tavakoli, N.; Namin, A.S. A comparison of ARIMA and LSTM in forecasting time series. In Proceedings of the 2018 17th IEEE international conference on machine learning and applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1394–1401.

28. Ansari, A.F.; Stella, L.; Turkmen, C.; Zhang, X.; Mercado, P.; Shen, H.; Shchur, O.; Rangapuram, S.S.; Arango, S.P.; Kapoor, S.; et al. Chronos: Learning the language of time series. arXiv 2024, arXiv:2403.07815.

29. Model Evaluation in Vertex AI. 2025. Available online: https://cloud.google.com/vertex-ai/docs/evaluation/introduction#forecasting (accessed on 27 July 2025).

30. Kreinovich, V.; Nguyen, H.T.; Ouncharoen, R. How to Estimate Forecasting Quality: A System-Motivated Derivation of Symmetric Mean Absolute Percentage Error (SMAPE) and Other Similar Characteristics; University of Texas at El Paso: El Paso, TX, USA, 2014.

31. Zhang, J.; Yang, X.; Xie, N.; Zhang, X.; Vasilakos, A.V.; Li, W. An online auction mechanism for time-varying multidimensional resource allocation in clouds. Future Gener. Comput. Syst. 2020, 111, 27–38.

32. Henzel, J.; Wróbel, Ł.; Fice, M.; Sikora, M. Energy consumption forecasting for the digital-twin model of the building. Energies 2022, 15, 4318.

| Work | Model | Environment | Metrics | Dataset |
|---|---|---|---|---|
| Calheiros et al. [19] | ARIMA | CloudSim | RMSD, NRMSD, MAD, MAPE | Wikimedia Foundation |
| Duggan et al. [20] | RNN | CloudSim | MAE, MSE | PlanetLab |
| Tang et al. [21] | LSTM | Kubernetes | RMSE | Web and database service load trace |
| Velu et al. [6] | DeepAR | Google Colab | MAE, MSE, MAPE | Bitbrains |
| Wang et al. [18] | Amazon Chronos | Amazon SageMaker | MAE, MSE, MAPE | Bitbrains |
| MambaNet0 (This Paper) | Mamba | Vertex AI | MAE, RMSE, SMAPE | Bitbrains |

| Parameter | Configuration |
|---|---|
| Hidden layers | 3 Mamba layers |
| Hidden dimension | 16 |
| Input size | 16 × 10 × 1 |
| Forecast horizon | 30 |
| Output size | 16 × 30 |
| Optimiser | Adam |
| Learning rate | 0.001 |
| Scheduler | Patience = 10 and factor = 0.5 |
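
To make the parameter table easier to read as a configuration, the sketch below collects its values in a small Python dataclass and illustrates what a scheduler with patience 10 and factor 0.5 would do to the learning rate. Several things here are assumptions rather than statements from the paper: the field names, the reading of 16 × 10 × 1 as batch × input window × features, and the reduce-on-plateau rule itself (the table gives only the patience and factor values). The `PlateauScheduler` class is a hypothetical stand-in, not a library API.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class MambaNet0Config:
    # Values copied from the parameter table; field names are illustrative.
    num_mamba_layers: int = 3
    hidden_dim: int = 16
    batch_size: int = 16        # assumed meaning of the leading 16 in "16 x 10 x 1"
    input_window: int = 10      # assumed: past time steps fed to the model
    num_features: int = 1       # assumed: a single input feature
    forecast_horizon: int = 30
    learning_rate: float = 0.001
    scheduler_patience: int = 10
    scheduler_factor: float = 0.5

    @property
    def input_shape(self) -> Tuple[int, int, int]:
        return (self.batch_size, self.input_window, self.num_features)

    @property
    def output_shape(self) -> Tuple[int, int]:
        return (self.batch_size, self.forecast_horizon)


class PlateauScheduler:
    """Hypothetical reduce-on-plateau rule (an assumption about the scheduler).

    The learning rate is multiplied by `factor` once the validation loss has
    failed to improve for more than `patience` consecutive epochs.
    """

    def __init__(self, lr: float, patience: int, factor: float) -> None:
        self.lr = lr
        self.patience = patience
        self.factor = factor
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> float:
        if val_loss < self.best:
            # Improvement: remember it and reset the counter.
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr *= self.factor
                self.bad_epochs = 0
        return self.lr


if __name__ == "__main__":
    cfg = MambaNet0Config()
    print(cfg.input_shape, cfg.output_shape)
    sched = PlateauScheduler(cfg.learning_rate, cfg.scheduler_patience, cfg.scheduler_factor)
    for epoch in range(12):
        # A flat validation loss, used only to show when the rate is halved.
        print(epoch, sched.step(1.0))
```

Under this rule, a validation loss that stays flat halves the learning rate from 0.001 to 0.0005 on the eleventh consecutive epoch without improvement.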

| Timestamp | CPU Cores | CPU Provisioned [MHZ] | CPU Usage [MHZ] | CPU Usage [%] | Memory Provisioned [KB] | Memory Usage [KB] | Disk Read [KB/s] | Disk Write [KB/s] | Network Received [KB/s] | Network Transmitted [KB/s] |
|---|---|---|---|---|---|---|---|---|---|---|
| 1372629804 | 2 | 5851.998 | 87.779 | 1.500 | 8,218,624.0 | 160.866 | 21.733 | 0.266 | 1.467 | |
| 1372630104 | 2 | 5851.998 | 29.259 | 0.500 | 8,218,624.0 | 0.000 | 2.333 | 0.200 | 1.000 | |
| 1372629804 | 2 | 5851.998 | 27.309 | 0.466 | 8,218,624.0 | 32.066 | 4.200 | 0.133 | 1.067 | |
| 1372629804 | 2 | 5851.998 | 23.407 | 0.400 | 8,218,624.0 | 0.000 | 0.866 | 0.067 | 1.000 | |
| 1372629804 | 2 | 5851.998 | 19.506 | 0.333 | 8,218,624.0 | 0.000 | 0.200 | 0.133 | 1.000 | |
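
A series such as the CPU usage column above has to be cut into (input, target) pairs before a forecasting model can be trained on it. The following is a minimal sketch of a sliding-window split that uses the input length of 10 and forecast horizon of 30 from the parameter table. The function name, the stride of 1, and the synthetic series in the usage example are assumptions for illustration; the paper's actual preprocessing steps are not reproduced here.

```python
from typing import List, Sequence, Tuple

Window = Tuple[List[float], List[float]]


def make_windows(
    series: Sequence[float],
    input_window: int = 10,
    horizon: int = 30,
    stride: int = 1,
) -> List[Window]:
    """Split a univariate series into (past, future) training pairs.

    Each pair holds `input_window` consecutive values as model input and the
    following `horizon` values as the forecasting target.
    """
    if input_window <= 0 or horizon <= 0 or stride <= 0:
        raise ValueError("input_window, horizon and stride must be positive")
    pairs: List[Window] = []
    last_start = len(series) - input_window - horizon
    for start in range(0, last_start + 1, stride):
        split = start + input_window
        past = list(series[start:split])
        future = list(series[split:split + horizon])
        pairs.append((past, future))
    return pairs


if __name__ == "__main__":
    # Synthetic stand-in for a CPU usage [%] trace; not real Bitbrains data.
    cpu_usage = [float(i) for i in range(50)]
    windows = make_windows(cpu_usage)
    print(len(windows), windows[0][0], windows[0][1][:3])
```

A series shorter than `input_window + horizon` (40 points with these defaults) yields no pairs, so very short traces would need to be filtered out or padded before training.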


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chevaphatrakul, T.; Wang, H.; Gill, S.S. MambaNet0: Mamba-Based Sustainable Cloud Resource Prediction Framework Towards Net Zero Goals. Future Internet 2025, 17, 480. https://doi.org/10.3390/fi17100480
