1. Introduction

Ensuring the quality and reliability of software systems is a foundational concern in modern software engineering. As applications become more complex and integrated into critical sectors, rigorous testing is essential to validate correctness and performance prior to deployment. Traditionally, testing processes have relied heavily on manual test case generation and execution, a practice that, while flexible, is time-consuming, error-prone, and difficult to scale in fast-paced development environments [1,2].

To address these limitations, automated testing has emerged as a standard practice, offering efficiency, repeatability, and broader test coverage. A wide range of automation frameworks now supports various testing needs, from low-level unit testing to high-level system and interface validations [3]. However, these tools often require programming expertise and ongoing maintenance to adapt to evolving software features, limiting their accessibility and long-term scalability [1].

Recent advancements in artificial intelligence (AI), particularly in machine learning (ML) and natural language processing (NLP), present promising alternatives. Large language models (LLMs) such as GPT-4 [4], BERT [5], and T5 [6] demonstrate strong capabilities in understanding context and generating human-like language, which can be leveraged to automate test script creation [7,8]. These models enable users to generate test scripts from natural language inputs, reducing the need for programming knowledge and expediting the testing process [9,10]. Recent studies also highlight the growing role of LLM-based agents and Parameter-Efficient Fine-Tuning (PEFT) methods in improving adaptability and scalability in software engineering contexts [11,12].

LLM-based approaches also offer adaptability across different systems, platforms, and programming environments [13]. Their ability to understand and generalize software behavior makes them particularly useful for dynamic applications and cross-platform test case migration [14]. This evolution in testing automation indicates a move from rigid, code-centric tools toward more intuitive, context-aware systems that align with modern development methodologies while remaining potentially complementary to traditional approaches.

Despite these advancements, a significant gap persists in the automation of functional test case generation, particularly in creating test cases that capture high-level user flows and complex system behaviors [15,16,17,18,19]. Addressing this challenge requires models that can reason across structured inputs, user requirements, and multi-layered software interfaces. While state-space models (SSMs), such as Mamba, offer promising capabilities in handling long sequences with high throughput and global context [20,21], general-purpose LLMs often fall short in completeness, correctness, and adaptability when applied to functional testing in dynamic, real-world environments.

1.1. Key Contributions

This work introduces a novel approach to automating software test case generation by leveraging the Codestral Mamba 7B model, fine-tuned with Low-Rank Adaptation (LoRA). Unlike traditional Transformer-based models, which rely on self-attention mechanisms and often face challenges in scalability, computational efficiency, and long-sequence modeling, our method exploits the advantages of SSMs. Specifically, Mamba’s linear-time inference and global context modeling capabilities enable more efficient and scalable test case generation, particularly for complex, real-world software systems.

There are five primary contributions of this paper:

Novel Integration of LoRA with a State-Space Model: We propose and evaluate a method for embedding LoRA matrices into the Codestral Mamba 7B architecture, enabling efficient, domain-specific adaptation. This approach contrasts with existing Transformer-based fine-tuning techniques, which often require full-model updates or lack the linear scalability of SSMs. By focusing on LoRA, we achieve PEFT while preserving Mamba’s inherent advantages in handling long sequences and maintaining computational efficiency.

Dual-Dataset Evaluation with Emphasis on Real-World Applicability: Our method is evaluated on both the CodeXGLUE/CONCODE benchmark and a proprietary TestCase2Code dataset, which comprises real-world manual-to-Pytest conversions. This dual evaluation demonstrates superior performance in syntactic accuracy, semantic alignment, and test coverage compared to baseline Transformer models. Notably, our approach achieves these improvements without the computational overhead typically associated with fine-tuning large Transformer architectures.

Prototype Development (Codestral Mamba_QA): We present a functional chatbot prototype that integrates the fine-tuned Mamba model into a user-friendly interface, showcasing its potential for seamless integration into real-world software testing pipelines. This prototype highlights the practical applicability of our method, offering a scalable and efficient alternative to existing tools like GitHub Copilot or GPT-4-based solutions.

Computational Efficiency and Scalability: The LoRA-based fine-tuning approach significantly reduces the number of trainable parameters, enabling rapid adaptation (e.g., 200 epochs in just 20 min for the proprietary TestCase2Code dataset). This efficiency is particularly advantageous for industrial applications, where quick iteration and low resource consumption are critical. In contrast, Transformer-based models often require extensive computational resources for fine-tuning, limiting their scalability in resource-constrained environments.

Compatibility with CI/CD Workflows: The results demonstrate that LoRA-enhanced state-space models can be effectively integrated into Continuous Integration and Continuous Delivery (CI/CD) workflows. This alignment presents a strong alternative to Transformer-based methods, which often face limitations when dealing with the dynamic and rapidly evolving nature of modern software pipelines. By enhancing both efficiency and adaptability, the proposed approach promotes more sustainable and scalable test automation practices.

1.2. Why This Matters

While Transformer-based models (e.g., CodeT5 [22], GitHub Copilot [23], GPT-4 [4]) have dominated the field of automated test generation, their reliance on self-attention mechanisms introduces computational bottlenecks, particularly for long-sequence tasks and large-scale deployments. In contrast, state-space models like Mamba offer linear-time inference and global context modeling, making them inherently more scalable and efficient for real-world applications. By combining Mamba with LoRA, we not only retain these advantages but also introduce a PEFT strategy that outperforms traditional approaches in both performance and resource utilization.

This work thus bridges a critical gap in the literature, demonstrating that state-space models, when fine-tuned with LoRA, can surpass Transformer-based methods in automated test generation, particularly for complex, dynamic software environments.

1.3. Paper Organization

The remainder of this paper is structured as follows:

Section 2 (Related Work): reviews the state-of-the-art in automated software testing and the application of LLMs for test case generation.

Section 3 (Materials and Methods): details the model architecture, datasets, and prompt engineering strategy.

Section 4 (Experiments and Results): presents the experimental setup, evaluation metrics, and analysis of results.

Section 5 (Discussion): interprets the findings of the present work and critically examines its limitations.

Section 6 (Conclusions): concludes the study and outlines directions for future research.

Appendix A, Appendix B and Appendix C:

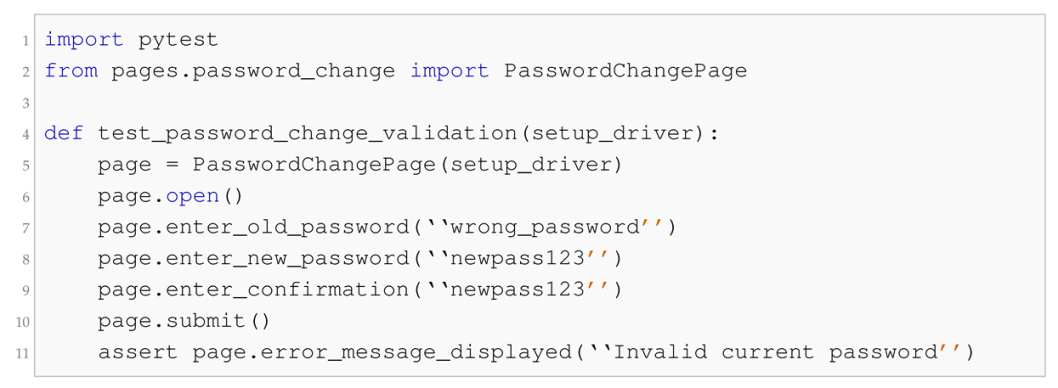

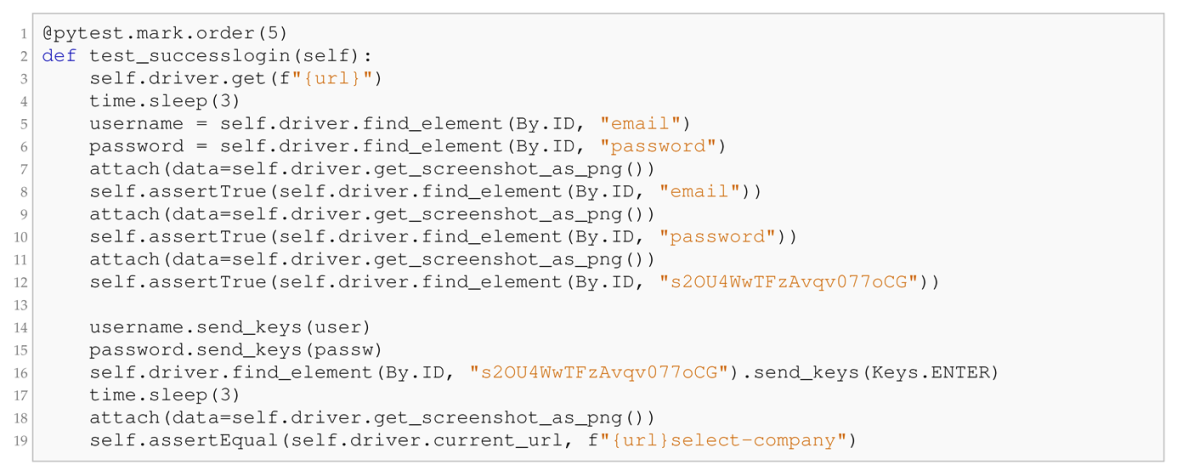

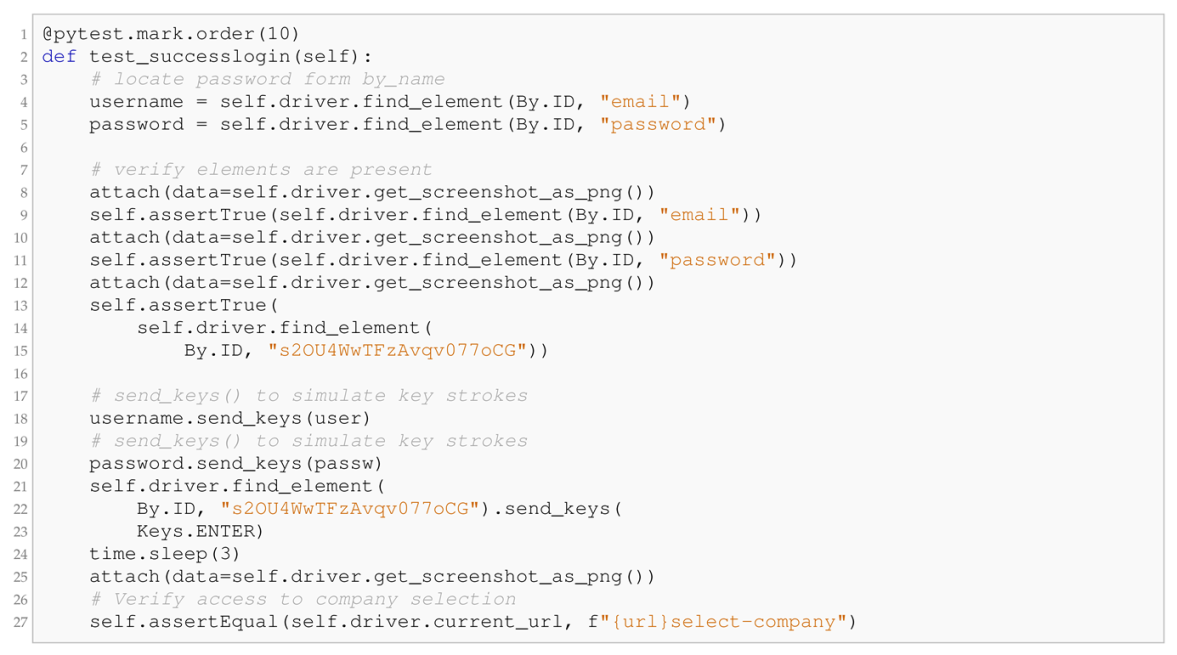

Appendix A provides two non-sensitive dataset samples illustrating data structure and content to ensure transparency and reproducibility.

Appendix B presents a representative prompt–response pair from the proprietary TestCase2Code dataset used for fine-tuning the Codestral Mamba 7B model, clarifying the prompt engineering process.

Appendix C includes representative prompt–response examples of model-generated Pytest code (Table A1, Table A2, Table A3, Table A4, Table A5 and Table A6), demonstrating the model’s behavior under varying configurations and its ability to produce precise, reusable, and semantically coherent test cases.

2. Related Work

2.1. Automated Software Testing: From Traditional Frameworks to AI-Assisted Approaches

The evolution of automated software testing has been closely tied to the adoption of agile and DevOps practices, where rapid iteration cycles demand efficient and reliable validation mechanisms. Traditional frameworks such as Selenium and JUnit [24] have laid the groundwork for test automation, yet the need for extensive scripting and domain expertise often constrains their effectiveness. While these tools excel in structured environments, they struggle to scale in dynamic codebases where requirements evolve continuously [25]. The Testing Pyramid [26] advocates for a balanced distribution of unit, integration, and end-to-end tests, but manual implementation remains a bottleneck, particularly for complex system-level validations.

2.2. Transformer-Based LLMs in Test Automation: Strengths and Limitations

The advent of Transformer-based LLMs such as GPT-4 [4], CodeBERT [27], and CodeT5 [22] has revolutionized AI-assisted software development by enabling natural language-to-code generation. These models have demonstrated proficiency in tasks ranging from code synthesis [28] to vulnerability detection [29], with tools like GitHub Copilot [23] and UniXcoder [30] further refining the alignment between human intent and executable outputs. However, their application to functional test automation, where test cases must capture high-level user flows and cross-component interactions, reveals critical limitations:

Computational Inefficiency: Transformer architectures rely on self-attention mechanisms with quadratic complexity ($O(n^2)$ in the sequence length $n$), which becomes prohibitive for long-sequence tasks such as end-to-end test suites [16].

Context Window Constraints: Limited token windows (e.g., 8K for GPT-4) restrict the models’ ability to process extensive test scenarios or maintain global context across interconnected software modules [14].

Unit-Level Focus: Most prior work [31,32,33] has concentrated on unit-level testing, leaving a gap in generating test cases for system-level behaviors [15,17].

Fine-Tuning Overhead: Adapting large Transformer models (e.g., CodeT5’s 220M parameters) to domain-specific tasks requires full fine-tuning, which is resource-intensive and impractical for many industrial settings [22].

2.3. State-Space Models: A Paradigm Shift for Long-Sequence Tasks

To address these challenges, SSMs such as Mamba [21] have emerged as a compelling alternative. Unlike Transformers, SSMs employ linear-time inference and global context modeling, making them inherently more scalable for tasks requiring long-range dependencies. Key advantages include the following:

Linear Scalability: Selective SSM blocks process sequences in $O(n)$ time, enabling the efficient handling of large test suites without the computational bottlenecks of self-attention [20].

Extended Context Retention: SSMs maintain state across entire sequences, improving coherence in generated test cases that span multiple software layers [18].
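The scaling contrast between self-attention and a state-space recurrence can be illustrated with a toy operation count. The sketch below is not Mamba's selective scan: it assumes a scalar, time-invariant recurrence $h_t = a\,h_{t-1} + b\,x_t$ with hypothetical coefficients `a` and `b`, and it only counts the query-key scores that full self-attention would compute for the same sequence length.

```python
def ssm_scan(xs, a=0.9, b=0.1):
    """Run the scalar recurrence h_t = a*h_{t-1} + b*x_t over xs.

    Toy stand-in for a state-space layer (coefficients are assumptions):
    one state update per token, so work grows linearly with len(xs).
    """
    h = 0.0
    outputs = []
    steps = 0
    for x in xs:
        h = a * h + b * x
        outputs.append(h)
        steps += 1
    return outputs, steps


def attention_pair_count(n):
    """Number of query-key scores full self-attention computes for n tokens."""
    return n * n


if __name__ == "__main__":
    for n in (1_000, 2_000, 4_000):
        _, steps = ssm_scan([1.0] * n)
        print(n, steps, attention_pair_count(n))
```

Doubling the sequence length doubles the number of recurrence steps but quadruples the number of attention scores, which is the gap the two complexity classes describe.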

Despite these strengths, SSMs remain underexplored in test automation, with existing research primarily focusing on general code synthesis rather than functional validation [19].

2.4. PEFT: Bridging the Gap for Functional Testing

Although SSMs offer architectural advantages, their adaptation to specialized domains, such as functional test generation, requires fine-tuning strategies that balance performance and computational cost. LoRA [34] addresses this need by injecting trainable low-rank matrices into pretrained models, reducing the number of updated parameters by orders of magnitude. Recent studies on PEFT techniques further highlight their value in optimizing large models for domain-specific applications without prohibitive resource costs [12,35,36]. Prior applications of LoRA have focused on Transformer-based models [34], but its integration with SSMs remains largely unexplored. This gap presents an opportunity to combine the scalability of SSMs with the efficiency of PEFT, a synergy that our work exploits to advance automated test generation.

2.5. Positioning Our Work in the Landscape

This study distinguishes itself from existing research in the following aspects:

First Integration of LoRA with SSMs for Testing: Unlike prior work that applies LoRA to Transformers (e.g., CodeT5), we leverage it to fine-tune Codestral Mamba 7B, demonstrating that SSMs can surpass Transformer-based models in both performance and resource efficiency for functional test automation.

Focus on Real-World Functional Testing: While datasets like CodeXGLUE/CONCODE [37] evaluate general code synthesis, our proprietary TestCase2Code dataset targets end-to-end test scripts, providing a more realistic benchmark for industrial applications.

Prototype-Driven Validation: We develop Codestral Mamba_QA, a chatbot prototype that showcases the practical deployment of LoRA-fine-tuned SSMs in CI/CD pipelines, a capability lacking in Transformer-based tools like Copilot, which rely on external APIs and lack project-specific adaptability.

2.6. Summary of Research Gaps

Table 1 summarizes key limitations in prior work and how our approach addresses them.

3. Materials and Methods

Software testing ensures software systems conform to specified requirements and perform reliably [38,39]. Test constructs such as individual tests, test cases, and test suites support structured validation across different testing levels and techniques, including black-box, white-box, and gray-box, and they are often augmented by design strategies like boundary value analysis and fuzzing [40,41,42,43].

While manual and rule-based approaches have historically dominated test generation, large language models (LLMs) now enable an automated, context-aware generation of test code. Their ability to understand both natural language and source code facilitates the scalable, dynamic creation of test suites aligned with functional and exploratory testing goals.

3.1. LLM Architecture Grounded in State-Space Models

This work employs the Codestral Mamba 7B model, which is a state-space model (SSM) optimized for efficient long-range sequence modeling [20,21]. By integrating selective SSM blocks with multi-layer perceptrons, Mamba enables linear-time inference. This architecture builds on the findings presented in [44]. Its implementation is publicly available at https://github.com/state-spaces/mamba (accessed on 9 October 2025), promoting reproducibility and encouraging further exploration. The model’s extended context capacity supports the effective retrieval and utilization of information from large inputs such as codebases and documentation, thereby enhancing performance in tasks like debugging and test generation (Mistral AI, Codestral Mamba, available at: https://mistral.ai/en/news/codestral-mamba, accessed on 9 October 2025; NVIDIA Developer Blog, Revolutionizing Code Completion with Codestral Mamba: The Next-Gen Coding LLM, available at: https://developer.nvidia.com/blog/revolutionizing-code-completion-with-codestral-mamba-the-next-gen-coding-llm/, accessed on 9 October 2025).

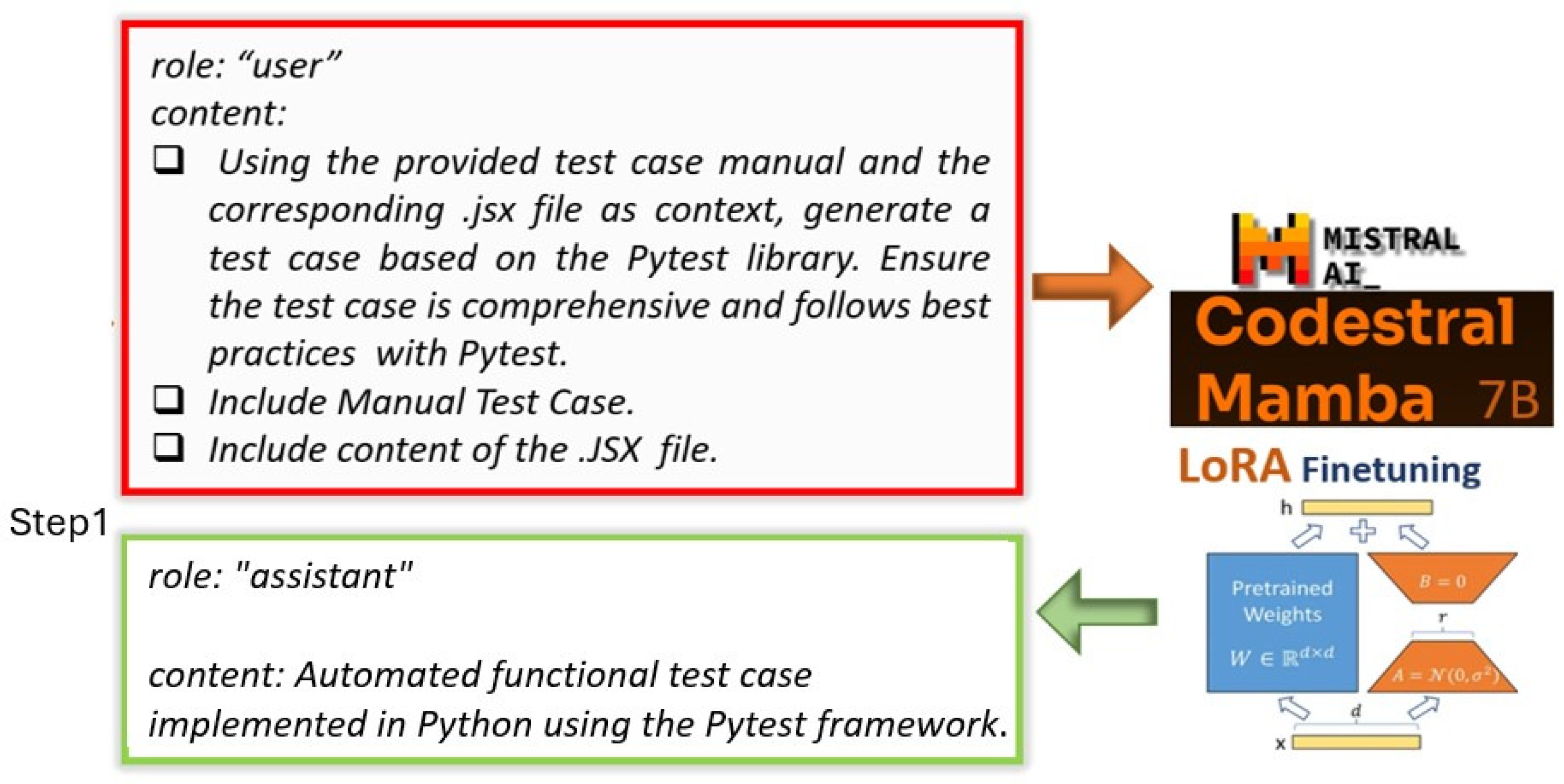

3.2. Integrating LoRA Fine-Tuning into the Mamba-2 Architecture

To specialize the Mamba-2 architecture for functional test generation, we employ LoRA [34], which is a PEFT technique that significantly reduces the number of trainable parameters while maintaining the model’s general-purpose capabilities. Rather than updating the entire set of pretrained weights, LoRA injects trainable low-rank matrices into selected projection layers, allowing for efficient task-specific adaptation.

In the context of Mamba-2, LoRA is integrated into the input projection matrices ($W_{\text{in}}$) and the output projection matrix ($W_{\text{out}}$) (see Figure 1), which are key components in the Selective State-Space Model (SSSM) blocks. The adapted projection weights can be expressed as a combination of the pretrained weights and a low-rank update. This decomposition is formalized in Equation (1):

$$W = W_0 + \Delta W = W_0 + BA \tag{1}$$

where $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$, and $r$ is the rank of the low-rank decomposition with $r \ll \min(d, k)$. This decomposition introduces only a small number of trainable parameters compared to the Mamba model’s total parameter count, resulting in a lightweight adaptation mechanism that preserves its computational efficiency. The modified input projection, referred to as the LoRA-adjusted input transformation, is formally defined in Equation (2):

$$W_{\text{in}}' = W_{\text{in}} + B_{\text{in}} A_{\text{in}} \tag{2}$$

An analogous formulation is applied to the output projection, following the same decomposition principle. This structured approach enables the targeted adaptation of the model to the specific domain of software testing while preserving the integrity of the original pretrained weights and avoiding the computational cost of full-model fine-tuning.
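The low-rank update described in this subsection can be sketched in a few lines of dependency-free Python. This is an illustrative toy, not the training code used in this work: the matrix sizes, the helper names (`matmul`, `lora_effective_weight`, `trainable_params`), and the `scale` argument are assumptions introduced for the example. The frozen weight `w0` is never modified; only the two small factors would be trained.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w0, b_mat, a_mat, scale=1.0):
    """Return W0 + scale * (B @ A) without modifying the frozen W0."""
    delta = matmul(b_mat, a_mat)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(w0, delta)
    ]


def trainable_params(d, k, r):
    """Parameters in B (d x r) plus A (r x k)."""
    return r * (d + k)


if __name__ == "__main__":
    d, k, r = 6, 4, 2  # toy sizes, chosen only for illustration
    rng = random.Random(0)
    w0 = [[rng.gauss(0, 1) for _ in range(k)] for _ in range(d)]
    b_mat = [[0.0] * r for _ in range(d)]  # B starts at zero, so the update starts at zero
    a_mat = [[rng.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]
    w = lora_effective_weight(w0, b_mat, a_mat)
    print("unchanged at initialization:", w == w0)
    print("trainable:", trainable_params(d, k, r), "full matrix:", d * k)
```

For a hypothetical 4096 × 4096 projection at rank 128, `trainable_params` gives 1,048,576 parameters against 16,777,216 in the full matrix, which illustrates why the adaptation remains lightweight.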

By leveraging LoRA within Mamba-2’s architecture, we achieve an efficient and modular specialization pipeline, where general-purpose language capabilities are retained, and only task-relevant components are fine-tuned. This is especially advantageous in the domain of automated test generation, where scalability, low resource consumption, and contextual understanding are critical. As shown in Figure 1, this architecture supports fine-grained control over adaptation, enabling precise alignment between software artifacts (e.g., function definitions, comments) and the resulting test case generation.

In summary, the integration of LoRA into Mamba-2 establishes a flexible and domain-adaptive framework for automated test case generation. This combination brings together the long-context modeling and scalability of SSMs with the efficiency and modularity of low-rank fine-tuning, enabling the generation of high-quality, context-aware functional tests directly from source code.

3.3. Datasets

This work employs two different datasets to support model training and evaluation: the CONCODE dataset [45] from the CodeXGLUE benchmark [37] and the proprietary TestCase2Code dataset. CodeXGLUE/CONCODE is part of the CodeXGLUE benchmark suite, which is a comprehensive platform for evaluating models on diverse code intelligence tasks. For our experiments, we use the text-to-code generation task, specifically the CONCODE dataset (https://github.com/microsoft/CodeXGLUE/tree/main/, accessed on 9 October 2025), which consists of 100K training samples, 2K development samples, and 2K testing samples. Each example includes a natural language description, contextual class information, and the corresponding Java code snippet. The structured format, with fields like `nl` and `code`, facilitates context-aware code generation, making it well suited for benchmarking large language models in source code synthesis from textual prompts.
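As a minimal sketch of how records with `nl` and `code` fields might be read, the snippet below parses one JSON object per line. The one-object-per-line storage and the sample record are assumptions made for illustration; the sample content is invented and is not taken from the benchmark.

```python
import json

# Hypothetical record using the nl/code field names; the content is invented.
SAMPLE_LINE = json.dumps({
    "nl": "return the number of stored items",
    "code": "int function ( ) { return items . size ( ) ; }",
})


def load_concode_lines(lines):
    """Parse JSON-lines text into (description, code) pairs, skipping blank lines."""
    pairs = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        pairs.append((record["nl"], record["code"]))
    return pairs


if __name__ == "__main__":
    for description, code in load_concode_lines([SAMPLE_LINE]):
        print(description, "->", code)
```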

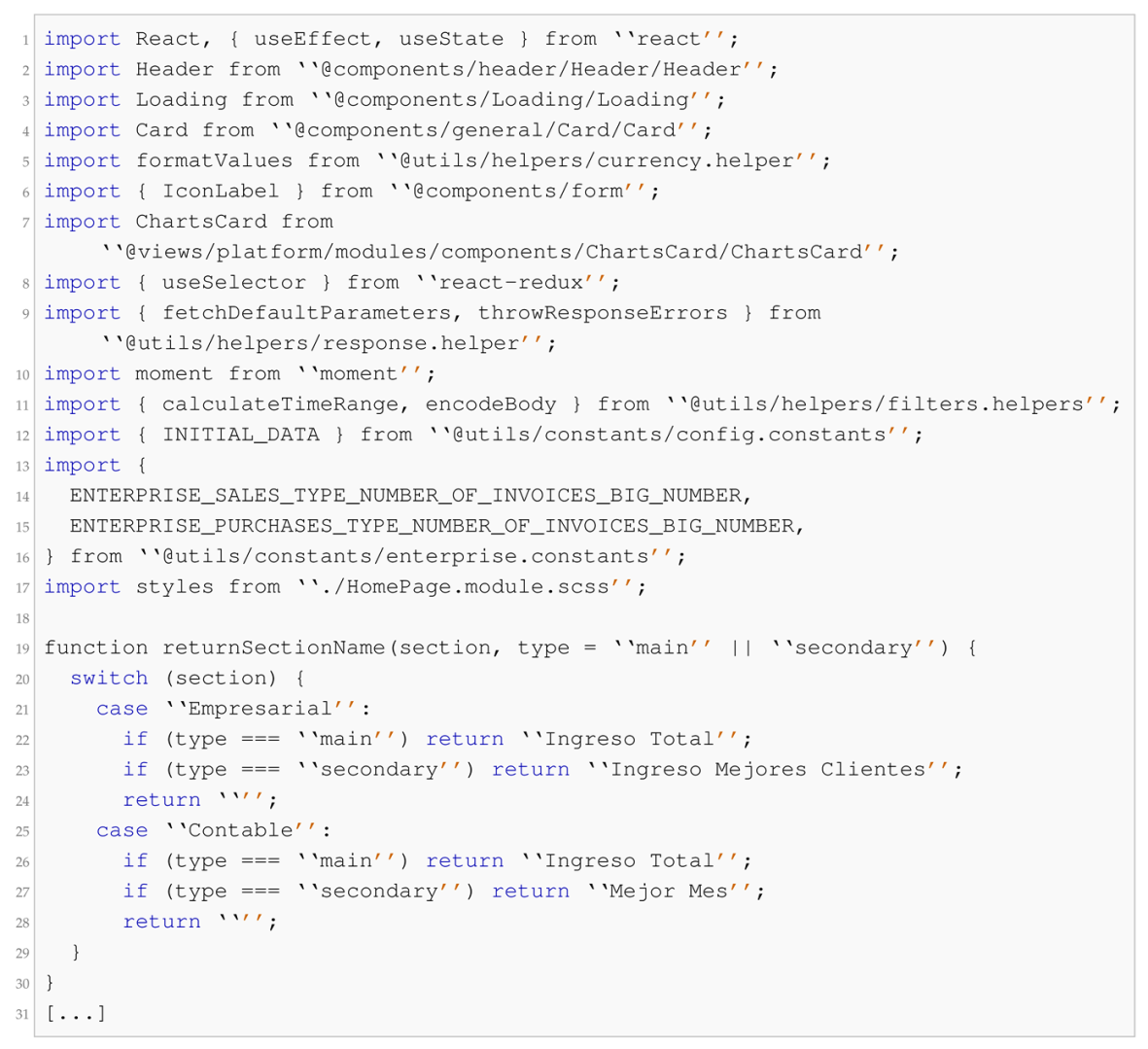

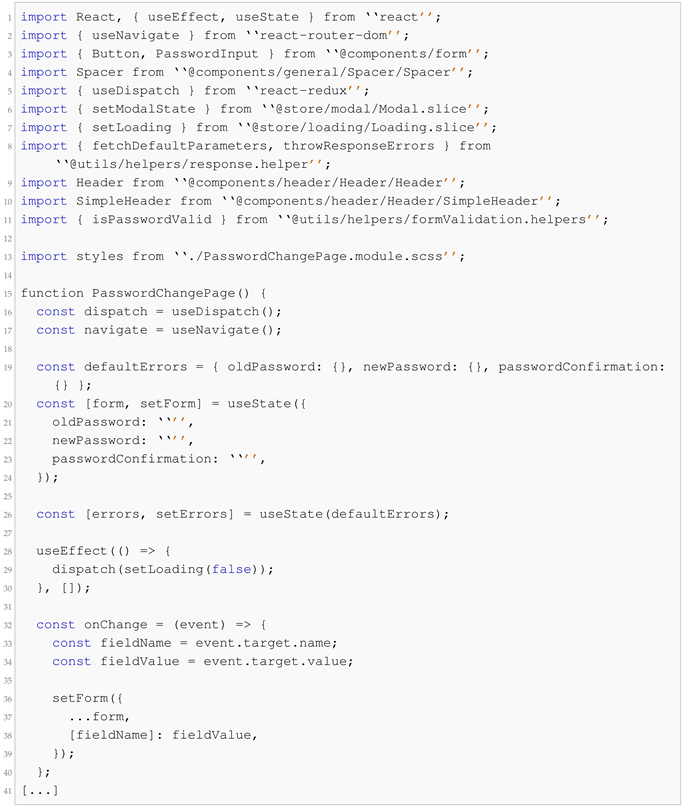

The proprietary TestCase2Code dataset was developed to address the lack of datasets containing functional test cases written in Pytest paired with their corresponding manual descriptions. It comprises 870 real-world examples collected from an enterprise software testing environment, totaling 2,610 files organized into triplets: manual test case (`m.txt`), associated source code in JSX/JavaScript (`c.txt`), and the corresponding Pytest-based automated test (`a.txt`). The overall structure of the dataset is outlined in Table 2.
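The triplet organization can be sketched as a small grouping routine. The exact file-naming scheme of the proprietary dataset is not given here, so the pattern `<case_id>_<role>.txt` (with role `m`, `c`, or `a`) is a hypothetical convention adopted only for this example, as are the function and dictionary key names.

```python
from collections import defaultdict
from pathlib import PurePath

# Role suffixes follow the m/c/a naming mentioned in the text.
ROLES = {"m": "manual", "c": "source", "a": "pytest"}


def group_triplets(filenames):
    """Group names of the assumed form '<case_id>_<role>.txt' into triplets.

    Returns a dict case_id -> {"manual": ..., "source": ..., "pytest": ...},
    keeping only cases for which all three roles are present.
    """
    cases = defaultdict(dict)
    for name in filenames:
        stem = PurePath(name).stem
        case_id, sep, role = stem.rpartition("_")
        if sep and role in ROLES:
            cases[case_id][ROLES[role]] = name
    return {cid: files for cid, files in cases.items() if len(files) == len(ROLES)}


if __name__ == "__main__":
    names = ["001_m.txt", "001_c.txt", "001_a.txt", "002_m.txt"]
    print(group_triplets(names))
```

With 870 complete triplets, such a grouping accounts for 870 × 3 = 2,610 files, matching the totals reported above.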

The manual test case corpus comprises 86,803 words in 870 files, with a vocabulary of 939 unique terms and a lexical diversity score of 92.44, reflecting a specialized yet varied linguistic structure. The Pytest corpus, in turn, contains 20,600 words with an average lexical diversity of 0.66, illustrating a balance between syntactic repetition and functional variability. These statistics demonstrate a consistent yet expressive dataset capable of supporting robust language-to-code learning.

To ensure proper evaluation and mitigate overfitting risks associated with the dataset’s limited size, a stratified partitioning was applied: 770 samples (88.5%) were allocated for training and 100 samples (11.5%) were allocated for testing. Both subsets were extracted from different functional sections of the same enterprise project, ensuring that each test case represents a unique testing scenario. This partitioning strategy preserves domain consistency while preventing data leakage and guaranteeing that no test case is duplicated across subsets. Statistical comparison of the two partitions based on word frequency distributions, lexical diversity, and code length (Welch’s t-test, p < 0.01) confirmed that they are significantly different, ensuring genuine model generalization across unseen functional contexts.

Although the dataset is relatively small and not publicly accessible, it serves as a controlled benchmark for assessing model performance in realistic, domain-specific automated testing contexts. Its compactness favors efficient fine-tuning while preserving linguistic and structural variability adequate for studying generalization patterns. In future work, we plan to expand the dataset and explore regularization techniques to further minimize overfitting risks.

To promote transparency and reproducibility, non-sensitive dataset samples are provided in Appendix A, illustrating their internal organization without disclosing any proprietary information. The dataset remains an internal, non-public resource due to confidentiality constraints, though a formal anonymization and release process is under consideration for future public dissemination.

3.4. Prompt Engineering Strategy

The fine-tuning of the Codestral Mamba 7B model followed a systematic prompt engineering methodology in the Instruct format, where the user provides task-oriented instructions and the assistant generates the corresponding output. This structure ensures alignment between the intended task and the model’s syntactically coherent response, fostering consistency during both training and inference.

For the proprietary TestCase2Code dataset, each prompt combined a manual test case description with its associated .jsx source file to guide the generation of automated test scripts in Pytest. The instructional component provided by the user remained constant across all samples and was defined as follows:

“Using the provided test case manual and the corresponding .jsx file as context, generate a test case based on the Pytest library. Ensure the test case is comprehensive and follows best practices with Pytest.”

Only the embedded manual test case and the corresponding .jsx file varied between samples, providing domain-specific context while preserving a uniform structure throughout the dataset. This standardized design was essential for establishing a stable learning signal during fine-tuning, enabling the model to effectively translate heterogeneous natural-language descriptions and UI source code into executable Pytest implementations.

Figure 2 illustrates this structured prompt–response interaction, where the user block represents the input instruction with contextual elements, and the assistant block denotes the model-generated Pytest test case. This formulation enhanced reproducibility and interpretability across the training process.

To ensure transparency and reproducibility, the general prompt template and a representative example are provided in Appendix B. This example illustrates the structured format used during training, allowing researchers to understand and replicate the experimental setup while preserving data confidentiality.

4. Experiments and Results

4.1. Experimental Setup

The Codestral Mamba 7B model was fine-tuned using the CodeXGLUE and proprietary TestCase2Code dataset. All experiments employed a consistent set of hyperparameters to ensure comparability and reliability. The core model settings such as learning rate (), batch size (1), sequence length (1024 tokens), and optimizer (AdamW) remained fixed across both datasets. LoRA was applied with a rank of 128, and cross-entropy with masking was used as the loss function during training, which was conducted over 200 epochs.
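Cross-entropy with masking can be sketched as follows. Exactly which positions were masked is not specified above; the example assumes the common convention in which prompt tokens carry a mask of 0 so that only response tokens contribute to the loss. The function name and the list-based inputs are illustrative.

```python
import math


def masked_cross_entropy(logits, targets, mask):
    """Mean negative log-likelihood over positions where mask is 1.

    logits:  list of per-position score lists (one score per vocabulary item)
    targets: list of target token ids
    mask:    list of 0/1 flags; 0 excludes the position from the loss
    """
    total, count = 0.0, 0
    for scores, target, keep in zip(logits, targets, mask):
        if not keep:
            continue
        peak = max(scores)  # subtract the max for numerical stability
        log_norm = peak + math.log(sum(math.exp(s - peak) for s in scores))
        total += log_norm - scores[target]
        count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    logits = [[0.0, 0.0, 0.0, 0.0], [5.0, 0.0, 0.0, 0.0]]
    # Second position is masked out (assumed to be a prompt token).
    print(masked_cross_entropy(logits, targets=[2, 1], mask=[1, 0]))
```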

Training was performed on the Vision supercomputer (https://vision.uevora.pt/, accessed on 9 October 2025) at the University of Évora, utilizing a single NVIDIA A100 GPU (40 GB VRAM), 32 CPU cores, and 122 GB of RAM. The model featured approximately 286 million trainable parameters and 7.3 billion frozen parameters, with LoRA matrices occupying 624.12 MiB of GPU memory. This high-performance setup enabled the efficient processing of large-scale model fine-tuning.

The implementation leveraged the official Codestral Mamba 7B release from Hugging Face (https://huggingface.co/mistralai/Mamba-Codestral-7B-v0.1, accessed on 9 October 2025), incorporating architectural features such as RMS normalization, fused operations, and 64-layer Mamba blocks. Detailed layer-wise configurations and parameter dimensions are summarized in Table 3 and Table 4, respectively.

4.2. Results

This paper presents the first integration of LoRA, a PEFT technique, into the Codestral Mamba framework to evaluate its capacity for generating accurate and contextually relevant code from natural language descriptions. Model performance was assessed using two datasets, CONCODE/CodeXGLUE and TestCase2Code, focusing on how LoRA improves code generation quality and adaptability.

4.2.1. Model Performance on the CONCODE/CodeXGLUE Dataset

The CONCODE/CodeXGLUE dataset was used to benchmark the Codestral Mamba model against leading text-to-code generation systems using Exact Match (EM), BLEU, and CodeBLEU metrics. Unlike conventional fine-tuning that may overwrite pretrained knowledge, LoRA enables targeted adaptation while preserving the model’s general reasoning abilities. This balance between specialization and retention enhances robustness and contextual accuracy.

This experiment primarily aimed to validate the feasibility and efficiency of LoRA integration rather than to exceed state-of-the-art benchmarks. Therefore, no extensive hyperparameter tuning or architectural adjustments were applied. Embedding LoRA matrices enabled rapid adaptation to domain-specific data while maintaining the model’s general-purpose capabilities.

As shown in Table 5, the LoRA-enhanced Codestral Mamba achieved marked gains over its baseline, with EM = 22%, BLEU = 40%, and CodeBLEU = 41%. The fine-tuning process required only 1.5 h for 200 epochs, confirming its computational efficiency. Although improvements over top-performing models remain moderate, they substantiate the study’s objective: demonstrating LoRA’s effectiveness in extending Mamba’s adaptability to novel tasks.

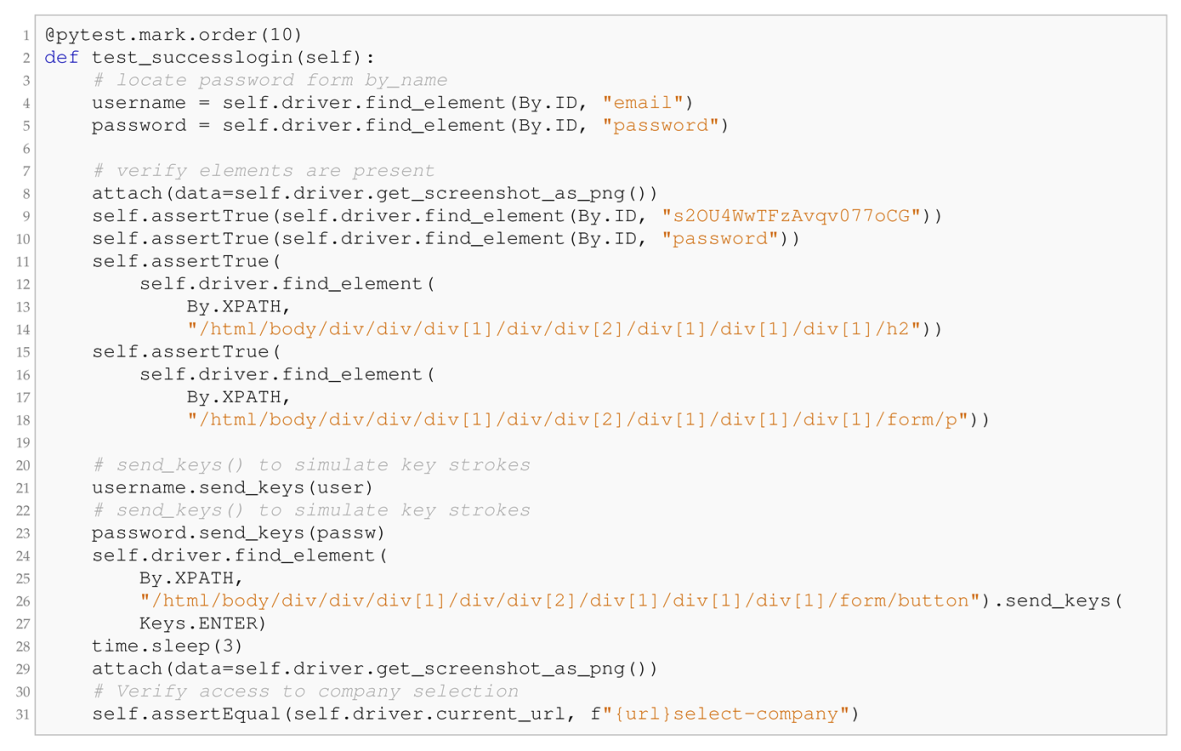

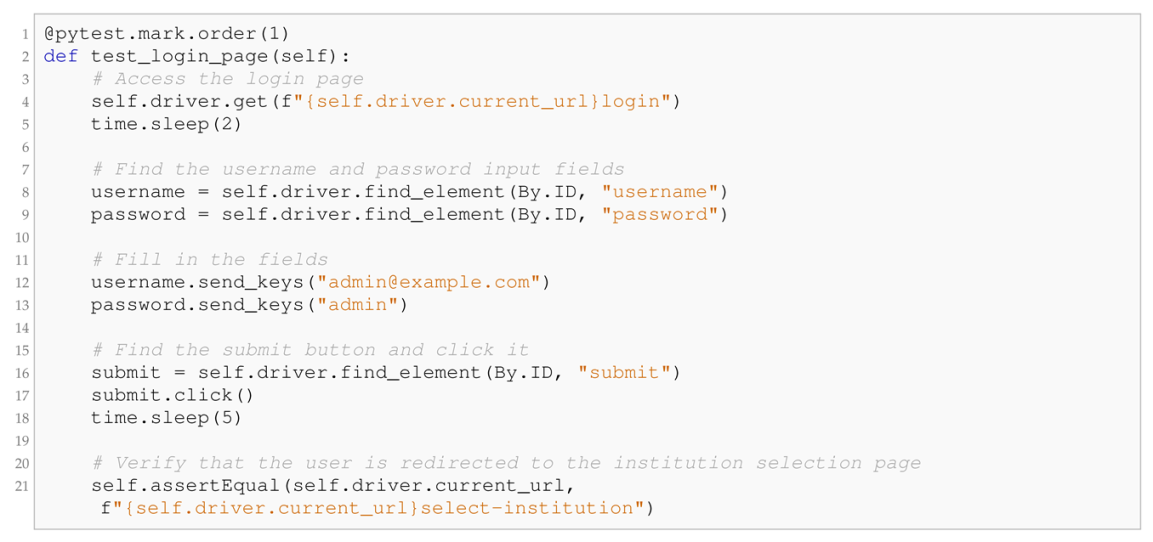

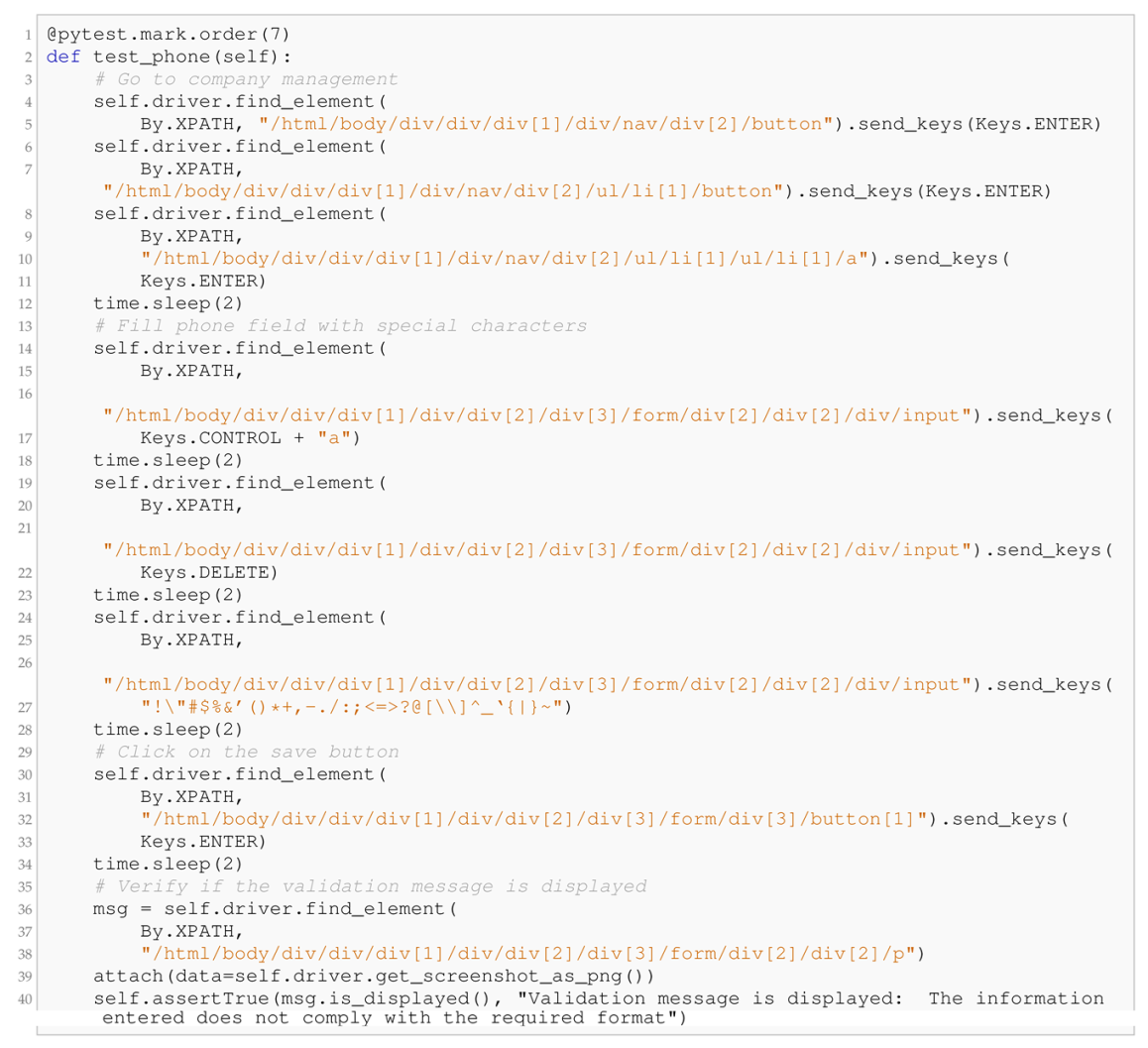

4.2.2. Model Performance on the TestCase2Code Dataset

The proprietary TestCase2Code dataset, derived from real enterprise projects, provided a domain-specific benchmark for assessing test case generation. As shown in Table 6, the baseline Codestral Mamba exhibited limited accuracy across n-gram, weighted n-gram (w-ngram), Syntax Match (SM), Dataflow Match (DM), and CodeBLEU metrics. After LoRA fine-tuning, substantial improvements were observed: n-gram rose from 4.8% to 56.2%, w-ngram from 11.8% to 67.3%, SM from 39.5% to 91.0%, DM from 51.4% to 84.3%, and CodeBLEU from 26.9% to 74.7%. The model thus achieved superior syntactic and semantic alignment with reference test cases.
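CodeBLEU is a weighted combination of the four component scores listed above. As a consistency check, the sketch below recombines the reported components under the assumption that the commonly used equal weights of 0.25 apply; the weights used in the experiments are not stated here, so this is an assumption, and the function name is illustrative.

```python
def codebleu(ngram, weighted_ngram, syntax, dataflow,
             weights=(0.25, 0.25, 0.25, 0.25)):
    """Weighted sum of the four CodeBLEU components (all in the same units)."""
    parts = (ngram, weighted_ngram, syntax, dataflow)
    return sum(w * p for w, p in zip(weights, parts))


if __name__ == "__main__":
    # Component scores (in percent) as reported for the TestCase2Code dataset.
    baseline = codebleu(4.8, 11.8, 39.5, 51.4)
    finetuned = codebleu(56.2, 67.3, 91.0, 84.3)
    print(f"baseline:  {baseline:.3f}")
    print(f"finetuned: {finetuned:.3f}")
```

Under equal weights, the components average to 26.875 for the baseline and 74.7 for the fine-tuned model, in agreement with the reported CodeBLEU values of 26.9% and 74.7%.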

Fine-tuning remained highly efficient, completing 200 epochs in 20 min, demonstrating LoRA’s suitability for iterative refinement and rapid deployment.

4.2.3. Interpretation of Results

Results across both datasets confirm that LoRA significantly enhances the Codestral Mamba model’s ability to generate coherent, contextually relevant, and executable code. The fine-tuning process was both effective and computationally lightweight, underscoring its practicality for continuous testing environments. These findings validate the feasibility of LoRA-based adaptation for large models, paving the way for scalable, efficient, and domain-specific automation in software testing workflows.

4.2.4. Practical Performance Evaluation

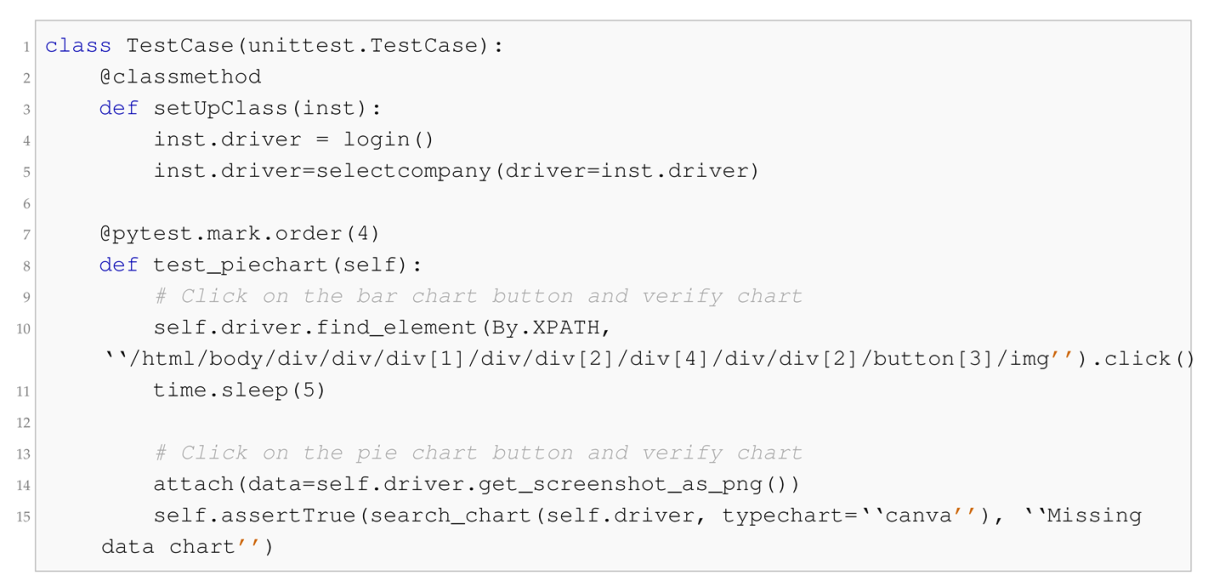

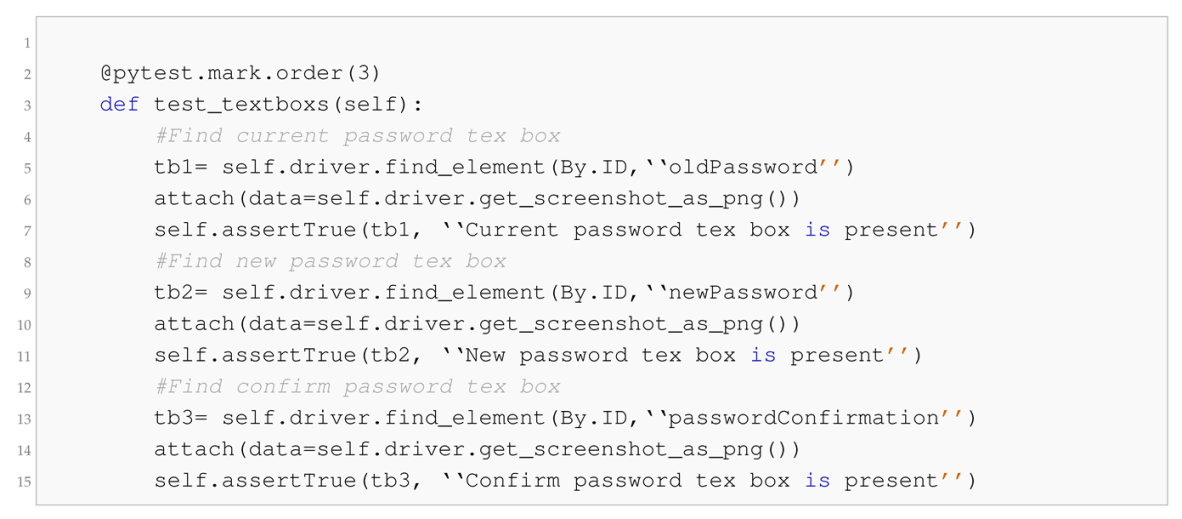

This section presents the practical evaluation of the fine-tuned Codestral Mamba model through its integration into the Codestral Mamba_QA chatbot, which was implemented as a secure web-based service. Unlike purely quantitative assessments, this analysis relied on expert inspection and the visual examination of model outputs. As illustrated in Figure 2, the evaluation followed a prompt–response paradigm in which the system input (user role) combined task-specific instructions and manual test case descriptions with associated .jsx files, while the fine-tuned model, guided by the system prompt, generated the system response (assistant role), namely, executable Pytest scripts.

The model was fine-tuned using the proprietary TestCase2Code dataset, ensuring strict separation between training and test subsets. Tests employed both dataset cases and newly designed instructions to assess the model’s ability to produce syntactically valid and functionally coherent test scripts. Experiments explored variations in LoRA scaling, temperature, and prompt design, providing insights into adaptability and robustness under realistic conditions.

Importantly, the chatbot is currently deployed within the collaborating enterprise as a secure prototype supporting internal quality assurance workflows. This deployment enables iterative validation of the model’s real-time performance in generating test cases and reducing manual effort. Although qualitative and exploratory in nature, the results demonstrate strong potential for integration into continuous testing pipelines.

The evaluation considered several configurable elements influencing generation behavior:

System Prompt: predefined instructions aligning model behavior with Pytest-based test generation best practices.

Temperature: controls output variability; lower values promote determinism, while higher values introduce creativity and diversity.

LoRA Scaling Factor: determines the strength of fine-tuning adaptation; higher values increase domain specificity.

System Input: user-provided instruction, test case description, or both, serving as the basis for generation.

System Response: model-generated output, typically structured Pytest code and, in some cases, explanatory logic.
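The effect of the temperature setting can be made concrete with a small sketch. It assumes the standard formulation in which logits are divided by the temperature before the softmax; the function name and the example logits are illustrative and do not come from the chatbot's implementation.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.0]  # hypothetical next-token scores
    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the highest-scoring token, giving more deterministic output, while higher temperatures flatten the distribution and increase variability.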

To illustrate the model’s behavior across configurations, representative examples of prompt–response pairs are presented in Appendix C (Table A1, Table A2, Table A3, Table A4, Table A5 and Table A6). These include scenarios such as baseline query interpretation, LoRA-enhanced test generation, temperature and scaling influence, extended test coverage, cross-project adaptability, and automated bug prevention. Together, these examples demonstrate the model’s capacity to generate precise, reusable, and semantically coherent Pytest code across diverse testing contexts.

5. Discussion

This study demonstrates the effective use of large language models for automating functional software testing, emphasizing the generation of precise and context-aware test cases. Integrating the Codestral Mamba model with LoRA yielded substantial improvements in CodeBLEU and semantic matching scores, confirming the model’s capability to translate natural-language inputs into executable, functionally coherent Pytest scripts.

Evaluation across two datasets, CONCODE/CodeXGLUE and the proprietary TestCase2Code, revealed complementary strengths. The former validated generalization across diverse coding domains, while the latter assessed domain-specific robustness in real-world enterprise contexts. The LoRA-enhanced model preserved broad adaptability while effectively specializing to project-specific requirements, demonstrating the benefits of PEFT.

The introduction of the TestCase2Code dataset represents a significant contribution toward realistic benchmarking. Constructed from real project artifacts, it includes paired manual and automated test cases, enabling rigorous assessment under conditions that closely mirror industrial practice. On this dataset, the fine-tuned model achieved notable gains in syntactic precision and functional coherence, reinforcing its applicability for enterprise-level testing workflows.

Beyond model optimization, the internally deployed Codestral Mamba_QA chatbot ensures secure, organization-specific integration. Its operation without external dependencies safeguards data confidentiality while supporting adaptive interactions. Dynamic hyperparameter tuning enhances responsiveness and efficiency, while a structured repository for LoRA matrices enables project-level model management, promoting scalability and maintainability.
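One way to picture a structured repository for LoRA matrices is a small registry that maps each project to versioned adapter locations. The sketch below is an assumption for illustration, not the system described here: the class names, the directory layout, and the `adapter.safetensors` file name are all hypothetical.

```python
from dataclasses import dataclass, field
from pathlib import PurePosixPath


@dataclass(frozen=True)
class AdapterRecord:
    """Hypothetical metadata for one stored set of LoRA matrices."""
    project: str
    version: int
    path: PurePosixPath


@dataclass
class LoraAdapterRegistry:
    """In-memory index of LoRA adapters, grouped by project (illustrative only)."""
    root: PurePosixPath
    records: dict = field(default_factory=dict)

    def register(self, project: str) -> AdapterRecord:
        """Reserve the next version slot for a project and return its record."""
        versions = self.records.setdefault(project, [])
        version = len(versions) + 1
        # Assumed layout: <root>/<project>/v<version>/adapter.safetensors
        path = self.root / project / f"v{version}" / "adapter.safetensors"
        record = AdapterRecord(project, version, path)
        versions.append(record)
        return record

    def latest(self, project: str) -> AdapterRecord:
        """Return the most recently registered adapter for a project."""
        versions = self.records.get(project)
        if not versions:
            raise KeyError(f"no LoRA adapter registered for project {project!r}")
        return versions[-1]


registry = LoraAdapterRegistry(root=PurePosixPath("/models/lora"))
registry.register("billing")
registry.register("billing")   # a later re-adaptation of the same project
registry.register("checkout")
current = registry.latest("billing")
```

Keeping adapters versioned per project means the shared base model stays untouched while each project can be re-adapted or rolled back independently.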

Nonetheless, certain limitations merit consideration. Although internal deployment mitigates security risks, the potential exposure of sensitive logic during fine-tuning or inference remains a concern. Biases arising from uneven data representation may also influence test coverage or prioritization. Furthermore, maintaining alignment between evolving software systems and fine-tuned models requires periodic re-adaptation to sustain performance and relevance.

Overall, these findings highlight both the practical benefits and ethical imperatives of deploying LoRA-enhanced state-space models in automated software testing. The study underscores the dual need for technical performance and responsible deployment, paving the way for scalable, secure, and sustainable AI-driven testing solutions.

6. Conclusions

This work demonstrates the viability of leveraging large language models, specifically the Codestral Mamba architecture, in combination with LoRA, to advance automated test case generation. The central contribution lies in the comparative evaluation between the baseline Codestral Mamba model and its LoRA-enhanced counterpart across two datasets: CONCODE/CodeXGLUE and the newly proposed TestCase2Code benchmark. Results show that fine-tuning with LoRA yields improvements in both syntactic precision and functional correctness. These findings provide clear empirical evidence of the effectiveness of PEFT in software testing contexts.

In addition to the main findings, this work offers complementary contributions that reinforce the practical relevance of the approach. The proprietary TestCase2Code dataset, constructed from real-world project data, provides a starting point for evaluating the alignment between manual and automated functional test cases. Although this dataset is not publicly available, and its use is therefore limited to the scope of this work, it nevertheless highlights the potential of project-specific data for advancing research in automated testing. Furthermore, the development of a domain-adapted AI chatbot and the structured management of LoRA matrices demonstrate how these methods can be operationalized in practice, supporting the adaptability, scalability, and long-term maintainability of fine-tuned models.

Taken together, these contributions highlight the transformative potential of LoRA-augmented large language models in software engineering. By improving accuracy, computational efficiency, and adaptability, this work lays a solid foundation for the broader adoption of AI-driven solutions in software quality assurance. The demonstrated results establish a pathway toward more intelligent, reliable, and sustainable test automation practices, positioning this approach as a promising direction for future research and industrial deployment.

Future Work

This study demonstrates notable progress in automated test case generation using the Codestral Mamba model with LoRA. Nonetheless, several research directions remain open to enhance its generalization, applicability, and reproducibility. Expanding dataset diversity is a key priority, particularly by extending beyond selective file inclusion toward full project repositories from platforms such as GitLab. Such datasets would provide richer contextual information, strengthening cross-domain generalization and reducing overfitting risks.

The proprietary TestCase2Code dataset remains restricted due to confidentiality constraints; however, the release of a fully anonymized or synthetic version is planned to ensure compliance with data protection standards while supporting transparency and reproducibility. This process will involve generating synthetic samples and metadata consistent with the original dataset schema, enabling future open-access research.

Further investigations will explore adaptive fine-tuning strategies to balance performance and computational efficiency, facilitating adoption by teams with limited resources. Complementary evaluation metrics such as maintainability, readability, and integration effort, combined with developer and tester feedback, will help align model evaluation with real-world software engineering needs.

The chatbot developed in this study has been deployed within the collaborating enterprise as an early-stage prototype, allowing iterative validation of the model’s practical utility in generating automated test cases and reducing manual testing effort. While the current assessment is qualitative, it demonstrates strong potential for integration into continuous testing pipelines. Future work will include a formal user study involving software professionals to quantitatively assess usability, accuracy, and real-world impact.

Finally, research into continuous adaptation mechanisms and standardized integration frameworks could enable the seamless incorporation of such models into CI/CD environments, ensuring sustained relevance and facilitating broader industrial adoption.