1. Introduction

With the continuing progress of science and technology, daily life increasingly depends on technology and its applications. Artificial Intelligence (AI) [1] plays a central role in this shift, profoundly affecting people’s lives and productivity, and rapid growth in global AI investment in recent years has accelerated its development. Among the many related research areas, computer vision has made remarkable progress, with object detection being one of its most critical tasks [2]. In particular, dense pedestrian detection has attracted significant attention because of its wide range of real-world applications. In intelligent transportation, for instance, pedestrian detection is essential for traffic monitoring, smart intersections, and safe autonomous driving [3]. In public security and crowd management, robust detection is indispensable for monitoring high-density environments such as stadiums, subway stations, and airports [4]. These examples illustrate the urgency and importance of reliable dense pedestrian detection.

Despite its high practical value, pedestrian detection still faces several challenges in real-world scenarios, which also define the main research gaps:

(1) Class imbalance and hard examples: Pedestrians are concentrated in limited regions of the image, while most pixels correspond to background, yielding an overwhelming proportion of negative samples. Moreover, heavy occlusion produces numerous low-quality positives (e.g., pedestrians with only their legs or arms visible), which may cause overfitting if the model relies excessively on such incomplete features;

(2) Scale variation: Pedestrians at different distances from the camera differ greatly in scale, which challenges single-resolution feature extractors;

(3) Occlusion: In crowded scenarios, pedestrians often overlap heavily, so that bounding boxes capture only partial features, making recognition difficult [5].

For example, in the widely used CrowdHuman benchmark dataset, each image contains on average 22.6 persons, a large proportion of whom are heavily occluded [6]. Such high-density crowd images not only exacerbate occlusion but also intensify the challenges posed by appearance similarity and scale variation: when many individuals wear similar clothing or adopt comparable postures, distinguishing between targets becomes increasingly difficult for detection models. Moreover, the wide range of pedestrian sizes, caused by their varying distances from the camera, further complicates feature extraction.

Although many pedestrian-detection algorithms have been developed, significant gaps remain. Traditional anchor-based detectors struggle with occlusion and background clutter, while anchor-free methods often fail in dense scenes [7]. The YOLO family achieves real-time performance but still suffers from redundant features, difficulty in handling hard samples with standard loss functions, and limited cross-dimensional feature modeling. These limitations reveal an urgent need for further improvements.

The aim of this paper is to enhance the detector’s ability to cope with occlusion, appearance similarity, and scale variation, so as to improve the accuracy and stability of pedestrian detection and tracking in crowded environments.

2. Related Work

Real-time efficiency vs. model complexity. Early one-stage detectors such as YOLOv1, YOLOv2, and YOLOv3 [8,9,10] and SSD [11] pioneered end-to-end real-time detection by replacing proposal generation with direct regression on grid cells or anchors. YOLOv1 introduced grid-based prediction of categories, bounding boxes, and confidence, an approach that enabled fast inference but struggled with small and overlapping targets. YOLOv2 improved on this with anchor boxes, multi-scale training, and a Darknet-19 backbone, boosting accuracy while maintaining speed. YOLOv3 further enhanced multi-scale prediction with a Darknet-53 backbone, enabling the detection of objects at three different scales. SSD exploited multi-scale feature pyramids on top of VGG [12] to jointly leverage deep semantics and shallow details. Although these models differ in design, they share the common goal of maximizing real-time efficiency. However, they show limited robustness in dense pedestrian scenarios: small-scale persons are easily missed, heavy occlusion leads to bounding-box confusion, and performance under adverse conditions such as low illumination or cluttered backgrounds remains suboptimal.

To address these weaknesses, later variants, YOLOv4–YOLOv6 [13,14], introduced progressively more complex architectural modifications. YOLOv4 adopted CSPDarknet53 as a backbone, integrated enhanced SPP and PAN in the neck, and applied extensive training strategies (mosaic augmentation, self-adversarial training, CmBN, attention modules), significantly improving multi-scale fusion and robustness. YOLOv5 further incorporated Focus and CSP modules to reduce the number of parameters while maintaining strong feature-fusion capacity. YOLOv6 adopted EfficientRep as a backbone, Rep-PAN for efficient multi-scale aggregation, and a decoupled head with hybrid channels, accelerating convergence and improving accuracy under challenging conditions. These advances indeed improved performance on complex detection tasks, including in crowded pedestrian scenes.

Nevertheless, such improvements come at the cost of higher model complexity, more parameters, and greater computational overhead. This trade-off makes their deployment difficult in real-time dense pedestrian-detection scenarios, such as in surveillance cameras or intelligent transportation systems on edge devices, where efficiency and accuracy must be simultaneously guaranteed. Therefore, the key research gap remains the question of how to balance detection accuracy in dense, occlusion-heavy environments with lightweight computation suitable for real-time deployment.

Class imbalance and hard examples. RetinaNet introduced Focal Loss to address foreground–background imbalance by down-weighting easy negatives [15]. Anchor-free and decoupled-head designs in YOLOX/YOLOv6 further stabilized training and simplified localization [14,16]. However, in dense pedestrian scenes, extreme imbalance persists: many overlapping negatives surround a few ambiguous positives (e.g., truncated or heavily occluded persons). Standard focal-type losses may still underfit these hard cases.
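As a minimal illustration of this down-weighting, the pure-Python sketch below implements the binary Focal Loss, FL(p_t) = −α_t (1 − p_t)^γ log(p_t), for a single prediction. The function names are hypothetical, and α = 0.25, γ = 2 are assumed here because they are the defaults reported for RetinaNet; this is not YOLO11’s training code.

```python
import math

def bce(p: float, y: int) -> float:
    """Plain binary cross-entropy for one foreground probability p and label y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p  # probability assigned to the true class
    return -math.log(max(p_t, 1e-12))  # guard against log(0)

def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Binary focal loss: -alpha_t * (1 - p_t)^gamma * log(p_t)."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # The modulating factor (1 - p_t)^gamma shrinks the loss of well-classified examples
    return alpha_t * (1.0 - p_t) ** gamma * bce(p, y)

# An easy negative (p = 0.05, y = 0) vs. a hard positive (p = 0.3, y = 1)
for p, y in [(0.05, 0), (0.3, 1)]:
    print(f"p={p}, y={y}: BCE={bce(p, y):.4f}, FL={focal_loss(p, y):.6f}")
```

Relative to plain cross-entropy, the easy negative’s loss is scaled by 0.75 × 0.05² ≈ 0.0019 (about 533 times smaller), whereas the hard positive’s is scaled by 0.25 × 0.7² ≈ 0.12, so the gradient signal concentrates on hard examples.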

YOLO11 incorporates the Complete IoU (CIoU) loss to enhance bounding-box regression by jointly considering the IoU overlap, aspect-ratio consistency, and center-point distance between predicted and ground-truth boxes [17]. By integrating these complementary factors, CIoU evaluates localization quality from multiple perspectives rather than relying on IoU alone, thereby improving bounding-box alignment and convergence stability in general detection tasks.
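A minimal pure-Python sketch of CIoU, following its standard definition CIoU = IoU − ρ²(b, b_gt)/c² − αv, where ρ is the distance between box centers, c is the diagonal of the smallest enclosing box, v = (4/π²)(arctan(w_gt/h_gt) − arctan(w/h))² measures aspect-ratio consistency, and α = v/((1 − IoU) + v). Boxes are assumed to be (x1, y1, x2, y2) tuples, the function name is hypothetical, and the small `eps` terms are an implementation assumption; this is a sketch, not YOLO11’s internal implementation.

```python
import math

def ciou(box, gt, eps: float = 1e-7) -> float:
    """Complete IoU between a predicted box and a ground-truth box, both (x1, y1, x2, y2)."""
    # Plain IoU from the intersection and union areas
    iw = max(0.0, min(box[2], gt[2]) - max(box[0], gt[0]))
    ih = max(0.0, min(box[3], gt[3]) - max(box[1], gt[1]))
    inter = iw * ih
    w1, h1 = box[2] - box[0], box[3] - box[1]
    w2, h2 = gt[2] - gt[0], gt[3] - gt[1]
    iou = inter / (w1 * h1 + w2 * h2 - inter + eps)
    # Squared center distance rho^2 and squared enclosing-box diagonal c^2
    rho2 = ((box[0] + box[2] - gt[0] - gt[2]) ** 2 + (box[1] + box[3] - gt[1] - gt[3]) ** 2) / 4.0
    cw = max(box[2], gt[2]) - min(box[0], gt[0])
    ch = max(box[3], gt[3]) - min(box[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio term v and its trade-off weight alpha (eps keeps the denominator positive)
    v = (4.0 / math.pi ** 2) * (math.atan(w2 / (h2 + eps)) - math.atan(w1 / (h1 + eps))) ** 2
    alpha = v / (v - iou + 1.0 + eps)
    return iou - rho2 / c2 - alpha * v

print(ciou((0, 0, 10, 10), (20, 0, 30, 10)))  # disjoint boxes: IoU is 0, CIoU is negative
```

The regression loss is then 1 − CIoU. For two equal 10 × 10 boxes whose centers are 20 px apart, IoU is 0 but the center-distance term still gives CIoU ≈ −0.4, so the loss provides a useful gradient even without overlap.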

However, CIoU also exhibits notable limitations when applied to dense pedestrian detection. First, some of its normalized terms can become numerically unstable in degenerate cases; for example, the denominator of the aspect-ratio trade-off weight α = v/((1 − IoU) + v) approaches zero when the predicted and ground-truth boxes nearly coincide, so implementations must add a small stabilizing constant. Second, its sensitivity to extreme aspect ratios may over-penalize minor shape deviations, which is unfavorable for pedestrian detection, where bounding boxes often vary irregularly due to occlusion or truncation. Third, the additional computation of the center-point distance and the enclosing-box diagonal increases the cost of each training step, although it does not affect inference speed. Finally, its performance depends heavily on annotation quality: labeling errors may amplify penalties for shape differences, exacerbating training instability on low-quality or noisy samples. These limitations suggest that, while CIoU improves regression alignment, current loss formulations remain insufficient to handle severe imbalance and noisy hard examples in dense pedestrian datasets.

Scale variation and small pedestrians. Multi-scale prediction and feature pyramids (e.g., FPN/PAN) were integrated into YOLOv3/v4 and SSD to better capture objects at different resolutions [10,11,13]. However, pedestrians in urban scenes often occupy only a few pixels at long range, and naïve pyramid fusion may dilute fine-grained cues. In dense datasets such as CrowdHuman and CityPersons [6,18], the prevalence of small or partially visible persons exacerbates recall drops at small scales. Dense pedestrian detection is therefore closely tied to small-object detection: most pedestrians in crowded scenes appear as small, overlapping targets whose features are easily lost during down-sampling and fusion. Addressing small-scale pedestrians is thus not merely a subproblem but a decisive factor in improving overall detection accuracy in dense crowd scenarios. Hence, improved multi-scale aggregation with minimal redundancy remains essential.
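A back-of-the-envelope calculation shows why small pedestrians are fragile. The sketch assumes a 640 × 640 input and detection outputs at strides 8, 16, and 32 (the usual P3–P5 configuration of recent YOLO models, assumed here rather than taken from this paper) and a hypothetical pedestrian of 12 × 32 px; `cells_covered` is an illustrative helper.

```python
def cells_covered(box_h_px: float, box_w_px: float, stride: int) -> float:
    """Approximate number of feature-map cells a box spans at a given down-sampling stride."""
    return (box_h_px / stride) * (box_w_px / stride)

INPUT_SIZE = 640  # assumed square input resolution
for stride in (8, 16, 32):
    grid = INPUT_SIZE // stride
    print(f"stride {stride:2d}: {grid}x{grid} grid, "
          f"a 32 px tall, 12 px wide pedestrian spans {cells_covered(32, 12, stride):.3f} cells")
```

The pedestrian spans 6 cells on the stride-8 map but less than half a cell (0.375) on the stride-32 map, so its features survive mainly in the highest-resolution level.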

Occlusion. Occlusion is a common and long-standing challenge in object detection, especially in crowded urban environments where pedestrians frequently overlap. A number of studies have specifically examined detectors under occlusion. For example, Tanzim Mostafa et al. [19] used a transfer-learning approach to evaluate YOLOv5, YOLOX, and Faster R-CNN on occluded road-scene datasets and showed that YOLOv5 and Faster R-CNN had clear shortcomings in handling the imbalance between positive and negative samples under occlusion. Building on this work, Hong Wang et al. [20] proposed a normalized attention module (NAM) with weight gain to optimize the PAFPN structure in YOLOX, diversifying feature receptive fields and improving detection precision in occlusion scenarios. These cases indicate that combining moderately complex backbones with attention mechanisms can help models learn to distinguish the features of occluded objects and reduce confusion between overlapping targets.

Attention and feature enhancement. Recent YOLO variants and related detectors have explored attention or enhanced aggregation to boost representational quality (e.g., spatial/channel attention and cross-stage fusion) [13,21]. However, many modules modulate the channel or spatial dimension in isolation, overlooking cross-dimensional interactions that are crucial for distinguishing tightly packed, visually similar pedestrians. Moreover, heavy attention blocks may violate real-time constraints. There is thus a need for lightweight attention that captures channel–spatial interplay without excessive overhead.

In summary, despite extensive iterations and optimizations of existing detectors, several recurring challenges remain in dense pedestrian detection: the balance between accuracy and computational cost, interference from low-quality positive samples, small-object detection, and occlusion. The remaining research gaps can be summarized as follows: (1) Complex feature-extraction structures often improve accuracy but conflict with the dual requirements of real-time efficiency and high precision in dense pedestrian detection; (2) Focal Loss, while addressing class imbalance to some extent, lacks flexibility and remains insufficient under severe positive–negative imbalance and noisy samples; (3) Lightweight attention mechanisms capable of comprehensively capturing spatial–channel interactions are lacking, which limits the detector’s ability to distinguish features under heavy occlusion and high appearance similarity. These gaps reflect the challenges outlined in the Introduction and motivate the improved YOLO11 framework proposed in this work.

To address these gaps, our method builds on YOLO11 with three targeted enhancements: a lightweight C3k2-Lighter module for efficiency, a Triplet Attention module that captures channel–spatial cross-dimensional interactions for occlusion robustness, and VariFocal Loss (VFL) to emphasize hard positives under severe imbalance. As demonstrated in our experiments, these components work together to improve accuracy and stability in crowded scenes while preserving real-time performance.

3. Methodology

Dense pedestrian detection in crowded scenarios faces several challenges. High computational demands make real-time inference difficult, while heavy occlusion, scale variation, and small targets complicate effective feature extraction. In addition, severe class imbalance and the presence of low-quality positive samples further hinder accurate detection.

The objective of this work is therefore to design a lightweight yet accurate detection framework tailored for dense pedestrian detection. Specifically, the aims are as follows: (i) to alleviate computational bottlenecks for real-time deployment on edge devices, ensuring that the detector can run efficiently in resource-constrained environments such as surveillance cameras or embedded traffic-monitoring systems; (ii) to enhance feature representation under conditions of occlusion and scale variation, enabling the model to reliably detect partially visible or small-scale pedestrians, which is critical for safety-critical applications like autonomous driving and crowd management; (iii) to improve robustness to class imbalance and hard examples through advanced loss design so that the model focuses on high-quality positive samples while reducing overfitting to noisy or redundant data, thereby ensuring stable performance in large-scale, real-world deployments.

To achieve these goals, we propose a threefold methodological framework. First, the Improved C3k2 Module introduces FasterNet-inspired partial convolution to improve efficiency and receptive-field utilization. Second, a Triplet Attention Mechanism captures cross-dimensional interactions, enhancing robustness to occlusion and to small-scale pedestrians. Finally, an Enhanced Loss Function based on VariFocal Loss (VFL) mitigates class imbalance by adaptively weighting high-quality positive samples while suppressing redundant negatives.

3.1. Overall Methodological Framework

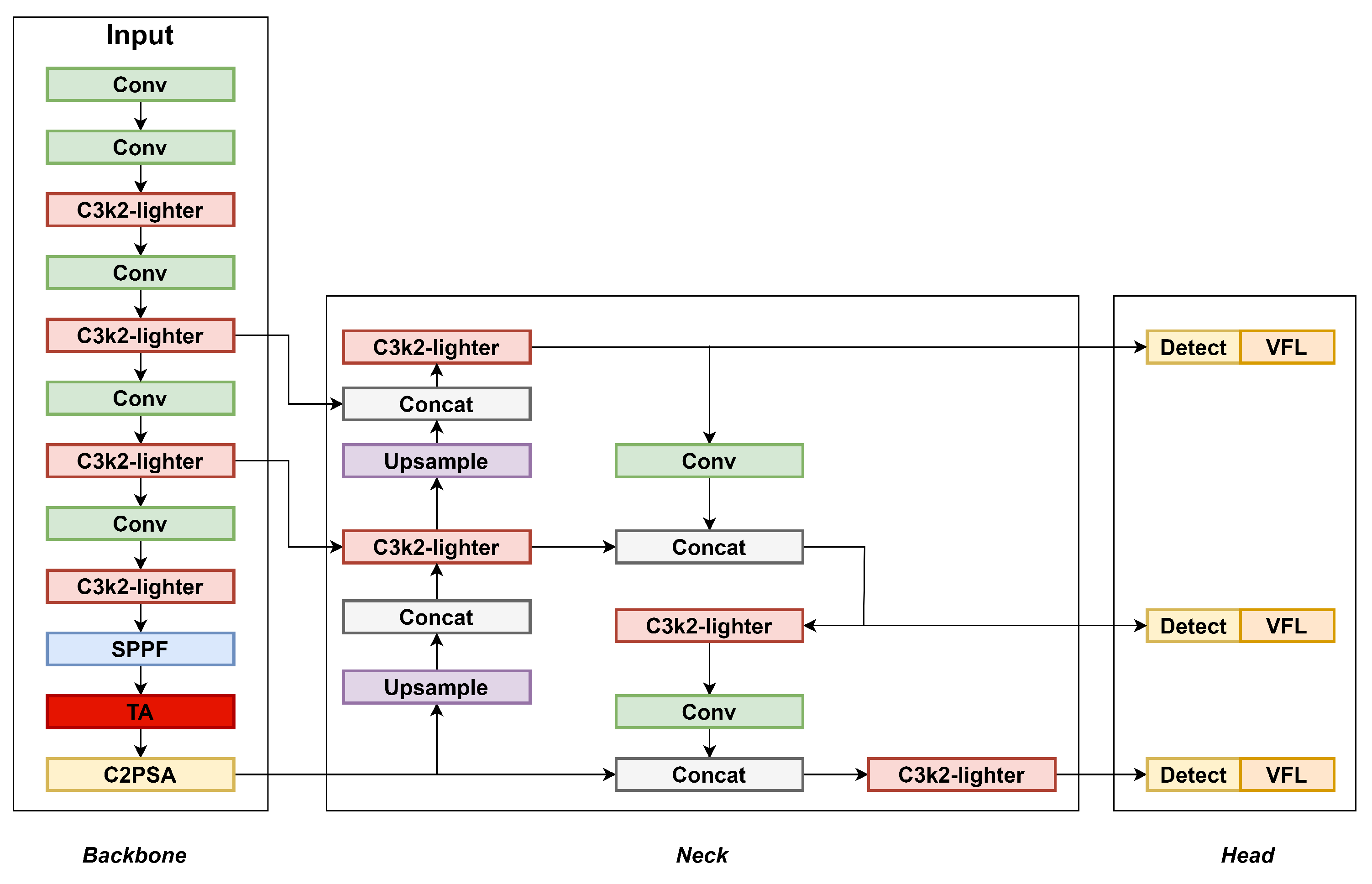

The overall architecture of the proposed method is shown in Figure 1, which illustrates how these components integrate into the detection pipeline. The lightweight C3k2-Lighter modules are embedded in both the backbone and the neck, substantially reducing the number of parameters and the computational complexity while maintaining feature-extraction capacity. The Triplet Attention (TA) module is integrated into the deeper backbone layers, enabling the network to capture discriminative cues under severe occlusion and scale variation. At the detection head, VariFocal Loss (VFL) replaces the original classification loss, providing adaptive weighting that counteracts class imbalance and emphasizes high-quality positive samples. Taken together, these components form a cohesive framework in which structural efficiency, feature robustness, and loss-level optimization complement one another, strengthening YOLO11 from low-level feature extraction to high-level decision making.

To comprehensively enhance detection performance, three targeted modules are integrated into the framework, each addressing a specific challenge:

Triplet Attention (TA) is introduced to strengthen cross-dimensional feature interactions, effectively mitigating issues caused by occlusion and small-object detection.

VariFocal Loss (VFL) is adopted to counteract the impact of low-quality annotations and class imbalance by adaptively weighting samples, thereby improving training stability and robustness.

C3k2-lighter, inspired by FasterNet, streamlines the network by removing redundant structures, enabling lightweight deployment without compromising accuracy.

This targeted mapping between challenges and solutions ensures that each improvement directly responds to a critical bottleneck, while their integration across different network stages yields a complementary and cohesive optimization framework.

As illustrated in Figure 2, these three components form a complementary triangular relationship, in which C3k2-Lighter ensures deployability and efficiency, Triplet Attention (TA) supports accurate feature representation in complex scenarios, and VariFocal Loss (VFL) stabilizes and optimizes the training objective. Their roles are described more specifically below:

C3k2-Lighter ↔ TA: The lightweight design may reduce feature-representation capacity, and TA compensates for this by enhancing cross-dimensional interactions. Thus, the parameter count of the backbone and the neck is reduced while robustness is preserved.

C3k2-Lighter ↔ VFL: Lightweight modules lower model capacity, which may hinder the learning of hard samples. VFL alleviates this issue by adaptively weighting high-quality positives, ensuring that efficiency gains do not come at the expense of accuracy.

TA ↔ VFL: Attention strengthens feature representation, but in cases of noisy or imbalanced labels, the model may overfit spurious patterns. VFL mitigates this risk by focusing training on reliable samples, forming a synergy that improves generalization under conditions of occlusion and density.

Overall, these three improvements are targeted solutions to the key challenges of dense pedestrian detection. As discussed above, they complement one another, striking a balance among efficiency, feature representation, and training stability.

3.2. Improvement of the C3k2 Module

The C3k2 module is built on the Bottleneck architecture. It achieves lightweight feature extraction by stacking a 1 × 1 depthwise-separable convolution, a 3 × 3 convolution for local feature capture, and a 1 × 1 feature-fusion convolution, and it introduces cross-layer skip connections that mix shallow details with deep semantic features to improve multi-scale adaptability. However, this module faces several challenges in dense pedestrian-detection scenarios. Gradient attenuation across consecutive convolutional layers limits the effective transfer of local features. The fixed-branch mixing strategy struggles to adapt dynamically to abrupt changes in target scale. Depthwise-separable convolution reduces the number of parameters but still incurs computational latency. In addition, the static feature-fusion mechanism is sensitive to complex poses such as occlusion and stooping and is prone to missed detections or misjudgments caused by feature confusion, while the high memory occupancy and cache-unfriendly access patterns of the layer-stacking structure further increase the real-time burden on lightweight models. Together, these issues restrict the model’s accuracy and robustness in complex scenarios.

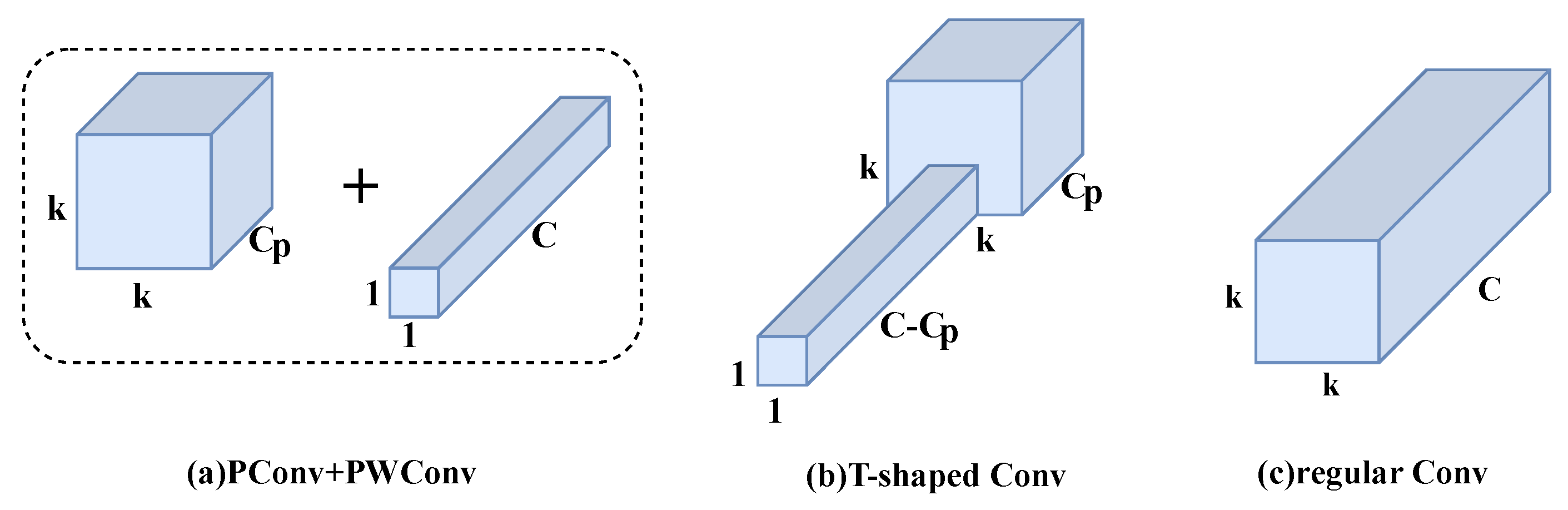

In this paper, we adopt the FasterNet concept and replace the Bottleneck module in C3k2 with the FasterNet Block, which consists of one partial convolution (PConv) layer followed by two pointwise convolution (PWConv, 1 × 1 Conv) layers, wrapped in a residual connection. The structure of the module is shown in Figure 3. PConv reduces both computational redundancy and memory access by applying a regular convolution to only a portion of the input channels for spatial feature extraction while leaving the remaining channels unchanged, which makes it fast and efficient.
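A dependency-free sketch of the partial-convolution idea, assuming the convolved channels are the first c_p channels and that tensors are nested lists indexed as [channel][row][col]. The names `conv2d_same` and `pconv` are hypothetical, and a real implementation would use a framework convolution layer instead of explicit loops.

```python
from typing import List

Tensor3D = List[List[List[float]]]  # indexed as [channel][row][col]

def conv2d_same(x: Tensor3D, weight: List[Tensor3D], k: int) -> Tensor3D:
    """k x k convolution, stride 1, zero padding; weight is indexed [out_ch][in_ch][k][k]."""
    h, w, pad = len(x[0]), len(x[0][0]), k // 2
    out = []
    for w_o in weight:  # one output channel per filter
        plane = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                for c, w_oc in enumerate(w_o):
                    for di in range(k):
                        for dj in range(k):
                            r, q = i + di - pad, j + dj - pad
                            if 0 <= r < h and 0 <= q < w:
                                plane[i][j] += w_oc[di][dj] * x[c][r][q]
        out.append(plane)
    return out

def pconv(x: Tensor3D, weight: List[Tensor3D], k: int = 3) -> Tensor3D:
    """Partial convolution: convolve the first c_p = len(weight) channels, copy the rest unchanged."""
    c_p = len(weight)
    convolved = conv2d_same(x[:c_p], weight, k)
    untouched = [[row[:] for row in plane] for plane in x[c_p:]]
    return convolved + untouched
```

Only `len(weight)` channels pass through the k × k convolution; the remaining channels are copied unchanged and are mixed back in by the pointwise convolutions that follow in the FasterNet Block.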

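For contiguous or regular memory access, the first or last c_p consecutive channels are treated as representatives of the whole feature map. Without loss of generality, the input and output feature maps are assumed to have the same number of channels, c. For a feature map of height h and width w and a kernel of size k, the FLOPs of PConv are therefore

$$\mathrm{FLOPs}_{\mathrm{PConv}} = h \times w \times k^{2} \times c_{p}^{2} \tag{1}$$

For a typical partial ratio $r = c_p / c = 1/4$, PConv’s FLOPs are only $1/16$ of those of a regular convolution. In addition, PConv has a smaller memory access, calculated as

$$\mathrm{MAC}_{\mathrm{PConv}} = h \times w \times 2c_{p} + k^{2} \times c_{p}^{2} \approx h \times w \times 2c_{p} \tag{2}$$

which is only $1/4$ of that of a regular convolution for $r = 1/4$.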
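The FLOPs and memory-access savings of PConv relative to a regular convolution can be checked numerically. The sketch below assumes the regular-convolution counts h × w × k² × c² (FLOPs) and h × w × 2c + k² × c² (memory access), a hypothetical 80 × 80 feature map with c = 64 channels, k = 3, and r = 1/4; all names are illustrative.

```python
def conv_flops(h: int, w: int, k: int, c: int) -> int:
    """FLOPs of a regular k x k convolution with c input and c output channels."""
    return h * w * k ** 2 * c ** 2

def pconv_flops(h: int, w: int, k: int, c_p: int) -> int:
    """Eq. (1): only c_p channels are convolved."""
    return h * w * k ** 2 * c_p ** 2

def conv_mac(h: int, w: int, k: int, c: int) -> int:
    """Memory access of a regular convolution: input and output maps plus the weights."""
    return h * w * 2 * c + k ** 2 * c ** 2

def pconv_mac(h: int, w: int, k: int, c_p: int) -> int:
    """Eq. (2): memory access of PConv."""
    return h * w * 2 * c_p + k ** 2 * c_p ** 2

# Hypothetical layer: 80 x 80 feature map, 64 channels, 3 x 3 kernel, r = c_p / c = 1/4
h, w, k, c = 80, 80, 3, 64
c_p = c // 4
print("FLOPs ratio:", pconv_flops(h, w, k, c_p) / conv_flops(h, w, k, c))
print("MAC ratio:  ", pconv_mac(h, w, k, c_p) / conv_mac(h, w, k, c))
```

The FLOPs ratio is exactly (c_p/c)² = 1/16, while the memory-access ratio is about 0.24, close to r = 1/4 because the h × w × 2c_p feature-map term dominates.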

To make efficient use of the information in all channels, a pointwise convolution (PWConv) is appended to PConv. Working together, the two form an effective T-shaped receptive field on the input feature map. Compared with a conventional convolution, which extracts features uniformly across its whole window, this structure concentrates feature extraction on the central position, as shown in Figure 4.

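Partial convolution (PConv) followed by a pointwise convolution (PWConv) (Figure 4a) resembles a T-shaped convolution (Figure 4b), which spends more computation on the central position than a regular convolution does (Figure 4c). Although a T-shaped convolution could be implemented directly, it is better to decompose it into PConv and PWConv, because the decomposition exploits the redundancy between filters and further saves FLOPs. For the same input $I \in \mathbb{R}^{c \times h \times w}$ and output $O \in \mathbb{R}^{c \times h \times w}$, the FLOPs of the T-shaped convolution are

$$\mathrm{FLOPs}_{\mathrm{T}} = h \times w \times \left(k^{2} \times c_{p} \times c + c \times (c - c_{p})\right) \tag{3}$$

This is higher than the FLOPs of PConv plus PWConv,

$$\mathrm{FLOPs}_{\mathrm{PConv+PWConv}} = h \times w \times \left(k^{2} \times c_{p}^{2} + c^{2}\right) \tag{4}$$

since $(k^{2} - 1)c > k^{2}c_{p}$, e.g., when $c_p = c/4$ and $k = 3$.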
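The comparison between the T-shaped convolution and the PConv + PWConv decomposition reduces to simple algebra: subtracting h × w × (k² × c_p² + c²) from h × w × (k² × c_p × c + c × (c − c_p)) leaves h × w × c_p × ((k² − 1)c − k² × c_p), which is positive whenever (k² − 1)c > k² × c_p. The sketch checks this identity for a hypothetical layer (h = w = 40, c = 64, k = 3, c_p = c/4); the function names are illustrative.

```python
def t_conv_flops(h: int, w: int, k: int, c: int, c_p: int) -> int:
    """Eq. (3): T-shaped convolution with the same c-channel input and output."""
    return h * w * (k ** 2 * c_p * c + c * (c - c_p))

def pconv_pwconv_flops(h: int, w: int, k: int, c: int, c_p: int) -> int:
    """Eq. (4): PConv on c_p channels followed by a c -> c pointwise (1 x 1) convolution."""
    return h * w * (k ** 2 * c_p ** 2 + c ** 2)

def flops_saving(h: int, w: int, k: int, c: int, c_p: int) -> int:
    """Closed form of Eq. (3) minus Eq. (4): h * w * c_p * ((k^2 - 1) * c - k^2 * c_p)."""
    return h * w * c_p * ((k ** 2 - 1) * c - k ** 2 * c_p)

h, w, k, c = 40, 40, 3, 64  # hypothetical layer size
c_p = c // 4
t, d = t_conv_flops(h, w, k, c, c_p), pconv_pwconv_flops(h, w, k, c, c_p)
print(t, d, t - d == flops_saving(h, w, k, c, c_p))
```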

3.3. Adding Attention Mechanisms

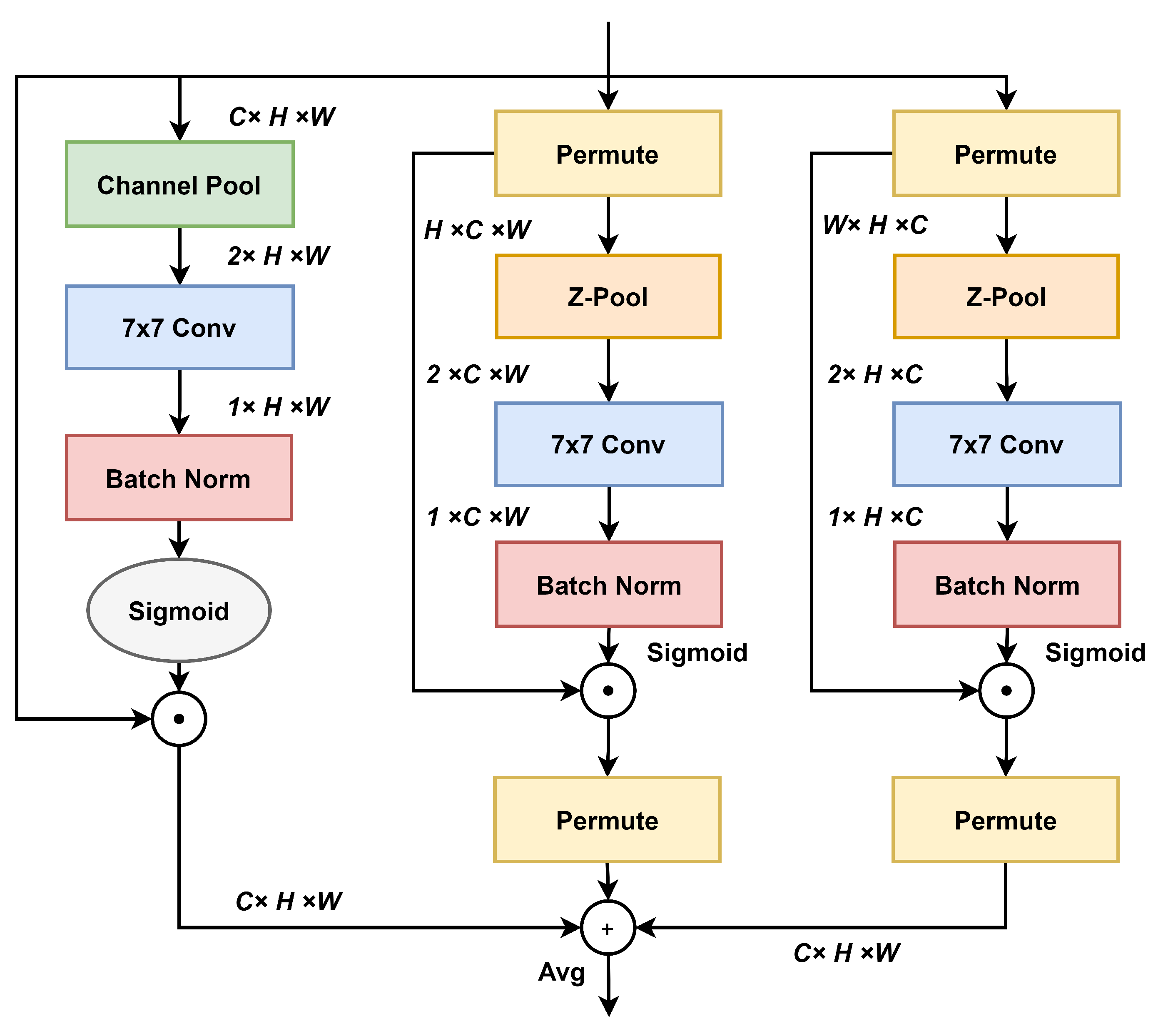

Triplet attention computes attention weights with a three-branch structure that captures cross-dimensional interactions. By combining spatial and cross-channel information, it strengthens the model’s attention to target features. Its core idea is to compute attention on the input feature map over pairs of the horizontal, vertical, and channel dimensions, so that the interaction between spatial and channel information is captured more comprehensively, improving the model’s feature representation. For an input tensor, triplet attention builds inter-dimensional dependencies through rotation followed by residual transformation, encoding inter-channel and spatial information with negligible computational overhead, as shown in Figure 5.

The top branch computes attention weights across the channel dimension C and the spatial dimension W, the middle branch captures the weights between C and H, and the bottom branch captures the spatial correlation between H and W. In the first two branches, a rotation operation establishes the connection between the channel dimension and one of the spatial dimensions, and the weights of all three branches are finally aggregated by simple averaging. Inspired by the way spatial attention is built, this cross-dimensional interaction addresses a shortcoming of conventional attention modules, which compute channel and spatial attention independently. Triplet attention captures the dependencies between the (C, H), (C, W), and (H, W) dimensions of the input tensor through its three branches. In each branch, the Z-pool layer reduces the zeroth dimension of its input to two by concatenating the results of max pooling and average pooling along that dimension. This retains a rich representation of the tensor while reducing its depth, making further computation lightweight. Mathematically, this can be expressed by Formula (5):

$$\text{Z-pool}(\chi) = \left[\mathrm{MaxPool}_{0d}(\chi),\ \mathrm{AvgPool}_{0d}(\chi)\right] \tag{5}$$

where $0d$ denotes the zeroth dimension, along which max and average pooling are applied.

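The refined tensor $y$ obtained by applying triplet attention to an input tensor $\chi \in \mathbb{R}^{C \times H \times W}$ can be represented by Equation (6):

$$y = \frac{1}{3}\left(\overline{\hat{\chi}_{1}\,\sigma\left(\psi_{1}(\hat{\chi}_{1}^{*})\right)} + \overline{\hat{\chi}_{2}\,\sigma\left(\psi_{2}(\hat{\chi}_{2}^{*})\right)} + \chi\,\sigma\left(\psi_{3}(\hat{\chi}_{3})\right)\right) \tag{6}$$

where $\sigma$ represents the sigmoid activation function; $\hat{\chi}_{1}$ and $\hat{\chi}_{2}$ are the input tensor rotated by 90° in the first two branches; $\hat{\chi}_{1}^{*}$, $\hat{\chi}_{2}^{*}$, and $\hat{\chi}_{3}$ are the Z-pooled tensors of the three branches; and $\psi_{1}$, $\psi_{2}$, and $\psi_{3}$ represent the standard two-dimensional convolutional layers, defined by kernel size k, in the three branches of triplet attention. Equation (6) can be simplified to Equation (7):

$$y = \frac{1}{3}\left(\overline{\hat{\chi}_{1}\,\omega_{1}} + \overline{\hat{\chi}_{2}\,\omega_{2}} + \chi\,\omega_{3}\right) \tag{7}$$

where $\omega_{1}$, $\omega_{2}$, and $\omega_{3}$ are the three cross-dimensional attention weights computed in triplet attention. The overlines in Equations (6) and (7) denote a 90° clockwise rotation that restores the original input shape of $\chi$. When a target is partially occluded, triplet attention can associate features from the unoccluded region with the global context, reducing missed detections caused by missing local information, and its long-range feature interaction strengthens the feature expression of small targets, such as distant pedestrians, mitigating the feature-sparsity problem.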
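A minimal, dependency-free sketch of Eqs. (5)–(7) for a single C × H × W tensor stored as nested lists. Assumptions: instead of explicitly rotating the tensor, each branch applies Z-pool directly along the axis being reduced, which yields the same 2-D planes up to orientation, so the back-rotation is implicit in the indexing; the kernels `w_cw`, `w_ch`, and `w_hw` are hypothetical arguments of shape 2 × k × k; and any normalization layer used by practical implementations is omitted.

```python
import math
from typing import List

Grid = List[List[float]]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def zpool(cells: List[List[List[float]]]) -> List[Grid]:
    """Eq. (5): for every cell, stack the max and the mean of the values along the reduced axis."""
    max_map = [[max(v) for v in row] for row in cells]
    avg_map = [[sum(v) / len(v) for v in row] for row in cells]
    return [max_map, avg_map]

def conv_2to1(maps: List[Grid], weight: List[Grid]) -> Grid:
    """k x k convolution (stride 1, zero padding) from the 2 pooled maps to 1 logit map."""
    k = len(weight[0])
    pad = k // 2
    rows, cols = len(maps[0]), len(maps[0][0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            for m in range(2):
                for di in range(k):
                    for dj in range(k):
                        r, q = i + di - pad, j + dj - pad
                        if 0 <= r < rows and 0 <= q < cols:
                            out[i][j] += weight[m][di][dj] * maps[m][r][q]
    return out

def gate(maps: List[Grid], weight: List[Grid]) -> Grid:
    """sigma(psi(Z-pool(.))): attention weights in (0, 1)."""
    return [[sigmoid(z) for z in row] for row in conv_2to1(maps, weight)]

def triplet_attention(x, w_cw, w_ch, w_hw):
    """Eq. (7): y = (x * omega_1 + x * omega_2 + x * omega_3) / 3 for x indexed as x[c][h][w]."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # Branch 1, (C, W) interaction: pool over H -> a1[c][w]
    a1 = gate(zpool([[[x[c][h][w] for h in range(H)] for w in range(W)] for c in range(C)]), w_cw)
    # Branch 2, (C, H) interaction: pool over W -> a2[c][h]
    a2 = gate(zpool([[[x[c][h][w] for w in range(W)] for h in range(H)] for c in range(C)]), w_ch)
    # Branch 3, (H, W) interaction: pool over C -> a3[h][w]
    a3 = gate(zpool([[[x[c][h][w] for c in range(C)] for w in range(W)] for h in range(H)]), w_hw)
    return [[[x[c][h][w] * (a1[c][w] + a2[c][h] + a3[h][w]) / 3.0
              for w in range(W)] for h in range(H)] for c in range(C)]
```

With all-zero kernels every gate equals sigmoid(0) = 0.5, so the output is exactly x/2; learned kernels instead raise or lower each gate, so every element is rescaled by the average of its three cross-dimensional weights.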

3.4. Improvement of the Loss Function

In the context of dense pedestrian detection, the classification task involves distinguishing foreground pedestrian instances from abundant background regions. Here, positive samples are defined as candidate boxes whose Intersection-over-Union (IoU) with ground-truth annotations exceeds a predefined threshold, while negative samples correspond to background boxes with negligible overlap. The employed loss function thus plays a crucial role: by adaptively weighting positives and suppressing easy negatives, it enables the model to focus on hard positives such as small or occluded pedestrians. This not only mitigates the severe class imbalance inherent in dense-crowd datasets but also facilitates more discriminative feature learning.
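The sample definition above can be sketched as follows. The thresholds (0.5 for positives, 0.4 for negatives) and the treatment of candidates in between as ignored are assumptions for illustration; the rule mirrors the description in the text rather than YOLO11’s own label-assignment strategy, and `iou` and `assign` are hypothetical helpers.

```python
from typing import List, Sequence, Tuple

Box = Sequence[float]  # (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection-over-Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def assign(candidates: List[Box], gts: List[Box],
           pos_thr: float = 0.5, neg_thr: float = 0.4) -> Tuple[List[int], List[float]]:
    """Label candidates 1 (positive), 0 (negative), or -1 (ignored) by their best IoU with any ground truth."""
    labels, best_ious = [], []
    for box in candidates:
        best = max((iou(box, g) for g in gts), default=0.0)
        best_ious.append(best)
        if best > pos_thr:        # overlap exceeds the positive threshold
            labels.append(1)
        elif best < neg_thr:      # negligible overlap: background
            labels.append(0)
        else:                     # ambiguous overlap: excluded from the classification loss
            labels.append(-1)
    return labels, best_ious
```

The best IoU recorded for each positive is the kind of continuous quality signal that VFL uses as its target score.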

VariFocal Loss (VFL), an improved version of Focal Loss, treats positive and negative samples asymmetrically. Built on the binary cross-entropy framework, it emphasizes the training contribution of high-quality positive samples: positives are supervised with a continuous IoU-aware classification score (IACS), while negatives are suppressed with a dynamic focusing coefficient. This effectively mitigates the class imbalance in the detection task. Its mathematical expression is shown in Equation (8):

$$\mathrm{VFL}(p, q) = \begin{cases} -q\left(q \log(p) + (1 - q)\log(1 - p)\right), & q > 0 \\ -\alpha p^{\gamma} \log(1 - p), & q = 0 \end{cases} \tag{8}$$

where $p$ is the predicted IACS and $q$ is the target score. For a foreground point, $q$ for its ground-truth class is set to the IoU between the predicted bounding box and its ground-truth box (gt_IoU), and to 0 for all other classes; for a background point, $q$ is 0 for all classes. The hyperparameters $\alpha$ and $\gamma$ control the weighting of negatives: the factor $p^{\gamma}$ down-weights easy negatives, whereas positives are not down-weighted but carry an extra factor of $q$, so that high-quality positives (high gt_IoU) contribute more to training than low-quality ones. This loss has been reported to improve performance across detection frameworks and datasets, with notably better robustness in target-dense scenarios. Through this dynamic weighting, it balances the contributions of positive and negative samples while keeping the focus on informative samples, and its hyperparameters allow it to be adapted to different data characteristics, improving both the localization accuracy and the classification stability of the detector in complex scenes.
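A minimal pure-Python sketch of the VFL formula for a single class score: VFL(p, q) = −q(q log p + (1 − q) log(1 − p)) when q > 0 and −α p^γ log(1 − p) when q = 0. The function name is hypothetical, α = 0.75 and γ = 2 are assumed defaults (values commonly used with VFL), and p is clipped to avoid log(0).

```python
import math

def varifocal_loss(p: float, q: float, alpha: float = 0.75, gamma: float = 2.0) -> float:
    """VFL for one class score: p is the predicted IACS, q the target (gt_IoU for positives, 0 otherwise)."""
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)  # keep both logarithms finite
    if q > 0:
        # Positive: BCE against the soft target q, scaled by q itself (no down-weighting by p)
        return -q * (q * math.log(p) + (1.0 - q) * math.log(1.0 - p))
    # Negative: focal-style down-weighting by alpha * p^gamma
    return -alpha * p ** gamma * math.log(1.0 - p)

print(varifocal_loss(0.1, 0.0))  # easy negative: heavily suppressed
print(varifocal_loss(0.5, 0.9))  # high-quality positive
print(varifocal_loss(0.5, 0.3))  # low-quality positive: smaller contribution
```

Two properties are visible in the sketch: the positive branch is minimized when p equals the target IoU q, and because the whole positive term is multiplied by q, well-localized positives weigh more than poorly localized ones; only the negative branch is scaled down by α p^γ.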

4. Experiments

4.1. Dataset

The experiments use CrowdHuman, a large-scale pedestrian-detection dataset of complex scenes released by Megvii (Kuangshi Technology) in 2018. It contains 15,000 training images, 4370 validation images, and 5000 test images, a ratio of approximately 3:1:1, and each image contains 23 people on average. The dataset covers real scenarios such as streets, transportation hubs, and commercial complexes. It provides three types of bounding-box annotation for each person (head, visible region, and full body), along with information on occlusion level (fully visible/partially occluded/severely occluded) and human body posture, with a coverage rate of up to 78% for densely occluded scenarios. It therefore offers a challenging benchmark for pedestrian-detection research in high-occlusion environments and supports the evaluation of model generalization and iterative optimization in complex scenarios.

Figure 6 illustrates the distribution of images across occlusion levels in the CrowdHuman dataset. Following common practice, images with occlusion of at most 30% are categorized as bare, those with occlusion between 30% and 70% as partial, and those with occlusion greater than 70% as heavy. As shown in the figure, the dataset maintains a relatively balanced proportion across these levels, with bare, partial, and heavy occlusion accounting for 29.89%, 32.13%, and 37.98% of the images, respectively. This distribution makes it well suited for the comprehensive analysis and evaluation of pedestrian-detection algorithms under severe occlusion.
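The binning rule can be written as a small helper. The occlusion ratio is assumed here to be estimated from CrowdHuman-style full-body and visible-region boxes as 1 − (visible area / full-body area), with boxes given as (x, y, w, h); this estimator, the helper names, and assigning exactly 70% to the partial bin are assumptions for illustration.

```python
def occlusion_ratio(full_box, visible_box) -> float:
    """Hypothetical estimate: 1 - area(visible box) / area(full-body box); boxes as (x, y, w, h)."""
    full_area = full_box[2] * full_box[3]
    visible_area = visible_box[2] * visible_box[3]
    return min(1.0, max(0.0, 1.0 - visible_area / full_area))

def occlusion_level(occ: float) -> str:
    """Bin an occlusion ratio with the <=30% / 30-70% / >70% thresholds."""
    if not 0.0 <= occ <= 1.0:
        raise ValueError("occlusion ratio must lie in [0, 1]")
    if occ <= 0.30:
        return "bare"
    if occ <= 0.70:
        return "partial"
    return "heavy"

# A person whose visible box covers the upper half of the full-body box
print(occlusion_level(occlusion_ratio((0, 0, 10, 20), (0, 0, 10, 10))))
```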

4.2. Experimental Setup

The hardware configuration used for all experiments is summarized in Table 1. On the software side, we used Python 3.8 as the programming language, PyTorch 1.10 as the deep learning framework, and CUDA 12.1 for GPU acceleration.

Training was conducted with the hyperparameter settings listed in Table 2. The input image size was uniformly set to 640 × 640 × 3, i.e., each image was resized to 640 pixels in both width and height, with three color channels. Training was scheduled for 300 epochs, with Stochastic Gradient Descent (SGD) as the optimizer for updating the model weights. Eight worker threads were configured to accelerate data loading. The initial learning rate, which determines the step size of weight updates, was set to 0.01. In addition, early stopping with a patience of 50 was used: if validation performance did not improve for 50 epochs, training was terminated early to prevent overfitting. These settings were chosen to balance training efficiency and detection performance.
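For reproducibility, the stated settings can be collected in one place. The sketch below writes them with Ultralytics-style argument names (`imgsz`, `epochs`, `optimizer`, `workers`, `lr0`, `patience`), which is an assumption about how training was launched, and adds a hypothetical `early_stop_epoch` helper that illustrates the patience rule: training stops once `patience` epochs pass without a new best validation score.

```python
# Settings from the text, expressed with Ultralytics-style argument names (an assumption)
train_args = {
    "imgsz": 640,        # images resized to 640 x 640 (3 channels)
    "epochs": 300,
    "optimizer": "SGD",
    "workers": 8,        # data-loading worker threads
    "lr0": 0.01,         # initial learning rate
    "patience": 50,      # early-stopping patience in epochs
}

def early_stop_epoch(val_scores, patience: int) -> int:
    """Return the 0-based epoch at which training stops, or len(val_scores) if it never stops early."""
    best, best_epoch = float("-inf"), -1
    for epoch, score in enumerate(val_scores):
        if score > best:
            best, best_epoch = score, epoch
        elif epoch - best_epoch >= patience:
            return epoch
    return len(val_scores)
```

If the Ultralytics package was used, such a dictionary could be passed as `model.train(data="crowdhuman.yaml", **train_args)`, where `model` and the dataset YAML are placeholders; the exact stopping epoch in a given framework may differ by one depending on how it counts epochs without improvement.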

Fixed random seeds and single-GPU training were used to minimize randomness-induced variation. In addition, to ensure statistical reliability, all reported experimental results are averages over three independent runs; the corresponding standard deviations are given alongside the mean values in the tables, formatted as “mean ± standard deviation”.
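A small helper for the “mean ± standard deviation” format. Using the sample standard deviation (n − 1 denominator) is an assumption, since the text does not say which estimator was used; `mean_pm_std` is a hypothetical name, and the example values are arbitrary, not results from this paper.

```python
import statistics

def mean_pm_std(runs, digits: int = 1) -> str:
    """Format repeated-run results as 'mean ± std' using the sample standard deviation (n - 1)."""
    m = statistics.mean(runs)
    s = statistics.stdev(runs)  # requires at least two runs
    return f"{m:.{digits}f} ± {s:.{digits}f}"

print(mean_pm_std([1.0, 2.0, 3.0]))  # arbitrary example values
```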

4.3. Comparison of C3k2 Module Improvement

In this section, we evaluate the effect of replacing the Bottleneck module in C3k2 with the FasterNet Block, whose Partial Convolution (PConv) significantly reduces computational redundancy and memory-access costs. As shown in Table 3, the baseline YOLO11 model achieves 78.5% AP with 2.6 M parameters and 28.6 G FLOPs, while the lightweight variant YOLO11-C3k2-lighter achieves 77.2% AP with only 1.9 M parameters and 21.5 G FLOPs. Although AP drops by 1.3 percentage points, the number of parameters is reduced by 26.92% and FLOPs are reduced by 24.83%.
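The relative reductions can be reproduced from the Table 3 values quoted above; `relative_reduction` is an illustrative helper, and the AP change is an absolute difference in percentage points.

```python
def relative_reduction(before: float, after: float) -> float:
    """Percentage reduction from `before` to `after`."""
    return (before - after) / before * 100.0

# YOLO11 vs. YOLO11-C3k2-lighter, values as quoted from Table 3
params = relative_reduction(2.6, 1.9)    # parameters (M)
flops = relative_reduction(28.6, 21.5)   # FLOPs (G)
ap_drop = 78.5 - 77.2                    # absolute AP change (percentage points)
print(f"params -{params:.2f}%, FLOPs -{flops:.2f}%, AP -{ap_drop:.1f} pp")
```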

Such reductions are particularly meaningful in research on lightweight detection, where even single-digit percentage reductions in parameters are considered significant. For example, prior lightweight detection models such as YOLOv5n achieve lower accuracy with 9.1 M parameters, highlighting the substantial efficiency gains achieved by our proposed structure. This indicates that C3k2-lighter provides a strong foundation for practical deployment in embedded or edge-computing environments, and the slight accuracy drop can be compensated for by subsequent improvements, such as the addition of attention mechanisms.

4.4. Comparison of Attention Mechanisms

To verify the impact of attention mechanisms, we conduct experiments on three variants, YOLO11-ECA, YOLO11-CA, and YOLO11-Triplet, while keeping the training settings identical to the baseline. The results are shown in Table 4. Compared with the baseline YOLO11 (78.5% AP), YOLO11-CA improves AP to 80.5%, while YOLO11-Triplet achieves 81.6%. Notably, YOLO11-Triplet also increases precision to 94.5%.

Although adding attention modules increases the parameter count (e.g., to 3.0 M for YOLO11-CA), the performance gains are worthwhile in applications where detection reliability is critical. Triplet attention captures cross-dimensional dependencies by computing attention over the channel-height, channel-width, and height-width pairs in three parallel branches, which is valuable in crowded pedestrian scenes where occlusion and high inter-person similarity often cause feature confusion. The results therefore suggest that multi-branch attention is not merely a marginal numerical improvement but an important step towards robustness under dense and occluded conditions. In practice, this should translate into more reliable pedestrian detection in densely populated urban areas, improving the practicality of intelligent surveillance systems during holidays, large gatherings, and other crowd-intensive events.
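To make "cross-dimensional dependencies" concrete, the NumPy sketch below follows the triplet attention design described by Misra et al.: each of three branches rotates the C × H × W tensor, applies Z-pool (stacked max and mean over the leading axis), a k × k convolution, and a sigmoid gate, then rotates back, and the three branch outputs are averaged. It is a simplified single-image forward pass under stated assumptions: batch normalization is omitted, convolution weights are passed in rather than learned, and all function names are hypothetical. It is not the implementation used in YOLO11-Triplet.

```python
import numpy as np


def z_pool(x):
    """Stack max and mean over the leading axis: (D, A, B) -> (2, A, B)."""
    return np.stack([x.max(axis=0), x.mean(axis=0)])


def conv2d_same(z, weight):
    """2-in, 1-out k x k convolution (cross-correlation), stride 1,
    zero 'same' padding: (2, A, B) -> (A, B)."""
    k = weight.shape[-1]
    pad = k // 2
    zp = np.pad(z, ((0, 0), (pad, pad), (pad, pad)))
    a, b = z.shape[1:]
    out = np.zeros((a, b))
    for i in range(k):
        for j in range(k):
            # weight[:, i, j] has shape (2,); contract it with the channel axis
            out += np.tensordot(weight[:, i, j], zp[:, i:i + a, j:j + b], axes=1)
    return out


def attention_gate(x, weight):
    """Z-pool -> conv -> sigmoid, then rescale x by the (A, B) attention map."""
    logits = conv2d_same(z_pool(x), weight)
    return x * (1.0 / (1.0 + np.exp(-logits)))[None]


def triplet_attention(x, w_cw, w_ch, w_hw):
    """Simplified triplet attention for one feature map x of shape (C, H, W)."""
    # Branch 1: channel-width interaction (pool over H), via (H, C, W)
    b1 = attention_gate(x.transpose(1, 0, 2), w_cw).transpose(1, 0, 2)
    # Branch 2: channel-height interaction (pool over W), via (W, H, C)
    b2 = attention_gate(x.transpose(2, 1, 0), w_ch).transpose(2, 1, 0)
    # Branch 3: ordinary spatial attention (pool over C)
    b3 = attention_gate(x, w_hw)
    return (b1 + b2 + b3) / 3.0


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 6, 5))  # (C, H, W)
weights = [0.1 * rng.standard_normal((2, 7, 7)) for _ in range(3)]
y = triplet_attention(x, *weights)
print(y.shape)  # (8, 6, 5)
```

Each branch in this sketch learns only one 2-channel k × k kernel (2k² = 98 weights for k = 7).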

4.5. Comparison of Loss Functions

We further investigate the impact of Varifocal Loss (VFL) on model performance. As shown in Table 5, YOLO11-VFL improves the baseline AP from 78.5% to 80.2%, AP50 from 91.5% to 92.7%, and AP75 from 86.2% to 88.4%. The larger gain at the stricter IoU threshold (2.2 points for AP75 versus 1.2 points for AP50) highlights the strength of VFL in handling class imbalance and hard examples.

In dense pedestrian detection, low-quality positive samples (e.g., heavily occluded persons with only some body parts visible) often dominate, and standard Focal Loss, which down-weights easy positives and negatives alike, is not flexible enough to handle them. VFL treats the two asymmetrically: it weights each positive by its IoU-aware target score, so that high-quality positives contribute more to training, and applies focal down-weighting only to negatives. The model therefore focuses more on reliable samples, which reduces the risk of overfitting to incomplete features and improves detection robustness. The experimental evidence thus supports adopting VFL in dense and occluded pedestrian scenarios, with the numerical gains reflecting meaningful real-world improvements.
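The weighting described above can be written per prediction, following the Varifocal Loss definition from VarifocalNet: for a predicted IoU-aware score p and target q (the IoU with the matched ground truth for a positive, 0 for a negative), positives use binary cross-entropy against q scaled by q, while negatives use focal down-weighting α·p^γ. Unlike Focal Loss, the down-weighting is applied to negatives only. The sketch assumes that paper's default α = 0.75 and γ = 2, which may differ from the settings used in this study, and handles one scalar prediction for clarity.

```python
import math


def varifocal_loss(p, q, alpha=0.75, gamma=2.0, eps=1e-12):
    """Varifocal Loss for one predicted score p in (0, 1) with target q.

    q > 0: positive sample; q is the IoU-aware target (IoU with matched GT).
    q = 0: negative sample.
    """
    p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
    if q > 0:
        # Positives: BCE against the soft target q, scaled by q itself, so
        # high-IoU (high-quality) positives contribute more to the loss.
        return -q * (q * math.log(p) + (1.0 - q) * math.log(1.0 - p))
    # Negatives only: down-weight easy negatives (small p) by alpha * p**gamma.
    return -alpha * p ** gamma * math.log(1.0 - p)


# A well-localized positive (IoU 0.9) outweighs a poorly localized one (IoU 0.3)
print(varifocal_loss(0.5, 0.9), varifocal_loss(0.5, 0.3))
# An easy negative is heavily down-weighted relative to plain BCE
print(varifocal_loss(0.1, 0.0), -math.log(0.9))
```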

4.6. Ablation Experiments

The ablation results in Table 6 show that different combinations of modules have significant effects on the performance of the YOLO11 model. In particular, when YOLO11 is combined with the VFL, C3k2-lighter, and Triplet modules, the model reaches 82.2% AP, 75.6% recall, and 94.7% precision.

These results indicate that the three modules are not only individually effective but also highly complementary. Specifically, VFL improves robustness under class imbalance and, on its own, raises AP, AP50, and AP75; C3k2-lighter reduces parameters and computation without a major loss of accuracy; and Triplet attention enhances feature discrimination under occlusion. Integrating them allows the model to improve accuracy, efficiency, and robustness simultaneously, avoiding the trade-offs commonly observed when only one aspect is optimized.

From a broader research perspective, performance gains in pedestrian detection are typically incremental. For example, on the same CrowdHuman dataset, the recently proposed CNN–ViT hybrid NAS-PED [22] achieved a 1.9% AP improvement but required 12.9 M parameters, illustrating how difficult it is to improve accuracy without sharply increasing complexity. In contrast, our combined approach improves AP by 3.7 percentage points over the baseline YOLO11 (from 78.5% to 82.2%) while keeping the parameter count at only 2.1 M. In object detection, an AP gain of around 2% is generally regarded as a notable achievement, particularly in dense and occluded settings where further improvements are hard to obtain.
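The percentages quoted in this section and in the C3k2 comparison can be re-derived from the reported means. The helper below (a hypothetical name) is only an arithmetic check on the numbers given in the text; it also separates absolute AP differences (percentage points) from relative changes, which are easy to conflate.

```python
def relative_change(before, after):
    """Relative change in percent; negative values are reductions."""
    return (after - before) / before * 100.0


# Mean values as reported in the text (Tables 3 and 6)
baseline = {"AP": 78.5, "params_M": 2.6, "GFLOPs": 28.6}
lighter = {"AP": 77.2, "params_M": 1.9, "GFLOPs": 21.5}  # YOLO11-C3k2-lighter
combined = {"AP": 82.2, "params_M": 2.1}  # VFL + C3k2-lighter + Triplet

for name, variant in [("C3k2-lighter", lighter), ("combined", combined)]:
    ap_points = variant["AP"] - baseline["AP"]  # absolute change in points
    params = relative_change(baseline["params_M"], variant["params_M"])
    print(f"{name}: AP {ap_points:+.1f} pts, params {params:+.2f}%")

flops = relative_change(baseline["GFLOPs"], lighter["GFLOPs"])
print(f"C3k2-lighter FLOPs {flops:+.2f}%")
```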

Therefore, the modest overhead added by the attention module is justified, and the combined model still has fewer parameters than the baseline (2.1 M vs. 2.6 M). Unlike many competing approaches, where accuracy gains come at the cost of significant increases in model size, our design demonstrates that meaningful improvements in dense pedestrian detection can be achieved while maintaining lightweight characteristics. This makes the proposed modifications both scientifically valuable and practically deployable in resource-constrained real-world environments.

4.7. Comparison Experiment

Compared with the baseline YOLO11, the proposed algorithm improves on all accuracy metrics. To better evaluate its strengths and weaknesses, we select mainstream object-detection algorithms, including the two-stage detector Faster R-CNN, the single-stage detectors YOLOv5n and YOLOv8n, and the end-to-end detector RT-DETR, and run experiments on the CrowdHuman dataset with the same environment configuration. The results are shown in Table 7.

YOLO11-Improved achieves an AP of 83.2%, the highest among all listed algorithms, and also requires the fewest floating-point operations (21.5 G FLOPs), indicating that it not only performs well in the detection task but also has a significant advantage in computational efficiency. In addition, YOLO11-Improved is the lightest of all algorithms, with only 2.1 M parameters, which makes it well suited to deployment in resource-constrained environments. This balance is critical for real-world applications, especially in scenarios that require fast and accurate object detection. Note, however, that the miss rate (MR) of YOLO11-Improved is not the lowest among all models. A likely explanation is that the improved architecture makes more conservative predictions: it achieves higher precision by avoiding false positives, but this cautious behavior also leads to slightly more missed detections in crowded scenes.
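The precision/miss-rate trade-off described above can be illustrated with a toy example: raising the confidence threshold removes false positives (raising precision) but also discards some true positives (raising the miss rate). The detections below are made-up values, not CrowdHuman results, the function name is hypothetical, and the miss rate here is simply the fraction of unmatched ground-truth boxes at one threshold, not the log-average miss rate (MR^-2) commonly reported on CrowdHuman.

```python
def precision_and_miss_rate(scores, is_true_positive, num_ground_truth, threshold):
    """Precision and miss rate of the detections kept at a confidence threshold.

    is_true_positive[i] says whether detection i was matched to a ground-truth
    box (e.g. IoU >= 0.5); matching is assumed to have been done beforehand.
    """
    kept = [tp for s, tp in zip(scores, is_true_positive) if s >= threshold]
    true_pos = sum(kept)
    # Convention for this sketch: precision is 0.0 when nothing is kept
    precision = true_pos / len(kept) if kept else 0.0
    miss_rate = 1.0 - true_pos / num_ground_truth
    return precision, miss_rate


# Toy detections (hypothetical): 7 predictions, 6 pedestrians in the image
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.50, 0.40]
is_tp = [True, True, True, False, True, False, True]

for t in (0.3, 0.75):
    p, mr = precision_and_miss_rate(scores, is_tp, num_ground_truth=6, threshold=t)
    print(f"threshold={t}: precision={p:.2f}, miss rate={mr:.2f}")
```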

4.8. Experimental Results and Limitations Analysis

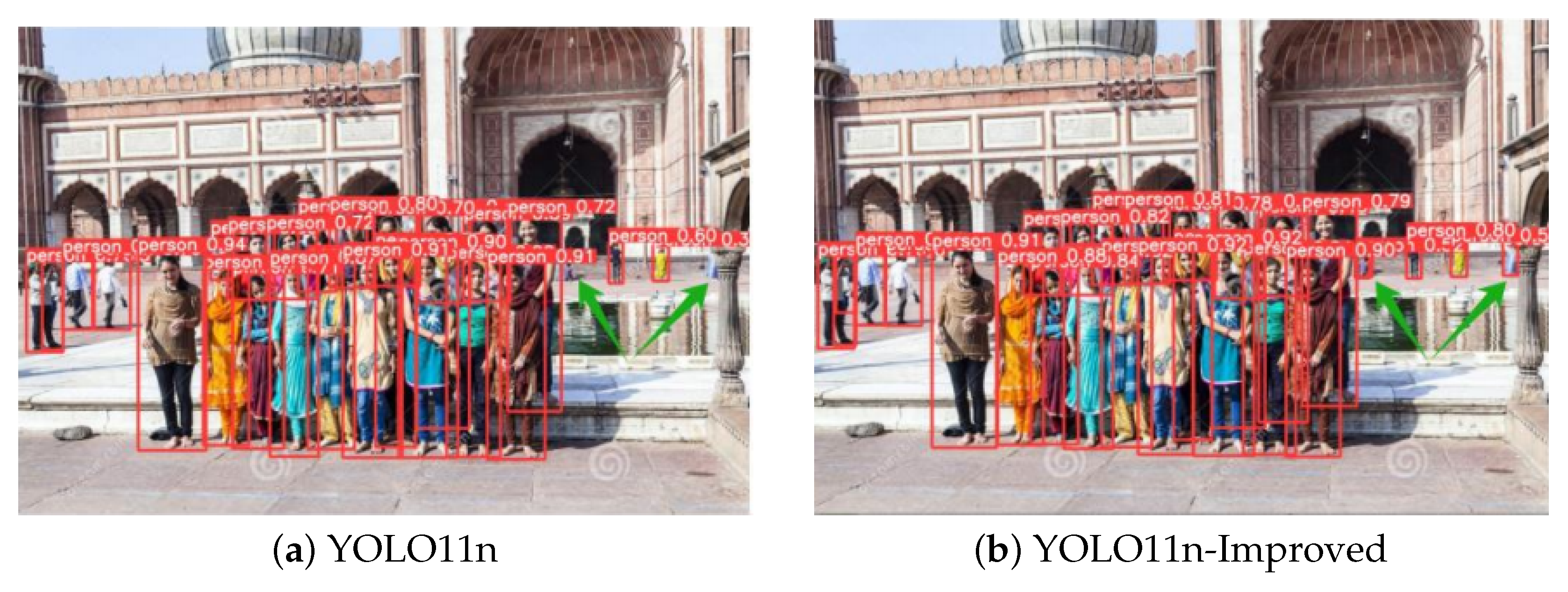

To provide more intuitive evidence of the improvements, we present two groups of qualitative detection results on the CrowdHuman test set. Each group includes (a) the detection results of the baseline YOLO11 and (b) those of the proposed YOLO11-Improved model. These visualizations show how the improvements translate into practical detection performance in crowded pedestrian scenarios.

In the first group of images (Figure 7), pedestrians are gathered in front of a building in a classical architectural style, and their diverse, colorful clothing suggests a daytime outdoor event. In Figure 7a, the bounding box indicated by the green arrow is relatively small and slightly misaligned with the actual target, suggesting that the baseline YOLO11 suffers from localization inaccuracies in dense crowd scenes. In contrast, in Figure 7b, the same bounding box is larger and more precisely aligned with the target pedestrian, demonstrating improved localization accuracy after incorporating the proposed modules. In this scene, the improved algorithm recognizes and localizes individuals more accurately and maintains high precision despite the dense crowd. It also handles background interference well, distinguishing pedestrians from the background more precisely and avoiding false detections caused by the complex scene. These observations are consistent with the quantitative results, which show that the improvements enhance precision and recall in high-density pedestrian detection, making the model more suitable for applications requiring high accuracy, such as urban intelligent transportation systems, public security, and crowd management.

However, limitations remain. In the second group of images (Figure 8), there are fewer pedestrians and they are close to the camera, representing a relatively simple case with little occlusion. Under such conditions, YOLO11 and YOLO11-Improved produce similar results: both detect every pedestrian without misses, and their confidence scores differ only slightly. In the improved results, the confidence scores of the pedestrians in the middle of the image increase, whereas those of the pedestrians on both sides of the road decrease. This may be because the attention mechanism emphasizes the densely populated central region, inadvertently reducing the focus on less prominent individuals at the periphery.

In summary, YOLO11-Improved demonstrates clear advantages in dense pedestrian detection, particularly in precision, recall, and robustness to background interference, which supports combining the VFL, C3k2-lighter, and Triplet modules. Its lightweight design and improved accuracy suggest strong potential for real-world deployment. Nonetheless, limitations persist: the model's advantages are less pronounced in simple detection scenarios, and extreme occlusion remains challenging. These findings highlight both the achievements of this work and the remaining open problems, guiding future improvements in dense pedestrian detection.