Abstract

Throughout the last decade, chatbots have gained widespread adoption across various industries, including healthcare, education, business, e-commerce, and entertainment. These types of artificial, usually cloud-based, agents have also been used in airport customer service, although there has been limited research concerning travelers’ perspectives on this rather techno-centric approach to handling inquiries. Consequently, the goal of the presented study was to tackle this research gap and explore potential use cases for chatbots at airports, as well as investigate travelers’ acceptance of said technology. We employed an extended version of the Technology Acceptance Model considering Perceived Usefulness, Perceived Ease of Use, Trust, and Perceived Enjoyment as predictors of Behavioral Intention, with Affinity for Technology as a potential moderator. A total of travelers completed our survey. The results show that Perceived Usefulness, Trust, Perceived Ease of Use, and Perceived Enjoyment positively correlate with the Behavioral Intention to use a chatbot for airport customer service inquiries, with Perceived Usefulness showing the highest impact. Travelers’ Affinity for Technology, on the other hand, does not seem to have any significant effect.

1. Introduction

Recent developments in conversational artificial intelligence, particularly those showcased by OpenAI’s ChatGPT [1] and other large language model-driven applications, have jumpstarted discussions about chatbots and their potential application areas in business [2]. But even before 2022, these chatbots or conversational agents (i.e., computer programs with natural language capabilities that offer real-time conversations with human users; cf. [3]) had already been adopted by various industries, including healthcare, education, e-commerce, and entertainment [4,5]. While voice-controlled digital assistants, such as Apple’s Siri [6], Amazon’s Alexa [7], or Google’s Assistant [8], have seamlessly integrated into our daily routines at home, text-based chatbots have been increasingly populating instant messaging platforms or become embedded into company websites [3,9]. In customer service, chatbots have been commonly used because they reduce staff costs and, at the same time, increase efficiency by giving assistance and providing answers to many customers at the same time [10]. Primarily tasked with addressing frequently asked questions, they reduce human intervention to more complicated customer inquiries [11], thereby optimizing interactions between an organization and its customers and consequently fostering perceived service quality [12]. Also, in airport settings, we have witnessed the implementation of chatbots as artificial first-level customer support agents (e.g., in Singapore, Seoul, Athens, Frankfurt, and Milan), leading to significant cost savings and shorter waiting times for passengers who seek information assistance [13,14].

Despite these technological advancements and enthusiastic predictions, interactions with chatbots still raise several issues. In particular, skepticism toward the technology has been noted among potential users [15]. Respective challenges include unmet user expectations, which might lead to frustration [16]. Also, doubts regarding chatbot authenticity and lack of trust and privacy are issues raised in this context [12,16,17]. And while there have been studies focusing on different use cases for chatbots at airports, travelers’ acceptance of such solutions has barely been investigated [13,18]. Consequently, our goal was to close this knowledge gap and find an answer to the following research question:

“What are potential use cases for chatbot applications in airport customer service and what is their respective technology acceptance by travelers?”

Our report on this undertaking starts with a discussion of relevant background work in Section 2. Next, we report on an initial exploration of potential use cases for chatbot applications in airport customer service in Section 3. In Section 4, we then describe the development of our conceptual model. Next, Section 5 elaborates on the employed method, the used material, and the hypotheses that were created for the investigation of travelers’ acceptance. Subsequently, Section 6 reports on our results, and Section 7 discusses their implications. Finally, Section 8 concludes our report, outlines limitations, and suggests directions for further research.

2. Background and Related Work

The quest for conversational machines began with Alan Turing’s (1950) provocative question, “Can machines think?” [19]. The Turing test, a benchmark for intelligent behavior, evaluated this concept. Today, the Loebner Prize competition carries this torch, recognizing advancements in chatbot technology. Early chatbots, like ELIZA, relied on rule-based systems [20]. Specifically, inspired by Rogerian psychotherapy, ELIZA mimicked a therapist by reflecting the user’s own statements. Successors like PARRY built upon this approach, simulating a patient with schizophrenia and incorporating emotional responses [4,21]. ALICE, another landmark chatbot, introduced the Artificial Intelligence Markup Language (AIML) for pattern-matching and conversation flow [22], while later on, the chatbot Mitsuku particularly focused on social interaction, which led to multiple Loebner Prize wins [23]. Modern advancements have invigorated chatbot development [11]. Voice assistants like Alexa and Siri exemplify this progress [15], while ChatGPT represents the most recent leap in Natural Language Processing (NLP). Its transformer architecture allows for the automatic generation of complex text and thus supports nuanced conversations, underscoring the remarkable progress AI technology has made throughout the last two decades of research and application development [2].

2.1. Categorization of Chatbots

The landscape of chatbots and conversational agents is complex, and its terminology is not universally defined. Consequently, the terms chatbot and conversational agent are often used synonymously. Radziwill and Benton [24], however, aim to give a clear categorization of these types of software dialogue systems. In this, they distinguish between Interactive Voice Response (IVR) systems and conversational agents (CAs). IVR systems implement decision trees and are therefore considered less flexible than CAs. An example of an IVR may be found in a “Press or Say 1 for English” interaction on the phone. CAs, on the other hand, allow for a more open interaction style. They may be subcategorized into chatbots and Embodied Conversational Agents (ECAs) [24]. They are activated by either text- or voice-based natural language input and are usually designed to execute specific tasks. When embodied in software (avatars) or physical form (robots), they are called ECAs. Grudin and Jacques [25], on the other hand, divide chatbots based on their conversation focus: first, virtual companions that converse on any topic for entertainment; second, intelligent assistants that are also capable of chatting about any topic but are explicitly aimed at keeping conversations short; and finally, task-focused chatbots that cover a much narrower range of topics. This classification is similar to the ones by Hussain et al. [26] and Rapp et al. [15], who differentiate chatbots in terms of their functionality and, consequently, distinguish between task-oriented and conversation-oriented chatbots. Typically, chatbots are designed to address one or more specific user goals, and the majority of chatbots are task-oriented [27]. They implement structured problem-solving within specialized domains, aiding users in task completion (e.g., bookings, orders, scheduling, information access). While they can maintain conversational flow, these chatbots lack the general knowledge required to address inquiries outside their predefined scope. Non-task-oriented chatbots, on the other hand, are programmed to keep a conversation going or even establish some kind of relationship with a user [15]. They aim to generate human-like responses [28] and thus often hold some entertainment value [26].

2.2. Chatbot Design Techniques

A key success factor for chatbots is how well they can keep up a conversation and give useful output. In this process, the initial step involves interpreting user input, often leveraging various NLP techniques. Subsequently, the chatbots execute relevant commands and formulate responses, with conversational flow and output quality being critical success factors [10]. To this end, chatbots employ various input-processing strategies, which are summarized below.

2.2.1. Rule-Based Chatbots

Rule-based chatbots, the earliest form of conversational AI, rely on predefined rules and patterns to provide responses [29]. They operate by matching user input against specific keywords or patterns, triggering pre-scripted responses [30]. Often implemented using AIML, rule-based systems lack more sophisticated NLP capabilities [28]. They excel within closed, task-specific domains, but their reliance on manually crafted rules limits their adaptability and their ability to handle complex queries [31]. Despite these limitations, their ease of development ensures that rule-based chatbots will remain a popular choice for clearly defined application scenarios.
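To make the keyword-matching idea concrete, the following minimal Python sketch maps a few of the traveler questions reported in Section 3 to scripted answers. It is an illustration only: the rule list, the placeholder answers, and the function name `reply` are assumptions made for this example and do not describe any system discussed in the cited literature.

```python
import re

# Hypothetical rules: (pattern, scripted answer). The answers are placeholders,
# not real airport information.
RULES = [
    (re.compile(r"\bcheck[- ]?in\b", re.IGNORECASE),
     "Check-in information: <placeholder>"),
    (re.compile(r"\b(luggage|baggage)\b.*\bstorage\b", re.IGNORECASE),
     "Luggage storage: <placeholder>"),
    (re.compile(r"\b(cat|dog|pet)s?\b", re.IGNORECASE),
     "Pets at the airport: <placeholder>"),
    (re.compile(r"\bterrace\b", re.IGNORECASE),
     "Visitor terrace: <placeholder>"),
]

# Returned when no rule matches; a real deployment would hand over to staff.
FALLBACK = "Sorry, I cannot help with that. Please contact our staff."


def reply(user_input: str) -> str:
    """Return the scripted answer of the first rule whose pattern matches."""
    for pattern, answer in RULES:
        if pattern.search(user_input):
            return answer
    return FALLBACK
```

The sketch also shows the limitation noted above: a question that no rule anticipates, such as one about the airport festival, falls through to the fallback answer until someone adds a rule for it by hand.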

2.2.2. AI-Based Chatbots

AI-based chatbots depart from rule-based systems, leveraging machine learning and NLP [32]. These chatbots are trained using existing conversation databases or specifically designed datasets [30]. This empowers them to comprehend user intent and foster context-aware conversations. NLP plays a crucial role by dissecting and interpreting human language to understand user requests [2,33]. It facilitates AI-based chatbots in handling diverse user inputs, including variations in wording and synonyms. Machine learning algorithms eliminate the need for predefined rules, leading to increased flexibility and reduced reliance on domain-specific knowledge [29].

2.2.3. Retrieval-Based Chatbots

Retrieval-based chatbots function by selecting the most relevant response from a curated database of question–answer pairs, akin to a search engine [30]. This database, potentially sourced from social media or forums, houses pre-existing responses [32]. The chatbot is trained to match user inquiries with the most appropriate stored answer within the conversational context [34], with NLP playing a key role in comprehending user input and facilitating this matching process. A notable advantage of retrieval-based models is the grammatical and lexical correctness of responses, as they are not generated on the fly [29,31].
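The selection step can be sketched as follows. This is a deliberately simple stand-in, assuming a bag-of-words representation and cosine similarity rather than the trained matching models described in the literature; the stored question-answer pairs, the placeholder answers, and the `min_score` threshold are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical question-answer store; the answers are placeholders.
QA_PAIRS = [
    ("When can I check in?", "Check-in times: <placeholder>"),
    ("Is there luggage storage?", "Luggage storage: <placeholder>"),
    ("How can I get to the airport?", "Getting here: <placeholder>"),
    ("What are the opening hours of the visitor terrace?",
     "Visitor terrace: <placeholder>"),
]


def tokenize(text):
    """Lower-case the text and count its alphabetic tokens."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, min_score=0.3):
    """Return the stored answer whose question is most similar to the query,
    or None if even the best match scores below min_score."""
    q = tokenize(query)
    best_score, best_answer = max(
        (cosine(q, tokenize(question)), answer)
        for question, answer in QA_PAIRS
    )
    return best_answer if best_score >= min_score else None
```

Because every answer is taken verbatim from the store, its wording is fixed in advance, which is the source of the grammatical and lexical correctness mentioned above.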

2.2.4. Generative Chatbots

Generative chatbots exhibit a distinct approach, crafting responses word-by-word based on user input rather than relying on a fixed set of answers [28]. Extensive training on large conversational datasets is required to teach these models proper sentence structure and syntax, as inadequate training can lead to output lacking in quality or consistency [29]. Underpinning modern generative chatbot techniques are deep neural networks and their diverse architectures. A prominent architecture is the encoder–decoder model utilizing Long Short-Term Memory (LSTM) mechanisms, frequently implemented as Sequence-to-Sequence models for dialogue generation [35]. The probabilistic models underpinning generative chatbots aim to produce the most contextually relevant and linguistically correct response given an input statement [34]. More recent advances in generative chatbots are centered on replacing LSTM models with transformers, enabling parallelized training on massive datasets. Those pre-trained transformer systems like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) have been trained on vast language corpora such as Wikipedia and Common Crawl [29]. The line between retrieval-based and generative chatbots blurs with the advent of hybrid methods, where retrieved and generated responses undergo a re-ranking process to determine the final output [28].

3. An Initial Exploration of Chatbots in Airport Customer Service

To identify potential use cases for chatbot applications at airports, we arranged an interview with the PR representative of a small local airport. The airport can be considered small, since it has only one terminal, which processes both domestic and international flights. The interview was semi-structured and lasted approx. 25 min. Questions revolved around the current customer service process at the airport, including touch points for customers, typical process times, and travelers’ common inquiries. Furthermore, we asked about potential use cases for chatbots and possible factors that may influence travelers’ acceptance of using such a technology in customer service scenarios.

Feedback from this interview shows that travelers contact the airport predominantly by phone or email. There is a respective contact form on the airport’s website, which acts as a typical entry point. Alternatively, there is a receptionist who takes calls and handles inquiries during office hours. Inquiries outside office hours are forwarded to the passenger service. If, in exceptional cases, an inquiry cannot be answered on the same day, a traveler receives a message confirming that his/her question has been noticed and will be processed as soon as possible. Interestingly, it was found that the airport currently does not run any statistics on the number and/or success rate of received customer service inquiries. Yet, the airport knows typical questions that a traveler would send to the airport customer service department, of which the PR representative named the following as the most frequent ones:

- When can I check in?

- Can I check in the evening before?

- Is there luggage storage?

- What are the opening hours of the visitor terrace?

- Is the visitor terrace open to the public?

- How can I get to the airport?

- Can I take my cat?

- Can my dog enter the airport?

- When will the airport festival take place?

Questions about pets and airport events (i.e., in our case, the airport festival) seemed rather surprising to us, as they were not mentioned in any of the literature that we screened before the interview (e.g., [13,14,36,37]). Also, inquiries concerning the visitor terrace seemed to be unique to the investigated airport. To validate these questions, we thus screened the FAQ lists of other airports. We found examples for both pets (e.g., [38,39,40]) and visitor terrace inquiries (e.g., [41,42,43]), confirming these aspects as being relevant in airport customer service. Asked about solution strategies, the PR representative claimed that most, if not all, of the information in question would be provided by the airport website. When asked about the potential implementation of a chatbot, however, she raised some concerns, highlighting that, in her opinion, travelers would rather appreciate the personal interaction with employees, so a techno-centric interaction with a chatbot would probably put them off. The situation could be different at larger airports, though, where travelers may be more understanding toward standardized answers to questions. Another factor mentioned by the PR representative, which may speak against the use of a chatbot, concerns the topic of the inquiry: more general questions could probably be handled by a chatbot, whereas questions that hold some emotional bearing would certainly require the attention of a human. Finally, the PR representative highlighted that, from her point of view, the level of trust travelers put into chatbot technology and the reliability they attach to the provided information need to be considered as well, and that these factors could definitely impact the eventual adoption of such a chatbot.

Based on this rather dismissive opinion on the use of chatbots for customer service inquiries at airports provided by the PR representative, our goal was also to evaluate the travelers’ perspectives. To this end, we first turned to the literature so as to create a guiding conceptual model of chatbot acceptance.

4. Conceptual Model

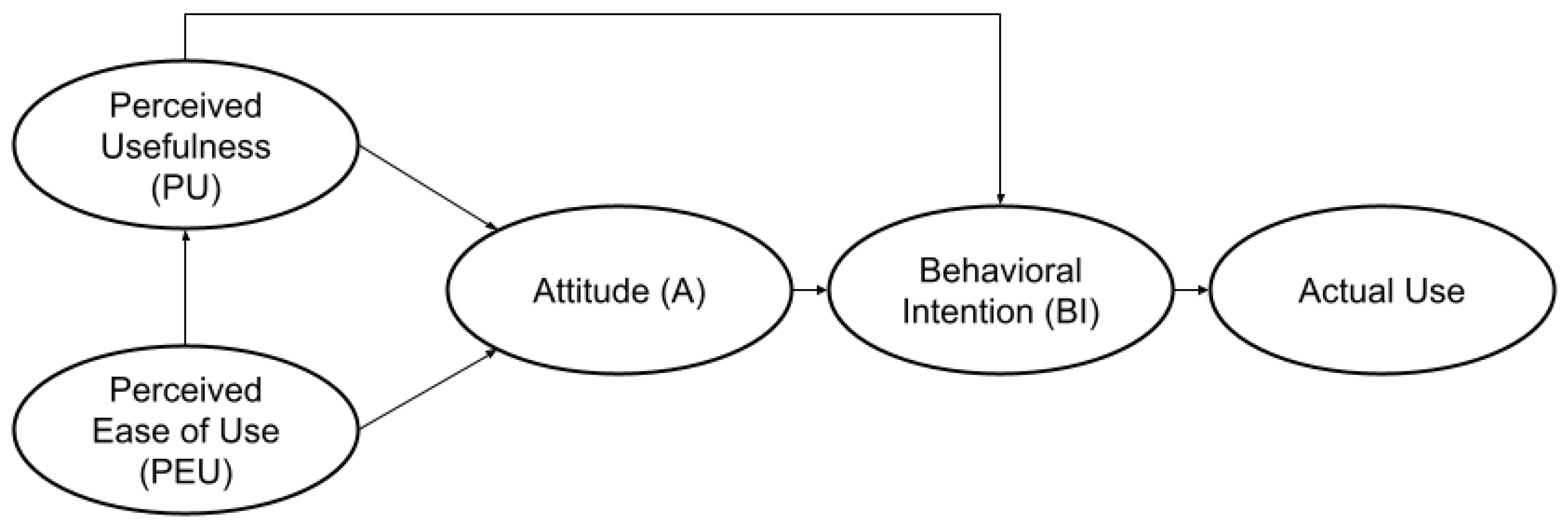

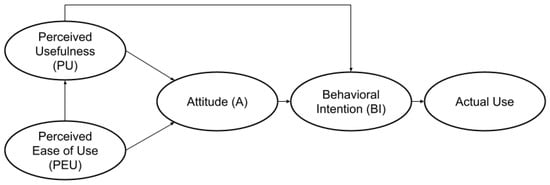

Following the literature, users’ acceptance and consequent adoption of technology are usually assessed either via the so-called Technology Acceptance Model (TAM), developed by Davis [44], or via the more holistic Unified Theory of Acceptance and Use of Technology (UTAUT), proposed by Venkatesh and colleagues [45]. Both research models aim to understand the factors that determine the acceptance or rejection of a given technology by its users [46]. The TAM, which, due to its simpler structure, has been employed more often, focuses on information technology adoption, i.e., an individual’s intention to use the technology, based on its Perceived Usefulness (PU) and its Perceived Ease of Use (PEU). To this end, PU is considered the degree to which a person believes that using the technology would enhance his or her job performance, and PEU is the degree to which using the technology would be free of effort. PEU has been shown to have a direct effect on PU and a positive relationship with a user’s Behavioral Intention (BI), both directly and indirectly via its PU. Research has furthermore shown that there is a positive relationship between PU and a user’s BI via his/her Attitude (A) [44]. Davis’ original TAM is illustrated in Figure 1.

Figure 1.

The Technology Acceptance Model according to Davis [44].

Over the years, the original TAM has been expanded to TAM2, consisting of five additional factors directly impacting PU (i.e., Subjective Norm, Image, Job Relevance, Output Quality, and Result Demonstrability) and two factors moderating these relationships (i.e., Experience and Voluntariness) [47]. Finally, the most recent adaptation of the model, i.e., TAM3, elaborates on the PEU branch, adding Computer Self-efficacy, Perception of External Control, Computer Anxiety, Computer Playfulness, Perceived Enjoyment, and Objective Usability as respective predictors [48].

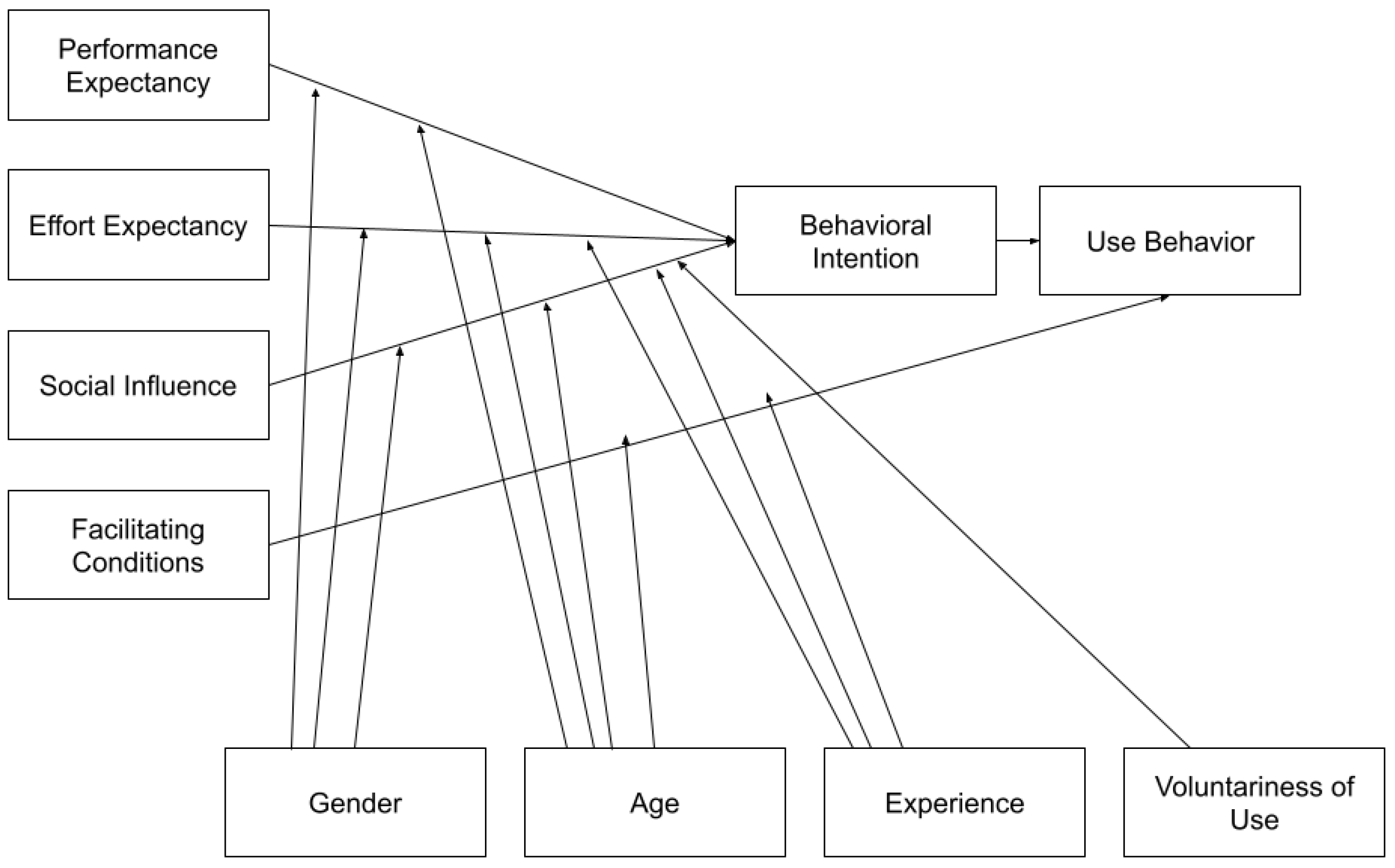

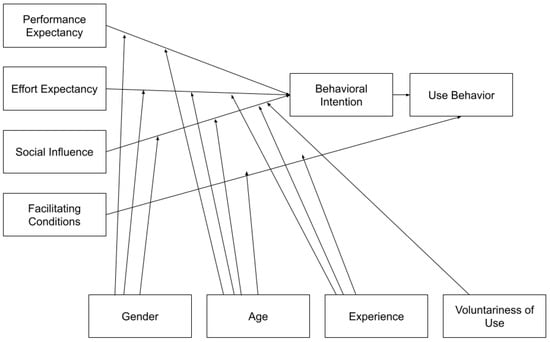

The UTAUT model, on the other hand, aims to unify a number of existing IT acceptance theories [45]. Just like the TAM, it suggests that the actual user behavior with regard to technology adoption is determined by a user’s Behavioral Intention (BI). Yet, with UTAUT, BI is directly influenced by a user’s Performance Expectancy, Effort Expectancy, Social Influence, and other Facilitating Conditions. The effect of these factors is then moderated by Gender, Age, Experience, and Voluntariness of Use. Venkatesh et al.’s original UTAUT model is depicted in Figure 2.

Figure 2.

The Unified Theory of Acceptance and Use of Technology according to Venkatesh et al. [45].

A later revision of the model, i.e., UTAUT2, tried to tackle the prevailing criticism of most technology acceptance research being targeted at organizational as opposed to private settings, which led to the introduction of additional factors, such as Price Value, Hedonic Motivation, and Habit [49].

Although UTAUT aims to provide a more holistic understanding of technology acceptance, it is considered a more complex model and thus less suitable for the initial explorations of a field. Furthermore, with respect to the acceptance of chatbots, the use of the TAM has seen a higher uptake in the literature [46,50], reporting on investigations in online shopping [16,46,51], veterinary consultation [52], mental health care, and online banking [53]. In all of these instances, researchers have used the original version of the TAM, which, for simplicity reasons, neglects factors that impact users’ PU and PEU. Consequently, we decided to also use this base version of the TAM as a starting point for our investigations.

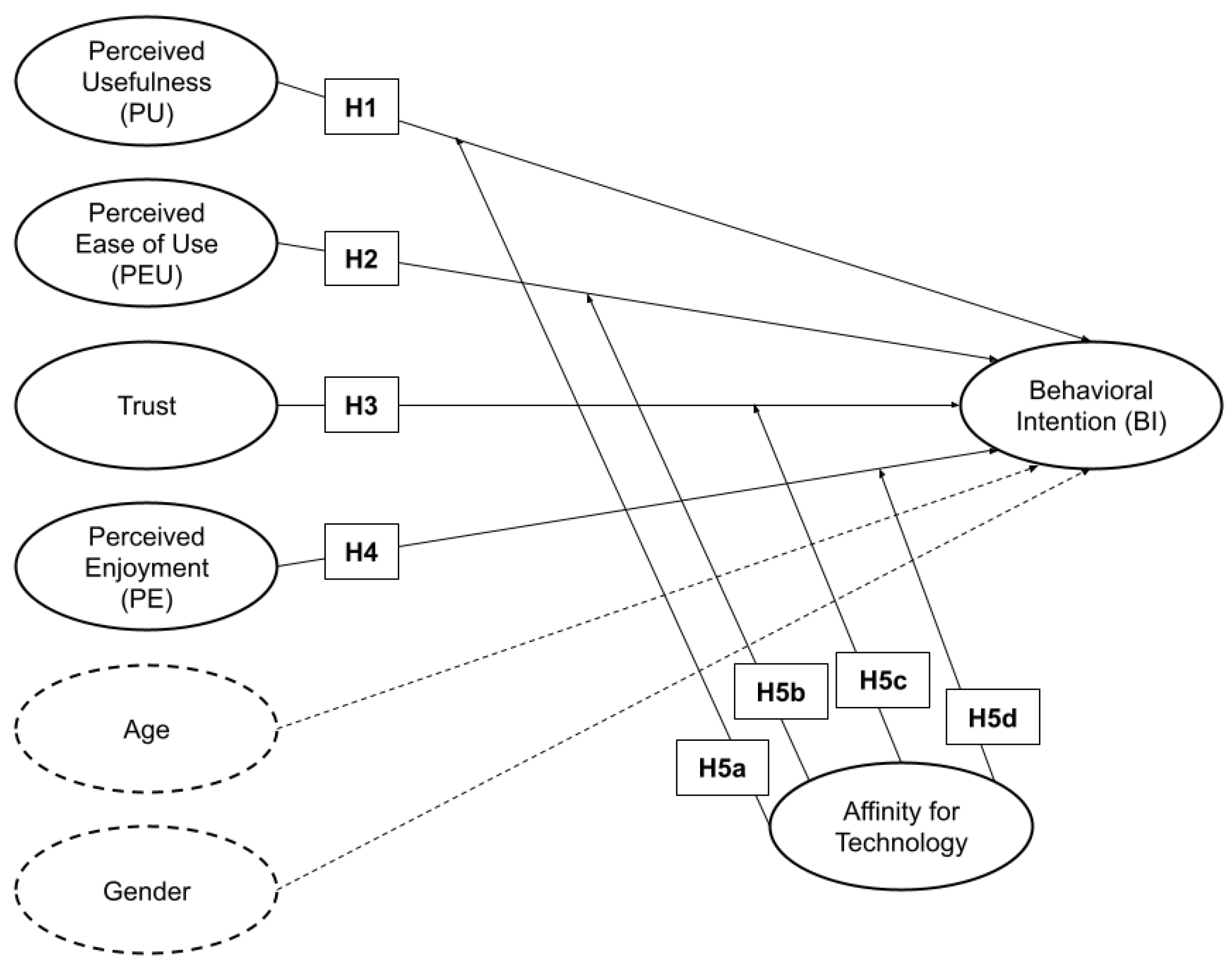

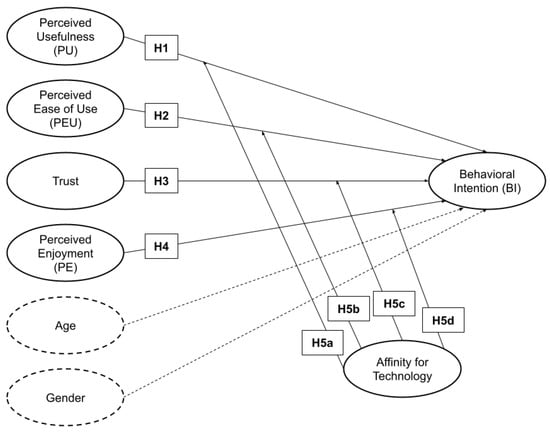

When applying the TAM to a specific focus of interest, researchers usually adapt and extend the model, adding factors such as Perceived Enjoyment, Price Consciousness, Perceived Risk, Trust, Compatibility, Technological Anxiety, or Level of Anthropomorphism. In the tourism industry, the TAM has already been used to investigate the acceptance of social media for choosing travel destinations, self-service hotel technology, and web-based self-service technology [50]. Consequently, we may argue that adapting the existing model can also be considered a valid approach when investigating travelers’ acceptance of chatbots for airport customer service. Thus, in order to better fit the model to the airport domain, we propose the following adaptations. First, we suggest adding two constructs hypothesized to be relevant, i.e., Trust and Perceived Enjoyment (PE). Furthermore, we expect people’s Affinity for Technology to have a moderating effect on the relationship between the predictor variables and BI. Finally, we propose to control for Age and Gender as potential confounding variables. The resulting adapted conceptual technology acceptance model is illustrated in Figure 3. All variable constructs that were added to Davis’ original TAM are described and further justified in Sections 4.1–4.4.

Figure 3.

Conceptual acceptance model and respective hypotheses (cf. Section 5.2) extending Davis’ original Technology Acceptance Model.

4.1. Trust

Trust is considered a critical factor impacting the adoption of chatbots, for it describes whether or not people believe the information provided through the technology to be valid [54]. Particularly in domains such as banking, education, and shopping [46], trust plays a significant role, as well as in settings that involve a certain risk [55] or service need [56]. Also, in tourism, it has been shown that trust positively influences the Behavioral Intention to adopt a new technology [57]. Since potential chatbot use cases at airports include seat upgrades and flight inquiries, which require reliable transactions and trustworthy information provision, we may argue that, here also, one should expect a positive connection between people’s perceived trust in the chatbot and their Behavioral Intention to use it.

4.2. Perceived Enjoyment

Previous work has shown that, next to rational considerations, emotional aspects also need to be considered relevant indicators of technology acceptance [49]. Particularly in private contexts, consumers embrace technology not only for performance improvements but also because they enjoy using it [51]. This hedonic motivation to use technology has been conceptualized as Perceived Enjoyment [16,45,58]. It captures a user’s intrinsic motivation and willingness to engage with technology and indirectly measures the degree of enjoyment derived from these interactions [59]. Therefore, we may argue that the extent to which a traveler enjoys the interaction with a chatbot influences his/her Behavioral Intention to use such a tool [60,61].

4.3. Age and Gender

Research suggests that younger individuals adapt more readily to emerging technologies, while older users may take longer to acclimate and trust respective technological changes [46]. Venkatesh et al. [45] stress the significance of age as well as gender [47] in technology adoption. Likewise, Kelly et al. [53] introduced Age and Gender when investigating Behavioral Intention in mental health care, online shopping, and online banking and found that, particularly for risk- and trust-related topics, Gender is a valuable predictor. Although gender effects could be driven by socially constructed gender roles [45], it may still be argued that both Age and Gender should be added as control variables to a model-driven technology acceptance study.

4.4. Affinity for Technology

Studies show that existing knowledge about technology, a certain technological savviness, or simply an affinity for technology use has a substantial influence on the Behavioral Intention to use technology [53]. To this end, Bröhl et al. [62] as well as Svendsen et al. [63] theorized that Affinity for Technology would affect the relationship between predictor variables and Behavioral Intention. Similarly, Aldás-Manzano et al. [64] stated that, when users are more technology-affine, they are also more likely to use a technology. Thus, we may consider that Affinity for Technology should have a moderating impact on the influence that PU, PEU, Trust, and PE have on BI.

5. Method, Material, and Hypotheses

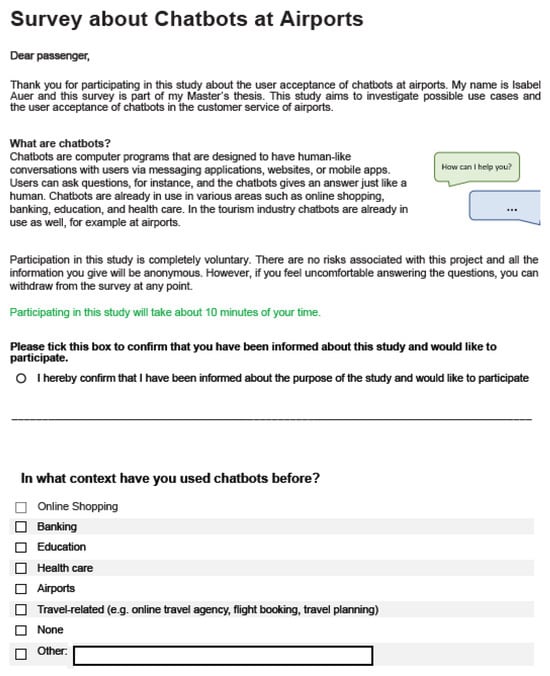

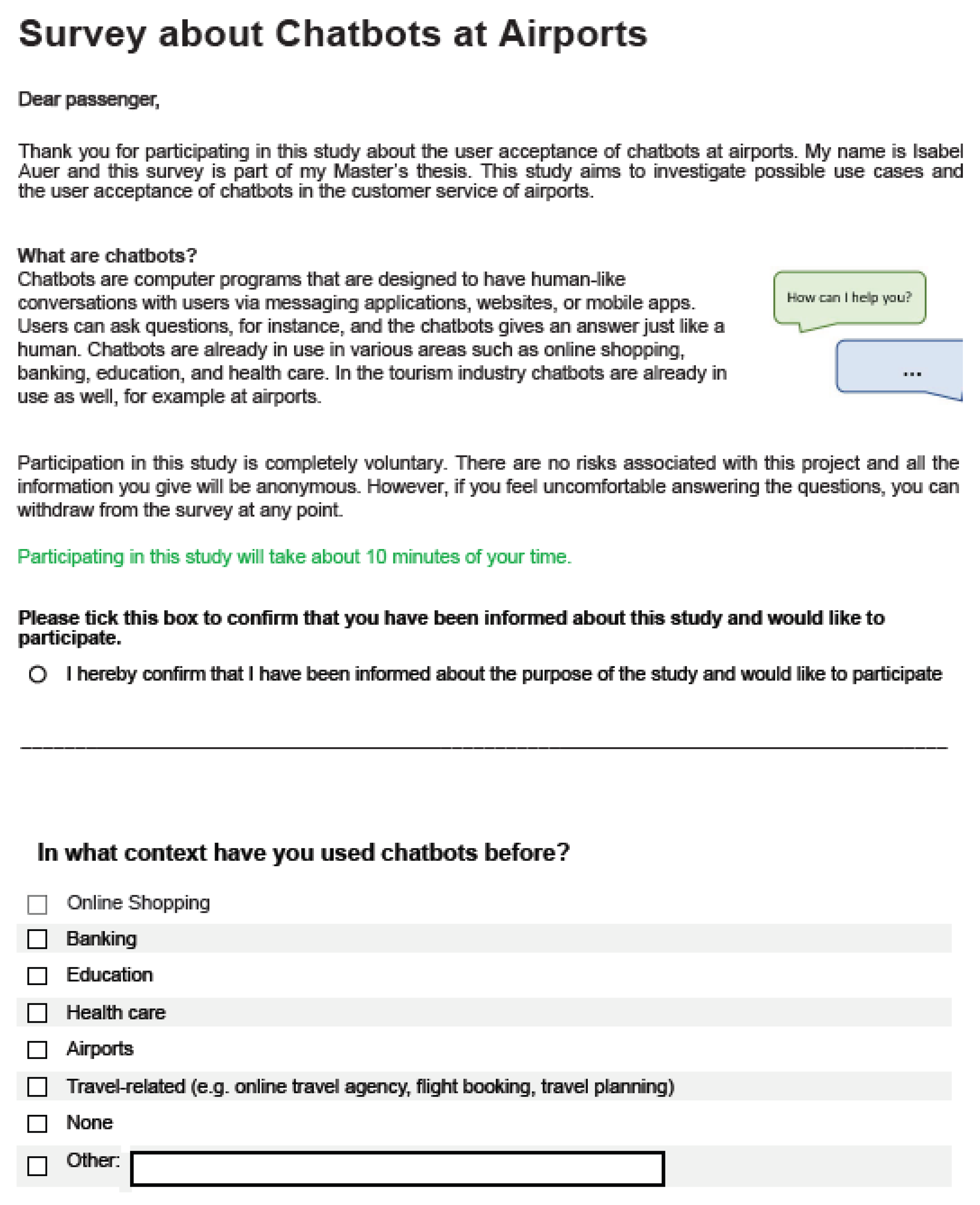

In order to investigate the acceptance of chatbots in airport customer service based on the above-defined conceptual acceptance model, we employed a questionnaire instrument composed of existing, previously validated survey components (cf. Section 5.1). Additionally, we asked for people’s feedback on a number of potential use cases in which chatbots could support service quality at airports. The questionnaire was available in English and German and distributed among travelers who were queuing at the security check-in of the same local airport that was already subject to our initial exploration (cf. Section 3). Participation was entirely voluntary and in accordance with the University’s ethical guidelines on conducting studies that require human participation.

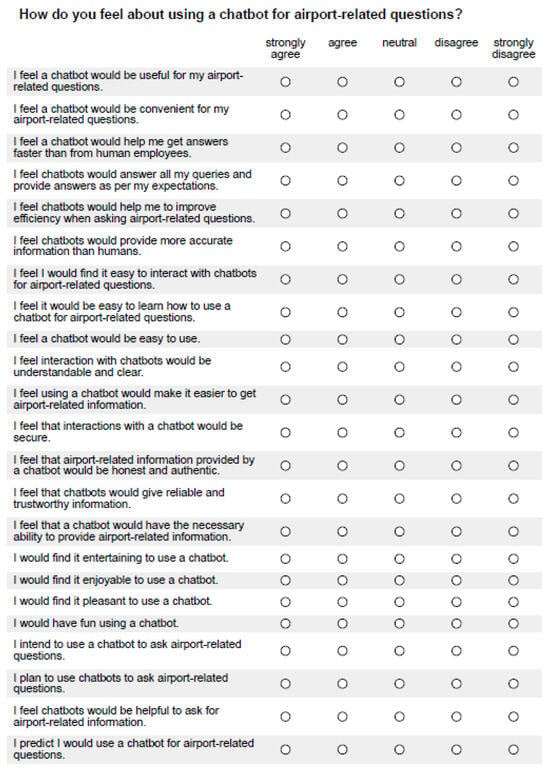

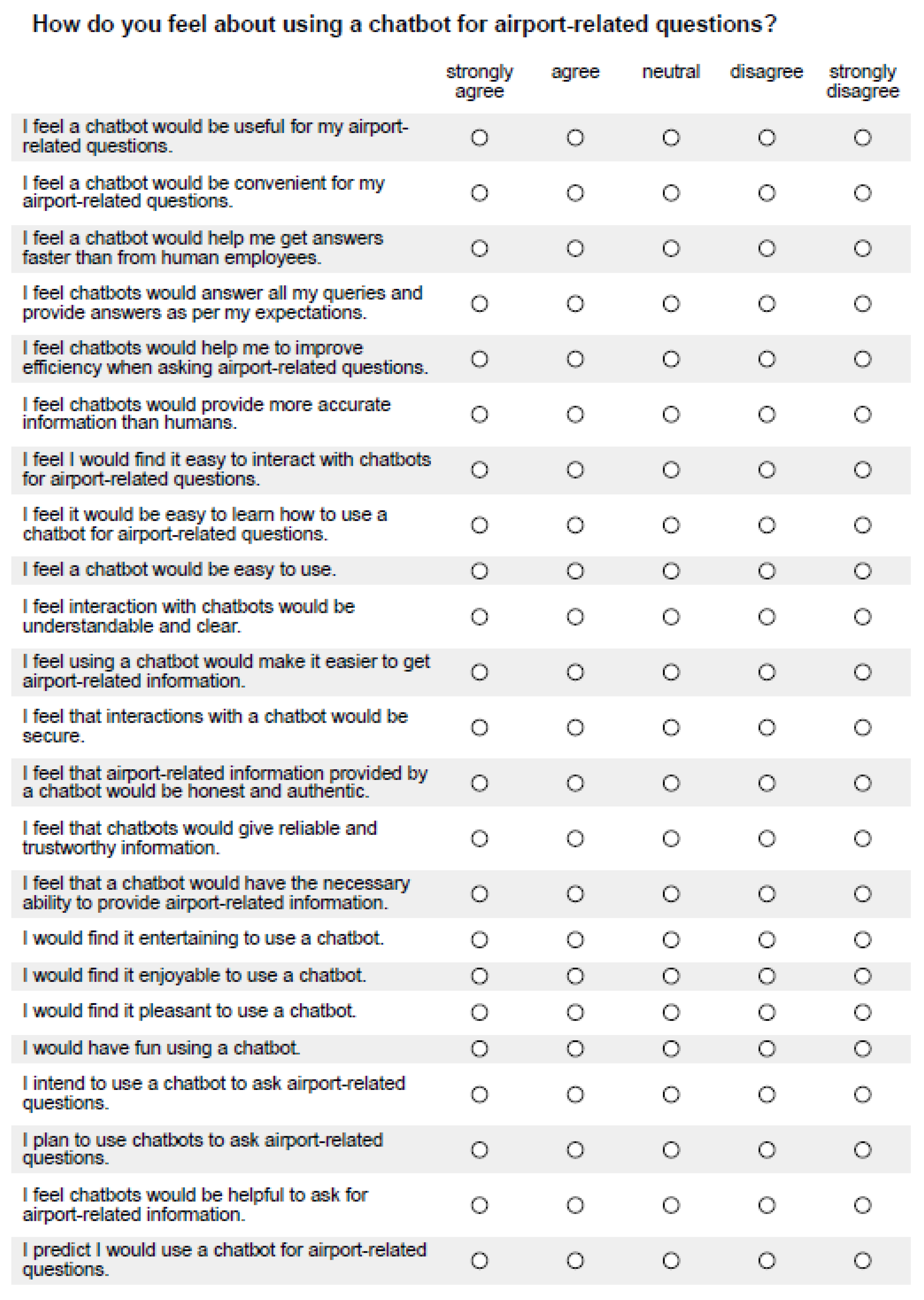

5.1. Questionnaire

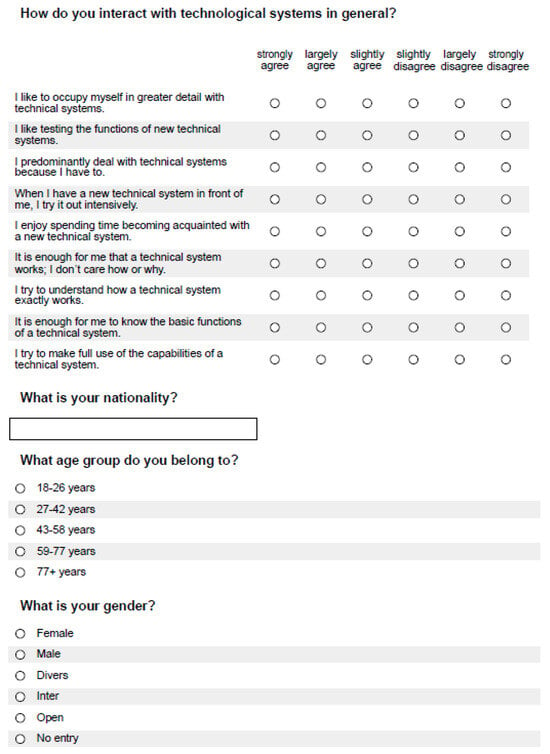

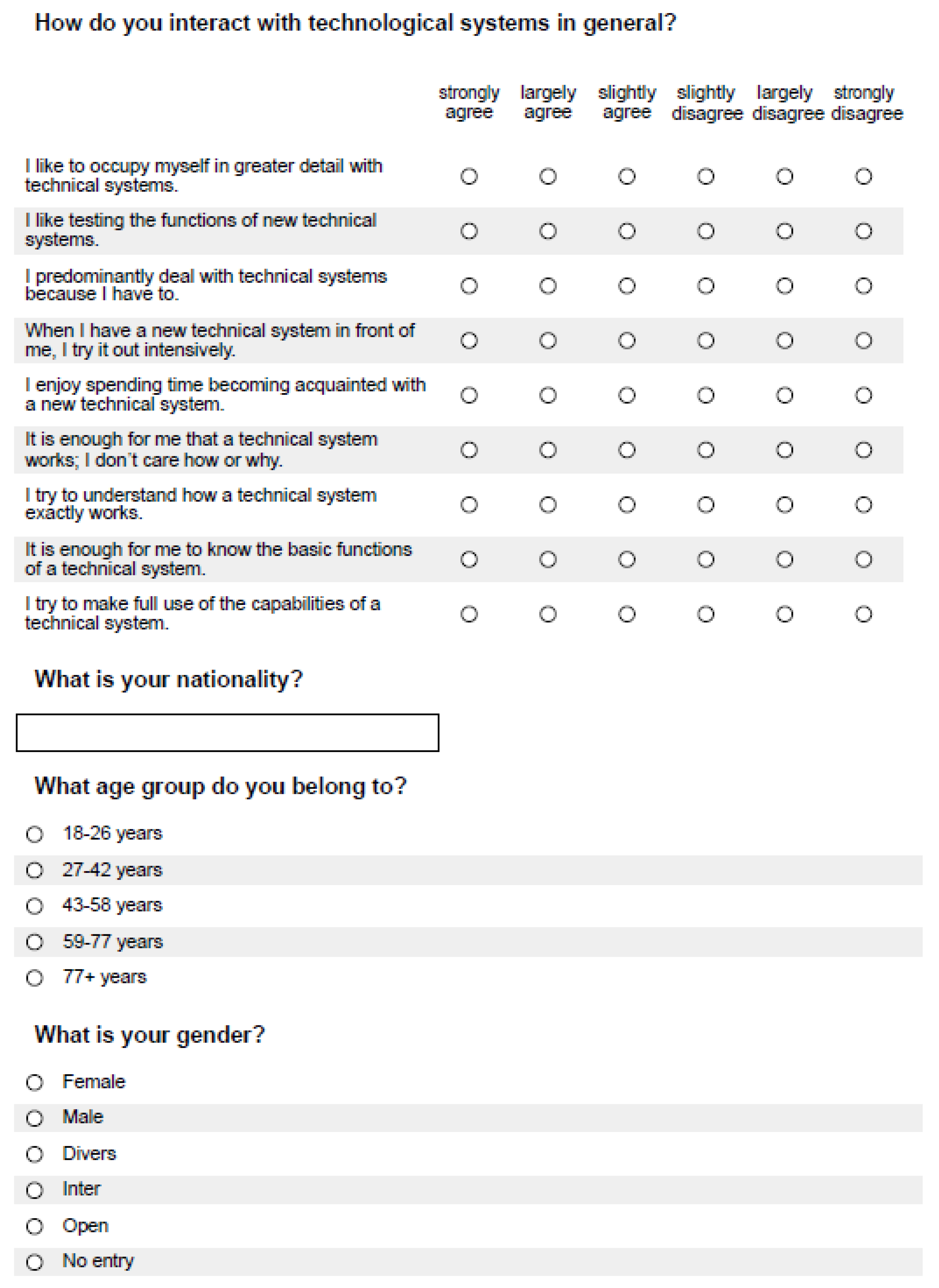

Based on the conceptual model illustrated in Figure 3, the goal of the questionnaire instrument was to investigate travelers’ acceptance of using chatbots for customer service inquiries at airports. Questionnaire items for PU and PEU were taken from Davis [44], Davis and Venkatesh [65], Abou-Shouk et al. [59], Patil and Kulkarni [66], Pereira et al. [67], and Pillai et al. [50]. For Trust, we used items previously employed by Pillai and Sivathanu [57] and Pillai et al. [50]. PE was measured via items proposed by Van der Heijden [58] and Abou-Shouk et al. [59]. BI used scales from Davis [44], Davis and Venkatesh [65], Van der Heijden [58], Venkatesh and Davis [47], and Buabeng-Andoh [68], and in order to measure people’s Affinity for Technology, we used the ATI scale developed and validated by Franke et al. [69].

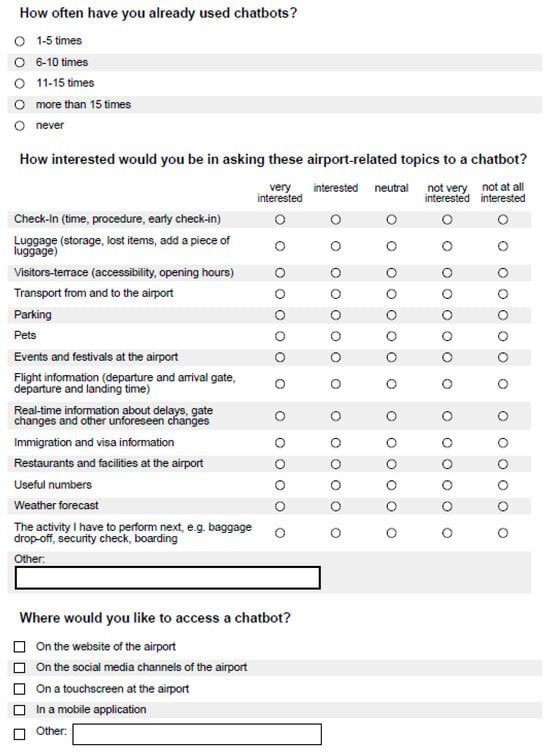

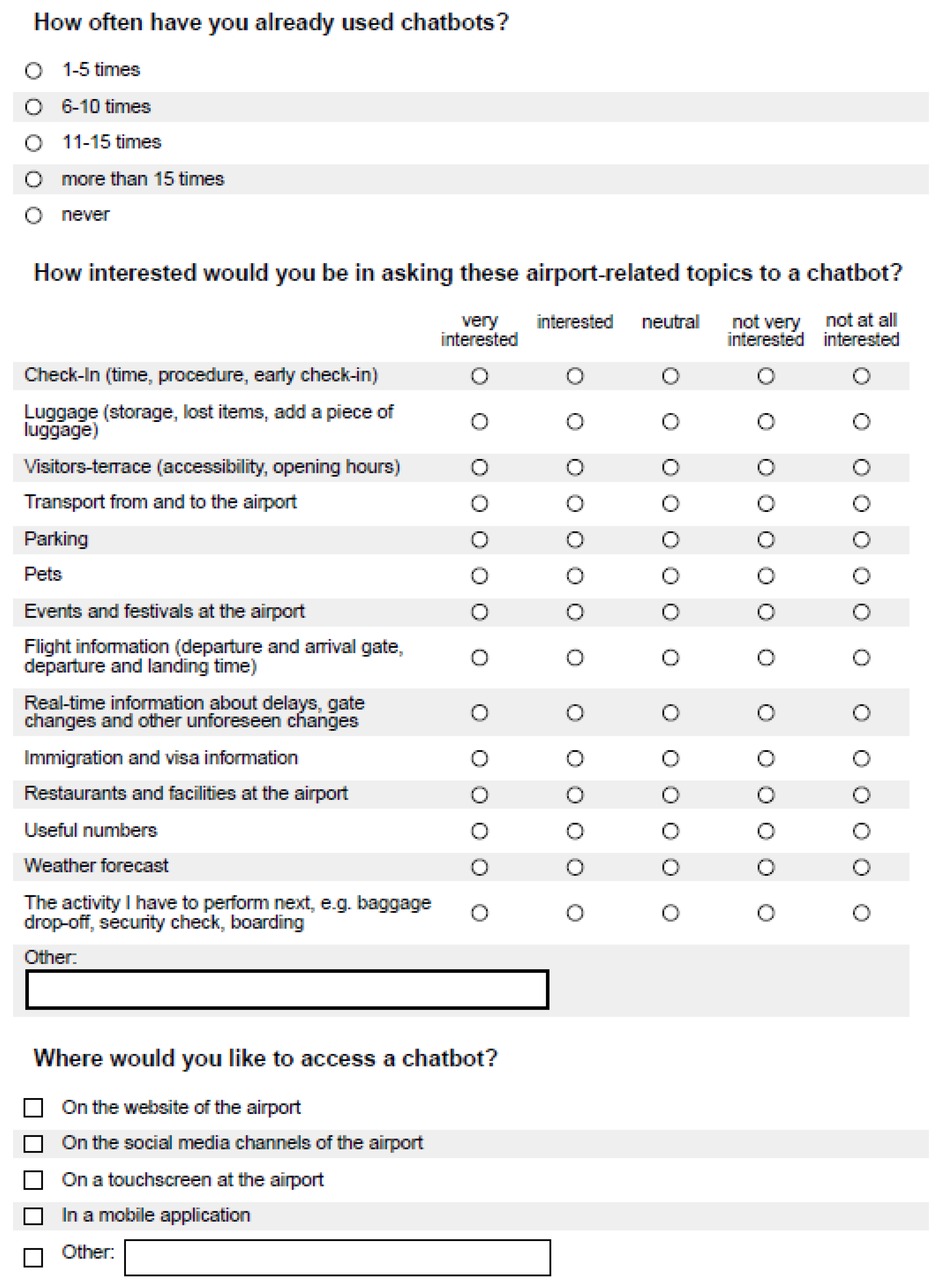

In addition, we asked about people’s previous experiences with chatbots and preferences for different chatbot use cases at the airport (note: these use cases were extracted from the interview with the PR representative reported on in Section 3). Specifically, they were asked about the context in which they had already used chatbots, how often they had used chatbots, what airport-related topics they would use a chatbot for, and how they would like to access such a chatbot. Finally, we collected data on people’s nationality, age, and gender. The complete questionnaire in English can be found in Appendix A.

Before handing out the questionnaire to actual travelers at the airport, we pre-tested it for coherence and clarity with students and, afterward, sent it to the University’s Ethics Committee for clearance.

5.2. Hypotheses

Building upon the previous work discussed in Section 4, the following hypotheses were used to underpin our investigation:

- H1: The Perceived Usefulness (PU) of an airport chatbot has a positive effect on travelers’ Behavioral Intention (BI) to use a chatbot at the airport.

- H2: The Perceived Ease of Use (PEU) of an airport chatbot has a positive effect on travelers’ Behavioral Intention (BI) to use a chatbot at the airport.

- H3: Trust has a positive effect on travelers’ Behavioral Intention (BI) to use a chatbot at the airport.

- H4: Perceived Enjoyment (PE) has a positive effect on travelers’ Behavioral Intention to use an airport chatbot.

- H5a: Affinity for Technology moderates the influence of Perceived Usefulness (PU) on the Behavioral Intention (BI) to use an airport chatbot.

- H5b: Affinity for Technology moderates the influence of Perceived Ease of Use (PEU) on the Behavioral Intention (BI) to use an airport chatbot.

- H5c: Affinity for Technology moderates the influence of Trust on the Behavioral Intention (BI) to use an airport chatbot.

- H5d: Affinity for Technology moderates the influence of Perceived Enjoyment (PE) on the Behavioral Intention to use an airport chatbot.

These hypotheses (i.e., H1–H4 and H5a–H5d) are also depicted in Figure 3.

6. Results

We received a total of questionnaires, of which were fully completed. Descriptive analyses were conducted for people’s age, gender, and the context in which they had used chatbots before, as well as the airport-related contexts they perceived as suitable to use a chatbot in and the entry points they would imagine for such an interaction. For these analyses, we used all the data that we were able to collect. For the hypothesis-driven analyses focusing on our proposed conceptual acceptance model, however, we only used fully completed questionnaire responses.

6.1. Descriptive Analyses

The majority of our study participants were male (58.1% of all participants), between 27 and 42 years old (42.4% of all participants), and came from the Netherlands (31.4% of all participants). Consequently, 141 (i.e., 73.8%) of the total number of questionnaire responses were provided in English, and only 50 (i.e., 26.2%) were in German. More details on the sample description can be found in Table 1.

Table 1.

The descriptive characteristics of the collected sample ().

A mean comparison between the two language groups showed that the sample holds no significant differences concerning age, gender, the frequency of previous chatbot use, people’s preferred point of access for an airport chatbot, and the contexts in which they had used chatbots before. Table 2 provides additional information on these aspects.

Table 2.

Chatbot experiences and access point preferences for an airport chatbot found in the collected sample ().

Concerning possible use cases for chatbots at airports, however, we found significant differences between the language groups. Specifically, local (i.e., German-speaking) participants were less interested in asking about events that would happen at the airport than respondents to the English questionnaire (). On the other hand, English-speaking respondents were significantly more interested in asking about visa and immigration information (), as well as activities they could perform right after they arrived at their destination ().

6.2. Hypothesis Analyses

In order to test the hypotheses outlined in Section 5.2, we only used the fully completed questionnaires. Cronbach’s α analysis for PU, Trust, PE, and BI showed high internal consistency, i.e., α >. For PEU, we excluded the question “I feel a chatbot would be easy to use” so as to raise Cronbach’s α from to . Furthermore, in order to ensure validity and reliability, we tested the independence of the residuals, the normality of the residuals, the homoscedasticity of the residuals, and potential multicollinearity among the independent variables.
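For readers unfamiliar with the statistic, Cronbach’s alpha for a scale of k items is commonly computed as k/(k − 1) · (1 − Σ item variances / variance of the summed score). The Python sketch below implements this textbook formula with sample variances; the function name and the toy scores are assumptions made for illustration and are not the study’s data or analysis script.

```python
from statistics import variance


def cronbach_alpha(items):
    """Compute Cronbach's alpha.

    items: one list of scores per questionnaire item; every inner list holds
    the answers of the same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Summed scale score per respondent.
    totals = [sum(scores) for scores in zip(*items)]
    # Sum of the sample variances of the individual items.
    item_var_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Toy data (hypothetical): two items answered by four respondents.
toy_items = [[1, 2, 3, 4], [2, 1, 4, 3]]
alpha = cronbach_alpha(toy_items)  # 0.75 for this toy data
```

Re-running the function on a scale with one item left out gives the "alpha if item deleted" value, which is the kind of comparison that motivates dropping a single item from a construct.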

Next, we conducted three different regression analyses to test our conceptual model. The first analysis focused on the two control variables, Age and Gender. The second analysis computed the influence of PU, PEU, Trust, and PE on BI (Hypotheses H1–H4). Finally, the third analysis evaluated the moderating effect of Affinity for Technology (Hypotheses H5a–H5d). Since a mean comparison analysis between the two language groups showed a significant difference in PE between the English sample () and the German sample (), all three analyses were conducted with three different sample groups, i.e., the entire sample (), the English sample (), and the German sample ().
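As an orientation aid, the three analyses can be written as regression equations of the following general form. This is a sketch under stated assumptions: the exact model specification (e.g., whether the control variables enter the later models, or whether the predictors were mean-centered) is not given in the text, and the symbol ATI for Affinity for Technology is our shorthand.

```latex
\begin{align}
\text{Analysis 1:}\quad BI &= \beta_0 + \beta_1\,\mathit{Age} + \beta_2\,\mathit{Gender} + \varepsilon \\
\text{Analysis 2:}\quad BI &= \beta_0 + \beta_1\,PU + \beta_2\,PEU + \beta_3\,\mathit{Trust} + \beta_4\,PE + \varepsilon \\
\text{Analysis 3:}\quad BI &= \beta_0 + \beta_1\,PU + \beta_2\,PEU + \beta_3\,\mathit{Trust} + \beta_4\,PE + \beta_5\,ATI \notag \\
&\quad + \beta_6\,(PU \times ATI) + \beta_7\,(PEU \times ATI) + \beta_8\,(\mathit{Trust} \times ATI) + \beta_9\,(PE \times ATI) + \varepsilon
\end{align}
```

In this formulation, Hypotheses H1–H4 correspond to positive coefficients \beta_1 to \beta_4 in the second equation, while a moderating effect as posited in H5a–H5d would show up as non-zero interaction coefficients \beta_6 to \beta_9 in the third.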

6.2.1. The Control Variables Age and Gender

Looking at Age and Gender, we found no significant connection with a respondent’s Behavioral Intention to use a chatbot in an airport customer service scenario, neither with the full sample (; ) nor with the English (; ) or German (; ) sub-sample.

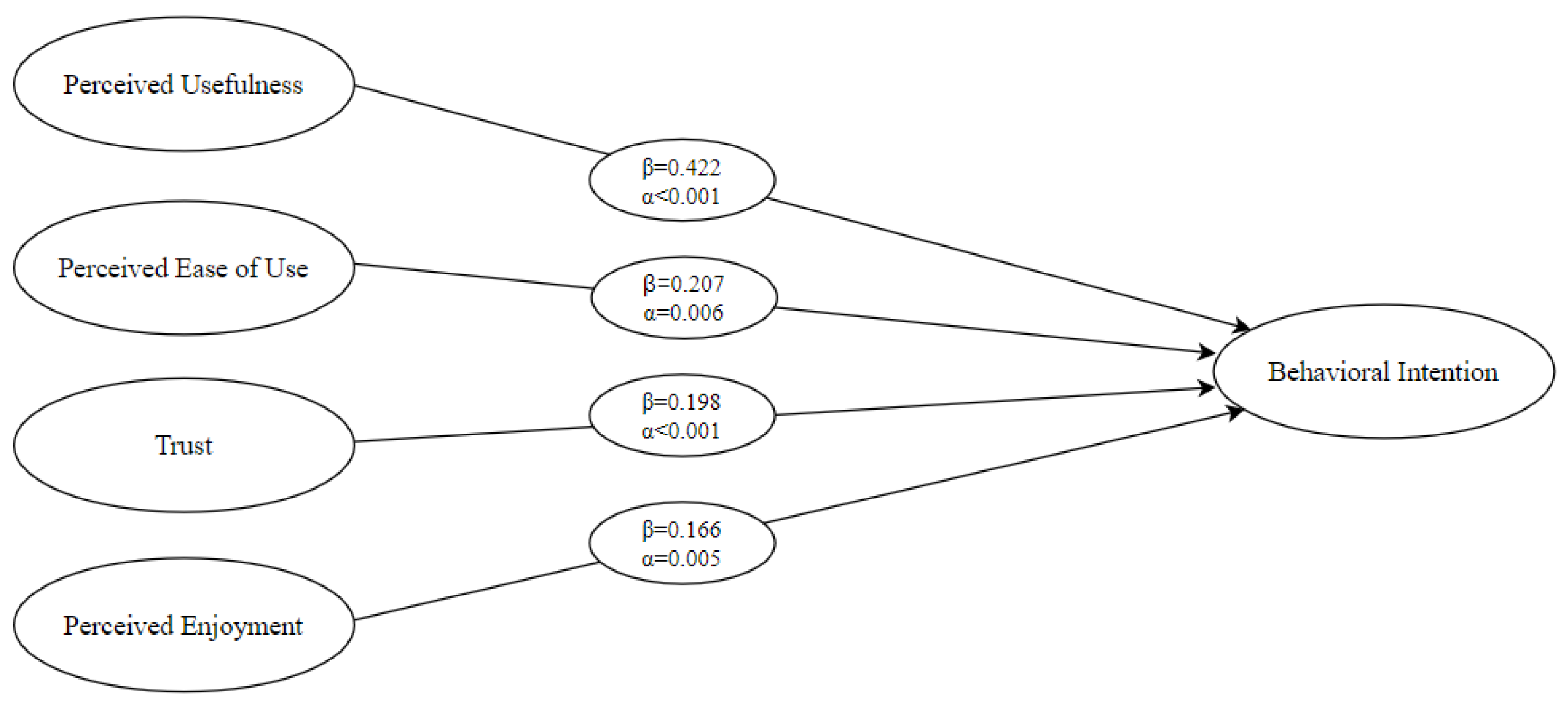

6.2.2. The Impact of PU, PEU, Trust, and PE on BI

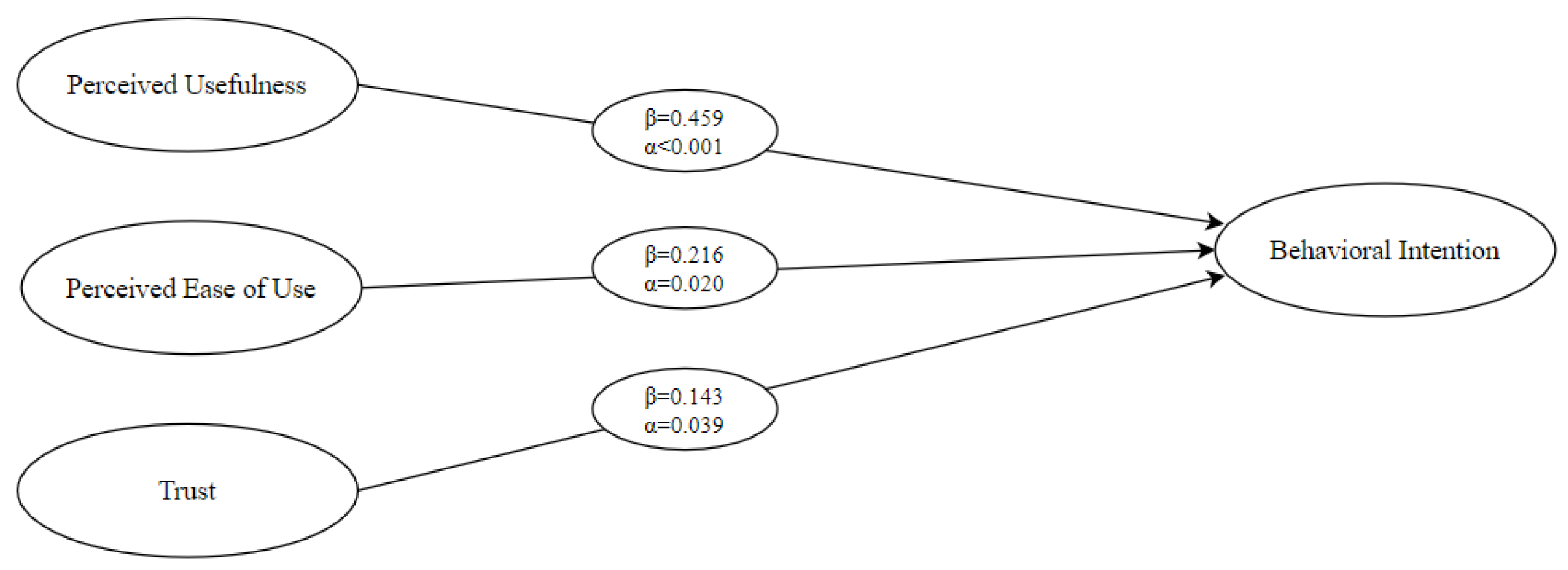

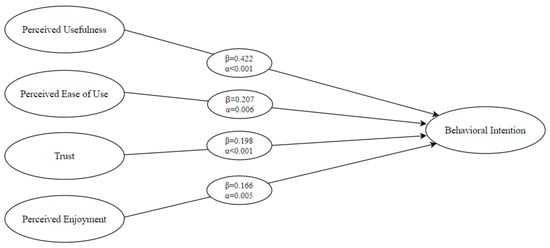

A linear regression analysis conducted with the entire sample points to a highly significant influence of PU on BI (; ). PEU (; ), Trust (; ), and PE (; ) also show a significant positive impact on respondents’ Behavioral Intention to use a chatbot in an airport customer service scenario. The overall model fit lies at , explaining 66% of the variance; Hypotheses H1–H4 are thus all supported by the collected data (cf. Figure 4).
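As a rough illustration of how an explained-variance figure of this kind is obtained, the sketch below fits an ordinary least squares regression of BI on four predictors in plain Python and computes R². The function names (`solve_linear_system`, `fit_ols`) and all scores are hypothetical and invented for this example; they are not the study’s data, and the sketch is not necessarily the procedure or software the authors used.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix, so row operations apply to both sides at once.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular design matrix (perfect multicollinearity?)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_ols(predictors, outcome):
    """Return (coefficients, r_squared); coefficients[0] is the intercept."""
    rows = [[1.0] + list(p) for p in predictors]
    k = len(rows[0])
    # Normal equations: (X'X) beta = X'y.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, outcome)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(outcome) / len(outcome)
    ss_res = sum((y - f) ** 2 for y, f in zip(outcome, fitted))
    ss_tot = sum((y - mean_y) ** 2 for y in outcome)
    return beta, 1.0 - ss_res / ss_tot


# Hypothetical 5-point scale means per respondent: (PU, PEU, Trust, PE).
scores = [(5, 4, 4, 3), (2, 3, 2, 2), (4, 4, 3, 5), (1, 2, 2, 1),
          (3, 3, 4, 3), (5, 5, 4, 4), (2, 2, 1, 3), (4, 3, 5, 4)]
bi = [4, 2, 5, 1, 3, 5, 2, 4]  # hypothetical Behavioral Intention scores

coefficients, r_squared = fit_ols(scores, bi)
print("coefficients:", coefficients)
print("R^2:", r_squared)
```

R² is the share of the variance in the outcome that the fitted model accounts for, which is how a value of 0.66 translates into "66% of the variance". Significance tests for the individual coefficients are deliberately left out of this sketch.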

Figure 4. Perceived Usefulness, Perceived Ease of Use, Trust, and Perceived Enjoyment explain 66% of the variance in all respondents’ Behavioral Intention to use chatbots for airport customer service scenarios.

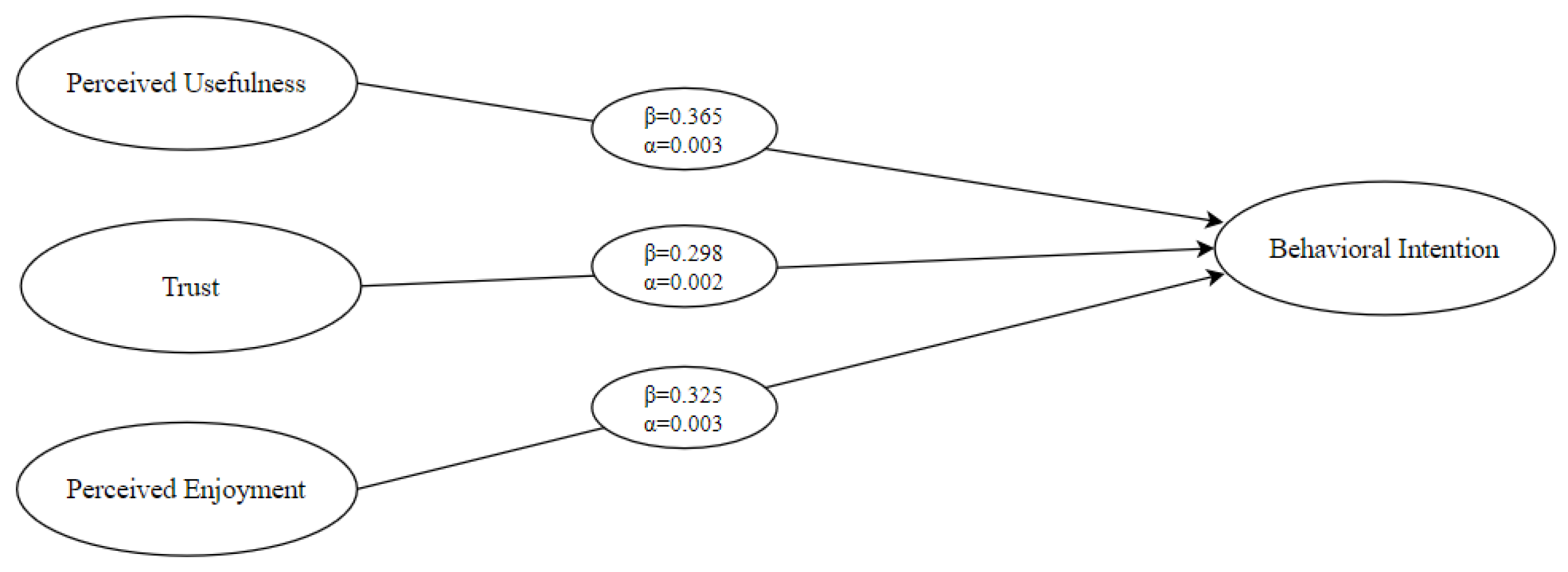

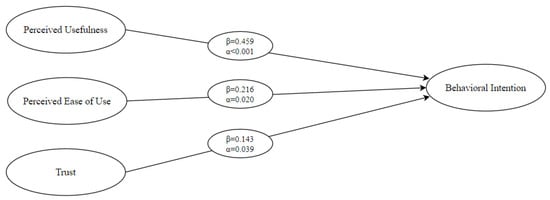

Looking at the two language groups separately, we found less support for our conceptual model. Specifically, in the English sample, it is again PU that has the highest positive impact on BI (; ), followed by PEU (; ) and Trust (; ). The influence of PE on BI, however, is not significant (; ). Thus, when only considering the English sample, H4 needs to be rejected. Yet, the other three constructs, i.e., PU, PEU, and Trust, are still able to explain 65% of the variance in people’s Behavioral Intention to use chatbots for airport customer service scenarios (corrected English model: ; cf. Figure 5).

Figure 5. Perceived Usefulness, Perceived Ease of Use, and Trust explain 65% of the variance in English-speaking respondents’ Behavioral Intention to use chatbots for airport customer service scenarios.

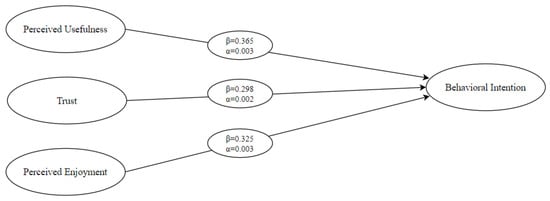

Finally, when only considering the German sample, we also see some deviations from the initial results. Again, PU has the highest impact on BI (; ). This time, however, it is followed by PE (; ) and Trust (; ), whereas PEU does not have a significant positive effect on BI in the German sample (; ). Consequently, if we only consider the German sample, H2 needs to be rejected. Interestingly, when considering only PU, PE, and Trust for these data, the model fit increases and explains almost 77% of the variance in BI (corrected German model: ; cf. Figure 6).

Figure 6. Perceived Usefulness, Perceived Enjoyment, and Trust explain almost 77% of the variance in German-speaking respondents’ Behavioral Intention to use chatbots for airport customer service scenarios.

6.2.3. The Moderating Effect of Affinity for Technology

Our final analysis evaluates the potential moderating effect of Affinity for Technology on the relationships between each of the four predictors (PU, PEU, Trust, and PE) and BI. Surprisingly, the data do not point to any statistically significant moderation, either for the complete sample or for the English or German sub-sample. Therefore, Hypotheses H5a, H5b, H5c, and H5d have to be rejected, and Affinity for Technology must be excluded as a moderating factor in our model.
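For readers less familiar with moderation analysis, one common specification adds a product term to the regression. This is an assumption made for illustration, since the exact model equation is not stated here. It is shown for PU, with AT as a shorthand introduced only for this example to denote Affinity for Technology:

$$
\mathrm{BI} = \beta_0 + \beta_1\,\mathrm{PU} + \beta_2\,\mathrm{AT} + \beta_3\,(\mathrm{PU} \times \mathrm{AT}) + \varepsilon
$$

Under this specification, a moderating effect would show up as a coefficient $\beta_3$ that differs significantly from zero. Analogous product terms for PEU, Trust, and PE would address the remaining moderation hypotheses. Predictors are often mean-centered before the product is formed, which reduces the correlation between the product term and its components.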

7. Discussion

Our study investigating potential use cases for chatbot applications in airport customer service and their respective acceptance by traveling customers shows that the majority of respondents are generally familiar with using chatbots, with almost 85% of them claiming to have already used such a conversational technology. Consequently, we may argue that chatbots have become mainstream and are commonly used and accepted in various fields of application. As for the context of airport customer service, travelers perceive the use of chatbots as particularly beneficial in situations where they require real-time feedback and information on flights, when they have questions regarding check-in and luggage, or when they require help regarding transportation from and to the airport or concerning parking. Other relevant use cases include visa and immigration inquiries and the search for specific airport contact numbers. All of these results are in line with earlier suggestions by Carisi et al. [13], Kim and Park [36], and Kattenbeck et al. [37].

To investigate travelers’ acceptance of chatbots for these kinds of application scenarios in more depth, we tested a conceptual model constructed based on previous work in technology acceptance. First, we evaluated whether people’s Age and Gender have an effect on their Behavioral Intention to use a chatbot. Surprisingly, in contrast to previous work by Kasilingam [46] and Kelly et al. [53], our data do not point to such a connection. One reason for this lack of influence may be found in people’s familiarity with the use of chatbots, with only about 15% of our participants stating that they had never used a chatbot before. Another hypothesis that we had to reject concerns the potential moderating effect of people’s Affinity for Technology on their Behavioral Intention to use a chatbot. Again, our data contradict previous suggestions (e.g., [64]) in that they do not support such a connection.

On the other hand, our study confirms previous work by Kelly et al. [53] and De Cicco et al. [51] in that it underlines the significant influence of Perceived Usefulness (PU), Trust, Perceived Ease of Use (PEU), and Perceived Enjoyment (PE) on the Behavioral Intention (BI) to use a chatbot. In particular, PU is by far the most important determinant of BI, explaining approx. 40% of its variance. A possible explanation for this is the strong task orientation that chatbots offer, which aligns with travelers’ concrete information needs.

Overall, these research results have implications for airports that consider implementing a chatbot to support their customer service. First, it may be assumed that travelers are already familiar with the concept and use of a chatbot. Second, from our empirical analysis, it may be concluded that Perceived Usefulness is by far the most important factor determining whether such a chatbot will be adopted by travelers. In particular, use cases involving important flight and check-in information are perceived to be helpful and may thus be considered a door opener for the successful implementation of chatbot technology at airports. Yet, next to usefulness, developers also need to address trust in the technology. Here, our research shows that travelers need to feel that the information provided by such a chatbot is reliable and trustworthy. Lastly, chatbots should not only serve a utilitarian function but also be easy and fun to use, so that they do not provoke frustration but rather provide a joyous addition to the overall travel experience.

8. Conclusions, Limitations, and Future Outlook

The study presented above investigated potential use cases and predictors for the acceptance of chatbots in airport customer service. Inspired by previous literature on technology acceptance, we used an extended TAM in order to collect relevant data. A total of travelers participated in our study, which showed that people’s Perceived Usefulness of a chatbot may be considered the most important predictor of its acceptance and that this perception of usefulness mainly focuses on the speedy provision of flight-relevant information. These results, however, face some limitations. Firstly, they revolve around chatbots for customer service contexts and may thus not be generalized to other airport departments or operations. Secondly, our study was conducted at a rather small local airport. A comparable investigation at an international, potentially more digitized airport may yield different results, particularly since we found differences between local and international travelers in our sample. A larger and particularly more diverse sample would thus be required to validate our findings. Thirdly, our research did not have participants interact with a chatbot before filling out the questionnaire and instead relied on their hypothetical or previously gained experiences with such tools. And, finally, we used a rather basic conceptual model to study travelers’ Behavioral Intention to use an airport customer service chatbot. A more holistic approach based on, e.g., UTAUT might have generated a more comprehensive understanding of the given problem space.

Despite these limitations, we believe that our findings provide relevant insights concerning the implementation of chatbot technology at airports and may be considered a starting point for additional investigations. To this end, future work should particularly explore the actual suitability of certain use cases (e.g., visa and immigration needs) and their respective challenges. Also, a better understanding of contextual factors, such as place and time, is required to better adapt chatbot services to the needs of individual travelers. Finally, it may be worth investigating airport chatbot acceptance from a cultural point of view, as there seems to be a great difference in people’s openness toward this type of conversational technology, and a better understanding of these differences might help prioritize respective implementation efforts.

Author Contributions

The article was a collaborative effort by all three co-authors. Conceptualization, I.A. and S.S.; methodology, I.A.; validation, I.A. and S.S.; formal analysis, I.A.; investigation, I.A.; data curation, I.A., S.S. and G.G.; writing—original draft preparation, I.A. and S.S.; writing—review and editing, S.S. and G.G.; visualization, S.S.; supervision, S.S.; project administration, S.S. All authors have read and agreed to the published version of the manuscript.

Funding

The research was not directly funded but conducted as part of an internship placement at Amadeus IT Group S.A.

Data Availability Statement

The data presented in this study are openly available via Zenodo at https://doi.org/10.5281/zenodo.11074341 (accessed: 26 April 2024).

Acknowledgments

We want to thank the travelers at Innsbruck Airport who took the time to complete our questionnaire while standing in line for their security check. Also, we would like to thank the Public Relations Officer of Innsbruck Airport for providing her insights concerning typical customer care requests and their handling. Finally, we want to thank the Amadeus IT Group S.A. for supporting this research as part of an internship placement.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| NLP | Natural Language Processing |
| IVR | Interactive Voice Response |
| CA | Conversational Agent |
| ECA | Embodied Conversational Agent |
| LSTM | Long Short-Term Memory |
| GPT | Generative Pre-trained Transformer |
| BERT | Bidirectional Encoder Representations from Transformers |
| TAM | Technology Acceptance Model |
| UTAUT | Unified Theory of Acceptance and Use of Technology |
| PU | Perceived Usefulness |
| PEU | Perceived Ease of Use |
| PE | Perceived Enjoyment |
| BI | Behavioral Intention |

Appendix A

Figure A1. Questionnaire page 1.

Figure A2. Questionnaire page 2.

Figure A3. Questionnaire page 3.

Figure A4. Questionnaire page 4.

References

- OpenAI. ChatGPT. Available online: https://chat.openai.com/auth/login (accessed on 24 April 2024).

- Lund, B.D.; Wang, T. Chatting about ChatGPT: How may AI and GPT impact academia and libraries? Libr. Hi Tech News 2023, 40, 26–29.

- Sheehan, B.; Jin, H.S.; Gottlieb, U. Customer service chatbots: Anthropomorphism and adoption. J. Bus. Res. 2020, 115, 14–24.

- Adamopoulou, E.; Moussiades, L. Chatbots: History, technology, and applications. Mach. Learn. Appl. 2020, 2, 100006.

- Laranjo, L.; Dunn, A.G.; Tong, H.L.; Kocaballi, A.B.; Chen, J.; Bashir, R.; Surian, D.; Gallego, B.; Magrabi, F.; Lau, A.Y.; et al. Conversational agents in healthcare: A systematic review. J. Am. Med. Inform. Assoc. 2018, 25, 1248–1258.

- Apple. Siri. Available online: https://www.apple.com/siri/ (accessed on 24 April 2024).

- Amazon. Alexa. Available online: https://alexa.amazon.com/ (accessed on 24 April 2024).

- Google. Google Assistant. Available online: https://assistant.google.com/ (accessed on 24 April 2024).

- Dale, R. The return of the chatbots. Nat. Lang. Eng. 2016, 22, 811–817.

- Følstad, A.; Brandtzæg, P.B. Chatbots and the new world of HCI. Interactions 2017, 24, 38–42.

- Haugeland, I.K.F.; Følstad, A.; Taylor, C.; Bjørkli, C.A. Understanding the user experience of customer service chatbots: An experimental study of chatbot interaction design. Int. J. Hum.-Comput. Stud. 2022, 161, 102788.

- Adam, M.; Wessel, M.; Benlian, A. AI-based chatbots in customer service and their effects on user compliance. Electron. Mark. 2021, 31, 427–445.

- Carisi, M.; Albarelli, A.; Luccio, F.L. Design and implementation of an airport chatbot. In Proceedings of the 5th EAI International Conference on Smart Objects and Technologies for Social Good, Valencia, Spain, 25–27 September 2019; pp. 49–54.

- Lee, S.; Miller, S.M. AI gets real at Singapore’s Changi Airport (Part 1). Asian Manag. Insights (Singapore Manag. Univ.) 2019, 6, 10–19.

- Rapp, A.; Curti, L.; Boldi, A. The human side of human-chatbot interaction: A systematic literature review of ten years of research on text-based chatbots. Int. J. Hum.-Comput. Stud. 2021, 151, 102630.

- Rese, A.; Ganster, L.; Baier, D. Chatbots in retailers’ customer communication: How to measure their acceptance? J. Retail. Consum. Serv. 2020, 56, 102176.

- Neururer, M.; Schlögl, S.; Brinkschulte, L.; Groth, A. Perceptions on authenticity in chat bots. Multimodal Technol. Interact. 2018, 2, 60.

- Ukpabi, D.C.; Aslam, B.; Karjaluoto, H. Chatbot adoption in tourism services: A conceptual exploration. In Robots, Artificial Intelligence, and Service Automation in Travel, Tourism and Hospitality; Emerald Publishing Limited: Bingley, UK, 2019; pp. 105–121.

- Turing, A.M. Computing Machinery and Intelligence. Mind New Ser. 1950, 59, 433–460.

- Weizenbaum, J. ELIZA—A Computer Program for the Study of Natural Language Communication Between Man and Machine. Commun. ACM 1966, 9, 36–45.

- Colby, K.M. Artificial Paranoia: A Computer Simulation Model of Paranoid Processes; Pergamon Press: Amsterdam, The Netherlands, 1975.

- AbuShawar, B.; Atwell, E. ALICE chatbot: Trials and outputs. Comput. Y Sist. 2015, 19, 625–632.

- Io, H.; Lee, C. Chatbots and conversational agents: A bibliometric analysis. In Proceedings of the 2017 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Singapore, 10–13 December 2017; pp. 215–219.

- Radziwill, N.M.; Benton, M.C. Evaluating quality of chatbots and intelligent conversational agents. arXiv 2017, arXiv:1704.04579.

- Grudin, J.; Jacques, R. Chatbots, humbots, and the quest for artificial general intelligence. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–11.

- Hussain, S.; Ameri Sianaki, O.; Ababneh, N. A survey on conversational agents/chatbots classification and design techniques. In Web, Artificial Intelligence and Network Applications: Proceedings of the Workshops of the 33rd International Conference on Advanced Information Networking and Applications (WAINA-2019), Kunibiki, Messe, 27–29 March 2019; Springer: Cham, Switzerland, 2019; pp. 946–956.

- Følstad, A.; Brandtzaeg, P.B. Users’ experiences with chatbots: Findings from a questionnaire study. Qual. User Exp. 2020, 5, 3.

- Agarwal, R.; Wadhwa, M. Review of state-of-the-art design techniques for chatbots. SN Comput. Sci. 2020, 1, 246.

- Caldarini, G.; Jaf, S.; McGarry, K. A literature survey of recent advances in chatbots. Information 2022, 13, 41.

- Mnasri, M. Recent advances in conversational NLP: Towards the standardization of Chatbot building. arXiv 2019, arXiv:1903.09025.

- Thorat, S.A.; Jadhav, V. A review on implementation issues of rule-based chatbot systems. In Proceedings of the International Conference on Innovative Computing & Communications (ICICC), New Delhi, India, 21–23 February 2020.

- Suta, P.; Lan, X.; Wu, B.; Mongkolnam, P.; Chan, J.H. An overview of machine learning in chatbots. Int. J. Mech. Eng. Robot. Res. 2020, 9, 502–510.

- Montejo-Ráez, A.; Jiménez-Zafra, S.M. Current approaches and applications in natural language processing. Appl. Sci. 2022, 12, 4859.

- Jurafsky, D.; Martin, J. Speech and Language Processing. An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition; Youcanprint: Tricase, Italy, 2023.

- Ali, A.; Amin, M.Z. Conversational AI Chatbot based on encoder-decoder architectures with attention mechanism. Artif. Intell. Festiv. 2019, 2.

- Kim, S.Y.; Park, M.S. Robot, AI and Service Automation (RAISA) in Airports: The Case of South Korea. In Proceedings of the 2022 IEEE/ACIS 7th International Conference on Big Data, Cloud Computing, and Data Science (BCD), Danang, Vietnam, 4–6 August 2022; pp. 382–385.

- Kattenbeck, M.; Kilian, M.A.; Ferstl, M.; Alt, F.; Ludwig, B. Towards task-sensitive assistance in public spaces. Aslib J. Inf. Manag. 2019, 71, 344–367.

- Manchester Airport FAQs. Available online: https://www.manchesterairport.co.uk/help/frequently-asked-questions/ (accessed on 24 April 2024).

- Narita International Airport FAQs. Available online: https://www.narita-airport.jp/en/faq/airport/ (accessed on 24 April 2024).

- Frankfurt Airport FAQs. Available online: https://www.frankfurt-airport.com/en/faqs/overview-faqs/faq-animals.html (accessed on 24 April 2024).

- Schiphol Airport Panorama Terrace. Available online: https://www.schiphol.nl/en/schiphol-as-a-neighbour/blog/schiphols-panorama-terrace-is-open-again/ (accessed on 24 April 2024).

- San Francisco International Airport Sky Terrace. Available online: https://www.flysfo.com/skyterrace (accessed on 24 April 2024).

- Budapest Airport Visitor Terrace. Available online: https://www.bud.hu/en/passengers/shopping_and_passenger_experience/convenience_services/visitor_terrace (accessed on 24 April 2024).

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.

- Kasilingam, D.L. Understanding the attitude and intention to use smartphone chatbots for shopping. Technol. Soc. 2020, 62, 101280.

- Venkatesh, V.; Davis, F.D. A theoretical extension of the technology acceptance model: Four longitudinal field studies. Manag. Sci. 2000, 46, 186–204.

- Venkatesh, V.; Bala, H. Technology acceptance model 3 and a research agenda on interventions. Decis. Sci. 2008, 39, 273–315.

- Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.

- Pillai, R.; Sivathanu, B.; Metri, B.; Kaushik, N. Students’ adoption of AI-based teacher-bots (T-bots) for learning in higher education. Inf. Technol. People 2023, 37, 328–355.

- De Cicco, R.; Iacobucci, S.; Aquino, A.; Romana Alparone, F.; Palumbo, R. Understanding users’ acceptance of chatbots: An extended TAM approach. In Chatbot Research and Design, Proceedings of the International Workshop on Chatbot Research and Design, Virtual Event, 23–24 November 2021; Springer: Cham, Switzerland, 2021; pp. 3–22.

- Huang, D.H.; Chueh, H.E. Chatbot usage intention analysis: Veterinary consultation. J. Innov. Knowl. 2021, 6, 135–144.

- Kelly, S.; Kaye, S.A.; Oviedo-Trespalacios, O. A multi-industry analysis of the future use of AI chatbots. Hum. Behav. Emerg. Technol. 2022, 2022, 2552099.

- Mehta, R.; Verghese, J.; Mahajan, S.; Barykin, S.; Bozhuk, S.; Kozlova, N.; Vasilievna Kapustina, I.; Mikhaylov, A.; Naumova, E.; Dedyukhina, N. Consumers’ behavior in conversational commerce marketing based on messenger chatbots. F1000Research 2022, 11, 647.

- Cai, D.; Li, H.; Law, R. Anthropomorphism and OTA chatbot adoption: A mixed methods study. J. Travel Tour. Mark. 2022, 39, 228–255.

- Fernandes, T.; Oliveira, E. Understanding consumers’ acceptance of automated technologies in service encounters: Drivers of digital voice assistants adoption. J. Bus. Res. 2021, 122, 180–191.

- Pillai, R.; Sivathanu, B. Adoption of AI-based chatbots for hospitality and tourism. Int. J. Contemp. Hosp. Manag. 2020, 32, 3199–3226.

- Van der Heijden, H. User acceptance of hedonic information systems. MIS Q. 2004, 28, 695–704.

- Abou-Shouk, M.; Gad, H.E.; Abdelhakim, A. Exploring customers’ attitudes to the adoption of robots in tourism and hospitality. J. Hosp. Tour. Technol. 2021, 12, 762–776.

- Dickinger, A.; Arami, M.; Meyer, D. The role of perceived enjoyment and social norm in the adoption of technology with network externalities. Eur. J. Inf. Syst. 2008, 17, 4–11.

- Ashfaq, M.; Yun, J.; Yu, S.; Loureiro, S.M.C. I, Chatbot: Modeling the determinants of users’ satisfaction and continuance intention of AI-powered service agents. Telemat. Inform. 2020, 54, 101473.

- Bröhl, C.; Nelles, J.; Brandl, C.; Mertens, A.; Schlick, C.M. TAM reloaded: A technology acceptance model for human-robot cooperation in production systems. In Proceedings of the HCI International 2016—Posters’ Extended Abstracts: 18th International Conference, HCI International 2016, Toronto, ON, Canada, 17–22 July 2016; Proceedings, Part I 18. Springer: Cham, Switzerland, 2016; pp. 97–103.

- Svendsen, G.B.; Johnsen, J.A.K.; Almås-Sørensen, L.; Vittersø, J. Personality and technology acceptance: The influence of personality factors on the core constructs of the Technology Acceptance Model. Behav. Inf. Technol. 2013, 32, 323–334.

- Aldás-Manzano, J.; Ruiz-Mafé, C.; Sanz-Blas, S. Exploring individual personality factors as drivers of M-shopping acceptance. Ind. Manag. Data Syst. 2009, 109, 739–757.

- Davis, F.D.; Venkatesh, V. A critical assessment of potential measurement biases in the technology acceptance model: Three experiments. Int. J. Hum.-Comput. Stud. 1996, 45, 19–45.

- Patil, K.; Kulkarni, M. Can we trust Health and Wellness Chatbot going mobile? Empirical research using TAM and HBM. In Proceedings of the 2022 IEEE Region 10 Symposium (TENSYMP), Mumbai, India, 1–3 July 2022; pp. 1–6.

- Pereira, T.; Limberger, P.F.; Ardigó, C.M. The moderating effect of the need for interaction with a service employee on purchase intention in chatbots. Telemat. Inform. Rep. 2021, 1, 100003.

- Buabeng-Andoh, C. Predicting students’ intention to adopt mobile learning: A combination of theory of reasoned action and technology acceptance model. J. Res. Innov. Teach. Learn. 2018, 11, 178–191.

- Franke, T.; Attig, C.; Wessel, D. A personal resource for technology interaction: Development and validation of the affinity for technology interaction (ATI) scale. Int. J. Hum.-Comput. Interact. 2019, 35, 456–467.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).