Abstract

In detecting large-scale attacks, deep neural networks (DNNs) are an effective approach based on high-quality training data samples. Feature selection and feature extraction are the primary approaches for data quality enhancement for high-accuracy intrusion detection. However, their enhancement root causes usually present weak relationships to the differences between normal and attack behaviors in the data samples. Thus, we propose a Classification Tendency Difference Index (CTDI) model for feature selection and extraction in intrusion detection. The CTDI model consists of three indexes: Classification Tendency Frequency Difference (CTFD), Classification Tendency Membership Difference (CTMD), and Classification Tendency Distance Difference (CTDD). In the dataset, each feature has many feature values (FVs). In each FV, the normal and attack samples indicate the FV classification tendency, and CTDI shows the classification tendency differences between the normal and attack samples. CTFD is the frequency difference between the normal and attack samples. By employing fuzzy C means (FCM) to establish the normal and attack clusters, CTMD is the membership difference between the clusters, and CTDD is the distance difference between the cluster centers. CTDI calculates the index score in each FV and summarizes the scores of all FVs in the feature as the feature score for each of the three indexes. CTDI adopts an Auto Encoder for feature extraction to generate new features from the dataset and calculate the three index scores for the new features. CTDI sorts the original and new features for each of the three indexes to select the best features. The selected CTDI features indicate the best classification tendency differences between normal and attack samples. The experiment results demonstrate that the CTDI features achieve better detection accuracy as classified by DNN for the Aegean WiFi Intrusion Dataset than their related works, and the detection enhancements are based on the improved classification tendency differences in the CTDI features.

1. Introduction

As the Internet of Things influences how people live and work, wireless intrusions have also become significant threads for wireless applications [1]. Machine learning methods are effective classifiers for detecting wireless intrusions based on the training datasets [2]. As the scale of wireless intrusion increases, deep learning becomes the emerging approach for wireless intrusion detection models [3].

Machine learning models often incorporate feature selection techniques to improve the accuracy of intrusion detection. Several works [4,5,6,7] employ machine learning-based classifiers with feature selection methods for different kinds of attacks in the CIC-IDS2017 [8], NSL-KDD [9], and UNSW-NB15 [10] datasets. To improve the detection capability, a deep neural network (DNN) combined with an Auto Encoder for feature extraction [11,12,13,14] can achieve better detection accuracy than the machine learning models.

Aegean Wi-Fi Intrusion Dataset (AWID) is a dedicated wireless attack with 154 features and consists of three major types of attacks: impersonation, injection, and flooding [15]. The ensemble of feature selection and tree-based classifiers can effectively detect the attacks in AWID [16]. The combination of DNN and Auto Encoder shows better detection accuracy than these tree-based models [17,18,19,20] in AWID. As the ensemble of DNN and Auto Encoder also integrates with feature selection and data balancing, the detection accuracy can be the best for AWID [17,18].

As these works employ feature selection and extraction to improve the detection capability, the improvements are usually evaluated by the detection accuracy, which is based on the False Positives (FP) and False Negatives (FN). Because the improvements cannot be directly explained, the root cause of the improvements is unclear. During detection, the gray areas of normal and attack behaviors in the data can cause FP and FN. Thus, the data tendency of normal and attack behaviors is the fundamental measurement for the dataset quality, and these related works cannot address this issue.

To provide a measurable feature selection model for the data tendency against normal and attack behaviors, we propose a Classification Tendency Difference Index (CTDI) model for feature selection and extraction in intrusion detections. CTDI provides three data tendency measurement methods for feature selection: Classification Tendency Frequency Difference (CTFD), Classification Tendency Membership Difference (CTMD), and Classification Tendency Distance Difference (CTDD). To identify the data tendency, CTDI targets each data value in each data feature as the essential measurement target unit. CTDI calculates the differences between normal and attack labels for the data value. CTFD directly counts the frequencies of the normal and attack labels in the feature value (FV) and calculates the absolute difference between the two frequencies. Then, CTFD computes the average count difference in the feature as a CTFD score. CTFD shows the differential frequency between normal and attack labels, and this differential frequency has a direct impact on the DNN inputs. High CTFD shows the feature has a high tendency toward the detection label, so this feature can help the classifier distinguish the attacks directly. Thus, CTFD can provide a direct data tendency measurement against the detection results. High CTFD can potentially avoid the detection of gray areas and avoid FP and FN.

CTMD and CTDD adopt fuzzy C means (FCM) to measure the data tendency. FCM provides membership values for each data value to represent the degree of relationship between normal and attack behaviors. CTMD calculates the absolute difference between the membership values of the normal and attack labels for the data value. Then, CTMD computes the average membership difference in the feature as a CTMD score. CTMD shows the differential fuzzy degree between normal and attack behaviors based on FCM clustering. High CTMD shows the feature has a high degree of data tendency toward the detection labels for high detection accuracy.

FCM also provides two cluster centers for each feature’s normal and attack behaviors. CTDD calculates the distances between the data values and the two centers and the difference between the two distances. Then, CTDD computes the average distance difference in the feature as the CTDD score. CTDD shows the differential distance between the normal and attack centers of FCM clusters. High CTDD shows the feature has a high data differential distance between the two cluster centers, resulting in high detection accuracy.

CTDI selects the high data tendency features based on three data tendency measurement methods to avoid the gray areas in these features. Thus, the selected features can assist the DNN classifier in reducing potential FP and FN to improve detection accuracy. Because CTDI directly provides the relationship between input data tendency measurement and detection result labels for the DNN classifier, it provides direct evaluation assistance for FP and FN against the dataset. Therefore, the detection results in the deep learning-based intrusion detection models can be explained based on the data tendency. This can be essential assistance in evaluating the root cause of detection errors and the potential improvement space against the dataset for the deep learning model.

CTDI adopts Auto Encoder as the feature extraction because Auto Encoder can provide additional high-quality features [11,12,13,14,17,18,19,20]. CTDI also selects the features in this input to improve the detection accuracy. Both the original and Auto-Encoder-selected data features are the inputs for the DNN classifier.

The remainder of this paper is organized as follows: Section 2 shows how the related feature selection works. Section 3 introduces the CTDI design. Section 4 illustrates the experimental results. Section 5 compares detection results with the related works in AWID. Section 6 gives the conclusion and future work.

2. Related Works

Machine learning models often incorporate data enhancement techniques, such as feature selection, Auto Encoders, and data balancing, to improve the accuracy of intrusion detection, as shown in Table 1.

Table 1.

Machine learning models incorporate data enhancement techniques for intrusion detection.

Alduailij et al. [4] employed Random Forest as the optimal classifier and utilized a hybrid feature selection method that combines Mutual Information and Random Forest Gini impurity to enhance intrusion detection accuracy in the CIC-IDS2017 dataset [8]. Subbiah et al. [5] utilized Random Forest as the classifier and Boruta as the feature selection method to identify diverse network attacks in the NSL-KDD dataset. Alsaleh et al. [6] employed XGBoost as the classifier and the Salp Swarm algorithm for feature selection by using both the NSL-KDD [9] and UNSW-NB15 [10] datasets. Shah et al. [7] adopted logistic regression for feature selection by using the NSL-KDD dataset.

Deep neural networks (DNNs) have become an effective classifier in intrusion detection models and have demonstrated superior accuracy compared to machine learning-based models for various attack types across different datasets. Auto Encoder has emerged as a popular data augmentation technique for enhancing DNN performance. Several studies [11,12,13,14] have successfully utilized Auto Encoder in conjunction with DNN for intrusion detection in datasets such as CIS-IDS2017, NSL-KDD, and UNSW-NB15.

In an effort to detect the three distinct AWID wireless attacks, Kolias et al. [15] employed manual feature selection and identified J48 as the most effective classifier. Mikhail et al. [16] utilized Gini impurity as the feature selection method and a semi-boosted tree as the classifier. Aminanto et al. [17] also utilized DNN with an Auto Encoder and proposed three feature selection methods with data balancing. D-FES achieved exceptionally high accuracy for impersonation attacks but did not detect flooding or injection attacks. Lee et al. [18] employed Support Vector Machines (SVM) with feature selection, Auto Encoder, and data balancing, demonstrating superior binary detection for the three AWID attacks. Parker et al. [19] utilized Logistic Regression (LR) with Auto Encoder, while Caminero et al. [20] employed reinforcement learning with Auto Encoder for binary detection in AWID.

3. Method

In this section, we first introduce the CTDI model design. Then, we illustrate the details of the three indexes as well as the usage of Auto Encoder as feature extraction in the CTDI model. Finally, an example scenario of an essential wireless feature is given to illustrate the use of CTDI.

3.1. Model Design

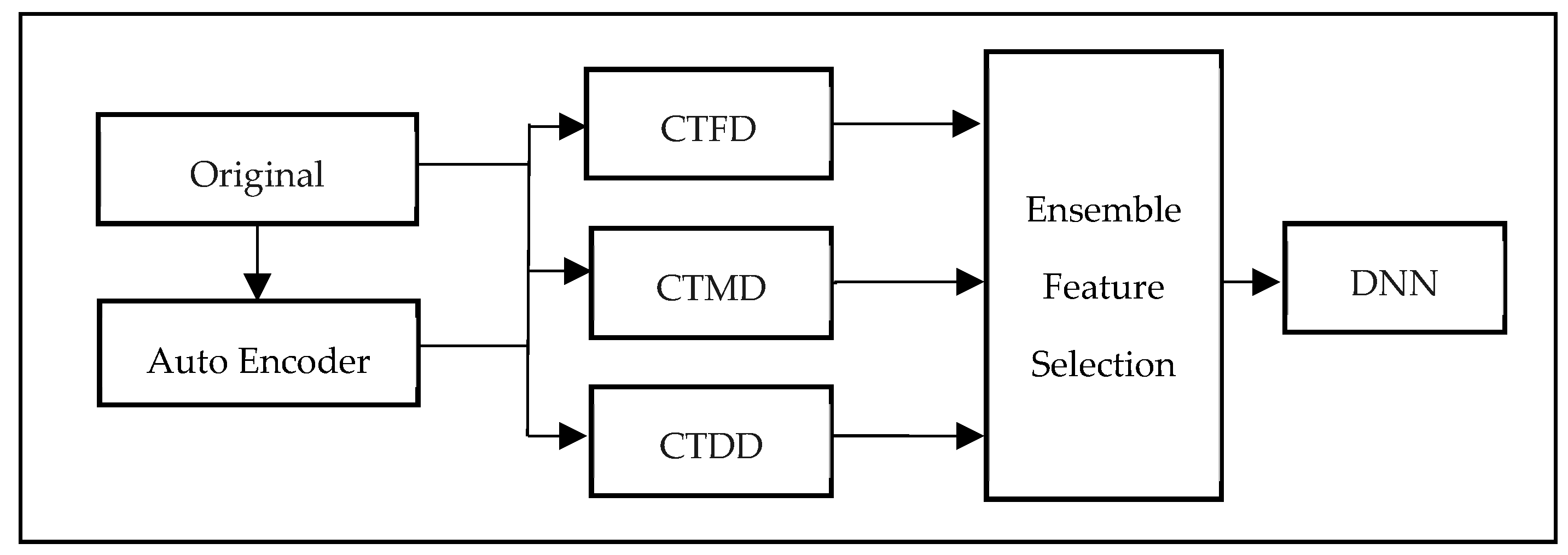

As Figure 1 shows, the CTDI model proposes three indexes to identify features that effectively distinguish between normal and attack network traffic. These indexes are:

Figure 1.

CTDI model design.

- Classification Tendency Frequency Difference (CTFD): Measures the difference in the frequency of occurrence of each FV between normal and attack samples.

- Classification Tendency Membership Difference (CTMD): Employs FCM to cluster normal and attack samples, then calculates the membership difference between the two clusters for each FV.

- Classification Tendency Distance Difference (CTDD): Calculates the distance difference between the cluster centers of normal and attack samples for each FV.

In addition, data preprocessing is applied to the original features. Minimax is applied to all original features to standardize their range from 0 to 1. Also, missing and unknown values are set to 0 in the original features.

For the feature extraction, the CTDI model first applies Auto Encoder to generate new extracted features from the dataset and then calculates each score of three indexes for both the original and new features. Finally, CTDI ranks the features based on their index scores and selects the top m% features for each index.

- Ensemble Feature Selection (EFS): EFS is a method that combines the results of feature selection conducted using three CTDI indexes: CTFD, CTMD, and CTDD. To identify the most pertinent features, EFS first selects those that consistently appear among the top m% of features for each index. This ensures that the selected features have a high degree of representativeness. As a feature appears in two or more of the top m% features of CTDI indexes, it suggests that it has strong representativeness, and thus, this feature is chosen in CTDI. Table 2 summarizes the common abbreviations and notations in CTDI.

Table 2. Abbreviations and notations in CTDI.

Table 2. Abbreviations and notations in CTDI.

3.2. CTFD

CTFD directly counts the frequency of the label k (0 is normal and 1 is an attack) in the ith FV, , by Equation (1), where is the total frequency of the repeating and is the jth repeating .

Then, CTDI calculates the absolute difference between the two frequencies as Diff_CTFD and summarizes Diff_CTFD from all FVs in the feature as the CTFD feature score, CTFDScore, by Equations (2) and (3), where D is the size of the dataset.

3.3. CTDD and CTMD

Based on FCM, CTMD is the membership difference of the clusters, and CTDD is the distance difference of the cluster centers. As CTDI adopts FCM, it calculates the cluster center, , for the cluster k and the membership of the cluster k, , for by Equations (4) and (5), where D is the size of the dataset.

While applying FCM, the fuzziness variable m is set to 2, and the number of clusters is set to 2. As is randomly initialized, the first initial is calculated by Equation (5). The least-squares error function (6) calculates the minimum error to determine the best and . Equations (4) and (5) keep updating and until the minimum error is satisfied, which is set to 10−4.

For CTMD, CTDI calculates the absolute difference between the two FV memberships, Diff_CTMD, and summarizes Diff_CTMD from all FVs in the feature as the CTMF feature score, CTMDScore, expressed in Equations (7) and (8).

For CTDD, CTDI calculates the absolute difference of the two distances between the FV and the cluster center, Diff_CTDD, and summarizes Diff_CTDD from all FVs in the feature as the CTDD feature score, CTDDScore, by Equations (9) and (10).

3.4. Auto Encoder

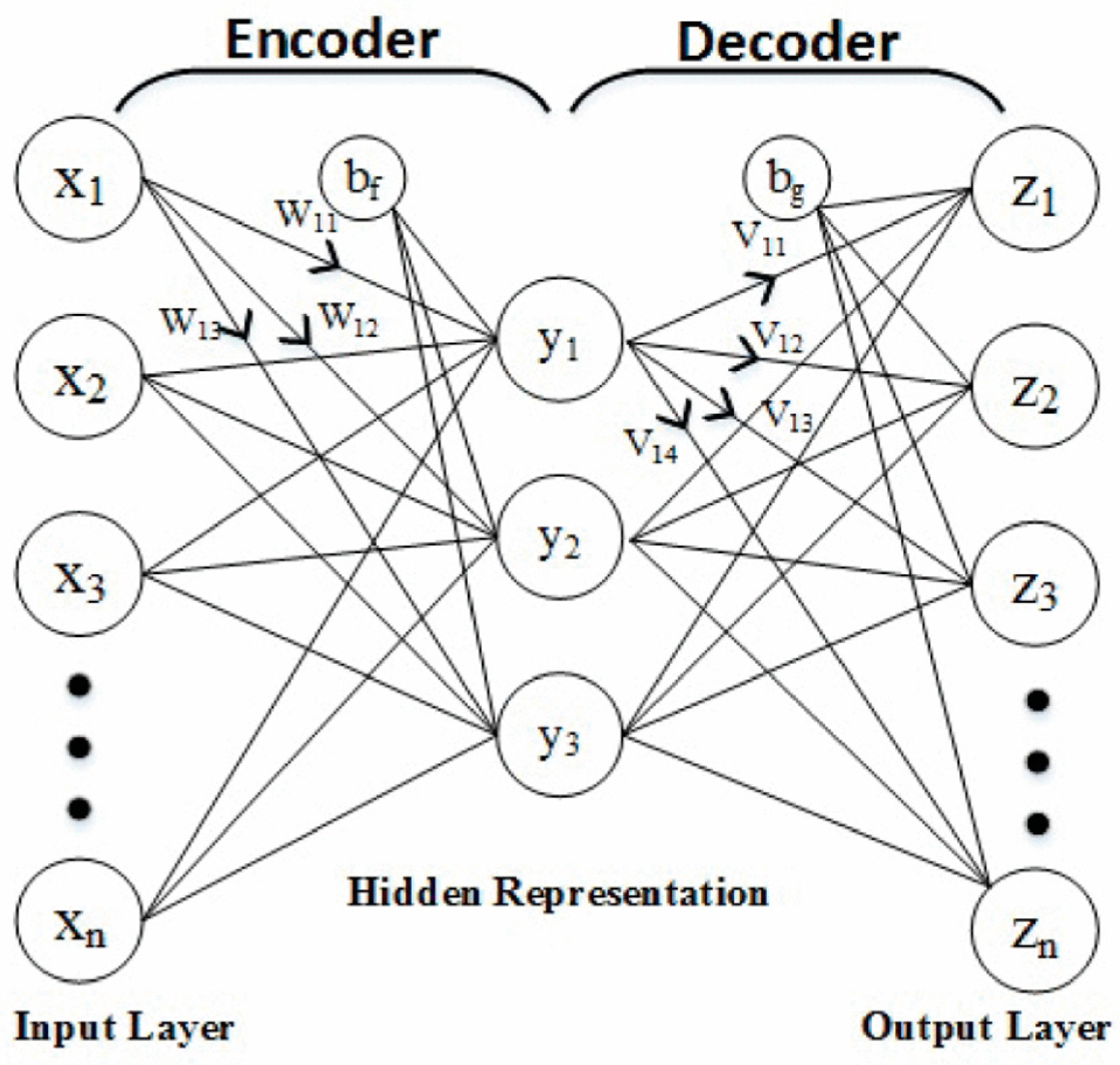

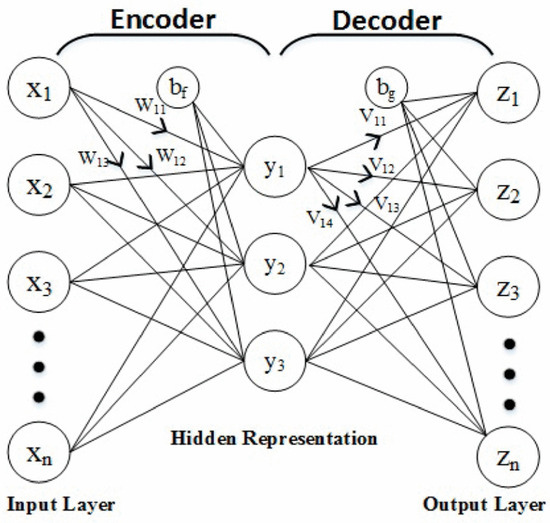

CTDI adopts an Auto Encoder for feature extraction with similar settings to the related works [12,13,17,18], as depicted in Figure 2. The encoder transforms the original feature X into the extracted feature Y. The decoder transforms the extracted feature back to output feature Z. Y is the Auto Encoder feature extracted from X, and the size of Y is the same as X.

Figure 2.

Auto Encoder neural network.

The encoder expressed in Equation (11) transforms X to Y with the weight W, bias bf, and activation function f in the encoder.

The decoder expressed in Equation (12) transforms Y to Z with the weight V, bias bg, and activation function f in the decoder layer. The activation function is Relu, which is the common choice of the related works [12,13].

Auto Encoder trains the hidden representation to minimize the differences between X and Z by the loss function, which consists of Mean Square Error (MSE), L2 regularization, and Kullback-Leibler divergence and is similar to the related works [12,17,18]. MSE, expressed in Equation (13) is the mean square error between X and Z, where D is the size of the dataset.

L2 regularization expressed in Equation (14) can avoid overfitting between the hidden layers by reducing their weights, where H is the number of neurons in the hidden layers and F is the number of features in the dataset.

Kullback–Leibler (KL) divergence expressed in Equation (15) calculates the sparsity regularization to prevent overfitting of the hidden neurons by reducing them. The variable is the sparsity parameter, as it is near 0 to reduce neuron values. KL divergence calculates the difference between the sparsity parameter and average activation, , to minimize their differences.

The final loss Function (16) summarizes MSE, L2 regularization, and sparsity regularization, and the minimum error is set to 10−4.

3.5. Example Scenario for CTDI

To illustrate the design of CTDI, an essential wireless feature, wireless LAN frame control type (wlan.fc.type), is given to illustrate the usage of CTDI.

CTFD requires calculating the frequency of the repeating feature values. For example, the ith feature value, , is equal to 1. This feature value repeats in 10,000 samples, of which 8000 belong to the normal ones and 2000 belong to the attack ones. Hence, is 10,000, is 8000, is 2000, and is 6000. Thus, this feature value contributes 6000 samples to for the feature wlan.fc.type. Clearly, this feature value has high differences between normal and attack samples. When other feature values also contribute high to becomes very high. This reveals that the feature, wlan.fc.type, presents high differences between normal and attack samples for each of the wireless LAN type values. Thus, it this feature is valuable for detecting the attack and should be selected by CTFD.

For CTMD and CTDD, they require FCM to generate the cluster center, , for the cluster k and the membership of the cluster k, , for . When is 1, because is close to cluster 0, is high (such as 85%) and is low (such as 15%). Thus, is also high (70%) to make of the feature high.

Besides, because is also close to and far away from , becomes high. Therefore, of the feature also becomes high because of the many high contributions of . As a result, this feature is selected by both CTMD and CTDD, and CTDI selects this feature based on the agreement of the three indexes.

For Auto Encoder, this feature, wlan.fc.type, is transformed into the extracted feature. Auto Encoder preserves the essential distribution of the feature values in the given feature, so the distribution difference between normal and attack samples should be highly related to the original feature. If the distribution difference in the original feature is large, the distribution difference in the extracted feature should be consistent. Thus, this extracted feature can have high scores for the three indexes and be selected by CTDI.

4. Experiment Results

4.1. Experiment Setting

The CTDI experiment program is written in Python, and the experiment software platform is Keras. The experiment is running on a desktop computer with a 2.9 GHz Intel Core i5-10400 processor and 32 GB of RAM.

AWID is the experiment dataset that has one normal class and three major attack classes: flooding, injection, and impersonation. In the adopted AWID training set, AWID-CLS-R-Trn, here are the distributions of four classes: (1) normal: 1,633,190 (91%), (2) flooding: 48,484 (3%), (3) injection: 65,379 (3%), and (4) impersonation: 48,522 (3%). To avoid training bias, the number of normal samples should be the same as the total number of attack samples in the training set. As Table 3 shows, the size of the normal class in the training set is reduced by random sampling. The AWID testing set, AWID-CLS-R-Tst, does not need to be balanced. Table 3 shows the sizes of four classes in the testing set, and their sizes remain the same. For validation purposes during the training, the training set is divided into the training and validation samples in the ratio of 8:2, and their sizes are 259,816 and 64,954, respectively.

Table 3.

Distribution of normal and attack data samples.

The experiment adopts two primary evaluation metrics: accuracy (ACC) and F1 score, which are based on the four major parameters in the confusion metrics: True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN). Accuracy measures the overall detection capability and is defined as follows: Accuracy = (TP + TN)/(TP + TN + FP + FN). The F1 score integrates precision and recall to evaluate the detection of attack behaviors and is defined as follows: F1 score = (2 × TP)/(2 × TP + FP + FN). In comparison to accuracy, the F1 score directly considers both FP and FN against TP without TN. Therefore, both accuracy and F1 scores are valuable for evaluating the effectiveness of intrusion detection models.

The AWID dataset has 154 features, which are designed to detect the three wireless attacks: flooding, injection, and impersonation. These features are mainly related to Wi-Fi major parameters for frames, radio channels, wireless LAN control, and management. From these original features, CTDI generates 154 Auto Encoder features. Then, CTDI selects the effective features from both the original and Auto Encoder data samples.

4.2. Feature Selection Results

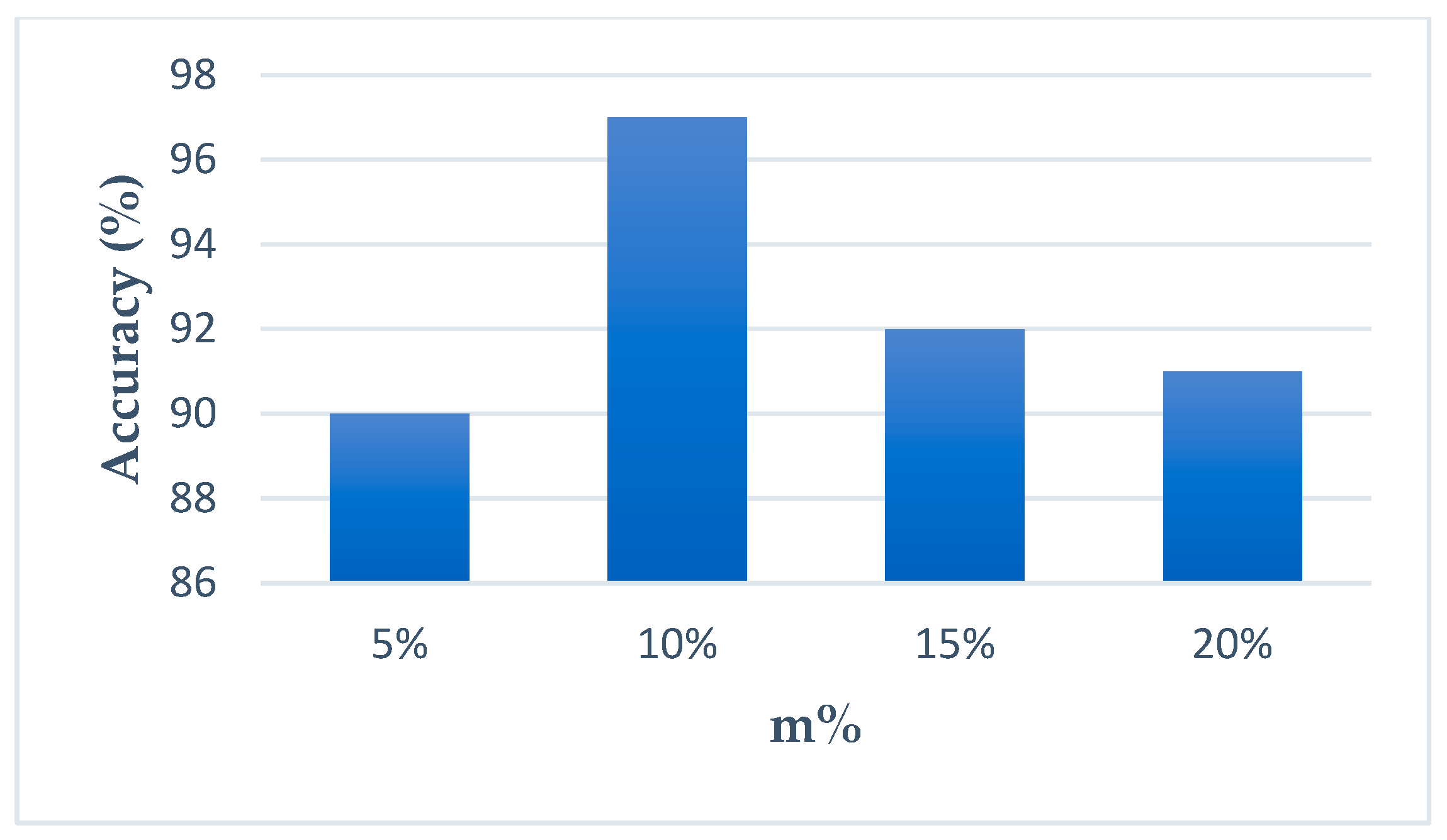

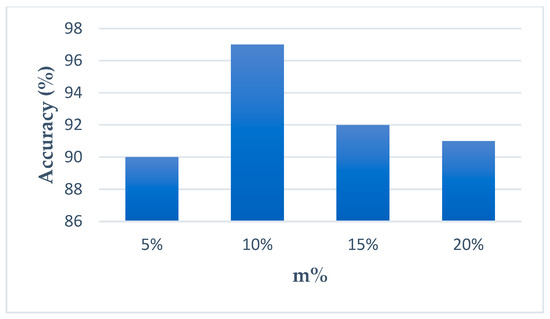

Figure 3 shows the example in CTDD using original features as we determine m%. As m% is 10%, clearly, accuracy is the best. For the other CTDI index cases, the results are similar. Thus, m% is set to 10%, and the 16 features are selected for each index from the 154 features.

Figure 3.

The accuracy for different m%.

Table 4 shows the feature selection results. For original features, the selected features are similar, so EFS can have 18 features from them. This shows the selected 18 features are representative since the three indexes reach a high consensus on these features. The Auto Encoder features are extracted from the original features, and their features are less similar than the original features. EFS selects six features from them. Overall, EFS in CTDI selects 24 features from both the original and Auto Encoder features.

Table 4.

Feature selection results.

Table 5 shows the names of selected original and Auto Encoder features in the CTDI. The #8 feature, frame length, and #66 feature, WLAN frame control type, are selected in both original and Auto Encoder features, so they are recognized as the critical features to distinguish the attack from normal behaviors. The #66 feature, WLAN frame control type, is clearly the key feature to identify wireless network behaviors by its definition. Some type-related features, such as #50, #51, #64, and #67, are also selected to distinguish the attack behaviors. It shows that the CTDI model can identify features with clear differences between normal and attack behaviors.

Table 5.

The names of selected original and Auto Encoder features in CTDI.

The packet length features #8 frame.len, #9 frame.cap_len, and #154 data.len are also selected to detect the attack behaviors. Other selected original features are related to notable wireless parameters, such as time (#4 and #7), frame control (#70, #71, and #73), and WEP (#140 and #142). Auto Encoder features are extracted from the original features, so their selected features show four different wireless parameters (#39, #76, #78, and #139).

4.3. Detection Results

Table 6 shows the detection results for all sets of selected features. Of the 24 selected features, CTDI achieves the best results with 99.92% accuracy and a 99.52% F1 score, which are very convincing for detecting AWID wireless attacks. For the originally selected feature sets, they also achieved very good results in accuracy and F1 score. This shows these selected features are very representative and match the observation of feature selection results. CTFD shows the best results for the original selected feature sets. For the Auto Encoder features, CTMD also shows the best detection results, so the Auto Encoder selected features are also very helpful.

Table 6.

Detection results from all sets of selected features.

The results show that the selected features representing the high differences between normal and attack classes are very effective in distinguishing between normal and attack behaviors in wireless networks. CTDI accumulates the best selected features from the original and Auto Encoder features, and thus it achieves the best convincing detection results.

The EFS in CTDI adopts the majority voting approach to select the features from the three indexes as two of the indexes agree with the same feature. Other related works adopting the ensemble approach usually select several machine learning methods, such as Random Forest and Mutual Information [4], Decision Tree 4.5, Support Vector Machine, and Artificial Neural Network [17]. However, they do not merge the selected features from the different feature selection methods. The results show that CTDI achieves better results than CTFD, CTMD, and CTDD, and thus CTDI shows the merged feature sets by EFS are more effective than the related works, which only use one feature selection method to generate one separated selected feature set individually.

5. Comparing Detection Results with the Related Works in AWID

Table 7 shows the detection results of the related works in AWID compared with the proposed CTDI model. CTDI applies the three data enhancement approaches: feature selection (FS), data balancing (DB), and Auto Encoder (AE), and CTDI achieves the best results for detecting all three attacks. Aminanto et al. [17] only detected impersonation attacks, so they could achieve the highest results. As we reproduce the results of its selected features against the three attacks, its results become much lower than those of CTDI. The author of AWID [15] adopted J48 as its best classifier with FS. Its results show that the baseline of AWID is lower than that of CTDI.

Table 7.

Comparing detection results with the related works in AWID.

Lee et al. [18] adopted SVM as the classifier and applied FS, DB, and AE, and it shows the second-best results in Table 7. Parker et al. [19] adopted Logical Regression as the classifier with AE, and its results are slightly lower than Lee et al.’s method [18]. They both adopt machine learning-based classifiers with AE to achieve good detection results, but they are still worse than CTDI. The combination of reinforcement learning (RL) with AE [20] shows lower accuracy, but its F1 is relatively high. This shows RL is less effective in detecting normal behaviors in AWID. The semi-boosted tree with FS and DB [16] has lower results than those employing DNN or AE. In conclusion, the proposed CTDI shows the best detection result among the related works for the three types of attacks in AWID. This shows that the proposed CTDI, which chooses the selected features with high differences between normal and attack behaviors, is very effective for wireless attacks.

6. Conclusions

In this paper, we propose CTDI, which consists of three indexes: CTFD, CTMD, and CTDD for feature selection for original and Auto Encoder features, to detect three types of wireless attacks in AWID. After integrating the results of feature selection by three CTDI indexes, we extract the most relevant features that show high differences between normal and attack behaviors. During the CTDI process, 18 features are selected from the original features, and 6 features are selected from the AE features, for a total of 24 features. The experimental results demonstrate that the CTDI features achieve 99.92% accuracy and a 99.52% F1 score with the DNN classifier, outperforming the related works in AWID. Unlike other related works adopting the ensemble approach and only using the individual feature selection method to select features separately, the proposed CTDI merged the features from the three indexes by EFS, the majority voting. The results show that CTDI achieves better results than CTFD, CTMD, and CTDD, which are individual feature selection indexes. Therefore, EFS enables CTDI to achieve better results than the individual feature selection method adopted by other ensemble works.

Besides, other network attack datasets, such as UNSW-NB15 and CIC-IDS2017, have similar issues with AWID. These datasets also require selecting features containing clear differences between normal and attack behaviors. Our feature work will apply CTDI to these datasets to discover effective features, as we found in AWID.

Author Contributions

Conceptualization, C.H.T.; methodology, C.H.T.; software, C.H.T.; validation, C.H.T. and W.-J.T.; formal analysis, C.H.T. and W.-J.T.; investigation, Y.-M.S.; resources, C.H.T.; data curation, C.H.T.; writing—original draft preparation, C.H.T. and Y.-M.S.; writing—review and editing, W.-J.T.; visualization, C.H.T.; supervision, W.-J.T.; project administration, W.-J.T.; funding acquisition, W.-J.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Science and Technology Council in Taiwan under contract numbers NSTC 111-2221-E-305-005-MY2 and NSTC 112-2622-E-305-004.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to location privacy.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Badii, C.; Bellini, P.; Difino, A.; Nesi, P. Smart City IoT Platform Respecting GDPR Privacy and Security Aspects. IEEE Access 2020, 8, 23601–23623. [Google Scholar] [CrossRef]

- Al Lail, M.; Garcia, A.; Olivo, S. Machine Learning for Network Intrusion Detection—A Comparative Study. Future Internet 2023, 15, 243. [Google Scholar] [CrossRef]

- Aldweesh, A.; Derhab, A.; Emam, A.Z. Deep learning approaches for anomaly based intrusion detection systems: A survey, taxonomy, and open issues. Knowl.-Based Syst. 2020, 189, 105124. [Google Scholar] [CrossRef]

- Alduailij, M.; Khan, Q.W.; Tahir, M.; Sardaraz, M.; Alduailij, M.; Malik, F. Machine-Learning-Based DDoS Attack Detection Using Mutual Information and Random Forest Feature Importance Method. Symmetry 2022, 14, 1095. [Google Scholar] [CrossRef]

- Subbiah, S.; Anbananthen, K.S.M.; Thangaraj, S.; Kannan, S.; Chelliah, D. Intrusion detection technique in wireless sensor network using grid search random forest with Boruta feature selection algorithm. J. Commun. Netw. 2022, 24, 264–273. [Google Scholar] [CrossRef]

- Alsaleh, A.; Binsaeedan, W. The Influence of Salp Swarm Algorithm-Based Feature Selection on Network Anomaly Intrusion Detection. IEEE Access 2021, 9, 112466–112477. [Google Scholar] [CrossRef]

- Shah, R.A.; Qian, Y.; Kumar, D.; Ali, M.; Alvi, M.B. Network Intrusion Detection through Discriminative Feature Selection by Using Sparse Logistic Regression. Future Internet 2017, 9, 81. [Google Scholar] [CrossRef]

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization. In Proceedings of the 4th International Conference on Information Systems Security and Privacy (ICISSP), Funchal, Portugal, 22–24 January 2018. [Google Scholar]

- Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009. [Google Scholar]

- Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In Proceedings of the Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015. [Google Scholar]

- Dao, T.-N.; Lee, H. Stacked Autoencoder-Based Probabilistic Feature Extraction for On-Device Network Intrusion Detection. IEEE Internet Things J. 2022, 9, 14438–14451. [Google Scholar] [CrossRef]

- Rao, K.N.; Rao, K.V.; Prasad Reddy, P.V.G.D. A hybrid Intrusion Detection System based on Sparse autoencoder and Deep Neural Network. Comput. Commun. 2021, 180, 77–88. [Google Scholar]

- Bhardwaj, A.; Mangat, V.; Vig, R. Hyperband Tuned Deep Neural Network with Well Posed Stacked Sparse AutoEncoder for Detection of DDoS Attacks in Cloud. IEEE Access 2020, 8, 181916–181929. [Google Scholar] [CrossRef]

- Yaser, A.L.; Mousa, H.M.; Hussein, M. Improved DDoS Detection Utilizing Deep Neural Networks and Feedforward Neural Networks as Autoencoder. Future Internet 2022, 14, 240. [Google Scholar] [CrossRef]

- Kolias, C.; Kambourakis, G.; Stavrou, A.; Gritzalis, S. Intrusion Detection in 802.11 Networks: Empirical Evaluation of Threats and a Public Dataset. IEEE Commun. Surv. Tutor. 2016, 18, 184–208. [Google Scholar] [CrossRef]

- Mikhail, J.W.; Fossaceca, J.M.; Iammartino, R. A Semi-Boosted Nested Model with Sensitivity-Based Weighted Binarization for Multi-Domain Network Intrusion Detection. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–27. [Google Scholar] [CrossRef]

- Aminanto, M.E.; Choi, R.; Tanuwidjaja, H.C.; Yoo, P.D.; Kim, K. Deep Abstraction and Weighted Feature Selection for Wi-Fi Impersonation Detection. IEEE Trans. Inf. Forensics Secur. 2018, 13, 621–636. [Google Scholar] [CrossRef]

- Lee, S.J.; Yoo, P.D.; Asyhari, A.T.; Jhi, Y.; Chermak, L.; Yeun, C.Y.; Taha, K. IMPACT: Impersonation Attack Detection via Edge Computing Using Deep Autoencoder and Feature Abstraction. IEEE Access 2020, 8, 65520–65529. [Google Scholar] [CrossRef]

- Parker, L.R.; Yoo, P.D.; Asyhari, T.A.; Chermak, L.; Jhi, Y.; Taha, K. DEMISe: Interpretable deep extraction and mutual information selection techniques for IoT intrusion detection. In Proceedings of the International Conference on Availability, Reliability and Security (ARES ‘19), New York, NY, USA, 26–29 August 2019. [Google Scholar]

- Caminero, G.; Lopez-Martin, M.; Carro, B. Adversarial environment reinforcement learning algorithm for intrusion detection. Comput. Netw. 2019, 159, 96–109. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).